Abstract

In this paper, we deal with a class of cellular neural networks with time-varying delays. Using differential inequality techniques, and without assuming that the activation functions are bounded, we obtain a new sufficient condition ensuring that all solutions of the considered neural networks converge exponentially to the zero equilibrium point. An example illustrates the effectiveness of the theoretical results. The results obtained in this paper are new and complement the earlier study of Tang (Appl. Math. Lett. 21:872–876, 2008).


1 Introduction

It is well known that cellular neural networks have attracted broad attention in numerous scientific fields because of their potential applications in psychophysics, speech, perception, robotics, pattern recognition, signal and image processing, optimization, population dynamics, and so on [2,3,4,5,6]. Since the design of cellular neural networks depends largely on their global exponential convergence properties, many authors have investigated the global exponential convergence of equilibria and periodic solutions of cellular neural networks, and many significant results have been reported. For example, Zhang [7] studied the exponential convergence of cellular neural networks with continuously distributed leakage delays and, by applying the Lyapunov function method and differential inequality strategies, obtained sufficient conditions ensuring that all solutions of the networks converge exponentially to the zero equilibrium point. Liu [8] investigated the convergence of HCNNs with delays and oscillating coefficients in the leakage terms and, using a suitable integral inequality technique, established sufficient conditions ensuring that all solutions of the networks converge exponentially to the zero equilibrium point. Zhao and Wang [9] presented sufficient conditions for the exponential convergence of a SICNN with leakage delays and continuously distributed delays of neutral type via the Lyapunov functional method and differential inequality strategies. Chen and Yang [10] established exponential convergence criteria for HRNNs with continuously distributed delays in the leakage terms by using the Lyapunov functional method and differential inequality strategies. For more results on this topic, we refer the reader to [11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42].

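In 2008, Tang [1] considered the following delayed cellular neural networks with time-varying coefficients:

$$x_{i}'(t)=-c_{i}(t)x_{i}(t)+\sum_{j=1}^{n}a_{ij}(t)f_{j}\bigl(x_{j}\bigl(t-\tau_{ij}(t)\bigr)\bigr)+\sum_{j=1}^{n}b_{ij}(t)\int_{0}^{\infty}K_{ij}(u)g_{j}\bigl(x_{j}(t-u)\bigr)\,du+I_{i}(t), \tag{1.1}$$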

where \(i = 1, 2, \ldots, n\), \(t\in R\), n is the number of units in the neural network, \(x_{i} (t)\) denotes the state of the ith unit at time t, \(c_{i} (t)>0\) denotes the rate at which the ith unit resets its potential to the resting state in isolation when disconnected from the network and external inputs at time t, \(a_{ij}(t)\) and \(b_{ij}(t)\) represent the connection weights at time t, \(\tau_{ij}(t)\geq0\) denotes the transmission delay of the ith unit along the axon of the jth unit at time t, \(I_{i}(t)\) denotes the external bias on the ith unit at time t, \(f_{j}\) and \(g_{j}\) are the activation functions of signal transmission, and \(K_{ij}(u)\) is the delay kernel. Tang's analysis was carried out under the following conditions:

(A1) For each \(j\in\{1,2,\ldots,n\}\), there exist nonnegative constants \(\bar{L}_{j}\) and \({L}_{j}\) such that

$$\bigl\vert f_{j}(u) \bigr\vert \leq\bar{L}_{j} \vert u \vert ,\qquad \bigl\vert g_{j}(u) \bigr\vert \leq{L}_{j} \vert u \vert \quad \mbox{for all } u\in R. $$

(A2) There exist constants \(T_{0}>0\), \(\eta>0\), \(\lambda>0\), and \(\xi_{1},\xi_{2},\ldots,\xi_{n}>0\) such that, for \(i\in\{1,2,\ldots,n\}\),

$$\begin{aligned}& -\bigl[c_{i}(t)-\lambda\bigr]\xi_{i}+\sum _{j=1}^{n} \bigl\vert a_{ij}(t) \bigr\vert e^{\lambda\tau}\bar {L}_{j}\xi_{j}+\sum _{j=1}^{n} \bigl\vert b_{ij}(t) \bigr\vert \int_{0}^{\infty}K_{ij}(u)e^{\lambda u}\,du {L}_{j}\xi_{j} \\& \quad < -\eta< 0\quad \mbox{for all } t>T_{0}, \end{aligned}$$where \(\tau=\max_{1\leq i,j\leq n}\{\sup_{t\in R}\tau_{ij}(t)\}\).

(A3) For \(i\in\{1,2,\ldots,n\}\), \(I_{i}(t)=O(e^{-\lambda t})\) as \(t\rightarrow+\infty\).

Under these conditions, Tang obtained sufficient conditions ensuring that all solutions of system (1.1) converge exponentially to the zero equilibrium point. We point out that Tang [1] studied exponential convergence under the assumption that the leakage coefficient functions \(c_{i}(t)\) are not oscillating, that is, \(c_{i}(t)>0\), \(i=1,2,\ldots,n\). However, oscillating coefficients often arise in linearizations of population dynamics models because of seasonal fluctuations; for example, in winter the death rate may exceed the birth rate [5, 43]. The study of exponential convergence for cellular neural networks with oscillating coefficients in the leakage terms is therefore of both theoretical and practical importance.
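As a hedged illustration, a leakage coefficient that takes negative values can still satisfy hypothesis (H1) stated below. The coefficient here is a hypothetical choice made for illustration; it is not taken from [1] or from the example in Sect. 3. Let \(c(t)=\alpha+\beta\sin t\) with constants \(0<\alpha<\beta\), so that \(c(t)<0\) whenever \(\sin t<-\alpha/\beta\). Since \(\cos s-\cos t\geq-2\), for all \(t\geq s\) we have

$$\begin{aligned}& \int_{s}^{t}c(u)\,du=\alpha(t-s)+\beta(\cos s-\cos t)\geq\alpha(t-s)-2\beta, \\& e^{-\int_{s}^{t}c(u)\,du}\leq e^{2\beta}e^{-\alpha(t-s)}, \end{aligned}$$

so (H1) holds with \(M=e^{2\beta}\) and \(\bar{c}=\alpha\), even though the positivity assumption \(c(t)>0\) of [1] fails.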

In this paper, we further study the exponential convergence of the cellular neural networks (1.1). The initial conditions associated with (1.1) are given by

$$x_{i}(s)=\varphi_{i}(s),\quad s\in(-\infty,0],\ \varphi_{i}\in BC,\ i=1,2,\ldots,n, \tag{1.2}$$

where BC denotes the set of all real-valued bounded and continuous functions defined on \((-\infty,0]\). Unlike [1], we establish different sufficient conditions guaranteeing that all solutions of the considered neural networks converge exponentially to the zero equilibrium point. In particular, the leakage coefficients \(c_{i}(t)\) are not required to be positive. We expect these results to be useful in the design of cellular neural networks with time-varying delays. Our results are new and complement the work of [1].

For simplicity, we denote by \(R^{p}\) (\(R=R^{1}\)) the set of all p-dimensional real vectors (real numbers). Set \(x(t)=(x_{1}(t),x_{2}(t),\ldots,x_{n}(t))^{T}\in R^{n}\). For \(x\in R^{n}\), let \(|x|=(|x_{1}|,|x_{2}|,\ldots,|x_{n}|)^{T}\) denote the absolute-value vector and define \(\|x\|=\max_{1\leq i\leq n}|x_{i}|\). For a bounded continuous function f defined on R, we denote \(f^{+}=\sup_{t\in R}|f(t)|\) and \(f^{-}=\inf_{t\in R}|f(t)|\). Let \(\tau^{+}=\max_{1\leq i,j\leq n}\{\sup_{t\in R}\tau_{ij}(t)\}\).

Throughout this paper, we assume that the following conditions are satisfied:

(H1) For \(i = 1, 2, \ldots, n\), there exist constants \(\bar{c}_{i}>0\) and \(M>0\) such that

$$e^{-\int_{s}^{t} c_{i}(u)\,du} \leq M e^{-(t-s)\bar{c}_{i}}\quad \mbox{for all } t,s \in R \mbox{ with } t\geq s. $$

(H2) For \(j= 1, 2, \ldots, n\), there exist positive constants \(L_{j}^{f} \) and \(L_{j}^{g}\) such that

$$\begin{aligned}& \bigl\vert f_{j}(u)-f_{j}(v) \bigr\vert \leq L_{j}^{f} \vert u-v \vert , \qquad \bigl\vert g_{j}(u)-g_{j}(v) \bigr\vert \leq L_{j}^{g} \vert u-v \vert , \\& f_{j}(0)=0,\qquad g_{j}(0)=0 \end{aligned}$$for \(u,v\in R\).

(H3) For \(i,j = 1, 2, \ldots, n\), the delay kernel \(K_{ij}: [0,\infty)\rightarrow R\) is continuous and absolutely integrable.

(H4) For \(i= 1, 2, \ldots, n\), there exists a positive constant \(\mu_{0}\) such that

$$I_{i}(t)=O\bigl(e^{-\mu_{0} t}\bigr) \quad (t\rightarrow+\infty),\qquad \frac{MG_{i}}{\bar{c}_{i}}< 1, $$where

$$G_{i}=\sum_{j=1}^{n}a_{ij}^{+}L_{j}^{f} +\sum_{j=1}^{n}b_{ij}^{+}L_{j}^{g} \int_{0}^{\infty}K_{ij}(u)\,du. $$
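The inequality \(MG_{i}/\bar{c}_{i}<1\) in (H4) is a finite computation once the bounds are known. The following minimal sketch assumes that \(a_{ij}^{+}\), \(b_{ij}^{+}\), the Lipschitz constants from (H2), the kernel integrals \(\int_{0}^{\infty}K_{ij}(u)\,du\), M, and \(\bar{c}_{i}\) have already been computed. The function name `h4_ratios` and all numbers are hypothetical, and the decay requirement on \(I_{i}(t)\) is not checked.

```python
import numpy as np


def h4_ratios(M, cbar, a_plus, b_plus, Lf, Lg, K_int):
    """Return the vector (M * G_i / cbar_i), i = 1..n, from hypothesis (H4).

    a_plus[i][j] = sup_t |a_ij(t)|, b_plus[i][j] = sup_t |b_ij(t)|,
    Lf[j], Lg[j] are the Lipschitz constants from (H2), and
    K_int[i][j] is the integral of K_ij over [0, infinity).
    """
    a_plus, b_plus, K_int = (np.asarray(m, dtype=float) for m in (a_plus, b_plus, K_int))
    Lf, Lg, cbar = (np.asarray(v, dtype=float) for v in (Lf, Lg, cbar))
    # G_i = sum_j a_ij^+ L_j^f + sum_j b_ij^+ L_j^g * int_0^inf K_ij(u) du
    G = a_plus @ Lf + (b_plus * K_int) @ Lg
    return M * G / cbar


# Hypothetical two-neuron data (not taken from the paper).
ratios = h4_ratios(
    M=1.5,
    cbar=[0.5, 0.4],
    a_plus=[[0.1, 0.05], [0.05, 0.1]],
    b_plus=[[0.1, 0.1], [0.1, 0.1]],
    Lf=[0.5, 0.5],
    Lg=[0.5, 0.5],
    K_int=[[1.0, 1.0], [1.0, 1.0]],
)
print(ratios, "H4 inequality holds:", bool(np.all(ratios < 1.0)))
```

For these made-up values, \(G_{1}=G_{2}=0.175\), so the ratios are 0.525 and 0.65625, and both satisfy the inequality.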

The remainder of the paper is organized as follows. In Sect. 2, we establish a sufficient condition which ensures the exponential convergence of all solutions of the considered neural networks. In Sect. 3, we give an example that illustrates the theoretical findings. The paper ends with a brief conclusion in Sect. 4.

2 Global exponential convergence

Theorem 2.1

If (H1)–(H4) hold, then for every solution \(x(t)=(x_{1}(t),x_{2}(t), \ldots,x_{n}(t))^{T}\) of system (1.1) satisfying the initial conditions (1.2), there exists a positive constant λ such that \(x_{i}(t)=O(e^{-\lambda t})\) as \(t\rightarrow+\infty\), \(i=1,2,\ldots,n\).

Proof

We first define the continuous function

where \(\epsilon\in[0, \min\{\mu_{0},\min_{1\leq i\leq n} \bar{c}_{i}\})\). By (H4) we get

In view of the continuity of \(\varTheta_{i}(\epsilon)\), we can choose a constant \(\lambda\in(0, \min\{\mu_{0},\min_{1\leq i\leq n} \bar{c}_{i}\})\) such that

where

Let \(x(t)=(x_{1}(t),x_{2}(t),\ldots,x_{n}(t))^{T}\) be the solution of system (1.1) with an arbitrary initial value \(\varphi(t)=(\varphi_{1}(t),\varphi_{2}(t),\ldots,\varphi_{n}(t))^{T}\) satisfying (1.2), and let \(\|\varphi\|_{0}=\sup_{t\leq0}\max_{1\leq i\leq n}|\varphi_{i}(t)|\). For any \(\varepsilon>0\), we have

where Ω is a sufficiently large constant such that

and

It follows from (2.3) and (2.6) that

Now we will prove that

If (2.8) does not hold, then there must exist i and \(t_{0}\) such that

Notice that

Multiplying both sides of (2.10) by the integrating factor \(e^{\int_{0}^{s} c_{i}(u)\,du}\) and then integrating over \([0,t]\), we have

and

By (H1)–(H4) and (2.12) we get

By (2.3), (2.6), (2.7), and (2.13) we have

which contradicts (2.9). So (2.8) holds. Letting \(\varepsilon\rightarrow0^{+}\), it follows from (2.8) that

The proof of Theorem 2.1 is complete. □
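The proof multiplies (2.10) by an integrating factor and integrates over \([0,t]\). That step rests on the standard variation-of-constants formula. As a sketch, consider a generic scalar equation \(y'(s)=-c(s)y(s)+h(s)\), where y stands in for \(x_{i}\) and h collects the remaining connection, distributed-delay, and input terms. This is not a reconstruction of the paper's displays (2.10) to (2.13). Then

$$\begin{aligned}& \frac{d}{ds}\Bigl[y(s)e^{\int_{0}^{s}c(u)\,du}\Bigr]=h(s)e^{\int_{0}^{s}c(u)\,du}, \\& y(t)=y(0)e^{-\int_{0}^{t}c(u)\,du}+\int_{0}^{t}e^{-\int_{s}^{t}c(u)\,du}h(s)\,ds, \\& \bigl\vert y(t) \bigr\vert \leq M \bigl\vert y(0) \bigr\vert e^{-\bar{c}t}+M\int_{0}^{t}e^{-\bar{c}(t-s)} \bigl\vert h(s) \bigr\vert \,ds\quad (t\geq0), \end{aligned}$$

where the last line uses (H1) with \(c=c_{i}\) and \(\bar{c}=\bar{c}_{i}\). No sign condition on \(c(s)\) is needed.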

Remark 2.1

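Tang [1] analyzed the exponential convergence of the cellular neural network model (1.1) under conditions (A1)–(A3), with leakage coefficients \(c_{i}(t)>0\). In this paper, we discuss the exponential convergence of model (1.1) under conditions (H1)–(H4). Condition (H1) only requires an exponential bound on \(e^{-\int_{s}^{t}c_{i}(u)\,du}\), so the coefficients \(c_{i}(t)\) may oscillate and take negative values. Moreover, the method of analysis differs from that in [1].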

3 Example

In this section, we present an example that illustrates the theoretical result obtained in Sect. 2. Consider the following cellular neural networks with time-varying delays:

where \(g_{j}(x)=f_{j}(x)=0.03\sin^{2} x\) (\(j=1,2,3\)) and

Then \(\bar{c}_{1}=0.2\), \(\bar{c}_{2}=0.1\), \(\bar{c}_{3}=0.1\), \(L_{j}^{f}=L_{j}^{g}=0.03\), and

Let \(M=e^{0.01}\). Then

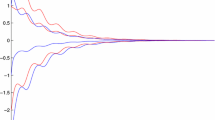

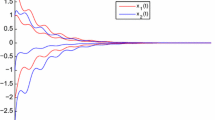

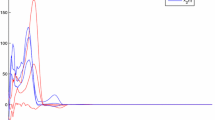

Thus all the conditions of Theorem 2.1 are satisfied. Hence all solutions of (3.1) converge exponentially to the zero equilibrium point \((0,0,0)^{T}\). Computer simulations in Figs. 1–3 illustrate this result.

Fig. 1 Time history of model (3.1)

Fig. 2 Time history of model (3.1)

Fig. 3 Time history of model (3.1)
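The coefficients of (3.1) are not reproduced in this text. The following is therefore only a hedged sketch of how time histories of this kind can be generated for a system of the form (1.1). All coefficients are hypothetical:

- \(c_{i}(t)=\alpha_{i}+\sin t\), which takes negative values but satisfies (H1) with \(M=e^{2}\) and \(\bar{c}_{i}=\alpha_{i}\);
- constant \(a_{ij}=b_{ij}=0.05\), \(\tau_{ij}=1\), and \(I_{i}(t)=0.1e^{-t}\);
- the kernel \(K_{ij}(u)=e^{-u}\).

A hand calculation gives \(MG_{i}/\bar{c}_{i}\leq0.1\) for these values. The activation is taken as \(0.03\sin^{2}x\), and the block first estimates its Lipschitz constant by finite differences. For contrast, it also estimates the Lipschitz constant of the reading \(0.03\sin(x^{2})\), which is not globally Lipschitz. The time stepping is forward Euler. The exponential kernel lets the distributed delay be replaced by an auxiliary ODE \(z'=g(x)-z\) (the linear chain trick).

```python
import numpy as np


def act(x):
    """Activation f_j = g_j = 0.03 sin^2 x (f(0) = 0, Lipschitz constant 0.03)."""
    return 0.03 * np.sin(x) ** 2


def lipschitz_estimate(f, lo=-20.0, hi=20.0, num=200_001):
    """Largest finite-difference slope of f on a uniform grid over [lo, hi]."""
    x = np.linspace(lo, hi, num)
    return float(np.max(np.abs(np.diff(f(x)) / np.diff(x))))


def simulate(T=60.0, h=0.01, tau=1.0):
    """Forward-Euler sketch of a hypothetical 3-neuron system of the form (1.1)."""
    n = 3
    alpha = np.array([0.8, 0.7, 0.9])  # hypothetical: c_i(t) = alpha_i + beta_i sin t
    beta = np.ones(n)                  # beta_i > alpha_i, so c_i(t) < 0 at some times
    A = 0.05 * np.ones((n, n))         # hypothetical constant a_ij
    B = 0.05 * np.ones((n, n))         # hypothetical constant b_ij
    d = int(round(tau / h))            # delay tau_ij = tau measured in Euler steps
    steps = int(round(T / h))
    phi = np.array([1.0, -2.0, 0.5])   # constant initial function on (-inf, 0]
    x = np.zeros((steps + 1, n))
    x[0] = phi
    # z_j(t) = int_0^inf e^{-u} g(x_j(t-u)) du; for constant phi, z_j(0) = g(phi_j).
    z = act(phi)
    for k in range(steps):
        t = k * h
        x_delayed = x[k - d] if k >= d else phi  # x(t - tau)
        c = alpha + beta * np.sin(t)
        rhs = -c * x[k] + A @ act(x_delayed) + B @ z + 0.1 * np.exp(-t)
        z = z + h * (act(x[k]) - z)  # z' = g(x) - z for the kernel e^{-u}
        x[k + 1] = x[k] + h * rhs
    return np.arange(steps + 1) * h, x


L_est = lipschitz_estimate(act)
L_alt = lipschitz_estimate(lambda x: 0.03 * np.sin(x ** 2))  # the sin(x^2) reading
t, x = simulate()
print(f"Lipschitz estimate for 0.03 sin^2 x: {L_est:.6f}")
print(f"Lipschitz estimate for 0.03 sin(x^2) on [-20, 20]: {L_alt:.3f}")
print(f"max |x_i(T)|: {np.abs(x[-1]).max():.3e}")
```

With these hypothetical values, the state at T = 60 is many orders of magnitude below the initial data, which is consistent with exponential decay. The sketch does not reproduce the paper's figures.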

4 Conclusions

In this paper, we have studied a class of cellular neural networks with time-varying delays. Using differential inequality techniques, and without assuming that the activation functions are bounded, we have established a sufficient condition guaranteeing that all solutions of the considered neural networks converge exponentially to the zero equilibrium point. The obtained sufficient condition is easy to check in practice. The results derived in this paper are new and complement the previously known ones [1]. An example illustrates the effectiveness of our theoretical results. The obtained results may be useful in designing neural networks for applications such as artificial intelligence, image recognition, and disease diagnosis. Pseudo-almost periodic solutions of cellular neural networks have recently attracted interest, but results on them remain scarce, and they are worth studying in the near future.

References

Tang, Y.: Exponential convergence of delayed cellular neural networks with time-varying coefficients. Appl. Math. Lett. 21, 872–876 (2008)

Bouzerdoum, A., Pinter, R.B.: Shunting inhibitory cellular neural networks: derivation and stability analysis. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 40(3), 215–221 (1993)

Bouzerdoum, A., Pinter, R.B.: Analysis and analog implementation of directionally sensitive shunting inhibitory cellular neural networks. In: Visual Information Processing: From Neurons to Chips, vol. SPIE–1473, pp. 29–38 (1991)

Bouzerdoum, A., Pinter, R.B.: Nonlinear lateral inhibition applied to motion detection in the fly visual system. In: Pinter, R.B., Nabet, B. (eds.) Nonlinear Vision, pp. 423–450. CRC Press, Boca Raton (1992)

Jiang, A.: Exponential convergence for shunting inhibitory cellular neural networks with oscillating coefficients in leakage terms. Neurocomputing 165, 159–162 (2015)

Barbagallo, A., Ragusa, M.A.: On Lagrange duality theory for dynamics vaccination games. Ric. Mat. 67(2), 969–982 (2018)

Zhang, A.P.: New results on exponential convergence for cellular neural networks with continuously distributed leakage delays. Neural Process. Lett. 41, 421–433 (2015)

Liu, X.J.: Improved convergence criteria for HCNNs with delays and oscillating coefficients in leakage terms. Neural Comput. Appl. 27(4), 917–925 (2016)

Zhao, C.H., Wang, Z.Y.: Exponential convergence of a SICNN with leakage delays and continuously distributed delays of neutral type. Neural Process. Lett. 41, 239–247 (2015)

Chen, Z.B., Yang, M.Q.: Exponential convergence for HRNNs with continuously distributed delays in the leakage terms. Neural Comput. Appl. 23, 2221–2229 (2013)

Long, S.J., Li, H.H., Zhang, Y.X.: Dynamic behavior of nonautonomous cellular neural networks with time-varying delays. Neurocomputing 168, 846–852 (2015)

Sayli, M., Yilmaz, E.: Periodic solution for state-dependent impulsive shunting inhibitory CNNs with time-varying delays. Neural Netw. 68, 1–11 (2015)

Liang, S., Wu, R.C., Chen, L.P.: Comparison principles and stability of nonlinear fractional-order cellular neural networks with multiple time delays. Neurocomputing 168, 618–625 (2015)

Wang, P., Li, B., Li, Y.K.: Square-mean almost periodic solutions for impulsive stochastic shunting inhibitory cellular neural networks with delays. Neurocomputing 167, 76–82 (2015)

Qin, S.T., Wang, J., Xue, X.Q.: Convergence and attractivity of memristor-based cellular neural networks with time delays. Neural Netw. 63, 223–233 (2015)

Abdurahman, A., Jiang, H.J., Teng, Z.D.: Finite-time synchronization for fuzzy cellular neural networks with time-varying delays. Fuzzy Sets Syst. 297, 96–111 (2016)

Long, S.J., Xu, D.Y.: Global exponential p-stability of stochastic non-autonomous Takagi–Sugeno fuzzy cellular neural networks with time-varying delays and impulses. Fuzzy Sets Syst. 253, 82–100 (2014)

Gao, J., Wang, Q.R., Zhang, L.W.: Existence and stability of almost-periodic solutions for cellular neural networks with time-varying delays in leakage terms on time scales. Appl. Math. Comput. 237, 639–649 (2014)

Zhou, L.Q., Chen, X.B., Yang, Y.X.: Asymptotic stability of cellular neural networks with multiple proportional delays. Appl. Math. Comput. 229, 457–466 (2014)

Rakkiyappan, R., Sakthivel, N., Park, J.H., Kwon, O.M.: Sampled-data state estimation for Markovian jumping fuzzy cellular neural networks with mode-dependent probabilistic time-varying delays. Appl. Math. Comput. 221, 741–769 (2013)

Tang, Q., Jian, J.G.: Global exponential convergence for impulsive inertial complex-valued neural networks with time-varying delays. Math. Comput. Simul. 159, 39–56 (2019)

Jian, J.Q., Wan, P.: Global exponential convergence of fuzzy complex-valued neural networks with time-varying delays and impulsive effects. Fuzzy Sets Syst. 338, 23–39 (2018)

Tang, X.H., Chen, S.T.: Ground state solutions of Nehari–Pohožaev type for Kirchhoff-type problems with general potentials. Calc. Var. Partial Differ. Equ. 56(4), 1–25 (2017)

Chen, S.T., Tang, X.H.: Improved results for Klein–Gordon–Maxwell systems with general nonlinearity. Discrete Contin. Dyn. Syst. 38(5), 2333–2348 (2018)

Chen, S.T., Tang, X.H.: Geometrically distinct solutions for Klein–Gordon–Maxwell systems with super-linear nonlinearities. Appl. Math. Lett. 90, 188–193 (2019)

Tang, X.H., Chen, S.T.: Ground state solutions of Schrödinger–Poisson systems with variable potential and convolution nonlinearity. J. Math. Anal. Appl. 473(1), 87–111 (2019)

Chen, J., Zhang, N.: Infinitely many geometrically distinct solutions for periodic Schrödinger–Poisson systems. Bound. Value Probl. 2019, 64 (2019)

Zuo, M.Y., Hao, X.A., Liu, L.S., Cui, Y.J.: Existence results for impulsive fractional integro-differential equation of mixed type with constant coefficient and antiperiodic boundary conditions. Bound. Value Probl. 2017, 161 (2017)

Wang, Y., Jiang, J.Q.: Existence and nonexistence of positive solutions for the fractional coupled system involving generalized p-Laplacian. Adv. Differ. Equ. 2017, 337 (2017)

Feng, Q.H., Meng, F.W.: Traveling wave solutions for fractional partial differential equations arising in mathematical physics by an improved fractional Jacobi elliptic equation method. Math. Methods Appl. Sci. 40(10), 3676–3686 (2017)

Zhu, B., Liu, L.S., Wu, Y.H.: Existence and uniqueness of global mild solutions for a class of nonlinear fractional reaction–diffusion equations with delay. Comput. Math. Appl. (2016) in press

Li, M.M., Wang, J.R.: Exploring delayed Mittag-Leffler type matrix functions to study finite time stability of fractional delay differential equations. Appl. Math. Comput. 324, 254–265 (2018)

Wu, H.: Liouville-type theorem for a nonlinear degenerate parabolic system of inequalities. Math. Notes 103(1), 155–163 (2018)

Du, J.T., Li, S.X., Zhang, Y.H.: Essential norm of weighted composition operators on Zygmund-type spaces with normal weight. Math. Inequal. Appl. 21(3), 701–714 (2018)

Yan, F.L., Zuo, M.Y., Hao, X.: Positive solution for a fractional singular boundary value problem with p-Laplacian operator. Bound. Value Probl. 2018, 51 (2018)

Zhang, J., Wang, J.R.: Numerical analysis for Navier–Stokes equations with time fractional derivatives. Appl. Math. Comput. 336, 481–489 (2018)

Zhang, J., Lou, Z.L., Jia, Y.J., Shao, W.: Ground state of Kirchhoff type fractional Schrödinger equations with critical growth. J. Math. Anal. Appl. 462(1), 57–83 (2018)

Zhang, X.G., Liu, L.S., Wu, Y.H., Wiwatanapataphee, B.: Nontrivial solutions for a fractional advection dispersion equation in anomalous diffusion. Appl. Math. Lett. 66, 1–8 (2017)

Wang, Y.Q., Liu, L.S.: Positive solutions for a class of fractional 3-point boundary value problems at resonance. Adv. Differ. Equ. 2017, 7 (2017)

Li, X., Ho, D., Cao, J.: Finite-time stability and settling-time estimation of nonlinear impulsive systems. Automatica 99, 361–368 (2019)

Li, X., Yang, X., Song, S.: Lyapunov conditions for finite-time stability of time-varying time-delay systems. Automatica 103, 135–140 (2019)

Li, X., Wu, J.: Stability of nonlinear differential systems with state-dependent delayed impulses. Automatica 64, 63–69 (2016)

Berezansky, L., Braverman, E.: On exponential stability of a linear delay differential equation with an oscillating coefficient. Appl. Math. Lett. 22, 1833–1837 (2009)

Acknowledgements

The work is supported by National Natural Science Foundation of China (No. 61673008), Project of High-level Innovative Talents of Guizhou Province ([2016]5651), Major Research Project of The Innovation Group of The Education Department of Guizhou Province ([2017]039), Foundation of Science and Technology of Guizhou Province ([2018]1025), Project of Key Laboratory of Guizhou Province with Financial and Physical Features ([2017]004) and Hunan Provincial Key Laboratory of Mathematical Modeling and Analysis in Engineering (Changsha University of Science & Technology) (2018MMAEZD21). The authors would like to thank the referees and the editor for helpful suggestions incorporated into this paper.

Availability of data and materials

Not applicable.

Authors’ information

Changjin Xu’s research interests include the bifurcation theory of delay differential equations. Peiluan Li’s research topics include nonlinear systems, functional differential equations, and boundary value problems.

Funding

The work is supported by National Natural Science Foundation of China (No. 61673008), Project of High-Level Innovative Talents of Guizhou Province ([2016]5651), Major Research Project of The Innovation Group of The Education Department of Guizhou Province ([2017]039), Project of Key Laboratory of Guizhou Province with Financial and Physical Features ([2017]004), and Hunan Provincial Key Laboratory of Mathematical Modeling and Analysis in Engineering (Changsha University of Science & Technology) (2018MMAEZD21).

Author information


Contributions

Both authors have read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Xu, C., Li, P. New proof on exponential convergence for cellular neural networks with time-varying delays. Bound Value Probl 2019, 123 (2019). https://doi.org/10.1186/s13661-019-1235-8
