Abstract

Let C be a nonempty closed convex subset of a real Hilbert space \(\mathcal{H}\) with inner product \(\langle \cdot , \cdot \rangle \), and let \(f: \mathcal{H}\rightarrow \mathcal{H}\) be a nonlinear operator. Consider the inverse variational inequality (in short, \(\operatorname{IVI}(C,f)\)) problem of finding a point \(\xi ^{*}\in \mathcal{H}\) such that

$$ f\bigl(\xi ^{*}\bigr)\in C, \quad \bigl\langle \xi ^{*}, v-f\bigl(\xi ^{*}\bigr) \bigr\rangle \geq 0, \quad \forall v\in C. $$

In this paper, we prove that \(\operatorname{IVI}(C,f)\) has a unique solution if f is Lipschitz continuous and strongly monotone, which essentially improves the relevant result in (Luo and Yang in Optim. Lett. 8:1261–1272, 2014). Based on this result, an iterative algorithm, named the alternating contraction projection method (ACPM), is proposed for solving Lipschitz continuous and strongly monotone inverse variational inequalities. The strong convergence of the ACPM is proved and the convergence rate estimate is obtained. Furthermore, for the case that the structure of C is very complex and the projection operator \(P_{C}\) is difficult to calculate, we introduce the alternating contraction relaxation projection method (ACRPM) and prove its strong convergence. Some numerical experiments are provided to show the practicability and effectiveness of our algorithms. Our results in this paper extend and improve the related existing results.

1 Introduction

Let \(\mathcal{H}\) be a real Hilbert space with inner product \(\langle \cdot ,\cdot \rangle \) and induced norm \(\Vert \cdot \Vert \). Recall that the metric projection operator of a nonempty closed convex subset C of \(\mathcal{H}\), \(P_{C}:\mathcal{H}\rightarrow C\), is defined by

$$ P_{C}x=\arg \min_{y\in C} \Vert x-y \Vert , \quad x\in \mathcal{H}. $$

Let C be a nonempty closed convex subset of \(\mathcal{H}\), and let \(F: C\rightarrow \mathcal{H}\) be a nonlinear operator. The so-called variational inequality (in short, \(\operatorname{VI}(C,F)\)) problem is to find a point \(u^{*}\in C\) such that

$$ \bigl\langle F\bigl(u^{*}\bigr), v-u^{*} \bigr\rangle \geq 0, \quad \forall v\in C. \tag{1} $$

Variational inequalities have many important applications in different fields and have been studied intensively; see [1, 2, 4, 7–14, 17, 24, 26, 27, 31, 32, 36, 38–40, 42–46] and the references therein.

It is easy to verify that \(u^{*}\) solves \(\operatorname{VI}(C,F)\) if and only if \(u^{*}\) is a solution of the fixed point equation

$$ u^{*}=P_{C}(I-\lambda F)u^{*}, \tag{2} $$

where I is the identity operator on \(\mathcal{H}\) and λ is an arbitrary positive constant.

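A class of variant variational inequalities is the inverse variational inequality (in short \(\operatorname{IVI}(C,f)\)) problem [19], which is to find a point \(\xi ^{*}\in \mathcal{H}\) such that

$$ f\bigl(\xi ^{*}\bigr)\in C, \quad \bigl\langle \xi ^{*}, v-f\bigl(\xi ^{*}\bigr) \bigr\rangle \geq 0, \quad \forall v\in C, \tag{3} $$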

where \(f: \mathcal{H}\rightarrow \mathcal{H}\) is a nonlinear operator. The inverse variational inequalities are also widely used in many different fields such as the transportation system operation, control policies, and the electrical power network management [20, 22, 41].

Now we give a brief overview of the properties and algorithms of inverse variational inequalities. For the properties of inverse variational inequalities, Han et al. [16] proved that the solution set of any monotone inverse variational inequality is convex. He [18] proved that the inverse variational inequality \(\operatorname{IVI}(C,f)\) is equivalent to the following projection equation:

$$ f(\xi )=P_{C}\bigl(f(\xi )-\beta \xi \bigr), $$

where β is an arbitrary positive constant. Consequently, the problem \(\operatorname{IVI}(C,f)\) equals the fixed point problem of the mapping

The following lemma reveals the intrinsic relationship between variational inequalities and inverse variational inequalities.

Lemma 1.1

If \(f: \mathcal{H}\rightarrow \mathcal{H}\) is a one-to-one correspondence, then \(\xi ^{*}\in \mathcal{H}\) is a solution of \(\operatorname{IVI}(C,f)\) if and only if \(u^{*}=f(\xi ^{*})\) is a solution of \(\operatorname{VI}(C,f ^{-1})\).
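The equivalence can be checked in one line. The sketch below assumes the usual formulations: \(\operatorname{IVI}(C,f)\) asks for \(\xi ^{*}\in \mathcal{H}\) with \(f(\xi ^{*})\in C\) and \(\langle \xi ^{*}, v-f(\xi ^{*})\rangle \geq 0\) for all \(v\in C\), while \(\operatorname{VI}(C,F)\) asks for \(u^{*}\in C\) with \(\langle F(u^{*}), v-u^{*}\rangle \geq 0\) for all \(v\in C\). Substituting \(u^{*}=f(\xi ^{*})\), that is, \(\xi ^{*}=f^{-1}(u^{*})\), gives

$$ f\bigl(\xi ^{*}\bigr)\in C, \quad \bigl\langle \xi ^{*}, v-f\bigl(\xi ^{*}\bigr) \bigr\rangle \geq 0 \quad \Longleftrightarrow \quad u^{*}\in C, \quad \bigl\langle f^{-1}\bigl(u^{*}\bigr), v-u^{*} \bigr\rangle \geq 0, \quad \forall v\in C, $$

and the right-hand side is exactly \(\operatorname{VI}(C,f^{-1})\).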

As for the existence and uniqueness of solutions for Lipschitz continuous and strongly monotone inverse variational inequalities, Luo et al. [34] proved the following result.

Lemma 1.2

([34, Lemma 1.3])

If \(f: \mathcal{H}\rightarrow \mathcal{H}\) is L-Lipschitz continuous and η-strongly monotone, and there exists some positive constant β such that

and

then T is a strict contraction with the coefficient

Hence the inverse variational inequality \(\operatorname{IVI}(C,f)\) has one and only one solution.

It is easy to see that conditions (4)–(6) are not only rather harsh, but also nonessential.

The main iterative algorithms to approximate the inverse variational inequalities (3) are projection methods [28]. He et al. [21, 23] introduced PPA-based methods, exact proximal point algorithm and inexact proximal point algorithm, for monotone inverse variational inequalities and constrained ‘black-box’ inverse variational inequalities, respectively. They also gave the prediction-correction proximal point algorithm and the adaptive prediction-correction proximal point algorithm. Under certain conditions, the convergence rate of these algorithms is proved to be linear. Based on Lemma 1.2, Luo et al. [34] introduced several regularized iterative algorithms to solve monotone and Lipschitz continuous inverse variational inequalities.

There is also a lot of research on the properties of the inverse variational inequalities. We refer the reader to the papers [29, 30, 35, 37], and the references therein for the well-posedness of inverse variational inequalities. Very recently, Chen et al. [6] obtained the optimality conditions for solutions of constrained inverse vector variational inequalities by means of nonlinear scalarization.

Although inverse variational inequalities have a wide range of applications, they have not received enough attention. For example, some fundamental problems, including the existence and uniqueness of solutions, still need further study.

In this paper, based on Lemma 1.1, we firstly prove that \(\operatorname{IVI}(C,f)\) has a unique solution if f is Lipschitz continuous and strongly monotone. This means that conditions (4)–(6) are all redundant and therefore can be eliminated. By making full use of the existing results, an iterative algorithm, named alternating contraction projection method (ACPM), is proposed for solving Lipschitz continuous and strongly monotone inverse variational inequalities. The strong convergence of the ACPM is proved and the convergence rate estimate is obtained. Furthermore, for the case that the structure of C is very complex and the projection operator \(P_{C}\) is difficult to calculate, we introduce the alternating contraction relaxation projection method (ACRPM) and prove its strong convergence. Some numerical experiments, which show advantages of the proposed algorithms, are provided. The results in this paper extend and improve the related existing results.

2 Preliminaries

In this section, we list some concepts and tools that will be used in the proofs of the main results. In the sequel, we use the notations:

(i) → denotes strong convergence;

(ii) ⇀ denotes weak convergence;

(iii) \(\omega _{w}(x_{n}) =\{x\mid \exists \{x_{n_{k}}\}_{k=1} ^{\infty }\subset \{x_{n}\}_{n=1}^{\infty }\text{ such that } x_{n_{k}} \rightharpoonup x\}\) denotes the weak ω-limit set of \(\{x_{n}\}_{n=1}^{\infty }\).

The next inequality is trivial but in common use.

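Lemma 2.1

For all \(x, y\in \mathcal{H}\), there holds the inequality

$$ \Vert x+y \Vert ^{2}\leq \Vert x \Vert ^{2}+2\langle y, x+y\rangle . $$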

Definition 2.1

Let \(f: \mathcal{H}\rightarrow \mathcal{H}\) be a single-valued mapping. f is said to be

(i) monotone if

$$ \bigl\langle f(x)-f(y),x-y \bigr\rangle \geq 0, \quad \forall x, y\in \mathcal{H}; $$

(ii) η-strongly monotone if there exists a constant \(\eta > 0\) such that

$$ \bigl\langle f(x)-f(y),x-y \bigr\rangle \geq \eta \Vert x-y \Vert ^{2} , \quad \forall x, y \in \mathcal{H}; $$

(iii) L-Lipschitz continuous if there exists a constant \(L > 0\) such that

$$ \bigl\Vert f(x)-f(y) \bigr\Vert \leq L \Vert x-y \Vert , \quad \forall x, y\in \mathcal{H}; $$

(iv) nonexpansive if

$$ \bigl\Vert f(x)-f(y) \bigr\Vert \leq \Vert x-y \Vert , \quad \forall x, y\in \mathcal{H}; $$

(v) firmly nonexpansive if

$$ \bigl\Vert f(x)-f(y) \bigr\Vert ^{2}\leq \Vert x-y \Vert ^{2}- \bigl\Vert \bigl(x-f(x)\bigr)-\bigl(y-f(y)\bigr) \bigr\Vert ^{2} , \quad \forall x, y\in \mathcal{H}. $$

It is well known that \(P_{C}\) is also firmly nonexpansive.

For the projection operator \(P_{C}\), the following characteristic inequality holds.

Lemma 2.2

([15, Sect. 3])

Let \(z\in \mathcal{H}\) and \(u\in C\). Then \(u=P_{C}z\) if and only if

$$ \langle z-u, v-u\rangle \leq 0, \quad \forall v\in C. $$

By using Lemma 2.2 and the definition of variational inequality (1), we get the following results.

Lemma 2.3

\(u\in C\) is a solution of \(\operatorname{VI}(C,F)\) if and only if u satisfies the fixed-point equation

$$ u=P_{C}(I-\mu F)u, $$

where μ is an arbitrary positive constant.

Lemma 2.4

([5, Theorem 5])

Let C be a nonempty closed convex subset of a real Hilbert space \(\mathcal{H}\), and let \(F: \mathcal{H}\rightarrow \mathcal{H}\) be L-Lipschitz continuous and η-strongly monotone. Let λ and μ be constants such that \(\lambda \in (0,1) \) and \(\mu \in (0, \frac{2\eta }{L^{2}})\), respectively, and let \(T^{\mu }=P_{C}( I-\mu F)\) (or \(I-\mu F \)) and \(T^{\lambda ,\mu }=P _{C}( I-\lambda \mu F)\) (or \(I-\lambda \mu F \)). Then \(T^{\mu }\) and \(T^{\lambda ,\mu } \) are all strict contractions with coefficients \(1-\tau \) and \(1-\lambda \tau \), respectively, where \(\tau = \frac{1}{2}\mu (2\eta -\mu L^{2})\).
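The contraction coefficient in Lemma 2.4 can be sanity-checked numerically. The following is a minimal sketch on the real line, not part of the original analysis: the operator `F(x) = 2x + sin(x)` (3-Lipschitz, 1-strongly monotone), the interval `[1, 10]`, the step `mu = 1/9`, and the sample grid are all illustrative assumptions.

```python
import math

def F(x):
    # Hypothetical operator: its derivative 2 + cos(x) lies in [1, 3],
    # so F is 3-Lipschitz continuous and 1-strongly monotone on R.
    return 2.0 * x + math.sin(x)

def project_interval(x, lo=1.0, hi=10.0):
    # Metric projection onto the (assumed) set C = [lo, hi].
    return min(max(x, lo), hi)

L_const, eta = 3.0, 1.0
mu = 1.0 / 9.0                                   # any value in (0, 2*eta/L^2)
tau = 0.5 * mu * (2.0 * eta - mu * L_const ** 2)  # tau from Lemma 2.4

def T_mu(x):
    # T^mu = P_C(I - mu F)
    return project_interval(x - mu * F(x))

def worst_ratio(points):
    # Largest observed |T(x) - T(y)| / |x - y| over all distinct sample pairs.
    worst = 0.0
    for x in points:
        for y in points:
            if x != y:
                worst = max(worst, abs(T_mu(x) - T_mu(y)) / abs(x - y))
    return worst

points = [-20.0 + 0.5 * k for k in range(81)]
ratio = worst_ratio(points)
print(ratio, 1.0 - tau)
```

On this grid the observed ratio stays below the bound \(1-\tau \), as the lemma predicts; the bound is generally not tight.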

The following two lemmas are crucial for the analysis of the proposed algorithms.

Lemma 2.5

([33])

Assume that \(\{a_{n}\}_{n=0}^{\infty }\) is a sequence of nonnegative real numbers such that

$$ a_{n+1}\leq (1-\gamma _{n})a_{n}+\gamma _{n}\delta _{n}, \quad n\geq 0, $$

where \(\{\gamma _{n}\}_{n=0}^{\infty }\) is a sequence in (0,1) and \(\{\delta _{n}\}_{n=0}^{\infty }\) is a real sequence such that

(i) \(\sum_{n=0}^{\infty } \gamma _{n}=\infty \);

(ii) \(\limsup_{n\rightarrow \infty }\delta _{n} \leq 0 \) or \(\sum_{n=0}^{\infty } \vert \gamma _{n}\delta _{n} \vert <\infty \).

Then \(\lim_{n\rightarrow \infty } a_{n}=0\).

Lemma 2.6

([27])

Assume that \(\{s_{n}\}_{n=0}^{\infty }\) is a sequence of nonnegative real numbers such that

$$ s_{n+1}\leq (1-\gamma _{n})s_{n}+\gamma _{n}\delta _{n}, \quad n\geq 0, $$

$$ s_{n+1}\leq s_{n}-\eta _{n}+\alpha _{n}, \quad n\geq 0, $$

where \(\{\gamma _{n}\}_{n=0}^{\infty }\) is a sequence in \((0,1)\), \(\{\eta _{n}\}_{n=0}^{\infty }\) is a sequence of nonnegative real numbers, and \(\{\delta _{n}\}_{n=0}^{\infty }\) and \(\{\alpha _{n}\}_{n=0} ^{\infty }\) are two sequences in \(\mathbb{R}\) such that

(i) \(\sum_{n=0}^{\infty } \gamma _{n}=\infty \),

(ii) \(\lim_{n\rightarrow \infty } \alpha _{n}=0\),

(iii) \(\lim_{k\rightarrow \infty }\eta _{n_{k}}=0\) implies \(\limsup_{k\rightarrow \infty }\delta _{n_{k}}\leq 0\) for any subsequence \(\{n_{k}\}_{k=0}^{\infty }\subset \{n\}_{n=0}^{\infty }\).

Then \(\lim_{n\rightarrow \infty } s_{n}=0\).

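Recall that a function \(\varphi : \mathcal{H}\rightarrow \mathbb{R}\) is called convex if

$$ \varphi \bigl((1-\lambda )x+\lambda y\bigr)\leq (1-\lambda )\varphi (x)+\lambda \varphi (y), \quad \forall \lambda \in [0,1], \forall x, y\in \mathcal{H}. $$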

Recall that an element \(g\in \mathcal{H}\) is said to be a subgradient of a convex function \(\varphi : \mathcal{H} \rightarrow \mathbb{R}\) at u if

$$ \varphi (v)\geq \varphi (u)+\langle g, v-u\rangle , \quad \forall v\in \mathcal{H}. \tag{7} $$

A convex function \(\varphi : \mathcal{H}\rightarrow \mathbb{R}\) is said to be subdifferentiable at u, if it has at least one subgradient at u. The set of subgradients of φ at u is called the subdifferential of φ at u, which is denoted by \(\partial \varphi (u)\). Relation (7) is called the subdifferential inequality of φ at u. A function φ is called subdifferentiable, if it is subdifferentiable at every \(u\in \mathcal{H}\). If a convex function φ is differentiable, then its gradient and subgradient coincide.

Recall that a function \(\varphi : \mathcal{H}\rightarrow \mathbb{R}\) is said to be weakly lower semi-continuous (w-lsc) at u if \(u_{n}\rightharpoonup u\) implies

$$ \varphi (u)\leq \liminf_{n\rightarrow \infty }\varphi (u_{n}). $$

3 An existence and uniqueness theorem

In this section, with the help of Lemma 1.1, an existence and uniqueness theorem of solutions for inverse variational inequalities is established.

Firstly, applying Lemma 2.3, Lemma 2.4, and Banach’s contraction mapping principle, it is not difficult to get the following well-known result.

Theorem 3.1

Let C be a nonempty closed convex subset of a real Hilbert space \(\mathcal{H}\), and let \(F: C \rightarrow \mathcal{H}\) be a Lipschitz continuous and strongly monotone operator. Then the variational inequality \(\operatorname{VI}(C,F)\) has a unique solution. Furthermore, if \(F: C \rightarrow \mathcal{H}\) is L-Lipschitz continuous and η-strongly monotone, then for any \(\mu \in (0, \frac{2\eta }{L ^{2}})\), \(P_{C}(I-\mu F): C\rightarrow C\) is a strict contraction and the sequence \(\{x_{n}\}_{n=0}^{\infty }\) generated by the gradient projection method

$$ x_{n+1}=P_{C}(I-\mu F)x_{n}, \quad n\geq 0, $$

converges strongly to the unique solution of \(\operatorname{VI}(C,F)\), where the initial guess \(x_{0}\) can be selected in \(\mathcal{H}\) arbitrarily.

Secondly, we show the following two facts.

Lemma 3.1

If \(f: \mathcal{H}\rightarrow \mathcal{H}\) is Lipschitz continuous and strongly monotone, then \(f: \mathcal{H}\rightarrow \mathcal{H}\) is a bijection and thus \(f^{-1}: \mathcal{H}\rightarrow \mathcal{H}\) is a single-valued mapping.

Proof

In order to complete the proof, it suffices to verify that, for any \(v\in \mathcal{H}\), there exists only one \(u\in \mathcal{H}\) such that \(f(u)=v\). Suppose that f is L-Lipschitz continuous and η-strongly monotone with \(L>0\) and \(\eta >0\). Take \(\mu \in (0, \frac{2\eta }{L^{2}})\) and set \(T=(I-\mu f)+\mu v: \mathcal{H}\rightarrow \mathcal{H}\). It is easy to verify that \(u\in \mathcal{H}\) solves the equation \(f(u)=v\) if and only if \(u\in \mathcal{H}\) is a fixed point of T. Using Lemma 2.4, T is a strict contraction and hence T has only one fixed point. Consequently, the equation \(f(u)=v\) has only one solution and this completes the proof. □
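The proof is constructive: iterating \(T=(I-\mu f)+\mu v\) approximates \(f^{-1}(v)\). The snippet below is a minimal scalar sketch of that idea; the function name `solve_by_contraction`, the test operator `f(x) = 2x + sin(x)` (with \(L=3\), \(\eta =1\)), the starting point, and the stopping tolerance are illustrative assumptions rather than anything prescribed by the paper.

```python
import math

def solve_by_contraction(f, v, mu, u0=0.0, tol=1e-12, max_iter=100000):
    """Approximate the solution u of f(u) = v by iterating T(u) = u - mu*f(u) + mu*v."""
    u = u0
    for _ in range(max_iter):
        u_next = u - mu * f(u) + mu * v   # one application of T
        if abs(u_next - u) <= tol:        # successive iterates agree: stop
            return u_next
        u = u_next
    return u

def f(x):
    # Hypothetical operator, 3-Lipschitz and 1-strongly monotone on R.
    return 2.0 * x + math.sin(x)

# mu must lie in (0, 2*eta/L^2) = (0, 2/9) for the assumed constants.
u = solve_by_contraction(f, v=5.0, mu=1.0 / 9.0)
residual = abs(f(u) - 5.0)
print(u, residual)
```

The same fixed-point iteration is what Step 2 of the algorithms in Sects. 4 and 5 uses to approximate \(f^{-1}(u_{n})\).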

Lemma 3.2

If \(f:\mathcal{H}\rightarrow \mathcal{H}\) is L-Lipschitz continuous and η-strongly monotone, then \(f^{-1}: \mathcal{H}\rightarrow \mathcal{H}\) is \(\frac{1}{\eta }\)-Lipschitz continuous and \(\frac{ \eta }{L^{2}}\)-strongly monotone.

Proof

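For any \(x,y\in \mathcal{H}\), setting \(f^{-1}(x)=u\) and \(f^{-1}(y)=v\), and using the strong monotonicity of f, we have

$$ \eta \Vert u-v \Vert ^{2}\leq \bigl\langle f(u)-f(v), u-v \bigr\rangle =\langle x-y, u-v\rangle \leq \Vert x-y \Vert \Vert u-v \Vert . $$

Consequently,

$$ \bigl\Vert f^{-1}(x)-f^{-1}(y) \bigr\Vert = \Vert u-v \Vert \leq \frac{1}{\eta } \Vert x-y \Vert , $$

which implies that \(f^{-1}\) is \(\frac{1}{\eta }\)-Lipschitz continuous.

On the other hand, noting that f is L-Lipschitz continuous, we obtain

$$ \bigl\langle f^{-1}(x)-f^{-1}(y), x-y \bigr\rangle = \bigl\langle u-v, f(u)-f(v) \bigr\rangle \geq \eta \Vert u-v \Vert ^{2}\geq \frac{\eta }{L^{2}} \bigl\Vert f(u)-f(v) \bigr\Vert ^{2}=\frac{\eta }{L^{2}} \Vert x-y \Vert ^{2}, $$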

which yields that \(f^{-1}\) is \(\frac{\eta }{L^{2}}\)-strongly monotone. □
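The two constants of Lemma 3.2 can be checked numerically on a toy example. The sketch below assumes the hypothetical scalar operator `f(x) = 2x + sin(x)` (so \(L=3\), \(\eta =1\)) and inverts it by bisection; the helper `f_inv` and the sample points are illustrative choices only.

```python
import math

def f(x):
    # Hypothetical operator, 3-Lipschitz and 1-strongly monotone on R.
    return 2.0 * x + math.sin(x)

def f_inv(v, iters=200):
    # Bisection on the increasing function f; since |sin| <= 1,
    # f((v - 1)/2) <= v <= f((v + 1)/2) brackets the root.
    lo, hi = (v - 1.0) / 2.0, (v + 1.0) / 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) < v:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

L_const, eta = 3.0, 1.0
samples = [-10.0 + 0.7 * k for k in range(30)]
pairs = [(x, y) for x in samples for y in samples if x != y]

# f^{-1} should be (1/eta)-Lipschitz continuous ...
lipschitz_ok = all(
    abs(f_inv(x) - f_inv(y)) <= abs(x - y) / eta + 1e-12 for x, y in pairs
)
# ... and (eta/L^2)-strongly monotone.
monotone_ok = all(
    (f_inv(x) - f_inv(y)) * (x - y) >= eta / L_const ** 2 * (x - y) ** 2 - 1e-12
    for x, y in pairs
)
print(lipschitz_ok, monotone_ok)
```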

Theorem 3.2

Let C be a nonempty closed convex subset of a real Hilbert space \(\mathcal{H}\), and let \(f: \mathcal{H}\rightarrow \mathcal{H}\) be a Lipschitz continuous and strongly monotone operator. Then the inverse variational inequality \(\operatorname{IVI}(C,f)\) has a unique solution.

Proof

From Lemma 3.1 and Lemma 3.2, we have that \(f^{-1}\) is single-valued, \(\frac{1}{\eta }\)-Lipschitz continuous, and \(\frac{\eta }{L^{2}}\)-strongly monotone. Thus, by using Theorem 3.1, we assert that \(\operatorname{VI}(C, f^{-1})\) has a unique solution. From Lemma 1.1, it follows that the inverse variational inequality problem \(\operatorname{IVI}(C,f)\) also has a unique solution. □

Remark 3.1

In Theorem 3.2, we just need that \(f: \mathcal{H}\rightarrow \mathcal{H}\) is Lipschitz continuous and strongly monotone and do not need (4)–(6). So, our result essentially improves Lemma 1.2.

4 An alternating contraction projection method

Let C be a nonempty closed convex subset of a real Hilbert space \(\mathcal{H}\), and let \(f: \mathcal{H}\rightarrow \mathcal{H}\) be an L-Lipschitz continuous and η-strongly monotone operator. Using Theorem 3.2, we assert that the inverse variational inequality \(\operatorname{IVI}(C,f)\) has a unique solution, which is denoted by \(\xi ^{*}\). According to Lemma 1.1, \(u^{*}=f(\xi ^{*})\) is the unique solution of \(\operatorname{VI}(C,f^{-1})\). Based on this fundamental fact and the gradient projection method for solving \(\operatorname{VI}(C,f^{-1})\), in this section, we introduce an iterative algorithm for finding the unique solution \(\xi ^{*}\) of \(\operatorname{IVI}(C,f)\).

Set \(\tilde{L}=\frac{ 1}{ \eta }\) and \(\tilde{\eta }=\frac{ \eta }{ L^{2}}\). Take two positive constants μ and α such that \(0 < \mu <\frac{ 2\tilde{\eta }}{ \tilde{L}^{2}}\) and \(0 < \alpha < \frac{ 2\eta }{ L^{2}}\), respectively, and a sequence of positive numbers \(\{ \varepsilon _{n}\}_{n=0}^{\infty }\) such that \(\varepsilon _{n}\rightarrow 0\) as \(n \rightarrow \infty \). The alternating contraction projection method (ACPM) is defined as follows.

Algorithm 4.1

(The alternating contraction projection method)

Step 1: Take \(u_{0}\in C\) and \(\xi _{0}^{(0)}\in \mathcal{H}\) arbitrarily and set \(n:=0\).

Step 2: For the current \(u_{n}\) and \(\xi _{n}^{(0)}\) (\(n\geq 0\)), calculate

$$ \xi ^{(m+1)}_{n}=\xi ^{(m)}_{n}- \alpha f\bigl(\xi ^{(m)}_{n} \bigr)+\alpha u_{n}, \quad m=0,1,\ldots,m _{n}, \tag{9} $$

where \(m_{n}\) is the smallest nonnegative integer such that

$$ \frac{(1-\tau )^{m_{n}+1}}{\tau } \bigl\Vert \xi ^{(1)}_{n}- \xi ^{(0)}_{n} \bigr\Vert \leq \varepsilon _{n}, \tag{10} $$

and \(\tau =\frac{1}{2}\alpha (2\eta -\alpha L^{2})\). Set

$$ \xi _{n}=\xi ^{(m_{n}+1)}_{n}. \tag{11} $$

Step 3: Calculate

$$ u_{n+1}=P_{C}(u_{n}-\mu \xi _{n}), \tag{12} $$

set

$$ \xi ^{(0)}_{n+1}=\xi _{n}, \tag{13} $$

put \(n:=n+1\), and return to Step 2.
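The steps of Algorithm 4.1 can be sketched in a few lines for a scalar problem. This is an illustrative sketch, not the authors' Matlab code: the operator `f(x) = 2x + sin(x)` (with \(L=3\), \(\eta =1\)), the set \(C=[1,10]\), and the function name `acpm` are assumptions, and \(m_{n}\) is taken as the smallest nonnegative integer satisfying (10), consistent with the values \(m_{n}=0\) reported in Sect. 6. The tolerance \(\varepsilon _{n}=(1-\tilde{\tau })^{n+1}\) is the choice used in Theorem 4.2.

```python
import math

def acpm(f, project, L_const, eta, alpha, mu, u0, xi0, n_outer):
    """Sketch of Algorithm 4.1 for a scalar problem; returns (u, xi, inner_counts)."""
    tau = 0.5 * alpha * (2.0 * eta - alpha * L_const ** 2)
    L_t, eta_t = 1.0 / eta, eta / L_const ** 2       # constants of f^{-1} (Lemma 3.2)
    tau_t = 0.5 * mu * (2.0 * eta_t - mu * L_t ** 2)
    u, xi = u0, xi0
    inner_counts = []
    for n in range(n_outer):
        eps_n = (1.0 - tau_t) ** (n + 1)             # tolerance epsilon_n
        m, first_step = 0, None
        while True:
            xi_next = xi - alpha * f(xi) + alpha * u  # inner iteration (9)
            if first_step is None:
                first_step = abs(xi_next - xi)        # |xi^(1) - xi^(0)|
            xi = xi_next
            # stopping test (10), checked for m = 0, 1, 2, ...
            if (1.0 - tau) ** (m + 1) / tau * first_step <= eps_n:
                break
            m += 1
        inner_counts.append(m)                        # m_n; xi is now xi_n as in (11)
        u = project(u - mu * xi)                      # projection step (12)
    return u, xi, inner_counts

def f(x):
    # Hypothetical operator, 3-Lipschitz and 1-strongly monotone on R.
    return 2.0 * x + math.sin(x)

def project(x):
    # Projection onto the assumed set C = [1, 10].
    return min(max(x, 1.0), 10.0)

u, xi, counts = acpm(f, project, L_const=3.0, eta=1.0, alpha=1.0 / 9.0,
                     mu=1.0 / 9.0, u0=5.0, xi0=100.0, n_outer=300)
print(u, xi, f(xi))
```

For this toy problem \(f^{-1}\) is positive on C, so the solution of \(\operatorname{VI}(C,f^{-1})\) is the left endpoint \(u^{*}=1\), and the returned `xi` should satisfy \(f(\xi )\approx 1\).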

We now establish the strong convergence of Algorithm 4.1.

Theorem 4.1

Let C be a nonempty closed convex subset of a real Hilbert space \(\mathcal{H}\), and let \(f: \mathcal{H}\rightarrow \mathcal{H}\) be an L-Lipschitz continuous and η-strongly monotone operator. Then the two sequences \(\{\xi _{n}\}_{n=0}^{\infty }\) and \(\{u_{n}\}_{n=0} ^{\infty }\) generated by Algorithm 4.1 converge strongly to the unique solution \(\xi ^{*} \) of \(\operatorname{IVI}(C,f)\) and the unique solution \(u^{*}\) of \(\operatorname{VI}(C,f^{-1})\), respectively.

Proof

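First of all, for each \(n\geq 0\) and \(u_{n}\in C\), we define a mapping \(T_{n}: \mathcal{H}\rightarrow \mathcal{H}\) by

$$ T_{n}\xi =\xi -\alpha f(\xi )+\alpha u_{n}, \quad \xi \in \mathcal{H}. $$

From Lemma 2.4, \(T_{n}\) is a strict contraction with the coefficient \(1-\tau \). Moreover, Banach’s contraction mapping principle implies that the sequence \(\{\xi _{n}^{(m)}\}_{m=0}^{\infty }\) generated by (9) converges strongly to \(f^{-1}(u_{n})\) as \(m\rightarrow \infty \) and there exists the error estimate

$$ \bigl\Vert \xi _{n}^{(m)}-f^{-1}(u_{n}) \bigr\Vert \leq \frac{(1-\tau )^{m}}{\tau } \bigl\Vert \xi ^{(1)}_{n}- \xi ^{(0)}_{n} \bigr\Vert , \quad m\geq 1. $$

From (9)–(11) and this estimate, we have

$$ \bigl\Vert \xi _{n}-f^{-1}(u_{n}) \bigr\Vert \leq \varepsilon _{n}. \tag{15} $$

Secondly, using Lemma 2.4 again, we claim that the mapping \(P_{C}(I-\mu f^{-1}): C\rightarrow C\) is also a strict contraction with the coefficient \(1-\tilde{\tau }\), where \(\tilde{\tau }=\frac{1}{2}\mu (2\tilde{\eta }-\mu \tilde{L}^{2})\). Based on these facts and noting \(u^{*}=P_{C}(u^{*}-\mu f^{-1}(u^{*}))\), we have from (15) that

$$ \begin{aligned} \bigl\Vert u_{n+1}-u^{*} \bigr\Vert &= \bigl\Vert P_{C}(u_{n}-\mu \xi _{n})-P_{C}\bigl(u^{*}-\mu f^{-1}\bigl(u^{*}\bigr)\bigr) \bigr\Vert \\ &\leq \bigl\Vert P_{C}\bigl(u_{n}-\mu f^{-1}(u_{n})\bigr)-P_{C}\bigl(u^{*}-\mu f^{-1}\bigl(u^{*}\bigr)\bigr) \bigr\Vert +\mu \bigl\Vert \xi _{n}-f^{-1}(u_{n}) \bigr\Vert \\ &\leq (1-\tilde{\tau }) \bigl\Vert u_{n}-u^{*} \bigr\Vert +\mu \varepsilon _{n}. \end{aligned} \tag{16} $$

Applying Lemma 2.5 to (16), we obtain that \(\Vert u_{n}-u^{*} \Vert \rightarrow 0\) as \(n\rightarrow \infty \).

Finally, noting that \(\xi ^{*}=f^{-1}(u^{*})\) and \(f^{-1}\) is L̃-Lipschitz continuous, we have from (15) that

$$ \bigl\Vert \xi _{n}-\xi ^{*} \bigr\Vert \leq \bigl\Vert \xi _{n}-f^{-1}(u_{n}) \bigr\Vert + \bigl\Vert f^{-1}(u_{n})-f^{-1}\bigl(u^{*}\bigr) \bigr\Vert \leq \varepsilon _{n}+\tilde{L} \bigl\Vert u_{n}-u^{*} \bigr\Vert . \tag{17} $$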

Thus it concludes from (17) that \(\Vert \xi _{n}-\xi ^{*} \Vert \rightarrow 0\) holds as \(n\rightarrow \infty \). □

As for the convergence rate of Algorithm 4.1, we have the following result.

Theorem 4.2

Under the conditions of Theorem 4.1, we obtain the following estimates of convergence rate for Algorithm 4.1:

and

In particular, if we take \(\varepsilon _{n}=(1- \tilde{\tau })^{n+1}\) (\(n\geq 0\)), then there hold

and

Proof

Estimate (18) follows by applying (16) repeatedly. Combining (18) and (17) gives (19). Estimates (20) and (21) are obtained by substituting \(\varepsilon _{n}=(1- \tilde{\tau })^{n+1}\) into (18) and (19), respectively. □

5 An alternating contraction relaxation projection method

Algorithm 4.1 (ACPM) can be well implemented if the structure of the set C is very simple and the projection operator \(P_{C}\) is easy to calculate. However, the calculation of a projection onto a closed convex subset is generally difficult. To overcome this difficulty, Fukushima [13] suggested a relaxation projection method to calculate the projection onto a level set of a convex function by computing a sequence of projections onto half-spaces containing the original level set. Since its inception, the relaxation technique has received much attention and has been used by lots of authors to construct iterative algorithms for solving nonlinear problems, see [25] and the references therein.

We now consider the inverse variational inequality problem \(\operatorname{IVI}(C,f)\), where \(f: \mathcal{H}\rightarrow \mathcal{H}\) is a Lipschitz continuous and strongly monotone operator. Let the closed convex subset C be the level set of a convex function, i.e.,

$$ C=\bigl\{ x\in \mathcal{H}\mid c(x)\leq 0\bigr\} , \tag{22} $$

where \(c: \mathcal{H}\rightarrow \mathbb{R}\) is a convex function. We always assume that c is weakly lower semi-continuous, subdifferentiable on \(\mathcal{H}\), and ∂c is a bounded operator (i.e., bounded on bounded sets). It is worth noting that the subdifferential operator is bounded for a convex function defined on a finite dimensional Hilbert space (see [3, Corollary 7.9]).

Take the constants \(\tilde{L}=\frac{1}{\eta }\), \(\tilde{\eta }=\frac{\eta }{L^{2}}\), μ, and α and the sequence of positive numbers \(\{\varepsilon _{n}\}_{n=0}^{\infty }\) as in the last section.

Adopting the relaxation technique of Fukushima [13], we introduce a relaxed projection algorithm for computing the unique solution \(\xi ^{*}\) of \(\operatorname{IVI}(C,f)\), where C is given as in (22).

Algorithm 5.1

(The alternating contraction relaxation projection method)

Step 1: Take \(u_{0}\in C\) and \(\xi _{0}^{(0)}\in \mathcal{H}\) arbitrarily and set \(n:=0\).

Step 2: For the current \(u_{n}\) and \(\xi _{n}^{(0)}\) (\(n\geq 0\)), calculate

$$ \xi ^{(m+1)}_{n}=\xi ^{(m)}_{n}- \alpha f\bigl(\xi ^{(m)}_{n} \bigr)+\alpha u_{n}, \quad m=0,1,\ldots,m _{n}, \tag{23} $$

where \(m_{n}\) is the smallest nonnegative integer such that

$$ \frac{(1-\tau )^{m_{n}+1}}{\tau } \bigl\Vert \xi ^{(1)}_{n}- \xi ^{(0)}_{n} \bigr\Vert \leq \varepsilon _{n}, \tag{24} $$

and \(\tau =\frac{1}{2}\alpha (2\eta -\alpha L^{2})\). Set

$$ \xi _{n}=\xi ^{(m_{n}+1)}_{n}. \tag{25} $$

Step 3: Calculate

$$ u_{n+1}=P_{C_{n}}(u_{n}-\lambda _{n}\mu \xi _{n}), \tag{26} $$

where

$$ C_{n}=\bigl\{ z\in \mathcal{H}\mid c(u_{n})+ \langle \nu _{n}, z-u_{n}\rangle \leq 0\bigr\} , \quad \nu _{n}\in \partial c(u_{n}) \quad \text{and} \quad \lambda _{n}\in (0, 1). \tag{27} $$

Set

$$ \xi ^{(0)}_{n+1}=\xi _{n}, $$

put \(n:=n+1\), and return to Step 2.
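The relaxed method replaces \(P_{C}\) by a projection onto a half-space, which has a closed form. The sketch below illustrates Algorithm 5.1 in \(\mathbb{R}^{2}\) under several assumptions that are not from the paper: the affine operator \(f(\xi )=2(\xi -a)\) with \(a=(-1,0)\) (so \(L=\eta =2\)), the unit ball \(c(z)=\Vert z \Vert ^{2}-1\), the sequences \(\lambda _{n}=(n+2)^{-3/4}\) and \(\varepsilon _{n}=(n+1)^{-2}\), and the helper names. As in the previous sketch, \(m_{n}\) is taken as the smallest nonnegative integer satisfying (24).

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

def project_halfspace(y, u, c_u, nu):
    """Project y onto {z : c_u + <nu, z - u> <= 0}, the set C_n in (27)."""
    violation = c_u + dot(nu, [yi - ui for yi, ui in zip(y, u)])
    nn = dot(nu, nu)
    if violation <= 0.0 or nn == 0.0:
        return list(y)                       # y already lies in the half-space
    return [yi - violation / nn * ni for yi, ni in zip(y, nu)]

def acrpm(f, c, subgrad, L_const, eta, alpha, mu, u0, xi0, lam, eps, n_outer):
    tau = 0.5 * alpha * (2.0 * eta - alpha * L_const ** 2)
    u, xi = list(u0), list(xi0)
    for n in range(n_outer):
        m, first = 0, None
        while True:
            fx = f(xi)
            nxt = [x - alpha * g + alpha * w for x, g, w in zip(xi, fx, u)]  # (23)
            if first is None:
                first = norm([p - q for p, q in zip(nxt, xi)])
            xi = nxt
            if (1.0 - tau) ** (m + 1) / tau * first <= eps(n):               # (24)
                break
            m += 1
        y = [w - lam(n) * mu * x for w, x in zip(u, xi)]
        u = project_halfspace(y, u, c(u), subgrad(u))                        # (26)
    return u, xi

a = [-1.0, 0.0]
u_final, xi_final = acrpm(
    f=lambda x: [2.0 * (xi - ai) for xi, ai in zip(x, a)],   # hypothetical f
    c=lambda z: dot(z, z) - 1.0,                             # C = closed unit ball
    subgrad=lambda z: [2.0 * zi for zi in z],                # gradient of c
    L_const=2.0, eta=2.0, alpha=0.4, mu=1.0,
    u0=[0.0, 0.5], xi0=[0.0, 0.0],
    lam=lambda n: (n + 2) ** -0.75,
    eps=lambda n: 1.0 / (n + 1) ** 2,
    n_outer=3000,
)
print(u_final, xi_final)
```

For this toy instance \(f^{-1}(u)=u/2+a\), so \(\operatorname{VI}(C,f^{-1})\) amounts to projecting \(-2a=(2,0)\) onto the unit ball; the iterates should approach \(u^{*}=(1,0)\) and \(\xi ^{*}=(-0.5,0)\).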

The next theorem establishes the strong convergence of Algorithm 5.1.

Theorem 5.1

Let C be given by (22), and let \(f: \mathcal{H}\rightarrow \mathcal{H}\) be an L-Lipschitz continuous and η-strongly monotone operator. Assume that \(c: \mathcal{H}\rightarrow \mathbb{R}\) is weakly lower semi-continuous and subdifferentiable on \(\mathcal{H}\) and ∂c is a bounded operator. Suppose that the sequence \(\{\lambda _{n}\}_{n=0}^{\infty }\subset (0, 1)\) satisfies (i) \(\lambda _{n}\rightarrow 0\) as \(n\rightarrow \infty \) and (ii) \(\sum_{n=0}^{\infty }\lambda _{n}=\infty \). Then the two sequences \(\{\xi _{n}\}_{n=0}^{\infty }\) and \(\{u_{n}\}_{n=0}^{ \infty }\) generated by Algorithm 5.1 converge strongly to the unique solution \(\xi ^{*} \) of \(\operatorname{IVI}(C,f)\) and the unique solution \(u^{*}\) of \(\operatorname{VI}(C,f^{-1})\), respectively.

Proof

For convenience, we denote by M a positive constant, which represents different values in different places. Firstly, we verify that \(\{u_{n}\}_{n=0}^{\infty }\) is bounded. Indeed, from the subdifferential inequality (7) and the definition of \(C_{n}\), it is easy to verify that \(C_{n}\supset C\) for all \(n\geq 0\). Similar to (15), we also have

Noting that the projection operator \(P_{C_{n}}\) is nonexpansive, we obtain from (26), (28), and Lemma 2.4 that

where \(\tilde{\tau }=\frac{1}{2}\mu (2\tilde{\eta }-\mu \tilde{L}^{2})\). Inductively, it turns out that

which implies that \(\{u_{n}\}_{n=0}^{\infty }\) is bounded and so is \(\{f^{-1}(u_{n})\}_{n=0}^{\infty }\). Similar to (17), we have

which implies that \(\{\xi _{n}\}_{n=0}^{\infty }\) is bounded. Using (26), (28), Lemma 2.1, and Lemma 2.4, we have

Since the projection operator \(P_{C_{n}}\) is firmly nonexpansive, we get

Setting

then (30) and (32) can be rewritten as the following forms, respectively:

and

From the conditions \(\lambda _{n}\rightarrow 0\) and \(\sum_{n=0}^{\infty } \lambda _{n}=\infty \), it follows that \(\alpha _{n} \rightarrow 0\) and \(\sum_{n=0}^{\infty } \gamma _{n}=\infty \). So, in order to use Lemma 2.6 to complete the proof, it suffices to verify that

implies

for any subsequence \(\{n_{k}\}_{k=0}^{\infty }\subset \{n\}_{n=0}^{ \infty }\). In fact, from \(\Vert u_{n_{k}}-P_{C_{n_{k}}}u _{n_{k}} \Vert \rightarrow 0\) and the fact that ∂c is bounded on bounded sets, it follows that there exists a constant \(\delta > 0 \) such that \(\Vert \nu _{n_{k}} \Vert \leq \delta \) for all \(k\geq 0\). Using (27) and the trivial fact that \(P_{C_{n_{k}}}u_{n_{k}} \in C_{n_{k}}\), we have

For any \(u^{\prime }\in \omega _{w}(u_{n_{k}})\), without loss of generality, we assume that \(u_{n_{k}} \rightharpoonup u^{\prime }\). Using w-lsc of c and (35), we have

which implies that \(u^{\prime }\in C\). Hence \(\omega _{w}(u_{n_{k}})\subset C\).

Noting that \(u^{*}\) is the unique solution of \(\operatorname{VI}(C,f^{-1})\), it turns out that

Since \(\lambda _{n}\rightarrow 0\), \(\varepsilon _{n}\rightarrow 0\), and \(\{f^{-1}(u_{n})\}_{n=0}^{\infty }\) is bounded, it is easy to verify that \(\limsup_{k\rightarrow \infty } \delta _{n_{k}}\leq 0\). Therefore, by using Lemma 2.6 we get that \(u_{n}\rightarrow u^{*}\) as \(n\rightarrow \infty \). Consequently, this together with (29) leads to \(\xi _{n}\rightarrow \xi ^{*}\) and the proof is completed. □

Next we estimate the convergence rate of Algorithm 5.1. Note that the conditions \(\lambda _{n}\rightarrow 0\) and \(\sum_{n=0}^{\infty }\lambda _{n}=\infty \) guarantee the strong convergence, but slow down the convergence rate. Since it is difficult to estimate the asymptotic convergence rate of Algorithm 5.1, we will focus on the convergence rate of Algorithm 5.1 in the non-asymptotic sense. Based on Lemma 1.1 and Theorem 5.1, estimating the convergence rate of Algorithm 5.1 for \(\operatorname{IVI}(C,f)\) is equivalent to estimating the convergence rate of Algorithm 5.1 for \(\operatorname{VI}(C,f^{-1})\), so we will analyze the convergence rate of Algorithm 5.1 for \(\operatorname{VI}(C,f^{-1})\).

The analysis of the convergence rate is based on the fundamental equivalence: a point \(u\in C\) is a solution of \(\operatorname{VI}(C,f^{-1})\) if and only if \(\langle f^{-1}(v),v-u\rangle \geq 0\) holds for all \(v\in C\cap S(u,1)\), where \(S(u,1)\) is the closed sphere with the center u and the radius one (see [4] and [10] for details).

A useful inequality for estimating the convergence rate of Algorithm 5.1 is given as follows.

Lemma 5.1

Let \(\{u_{n}\}_{n=1}^{\infty }\) be the sequence generated by Algorithm 5.1. Assume that the conditions in Theorem 5.1 hold. Suppose \(\sum_{n=0}^{\infty }\lambda _{n}^{2}<\infty \) and \(\sum_{n=0}^{\infty }\lambda _{n}\varepsilon _{n}<\infty \). Then, for any integer \(n\geq 1\), we have a sequence \(\{w_{n}\}_{n=1}^{ \infty }\) which converges strongly to the unique solution \(u^{*}\) of \(\operatorname{VI}(C,f^{-1})\) and

where

Proof

For each \(k\geq 0\) and any \(v\in C\), using (26) and (28), we have

Consequently, we obtain

which together with the monotonicity of \(f^{-1}\) yields

Note the fact that \(\{u_{k}\}_{k=0}^{\infty }\) and \(\{ \Vert f^{-1}(u_{k}) \Vert \}_{k=0}^{\infty }\) are all bounded. So, from the conditions \(\sum_{k=0}^{\infty }\lambda _{k}^{2}<\infty \) and \(\sum_{k=0}^{\infty }\lambda _{k}\varepsilon _{k}<\infty \), it follows that \(\sigma _{k}<\infty \), \(k=1,2,3,4\). Summing inequality (39) over \(k=0,\ldots,n\), we get

Thus (36) follows from (37) and (40).

By Theorem 5.1, \(\{u_{n}\}_{n=0}^{\infty }\) converges strongly to the unique solution \(u^{*}\) of \(\operatorname{VI}(C,f^{-1})\). Since \(w_{n}\) is a convex combination of \(u_{0}, u_{1},\ldots, u_{n}\), it is easy to see that \(\{w_{n}\}_{n=1}^{\infty }\) also converges strongly to \(u^{*}\). □

Finally we are in a position to estimate the convergence rate of Algorithm 5.1.

Theorem 5.2

Assume that the conditions in Theorem 5.1 hold and the condition \(\sum_{n=0}^{\infty }\lambda _{n}\varepsilon _{n}<\infty \) is satisfied. Then, in the ergodic sense, Algorithm 5.1 has the \(O (\frac{1}{n^{1-\alpha }} )\) convergence rate if \(\{\lambda _{n}\}_{n=1}^{\infty }=\{\frac{1}{n^{\alpha }}\}_{n=1}^{ \infty }\) with \(\frac{1}{2}<\alpha <1\) and \(\lambda _{0}=\frac{1}{1- \alpha }\), and has the \(O (\frac{1}{\ln n} )\) convergence rate if \(\{\lambda _{n}\}_{n=1}^{\infty }=\{\frac{1}{n}\}_{n=1}^{ \infty }\).

Proof

For \(\{\lambda _{n}\}_{n=1}^{\infty }=\{\frac{1}{n^{ \alpha }}\}_{n=1}^{\infty }\) with \(\frac{1}{2}<\alpha <1\), one has that \(\sum_{n=1}^{\infty }\lambda _{n}=\infty \) and \(\sum_{n=1}^{\infty } \lambda _{n}^{2}< \infty \). For any integer \(k\geq 1\), it is easy to verify that

Consequently, for all \(n\geq 1\), we have

It concludes from (37) and (41) that

which implies that Algorithm 5.1 has the \(O (\frac{1}{n^{1-\alpha }} )\) convergence rate. In fact, for any bounded subset \(D\subset C\), put \(\gamma =\sup \{ \Vert v \Vert \mid v\in D\}\), then from (36) and (42), we obtain

The conclusion can be similarly proved for \(\{\lambda _{n}\}_{n=1}^{\infty }=\{\frac{1}{n}\}_{n=1}^{\infty }\). □
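The rate in Theorem 5.2 is driven by how fast the partial sums of \(\lambda _{k}\) grow. As a small numerical illustration (the exact form of estimate (41) is not reproduced here; the bound checked below is the standard integral comparison, and the function names are illustrative), one can verify that with \(\lambda _{0}=\frac{1}{1-\alpha }\) and \(\lambda _{k}=k^{-\alpha }\) the partial sums dominate \(\frac{(n+1)^{1-\alpha }}{1-\alpha }\), so their reciprocal decays like \(O(1/n^{1-\alpha })\).

```python
def partial_sum(alpha, n):
    # lambda_0 = 1/(1 - alpha), lambda_k = k^(-alpha) for k >= 1, as in Theorem 5.2.
    lam0 = 1.0 / (1.0 - alpha)
    return lam0 + sum(k ** (-alpha) for k in range(1, n + 1))

def lower_bound(alpha, n):
    # Integral comparison: sum_{k=1}^{n} k^(-alpha) >= ((n+1)^(1-alpha) - 1)/(1 - alpha).
    return (n + 1) ** (1.0 - alpha) / (1.0 - alpha)

alpha = 0.75  # any exponent in (1/2, 1)
checks = [(n, partial_sum(alpha, n), lower_bound(alpha, n))
          for n in (1, 10, 100, 1000, 10000)]
for n, s, b in checks:
    print(n, s, b, 1.0 / s)
```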

6 Numerical experiments

In this section, in order to show the practicability and effectiveness of Algorithm 4.1 (ACPM), we present two examples in the setting of finite dimensional Hilbert spaces. The codes were written in Matlab 2009a and run on a personal computer.

In the following two examples, we denote by \(\{u_{n}\} _{n=0}^{\infty }\) and \(\{\xi _{n}\}_{n=0}^{\infty }\) the two sequences generated by Algorithm 4.1. Take L, η, α, μ, τ, and τ̃ as in Sect. 4 and \(\varepsilon _{n}:=(1- \tilde{\tau })^{n+1}\) (\(n\geq 0\)). Since we do not know the exact solution \(\xi ^{*}\) of \(\operatorname{IVI}(C,f)\), we use \(E_{n}=\frac{ \Vert \xi _{n+1}-\xi _{n} \Vert }{ \Vert \xi _{n} \Vert }\) to measure the error of the nth step iteration.

It is worth noting that for the following two examples, condition (6) is not satisfied, so the method proposed by Luo et al. [34] could not be used. However, Algorithm 4.1 can be implemented easily.

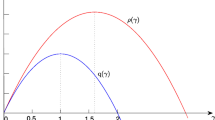

Example 6.1

Let \(f: \mathbb{R}^{1}\rightarrow \mathbb{R}^{1}\) be defined by

and let \(C=[1,10]\subset \mathbb{R}^{1}\). Obviously, f is 3-Lipschitz continuous and 1-strongly monotone. Hence, \(L=3\) and \(\eta =1\). Choose \(\alpha =\mu =\frac{1}{9}\), \(u_{0}=5\), \(\xi _{0}^{(0)}=100\).

The numerical results generated by implementing Algorithm 4.1 are provided in Fig. 1, from which we observe that

-

(a)

\(m_{n}\) is 1 when \(n<33\) and becomes 0 when \(n\geq 33\). Hence the calculation to find a suitable \(m_{n}\) is not needed when \(n\geq 33\). This is a feature of our algorithms, which is different from other line search techniques.

-

(b)

\(\xi _{n}\) decreases with n and equals 3.3541803 when \(n\geq 69\).

-

(c)

Except for the first few steps, the error \(E_{n}\) decreases linearly.

Example 6.2

Let \(f: \mathbb{R}^{2}\rightarrow \mathbb{R}^{2}\) be defined by

and let \(C=[1,10]\times [1,10]\subset \mathbb{R}^{2}\). It is easy to directly verify that f is \(3\sqrt{2}\)-Lipschitz continuous and 1-strongly monotone. So we have \(L=3\sqrt{2}\) and \(\eta =1\). Select \(\alpha =\mu =\frac{1}{18}\), \(u_{0}=(3,4)^{\top }\), and \(\xi _{0}^{(0)}=(20,20)^{ \top }\).

From Fig. 2, we observe that: (a) \(m_{n}\) is 1 when \(n<222\) and \(m_{n}\) becomes 0 when \(n\geq 222\); (b) the components of \(\xi _{n}\) do not decrease as in Example 6.1, and \(\xi _{n}\) equals \((0.07546639, 0.38683623)^{\top }\) when \(n\geq 290\); (c) except for the first few steps, the decrease of the error \(E_{n}\) is piecewise linear.

7 Concluding remarks

In this paper, we give an existence-uniqueness theorem for inverse variational inequalities whose conditions are weaker than those of Luo and Yang [34]. Based on this theorem, we introduce an alternating contraction projection method and its relaxed version and show their strong convergence. Convergence rates are presented for both methods. Compared with the alternating contraction projection method, the alternating contraction relaxation projection method requires stronger convergence conditions, but it is easy to implement when the projection operator \(P_{C}\) is difficult to calculate.

References

Akram, M., Chen, J.W., Dilshad, M.: Generalized Yosida approximation operator with an application to a system of Yosida inclusions. J. Nonlinear Funct. Anal. 2018, Article ID 17 (2018)

Baiocchi, C., Capelo, A.: Variational and Quasi Variational Inequalities. Wiley, New York (1984)

Bauschke, H.H., Borwein, J.M.: On projection algorithms for solving convex feasibility problems. SIAM Rev. 38, 367–426 (1996)

Cai, X.J., Gu, G.Y., He, B.S.: On the \(O({1}/{t})\) convergence rate of the projection and contraction methods for variational inequalities with Lipschitz continuous monotone operators. Comput. Optim. Appl. 57, 339–363 (2014)

Cegielski, A., Zalas, R.: Methods for variational inequality problem over the intersection of fixed point sets of quasi-nonexpansive operators. Numer. Funct. Anal. Optim. 34, 255–283 (2013)

Chen, J.W., Kobis, E., Kobis, M.A., Yao, J.C.: Optimality conditions for solutions of constrained inverse vector variational inequalities by means of nonlinear scalarization. J. Nonlinear Var. Anal. 1, 145–158 (2017)

Cottle, R.W., Giannessi, F., Lions, J.L.: Variational Inequalities and Complementarity Problems. Theory and Applications. Wiley, New York (1980)

Dong, Q.L., Cho, Y.J., Zhong, L.L., Rassias, Th.M.: Inertial projection and contraction algorithms for variational inequalities. J. Glob. Optim. 70, 687–704 (2018)

Dong, Q.L., Lu, Y.Y., Yang, J.: The extragradient algorithm with inertial effects for solving the variational inequality. Optimization 65, 2217–2226 (2016)

Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementarity Problems, Vol. I and II. Springer Series in Operations Research. Springer, New York (2003)

Fichera, G.: Sul problema elastostatico di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. Lincei, VIII. Ser., Rend., Cl. Sci. Fis. Mat. Nat. 34, 138–142 (1963)

Fichera, G.: Problemi elastostatici con vincoli unilaterali: il problema di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. Lincei, Mem., Cl. Sci. Fis. Mat. Nat., Sez. I, VIII. Ser. 7, 91–140 (1964)

Fukushima, M.: A relaxed projection method for variational inequalities. Math. Program. 35, 58–70 (1986)

Glowinski, R., Lions, J.L., Trémolières, R.: Numerical Analysis of Variational Inequalities. North-Holland, Amsterdam (1981)

Goebel, K., Reich, S.: Uniform Convexity, Hyperbolic Geometry and Nonexpansive Mappings. Dekker, New York (1984)

Han, Q.M., He, B.S.: A predict-correct method for a variant monotone variational inequality problem. Chin. Sci. Bull. 43, 1264–1267 (1998)

Harker, P.T., Pang, J.S.: Finite-dimensional variational inequality and nonlinear complementarity problems: a survey of theory, algorithms and applications. Math. Program. 48, 161–220 (1990)

He, B.S.: Inexact implicit methods for monotone general variational inequalities. Math. Program. 86, 199–217 (1999)

He, B.S.: A Goldstein’s type projection method for a class of variant variational inequalities. J. Comput. Math. 17, 425–434 (1999)

He, B.S., He, X.Z., Liu, H.X.: Solving a class of constrained ‘black-box’ inverse variational inequalities. Eur. J. Oper. Res. 204, 391–401 (2010)

He, B.S., He, X.Z., Liu, H.X.: Solving a class of constrained ‘black-box’ inverse variational inequalities. Eur. J. Oper. Res. 204(3), 391–401 (2010)

He, B.S., Liu, X.H.: Inverse variational inequalities in the economic field: applications and algorithms (2006). http://www.paper.edu.cn/releasepaper/content/200609-260 (in Chinese)

He, B.S., Liu, X.H., Li, M., He, X.Z.: PPA-based methods for monotone inverse variational inequalities (2006). http://www.paper.edu.cn/releasepaper/content/200606-219

He, S.N., Tian, H.L.: Selective projection methods for solving a class of variational inequalities. Numer. Algorithms. https://doi.org/10.1007/s11075-018-0499-x

He, S.N., Tian, H.L., Xu, H.K.: The selective projection method for convex feasibility and split feasibility problems. J. Nonlinear Convex Anal. 19, 1199–1215 (2018)

He, S.N., Xu, H.K.: Variational inequalities governed by boundedly Lipschitzian and strongly monotone operators. Fixed Point Theory 10, 245–258 (2009)

He, S.N., Yang, C.P.: Solving the variational inequality problem defined on intersection of finite level sets. Abstr. Appl. Anal. 2013, 942315 (2013)

He, X.Z., Liu, H.X.: Inverse variational inequalities with projection-based solution methods. Eur. J. Oper. Res. 208(1), 12–18 (2011)

Hu, R., Fang, Y.P.: Well-posedness of inverse variational inequalities. J. Convex Anal. 15(2), 427–437 (2008)

Hu, R., Fang, Y.P.: Levitin–Polyak well-posedness by perturbations of inverse variational inequalities. Optim. Lett. 7(2), 343–359 (2013)

Khan, A.A., Tammer, C., Zalinescu, C.: Regularization of quasi-variational inequalities. Optimization 64, 1703–1724 (2015)

Lions, J.L., Stampacchia, G.: Variational inequalities. Commun. Pure Appl. Math. 20, 493–512 (1967)

Liu, L.S.: Ishikawa and Mann iterative processes with errors for nonlinear strongly accretive mapping in Banach spaces. J. Math. Anal. Appl. 194, 114–125 (1995)

Luo, X.P., Yang, J.: Regularization and iterative methods for monotone inverse variational inequalities. Optim. Lett. 8, 1261–1272 (2014)

Pang, J.S., Yao, J.C.: On a generalization of a normal map and equation. SIAM J. Control Optim. 33, 168–184 (1995)

Qin, X., Yao, J.C.: Projection splitting algorithms for nonself operators. J. Nonlinear Convex Anal. 18, 925–935 (2017)

Scrimali, L.: An inverse variational inequality approach to the evolutionary spatial price equilibrium problem. Optim. Eng. 13(3), 375–387 (2012)

Stampacchia, G.: Formes bilinéaires coercitives sur les ensembles convexes. C. R. Acad. Sci. 258, 4413–4416 (1964)

Xu, H.K., Kim, T.H.: Convergence of hybrid steepest-descent methods for variational inequalities. J. Optim. Theory Appl. 119, 185–201 (2003)

Yamada, I.: The hybrid steepest-descent method for variational inequality problems over the intersection of the fixed point sets of nonexpansive mappings. In: Butnariu, D., Censor, Y., Reich, S. (eds.) Inherently Parallel Algorithms in Feasibility and Optimization and Their Applications, pp. 473–504. North-Holland, Amsterdam (2001)

Yang, H.M., Bell, G.H.: Traffic restraint, road pricing and network equilibrium. Transp. Res., Part B, Methodol. 31, 303–314 (1997)

Yao, Y.H., Chen, R.D., Xu, H.K.: Schemes for finding minimum-norm solutions of variational inequalities. Nonlinear Anal. 72, 3447–3456 (2010)

Yao, Y.H., Liou, Y.C., Kang, S.M.: Approach to common elements of variational inequality problems and fixed point problems via a relaxed extragradient method. Comput. Math. Appl. 59, 3472–3480 (2010)

Yao, Y.H., Liou, Y.C., Yao, J.C.: Iterative algorithms for the split variational inequality and fixed point problems under nonlinear transformations. J. Nonlinear Sci. Appl. 10, 843–854 (2017)

Yao, Y.H., Shahzad, N.: Strong convergence of a proximal point algorithm with general errors. Optim. Lett. 6, 621–628 (2012)

Zegeye, H., Shahzad, N., Yao, Y.H.: Minimum-norm solution of variational inequality and fixed point problem in Banach spaces. Optimization 64, 453–471 (2015)

Funding

This work was supported by the scientific research project of Tianjin Municipal Education Commission (No. 2018KJ253).

Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

He, S., Dong, QL. An existence-uniqueness theorem and alternating contraction projection methods for inverse variational inequalities. J Inequal Appl 2018, 351 (2018). https://doi.org/10.1186/s13660-018-1943-0
