Abstract

In this paper, we are concerned with the split equality problem (SEP) in Hilbert spaces. By converting it to a coupled fixed-point equation, we propose a new algorithm for solving the SEP. Whenever the convex sets involved are level sets of given convex functionals, we propose two new relaxed alternating algorithms for the SEP. The first relaxed algorithm is shown to be weakly convergent and the second strongly convergent. A new idea is introduced in order to prove strong convergence of the second relaxed algorithm, which gives an affirmative answer to Moudafi’s question. Finally, preliminary numerical results show the efficiency of the proposed algorithms.


1 Introduction

The split feasibility problem (SFP) was first introduced by Censor and Elfving [5]. It models various inverse problems arising in phase retrieval and medical image reconstruction [3]. More specifically, the SFP is to find a point \(x\in H_{1}\) such that

$$x\in C \quad\text{and}\quad Ax\in Q, \tag{1.1}$$

where C and Q are nonempty, closed and convex subsets of real Hilbert spaces \(H_{1}\) and \(H_{2}\), respectively, and \(A: H_{1}\rightarrow H_{2}\) is a bounded linear operator.

Various iterative methods have been constructed to solve the SFP (1.1); see [3–5, 16, 19, 20, 22–25, 28]. One of the best-known methods for solving the SFP is Byrne’s CQ algorithm [3, 4], which generates a sequence \(\{x_{n}\}\) by the recursive procedure

$$x_{n+1}=P_{C} \bigl(x_{n}-\gamma A^{*}(I-P_{Q})Ax_{n} \bigr), \tag{1.2}$$
where \(\gamma\in(0,\frac{2}{\|A\|^{2}})\), \(P_{C}\) and \(P_{Q}\) are the projections onto C and Q, respectively, I denotes the identity operator, and \(A^{*}\) denotes the adjoint of A. The SFP can also be solved by a different method [17, 27], namely

$$x_{n+1}=x_{n}-\gamma \bigl[(I-P_{C})x_{n}+A^{*}(I-P_{Q})Ax_{n} \bigr], \tag{1.3}$$
where γ is a properly chosen parameter. In Hilbert spaces, both (1.2) and (1.3) converge weakly to a solution of the SFP whenever such a solution exists.
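As a rough illustration of the CQ iteration (1.2), the following Python sketch runs it on a small finite-dimensional instance. The box C, the ball Q, the matrix `A`, the step \(\gamma=1/\|A\|^{2}\) and all function names are assumptions made for this example only; they were chosen because both projections then have closed forms.

```python
import numpy as np

def project_box(x, lo, hi):
    """Projection onto the box {x : lo <= x_i <= hi} (componentwise clipping)."""
    return np.clip(x, lo, hi)

def project_ball(y, center, radius):
    """Projection onto the closed Euclidean ball with the given center and radius."""
    d = y - center
    dist = np.linalg.norm(d)
    return y if dist <= radius else center + radius * d / dist

def cq_algorithm(A, proj_C, proj_Q, x0, n_iter=500):
    """CQ iteration x_{n+1} = P_C(x_n - gamma * A^T (I - P_Q) A x_n)."""
    gamma = 1.0 / np.linalg.norm(A, 2) ** 2   # lies in (0, 2 / ||A||^2)
    x = np.asarray(x0, dtype=float)
    for _ in range(n_iter):
        Ax = A @ x
        x = proj_C(x - gamma * A.T @ (Ax - proj_Q(Ax)))
    return x

# Toy instance (assumed data): C = [-1, 1]^2, Q = ball around (1, 1) of radius 0.5.
A = np.array([[2.0, 0.0], [0.0, 1.0]])
proj_C = lambda x: project_box(x, -1.0, 1.0)
proj_Q = lambda y: project_ball(y, np.array([1.0, 1.0]), 0.5)
x = cq_algorithm(A, proj_C, proj_Q, x0=[5.0, -3.0])
print(x, np.linalg.norm(A @ x - proj_Q(A @ x)))
```

The printed residual \(\|Ax-P_{Q}Ax\|\) measures how far \(Ax\) is from Q; it vanishes exactly when x solves this instance of the SFP.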

Recently, Moudafi [11] introduced the split equality problem (SEP):

$$\text{find } x\in C,\ y\in Q \text{ such that } Ax=By, \tag{1.4}$$

where \(H_{1}\), \(H_{2}\), \(H_{3}\) are real Hilbert spaces, \(C\subseteq H_{1}\), \(Q\subseteq H_{2}\) are two nonempty, closed and convex subsets, and \(A: H_{1}\rightarrow H_{3}\), \(B: H_{2}\rightarrow H_{3}\) are two bounded linear operators. It is clear that the SEP is more general than the SFP. As a matter of fact, if \(B=I\) and \(H_{3}=H_{2}\), then the SEP (1.4) reduces to the SFP (1.1). Algorithms for solving the SEP have received great attention; see, for instance, [6, 7, 10–12, 14, 18]. Among these works, Moudafi [11] introduced the alternating CQ-algorithm (ACQA), namely

$$\begin{cases} x_{n+1}=P_{C} (x_{n}-\gamma_{n}A^{*}(Ax_{n}-By_{n}) ), \\ y_{n+1}=P_{Q} (y_{n}+\gamma_{n}B^{*}(Ax_{n+1}-By_{n}) ). \end{cases} \tag{1.5}$$

It is shown in [11] that the sequence \(\{(x_{n}, y_{n})\}\) produced by the ACQA converges weakly to a solution of (1.4) provided that the solution set \(S=\{(x, y)\in C \times Q \mid Ax=By\}\) is nonempty and \(\{\gamma_{n}\}\) is a positive nondecreasing sequence such that \(\gamma_{n}\in (\epsilon, \min(\frac{1}{\|A\|^{2}}, \frac{1}{\|B\|^{2}})-\epsilon )\) for a small enough \(\epsilon>0\).
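The alternating structure of the ACQA can be sketched in a few lines of Python. We assume here the usual form of the iteration, in which the x-update uses \(y_{n}\) and the y-update uses the freshly computed \(x_{n+1}\), together with a constant step \(\gamma_{n}\equiv\gamma\). The matrices, the two boxes and the starting point are illustrative assumptions, not data from the paper.

```python
import numpy as np

def acqa(A, B, proj_C, proj_Q, x0, y0, n_iter=2000):
    """Alternating CQ iteration with a constant step (assumed form of the ACQA)."""
    gamma = 0.9 * min(1.0 / np.linalg.norm(A, 2) ** 2,
                      1.0 / np.linalg.norm(B, 2) ** 2)
    x = np.asarray(x0, dtype=float)
    y = np.asarray(y0, dtype=float)
    for _ in range(n_iter):
        x = proj_C(x - gamma * A.T @ (A @ x - B @ y))   # uses y_n
        y = proj_Q(y + gamma * B.T @ (A @ x - B @ y))   # uses x_{n+1}
    return x, y

# Toy instance (assumed data): two boxes, whose projections are simple clipping.
A = np.array([[1.0, 0.0], [0.0, 2.0]])
B = np.eye(2)
proj_C = lambda x: np.clip(x, 0.0, 1.0)   # C = [0, 1]^2
proj_Q = lambda y: np.clip(y, 0.5, 3.0)   # Q = [0.5, 3]^2
x, y = acqa(A, B, proj_C, proj_Q, x0=[3.0, -2.0], y0=[-1.0, 4.0])
residual = np.linalg.norm(A @ x - B @ y)
print(x, y, residual)
```

Because each update ends with a projection, the iterates stay in C and Q throughout, and only the residual \(\|Ax_{n}-By_{n}\|\) has to be driven to zero.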

However, the ACQA may be hard to implement whenever \(P_{C}\) or \(P_{Q}\) fails to have a closed-form expression. A typical example of such a situation is when C and Q are level sets of convex functions. Indeed, Moudafi [10] considered the case when C and Q are level sets:

$$C=\bigl\{ x\in H_{1}\mid c(x)\leq0\bigr\} \tag{1.6}$$

and

$$Q=\bigl\{ y\in H_{2}\mid q(y)\leq0\bigr\} , \tag{1.7}$$

where \(c:H_{1}\rightarrow\mathbb{R}\) and \(q:H_{2}\rightarrow \mathbb{R}\) are two convex and subdifferentiable functions on \(H_{1}\) and \(H_{2}\), respectively. Here the subdifferential operators ∂c and ∂q of c and q are assumed to be bounded, i.e., bounded on bounded sets. In this case, it is known that the associated projections are very hard to calculate. To overcome this difficulty, Moudafi [10] presented the relaxed alternating CQ-algorithm (RACQA):

$$\begin{cases} x_{n+1}=P_{C_{n}} (x_{n}-\gamma A^{*}(Ax_{n}-By_{n}) ), \\ y_{n+1}=P_{Q_{n}} (y_{n}+\gamma B^{*}(Ax_{n+1}-By_{n}) ), \end{cases} \tag{1.8}$$

where \(\gamma\in (0, \min(\frac{1}{\|A\|^{2}}, \frac{1}{\|B\|^{2}}) )\), and \(\{C_{n}\}\) and \(\{Q_{n}\}\) are two sequences of closed convex sets defined by

$$C_{n}=\bigl\{ x\in H_{1}\mid c(x_{n})+\langle\xi_{n}, x-x_{n}\rangle\leq0\bigr\} ,\quad \xi_{n}\in\partial c(x_{n}), \tag{1.9}$$

and

$$Q_{n}=\bigl\{ y\in H_{2}\mid q(y_{n})+\langle\eta_{n}, y-y_{n}\rangle\leq0\bigr\} ,\quad \eta_{n}\in\partial q(y_{n}). \tag{1.10}$$

Since \(C_{n}\) and \(Q_{n}\) are half-spaces, the associated projections have closed-form expressions, which makes the RACQA easy to implement. Under suitable conditions, Moudafi [10] proved that the sequence \(\{(x_{n}, y_{n})\}\) generated by the RACQA converges weakly to a solution of (1.4). He also raised the following open question in [10].

Question 1.1

Is there any strong convergence theorem of an alternating algorithm for the SEP (1.4) in real Hilbert spaces?

Motivated by the works mentioned above, we continue to study the SEP, but treat it in a different way. Indeed, we will prove that the SEP amounts to solving the coupled fixed point equation

$$\begin{cases} x=x-\tau [(x-P_{C}x)+A^{*}(Ax-By) ], \\ y=y-\tau [(y-P_{Q}y)-B^{*}(Ax-By) ], \end{cases} \tag{1.11}$$

where τ is a positive real number. This equation enables us to propose a new algorithm for solving the SEP. We also consider the case when the convex sets involved are level sets of given convex functionals. Inspired by (1.11) and the relaxed projection algorithm, we propose two new relaxed alternating algorithms for the SEP governed by level sets, the second of which provides an affirmative answer to Moudafi’s question. Finally, we give numerical results for the split equality problem to demonstrate the feasibility and efficiency of the proposed algorithms.

2 Preliminaries

Throughout this paper, we always assume that H is a real Hilbert space with the inner product \(\langle\cdot, \cdot\rangle\) and norm \(\|\cdot\|\). We denote by I the identity operator on H, and by \(\operatorname{Fix}(T)\) the set of the fixed points of an operator T. The notation → stands for strong convergence and ⇀ stands for weak convergence.

Definition 2.1

Let \(T:H\rightarrow H\) be an operator. Then T is

(1) nonexpansive if

$$\|Tx-Ty\| \leq\|x-y\|,\quad \forall x, y\in H; $$

(2) firmly nonexpansive if

$$\Vert Tx-Ty \Vert ^{2}\leq \Vert x-y \Vert ^{2}- \bigl\Vert (I-T)x-(I-T)y \bigr\Vert ^{2},\quad \forall x,y\in H. $$

Let C be a nonempty, closed and convex subset of H. For any \(x\in H\), the projection of x onto C is defined as

$$P_{C}x=\arg\min_{y\in C}\|x-y\|. $$

The projection \(P_{C}\) has the following well-known properties.

Lemma 2.2

For all \(x,y\in H\),

(1) \(\langle x-P_{C} x, z-P_{C} x\rangle\leq0\), \(\forall z\in C\);

(2) \(P_{C}\) is nonexpansive;

(3) \(P_{C}\) is firmly nonexpansive;

(4) \(\langle P_{C}x-P_{C}y, x-y\rangle\geq\|P_{C}x-P_{C}y\|^{2}\).
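Properties (3) and (4) are easy to check numerically for a concrete set. The sketch below does so for the closed unit ball in \(\mathbb{R}^{3}\); the choice of set, the random sampling and the variable names are assumptions made purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def project_unit_ball(x):
    """Projection onto the closed unit ball {x : ||x|| <= 1}."""
    n = np.linalg.norm(x)
    return x if n <= 1.0 else x / n

# Largest observed value of (left side - right side); it should never be positive.
worst = -np.inf
for _ in range(1000):
    x, y = rng.normal(size=3) * 3, rng.normal(size=3) * 3
    px, py = project_unit_ball(x), project_unit_ball(y)
    # (3): ||Px - Py||^2 <= ||x - y||^2 - ||(x - Px) - (y - Py)||^2
    gap3 = (np.sum((px - py) ** 2) - np.sum((x - y) ** 2)
            + np.sum(((x - px) - (y - py)) ** 2))
    # (4): <Px - Py, x - y> >= ||Px - Py||^2
    gap4 = np.sum((px - py) ** 2) - np.dot(px - py, x - y)
    worst = max(worst, gap3, gap4)
print(worst)
```

Expanding the squares shows that the first gap is exactly twice the second, which is one way to see that (3) and (4) are equivalent statements.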

Definition 2.3

Let \(T: H\rightarrow H\) be an operator with \(\operatorname{Fix}(T)\neq \emptyset\). Then \(I-T\) is said to be demiclosed at zero if, for any sequence \(\{x_{n}\}\) in H, the following implication holds:

$$x_{n}\rightharpoonup x \quad\text{and}\quad (I-T)x_{n}\rightarrow0 \quad\Longrightarrow\quad x\in\operatorname{Fix}(T). $$

It is well known that if T is a nonexpansive operator, then \(I-T\) is demiclosed at zero. Since the projection \(P_{C}\) is nonexpansive, \(I-P_{C}\) is demiclosed at zero.

Definition 2.4

Let \(f: H\rightarrow(-\infty, +\infty]\) be a proper function.

(1) f is convex if, for all \(\lambda\in(0,1)\),

$$f\bigl(\lambda x+(1-\lambda)y\bigr)\leq\lambda f(x)+(1-\lambda)f(y),\quad \forall x,y\in H. $$

(2) A vector \(u\in H\) is a subgradient of f at a point x if

$$f(y)\geq f(x)+\langle u, y-x\rangle,\quad \forall y\in H. $$

(3) The set of all subgradients of f at x, denoted by \(\partial f(x)\), is called the subdifferential of f at x.

To prove our main results, we need the following lemmas.

Lemma 2.5

For all \(x, y\in H\), we have

Lemma 2.6 ([21])

Let \(\{a_{n}\}\) be a sequence of nonnegative real numbers such that

$$a_{n+1}\leq(1-\gamma_{n})a_{n}+\gamma_{n}\delta_{n},\quad n\geq0, $$

where \(\{\gamma_{n}\}\) is a sequence in \((0, 1)\) and \(\{\delta_{n}\}\) is a sequence in \(\mathbb{R}\) such that

(1) \(\sum_{n=0}^{\infty}\gamma_{n}=\infty\);

(2) \(\limsup_{n\rightarrow\infty}\delta_{n}\leq0\) or \(\sum_{n=0}^{\infty}|\delta_{n}\gamma_{n}|<\infty\).

Then, \(\lim_{n\rightarrow\infty}a_{n}=0\).
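A quick numerical experiment illustrates the conclusion of Lemma 2.6. We assume a recursion of the form \(a_{n+1}=(1-\gamma_{n})a_{n}+\gamma_{n}\delta_{n}\) (the inequality taken with equality) and the particular choices \(\gamma_{n}=1/(n+2)\), \(\delta_{n}=1/(n+1)\); these sequences are our own example and satisfy conditions (1) and (2).

```python
def run_recursion(n_steps, a0=1.0):
    """Iterate a_{n+1} = (1 - gamma_n) a_n + gamma_n delta_n for n_steps steps."""
    a = a0
    for n in range(n_steps):
        gamma = 1.0 / (n + 2)   # in (0, 1), and the series of gamma_n diverges
        delta = 1.0 / (n + 1)   # delta_n -> 0, so limsup delta_n <= 0
        a = (1.0 - gamma) * a + gamma * delta
    return a

for n_steps in (10, 1000, 100000):
    print(n_steps, run_recursion(n_steps))
```

For this choice one can check by induction that \(a_{n}=(a_{0}+\sum_{k=1}^{n}1/k)/(n+1)\), so the decay is slow, of order \(\log n/n\), but \(a_{n}\) does tend to zero.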

3 A new alternating CQ-algorithm

In what follows, we always assume that the solution set of the SEP is nonempty, i.e., \(S=\{(x, y)\in C \times Q \mid Ax=By\}\neq\emptyset\). In order to solve problem (1.4), we need the following lemma, which plays a key role in what follows.

Lemma 3.1

An element \((x, y)\in H_{1}\times H_{2}\) solves (1.4) if and only if it solves the fixed point equation (1.11).

Proof

If \((x, y)\) solves (1.4), then \(x=P_{C}x\), \(y=P_{Q}y\) and \(Ax=By\). It is obvious that the fixed point equation (1.11) holds.

To see the converse, let \((x, y)\) be a solution of equation (1.11). Then,

Choosing any \((\tilde{x}, \tilde{y})\in S\), we get

and

Adding the above two equalities, we have

Thus, \(x=P_{C}x\), \(y=P_{Q}y\) and \(Ax=By\). That is, \((x, y)\) solves (1.4), and the proof is complete. □

Applying Lemma 3.1, we introduce a new alternating CQ-algorithm for the SEP (1.4).

Algorithm 3.2

Let \((x_{0}, y_{0})\in H_{1}\times H_{2}\) be arbitrary. Given \((x_{n}, y_{n})\), construct \((x_{n+1}, y_{n+1})\) via the formula

where \(0<\tau<(1+c)^{-1}\) with \(c=\max(\|A\|^{2}, \|B\|^{2})\).
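The displayed update of Algorithm 3.2 is not reproduced in this version of the text, so the following Python sketch rests on an assumption: we take the iteration to be the alternating scheme suggested by the fixed point equation of Lemma 3.1, namely \(x_{n+1}=x_{n}-\tau[(x_{n}-P_{C}x_{n})+A^{*}(Ax_{n}-By_{n})]\) and \(y_{n+1}=y_{n}-\tau[(y_{n}-P_{Q}y_{n})-B^{*}(Ax_{n+1}-By_{n})]\). The function name `new_acqa`, the matrices and the two boxes are likewise hypothetical.

```python
import numpy as np

def new_acqa(A, B, proj_C, proj_Q, x0, y0, n_iter=3000):
    """Assumed form of Algorithm 3.2: a relaxation step instead of an outer projection."""
    c = max(np.linalg.norm(A, 2) ** 2, np.linalg.norm(B, 2) ** 2)
    tau = 0.9 / (1.0 + c)                     # satisfies 0 < tau < 1 / (1 + c)
    x = np.asarray(x0, dtype=float)
    y = np.asarray(y0, dtype=float)
    for _ in range(n_iter):
        x = x - tau * ((x - proj_C(x)) + A.T @ (A @ x - B @ y))
        y = y - tau * ((y - proj_Q(y)) - B.T @ (A @ x - B @ y))  # uses updated x
    return x, y

# Toy instance (assumed data): C = [0, 1]^2 and Q = [0.5, 3]^2.
A = np.array([[1.0, 0.0], [0.0, 2.0]])
B = np.eye(2)
proj_C = lambda x: np.clip(x, 0.0, 1.0)
proj_Q = lambda y: np.clip(y, 0.5, 3.0)
x, y = new_acqa(A, B, proj_C, proj_Q, x0=[3.0, -2.0], y0=[-1.0, 4.0])
print(np.linalg.norm(A @ x - B @ y),
      np.linalg.norm(x - proj_C(x)), np.linalg.norm(y - proj_Q(y)))
```

Unlike in the ACQA, the iterates of this scheme need not lie in C and Q, so the sketch reports the three residuals \(\|Ax-By\|\), \(\|x-P_{C}x\|\) and \(\|y-P_{Q}y\|\) separately.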

Theorem 3.3

Let \(\{(x_{n}, y_{n})\}\) be the sequence generated by Algorithm 3.2. Then \(\{(x_{n}, y_{n})\}\) converges weakly to a solution of the SEP (1.4).

Proof

Let \((x^{*}, y^{*})\in S\). Then \(x^{*}\in C\), \(y^{*}\in Q\) and \(Ax^{*}=By^{*}\). In view of (3.2), Lemma 2.2 and Young’s inequality, we conclude that

Similarly, we obtain

On the other hand, we have

and

Altogether, we have

and

Adding the two last inequalities, we obtain

Let \(\varGamma_{n}(x^{*},y^{*})=\|x_{n}-x^{*}\|^{2}+\|y_{n}-y^{*}\|^{2}-\tau\|Ax_{n}-Ax^{*}\|^{2}\). Then \(\tau\|Ax_{n}-Ax^{*}\|^{2}\leq\tau\|A\|^{2}\|x_{n}-x^{*}\|^{2}\), which implies

In view of (3.3), we obtain the following inequality:

This, together with (3.4), implies that the sequence \(\{\varGamma_{n}(x^{*}, y^{*})\}\) is bounded and converges to some finite limit \(\gamma(x^{*}, y^{*})\). By passing to the limit in (3.5) and by taking into account the assumption on τ, we finally obtain

and

We next prove that any weak cluster point of the sequence \(\{(x_{n}, y_{n})\}\) is a solution of the SEP (1.4). Since \(\{\varGamma_{n}(x^{*}, y^{*})\}\) is bounded, in view of (3.4), the sequences \(\{x_{n}\}\) and \(\{y_{n}\}\) are also bounded. Let x̄ and ȳ be weak cluster points of the sequences \(\{x_{n}\}\) and \(\{y_{n}\}\), respectively. Without loss of generality, we assume that \(x_{n}\rightharpoonup\bar{x}\) and \(y_{n}\rightharpoonup\bar{y}\). Since \(I-P_{C}\) and \(I-P_{Q}\) are demiclosed at zero, from (3.6), we obtain \(\bar{x}=P_{C}\bar{x}\) and \(\bar{y}=P_{Q}\bar{y}\), i.e., \(\bar{x}\in C\) and \(\bar{y}\in Q\). On the other hand, since \(x_{n}\rightharpoonup\bar{x}\) and \(y_{n}\rightharpoonup\bar{y}\), we deduce that \(Ax_{n}-By_{n}\rightharpoonup A\bar{x}-B\bar{y}\). The weak lower semicontinuity of the squared norm implies

hence \((\bar{x}, \bar{y})\in S\).

We finally show the weak convergence of the sequence \(\{(x_{n}, y_{n})\}\). Assume on the contrary that \((\hat{x},\hat{y})\) is another weak cluster point of \(\{(x_{n},y_{n})\}\). By the definition of \(\varGamma_{n}\), we have

By passing to the limit in the above, we obtain

By adding the last two equalities, we obtain

which clearly yields \(\bar{x}=\hat{x}\) and \(\bar{y}=\hat{y}\). This in particular implies that the weak cluster point of the sequence \(\{(x_{n}, y_{n})\}\) is unique. Consequently, the whole sequence \(\{(x_{n}, y_{n})\}\) converges weakly to a solution of problem (1.4). □

4 A relaxed alternating CQ-algorithm

When C and Q are level sets, the projections in Algorithm 3.2 may be hard to implement (see [1, 8, 9, 13, 25, 26]). To overcome this difficulty, we propose a relaxed alternating CQ-algorithm, which is inspired by methods (1.8) and (3.2). In what follows, we will treat the SEP (1.4) under the following assumptions:

(A1) The sets C and Q are given by (1.6) and (1.7), respectively.

(A2) For any \(x\in H_{1}\) and \(y\in H_{2}\), at least one subgradient \(\xi\in \partial c(x)\) and \(\eta\in\partial q(y)\) can be calculated.

We now present a new relaxed alternating CQ-algorithm for solving the SEP (1.4).

Algorithm 4.1

Let \((x_{0}, y_{0})\) be arbitrary. Given \((x_{n}, y_{n})\), construct \((x_{n+1}, y_{n+1})\) via the formula

where \(0<\tau<(1+c)^{-1}\) with \(c=\max(\|A\|^{2}, \|B\|^{2})\), and \(C_{n}\) and \(Q_{n}\) are given by (1.9) and (1.10), respectively.

Remark 4.2

By the definition of the subgradient, it is clear that \(C\subseteq C_{n}\) and \(Q\subseteq Q_{n}\) for all \(n\geq0\). Since \(C_{n}\) and \(Q_{n}\) are both half-spaces, the projections onto \(C_{n}\) and \(Q_{n}\) can be easily calculated. Thus Algorithm 4.1 is easily implementable.
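To make the remark concrete: for a half-space \(\{z\mid a+\langle g, z-p\rangle\leq0\}\) with \(g\neq0\), the projection of z is \(z-\max\{0, a+\langle g, z-p\rangle\}\,g/\|g\|^{2}\). The Python sketch below uses this formula inside an assumed form of Algorithm 4.1, namely the relaxation scheme \(x_{n+1}=x_{n}-\tau[(x_{n}-P_{C_{n}}x_{n})+A^{*}(Ax_{n}-By_{n})]\), \(y_{n+1}=y_{n}-\tau[(y_{n}-P_{Q_{n}}y_{n})-B^{*}(Ax_{n+1}-By_{n})]\); the displayed update is not reproduced in this version of the text. The two discs and all names are hypothetical test data.

```python
import numpy as np

def project_halfspace(z, anchor, f_val, g):
    """Project z onto {w : f_val + <g, w - anchor> <= 0} using the closed form."""
    violation = f_val + g @ (z - anchor)
    g_sq = g @ g
    if violation <= 0.0 or g_sq == 0.0:
        return z                      # z already lies in the half-space
    return z - (violation / g_sq) * g

def relaxed_acqa(A, B, c, grad_c, q, grad_q, x0, y0, n_iter=5000):
    """Assumed form of Algorithm 4.1 with half-spaces C_n, Q_n built at x_n, y_n."""
    tau = 0.9 / (1.0 + max(np.linalg.norm(A, 2) ** 2, np.linalg.norm(B, 2) ** 2))
    x = np.asarray(x0, dtype=float)
    y = np.asarray(y0, dtype=float)
    for _ in range(n_iter):
        px = project_halfspace(x, x, c(x), grad_c(x))      # P_{C_n} x_n
        x_new = x - tau * ((x - px) + A.T @ (A @ x - B @ y))
        py = project_halfspace(y, y, q(y), grad_q(y))      # P_{Q_n} y_n
        y = y - tau * ((y - py) - B.T @ (A @ x_new - B @ y))
        x = x_new
    return x, y

# Toy instance (assumed data): C is the unit disc, Q the unit disc centred at (1, 0).
center = np.array([1.0, 0.0])
c = lambda x: x @ x - 1.0
grad_c = lambda x: 2.0 * x
q = lambda y: (y - center) @ (y - center) - 1.0
grad_q = lambda y: 2.0 * (y - center)
A = B = np.eye(2)
x, y = relaxed_acqa(A, B, c, grad_c, q, grad_q, x0=[3.0, 3.0], y0=[-2.0, 1.0])
print(x, y, c(x), q(y), np.linalg.norm(A @ x - B @ y))
```

Only function values and one (sub)gradient of c and q are evaluated per step, which is exactly what assumption (A2) provides; no projection onto C or Q itself is ever computed.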

Theorem 4.3

Let \(\{(x_{n}, y_{n})\}\) be the sequence generated by Algorithm 4.1. Then \(\{(x_{n}, y_{n})\}\) converges weakly to a solution of the SEP (1.4).

Proof

Take \((x^{*}, y^{*})\in S\), i.e., \(x^{*}\in C\) (and thus \(x^{*}\in C_{n}\)), \(y^{*}\in Q\) (and thus \(y^{*}\in Q_{n}\)) and \(Ax^{*}=By^{*}\). Let \(\varGamma_{n} (x^{*},y^{*})=\|x_{n}-x^{*}\|^{2}+\|y_{n}-y^{*}\|^{2}-\tau\|Ax_{n}-Ax^{*}\|^{2}\). As in the proof of Theorem 3.3, we obtain the following inequality:

In addition, we have

It follows that the sequence \(\{\varGamma_{n}(x^{*}, y^{*})\}\) is bounded and converges to some finite limit \(\gamma(x^{*}, y^{*})\), which yields

and

From (4.1), we obtain

and

We next prove that any weak cluster point of the sequence \(\{(x_{n}, y_{n})\}\) is a solution of the SEP (1.4). Since \(\{\varGamma_{n}(x^{*}, y^{*})\}\) is bounded, in view of (4.3), the sequences \(\{x_{n}\}\) and \(\{y_{n}\}\) are also bounded. Let x̄ and ȳ be weak cluster points of the sequences \(\{x_{n}\}\) and \(\{y_{n}\}\), respectively. Without loss of generality, we assume that \(x_{n}\rightharpoonup\bar{x}\) and \(y_{n}\rightharpoonup\bar{y}\). Since ∂c is bounded on bounded sets, there is a constant \(\delta_{1} >0\) such that \(\|\xi_{n}\|\leq\delta_{1}\) for all \(n\geq0\). From (4.1), we have

This implies that

Thus

The weak lower semicontinuity of c leads to

and therefore \(\bar{x}\in C\). Likewise, since ∂q is bounded on bounded sets, there is a constant \(\delta_{2} >0\) such that \(\|\eta_{n}\|\leq\delta_{2}\) for all \(n\geq0\). From (4.1), we have

This implies that

Hence

Again, the weak lower semicontinuity of q leads to

and therefore \(\bar{y}\in Q\). Furthermore, the weak convergence of \(\{Ax_{n}-By_{n}\}\) to \(A\bar{x}-B\bar{y}\) and the weak lower semicontinuity of the squared norm imply

Hence \((\bar{x}, \bar{y})\in S\).

The proof of the uniqueness of the weak cluster point is analogous to that of Theorem 3.3. Therefore, the whole sequence \(\{(x_{n}, y_{n})\}\) converges weakly to a solution of problem (1.4). This completes the proof. □

5 A strongly convergent algorithm

As we saw in the previous section, the sequence generated by Algorithm 4.1 is only known to converge weakly. The aim of this section is to modify Algorithm 4.1 so that it generates a strongly convergent sequence. This provides an affirmative answer to the open question raised by Moudafi [10].

Algorithm 5.1

Let \((u, v)\in H_{1}\times H_{2}\) be fixed and start with an initial guess \((x_{0}, y_{0})\in H_{1}\times H_{2}\). Given \((x_{n}, y_{n})\), construct \((x_{n+1}, y_{n+1})\) via the formula

where \(\{\alpha_{n}\}\) is a sequence in \([0,1]\), \(0<\tau<(1+c)^{-1}\) with \(c=\max(\|A\|^{2}, \|B\|^{2})\), and \(C_{n}\) and \(Q_{n}\) are given by (1.9) and (1.10), respectively.
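The following Python sketch shows one plausible reading of Algorithm 5.1. Since the displayed update is not reproduced in this version of the text, we assume a Halpern-type scheme in which each relaxed step is averaged with the fixed anchor, \(x_{n+1}=\alpha_{n}u+(1-\alpha_{n})[x_{n}-\tau((x_{n}-P_{C_{n}}x_{n})+A^{*}(Ax_{n}-By_{n}))]\), and similarly for y with v and \(x_{n+1}\). The choice \(\alpha_{n}=1/(n+2)\), the two discs and all names are assumptions for this example.

```python
import numpy as np

def project_halfspace(z, anchor, f_val, g):
    """Project z onto {w : f_val + <g, w - anchor> <= 0} using the closed form."""
    violation = f_val + g @ (z - anchor)
    g_sq = g @ g
    if violation <= 0.0 or g_sq == 0.0:
        return z
    return z - (violation / g_sq) * g

def halpern_relaxed_acqa(A, B, c, grad_c, q, grad_q, u, v, x0, y0, n_iter=5000):
    """Assumed Halpern-type variant: average each relaxed step with the anchor (u, v)."""
    tau = 0.9 / (1.0 + max(np.linalg.norm(A, 2) ** 2, np.linalg.norm(B, 2) ** 2))
    x = np.asarray(x0, dtype=float)
    y = np.asarray(y0, dtype=float)
    for n in range(n_iter):
        alpha = 1.0 / (n + 2)          # alpha_n -> 0 and sum of alpha_n diverges
        px = project_halfspace(x, x, c(x), grad_c(x))
        x_new = alpha * u + (1 - alpha) * (
            x - tau * ((x - px) + A.T @ (A @ x - B @ y)))
        py = project_halfspace(y, y, q(y), grad_q(y))
        y = alpha * v + (1 - alpha) * (
            y - tau * ((y - py) - B.T @ (A @ x_new - B @ y)))
        x = x_new
    return x, y

# Toy instance (assumed data): unit disc C, unit disc Q centred at (1, 0), A = B = I.
center = np.array([1.0, 0.0])
c = lambda x: x @ x - 1.0
grad_c = lambda x: 2.0 * x
q = lambda y: (y - center) @ (y - center) - 1.0
grad_q = lambda y: 2.0 * (y - center)
A = B = np.eye(2)
u = v = np.array([0.5, 0.0])           # anchor chosen inside S, so P_S(u, v) = (u, v)
x, y = halpern_relaxed_acqa(A, B, c, grad_c, q, grad_q, u, v,
                            x0=[2.0, 0.0], y0=[0.0, 1.0])
print(x, y)
```

The anchor is deliberately placed in the solution set so that the expected limit \(P_{S}(u,v)=(u,v)\) is known in closed form; with \(\alpha_{n}=1/(n+2)\) the approach to it is slow, roughly of order \(1/n\) in this example.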

Theorem 5.2

Let \(\{(x_{n}, y_{n})\}\) be the sequence generated by Algorithm 5.1. If \(\{\alpha_{n}\}\) satisfies the following conditions:

$$\lim_{n\rightarrow\infty}\alpha_{n}=0 \quad\text{and}\quad \sum_{n=0}^{\infty}\alpha_{n}=\infty, $$

then \(\{(x_{n}, y_{n})\}\) converges strongly to a solution \((x^{*}, y^{*})\) of the SEP (1.4), where \((x^{*}, y^{*})=P_{S}(u,v)\).

Proof

Since \((x^{*}, y^{*})=P_{S}(u,v)\in S\), we have \(x^{*}\in C\) (and thus \(x^{*}\in C_{n}\)), \(y^{*}\in Q\) (and thus \(y^{*}\in Q_{n}\)), \(Ax^{*}=By^{*}\). In what follows, we divide the proof into four steps.

Step 1. We prove that the sequences \(\{x_{n}\}\) and \(\{y_{n}\}\) are bounded. By the same argument as in the proof of Theorem 3.3, we arrive at

In view of (5.1) and the convexity of the squared norm, we obtain

This, along with (5.2), implies that

Now, by setting

we have

In view of (5.3), we conclude that

This implies

By induction, we obtain

for all \(n\geq0\). This implies that the sequence \(\{\varGamma_{n}(x^{*}, y^{*})\}\) is bounded. Hence, in view of (5.4), the sequences \(\{x_{n}\}\) and \(\{y_{n}\}\) are bounded, too.

Step 2. We show that the following inequality holds:

where

Indeed, by Lemma 2.5, we have

Again from (5.2), we obtain

This implies

Hence, the desired inequality follows at once.

Step 3. We show that \(\limsup_{n\rightarrow \infty}\delta_{n}\) is finite. Since \(\{x_{n}\}\) and \(\{y_{n}\}\) are bounded, we have

This implies that \(\limsup_{n\rightarrow \infty}\delta_{n}<\infty\). We now show \(\limsup_{n\rightarrow \infty}\delta_{n}\geq-1\) by contradiction. If we assume on the contrary that \(\limsup_{n\rightarrow\infty}\delta_{n}<-1\), then there exists \(n_{0}\) such that \(\delta_{n}\leq-1\) for all \(n\geq n_{0}\). It then follows from (5.5) that

for all \(n\geq n_{0}\). By induction, we have

Since \(\sum_{i=n_{0}}^{\infty}\alpha_{i}=\infty\), there exists \(N>n_{0}\) such that \(\sum_{i=n_{0}}^{N}\alpha_{i}>\varGamma_{n_{0}}(x^{*}, y^{*})\). Therefore, we have

which clearly contradicts the fact that \(\varGamma_{n}(x^{*}, y^{*})\) is a nonnegative real sequence. Thus, \(\limsup_{n\rightarrow\infty}\delta_{n}\geq-1\) and it is finite.

Step 4. We show that \(\limsup_{n\rightarrow \infty}\delta_{n}\leq0\) and \(\{(x_{n}, y_{n})\}\) converges strongly to \((x^{*}, y^{*})\). Since \(\limsup_{n\rightarrow\infty}\delta_{n}\) is finite, we can take a subsequence \(\{n_{k}\}\) such that

Since \(\langle u-x^{*}, x_{n+1}-x^{*}\rangle\) and \(\langle v-y^{*}, y_{n+1}-y^{*}\rangle\) are bounded, without loss of generality, we may assume the existence of the limits

Hence, from (5.6), the following limit also exists:

Since \(\lim_{k\rightarrow\infty}\alpha_{n_{k}}=0\), we get \(\lim_{k\rightarrow \infty}\frac{1-\alpha_{n_{k}}}{\alpha_{n_{k}}}=\infty\). This implies that

So, by taking into account the assumption on τ, we have

and

From (5.1), we deduce that

and

As in the proof of Theorem 4.3, we conclude that any weak cluster point of \(\{(x_{n_{k}}, y_{n_{k}})\}\) belongs to S.

Since the sequences \(\{x_{n}\}\) and \(\{y_{n}\}\) are bounded, one gets

and

This implies that any weak cluster point of \(\{(x_{n_{k}+1}, y_{n_{k}+1})\}\) also belongs to S. Without loss of generality, we assume that \(\{(x_{n_{k}+1}, y_{n_{k}+1})\}\) converges weakly to \((\hat{x}, \hat{y})\in S\). Now by (5.6), Lemma 2.2 and the fact that \((x^{*}, y^{*})=P_{S}(u,v)\), we obtain

Applying Lemma 2.6 to (5.5), we have \(\lim_{n\rightarrow\infty}\varGamma_{n}(x^{*}, y^{*})=0\). Finally, by (5.4), we infer that

which ends the proof. □

6 Numerical results

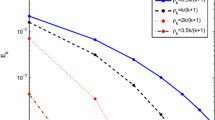

In this section, we verify the feasibility and efficiency of our algorithms through an example. All codes were written in MATLAB R2012b and run on a personal computer with an Intel(R) Core(TM) i5-4590 CPU at 3.30 GHz and 4 GB of RAM.

Example 6.1

Let \(H_{1}=H_{2}=H_{3}=\mathbb{R}^{3}\),

\(C=\{x\in\mathbb{R}^{3}\mid x=(u, v, w)^{T}, v^{2}+w^{2}-1\leq0\}\), and \(Q=\{ y\in\mathbb{R}^{3}\mid y=(u, v, w)^{T}, u^{2}-v+5\leq0\}\). Find \(x\in C\), \(y\in Q\) such that \(Ax=By\).

It is easy to verify that this problem has a unique solution \((\bar{x}, \bar{y})\in\mathbb{R}^{3}\times \mathbb{R}^{3}\), where \(\bar{x}=(0, 1, 0)^{T}\), \(\bar{y}=(0, 5, 0)^{T}\). In the experiments, we take \(\gamma=0.9\times\min(\frac{1}{\|A\|^{2}},\frac{1}{\|B\|^{2}})\) in the RACQA (1.8) and \(\tau=0.9\times(1+c)^{-1}\) with \(c=\max(\|A\|^{2}, \|B\|^{2})\) in Algorithm 4.1. The iteration is stopped when both \(\|x_{k+1}-x_{k}\|+\|y_{k+1}-y_{k}\|<10^{-3}\) and \(\|Ax_{k}-By_{k}\|<10^{-3}\) hold. The numerical results are reported in Tables 1–3; the initial point in Table 3 is generated randomly. From Tables 1–3, we can see that both the CPU time and the number of iterations of Algorithm 4.1 are smaller than those of the RACQA (1.8).
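The ingredients of Example 6.1 that a relaxed algorithm needs are the level-set functions, one gradient of each, and the stopping test. A minimal Python sketch of these pieces follows (the paper's own experiments were run in MATLAB). The matrices A and B of the example are not reproduced in this version of the text, so `A_demo` and `B_demo` below are hypothetical placeholders used only to exercise the stopping test; all function names are ours.

```python
import numpy as np

def c(x):
    """c(x) = v^2 + w^2 - 1 for x = (u, v, w), so that C = {x : c(x) <= 0}."""
    return x[1] ** 2 + x[2] ** 2 - 1.0

def grad_c(x):
    return np.array([0.0, 2.0 * x[1], 2.0 * x[2]])

def q(y):
    """q(y) = u^2 - v + 5 for y = (u, v, w), so that Q = {y : q(y) <= 0}."""
    return y[0] ** 2 - y[1] + 5.0

def grad_q(y):
    return np.array([2.0 * y[0], -1.0, 0.0])

def should_stop(x_new, x_old, y_new, y_old, A, B, tol=1e-3):
    """Stopping rule of Section 6: small step and small residual at (x_k, y_k)."""
    step = np.linalg.norm(x_new - x_old) + np.linalg.norm(y_new - y_old)
    residual = np.linalg.norm(A @ x_old - B @ y_old)
    return step < tol and residual < tol

x_bar = np.array([0.0, 1.0, 0.0])
y_bar = np.array([0.0, 5.0, 0.0])
# Hypothetical matrices (NOT those of Example 6.1) satisfying A x_bar = B y_bar.
A_demo, B_demo = 5.0 * np.eye(3), np.eye(3)
print(c(x_bar), q(y_bar), should_stop(x_bar, x_bar, y_bar, y_bar, A_demo, B_demo))
```

Both constraints are active at the reported solution, since \(c(\bar{x})=0\) and \(q(\bar{y})=0\); that is, \(\bar{x}\) and \(\bar{y}\) lie on the boundaries of C and Q.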

References

[1] Bauschke, H.H., Borwein, J.M.: On projection algorithms for solving convex feasibility problems. SIAM Rev. 38, 367–426 (1996)

[2] Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Space. Springer, New York (2011)

[3] Byrne, C.: Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 18, 441–453 (2002)

[4] Byrne, C.: A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 20, 103–120 (2004)

[5] Censor, Y., Elfving, T.: A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 8, 221–239 (1994)

[6] Chuang, C., Du, W.: Hybrid simultaneous algorithms for the split equality problem with applications. J. Inequal. Appl. 2016(1), 198 (2016)

[7] Dong, Q., He, S., Zhao, J.: Solving the split equality problem without prior knowledge of operator norms. Optimization 64(9), 1887–1906 (2015)

[8] Fukushima, M.: A relaxed projection method for variational inequalities. Math. Program. 35, 58–70 (1986)

[9] He, B.S.: Inexact implicit methods for monotone general variational inequalities. Math. Program., Ser. A 86, 199–217 (1999)

[10] Moudafi, A.: A relaxed alternating CQ-algorithm for convex feasibility problems. Nonlinear Anal. 79, 117–121 (2013)

[11] Moudafi, A.: Alternating CQ-algorithm for convex feasibility and split fixed-point problems. J. Nonlinear Convex Anal. 15, 809–818 (2014)

[12] Naraghirad, E.: On an open question of Moudafi for convex feasibility problem in Hilbert spaces. Taiwan. J. Math. 18(2), 371–408 (2014)

[13] Qu, B., Xiu, N.H.: A new half-space-relaxation projection method for the split feasibility problem. Linear Algebra Appl. 428, 1218–1229 (2008)

[14] Shi, L., Chen, R., Wu, Y.: Strong convergence of iterative algorithms for the split equality problem. J. Inequal. Appl. 2014(1), 478 (2014)

[15] Takahashi, W.: Nonlinear Functional Analysis, Fixed Point Theory and Its Applications. Yokohama Publishers, Yokohama (2000)

[16] Tang, Y., Liu, L.: Iterative methods of strong convergence theorems for the split feasibility problem in Hilbert spaces. J. Inequal. Appl. 2016(1), 284 (2016)

[17] Wang, F.: A new iterative method for the split common fixed point problem in Hilbert spaces. Optimization 66, 407–415 (2017)

[18] Wang, F.: On the convergence of CQ algorithm with variable steps for the split equality problem. Numer. Algorithms 74, 927–935 (2017)

[19] Wang, F.: Polyak’s gradient method for split feasibility problem constrained by level sets. Numer. Algorithms 77, 925–938 (2018)

[20] Wang, F., Xu, H.K.: Cyclic algorithms for split feasibility problems in Hilbert spaces. Nonlinear Anal. 74, 4105–4111 (2011)

[21] Xu, H.K.: Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 66, 240–256 (2002)

[22] Xu, H.K.: A variable Krasnosel’skii–Mann algorithm and the multiple-set split feasibility problem. Inverse Probl. 22, 2021–2034 (2006)

[23] Xu, H.K.: Iterative methods for the split feasibility problem in infinite-dimensional Hilbert spaces. Inverse Probl. 26, 105018 (2010)

[24] Xu, H.K.: Properties and iterative methods for the lasso and its variants. Chin. Ann. Math., Ser. B 35(3), 501–518 (2014)

[25] Yang, Q.: The relaxed CQ algorithm solving the split feasibility problem. Inverse Probl. 20, 1261–1266 (2004)

[26] Yang, Q.: On variable-step relaxed projection algorithm for variational inequalities. J. Math. Anal. Appl. 302, 166–179 (2005)

[27] Yao, Y., Liou, Y., Postolache, M.: Self-adaptive algorithms for the split problem of the demicontractive operators. Optimization (2017). https://doi.org/10.1080/02331934.2017.1390747

[28] Yu, H., Zhan, W., Wang, F.: The ball-relaxed CQ algorithms for the split feasibility problem. Optimization (2018). https://doi.org/10.1080/02331934.2018.1485677

Acknowledgements

Not applicable.

Availability of data and materials

Not applicable.

Funding

This work was supported by the National Natural Science Foundation of China (Nos. 11301253, 11571005), Program for Science and Technology Innovation Talents in the Universities of Henan Province (Grant No. 15HASTIT013), Innovation Scientists and Technicians Troop Construction Projects of Henan Province (Grant No. CXTD20150027) and Foundation of He’nan Educational Committee (Nos. 16A520064, 15A520087).

Author information

Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Yu, H., Wang, F. Relaxed alternating CQ algorithms for the split equality problem in Hilbert spaces. J Inequal Appl 2018, 335 (2018). https://doi.org/10.1186/s13660-018-1933-2

DOI: https://doi.org/10.1186/s13660-018-1933-2