Abstract

In this paper we study the bounded perturbation resilience of the extragradient and the subgradient extragradient methods for solving a variational inequality (VI) problem in real Hilbert spaces. This is an important property of algorithms, which guarantees the convergence of the scheme under summable errors, meaning that inexact versions of the methods can also be considered. Moreover, once an algorithm is proved to be bounded perturbation resilient, superiorization can be used; this allows flexibility in choosing the bounded perturbations in order to obtain a superior solution, as explained in the paper. We also discuss some inertial extragradient methods. Under mild and standard assumptions of monotonicity and Lipschitz continuity of the VI’s associated mapping, convergence of the perturbed extragradient and subgradient extragradient methods is proved. In addition, we show that the perturbed algorithms converge at the rate of \(O(1/t)\). Numerical illustrations are given to demonstrate the performance of the algorithms.


1 Introduction

In this paper we are concerned with the variational inequality (VI) problem of finding a point \(x^{\ast}\in C\) such that

\[
\langle F(x^{\ast}),x-x^{\ast}\rangle\geq0\quad\text{for all }x\in C,\qquad(1.1)
\]

where \(C\subseteq\mathcal{H}\) is a nonempty, closed and convex set in a real Hilbert space \(\mathcal{H}\), \(\langle\cdot,\cdot\rangle\) denotes the inner product in \(\mathcal{H}\), and \(F:\mathcal{H}\rightarrow\mathcal{H}\) is a given mapping. This problem is a fundamental problem in optimization theory, and it captures various applications such as partial differential equations, optimal control and mathematical programming; for the theory and application of VIs and related problems, the reader is referred, for example, to the works of Ceng et al. [1], Zegeye et al. [2], the papers of Yao et al. [3–5] and the many references therein.

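Many algorithms for solving VI (1.1) are projection algorithms that employ projections onto the feasible set C of VI (1.1), or onto some related set, in order to reach a solution iteratively. Korpelevich [6] and Antipin [7] proposed an algorithm for solving (1.1), known as the extragradient method; see also Facchinei and Pang [8, Chapter 12]. In each iteration of the algorithm, two orthogonal projections onto C are calculated in order to obtain the next iterate \(x^{k+1}\), according to the following iterative step. Given the current iterate \(x^{k}\), calculate

\[
\begin{cases}
y^{k}=P_{C}(x^{k}-\gamma_{k}F(x^{k})),\\
x^{k+1}=P_{C}(x^{k}-\gamma_{k}F(y^{k})),
\end{cases}
\qquad(1.2)
\]

where \(\gamma_{k}\in(0,1/L)\) and L is the Lipschitz constant of F, or \(\gamma_{k}\) is updated by the following adaptive procedure: \(\gamma_{k}=\sigma\rho^{m_{k}}\), where \(\sigma>0\), \(\rho,\mu\in(0,1)\), and \(m_{k}\) is the smallest nonnegative integer such that

\[
\gamma_{k}\Vert F(x^{k})-F(y^{k})\Vert\leq\mu\Vert x^{k}-y^{k}\Vert.\qquad(1.3)
\]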
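
The iterative step (1.2) combined with the Armijo-like rule (1.3) can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' code: the helper names, the defaults σ = 1, ρ = 0.5, μ = 0.7, and the test problem (the rotation \(F(x)=(x_{2},-x_{1})\), which is monotone and 1-Lipschitz but not strongly monotone, over the box \([-1,1]^{2}\), whose unique solution is the origin) are assumptions chosen so that the behavior is easy to check. The inner loop is the backtracking search for \(m_{k}\); it stops at the latest once \(\gamma_{k}\leq\mu/L\).

```python
import math


def norm(v):
    return math.sqrt(sum(t * t for t in v))


def extragradient(F, proj_C, x0, sigma=1.0, rho=0.5, mu=0.7, tol=1e-10, max_iter=10_000):
    """Extragradient iteration (1.2) with the Armijo-like step-size rule (1.3).

    Returns (approximate solution, iterations used, list of accepted step sizes).
    """
    x = proj_C(list(x0))
    steps = []
    for k in range(max_iter):
        Fx = F(x)
        gamma = sigma
        while True:
            # First projection: y = P_C(x - gamma * F(x)).
            y = proj_C([xi - gamma * fi for xi, fi in zip(x, Fx)])
            diff_F = norm([p - q for p, q in zip(Fx, F(y))])
            # Accept gamma = sigma * rho**m once gamma*||F(x)-F(y)|| <= mu*||x-y||.
            if gamma * diff_F <= mu * norm([p - q for p, q in zip(x, y)]):
                break
            gamma *= rho
        steps.append(gamma)
        if norm([p - q for p, q in zip(x, y)]) <= tol:  # x == y means x solves the VI
            return x, k, steps
        Fy = F(y)
        # Second projection uses F evaluated at the extrapolated point y.
        x = proj_C([xi - gamma * fi for xi, fi in zip(x, Fy)])
    return x, max_iter, steps


def F_rot(x):
    """Rotation field: monotone and 1-Lipschitz, but not strongly monotone."""
    return [x[1], -x[0]]


def proj_box(x, lo=-1.0, hi=1.0):
    return [min(max(t, lo), hi) for t in x]


sol, iters, steps = extragradient(F_rot, proj_box, [1.0, 1.0])
print(sol, iters, min(steps))
```

For this F one has \(\Vert F(x)-F(y)\Vert=\Vert x-y\Vert\), so the rule accepts \(\gamma_{k}=0.5\) at every step, which is consistent with the lower bound \(\min\{\sigma,\mu\rho/L\}=0.35\) of Lemma 3.6.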

In the extragradient method, two orthogonal projections onto C have to be computed in each iteration. The method is particularly useful when C is simple enough that projections onto it are easy to compute; but if C is a general closed and convex set, a minimal distance problem has to be solved twice in order to obtain the next iterate, which might seriously affect the efficiency of the method. Hence Censor et al. [9–11] presented the subgradient extragradient method, in which the second projection onto C in (1.2) is replaced by a specific subgradient projection that is easy to calculate. The iterative step has the following form:

\[
\begin{cases}
y^{k}=P_{C}(x^{k}-\gamma F(x^{k})),\\
x^{k+1}=P_{T_{k}}(x^{k}-\gamma F(y^{k})),
\end{cases}
\qquad(1.4)
\]

where \(T_{k}\) is the set defined as

\[
T_{k}:=\{w\in\mathcal{H}\mid\langle(x^{k}-\gamma F(x^{k}))-y^{k},w-y^{k}\rangle\leq0\},
\]

and \(\gamma\in(0,1/L)\).
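
The only change in (1.4) is that the second projection is onto the half-space \(T_{k}\), whose projection has a closed form. The following is a minimal sketch under stated assumptions: the function names are hypothetical, the step γ is fixed in \((0,1/L)\), and the test problem \(F(x)=x-p\) with \(p=(2,-1)\) on \(C=[0,1]^{2}\) (whose VI solution is \(P_{C}(p)=(1,0)\)) is chosen only because its answer is easy to verify. Note that \(x^{k+1}=P_{T_{k}}(\cdot)\) need not lie in C.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def proj_halfspace(z, a, y):
    """Project z onto T = {w : <a, w - y> <= 0}; T is the whole space when a = 0."""
    viol = dot(a, [zi - yi for zi, yi in zip(z, y)])
    aa = dot(a, a)
    if aa == 0.0 or viol <= 0.0:
        return list(z)  # z already lies in T
    t = viol / aa
    return [zi - t * ai for zi, ai in zip(z, a)]


def subgradient_extragradient(F, proj_C, x0, gamma, tol=1e-10, max_iter=10_000):
    """Iteration (1.4): one projection onto C, then an explicit projection onto T_k."""
    x = list(x0)  # the starting point may lie outside C
    for k in range(max_iter):
        u = [xi - gamma * fi for xi, fi in zip(x, F(x))]   # x^k - gamma F(x^k)
        y = proj_C(u)
        if math.dist(x, y) <= tol:
            return y, k
        a = [ui - yi for ui, yi in zip(u, y)]               # normal vector of T_k
        z = [xi - gamma * fi for xi, fi in zip(x, F(y))]    # x^k - gamma F(y^k)
        x = proj_halfspace(z, a, y)
    return x, max_iter


# Hypothetical test problem: F(x) = x - p is monotone with L = 1 and C = [0, 1]^2.
p = [2.0, -1.0]


def F(x):
    return [xi - pi for xi, pi in zip(x, p)]


def proj_C(x):
    return [min(max(t, 0.0), 1.0) for t in x]


sol, iters = subgradient_extragradient(F, proj_C, [0.0, 0.0], gamma=0.5)
print(sol, iters)
```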

In this manuscript we prove that the above methods, the extragradient and the subgradient extragradient methods, are bounded perturbation resilient, and that the perturbed methods converge at the rate \(O(1/t)\). This means that inexact versions of the algorithms, which incorporate summable errors, also converge to a solution of VI (1.1). Moreover, superiorized versions can be introduced by choosing the perturbations so as to obtain a solution that is superior with respect to some new objective function; for example, by choosing the norm as the objective function, we can obtain a solution of VI (1.1) that is closer to the origin.

Our paper is organized as follows. In Section 2 we present the preliminaries. In Section 3 we study the convergence of the extragradient method with outer perturbations. Later, in Section 4, the bounded perturbation resilience of the extragradient method as well as the construction of the inertial extragradient methods are presented.

In the spirit of the previous sections, in Section 5 we study the convergence of the subgradient extragradient method with outer perturbations, show its bounded perturbation resilience and the construction of the inertial subgradient extragradient methods. Finally, in Section 6 we present numerical examples in signal processing which demonstrate the performances of the perturbed algorithms.

2 Preliminaries

Let \(\mathcal{H}\) be a real Hilbert space with the inner product \(\langle\cdot ,\cdot\rangle\) and the induced norm \(\Vert\cdot\Vert\), and let D be a nonempty, closed and convex subset of \(\mathcal{H}\). We write \(x^{k}\rightharpoonup x\) to indicate that the sequence \(\{ x^{k} \} _{k=0}^{\infty}\) converges weakly to x and \(x^{k}\rightarrow x\) to indicate that the sequence \(\{ x^{k} \} _{k=0}^{\infty}\) converges strongly to x. Given a sequence \(\{ x^{k} \} _{k=0}^{\infty}\), denote by \(\omega_{w}(x^{k})\) its weak ω-limit set, that is, the set of all points x for which there exists a subsequence \(\{ x^{k_{j}} \} _{j=0}^{\infty}\) of \(\{ x^{k} \} _{k=0}^{\infty}\) that converges weakly to x.

For each point \(x\in\mathcal{H}\), there exists a unique nearest point in D, denoted by \(P_{D}(x)\), that is,

\[
\Vert x-P_{D}(x)\Vert=\min\{\Vert x-y\Vert\mid y\in D\}.
\]

The mapping \(P_{D}:\mathcal{H}\rightarrow D\) is called the metric projection of \(\mathcal{H}\) onto D. It is well known that \(P_{D}\) is a nonexpansive mapping of \(\mathcal{H}\) onto D, i.e.,

\[
\Vert P_{D}(x)-P_{D}(y)\Vert\leq\Vert x-y\Vert\quad\text{for all }x,y\in\mathcal{H},
\]

and even a firmly nonexpansive mapping. This is captured in the next lemma.

Lemma 2.1

For any \(x,y\in\mathcal{H}\) and \(z\in D\), it holds

-

\(\Vert P_{D}(x)-P_{D}(y)\Vert^{2}\leq\langle P_{D}(x)-P_{D}(y),x-y\rangle\);

-

\(\Vert P_{D}(x)-z\Vert^{2}\leq\Vert x-z\Vert^{2}-\Vert P_{D}(x)-x\Vert^{2}\).
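
A quick numerical sanity check can make the two inequalities of Lemma 2.1 concrete. The sketch below assumes that D is a box (so that \(P_{D}\) is componentwise clipping) and checks, on random samples, firm nonexpansiveness \(\Vert P_{D}(x)-P_{D}(y)\Vert^{2}\leq\langle P_{D}(x)-P_{D}(y),x-y\rangle\) and inequality (ii). It only illustrates the lemma and is no substitute for its proof; the box bounds, dimension and sample size are arbitrary choices.

```python
import random


def proj_box(x, lo, hi):
    """Metric projection onto the box D = {x : lo <= x <= hi} (componentwise clipping)."""
    return [min(max(t, l), h) for t, l, h in zip(x, lo, hi)]


def sq(v):
    return sum(t * t for t in v)


def max_violation(trials=2000, n=4, seed=1):
    """Largest violation of the two inequalities of Lemma 2.1 over random samples."""
    rng = random.Random(seed)
    lo, hi = [-1.0] * n, [2.0] * n
    worst = float("-inf")
    for _ in range(trials):
        x = [rng.uniform(-5, 5) for _ in range(n)]
        y = [rng.uniform(-5, 5) for _ in range(n)]
        z = [rng.uniform(l, h) for l, h in zip(lo, hi)]  # z in D
        px, py = proj_box(x, lo, hi), proj_box(y, lo, hi)
        d = [a - b for a, b in zip(px, py)]
        # (i) firm nonexpansiveness: ||Px - Py||^2 <= <Px - Py, x - y>
        v1 = sq(d) - sum(di * (xi - yi) for di, xi, yi in zip(d, x, y))
        # (ii) ||Px - z||^2 <= ||x - z||^2 - ||Px - x||^2
        v2 = sq([a - b for a, b in zip(px, z)]) - (
            sq([a - b for a, b in zip(x, z)]) - sq([a - b for a, b in zip(px, x)])
        )
        worst = max(worst, v1, v2)
    return worst


print(max_violation())
```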

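The metric projection \(P_{D}\) is characterized [12, Section 3] by the two properties in the following lemma.

Lemma 2.2

Given \(x\in\mathcal{H}\) and \(z\in D\), we have \(z=P_{D}(x)\) if and only if

\[
\langle x-z,z-y\rangle\geq0\quad\text{for all }y\in D,
\]

and

\[
\Vert z-y\Vert^{2}\leq\Vert x-y\Vert^{2}-\Vert x-z\Vert^{2}\quad\text{for all }y\in D.
\]
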
Definition 2.3

The normal cone of D at \(v\in D\), denoted by \(N_{D}(v)\), is defined as

\[
N_{D}(v):=\{d\in\mathcal{H}\mid\langle d,y-v\rangle\leq0\text{ for all }y\in D\}.
\]

Definition 2.4

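Let \(B:\mathcal{H}\rightarrow2^{\mathcal{H}}\) be a point-to-set operator defined on a real Hilbert space \(\mathcal{H}\). The operator B is called a maximal monotone operator if B is monotone, i.e.,

\[
\langle u-v,x-y\rangle\geq0\quad\text{for all }u\in B(x)\text{ and }v\in B(y),
\]

and the graph \(G(B)\) of B,

\[
G(B):=\{(x,u)\in\mathcal{H}\times\mathcal{H}\mid u\in B(x)\},
\]
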
is not properly contained in the graph of any other monotone operator.

Based on Rockafellar [13, Theorem 3], a monotone mapping B is maximal if and only if, for any \(( x,u ) \in\mathcal{H}\times \mathcal{H}\), the condition \(\langle u-v,x-y \rangle\geq0\) for all \(( y,v ) \in G(B)\) implies that \(u\in B(x)\).

Definition 2.5

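Let \(c:\mathcal{H}\rightarrow\mathbb{R}\) be a convex function. The subdifferential set of c at a point x is defined as

\[
\partial c(x):=\{\xi\in\mathcal{H}\mid c(y)\geq c(x)+\langle\xi,y-x\rangle\text{ for all }y\in\mathcal{H}\}.
\]

For \(z\in\mathcal{H}\), take any \(\xi\in\partial c(z)\) and define

\[
T(z):=\{w\in\mathcal{H}\mid c(z)+\langle\xi,w-z\rangle\leq0\}.
\]

When D is the zero sublevel set of c, i.e., \(D=\{x\in\mathcal{H}\mid c(x)\leq0\}\), the subgradient inequality gives \(D\subseteq T(z)\). If \(\xi\neq0\), then T(z) is a half-space, and for \(z\notin D\) its bounding hyperplane separates the set D from the point z; if \(\xi=0\), then \(T(z)=\mathcal{H}\); see, e.g., [14, Lemma 7.3].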
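
The half-space T(z) and the projection onto it have closed forms, which is what makes subgradient projections cheap. The sketch assumes, for illustration only, \(c(x)=\Vert x\Vert^{2}-1\) on \(\mathbb{R}^{2}\), so that D is the closed unit ball and \(\partial c(z)=\{2z\}\). It projects a point z outside D onto T(z) and checks that sampled points of D lie in T(z) while z does not.

```python
import random


def c(x):
    """Convex function whose zero sublevel set D = {x : c(x) <= 0} is the unit ball (assumed)."""
    return sum(t * t for t in x) - 1.0


def subgradient(x):
    """c is differentiable, so grad c(x) = 2x is its only subgradient."""
    return [2.0 * t for t in x]


def in_T(w, z, xi, eps=1e-12):
    """Membership test for T(z) = {w : c(z) + <xi, w - z> <= 0}."""
    return c(z) + sum(a * (wi - zi) for a, wi, zi in zip(xi, w, z)) <= eps


def project_onto_T(z):
    """Projection of z onto T(z); z is returned unchanged when c(z) <= 0 (then z is in T(z))."""
    cz, xi = c(z), subgradient(z)
    if cz <= 0.0:
        return list(z)
    # For this c, c(z) > 0 forces z != 0, hence xi != 0 and the division is safe.
    nxi2 = sum(t * t for t in xi)
    return [zi - (cz / nxi2) * a for zi, a in zip(z, xi)]


z = [3.0, 4.0]  # a point outside D
xi = subgradient(z)
p = project_onto_T(z)
print(p)

rng = random.Random(0)
samples = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(1000)]
in_D = [w for w in samples if c(w) <= 0.0]
print(all(in_T(w, z, xi) for w in in_D), in_T(z, z, xi))
```

The projected point (1.56, 2.08) lies on the boundary of T(z) but still outside D; the subgradient projection is only a cheap surrogate for \(P_{D}\), which would map z to (0.6, 0.8).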

Lemma 2.6

([15])

Let D be a nonempty, closed and convex subset of a Hilbert space \(\mathcal{H}\). Let \(\{x^{k}\}_{k=0}^{\infty}\) be a bounded sequence which satisfies the following properties:

-

every weak limit point of \(\{x^{k}\}_{k=0}^{\infty}\) lies in D;

-

\(\lim_{k\rightarrow\infty}\Vert x^{k}-x\Vert\) exists for every \(x\in D\).

Then \(\{x^{k}\}_{k=0}^{\infty}\) converges weakly to a point in D.

Lemma 2.7

Assume that \(\{a_{k}\}_{k=0}^{\infty}\) is a sequence of nonnegative real numbers such that

\[
a_{k+1}\leq(1+\gamma_{k})a_{k}+\delta_{k}\quad\text{for all }k\geq0,
\]

where the nonnegative sequences \(\{\gamma_{k}\}_{k=0}^{\infty}\) and \(\{\delta_{k}\}_{k=0}^{\infty}\) satisfy \(\sum_{k=0}^{\infty}\gamma _{k}<+\infty\) and \(\sum_{k=0}^{\infty}\delta_{k}<+\infty\), respectively. Then \(\lim_{k\rightarrow\infty}a_{k}\) exists.
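
In the convergence proofs, Lemma 2.7 turns a perturbed Fejér-type inequality into the existence of a limit. The sketch below, with arbitrarily chosen summable sequences \(\gamma_{k}=1/(k+1)^{2}\) and \(\delta_{k}=2^{-k}\), iterates the recursion with equality (the largest sequence the hypothesis allows) and compares it with the bound \(a_{k}\leq\prod_{j<k}(1+\gamma_{j})\,(a_{0}+\sum_{j<k}\delta_{j})\) obtained by unrolling the recursion. It illustrates the lemma rather than proving it.

```python
import math


def worst_case_sequence(a0, gammas, deltas):
    """Largest sequence allowed by a_{k+1} <= (1 + gamma_k) a_k + delta_k (equality each step)."""
    a = [a0]
    for g, d in zip(gammas, deltas):
        a.append((1.0 + g) * a[-1] + d)
    return a


N = 10_000
gammas = [1.0 / (k + 1) ** 2 for k in range(N)]  # summable
deltas = [0.5 ** k for k in range(N)]             # summable
a = worst_case_sequence(1.0, gammas, deltas)

# Unrolling the recursion gives a_N <= prod_j (1 + gamma_j) * (a_0 + sum_j delta_j).
bound = math.prod(1.0 + g for g in gammas) * (1.0 + sum(deltas))
print(a[-1], bound, a[-1] - a[-101])
```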

3 The extragradient method with outer perturbations

In order to discuss the convergence of the extragradient method with outer perturbations, we make the following assumptions.

Condition 3.1

The solution set of (1.1), denoted by \(\mathit{SOL}(C,F)\), is nonempty.

Condition 3.2

The mapping F is monotone on C, i.e.,

\[
\langle F(x)-F(y),x-y\rangle\geq0\quad\text{for all }x,y\in C.\qquad(3.1)
\]

Condition 3.3

The mapping F is Lipschitz continuous on C with the Lipschitz constant \(L>0\), i.e.,

\[
\Vert F(x)-F(y)\Vert\leq L\Vert x-y\Vert\quad\text{for all }x,y\in C.\qquad(3.2)
\]

Observe that while Censor et al. [10, Theorem 3.1] showed the weak convergence of the extragradient method (1.2) in Hilbert spaces for a fixed step size \(\gamma_{k}=\gamma\in(0,1/L)\), this result can easily be extended to the case where the adaptive rule (1.3) is used. The next theorem states this; its proof follows along the lines of the proof of [10, Theorem 3.1].

Theorem 3.4

Assume that Conditions 3.1-3.3 hold. Then any sequence \(\{x^{k}\}_{k=0}^{\infty}\) generated by the extragradient method (1.2) with the adaptive rule (1.3) weakly converges to a solution of the variational inequality (1.1).

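Let \(e_{i}:\mathcal{H}\rightarrow\mathcal{H}\), \(i=1,2\), be perturbation mappings, and denote \(e_{i}^{k}:=e_{i}(x^{k})\), \(i=1,2\). The sequences of perturbations \(\{e_{i}^{k}\}_{k=0}^{\infty}\), \(i=1,2\), are assumed to be summable, i.e.,

\[
\sum_{k=0}^{\infty}\Vert e_{i}^{k}\Vert<+\infty,\quad i=1,2.\qquad(3.3)
\]
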
Now we consider the extragradient method with outer perturbations.

Algorithm 3.5

The extragradient method with outer perturbations:

Step 0: Select a starting point \(x^{0}\in C\) and set \(k=0\).

Step 1: Given the current iterate \(x^{k}\), compute

where \(\gamma_{k}=\sigma\rho^{m_{k}}\), \(\sigma>0\), \(\rho\in(0,1)\) and \(m_{k}\) is the smallest nonnegative integer such that (see [16])

Calculate the next iterate

Step 2: If \(x^{k}=y^{k}\), then stop. Otherwise, set \(k\leftarrow(k+1)\) and return to Step 1.

3.1 Convergence analysis

Lemma 3.6

([17])

The Armijo-like search rule (3.5) is well defined. Moreover, \(\underline{\gamma}\leq\gamma_{k}\leq\sigma\), where \(\underline{\gamma}=\min\{\sigma,\frac{\mu\rho}{L}\}\).

Theorem 3.7

Assume that Conditions 3.1-3.3 hold. Then the sequence \(\{x^{k}\}_{k=0}^{\infty}\) generated by Algorithm 3.5 converges weakly to a solution of the variational inequality (1.1).

Proof

Take \(x^{*}\in \mathit{SOL}(C,F)\). From (3.6) and Lemma 2.1(ii), we have

From the Cauchy-Schwarz inequality and the arithmetic-geometric mean inequality, it follows

Using \(x^{*}\in \mathit{SOL}(C,F)\) and the monotonicity of F, we have \(\langle y^{k}-x^{*},F(y^{k})\rangle\geq0\) and, consequently, we get

Thus, we have

where the equality comes from

Using \(x^{k+1}\in C\), the definition of \(y^{k}\) and Lemma 2.2, we have

So, we obtain

From (3.3), it follows

Therefore, without loss of generality, we may assume that \(\|e_{1}^{k}\|\in[0,1- \mu-\nu)\) and \(\|e_{2}^{k}\|\in [0,1/2)\) for all \(k\geq0\), where \(\nu\in(0,1-\mu)\). So, using (3.13), we get

Combining (3.7)-(3.10) and (3.15), we obtain

where

From (3.16), it follows

Since \(\|e_{2}^{k}\|\in[0,1/2)\), \(k\geq0\), we get

So, from (3.18), we have

Using (3.3) and Lemma 2.7, we obtain the existence of \(\lim_{k\rightarrow\infty}\|x^{k}-x^{*}\|^{2}\) and hence the boundedness of \(\{x^{k}\}_{k=0}^{\infty}\). From (3.20), it follows

which means that

Thus, we obtain

and consequently,

We now show that \(\omega_{w}(x^{k})\subseteq \mathit{SOL}(C,F)\). Since \(\{x^{k}\}_{k=0}^{\infty}\) is bounded, it has at least one weak accumulation point. Let \(\hat{x}\in\omega_{w}(x^{k})\). Then there exists a subsequence \(\{x^{k_{i}}\} _{i=0}^{\infty}\) of \(\{x^{k}\}_{k=0}^{\infty}\) which converges weakly to x̂. From (3.23), it follows that \(\{y^{k_{i}}\}_{i=0}^{\infty}\) also converges weakly to x̂.

We will show that x̂ is a solution of the variational inequality (1.1). Let

\[
A(v):=\begin{cases}F(v)+N_{C}(v), & v\in C,\\ \emptyset, & v\notin C,\end{cases}
\]

where \(N_{C}(v)\) is the normal cone of C at \(v\in C\). It is known that A is a maximal monotone operator and \(A^{-1}(0)=\mathit{SOL}(C,F)\). If \((v,w)\in G(A)\), then we have \(w-F(v)\in N_{C}(v)\) since \(w\in A(v)=F(v)+N_{C}(v)\). Thus it follows that

\[
\langle w-F(v),v-y\rangle\geq0\quad\text{for all }y\in C.
\]

Since \(y^{k_{i}}\in C\), we have

On the other hand, by the definition of \(y^{k}\) and Lemma 2.2, it follows that

and consequently,

Hence we have

which implies

Taking the limit as \(i\rightarrow\infty\) in the above inequality and using Lemma 3.6, we obtain

Since A is a maximal monotone operator, it follows that \(\hat{x}\in A^{-1}(0) = \mathit{SOL}(C,F)\). So, \(\omega_{w}(x^{k})\subseteq \mathit{SOL}(C,F)\).

Since \(\lim_{k\rightarrow\infty}\|x^{k}-x^{*}\|\) exists and \(\omega_{w}(x^{k})\subseteq \mathit{SOL}(C,F)\), using Lemma 2.6, we conclude that \(\{x^{k}\}_{k=0}^{\infty}\) weakly converges to a solution of the variational inequality (1.1). This completes the proof. □

3.2 Convergence rate

Nemirovski [18] and Tseng [19] proved the \(O(1/t) \) convergence rate of the extragradient method. In this subsection, we present the convergence rate of Algorithm 3.5.

Theorem 3.8

Assume that Conditions 3.1-3.3 hold. Let the sequences \(\{x^{k}\}_{k=0}^{\infty}\) and \(\{y^{k}\}_{k=0}^{\infty}\) be generated by Algorithm 3.5. For any integer \(t>0\), we have \(y_{t}\in C\) which satisfies

where

and

Proof

Take an arbitrary \(x\in C\). From Conditions 3.2 and 3.3, we have

By (3.6) and Lemma 2.2, we get

Identifying \(x^{*}\) with x in (3.7) and (3.8), and combining (3.36) and (3.37), we get

Thus, we have

where \(M^{\prime}(x)=\sup_{k}\{\max\{\|x^{k+1}-y^{k}\|,\|x^{k+1}-x\|\}\} <+\infty\). Summing inequality (3.39) over \(k = 0,\ldots, t\), we obtain

Using the notation \(\Upsilon_{t}\) and \(y_{t}\) in the above inequality, we derive

The proof is complete. □

Remark 3.9

From Lemma 3.6, it follows

thus Algorithm 3.5 has \(O(1/t)\) convergence rate. In fact, for any bounded subset \(D \subset C\) and given accuracy \(\epsilon> 0\), our algorithm achieves

in at most

iterations, where \(y_{t}\) is defined by (3.34) and \(m=\sup\{\| x-x^{0}\|^{2}+M(x)\mid x\in D\}\).

4 The bounded perturbation resilience of the extragradient method

In this section, we prove the bounded perturbation resilience (BPR) of the extragradient method. This property is fundamental for the application of the superiorization methodology (SM) to it.

The superiorization methodology of [20–22], which originates in the papers by Butnariu, Reich and Zaslavski [23–25], is intended for constrained minimization (CM) problems of the form

\[
\min\{\phi(x)\mid x\in\Psi\},\qquad(4.1)
\]

where \(\phi:\mathcal{H}\rightarrow\mathbb{R}\) is an objective function and \(\Psi\subseteq\mathcal{H}\) is the solution set of another problem. Throughout this paper we assume that \(\Psi\neq\emptyset\). If Ψ is a closed and convex subset of \(\mathcal{H}\), then (4.1) is a standard CM problem. Here, we are interested in the case in which Ψ is the solution set of another CM problem of the form

\[
\min\{f(x)\mid x\in C\},\qquad(4.2)
\]

i.e., we wish to look at

\[
\min\{\phi(x)\mid x\in\Psi:=\operatorname{argmin}\{f(x)\mid x\in C\}\},\qquad(4.3)
\]

provided that Ψ is nonempty. If f is convex and differentiable and \(F=\nabla f\), then CM (4.2) is equivalent to the following variational inequality: find a point \(x^{\ast}\in C\) such that

\[
\langle\nabla f(x^{\ast}),x-x^{\ast}\rangle\geq0\quad\text{for all }x\in C.\qquad(4.4)
\]

The superiorization methodology (SM) strives not to solve (4.1) but rather to find a point in Ψ which is superior, i.e., has a lower, but not necessarily minimal, value of the objective function ϕ. This is done in the SM by first investigating the bounded perturbation resilience of an algorithm designed to solve (4.2) and then proactively using such permitted perturbations in order to steer the iterates of such an algorithm toward lower values of the objective function ϕ while not losing the overall convergence to a point in Ψ.

In this paper, we do not investigate superiorization of the extragradient method. We prepare for such an application by proving the bounded perturbation resilience that is needed in order to do superiorization.

Algorithm 4.1

The basic algorithm:

Initialization: \(x^{0} \in\Theta\) is arbitrary;

Iterative step: Given the current iterate vector \(x^{k}\), calculate the next iterate \(x^{k+1}\) via

\[
x^{k+1}=\mathbf{A}_{\Psi}(x^{k}).\qquad(4.5)
\]

The bounded perturbation resilience (henceforth abbreviated to BPR) of such a basic algorithm is defined next.

Definition 4.2

An algorithmic operator \(\mathbf{A}_{\Psi}:\mathcal{H}\rightarrow \Theta\) is said to be bounded perturbation resilient if the following is true. If Algorithm 4.1 generates sequences \(\{x^{k}\}_{k=0}^{\infty}\) with \(x^{0}\in\Theta\) that converge to points in Ψ, then any sequence \(\{y^{k}\}_{k=0}^{\infty}\), starting from any \(y^{0}\in\Theta\) and generated by

\[
y^{k+1}=\mathbf{A}_{\Psi}(y^{k}+\lambda_{k}v^{k})\quad\text{for all }k\geq0,\qquad(4.6)
\]

also converges to a point in Ψ, provided that (i) the sequence \(\{v^{k}\}_{k=0}^{\infty}\) is bounded, and (ii) the scalars \(\{\lambda_{k}\} _{k=0}^{\infty}\) are such that \(\lambda_{k}\geq0\) for all \(k \geq0\), and \(\sum_{k=0}^{\infty}\lambda_{k}<+\infty\), and (iii) \(y^{k}+\lambda_{k}v^{k}\in \Theta\) for all \(k \geq0\).

Condition (iii) in Definition 4.2 is needed only if \(\Theta\neq\mathcal{H}\); in that case it is enforced in the superiorized version of the basic algorithm; see step (xiv) in the ‘Superiorized Version of Algorithm P’ in ([26], p.5537) and step (14) in ‘Superiorized Version of the ML-EM Algorithm’ in ([27], Subsection II.B). This will be the case in the present work.

Treating the extragradient method as the Basic Algorithm \(\mathbf {A}_{\Psi}\), our strategy is to first prove convergence of the iterative step (1.2) with bounded perturbations. We show next how the convergence of this yields BPR according to Definition 4.2.

A superiorized version of any Basic Algorithm employs the perturbed version of the Basic Algorithm as in (4.6). A certificate to do so in the superiorization method, see [28], is gained by showing that the Basic Algorithm is BPR. Therefore, proving the BPR of an algorithm is the first step toward superiorizing it. This is done for the extragradient method in the next subsection.
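
To illustrate how the perturbed iteration (4.6) is used, here is a small superiorization-style sketch. It is not a complete superiorization algorithm and is not taken from the paper: the problem (F is the gradient of \(\frac{1}{2}(\langle a,x\rangle-b)^{2}\) with \(a=(1,1)\), \(b=1\), on \(C=[-2,2]^{2}\), so that the solution set is a segment), the choices \(\lambda_{k}=2^{-k}\) and \(v^{k}=-x^{k}/\Vert x^{k}\Vert\) (a nonascending direction for \(\phi=\Vert\cdot\Vert\)), and the fixed step γ = 0.4 are all assumptions. When the perturbed point would leave Θ = C, the perturbation is skipped, which amounts to taking \(\lambda_{k}=0\) and keeps condition (iii).

```python
import math

# Hypothetical problem data (not from the paper): F is the gradient of
# f(x) = 0.5 * (<a, x> - b)**2, whose VI over C has a whole segment of solutions.
a, b = (1.0, 1.0), 1.0
lo, hi = -2.0, 2.0  # C = Theta = [-2, 2]^2
gamma = 0.4         # fixed step in (0, 1/L), with L = ||a||^2 = 2


def F(x):
    r = a[0] * x[0] + a[1] * x[1] - b
    return (a[0] * r, a[1] * r)


def proj_C(x):
    return tuple(min(max(t, lo), hi) for t in x)


def in_C(x):
    return all(lo <= t <= hi for t in x)


def eg_operator(x):
    """One extragradient step (1.2), playing the role of the operator A_Psi."""
    fx = F(x)
    y = proj_C(tuple(xi - gamma * fi for xi, fi in zip(x, fx)))
    fy = F(y)
    return proj_C(tuple(xi - gamma * fi for xi, fi in zip(x, fy)))


def run(x0, perturb, iters=2000):
    x = proj_C(x0)
    for k in range(iters):
        if perturb:
            nx = math.hypot(*x)
            lam = 0.5 ** k                                          # summable lambda_k
            v = (-x[0] / nx, -x[1] / nx) if nx > 0 else (0.0, 0.0)  # ||v^k|| <= 1
            cand = (x[0] + lam * v[0], x[1] + lam * v[1])
            if in_C(cand):                                          # condition (iii)
                x = cand
        x = eg_operator(x)  # x^{k+1} = A_Psi(x^k + lambda_k v^k)
    return x


plain = run((2.0, 0.0), perturb=False)
sup = run((2.0, 0.0), perturb=True)
print(plain, math.hypot(*plain))
print(sup, math.hypot(*sup))
```

Both runs end on the solution line \(x_{1}+x_{2}=1\); the unperturbed run stops at (1.5, -0.5), while the perturbed run ends closer to the minimum-norm solution (0.5, 0.5), which is the kind of superior solution mentioned in the Introduction.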

4.1 The BPR of the extragradient method

In this subsection, we investigate the bounded perturbation resilience of the extragradient method whose iterative step is given by (1.2).

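To this end, we treat the right-hand side of (1.2) as the algorithmic operator \(\mathbf{A}_{\Psi}\) of Definition 4.2, namely, we define, for all \(k\geq0\),

\[
\mathbf{A}_{\Psi}(x^{k}):=P_{C}\bigl(x^{k}-\gamma_{k}F(P_{C}(x^{k}-\gamma_{k}F(x^{k})))\bigr),\qquad(4.7)
\]
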
and identify the solution set Ψ with the solution set of the variational inequality (1.1) and identify the additional set Θ with C.

According to Definition 4.2, we need to show the convergence of the sequence \(\{x^{k}\}_{k=0}^{\infty}\) that, starting from any \(x^{0}\in C\), is generated by

\[
x^{k+1}=\mathbf{A}_{\Psi}(x^{k}+\lambda_{k}v^{k}),\qquad(4.8)
\]

which can be rewritten as

where \(\gamma_{k}=\sigma\rho^{m_{k}}\), \(\sigma>0\), \(\rho\in(0,1)\) and \(m_{k}\) is the smallest nonnegative integer such that

The sequences \(\{v^{k}\}_{k=0}^{\infty}\) and \(\{\lambda_{k}\} _{k=0}^{\infty}\) obey conditions (i) and (ii) in Definition 4.2, respectively, and also (iii) in Definition 4.2 is satisfied.

The next theorem establishes the bounded perturbation resilience of the extragradient method. The proof idea is to build a relationship between BPR and the convergence of the iterative step (1.2).

Theorem 4.3

Assume that Conditions 3.1-3.3 hold. Assume the sequence \(\{v^{k}\}_{k=0}^{\infty}\) is bounded, and the scalars \(\{\lambda_{k}\}_{k=0}^{\infty}\) are such that \(\lambda_{k}\geq0\) for all \(k \geq 0 \), and \(\sum_{k=0}^{\infty}\lambda_{k}<+\infty\). Then the sequence \(\{ x^{k}\}_{k=0}^{\infty}\) generated by (4.9) and (4.10) converges weakly to a solution of the variational inequality (1.1).

Proof

Take \(x^{*}\in \mathit{SOL}(C,F)\). Since \(\sum_{k=0}^{\infty}\lambda_{k}<+\infty\) and \(\{v^{k}\}_{k=0}^{\infty}\) is bounded, we have

\[
\sum_{k=0}^{\infty}\lambda_{k}\Vert v^{k}\Vert<+\infty,
\]

which means

\[
\lim_{k\rightarrow\infty}\lambda_{k}\Vert v^{k}\Vert=0.
\]

Hence, without loss of generality, we may assume that \(\lambda_{k}\|v^{k}\|\in[0,(1-\mu-\nu)/2)\), where \(\nu\in [0,1-\mu)\). Identifying \(e_{2}^{k}\) with \(\lambda_{k}v^{k}\) in (3.7) and (3.8) and using (3.10), we get

From \(x^{k+1}\in C\), the definition of \(y^{k}\) and Lemma 2.2, we have

So, we obtain

We have

Similar to (3.8), we can show

Combining (4.14)-(4.16), we get

where the last inequality comes from \(\lambda_{k}\Vert v^{k}\Vert <(1-\mu )/2\) and \(\mu<1\). Substituting (4.17) into (4.13), we get

Following the lines of the proof of Theorem 3.7, we conclude that \(\{x^{k}\} _{k=0}^{\infty}\) converges weakly to a solution of the variational inequality (1.1). □

By using Theorems 3.8 and 4.3, we obtain the convergence rate of the extragradient method with bounded perturbations.

Theorem 4.4

Assume that Conditions 3.1-3.3 hold. Assume the sequence \(\{v^{k}\}_{k=0}^{\infty}\) is bounded, and the scalars \(\{\lambda_{k}\}_{k=0}^{\infty}\) are such that \(\lambda_{k}\geq0\) for all \(k \geq 0 \), and \(\sum_{k=0}^{\infty}\lambda_{k}<+\infty\). Let the sequences \(\{ x^{k}\}_{k=0}^{\infty}\) and \(\{y^{k}\}_{k=0}^{\infty}\) be generated by (4.9) and (4.10). For any integer \(t > 0\), we have \(y_{t}\in C\) which satisfies

where

and

4.2 Construction of the inertial extragradient methods by BPR

In this subsection, we construct two classes of inertial extragradient methods by using BPR, i.e., by identifying \(e_{i}^{k}\), \(i=1,2\), \(\lambda_{k}\) and \(v^{k}\) with special values.

Polyak [29, 30] first introduced inertial-type algorithms by using the heavy ball method, which is based on a second-order dynamical system in time. Since inertial-type algorithms can speed up the corresponding algorithms without inertial effects, there has recently been increasing interest in studying them (see, e.g., [31–34]). The authors of [35] introduced an inertial extragradient method as follows:

for each \(k\geq1\), where \(\gamma\in(0,1/L)\), \(\{\alpha_{k}\}\) is nondecreasing with \(\alpha_{1}=0\) and \(0\leq\alpha_{k}\leq\alpha <1\) for each \(k\geq1\) and \(\lambda,\sigma,\delta>0\) are such that

and

where L is the Lipschitz constant of F.

Based on the iterative step (1.2), we construct the following inertial extragradient method:

where

Theorem 4.5

Assume that Conditions 3.1-3.3 hold. Assume that the sequences \(\{\beta_{k}^{(i)}\}_{k=0}^{\infty}\), \(i=1,2\), satisfy \(\sum_{k=1}^{\infty}\beta_{k}^{(i)}<\infty\), \(i=1,2\). Then the sequence \(\{x^{k}\}_{k=0}^{\infty}\) generated by the inertial extragradient method (4.24) converges weakly to a solution of the variational inequality (1.1).

Proof

Let \(e_{i}^{k}=\beta_{k}^{(i)}v^{k}\), \(i=1,2\), where

Clearly, \(\Vert v^{k}\Vert\leq1\). Hence, by the condition on \(\{\beta_{k}^{(i)}\}\), the sequences \(\{e_{i}^{k}\}\), \(i=1,2\), satisfy (3.3). Applying Theorem 3.7 completes the proof. □

Remark 4.6

From (3.24), we have \(\|x^{k}- x^{k-1}\|\leq1\) for sufficiently large k, and hence \(\alpha_{k}^{(i)}=\beta_{k}^{(i)}\) for such k.
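
The equations of the inertial method (4.24) are not reproduced here, so the following is only a hypothetical sketch of the idea behind Theorem 4.5 and Remark 4.6: an inertial term \(\alpha_{k}(x^{k}-x^{k-1})\) with the assumed weight \(\alpha_{k}=\beta_{k}/\max\{\Vert x^{k}-x^{k-1}\Vert,1\}\) has norm at most \(\beta_{k}\), so a summable \(\beta_{k}\) makes it a summable perturbation, and \(\alpha_{k}=\beta_{k}\) once \(\Vert x^{k}-x^{k-1}\Vert\leq1\). The placement of this term inside both projections, the function names and the test problem are assumptions, not the paper's exact scheme.

```python
import math


def inertial_extragradient(F, proj_C, x0, gamma, beta, iters=2000):
    """Hypothetical inertial extragradient sketch (assumed placement, not necessarily (4.24)).

    The inertial vector alpha_k (x^k - x^{k-1}) uses alpha_k = beta(k) / max(||x^k - x^{k-1}||, 1),
    so its norm is at most beta(k); a summable beta makes it a summable perturbation.
    """
    x_prev, x = list(x0), list(x0)
    for k in range(iters):
        d = [xi - pi for xi, pi in zip(x, x_prev)]
        alpha = beta(k) / max(math.hypot(*d), 1.0)
        e = [alpha * di for di in d]  # inertial term, ||e|| <= beta(k)
        y = proj_C([xi - gamma * fi + ei for xi, fi, ei in zip(x, F(x), e)])
        x_new = proj_C([xi - gamma * fi + ei for xi, fi, ei in zip(x, F(y), e)])
        x_prev, x = x, x_new
    return x


def F_rot(x):
    """Monotone, 1-Lipschitz rotation field; the VI solution on the box is the origin."""
    return [x[1], -x[0]]


def proj_box(x, lo=-1.0, hi=1.0):
    return [min(max(t, lo), hi) for t in x]


sol = inertial_extragradient(F_rot, proj_box, [1.0, 1.0], gamma=0.5,
                             beta=lambda k: 1.0 / (k + 1) ** 2)
print(sol)
```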

Using the extragradient method with bounded perturbations (4.9), we construct the following inertial extragradient method:

where

We extend Theorem 4.3 to the convergence of the inertial extragradient method (4.27).

Theorem 4.7

Assume that Conditions 3.1-3.3 hold. Assume that the sequence \(\{\beta_{k}\}_{k=0}^{\infty}\) satisfies \(\sum_{k=1}^{\infty }\beta_{k}<\infty\). Then the sequence \(\{x^{k}\}_{k=0}^{\infty}\) generated by the inertial extragradient method (4.27) converges weakly to a solution of the variational inequality (1.1).

Remark 4.8

The inertial parameter \(\alpha_{k}\) in the inertial extragradient method (4.24) is larger than that in the inertial extragradient method (4.27). The inertial extragradient method (4.24) reduces to the inertial extragradient method (4.27) when \(\lambda_{k}=1\).

5 The extension to the subgradient extragradient method

In this section, we generalize the results of extragradient method proposed in the previous sections to the subgradient extragradient method.

Censor et al. [9] presented the subgradient extragradient method (1.4). In their method the step size is fixed, \(\gamma\in(0,1/L)\), where L is the Lipschitz constant of F. Hence, in order to choose the step size, one first needs to calculate (or estimate) L, which might be difficult or even impossible in general. To overcome this difficulty, the following Armijo-like search rule can be used:

To discuss the convergence of the subgradient extragradient method, we make the following assumptions.

Condition 5.1

The mapping F is monotone on \(\mathcal{H}\), i.e.,

\[
\langle F(x)-F(y),x-y\rangle\geq0\quad\text{for all }x,y\in\mathcal{H}.
\]

Condition 5.2

The mapping F is Lipschitz continuous on \(\mathcal{H}\) with the Lipschitz constant \(L>0\), i.e.,

\[
\Vert F(x)-F(y)\Vert\leq L\Vert x-y\Vert\quad\text{for all }x,y\in\mathcal{H}.
\]

As before, the convergence result for Censor et al.’s subgradient extragradient method [10, Theorem 3.1] can easily be generalized to an adaptive step-size rule such as (5.1). This result is captured in the next theorem.

Theorem 5.3

Assume that Conditions 3.1, 5.1 and 5.2 hold. Then the sequence \(\{x^{k}\}_{k=0}^{\infty}\) generated by the subgradient extragradient method (1.4) with the step-size rule (5.1) weakly converges to a solution of the variational inequality (1.1).

5.1 The subgradient extragradient method with outer perturbations

In this subsection, we present the subgradient extragradient method with outer perturbations.

Algorithm 5.4

The subgradient extragradient method with outer perturbations:

Step 0: Select a starting point \(x^{0}\in\mathcal{H}\) and set \(k=0\).

Step 1: Given the current iterate \(x^{k}\), compute

where \(\gamma_{k}=\sigma\rho^{m_{k}}\), \(\sigma>0\), \(\rho\in(0,1)\) and \(m_{k}\) is the smallest nonnegative integer such that (see [16])

Construct the set

and calculate

Step 2: If \(x^{k}=y^{k}\), then stop. Otherwise, set \(k\leftarrow(k+1)\) and return to Step 1.

Denote \(e_{i}^{k}:=e_{i}(x^{k})\), \(i=1,2\). As in Section 3, the sequences of perturbations \(\{e_{i}^{k}\}_{k=0}^{\infty}\), \(i=1,2\), are assumed to be summable, i.e.,

\[
\sum_{k=0}^{\infty}\Vert e_{i}^{k}\Vert<+\infty,\quad i=1,2.
\]

Following the proofs of Theorems 3.7 and 3.8, we obtain the convergence and the convergence rate of Algorithm 5.4.

Theorem 5.5

Assume that Conditions 3.1, 5.1 and 5.2 hold. Then the sequence \(\{x^{k}\}_{k=0}^{\infty}\) generated by Algorithm 5.4 converges weakly to a solution of the variational inequality (1.1).

Theorem 5.6

Assume that Conditions 3.1, 5.1 and 5.2 hold. Let the sequences \(\{x^{k}\}_{k=0}^{\infty}\) and \(\{y^{k}\}_{k=0}^{\infty}\) be generated by Algorithm 5.4. For any integer \(t > 0\), we have \(y_{t}\in C\) which satisfies

where

and

5.2 The BPR of the subgradient extragradient method

In this subsection, we investigate the bounded perturbation resilience of the subgradient extragradient method (1.4).

To this end, we treat the right-hand side of (1.4) as the algorithmic operator \(\mathbf{A}_{\Psi}\) of Definition 4.2, namely, we define, for all \(k\geq0\),

where \(\gamma_{k}\) satisfies (5.1) and

Identify the solution set Ψ with the solution set of the variational inequality (1.1) and identify the additional set Θ with C.

According to Definition 4.2, we need to show the convergence of the sequence \(\{x^{k}\}_{k=0}^{\infty}\) that, starting from any \(x^{0}\in \mathcal{H}\), is generated by

which can be rewritten as

where \(\gamma_{k}=\sigma\rho^{m_{k}}\), \(\sigma>0\), \(\rho\in(0,1)\) and \(m_{k}\) is the smallest nonnegative integer such that

The sequences \(\{v^{k}\}_{k=0}^{\infty}\) and \(\{\lambda_{k}\} _{k=0}^{\infty}\) obey conditions (i) and (ii) in Definition 4.2, respectively, and also (iii) in Definition 4.2 is satisfied.

The next theorem establishes the bounded perturbation resilience of the subgradient extragradient method. Since its proof is similar to that of Theorem 4.3, we omit it.

Theorem 5.7

Assume that Conditions 3.1, 5.1 and 5.2 hold. Assume the sequence \(\{v^{k}\}_{k=0}^{\infty}\) is bounded, and the scalars \(\{\lambda_{k}\}_{k=0}^{\infty}\) are such that \(\lambda_{k}\geq0\) for all \(k \geq 0 \), and \(\sum_{k=0}^{\infty}\lambda_{k}<+\infty\). Then the sequence \(\{ x^{k}\}_{k=0}^{\infty}\) generated by (5.15) and (5.16) converges weakly to a solution of the variational inequality (1.1).

We also obtain the convergence rate of the subgradient extragradient method with bounded perturbations (5.15) and (5.16).

Theorem 5.8

Assume that Conditions 3.1, 5.1 and 5.2 hold. Assume the sequence \(\{v^{k}\}_{k=0}^{\infty}\) is bounded, and the scalars \(\{\lambda_{k}\}_{k=0}^{\infty}\) are such that \(\lambda_{k}\geq0\) for all \(k \geq 0 \), and \(\sum_{k=0}^{\infty}\lambda_{k}<+\infty\). Let the sequences \(\{ x^{k}\}_{k=0}^{\infty}\) and \(\{y^{k}\}_{k=0}^{\infty}\) be generated by (5.15) and (5.16). For any integer \(t > 0\), we have \(y_{t}\in C\) which satisfies

where

and

5.3 Construction of the inertial subgradient extragradient methods by BPR

In this subsection, we construct two classes of inertial subgradient extragradient methods by using BPR, i.e., by identifying \(e_{i}^{k}\), \(i=1,2\), \(\lambda_{k}\) and \(v^{k}\) with special values.

Based on Algorithm 5.4, we construct the following inertial subgradient extragradient method:

where \(\gamma_{k}\) satisfies (5.16) and

Similar to the proof of Theorem 4.5, we get the convergence of the inertial subgradient extragradient method (5.20).

Theorem 5.9

Assume that Conditions 3.1, 5.1 and 5.2 hold. Assume that the sequences \(\{\beta_{k}^{(i)}\}_{k=0}^{\infty}\), \(i=1,2\), satisfy \(\sum_{k=1}^{\infty}\beta_{k}^{(i)}<\infty\), \(i=1,2\). Then the sequence \(\{x^{k}\}_{k=0}^{\infty}\) generated by the inertial subgradient extragradient method (5.20) converges weakly to a solution of the variational inequality (1.1).

Using the subgradient extragradient method with bounded perturbations (5.15), we construct the following inertial subgradient extragradient method:

where \(\gamma_{k}=\sigma\rho^{m_{k}}\), \(\sigma>0\), \(\rho\in(0,1)\) and \(m_{k}\) is the smallest nonnegative integer such that

and

We extend Theorem 5.7 to the convergence of the inertial subgradient extragradient method (5.22).

Theorem 5.10

Assume that Conditions 3.1, 5.1 and 5.2 hold. Assume that the sequence \(\{\beta_{k}\}_{k=0}^{\infty}\) satisfies \(\sum_{k=1}^{\infty }\beta_{k}<\infty\). Then the sequence \(\{x^{k}\}_{k=0}^{\infty}\) generated by the inertial subgradient extragradient method (5.22) converges weakly to a solution of the variational inequality (1.1).

6 Numerical experiments

In this section, we provide three examples to compare the inertial extragradient method (4.22) (iEG1), the inertial extragradient method (4.24) (iEG2), the inertial extragradient method (4.27) (iEG), the extragradient method (1.2), the inertial subgradient extragradient method (5.20) (iSEG1), the inertial subgradient extragradient method (5.22) (iSEG2) and the subgradient extragradient method (1.4).

In the first example, we consider a typical sparse signal recovery problem. We choose the following set of parameters. Take \(\sigma=5\), \(\rho=0.9\) and \(\mu=0.7\). Set

in the inertial extragradient methods (4.22) and (4.24), and the inertial subgradient extragradient methods (5.20) and (5.22). Choose \(\alpha_{k}=0.35\) and \(\lambda _{k}=0.8\) in the inertial extragradient method (4.24).

Example 6.1

Let \(x_{0} \in\mathbb{R}^{n}\) be a K-sparse signal, \(K\ll n\). The sampling matrix \(A \in\mathbb{R}^{m\times n}\) (\(m< n\)) is generated from the standard Gaussian distribution, and the observation vector is \(b = Ax_{0} + e\), where e is additive noise; \(e=0\) corresponds to noiseless observations. Our task is to recover the signal \(x_{0}\) from the data b.

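It is well known that the sparse signal \(x_{0}\) can be recovered by solving the following LASSO problem [36]:

\[
\min_{x\in\mathbb{R}^{n}}\frac{1}{2}\Vert Ax-b\Vert_{2}^{2}\quad\text{subject to}\quad\Vert x\Vert_{1}\leq t,\qquad(6.1)
\]
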
where \(t >0\). It is easy to see that the optimization problem (6.1) is a special case of the variational inequality problem (1.1) with \(F(x) = A^{T}(Ax-b)\) and \(C = \{ x \mid \|x\|_{1} \leq t \} \). We can use the proposed iterative algorithms to solve the optimization problem (6.1). Although the orthogonal projection onto the closed convex set C does not have a closed-form expression, the projection operator \(P_{C}\) can be computed exactly in polynomial time. We include the details of computing \(P_{C}\) in the Appendix. We conducted extensive simulations to compare the performance of the proposed iterative algorithms. The following inequality was used as the stopping criterion:

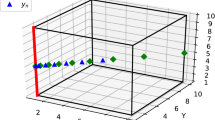

where \(\epsilon>0\) is a given small constant. ‘Iter’ denotes the number of iterations, ‘Obj’ the objective function value, and ‘Err’ the 2-norm error between the recovered signal and the true K-sparse signal. We divide the experiments into two parts: one task is to recover the sparse signal \(x_{0}\) from a noisy observation vector b, and the other is to recover it from noiseless data b. For the noiseless case, the numerical results are reported in Table 1. For visualization, Figure 1 shows the recovered signal compared with the true signal \(x_{0}\) when \(K=30\); we can see from Figure 1 that the recovered signal coincides with the true signal. Further, Figure 2 presents the objective function value versus the number of iterations.

For the noisy observation b, we assume that e is Gaussian noise with zero mean and variance β. The system matrix A is the same as in the noiseless case, and the sparsity level is \(K=30\). Table 2 lists the numerical results for different noise levels β. For \(\beta= 0.02\), Figure 3 shows the objective function value versus the number of iterations, and Figure 4 shows the recovered signal versus the true signal.
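The noiseless experiment can be sketched as follows. This is a minimal illustration under stated assumptions, not the code behind Tables 1 and 2: it uses NumPy, hypothetical problem sizes (\(m=100\), \(n=200\), \(K=5\)), an oracle radius \(t=\|x_{0}\|_{1}\), and the plain (non-inertial, unperturbed) extragradient iteration \(y^{k}=P_{C}(x^{k}-\tau F(x^{k}))\), \(x^{k+1}=P_{C}(x^{k}-\tau F(y^{k}))\) with a constant step \(\tau=0.9/L\), where \(L=\|A\|_{2}^{2}\) is the Lipschitz constant of \(F(x)=A^{T}(Ax-b)\). The helper `project_l1_ball` is the standard sort-based \(\ell_{1}\)-ball projection (the idea of the Appendix in vectorized form), and the relative-change test inside `extragradient_lasso` is a hypothetical stand-in for the stopping criterion above.

```python
import numpy as np

def project_l1_ball(y, t):
    """Euclidean projection of y onto {x : ||x||_1 <= t} (sort-based)."""
    if np.abs(y).sum() <= t:
        return y.copy()
    u = np.sort(np.abs(y))[::-1]                 # |y| in descending order
    css = np.cumsum(u)
    k = np.arange(1, y.size + 1)
    rho = np.nonzero(u * k > css - t)[0][-1]     # last index with u_k > (css_k - t)/k
    lam = (css[rho] - t) / (rho + 1.0)           # threshold lambda*
    return np.sign(y) * np.maximum(np.abs(y) - lam, 0.0)

def extragradient_lasso(A, b, t, max_iter=5000, tol=1e-12):
    """Extragradient for VI(F, C), F(x) = A^T(Ax - b), C = l1-ball of radius t."""
    F = lambda x: A.T @ (A @ x - b)
    tau = 0.9 / np.linalg.norm(A, 2) ** 2        # step size below 1/L, L = ||A||_2^2
    x = np.zeros(A.shape[1])
    for _ in range(max_iter):
        y = project_l1_ball(x - tau * F(x), t)       # first projection (prediction)
        x_new = project_l1_ball(x - tau * F(y), t)   # second projection (correction)
        if np.linalg.norm(x_new - x) <= tol * max(1.0, np.linalg.norm(x)):
            return x_new
        x = x_new
    return x

# Hypothetical noiseless instance: K-sparse x0, Gaussian A, b = A x0 (e = 0)
rng = np.random.default_rng(0)
m, n, K = 100, 200, 5
x0 = np.zeros(n)
x0[rng.choice(n, K, replace=False)] = rng.standard_normal(K)
A = rng.standard_normal((m, n))
b = A @ x0
x_hat = extragradient_lasso(A, b, t=np.abs(x0).sum())
obj = 0.5 * np.linalg.norm(A @ x_hat - b) ** 2   # 'Obj'
err = np.linalg.norm(x_hat - x0)                  # 'Err'
```

With these sizes the sparsity level is far below the number of measurements, so \(x_{0}\) is expected to be the unique solution of (6.1) and `err` should be small; noisy data can be simulated by adding a Gaussian vector e to `b`.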

Example 6.2

Let \(F:\mathbb{R}^{2} \rightarrow \mathbb{R}^{2}\) be defined by

The authors of [37] proved that F is Lipschitz continuous with \(L=\sqrt{26}\) and 1-strongly monotone. Therefore the variational inequality (1.1) has a unique solution, namely \((0,0)\).

Let \(C=\{x\in\mathbb{R}^{2}\mid e_{1}\leq x\leq e_{2}\}\) (inequalities understood componentwise), where \(e_{1}=(-10,-10)\) and \(e_{2}=(100,100)\). Take the initial point \(x_{0}=(-100,10)\in\mathbb{R}^{2}\). Since \((0,0)\) is the unique solution of the variational inequality (1.1), we use \(D_{k}:=\|x^{k}\|\leq10^{-5}\) as the stopping criterion.
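Since C in Example 6.2 is a box, that is, a Cartesian product of intervals, the projection \(P_{C}\) acts coordinate-wise and amounts to clipping. A minimal NumPy sketch of the projection and of the stopping test \(D_{k}\) follows; the helper names are hypothetical, and the mapping F is left out.

```python
import numpy as np

e1 = np.array([-10.0, -10.0])   # lower corner of the box C
e2 = np.array([100.0, 100.0])   # upper corner of the box C

def project_box(x, lower=e1, upper=e2):
    """P_C for C = {x : lower <= x <= upper}: clip each coordinate independently."""
    return np.clip(x, lower, upper)

def stopped(xk, tol=1e-5):
    """Stopping test D_k = ||x^k|| <= tol; meaningful because the solution is (0, 0)."""
    return np.linalg.norm(xk) <= tol

x_init = np.array([-100.0, 10.0])
p = project_box(x_init)   # first coordinate clipped to -10, second unchanged
```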

Example 6.3

Let \(F: \mathbb{R}^{n} \rightarrow\mathbb{R}^{n}\) be defined by \(F(x)=Ax+b\), where \(A=Z^{T}Z\), \(Z=(z_{ij})_{n\times n}\) and \(b=(b_{i})\in\mathbb{R}^{n}\), where \(z_{ij}\in(0,1)\) and \(b_{i}\in(0,1)\) are generated randomly.

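Since \(A=Z^{T}Z\) is symmetric positive semidefinite (and positive definite whenever Z is nonsingular, which holds with probability one), F is L-Lipschitz continuous and η-strongly monotone with \(L=\max(\operatorname{eig}(A))\) and \(\eta=\min(\operatorname{eig}(A))\).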
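The constants L and η can be computed numerically. The sketch below assumes NumPy, a hypothetical seed, and uniform draws from `Generator.random`, which samples the half-open interval [0, 1) rather than (0, 1), a negligible difference. Because A is symmetric, `numpy.linalg.eigvalsh` returns its real eigenvalues in ascending order.

```python
import numpy as np

def example_6_3_operator(n=100, seed=0):
    """Random A = Z^T Z and b as in Example 6.3; entries uniform on [0, 1)."""
    rng = np.random.default_rng(seed)
    Z = rng.random((n, n))          # z_ij
    b = rng.random(n)               # b_i
    A = Z.T @ Z                     # symmetric positive semidefinite
    return A, b

def F(x, A, b):
    """F(x) = Ax + b."""
    return A @ x + b

def lipschitz_and_monotonicity(A):
    """Return (L, eta) = (largest, smallest) eigenvalue of the symmetric matrix A."""
    eigs = np.linalg.eigvalsh(A)    # real eigenvalues in ascending order
    return eigs[-1], eigs[0]

A, b = example_6_3_operator()
L, eta = lipschitz_and_monotonicity(A)
```

Note that \(F(x)-F(y)=A(x-y)\), so b plays no role in either constant.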

Let \(C:=\{x\in\mathbb{R}^{n}\mid\Vert x-d\Vert\leq r\}\), where the center

and radius \(r\in(0,10)\) are randomly chosen. Take the initial point \(x_{0}=(c_{i})\in\mathbb{R}^{n}\), where each \(c_{i}\in[0,2]\) is generated randomly. Set \(n=100\), take \(\rho=0.4\), and set the other parameters to the same values as in Example 6.2. Although the variational inequality (1.1) has a unique solution, it is not available in closed form, so we use \(D_{k}:=\Vert x^{k+1}-x^{k}\Vert\leq10^{-5}\) as the stopping criterion.
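For the ball \(C=\{x\mid\Vert x-d\Vert\leq r\}\), the projection has the closed form \(P_{C}(x)=d+\frac{r}{\max\{r,\Vert x-d\Vert\}}(x-d)\), which keeps each projection step cheap. A minimal NumPy sketch, assuming d and r are already given (their random generation is not reproduced); the function names are hypothetical.

```python
import numpy as np

def project_ball(x, d, r):
    """P_C(x) for C = {x : ||x - d|| <= r}: points outside are pulled radially onto the sphere."""
    v = x - d
    dist = np.linalg.norm(v)
    if dist <= r:
        return x.copy()          # already feasible
    return d + (r / dist) * v    # radial projection onto the boundary

def stopped(x_next, x_curr, tol=1e-5):
    """Stopping test D_k = ||x^{k+1} - x^k|| <= tol used in Example 6.3."""
    return np.linalg.norm(x_next - x_curr) <= tol
```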

From Figures 5 and 6, we conclude: (i) the inertial-type algorithms improve on the original algorithms; (ii) the inertial extragradient methods (4.22) and (4.24) perform almost the same; (iii) the inertial subgradient extragradient method (5.20) performs better than the inertial subgradient extragradient method (5.22) for Example 6.1, while they perform almost the same for Example 6.2; (iv) the (inertial) extragradient methods behave better than the (inertial) subgradient extragradient methods, since the sets C in Examples 6.2 and 6.3 are simple, and hence the computational cost of projecting onto them is small; (v) the inertial extragradient method (4.22) has an advantage over the inertial extragradient methods (4.22) and (4.24). The reason may be that it takes a bigger inertial parameter \(\alpha_{k}\).

Comparison of the number of iterations of different methods for Example 6.2.

Comparison of the number of iterations of different methods for Example 6.3.

7 Conclusions

In this research article we study an important property of iterative algorithms for solving variational inequality (VI) problems, called bounded perturbation resilience. In particular, we focus on extragradient-type methods. This enables us to develop inexact versions of the methods and to apply the superiorization methodology in order to obtain a ‘superior’ solution to the original problem. In addition, some inertial extragradient methods are derived. All the presented methods converge at the rate of \(O(1/t)\), and three numerical examples illustrate and compare the performances of all the algorithms.

References

Ceng, LC, Liou, YC, Yao, JC, Yao, YH: Well-posedness for systems of time-dependent hemivariational inequalities in Banach spaces. J. Nonlinear Sci. Appl. 10, 4318-4336 (2017)

Zegeye, H, Shahzad, N, Yao, YH: Minimum-norm solution of variational inequality and fixed point problem in Banach spaces. Optimization 64, 453-471 (2015)

Yao, YH, Liou, YC, Yao, JC: Iterative algorithms for the split variational inequality and fixed point problems under nonlinear transformations. J. Nonlinear Sci. Appl. 10, 843-854 (2017)

Yao, YH, Noor, MA, Liou, YC, Kang, SM: Iterative algorithms for general multi-valued variational inequalities. Abstr. Appl. Anal. 2012, Article ID 768272 (2012)

Yao, YH, Postolache, M, Liou, YC, Yao, Z-S: Construction algorithms for a class of monotone variational inequalities. Optim. Lett. 10, 1519-1528 (2016)

Korpelevich, GM: The extragradient method for finding saddle points and other problems. Èkon. Mat. Metody 12, 747-756 (1976)

Antipin, AS: On a method for convex programs using a symmetrical modification of the Lagrange function. Èkon. Mat. Metody 12, 1164-1173 (1976)

Facchinei, F, Pang, JS: Finite-Dimensional Variational Inequalities and Complementarity Problems, vol. II. Springer, New York (2003)

Censor, Y, Gibali, A, Reich, S: The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 148, 318-335 (2011)

Censor, Y, Gibali, A, Reich, S: Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Methods Softw. 26, 827-845 (2011)

Censor, Y, Gibali, A, Reich, S: Extensions of Korpelevich’s extragradient method for solving the variational inequality problem in Euclidean space. Optimization 61, 1119-1132 (2012)

Goebel, K, Reich, S: Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings. Marcel Dekker, New York (1984)

Rockafellar, RT: Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 14(5), 877-898 (1976)

Bauschke, HH, Borwein, JM: On projection algorithms for solving convex feasibility problems. SIAM Rev. 38, 367-426 (1996)

Bauschke, HH, Combettes, PL: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer, Berlin (2011)

Khobotov, EN: Modification of the extragradient method for solving variational inequalities and certain optimization problems. USSR Comput. Math. Math. Phys. 27, 120-127 (1987)

Zhao, J, Yang, Q: Self-adaptive projection methods for the multiple-sets split feasibility problem. Inverse Probl. 27, Article ID 035009 (2011)

Nemirovski, A: Prox-method with rate of convergence \(O(1/t)\) for variational inequality with Lipschitz continuous monotone operators and smooth convex-concave saddle point problems. SIAM J. Optim. 15, 229-251 (2005)

Tseng, P: On accelerated proximal gradient methods for convex-concave optimization. Department of Mathematics, University of Washington, Seattle, WA 98195, USA (2008)

Censor, Y, Zaslavski, AJ: Strict Fejér monotonicity by superiorization of feasibility-seeking projection methods. J. Optim. Theory Appl. 165, 172-187 (2015)

Censor, Y, Davidi, R, Herman, GT: Perturbation resilience and superiorization of iterative algorithms. Inverse Probl. 26, Article ID 065008 (2010)

Herman, GT, Davidi, R: Image reconstruction from a small number of projections. Inverse Probl. 24, Article ID 045011 (2008)

Butnariu, D, Reich, S, Zaslavski, AJ: Convergence to fixed points of inexact orbits of Bregman-monotone and of nonexpansive operators in Banach spaces. In: Fixed Point Theory and Its Applications, pp. 11-32. Yokohama Publishers, Yokohama (2006)

Butnariu, D, Reich, S, Zaslavski, AJ: Asymptotic behavior of inexact orbits for a class of operators in complete metric spaces. J. Appl. Anal. 13, 1-11 (2007)

Butnariu, D, Reich, S, Zaslavski, AJ: Stable convergence theorems for infinite products and powers of nonexpansive mappings. Numer. Funct. Anal. Optim. 29, 304-323 (2008)

Herman, GT, Garduño, E, Davidi, R, Censor, Y: Superiorization: an optimization heuristic for medical physics. Med. Phys. 39, 5532-5546 (2012)

Garduño, E, Herman, GT: Superiorization of the ML-EM algorithm. IEEE Trans. Nucl. Sci. 61, 162-172 (2014)

Dong, QL, Lu, YY, Yang, J: The extragradient algorithm with inertial effects for solving the variational inequality. Optimization 65, 2217-2226 (2016)

Polyak, BT: Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 4(5), 1-17 (1964)

Polyak, BT: Introduction to Optimization. Optimization Software, New York (1987)

Alvarez, F: Weak convergence of a relaxed and inertial hybrid projection-proximal point algorithm for maximal monotone operators in Hilbert space. SIAM J. Optim. 14(3), 773-782 (2004)

Attouch, H, Peypouquet, J, Redont, P: A dynamical approach to an inertial forward-backward algorithm for convex minimization. SIAM J. Optim. 24(1), 232-256 (2014)

Bot, RI, Csetnek, ER: A hybrid proximal-extragradient algorithm with inertial effects. Numer. Funct. Anal. Optim. 36, 951-963 (2015)

Ochs, P, Brox, T, Pock, T: iPiasco: inertial proximal algorithm for strongly convex optimization. J. Math. Imaging Vis. 53, 171-181 (2015)

Dong, QL, Yang, J, Yuan, HB: The projection and contraction algorithm for solving variational inequality problems in Hilbert spaces. J. Nonlinear Convex Anal. (accepted)

Tibshirani, R: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. B 58, 267-288 (1996)

Dong, QL, Cho, YJ, Zhong, LL, Rassias, ThM: Inertial projection and contraction algorithms for variational inequalities. J. Glob. Optim. (accepted)

Acknowledgements

We wish to thank the anonymous referees for their thorough analysis and review. All their comments and suggestions helped tremendously in improving the quality of this paper and made it suitable for publication.

Funding

The first author is supported by the National Natural Science Foundation of China (No. 61379102) and the Open Fund of Tianjin Key Lab for Advanced Signal Processing (No. 2016ASP-TJ01). The third author is supported by the EU FP7 IRSES program STREVCOMS, grant no. PIRSES-GA-2013-612669. The fourth author is supported by the Visiting Scholarship of the Academy of Mathematics and Systems Science, Chinese Academy of Sciences (AM201622C04), the National Natural Science Foundation of China (11401293, 11661056) and the Natural Science Foundation of Jiangxi Province (20151BAB211010).

Author information

Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Appendix

In this part, we present the details of computing the projection of a vector \(y\in R^{n}\) onto an \(\ell_{1}\)-norm ball. For convenience, we first consider the projection onto the unit \(\ell_{1}\)-norm ball and then extend it to a general \(\ell_{1}\)-norm ball.

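The projection onto the unit \(\ell_{1}\)-norm ball amounts to solving the optimization problem

\[ \min_{x\in R^{n}} \frac{1}{2}\|x-y\|_{2}^{2} \quad \text{subject to} \quad \|x\|_{1}\leq1. \]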

This is a constrained optimization problem, which we solve by the Lagrangian method. Define the Lagrangian function \(L(x,\lambda)\) as

\[ L(x,\lambda) = \frac{1}{2}\|x-y\|_{2}^{2} + \lambda\bigl(\|x\|_{1}-1\bigr), \quad \lambda\geq0. \]

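Let \((x^{*}, \lambda^{*})\) be an optimal primal-dual pair. It satisfies the KKT conditions

\[ 0 \in x^{*}-y+\lambda^{*}\partial\|x^{*}\|_{1}, \quad \|x^{*}\|_{1}\leq1, \quad \lambda^{*}\geq0, \quad \lambda^{*}\bigl(\|x^{*}\|_{1}-1\bigr)=0. \]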

It is easy to check that if \(\|y\|_{1} \leq1\), then \(x^{*} = y\) and \(\lambda^{*} =0\). In the following, we assume \(\|y\|_{1} >1\). Then \(\lambda^{*} >0\), since \(\lambda^{*}=0\) would give \(x^{*}=y\), which is infeasible; complementary slackness then yields \(\|x^{*}\|_{1} = 1\). From the first-order optimality condition, we have \(x^{*} = \max\{ |y|-\lambda^{*}, 0 \} \otimes \operatorname{Sign}(y)\), where ⊗ denotes element-wise multiplication and \(\operatorname{Sign}(\cdot)\) denotes the sign function, i.e., \(\operatorname{Sign}(y_{i}) = 1\) if \(y_{i} \geq0\); otherwise \(\operatorname{Sign}(y_{i}) = -1\).

Define a function \(f(\lambda) = \| x(\lambda) \|_{1}\), where \(x(\lambda) = S_{\lambda}(y) = \max\{ |y|-\lambda, 0 \}\otimes \operatorname{Sign}(y)\). We prove the following lemma.

Lemma A.1

For the function \(f(\lambda)\), there must exist \(\lambda^{*} >0\) such that \(f(\lambda^{*}) = 1\).

Proof

Since \(\|y\|_{1}>1\), we have \(f(0) = \| S_{0}(y) \|_{1} = \|y\|_{1} >1\). Let \(\lambda^{+} = \max_{1\leq i \leq n} |y_{i}|\); then \(f(\lambda^{+}) =0 <1\). Moreover, \(f(\lambda)=\sum_{i=1}^{n}\max\{|y_{i}|-\lambda,0\}\) is continuous, nonincreasing and convex on \([0,\infty)\). Therefore, by the intermediate value theorem, there exists \(\lambda^{*}\in(0,\lambda^{+})\) such that \(f(\lambda^{*}) = 1\). □
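Because f is continuous and nonincreasing with \(f(0)>1>0=f(\lambda^{+})\), the proof also suggests a simple way to approximate \(\lambda^{*}\): bisection on \([0,\lambda^{+}]\). The sketch below is that alternative, not the authors' procedure (which is the exact sorting method described next); the function names and tolerance are hypothetical, and `np.where(y >= 0, 1.0, -1.0)` implements Sign with \(\operatorname{Sign}(0)=1\) as in the text.

```python
import numpy as np

def soft_threshold(y, lam):
    """S_lambda(y) = max(|y| - lambda, 0) * Sign(y), element-wise, with Sign(0) = 1."""
    return np.maximum(np.abs(y) - lam, 0.0) * np.where(y >= 0, 1.0, -1.0)

def f(y, lam):
    """f(lambda) = ||S_lambda(y)||_1: continuous, piecewise linear, nonincreasing."""
    return np.abs(soft_threshold(y, lam)).sum()

def lambda_star_bisection(y, tol=1e-12, max_iter=200):
    """Approximate lambda* with f(lambda*) = 1 by bisection on [0, max|y_i|].
    Assumes ||y||_1 > 1, so that f(0) > 1 > 0 = f(max|y_i|)."""
    lo, hi = 0.0, np.abs(y).max()
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if f(y, mid) > 1.0:
            lo = mid          # f still too large: root lies to the right
        else:
            hi = mid          # f <= 1: root lies at mid or to the left
        if hi - lo <= tol:
            break
    return 0.5 * (lo + hi)
```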

To find \(\lambda^{*}\) such that \(f(\lambda^{*}) =1\), we proceed as follows.

Step 1. Let \(\overline{y}\) be the vector obtained by sorting the entries of \(|y|\) in descending order, that is, \(\overline{y}_{1} \geq\overline{y}_{2} \geq\cdots\geq\overline{y}_{n} \geq0\).

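Step 2. For \(k= 1,2, \ldots, n\) in turn, solve the equation \(\sum_{i=1}^{k}\overline{y}_{i} - k \lambda=1\), i.e., \(\lambda=\frac{1}{k}(\sum_{i=1}^{k}\overline{y}_{i}-1)\), and stop at the first k for which this solution belongs to the interval \([\overline{y}_{k+1}, \overline{y}_{k}]\), with the convention \(\overline{y}_{n+1}=0\); this solution is \(\lambda^{*}\). The step is justified because, for \(\lambda\in[\overline{y}_{k+1},\overline{y}_{k}]\), only the k largest entries of \(|y|\) can exceed λ, so \(f(\lambda)=\sum_{i=1}^{k}\overline{y}_{i}-k\lambda\) on that interval.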
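Steps 1 and 2 can be sketched in NumPy as below (the function name is hypothetical). One implementation detail is an assumption of this sketch: it tests only the lower end of the interval, stopping at the first k with \(\lambda_{k}\geq\overline{y}_{k+1}\), where \(\lambda_{k}=\frac{1}{k}(\sum_{i=1}^{k}\overline{y}_{i}-1)\). At that k the upper bound \(\lambda_{k}\leq\overline{y}_{k}\) holds automatically, since it is equivalent to \(f(\overline{y}_{k})\leq1\), which follows from the failed test at \(k-1\) (or from \(f(\overline{y}_{1})=0\) when \(k=1\)). So the check matches the interval test of Step 2, and the loop always stops by \(k=n\) because \(\overline{y}_{n+1}=0<\lambda_{n}\).

```python
import numpy as np

def project_unit_l1_ball(y):
    """P_{C1}(y), C1 = {x : ||x||_1 <= 1}, following Steps 1 and 2."""
    y = np.asarray(y, dtype=float)
    if np.abs(y).sum() <= 1.0:
        return y.copy()                       # feasible: x* = y, lambda* = 0
    ybar = np.sort(np.abs(y))[::-1]           # Step 1: |y| in descending order
    ybar_next = np.append(ybar[1:], 0.0)      # ybar_{k+1}, with ybar_{n+1} = 0
    partial = 0.0
    for k in range(1, y.size + 1):            # Step 2: k = 1, 2, ..., n
        partial += ybar[k - 1]                # sum_{i<=k} ybar_i
        lam = (partial - 1.0) / k             # root of sum_{i<=k} ybar_i - k*lam = 1
        # First k with lam >= ybar_{k+1}; there lam <= ybar_k also holds,
        # so lam lies in [ybar_{k+1}, ybar_k].
        if lam >= ybar_next[k - 1]:
            break
    sign = np.where(y >= 0, 1.0, -1.0)        # Sign(.) with Sign(0) = 1
    return np.maximum(np.abs(y) - lam, 0.0) * sign   # x* = S_{lambda*}(y)
```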

In conclusion, the optimal \(x^{*}\) can be computed by \(x^{*} = \max\{ |y|-\lambda^{*}, 0 \}\otimes \operatorname{Sign}(y)\). The next lemma extends the projection onto the unit \(\ell_{1}\)-norm ball to the general \(\ell _{1}\)-norm ball constraint.

Lemma A.2

Let \(C_{1} = \{ x \mid \|x\|_{1} \leq1 \}\). For any \(t>0\), define the general \(\ell_{1}\)-norm ball \(C = \{ x \mid \|x \|_{1} \leq t \}\). Then, for any vector \(y\in R^{n}\), we have

\[ P_{C}(y) = t\,P_{C_{1}}\Bigl(\frac{y}{t}\Bigr). \]

Proof

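Computing the projection \(P_{C}(y)\) amounts to solving the optimization problem

\[ \min_{x\in R^{n}} \frac{1}{2}\|x-y\|_{2}^{2} \quad \text{subject to} \quad \|x\|_{1}\leq t. \]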

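For any \(x\in C\), let \(\overline{x} = \frac{x}{t}\); then \(\overline{x}\in C_{1}\) and \(\|x-y\|_{2}^{2} = t^{2}\|\overline{x}-\frac{y}{t}\|_{2}^{2}\). Hence the optimal solution \(x^{*}\) of the above problem satisfies \(x^{*} = P_{C}(y) = t \overline{x}^{*}\), where \(\overline{x}^{*}\) is the optimal solution of

\[ \min_{\overline{x}\in R^{n}} \frac{1}{2}\Bigl\|\overline{x}-\frac{y}{t}\Bigr\|_{2}^{2} \quad \text{subject to} \quad \|\overline{x}\|_{1}\leq1. \]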

By definition, \(\overline{x}^{*}\) is the exact projection of \(\frac{y}{t}\) onto the closed convex set \(C_{1}\), that is, \(\overline{x}^{*} = P_{C_{1}}(\frac{y}{t})\). This completes the proof. □
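Lemma A.2 reduces a general radius to the unit case by scaling. A minimal NumPy sketch with hypothetical function names; so that the block is self-contained, the unit-ball projection is written in the vectorized sort-based form, which yields the same threshold \(\lambda^{*}\) as Steps 1 and 2.

```python
import numpy as np

def project_unit_l1_ball(y):
    """P_{C1}(y) for C1 = {x : ||x||_1 <= 1}, via the sort-based threshold."""
    if np.abs(y).sum() <= 1.0:
        return y.copy()
    u = np.sort(np.abs(y))[::-1]                  # |y| in descending order
    css = np.cumsum(u)
    k = np.arange(1, y.size + 1)
    rho = np.nonzero(u * k > css - 1.0)[0][-1]    # last k with u_k > (css_k - 1)/k
    lam = (css[rho] - 1.0) / (rho + 1.0)          # lambda*
    return np.where(y >= 0, 1.0, -1.0) * np.maximum(np.abs(y) - lam, 0.0)

def project_l1_ball(y, t):
    """Lemma A.2: P_C(y) = t * P_{C1}(y / t) for C = {x : ||x||_1 <= t}, t > 0."""
    if t <= 0:
        raise ValueError("t must be positive")
    return t * project_unit_l1_ball(y / t)
```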

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Dong, QL., Gibali, A., Jiang, D. et al. Bounded perturbation resilience of extragradient-type methods and their applications. J Inequal Appl 2017, 280 (2017). https://doi.org/10.1186/s13660-017-1555-0
