Abstract

We prove the finite convergence of the sequences generated by some extragradient-type methods for solving variational inequalities under the weak sharpness condition on the solution set. In addition, we provide estimates for the number of iterations needed to guarantee that the sequence reaches a point in the solution set and prove that these estimates are tight. Numerical examples are presented to illustrate the theoretical results.


1 Introduction

The variational inequality (VI) problem has been studied extensively because of its wide applications in optimization problems, complementarity problems, fixed point problems and many more (Facchinei and Pang 2003). To solve a VI, numerous algorithms have been suggested, especially projection-type algorithms such as the projection method, extragradient-type algorithms and their variants.

Let us briefly recall some fundamental methods for solving (pseudo-)monotone variational inequality problems which will be reconsidered in this paper. One of the most well-known algorithms is the extragradient method (Korpelevich 1976), together with its variants proposed by Censor et al. (2011a, b, 2012). The extragradient method was proposed by Korpelevich for solving monotone variational inequalities and saddle point problems in finite dimensional spaces (Korpelevich 1976) and was then extended to infinite dimensional Hilbert spaces in Khanh (2016) for monotone VIs and in Vuong (2018) for pseudo-monotone VIs. One of its important variants is the subgradient extragradient method considered by Censor et al. (2012), which reduces the number of projections onto the feasible set. The Forward–Backward–Forward (FBF) method was originally proposed by Tseng (2000) for solving monotone inclusions, a more general model than VIs. The applicability of the FBF method to pseudo-monotone VIs was studied recently in Boţ et al. (2020). Finally, we consider Popov’s method (Popov 1980) and its modified version proposed by Malitsky and Semenov (2014) because of their low cost per iteration: each iteration requires only one evaluation of the operator instead of two as in extragradient-type methods.

To gain deeper insight into VIs, many researchers have considered the weak sharpness condition on the solution set of a VI. Weak sharp solutions and the associated geometric condition were first introduced by Burke and Ferris for optimization problems in mathematical programming (Burke and Ferris 1993). Later, Marcotte and Zhu (1998) modified this geometric condition, introduced weak sharp solutions for VIs, and established the finite convergence of algorithms for solving VIs. They also characterized the weak sharpness of the solution set in terms of the dual gap function. Liu and Wu (2016a, 2016b) further studied the weak sharpness of the solution set of VIs with respect to the primal gap function. Recently, Al-Homidan et al. (2016) used weak sharp solutions of VIs, without considering the primal or dual gap function, to study the finite termination property of sequences generated by iterative methods such as the proximal point method, the inexact proximal point method and the gradient projection method. These results were also extended to non-smooth VIs as well as to VIs on Hadamard manifolds and equilibrium problems (Al-Homidan et al. 2017; Kolobov et al. 2022, 2021; Nguyen et al. 2020, 2021).

In this paper, we discuss the finite convergence of projection-type methods for solving VI problems, namely the extragradient method, the Forward-Backward-Forward method, Popov’s method and their variants. For each method, we also provide an estimate for the number of iterations needed to guarantee that the sequence reaches a solution of the VI problem. Moreover, we prove that these estimates are tight. The rest of this article is organized as follows. Section 2 introduces some preliminaries. Section 3 presents the finite convergence of the extragradient method to a weakly sharp solution set. Section 4 contains the finite convergence results for the Forward-Backward-Forward and the subgradient extragradient methods. The finite convergence results for Popov’s method and its variants are established in Sect. 5. Finally, we provide numerical examples in the last section.

2 Preliminaries

Let H be a real Hilbert space with the inner product \(\left<\cdot ,\cdot \right>\) and the induced norm \(\Vert \cdot \Vert \). Let C be a nonempty closed convex subset of H and let F be a mapping from H to H. We consider the variational inequality problem, denoted by VI(C, F), which is to find \(x^*\in C\) such that

$$\begin{aligned} \langle F(x^*), x-x^*\rangle \ge 0 \quad \text {for all } x\in C. \end{aligned}$$(1)

We denote the solution set of VI(C, F) by \(C^*\). In this article, we assume that \(C^*\) is nonempty, and we recall some definitions concerning Lipschitz continuity and monotonicity of F (Karamardian and Schaible 1990):

- F is Lipschitz continuous on H if there exists \(L>0\) such that \(\Vert F(x)-F(y)\Vert \le L\Vert x-y\Vert \) for all \(x, y\in H\);

- F is pseudo-monotone on C if, for any \(x,y\in C\), \(\langle F(y),x-y\rangle \ge 0\) implies \(\langle F(x),x-y\rangle \ge 0\);

- F is monotone on C if for any \(x,y\in C\) we have \(\langle F(x)-F(y),x-y\rangle \ge 0\). Obviously, if F is monotone on C, then F is pseudo-monotone on C.

The metric projection of an element x of the real Hilbert space H onto a closed convex subset C, denoted by \(P_C(x)\), is the unique element of C such that \(\Vert x-P_C(x)\Vert \le \Vert x-y\Vert \) for all \(y\in C\). We also denote dist\((x,C)=\Vert x-P_C(x)\Vert \). The metric projection has the following three important properties (Goebel and Reich 1984).

Theorem 2.1

For any \(x, z\in H\) and \(y\in C\) we have

- (a) \(\Vert P_C(x)-P_C(z)\Vert \le \Vert x-z\Vert \) (nonexpansivity of \(P_C(\cdot )\));

- (b) \(\langle x-P_C(x),y-P_C(x)\rangle \le 0\);

- (c) \(\Vert P_C(x)-y\Vert ^2\le \Vert x-y\Vert ^2-\Vert x-P_C(x)\Vert ^2\).
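As a numerical sanity check of Theorem 2.1, the following Python sketch tests properties (a), (b) and (c) on random points for the special case where C is a box, so that \(P_C\) reduces to componentwise clipping. The box bounds, helper names, sample sizes and tolerance are illustrative assumptions and are not taken from the paper.

```python
import random


def project_box(x, lo, hi):
    """Metric projection onto the box C = [lo, hi]^n (componentwise clipping)."""
    return [min(max(xi, lo), hi) for xi in x]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def sub(u, v):
    return [ui - vi for ui, vi in zip(u, v)]


def norm(u):
    return dot(u, u) ** 0.5


def check_projection_properties(trials=1000, n=5, lo=1.0, hi=10.0, seed=0, tol=1e-9):
    """Return True if (a), (b), (c) of Theorem 2.1 hold on all random samples."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-20.0, 30.0) for _ in range(n)]  # arbitrary point of H
        z = [rng.uniform(-20.0, 30.0) for _ in range(n)]  # arbitrary point of H
        y = [rng.uniform(lo, hi) for _ in range(n)]       # point of C
        px, pz = project_box(x, lo, hi), project_box(z, lo, hi)
        # (a) nonexpansivity
        if norm(sub(px, pz)) > norm(sub(x, z)) + tol:
            return False
        # (b) variational characterization of the projection
        if dot(sub(x, px), sub(y, px)) > tol:
            return False
        # (c) squared-distance inequality
        if norm(sub(px, y)) ** 2 > norm(sub(x, y)) ** 2 - norm(sub(x, px)) ** 2 + tol:
            return False
    return True
```

A random test of this kind cannot prove the properties; it only guards against a mistaken implementation of \(P_C\) before the projection is used inside an iterative method.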

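We recall the definition of a weak sharp solution with respect to the geometric condition, together with its equivalent conditions, as in Marcotte and Zhu (1998). First, we denote by \({\mathbb {B}}\) the unit ball in H. For a given set X in H, we denote by intX the interior of X and by clX the closure of X. The polar \(X^o\) is defined by

$$\begin{aligned} X^o=\{y\in H: \langle y,x\rangle \le 0 \text { for all } x\in X\}. \end{aligned}$$

Let C be a nonempty, closed, convex subset of H. The tangent cone to C at a point \(x\in C\) is defined by

$$\begin{aligned} T_C(x)=\text {cl}\Big (\bigcup _{\lambda >0}\frac{C-x}{\lambda }\Big ). \end{aligned}$$

The normal cone to C at \(x\in C\) is defined by

$$\begin{aligned} N_C(x)=[T_C(x)]^o=\{y\in H: \langle y,z-x\rangle \le 0 \text { for all } z\in C\}. \end{aligned}$$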

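The solution set \(C^*\) of VI(C, F) is weakly sharp if we have, for any \(x^*\in C^*\),

$$\begin{aligned} -F(x^*)\in \text {int}\bigcap _{x\in C^*}\left[ T_C(x)\cap N_{C^*}(x)\right] ^o. \end{aligned}$$(2)

From (2) we have that if \(C^*\) is weakly sharp, then there exists a constant \(\alpha >0\) such that

$$\begin{aligned} \alpha {\mathbb {B}}\subset F(x^*)+\left[ T_C(x^*)\cap N_{C^*}(x^*)\right] ^o \quad \text {for all } x^*\in C^*. \end{aligned}$$(3)

This is equivalent to saying (see Marcotte and Zhu 1998, Theorem 4.1) that for each \(x^*\in C^*\),

$$\begin{aligned} \langle F(x^*),z\rangle \ge \alpha \Vert z\Vert \quad \text {for all } z\in T_C(x^*)\cap N_{C^*}(x^*). \end{aligned}$$(4)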

We will need the following important theorem (see (Al-Homidan et al. 2016, Theorem 2)) in the proof of finite convergence.

Theorem 2.2

Let C be a nonempty, closed, convex subset of a Hilbert space H and \(F:C\rightarrow H\) be a mapping. Assume that the solution set \(C^*\) of VI(C, F) is nonempty, closed and convex.

- (a) If \(C^*\) is weakly sharp and F is monotone, then there exists a constant \(\alpha >0\) such that

$$\begin{aligned} \langle F(x), x-P_{C^*}(x)\rangle \ge \alpha \,\text {dist}(x,C^*) \quad \text {for all } x\in C. \end{aligned}$$(5)

- (b) If F is constant on \(C^*\) and continuous on C and (5) holds for some \(\alpha >0\), then \(C^*\) is weakly sharp.

In the rest of the paper, we will prove the finite convergence of sequences generated by three fundamental algorithms: The (Subgradient) Extragradient, the Forward-Backward-Forward and the Popov Algorithms under the monotonicity of F and weak sharp condition of the solution set \(C^*\) of VI(C, F).

3 Extragradient method

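In this section, we consider the extragradient algorithm (Korpelevich 1976): given \(x_0\in C\) and a step size \(\lambda >0\), compute

$$\begin{aligned} {\left\{ \begin{array}{ll} y_n=P_C(x_n-\lambda F(x_n)),\\ x_{n+1}=P_C(x_n-\lambda F(y_n)). \end{array}\right. } \end{aligned}$$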
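A minimal Python sketch of the extragradient iteration \(y_n=P_C(x_n-\lambda F(x_n))\), \(x_{n+1}=P_C(x_n-\lambda F(y_n))\) is given below. The function name `extragradient`, the list-based vector representation and the small box problem in the usage part are assumptions made for illustration; the operator and the projection are passed in as callables.

```python
def extragradient(F, project, x0, lam, num_iter):
    """Run num_iter extragradient steps and return the iterates [x_0, ..., x_num_iter].

    F       : callable, the operator of the variational inequality
    project : callable, the metric projection onto the feasible set C
    lam     : step size (the theory in this section assumes 0 < lam < 1/L)
    """
    x = list(x0)
    iterates = [x]
    for _ in range(num_iter):
        # Prediction step: y_n = P_C(x_n - lam * F(x_n))
        y = project([xi - lam * gi for xi, gi in zip(x, F(x))])
        # Correction step: x_{n+1} = P_C(x_n - lam * F(y_n))
        x = project([xi - lam * gi for xi, gi in zip(x, F(y))])
        iterates.append(x)
    return iterates


# Illustrative problem (an assumption): C = [1, 10]^2 and F(x) = (x_1, 0),
# which is monotone and Lipschitz continuous with L = 1.
def project_box(x, lo=1.0, hi=10.0):
    return [min(max(xi, lo), hi) for xi in x]


def F_example(x):
    return [x[0], 0.0]


iterates = extragradient(F_example, project_box, [10.0, 5.0], lam=0.5, num_iter=8)
```

For this data the first coordinate is multiplied by 0.75 at each step until the projection becomes active, and the iterate reaches the solution \((1, 5)\) exactly at the eighth step, which illustrates the finite termination studied in this section.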

Firstly, we recall the important inequality relating the distances from the points generated by the extragradient algorithm to the point \(x^*\) of the solution set \(C^*\). The proof presented here is shorter than the one in Khanh (2016).

Lemma 3.1

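Let \(F:C\rightarrow H\) be pseudo-monotone and Lipschitz continuous with constant L and let \(x^*\) be a point in the solution set \(C^*\). Let \(\{x_n\}\) and \(\{y_n\}\) be the sequences generated by the extragradient algorithm. Then the following inequality holds

$$\begin{aligned} \Vert x_{n+1}-x^*\Vert ^2\le \Vert x_n-x^*\Vert ^2-(1-\lambda L)\Vert x_n-y_n\Vert ^2-(1-\lambda L)\Vert x_{n+1}-y_n\Vert ^2. \end{aligned}$$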

Proof

Since \(x^*\in C^*\subset C\) and \(x_{n+1}=P_C(x_n-\lambda F(y_n))\), we have from Theorem 2.1 (c) that

We have from \(x^*\in C^*\), \(y_n \in C\) and (1) that \(\langle F(x^*),y_n-x^*\rangle \ge 0\). Due to pseudo-monotonicity of F, we can infer that

Using Theorem 2.1 (b), since \(y_n=P_C(x_n-\lambda F(x_n))\) and \(x_{n+1}\in C\), we obtain

This is equivalent to

Since F is Lipschitz continuous on H with constant L, we have

Combining (8), (9), (10) with (7), we get

Using the elementary inequality \(2 \Vert x_n-y_n\Vert \Vert x_{n+1}-y_n\Vert \le \Vert x_n-y_n\Vert ^2+\Vert x_{n+1}-y_n\Vert ^2\) on the right-hand side of the above inequality, we obtain

\(\square \)
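For the reader's convenience, the chain of estimates behind Lemma 3.1 can be written out as follows. This is a sketch reconstructed from the steps named in the proof (Theorem 2.1 (c), pseudo-monotonicity, Theorem 2.1 (b), Lipschitz continuity and \(2ab\le a^2+b^2\)); the grouping of terms is our own and need not coincide with the authors' numbered displays.

```latex
\begin{aligned}
\|x_{n+1}-x^*\|^2
 &\le \|x_n-\lambda F(y_n)-x^*\|^2-\|x_n-\lambda F(y_n)-x_{n+1}\|^2
      && \text{(Theorem 2.1 (c))}\\
 &= \|x_n-x^*\|^2-\|x_n-x_{n+1}\|^2+2\lambda\langle F(y_n),x^*-x_{n+1}\rangle\\
 &\le \|x_n-x^*\|^2-\|x_n-x_{n+1}\|^2+2\lambda\langle F(y_n),y_n-x_{n+1}\rangle
      && (\langle F(y_n),y_n-x^*\rangle\ge 0)\\
 &= \|x_n-x^*\|^2-\|x_n-y_n\|^2-\|x_{n+1}-y_n\|^2
    +2\langle x_n-\lambda F(y_n)-y_n,x_{n+1}-y_n\rangle\\
 &\le \|x_n-x^*\|^2-\|x_n-y_n\|^2-\|x_{n+1}-y_n\|^2
    +2\lambda\langle F(x_n)-F(y_n),x_{n+1}-y_n\rangle
      && \text{(Theorem 2.1 (b))}\\
 &\le \|x_n-x^*\|^2-\|x_n-y_n\|^2-\|x_{n+1}-y_n\|^2
    +2\lambda L\|x_n-y_n\|\,\|x_{n+1}-y_n\|
      && \text{(Lipschitz continuity)}\\
 &\le \|x_n-x^*\|^2-(1-\lambda L)\|x_n-y_n\|^2-(1-\lambda L)\|x_{n+1}-y_n\|^2.
\end{aligned}
```

The fifth line uses \(\langle x_n-\lambda F(x_n)-y_n,\,x_{n+1}-y_n\rangle \le 0\), which is Theorem 2.1 (b) applied to \(y_n=P_C(x_n-\lambda F(x_n))\) and the point \(x_{n+1}\in C\).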

Under the weak sharp condition of solution set \(C^*\), we will show the finite convergence of the sequence \(\{x_n\}\) generated by extragradient algorithm.

Theorem 3.1

Let \(F:C \rightarrow H\) be monotone and Lipschitz continuous with constant L, and assume that the solution set \(C^*\) is weakly sharp with modulus \(\alpha >0\). Let \(\{x_n\} \) be the sequence generated by the extragradient algorithm with \(0<\lambda < 1/L\). Then \(\{x_n\}\) converges strongly to a point in \(C^*\) in at most \((k+1)\) iterations with

Moreover, the estimation in (12) is tight.

Proof

Since

for all \(u\in C\), we have

Then, we get

On the other hand, let \(x^*\) be a point of the solution set \(C^*\). From Lemma 3.1 we have

This implies that \(\{\Vert x_n-x^*\Vert \}\) is a non-increasing sequence, and therefore \(\lim _{n\rightarrow \infty }\Vert x_n-x^*\Vert \) exists. Moreover, we also have

Noticing that \(1-\lambda L>0\), we infer

Letting \(n\rightarrow \infty \) and taking the limits in the both sides of (15), we deduce that \(\lim _{n\rightarrow \infty }\Vert x_{n+1}-y_n\Vert =0\) and \(\lim _{n\rightarrow \infty }\Vert x_{n+1}-x_n\Vert =0\).

For \(0<N\in {\mathbb {N}}\), we have from (15) that

Since above inequality holds with any \(x^*\in C^*\), we obtain

Let k be the smallest integer such that

Since \(\frac{1}{\lambda }>L>0\), we can infer

We assume that \(x_{k+1} \notin C^*\) and set \(t_{k+1}=P_{C^*}(x_{k+1})\in C\). Then, by the weak sharpness of the solution set \(C^*\) and Theorem 2.2 (a), the Lipschitz continuity and monotonicity of F and inequality (13), we have

This implies that \(\frac{1}{\lambda }\Vert x_{k+1}-x_k\Vert +L\Vert x_{k+1}-y_k\Vert \ge \alpha \), which contradicts (18). Hence, \(x_{k+1}\in C^*\). It follows from (16) that

where the last inequality is deduced by (17). So, we obtain

To show that the above estimate is tight, let us consider a simple example. Let \(H={\mathbb {R}}\), \(C=[0, +\infty )\) and \(F(x) = 1\) for all \(x\in C\). Then it is clear that F is monotone and Lipschitz continuous on C with any modulus \(L>0\). The problem VI(C, F) has the unique solution \(x^* = 0\), i.e. \(C^* = \{0\}\). Then (5) holds with \(\alpha =1\) and it follows from Theorem 2.2 that \(C^*\) is weakly sharp, hence (19) holds whenever \(\lambda <1/L\). Since F is Lipschitz continuous with every \(L > 0\), we deduce that (19) holds for all \(\lambda >0\). Letting \(\lambda L =\frac{1}{2}\), we have from (19) that \(k\le \frac{12\,\text {dist}(x_0,C^*)^2}{\lambda ^2}\). Taking \(\lambda \) large enough, we conclude from (19) that \(k=0\), i.e. the algorithm converges to the solution in one step. Indeed, taking \(x_0 = a \in C\) and \(\lambda = a\), we obtain

$$\begin{aligned} y_0=P_C(x_0-\lambda F(x_0))=P_C(a-a)=0, \quad x_1=P_C(x_0-\lambda F(y_0))=P_C(a-a)=0\in C^*. \end{aligned}$$

\(\square \)

4 Forward–Backward–Forward method

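We consider the Forward–Backward–Forward algorithm proposed by Tseng (2000): given \(x_0\in H\) and a step size \(\lambda >0\), compute

$$\begin{aligned} {\left\{ \begin{array}{ll} y_n=P_C(x_n-\lambda F(x_n)),\\ x_{n+1}=y_n+\lambda (F(x_n)-F(y_n)). \end{array}\right. } \end{aligned}$$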
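The Forward–Backward–Forward iteration \(y_n=P_C(x_n-\lambda F(x_n))\), \(x_{n+1}=y_n+\lambda (F(x_n)-F(y_n))\) uses a single projection per step, which the following Python sketch makes explicit. The function name `forward_backward_forward` and the list-based vectors are illustrative assumptions; the usage part reuses the half-line example \(C=[0,+\infty )\), \(F\equiv 1\) from the end of Sect. 3.

```python
def forward_backward_forward(F, project, x0, lam, num_iter):
    """Run num_iter FBF steps; return ([x_0, ..., x_num_iter], [y_0, ..., y_{num_iter-1}])."""
    x = list(x0)
    xs, ys = [x], []
    for _ in range(num_iter):
        Fx = F(x)
        # Forward-backward step (the only projection): y_n = P_C(x_n - lam * F(x_n))
        y = project([xi - lam * gi for xi, gi in zip(x, Fx)])
        Fy = F(y)
        # Second forward step, no projection: x_{n+1} = y_n + lam * (F(x_n) - F(y_n))
        x = [yi + lam * (gxi - gyi) for yi, gxi, gyi in zip(y, Fx, Fy)]
        xs.append(x)
        ys.append(y)
    return xs, ys


# Half-line example: C = [0, +inf), F(x) = 1, x_0 = a = 2 and lam = a.
def project_halfline(x):
    return [max(xi, 0.0) for xi in x]


def F_const(x):
    return [1.0 for _ in x]


xs, ys = forward_backward_forward(F_const, project_halfline, [2.0], lam=2.0, num_iter=1)
```

Only the sequence \(\{y_n\}\) is guaranteed to lie in C, since \(x_{n+1}\) is not projected; this is why the finite convergence statement of this section is formulated for \(\{y_n\}\).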

As in the previous section, we recall and prove the main inequality relating the distances from the points generated by the Forward-Backward-Forward algorithm to a point \(x^*\) in the solution set \(C^*\). The proof for monotone VIs was given in Tseng (2000).

Lemma 4.1

Let \(F:H\rightarrow H\) be pseudo-monotone and Lipschitz continuous with constant L. Let C be a nonempty closed convex subset of H and \(x^*\) be a point in the solution set \(C^*\) of VI(C, F). Let \(\{x_n\}\) and \(\{y_n\}\) be the sequences generated by the Forward–Backward–Forward algorithm. Then the following inequality holds

$$\begin{aligned} \Vert x_{n+1}-x^*\Vert ^2\le \Vert x_n-x^*\Vert ^2-(1-\lambda ^2L^2)\Vert x_n-y_n\Vert ^2. \end{aligned}$$

Proof

From the equation

and noticing that \(x_n-x_{n+1}=x_n-\lambda F(x_n)-y_n+\lambda F(y_n)\), we have

Since \(y_n=P_C(x_n-\lambda F(x_n))\) and \(x^*\in C^*\subset C\), we have from Theorem 2.1 (b) that

On the other hand, since \(x^*\) is a point of solution set \(C^*\), \(y_n\in C\) and (1)

In addition, F is pseudo-monotone, hence

or equivalently

Next, we estimate the term \(2\langle x_n-x_{n+1},y_n-x_{n+1}\rangle \) by the Lipschitz continuity of F as follows

Combining (22), (23), (24) with (21), we get

\(\square \)

Under the weak sharp condition of solution set \(C^*\), we also show the finite convergence of the feasible sequence \(\{y_n\}\) to solution set \(C^*\) of this algorithm.

Theorem 4.1

Let \(F:H\rightarrow H\) be monotone and Lipschitz continuous with constant L, and assume that the solution set \(C^*\) of VI(C, F) is weakly sharp with modulus \(\alpha >0\). Let \(\{x_n\},\{y_n\} \) be the sequences generated by the Forward-Backward-Forward algorithm with \(0<\lambda < 1/L\). Then \(\{y_n\}\) converges strongly to a point in \(C^*\) in at most k iterations with

Moreover, the estimation (25) is tight.

Proof

Since

for all \(w\in C\), we get

Therefore,

On the other hand, since F is pseudo-monotone, for any point \(x^*\) of the solution set \(C^*\) we have from Lemma 4.1 that

Since \(0<\lambda <\frac{1}{L}\), (27) implies that \(\{\Vert x_n-x^*\Vert ^2\}\) is a non-increasing sequence, and therefore \(\lim _{n\rightarrow \infty }\Vert x_n-x^*\Vert \) exists. Moreover, we also have

Letting \(n\rightarrow \infty \) and taking the limits in the both sides of (28), we deduce that \(\lim _{n\rightarrow \infty }\Vert x_{n}-y_n\Vert =0\).

For \(0<N\in {\mathbb {N}}\), we have from (28) that

Since above inequality holds with any \(x^*\in C^*\), we get

Since \(\lim _{n\rightarrow \infty }\Vert x_{n}-y_n\Vert =0\), we can choose k to be the smallest integer such that

We assume that \(y_{k} \notin C^*\) and set \(t_{k}=P_{C^*}(y_{k})\in C\). Then, by the weak sharpness of the solution set \(C^*\), the Lipschitz continuity and monotone property of F and inequality (26), we have

This implies that \(\Vert x_{k}-y_k\Vert \ge \frac{\alpha \lambda }{\lambda L+1}\), which contradicts (30). Hence, \(y_{k}\in C^*\). It follows from (29) that

where the last inequality is deduced by (30). Therefore, we get

\(\square \)

To show that the above estimate is tight, let us consider again the simple example used in the proof of Theorem 3.1. Letting \(\lambda L =\frac{1}{2} < 1\), we deduce

Hence, choosing \(\lambda \) large enough, we get \(k=0\), i.e. \(y_0 \in C^*\). Indeed, taking \(x_0 = a \in C\) and \(\lambda = a\), we obtain

$$\begin{aligned} y_0=P_C(x_0-\lambda F(x_0))=P_C(a-a)=0\in C^*. \end{aligned}$$

\(\square \)

Remark 4.1

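In the subgradient extragradient method (Censor et al. 2012), \(\{x_n\}, \{y_n\}\) are generated by the following algorithm

$$\begin{aligned} {\left\{ \begin{array}{ll} y_n=P_C(x_n-\lambda F(x_n)),\\ T_n=\{w\in H: \langle x_n-\lambda F(x_n)-y_n, w-y_n\rangle \le 0\},\\ x_{n+1}=P_{T_n}(x_n-\lambda F(y_n)). \end{array}\right. } \end{aligned}$$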

The advantage of the subgradient extragradient method is that the projection onto the half-space \(T_n\) has an explicit formula. We have from the proof of Lemma 3.2 in Censor et al. (2012) that
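The explicit projection onto a half-space mentioned above can be sketched in Python as follows, for the half-space \(T=\{w: \langle a, w-y\rangle \le 0\}\) with normal vector \(a=x_n-\lambda F(x_n)-y_n\). The helper names `project_halfspace` and `subgradient_extragradient_step` are assumptions made for illustration; the formula \(P_T(u)=u-\frac{\max \{0,\langle a,u-y\rangle \}}{\Vert a\Vert ^2}a\) is the standard one for a half-space with \(a\ne 0\).

```python
def project_halfspace(u, a, y):
    """Project u onto T = {w : <a, w - y> <= 0}; if a = 0, then T is the whole space."""
    a_sq = sum(ai * ai for ai in a)
    if a_sq == 0.0:
        return list(u)
    violation = sum(ai * (ui - yi) for ai, ui, yi in zip(a, u, y))
    if violation <= 0.0:
        return list(u)  # u already belongs to T
    t = violation / a_sq
    return [ui - t * ai for ui, ai in zip(u, a)]


def subgradient_extragradient_step(F, project_C, x, lam):
    """One step of the subgradient extragradient method; returns (x_next, y)."""
    v = [xi - lam * gi for xi, gi in zip(x, F(x))]
    y = project_C(v)                       # the only projection onto C
    a = [vi - yi for vi, yi in zip(v, y)]  # normal vector of the half-space T_n
    u = [xi - lam * gi for xi, gi in zip(x, F(y))]
    return project_halfspace(u, a, y), y


# Illustrative use on the half-line example C = [0, +inf), F(x) = 1.
x_next, y0 = subgradient_extragradient_step(
    lambda x: [1.0 for _ in x],
    lambda x: [max(xi, 0.0) for xi in x],
    [2.0],
    lam=2.0,
)
```

Replacing the second projection onto C by a projection onto \(T_n\) is what makes each step cheap when \(P_C\) itself has no closed form.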

Hence, as in Theorem 4.1, with \(0<\lambda <\frac{1}{L}\), if \(F: H\rightarrow H \) is monotone and Lipschitz continuous and \(C^*\) is weakly sharp, then \(\{y_n\}\) converges to a point in \(C^*\) in at most k iterations with

Moreover, the above estimation is tight. We omit the detailed proof.

5 Popov’s method

We continue to use the above approach to show the finite convergence of the following Popov’s algorithm (Popov 1980): given \(x_0, y_0\in C\) and a step size \(\lambda >0\), compute

$$\begin{aligned} {\left\{ \begin{array}{ll} x_{n+1}=P_C(x_n-\lambda F(y_n)),\\ y_{n+1}=P_C(x_{n+1}-\lambda F(y_n)), \end{array}\right. } \end{aligned}$$

under the weak sharpness condition on the solution set \(C^*\). As in the previous sections, our proof is based on the following inequality, which slightly improves the main estimate in Popov (1980). This estimate allows us to choose a larger step size, i.e., \(\lambda \in \left( 0, \frac{1}{(1+\sqrt{2})L}\right) \) instead of \(\lambda \in \left( 0, \frac{1}{3L}\right) \) as in Popov (1980).
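A minimal Python sketch of Popov's iteration \(x_{n+1}=P_C(x_n-\lambda F(y_n))\), \(y_{n+1}=P_C(x_{n+1}-\lambda F(y_n))\) is given below; it shows that a single evaluation of F is shared by both projections in each step. The function name `popov` and the list-based vectors are illustrative assumptions. The usage part runs the half-line example \(C=[0,+\infty )\), \(F\equiv 1\) with \(x_0=a=2\) and \(y_0=\lambda =a/2\).

```python
def popov(F, project, x0, y0, lam, num_iter):
    """Run num_iter Popov steps; return the lists of iterates (xs, ys)."""
    x, y = list(x0), list(y0)
    xs, ys = [x], [y]
    for _ in range(num_iter):
        Fy = F(y)  # the only operator evaluation in this step
        # x_{n+1} = P_C(x_n - lam * F(y_n))
        x = project([xi - lam * gi for xi, gi in zip(x, Fy)])
        # y_{n+1} = P_C(x_{n+1} - lam * F(y_n)), reusing the same value F(y_n)
        y = project([xi - lam * gi for xi, gi in zip(x, Fy)])
        xs.append(x)
        ys.append(y)
    return xs, ys


def project_halfline(x):
    return [max(xi, 0.0) for xi in x]


def F_const(x):
    return [1.0 for _ in x]


xs, ys = popov(F_const, project_halfline, [2.0], [1.0], lam=1.0, num_iter=2)
# xs == [[2.0], [1.0], [0.0]]: the solution x* = 0 is reached after two steps.
```

Compared with the extragradient sketch, the saving is one call to F per iteration, at the price of a smaller admissible step size.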

Lemma 5.1

Let \(F:C\rightarrow H\) be pseudo-monotone and Lipschitz continuous with constant L and C be a nonempty closed convex subset of H. Let \(x^*\) be a point in solution set \(C^*\) of VI(C, F). Let \(\{x_n\}\) and \(\{y_n\}\) be sequences generated by Popov’s algorithm. Then the following inequality holds

Proof

Since \(x^*\) is a point in solution set \(C^*\), \(y_n\in C\) and F is pseudo-monotone on C

Therefore,

Since \(x_{n+1}=P_C(x_n-\lambda F(y_n))\), by Theorem 2.1 (c) we have

Combining the above inequality with (32) we get

Using Theorem 2.1 (b), since \(y_n=P_C(x_n-\lambda F(y_{n-1}))\) and \(x_{n+1}\in C\) we have

We estimate the term \(2\lambda \langle F(y_n)-F(y_{n-1}), y_n-x_{n+1}\rangle \) as follows:

Applying the estimates (34) and (35) to the right-hand side of inequality (33), we obtain

which is equivalent to

\(\square \)

The following theorem will show the finite convergence of Popov’s method if the solution set \(C^*\) is weakly sharp.

Theorem 5.1

Let \(F:C\rightarrow H\) be monotone and Lipschitz continuous with constant L, and let C be a nonempty closed convex subset of H. Assume that the solution set \(C^*\) of VI(C, F) is weakly sharp with modulus \(\alpha >0\). Let \(\{x_n\},\{y_n\} \) be the sequences generated by Popov’s algorithm with \(0<\lambda < \frac{1}{(1+\sqrt{2})L}\). Then \(\{x_n\}\) converges strongly to a point in \(C^*\) in at most \((k+1)\) iterations with

Moreover, the estimation in (36) is tight.

Proof

Since

for all \(u\in C\), we also have the similar result in (13)

Since F is pseudo-monotone, for any point \(x^*\) of the solution set \(C^*\) we get from Lemma 5.1 that

This implies that \(\{a_n\}=\{\Vert x_n-x^*\Vert ^2+\lambda L \Vert x_n-y_{n-1}\Vert ^2\}\) is a non-increasing sequence, and therefore \(\lim _{n\rightarrow \infty }a_n\) exists. Moreover, we also have

Noticing that \(0<\lambda <\frac{1}{(1+\sqrt{2})L}\), we infer

Letting \(n\rightarrow \infty \) and taking the limits in the both sides of (40), we deduce that \(\lim _{n\rightarrow \infty }\Vert x_{n+1}-y_n\Vert =0\) and \(\lim _{n\rightarrow \infty }\Vert x_{n+1}-x_n\Vert =0\).

For \(0<N\in {\mathbb {N}}\), we have from (40) that

Since above inequality holds with any \(x^*\in C^*\), we obtain

Let k be the smallest integer such that

Since \(\frac{1}{\lambda }>(1+\sqrt{2})L>L\), we can infer

We assume that \(x_{k+1} \notin C^*\) and set \(t_{k+1}=P_{C^*}(x_{k+1})\in C\). Then, by the weak sharpness of the solution set \(C^*\), the Lipschitz continuity and monotonicity of F and inequality (37), we have

This implies that \(\frac{1}{\lambda }\Vert x_{k+1}-x_k\Vert +L\Vert x_{k+1}-y_k\Vert \ge \alpha \), which contradicts (43). Hence, \(x_{k+1}\in C^*\). It follows from (41) that

where the last inequality is deduced by (42). Therefore, we obtain

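To show that the above estimate is tight, let us consider again the simple example used in the proof of Theorem 3.1. Letting \(\lambda L = 1/3\) and taking \(\lambda \) large enough, we can deduce that \(k=1\). Taking \(x_0=a \in C\) and \(\lambda =a/2=y_0\), we obtain

$$\begin{aligned} x_1=P_C(x_0-\lambda F(y_0))=\frac{a}{2}, \quad y_1=P_C(x_1-\lambda F(y_0))=0, \quad x_2=P_C(x_1-\lambda F(y_1))=0\in C^*, \end{aligned}$$

which means that the algorithm converges to the solution in at most two steps. \(\square \)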

Remark 5.1

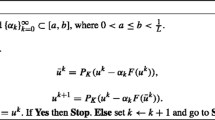

Malitsky and Semenov (2014) modified Popov’s algorithm by using the technique of the subgradient extragradient method:

Using a technique similar to that of Theorem 5.1, we can prove that the sequence \(\{y_n\}\) generated by the above modified Popov’s algorithm reaches a point in the solution set if n is sufficiently large.

6 Numerical illustration

In this section, we illustrate a general example in \({\mathbb {R}}^n\) space and two particular cases to show the finite convergence of sequences generated by above algorithms.

Example 6.1

Let \(H={\mathbb {R}}^n\) be endowed with the inner product \(\langle \cdot ,\cdot \rangle \) and the corresponding norm \(\Vert \cdot \Vert =\Vert \cdot \Vert _2\). Let \(C=\{(x_1,x_2,\ldots ,x_{n}): 0< a\le x_i\le b, i= \overline{1,n}\}\), which is a closed, convex subset of \({\mathbb {R}}^n\), and let \(F:C\rightarrow {\mathbb {R}}^{n}\) be defined by

$$\begin{aligned} F(x)=(x_1,x_2,\ldots ,x_{n-1},0). \end{aligned}$$

We consider the variational inequality problem VI(C, F).

Obviously, for \(x=(x_1, x_2,\ldots ,x_n), y=(y_1, y_2,\ldots ,y_n) \in {\mathbb {R}}^n\) we have

$$\begin{aligned} \Vert F(x)-F(y)\Vert =\sqrt{\sum _{i=1}^{n-1}(x_i-y_i)^2}\le \Vert x-y\Vert , \end{aligned}$$

so F is Lipschitz continuous with \(L=1\).

On the other hand, the problem of minimizing the function \(f:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) defined by

$$\begin{aligned} f(x)=\frac{1}{2}\sum _{i=1}^{n-1}x_i^2 \end{aligned}$$

over C has the same solution set as VI(C, F) because f is a convex, differentiable function and \(F=\nabla f\). Hence, the solution set of VI(C, F) is

$$\begin{aligned} C^*=\{(a,a,\ldots ,a,x_n): a\le x_n\le b\}. \end{aligned}$$

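To check the weak sharpness of \(C^*\), we use Theorem 2.2 (b). It is obvious that \(F(x)=(a,a,\ldots ,a,0) \ \forall \ x\in C^*\), therefore F is constant on \(C^*\). Let \(x=(x_1,x_2,\ldots ,x_n)\in C\), thus \(a\le x_1,x_2, \ldots ,x_n\le b \), and we have

$$\begin{aligned} \sum _{i=1}^{n-1}(x_i-a)\ge \sqrt{\sum _{i=1}^{n-1}(x_i-a)^2}. \end{aligned}$$(44)

Using inequality (44), and noticing that \(P_{C^*}(x)=(a,a,\ldots ,a,x_n)\) and \( x-P_{C^*}(x)=(x_1-a,x_2-a,\ldots ,x_{n-1}-a,0)\), we get

$$\begin{aligned} \langle F(x),x-P_{C^*}(x)\rangle =\sum _{i=1}^{n-1}x_i(x_i-a)\ge a\sum _{i=1}^{n-1}(x_i-a)\ge a\sqrt{\sum _{i=1}^{n-1}(x_i-a)^2}=a\,\text {dist}(x,C^*), \end{aligned}$$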

which means the inequality in Theorem 2.2 (b) is satisfied with \(\alpha =a>0\). Thus, \(C^*\) is weakly sharp.
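The bound \(\langle F(x),x-P_{C^*}(x)\rangle \ge a\,\text {dist}(x,C^*)\) for the operator \(F(x)=(x_1,\ldots ,x_{n-1},0)\) on the box \([a,b]^n\) can also be checked numerically. The Python sketch below samples random points of C and compares both sides; the function name, the dimension, the sample size and the tolerance are illustrative assumptions.

```python
import random


def check_weak_sharp_bound(n=6, a=1.0, b=10.0, trials=2000, seed=1, tol=1e-9):
    """Return True if <F(x), x - P_{C*}(x)> >= a * dist(x, C*) on all samples."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(a, b) for _ in range(n)]          # random point of C
        Fx = x[:-1] + [0.0]                                 # F(x) = (x_1, ..., x_{n-1}, 0)
        proj = [a] * (n - 1) + [x[-1]]                      # P_{C*}(x) = (a, ..., a, x_n)
        diff = [xi - pi for xi, pi in zip(x, proj)]
        lhs = sum(fi * di for fi, di in zip(Fx, diff))      # <F(x), x - P_{C*}(x)>
        dist = sum(di * di for di in diff) ** 0.5           # dist(x, C*)
        if lhs < a * dist - tol:
            return False
    return True
```

Such a check does not replace the proof; it is only a quick way to confirm the modulus \(\alpha =a\) before plugging it into the iteration bounds.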

To show our results visually, we consider two following examples in \({\mathbb {R}}^3\) and \({\mathbb {R}}^{100}\).

Let \(H={\mathbb {R}}^3\) and \(a=1, b=10\). Then \(C=[1,10]\times [1,10]\times [1,10]\) is a cube in \({\mathbb {R}}^3\) and \(F: C \rightarrow {\mathbb {R}}^3\) is defined by the formula \(F(x)=(x_1,x_2,0)\) where \(x=(x_1,x_2,x_3)\in C\), which means that F is the orthogonal projection onto the Oxy-plane. We consider the variational inequality problem VI(C, F).

We choose the starting point \(x_0=(10,10,5)\) for the extragradient algorithm and the Forward-Backward-Forward algorithm with step size \(\lambda =0.5<1/L\). For Popov’s algorithm, we take \(x_0=(10,10,5)\), \(y_0=(10,10,1)\) and \(\lambda =0.3 < (\sqrt{2}-1)/L\). It is clear from Fig. 1 that for the extragradient algorithm, the sequence \(\{x_n\}\) reaches the point \((1,1,5) \in C^*\) after 8 iterations. Similar results are obtained with the iterative sequences generated by the Forward-Backward-Forward algorithm and Popov’s algorithm, as displayed in Figs. 2 and 3, respectively.
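The first experiment can be re-run with a few lines of Python. The sketch below specializes the three iterations to the cube \(C=[1,10]^3\) and \(F(x)=(x_1,x_2,0)\), and returns the first index at which the monitored sequence (\(x_n\) for the extragradient and Popov methods, \(y_n\) for Forward-Backward-Forward) lies in \(C^*\). All function names and the cap `max_iter` are assumptions made for illustration; this is not the code used to produce the figures.

```python
def clip_box(x, lo=1.0, hi=10.0):
    return [min(max(xi, lo), hi) for xi in x]


def F(x):
    return x[:-1] + [0.0]  # F(x) = (x_1, ..., x_{n-1}, 0)


def step(x, g, lam):
    return clip_box([xi - lam * gi for xi, gi in zip(x, g)])  # P_C(x - lam * g)


def in_solution_set(x, lo=1.0):
    return all(xi == lo for xi in x[:-1])  # C* = {(1, ..., 1, t)}


def extragradient_steps(x0, lam, max_iter=100):
    x = list(x0)
    for n in range(max_iter + 1):
        if in_solution_set(x):
            return n, x
        y = step(x, F(x), lam)
        x = step(x, F(y), lam)
    return None, x


def fbf_steps(x0, lam, max_iter=100):
    x = list(x0)
    for n in range(max_iter + 1):
        Fx = F(x)
        y = step(x, Fx, lam)
        if in_solution_set(y):
            return n, y
        x = [yi + lam * (gx - gy) for yi, gx, gy in zip(y, Fx, F(y))]
    return None, y


def popov_steps(x0, y0, lam, max_iter=100):
    x, y = list(x0), list(y0)
    for n in range(max_iter + 1):
        if in_solution_set(x):
            return n, x
        Fy = F(y)
        x = step(x, Fy, lam)
        y = step(x, Fy, lam)
    return None, x


n_eg, x_eg = extragradient_steps([10.0, 10.0, 5.0], 0.5)
n_fbf, y_fbf = fbf_steps([10.0, 10.0, 5.0], 0.5)
n_pop, x_pop = popov_steps([10.0, 10.0, 5.0], [10.0, 10.0, 1.0], 0.3)
# n_eg == 8 and x_eg == [1.0, 1.0, 5.0], in agreement with the text above.
```

Membership in \(C^*\) can be tested with exact equality here because the projection clips the first two coordinates to exactly 1 once the unprojected point leaves the cube.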

In the second experiment, we take \(H={\mathbb {R}}^{100}\) and \(a=1, b=100\). We choose the same random starting point \(x_0\) for the extragradient, Forward-Backward-Forward and Popov algorithms, with \(\lambda =0.5<1/L\) for the first two, and one more random starting point \(y_0\) for Popov’s algorithm with \(\lambda =0.4<(\sqrt{2}-1)/L\). The results of the three algorithms are shown in Fig. 4. The x-axis shows the number of steps, while the y-axis shows the distance from the points generated by the algorithms to the solution set \(C^*\).

We can see from Fig. 4 that both the extragradient and Forward-Backward-Forward algorithms terminate after 11 steps, while Popov’s algorithm converges faster and terminates after 7 steps. We also notice that in this particular example, the extragradient and Forward-Backward-Forward algorithms are identical. The reason is that since the vector \(x_n - \lambda F(x_n) \in C\) for all n, the projection operator \(P_C\) does not contribute to the iteration process.

References

Al-Homidan S, Ansari QH, Nguyen LV (2016) Finite convergence analysis and weak sharp solutions for variational inequalities. Optim Lett. https://doi.org/10.1007/s11590-016-1076-7

Al-Homidan S, Ansari QH, Nguyen LV (2017) Weak sharp solutions for nonsmooth variational inequalities. J Optim Theory Appl 175:683–701

Boţ RI, Csetnek ER, Vuong PT (2020) The forward-backward-forward method from continuous and discrete perspective for pseudo-monotone variational inequalities in Hilbert spaces. Eur J Oper Res 287:49–60

Burke JV, Ferris MC (1993) Weak sharp minima in mathematical programming. SIAM J Control Optim 31:1340–1359

Censor Y, Gibali A, Reich S (2011a) The subgradient extragradient method for solving variational inequalities in Hilbert space. J Optim Theory Appl 148:318–335

Censor Y, Gibali A, Reich S (2011b) Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim Methods Softw 26:827–845

Censor Y, Gibali A, Reich S (2012) Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optimization 61:1119–1132

Facchinei F, Pang J-S (2003) Finite-Dimensional Variational Inequalities and Complementarity Problems, vol I and II. Springer, New York

Goebel K, Reich S (1984) Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings. Marcel Dekker, New York

Karamardian S, Schaible S (1990) Seven kinds of monotone maps. J Optim Theory Appl 66:37–46

Khanh PD (2016) A modified extragradient method for infinite-dimensional variational inequalities. Acta Math Vietnam 41:251–263

Kolobov VI, Reich S, Zalas R (2021) Finitely convergent iterative methods with overrelaxations revisited. J Fixed Point Theory Appl 23:57–78

Kolobov VI, Reich S, Zalas R (2022) Finitely convergent deterministic and stochastic iterative methods for solving convex feasibility problems. Math Program Ser A 194:1163–1183

Korpelevich GM (1976) The extragradient method for finding saddle points and other problems. Ekonomika i Mat Metody 12:747–756

Liu YN, Wu ZL (2016a) Characterization of weakly sharp solutions of a variational inequality by its primal gap function. Optim Lett 10:563–576

Liu YN, Wu ZL (2016b) Weakly sharp solutions of primal and dual variational inequality problems. Pac J Optim 12:207–220

Malitsky YV, Semenov VV (2014) An extragradient algorithm for monotone variational inequalities. Cybernet Syst Anal 50:271–277

Marcotte P, Zhu DL (1998) Weak sharp solutions of variational inequalities. SIAM J Optim 9:179–189

Nguyen LV, Ansari QH, Qin X (2020) Linear conditioning, weak sharpness and finite convergence for equilibrium problems. J Global Optim 77:405–424

Nguyen LV, Ansari QH, Qin X (2021) Weak sharpness and finite convergence for solutions of nonsmooth variational inequalities in Hilbert spaces. Appl Math Optimiz 84:807–828

Popov LD (1980) A modification of the Arrow–Hurwicz method for search of saddle points. Math Notes Acad Sci USSR 28:845–848

Tseng P (2000) A modified forward-backward splitting method for maximal monotone mappings. SIAM J Control Optim 38:431–446

Vuong PT (2018) On the weak convergence of the extragradient method for solving pseudo-monotone variational inequalities. J Optim Theory Appl 176:399–409

Acknowledgements

The first and the second authors would like to thank Van Lang University, Vietnam for funding this work. The authors would like to thank the referees for their highly valuable comments and suggestions, which helped us improve the presentation of this paper.


Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Communicated by Carlos Conca.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Trinh, T.Q., Vinh, L.V. & Vuong, P.T. Finite convergence of extragradient-type methods for solving variational inequalities under weak sharp condition. Comp. Appl. Math. 41, 400 (2022). https://doi.org/10.1007/s40314-022-02110-y


DOI: https://doi.org/10.1007/s40314-022-02110-y