Abstract

A new eigenvalue localization set for tensors is given and proved to be tighter than those presented by Li et al. (Linear Algebra Appl. 481:36-53, 2015) and Huang et al. (J. Inequal. Appl. 2016:254, 2016). As an application of this set, new bounds for the minimum eigenvalue of \(\mathcal{M}\)-tensors are established and proved to be sharper than some known results. Compared with the results obtained by Huang et al., the advantage of our results is that, without considering the selection of nonempty proper subsets S of \(N=\{1,2,\ldots,n\}\), we can obtain a tighter eigenvalue localization set for tensors and sharper bounds for the minimum eigenvalue of \(\mathcal{M}\)-tensors. Finally, numerical examples are given to verify the theoretical results.


1 Introduction

For a positive integer n, \(n\geq2\), N denotes the set \(\{1,2,\ldots ,n\}\). \(\mathbb{C}\) (respectively, \(\mathbb{R}\)) denotes the set of all complex (respectively, real) numbers. We call \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\) a complex (real) tensor of order m and dimension n, denoted by \(\mathcal{A}\in\mathbb{C}^{[m,n]}\) (\(\mathbb{R}^{[m,n]}\)), if

\[a_{i_{1}\cdots i_{m}}\in\mathbb{C}\ (\mathbb{R}),\]

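where \(i_{j}\in{N}\) for \(j=1,2,\ldots,m\). \(\mathcal{A}\) is called reducible if there exists a nonempty proper index subset \(\mathbb{J}\subset N\) such that

\[a_{i_{1}i_{2}\cdots i_{m}}=0,\quad \forall i_{1}\in\mathbb{J},\ \forall i_{2},\ldots,i_{m}\notin\mathbb{J}.\]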

If \(\mathcal{A}\) is not reducible, then we call \(\mathcal{A}\) irreducible [3].

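Given a tensor \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in\mathbb {C}^{[m,n]}\), if there are \(\lambda\in\mathbb{C}\) and \(x=(x_{1},x_{2},\ldots,x_{n})^{T}\in\mathbb{C}^{n}\backslash\{0\}\) such that

\[\mathcal{A}x^{m-1}=\lambda x^{[m-1]},\]

then λ is called an eigenvalue of \(\mathcal{A}\) and x an eigenvector of \(\mathcal{A}\) associated with λ, where \(\mathcal{A}x^{m-1}\) is an n-dimensional vector whose ith component is

\[\bigl(\mathcal{A}x^{m-1}\bigr)_{i}=\sum_{i_{2},\ldots,i_{m}\in N}a_{ii_{2}\cdots i_{m}}x_{i_{2}}\cdots x_{i_{m}}\]

and

\[x^{[m-1]}=\bigl(x_{1}^{m-1},x_{2}^{m-1},\ldots,x_{n}^{m-1}\bigr)^{T}.\]

If λ and x are both real, then λ is called an H-eigenvalue of \(\mathcal {A}\) and x an H-eigenvector of \(\mathcal{A}\) associated with λ; see [4, 5]. Moreover, the spectral radius \(\rho(\mathcal{A})\) of \(\mathcal{A}\) is defined as

\[\rho(\mathcal{A})=\max\bigl\{\vert\lambda\vert:\lambda\in\sigma(\mathcal{A})\bigr\},\]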

where \(\sigma(\mathcal{A})\) is the spectrum of \(\mathcal{A}\), that is, \(\sigma(\mathcal{A})=\{\lambda:\lambda \mbox{ is an eigenvalue of } \mathcal{A}\}\); see [3, 6].
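The eigenvalue equation can be checked numerically for small tensors. The following is a minimal sketch, assuming the tensor is stored as a NumPy array with m axes of length n; the helper names `tensor_apply` and `is_eigenpair` and the all-ones test tensor are illustrative choices, not part of the paper.

```python
import numpy as np

def tensor_apply(A, x):
    """Return the vector A x^{m-1} for an order-m, dimension-n tensor A."""
    y = A
    # Contract the last axis with x, m-1 times; the first axis stays free.
    for _ in range(A.ndim - 1):
        y = y @ x
    return y

def is_eigenpair(A, lam, x, tol=1e-10):
    """Check A x^{m-1} = lam * x^{[m-1]} up to an absolute tolerance."""
    m = A.ndim
    return bool(np.allclose(tensor_apply(A, x), lam * x ** (m - 1), atol=tol))

# Illustrative data (an assumption, not from the paper):
# the all-ones tensor of order 3 and dimension 2.
A = np.ones((2, 2, 2))
x = np.array([1.0, 1.0])
print(tensor_apply(A, x))       # every component sums the 4 entries of one slice
print(is_eigenpair(A, 4.0, x))  # (4, x) satisfies the eigenvalue equation
```

Here the componentwise power `x ** (m - 1)` plays the role of \(x^{[m-1]}\), and the repeated contraction implements the sum over \(i_{2},\ldots,i_{m}\).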

A real tensor \(\mathcal{A}\) is called an \(\mathcal{M}\)-tensor if there exist a nonnegative tensor \(\mathcal{B}\) and a positive number \(\alpha>\rho(\mathcal{B})\) such that \(\mathcal {A}=\alpha\mathcal{I}-\mathcal{B}\), where \(\mathcal{I}=(\delta_{i_{1}\cdots i_{m}})\) is called the unit tensor with its entries

\[\delta_{i_{1}\cdots i_{m}}=\begin{cases}1, & i_{1}=\cdots=i_{m},\\ 0, & \text{otherwise}.\end{cases}\]

Denote by \(\tau(\mathcal{A})\) the minimal value of the real part of all eigenvalues of an \(\mathcal{M}\)-tensor \(\mathcal{A}\). Then \(\tau (\mathcal{A})>0\) is an eigenvalue of \(\mathcal{A}\) with a nonnegative eigenvector. If \(\mathcal{A}\) is irreducible, then \(\tau(\mathcal{A})\) is the unique eigenvalue with a positive eigenvector [7–9].
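To make the definition concrete, here is a hedged sketch that builds \(\mathcal{A}=\alpha\mathcal{I}-\mathcal{B}\) and estimates \(\tau(\mathcal{A})=\alpha-\rho(\mathcal{B})\). It assumes \(\mathcal{B}\) is entrywise positive and uses a power-type iteration with min/max ratio bounds for \(\rho(\mathcal{B})\); this iteration is a standard technique for nonnegative tensors and is not taken from the paper. All function names and the test data are hypothetical.

```python
import numpy as np

def tensor_apply(B, x):
    """Return B x^{m-1} by contracting the last axis with x, m-1 times."""
    y = B
    for _ in range(B.ndim - 1):
        y = y @ x
    return y

def identity_tensor(m, n):
    """Unit tensor: 1 on the diagonal i1 = ... = im, 0 elsewhere."""
    I = np.zeros((n,) * m)
    for i in range(n):
        I[(i,) * m] = 1.0
    return I

def spectral_radius_power(B, iters=500, tol=1e-12):
    """Estimate rho(B) for an entrywise positive tensor B (assumption)."""
    m, n = B.ndim, B.shape[0]
    x = np.ones(n)
    lo = hi = 0.0
    for _ in range(iters):
        y = tensor_apply(B, x)
        ratios = y / x ** (m - 1)
        lo, hi = ratios.min(), ratios.max()  # lo <= rho(B) <= hi for x > 0
        if hi - lo < tol:
            break
        x = y ** (1.0 / (m - 1))
        x = x / x.sum()                      # keep the iterate normalized
    return 0.5 * (lo + hi)

# Illustrative data (not from the paper): B is the all-ones tensor, alpha = 5.
B = np.ones((2, 2, 2))
alpha = 5.0
A = alpha * identity_tensor(3, 2) - B
rho = spectral_radius_power(B)
tau = alpha - rho                            # minimum eigenvalue of the M-tensor A
print(rho, tau)
```

Since \(\alpha=5>\rho(\mathcal{B})\) in this toy case, \(\mathcal{A}\) is an \(\mathcal{M}\)-tensor and the computed `tau` is positive.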

Recently, many people have focused on locating eigenvalues of tensors and using obtained eigenvalue inclusion theorems to determine the positive definiteness of an even-order real symmetric tensor or to give the lower and upper bounds for the spectral radius of nonnegative tensors and the minimum eigenvalue of \(\mathcal {M}\)-tensors. For details, see [1, 2, 10–14].

In 2015, Li et al. [1] proposed the following Brauer-type eigenvalue localization set for tensors.

Theorem 1

[1], Theorem 6

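Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in{\mathbb{C}}^{[m,n]}\). Then

\[\sigma(\mathcal{A})\subseteq\Delta(\mathcal{A})=\bigcup_{i,j\in N, j\neq i}\Delta_{i}^{j}(\mathcal{A}),\]

where

\[\Delta_{i}^{j}(\mathcal{A})=\bigl\{z\in\mathbb{C}:\bigl\vert (z-a_{i\cdots i})(z-a_{j\cdots j})-a_{ij\cdots j}a_{ji\cdots i}\bigr\vert \leq \vert z-a_{j\cdots j}\vert r_{i}^{j}(\mathcal{A})+\vert a_{ij\cdots j}\vert r_{j}^{i}(\mathcal{A})\bigr\},\]

\[r_{i}(\mathcal{A})=\sum_{\delta_{ii_{2}\cdots i_{m}}=0}\vert a_{ii_{2}\cdots i_{m}}\vert ,\qquad r_{i}^{j}(\mathcal{A})=\sum_{\substack{\delta_{ii_{2}\cdots i_{m}}=0,\\ \delta_{ji_{2}\cdots i_{m}}=0}}\vert a_{ii_{2}\cdots i_{m}}\vert =r_{i}(\mathcal{A})-\vert a_{ij\cdots j}\vert .\]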

To reduce computations, Huang et al. [2] presented an S-type eigenvalue localization set by breaking N into disjoint subsets S and S̄, where S̄ is the complement of S in N.

Theorem 2

[2], Theorem 3.1

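Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in{\mathbb{C}}^{[m,n]}\), S be a nonempty proper subset of N, S̄ be the complement of S in N. Then

\[\sigma(\mathcal{A})\subseteq\Delta^{S}(\mathcal{A})=\biggl(\bigcup_{i\in S,j\in\bar{S}}\Delta_{i}^{j}(\mathcal{A})\biggr)\cup\biggl(\bigcup_{i\in\bar{S},j\in S}\Delta_{i}^{j}(\mathcal{A})\biggr).\]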

Based on Theorem 2, Huang et al. [2] obtained the following lower and upper bounds for the minimum eigenvalue of \(\mathcal{M}\)-tensors.

Theorem 3

[2], Theorem 3.6

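Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in\mathbb{R}^{[m, n]}\) be an \(\mathcal{M}\)-tensor, S be a nonempty proper subset of N, S̄ be the complement of S in N. Then

\[\min\Bigl\{\min_{i\in S}\max_{j\in\bar{S}}L_{ij}(\mathcal{A}),\min_{i\in\bar{S}}\max_{j\in S}L_{ij}(\mathcal{A})\Bigr\}\leq\tau(\mathcal{A})\leq\max\Bigl\{\max_{i\in S}\min_{j\in\bar{S}}L_{ij}(\mathcal{A}),\max_{i\in\bar{S}}\min_{j\in S}L_{ij}(\mathcal{A})\Bigr\},\]

where

\[L_{ij}(\mathcal{A})=\frac{1}{2}\Bigl\{a_{i\cdots i}+a_{j\cdots j}-r_{i}^{j}(\mathcal{A})-\bigl[\bigl(a_{i\cdots i}-a_{j\cdots j}-r_{i}^{j}(\mathcal{A})\bigr)^{2}-4a_{ij\cdots j}r_{j}(\mathcal{A})\bigr]^{\frac{1}{2}}\Bigr\}.\]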

The main aim of this paper is to give a new eigenvalue inclusion set for tensors and to prove that this set is tighter than those in Theorems 1 and 2, without any need to select S. We then use this set to obtain new lower and upper bounds for the minimum eigenvalue of \(\mathcal{M}\)-tensors and prove that the new bounds are sharper than those in Theorem 3.

2 Main results

We now give a new eigenvalue inclusion set for tensors and compare it with the sets in Theorems 1 and 2.

Theorem 4

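Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in{\mathbb{C}}^{[m,n]}\). Then

\[\sigma(\mathcal{A})\subseteq\Delta^{\cap}(\mathcal{A})=\bigcup_{i\in N}\bigcap_{j\in N, j\neq i}\Delta_{i}^{j}(\mathcal{A}).\]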

Proof

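For any \(\lambda\in\sigma(\mathcal{A})\), let \(x=(x_{1},\ldots ,x_{n})^{T}\in{\mathbb{C}}^{n}\backslash\{0\}\) be an associated eigenvector, i.e.,

\[\mathcal{A}x^{m-1}=\lambda x^{[m-1]}. \tag{1}\]

Let \(\vert x_{p}\vert =\max\{\vert x_{i}\vert :i \in N\}\). Then \(\vert x_{p}\vert >0\). For any \(j\in N, j\neq p\), from (1) we have

\[\lambda x_{p}^{m-1}=\sum_{\substack{\delta_{pi_{2}\cdots i_{m}}=0,\\ \delta_{ji_{2}\cdots i_{m}}=0}}a_{pi_{2}\cdots i_{m}}x_{i_{2}}\cdots x_{i_{m}}+a_{p\cdots p}x_{p}^{m-1}+a_{pj\cdots j}x_{j}^{m-1}\]

and

\[\lambda x_{j}^{m-1}=\sum_{\substack{\delta_{ji_{2}\cdots i_{m}}=0,\\ \delta_{pi_{2}\cdots i_{m}}=0}}a_{ji_{2}\cdots i_{m}}x_{i_{2}}\cdots x_{i_{m}}+a_{j\cdots j}x_{j}^{m-1}+a_{jp\cdots p}x_{p}^{m-1},\]

equivalently,

\[(\lambda-a_{p\cdots p})x_{p}^{m-1}-a_{pj\cdots j}x_{j}^{m-1}=\sum_{\substack{\delta_{pi_{2}\cdots i_{m}}=0,\\ \delta_{ji_{2}\cdots i_{m}}=0}}a_{pi_{2}\cdots i_{m}}x_{i_{2}}\cdots x_{i_{m}} \tag{2}\]

and

\[(\lambda-a_{j\cdots j})x_{j}^{m-1}-a_{jp\cdots p}x_{p}^{m-1}=\sum_{\substack{\delta_{ji_{2}\cdots i_{m}}=0,\\ \delta_{pi_{2}\cdots i_{m}}=0}}a_{ji_{2}\cdots i_{m}}x_{i_{2}}\cdots x_{i_{m}}. \tag{3}\]

Solving \(x_{p}^{m-1}\) from (2) and (3), we get

\[\bigl((\lambda-a_{p\cdots p})(\lambda-a_{j\cdots j})-a_{pj\cdots j}a_{jp\cdots p}\bigr)x_{p}^{m-1}=(\lambda-a_{j\cdots j})\sum_{\substack{\delta_{pi_{2}\cdots i_{m}}=0,\\ \delta_{ji_{2}\cdots i_{m}}=0}}a_{pi_{2}\cdots i_{m}}x_{i_{2}}\cdots x_{i_{m}}+a_{pj\cdots j}\sum_{\substack{\delta_{ji_{2}\cdots i_{m}}=0,\\ \delta_{pi_{2}\cdots i_{m}}=0}}a_{ji_{2}\cdots i_{m}}x_{i_{2}}\cdots x_{i_{m}}.\]

Taking absolute values and using the triangle inequality together with \(\vert x_{i}\vert \leq \vert x_{p}\vert \) for all \(i\in N\) yields

\[\bigl\vert (\lambda-a_{p\cdots p})(\lambda-a_{j\cdots j})-a_{pj\cdots j}a_{jp\cdots p}\bigr\vert \vert x_{p}\vert ^{m-1}\leq \vert \lambda-a_{j\cdots j}\vert r_{p}^{j}(\mathcal{A})\vert x_{p}\vert ^{m-1}+\vert a_{pj\cdots j}\vert r_{j}^{p}(\mathcal{A})\vert x_{p}\vert ^{m-1}.\]

Furthermore, by \(\vert x_{p}\vert >0\), we have

\[\bigl\vert (\lambda-a_{p\cdots p})(\lambda-a_{j\cdots j})-a_{pj\cdots j}a_{jp\cdots p}\bigr\vert \leq \vert \lambda-a_{j\cdots j}\vert r_{p}^{j}(\mathcal{A})+\vert a_{pj\cdots j}\vert r_{j}^{p}(\mathcal{A}),\]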

which implies that \(\lambda\in\Delta_{p}^{j}(\mathcal{A})\). From the arbitrariness of j, we have \(\lambda\in\bigcap_{j\in N, j\neq p}\Delta_{p}^{j}(\mathcal{A})\). Furthermore, we have \(\lambda\in\bigcup_{i\in N}\bigcap_{j\in N, j\neq i}\Delta _{i}^{j}(\mathcal{A})\). The conclusion follows. □

Next, a comparison theorem is given for Theorems 1, 2 and 4.

Theorem 5

Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in{\mathbb{C}}^{[m,n]}\), S be a nonempty proper subset of N. Then

\[\Delta^{\cap}(\mathcal{A})\subseteq\Delta^{S}(\mathcal{A})\subseteq\Delta(\mathcal{A}).\]

Proof

By Theorem 3.2 in [2], \(\Delta^{S}(\mathcal{A})\subseteq\Delta(\mathcal{A})\). Here, only \(\Delta^{\cap}(\mathcal{A})\subseteq\Delta^{S}(\mathcal{A})\) is proved. Let \(z\in\Delta^{\cap}(\mathcal{A})\), then there exists some \(i_{0}\in N\) such that \(z\in\Delta_{i_{0}}^{j}(\mathcal {A}),\forall j\in N, j\neq i_{0}\). Let S̄ be the complement of S in N. If \(i_{0}\in S\), then taking \(j\in\bar{S}\), obviously, \(z\in\bigcup_{i_{0}\in S,j\in\bar{S}}\Delta_{i_{0}}^{j}(\mathcal{A})\subseteq \Delta^{S}(\mathcal{A})\). If \(i_{0}\in\bar{S}\), then taking \(j\in S\), obviously, \(z\in\bigcup_{i_{0}\in\bar{S},j\in S}\Delta_{i_{0}}^{j}(\mathcal {A})\subseteq\Delta^{S}(\mathcal{A})\). The conclusion follows. □

Remark 1

Theorem 5 shows that the set \(\Delta^{\cap}(\mathcal{A})\) in Theorem 4 is tighter than those in Theorems 1 and 2, that is, \(\Delta^{\cap}(\mathcal{A})\) can capture all eigenvalues of \(\mathcal {A}\) more precisely than \(\Delta(\mathcal{A})\) and \(\Delta^{S}(\mathcal{A})\).
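The nesting of the three sets can be explored numerically with a short membership test. This is a sketch under stated assumptions: \(\Delta_{i}^{j}(\mathcal{A})\) is taken to be the Brauer-type region \(\vert (z-a_{i\cdots i})(z-a_{j\cdots j})-a_{ij\cdots j}a_{ji\cdots i}\vert \leq \vert z-a_{j\cdots j}\vert r_{i}^{j}(\mathcal{A})+\vert a_{ij\cdots j}\vert r_{j}^{i}(\mathcal{A})\) of [1], with \(r_{i}(\mathcal{A})\) the sum of moduli of the off-diagonal entries in slice i and \(r_{i}^{j}(\mathcal{A})=r_{i}(\mathcal{A})-\vert a_{ij\cdots j}\vert \). Indices are 0-based, and all function names and test data are hypothetical.

```python
import numpy as np

def r(A, i):
    """r_i(A): sum of |a_{i i2...im}| over slice i, excluding the diagonal entry."""
    return np.abs(A[i]).sum() - abs(A[(i,) * A.ndim])

def r_excl(A, i, j):
    """r_i^j(A) = r_i(A) - |a_{i j...j}|."""
    return r(A, i) - abs(A[(i,) + (j,) * (A.ndim - 1)])

def in_delta_ij(A, z, i, j, tol=1e-12):
    """Membership of z in the assumed Brauer-type region Delta_i^j(A)."""
    m = A.ndim
    aii, ajj = A[(i,) * m], A[(j,) * m]
    aij = A[(i,) + (j,) * (m - 1)]
    aji = A[(j,) + (i,) * (m - 1)]
    lhs = abs((z - aii) * (z - ajj) - aij * aji)
    rhs = abs(z - ajj) * r_excl(A, i, j) + abs(aij) * r_excl(A, j, i)
    return bool(lhs <= rhs + tol)

def in_delta(A, z):
    """Theorem 1: union over all ordered pairs i != j."""
    n = A.shape[0]
    return any(in_delta_ij(A, z, i, j)
               for i in range(n) for j in range(n) if j != i)

def in_delta_S(A, z, S):
    """Theorem 2: pairs with one index in S and the other in its complement."""
    n = A.shape[0]
    Sbar = [k for k in range(n) if k not in S]
    return (any(in_delta_ij(A, z, i, j) for i in S for j in Sbar)
            or any(in_delta_ij(A, z, i, j) for i in Sbar for j in S))

def in_delta_cap(A, z):
    """Theorem 4: union over i of the intersection over j != i."""
    n = A.shape[0]
    return any(all(in_delta_ij(A, z, i, j) for j in range(n) if j != i)
               for i in range(n))

# Illustrative check on random data (not from the paper).
rng = np.random.default_rng(0)
T = rng.standard_normal((3, 3, 3))
for z in rng.standard_normal(200) * 3 + 1j * rng.standard_normal(200) * 3:
    if in_delta_cap(T, z):
        assert in_delta_S(T, z, [0]) and in_delta(T, z)
```

The loop only confirms the inclusions of Theorem 5 on sampled points; it says nothing about how much smaller \(\Delta^{\cap}(\mathcal{A})\) is for a given tensor.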

In the following, we give new lower and upper bounds for the minimum eigenvalue of \(\mathcal{M}\)-tensors.

Theorem 6

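Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in\mathbb{R}^{[m, n]}\) be an irreducible \(\mathcal{M}\)-tensor. Then

\[\min_{i\in N}\max_{j\neq i}L_{ij}(\mathcal{A})\leq\tau(\mathcal{A})\leq\max_{i\in N}\min_{j\neq i}L_{ij}(\mathcal{A}).\]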

Proof

Let \(x=(x_{1},x_{2},\ldots,x_{n})^{T}\) be a positive eigenvector of \(\mathcal{A}\) corresponding to \(\tau(\mathcal{A})\), i.e.,

\[\mathcal{A}x^{m-1}=\tau(\mathcal{A})x^{[m-1]}. \tag{4}\]

(I) Let \(x_{q}=\min\{x_{i}:i\in N\}\). For any \(j\in N, j\neq q\), we have by (4) that

and

equivalently,

and

Solving \(x_{q}^{m-1}\) by (5) and (6), we get

From Theorem 2.1 in [9], we have \(\tau(\mathcal{A})\leq\min_{i\in N}a_{i\cdots i}\) and

Hence,

From \(x_{q}>0\), we have

equivalently,

that is,

Solving for \(\tau(\mathcal{A})\) gives

Since j is arbitrary, we have \(\tau(\mathcal{A})\leq\min_{j\neq q}L_{qj}(\mathcal{A})\). Furthermore, we have

\[\tau(\mathcal{A})\leq\max_{i\in N}\min_{j\neq i}L_{ij}(\mathcal{A}).\]

(II) Let \(x_{p}=\max\{x_{i}:i\in N\}\). Similar to (I), we have

\[\tau(\mathcal{A})\geq\min_{i\in N}\max_{j\neq i}L_{ij}(\mathcal{A}).\]

The conclusion follows from (I) and (II). □
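As a worked sketch of the algebra behind the step "Solving for \(\tau(\mathcal{A})\)" in part (I), assume that the preceding inequality has the form below. This form is a reconstruction chosen to be consistent with the bound \(\tau(\mathcal{A})\leq L_{qj}(\mathcal{A})\), not a quotation from the source; \(r_{j}(\mathcal{A})\) denotes the sum of moduli of the off-diagonal entries \(a_{ji_{2}\cdots i_{m}}\) and \(r_{q}^{j}(\mathcal{A})=r_{q}(\mathcal{A})-\vert a_{qj\cdots j}\vert \). Writing \(\tau\) for \(\tau(\mathcal{A})\):

\[(a_{q\cdots q}-\tau)(a_{j\cdots j}-\tau)-(a_{j\cdots j}-\tau)r_{q}^{j}(\mathcal{A})-\vert a_{qj\cdots j}\vert r_{j}(\mathcal{A})\geq0.\]

Expanding in powers of \(\tau\) gives the quadratic inequality

\[\tau^{2}-\bigl(a_{q\cdots q}+a_{j\cdots j}-r_{q}^{j}(\mathcal{A})\bigr)\tau+a_{j\cdots j}\bigl(a_{q\cdots q}-r_{q}^{j}(\mathcal{A})\bigr)-\vert a_{qj\cdots j}\vert r_{j}(\mathcal{A})\geq0,\]

whose discriminant simplifies, using \(a_{qj\cdots j}\leq0\) for an \(\mathcal{M}\)-tensor, to

\[\bigl(a_{q\cdots q}+a_{j\cdots j}-r_{q}^{j}(\mathcal{A})\bigr)^{2}-4a_{j\cdots j}\bigl(a_{q\cdots q}-r_{q}^{j}(\mathcal{A})\bigr)+4\vert a_{qj\cdots j}\vert r_{j}(\mathcal{A})=\bigl(a_{q\cdots q}-a_{j\cdots j}-r_{q}^{j}(\mathcal{A})\bigr)^{2}-4a_{qj\cdots j}r_{j}(\mathcal{A})\geq0.\]

The smaller root of the quadratic is therefore

\[\frac{1}{2}\Bigl\{a_{q\cdots q}+a_{j\cdots j}-r_{q}^{j}(\mathcal{A})-\bigl[\bigl(a_{q\cdots q}-a_{j\cdots j}-r_{q}^{j}(\mathcal{A})\bigr)^{2}-4a_{qj\cdots j}r_{j}(\mathcal{A})\bigr]^{\frac{1}{2}}\Bigr\},\]

which has the shape of \(L_{qj}(\mathcal{A})\). Under this assumption, the constraint \(\tau\leq a_{j\cdots j}\) is what rules out the branch above the larger root, apart from degenerate equality cases, and leaves \(\tau\leq L_{qj}(\mathcal{A})\).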

Similar to the proof of Theorem 3.6 in [2], we can extend the results of Theorem 6 to a more general case.

Theorem 7

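Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in\mathbb{R}^{[m, n]}\) be an \(\mathcal{M}\)-tensor. Then

\[\min_{i\in N}\max_{j\neq i}L_{ij}(\mathcal{A})\leq\tau(\mathcal{A})\leq\max_{i\in N}\min_{j\neq i}L_{ij}(\mathcal{A}).\]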

By Theorems 3, 6 and 7 in [13], the following comparison theorem is obtained easily.

Theorem 8

Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in\mathbb{R}^{[m, n]}\) be an \(\mathcal{M}\)-tensor, S be a nonempty proper subset of N, S̄ be the complement of S in N. Then

where \(R_{i}(\mathcal{A})=\sum_{i_{2},\ldots,i_{m}\in N}a_{ii_{2}\cdots i_{m}}\).

Remark 2

Theorem 8 shows that the bounds in Theorem 7 are sharper than those in Theorem 3, Theorem 2.1 of [9] and Theorem 4 of [13], without any need to select S, which is also the advantage of our results.
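A minimal sketch of how bounds of the min-max form \(\min_{i}\max_{j\neq i}L_{ij}(\mathcal{A})\leq\tau(\mathcal{A})\leq\max_{i}\min_{j\neq i}L_{ij}(\mathcal{A})\) could be evaluated is given below. It assumes \(L_{ij}(\mathcal{A})=\frac{1}{2}\{a_{i\cdots i}+a_{j\cdots j}-r_{i}^{j}(\mathcal{A})-[(a_{i\cdots i}-a_{j\cdots j}-r_{i}^{j}(\mathcal{A}))^{2}-4a_{ij\cdots j}r_{j}(\mathcal{A})]^{1/2}\}\), with \(r_{i}(\mathcal{A})\) the off-diagonal absolute row sum and \(r_{i}^{j}(\mathcal{A})=r_{i}(\mathcal{A})-\vert a_{ij\cdots j}\vert \). The function names and the toy tensor are illustrative and do not reproduce the paper's numerical examples.

```python
import numpy as np

def r(A, i):
    """r_i(A): sum of |a_{i i2...im}| over slice i, excluding the diagonal entry."""
    return np.abs(A[i]).sum() - abs(A[(i,) * A.ndim])

def L(A, i, j):
    """L_ij(A) under the assumed formula; real-valued when a_{ij...j} <= 0."""
    m = A.ndim
    aii, ajj = A[(i,) * m], A[(j,) * m]
    aij = A[(i,) + (j,) * (m - 1)]
    rij = r(A, i) - abs(aij)                       # r_i^j(A)
    disc = (aii - ajj - rij) ** 2 - 4.0 * aij * r(A, j)
    return 0.5 * (aii + ajj - rij - np.sqrt(disc))

def tau_bounds(A):
    """Return (lower, upper) = (min_i max_{j!=i} L_ij, max_i min_{j!=i} L_ij)."""
    n = A.shape[0]
    rows = [[L(A, i, j) for j in range(n) if j != i] for i in range(n)]
    lower = min(max(row) for row in rows)
    upper = max(min(row) for row in rows)
    return lower, upper

# Toy M-tensor (an assumption, not from the paper): A = 5*I - B with B all ones,
# order 3 and dimension 2, for which tau(A) = 5 - rho(B) = 1.
A = -np.ones((2, 2, 2))
for i in range(2):
    A[i, i, i] += 5.0
lower, upper = tau_bounds(A)
print(lower, upper)
```

In this toy case both bounds coincide with the exact value of \(\tau(\mathcal{A})\); for general \(\mathcal{M}\)-tensors the two bounds will typically differ.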

3 Numerical examples

In this section, two numerical examples are given to verify the theoretical results.

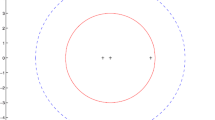

Example 1

Let \(\mathcal{A}=(a_{ijk})\in\mathbb{R}^{[3, 4]}\) be an irreducible \(\mathcal{M}\)-tensor with elements defined as follows:

By Theorem 2.1 in [9], we have

By Theorem 4 in [13], we have

By Theorem 3, we have

By Theorem 7, we have

In fact, \(\tau(\mathcal{A})=14.4049\). Hence, this example verifies Theorem 8 and Remark 2, that is, the bounds in Theorem 7 are sharper than those in Theorem 3, Theorem 2.1 of [9] and Theorem 4 of [13] without considering the selection of S.

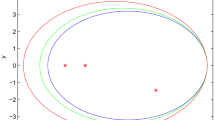

Example 2

Let \(\mathcal{A}=(a_{ijkl})\in\mathbb{R}^{[4, 2]}\) be an \(\mathcal {M}\)-tensor with elements defined as follows:

other \(a_{ijkl}=0\). By Theorem 7, we have

In fact, \(\tau(\mathcal{A})=4\).

4 Conclusions

In this paper, we give a new eigenvalue inclusion set for tensors and prove that this set is tighter than those in [1, 2]. As an application, we obtain new lower and upper bounds for the minimum eigenvalue of \(\mathcal{M}\)-tensors and prove that the new bounds are sharper than those in [2, 9, 13]. Compared with the results in [2], the advantage of our results is that, without considering the selection of S, we can obtain a tighter eigenvalue localization set for tensors and sharper bounds for the minimum eigenvalue of \(\mathcal{M}\)-tensors.

References

[1] Li, CQ, Chen, Z, Li, YT: A new eigenvalue inclusion set for tensors and its applications. Linear Algebra Appl. 481, 36-53 (2015)

[2] Huang, ZG, Wang, LG, Xu, Z, Cui, JJ: A new S-type eigenvalue inclusion set for tensors and its applications. J. Inequal. Appl. 2016, 254 (2016)

[3] Chang, KQ, Zhang, T, Pearson, K: Perron-Frobenius theorem for nonnegative tensors. Commun. Math. Sci. 6, 507-520 (2008)

[4] Qi, LQ: Eigenvalues of a real supersymmetric tensor. J. Symb. Comput. 40, 1302-1324 (2005)

[5] Lim, LH: Singular values and eigenvalues of tensors: a variational approach. In: Proceedings of the IEEE International Workshop on Computational Advances in Multi-Sensor Adaptive Processing. CAMSAP, vol. 05, pp. 129-132 (2005)

[6] Yang, YN, Yang, QZ: Further results for Perron-Frobenius theorem for nonnegative tensors. SIAM J. Matrix Anal. Appl. 31, 2517-2530 (2010)

[7] Ding, WY, Qi, LQ, Wei, YM: \(\mathcal{M}\)-tensors and nonsingular \(\mathcal{M}\)-tensors. Linear Algebra Appl. 439, 3264-3278 (2013)

[8] Zhang, LP, Qi, LQ, Zhou, GL: \(\mathcal{M}\)-tensors and some applications. SIAM J. Matrix Anal. Appl. 35, 437-452 (2014)

[9] He, J, Huang, TZ: Inequalities for \(\mathcal{M}\)-tensors. J. Inequal. Appl. 2014, 114 (2014)

[10] Li, CQ, Li, YT, Kong, X: New eigenvalue inclusion sets for tensors. Numer. Linear Algebra Appl. 21, 39-50 (2014)

[11] Li, CQ, Li, YT: An eigenvalue localization set for tensor with applications to determine the positive (semi-)definiteness of tensors. Linear Multilinear Algebra 64(4), 587-601 (2016)

[12] Li, CQ, Jiao, AQ, Li, YT: An S-type eigenvalue location set for tensors. Linear Algebra Appl. 493, 469-483 (2016)

[13] Zhao, JX, Sang, CL: Two new lower bounds for the minimum eigenvalue of \(\mathcal{M}\)-tensors. J. Inequal. Appl. 2016, 268 (2016)

[14] He, J: Bounds for the largest eigenvalue of nonnegative tensors. J. Comput. Anal. Appl. 20(7), 1290-1301 (2016)

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Nos. 11361074, 11501141), the Foundation of Guizhou Science and Technology Department (Grant No. [2015]2073) and the Natural Science Programs of Education Department of Guizhou Province (Grant No. [2016]066).


Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to this work. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zhao, J., Sang, C. An eigenvalue localization set for tensors and its applications. J Inequal Appl 2017, 59 (2017). https://doi.org/10.1186/s13660-017-1331-1
