Abstract

In this paper, a new S-type eigenvalue localization set for a tensor is derived by dividing \(N=\{1,2,\ldots,n\}\) into disjoint subsets S and its complement. It is proved that this new set is sharper than those presented by Qi (J. Symb. Comput. 40:1302-1324, 2005), Li et al. (Numer. Linear Algebra Appl. 21:39-50, 2014) and Li et al. (Linear Algebra Appl. 481:36-53, 2015). As applications of the results, new bounds for the spectral radius of nonnegative tensors and the minimum H-eigenvalue of strong M-tensors are established, and we prove that these bounds are tighter than those obtained by Li et al. (Numer. Linear Algebra Appl. 21:39-50, 2014) and He and Huang (J. Inequal. Appl. 2014:114, 2014).


1 Introduction

Eigenvalue problems of higher-order tensors have become an important topic in numerical multilinear algebra, a branch of applied mathematics, and they have a wide range of practical applications, such as best rank-one approximation in data analysis [5], higher-order Markov chains [6], molecular conformation [7], and so forth. In recent years, tensor eigenvalues have attracted the attention of many researchers [1, 3, 4, 8–20].

One practical application of tensor eigenvalues is that the positive (semi-)definiteness of an even-order real symmetric tensor can be identified from its smallest H-eigenvalue; consequently, one can identify the positive (semi-)definiteness of the multivariate homogeneous polynomial determined by this tensor. For details, see [1, 21, 22].

However, as mentioned in [21, 23, 24], it is not easy to compute the smallest H-eigenvalue of a tensor when the order and dimension are very large, so one instead tries to give a set in the complex plane that includes all eigenvalues. Several such sets have been presented [1–3, 21–24]. In particular, if one of these sets for an even-order real symmetric tensor lies in the right half of the complex plane, then we can conclude that the smallest H-eigenvalue is positive and, consequently, that the corresponding tensor is positive definite. Therefore, the main aim of this paper is to study a new eigenvalue inclusion set for tensors, called the new S-type eigenvalue inclusion set, which is sharper than some existing ones.

For a positive integer n, N denotes the set \(N=\{1,2,\ldots,n\}\). The set of all real numbers is denoted by \(\mathbb{R}\), and \(\mathbb{C}\) denotes the set of all complex numbers. We call \(\mathcal {A}=(a_{i_{1}\cdots i_{m}})\) a complex (real) tensor of order m and dimension n, and write \(\mathcal{A}\in\mathbb{C}^{[m,n]}\) (\(\mathcal{A}\in\mathbb{R}^{[m,n]}\)), if \(a_{i_{1}\cdots i_{m}}\in{\mathbb{C}}\) (\(\mathbb{R}\)), where \(i_{j}\in{N}\) for \(j=1,2,\ldots,m\) [23].

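Let \(\mathcal{A}\in\mathbb{R}^{[m,n]}\), and \(x\in{\mathbb{C}}^{n}\). Then

\[\mathcal{A}x^{m-1}:=\Biggl(\sum_{i_{2},\ldots,i_{m}=1}^{n}a_{ii_{2}\cdots i_{m}}x_{i_{2}}\cdots x_{i_{m}}\Biggr)_{1\leq i\leq n},\]

and a pair \((\lambda,x)\in{\mathbb{C}}\times(\mathbb{C}^{n}\setminus\{0\})\) is called an eigenpair of \(\mathcal{A}\) [18] if

\[\mathcal{A}x^{m-1}=\lambda x^{[m-1]},\]

where \(x^{[m-1]}=(x_{1}^{m-1},x_{2}^{m-1},\ldots,x_{n}^{m-1})^{T}\) [25]. Furthermore, we call \((\lambda,x)\) an H-eigenpair if both λ and x are real [1].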
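The eigenpair condition \(\mathcal{A}x^{m-1}=\lambda x^{[m-1]}\) can be checked numerically with a few lines of code. The following is a minimal sketch, not part of the original paper: the sparse dictionary storage, the function names `tensor_apply` and `is_eigenpair`, and the all-ones test tensor are illustrative assumptions.

```python
from itertools import product


def tensor_apply(entries, n, x):
    """Return the vector A x^{m-1}.

    `entries` maps 1-based index tuples (i1, ..., im) to values;
    index tuples that are absent are treated as zero entries.
    """
    y = [0] * n
    for idx, value in entries.items():
        term = value
        for j in idx[1:]:          # multiply by x_{i2} ... x_{im}
            term *= x[j - 1]
        y[idx[0] - 1] += term      # accumulate into component i1
    return y


def is_eigenpair(entries, n, m, lam, x, tol=1e-12):
    """Check A x^{m-1} = lam * x^{[m-1]} componentwise, up to `tol`."""
    y = tensor_apply(entries, n, x)
    return all(abs(y[i] - lam * x[i] ** (m - 1)) <= tol for i in range(n))


# Hypothetical example: order m = 3, dimension n = 2, every entry equal to 1.
# Then (A x^2)_i = (x_1 + x_2)^2, so x = (1, 1) is an eigenvector for lam = 4.
ones = {idx: 1.0 for idx in product((1, 2), repeat=3)}
print(tensor_apply(ones, 2, [1.0, 1.0]))
print(is_eigenpair(ones, 2, 3, 4.0, [1.0, 1.0]))
```

Because the check uses `abs`, the same functions also accept complex λ and x; an H-eigenpair is simply the case in which both are real.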

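A real tensor of order m and dimension n is called the unit tensor [21], denoted by \(\mathcal{I}\), if its entries are \(\delta_{i_{1}\cdots i_{m}}\) for \(i_{1},\ldots, i_{m}\in{N}\), where

\[\delta_{i_{1}\cdots i_{m}}=\begin{cases}1, & \text{if } i_{1}=\cdots=i_{m},\\ 0, & \text{otherwise}.\end{cases}\]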

An m-order n-dimensional tensor \(\mathcal{A}\) is called nonnegative [9, 10, 13, 14, 26] if each entry is nonnegative. We call a tensor \(\mathcal{A}\) a Z-tensor if all of its off-diagonal entries are non-positive, which is equivalent to writing \(\mathcal{A}=s\mathcal {I}-\mathcal{B}\), where \(s>0\) and \(\mathcal{B}\) is a nonnegative tensor (\(\mathcal{B}\geq{0}\)); we denote by \(\mathbb{Z}\) the set of m-order and n-dimensional Z-tensors. A Z-tensor \(\mathcal{A}=s\mathcal{I}-\mathcal{B}\) is an M-tensor if \(s\geq\rho (\mathcal{B})\), and it is a nonsingular (strong) M-tensor if \(s>\rho (\mathcal{B})\) [20, 27].

The tensor \(\mathcal{A}\) is called reducible if there exists a nonempty proper index subset \(\mathbb{J}\subset N\) such that \(a_{i_{1}i_{2}\cdots i_{m}}=0\), \(\forall i_{1}\in\mathbb{J}\), \(\forall i_{2},\ldots,i_{m}\notin\mathbb{J}\). If \(\mathcal{A}\) is not reducible, then we call \(\mathcal{A}\) irreducible [19]. The spectral radius \(\rho(\mathcal{A})\) [14] of the tensor \(\mathcal {A}\) is defined as

\[\rho(\mathcal{A})=\max\bigl\{|\lambda| : \lambda \text{ is an eigenvalue of } \mathcal{A}\bigr\}.\]

Denote by \(\tau(\mathcal{A})\) the minimum value of the real part of all eigenvalues of the nonsingular M-tensor \(\mathcal{A}\) [4]. A real tensor \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\) is called symmetric [1–3, 13, 22, 23] if

\[a_{i_{1}\cdots i_{m}}=a_{\pi(i_{1}\cdots i_{m})},\quad \forall\pi\in\Pi_{m},\]

where \(\Pi_{m}\) is the permutation group of m indices.

Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in{\mathbb{R}}^{[m,n]}\). For \(i,j\in{N}\), \(j\neq{i}\), denote

Recently, many papers have focused on bounds for the spectral radius of nonnegative tensors [2, 3, 14, 15, 17–19, 24, 28]. In addition, in [4], He and Huang obtained upper and lower bounds for the minimum H-eigenvalue of nonsingular M-tensors. Wang and Wei [16] presented some new bounds for the minimum H-eigenvalue of nonsingular M-tensors, and they showed that these are better than the ones in [4] in some cases. As applications of the new S-type eigenvalue inclusion set, the other main result of this paper is to provide sharper bounds for the spectral radius of nonnegative tensors and the minimum H-eigenvalue of nonsingular M-tensors, which improve some existing ones.

Before presenting our results, we review the existing results that relate to the eigenvalue inclusion sets for tensors. In 2005, Qi [1] generalized the Geršgorin eigenvalue inclusion theorem from matrices to real supersymmetric tensors, which can be easily extended to general tensors [2, 13].

Lemma 1.1

[1]

Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in{\mathbb{C}}^{[m,n]}\), \(n\geq {2}\). Then

where \(\sigma(\mathcal{A})\) is the set of all the eigenvalues of \(\mathcal{A}\) and

To get sharper eigenvalue inclusion sets than \(\Gamma(\mathcal{A})\), Li et al. [2] extended the Brauer eigenvalue localization set of matrices [29, 30] and proposed the following Brauer-type eigenvalue localization sets for tensors.

Lemma 1.2

[2]

Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in{\mathbb{C}}^{[m,n]}\), \(n\geq {2}\). Then

where

In addition, in order to reduce computations of determining the sets \(\sigma(\mathcal{A})\), Li et al. [2] also presented the following S-type eigenvalue localization set by breaking N into disjoint subsets S and S̄, where S̄ is the complement of S in N.

Lemma 1.3

[2]

Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in{\mathbb{C}}^{[m,n]}\), \(n\geq {2}\), and S be a nonempty proper subset of N. Then

where \(\mathcal{K}_{i,j}(\mathcal{A})\) (\(i\in{S}\), \(j\in{\bar{S}}\) or \(i\in{\bar{S}}\), \(j\in{{S}}\)) is defined as in Lemma 1.2.

Based on the results of [2], in the sequel, Li et al. [3] exhibited a new tensor eigenvalue inclusion set, which is proved to be tighter than the sets in Lemma 1.2.

Lemma 1.4

[3]

Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in{\mathbb{C}}^{[m,n]}\), \(n\geq {2}\), and S be a nonempty proper subset of N. Then

where

In this paper, we continue this research on the eigenvalue inclusion sets for tensors; inspired by the ideas of [2, 3], we obtain a new S-type eigenvalue inclusion set for tensors. It is proved to be tighter than the tensor Geršgorin eigenvalue inclusion set \(\Gamma(\mathcal{A})\) in Lemma 1.1, the Brauer eigenvalue localization set \(\mathcal{K}(\mathcal{A})\) in Lemma 1.2, the S-type eigenvalue localization set \(\mathcal{K}^{S}(\mathcal{A})\) in Lemma 1.3, and the set \(\Delta(\mathcal{A})\) in Lemma 1.4. As applications, we establish some new bounds for spectral radius of nonnegative tensors and the minimum H-eigenvalue of strong M-tensors. Numerical examples are implemented to illustrate this fact.

The remainder of this paper is organized as follows. In Section 2, we recollect some useful lemmas on tensors which are utilized in the next sections. In Section 3.1, a new S-type eigenvalue inclusion set for tensors is given, and proved to be tighter than the existing ones derived in Lemmas 1.1-1.4. Based on the results of Section 3.1, we propose a new upper bound for the spectral radius of nonnegative tensors in Section 3.2; comparison results for this new bound and that derived in [2] are also investigated in this section. Section 3.3 is devoted to the exhibition of new upper and lower bounds for the minimum H-eigenvalue of strong M-tensors, which are proved to be sharper than the ones obtained by He and Huang [4]. Finally, some concluding remarks are given to end this paper in Section 4.

2 Preliminaries

In this section, we start with some lemmas on tensors. They will be useful in the following proofs.

Lemma 2.1

[16]

If \(\mathcal{A}\in{\mathbb{R}}^{[m,n]}\) is irreducible nonnegative, then \(\rho(\mathcal{A})\) is a positive eigenvalue with an entrywise positive eigenvector x, i.e., \(x>0\), corresponding to it.

Lemma 2.2

[2]

Let \(\mathcal{A}\in{\mathbb{R}}^{[m,n]}\) be a nonnegative tensor. Then \(\rho(\mathcal{A})\geq\max_{i\in{N}}\{a_{i\cdots i}\}\).

Lemma 2.3

[13]

Suppose that \(0\leq\mathcal{A}<\mathcal{C}\). Then \(\rho(\mathcal{A})\leq \rho(\mathcal{C})\).

Lemma 2.4

[4]

Let \(\mathcal{A}\) be a strong M-tensor and denote by \(\tau(\mathcal {A})\) the minimum value of the real part of all eigenvalues of \(\mathcal {A}\). Then \(\tau(\mathcal{A})\) is an eigenvalue of \(\mathcal{A}\) with a nonnegative eigenvector. Moreover, if \(\mathcal{A}\) is irreducible, then \(\tau(\mathcal{A})\) is a unique eigenvalue with a positive eigenvector.

Lemma 2.5

[4]

Let \(\mathcal{A}\) be an irreducible strong M-tensor. Then \(\tau(\mathcal {A})\leq\min_{i\in{N}}\{a_{i\cdots i}\}\).

Lemma 2.6

[20]

A tensor \(\mathcal{A}\) is semi-positive if and only if there exists \(x\geq{0}\) such that \(\mathcal{A}x^{m-1}>0\).

Lemma 2.7

[20]

A Z-tensor is a nonsingular M-tensor if and only if it is semi-positive.
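Taken together, Lemmas 2.6 and 2.7 give a simple sufficient certificate: if a Z-tensor \(\mathcal{A}\) satisfies \(\mathcal{A}x^{m-1}>0\) for some explicitly known \(x\geq0\), then it is a nonsingular M-tensor. The sketch below checks such a certificate for a given x. The dictionary storage, the function names, and both test tensors are hypothetical illustrations; a `False` result only means that this particular x is not a certificate, not that the tensor fails to be an M-tensor.

```python
from itertools import product


def is_z_tensor(entries):
    """True if every off-diagonal entry is non-positive.

    `entries` maps 1-based index tuples to values; an index tuple is
    diagonal exactly when all of its indices coincide.
    """
    return all(v <= 0 for idx, v in entries.items() if len(set(idx)) > 1)


def certifies_strong_m_tensor(entries, n, x):
    """Check that `entries` is a Z-tensor and A x^{m-1} > 0 for the given x >= 0."""
    if not is_z_tensor(entries) or any(xi < 0 for xi in x):
        return False
    y = [0.0] * n
    for idx, v in entries.items():
        term = v
        for j in idx[1:]:
            term *= x[j - 1]
        y[idx[0] - 1] += term
    return all(yi > 0 for yi in y)


def make_tensor(diagonal, off_diagonal, n=2, m=3):
    """Hypothetical test tensor with constant diagonal and off-diagonal entries."""
    return {
        idx: (diagonal if len(set(idx)) == 1 else off_diagonal)
        for idx in product(range(1, n + 1), repeat=m)
    }


strong = make_tensor(3.0, -0.5)   # each row: 3 - 3 * 0.5 = 1.5 > 0 for x = (1, 1)
weak = make_tensor(1.0, -0.5)     # each row: 1 - 3 * 0.5 = -0.5, so x = (1, 1) fails
print(certifies_strong_m_tensor(strong, 2, [1.0, 1.0]))
print(certifies_strong_m_tensor(weak, 2, [1.0, 1.0]))
```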

Lemma 2.8

[4]

Let \(\mathcal{A},\mathcal{B}\in{\mathbb{Z}}\), assume that \(\mathcal{A}\) is an M-tensor and \(\mathcal{B}\geq{\mathcal{A}}\). Then \(\mathcal{B}\) is an M-tensor, and \(\tau(\mathcal{A})\leq\tau(\mathcal{B})\).

3 Main results

3.1 A new S-type eigenvalue inclusion set for tensors

In this section, we propose a new S-type eigenvalue inclusion set for tensors and compare this new set with those in Lemmas 1.1-1.4.

Theorem 3.1

Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in{\mathbb{C}}^{[m,n]}\) with \(n\geq{2}\). And let S be a nonempty proper subset of N. Then

where

Proof

For any \(\lambda\in\sigma(\mathcal{A})\), let \(x=(x_{1},\ldots,x_{n})^{T}\in{\mathbb{C}}^{n}\setminus\{0\}\) be an eigenvector corresponding to λ, i.e.,

\[\mathcal{A}x^{m-1}=\lambda x^{[m-1]}. \tag{2}\]

Let \(|x_{p}|=\max_{i\in{S}}\{|x_{i}|\}\) and \(|x_{q}|=\max_{i\in{\bar {S}}}\{|x_{i}|\}\). Then, \(x_{p}\neq{0}\) or \(x_{q}\neq{0}\). Now, let us distinguish two cases to prove.

(i) \(|x_{p}|\geq|x_{q}|\), so \(|x_{p}|=\max_{i\in{N}}\{|x_{i}|\}\) and \(|x_{p}|>0\). For any \(j\in{\bar{S}}\), it follows from (2) that

Hence, we have

i.e.,

Premultiplying by \((\lambda-a_{j\cdots{j}})\) in the first equation of (3) results in

Combining (4) and the second equation of (3) one derives

Taking absolute values and using the triangle inequality yield

Note that \(|x_{p}|>0\), thus

which implies that \(\lambda\in\Upsilon_{p}^{j}(\mathcal{A})\subseteq \bigcup_{i\in{S},j\in{\bar{S}}}\Upsilon_{i}^{j}(\mathcal{A})\subseteq \Upsilon^{S}(\mathcal{A})\).

(ii) \(|x_{p}|\leq|x_{q}|\), so \(|x_{q}|=\max_{i\in{N}}\{|x_{i}|\}\) and \(|x_{q}|>0\). For any \(i\in{S}\), it follows from (2) that

Using the same method as the proof in (i), we deduce that

Taking the modulus in the above equation and using the triangle inequality we obtain

Note that \(|x_{q}|>0\), thus

This means that \(\lambda\in\Upsilon_{q}^{i}(\mathcal{A})\subseteq \bigcup_{i\in{\bar{S}},j\in{S}}\Upsilon_{i}^{j}(\mathcal{A})\subseteq \Upsilon^{S}(\mathcal{A})\). This completes our proof of Theorem 3.1. □

Remark 3.1

Note that \(|S|< n\), where \(|S|\) is the cardinality of S. If \(n=2\), then \(|S|=1\) and \(n(n-1)=2|S|(n-|S|)=2\), which implies that

Besides, if \(n\geq{3}\), then \(2|S|(n-|S|)< n(n-1)\), so \(\Upsilon ^{S}(\mathcal{A})\subset\Delta(\mathcal{A})\) whenever \(\Delta _{i_{1}}^{j_{1}}(\mathcal{A})\cap\Delta_{i_{2}}^{j_{2}}(\mathcal {A})=\varnothing\) for any \(i_{1},i_{2},j_{1},j_{2}\in{N}\), \(i_{1}\neq {i_{2}}\) or \(j_{1}\neq{j_{2}}\). Furthermore, how to choose S to make \(\Upsilon^{S}(\mathcal{A})\) as sharp as possible is an interesting and important question. However, it is difficult, especially when the dimension of the tensor \(\mathcal{A}\) is large, and we leave it for future study.
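The counting argument in Remark 3.1 compares the \(n(n-1)\) index pairs with \(j\neq i\) against the \(2|S|(n-|S|)\) pairs that have one index in S and the other in \(\bar{S}\). The short sketch below tabulates both counts; the function name `pair_counts` is an illustrative choice, not notation from the paper.

```python
def pair_counts(n, s):
    """Return (n(n-1), 2s(n-s)) for a subset S of N with |S| = s.

    The first number counts all ordered pairs (i, j) with j != i; the
    second counts ordered pairs with one index in S and the other in
    its complement.
    """
    if not 0 < s < n:
        raise ValueError("S must be a nonempty proper subset of N")
    return n * (n - 1), 2 * s * (n - s)


for n in (2, 3, 5, 10):
    for s in range(1, n):
        all_pairs, s_type_pairs = pair_counts(n, s)
        print(f"n={n:2d} |S|={s:2d}  n(n-1)={all_pairs:3d}  2|S|(n-|S|)={s_type_pairs:3d}")
```

For \(n=2\) the two counts coincide, while for \(n\geq3\) the S-type count is strictly smaller for every admissible \(|S|\). The balanced choice \(|S|\approx n/2\) gives the largest number of S-type pairs, and \(|S|=1\) or \(|S|=n-1\) the smallest.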

Next, we establish a comparison theorem for the new S-type eigenvalue inclusion set derived in this paper and those in Lemmas 1.1-1.4.

Theorem 3.2

Let \(\mathcal{A}=(a_{i_{1}\cdots i_{m}})\in{\mathbb{C}}^{[m,n]}\) with \(n\geq{2}\). Then

Proof

According to Remark 3.1, it is obvious that \(\Upsilon ^{S}(\mathcal{A})\subseteq\Delta(\mathcal{A})\). By Theorem 2.3 in [2], we know that \(\mathcal{K}^{S}(\mathcal{A})\subseteq\mathcal {K}(\mathcal{A})\subseteq\Gamma(\mathcal{A})\). Hence, we only need to prove \(\Upsilon^{S}(\mathcal{A})\subseteq\mathcal{K}^{S}(\mathcal{A})\). Let \(z\in{\Upsilon^{S}(\mathcal{A})}\), then

Without loss of generality, we assume that \(z\in\bigcup_{i\in{S},j\in {\bar{S}}}\Upsilon_{i}^{j}(\mathcal{A})\) (we can prove it similarly if \(z\in\bigcup_{i\in{\bar{S}},j\in{S}}\Upsilon_{i}^{j}(\mathcal{A})\)). Then there exist \(p\in{S}\) and \(q\in{\bar{S}}\) such that \(z\in\Upsilon _{p}^{q}(\mathcal{A})\), that is,

Inasmuch as

z satisfies

which yields

This means that

which implies that

This completes the proof. □
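For orientation, the inclusions invoked in the proof above can be collected in a single display. This is a summary assembled from the statements in the proof (Remark 3.1, Theorem 2.3 of [2], and the inclusion \(\Upsilon^{S}(\mathcal{A})\subseteq\mathcal{K}^{S}(\mathcal{A})\) established there); it is not a verbatim restatement of Theorem 3.2.

```latex
\[
\Upsilon^{S}(\mathcal{A}) \subseteq \Delta(\mathcal{A}),
\qquad
\Upsilon^{S}(\mathcal{A}) \subseteq \mathcal{K}^{S}(\mathcal{A})
\subseteq \mathcal{K}(\mathcal{A}) \subseteq \Gamma(\mathcal{A}).
\]
```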

3.2 A new upper bound for the spectral radius of nonnegative tensors

Based on the results of Section 3.1, we discuss the spectral radius of nonnegative tensors, and we give their upper bounds, which are better than those of Theorem 3.4 in [2].

Theorem 3.3

Let \(\mathcal{A}\in{\mathbb{R}}^{[m,n]}\) be an irreducible nonnegative tensor with \(n\geq{2}\). And let S be a nonempty proper subset of N. Then

where

with

Proof

Since \(\mathcal{A}\) is an irreducible nonnegative tensor, by Lemma 2.1, there exists \(x=(x_{1},\ldots,x_{n})^{T}>{0}\) such that

\[\mathcal{A}x^{m-1}=\rho(\mathcal{A})x^{[m-1]}. \tag{9}\]

Let \(x_{p}=\max_{i\in{S}}\{x_{i}\}\) and \(x_{q}=\max_{i\in{\bar{S}}}\{ x_{i}\}\). Below we distinguish two cases to prove.

(i) \(x_{p}\geq x_{q}>0\), so \(x_{p}=\max_{i\in{N}}\{x_{i}\}\). For any \(j\in{\bar{S}}\), it follows from (9) that

Hence, we have

Premultiplying by \((\rho(\mathcal{A})-a_{j\cdots{j}})\) in the first equation of (10) results in

It follows from (11) and the second equation of (10) that

Note that \(x_{p}\geq x_{j}\) for any \(j\in{\bar{S}}\) and by Lemma 2.2, we deduce that

i.e.,

Solving the quadratic inequality (12) yields

It is not difficult to verify that (13) can be true for any \(j\in{\bar {S}}\). Thus

which implies that

(ii) \(x_{q}\geq x_{p}>0\), so \(x_{q}=\max_{i\in{N}}\{x_{i}\}\). For any \(i\in{S}\), it follows from (9) that

So we obtain

In a similar manner to the proof of (i)

i.e.,

which yields

It is easy to see that (17) can be true for any \(j\in{S}\). Thus

which implies that

This completes the proof of the theorem. □

Next, we extend the results of Theorem 3.3 to general nonnegative tensors, dropping the irreducibility condition required there.

Theorem 3.4

Let \(\mathcal{A}\in{\mathbb{R}}^{[m,n]}\) be a nonnegative tensor with \(n\geq{2}\). And let S be a nonempty proper subset of N. Then

where

with

Proof

Let \(\mathcal{A}_{k}=\mathcal{A}+\frac{1}{k}\varepsilon \), where \(k=1,2,\ldots\) , and ε denotes the tensor with every entry being 1. Then \(\mathcal{A}_{k}\) is a sequence of positive tensors satisfying

By Lemma 2.3, \(\{\rho(\mathcal{A}_{k})\}\) is a monotone decreasing sequence with lower bound \(\rho(\mathcal{A})\). So \(\rho(\mathcal {A}_{k})\) has a limit. Let

By Lemma 2.1, we see that \(\rho(\mathcal{A}_{k})\) is the eigenvalue of \(\mathcal{A}_{k}\) with a positive eigenvector \(y_{k}\), i.e., \(\mathcal{A}_{k}y_{k}^{m-1}=\rho(\mathcal {A}_{k})y_{k}^{[m-1]}\). In a manner similar to Theorem 2.3 in [13], we have

And we denote \(\Psi_{i,j}(\mathcal{A})=\frac{1}{2}\{a_{i\cdots i}+a_{j\cdots j}+r_{i}^{j} (\mathcal{A})+\Phi_{i,j}^{\frac {1}{2}}(\mathcal{A})\}\) (\(i\in{S}\), \(j\in{\bar{S}}\) or \(i\in{\bar{S}}\), \(j\in {{S}}\)). Then

where

Since m and n are finite, by the properties of the sequence it is easy to see that

Furthermore, since \(\mathcal{A}_{k}\) is an irreducible nonnegative tensor, it follows from Theorem 3.3 that

Letting \(k\rightarrow+\infty\) results in

from which one may get the desired bound (19). □

Remark 3.2

Now, we compare the upper bound in Theorem 3.4 with that in Theorem 3.4 in [2]. It is not difficult to see that

and

This shows that the upper bound in Theorem 3.4 improves the corresponding one in Theorem 3.4 of [2].

We have shown that our bound is sharper than the existing one in [2]. Now we take an example to show the efficiency of the new upper bound established in this paper.

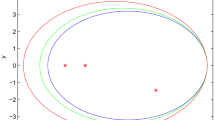

Example 3.1

Let \(\mathcal{A}=(a_{ijk})\in{\mathbb{R}}^{[3,3]}\) be nonnegative with entries defined as follows: \(a_{111}=a_{122}=a_{222}=a_{233}=a_{312}=a_{322}=a_{333}=1\), \(a_{123}=a_{133}=a_{211}=2\), \(a_{213}=3\), \(a_{311}=20\), and the other \(a_{ijk}=0\). It is easy to compute

We choose \(S=\{1,2\}\). Evidently, \(\bar{S}=\{3\}\). By Theorem 3.4 of [2], we have

By Theorem 3.4, we obtain

which means that the upper bound in Theorem 3.4 is much better than that in Theorem 3.4 of [2].
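The tensor of Example 3.1 is small enough to examine numerically. The sketch below is an illustration and not the computation used in the paper. It assumes the usual off-diagonal absolute row sum \(r_{i}(\mathcal{A})=\sum_{(i_{2},\ldots,i_{m})\neq(i,\ldots,i)}|a_{ii_{2}\cdots i_{m}}|\), and it brackets \(\rho(\mathcal{A})\) with a power-type iteration in the spirit of the method of Ng, Qi and Zhou [6]: for any positive vector x, the smallest and largest ratios \((\mathcal{A}x^{m-1})_{i}/x_{i}^{m-1}\) enclose the spectral radius of a nonnegative tensor. All function names are hypothetical.

```python
def example_tensor():
    """Nonzero entries of the tensor in Example 3.1, keyed by 1-based (i, j, k)."""
    a = {}
    for idx in [(1, 1, 1), (1, 2, 2), (2, 2, 2), (2, 3, 3),
                (3, 1, 2), (3, 2, 2), (3, 3, 3)]:
        a[idx] = 1.0
    for idx in [(1, 2, 3), (1, 3, 3), (2, 1, 1)]:
        a[idx] = 2.0
    a[(2, 1, 3)] = 3.0
    a[(3, 1, 1)] = 20.0
    return a


def tensor_apply(entries, n, x):
    """Return the vector A x^{m-1} for a sparse dict of entries."""
    y = [0.0] * n
    for idx, v in entries.items():
        term = v
        for j in idx[1:]:
            term *= x[j - 1]
        y[idx[0] - 1] += term
    return y


def off_diagonal_row_sums(entries, n):
    """Assumed definition of r_i: sum of |a| over off-diagonal entries of row i."""
    r = [0.0] * n
    for idx, v in entries.items():
        if len(set(idx)) > 1:
            r[idx[0] - 1] += abs(v)
    return r


def nqz_bracket(entries, n, m, iterations=50):
    """Return (lower, upper) ratio bounds on rho(A) after a power-type iteration."""
    x = [1.0] * n
    lower, upper = 0.0, float("inf")
    for _ in range(iterations):
        y = tensor_apply(entries, n, x)
        ratios = [y[i] / x[i] ** (m - 1) for i in range(n)]
        lower, upper = min(ratios), max(ratios)
        x = [yi ** (1.0 / (m - 1)) for yi in y]   # x <- y^{[1/(m-1)]}
        scale = sum(x)
        x = [xi / scale for xi in x]              # normalize to avoid overflow
    return lower, upper


A = example_tensor()
print("r_i:", off_diagonal_row_sums(A, 3))
print("bracket for rho(A):", nqz_bracket(A, 3, 3))
```

With the starting vector \(x=(1,1,1)^{T}\), the first bracket is just the smallest and largest full row sums, and later iterations can only tighten it. The resulting interval gives a reference value against which the upper bounds quoted in the example can be compared.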

3.3 New upper and lower bounds for the minimum H-eigenvalue of nonsingular M-tensors

In this section, by making use of the results in Section 3.1, we investigate the minimum H-eigenvalue of strong M-tensors and derive sharper bounds for it. These bounds are proved to be tighter than those in Theorem 2.2 of [4].

Theorem 3.5

Let \(\mathcal{A}\in{\mathbb{R}}^{[m,n]}\) be an irreducible nonsingular M-tensor with \(n\geq{2}\). And let S be a nonempty proper subset of N. Then

where

and

with

Proof

Since \(\mathcal{A}\) is an irreducible nonsingular M-tensor, by Lemma 2.4, there exists \(x=(x_{1},\ldots,x_{n})^{T}>{0}\) such that

\[\mathcal{A}x^{m-1}=\tau(\mathcal{A})x^{[m-1]}. \tag{23}\]

Let \(x_{p}=\max_{i\in{S}}\{x_{i}\}\) and \(x_{q}=\max_{i\in{\bar{S}}}\{ x_{i}\}\). We distinguish two cases to prove.

(i) \(x_{p}\geq x_{q}>0\), so \(x_{p}=\max_{i\in{N}}\{x_{i}\}\). For any \(j\in{\bar{S}}\), it follows from (23) that

Hence, we have

Premultiplying by \((a_{j\cdots{j}}-\tau(\mathcal{A}))\) in the first equation of (24) results in

It follows from (25) and the second equation of (24) that

Combining \(x_{p}\geq x_{j}\) for any \(j\in{\bar{S}}\) with Lemma 2.5 results in

i.e.,

Solving the quadratic inequality (26) yields

It is not difficult to verify that (27) can be true for any \(j\in{\bar {S}}\). Thus

and therefore

(ii) \(x_{q}\geq x_{p}>0\), so \(x_{q}=\max_{i\in{N}}\{x_{i}\}\). For any \(i\in{S}\), it follows from (23) that

So we obtain

Using the same technique as the proof of (i), we have

which is equivalent to

which results in

It is not difficult to verify that (31) can be true for any \(j\in{S}\). Thus

which implies that

Let \(x_{k}=\min_{i\in{S}}\{x_{i}\}\) and \(x_{l}=\min_{i\in{\bar{S}}}\{ x_{i}\}\). With a strategy quite similar to the one utilized in the above proof, we can prove that

which implies this theorem. □

Remark 3.3

We next prove the bounds in Theorem 3.5 are sharper than those of Theorem 2.2 in [4]; it is easy to see that

and

which implies that

In the same manner as applied in the above proof, we can deduce the following results:

where \(\theta^{S}(\mathcal{A})=\frac{1}{2}\max_{i\in{S},j\in {\bar{S}}}\{a_{i\cdots i}+a_{j\cdots j}-r_{i}^{j}(\mathcal{A})-\Theta _{i,j}^{\frac{1}{2}}(\mathcal{A})\}\). Therefore, the conclusions follow from the above discussions.

Example 3.2

Consider the following irreducible nonsingular M-tensor:

where

We compare the results derived in Theorem 3.5 with those in Theorem 2.1 of [4] and Theorem 4.5 of [16] in the correct forms. Let \(S=\{ 1,2\}\), then \(\bar{S}=\{3\}\). By Theorem 2.1 of [4], we have

By Theorem 4.5 of [16], we get

By Theorem 3.5, we obtain

This shows that the upper and lower bounds in Theorem 3.5 are sharper than those in Theorem 2.1 of [4] and Theorem 4.5 of [16].

In the sequel, we extend the results of Theorem 3.5 to a more general case, which needs a weaker condition compared with Theorem 3.5.

Theorem 3.6

Let \(\mathcal{A}\in{\mathbb{R}}^{[m,n]}\) be a nonsingular M-tensor with \(n\geq{2}\). And let S be a nonempty proper subset of N. Then

where

and

with

Proof

Since \(\mathcal{A}\) is a nonsingular M-tensor, \(\mathcal{A}\in{\mathbb {Z}}\), by Lemma 2.7 and Lemma 2.6, there exists \(x=(x_{1},\ldots ,x_{n})^{T}\geq{0}\) such that \(\mathcal{A}x^{m-1}>0\), that is, for any \(i_{1}\in{N}\),

Let

So \(x_{\mathrm{max}}>0\) by \(x\geq{0}\). By replacing the zero entries of \(\mathcal {A}\) with \(-\frac{1}{k}\), where k is a positive integer, we see that the Z-tensor \(\mathcal{A}_{k}\) is irreducible. Here, we use \(a_{i_{1}\cdots i_{m}}(-\frac{1}{k})\) to denote the entries of \(\mathcal {A}_{k}\). We choose \(k>[\frac{(n^{m-1}-1)x_{\mathrm{max}}^{m-1}}{g}]+1\), then, for any \(i_{1}\in{N}\), we have

which implies that \(\mathcal{A}_{k}x^{m-1}>0\) and, by Lemma 2.6 and Lemma 2.7, we infer that \(\mathcal{A}_{k}\) is an irreducible nonsingular M-tensor if \(k>[\frac{(n^{m-1}-1)x_{\mathrm{max}}^{m-1}}{g}]+1\). It follows from the above discussions that \(\mathcal{A}_{k}\) (\(k>[\frac {(n^{m-1}-1)x_{\mathrm{max}}^{m-1}}{g}]+1\)) is a sequence of irreducible nonsingular M-tensors satisfying

By Lemma 2.8, \(\{\tau(\mathcal{A}_{k})\}\) is a monotone increasing sequence with upper bound \(\tau(\mathcal{A})\) so that \(\tau(\mathcal {A}_{k})\) has a limit. Let

By Lemma 2.4, we see that \(\tau(\mathcal{A}_{k})\) is the eigenvalue of \(\mathcal{A}_{k}\) with a positive eigenvector \(y_{k}\), i.e., \(\mathcal{A}_{k}y_{k}^{m-1}=\tau(\mathcal {A}_{k})y_{k}^{[m-1]}\). Since both sides of this equation are homogeneous polynomials in \(y_{k}\), we can normalize \(y_{k}\) so that \(\|y_{k}\|=1\). Then \(\{y_{k}\} \) is a bounded sequence, so it has a convergent subsequence. Without loss of generality, we can suppose it is the sequence itself. Let \(y_{k}\rightarrow{y}\) as \(k\rightarrow+\infty\); we get \(y\geq{0}\) and \(\|y\|=1\). By \(\mathcal{A}_{k}y_{k}^{m-1}=\tau(\mathcal {A}_{k})y_{k}^{[m-1]}\) and letting \(k\rightarrow+\infty\), we have \(\mathcal{A}y^{m-1}=\lambda y^{[m-1]}\). Thus λ is an eigenvalue of \(\mathcal{A}\), and hence \(\lambda\geq\tau(\mathcal{A})\). Together with (34) this results in \(\lambda=\tau(\mathcal{A})\), which means that

Besides, for \(i\in{S}\), \(j\in{\bar{S}}\) (for \(i\in{\bar{S}}\), \(j\in{S}\), we can define \(M_{i}^{j}\) and \(M_{j}\) similarly), we define the following sets:

Let the numbers of entries in \(M_{i}^{j}\) and \(M_{j}\) be \(n_{i}^{j}\) and \(n_{j}\), respectively, and we denote \(\Lambda_{i,j}(\mathcal {A})=\frac{1}{2}\{a_{i\cdots i}+a_{j\cdots j}-r_{i}^{j}(\mathcal {A})-\Theta_{i,j}^{\frac{1}{2}}(\mathcal{A})\}\). Then

where

with

By the properties of the sequence, it is not difficult to verify that

Furthermore, since \(\mathcal{A}_{k}\) is an irreducible nonsingular M-tensor for \(k>[\frac{(n^{m-1}-1)x_{\mathrm{max}}^{m-1}}{g}]+1\), by Theorem 3.5, we have

Letting \(k\rightarrow+\infty\) results in

This completes our proof of Theorem 3.6. □

4 Concluding remarks

In this paper, a new S-type eigenvalue inclusion set for tensors is presented, which is proved to be sharper than the ones in [2, 3]. As applications, we give new bounds for the spectral radius of nonnegative tensors and the minimum H-eigenvalue of strong M-tensors; these bounds improve some existing ones obtained by Li et al. [2] and He and Huang [4]. In addition, we extend these new bounds to more general cases.

However, the new S-type eigenvalue inclusion set and the derived bounds depend on the set S. How to choose S to make \(\Upsilon ^{S}(\mathcal{A})\) and the bounds exhibited in this paper as tight as possible is an important and interesting question, but it is very difficult when the dimension of the tensor \(\mathcal{A}\) is large. Therefore, future work will include numerical or theoretical studies for finding the best choice of S.

References

[1] Qi, L-Q: Eigenvalues of a real supersymmetric tensor. J. Symb. Comput. 40(6), 1302-1324 (2005)

[2] Li, C-Q, Li, Y-T, Kong, X: New eigenvalue inclusion sets for tensors. Numer. Linear Algebra Appl. 21, 39-50 (2014)

[3] Li, C-Q, Chen, Z, Li, Y-T: A new eigenvalue inclusion set for tensors and its applications. Linear Algebra Appl. 481, 36-53 (2015)

[4] He, J, Huang, T-Z: Inequalities for M-tensors. J. Inequal. Appl. 2014, 114 (2014)

[5] Zhang, T, Golub, GH: Rank-1 approximation of higher-order tensors. SIAM J. Matrix Anal. Appl. 23, 534-550 (2001)

[6] Ng, M, Qi, L-Q, Zhou, G-L: Finding the largest eigenvalue of a nonnegative tensor. SIAM J. Matrix Anal. Appl. 31, 1090-1099 (2009)

[7] Diamond, R: A note on the rotational superposition problem. Acta Crystallogr. 44, 211-216 (1988)

[8] Qi, L-Q: Symmetric nonnegative tensors and copositive tensors. Linear Algebra Appl. 439, 228-238 (2013)

[9] Qi, L-Q: Eigenvalues and invariants of tensors. J. Math. Anal. Appl. 325(2), 1363-1377 (2007)

[10] Chang, K-C, Pearson, KJ, Zhang, T: On eigenvalue problems of real symmetric tensors. J. Math. Anal. Appl. 350, 416-422 (2009)

[11] Chang, K-C, Pearson, KJ, Zhang, T: Primitivity, the convergence of the NQZ method, and the largest eigenvalue for nonnegative tensors. SIAM J. Matrix Anal. Appl. 32(3), 806-819 (2011)

[12] Chang, K-C, Pearson, KJ, Zhang, T: Some variational principles for Z-eigenvalues of nonnegative tensors. Linear Algebra Appl. 438(11), 4166-4182 (2013)

[13] Yang, Y-N, Yang, Q-Z: Further results for Perron-Frobenius theorem for nonnegative tensors. SIAM J. Matrix Anal. Appl. 31(5), 2517-2530 (2010)

[14] Yang, Y-N, Yang, Q-Z: Further results for Perron-Frobenius theorem for nonnegative tensors II. SIAM J. Matrix Anal. Appl. 32(4), 1236-1250 (2011)

[15] He, J, Huang, T-Z: Upper bound for the largest Z-eigenvalue of positive tensors. Appl. Math. Lett. 38, 110-114 (2014)

[16] Wang, X-Z, Wei, Y-M: Bounds for eigenvalues of nonsingular H-tensor. Electron. J. Linear Algebra 29(1), 3-16 (2015)

[17] Li, L-X, Li, C-Q: New bounds for the spectral radius for nonnegative tensors. J. Inequal. Appl. 2015, 166 (2015)

[18] Li, W, Ng, MK: Some bounds for the spectral radius of nonnegative tensors. Numer. Math. 130, 315-335 (2015)

[19] Li, W, Liu, D-D, Vong, S-W: Z-Eigenpair bounds for an irreducible nonnegative tensor. Linear Algebra Appl. 483, 182-199 (2015)

[20] Ding, W-Y, Qi, L-Q, Wei, Y-M: M-Tensors and nonsingular M-tensors. Linear Algebra Appl. 439(10), 3264-3278 (2013)

[21] Li, C-Q, Li, Y-T: An eigenvalue localization set for tensors with applications to determine the positive (semi-)definiteness of tensors. Linear Multilinear Algebra 64, 587-601 (2016). doi:10.1080/03081087.2015.1049582

[22] Li, C-Q, Wang, F, Zhao, J-X, Zhu, Y, Li, Y-T: Criterions for the positive definiteness of real supersymmetric tensors. J. Comput. Appl. Math. 255, 1-14 (2014)

[23] Li, C-Q, Jiao, A-Q, Li, Y-T: An S-type eigenvalue localization set for tensors. Linear Algebra Appl. 493, 469-483 (2016)

[24] Li, C-Q, Zhou, J-J, Li, Y-T: A new Brauer-type eigenvalue localization set for tensors. Linear Multilinear Algebra 64, 727-736 (2016). doi:10.1080/03081087.2015.1119779

[25] Bu, C-J, Wei, Y-P, Sun, L-Z, Zhou, J: Brualdi-type eigenvalue inclusion sets of tensors. Linear Algebra Appl. 480, 168-175 (2015)

[26] Hu, S-L, Huang, Z-H, Qi, L-Q: Strictly nonnegative tensors and nonnegative tensor partition. Sci. China Math. 57(1), 181-195 (2014)

[27] Zhang, L-P, Qi, L-Q, Zhou, G-L: M-Tensors and some applications. SIAM J. Matrix Anal. Appl. 35(2), 437-452 (2014)

[28] Li, C-Q, Wang, Y-Q, Yi, J-Y, Li, Y-T: Bounds for the spectral radius of nonnegative tensors. J. Ind. Manag. Optim. 12(3), 975-990 (2016)

[29] Varga, RS: Geršgorin and His Circles. Springer, Berlin (2004)

[30] Brauer, A: Limits for the characteristic roots of a matrix II. Duke Math. J. 14, 21-26 (1947)

Acknowledgements

This work is supported by the National Natural Science Foundations of China (No. 11171273) and sponsored by Innovation Foundation for Doctor Dissertation of Northwestern Polytechnical University (No. CX201628).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to this work. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Huang, ZG., Wang, LG., Xu, Z. et al. A new S-type eigenvalue inclusion set for tensors and its applications. J Inequal Appl 2016, 254 (2016). https://doi.org/10.1186/s13660-016-1200-3
