Abstract

Conjugate gradient methods play an important role in many fields of application due to their simplicity, low memory requirements, and global convergence properties. In this paper, we propose an efficient three-term conjugate gradient method, derived from the DFP update of the inverse Hessian approximation, that satisfies both the sufficient descent and the conjugacy conditions. The basic idea is that the DFP update is restarted from a multiple of the identity matrix at every iteration. An acceleration scheme is incorporated into the proposed method to enhance the reduction in function value. Numerical results from an implementation of the proposed method on a set of standard unconstrained optimization problems show that the method is promising and exhibits superior numerical performance compared with other well-known conjugate gradient methods.


1 Introduction

In this paper, we are interested in solving large-scale nonlinear unconstrained optimization problems of the form

$$\min_{x\in\Re^{n}} f(x), \qquad (1)$$

where \(f:\Re^{n}\rightarrow\Re\) is at least twice continuously differentiable. A nonlinear conjugate gradient method is an iterative scheme that generates a sequence \(\{x_{k}\}\) of approximations to the solution of (1) using the recurrence

$$x_{k+1}=x_{k}+\alpha_{k}d_{k}, \qquad (2)$$

where \(\alpha_{k}>0\) is the steplength, determined by a line search strategy that either minimizes the function or reduces it sufficiently along the search direction, and \(d_{k}\) is the search direction defined by

$$d_{k}=\begin{cases}-g_{k}, & k=0,\\ -g_{k}+\beta_{k}d_{k-1}, & k\geq1,\end{cases}$$

where \(g_{k}\) is the gradient of f at \(x_{k}\) and \(\beta_{k}\) is a scalar known as the conjugate gradient parameter. For example, Fletcher and Reeves (FR) [1], Polak-Ribière-Polyak (PRP) [2], Liu and Storey (LS) [3], Hestenes and Stiefel (HS) [4], Dai and Yuan (DY) [5], and Fletcher (CD) [6] proposed the update parameters given, respectively, by

$$\beta_{k}^{FR}=\frac{\Vert g_{k}\Vert^{2}}{\Vert g_{k-1}\Vert^{2}},\quad \beta_{k}^{PRP}=\frac{g_{k}^{T}y_{k-1}}{\Vert g_{k-1}\Vert^{2}},\quad \beta_{k}^{LS}=-\frac{g_{k}^{T}y_{k-1}}{g_{k-1}^{T}d_{k-1}},\quad \beta_{k}^{HS}=\frac{g_{k}^{T}y_{k-1}}{d_{k-1}^{T}y_{k-1}},\quad \beta_{k}^{DY}=\frac{\Vert g_{k}\Vert^{2}}{d_{k-1}^{T}y_{k-1}},\quad \beta_{k}^{CD}=-\frac{\Vert g_{k}\Vert^{2}}{g_{k-1}^{T}d_{k-1}},$$

where \(y_{k-1}=g_{k}-g_{k-1}\). If the objective function is a strictly convex quadratic and the line search is exact, these methods are equivalent. For a general nonlinear objective function, different choices of \(\beta_{k}\) lead to different performance in practice. Following the convergence results of Al-Baali [7] and later of Gilbert and Nocedal [8], researchers have focused on developing conjugate gradient methods that possess the sufficient descent property

$$g_{k}^{T}d_{k}\leq-c\Vert g_{k}\Vert^{2}$$

for some constant \(c> 0\). A well-known example is the CG-DESCENT method of Hager and Zhang [9],

where

and

which is based on a modification of the HS method. Another important class is that of the so-called three-term conjugate gradient methods, in which the search direction is determined as a linear combination of \(g_{k}\), \(s_{k}\), and \(y_{k}\) as

where \(\tau_{1}\) and \(\tau_{2}\) are scalars. Among the three-term conjugate gradient methods in the literature are those proposed by Zhang et al. [10, 11], obtained by considering a descent modified PRP and a descent modified HS conjugate gradient method, namely

and

where \(s_{k}=x_{k+1}-x_{k}\). An attractive property of these methods is that, at each iteration, the search direction satisfies the descent condition \(g_{k}^{T} d_{k}= -c\Vert g_{k}\Vert ^{2}\) for some constant \(c> 0\). Similarly, Andrei [12] developed a three-term conjugate gradient method from the BFGS updating scheme of the inverse Hessian approximation, restarted as the identity matrix at every iteration, where the search direction is given by

An interesting feature of this method is that both the sufficient descent and the conjugacy conditions are satisfied, and global convergence holds for uniformly convex functions. Motivated by the good performance of three-term conjugate gradient methods, we develop a three-term conjugate gradient method that satisfies both the sufficient descent condition and the conjugacy condition and is globally convergent. The remainder of this paper is structured as follows. Section 2 derives the proposed method. Section 3 presents its global convergence properties. The numerical results and discussion are reported in Section 4. Finally, concluding remarks are given in the last section.
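As a small illustration of the classical update parameters \(\beta_{k}\) listed above, the sketch below computes all six from \(g_{k}\), \(g_{k-1}\), and \(d_{k-1}\). It is a minimal pure-Python sketch (vectors as lists; the function names are our own, not from the paper). The usage line checks the equivalence mentioned above in the simplest case: after one exact line search from steepest descent (\(d_{0}=-g_{0}\), so \(g_{1}^{T}g_{0}=0\)), all six parameters coincide.

```python
def dot(u, v):
    """Inner product of two equal-length vectors stored as lists."""
    return sum(a * b for a, b in zip(u, v))


def beta_parameters(g_k, g_prev, d_prev):
    """Classical conjugate gradient parameters beta_k (FR, PRP, LS, HS, DY, CD).

    g_k: current gradient, g_prev: previous gradient g_{k-1},
    d_prev: previous search direction d_{k-1}.
    """
    y_prev = [a - b for a, b in zip(g_k, g_prev)]  # y_{k-1} = g_k - g_{k-1}
    gk_sq = dot(g_k, g_k)            # ||g_k||^2
    gprev_sq = dot(g_prev, g_prev)   # ||g_{k-1}||^2
    gy = dot(g_k, y_prev)            # g_k^T y_{k-1}
    dy = dot(d_prev, y_prev)         # d_{k-1}^T y_{k-1}
    gd = dot(g_prev, d_prev)         # g_{k-1}^T d_{k-1}
    return {
        "FR": gk_sq / gprev_sq,
        "PRP": gy / gprev_sq,
        "LS": -gy / gd,
        "HS": gy / dy,
        "DY": gk_sq / dy,
        "CD": -gk_sq / gd,
    }


# d_0 = -g_0 and g_1 orthogonal to g_0 (one exact line search from steepest descent):
print(beta_parameters([0.0, 2.0], [1.0, 0.0], [-1.0, 0.0]))  # every value is 4.0
```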

2 Conjugate gradient method via memoryless quasi-Newton method

In this section, we describe the proposed method, which satisfies both the sufficient descent and the conjugacy conditions. Consider the DFP method, a quasi-Newton method belonging to the Broyden class [13]. The search direction in quasi-Newton methods is given by

$$d_{k}=-H_{k}g_{k},$$

where \(H_{k}\) is the inverse Hessian approximation updated by the Broyden class. This class consists of several updating schemes, the best known being BFGS and DFP; if \(H_{k}\) is updated by the DFP formula, then

$$H_{k+1}=H_{k}-\frac{H_{k}y_{k}y_{k}^{T}H_{k}}{y_{k}^{T}H_{k}y_{k}}+\frac{s_{k}s_{k}^{T}}{s_{k}^{T}y_{k}}, \qquad (7)$$

so that the secant equation

$$H_{k+1}y_{k}=s_{k} \qquad (8)$$

is satisfied. This method, also known as a variable metric method, was developed by Davidon [14] and by Fletcher and Powell [15]. A remarkable property of this method is that, for quadratic functions with exact line searches, it is a conjugate direction method; it is also regarded as one of the best quasi-Newton methods, combining the advantages of the Newton and steepest descent methods while avoiding their shortcomings [16]. Memoryless quasi-Newton methods are another class of techniques for solving (1), in which at every step the inverse Hessian approximation is updated starting from the identity matrix; thus, the search direction can be computed without storing any matrix. This approach was proposed by Shanno [17] and Perry [18]. The classical conjugate gradient methods PRP [2] and FR [1] can be seen as memoryless BFGS methods (see Shanno [17]). We derive our three-term conjugate gradient method by incorporating the DFP updating scheme (7) of the inverse Hessian approximation within the framework of a memoryless quasi-Newton method, where at each iteration the inverse Hessian approximation is restarted as a multiple \(\mu_{k}I\) of the identity matrix with a positive scaling parameter \(\mu_{k}\):

$$Q_{k+1}=\mu_{k}I-\mu_{k}\frac{y_{k}y_{k}^{T}}{y_{k}^{T}y_{k}}+\frac{s_{k}s_{k}^{T}}{s_{k}^{T}y_{k}}, \qquad (9)$$

and thus the search direction is given by

$$d_{k+1}=-Q_{k+1}g_{k+1}. \qquad (10)$$

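To make the scaled memoryless DFP direction concrete, the sketch below takes the matrix obtained by replacing \(H_{k}\) with \(\mu_{k}I\) in the DFP update (7), that is \(Q_{k+1}=\mu_{k}I-\mu_{k}y_{k}y_{k}^{T}/(y_{k}^{T}y_{k})+s_{k}s_{k}^{T}/(s_{k}^{T}y_{k})\), and evaluates \(d_{k+1}=-Q_{k+1}g_{k+1}\) in the expanded matrix-free form \(-\mu_{k}g_{k+1}+\mu_{k}\frac{y_{k}^{T}g_{k+1}}{y_{k}^{T}y_{k}}y_{k}-\frac{s_{k}^{T}g_{k+1}}{s_{k}^{T}y_{k}}s_{k}\). This is a plain-Python sketch; the function names are ours, and \(\mu_{k}\) is simply passed in as some positive number rather than computed by the paper's rule. The dense matrix is built only to check the result.

```python
def dot(u, v):
    """Inner product of two equal-length vectors stored as lists."""
    return sum(a * b for a, b in zip(u, v))


def matvec(A, v):
    """Dense matrix-vector product A v (A is a list of rows)."""
    return [dot(row, v) for row in A]


def scaled_dfp_matrix(s, y, mu):
    """Dense Q = mu*I - mu*y y^T/(y^T y) + s s^T/(s^T y), i.e. the DFP update applied to H = mu*I."""
    n = len(s)
    yy, sy = dot(y, y), dot(s, y)
    return [[(mu if i == j else 0.0) - mu * y[i] * y[j] / yy + s[i] * s[j] / sy
             for j in range(n)] for i in range(n)]


def scaled_dfp_direction(g_new, s, y, mu):
    """Matrix-free d = -Q g: only three vectors and a few inner products are needed."""
    phi_y = mu * dot(y, g_new) / dot(y, y)   # coefficient of y_k
    phi_s = dot(s, g_new) / dot(s, y)        # coefficient of s_k (enters with a minus sign)
    return [-mu * gi + phi_y * yi - phi_s * si for gi, yi, si in zip(g_new, y, s)]


s, y = [1.0, 0.5, -0.2], [2.0, 0.4, 0.1]     # s^T y = 2.18 > 0
g, mu = [0.3, -1.0, 0.7], 0.5
Q = scaled_dfp_matrix(s, y, mu)
d = scaled_dfp_direction(g, s, y, mu)
print(matvec(Q, y))           # secant equation (8): equals s up to rounding
print(dot(y, d), -dot(s, g))  # y^T d equals -s^T g up to rounding
```

The last line shows that \(y_{k}^{T}d_{k+1}=-s_{k}^{T}g_{k+1}\) for any \(\mu_{k}>0\), which is the Dai-Liao conjugacy condition with \(t=1\) discussed in Section 3.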
Various strategies can be considered in deriving the scaling parameter \(\mu_{k}\); we prefer the following which is due to Wolkowicz [19]:

The new search direction is then given by

where

and

We present the algorithm of the proposed method as follows.

2.1 Algorithm (STCG)

It has been reported that, owing to poor scaling of the search direction, the line search in conjugate gradient methods requires many function evaluations to obtain a suitable steplength \(\alpha_{k}\) (see Nocedal [20]). Consequently, we incorporate the acceleration scheme proposed by Andrei [21] to reduce the number of function evaluations. Instead of (2), the new approximation to the minimizer is determined by

$$x_{k+1}=x_{k}+\vartheta_{k}\alpha_{k}d_{k}, \qquad (15)$$

where \(\vartheta_{k}=-\frac{r_{k}}{q_{k}}\), \(r_{k}=\alpha_{k} g_{k}^{T}d_{k}\), \(q_{k}=-\alpha_{k} ( g_{k}-g_{z} )^{T}d_{k}=-\alpha_{k} y_{k}^{T} d_{k} \), \(g_{z}=\nabla f ( z )\), \(z=x_{k}+\alpha_{k} d_{k}\), and here \(y_{k}=g_{k}-g_{z}\).
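The reasoning behind the factor \(\vartheta_{k}\): for a quadratic f we have \(g_{z}-g_{k}=\alpha_{k}\nabla^{2}f\,d_{k}\), so \(q_{k}=\alpha_{k}^{2}d_{k}^{T}\nabla^{2}f\,d_{k}\) and \(\vartheta_{k}=-r_{k}/q_{k}\) is exactly the minimizer of \(f(x_{k}+\vartheta\alpha_{k}d_{k})\) over \(\vartheta\). In general, the extra gradient \(g_{z}\) provides a secant-type estimate of this curvature. A minimal sketch of the update (the function name is ours; as in Step 6 of Algorithm 1, the plain step is kept when \(q_{k}=0\)):

```python
def dot(u, v):
    """Inner product of two equal-length vectors stored as lists."""
    return sum(a * b for a, b in zip(u, v))


def accelerated_point(x, d, alpha, g, g_z):
    """Return x_{k+1} = x_k + theta_k * alpha_k * d_k with theta_k = -r_k / q_k.

    r_k = alpha_k * g_k^T d_k and q_k = -alpha_k * (g_k - g_z)^T d_k, where g_z is the
    gradient at z = x_k + alpha_k d_k. If q_k == 0 the unaccelerated point is returned.
    """
    r = alpha * dot(g, d)
    q = -alpha * dot([a - b for a, b in zip(g, g_z)], d)
    theta = -r / q if q != 0 else 1.0
    return [xi + theta * alpha * di for xi, di in zip(x, d)]


# f(x) = x^2 at x_k = 1 with d_k = -g_k = -2 and a short trial step alpha_k = 0.25:
# z = 0.5, g_z = 1, and the accelerated point lands on the minimizer 0.
print(accelerated_point([1.0], [-2.0], 0.25, [2.0], [1.0]))  # [0.0]
```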

Algorithm 1

- Step 1: Select an initial point \(x_{0}\) and compute \(f(x_{0})\) and \(g(x_{0})\). Set \(d_{0}=-g_{0}\) and \(k=0\).

- Step 2: Test the stopping criterion \(\Vert g_{k}\Vert \leq\epsilon\). If it is satisfied, stop; otherwise, go to Step 3.

- Step 3: Determine the steplength \(\alpha_{k}\) as follows. Given \(\delta\in(0,1)\) and \(p_{1},p_{2}\) with \(0<p_{1}<p_{2}<1\):

  - (i) Set \(\alpha=1\).

  - (ii) Test the relation

    $$ f(x_{k}+\alpha d_{k})-f(x_{k})\leq\alpha\delta g_{k}^{T}d_{k}. \qquad (16)$$

  - (iii) If (16) is satisfied, set \(\alpha_{k}=\alpha\) and go to Step 4; otherwise, choose a new \(\alpha\in[p_{1}\alpha,p_{2}\alpha]\) and go to (ii).

- Step 4: Compute \(z=x_{k}+\alpha_{k}d_{k}\), \(g_{z}=\nabla f(z)\), and \(y_{k}=g_{k}-g_{z}\).

- Step 5: Compute \(r_{k}=\alpha_{k}g_{k}^{T}d_{k}\) and \(q_{k}=-\alpha_{k}y_{k}^{T}d_{k}\).

- Step 6: If \(q_{k}\neq0\), set \(\vartheta_{k}=-\frac{r_{k}}{q_{k}}\) and \(x_{k+1}=x_{k}+\vartheta_{k}\alpha_{k}d_{k}\); otherwise, set \(x_{k+1}=x_{k}+\alpha_{k}d_{k}\).

- Step 7: Compute the search direction \(d_{k+1}\) by (12), where \(\mu_{k}\), \(\varphi_{1}\), and \(\varphi_{2}\) are given by (11), (13), and (14), respectively.

- Step 8: Set \(k:=k+1\) and go to Step 2.
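For illustration only, the steps of Algorithm 1 can be combined into the Python sketch below. It is not the authors' Matlab implementation, and several choices are our assumptions, marked in the code: the direction is computed from the expanded form of \(d_{k+1}=-Q_{k+1}g_{k+1}\) in (9)-(10) with the simple stand-in scaling \(\mu_{k}=s_{k}^{T}y_{k}/y_{k}^{T}y_{k}\) in place of the Wolkowicz-based choice (11); the backtracking uses one fixed factor `shrink` in \([p_{1},p_{2}]\); the default `delta` is arbitrary; and a steepest-descent restart guards against \(s_{k}^{T}y_{k}\leq0\). As in the text, the \(y\) of the acceleration step is \(g_{k}-g_{z}\), while the \(y_{k}\) of the direction update is \(g_{k+1}-g_{k}\).

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors stored as lists."""
    return sum(a * b for a, b in zip(u, v))


def stcg_sketch(f, grad, x0, eps=1e-6, max_iter=2000, delta=1e-4, shrink=0.5):
    """Illustrative sketch of Algorithm 1; returns (x, number of iterations).

    ASSUMPTIONS (not from the paper): `shrink` is one fixed value in [p1, p2];
    mu_k = s^T y / y^T y stands in for the scaling (11); a steepest-descent
    restart is used if s^T y <= 0.
    """
    x = list(x0)
    g = grad(x)
    d = [-gi for gi in g]                                   # Step 1: d_0 = -g_0
    for k in range(max_iter):
        if math.sqrt(dot(g, g)) <= eps:                     # Step 2: stopping test
            return x, k
        fx, gd = f(x), dot(g, d)
        alpha = 1.0                                         # Step 3 (i)
        while f([xi + alpha * di for xi, di in zip(x, d)]) - fx > alpha * delta * gd:
            alpha *= shrink                                 # Step 3 (iii): backtrack
        z = [xi + alpha * di for xi, di in zip(x, d)]       # Step 4
        g_z = grad(z)
        r = alpha * gd                                      # Step 5
        q = -alpha * dot([a - b for a, b in zip(g, g_z)], d)
        theta = -r / q if q != 0 else 1.0                   # Step 6: acceleration
        x_new = [xi + theta * alpha * di for xi, di in zip(x, d)]
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]               # s_k = x_{k+1} - x_k
        y = [a - b for a, b in zip(g_new, g)]               # y_k = g_{k+1} - g_k
        sy = dot(s, y)
        if sy > 0:                                          # Step 7: d = -Q g from (9)-(10)
            mu = sy / dot(y, y)                             # ASSUMED stand-in for (11)
            phi_y = mu * dot(y, g_new) / dot(y, y)
            phi_s = dot(s, g_new) / sy
            d = [-mu * gi + phi_y * yi - phi_s * si
                 for gi, yi, si in zip(g_new, y, s)]
        else:
            d = [-gi for gi in g_new]                       # ASSUMED safeguard restart
        x, g = x_new, g_new                                 # Step 8
    return x, max_iter


# Usage on a hypothetical convex quadratic f(x) = 0.5 * (x1^2 + 10 x2^2):
f = lambda x: 0.5 * (x[0] ** 2 + 10.0 * x[1] ** 2)
grad = lambda x: [x[0], 10.0 * x[1]]
x_star, iters = stcg_sketch(f, grad, [1.0, 1.0])
print(iters, x_star)
```

On a quadratic the acceleration turns each step into an exact line search, so on this two-dimensional example the sketch stops after only a few iterations.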

3 Convergence analysis

In this section, we analyze the global convergence of the proposed method. We assume that \(g_{k}\neq0\) for all \(k\geq0\); otherwise, a stationary point has been found. First, we show that the search direction satisfies the sufficient descent and the conjugacy conditions. The following assumption is needed to present the results.

Assumption 1

The objective function f is convex and the gradient g is Lipschitz continuous on the level set

Then there exist some positive constants \(\psi_{1}\), \(\psi_{2}\), and L such that

and

for all \(z \in R^{n}\) and \(x,y \in K\) where \(G(x)\) is the Hessian matrix of f.

Under Assumption 1, we can easily deduce that

where \(s^{T}_{k} y_{k}=s^{T}_{k} \bar{G} s_{k} \) and \(\bar{G}=\int_{0}^{1} G(x_{k} + \lambda s_{k}) \,d \lambda\), so that \(y_{k}=\bar{G}s_{k}\). We begin by showing that the updating matrix (9) is positive definite.

Lemma 3.1

Suppose that Assumption 1 holds; then the matrix (9) is positive definite.

Proof

In order to show that the matrix (9) is positive definite we need to show that \(\mu_{k}\) is well defined and bounded. First, by the Cauchy-Schwarz inequality we have

and this implies that the scaling parameter \(\mu_{k}\) is well defined. It follows that

Since the scaling parameter is positive and bounded above, for any nonzero vector \(p\in\Re^{n}\) we obtain

By the Cauchy-Schwarz inequality and (20), we have \((p^{T} p)(y_{k}^{T} y_{k})- (p^{T} y_{k} )(y_{k}^{T}p) \geq0 \) and \(y_{k}^{T} s_{k}>0\), which implies that the matrix (9) is positive definite \(\forall k\geq0\).

Observe also that

Now,

Thus, \(\operatorname{tr}(Q_{k+1})\) is bounded. On the other hand, by the Sherman-Morrison-Householder formula (\(Q^{-1}_{k+1}\) is the memoryless matrix obtained from \(\frac{1}{\mu_{k}} I \) by the direct DFP update), we obtain

We can also establish the boundedness of \(\operatorname{tr}(Q^{-1}_{k+1})\) as

where \(\omega=\frac{(n-2)}{\psi_{1}^{2}}+\frac{L^{2}}{\psi_{1}} +\frac {L^{2}}{\psi^{4}_{1}} >0\), for \(n \geq2\). □
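Two quantities used in this proof can be written out explicitly. Assuming the matrix (9) is \(Q_{k+1}=\mu_{k}I-\mu_{k}y_{k}y_{k}^{T}/(y_{k}^{T}y_{k})+s_{k}s_{k}^{T}/(s_{k}^{T}y_{k})\), the identity \(\operatorname{tr}(uv^{T})=u^{T}v\) gives the trace, and expanding \(p^{T}Q_{k+1}p\) gives the quadratic form:

$$\operatorname{tr}(Q_{k+1})=n\mu_{k}-\mu_{k}\frac{y_{k}^{T}y_{k}}{y_{k}^{T}y_{k}}+\frac{s_{k}^{T}s_{k}}{s_{k}^{T}y_{k}}=(n-1)\mu_{k}+\frac{\Vert s_{k}\Vert^{2}}{s_{k}^{T}y_{k}},\qquad p^{T}Q_{k+1}p=\mu_{k}\frac{(p^{T}p)(y_{k}^{T}y_{k})-(p^{T}y_{k})^{2}}{y_{k}^{T}y_{k}}+\frac{(s_{k}^{T}p)^{2}}{s_{k}^{T}y_{k}}.$$

Both terms of the quadratic form are nonnegative, and they vanish together only if \(p\) is parallel to \(y_{k}\) and orthogonal to \(s_{k}\), which would force \(s_{k}^{T}y_{k}=0\). This is why \(s_{k}^{T}y_{k}>0\) yields strict positive definiteness.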

Now, we shall state the sufficient descent property of the proposed search direction in the following lemma.

Lemma 3.2

Suppose that Assumption 1 holds. Then the search direction (12) satisfies the sufficient descent condition \(g_{k+1}^{T} d_{k+1}\leq-c\Vert g_{k+1}\Vert ^{2}\) for some constant \(c>0\).

Proof

Since \(- g_{k+1}^{T}d_{k+1} \geq\frac{1}{\operatorname{tr}(Q^{-1}_{k+1})}\Vert g_{k+1}\Vert ^{2} \) (see, for example, Leong and Goh [22] and Babaie-Kafaki [23]), using (24) we have

where \(c=\min \{1,\frac{1}{\omega} \}\). Thus,

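The inequality quoted at the start of this proof can be seen directly. Since \(d_{k+1}=-Q_{k+1}g_{k+1}\) with \(Q_{k+1}\) symmetric positive definite (Lemma 3.1), the smallest eigenvalue of \(Q_{k+1}\) is the reciprocal of the largest eigenvalue of \(Q_{k+1}^{-1}\), and the trace of a positive definite matrix bounds its largest eigenvalue:

$$-g_{k+1}^{T}d_{k+1}=g_{k+1}^{T}Q_{k+1}g_{k+1}\geq\lambda_{\min}(Q_{k+1})\Vert g_{k+1}\Vert^{2}=\frac{\Vert g_{k+1}\Vert^{2}}{\lambda_{\max}(Q_{k+1}^{-1})}\geq\frac{\Vert g_{k+1}\Vert^{2}}{\operatorname{tr}(Q_{k+1}^{-1})}.$$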
□

Dai and Liao [24] extended the classical conjugacy condition \(y_{k} ^{T} d_{k+1}=0\) to

where \(t\geq0\). We now show that the proposed method satisfies this conjugacy condition.
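Writing \(d_{k+1}=-Q_{k+1}g_{k+1}\) with the scaled DFP matrix \(Q_{k+1}=\mu_{k}I-\mu_{k}y_{k}y_{k}^{T}/(y_{k}^{T}y_{k})+s_{k}s_{k}^{T}/(s_{k}^{T}y_{k})\), the terms involving \(\mu_{k}\) cancel:

$$y_{k}^{T}d_{k+1}=-y_{k}^{T}Q_{k+1}g_{k+1}=-\mu_{k}y_{k}^{T}g_{k+1}+\mu_{k}\frac{(y_{k}^{T}y_{k})(y_{k}^{T}g_{k+1})}{y_{k}^{T}y_{k}}-\frac{(y_{k}^{T}s_{k})(s_{k}^{T}g_{k+1})}{s_{k}^{T}y_{k}}=-s_{k}^{T}g_{k+1},$$

which is the Dai-Liao condition \(y_{k}^{T}d_{k+1}=-t\,s_{k}^{T}g_{k+1}\) with \(t=1\), independently of the value of \(\mu_{k}\).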

Lemma 3.3

Suppose that Assumption 1 holds, then the search direction (12) satisfies the conjugacy condition (27).

Proof

By (12), we obtain

where the result holds for \(t=1\). □

The following lemma gives the boundedness of the search direction.

Lemma 3.4

Suppose that Assumption 1 holds. Then there exists a constant \(P>0\) such that \(\Vert d_{k+1}\Vert \leq P\Vert g_{k+1}\Vert \), where \(d_{k+1}\) is defined by (12).

Proof

From (10) and the boundedness of \(\operatorname{tr}(Q_{k+1})\), we obtain

where \(P= (\frac{\psi_{1}+n-1}{\psi_{1}^{2}} )\). □
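The step from (10) to this bound uses only that \(Q_{k+1}\) is symmetric positive definite (Lemma 3.1), so its spectral norm equals its largest eigenvalue, which is at most the sum of its positive eigenvalues:

$$\Vert d_{k+1}\Vert=\Vert Q_{k+1}g_{k+1}\Vert\leq\lambda_{\max}(Q_{k+1})\Vert g_{k+1}\Vert\leq\operatorname{tr}(Q_{k+1})\Vert g_{k+1}\Vert.$$

Hence any upper bound on \(\operatorname{tr}(Q_{k+1})\), such as the one obtained in the proof of Lemma 3.1, gives a valid constant \(P\).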

In order to establish the convergence result, we give the following lemma.

Lemma 3.5

Suppose that Assumption 1 holds. Then there exist positive constants \(\gamma_{1}\) and \(\gamma_{2}\) such that any steplength \(\alpha_{k}\) generated by Step 3 of Algorithm 1 satisfies one of the following:

or

Proof

Suppose that (16) is satisfied with \(\alpha_{k}=1\), then

implies that (30) is satisfied with \(\gamma_{2}=\delta\).

Suppose that \(\alpha_{k}< 1\). Then (16) was not satisfied by the preceding trial steplength \(\alpha\), which satisfies \(\alpha\leq\frac{\alpha_{k}}{p_{1}}\), so that

Now, by the mean-value theorem there exists a scalar \(\tau_{k}\in(0,1)\) such that

From (32) we have

which implies

Now,

Substituting (34) in (16) we have the following:

where

Therefore

□

Theorem 3.6

Suppose that Assumption 1 holds. Then Algorithm 1 generates a sequence of approximations \(\{x_{k} \}\) such that

Proof

As a direct consequence of Lemma 3.5, the sufficient descent property (26), and the boundedness of the search direction (28), we have

or

Hence, in either case, there exists a positive constant \(\gamma_{3}\) such that

Since the steplength \(\alpha_{k} \) generated by Algorithm 1 is bounded away from zero, (38) and (39) imply that \(\{f (x_{k} )\}\) is a non-increasing sequence. Thus, since \(f (x_{k} )\) is bounded below, we have

and as a result

□

4 Numerical results

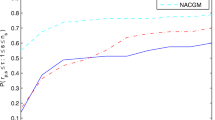

In this section, we present the results of numerical experiments comparing our proposed method with the CG-DESCENT (CG-DESC) [9], three-term Hestenes-Stiefel (TTHS) [11], three-term Polak-Ribière-Polyak (TTPRP) [10], and TTCG [12] methods. We evaluate the performance of these methods in terms of the number of iterations and function evaluations. Using standard unconstrained optimization test problems from Andrei [25], we conducted ten numerical experiments for each test function, with the number of variables ranging from \(n=70\) to \(n=45\mbox{,}000\). The algorithms were implemented in Matlab on a PC (Intel(R) Core(TM)2 Duo E8400, 3.00 GHz, 3 GB RAM) running a 32-bit operating system. The program terminates whenever \(\Vert g_{k}\Vert <\epsilon\), where \(\epsilon=10^{-6}\), or when a method fails to converge within 2,000 iterations; the latter case is denoted by the symbol '-'. An Armijo-type line search suggested by Byrd and Nocedal [26] was used for all methods under consideration. Table 1 in the Appendix gives the performance of the algorithms in terms of iterations and function evaluations. TTPRP solves 81% of the test problems, TTHS 88%, CG-DESCENT 85%, TTCG 85%, and STCG 90%. Compared with STCG, TTPRP needs on average 16% more iterations and 60% more function evaluations. STCG needs on average 2% fewer iterations and 57% fewer function evaluations than TTHS. CG-DESCENT needs on average 10% more iterations and 70% more function evaluations than STCG. Similarly, STCG needs on average 21% fewer iterations and 79% fewer function evaluations than TTCG.

To further examine the performance of these methods, we employ the performance profiles of Dolan and Moré [27]. Figures 1-2 show the performance profiles of these methods in terms of iterations and function evaluations; the top curve corresponds to the method that wins most often. The profiles indicate that the performance of the proposed method is highly encouraging and that it outperforms the other methods considered.
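The performance profile \(\rho_{s}(\tau)\) of Dolan and Moré [27] is the fraction of problems on which solver \(s\) is within a factor \(\tau\) of the best solver for that problem. A minimal sketch of the computation follows; the function name, and the convention of recording a failed run (shown as '-' in Table 1) as an infinite cost, are our own choices. The costs in the usage example are made up and are not taken from Table 1.

```python
import math


def performance_profile(costs, taus):
    """Dolan-More performance profile.

    costs[p][s]: positive cost (e.g. iterations) of solver s on problem p, or math.inf
    if solver s failed on problem p. Returns rho with rho[s][i] equal to the fraction
    of problems on which solver s is within a factor taus[i] of the best solver.
    """
    n_problems, n_solvers = len(costs), len(costs[0])
    ratios = []
    for row in costs:
        best = min(row)                      # best cost on this problem
        ratios.append([c / best if math.isfinite(c) else math.inf for c in row])
    return [[sum(1 for p in range(n_problems) if ratios[p][s] <= tau) / n_problems
             for tau in taus]
            for s in range(n_solvers)]


# Made-up costs for 3 problems and 2 solvers; math.inf marks a failure.
rho = performance_profile([[10, 20], [30, 15], [5, math.inf]], taus=[1.0, 2.0])
print(rho)  # [[0.6666666666666666, 1.0], [0.3333333333333333, 0.6666666666666666]]
```

At \(\tau=1\), \(\rho_{s}(1)\) is the fraction of problems on which solver \(s\) is (one of) the best, and as \(\tau\) grows \(\rho_{s}(\tau)\) approaches the fraction of problems solved, which is why the top curve identifies the most efficient and robust method.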

5 Conclusion

We have presented a new three-term conjugate gradient method for solving large-scale nonlinear unconstrained optimization problems, based on a modification of the memoryless DFP quasi-Newton update of the inverse Hessian approximation. A notable property of the proposed method is that both the sufficient descent and the conjugacy conditions are satisfied, and global convergence is established under mild assumptions. The numerical results show that the proposed method is promising and more efficient than the other methods considered.

References

Fletcher, R, Reeves, CM: Function minimization by conjugate gradients. Comput. J. 7(2), 149-154 (1964)

Polak, E, Ribiere, G: Note sur la convergence de méthodes de directions conjuguées. ESAIM: Mathematical Modelling and Numerical Analysis - Modélisation Mathématique et Analyse Numérique 3(R1), 35-43 (1969)

Liu, Y, Storey, C: Efficient generalized conjugate gradient algorithms, part 1: theory. J. Optim. Theory Appl. 69(1), 129-137 (1991)

Hestenes, MR: The conjugate gradient method for solving linear systems. In: Proc. Symp. Appl. Math VI, American Mathematical Society, pp. 83-102 (1956)

Dai, Y-H, Yuan, Y: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10(1), 177-182 (1999)

Fletcher, R: Practical Methods of Optimization. John Wiley & Sons, New York (2013)

Al-Baali, M: Descent property and global convergence of the Fletcher-Reeves method with inexact line search. IMA J. Numer. Anal. 5(1), 121-124 (1985)

Gilbert, JC, Nocedal, J: Global convergence properties of conjugate gradient methods for optimization. SIAM J. Optim. 2(1), 21-42 (1992)

Hager, WW, Zhang, H: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16(1), 170-192 (2005)

Zhang, L, Zhou, W, Li, D-H: A descent modified Polak-Ribière-Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 26(4), 629-640 (2006)

Zhang, L, Zhou, W, Li, D: Some descent three-term conjugate gradient methods and their global convergence. Optim. Methods Softw. 22(4), 697-711 (2007)

Andrei, N: On three-term conjugate gradient algorithms for unconstrained optimization. Appl. Math. Comput. 219(11), 6316-6327 (2013)

Broyden, C: Quasi-Newton methods and their application to function minimisation. Mathematics of Computation 21, 368-381 (1967)

Davidon, WC: Variable metric method for minimization. SIAM J. Optim. 1(1), 1-17 (1991)

Fletcher, R, Powell, MJ: A rapidly convergent descent method for minimization. Comput. J. 6(2), 163-168 (1963)

Goldfarb, D: Extension of Davidon’s variable metric method to maximization under linear inequality and equality constraints. SIAM J. Appl. Math. 17(4), 739-764 (1969)

Shanno, DF: Conjugate gradient methods with inexact searches. Math. Oper. Res. 3(3), 244-256 (1978)

Perry, JM: A class of conjugate gradient algorithms with a two step variable metric memory. Center for Mathematical Studies in Economics and Management Science. Northwestern University Press, Evanston (1977)

Wolkowicz, H: Measures for symmetric rank-one updates. Math. Oper. Res. 19(4), 815-830 (1994)

Nocedal, J: Conjugate gradient methods and nonlinear optimization. In: Linear and Nonlinear Conjugate Gradient-Related Methods, pp. 9-23 (1996)

Andrei, N: Acceleration of conjugate gradient algorithms for unconstrained optimization. Appl. Math. Comput. 213(2), 361-369 (2009)

Leong, WJ, San Goh, B: Convergence and stability of line search methods for unconstrained optimization. Acta Appl. Math. 127(1), 155-167 (2013)

Babaie-Kafaki, S: A modified scaled memoryless BFGS preconditioned conjugate gradient method for unconstrained optimization. 4OR 11(4), 361-374 (2013)

Dai, Y-H, Liao, L-Z: New conjugacy conditions and related nonlinear conjugate gradient methods. Appl. Math. Optim. 43(1), 87-101 (2001)

Andrei, N: An unconstrained optimization test functions collection. Adv. Model. Optim. 10(1), 147-161 (2008)

Byrd, RH, Nocedal, J: A tool for the analysis of quasi-Newton methods with application to unconstrained minimization. SIAM J. Numer. Anal. 26(3), 727-739 (1989)

Dolan, ED, Moré, JJ: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201-213 (2002)


Additional information

Competing interests

We hereby declare that there are no competing interests with regard to the manuscript.

Authors’ contributions

All authors participated in establishing the basic concepts and the convergence properties of the proposed method, and in the experimental comparison of the proposed method with other existing methods.

Appendix


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Arzuka, I., Abu Bakar, M.R. & Leong, W.J. A scaled three-term conjugate gradient method for unconstrained optimization. J Inequal Appl 2016, 325 (2016). https://doi.org/10.1186/s13660-016-1239-1


DOI: https://doi.org/10.1186/s13660-016-1239-1