Abstract

A variational Bayesian blind restoration reconstruction method based on the shear wave transform is proposed for low-dose medical computed tomography (CT) images. The proposed algorithm eliminates the effects of the point spread function during low-dose medical CT image reconstruction and improves the quality of the reconstructed image. The shear wave transform is used to represent the CT image sparsely, which improves the efficiency of image processing. In the Bayesian framework, a posterior probability objective function with unknown parameters is constructed. These unknown parameters include the shear wave coefficients, the point spread function, and the hyperparameters. A Laplacian distribution is specified as the prior of the shear wave coefficients, an autoregressive model is used as the prior of the point spread function, and all hyperparameters follow the gamma distribution. The variational Bayesian method is used to estimate all unknown parameters and to solve the posterior probability objective function. The resulting parameter estimates are used to realize blind restoration reconstruction of the low-dose CT image. Computer simulation results indicate that a high-quality reconstructed image can be obtained and that metrics such as peak signal-to-noise ratio (PSNR), universal image quality index (UIQI), structural similarity index metric (SSIM), and sum of square differences error (SSDE) are improved.

1 Introduction

Previous studies have established that high doses of X-ray radiation can cause cancer, leukemia, or other genetic diseases [1,2,3]. To reduce the harm of X-rays to the human body, it is necessary to study low-dose CT imaging that does not sacrifice clinical diagnostic information. Low-dose medical CT imaging reconstructs the CT image from incomplete or coarse projection data. However, reconstruction methods based on the Nyquist sampling theorem restrict low-dose medical CT reconstruction from incomplete projection data. Moreover, CT image formation is often affected by the point spread function of the system, which results in image degradation and artifacts. In addition, when the image is reconstructed from incomplete projection data, the influence of noise becomes more serious. Therefore, it is of great significance to study how to eliminate the effects of the point spread function and realize accurate low-dose medical CT reconstruction.

The basic idea of compressed sensing (CS) is that if a signal is sparse, or sparse in a transform domain, the original signal can be reconstructed with high precision from a small number of samples [4, 5]. If CS is applied to CT image reconstruction, the reduction in the number of measurements can greatly shorten the scanning time and reduce the scanning dose. However, because most CT images are not sparse, it is necessary to find an appropriate transform domain in which they become sparse. For CT medical imaging systems, the finite difference is often used as the sparse transform [6, 7], but it is only suitable for CT images with local smoothness. For actual human CT images, the finite difference transform does not give an ideal sparse representation. To solve this problem, other sparsifying methods are used in CT image reconstruction, such as the wavelet transform [8] and dictionary learning [9, 10]. However, these methods can easily lead to over-smoothing, which is attributed to the lack of statistical information about the specific signal and noise in the image reconstruction. Bayesian inference combined with compressed sensing offers the potential to estimate the original signal accurately or to reduce the radiation dose effectively. Moreover, the Bayesian method provides a variety of probability distribution models for estimating the different parameters.

In low-dose medical CT image reconstruction, the ill-posed problem is often caused by noise. At present, there are many specialized CT denoising algorithms in the CS framework [11,12,13,14]. However, a separate CT denoising step increases the complexity of CT image processing. A compressed sensing CT reconstruction algorithm that is itself robust to noise would be more conducive to the development of CT technology. To solve the ill-posed problem caused by noise, some algorithms improve performance by adding a prior regularization term to the objective function [15,16,17,18,19]. These methods help to avoid ambiguity of the solution and to obtain a high-precision reconstructed image. The Bayesian inference method based on statistical iteration can effectively utilize the physical effects of the system, the statistical properties of the projection data, and the noise [20,21,22,23,24]. The statistical iterative reconstruction method considers the statistical distribution of signal and noise, but it suffers from high computational complexity and slow convergence.

At present, CT image reconstruction algorithms based on CS often do not consider the influence of the point spread function. This omission is unreasonable, because the CT image is often affected by the point spread function of the system, which causes image degradation [25]. Some studies use blind image restoration to eliminate the effects of the point spread function [26, 27]. Within the CS framework, however, the existing CT image reconstruction algorithms still ignore these effects.

Because the point spread function of a CT system is often unknown, its effects can be eliminated by blind image restoration in the Bayesian framework. To this end, this paper proposes a variational Bayesian blind restoration reconstruction based on the shear wave transform for low-dose medical CT images. The key problem of medical CT image restoration and reconstruction by Bayesian compressed sensing is to establish accurate prior distribution models for the sparse coefficients, the point spread function, the model parameters, and so on. Therefore, a joint distribution model is established. In image reconstruction, the effects of the point spread function are considered and eliminated. The shear wave transform is used for sparse representation of the CT image. The variational Bayesian method is used to estimate all unknown parameters and to speed up convergence. Finally, blind restoration reconstruction of the low-dose CT image is realized.

2 Method

2.1 Medical CT image blind restoration reconstruction

The complete CT imaging model is written in vector form as

\[ \mathbf{P}=\mathbf{A}\mathbf{H}\boldsymbol{\uppsi}\boldsymbol{\upalpha}+\mathbf{n} \tag{1} \]

where P is the noisy projection; A is the system matrix of a CT scanning; H is the degradation matrix, which is composed of point spread function (h); ψ is the sparse transform matrix; α is the sparse transform coefficient matrix; and n is the noise matrix.

To get better performance, the reconstructed CT image is represented by the shear wave transform, that is, f = ψα. The sparse coefficients contain the maximal amount of necessary information.
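The imaging model can be illustrated with a toy numerical sketch. It reads the model as P = AHψα + n, which is the composition suggested by the symbol definitions above. The matrix sizes, the 3-tap blur, and the helper names `matvec` and `simulate_projection` are hypothetical choices made only for illustration and are not taken from the paper.

```python
import random

def matvec(matrix, vector):
    """Multiply a dense matrix (list of rows) by a vector (list)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def simulate_projection(A, H, Psi, alpha, noise_std=0.0, seed=0):
    """Return (P, f) with f = Psi*alpha and P = A*H*f + n (toy sizes only)."""
    rng = random.Random(seed)
    f = matvec(Psi, alpha)        # image synthesised from the sparse coefficients
    blurred = matvec(H, f)        # degradation by the point spread function
    clean = matvec(A, blurred)    # ideal projection through the system matrix
    P = [c + rng.gauss(0.0, noise_std) for c in clean]  # additive Gaussian noise
    return P, f

# Hypothetical 3-pixel example: identity transform, 3-tap blur, 2 "rays".
Psi = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
H = [[0.5, 0.5, 0.0], [0.25, 0.5, 0.25], [0.0, 0.5, 0.5]]
A = [[1, 1, 0], [0, 1, 1]]
alpha = [0.0, 4.0, 0.0]           # a single nonzero coefficient
P, f = simulate_projection(A, H, Psi, alpha, noise_std=0.0)
```

In the blind setting considered here, only P and A would be known, while both α and the blur behind H have to be estimated.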

The goal of low-dose medical CT image blind restoration reconstruction based on Bayesian compressed sensing is to estimate the sparse coefficients (α) and the degradation matrix (H) from the noisy projection (P) and the prior distributions of the parameters. Different prior models are adopted to describe the sparse coefficients (α) and the point spread function (h). The parameters of these models are denoted by Ω. After the prior models are defined, the hierarchical Bayesian model is used to establish the joint distribution of the projection (P) and the other parameters.

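The joint probability distribution model is given by

\[ p\left(\boldsymbol{\upalpha},\mathbf{h},\Omega, \mathbf{P}\right)=p\left(\Omega \right)p\left(\boldsymbol{\upalpha}\left|\Omega \right.\right)p\left(\mathbf{h}\left|\Omega \right.\right)p\left(\mathbf{P}\left|\boldsymbol{\upalpha},\mathbf{h},\Omega \right.\right) \tag{2} \]

where p(Ω) is the prior distribution of the model parameters, p(α| Ω) is the prior distribution of the sparse coefficients, p(h| Ω) is the prior distribution of the point spread function, and p(P| α, h, Ω) is the conditional distribution of the noisy projection (the likelihood).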

The models of the noisy projection, the unknown sparse coefficients, and the point spread function depend on the unknown model parameters (Ω). These model parameters are treated as hyperparameters, and the hierarchical Bayesian method is adopted to estimate them. Given the noisy projection (P), the posterior distribution p(α, h, Ω| P) of the unknown parameters is estimated by the variational Bayesian method. To make this estimation tractable, the paper assumes that the approximate distribution has a closed-form solution and that the parameters α, h, and Ω are mutually independent under it.

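On the basis of maximum a posteriori estimation theory, the model parameters are obtained as

\[ \widehat{\Omega}=\arg \underset{\Omega }{\max }\ p\left(\Omega \left|\mathbf{P}\right.\right) \tag{3} \]

Then, \( \widehat{\boldsymbol{\upalpha}} \) and \( \widehat{\mathbf{h}} \) are estimated by maximizing the posterior distribution given the estimated model parameters (Ω) and the noisy projection. That is,

\[ \widehat{\boldsymbol{\upalpha}},\widehat{\mathbf{h}}=\arg \underset{\boldsymbol{\upalpha},\mathbf{h}}{\max }\ p\left(\boldsymbol{\upalpha},\mathbf{h}\left|\widehat{\Omega},\mathbf{P}\right.\right) \tag{4} \]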

2.2 Shear wave transform

The theoretical basis of the shear wave (shearlet) transform is composite wavelet theory. The shear wave transform gives better results than the traditional wavelet transform because it is multi-scale, multi-directional, and multi-precision. The matrix ψ represents a set of basis functions, which is obtained by scaling, translation, and rotation of a generating function ψ. The image f can then be represented sparsely in the shear wave domain. The optimization problem can be described as follows:

where α is a sparse shear wave coefficient matrix, in which only a small number of elements are nonzero.

In this paper, the reconstructed image is projected into the shear wave domain, and the Laplacian distribution model is used as the prior probability density function of the shear wave coefficients.

2.3 Prior distribution

CT image blind restoration reconstruction is realized with the Bayesian approach, which requires the likelihood function and the prior distributions of the parameters to be specified.

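2.3.1 Prior distribution of the noisy projection

The probability density p(P|h, α) is derived from the noise model. For white Gaussian noise, it is

\[ p\left(\mathbf{P}\left|\boldsymbol{\upalpha},\mathbf{h},{\sigma}_n^2\right.\right)\propto {\left({\sigma}_n^2\right)}^{-N/2}\exp \left(-\frac{{\left\Vert \mathbf{P}-\mathbf{A}\mathbf{H}\boldsymbol{\uppsi}\boldsymbol{\upalpha}\right\Vert}_2^2}{2{\sigma}_n^2}\right) \tag{6} \]

where \( {\sigma}_n^2 \) is the variance of the noise and N is the number of noisy projection measurements.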

2.3.2 Prior distribution of the coefficients from shear wave transform

The coefficients of the shear wave transform are self-similar and heavy-tailed. The Laplacian distribution model can be used to match the distribution of the coefficients. The Laplacian density function is defined as follows:

where \( {\sigma}_{co}^2 \) is the variance of α.
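To make the heavy-tail argument concrete, the following sketch compares a zero-mean Laplacian log-density with a Gaussian of the same variance. It assumes an independent univariate Laplacian per coefficient, parameterized by its variance, which may differ in detail from the density used in the paper. The function names are hypothetical.

```python
import math

def laplace_logpdf(x, variance):
    """Log-density of a zero-mean Laplacian with the given variance.

    A Laplacian with scale b has variance 2*b**2, so b = sqrt(variance / 2).
    """
    b = math.sqrt(variance / 2.0)
    return -math.log(2.0 * b) - abs(x) / b

def gaussian_logpdf(x, variance):
    """Log-density of a zero-mean Gaussian with the given variance."""
    return -0.5 * math.log(2.0 * math.pi * variance) - x * x / (2.0 * variance)

def log_prior(alpha, variance):
    """Sum of independent Laplacian log-densities over all coefficients."""
    return sum(laplace_logpdf(a, variance) for a in alpha)
```

At equal variance the Laplacian assigns more probability both near zero and far in the tails, which is the behaviour expected of sparse transform coefficients.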

2.3.3 Prior distribution of the point spread function

The autoregressive model is used as the prior model of the point spread function. As the statistical distribution of the point spread function, the probability density function of the Gaussian distribution is

where C is the Laplacian operator, \( {\sigma}_{bl}^2 \) is the variance of the Gaussian distribution (the model parameter of this prior), and M is the size of the point spread function h.

2.3.4 Prior distribution of the model parameters

The hierarchical Bayesian model is used to estimate the model parameters \( {\sigma}_{co}^2 \), \( {\sigma}_{bl}^2 \), and \( {\sigma}_n^2 \) in the above expressions. It is assumed that these unknown model parameters are independent. Then, their joint distribution is

\[ p\left({\sigma}_{co}^2,{\sigma}_{bl}^2,{\sigma}_n^2\right)=p\left({\sigma}_{co}^2\right)p\left({\sigma}_{bl}^2\right)p\left({\sigma}_n^2\right) \tag{9} \]

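When the posterior distribution belongs to the same family as the prior distribution, the prior is called a conjugate prior. Therefore, when the unknown model parameters are estimated in the Bayesian framework, a conjugate prior distribution is chosen for them. In the algorithm, the Γ distribution is used as the prior distribution of the unknown model parameters. The Γ distribution is defined as follows:

\[ p\left(\omega \right)=\Gamma \left(\left.\omega \right|{a}_{\omega}^o,{b}_{\omega}^o\right)=\frac{{\left({b}_{\omega}^o\right)}^{a_{\omega}^o}}{\Gamma \left({a}_{\omega}^o\right)}{\omega}^{a_{\omega}^o-1}\exp \left(-{b}_{\omega}^o\omega \right) \tag{10} \]

where \( \omega \in \left\{{\sigma}_{co}^2,{\sigma}_{bl}^2,{\sigma}_n^2\right\} \) represents a parameter, \( {b}_{\omega}^o \) is the scale parameter (\( {b}_{\omega}^o>0 \)), which enters the density as an inverse scale so that the mean is \( {a}_{\omega}^o/{b}_{\omega}^o \), and \( {a}_{\omega}^o \) is the shape parameter (\( {a}_{\omega}^o>0 \)).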

2.4 Variational Bayesian medical CT image blind restoration reconstruction

Equation (9) is transformed into another form, then

To simplify the derivation, all unknown parameters in the algorithm are collected as

\[ \Theta =\left(\boldsymbol{\upalpha},\mathbf{h},{\sigma}_{co}^2,{\sigma}_{bl}^2,{\sigma}_n^2\right) \tag{12} \]

Based on the Bayesian paradigm, the posterior distribution is

\[ p\left(\Theta \left|\mathbf{P}\right.\right)=\frac{p\left(\Theta \right)p\left(\mathbf{P}\left|\Theta \right.\right)}{p\left(\mathbf{P}\right)} \tag{13} \]

In this paper, the posterior distribution p(Θ|P) needs to be computed so that the derivation can be carried out. According to Eq. (13), once the posterior distribution p(Θ|P) is available, α and h can be estimated by means of the posterior distribution \( p\left({\sigma}_{co}^2,{\sigma}_{bl}^2,{\sigma}_n^2\left|\mathbf{P}\right.\right) \) of the model parameters. In practice, however, the marginal distribution p(P) has no closed-form solution, so the posterior distribution p(Θ|P) cannot be expressed in closed form.

The variational Bayesian method is often used to find an approximation of the posterior distribution that has a closed-form solution. Here, the variational approximation method is used to approximate p(Θ|P) by an approximate posterior distribution q(Θ). The approximation is best when the Kullback-Leibler divergence between the two distributions attains its minimum. The Kullback-Leibler divergence between the two distributions is defined as follows:

\[ {C}_{KL}\left(q\left(\Theta \right)\Big\Vert p\left(\Theta \left|\mathbf{P}\right.\right)\right)=\int q\left(\Theta \right)\log \frac{q\left(\Theta \right)}{p\left(\Theta \left|\mathbf{P}\right.\right)}d\Theta \tag{14} \]

where \( {C}_{KL}\left(q\left(\Theta \right)\Vert p\left(\Theta \left|\mathbf{P}\right.\right)\right) \) is a nonnegative number. When q(Θ) = p(Θ|P), \( {C}_{KL}\left(q\left(\Theta \right)\Vert p\left(\Theta \left|\mathbf{P}\right.\right)\right)=0 \).
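The two properties of the Kullback-Leibler divergence stated above, nonnegativity and a value of zero for identical distributions, can be checked on a small discrete example. This is only a sketch for discrete distributions with a hypothetical helper name, whereas the paper works with continuous densities.

```python
import math

def kl_divergence(q, p):
    """KL(q || p) for two discrete distributions given as probability lists."""
    total = 0.0
    for qi, pi in zip(q, p):
        if qi > 0.0:                       # terms with q_i = 0 contribute 0
            total += qi * math.log(qi / pi)
    return total

same = kl_divergence([0.5, 0.5], [0.5, 0.5])       # identical distributions
forward = kl_divergence([0.9, 0.1], [0.5, 0.5])    # q differs from p
reverse = kl_divergence([0.5, 0.5], [0.9, 0.1])    # arguments swapped
```

The divergence is not symmetric in its arguments, which is why the order q(Θ)‖p(Θ|P) matters in the variational objective.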

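The variational method is used to estimate the posterior distribution p(Θ|P) by the approximate posterior distribution q(Θ). Assuming that all unknown parameters are independent of each other, q(Θ) is first defined as

\[ q\left(\Theta \right)=q\left(\boldsymbol{\upalpha}\right)q\left(\mathbf{h}\right)q\left({\sigma}_{co}^2\right)q\left({\sigma}_{bl}^2\right)q\left({\sigma}_n^2\right) \tag{15} \]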

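After the form of q(Θ) is defined, the distribution must be optimized to obtain the best approximation. Let \( {\Theta}_{\theta } \) denote the subset of Θ that does not include the parameter θ. For example, if θ = α, then \( {\Theta}_{\boldsymbol{\upalpha}}=\left({\sigma}_{co}^2,{\sigma}_{bl}^2,{\sigma}_n^2,\mathbf{h}\right) \). Thus, the Kullback-Leibler divergence of q(Θ) and p(Θ|P) is as follows:

where \( q\left({\Theta}_{\theta}\right)={\prod}_{\rho \ne \theta }q\left(\rho \right) \), because the unknown parameters are independent of each other. If θ = α, then

\[ q\left({\Theta}_{\boldsymbol{\upalpha}}\right)=q\left(\mathbf{h}\right)q\left({\sigma}_{co}^2\right)q\left({\sigma}_{bl}^2\right)q\left({\sigma}_n^2\right) \tag{17} \]

Thus, for each unknown parameter, the approximate posterior distribution q(θ) can be solved by means of Eq. (18).

\[ q\left(\theta \right)=\mathrm{const}\times \exp \left(E{\left[\log p\left(\Theta \right)p\left(\mathbf{P}\left|\Theta \right.\right)\right]}_{q\left({\Theta}_{\theta}\right)}\right) \tag{18} \]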

where \( E{\left[\log p\left(\Theta \right)p\left(\mathbf{P}\left|\Theta \right.\right)\right]}_{q\left({\Theta}_{\theta}\right)}=\int \log p\left(\Theta \right)p\left(\mathbf{P}\left|\Theta \right.\right)q\left({\Theta}_{\theta}\right)d{\Theta}_{\theta } \).

The smaller the value of \( {C}_{KL}\left(q\left(\theta \right)q\left({\Theta}_{\theta}\right)\Vert p\left(\Theta \left|\mathbf{P}\right.\right)\right) \), the closer \( q\left(\theta \right)q\left({\Theta}_{\theta}\right) \) is to p(Θ|P). In this way, after the initial distributions (\( {q}^1\left({\sigma}_{co}^2\right) \), \( {q}^1\left({\sigma}_{bl}^2\right) \), \( {q}^1\left({\sigma}_n^2\right) \)) of the model parameters \( {\sigma}_{co}^2 \), \( {\sigma}_{bl}^2 \), and \( {\sigma}_n^2 \) are defined, the posterior distributions of all unknown parameters are obtained by Eq. (25)

where k = 1, 2, 3… until the stop criterion is met.

The stopping criterion is

where ε is the specified accuracy range. If the criterion is satisfied, the iteration is stopped and the corresponding results are obtained. Otherwise, the iterations are repeated.
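The alternating structure of the variational updates and the ε-based stopping rule can be sketched on a much simpler problem than the CT model: a univariate Gaussian with unknown mean μ and unknown precision τ, approximated by a factorized q(μ)q(τ) with a Γ prior on τ. This is the standard textbook toy model, not the authors' algorithm. The relative-change stopping rule, the prior settings, and the name `vb_gaussian` are assumptions made for illustration.

```python
import random

def vb_gaussian(data, mu0=0.0, lam0=1e-3, a0=1e-3, b0=1e-3, eps=1e-4, max_iter=100):
    """Mean-field VB for x_i ~ N(mu, 1/tau) with q(mu, tau) = q(mu) q(tau).

    Returns (E[mu], E[tau], iterations). tau is a precision with a Gamma
    prior (shape a0, rate b0); this toy model is NOT the CT model of the paper.
    """
    n = len(data)
    xbar = sum(data) / n
    mu_n = (lam0 * mu0 + n * xbar) / (lam0 + n)    # mean of q(mu), fixed
    a_n = a0 + (n + 1) / 2.0                       # shape of q(tau), fixed
    sq = sum((x - mu_n) ** 2 for x in data) + lam0 * (mu_n - mu0) ** 2
    e_tau = a0 / b0                                # initial guess for E[tau]
    for k in range(1, max_iter + 1):
        lam_n = (lam0 + n) * e_tau                 # precision of q(mu)
        # Expectation under q(mu), using E[x^2] = E^2[x] + var(x)
        expected_sq = sq + (n + lam0) / lam_n
        b_n = b0 + 0.5 * expected_sq               # rate of q(tau)
        new_e_tau = a_n / b_n                      # Gamma mean = shape / rate
        if abs(new_e_tau - e_tau) <= eps * abs(e_tau):   # relative-change stop rule
            return mu_n, new_e_tau, k
        e_tau = new_e_tau
    return mu_n, e_tau, max_iter

rng = random.Random(0)
samples = [rng.gauss(2.0, 0.5) for _ in range(2000)]   # true mean 2, precision 4
mean_est, precision_est, n_iter = vb_gaussian(samples)
```

Each pass updates one factor using the current expectations of the others, which mirrors the k = 1, 2, 3… iteration described above on a problem small enough to verify by hand.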

To compute the posterior distribution of the unknown parameters, the mean and covariance matrix of h in the kth iteration are assumed to be

Similarly, the mean values of the model parameters are

Then, according to Eq. (18), the approximate posterior distribution of the sparse transform coefficients α can be estimated. Using Eqs. (6) and (7) and taking the logarithm of both sides of Eq. (18) gives

Suppose \( {q}^k\left(\boldsymbol{\upalpha}\right)=N\left(\boldsymbol{\upalpha}\left|{E}^k\left(\boldsymbol{\upalpha}\right),{\operatorname{cov}}^k\left(\boldsymbol{\upalpha}\right)\right.\right) \). The mean of the normal distribution is the solution of \( \frac{\partial 2\log {q}^k\left(\boldsymbol{\upalpha} \right)}{\partial \boldsymbol{\upalpha}}=0 \), and its covariance is \( {\operatorname{cov}}^k\left(\boldsymbol{\upalpha} \right)={\left[-\frac{\partial^2\log {q}^k\left(\boldsymbol{\upalpha} \right)}{\partial {\boldsymbol{\upalpha}}^2}\right]}^{-1} \). This gives

\( {q}^k\left(\boldsymbol{\upalpha}\right) \) is determined by the mean and covariance of α. Similarly, \( {q}^{k+1}\left(\mathbf{h}\right) \) can be calculated by the same procedure.

Based on the prior models defined above, Eq. (33) is used to solve the problem

According to the properties of mathematical expectation in probability theory, \( E\left[{x}^2\right]={E}^2\left[x\right]+\operatorname{var}(x) \), where var(x) is the variance of x, and trace(x) denotes the trace of x. Then

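\( {E}^k\left(\boldsymbol{\upalpha}\right) \), \( {\operatorname{cov}}^k\left(\boldsymbol{\upalpha}\right) \), \( {E}^{k+1}\left(\mathbf{h}\right) \), and \( {\operatorname{cov}}^{k+1}\left(\mathbf{h}\right) \) have been obtained in Eqs. (28), (29), (31), and (32). \( {E}^{k+1}\left(\mathbf{H}\right) \) and \( {\operatorname{cov}}^{k+1}\left(\mathbf{H}\right) \) are composed of \( {E}^{k+1}\left(\mathbf{h}\right) \) and \( {\operatorname{cov}}^{k+1}\left(\mathbf{h}\right) \).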
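The second-moment identity used above can be verified numerically. The sketch below checks the scalar form on arbitrary samples. For a random vector the analogous statement is that the expected squared norm equals the squared norm of the mean plus the trace of the covariance, which is where the trace enters. The helper name is hypothetical.

```python
import random

def second_moment_check(samples):
    """Return (E[x^2], E[x]^2 + var(x)) computed from a list of samples."""
    n = len(samples)
    mean = sum(samples) / n
    var = sum((s - mean) ** 2 for s in samples) / n   # population variance
    second_moment = sum(s * s for s in samples) / n
    return second_moment, mean * mean + var

rng = random.Random(42)
draws = [rng.gauss(3.0, 2.0) for _ in range(1000)]
lhs, rhs = second_moment_check(draws)   # the two sides agree up to rounding
```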

To calculate \( {q}^{k+1}\left(\omega \right) \) (\( \omega \in \left\{{\sigma}_{co}^2,{\sigma}_{bl}^2,{\sigma}_n^2\right\} \)), we need to calculate the corresponding mean. In the paper, it is assumed that the model parameters \( {\sigma}_{co}^2 \), \( {\sigma}_{bl}^2 \), and \( {\sigma}_n^2 \) follow the Γ distribution, whose mean is \( E\left[\omega \right]=\frac{a_{\omega}^o}{b_{\omega}^o} \). After the model parameters are updated in the kth iteration, the Γ distribution is \( {q}^{k+1}\left(\omega \right)=\Gamma \left(\left.\omega \right|{a}_{\omega}^{k+1},{b}_{\omega}^{k+1}\right) \), and its mean is \( E\left[\omega \right]=\frac{a_{\omega}^{k+1}}{b_{\omega}^{k+1}} \). By Eq. (33), we know

Then, the mean values of the model parameters in the kth iteration are as follows:

The mean values of the above model parameters are used in the iterative process of calculating the approximate distribution of α and h.
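The Γ mean a/b quoted above corresponds to a density in which b acts as an inverse scale (rate). The sketch below checks this by sampling. Python's `random.gammavariate` takes a shape and a scale, so the scale is set to 1/b. The shape and rate values are arbitrary illustrative choices, not hyperparameters from the paper.

```python
import random

def gamma_mean(shape, rate):
    """Mean of a Gamma density proportional to w**(shape-1) * exp(-rate*w)."""
    return shape / rate

def sample_gamma(shape, rate, n, seed=0):
    """Draw n samples; gammavariate expects (shape, scale), so scale = 1/rate."""
    rng = random.Random(seed)
    return [rng.gammavariate(shape, 1.0 / rate) for _ in range(n)]

draws_gamma = sample_gamma(3.0, 2.0, 20000)
empirical_mean = sum(draws_gamma) / len(draws_gamma)   # close to 3 / 2
```

Mixing up the rate and scale conventions changes the mean from a/b to a·b, so it is worth confirming which one a given library expects.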

Equations (35), (38), (43), (44), and (45) are used to estimate all unknown parameters. Then, f = ψα is used to obtain the reconstructed image.

3 Discussion and experiment results

To verify the validity of the proposed algorithm, the low-dose CT image model proposed in [28] is adopted. According to [28], the noisy projection (P) of a low-dose CT image approximately follows the Gaussian distribution, and the relationship between the mean and variance can be described as

where \( {P}_j \) and \( {\sigma}_j^2 \) represent the mean and variance of pixel j, respectively, and \( {g}_j \) and κ are two parameters of the system. For a given system, both can be regarded as known quantities.
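A minimal simulation sketch of such a signal-dependent noise model is given below. It assumes the exponential mean-variance relation \( {\sigma}_j^2={g}_j\exp \left({P}_j/\kappa \right) \), which is the form commonly used with this kind of low-dose sinogram model. That form is an assumption here and should be checked against [28]. The default values g = 200 and κ = 4 × 10^4 are those quoted in the experiment, and the function names are hypothetical.

```python
import math
import random

def projection_variance(p, g=200.0, kappa=4e4):
    """Assumed mean-variance relation: variance = g * exp(p / kappa)."""
    return g * math.exp(p / kappa)

def noisy_projection(ideal, g=200.0, kappa=4e4, seed=0):
    """Add zero-mean Gaussian noise with signal-dependent variance to each bin."""
    rng = random.Random(seed)
    noisy = []
    for p in ideal:
        sigma = math.sqrt(projection_variance(p, g, kappa))
        noisy.append(p + rng.gauss(0.0, sigma))
    return noisy

ideal_bins = [0.0, 1.0e4, 2.0e4, 4.0e4]     # hypothetical ideal projection values
simulated = noisy_projection(ideal_bins)
```

Under this relation, bins with larger mean values receive larger noise variance, so the noise is not identically distributed across the sinogram.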

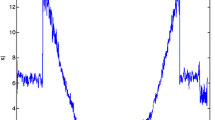

According to the above model, a low-dose CT image with a size of 512 × 512 pixels is designed to simulate a digital model of the human thorax, and the simulated CT phantom image is shown in Fig. 1. The fan beam scanning mode is used in the experiment. According to Eq. (46), Gaussian noise is added to the ideal projection data to simulate the low-dose noisy projection data (\( {g}_j=200 \), \( \kappa =4\times {10}^4 \)). The degradation process of the CT image is approximated by a Gaussian point spread function with \( {\sigma}^2=1 \), and Gaussian white noise with noise power \( {\sigma}_n^2 \) is added. Signal-to-noise ratio (SNR) is defined as \( \mathrm{SNR}=10{\log}_{10}\left({\left\Vert \mathbf{f}\right\Vert}_2^2/{\sigma}_n^2\right) \). The experimental analysis is carried out at SNRs of 20 and 40 dB, respectively (\( \varepsilon ={10}^{-4} \)). Under the same test conditions, some commonly used algorithms and the proposed algorithm are used to reconstruct the low-dose CT images. The commonly used algorithms, namely filtered back projection (FBP) [29], simultaneous algebraic reconstruction technique (SART) [30], and total variation (TV) regularization [31], are each combined with iterative blind image restoration to obtain the reconstructed image. The experiments were programmed in MATLAB 2016a on a machine with an Intel(R) i7-4770 processor and 16 GB of memory.
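The SNR definition above can be inverted to obtain the noise power needed for a target SNR. The following sketch does this for an image given as a flat list of pixel values. The function names and the two-pixel example are illustrative only.

```python
import math

def noise_variance_for_snr(f, target_snr_db):
    """Solve SNR = 10*log10(||f||_2^2 / sigma_n^2) for sigma_n^2."""
    energy = sum(v * v for v in f)
    return energy / (10.0 ** (target_snr_db / 10.0))

def snr_in_db(f, noise_variance):
    """Evaluate the SNR definition for a given noise power."""
    energy = sum(v * v for v in f)
    return 10.0 * math.log10(energy / noise_variance)

pixels = [3.0, 4.0]                                # ||f||^2 = 25
var_20db = noise_variance_for_snr(pixels, 20.0)    # 25 / 100
var_40db = noise_variance_for_snr(pixels, 40.0)    # 25 / 10000
```

Moving from 20 dB to 40 dB therefore lowers the noise power by a factor of 100 for the same image.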

In the case of sparse projection angles, combining the FBP algorithm with blind image restoration (BIR) is still easily affected by noise, and its performance degrades rapidly at low signal-to-noise ratio. Combining SART with BIR performs well, but the artifacts are serious when the image is reconstructed from incomplete projections. Combining SART, TV regularization, and BIR can be used in the case of sparse projection angles, but it yields an over-smoothed image with missing details. The proposed algorithm eliminates the effects of the point spread function during low-dose medical CT image reconstruction and improves the reconstructed image quality. With this approach, low-dose medical CT image reconstruction becomes an iterative process in which a set of parameters is refined over successive optimization steps. By comparison, the proposed algorithm is the best in terms of clarity, contrast, and detail preservation.

To show the details clearly, local regions of Figs. 2 and 3 are magnified for comprehensive evaluation, as shown in Figs. 4 and 5. As can be seen from the figures, the method effectively suppresses the noise and preserves the image details. The proposed method is superior to the other methods in the removal of artifacts and the preservation of edges.

To quantify the comparative performance of the algorithms, objective evaluation is useful. Peak signal-to-noise ratio (PSNR), universal image quality index (UIQI) [32], structural similarity index metric (SSIM) [33], and sum of square differences error (SSDE) are good criteria for assessing a restoration reconstruction method. The ideal value of PSNR is +∞, the ideal values of both UIQI and SSIM are 1, and the ideal value of SSDE is 0. These four metrics can only be used in simulated experiments because they require a reference image. The values tabulated for each experiment are averages over 10 repetitions. The results are shown in Tables 1 and 2.
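Three of these metrics are simple enough to sketch directly. The code below assumes images flattened to lists of pixel values, a peak value of 255 for PSNR, and the global (single-window) form of UIQI rather than the sliding-window average. These choices and the function names are assumptions, since the paper does not state its exact definitions. SSIM is omitted because a faithful implementation needs local windows and stabilizing constants.

```python
import math

def ssde(ref, img):
    """Sum of squared differences between two equally sized pixel lists."""
    return sum((r - x) ** 2 for r, x in zip(ref, img))

def psnr(ref, img, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = ssde(ref, img) / len(ref)
    return math.inf if mse == 0 else 10.0 * math.log10(peak * peak / mse)

def uiqi(ref, img):
    """Global universal image quality index (one window over the whole image)."""
    n = len(ref)
    mx, my = sum(ref) / n, sum(img) / n
    vx = sum((r - mx) ** 2 for r in ref) / (n - 1)
    vy = sum((x - my) ** 2 for x in img) / (n - 1)
    cov = sum((r - mx) * (x - my) for r, x in zip(ref, img)) / (n - 1)
    return 4.0 * cov * mx * my / ((vx + vy) * (mx * mx + my * my))

reference = [10.0, 20.0, 30.0, 40.0]      # hypothetical reference pixels
estimate = [12.0, 18.0, 33.0, 41.0]       # hypothetical reconstruction
```

For an identical pair these functions return the ideal values listed above: infinite PSNR, a UIQI of 1, and an SSDE of 0.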

Compared with the other methods, the proposed algorithm obtains a better visual effect and is more robust in detail reconstruction. It can be seen from Tables 1 and 2 that the proposed algorithm improves the objective image quality metrics such as PSNR, SSIM, UIQI, and SSDE. At the same time, under different parameters, the new algorithm has a better restoration and reconstruction effect. However, the initial estimates of the parameters are random. To obtain good estimates, a reliable estimation criterion needs to be established and a confidence parameter introduced. This helps to eliminate the influence of various random factors and to enhance the robustness of parameter estimation.

To verify the versatility of the algorithm, a second simulation experiment reconstructs the other CT image shown in Fig. 6. The CT image size is 512 × 512 pixels.

Limited-angle projection was used to simulate low-dose medical CT imaging. The number of projection angles was set to 180. FBP + BIR, SART + BIR, SART + TV + BIR, and the proposed method were each used to realize low-dose medical CT image blind restoration reconstruction.

The reconstructed images are shown in Fig. 7. To make the differences easier to see, a part of each image is magnified, as shown in Fig. 8. As shown in Figs. 7 and 8, the images reconstructed by FBP + BIR and SART + BIR have some artifacts in the case of low-dose CT imaging. The problem with SART + TV + BIR is that the reconstructed image is too smooth, and some visible details are lost. The proposed algorithm gives the best results in terms of sharpness, contrast, and detail preservation.

To quantitatively evaluate the effectiveness of the proposed algorithm, the reconstructed images shown in Fig. 7 and the ideal phantom shown in Fig. 6 are compared using SSIM, PSNR, UIQI, and SSDE, as shown in Table 3.

As shown in Table 3, the SSIM value of the proposed algorithm is closer to 1. By comparison, PSNR, UIQI, and SSDE are also improved. This means that the proposed method obtains a reconstructed image that is highly similar to the ideal CT image.

4 Conclusion

This paper proposes a variational Bayesian blind restoration reconstruction based on the shear wave transform for low-dose medical CT images. The shear wave coefficients are modeled by a Laplacian distribution. According to Bayesian statistical theory, parameters such as the hyperparameters of the shear wave coefficients, the parameters of the point spread function, and the inverse variance of the noise can be regarded as random variables, and these parameters follow the gamma distribution. The values of the parameters are estimated by the maximum a posteriori estimation method. Finally, in the Bayesian framework, the restoration reconstruction of the low-dose medical CT image is transformed into an optimization problem, and the variational approximation method is used to solve it. Experiments show that the Bayesian model can describe local structure information adaptively. The proposed algorithm preserves the image edge details and obtains a satisfactory visual effect. It takes the noisy projection into account and eliminates the effects of the point spread function. The experimental results show that the proposed algorithm is superior to the other algorithms in subjective visual effect. In a low signal-to-noise ratio environment, the proposed algorithm can reconstruct a high-quality image. At the same time, in terms of objective evaluation, the proposed algorithm improves the objective image quality metrics such as PSNR, SSIM, UIQI, and SSDE. The convergence of the algorithm is greatly affected by the initial values. Several experiments show that similar initial values can improve the computational speed for the same type of image. Therefore, to improve the speed of the algorithm, reference initial values, also known as expert experience, can be provided for images of the same kind.

References

RW Harbron, EA Ainsbury, SD Bouffler, et al., Enhanced radiation dose and DNA damage associated with iodinated contrast media in diagnostic x-ray imaging. Br. J. Radiol. 90(1079), 20170028 (2017)

S Mein, R Gunasingha, M Nolan, et al., SU-C-204-06: Monte Carlo dose calculation for kilovoltage X-ray-psoralen activated cancer therapy (X-PACT): preliminary results. Med. Phys. 43(6), 3314–3315 (2016)

M Tamura, H Sakurai, M Mizumoto, et al., Lifetime attributable risk of radiation-induced secondary cancer from proton beam therapy compared with that of intensity-modulated X-ray therapy in randomly sampled pediatric cancer patients. J. Radiat. Res. 58(3), 363–371 (2017)

R Gu, A Dogandžić, Projected Nesterov’s proximal-gradient algorithm for sparse signal recovery. IEEE Trans. Signal Process. 65(13), 3510–3525 (2017)

C Liu, J Wang, W Wang, et al., Non-convex block-sparse compressed sensing with redundant dictionaries. IET Signal Proc. 11(2), 171–180 (2017)

EY Sidky, X Pan, Image reconstruction in circular cone-beam computed tomography by constrained, total-variation minimization. Phys. Med. Biol. 53(17), 4777–4807 (2008)

J Chen, J Cong, LA Vese, et al., A hybrid architecture for compressive sensing 3-D CT reconstruction. IEEE J. Emerging Sel. Top. Circuits Syst. 2(3), 616–625 (2012)

B Vandeghinste, B Goossens, RV Holen, et al., Iterative CT reconstruction using shearlet-based regularization. IEEE Trans. Nucl. Sci. 60(5), 3305–3317 (2013)

H Zhang, L Zhang, Y Sun, et al., Low dose CT image statistical iterative reconstruction algorithms based on off-line dictionary sparse representation. Optik-Int. J Light Electron Opt. 131, 785–797 (2017)

Y Zhang, X Mou, G Wang, et al., Tensor-based dictionary learning for spectral CT reconstruction. IEEE Trans. Med. Imaging 36(1), 142–154 (2016)

M Pienn, K Bredies, R Stollberger, et al., Denoising algorithm for quantitative assessment of thoracic dual-energy computed tomography images. Wien. Klin. Wochenschr. 127(19–20), 815–815 (2015)

ME Lopes, Unknown sparsity in compressed sensing: denoising and inference. IEEE Trans. Inf. Theory 62(9), 5145–5166 (2016)

B Xing, J Wang, Denoising of medical CT image based on sparse decomposition. J. Biomed. Eng. 29(3), 456–459 (2012)

Y Zhu, L Luo, C Toumoulin, Dictionary learning based denoising of low-dose X-ray CT image. J. Southeast Univ. 42(5), 864–868 (2012)

P Bannas, Y Li, U Motosugi, et al., Prior image constrained compressed sensing metal artifact reduction (PICCS-MAR): 2D and 3D image quality improvement with hip prostheses at CT colonography. Eur. Radiol. 26(7), 2039–2046 (2016)

T Yang, H Pen, D Wang, et al., Harmonic analysis in integrated energy system based on compressed sensing. Appl. Energy 165, 583–591 (2016)

H Yu, G Wang, A soft-threshold filtering approach for reconstruction from a limited number of projections. Phys. Med. Biol. 55(13), 3905–3916 (2010)

W Yu, L Zeng, A novel weighted total difference based image reconstruction algorithm for few-view computed tomography. PLoS One 9(10), e109345 (2014)

T Blumensath, R Boardman, Non-convexly constrained image reconstruction from nonlinear tomographic X-ray measurements. Philos. Trans. 373, 2043–2061 (2015)

G Zhang, H Pu, W He, et al., Bayesian framework based direct reconstruction of fluorescence parametric images. IEEE Trans. Med. Imaging 34(6), 1378–1391 (2015)

H Zhang, J Ma, J Wang, et al., Statistical image reconstruction for low-dose CT using nonlocal means-based regularization. Comput. Med. Imaging Graph. 38(6), 423–435 (2014)

Q Zhang, L Luo, Z Gui, Improved nonlocal prior-based Bayesian sinogram smoothing algorithm for low-dose CT. J. Southeast Univ. 44(3), 499–503 (2014)

C Cai, T Rodet, S Legoupil, et al., A full-spectral Bayesian reconstruction approach based on the material decomposition model applied in dual-energy computed tomography. Med. Phys. 40(11), 1161–1163 (2013)

Q Zhang, Z Gui, Y Chen, et al., Bayesian sinogram smoothing with an anisotropic diffusion weighted prior for low-dose X-ray computed tomography. Optik-Int. J. Light Electron Opt. 124(17), 2811–2816 (2013)

Y Hu, L Xie, L Luo, et al., L0 constrained sparse reconstruction for multi-slice helical CT reconstruction. Phys. Med. Biol. 56(4), 1173–1189 (2011)

M Jiang, G Wang, MW Skinner, et al., Blind deblurring of spiral CT images. IEEE Trans. Med. Imaging 22(7), 837–845 (2003)

J Xu, K Taguchi, BM Tsui, Statistical projection completion in X-ray CT using consistency conditions. IEEE Trans. Med. Imaging 29(8), 1528–1540 (2010)

J Wang, HB Lu, JH Wen, et al., Multiscale penalized weighted least-squares sinogram restoration for low-dose X-ray computed tomography. IEEE Trans. Biomed. Eng. 55(3), 1022–1031 (2008)

J You, GL Zeng, Hilbert transform based FBP algorithm for fan-beam CT full and partial scans. IEEE Trans. Med. Imaging 26(2), 190–199 (2007)

M Jiang, G Wang, Convergence of the simultaneous algebraic reconstruction technique (SART). IEEE Trans. Image Process. 12(8), 957 (2003)

X Yang, R Hofmann, R Dapp, et al., TV-based conjugate gradient method and discrete L-curve for few-view CT reconstruction of X-ray in vivo data. Opt. Express 23(5), 5368–5387 (2015)

AYA Yusra, CS Der, Comparison of image quality assessment: PSNR, HVS, SSIM, UIQI. Int. J. Sci. Eng. 3(1), 1–5 (2012)

Z Wang, AC Bovik, HR Sheikh, et al., Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Acknowledgements

The authors thank the editor and anonymous reviewers for their helpful comments and valuable suggestions.

Funding

This work was supported by the Tianjin Research Program of Application Foundation and Advanced Technology (Nos. 16JCYBJC28800 and 13JCYBJC15600), Tianjin Excellent Postdoctoral International Training Program, and Tianjin Commerce University Young and Middle-aged Teacher International Training Program.

Availability of data and materials

We can provide the data.

Author information

Contributions

YS and LZ conceived of and designed the study. XL and FT performed the experiments. XL wrote the paper. YS, LZ, and TF reviewed and edited the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Authors’ information

Yunshan Sun received the Ph.D. degree from the School of Electronic Information Engineering, Tianjin University, Tianjin, China, in 2012. His research interests are in medical CT image blind restoration and blind signal processing. Liyi Zhang is the corresponding author of this paper. He received the Ph.D. degree from the School of Electronic Information Engineering, Tianjin University, Tianjin, China, in 2012. His research interests are in signal processing. Yuan Zhang is currently working toward the Ph.D. degree in the School of Electronic Information Engineering, Tianjin University, Tianjin, China. His research interests are in signal processing and image processing. Xiaopei Liu is currently working toward the Ph.D. degree in the School of Electronic Information Engineering, Tianjin University, Tianjin, China. His research interests are in signal processing and image processing.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sun, Y., Zhang, L., Fei, T. et al. Variational Bayesian blind restoration reconstruction based on shear wave transform for low-dose medical CT image. J Image Video Proc. 2017, 84 (2017). https://doi.org/10.1186/s13640-017-0234-x

DOI: https://doi.org/10.1186/s13640-017-0234-x