Abstract

Mutual coupling and gain-phase errors are common in the sensor channels of arrays, and they seriously degrade the performance of most array signal processing algorithms, especially in practical applications. This paper therefore presents a new approach for direction of arrival (DOA) estimation of far-field sources among mixed far-field and near-field signals in the presence of mutual coupling and gain-phase imperfections. First, the model of the received data with the two kinds of array errors is established. Then a matrix transformation is used to simplify the spectrum function according to the structure of the uniform linear array (ULA). Finally, the DOAs of the far-field signals are obtained by searching for the peaks of the modified spatial spectrum. The usefulness and behavior of the presented approach are illustrated by simulated experiments.

1 Introduction

Direction of arrival (DOA) estimation dates from the 1960s and is widely used in radar [1,2,3,4,5], underwater detection [6,7,8], and mobile communication [9,10,11,12,13,14,15]. Most direction finding algorithms require an accurately known array manifold and are very sensitive to errors in the sensor channels. In practice, however, perturbations caused by temperature, humidity, vibration, and device aging are often unavoidable with present processing technology, and all of them degrade estimation performance. The main errors in array signal processing are mutual coupling, gain-phase uncertainty, and sensor position errors, so the array needs to be calibrated.

Existing calibration methods can be categorized as active correction and self-correction. The former needs a correction source at a known orientation; it has a low computational cost and a wide calibration range, but there is often some deviation between the actual direction of the correction source and its preset value. Self-correction does not need a correction source; it usually estimates the DOA and the array errors simultaneously according to some criterion. This kind of algorithm has a small cost and great application potential. Hawes introduced a Gibbs sampling approach based on a Bayesian compressive sensing Kalman filter for DOA estimation with mutual coupling effects; it was shown to be useful when the target moves into the endfire region of the array [16]. Rocca calculated the DOA of multiple sources by processing the data collected in a single time snapshot at the terminals of a linear antenna array with mutual coupling [17]. Based on sparse signal reconstruction, Basikolo developed a simple mutual coupling compensation method for nested sparse circular arrays, which differs from previous calibrations for the uniform linear array (ULA) [18]. Elbir offered a new data transformation algorithm, applicable to three-dimensional arrays, via decomposition of the mutual coupling matrix [19, 20].

For the gain-phase error, Lee used a covariance approximation technique for spatial spectrum estimation with a ULA; it yields the DOA together with the gain-phase uncertainty of the array channels [21]. A. F. Liu introduced a calibration algorithm based on the eigendecomposition of the covariance matrix; it behaves independently of the phase error and performs well in spite of array errors [22]. Cao proposed a direction finding method based on the fourth-order cumulant (FOC), which is suitable for spatially colored noise [23]. In [24], the Toeplitz structure of the array is employed to deal with the gain error, and sparse least squares is then used to estimate the phase error. In recent years, spatial spectrum estimation in the presence of multiple types of array errors has also been studied. Z. M. Liu described an eigenstructure-based algorithm which estimates the DOA as well as the mutual coupling and gain-phase corrections of every channel [25]. References [26, 27] discussed calibration techniques for three kinds of errors existing in the array simultaneously. For the same problem, Boon obtained mutual coupling, gain-phase, and sensor position errors through maximum likelihood estimation, but the method needs several calibration sources at known orientations [28].

In the past few years, DOA estimation for mixed far-field and near-field sources (FS and NS) has attracted increasing attention and developed rapidly. Liang developed a two-stage MUSIC algorithm with cumulants which avoids parameter pairing and loss of aperture [29]. In [30], K. Wang proposed a new localization algorithm for mixed signals based on the FOC and the estimation of signal parameters via rotational invariance techniques (ESPRIT). In [31, 32], two localization methods based on sparse signal reconstruction are provided by Ye and B. Wang, respectively; they achieve improved accuracy and resolve signals which are close to each other. The methods above apply only to the setting with only FS, and there is little published work on DOA estimation for mixed signals in the presence of more than one kind of array error.

This paper considers DOA estimation of the FS in mixed sources received by an array with mutual coupling and gain-phase errors. It separates the array errors from the spatial spectrum function by a matrix transformation; the DOA can then be obtained by searching for the peaks of the modified spatial spectrum, so the process of array calibration is avoided. The approach is also suitable when the FS and NS are close to each other.

2 Methods

Before modeling, we assume that the array signal satisfies the following conditions:

1. The incident signals are narrowband, mutually independent, zero-mean stationary processes.

2. The noise on each sensor is a zero-mean white Gaussian process, independent from sensor to sensor and independent of the incident signals.

3. The sensor array is isotropic.

4. To ensure that the columns of the array manifold are linearly independent, the number of FS, K1, and the number of NS, K2, are known beforehand, with K1 < M and K1 + K2 < 2M + 1, where 2M + 1 is the number of sensors.

2.1 Data model

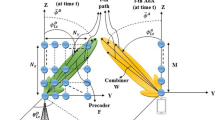

The data model is given in Fig. 1. Consider K1 far-field signals \( {s}_{k_1}\left({k}_1=1,2,\cdots, {K}_1\right) \) and K2 near-field signals \( {s}_{k_2}\left({k}_2=1,2,\cdots, {K}_2\right) \) impinging on a (2M + 1)-element array from \( \left[{\theta}_1,\cdots, {\theta}_{K_1},{\theta}_{K_1+1},\cdots, {\theta}_K\right] \), with the 0th element defined as the reference sensor. Here, K = K1 + K2, d is the inter-element spacing, equal to half of the signal wavelength, and the range between \( {s}_{k_2} \) and the reference sensor is \( {l}_{k_2} \). The received data can then be written

where

here, Xm(t) is the received data on the mth channel, and A(θ) is the array manifold

where \( {\mathbf{A}}_{FS}=\left[{\mathbf{a}}_{FS}\left({\theta}_1\right),\cdots, {\mathbf{a}}_{FS}\left({\theta}_{k_1}\right),\cdots, {\mathbf{a}}_{FS}\left({\theta}_{K_1}\right)\right] \) is the array manifold of the FS for the ideal case, and \( {\mathbf{a}}_{FS}\left({\theta}_{k_1}\right) \) is the steering vector of \( {s}_{k_1} \); \( {\mathbf{A}}_{NS}=\left[{\mathbf{a}}_{NS}\left({\theta}_{K_1+1}\right),\cdots, {\mathbf{a}}_{NS}\left({\theta}_{k_2}\right),\cdots, {\mathbf{a}}_{NS}\left({\theta}_K\right)\right] \) is the array manifold of the NS for the ideal case, and \( {\mathbf{a}}_{NS}\left({\theta}_{k_2}\right) \) is the steering vector of \( {s}_{k_2} \). Therefore,

where f is the frequency, and

is the propagation delay of the k1-th (k1 = 1, 2, ⋯, K1) FS at sensor m with respect to sensor 0. In the same way, we have

By examining the geometry in Fig. 1, we have

which is the propagation delay of the NS \( {s}_{k_2} \) at sensor m with respect to sensor 0. Eq. (7) can be expressed in another form by a Taylor series expansion [33].
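As a sketch of this step, assume the distance from the NS \( {s}_{k_2} \) to sensor m follows from the law of cosines, with \( {\theta}_{k_2} \) measured from the array broadside and the delay counted as positive when the wavefront reaches sensor m before sensor 0. Both conventions are assumptions, and v denotes the propagation speed, a symbol introduced here for illustration. A second-order Taylor expansion in \( md/{l}_{k_2} \) then gives

\[
\tau_m\left(\theta_{k_2},l_{k_2}\right)=\frac{l_{k_2}-\sqrt{l_{k_2}^2+{(md)}^2-2{l}_{k_2} md\sin {\theta}_{k_2}}}{v}\approx \frac{1}{v}\left( md\sin {\theta}_{k_2}-\frac{{(md)}^2{\cos}^2{\theta}_{k_2}}{2{l}_{k_2}}\right)
\]

Under these assumptions, the first term has the form of a plane-wave (far-field) delay, and the second, range-dependent term vanishes as \( {l}_{k_2}\to \infty \).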

In (1), the signal matrix is

where \( {\mathbf{S}}_{FS}={\left[{\mathbf{S}}_1,\cdots, {\mathbf{S}}_{k_1},\cdots, {\mathbf{S}}_{K_1}\right]}^{\mathrm{T}} \) is the signal matrix of the FS, and \( {\mathbf{S}}_{NS}={\left[{\mathbf{S}}_{K_1+1},\cdots, {\mathbf{S}}_{k_2},\cdots, {\mathbf{S}}_K\right]}^{\mathrm{T}} \) is that of the NS. N(t) is the Gaussian white noise matrix, so the covariance of the received data for the ideal case is

where

B is the number of snapshots, and I is the (2M + 1) × (2M + 1) identity matrix.
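As a minimal NumPy sketch of the ideal data model in this subsection, the following builds far-field and near-field steering vectors from propagation delays, simulates the received data, and forms the sample covariance. The sign convention exp(−j2πfτ), the distance-based near-field delay, the unit-power complex Gaussian sources, and all function names and numeric values (source ranges, noise level, number of snapshots) are assumptions made for illustration.

```python
import numpy as np

C = 3e8  # propagation speed in m/s (assumed: free-space electromagnetic wave)

def fs_delay(m, theta, d):
    """Far-field (plane-wave) delay at sensor index m relative to sensor 0."""
    return m * d * np.sin(theta) / C

def ns_delay(m, theta, l, d):
    """Near-field delay from the source-to-sensor distance (law of cosines)."""
    r_m = np.sqrt(l**2 + (m * d) ** 2 - 2 * l * m * d * np.sin(theta))
    return (l - r_m) / C

def manifold(M, f, fs_deg, ns_deg, ns_range):
    """Ideal manifold [A_FS, A_NS] of a (2M+1)-element ULA, half-wavelength spacing."""
    d = C / f / 2
    m = np.arange(-M, M + 1)
    cols = [np.exp(-2j * np.pi * f * fs_delay(m, np.deg2rad(t), d)) for t in fs_deg]
    cols += [np.exp(-2j * np.pi * f * ns_delay(m, np.deg2rad(t), l, d))
             for t, l in zip(ns_deg, ns_range)]
    return np.column_stack(cols)

rng = np.random.default_rng(0)
M, f, B = 5, 3e9, 200                      # 11 sensors, 3 GHz, 200 snapshots
A = manifold(M, f, fs_deg=[13, 35, 50, 68], ns_deg=[25, 60, 85],
             ns_range=[1.0, 1.5, 2.0])     # ranges in metres (illustrative)
K = A.shape[1]

# Independent zero-mean sources and white Gaussian noise
S = (rng.standard_normal((K, B)) + 1j * rng.standard_normal((K, B))) / np.sqrt(2)
N = 0.1 * (rng.standard_normal((2 * M + 1, B)) + 1j * rng.standard_normal((2 * M + 1, B)))

X = A @ S + N                # received data, one column per snapshot
R = X @ X.conj().T / B       # sample covariance over B snapshots
```

The row of `A` belonging to the reference sensor (index `M`) is all ones because its delay is zero by construction.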

2.2 Array error model

The mutual coupling of the ULA can be expressed by the matrix W(1)

Here, cq (q = 1, 2, ⋯, Q) denotes the qth mutual coupling coefficient, and Q is the number of degrees of freedom of the coupling matrix.

The gain-phase perturbation is usually expressed as

where

ρm and ϕm are the gain and phase errors, respectively, and they are independent of each other.

Therefore, the steering vector of the kth signal with mutual coupling and gain-phase errors is

here

Then the array manifold with array errors can be written

where

\( {\mathbf{a}}_{FS}^{\prime}\left({\theta}_{k_1}\right) \) is the steering vector of \( {s}_{k_1} \) with array errors, and

\( {\mathbf{a}}_{NS}^{\prime}\left({\theta}_{k_2}\right) \) is the steering vector of \( {s}_{k_2}(t) \) with array errors. The received data with array errors is thus

For the convenience of the derivation below, we also define w as the vector of the two array errors.
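The error model of this subsection can be sketched in NumPy as follows. The banded symmetric Toeplitz form of the coupling matrix (ones on the diagonal, cq on the qth off-diagonals), the product order coupling-times-gain-phase, and all function names and numeric values are assumptions for illustration.

```python
import numpy as np

def coupling_matrix(c, n_sensors):
    """Banded symmetric Toeplitz matrix: 1 on the diagonal, c[q-1] on the q-th off-diagonals."""
    first_col = np.zeros(n_sensors, dtype=complex)
    first_col[0] = 1.0
    first_col[1:len(c) + 1] = c
    idx = np.arange(n_sensors)
    return first_col[np.abs(idx[:, None] - idx[None, :])]

def gain_phase_matrix(rho, phi):
    """Diagonal matrix with entries rho_m * exp(j * phi_m), phi in radians."""
    return np.diag(np.asarray(rho) * np.exp(1j * np.asarray(phi)))

def perturbed_steering(a_ideal, c, rho, phi):
    """Apply both error matrices to an ideal steering vector (assumed order: W1 @ W2 @ a)."""
    n = len(a_ideal)
    return coupling_matrix(c, n) @ gain_phase_matrix(rho, phi) @ a_ideal

rng = np.random.default_rng(1)
n = 11
c = np.array([0.3 + 0.2j, 0.1 - 0.1j])        # Q = 2 coupling coefficients (illustrative)
rho = rng.uniform(0.8, 1.2, n)                # per-sensor gains (illustrative range)
phi = np.deg2rad(rng.uniform(-24, 24, n))     # per-sensor phases
a = np.exp(-1j * np.pi * np.arange(-5, 6) * np.sin(np.deg2rad(35)))  # ideal far-field vector
a_err = perturbed_steering(a, c, rho, phi)
```

With zero coupling coefficients, unit gains, and zero phases, `perturbed_steering` returns the ideal steering vector unchanged.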

2.3 Constructing spatial spectrum

The covariance with the two kinds of array imperfections is

where the covariance of the FS is

that of the NS is

The noise eigenvectors U′ can therefore be obtained from the eigendecomposition of R′, and the spatial spectrum [34] can then be plotted as a function of the DOA of the FS.
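For orientation, a noise-subspace (MUSIC-type) spatial spectrum of the kind cited as [34] can be sketched as below. This is a simplified illustration, assuming an error-free array, far-field sources only, and an exact covariance matrix; the function names and values are hypothetical. A perturbed steering function could be passed through the `steer` argument if the array errors were known.

```python
import numpy as np

def steering(theta_deg, n_sensors):
    """Ideal far-field ULA steering vector, half-wavelength spacing, centre reference."""
    m = np.arange(n_sensors) - (n_sensors - 1) // 2
    return np.exp(-1j * np.pi * m * np.sin(np.deg2rad(theta_deg)))

def music_spectrum(R, n_sources, grid_deg, steer=steering):
    """Inverse squared norm of the steering vector projected on the noise subspace."""
    n = R.shape[0]
    _, vecs = np.linalg.eigh(R)            # eigenvalues returned in ascending order
    Un = vecs[:, : n - n_sources]          # eigenvectors of the smallest eigenvalues
    P = np.empty(len(grid_deg))
    for i, th in enumerate(grid_deg):
        proj = Un.conj().T @ steer(th, n)
        P[i] = 1.0 / max(np.real(np.vdot(proj, proj)), np.finfo(float).tiny)
    return P

true_doas = [13.0, 35.0]
n = 11
A = np.column_stack([steering(t, n) for t in true_doas])
R = A @ A.conj().T + 0.1 * np.eye(n)       # exact covariance, unit-power sources
grid = np.arange(0.0, 90.0, 0.5)
P = music_spectrum(R, len(true_doas), grid)
peaks = np.sort(grid[np.argsort(P)[-2:]])  # grid angles of the two largest values
```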

2.4 Transforming spectrum function

The denominator of (26) is equivalent to

Transforming (27) into another form gives

where

Finding the peaks of (26) amounts to minimizing (28). Since w ≠ 0, wHD(θ)w can be zero only if the determinant of D(θ) is zero, which happens when θ coincides with the DOA of an actual signal. The angles \( {\theta}_1,\cdots {\theta}_{K_1} \) can therefore be estimated by plotting the modified spatial spectrum as a function of the DOA of the FS

where |D(θ)| stands for the determinant of D(θ). The proposed approach applies to the FS in mixed signals, so it is called FM for short; as the deduction above shows, estimation of the array errors is avoided. According to the derivation, the numbers of signals and sensors must satisfy K < 2M + 1, but there is no limitation on the specific numbers of far-field and near-field signals. The FM procedure is summarized in Fig. 2.
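The determinant-based search can be illustrated with a simplified NumPy sketch. It is not the FM algorithm itself: it assumes far-field sources only, mutual coupling as the only array error, an exact covariance matrix, and a banded symmetric Toeplitz coupling matrix. Under these assumptions the coupled steering vector can be written as T(θ)c, where c stacks 1 and the coupling coefficients, so the noise-subspace denominator becomes a quadratic form in c with matrix D(θ), and D(θ) becomes singular at the true DOAs. All function names and values are hypothetical.

```python
import numpy as np

def steering(theta_deg, n_sensors):
    """Ideal far-field ULA steering vector, half-wavelength spacing, centre reference."""
    m = np.arange(n_sensors) - (n_sensors - 1) // 2
    return np.exp(-1j * np.pi * m * np.sin(np.deg2rad(theta_deg)))

def coupling_matrix(c, n_sensors):
    """Banded symmetric Toeplitz matrix with c[q] on the q-th off-diagonals (c[0] = 1)."""
    idx = np.arange(n_sensors)
    full = np.concatenate([c, np.zeros(n_sensors - len(c))])
    return full[np.abs(idx[:, None] - idx[None, :])]

def transform(a, n_coef):
    """Matrix T with coupling_matrix(c, len(a)) @ a == T @ c for any c of length n_coef."""
    T = np.zeros((len(a), n_coef), dtype=complex)
    T[:, 0] = a
    for q in range(1, n_coef):
        T[q:, q] += a[:-q]     # neighbours q positions below
        T[:-q, q] += a[q:]     # neighbours q positions above
    return T

def determinant_spectrum(R, n_sources, n_coef, grid_deg):
    """1 / |det D(theta)| with D = T^H Un Un^H T; the coupling values are never used."""
    n = R.shape[0]
    _, vecs = np.linalg.eigh(R)
    Un = vecs[:, : n - n_sources]          # noise subspace
    P = np.empty(len(grid_deg))
    for i, th in enumerate(grid_deg):
        G = Un.conj().T @ transform(steering(th, n), n_coef)
        D = G.conj().T @ G
        P[i] = 1.0 / max(abs(np.linalg.det(D)), np.finfo(float).tiny)
    return P

n, true_doas = 11, [13.0, 35.0]
c = np.array([1.0, 0.3 + 0.2j, 0.1 - 0.1j])       # used only to generate the data
W = coupling_matrix(c, n)
A = np.column_stack([W @ steering(t, n) for t in true_doas])
R = A @ A.conj().T + 0.1 * np.eye(n)              # exact covariance, unit-power sources
grid = np.arange(0.0, 90.0, 0.5)
P = determinant_spectrum(R, len(true_doas), len(c), grid)
peaks = np.sort(grid[np.argsort(P)[-2:]])         # grid angles of the two largest values
```

The estimator only needs the number of coupling coefficients, not their values, which mirrors the way the modified spectrum avoids estimating the array errors.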

3 Computation

Assume the DOA θ is limited to the region \( 0<\alpha <\theta <\beta <\frac{\pi }{2} \) and the search step size of the DOA is Δθ. The proposed FM approach involves computing the (2M + 1) × (2M + 1) covariance matrix, determining its eigenvectors, evaluating the one-dimensional spatial spectrum, and finding its local maxima for the FS. Counting only the primary procedures for simplicity, the computation is about \( {\left(2M+1\right)}^2Z+\frac{8}{3}{\left(2M+1\right)}^3+\frac{2\left(\beta -\alpha \right){\left(2M+1\right)}^2}{\Delta_{\theta }} \). By comparison, mixed near-field and far-field source localization based on uniform linear array partition (MULAP) [30] needs to form three 2M × 2M fourth-order cumulant matrices, decompose a 4M × 4M matrix, and then use ESPRIT to estimate the DOA by decomposing two 2(M − 1) × 2(M − 1) matrices, so its computation is nearly \( 3{(2M)}^2B+\frac{4}{3}{(4M)}^3+\frac{8}{3}{\left(2M-1\right)}^3 \).
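The two operation counts can be evaluated directly, as in the short sketch below. It assumes that Z in the FM expression denotes the number of snapshots (written B elsewhere in the text) and that angles and step size are given in degrees; the function names are hypothetical.

```python
def fm_cost(M, snapshots, alpha_deg, beta_deg, step_deg):
    """Approximate FM operation count from the expression in the text."""
    n = 2 * M + 1
    covariance = n**2 * snapshots                          # covariance estimation
    eig = 8 / 3 * n**3                                     # eigendecomposition
    search = 2 * (beta_deg - alpha_deg) * n**2 / step_deg  # one-dimensional search
    return covariance + eig + search

def mulap_cost(M, snapshots):
    """Approximate MULAP operation count from the expression in the text."""
    return 3 * (2 * M) ** 2 * snapshots + 4 / 3 * (4 * M) ** 3 + 8 / 3 * (2 * M - 1) ** 3

# Eleven sensors (M = 5), 25 snapshots, search over 0 to 90 degrees in 0.1 degree steps
fm = fm_cost(M=5, snapshots=25, alpha_deg=0, beta_deg=90, step_deg=0.1)
mulap = mulap_cost(M=5, snapshots=25)
```

For these settings the grid-search term dominates the FM count, so the step size Δθ is the main lever on its cost.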

4 Results and discussion

In this section, simulation results are presented for the proposed approach. First, consider four uncorrelated FS and three NS impinging on an eleven-element array from (13∘, 35∘, 50∘, 68∘) and (25∘, 60∘, 85∘), respectively; their frequency is 3 GHz, the array signal model is shown in Fig. 1, and the sixth sensor is taken as the reference. In view of the complexity of the array imperfections, the error model is simplified by assuming c1 = a1 + b1j, c2 = a2 + b2j, and Q = 2, where a1 and b1 are uniformly distributed in (−0.5, 0.5), and a2 and b2 are uniformly distributed in (−0.25, 0.25). The gain and phase errors are chosen randomly in [0, 1.6] and [−24∘, 24∘], respectively, with α = 0∘, β = 90∘, and Δθ = 0.1∘; 500 independent trials are run for each scenario. The estimation error is defined as

where θi is the true DOA of the ith FS, and \( {\widehat{\theta}}_i \) is the corresponding estimated value. Sparse Bayesian array calibration (SBAC) [25], MULAP, and FM are compared in the simulations.
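One common way to turn such per-source errors into a single figure over many trials is a root-mean-square error, sketched below. This is an assumed metric for illustration and may differ from the error definition used in the experiments; the function name is hypothetical, and estimates are matched to true DOAs by sorting.

```python
import numpy as np

def rmse_deg(estimates, truth):
    """Root-mean-square DOA error in degrees.

    estimates: array of shape (trials, K1) with estimated FS DOAs per trial.
    truth: array of shape (K1,) with the true FS DOAs.
    """
    est = np.sort(np.asarray(estimates, dtype=float), axis=1)  # match by sorted order
    ref = np.sort(np.asarray(truth, dtype=float))
    return float(np.sqrt(np.mean((est - ref) ** 2)))

# Two trials for two sources; the second trial lists the estimates in swapped order
error = rmse_deg([[13.2, 34.9], [35.1, 12.8]], [13.0, 35.0])
```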

First, Fig. 3 shows the modified spatial spectrum of the uncorrelated FS; the four peaks correspond to the actual DOAs. Fig. 4 illustrates the estimation accuracy versus signal-to-noise ratio (SNR) when the number of snapshots B is 25, and Fig. 5 shows the accuracy versus the number of snapshots B when the SNR is 8 dB. As seen in Figs. 4 and 5, all three algorithms fail to estimate the DOA at low SNR; they perform better as the SNR or the number of snapshots increases and finally converge to a certain value. As MULAP is not suitable for super-resolution direction finding in the presence of array imperfections, a large error remains even if the SNR is high or the number of snapshots is large. SBAC needs array calibration before estimating the DOA, and the estimation of the mutual coupling and gain-phase uncertainty also introduces some error. By comparison, FM avoids array correction before determining the FS, so it outperforms SBAC and MULAP in most cases; however, when the SNR is lower than −6 dB, the signal subspace is not completely orthogonal to the noise subspace, and its performance is poorer than that of SBAC.

In the second experiment, we examine far-field DOA estimation when the FS and NS are close to each other. Consider four FS and three NS impinging on an eleven-element array from (3∘, 12∘, 20∘, 28∘) and (8∘, 17∘, 33∘), respectively; the other conditions are the same as in the trial above.

Figure 6 shows the modified spatial spectrum when the FS and NS are close to each other; the DOAs of the FS are still resolved successfully by the proposed FM. Fig. 7 gives the estimation accuracy versus SNR when the number of snapshots B is 25, and Fig. 8 shows the accuracy versus the number of snapshots B when the SNR is 8 dB. The three algorithms perform almost the same as in the first experiment even when the FS and NS are close to each other, and their performance can be improved by increasing the SNR or the number of snapshots.

5 Conclusions

This paper addresses the DOA estimation of the FS in mixed FS and NS received by an array with mutual coupling and gain-phase errors. The approach avoids array calibration by transforming the spectrum function according to the structure of the array, which greatly lessens the computational load. In future work, we will concentrate on estimating the parameters of the array imperfections and on locating the NS.

Abbreviations

- DOA: Direction of arrival
- EM: Expectation-maximization
- ESPRIT: Estimation of signal parameters via rotational invariance techniques
- FM: FS in mixed signals
- FOC: Fourth-order cumulant
- FS: Far-field signals
- MULAP: Mixed near-field and far-field source localization based on uniform linear array partition
- NS: Near-field signals
- SBAC: Sparse Bayesian array calibration
- SBL: Sparse Bayesian learning
- SNR: Signal-to-noise ratio
- ULA: Uniform linear array

References

U. Nielsen, J. Dall, Direction-of-arrival estimation for radar ice sounding surface clutter suppression. IEEE Trans. Geosci. Remote Sens. 53, 5170–5179 (2015). https://doi.org/10.1109/TGRS.2015.2418221

S. Ebihara, Y. Kimura, T. Shimomura, R. Uchimura, H. Choshi, Coaxial-fed circular dipole array antenna with ferrite loading for thin directional borehole radar Sonde. IEEE Trans. Geosci. Remote Sens. 53, 1842–1854 (2015). https://doi.org/10.1109/TGRS.2014.2349921

R. Takahashi, T. Inaba, T. Takahashi, H. Tasaki, Digital monopulse beamforming for achieving the CRLB for angle accuracy. IEEE Trans. Aerosp. Electron. Syst. 54, 315–323 (2018). https://doi.org/10.1109/TAES.2017.2756519

D. Oh, Y. Ju, H. Nam, J.H. Lee, Dual smoothing DOA estimation of two-channel FMCW radar. IEEE Trans. Aerosp. Electron. Syst. 52, 904–917 (2016). https://doi.org/10.1109/TAES.2016.140282

A. Khabbazibasmenj, A. Hassanien, S.A. Vorobyov, M.W. Morency, Efficient transmit beamspace design for search-free based DOA estimation in MIMO radar. IEEE Trans. Signal Process. 62, 1490–1500 (2014). https://doi.org/10.1109/TSP.2014.2299513

A.A. Saucan, T. Chonavel, C. Sintes, J.M.L. Caillec, CPHD-DOA tracking of multiple extended sonar targets in impulsive environments. IEEE Trans. Signal Process. 64, 1147–1160 (2016). https://doi.org/10.1109/TSP.2015.2504349

A. Gholipour, B. Zakeri, K. Mafinezhad, Non-stationary additive noise modelling in direction-of-arrival estimation. IET Commun. 10, 2054–2059 (2016). https://doi.org/10.1049/iet-com.2016.0233

H.S. Lim, P.N. Boon, V.V. Reddy, Generalized MUSIC-like Array processing for underwater environments. IEEE J. Ocean. Eng. 42, 124–134 (2017). https://doi.org/10.1109/JOE.2016.2542644

T. Basikolo, H. Arai, APRD-MUSIC algorithm DOA estimation for reactance based uniform circular array. IEEE Trans. Antennas Propag. 64, 4415–4422 (2016). https://doi.org/10.1109/TAP.2016.2593738

Z.Y. Na, Z. Pan, M.D. Xiong, X. Liu, W.D. Lu, Turbo receiver channel estimation for GFDM-based cognitive radio networks. IEEE Access 6, 9926–9935 (2018). https://doi.org/10.1109/ACCESS.2018.2803742

R. Pec, B.W. Ku, K.S. Kim, Y.S. Cho, Receive beamforming techniques for an LTE-based mobile relay station with a uniform linear array. IEEE Trans. Veh. Technol. 64, 3299–3304 (2015). https://doi.org/10.1109/TVT.2014.2352675

A. Gaber, A. Omar, A study of wireless indoor positioning based on joint TDOA and DOA estimation using 2-D matrix pencil algorithms and IEEE 802.11ac. IEEE Trans. Wirel. Commun. 14, 2440–2454 (2015). https://doi.org/10.1109/TWC.2014.2386869

X. Liu, M. Jia, X.Y. Zhang, W.D. Lu, A novel multi-channel internet of things based on dynamic spectrum sharing in 5G communication. IEEE Internet Things J. (2018). https://doi.org/10.1109/JIOT.2018.2847731

X. Liu, F. Li, Z.Y. Na, Optimal resource allocation in simultaneous cooperative spectrum sensing and energy harvesting for multichannel cognitive radio. IEEE Access. 5, 3801–3812 (2017). https://doi.org/10.1109/ACCESS.2017.2677976

X. Liu, M. Jia, Z.Y. Na, W.D. Lu, F. Li, Multi-modal cooperative spectrum sensing based on Dempster-Shafer fusion in 5G-based cognitive radio. IEEE Access 6, 199–208 (2018). https://doi.org/10.1109/ACCESS.2017.2761910

M. Hawes, L. Mihaylova, F. Septier, S. Godsill, Bayesian compressive sensing approaches for direction of arrival estimation with mutual coupling effects. IEEE Trans. Antennas Propag. 65, 1357–1368 (2017). https://doi.org/10.1109/TAP.2017.2655013

P. Rocca, M.A. Hannan, M. Salucci, Single-snapshot DOA estimation in array antennas with mutual coupling through a multiscaling BCS strategy. IEEE Trans. Antennas Propag. 65, 3203–3213 (2017). https://doi.org/10.1109/TAP.2017.2684137

T. Basikolo, K. Ichige, H. Arai, A novel mutual coupling compensation method for underdetermined direction of arrival estimation in nested sparse circular arrays. IEEE Trans. Antennas Propag. 66, 909–917 (2018). https://doi.org/10.1109/TAP.2017.2778767

A.M. Elbir, A novel data transformation approach for DOA estimation with 3-D antenna arrays in the presence of mutual coupling. IEEE Antennas and Wireless Propagation Letters 16, 2118–2121 (2017). https://doi.org/10.1109/LAWP.2017.2699292

A.M. Elbir, Direction finding in the presence of direction-dependent mutual coupling. IEEE Antennas and Wireless Propagation Letters 16, 1541–1544 (2017). https://doi.org/10.1109/LAWP.2017.2647983

J.C. Lee, Y.C. Yeh, A covariance approximation method for near-field direction finding using a uniform linear array. IEEE Trans. Signal Process. 43, 1293–1298 (1995). https://doi.org/10.1109/78.382421

A.F. Liu, G.S. Liao, C. Zeng, An Eigenstructure method for estimating DOA and sensor gain-phase errors. IEEE Trans. Signal Process. 59, 5944–5956 (2011). https://doi.org/10.1109/TSP.2011.2165064

S.H. Cao, Z.F. Ye, N. Hu, DOA estimation based on fourth-order cumulants in the presence of sensor gain-phase errors. Signal Process. 93, 2581–2585 (2013). https://doi.org/10.1016/j.sigpro.2013.03.007

K.Y. Han, P. Yang, A. Nehorai, Calibrating nested sensor arrays with model errors. IEEE Trans. Antennas Propag. 63, 4739–4748 (2015). https://doi.org/10.1109/TAP.2015.2477411

Z.M. Liu, Y.Y. Zhou, A unified framework and sparse Bayesian perspective for direction-of-arrival estimation in the presence of array imperfections. IEEE Trans. Signal Process. 61, 3786–3798 (2013). https://doi.org/10.1109/TSP.2013.2262682

Y. Song, K.T. Wong, F.J. Chen, Quasi-blind calibration of an array of acoustic vector-sensors that are subject to gain errors/mis-location/mis-orientation. IEEE Trans. Signal Process. 62, 2330–2344 (2014). https://doi.org/10.1109/TSP.2014.2307837

C.M.S. See, Method for array calibration in high-resolution sensor array processing. IEE Proceedings-Radar, Sonar and Navigation. 142, 90–96 (1995). https://doi.org/10.1049/ip-rsn:19951793

C.N. Boon, C.M.S. See, Sensor-array calibration using a maximum-likelihood approach. IEEE Trans. Antennas Propag. 44, 827–835 (1996). https://doi.org/10.1109/8.509886

J.L. Liang, D. Liu, Passive localization of mixed near-field and far-field sources using two-stage MUSIC algorithm. IEEE Trans. Signal Process. 58, 108–120 (2010). https://doi.org/10.1109/TSP.2009.2029723

K. Wang, L. Wang, J.R. Shang, Mixed near-field and far-field source localization based on uniform linear Array partition. IEEE Sensors J. 16, 8083–8090 (2016). https://doi.org/10.1109/JSEN.2016.2603182

T. Ye, X.Y. Sun, Mixed sources localisation using a sparse representation of cumulant vectors. IET Signal Processing 8, 606–611 (2014). https://doi.org/10.1049/iet-spr.2013.0271

B. Wang, J.J. Liu, X.Y. Sun, Mixed sources localization based on sparse signal reconstruction. IEEE Signal Processing Letters 19, 487–490 (2012). https://doi.org/10.1109/LSP.2012.2204248

E. Zeidler, Teubner Taschenbuch der Mathematik (Oxford University Press, Oxford, 2003)

R.O. Schmidt, Multiple emitter location and signal parameter estimation. IEEE Trans. Antennas Propag. 34, 276–280 (1986). https://doi.org/10.1109/TAP.1986.1143830

Acknowledgments

The authors would like to thank all the paper reviewers and Heilongjiang province ordinary college electronic engineering laboratory of Heilongjiang University.

Funding

This work was supported by the National Natural Science Foundation of China under Grant 61501176, Natural Science Foundation of Heilongjiang Province F2018025, University Nursing Program for Young Scholars with Creative Talents in Heilongjiang Province UNPYSCT-2016017, and the postdoctoral scientific research developmental fund of Heilongjiang Province in 2017 LBH-Q17149.

Availability of data and materials

All data are fully available without restriction.

Contributions

Jiaqi Zhen conceived and designed the algorithm and the experiments. Baoyu Guo prepared the revised version. Both authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zhen, J., Guo, B. DOA estimation for far-field sources in mixed signals with mutual coupling and gain-phase error array. J Wireless Com Network 2018, 295 (2018). https://doi.org/10.1186/s13638-018-1324-x

DOI: https://doi.org/10.1186/s13638-018-1324-x