Abstract

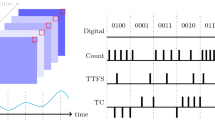

Layer II stellate cells in the medial enthorinal cortex (MEC) express hyperpolarisation-activated cyclic-nucleotide-gated (HCN) channels that allow for rebound spiking via an \(I_{\text{h}}\) current in response to hyperpolarising synaptic input. A computational modelling study by Hasselmo (Philos. Trans. R. Soc. Lond. B, Biol. Sci. 369:20120523, 2013) showed that an inhibitory network of such cells can support periodic travelling waves with a period that is controlled by the dynamics of the \(I_{\text{h}}\) current. Hasselmo has suggested that these waves can underlie the generation of grid cells, and that the known difference in \(I_{\text{h}}\) resonance frequency along the dorsal to ventral axis can explain the observed size and spacing between grid cell firing fields. Here we develop a biophysical spiking model within a framework that allows for analytical tractability. We combine the simplicity of integrate-and-fire neurons with a piecewise linear caricature of the gating dynamics for HCN channels to develop a spiking neural field model of MEC. Using techniques primarily drawn from the field of nonsmooth dynamical systems we show how to construct periodic travelling waves, and in particular the dispersion curve that determines how wave speed varies as a function of period. This exhibits a wide range of long wavelength solutions, reinforcing the idea that rebound spiking is a candidate mechanism for generating grid cell firing patterns. Importantly we develop a wave stability analysis to show how the maximum allowed period is controlled by the dynamical properties of the \(I_{\text{h}}\) current. Our theoretical work is validated by numerical simulations of the spiking model in both one and two dimensions.

Similar content being viewed by others

1 Introduction

The ability to remember specific events occurring at specific places and times plays a major role in our everyday life. The question of how such memories are established remains an active area of research, but several key facts are now known. In particular, the 2004 discovery of grid cells in the medial enthorinal cortex (MEC) by Fyhn et al. [2], supports the notion of a cognitive map for navigation. This is a mental representation whereby individuals can acquire, code, store, recall, and decode information about relative spatial locations in their environment. The concept was introduced by Tolman in 1948 [3], with the first neural correlate being identified as the place cell system in the hippocampus [4]. Place cells are found in the hippocampus and fire selectively to spatial locations, thereby forming a place field whose properties change from one environment to another. More recently, a second class of cells was identified that fire at the nodes of a hexagonal lattice tiling the surface of the environment covered by the animal—these are termed grid cells [5]. As an animal approaches the centre of a grid cell firing field, the spiking output of grid cell will increase in frequency. The grid field size and spacing increases from dorsal to ventral positions along the MEC and is independent of the animal’s speed and direction (even in the absence of visual input) and independent of the arena size. In rats, the grid field spacing can range from roughly 30 cm up to several meters [6]. Other grid cell properties include firing field patterns that manifest instantly in novel environments and maintain alignment with visual landmarks. Furthermore, neighbouring grid cells have firing fields with different spatial phases, whilst grid cells with a common spacing also have a common orientation (overturning an original suggestion that they have different orientations) [7].

May-Britt Moser and Edvard Moser shared the 2014 Nobel Prize in Physiology or Medicine with John O’Keefe for their discoveries of cells (grid and place cells, respectively) that subserve the brain’s internal positioning system. From a modelling perspective grid cells have attracted a lot of attention, due in part to their relatively recent and unexpected discovery, but also due to the very geometric firing patterns that they generate. There are now three main competing mathematical models for the generation of grid-like firing patterns: oscillatory interference models, continuous attractor network models, and “self-organised” models—see Giocomo et al. [8] and Schmidt-Hieber and Häusser [9] for excellent reviews. The first class of model uses interference patterns generated by multiple oscillations to explain grid formation [10]. They have been especially fruitful in addressing the theta rhythmic firing of grid cells (5–12 Hz) and their phase precession. Here spikes occur at successively earlier phases of the theta rhythm during a grid field traversal, suggestive of a spike-timing code [11]. The second class uses activity in local networks with specific connectivity to generate the grid pattern and its spacing. In this category, the models can be further sub-divided into those that utilise spatial pattern formation across the whole tissue (possibly arising via a Turing instability), such as in the work of Fuhs and Touretzky [12] and Burak and Fiete [13], and those that rely only upon spatially localised pattern states (or bumps) in models with (twisted) toroidal connectivity as described by McNaughton et al. [14] and Guanella et al. [15]. The third class proposes that grid cells are formed by a self-organised learning process that borrows elements from both former classes [16–18]. Recent experiments revealing the in vivo intracellular signatures of grid cells, the primarily inhibitory recurrent connectivity of grid cells, and the topographic organisation of grid cells within anatomically overlapping modules of multiple spatial scales along the dorsoventral axis of MEC provide strong constraints and challenges to all three classes of models [18–20]. This has led to a variety of new models, each with a focus on one or more aspects of biophysical reality that might underlie the functionality of grid cell response. For example Couey et al. [21] have shown that a continuous attractor network with pure inhibition can support grid cell firing, with the caveat that there is sufficient excitatory input to the MEC, supposedly from hippocampus, to cause principal cells to fire. However, recent optogenetic and electrophysiological experiments have challenged this simple description [22], highlighting the importance of intrinsic nonlinear ionic currents and their distribution amongst the main cell types in MEC.

Stellate and pyramidal cells constitute the principal neurons in layer II of medial enthorinal cortex (MEC II) that exhibit grid cell firing. The former comprise approximately 70% of the total MEC II neural population and are believed to represent the majority of the grid cell population. Even before the discovery of grid cells stellate cells were thoroughly studied because of their rapid membrane time constants and resonant behaviour. Indeed, they are well known to support oscillations in the theta frequency range [23, 24]. Interestingly the frequency of these intrinsic oscillations decreases along the dorsal-ventral axis of MEC II [25], suggestive of a role in grid field spacing. The resonant properties of stellate cells have been directly linked to a high density of hyperpolarisation-activated cyclic-nucleotide-gated (HCN) channels [26], underlying the so-called \(I_{\text{h}}\) current. The time constant of both the fast and slow component of \(I_{\text{h}}\) is significantly faster for dorsal versus ventral stellate cells, providing a potential mechanism for the observed difference in the resonant frequency along the dorsal-ventral axis. However, perhaps of more importance is the fact the \(I_{\text{h}}\) current can cause a depolarising rebound spike following a hyperpolarising current injection. Given that stellate cells are mainly interconnected by inhibitory interneurons [21], this means that rebound can play an important role in shaping spatio-temporal network rhythms. The inclusion of important intrinsic biophysical properties into a network has been emphasised by several authors, such as Navratilova et al. [27] regarding the contribution of after-spike potentials of stellate cells to theta phase precession, and perhaps most notably by Hasselmo and colleagues for the inclusion of HCN channels [28–31]. This has culminated in a spiking network model of MEC that supports patterns whose periodicity is controlled by a neuronal resonance frequency arising from an \(I_{\text{h}}\) current [1]. The model includes many of the features present in the three classes described above, and is able to replicate behaviour from several experiments, including phase precession in response to a phasic medial septum input, theta cycle skipping, and the loss of the spatial periodicity of grid cell firing fields upon a reduction of input from the medial septum. Simulations of the model in one spatial dimension show that the spacing of grid firing fields can be controlled by manipulating the speed of the rebound response. We note that a change that affects the rebound response would also affect the resonant properties of the cell. In contrast to continuous attractor models that rely on the spatial scale of connectivity to control grid spacing, a change in rebound response provides a mechanism of local control via changes in the expression levels of HCN channels. This fascinating observation warrants a deeper mathematical analysis. In this paper we introduce a spiking network model that shares many of the features of the Hasselmo model [1], focussing on the formation of travelling waves that can arise in the absence of (medial septum) input. Importantly our bespoke model is built from piecewise linear and discontinuous elements that allows for an explicit analysis of the periodic waves that arise in an inhibitory network through rebound spiking. In particular our wave stability analysis demonstrates clearly that the maximum allowed period is strongly controlled by the properties of our model \(I_{\text{h}}\) current. This gives further credence to the hypothesis that HCN channels can control the properties of tissue level periodic waves that may underpin the spacing of grid cell firing in MEC.

In Sect. 2 we introduce our model of a network of stellate cells in MEC II. The single neuron model is a simple leaky integrate-and-fire (LIF) model with the inclusion of a synaptic current mediated by network firing events, and a model of \(I_{\text{h}}\) based on a single gating variable. For ease of mathematical analysis we focus on a continuum description, so that the model may be regarded as a spiking neural field. Simulations of the model in two spatial dimensions are used to highlight the genericity of spike rebound mediated spatio-temporal patterns. To uncover the systematic way in which a cellular \(I_{\text{h}}\) current can control the properties of patterns at the tissue level, we focus next on a one-dimensional version of the model without external input. By further developing a piecewise linear (pwl) caricature of the activation dynamics of a HCN channel we show in Sect. 3 how explicitly to construct the dispersion curve for periodic travelling waves. This gives the speed of a wave as a function of its period, and shows the possibility of a wide range of wave periods that could be selected. Next in Sect. 4 we exploit techniques from the analysis of nonsmooth systems to determine the Evans function for wave stability. Importantly an investigation of this function shows that the maximum allowed period can be controlled both by the overall conductance strength of the \(I_{\text{h}}\) current as well as the time-scale for activation of HCN channels. Finally in Sect. 5 we discuss natural extensions of our work, and its relevance to further models of grid cell firing.

2 The Model

The original work of Hasselmo [1] considered both simple resonant models as well as spiking nonlinear integrate-and-fire Izhikevich units to describe MEC stellate cells. The former incorporated a model for \(I_{\text{h}}\) using a single gating variable with a linear dynamics whilst the latter were tuned to capture the resonant and rebound spiking properties from experimental data. Synaptic interactions between cells in a discrete one-dimensional network were modelled with a simple voltage threshold process. These models were subsequently studied in more detail in [28], with a further focus on two-dimensional models and travelling waves. Here, we consider a biophysically realistic spiking model in a similar spirit to that of Hasselmo, but within a framework that will allow for a subsequent mathematical analysis. In particular, we consider a spiking model of stellate cells using a generalised LIF model that includes a nonlinear \(I_{\text{h}}\) current. Moreover, we opt for a description of synaptic interactions using an event-based scheme for modelling post-synaptic conductances.

We first consider a continuum description defined on the plane and introduce a voltage variable \(V=V(\boldsymbol {r},t)\), where \(\boldsymbol {r} \in \mathbb{R}^{2}\) and \(t \geq0\). The subthreshold LIF dynamics is given by

with a set of spike times at position r generated according to

Here \(\tau_{\text{R}}\) is a refractory time-scale. Upon reaching the threshold \(V_{\text{th}}\) the membrane potential is reset to the value \(V_{\text{r}} < V_{\text{th}}\). The infimum operation ensures that a firing time is determined by the first time that the voltage variable (at a fixed position) crosses threshold (from below, remembering the IF reset process) subject to refractoriness. To model the refractory process we hold the voltage variable at the reset value \(V_{\text{r}}\) for a duration \(\tau_{\text{R}}\) after a firing event. The left-hand side of (1) describes a membrane current with capacitance C. The first term on the right-hand side of (1) represents a leak with a constant conductance \(g_{l}\) (and we have set the leakage reversal potential to zero without loss of generality). The terms \(I_{\text{h}}\), \(I_{\text{syn}}\), and \(I_{\text{hd}}\) represent currents arising from HCN channels, synaptic input, and head-direction input, respectively. \(I_{\text{h}}\) is a slow inward current with a reversal potential \(V_{\text{h}}\) that is substantially above resting levels, but which requires hyperpolarisation to become active; that is, the activation curve is monotone decreasing in V. Furthermore, the \(I_{\text{h}}\) current does not inactivate, even with prolonged (minutes) hyperpolarisation. Thus it is often modelled with a single gating variable \(n_{\text{h}}\) such that \(I_{\text{h}} (\boldsymbol {r},t)= g_{\text{h}} n _{\text{h}} (\boldsymbol {r},t) (V_{\text{h}}-V (\boldsymbol {r},t))\), where

Here the shape for the activation function \(n_{\text{h},\infty}\) is the sigmoid:

and fits to experimental data give \(V_{1/2}\approx-10\mbox{ mV}\) (with respect to rest) and \(k\approx10\) [32, 33]. The time constant for activation and deactivation can vary from tens to hundreds of milliseconds [34]; however, here we fix \(\tau_{\text{h}}=\text{constant}\) for simplicity and ignore any detailed dependence on voltage. To model synaptic interactions we consider a simple effective anatomical model whereby stellate cells interact directly through inhibition. In reality inhibitory interactions are actually mediated by interneurons and there is no direct synaptic coupling between stellate cells. Introducing an overall strength of synaptic conductance \(g_{\text{syn}}\) we then write \(I_{\text{syn}}(\boldsymbol {r},t)=g_{\text{syn}} \psi(\boldsymbol {r},t)\), where

where the function W represents anatomical connectivity, \(\Omega \subseteq \mathbb{R}^{2}\) is the spatial domain, and the function E represents the shape of a post-synaptic response to a train of incoming spikes. We write this in the form

for a given temporal filter shape η with the property that \(\eta (t)=0\) for \(t<0\) (so that interactions are causal). For convenience we will work with normalised responses such that \(\int_{0}^{\infty} \mathrm {d}t \eta(t) = 1\). As a concrete choice for the function W we shall take a smoothed bump shape \(W(\boldsymbol {r},\boldsymbol {r}')=w(|\boldsymbol {r}-\boldsymbol {r}'|)\), with

Here \(w_{0}<0\) in accordance with the predominantly inhibitory interactions of MEC, σ controls the spatial scale of interaction, and β the steepness of the surround inhibition function as shown in Fig. 1. The model is completed with the choice of the synaptic filter η, which we shall take to be an α-function of the form

where \(\alpha^{-1}\) is the time-to-peak of the synapse, and H is the Heaviside step function. Note that we work in a regime where the model is excitable, as we do not include any background drive that would be able to make the single neuron model fire in the absence of synaptic coupling or head-direction input.

Connectivity function w in (7), with \(\sigma= 25\), \(\beta= 0.5\) and \(w_{0} = -10\)

An animal’s speed \(v=v(t)\) and direction of motion \(\varPhi=\varPhi(t)\) generates input to the MEC that is modelled by the head-direction current \(I_{\text{hd}}\). For example, this could be of the form \(I_{\text{hd}}(\boldsymbol {r},t)= \boldsymbol {v} \cdot \boldsymbol {r}_{\phi}\) where \(\boldsymbol {v} = v(\cos{\varPhi}, \sin{\varPhi})\) and \(\boldsymbol {r}_{\phi}= l(\cos\phi(\boldsymbol {r}), \sin\phi(\boldsymbol {r}))\) describes a head-direction preference for the orientation \(\phi(\boldsymbol {r})\) at position r [13]. Here l is a constant that sets the magnitude of the head-direction current. The hidden assumption here is that head direction matches the direction of motion. However, this is not necessarily true behaviourally, and head direction cells may not code for motion direction [35]. Nonetheless it is a common assumption in most grid cell models, and so we adopt it here too. Fuhs and Touretzky [12] have shown that choosing an anisotropic anatomical connectivity of the particular form \(W(\boldsymbol {r},\boldsymbol {r}') = w(|\boldsymbol {r}-\boldsymbol {r}_{\phi}-\boldsymbol {r}'|)\) can then induce a spatio-temporal network pattern to flow in accordance with the pattern of head-direction information generated when traversing an environment. For continuous attractor network models that can generate, via a Turing instability, static hexagonal patterns with regions of high activity at the nodes of a triangular lattice, the induced movement of these hot-spots over a given point in the tissue gives rise to grid-like firing patterns. In this case the spacing of the firing field is hard to change, as it is mainly fixed by the spatial scale of the chosen connectivity; however, the mechanism of inducing pattern flow is robust to how the tissue pattern is generated. Thus, given the established success of the Fuhs and Touretzky mechanism we will not focus on this here, and instead turn our attention to the formation of relevant tissue patterns and, in particular, how local control of firing field spacing may be effected.

To investigate the types of solution the spiking neural field model supports, we perform simulations over a two-dimensional square domain. Since the action of the head-direction current is merely to induce a flow of emergent patterns we restrict our attention to the case without such input, i.e. \(I_{\text{hd}}=0\). See Appendix A for details of our bespoke numerical scheme implemented on a GPU architecture, and Additional file 1 for C++/CUDA code. We observe three general classes of coherent behaviour that take the form of spatially periodic non-travelling structures (though which oscillate in time), travelling periodic waves, and lurching waves. The latter are also generically found in neural systems with rebound currents, such as in models of thalamic slices [36–38]. Unlike traditional smoothly propagating waves, which exhibit a stationary profile in a co-moving frame, lurching waves consist of patterns of activity in a localised region of the domain, which after some period of time, decay and an adjacent region of the domain becomes active. These waves appear to ‘lurch’ across the domain. Whilst interesting in their own right, we focus in this article on the analysis of smoothly propagating periodic waves, since these have been suggested by Hasselmo [1] to play a dominant role in the formation of grid-like firing patterns in MEC. We show an example of a non-travelling periodic structure in Fig. 2, at two different time points to illustrate that the pattern is not static, but oscillates in time. An example of a smoothly propagating travelling wave is shown in Fig. 3. The model also supports more exotic spatio-temporal structures, including hexagonal patterns, and for a movie showing a dynamic state with a hexagonal sub-structure see Additional file 2.

A simulation of spatially periodic non-travelling patterns in a two-dimensional spiking neural field model with an \(I_{\mathrm{h}}\) current, solved on a spatial grid of 1000 × 1000 points. Displayed is the voltage component across the entire network at \(t=7000\mbox{ ms}\) (left) and \(t=10\mbox{,}000\mbox{ ms}\) (right). The model supports periodic patterns of localised activity. Note that these patterns are not static, but oscillate in time. Parameters: \(C = 1\mbox{ }\upmu \mbox{Fcm}^{-2}\), \(\tau_{\text{h}}=400\mbox{ ms}\), \(V_{\text{h}}=40 \mbox{ mV}\), \(g_{l} = 0.25\mbox{ mS/cm}^{-2}\), \(g_{h} = 1\mbox{ mS/cm}^{-2}\), \(\tau_{\mathrm{R}} = 200\mbox{ ms}\), \(V_{\text{th}} = 14.5\mbox{ mV}\), \(V_{\text{r}} = 0\mbox{ mV}\), \(V_{1/2} = -10\mbox{ mV}\), \(k=10\), \(g_{\text{syn}} = 15\mbox{ mS/cm}^{-2}\), \(w_{0} = -10\), \(\sigma= 25\), \(\beta ^{-1}=0\), and \(\alpha^{-1} = 20\mbox{ ms}\). The choice of a long refractory time-scale in the model is useful for eliciting a single (rather than multiple) spike rebound response. Spatial domain \(\Omega= [-L,L] \times[-L,L]\) where \(L=10 \sigma\). See also the video in Additional file 2, showing the emergence of more exotic spatio-temporal structures, including hexagons

Periodic travelling wave solutions in the spiking neural field model, solved on a spatial grid of 1000 × 1000 points. Displayed is the voltage component across the entire network at \(t=7000\mbox{ ms}\) (left) and \(t=10\mbox{,}000\mbox{ ms}\) (right). Using different initial data, the spatially periodic pattern observed in Fig. 2 is replaced by a periodic travelling wave (moving downward in the figure). Parameters as in Fig. 2, with \(w_{0}=-0.1\)

3 Wave Construction

To understand more fully how \(I_{\text{h}}\) controls the emergent scale of periodic waves seen in the simulations of Sect. 2 we now turn to a one-dimensional version of the model defined on the infinite domain. As in Sect. 2, we consider \(I_{\text{hd}}=0\), in which case the model is isotropic and (5) reduces to

To reduce the model to a more mathematically convenient form we make two observations about the \(I_{\text{h}}\) current. The first is that \(V_{\text{h}}\) is typically larger than V, which suggests the approximation \(V_{\text{h}} - V \simeq V_{\text{h}}\). The second is that the nonlinear activation function \(n_{\text{h},\infty}\) can be approximated by a pwl function, as illustrated in Fig. 4. Here we match the slope at \(V=V_{1/2}\), and otherwise saturate the function to one or zero, so that

Red line: Nonlinear activation function for \(n_{\text{h}}\) with \(V_{1/2}=-10\mbox{ mV}\) and \(k=10\). Green line: Piecewise linear fit of \(n_{\text{h},\infty}\) given by Eq. (10)

Simulations of the model with the reduced form of the \(I_{\text{h}}\) are in qualitative agreement with simulations of the full nonlinear model, and indeed wherever tested the same repertoire of behaviour is always found. In both instances, travelling wave behaviour with a well-defined speed and period is easily initiated; Figs. 5 and 6 compare simulation results arising in the fully nonlinear and reduced pwl model.

Simulations of a one-dimensional spiking neural field model with an \(I_{\text{h}}\) current solved on a spatial grid of 5000 points. Left: simulations of the model with the full nonlinear dynamics for \(I_{\text{h}}\). Right: simulations of the model with the reduced pwl dynamics for \(I_{\text{h}}\) given by (10). Here we show the voltage traces at an illustrative set of locations throughout the system as a function of time. Note that each single spike is mediated by rebound in response to inhibitory synaptic input. Parameters are as in Fig. 2 with \(\beta=0.5\), \(V_{\text{th}} = 14\mbox{ mV}\). The activity propagates from bottom to top after an initial hyperpolarisation current of \(-30\mbox{ mV}\) is given to a set of neurons (in yellow) from \(t=1000\mbox{ ms}\) to \(t=1250\mbox{ ms}\)

Voltage traces observed at \(x=0\), when the periodic travelling wave is fully developed. Top: simulations of the model with the reduced pwl dynamics for \(I_{\text{h}}\) given by (10). Bottom: simulations of the model with the full nonlinear dynamics for \(I_{\text{h}}\). All parameters as in Fig. 5

If we introduce the vector \(X=(V,n_{\text{h}}) \in \mathbb{R}^{2}\) then we may write the reduced model in a more abstract setting, namely in terms of the pwl evolution equation that governs the system behaviour between one spiking event and the next:

In (11), A is a \(2 \times2\) matrix that is defined in a piecewise constant fashion, with dependence on the value of the voltage (in particular, which of the three domains detailed in equation (10) pertains), or whether the system is in the refractory state. In the latter case, A is defined according to

while for \(T^{m-1}(x) + \tau_{\text{R}} \leq t < T^{m}(x)\)

where we have assumed the ordering \(V_{-} < V_{\text{r}} < V_{+} < V_{\text{th}}\), introduced \(\tau=C/g_{\text{l}}\), and absorbed the factor \(V_{\text{h}}\) within \(g_{\text{h}}\). Similarly we define Ψ according to

and, for \(T^{m-1}(x) + \tau_{\text{R}} \leq t < T^{m}(x)\),

where

We highlight that in (12)–(16) we have introduced the subscripts \(\{R,0,-,+ \}\) to indicate the state of the system, namely whether it is refractory (labelled by R) or is not refractory and has a voltage in the range \((V_{-},V_{+})\) (labelled by 0), \((-\infty,V_{-}]\) (labelled by −), \([V_{+},\infty)\) (labelled by +).

3.1 Travelling Wave Analysis

We now seek travelling wave solutions of (11) of the form \(\widehat{X}(\xi,t)\), where \(\xi=t-x/c\) and c is the (constant) wave speed. In this case (11) transforms to

A stationary travelling wave \(\widehat{X}(\xi,t) = Q(\xi) = (V(\xi ),n_{\text{h}}(\xi))\) satisfies the travelling wave equation

In terms of firing events a periodic wave is described by \(T^{m}(x) = x/c + m \varDelta\), where Δ is the period of the wave such that \(Q(\xi+\varDelta) = Q(\xi)\). Substitution of this firing ansatz into (9) allows us to determine the function \(\widehat{\psi}(\xi ) = \psi(x,t) \vert _{t=T^{m}(x)}\) where \(\widehat{\Psi}(\xi )\) is obtained from (15) under the replacement of ψ by ψ̂. The function ψ̂ is easily determined as

and is Δ-periodic, and can therefore be expressed in terms of a Fourier series as

In (20) tildes denote the Fourier integral representation, such that for a given function \(a(x)\)

and we have made use of the result that \(c \varDelta\sum_{m} \mathrm {e}^{ikcm \varDelta} = 2 \pi\sum_{p} \delta(k-2 \pi p/(c \varDelta))\).

For the bump function (7) and the α-function (8) we have

Thus, given the decay properties of (22) as a function of k, the sum in (20) can be naturally truncated.

The formal solution to (18) can be constructed using variation of parameters as

where G is a matrix exponential given by

Here \(\mathcal{T}\) is a time-ordering operator \(\mathcal {T} A(t) A(s) = H(t -s)A(t) A(s) + H(s-t) A(s)A(t)\). In general the issue of time-ordering makes it very difficult to evaluate G. However, in our case A is piecewise constant and so we easily may break the solution up into parts distinguished by the label \(\mu\in\{R, 0, -,+\}\). In each case trajectories are given explicitly by (23) with \(G(\xi,\xi')=G(\xi-\xi')\) and \(G=G_{\mu}\) where \(G_{\mu}(\xi) = \exp(A_{\mu}\xi)\). A global trajectory may then be obtained by patching together solutions, denoted by \(Q_{\mu}\), from each domain. It is in this fashion that we now construct the shape of a periodic travelling wave in a self-consistent manner. Of use will be matrix exponential decomposition \(\mathrm {e}^{A t} = P \mathrm {e}^{\Lambda t} P^{-1}\), where \(\Lambda= \operatorname{diag} (\lambda^{+},\lambda^{-})\) are the eigenvalues of A with associated eigenvectors \(q^{\pm}= (1, (\lambda^{\pm}- A_{11})/A_{12}, )^{T}\). Here the eigenvalues of A are given explicitly by

Using (20) we may then write a domain specific trajectory for \(\mu\in\{0,+,-\}\) in the form

where

Here \(\Lambda_{\mu}= \operatorname{diag} (\lambda^{+}_{\mu},\lambda ^{-}_{\mu})\) with \(\lambda^{\pm}_{\mu}\) representing the eigenvalues of \(A_{\mu}\) and

When \(\mu=R\) we adopt an alternative strategy (since \(A_{R}\) is singular), remembering that when the system is refractory then \(V(\xi )\) is clamped at the value \(V=V_{\text{r}}\). In this case, we only have to consider the evolution of the gating variable \(n_{\text{h}}\), which is obtained from (3) and (10) to give

Now let us consider the form of a periodic wave which elicits a single spike for every period, much like the ones seen in Fig. 5. An example of such a travelling wave orbit in the \((V,n_{\text{h}})\) phase plane is shown in Fig. 7. The corresponding evolution of \(V(\xi)\) and \(n_{\text{h}}(\xi)\) is shown in Fig. 8.

Orbit of a periodic travelling wave. Parameters as in Fig. 5, with \(\varDelta= 450\), \(c = 0.0669\), \(n_{h}(0) = 0.3815\) and \(\xi_{1} = 225.4223\). The travelling wave starts at \(\xi=0\) where \(V=V_{r}\) is clamped and \(n_{h}\) evolves according to (29) until \(\xi= \tau_{\mathrm{R}}\) and the system is released from the refractory period (blue line). Then it evolves clockwise according to (26) with \(\mu= 0\) until \(V(\tau_{\mathrm{R}} + \xi_{1})=V_{+}\) when it switches (red line) to \(\mu=+\) (green line). The orbit ends when \(V=V_{\text{th}}\) and V is reset. The green horizontal line for \(V > V_{\text{th}}\) is not part of the solution. It is simply a marker for the spiking event (and the model does not generate an explicit shape for an action potential). Black dotted lines represent (from left to right) \(V=V_{-}\), \(V=V_{+}\) and \(V=V_{\text{th}}\)

Profile of the two components of the periodic travelling wave \(Q(\xi)\) defined by (26) and illustrated in Fig. 7. Solid lines correspond to \(V(\xi)\) (left-hand axis) and dotted ones to \(n_{h}(\xi)\) (right-hand axis). Colour-code and parameters as in Fig. 7. Dotted black lines indicate the values where the system changes dynamics

Given the translational invariance of the system we are free to choose a travelling wave origin such that \(\xi=0\) corresponds to the system immediately after firing. For a duration \(\tau_{\text{R}}\) it will then remain clamped at \(V_{\text{r}}\) with \(n_{\text{h}}\) evolving according to (29) with \(\xi_{0}=0\) (as shown in blue in Fig. 7 and Fig. 8). From here it then evolves according to (26) with \(\mu=0\), with initial data determined by \(Q_{R}(\xi_{0})=(V_{\text{r}},n_{\text{h}}(\tau_{\text{R}}))\), until \(V(\xi)\) reaches \(V_{\pm}\), after which we set \(\mu=-\) or \(\mu =+\) in (26) and select appropriate initial data for (26), depending on the value of V achieved first. For simplicity, and since this is reliably observed in numerical simulations for a wide range of parameters (red line in Fig. 7 and Fig. 8, though the argument is easily generalised), we assume \(V_{+}\) is the relevant choice. The final piece of the orbit is then obtained from (26) with \(\mu=+\) and initial data determined by \(Q_{+}(\xi_{0})=Q_{0}(\xi_{1}+\tau _{\text{R}})\) (green line in Fig. 7 and Fig. 8) and evolving the system until \(V(\xi)=V_{\text{th}}\). Denoting the time of flight for the trajectory such that \(V_{\text{r}} \leq V < V_{+}\) by \(\xi_{1}\), and that, for \(V_{+} \leq V < V_{\text{th}}\) by \(\xi_{2}\), the period of the orbit is given by \(\varDelta=\tau_{\text{R}} + \xi_{1} + \xi_{2}\). We note that the orbit is discontinuous because of the reset of the voltage variable after one period. Thus we have four unknowns \((n_{\text{h}}(0),c,\xi_{1},\varDelta)\) related by three nonlinear algebraic equations

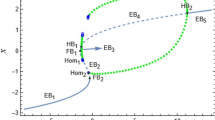

whose simultaneous solution determines the dispersion relationship for the wave speed as a function of the period \(c=c(\varDelta)\). An example of a dispersion curve constructed in this way is shown in Fig. 9. Here we see that a wide range of allowed wavelengths can co-exist (with differing speeds). Note that in Fig. 9 we also include solutions that visit the region of phase-space where \(V < V_{-}\), and these solutions typically only occur for small values of Δ. Our constructive theory does not provide a wave selection principle; however, by varying initial data in direct numerical simulations we were able to find solutions in excellent agreement with the theoretical predictions up to some maximal value of Δ. The determination of this value is the subject of the next section, where we show how to analyse wave stability.

Dispersion curve \(c=c(\varDelta)\) for a periodic travelling wave. Here c is the speed of a wave with period Δ. For small periods and on the upper branch waves are constructed that visit the domain where \(V< V_{-}\). Parameters as in Fig. 5. Solid lines represent periods where the system is stable while dashed lines represent where it is unstable; red dots represent the maximum stable period of the orbit, highlighting its increase with \(\tau_{h}\). Note that waves (on the lower branch of solutions) are stable for a large range of wave periods, and that the actual value of \((c,\varDelta)\) that would be observed in a simulation are dependent upon the choice of initial data

4 Wave Stability

To determine the stability of a periodic travelling wave we must not only treat perturbations of the state variables, but also the associated effects on the times of firing. Moreover, one must remember that because the model is nonsmooth (due to the switch at \(V=V_{\pm}\)) and discontinuous (because of reset whenever \(V=V_{\text{th}}\)) standard approaches for analysing smooth dynamical systems cannot be immediately applied. Nonetheless, as we show below, the wave stability can in fact be explicitly determined. We do this by constructing the so-called Evans function. This has a long history of use in the analysis of wave solutions to partial differential equations, dating back to the work of Evans on the stability of action potentials in the Hodgkin–Huxley model of a nerve fibre [39], has been extended to certain classes of firing rate neural field model [40], and is developed here for spiking neural fields.

We begin our analysis by exposing the spike-train that determines the synaptic drive in (9) by writing it in the equivalent form

where the firing times are defined according to the threshold condition \(V(x,T^{m}(x)) = V_{\text{th}}\). We may relate spike times to voltage threshold conditions using the result that for fixed x

and \(V_{t}\) denotes partial differentiation of V with respect to t. Hence

Consider again travelling wave solutions of the form \(V(x,t)=\widehat {V}(\xi,t)\), where \(\xi=t-x/c\). In this co-moving frame we can define a set of firing event functions \(\xi^{m}(t)\) according to the threshold condition \(\widehat{V}(\xi^{m}(t),t)=V_{\text{th}}\). These event times can be related to the co-moving voltage threshold condition using the result that, for fixed t,

Substitution into (33) and using \(\widehat{V}_{\xi}\simeq V_{t}\) close to a periodic orbit we find

Noting that, for a periodic wave \(\xi^{m}(t) = m \varDelta\), ψ̂ is independent of t and Eq. (35) recovers Eq. (19) as expected.

We now analyse the stability of such a periodic wave by perturbations such that \(\xi^{m}(t) = m \varDelta+ \delta\xi^{m}(t)\), with \(|\delta\xi ^{m}(t)| \ll1\). Writing the corresponding perturbation of \(\widehat {\psi}(\xi,t)\) as \(\widehat{\psi}(\xi,t) = \widehat{\psi}(\xi) + \delta\widehat{\psi}(\xi,t)\) we find

It remains to determine the relationship between \(\delta\xi^{m}(t)\) and the perturbations of the shape of the travelling wave. In Appendix B we show that we can relate \(\delta\xi^{m}(t)\) to the deviation in the voltage, denoted by \(\delta\widehat{V}(m \varDelta,t)\), via the simple relationship

Thus for solutions of the form \(\delta\widehat{V}(\xi,t) = \delta \widehat{V}(\xi) \mathrm {e}^{\lambda t}\), \(\delta\widehat{V}(\xi) = \delta\widehat{V}(\xi+\varDelta)\) we find \(\delta\widehat{\psi}(\xi,t) = \delta\widehat{\psi}(\xi;\lambda ) \mathrm {e}^{\lambda t}\), with \(\delta\widehat{\psi}(\xi;\lambda) =\delta\widehat{V}(0) f(\xi ;\lambda)\), and

Returning to the more abstract setting given by Eq. (11) we linearise around the travelling wave by setting \(\widehat{X}(\xi,t) = Q(\xi) + \delta\widehat{X}(\xi) \mathrm {e}^{\lambda t}\), with \(\delta \widehat{X}(\xi)=\delta\widehat{X}(\xi+\varDelta)\). This yields the variational equation

where \(A(\xi;\lambda) = A(Q(\xi)) -\lambda I_{2}\) (and we use the argument of A to emphasise that it depends on position along the periodic orbit). We may write the solution to (39) in much the same way as for the periodic orbit problem given by (18), namely with the use of a variation of parameters formula, matrix exponentials and (38):

Here \(G_{\mu}(\xi;\lambda)=\exp([A_{\mu}- \lambda I_{2} ] \xi)\) and

However, the evolution of perturbations through the switching manifolds \(V=V_{\pm}\), the firing threshold \(V=V_{\text{th}}\) and the release from the refractory state requires care, since in all these cases there is a jump in the Jacobian. The theory of nonsmooth dynamical systems gives a prescription for handling this using so-called saltation matrices dating back to the work of Aizerman and Gantmakher in the 1950s [41]. For a more recent perspective we recommend the paper by Leine et al. [42] and the book by di Bernardo et al. [43], particularly in engineering applications, and the paper by Coombes et al. [44] for applications in neuroscience. The \(2 \times2\) saltation matrices for handling switching, firing, and refractoriness are constructed in Appendix C and denoted \(K_{\text{switch}}\), \(K_{\text{fire}}\), and \(K_{\text{ref}}\), respectively. In essence they map perturbations through the region of nonsmooth behaviour to give \(\delta\widehat{X}(0_{+}) = K_{\text{fire}} \delta \widehat{X}(0)\), \(\delta\widehat{X}({\tau_{\text{R}}}_{+}) = \delta \widehat{X}({\tau_{\text{R}}})+ K_{\text{ref}} \delta\widehat {X}(0)\), and \(\delta\widehat{X}((\tau_{\text{R}}+\xi_{1})_{+}) = K_{\text{switch}} \delta\widehat{X}(\tau_{\text{R}}+\xi_{1})\). The saltation matrices are given explicitly by \(K_{\text{switch}} = I_{2}\) and

If we now introduce the function \(\mathcal{F}_{\mu}(\xi,\xi_{0};\lambda)\):

then Eq. (40) may be used to generate the perturbation after one period as \(\delta\widehat{X}(\varDelta) = \varGamma(\lambda,\varDelta) \delta\widehat{X}(0)\), where

Enforcing that perturbations be Δ-periodic (i.e. \(\delta\widehat{X}(\varDelta) = \delta\widehat{X}(0)\)) we obtain the spectral condition \(\mathcal{E}(\lambda, \varDelta) = 0\) where

We identify (45) as the Evans function for the periodic wave. To determine (43) in a computationally useful form we use a Fourier representation to represent (38) (cf. (20) from (19)) as \(f(\xi ;\lambda) = \sum_{p \in \mathbb{Z}} f_{p}(\lambda) \exp(- 2 \pi i p \xi /\varDelta)\) where

for \(\operatorname{Re} (\lambda+\alpha) >0\). Then in a similar way to the construction of (26) we find the useful representation for (43) as

where

The eigenvalues of the spectral problem can be practically constructed by considering the decomposition \(\lambda= \nu+ i \omega\) and simultaneously solving the pair of equations \(\mathcal{G}(\nu, \omega )=0\) and \(\mathcal{H}(\nu, \omega)=0\), where \(\mathcal{G}(\nu, \omega)=\operatorname{Re} \mathcal{E}(\nu+ i \omega, \varDelta)\) and \(\mathcal{H}(\nu, \omega)=\operatorname{Im} \mathcal{E}(\nu+ i \omega, \varDelta)\), subject to the constraint \(\nu+\alpha>0\). Figures 10 and 11 show the zero level sets of \(\mathcal {G}\) and \(\mathcal{H}\) in the \((\nu,\omega)\) plane for two different points on the dispersion curve of Fig. 9. The intercepts when \(\nu+\alpha>0\) provide the zeros of the Evans function and here highlight clearly that, for Δ sufficiently large, the zeros of the Evans function can cross to the right-hand complex plane signalling a wave instability.

Zeros of the Evans function (45) with \(\varDelta= 460\). These occur at the intersection (green dots) of \(\mathcal{G}(\nu, \omega)=0\) (red curve) and \(\mathcal{H}(\nu, \omega)=0\) (blue curve) where \(\mathcal{G}\) is the real part of \({\mathcal{E}}\) whereas \(\mathcal {H}\) is the imaginary part. Here we can see that all the eigenvalues, except the zero eigenvalue, have negative real part, so that the periodic wave is stable. Parameters as in Fig. 5

Zeros of the Evans function (45) with \(\varDelta= 470\). These occur at the intersection (green dots) of \(\mathcal{G}(\nu, \omega)=0\) (red curve) and \(\mathcal{H}(\nu, \omega)=0\) (blue curve) where \(\mathcal{G}\) is the real part of \({\mathcal{E}}\) whereas \(\mathcal{H}\) is the imaginary part. Here we see a complex conjugate pair of eigenvalues with positive real part, so that the periodic wave is unstable. Parameters as in Fig. 5

Figure 10 also shows that there is always a zero eigenvalue. It is simple to establish the persistence of this zero under parameter variation. Differentiating (18) with respect to ξ gives

From (38) and (19) we may establish that

Hence for \(\lambda=0\) we see that a solution to (39) is the eigenfunction

as expected from translation invariance of the system (so that a perturbation tangential to the travelling wave orbit is neutrally stable).

5 Discussion

This paper is motivated by recent work in computational neuroscience that has highlighted rebound firing as a mechanism for wave generation in spiking neural networks that can underlie the formation of grid cell firing fields in MEC [1]. We have presented a simple spiking model with inhibitory synaptic connections and an \(I_{\text{h}}\) current that can generate smoothly propagating activity waves via post-inhibitory rebound. These are qualitatively of the type observed in previous computational studies [36, 37], yet are amenable to an explicit mathematical analysis. This is possible because we have chosen to work with piecewise linear discontinuous models, and exploited methodologies from the theory of nonsmooth systems. In particular we have exploited the linearity of our model between events (for firing, switching, and release from a refractory state) to construct periodic solutions in a travelling wave frame. To assess the stability of these orbits we have treated the propagation of perturbations through event manifolds using saltation operators. Using this we have constructed dispersion curves showing a wide range of stable periods, with a maximum period controlled by the time-scale of the rebound current. This gives further credence to the idea that the change in grid field scale along the dorsal-ventral axis of the MEC is under local control by HCN channels.

A number of natural extensions of the work in this paper suggest themselves. We briefly outline them here, and in no particular order. For simplicity we have focussed on the analysis of waves in a homogeneous model with only one spatial dimension. The analysis of the corresponding travelling waves, with hexagonal structure, in two spatial dimensions is more challenging, though is an important requirement for a complete model of grid cell firing. Moreover, the assumption of homogeneity should be relaxed. In this regard it would be of interest to understand the effects of a heterogeneity in the time constants (for voltage response, synaptic time-scale, and the time-scale of the \(I_{\text{h}}\) current) on the properties of spatio-temporal patterns. Furthermore, it would be biologically more realistic to consider two sets of inhibitory interneurons, as in [1, 28, 30]. As well as depending on the \(I_{\text{h}}\) current, grid field spacing changes as a function of behavioural context. This is believed to occur through the activation of neuromodulators [32], and simple regulation of our \(I_{\text{h}}\) current model would allow a systematic study of this, even before considering the more important issue of structured input. The work in this paper has focussed on spontaneous pattern formation that occurs in the absence of such input. Given the importance of the head-direction input for driving grid cell firing fields it would be natural to consider a mathematical analysis for the case with \(I_{\text{hd}} \neq0\). For the standard Fuhs–Touretzky mechanism of inducing firing patterns this would further require the treatment of an anisotropic interaction kernel with a dependence on a head-direction preference map. One way to address this would be via a perturbation theory around the limiting case treated in this paper, and use this to calculate a tissue response parametrised by the animal’s speed and direction of motion. The same methodology would also allow an investigation of phase precession during a grid field traversal. Our simulations have also shown the possibility of ‘lurching waves’ and it would be interesting, at least from a mathematical perspective, to analyse their properties (speed and stability) and compare them to the co-existing smoothly propagating waves. It would further be pertinent in this case to pay closer attention to any possible nonsmooth bifurcations that could give rise to wave instabilities, such as grazing bifurcations. Finally we note that grid cells are grouped in discrete modules with common grid spacing and orientation [20]. It has recently been suggested that coupling between modules or via feedback loops to the hippocampus may help to suppress noise and underpin a robust code (with a large capacity) for the representation of position [45]. Another extension of the work in this paper would thus be to consider the dynamics of networks of interacting modules, paying closer attention to the details of MEC microcircuitry [46].

References

Hasselmo ME. Neuronal rebound spiking, resonance frequency and theta cycle skipping may contribute to grid cell firing in medial entorhinal cortex. Philos Trans R Soc Lond B, Biol Sci. 2013;369:20120523.

Fyhn M, Molden S, Witter MP, Moser EI, Moser M-B. Spatial representation in the entorhinal cortex. Science. 2004;305:1258–64.

Tolman EC. Cognitive maps in rats and men. Psychol Rev. 1948;55:189–208.

O’Keefe J, Nadal L. The hippocampus as a cognitive map. Oxford: Clarendon; 1978.

Hafting T, Fyhn M, Molden S, Moser M-B, Moser EI. Microstructure of a spatial map in the entorhinal cortex. Nature. 2005;436:801–6.

Brun VH, Solstad T, Kjelstrup KB, Fyhn M, Witter MP, Moser EI, Moser M-B. Progressive increase in grid scale from dorsal to ventral medial entorhinal cortex. Hippocampus. 2008;18:1200–12.

Rowland DC, Roudi Y, Moser M-B, Moser EI. Ten years of grid cells. Annu Rev Neurosci. 2016;39:19–40.

Giocomo LM, Moser M-B, Moser EI. Computational models of grid cells. Neuron. 2011;71(4):589–603.

Schmidt-Hieber C, Häusser M. How to build a grid cell. Philos Trans R Soc Lond B, Biol Sci. 2014;369:20120520.

Burgess N, Barry C, O’Keefe J. An oscillatory interference model of grid cell firing. Hippocampus. 2007;17:801–12.

Reifenstein ET, Kempter R, Schreiber S, Stemmler MB, Herz AVM. Grid cells in rat entorhinal cortex encode physical space with independent firing fields and phase precession at the single-trial level. Proc Natl Acad Sci USA. 2012;109:6301–6.

Fuhs MC, Touretzky DS. A spin glass model of path integration in rat medial entorhinal cortex. J Neurosci. 2006;26:4266–76.

Burak Y, Fiete IR. Accurate path integration in continuous attractor network models of grid cells. PLoS Comput Biol. 2009;5:e1000291.

McNaughton BL, Battaglia FP, Jensen O, Moser EI, Moser M-B. Path integration and the neural basis of the ‘cognitive map’. Nat Rev Neurosci. 2006;7:663–78.

Guanella A, Kiper D, Verschure P. A model of grid cells based on a twisted torus topology. Int J Neural Syst. 2007;17:231–40.

Mhatre H, Gorchetchnikov A, Grossberg S. Grid cell hexagonal patterns formed by fast self-organized learning within entorhinal cortex. Hippocampus. 2012;22:320–34.

Pilly PK, Grossberg S. Spiking neurons in a hierarchical self-organizing map model can learn to develop spatial and temporal properties of entorhinal grid cells and hippocampal place cells. PLoS ONE. 2013;8(4):e60599.

Pilly PK, Grossberg S. How does the modular organization of entorhinal grid cells develop? Front Human Neurosci. 2014;8:337.

Barry C, Hayman R, Burgess N, Jeffery KJ. Experience-dependent rescaling of entorhinal grids. Nat Neurosci. 2007;10:682–4.

Stensola H, Stensola T, Solstad T, Frøland K, Moser M-B, Moser EI. The entorhinal grid map is discretized. Nature. 2012;492:72–8.

Couey JJ, Witoelar A, Zhang S-J, Zheng K, Ye J, Dunn B, Czajkowski R, Moser M-B, Moser EI, Roudi Y, Witter MP. Recurrent inhibitory circuitry as a mechanism for grid formation. Nat Neurosci. 2013;16:318–24.

Buetfering C, Allen K, Monyer H. Parvalbumin interneurons provide grid cell-driven recurrent inhibition in the medial entorhinal cortex. Nat Neurosci. 2014;17:710–8.

Alonso A, Llinás RR. Subthreshold Na+-dependent theta-like rhythmicity in stellate cells of entorhinal cortex layer II. Nature. 1989;342:175–7.

Pastoll H, Ramsden HL, Nolan MF. Intrinsic electrophysiological properties of entorhinal cortex stellate cells and their contribution to grid cell firing fields. Front Neural Circuits. 2012;6:17.

Giocomo LM, Zilli EA, Fransén E, Hasselmo ME. Temporal frequency of subthreshold oscillations scales with entorhinal grid cell field spacing. Science. 2007;315:1719–22.

Nolan MF, Dudman JT, Dodson PD, Santoro B. HCN1 channels control resting and active integrative properties of stellate cells from layer II of the entorhinal cortex. J Neurosci. 2007;27:12440–51

Navratilova Z, Giocomo LM, Fellous J-M, Hasselmo ME, McNaughton BL. Phase precession and variable spatial scaling in a periodic attractor map model of medial entorhinal grid cells with realistic after-spike dynamics. Hippocampus. 2012;22:772–89.

Hasselmo ME, Shay CF. Grid cell firing patterns may arise from feedback interaction between intrinsic rebound spiking and transverse traveling waves with multiple heading angles. Front Syst Neurosci. 2014;8:201.

Tsuno Y, Chapman GW, Hasselmo ME. Rebound spiking properties of mouse medial entorhinal cortex neurons in vivo. Eur J Neurosci. 2015;42:2974–84.

Shay CF, Ferrante M, Chapman GW, Hasselmo ME. Rebound spiking in layer II medial entorhinal cortex stellate cells: possible mechanism of grid cell function. Neurobiol Learn Mem. 2015;129:83–98.

Ferrante M, Shay CF, Tsuno Y, Chapman GW, Hasselmo ME. Post-inhibitory rebound spikes in rat medial entorhinal layer II/III principal cells: in vivo, in vitro, and computational modeling characterization. Cereb Cortex. 2017;27(3):2111–25.

Heys JG, Hasselmo ME. Neuromodulation of \(I_{h}\) in layer II medial entorhinal cortex stellate cells: a voltage clamp study. J Neurosci. 2012;32:9066–72.

Shay CF, Boardman IS, James NM, Hasselmo ME. Voltage dependence of subthreshold resonance frequency in layer II of medial entorhinal cortex. Hippocampus. 2012;22:1733–49.

Giocomo LM, Hasselmo ME. Time constants of h current in layer II stellate cells differ along the dorsal to ventral axis of medial entorhinal cortex. J Neurosci. 2008;28:9414–25.

Raudies F, Brandon MP, Chapman GW, Hasselmo ME. Head direction is coded more strongly than movement direction in a population of entorhinal neurons. Brain Res. 2015;1621:355–67.

Rinzel J, Terman D, Wang X-J, Ermentrout B. Propagating activity patterns in large-scale inhibitory neuronal networks. Science. 1998;279:1351–5.

Coombes S. Dynamics of synaptically coupled integrate-and-fire-or-burst neurons. Phys Rev E. 2003;67:041910.

Wasylenko TM, Cisternas JE, Laing CR, Kevrekidis IG. Bifurcations of lurching waves in a thalamic neuronal network. Biol Cybern. 2010;103:447–62.

Evans J. Nerve axon equations: IV the stable and unstable impulse. Indiana Univ Math J. 1975;24:1169–90.

Coombes S, Owen MR. Evans functions for integral neural field equations with Heaviside firing rate function. SIAM J Appl Dyn Syst. 2004;34:574–600.

Aizerman MA, Gantmakher FR. On the stability of periodic motions. J Appl Math Mech. 1958;22:1065–78. (Translated from Russian.)

Leine RI, Van Campen DH, Van de Vrande BL. Bifurcations in nonlinear discontinuous systems. Nonlinear Dyn. 2000;23:105–64.

di Bernardo M, Budd C, Champneys AR, Kowalczyk P. Piecewise-smooth dynamical systems: theory and applications. London: Springer; 2008. (Applied mathematical sciences).

Coombes S, Thul R, Wedgwood KCA. Nonsmooth dynamics in spiking neuron models. Physica D. 2012;241:2042–57.

Sreenivasan S, Fiete I. Grid cells generate an analog error-correcting code for singularly precise neural computation. Nat Neurosci. 2011;14:1330–7.

Burgalossi A, Herfst L, von Heimendahl M, Förste H, Haskic K, Schmidt M, Brecht M. Microcircuits of functionally identified neurons in the rat medial entorhinal cortex. Neuron. 2011;70:773–86.

Acknowledgements

SC was supported by the European Commission through the FP7 Marie Curie Initial Training Network 289146, NETT: Neural Engineering Transformative Technologies. We gratefully acknowledge the support of NVIDIA Corporation with the donation of the Tesla K40 GPU used for this research. KCAW was generously supported by the Wellcome Trust Institutional Strategic Support Award (WT105618MA). MBQ gratefully acknowledge the support of CONACyT and The University of Nottingham during her PhD studies. We thank the anonymous referees for their helpful comments on our manuscript.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

MBQ, KCAW, RDO and SC contributed equally. All authors read and approved the final manuscript.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic Supplementary Material

Below are the links to the electronic supplementary material.

13408_2017_51_MOESM1_ESM.zip

Code for 2D simulations. Code written in C++/CUDA programming language. Equipment requirements: Nvidia CUDA drivers and Nvidia graphics card with compute capability ≥3.5. The Armadillo C++ package must be installed. Run commands: $ cmake . $ make $./SpatialNavigationCUDA. (zip)

13408_2017_51_MOESM2_ESM.m4v

Movie for 2D patterned state evolution. A movie of the voltage component in a planar spiking neural field model with an \(I_{\mathrm{h}}\) current. Here we see the emergence of a rich patterned state. Parameters as in Fig. 2 and the system is initialised with a localised region of hyperpolarisation. Activity spreads from this initial state generating spatio-temporal patterns that interact in a complicated fashion to generate hexagonal structures. (m4v)

Appendices

Appendix A: Numerical Scheme

Here, we describe the numerical scheme that we have developed to evolve the spiking neural field model. Given the large computational overheads for simulating the latter we have focussed on implementing the algorithm on a GPU system. In essence, we exploit the piecewise linear nature of the dynamics to obtain trajectories in closed form and then use a root-finding scheme to find the timings of events (switching, firing or refractory). The dynamics is updated at an event (generating synaptic currents and resetting at firing events, changing the gating dynamics at switching events, and releasing from reset at refractory events), and the process repeated. For clarity we describe our approach for two spatial dimensions as it is easily adapted to treat just one spatial dimension.

1.1 A.1 Algorithm

We solve the system over a 2D discretised grid of size \(L \times L\) with N (numerical) cells in each spatial dimension and enforce periodic boundary conditions. Cells are equi-spaced with a spatial separation of \(\varDelta x = 2L/N\) and the state of the cells in the network are evaluated over \(T+1\) time steps, discretising time as \(t_{i} = i \Delta t\), \(i=0,\dots,T\). To evaluate these states, we analytically integrate (11) to provide a closed-form expression for the trajectory of the system. Our closed-form expression allows us, for cell j, to write its state at time \(t_{i+1}\) as a function of its state at time \(t_{i}\):

where \(\varphi_{h}\) is the evolution operator acting over a time h. Note that this expression assumes that no firing events have occurred between \(t_{i}\) and \(t_{i+1}\), but can account for switching events, as detailed below. We shall describe later how the algorithm handles firing events.

For convenience, we shall divide the state space of an individual cell into three regions, based on the instantaneous value of V: Region I, with \(V\leq V_{-}\); Region II, with \(V_{-}< V\leq V_{+}\); and Region III, with \(V>V_{+}\). In addition, we define Region IV to be that where the cell is in the refractory state (recall that a cell is in the refractory state at time t if \(T^{m-1}\geq t+\tau_{\mathrm{R}}\), where \(T^{m-1}< t\) is the last spiking time of that cell). In each of these regions, the equation governing the dynamics for the gating variable \(n_{\mathrm{h}}\) is different, and this needs to be reflected in our algorithm.

At a given time t, each cell in the network is in precisely one of the regions outlined in the preceding paragraph. We store a global array that tracks which region each of the cells is in and use this to select the appropriate equation to integrate, based on those presented in (13). Over an interval Δt, the region of a subset of the cells may change as the local voltage variable, V, crosses switching manifolds. Note that the continuity of trajectories restricts which transitions between regions are possible (for example, a transition between Region I and Region III is not permitted). Our evolution operator \(\varphi_{h}\) must account for this.

We identify where switching events occur by comparing the region of all of the cells before and after the operator \(\varphi_{\Delta t}\) is applied. Where switching events have occurred, we locate the switching time for cell j by searching for roots of the transcendental equation \(X_{j}^{1}(s) - V_{x} = 0\), \(t_{i} \leq s < t_{i+1}\) using Newton’s method, where the superscript denotes that we only consider the V component of the state vector \(X_{j}\) and \(V_{x}\) is the switching manifold that has been crossed. We remark that the Fréchet derivatives for use in Newton’s method are obtainable in closed form, and so the application of Newton’s method does not rely on numerical finite difference approximations for these derivatives.

After the switching time, s, has been found, the state of the cell is updated via \(X_{j}(s) = \varphi_{\Delta s}(t_{i},X_{j}(t_{i}))\), where \(\Delta s = s-t_{i}\), according to the governing equation for that region. Following this, the regional variable is updated to reflect the fact that the cell has transitioned to a new region. Finally, the remainder of the update step is taken, according to \(X_{j}(t_{i+1}) = \varphi_{\Delta s^{*}} (s,X_{j}(s))\), where \(\Delta s^{*} = t_{i+1}-s\), by integrating the governing equation for new region. It is possible that between times s and \(t_{i+1}\), the cell passes through other switching manifolds, in which case, the procedure for identifying switching times and updating the region variable is repeated as many times as necessary.

Cells in Region IV are in the refractory state, governed by (12) and (14), in which the voltage variable is held fixed at \(V=V_{\mathrm{r}}\) for a total duration of length \(\tau_{\mathrm{R}}\). To account for this, we introduce for each cell, a counter that tracks how much time a cell has spent in the refractory period. Following a firing event, the spiking cell enters the refractory state, whereupon this counter is reset to zero (and the cell’s region is changed to Region IV). The cell will remain in the refractory state until the counter reaches \(\tau_{\mathrm{R}}\). At this time, the region variable for that cell is updated to the appropriate value, based on the location of \(V_{\mathrm{r}}\) relative to \(V_{-}\) and \(V_{+}\). For computational efficiency, we can take advantage of the fact that V is unchanging in the refractory period by replacing the voltage dynamics with \(\dot{V}=1\), thus letting V implement our refractory time counter in Region IV. Thus, if the cell spikes at time \(T^{m}\), we have \(V(T^{m})=0\) upon entering Region IV. To ensure continuity of solutions upon exiting the refractory period, we must also ensure to set \(V(T^{m}+\tau_{\mathrm{R}}) = V_{\mathrm{r}}\).

Since cells are independent of one another outside of firing times, this above computation to update the state of the system can be performed in parallel across the GPU architecture in the absence of firing events. Where firing events occur, we must ensure that we correctly update the state of the network at the firing time to reflect the coupling in the network. As for the switching times, we determine firing times by searching for roots of the transcendental equation \(X_{j}^{1}(s) - V_{\text{th}} = 0\), \(t_{i} \leq s < t_{i+1}\) using Newton’s method. We then amalgamate all firing events between times \(t_{i}\) and \(t_{i+1}\) and find the minimum firing time, \(t^{*}\), of this list. The states of all cells are updated to this time from \(t_{i}\):

where \(\Delta t^{*} = t^{*}-t_{i}\) and the superscript denotes that this corresponds to the state of the network in the limit as t approaches \(t^{*}\) from the left. Following this, the reset conditions are applied across the entire domain. Our approach allows for simultaneous firing events to occur by also applying reset conditions for all events that occurred with the time interval \([t^{*},t^{*}+\varepsilon)\), where ε is a small positive real number. After the reset conditions have been applied, the state of the network is updated again as

where \(\Delta t^{*}_{c} = t_{i+1} - t^{*}\) and the superscript denotes that the state is evaluated after the reset conditions have been applied. Note that during the completion of the timestep, we must again check to see if other cells have reached threshold, and apply reset conditions as necessary. At the completion of a timestep, the state of the network is saved and the state at the next time step is computed.

We note that a further improvement in computational efficiency can be achieved in the limit of high gain for the kernel function (7). Namely in the limit \(\beta\rightarrow\infty\) the bump function becomes a Top-hat function:

Thus, cells are only coupled with a single strength \(w_{0}\), over a finite distance, σ, and we need only consider the behaviour of other cells within this distance when applying reset conditions.

1.2 A.2 Implementation

To take full advantage of the parallel capabilities of GPUs, we must sensibly organise the processes associated with simulating the network through the construction of appropriate kernels to best to perform tasks in parallel. In addition to this, the memory associated with state variables must be managed appropriately given that memory access can present a significant bottleneck during GPU-based computations.

As discussed in the preceding section, the evolution operator \(\varphi _{h}\) has to account for the differing functional forms of the governing equations as V crosses thresholds. This is done by using Newton’s method to find the times of any crossings and then by ‘patching’ together solution orbits on either side of the threshold crossing time. Associated with Newton’s method is a tolerance for finding the crossing times, which must be specified along with other parameters. In our implementation, we take advantage of the double precision capability of our GPU. Since we are using closed-form solutions for the orbits of our system, the only source of numerical error other than machine error arises due to the tolerance selected when computing the threshold crossing times for V. In all of our simulations, we prescribe this tolerance to be 10−10 so that we have precise specification of these times.

During an update step, there may be multiple cells that reach the firing threshold at differing times. To preserve the correct dynamics in spite of this, we need to update the state of the network to the minimum of this set of firing times. To identify this, we define a variable that stores the minimum firing time across the network, which is updated by the subset of spiking cells as firing events are found. Without intervention, this procedure can lead to race conditions, in which one spiking cell will query the stored value of the minimum firing time (to compare with its own), whilst another spiking cell is replacing that same value. Race conditions mean that we cannot guarantee that the value stored in our firing time variable truly represents the minimum over all firing events. To address this problem, we use atomic events, which allow only one cell to read from and write to the stored firing time variable at once. In this way, we are guaranteed to find the true minimum across the set of firing times.

In Algorithms 1 and 2, we detail how to evolve the dynamics of the 2D network. Note that all of the for loops, and the use of \(\varphi _{h}\) to update the state of the network are performed in parallel using the GPU. The full code for our simulations, written in the C++/CUDA programming language, is available in the supplementary material.

Appendix B: Perturbations at Switching Conditions

The model undergoes switching in its dynamics as the voltage variable passes through the values V+, \(V_{-}\), and \(V_{\text{th}}\). With the introduction of a set of indicator functions \(h(X(\xi,t);\mu ) = V(\xi,t)-V_{\mu}\), where \(\mu\in\{+,-,\text{th} \}\) we can define the travelling wave coordinate values at which these switching events occur according to \(h(X(\xi,t);\mu) = 0\). Now suppose that we have two trajectories: an unperturbed trajectory \(X(\xi,t)=(V(\xi ,t),n_{\text{h}}(\xi,t))\) and a perturbed trajectory \(\widetilde {X}(\xi,t)\) such that \(\delta{X}(\xi,t) = \widetilde{X}(\xi ,t)-X(\xi,t)\), with δX small. Moreover, let us consider the unperturbed trajectory to pass through the switching manifold when \(\xi = \xi^{m}(t)\), \(m \in \mathbb{Z}\). Similarly we shall consider the perturbed trajectory to switch when \(\xi= \widetilde{\xi}^{m}(t) = {\xi}^{m}(t) +\delta\xi^{m}(t)\). The indicator function for the perturbed trajectory may be Taylor expanded as (suppressing the dependence on μ for clarity):

Here we have introduced the notation \(X({\xi}^{m}_{\pm},t) = \lim_{\epsilon\searrow0} X({\xi}^{m} \pm\epsilon,t)\), to make sure that the partial derivative in ξ is well defined. Using the fact that \(h(\widetilde{X}(\widetilde{\xi }^{m},t))=0=h({X}({\xi}^{m},t))\) we obtain

Using the result that \(\nabla_{X} h(X;\mu) = (\partial_{V},\partial _{n_{\text{h}}}) (V-V_{\mu})=(1,0)\) the above can be re-arranged to give the perturbation in the switching coordinate in terms of the difference between the perturbed and unperturbed trajectories as

Appendix C: Saltation Matrices

Saltation matrices allow us to handle any jumps in the system (or its linearisation) when it changes from one dynamical regime to another. As well as occurring at the switching manifolds this also happens when the voltage is unclamped and released from the refractory state. Using the notation of Appendix B let us first consider the deviation between the two trajectories at a switching event defined by \(\xi=\xi _{\text{s}}\), with a set of perturbed switching events at \(\xi=\xi _{\text{s}} + \delta\xi\), as

If \(\delta\xi>0\) then the unperturbed trajectory will already have transitioned through the switch (from below), in which case the two trajectories are governed by different dynamics. A similar argument holds for \(\delta\xi<0\). Thus we may write

We may write the above in matrix form as

or equivalently as \(\delta X(\xi_{\text{s}}+\delta\xi,t)=K(\xi_{\text{s}}) \delta X(\xi_{\text{s}},t)\) with

Now since the voltage is clamped immediately after a firing event (so that \({V_{\xi}({\xi_{\text{s}}}_{+},t)}=0\)) and the dynamics for \(n_{\text{h}}\) jumps (since it depends on V which is discontinuously reset from \(V_{\text{th}}\) to \(V_{\text{r}}\)) then the saltation matrix for firing is given by

At a switching event whenever \(V=V_{\pm}\) we note that the voltage and gating dynamics are both continuous. Thus the saltation matrix for switching is given simply by \(K_{\text{switch}}(\xi_{\text{s}})=I_{2}\), namely there is no effect.

The use of saltation matrices to propagate perturbations through the refractory state is a little more subtle than through switching and firing events, since the former occur over a finite time-scale \(\tau_{\text{R}}\) whilst the latter are instantaneous. In this case the perturbation δξ at a firing event \(\xi=\xi_{\text{f}}\) is propagated for a time \(\tau_{\text{R}}\) before a new dynamical regime is encountered. From (55) \(\delta\xi= - \delta V(\xi_{\text{f}},t)/V_{\xi}({\xi_{\text{f}}}_{-},t)\). Setting \(\xi_{\text{s}}=\xi_{\text{f}}+\tau_{\text{R}}\) and combining the above with (57) gives

Hence we see that \(\delta X({\xi_{\text{s}}}_{+},t) = \delta X(\xi_{\text{s}},t) + K_{\text{ref}}(\xi_{\text{s}}) \delta X(\xi_{\text{f}},t)\) where

Here we have used the fact that \({V_{\xi}({\xi_{\text{s}}}_{-},t)}=0\) (since the system is in its refractory state), and that the dynamics for \(n_{\text{h}}\) is continuous at \(\xi=\xi_{\text{s}}\).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bonilla-Quintana, M., Wedgwood, K.C.A., O’Dea, R.D. et al. An Analysis of Waves Underlying Grid Cell Firing in the Medial Enthorinal Cortex. J. Math. Neurosc. 7, 9 (2017). https://doi.org/10.1186/s13408-017-0051-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13408-017-0051-7