Abstract

Background

Alexithymia, a personality trait characterized by difficulties interpreting one’s own emotional states, is commonly elevated in autistic adults, and a growing body of literature suggests that this trait underlies a number of cognitive and emotional differences previously attributed to autism, such as difficulties in facial emotion recognition and reduced empathy. Although questionnaires such as the twenty-item Toronto Alexithymia Scale (TAS-20) are frequently used to measure alexithymia in the autistic population, few studies have attempted to determine the psychometric properties of these questionnaires in autistic adults, including whether differential item functioning (I-DIF) exists between autistic and general population adults.

Methods

We conducted an in-depth psychometric analysis of the TAS-20 in a large sample of 743 verbal autistic adults recruited from the Simons Foundation SPARK participant pool and 721 general population controls enrolled in a large international psychological study (the Human Penguin Project). The factor structure of the TAS-20 was examined using confirmatory factor analysis, and item response theory was used to further refine the scale based on local model misfit and I-DIF between the groups. Correlations between alexithymia and other clinical outcomes such as autistic traits, anxiety, and quality of life were used to assess the nomological validity of the revised alexithymia scale in the SPARK sample.

Results

The TAS-20 did not exhibit adequate global model fit in either the autistic or general population samples. Empirically driven item reduction was undertaken, resulting in an eight-item unidimensional scale (TAS-8) with sound psychometric properties and practically ignorable I-DIF between diagnostic groups. Correlational analyses indicated that TAS-8 scores meaningfully predict autistic trait levels, anxiety and depression symptoms, and quality of life, even after controlling for trait neuroticism.

Limitations

Limitations of the current study include a sample of autistic adults that was overwhelmingly female, later-diagnosed, and well-educated; clinical and control groups drawn from different studies with variable measures; and an inability to test several other important psychometric characteristics of the TAS-8, including sensitivity to change and I-DIF across multiple administrations.

Conclusions

These results indicate the potential of the TAS-8 as a psychometrically robust tool to measure alexithymia in both autistic and non-autistic adults. A free online score calculator has been created to facilitate the use of norm-referenced TAS-8 latent trait scores in research applications (available at http://asdmeasures.shinyapps.io/TAS8_Score).

Background

Alexithymia is a subclinical construct characterized by difficulties in identifying and describing one’s own emotional state [1, 2]. Individuals scoring high on measures of alexithymia exhibit difficulties recognizing and labeling their internal emotional states, discriminating between different emotions of the same affective valence, and describing and communicating their emotional states to others. These individuals also tend to exhibit a reduction in imaginal processes and a stimulus-bound, externally oriented style of thinking (i.e., “concrete thinking”). Alexithymia is not itself considered a psychiatric diagnosis; rather, the condition can better be described as a dimensional personality trait that is expressed to varying degrees in the general population and associated with a host of medical, psychiatric, and psychosomatic conditions [2,3,4,5,6,7,8,9,10,11,12,13,14]. Although there is taxometric evidence to suggest that alexithymia is a dimensional rather than categorical construct [15,16,17], researchers frequently categorize a portion of individuals as having “high alexithymia” based on questionnaire scores above a certain threshold, with upwards of 10% of the general population exceeding these thresholds [18,19,20]. Over the last 5 decades, a large body of research has emerged to suggest that alexithymia is a transdiagnostic predictor of important clinical outcomes, such as the presence of psychiatric and psychosomatic disorders, suicidal ideation and behavior, non-suicidal self-injury, risky drinking, and reduced response to various medical and psychotherapeutic treatments [21,22,23,24,25,26].

Alexithymia is a construct of particular interest in research on autism spectrum disorder (hereafter “autism”), a condition frequently associated with difficulties in processing, recognizing, communicating, and regulating emotions [27,28,29,30,31,32]. A recent meta-analysis of published studies identified large differences between autistic adolescents/adults and neurotypical controls on self-reported alexithymia as measured by the Toronto Alexithymia Scale (TAS [2, 33, 34]), with an estimated 49.93% of autistic individuals exceeding cutoffs for “high alexithymia” on the twenty-item TAS (TAS-20), compared to only 4.89% of controls [3]. Alexithymia has also been suggested to be part of the “Broader Autism Phenotype” [35,36,37], the cluster of personality characteristics observed in parents of autistic children and other individuals with high levels of subclinical autistic traits [38]. Along with verbal IQ, self-reported alexithymia is one of the stronger predictors of task-based emotion processing ability in the autistic population [29], and a number of studies measuring both alexithymia and core autism symptoms have concluded that alexithymia accounts for some or all of the emotion-processing differences associated with the categorical diagnosis of autism, such as impaired facial emotion recognition and differences in empathetic responses [39,40,41,42,43,44,45,46,47,48,49,50,51,52]. Within the autistic population, alexithymia is also a meaningful predictor of the severity of co-occurring mental health conditions, showing relationships with symptoms of depression, general anxiety, social anxiety, non-suicidal self-injury, and suicidality [53,54,55,56,57,58,59,60].

Despite the impressive body of literature on alexithymia in autistic individuals and its relationships with other constructs, there has been surprisingly little investigation into the measurement properties of alexithymia measures in the autistic population [61]. One small study by Berthoz and Hill [62] addressed the validity of two common alexithymia scales (the TAS-20 and Bermond–Vorst Alexithymia Questionnaire-Form B [BVAQ-B] [63]) in a sample of 27 autistic adults and 35 neurotypical controls. In this small sample, the investigators found that autistic adults adequately comprehended the content of the alexithymia questionnaires, also noting high correlations between the two measures in both diagnostic groups. A subset of the sample also completed the same forms 4–12 months later, and test–retest reliability coefficients for both the TAS-20 and BVAQ-B in autistic adults were deemed adequate (test–retest Pearson r = 0.92 and 0.81 for the TAS-20 and BVAQ-B total scores, respectively, with all subscale rs > 0.62). The internal consistency of the TAS-20 and its three subscales has also been reported in a sample of 27 autistic adults by Samson et al. [64], who reported adequate reliability for the TAS-20 total score (α = 0.84) and for the “difficulty identifying feelings” (DIF; α = 0.76) and “difficulty describing feelings” (DDF; α = 0.81) subscales, but subpar reliability for the TAS-20 “externally oriented thinking” (EOT) subscale (α = 0.65). Additional studies have also replicated the high correlations between TAS-20 and BVAQ scores in autistic adults [42] and demonstrated the TAS-20 total score and combined DIF/DDF subscales to be reliable in samples of cognitively able autistic adolescents [51, 57]. Nevertheless, we are unaware of any study to date systematically investigating the psychometric properties of the TAS-20 or any other alexithymia measure in autistic individuals using large-sample latent variable modeling techniques.

Given the prominence of the TAS-20 as the primary alexithymia measure employed in autism literature [3, 29, 61], the remainder of this paper will focus specifically on this scale. Although the TAS-20 is extensively used in research on alexithymia in a number of clinical and non-clinical populations [2], a number of psychometric concerns have been raised about the measure’s factor structure, reliability, utility in specific populations, and confounding by general psychological distress [2, 65,66,67,68,69,70,71]. In particular, the original three-factor structure of the TAS-20 (consisting of DIF, DDF, and EOT) often fails to achieve adequate model fit, although the use of a bifactor structure and/or removal of reverse-coded items may alleviate this issue [2, 66, 71]. Most of the psychometric problems associated with the TAS-20 are driven by the EOT subscale, which often exhibits subpar internal consistency (including in the autistic sample reported by Samson et al. [64]), contains several items that relate poorly to the overall construct, and seems to be particularly problematic when the scale is used in samples of children and adolescents [2, 65, 67, 68, 72].

Another issue raised in the literature is the relatively high correlation between TAS-20 scores and trait neuroticism/general psychological distress [2, 69, 70]. Although the creators of the TAS-20 have argued that the relationship between alexithymia and neuroticism is in line with theoretical predictions [2], interview measures of alexithymia such as the Toronto Structured Interview for Alexithymia (TSIA [73]) do not correlate highly with neuroticism, potentially indicating that the previously observed correlation between TAS-20 scores and neuroticism reflects a response bias on self-report items rather than a true relationship between neuroticism and the alexithymia construct [74, 75]. Regardless of the true nature of this relationship, a high correlation between the TAS-20 and neuroticism remains problematic, as a sizable portion of the ability of the TAS-20 score to predict various clinical outcomes may be driven by neuroticism, which is itself a strong predictor of a number of different psychopathologies [76,77,78,79]. Notably, given the paucity of alexithymia measurement studies in samples of autistic individuals, no study to date has determined whether the TAS-20 continues to exhibit these same measurement issues in the autistic population.

Another major psychometric issue that has yet to be addressed in the alexithymia literature is the comparability of item responses between autistic and neurotypical respondents. Differential item functioning (referred to here as “item DIF” [I-DIF] to avoid confusion with the DIF TAS-20 subscale) is often present when comparing questionnaire scores between autistic and non-autistic individuals [80,81,82], indicating differences in the ways item responses relate to underlying traits (i.e., certain response options may be more easily endorsed at lower trait levels in one group). In cases where I-DIF is present, an autistic individual and a neurotypical individual with the same “true” alexithymia level could systematically differ in their observed scores, resulting in incorrect conclusions about the rank order of alexithymia scores in a given sample. Moreover, I-DIF analyses test whether differences in observed scores between multiple groups (e.g., autistic and neurotypical adults) can be explained solely by group differences on the latent trait of interest or whether some trait-irrelevant factor is systematically biasing item scores in one direction or the other for a specific group. I-DIF is important to consider when comparing test scores between groups, as it has the potential to obscure the magnitude of existing group differences, either creating artifactual group differences when none exist or masking small but meaningful differences between two groups [83, 84].

Although the large differences between autistic and neurotypical individuals on measures of alexithymia are unlikely to be entirely due to I-DIF, it remains possible that I-DIF may substantially bias between-group effect sizes in either direction. Furthermore, previous investigations of measurement invariance of the TAS-20 between general population samples and clinical samples of psychiatric patients have often only found evidence for partial invariance across groups [2], suggesting that I-DIF likely exists between autistic and non-autistic adults on at least some of the TAS-20 items. I-DIF may also exist between specific subgroups of the autistic population (e.g., based on age, sex, education level, or presence of comorbidities), and explicit testing of this psychometric property is necessary to determine whether a given measure can be considered equivalent across multiple sociodemographic categories. Notably, while the I-DIF null hypothesis of complete equivalence of all parameters between groups is always false at the population level [85], the effects of I-DIF may be small enough to be practically ignorable, allowing for reasonably accurate between-group comparisons [86, 87]. Thus, an important step of I-DIF analysis is the calculation of effect sizes, which help to determine whether the observed I-DIF is large enough to bias item or scale scores to a practically meaningful extent.

Given the importance of the alexithymia construct in the autism literature and the many unanswered questions regarding the adequacy of the TAS-20 in multiple populations, there is a substantial need to determine whether the TAS-20 is an adequate measure of alexithymia in the autistic population. Thus, in the current study, we comprehensively evaluated the psychometric properties of the TAS-20 in a large sample of autistic adults, assessing the measure’s latent structure, reliability, and differential item functioning by diagnosis and across multiple subgroups of the autistic population. Additionally, as a secondary aim, we sought to remove poorly fitting items and items exhibiting I-DIF by diagnosis, creating a shortened version of the TAS with strong psychometric properties and the ability to accurately reflect true latent trait differences between autistic and non-autistic adults. We further established the nomological validity of the refined TAS by confirming hypothesized relationships with core autism features, co-occurring psychopathology, trait neuroticism, demographic features, and quality of life. Lastly, in order to more fully interrogate the relationships between trait neuroticism and alexithymia in the autistic population, we conducted additional analyses to determine whether our reduced TAS form was able to predict additional variance in autism features, psychopathology, and quality of life after controlling for levels of neuroticism.

Methods

The current investigation was a secondary data analysis of TAS-20 responses collected as a part of multiple online survey studies (see the “Participants” section for details on each study). Participants reporting professional diagnoses of autism spectrum disorder were recruited from the Simons Foundation Powering Autism Research for Knowledge (SPARK) cohort, a US-based online community that allows autistic individuals and their families to participate in autism research studies [88]. In order to compare TAS scores and item responses between autistic and non-autistic individuals, we combined the SPARK sample with open data from the Human Penguin Project [89, 90], a large multinational survey study investigating the relationships between core body temperature, social network structure, and a number of other variables (including alexithymia measured using the TAS) in adults from the general population. The addition of a control group provides a substantial amount of additional information, allowing us to assess I-DIF across diagnostic groups, assess the psychometric properties of any newly created TAS short forms in the general population, and generate normative scores for these short forms based on the distribution of TAS scores in this sample. Although autism status was not assessed in the control sample, the general population prevalence of approximately 2% autistic adults [91] does not cause enough “diagnostic noise” in an otherwise non-autistic sample to meaningfully bias item parameter estimates or alter tests of differential item functioning [80].

Participants

SPARK (Autism) sample

Using the SPARK Research Match service, we invited autistic adults between the ages of 18 and 45 years to take part in our study via the SPARK research portal. All individuals self-reported a prior professional diagnosis of autism spectrum disorder or equivalent condition (e.g., Asperger syndrome, PDD-NOS). Notably, although these diagnoses are not independently validated by SPARK, the majority of participants are recruited from university autism clinics and thus have a very high likelihood of valid autism diagnosis [88]. Furthermore, validation of diagnoses in the Interactive Autism Network, a similar participant pool now incorporated into SPARK, found that 98% of registry participants were able to produce valid clinical documentation of self-reported diagnoses when requested [92]. Autistic participants in our study completed a series of surveys via the SPARK platform that included the TAS-20, additionally providing demographics, current and lifetime psychiatric diagnoses, and scores on self-report questionnaires measuring autism severity, quality of life, co-occurring psychiatric symptoms, and a number of other clinical variables (see “Measures” section for descriptions of the questionnaires analyzed in the current study). These data were collected during winter and spring of 2019 as part of a larger study on repetitive thinking in autistic adults (project number RM0030Gotham), and the SPARK participants in the current study are a subset of those described by Williams et al. [80]. Participants received a total of $50 in Amazon gift cards for completion of the study. A total of 1012 individuals enrolled in the study, 743 of whom were included in the current analyses.
Participants were excluded if they (a) did not self-report a professional diagnosis of autism on the demographics form, (b) did not complete the TAS-20, (c) indicated careless responding as determined by incorrect answers to two instructed-response items (e.g., Please respond “Strongly Agree” to this question.), or (d) answered “Yes” or “Suspected” to a question regarding being diagnosed with Alzheimer’s disease (which given the age of participants in our study almost certainly indicated random or careless responding). All participants gave informed consent, and all study procedures were approved by the institutional review board at Vanderbilt University Medical Center.

Human Penguin Project (general population) Sample

Data from a general population control sample were derived from an open dataset generated from the Human Penguin Project (HPP) [89, 90], a multinational survey study designed to test the theory of social thermoregulation [93]. Because the full details of this sample have been reported elsewhere [89, 90], we provide only a brief overview, focusing primarily on the participants whose data were utilized in the current study. The HPP sample was collected in two separate studies in 2015–2016: one online pilot study (N = 232) that recruited participants from Amazon’s Mechanical Turk and the similar crowdsourcing platform Prolific Academic [94, 95] and a larger cross-national study (12 countries, total N = 1523) that recruited subjects from 15 separate university-based research groups. In order to eliminate problems due to the non-equivalence of TAS items in different languages, we used only those data where the TAS-16 was administered in English (i.e., all crowdsourced pilot data, as well as cross-national data from the University of Oxford, Virginia Commonwealth University, University of Southampton, Singapore Management University, and University of California, Santa Barbara). Additionally, in order to match the HPP and SPARK samples on mean age, we excluded all HPP participants over the age of 60. Notably, individuals aged 45–60 were included due to the relative excess of individuals aged 20–30 in the HPP sample, which caused the subsample of 18–45-year-old HPP participants to be several years younger on average than the SPARK sample. The final HPP sample thus consisted of a total of 721 English-speaking adults aged 18–60 (MTurk n = 122; Prolific n = 84; Oxford n = 129; Virginia n = 148; Southampton n = 6; Singapore n = 132; Santa Barbara n = 100). As a part of this study, all participants completed a 16-item version of the TAS (TAS-16) that excludes four TAS-20 items [16,17,18, 20] on the basis of poor factor loadings in the psychometric study of Kooiman et al. [65]. 
In addition to item-level data from the TAS-16, we extracted the following variables: age (calculated from birth year), sex, and site of recruitment. The HPP was approved under an “umbrella” ethics proposal at Vrije Universiteit, Amsterdam, and separately at each contributing site. All study procedures complied with the ethics code outlined in the Declaration of Helsinki.

Measures

Toronto Alexithymia Scale (TAS)

The TAS [2, 33] is the most frequently and widely used self-report measure of alexithymia, as well as the most commonly administered alexithymia measure in the autism literature [3]. The most popular version of this form, the TAS-20, has been used in medical, psychiatric, and general-population samples as a composite measure of alexithymia for over 25 years [2], and this form has been translated into over 30 languages/dialects. The TAS-20 contains twenty items rated on a five-point Likert scale ranging from Strongly Disagree to Strongly Agree. The TAS-20 is organized into three subscales, difficulty identifying feelings (DIF; 7 items), difficulty describing feelings (DDF; 5 items), and externally oriented thinking (EOT; 8 items), corresponding to three of the four components of the alexithymia construct defined by Nemiah, Freyberger, and Sifneos [1]. Notably, the fourth component, difficulty fantasizing (DFAN), was also included in the original 26-item version of the TAS [34], but this subscale showed poor coherency with the other three and was ultimately dropped from the measure [2]. The sum of items on the TAS-20 is often used as an overall measure of alexithymia, and scores of 61 or higher are typically used to create binary alexithymia classifications in both general population and clinical samples.
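The sum-score and cutoff rule described above can be sketched in Python as follows. This is a minimal illustration rather than the scoring code used in this study: it assumes responses coded 1 (Strongly Disagree) to 5 (Strongly Agree) and assumes the reverse-keyed items are 4, 5, 10, 18, and 19 (the usual TAS-20 key, which should be checked against the published scoring instructions before use). The names `score_tas20` and `is_high_alexithymia` are hypothetical.

```python
from typing import Sequence

# ASSUMPTION: reverse-keyed TAS-20 items (1-based), per the usual published key.
REVERSE_KEYED = frozenset({4, 5, 10, 18, 19})
HIGH_ALEXITHYMIA_CUTOFF = 61  # conventional "high alexithymia" threshold


def score_tas20(responses: Sequence[int]) -> int:
    """Return the TAS-20 total (range 20-100) from 20 responses coded 1-5."""
    if len(responses) != 20:
        raise ValueError("the TAS-20 requires exactly 20 item responses")
    total = 0
    for item_number, value in enumerate(responses, start=1):
        if not 1 <= value <= 5:
            raise ValueError(f"item {item_number} is out of range: {value}")
        # Flip reverse-keyed items so that a higher score always means more alexithymia.
        total += 6 - value if item_number in REVERSE_KEYED else value
    return total


def is_high_alexithymia(total: int) -> bool:
    """Apply the binary cutoff (total >= 61)."""
    return total >= HIGH_ALEXITHYMIA_CUTOFF


if __name__ == "__main__":
    # Answering "3" to every item gives 60, one point below the cutoff.
    print(score_tas20([3] * 20), is_high_alexithymia(score_tas20([3] * 20)))
```

Because a neutral response is unchanged by reverse keying, a respondent who answers the midpoint throughout lands at 60; the cutoff of 61 therefore sits just above the scale midpoint.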

As noted earlier, neurotypical participants in the HPP sample filled out the TAS-16, a version of the TAS-20 in which four problematic items have been removed from the scale [65]. However, as we wished to compare total scores from the TAS-20 between HPP and SPARK samples, we conducted single imputation for missing items in both groups using a random-forest algorithm implemented in the R missForest package [96,97,98]. Such item-level imputation allowed us to approximate the TAS-20 score distribution of the HPP participants, including the proportion of individuals exceeding the “high alexithymia” cutoff of 61. Notably, although the “high alexithymia” cutoff is theoretically questionable given the taxometric evidence for alexithymia as a purely dimensional construct [2], we chose to calculate this measure to facilitate comparisons with prior literature that primarily reported the proportion of autistic adults exceeding this cutoff [3]. To further validate the group comparisons derived from these imputed data, we additionally calculated prorated TAS-16 total scores by taking the mean of the 16 items administered to all participants and multiplying it by 20 for comparability with the TAS-20 total score. These scores were then compared between groups, and the proportion of individuals in each group with prorated scores ≥ 61 was also compared to the proportions derived from (imputed) TAS-20 scores.
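The prorating arithmetic above is simple enough to state directly in code. The sketch below assumes the 16 item scores are already on the TAS-20 metric (1 to 5, with reverse-keyed items recoded); the function names are hypothetical and the snippet is illustrative only.

```python
from statistics import mean
from typing import Iterable, Sequence


def prorated_tas16_total(item_scores: Sequence[float]) -> float:
    """Mean of the 16 administered items, rescaled to the 20-item metric.

    Assumes reverse-keyed items have already been recoded, as for a TAS-20 sum.
    """
    if len(item_scores) != 16:
        raise ValueError("expected exactly 16 TAS-16 item scores")
    return mean(item_scores) * 20


def proportion_at_or_above(totals: Iterable[float], cutoff: float = 61.0) -> float:
    """Share of respondents whose (prorated) total meets or exceeds the cutoff."""
    totals = list(totals)
    if not totals:
        raise ValueError("no scores supplied")
    return sum(t >= cutoff for t in totals) / len(totals)
```

For example, a respondent averaging 3.5 across the 16 items (`[4] * 8 + [3] * 8`) receives a prorated total of 70.0 and would be counted as exceeding the cutoff.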

Clinical measures for validity testing

In addition to the TAS-20, individuals in the SPARK sample completed a number of other self-report questionnaires, including measures of autism symptomatology, co-occurring psychopathology, trait neuroticism, and autism-related quality of life. Measures of autistic traits included the Social Responsiveness Scale-Second Edition (SRS-2) total T-score [99] and a self-report version of the Repetitive Behavior Scale-Revised (RBS-R) [100, 101], from which we derived measures of “lower-order” and “higher-order” repetitive behaviors (i.e., the sensory motor [SM] and ritualistic/sameness [RS] subscales reported by McDermott et al. [100]). Depression was measured using autism-specific scores on the Beck Depression Inventory-II (BDI-II) [80, 102], and we additionally used BDI-II item 9 (Suicidal Thoughts or Wishes) to quantify current suicidality. We assessed generalized and social anxiety using the Generalized Anxiety Disorder-7 (GAD-7) [103] and Brief Fear of Negative Evaluation Scale-Short Form (BFNE-S) [104, 105], respectively. Somatization was quantified using a modified version of the Patient Health Questionnaire-15 (PHQ-15) [106, 107], which extended the symptom recall period to three months and excluded the two symptoms of dyspareunia and menstrual problems. We measured trait neuroticism using ten items from the International Personality Item Pool [108], originally from the Multidimensional Personality Questionnaire’s “Stress Reaction” subscale [109] and referred to here as the IPIP-N10. Lastly, autism-related quality of life was measured using the Autism Spectrum Quality of Life (ASQoL) questionnaire [110]. More in-depth descriptions of all measures analyzed in the current study, including reliability estimates in the SPARK sample, can be found in Additional file 1: Methods.

Statistical analyses

Confirmatory factor analysis and model-based bifactor coefficients

All statistical analyses were performed in the R statistical computing environment [111]. In order to test the appropriateness of the proposed TAS-20 factor structure in autistic adults, we performed a confirmatory factor analysis (CFA) on TAS-20 item responses in our SPARK sample. The measurement model in our CFA included a bifactor structure with one “general alexithymia” factor onto which all items loaded, as well as four “specific” factors representing the three subscales of the TAS-20 and the common method factor for the reverse-coded items [71]. In addition, given the previously identified problems with the EOT subscale and the reverse-coded items [2], we also examined a bifactor model fit only to the forward-coded DIF and DDF items, removing both the EOT and reverse-coded items. Although not the focus of the current investigation, we also fit the original and reduced TAS factor models in the HPP sample in order to determine whether any identified model misfit was present only in autistic adults or more generally across both samples. We fit the model using a diagonally weighted least squares estimator [112] with a mean- and variance-corrected test statistic (i.e., “WLSMV” estimation), as implemented in the R package lavaan [113]. Very few of the item responses in our dataset contained missing values (0.16% missing item responses in the SPARK sample, no missing TAS-16 data in the HPP sample), and missing values were singly imputed using missForest [96,97,98].

Model fit was evaluated using the Chi-square test of exact fit, comparative fit index (CFI; [114]), Tucker-Lewis index (TLI; [115]), root mean square error of approximation (RMSEA; [116]), standardized root mean square residual (SRMR; [117]), and weighted root mean square residual (WRMR; [118, 119]). The categorical maximum likelihood (cML) estimator proposed by Savalei [120] was used to calculate the CFI, TLI, and RMSEA, as these indices better approximate the population values of the maximum likelihood-based fit indices used in linear CFA than analogous measures calculated from the WLSMV test statistic [121]. Moreover, the SRMR was calculated using the unbiased estimator (i.e., SRMRu) proposed by Maydeu-Olivares [122, 123] and implemented in lavaan for categorical estimators. CFIcML/TLIcML values greater than 0.95, RMSEAcML values less than 0.06, SRMRu values less than 0.08, and WRMR values less than 1.0 were defined as indicating adequate global model fit, based on standard rules of thumb employed in the structural equation modeling literature [117,118,119]. In addition to the aforementioned global fit indices, we checked for localized areas of model misfit based on examination of the residual correlations [124], with residuals greater than 0.1 indicating areas of potentially significant misfit and/or violations of local independence [125].
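The decision rules above (global cutoffs plus a residual-correlation screen) can be expressed as a short helper. This Python sketch is illustrative only: it assumes the fit indices and the residual correlation matrix (observed minus model-implied polychoric correlations) have already been exported from SEM software such as lavaan, and the function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

import numpy as np


def global_fit_adequate(cfi: float, tli: float, rmsea: float,
                        srmr_u: float, wrmr: float) -> bool:
    """Apply the rule-of-thumb cutoffs listed in the text (all must hold)."""
    return (cfi > 0.95 and tli > 0.95 and rmsea < 0.06
            and srmr_u < 0.08 and wrmr < 1.0)


def flag_residual_correlations(residuals, labels: Sequence[str],
                               threshold: float = 0.1) -> List[Tuple[str, str, float]]:
    """List item pairs whose residual correlation exceeds the threshold in absolute value."""
    r = np.asarray(residuals, dtype=float)
    if r.ndim != 2 or r.shape[0] != r.shape[1] or r.shape[0] != len(labels):
        raise ValueError("residuals must be a square matrix matching labels")
    rows, cols = np.triu_indices(r.shape[0], k=1)  # each pair once, diagonal skipped
    return [(labels[i], labels[j], float(r[i, j]))
            for i, j in zip(rows, cols) if abs(r[i, j]) > threshold]


def flags_per_item(flagged: List[Tuple[str, str, float]]) -> Dict[str, int]:
    """Count how many large residuals involve each item (useful for spotting repeat offenders)."""
    counts: Dict[str, int] = {}
    for a, b, _ in flagged:
        counts[a] = counts.get(a, 0) + 1
        counts[b] = counts.get(b, 0) + 1
    return counts
```

Because these cutoffs are conventions rather than formal tests, a sketch like this is best used to triage candidate problem areas, not to make automatic decisions.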

Confirmatory bifactor models were further interrogated with the calculation of several model-based coefficients [126,127,128], including (a) coefficient omega total (ωT), a measure of the reliability of the multidimensional TAS-20 total score, (b) coefficient omega hierarchical (ωH), a measure of general factor saturation (i.e., the proportion of total score variance attributable to the general factor), (c) coefficient omega subscale (ωS), a measure of the reliability of each individual subscale, (d) coefficient omega hierarchical subscale (ωHS), a measure of the proportion of subscale variance attributable to the specific factor, (e) the explained common variance (ECV; the proportion of common variance attributable to the general factor rather than the group factors) for the total score and each item separately, and (f) the percentage of uncontaminated correlations (PUC), a supplementary index used in tandem with total ECV to determine whether a scale can be considered “essentially unidimensional” [127, 129]. Omega coefficients calculated in the current study were based on the categorical data estimator proposed by Green and Yang [130]. ECV coefficients were also calculated for individual subscales (S-ECV) as an additional measure of subscale general factor saturation.
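To make these coefficients concrete, the sketch below computes ωT, ωH, ECV, PUC, ωS, and ωHS from a matrix of standardized bifactor loadings. It is a simplification: it uses the familiar linear (McDonald-style) formulas with orthogonal factors and uniqueness = 1 − communality, not the Green and Yang categorical estimator used in this study, so its values will not match categorical omegas exactly. Treating a pair of items as "contaminated" for PUC whenever they share any specific factor is also our assumption. Function names are hypothetical.

```python
from typing import Dict, Sequence, Tuple

import numpy as np


def bifactor_indices(general: Sequence[float], specific) -> Dict[str, float]:
    """general: (n_items,) loadings; specific: (n_items, n_groups), 0 = no loading."""
    g = np.asarray(general, dtype=float)
    s = np.asarray(specific, dtype=float)
    uniq = 1.0 - g ** 2 - (s ** 2).sum(axis=1)          # standardized uniquenesses
    gen_var = g.sum() ** 2                               # variance due to general factor
    spec_var = (s.sum(axis=0) ** 2).sum()                # variance due to specific factors
    total_var = gen_var + spec_var + uniq.sum()
    ecv = (g ** 2).sum() / ((g ** 2).sum() + (s ** 2).sum())
    # PUC: share of item pairs that do not load on a common specific factor.
    loads = s != 0
    n = len(g)
    shared = sum(bool(np.any(loads[i] & loads[j]))
                 for i in range(n) for j in range(i + 1, n))
    puc = 1.0 - shared / (n * (n - 1) / 2)
    return {"omega_t": (gen_var + spec_var) / total_var,
            "omega_h": gen_var / total_var, "ecv": ecv, "puc": puc}


def subscale_omegas(general: Sequence[float], specific, k: int) -> Tuple[float, float]:
    """(omega_S, omega_HS) for the items loading on specific factor k."""
    g = np.asarray(general, dtype=float)
    s = np.asarray(specific, dtype=float)
    idx = s[:, k] != 0
    gk = g[idx].sum() ** 2
    sk = s[idx, k].sum() ** 2
    others = np.arange(s.shape[1]) != k                  # e.g., a method factor
    other = (s[idx][:, others].sum(axis=0) ** 2).sum()
    uniq = (1.0 - g[idx] ** 2 - (s[idx] ** 2).sum(axis=1)).sum()
    denom = gk + sk + other + uniq
    return (gk + sk) / denom, sk / denom
```

With six items loading 0.6 on the general factor and 0.4 on one of two specific factors (three items each), this gives ωT ≈ 0.846, ωH ≈ 0.692, ECV ≈ 0.692, and PUC = 0.6.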

Item response theory and differential item functioning analyses

After selecting an appropriate factor model, we evaluated the ECV and PUC coefficients to determine whether the model could be reasonably well-approximated by a unidimensional item response theory (IRT) model. We then fit the data from the TAS items included in the best-fitting factor model to a graded response model [131] in our SPARK sample using maximum marginal likelihood estimation [132], as implemented in the mirt R package [133]. Model fit was assessed using the limited-information C2 statistic [134, 135], as well as C2-based approximate fit indices and SRMR. Based on previously published guidelines [136], we defined values of CFIC2 > 0.975, RMSEAC2 < 0.089, and SRMR < 0.05 as indicative of good model fit. Residual correlations were examined to determine areas of local dependence, with absolute values greater than 0.1 indicative of potential misfit. Items with multiple large residual correlations were flagged for removal, and the IRT model was then re-fit and iteratively tested until all areas of local misfit were removed.
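For readers less familiar with the graded response model, the Python sketch below evaluates its category probabilities and expected item score for given item parameters. It only evaluates the model equations (the study itself estimated parameters with mirt in R); it assumes the logistic form with a discrimination `a` and increasing thresholds `b`, and if parameters are reported in slope-intercept form (a·θ + d_k), the thresholds are b_k = −d_k / a. Function names are hypothetical.

```python
from typing import Sequence

import numpy as np


def grm_category_probs(theta, a: float, b: Sequence[float]) -> np.ndarray:
    """Category probabilities under Samejima's logistic graded response model.

    theta: scalar or array of latent trait values
    a:     item discrimination (slope)
    b:     strictly increasing category thresholds, length K - 1
    Returns an array of shape (len(theta), K); each row sums to 1.
    """
    theta = np.atleast_1d(np.asarray(theta, dtype=float))
    b = np.asarray(b, dtype=float)
    if np.any(np.diff(b) <= 0):
        raise ValueError("thresholds must be strictly increasing")
    # Boundary curves: P(X >= k | theta) for k = 1..K-1.
    cum = 1.0 / (1.0 + np.exp(-a * (theta[:, None] - b[None, :])))
    n = theta.shape[0]
    upper = np.hstack([np.ones((n, 1)), cum])   # P(X >= k), with P(X >= 0) = 1
    lower = np.hstack([cum, np.zeros((n, 1))])  # P(X >= k + 1), with P(X >= K) = 0
    return upper - lower


def expected_item_score(theta, a: float, b: Sequence[float]) -> np.ndarray:
    """Expected item score with categories scored 0..K-1."""
    probs = grm_category_probs(theta, a, b)
    return probs @ np.arange(probs.shape[1])
```

A five-point TAS item has four thresholds; adding 1 to the expected score maps it back onto the 1 to 5 response metric.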

After refining the unidimensional TAS model in the SPARK sample, we further investigated the same model in the HPP sample. Once a structural model was found to fit in both samples, we fit a multi-group graded response model to the full dataset, using this model to examine I-DIF between groups. I-DIF was tested using a version of the iterative Wald procedure proposed by Cao et al. [137] and implemented in R by the first author [138], using the Oakes identity approximation method to calculate standard errors [139,140,141]. The Benjamini–Hochberg [142] false discovery rate (FDR) correction was applied to all omnibus Wald tests, and only those with pFDR < 0.05 were flagged as demonstrating significant I-DIF. Significant omnibus Wald tests were followed up with tests of individual item parameters to determine which parameters significantly differed between groups [143]. Notably, this I-DIF procedure is quite powerful in large sample sizes, potentially revealing trivial group differences, and thus I-DIF effect-size indices were used to determine whether the differential functioning of a given item was small enough to be ignorable in practice. In particular, we used the weighted area between curves (wABC) as a measure of I-DIF magnitude, with values greater than 0.30 indicative of practically significant I-DIF [87]. We additionally reported the expected score standardized difference (ESSD), a standardized effect size interpretable on the metric of Cohen’s d [86]. Items exhibiting practically significant I-DIF between autistic and non-autistic adults were further flagged for removal, and this process was repeated iteratively until the resulting TAS short form contained no items with practically significant I-DIF by diagnostic group. 
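The false discovery rate step can be illustrated with a short Python implementation of the standard Benjamini–Hochberg step-up adjustment. This sketch covers only the adjustment of the omnibus Wald p-values; the Wald tests themselves were run in R as described above and are not reproduced here. Function names are hypothetical.

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float]) -> List[float]:
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    # Step up from the largest p-value, enforcing monotonicity and a cap of 1.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def flag_significant(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """True where the FDR-adjusted p-value falls below alpha (i.e., pFDR < 0.05)."""
    return [p < alpha for p in benjamini_hochberg(p_values)]
```

For instance, with four items and raw p-values of 0.01, 0.2, 0.03, and 0.5, only the first item remains flagged (adjusted p = 0.04); the third item's adjusted value rises to 0.06.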
The total effect of all I-DIF (i.e., differential test functioning [DTF]) was then estimated using the unsigned expected test score difference in the sample (UETSDS), the expected absolute difference in manifest test scores between individuals of different groups possessing the same underlying trait level [87].
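To make the DTF index more concrete, the Python sketch below computes expected graded-response-model scores and a sample-based unsigned expected test score difference. It assumes the logistic graded response model without a scaling constant, item parameters already placed on a common metric, and differences averaged over latent trait estimates from the focal (autistic) group; mirt's own implementation may differ in these details. All function names and parameter values are hypothetical.

```python
import numpy as np


def grm_expected_item_score(theta, a, b):
    """Expected score (0 .. len(b)) on one graded-response item.

    Uses E[X | theta] = sum_k P(X >= k | theta), with
    P(X >= k | theta) = 1 / (1 + exp(-a * (theta - b_k))).
    """
    theta = np.asarray(theta, dtype=float)[:, None]
    b = np.asarray(b, dtype=float)[None, :]
    p_at_least = 1.0 / (1.0 + np.exp(-a * (theta - b)))
    return p_at_least.sum(axis=1)


def expected_test_score(theta, params):
    """Sum of expected item scores; params is a list of (a, thresholds)."""
    return sum(grm_expected_item_score(theta, a, b) for a, b in params)


def uetsd_sample(theta_focal, params_ref, params_foc):
    """Mean absolute expected test-score difference over focal-group thetas."""
    diff = (expected_test_score(theta_focal, params_ref)
            - expected_test_score(theta_focal, params_foc))
    return float(np.mean(np.abs(diff)))


if __name__ == "__main__":
    # Hypothetical (a, thresholds) for two 5-category items; only item 1 differs.
    ref = [(1.8, [-1.5, -0.5, 0.4, 1.3]), (2.1, [-1.2, -0.2, 0.6, 1.6])]
    foc = [(1.8, [-1.4, -0.4, 0.5, 1.4]), (2.1, [-1.2, -0.2, 0.6, 1.6])]
    theta_focal = np.array([-0.5, 0.3, 1.1, 1.9])  # hypothetical focal-group scores
    print(round(uetsd_sample(theta_focal, ref, foc), 3))
```

Because the differences are taken in absolute value before averaging, item biases in opposite directions do not cancel, which is what makes this an "unsigned" index.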

After removing items based on between-group I-DIF, we then examined I-DIF of the resulting short form across subsets of the autistic population. Using the same iterative Wald procedure and effect size criteria as the between-group analyses, we tested whether TAS items functioned differently across groups based on sex, gender, age (> 30 vs. ≤ 30 years), race (non-Hispanic White vs. Other), level of education (any higher education vs. no higher education), age of autism diagnosis (≥ 18 years old vs. < 18 years), and self-reported co-occurring conditions (current depressive disorder, current anxiety disorder, and lifetime attention deficit hyperactivity disorder [ADHD]). Although far fewer stratification variables were collected in the HPP sample, I-DIF was also examined within that sample according to age (> 30 vs. ≤ 30 years), sex, and phase of the project (i.e., pilot study vs. multi-site study). These I-DIF results were used to further refine the measure such that any I-DIF exhibited by the resulting TAS short form across groups was small enough to be practically ignorable. All items retained in the TAS form at this stage were incorporated into the final measure.

Once the TAS short form was finalized, we then fit an additional multi-group graded response model on only those final items, constraining item parameters to be equal between groups and setting the scale of the latent variable by constraining the general population sample to have a mean of 0 and standard deviation of 1. Using this model, we then estimated maximum a posteriori (MAP) TAS latent trait scores for each individual, which were interpretable as Z-scores relative to the general population (i.e., a score of 1 is one full standard deviation above the mean of our non-autistic normative sample). Individual reliability coefficients were also examined, with values greater than 0.7 being deemed sufficiently reliable for interpretation at the individual level.
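The scoring step can be sketched as follows: a grid-search MAP estimate under a standard normal prior (the general-population metric described above), an individual reliability approximated as 1 − SE², and the T-score conversion (M = 50, SD = 10) mentioned in the Discussion. This is an illustrative Python sketch, not the mirt implementation; the grid, the SE approximation via test information plus one unit of prior information, and all names are assumptions. Skipped items are simply left out of the likelihood, which is one way a score can be computed from a subset of items.

```python
import numpy as np

GRID = np.linspace(-4.0, 4.0, 801)  # theta grid for the MAP search (assumption)


def _cumulative(theta, a, b):
    """Curves P(X >= k | theta), padded with 1 (k = 0) and 0 (k = K)."""
    theta = np.asarray(theta, dtype=float)[:, None]
    p_star = 1.0 / (1.0 + np.exp(-a * (theta - np.asarray(b, dtype=float)[None, :])))
    n = theta.shape[0]
    return np.hstack([np.ones((n, 1)), p_star, np.zeros((n, 1))])


def category_probs(theta, a, b):
    """P(X = k | theta) for k = 0 .. len(b)."""
    cum = _cumulative(theta, a, b)
    return cum[:, :-1] - cum[:, 1:]


def item_information(theta, a, b):
    """Fisher information of one graded-response item."""
    cum = _cumulative(theta, a, b)
    dcum = a * cum * (1.0 - cum)  # derivatives of the curves; zero at the padded bounds
    probs = cum[:, :-1] - cum[:, 1:]
    dprobs = dcum[:, :-1] - dcum[:, 1:]
    return np.sum(dprobs ** 2 / probs, axis=1)


def map_score(responses, params):
    """MAP theta under a N(0, 1) prior; None marks an unanswered item."""
    log_post = -0.5 * GRID ** 2  # log prior, up to a constant
    for x, (a, b) in zip(responses, params):
        if x is not None:
            log_post = log_post + np.log(category_probs(GRID, a, b)[:, x])
    return float(GRID[np.argmax(log_post)])


def individual_reliability(theta, params):
    """1 - SE^2, with SE^2 approximated by 1 / (test information + 1)."""
    info = sum(item_information([theta], a, b)[0] for a, b in params)
    return info / (info + 1.0)


def t_score(theta):
    """Convert a Z-scaled latent trait score to the T-score metric."""
    return 50.0 + 10.0 * theta


if __name__ == "__main__":
    # Hypothetical parameters for two 5-category items (responses coded 0-4).
    params = [(2.0, [-1.0, 0.0, 1.0, 2.0]), (1.5, [-0.5, 0.5, 1.5, 2.5])]
    theta = map_score([3, None], params)  # second item skipped
    print(theta, t_score(theta), individual_reliability(theta, params))
```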

Validity testing

To further test the validity of the newly generated TAS latent trait scores in autistic adults, we investigated the relationships between these scores and a number of clinical variables that have previously demonstrated relationships with alexithymia in either autistic adults or the general population. Based on previous literature [59], we hypothesized that alexithymia would show moderate to strong positive correlations with neuroticism (IPIP-N10), autistic traits (SRS-2), repetitive behavior (RBS-R), depression (BDI-II), generalized anxiety (GAD-7), social anxiety (BFNE-S), suicidality (BDI item 9), and somatic symptom burden (PHQ-15), as well as moderate negative correlations with autism-specific QoL (ASQoL). Given the documented relationships between neuroticism and alexithymia, we further examined the magnitude of these correlations after controlling for levels of neuroticism. We additionally examined relationships between alexithymia scores and demographic variables, including age, sex, race/ethnicity, age of autism diagnosis, and level of education. Notably, alexithymia is correlated with older age, male sex, and lower education level in the general population [144,145,146], and we expected that these relationships would replicate in the current SPARK sample (with the exception of the correlation with age, given the restricted age range in our current sample). We did not, however, expect to find significant associations between alexithymia and race/ethnicity or age of autism diagnosis.

Relationships between alexithymia and external variables were examined using robust Bayesian variants of the Pearson correlation coefficient (for continuous variables, e.g., SRS-2 scores), polyserial correlation coefficient (for ordinal variables, such as the BDI-II suicidality item and education level), partial correlation coefficient (when testing relationships after controlling for neuroticism), and unequal-variances t test [147,148,149], as implemented using custom R code [150] and the brms package [151]. Additional technical details regarding model estimation procedures and prior distributions can be found in the Additional file 1: Methods. Standardized effect sizes produced by these methods (i.e., r, rp, and d) were summarized using the posterior median and 95% highest-density credible interval (CrI).
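The partial correlations in this study were estimated with robust Bayesian models. Purely for intuition about what “controlling for neuroticism” does, the classical first-order partial correlation formula is sketched below in Python, together with the partial-correlation ROPE check (|rp| ≤ 0.1, i.e., rp² ≤ 1% of variance). This plug-in formula is not the estimation method used in the study, and the SRS-2/neuroticism correlation in the example is a hypothetical value.

```python
import math


def partial_corr(r_xy: float, r_xz: float, r_yz: float) -> float:
    """First-order partial correlation of x and y, controlling for z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def in_partial_rope(r_p: float, bound: float = 0.1) -> bool:
    """True when |r_p| <= 0.1, i.e., r_p^2 <= 1% of additional variance."""
    return abs(r_p) <= bound


if __name__ == "__main__":
    # r_xy = 0.642 (TAS-8 with SRS-2) and r_xz = 0.475 (TAS-8 with IPIP-N10) are
    # reported in the Results; r_yz = 0.40 (SRS-2 with IPIP-N10) is hypothetical.
    r_p = partial_corr(0.642, 0.475, 0.40)
    print(round(r_p, 3), in_partial_rope(r_p))
```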

In addition to estimating the magnitude of each effect size, we tested these effects for “practical significance” [152] within a Bayesian hypothesis testing framework. To do this, we defined interval null hypotheses within which all effect sizes were deemed too small to be practically meaningful. This interval, termed the region of practical equivalence (ROPE) [153], was defined in the current study as the interval d = [− 0.2, 0.2] for t tests, r = [− 0.2, 0.2] for bivariate correlations, and rp = [− 0.1, 0.1] for partial correlations. Evidence either for or against this interval null hypothesis can be quantified by calculating the ROPE Bayes factor (BFROPE), which is defined as the odds of the prior effect size distribution falling within the ROPE divided by the odds of the posterior effect size distribution falling within the ROPE [154, 155]. In accordance with standard interpretation of Bayes factor values [156, 157], we defined BFROPE values greater than 3 as providing substantial evidence for \({\mathcal{H}}_{1}\) (i.e., the true population effect lies outside the ROPE) and BFROPE values less than 0.333 as providing substantial evidence for \({\mathcal{H}}_{0}\) (i.e., the true population effect lies within the ROPE and thus is not practically meaningful).

Values of BFROPE between 0.333 and 3 are typically considered inconclusive, providing only “anecdotal” evidence for either \({\mathcal{H}}_{0}\) or \({\mathcal{H}}_{1}\) [156].
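Given draws from the prior and the posterior of an effect size, BFROPE and the interpretation thresholds above can be computed as in the following Python sketch. It estimates each probability by the share of draws inside the ROPE. The priors actually used in the study are described in Additional file 1, so the uniform prior in the example, the draw counts, and the function names are illustrative assumptions.

```python
import numpy as np

ROPE = (-0.2, 0.2)  # ROPE for d and bivariate r; use (-0.1, 0.1) for partial r


def odds_inside(draws, rope=ROPE):
    """Odds that a draw falls inside the ROPE, estimated from samples."""
    draws = np.asarray(draws, dtype=float)
    p_in = np.mean((draws >= rope[0]) & (draws <= rope[1]))
    return p_in / (1.0 - p_in)


def bf_rope(prior_draws, posterior_draws, rope=ROPE):
    """BF_ROPE = prior odds of lying inside the ROPE / posterior odds inside.

    Values > 1 favour H1 (effect outside the ROPE). The result is inf or nan
    if all or none of the draws fall inside the ROPE.
    """
    return odds_inside(prior_draws, rope) / odds_inside(posterior_draws, rope)


def interpret(bf):
    """Map a BF_ROPE value onto the verbal categories used in the text."""
    if bf > 3:
        return "substantial evidence for H1 (outside the ROPE)"
    if bf < 0.333:
        return "substantial evidence for H0 (inside the ROPE)"
    return "inconclusive (anecdotal)"


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Hypothetical draws: a flat prior on r and a posterior centred at 0.30.
    prior = rng.uniform(-1.0, 1.0, size=100_000)
    posterior = rng.normal(0.30, 0.05, size=100_000)
    bf = bf_rope(prior, posterior)
    print(bf, interpret(bf))
```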

Readability analysis

As a supplemental analysis, we evaluated the readability of the TAS-20 and the newly derived short form using the FORCAST formula [158]. This formula is well-suited for questionnaire material, as it ignores the number of sentences, average sentence length, and hard punctuation (standard metrics for text in prose form), instead focusing exclusively on the number of monosyllabic words [159]. FORCAST grade level equivalents were calculated for both the TAS-20 (excluding the questionnaire directions) and the short form derived in the current study.
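The FORCAST computation itself is simple: grade level = 20 − N/10, where N is the number of monosyllabic words in a 150-word sample (so a grade of 10.2 corresponds to 98 monosyllabic words per 150). The Python sketch below assumes that shorter texts can be scaled to 150 words. Its vowel-group syllable counter is a crude, hypothetical heuristic rather than the algorithm used by Readability Studio, so its output will not exactly match the values reported in this study.

```python
import re


def count_syllables(word: str) -> int:
    """Crude vowel-group syllable count (hypothetical heuristic)."""
    w = word.lower().strip(".,;:!?\"'()")
    if not w:
        return 0
    n = len(re.findall(r"[aeiouy]+", w))
    # Drop a likely silent final "e" (e.g., "describe"), but keep "-le" endings.
    if w.endswith("e") and not w.endswith("le") and n > 1:
        n -= 1
    return max(n, 1)


def forcast_grade(monosyllabic: int, total_words: int) -> float:
    """FORCAST grade level: 20 - N / 10, N = monosyllabic words per 150 words."""
    n_per_150 = monosyllabic * 150 / total_words
    return 20 - n_per_150 / 10


def forcast_from_text(text: str) -> float:
    """Apply FORCAST to raw text, rescaling the word count to 150 words."""
    words = re.findall(r"[A-Za-z']+", text)
    mono = sum(1 for w in words if count_syllables(w) == 1)
    return forcast_grade(mono, len(words))


if __name__ == "__main__":
    print(forcast_grade(98, 150))
    print(forcast_from_text("I have physical sensations that even doctors don't understand."))
```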

Additionally, in order to compare our results with prior work on the readability of the TAS-20, we calculated the Flesch–Kincaid Grade Level (FKGL) and Flesch reading ease (FRE) scores [160, 161] for both the TAS-20 and short form. All readability analyses were conducted using Readability Studio version 2019.3 (Oleander Software, Ltd, Vandalia, OH, USA). Although we did not attempt to select items based on readability, this analysis was conducted to ensure that shortening the TAS questionnaire did not substantially increase its reading level, which would make the short form less accessible to younger or less educated respondents.
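For comparison, the two Flesch measures use the standard published coefficients, written below as Python functions of word, sentence, and syllable counts. Unlike FORCAST, both depend on words per sentence, so the way questionnaire items are segmented into sentences can shift the result; the usage example with hypothetical counts illustrates this.

```python
def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level (FKGL)."""
    return 0.39 * words / sentences + 11.8 * syllables / words - 15.59


def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease (FRE); higher values indicate easier text."""
    return 206.835 - 1.015 * words / sentences - 84.6 * syllables / words


if __name__ == "__main__":
    # Hypothetical counts: the same 100 words and 130 syllables,
    # split into 10 versus 20 "sentences".
    for n_sentences in (10, 20):
        print(n_sentences,
              round(flesch_kincaid_grade(100, n_sentences, 130), 2),
              round(flesch_reading_ease(100, n_sentences, 130), 1))
```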

Results

Participants and demographics

In total, our sample included TAS data from 1464 unique individuals across the two data sources (Table 1). Autistic adults in the SPARK sample (n = 743, age = 30.91 ± 7.02 years, 63.5% female sex) were predominantly non-Hispanic White (79.4%) and college-educated (46.4% with a 2- or 4-year college degree, and an additional 26.5% with some college but no degree), similar to the previous sample drawn from this same SPARK project [80]. The median age of autism diagnosis was 19.17 years (IQR = [10.33, 28.79]), indicating that the majority of individuals in the sample were diagnosed in adulthood. The majority of participants reported a current depressive or anxiety disorder (defined as symptoms in the past three months or an individual currently being treated for one of these disorders), with depression present in 59.2% and anxiety present in 71.7%. TAS-20 scores in the SPARK sample were present across the full range of trait levels (M = 60.55, SD = 13.11), and just over half of the sample (54.5%) was classified as “high alexithymia” based on TAS-20 total scores greater than or equal to 61. Less demographic information was available for the general population adults in the HPP sample (n = 721, age = 30.92 ± 13.01 years, 64.9% female), but the available demographics indicated that these individuals were well-matched to the SPARK sample on age and sex. Partially imputed TAS-20 scores in the HPP sample were slightly higher than those reported in other general population samples (M = 50.21, SD = 11.21), and based on these scores, 17.1% of HPP participants were classified as having “high alexithymia.” Prorated TAS-16 total scores in the HPP sample (M = 51.38, SD = 10.92) were similar in magnitude to the imputed TAS-20 scores, with a slightly larger proportion of the HPP sample (19.1%) classified as “high alexithymia” using this method.
As anticipated, large differences in both TAS-20 total scores (d = 0.880, 95% CrI [0.767, 0.995]) and prorated TAS-16 total scores (d = 0.811, 95% CrI [0.697, 0.922]) were present between groups.
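A minimal Python sketch of prorating and the ≥ 61 classification follows. It assumes that prorating means scaling the mean of the answered items to the 20-item metric and that item scores (1 to 5) have already been reverse-coded where required; the exact prorating rule is not spelled out in this section, and the function names are hypothetical.

```python
from typing import Optional, Sequence

HIGH_ALEXITHYMIA_CUTOFF = 61  # TAS-20 total >= 61 is classed as "high alexithymia"


def prorated_tas20_total(item_scores: Sequence[Optional[int]], n_items: int = 20) -> float:
    """Scale the mean of the answered items (1-5 each) to the 20-item metric."""
    answered = [s for s in item_scores if s is not None]
    if not answered:
        raise ValueError("no answered items")
    return sum(answered) / len(answered) * n_items


def proportion_high(totals: Sequence[float]) -> float:
    """Share of respondents at or above the conventional cutoff."""
    return sum(t >= HIGH_ALEXITHYMIA_CUTOFF for t in totals) / len(totals)


if __name__ == "__main__":
    # Hypothetical TAS-16 responses for one participant.
    responses = [3] * 15 + [4]
    total = prorated_tas20_total(responses)
    print(total, total >= HIGH_ALEXITHYMIA_CUTOFF)
```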

Confirmatory factor analysis

Within the SPARK sample, the confirmatory factor model for the full TAS-20 exhibited subpar model fit, with only the SRMRu meeting a priori fit index cutoff values (Table 2). Additionally, examination of residual correlations revealed five values greater than 0.1, indicating a non-ignorable degree of local model misfit. Model-based bifactor coefficients indicated strong reliability and general factor saturation of the TAS-20 composite (ωT = 0.912, ωH = 0.773), though the ECV/PUC indicated that the scale could not be considered “essentially unidimensional” (ECV = 0.635, PUC = 66.8%). Both the DIF and DDF subscales exhibited good composite score reliability (ωS = 0.906 and 0.854, respectively), although omega hierarchical coefficients indicated that the vast majority of reliable variance in each subscale was due to the “general alexithymia” factor (DIF: ωHS = 0.162, S-ECV = 0.753; DDF: ωHS = 0.145, S-ECV = 0.768). Conversely, the EOT subscale exhibited very poor reliability, with only one fourth of common subscale variance attributable to the general factor (ωS = 0.451, ωHS = 0.300, S-ECV = 0.245). Examination of the factor loadings further confirmed the inadequacy of the EOT subscale, as seven of the eight EOT items [5, 8, 10, 15, 16, 18,19,20] loaded poorly onto the “general alexithymia” factor (λG = − 0.116 to 0.311; Additional file 1: Table S1). Notably, these psychometric issues were not limited to autistic adults. The fit of the TAS-20 CFA model in the HPP sample was equally poor, and bifactor coefficients indicating the psychometric inadequacy of the EOT and reverse-scored items were replicated in this sample as well (Table 2).

Following the removal of the EOT and reverse-coded items from the TAS-20, we fit a bifactor model with two specific factors (DIF and DDF) to the remaining 11 items in our SPARK sample. The fit of this model was substantially improved over the TAS-20, with all indices except RMSEAcML meeting a priori designated cutoffs (Table 2) and all residual correlations below 0.1. Moreover, model-based coefficients (ECV = 0.815; PUC = 50.9%) indicated that the 11-item TAS was unidimensional enough to be fit by a standard graded response model with little parameter bias. Notably, the estimated reliability and general factor saturation of the 11-item TAS composite score were higher than those of the 20-item composite (ωT = 0.925, ωH = 0.852), suggesting that the inclusion of EOT and reverse-coded items on the scale actually reduces the amount of scale variance attributable to the underlying alexithymia construct. Fit of the 11-item TAS model in the HPP sample was equally strong (Table 2), with an approximately equal ECV (0.793) supporting the essential unidimensionality of this scale in both samples.
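The model-based bifactor indices used here follow standard formulas that can be computed directly from standardized loadings, as in the Python sketch below. It assumes orthogonal factors, one specific factor per item, and standardized loadings; the loadings in the test example are hypothetical. PUC depends only on how many items load on each specific factor: for the 11-item model (seven DIF items and four DDF items), 28 of the 55 item pairs span both factors, giving the 50.9% reported above.

```python
import numpy as np


def bifactor_indices(general, specific, groups):
    """omega_T, omega_H and ECV from standardized bifactor loadings."""
    g = np.asarray(general, dtype=float)
    s = np.asarray(specific, dtype=float)
    groups = np.asarray(groups)
    unique = 1.0 - g ** 2 - s ** 2  # residual (unique) variances
    gen_var = g.sum() ** 2
    spec_var = sum(s[groups == k].sum() ** 2 for k in np.unique(groups))
    total_var = gen_var + spec_var + unique.sum()
    omega_t = (gen_var + spec_var) / total_var
    omega_h = gen_var / total_var
    ecv = (g ** 2).sum() / ((g ** 2).sum() + (s ** 2).sum())
    return omega_t, omega_h, ecv


def puc(group_sizes):
    """Proportion of uncontaminated correlations (item pairs spanning two groups)."""
    n = sum(group_sizes)
    all_pairs = n * (n - 1) // 2
    within = sum(k * (k - 1) // 2 for k in group_sizes)
    return (all_pairs - within) / all_pairs


if __name__ == "__main__":
    print(round(puc([7, 4]), 3))  # 0.509
```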

Item response theory analyses

A unidimensional graded response model fit to the 11-item TAS short form did not display adequate fit according to a priori fit index guidelines (C2(44) = 485.7, p < 0.001, CFIC2 = 0.955, RMSEAC2 = 0.116, SRMR = 0.068). Examination of residual correlations indicated that item 7 (I am often puzzled by sensations in my body) was particularly problematic, exhibiting a very large residual correlation of 0.259 with item 3 as well as two other residuals greater than 0.1. Removal of this item caused the resulting 10-item graded response model to approximately meet the minimum standards for adequate fit (C2(35) = 485.7, p < 0.001, CFIC2 = 0.976, RMSEAC2 = 0.086, SRMR = 0.051), with all remaining residual correlations below 0.1. The overall fit of this 10-item model was somewhat worse in the HPP sample (C2(35) = 319.9, p < 0.001, CFIC2 = 0.960, RMSEAC2 = 0.106, SRMR = 0.065); however, it is notable that this model contained item 17, which was not contained within the TAS-16 and was thus fully imputed in the HPP sample. Removal of this item resulted in a substantial improvement in fit in the HPP sample (C2(27) = 169.1, p < 0.001, CFIC2 = 0.974, RMSEAC2 = 0.086, SRMR = 0.058), with fit indices approximately reaching the a priori cutoffs. As the 9-item TAS also exhibited good fit in the SPARK sample (C2(27) = 161.7, p < 0.001, CFIC2 = 0.980, RMSEAC2 = 0.082, SRMR = 0.049), we chose this version of the measure to test I-DIF between autistic and general population adults.
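The local-dependence screen described in the Methods (items with multiple absolute residual correlations above 0.1) can be sketched as a Python helper that nominates the worst item for removal. Only the 0.259 residual between items 3 and 7 comes from the text; the other residuals in the example are hypothetical. Refitting the model after each removal would be done in IRT software such as mirt.

```python
import numpy as np


def worst_local_dependence(resid, labels, cutoff=0.1, min_count=2):
    """Return the item with the most |residual correlations| > cutoff, or None.

    Only items with at least `min_count` large residuals are candidates; ties
    are broken by the largest absolute residual.
    """
    r = np.abs(np.asarray(resid, dtype=float))
    np.fill_diagonal(r, 0.0)  # ignore the diagonal
    counts = (r > cutoff).sum(axis=1)
    candidates = [i for i in range(len(labels)) if counts[i] >= min_count]
    if not candidates:
        return None
    best = max(candidates, key=lambda i: (counts[i], r[i].max()))
    return labels[best]


if __name__ == "__main__":
    labels = ["TAS3", "TAS7", "TAS9", "TAS13"]
    resid = [
        [1.000, 0.259, 0.020, -0.030],
        [0.259, 1.000, 0.120, -0.110],
        [0.020, 0.120, 1.000, 0.040],
        [-0.030, -0.110, 0.040, 1.000],
    ]
    print(worst_local_dependence(resid, labels))  # TAS7
```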

For the remaining nine TAS items, I-DIF was evaluated across diagnostic groups using the iterative Wald test procedure. Significant I-DIF was found in eight of the nine items (all except item 6) at the p < 0.05 level (Table 3); however, effect size indices suggested that practically significant I-DIF was only present in item 3 (I have physical sensations that even doctors don’t understand; wABC = 0.433, ESSD = 0.670). The remaining items all exhibited I-DIF with small standardized effect sizes (all wABC < 0.165, all |ESSD| < 0.187), allowing these effects to be ignored in practice [87]. After removal of item 3, we re-tested I-DIF in the resulting eight-item scale (TAS-8), producing nearly identical results (significant I-DIF for all items except 6; all wABC < 0.167, all |ESSD| < 0.186). The overall DTF of the TAS-8 was also small enough to be ignorable, with the average difference in total scores between autistic and non-autistic adults of the same trait level being less than 0.5 scale points (UETSDS = 0.460, ETSSD = − 0.011).

After establishing practical equivalence in item parameters between the two diagnostic groups, we then tested I-DIF for the TAS-8 for a number of subgroups within the HPP and SPARK samples. Within the general population HPP sample, none of the eight TAS-8 items displayed significant I-DIF by sex, age (≥ 30 vs. < 30), or phase of the HPP study (all ps > 0.131). Similarly, in the SPARK sample, there was no significant I-DIF by sex, gender, race, education level, current anxiety disorder, history of ADHD, or current suicidality (all ps > 0.105). However, significant I-DIF was found across several other contrasts, including age (item 6; wABC = 0.0543, ESSD = − 0.045), age of autism diagnosis (items 2, 6, and 14; all wABC < 0.267, all |ESSD| < 0.135), and current depressive disorder (item 13; wABC = 0.274, ESSD = 0.361), although wABC values for these items indicated that the degree of I-DIF was ignorable in practice.

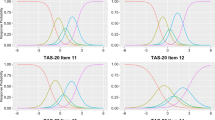

As no items of the TAS-8 exhibited practically significant I-DIF across any of the tested contrasts, we retained all eight items for the final TAS short form. A graded response model fit to the full sample exhibited adequate fit (C2(20) = 240.4, p < 0.001, CFIC2 = 0.983, RMSEAC2 = 0.087, SRMR = 0.045) and no residual correlations greater than 0.1. A multi-group model with freely estimated mean/variance for the autistic group was used to calculate the final item parameters (Table 4), as well as individual latent trait scores. Item characteristic curves indicated that all TAS-8 items behaved appropriately, although the middle response option was insufficiently utilized for three of the eight items (Fig. 1). The MAP-estimated latent trait scores for the TAS-8 showed strong marginal reliability (ρxx = 0.895, 95% bootstrapped CI: [0.895, 0.916]), and individual reliabilities were greater than the minimally acceptable 0.7 for the full range of possible TAS-8 scores (i.e., latent trait values between − 2.19 and 3.52; Fig. 2a). Item information plots for the eight TAS-8 items (Fig. 2b) indicated that all items contributed meaningful information to the overall test along the full trait distribution of interest. TAS-8 latent trait scores were also highly correlated with total scores on the TAS-20 (r = 0.910, 95% CrI [0.897, 0.922]), indicating that the general alexithymia factor being assessed by this short form is strongly related to the alexithymia construct as operationalized by the TAS-20 total score. Diagnostic group differences in TAS-8 latent trait scores remained large, with autistic individuals demonstrating substantially elevated levels of alexithymia on this measure (d = 1.014 [0.887, 1.139]).

Fig. 1 Item category characteristic curves (i.e., “trace lines”) for the eight TAS-8 items. Three of the items (TAS-20 items 11, 12, and 14) had neutral (“3”) responses that were not the most probable response at any point along the latent trait continuum, indicating that these response options were underutilized in our combined sample.

Fig. 2 a Estimated reliability of TAS-8 latent trait scores across the full latent alexithymia continuum. The horizontal dashed line indicates rxx = 0.7, the a priori threshold for acceptable score reliability. Individual reliabilities for trait scores between − 2.43 and 3.53 are all greater than or equal to this cutoff, including all trait levels estimable by the TAS-8 (i.e., θ between − 2.19 and 3.52). b Item-level information functions for TAS-8 items. Vertical dashed lines indicate the trait levels captured by the minimum TAS-8 score (all “0” responses, θ = − 2.19) and the maximum TAS-8 score (all “5” responses, θ = 3.52). The sum of all item information functions equals the test information function.

Validity analyses

Overall, the TAS-8 latent trait scores demonstrated a pattern of correlations with other variables that generally resembled the relationships seen in other clinical and non-clinical samples (Table 5). The TAS-8 latent trait score was highly correlated with autistic traits as measured by the SRS-2 (r = 0.642 [0.598, 0.686]), additionally exhibiting moderate correlations with lower-order (r = 0.386 [0.320, 0.450]) and higher-order (r = 0.432 [0.372, 0.494]) repetitive behaviors as measured by the RBS-R. TAS-8 latent trait scores were also correlated with psychopathology measures, exhibiting the hypothesized pattern of correlations with depression, anxiety, somatic symptom burden, social anxiety, and suicidality (rs = 0.275–0.423), as well as lower autism-related quality of life (r = −0.442 [− 0.503, − 0.385]). As with other versions of the TAS, the TAS-8 displayed a moderate-to-large correlation with trait neuroticism (r = 0.475 [0.416, 0.531]), raising the possibility that relationships between TAS-8 scores and internalizing psychopathology are driven by neuroticism rather than alexithymia per se. To investigate this possibility further, we calculated partial correlations between the TAS-8 and other variables after controlling for IPIP-N10 scores, using a Bayes factor to test the interval null hypothesis that rp falls between − 0.1 and 0.1 (i.e., < 1% of additional variance in the outcome is explained by the TAS-8 score after accounting for neuroticism). Bayes factors provided substantial evidence that the partial correlations between the TAS-8 and SRS-2, RBS-R subscales, BDI-II, and ASQoL exceeded the ROPE. Additionally, while partial correlations with the BFNE-S, PHQ-15, and BDI suicidality item were all greater than zero, Bayes factors suggested that all three of these correlations were more likely to lie within the ROPE than outside of it (all BFROPE < 0.258). 
There was only anecdotal evidence that the partial correlation between the TAS-8 and GAD-7 exceeded the ROPE (BFROPE = 2.18). However, there was a 91.3% posterior probability of that correlation exceeding the ROPE, suggesting that there was a strong likelihood of alexithymia explaining a meaningful amount of additional variance in anxiety symptoms beyond that accounted for by neuroticism.

The relationships between TAS-8 scores and demographic variables were also examined in order to determine whether relationships found in the general population apply to autistic adults. As hypothesized, TAS-8 scores showed a small and practically insignificant correlation with age (r = 0.032 [− 0.041, 0.104], BFROPE = \(5.77 \times 10^{-6}\)), likely due to the absence of older adults (i.e., ages 60+) in our sample. Alexithymia also showed a nonzero negative correlation with education level, although the magnitude of this relationship was small enough to not be practically significant (rpoly = − 0.089 [− 0.163, − 0.017], BFROPE = 0.045). Unlike in the general population, females in the SPARK sample had slightly higher TAS-8 scores (d = 0.183 [0.022, 0.343]), although this difference was small and not practically significant (BFROPE = 0.265). Additionally, there was an absence of practically significant differences in alexithymia by race/ethnicity (d = − 0.052 [− 0.247, 0.141], BFROPE = 0.029). Lastly, age of autism diagnosis was positively correlated with TAS-8 scores (r = 0.133 [0.06, 0.204]), although this correlation was also small enough to not be practically significant (BFROPE = 0.014).

Readability analysis

Using the FORCAST algorithm, we calculated the equivalent grade level of the full TAS-20 (including instructions) to be 10.2 (i.e., appropriate for individuals at the reading level of an American 10th-grader [chronological age 15–16 years] after the second month of class). This estimate was several grades higher than that produced using the Flesch–Kincaid algorithm (FKGL = 6.7; FRE = 73: “Fairly Easy”). Using the FORCAST algorithm, the TAS-8 items demonstrated a grade level of 8.8, indicating a moderate decrease in word difficulty. This decreased reading level compared to the TAS-20 was also reflected in the Flesch–Kincaid measures (FKGL = 4.5; FRE = 86: “Easy”). Thus, in addition to improving the psychometric properties of the measure, our item reduction procedure seemingly improved the overall readability of the TAS.

Discussion

While alexithymia is theorized to account for many traits associated with the autism phenotype [39,40,41,42,43,44,45,46,47,48,49,50,51], studies to date have not typically assessed the psychometric properties of alexithymia measures in the autistic population, and the suitability of most alexithymia measures for comparing autistic and non-autistic individuals in an unbiased manner remains largely unknown. In the current study, we performed a rigorous examination of the psychometric properties of the TAS-20, the most widely used measure of self-reported alexithymia, in a large and diverse sample of autistic adults. Overall, we found the TAS-20 questionnaire to have a number of psychometric issues, including a poorly fitting measurement model, several items that are minimally related to the overall alexithymia construct, and items that function differentially when answered by autistic and non-autistic adults. In response to these issues, we performed an empirically based item reduction of the TAS-20 questionnaire, which resulted in an eight-item unidimensional TAS short form (TAS-8). In addition to reducing participant burden compared to the TAS-20, the TAS-8 was found to be a psychometrically robust instrument in both general population and autistic samples, displaying strong model-data fit to a unidimensional structure, high score reliability, strong nomological validity, and practically ignorable amounts of I-DIF between diagnostic groups and subgroups of autistic and general population adults. Item reduction also lowered the reading level of the TAS-8 compared to the TAS-20, indicating that this form may be more comprehensible to younger, less educated, or less cognitively able respondents. In sum, our findings suggest that the TAS-8 is a reliable and valid measure of alexithymia suitable for use by autistic adults as well as adults in the general population.

While the 20-item TAS possessed adequate composite score reliability in our sample, bifactor confirmatory factor models failed to support the theorized structure of the questionnaire in the autistic population. The TAS-20 items assessing the EOT facet of the alexithymia construct and the form’s reverse-coded items were particularly problematic, both exhibiting poor subscale reliabilities and contributing little common variance to the general alexithymia factor. These psychometric issues were further confirmed in our general population HPP sample, indicating that these problems were not unique to the autistic population. Removal of the EOT and reverse-coded items from the model greatly improved overall fit, but three additional items needed to be removed in order to meet our a priori standards of adequate IRT model fit and negligible I-DIF by diagnostic group. The final TAS-8 short form consisted of five DIF items [1, 6, 9, 13, 14] and three DDF items [2, 11, 12] that ostensibly form the core of the “general alexithymia” construct measured by the TAS-20 total score. Using item response theory, we generated norm-referenced TAS-8 scores that are immediately interpretable on the scale of a Z-score (i.e., M = 0, SD = 1) and can similarly be scaled to the familiar T-score metric (M = 50, SD = 10). As scores on the TAS-8 are both norm-referenced and psychometrically robust, we believe they present a viable alternative to TAS-20 total scores in any study protocol that includes the TAS-20 or one of its short forms (notably, these scores can be calculated from any subset of the eight TAS-8 items). To facilitate the calculation and use of the TAS-8 latent trait scores in alexithymia research, we have created an easy-to-use online scoring tool (available at http://asdmeasures.shinyapps.io/TAS8_Score) that converts TAS-8 item responses into general population-normed latent trait scores and corresponding T-scores.

In addition to deriving a psychometrically robust short version of the TAS-20, the current study also sheds light on the areas of the form that are most psychometrically problematic, notably the EOT subscale. This subscale was the primary driver of poor TAS-20 model fit in the current study, and even when method factors were appropriately modeled, the reliability of the EOT subscale score was unacceptably low. Notably, it is not uncommon for researchers to perform subscale-level analyses using the TAS-20, examining correlations between DIF/DDF/EOT subscale scores and other constructs of theoretical interest [2, 60]. As the EOT scale of the TAS-20 does not appear to measure a single coherent construct (or alexithymia itself, in the current samples), we strongly question the validity of analyses conducted using this subscale by itself and recommend that researchers restrict their use of the TAS-20 to only the total score and potentially the DIF/DDF subscales.

Tests of convergent and divergent validity of the TAS-8 score were largely in line with prior results, indicating that self-reported alexithymia is moderately to strongly correlated with autistic traits, repetitive behaviors, internalizing psychopathology, suicidality, and poorer quality of life. Relationships were also observed between TAS-8 scores and sex, age of autism diagnosis, and education level, although these effects were small enough to be practically insignificant (i.e., |r|s < 0.2 and |d|s < 0.2). Moreover, despite a fairly large correlation between TAS-8 scores and neuroticism, partial correlation analyses demonstrated that alexithymia still explained substantial unique variance in autism symptomatology, depression, generalized anxiety (although the Bayes factor for this effect was only anecdotal), and quality of life over and above that accounted for by neuroticism. However, partial correlations with somatic symptom burden, social anxiety, and suicidal ideation were more likely to fall within the pre-specified ROPE than outside it, suggesting that alexithymia in the autistic population only predicts these symptom domains insofar as it correlates positively with trait neuroticism. A particularly important future direction in alexithymia research will be to re-examine studies wherein alexithymia was found to be a “more useful predictor” of some clinical outcome when compared to autistic traits [60]; to date, these studies have not taken trait neuroticism into account, and we believe it is quite likely that alexithymia will no longer be a stronger predictor of many other constructs once variance attributable to neuroticism is partialed out. Furthermore, as alternative measures of alexithymia such as the TSIA [73], BVAQ, and Perth Alexithymia Questionnaire (PAQ) [72] do not correlate highly with neuroticism [69, 74, 75], future research should also investigate the degree to which alexithymia measured multimodally continues to predict internalizing psychopathology in the autistic population and other clinical groups of interest.

One particularly surprising finding is the poor correlation between alexithymia and somatic symptom burden, given the theoretical status of alexithymia as a potential driver of somatization and a large literature showing relationships between these constructs [2]. One reason that this correlation may be substantially attenuated is that our short form removed the psychometrically problematic TAS-20 item 3 (I have physical sensations that even doctors don’t understand.), which, in addition to assessing the experience of undifferentiated emotions common in alexithymia, also seemingly captures the phenomenon of medically unexplained symptoms. We confirmed that this was in fact the case in our SPARK sample, as the polyserial correlation between this item and PHQ-15 total scores was very high (rpoly = 0.492 [0.435, 0.543]) and only minimally attenuated after controlling for overall alexithymia as measured by the TAS-8 latent trait score (rp,poly = 0.424 [0.364, 0.485], BFROPE = \(4.79 \times 10^{10}\)). Notably, a recent study has found that item 3 of the TAS-20 is the single most important item when discriminating individuals with a functional somatic condition (fibromyalgia) from healthy controls [162], providing additional evidence to support our suspicion that this particular item drives much of the correlation between the TAS-20 and somatic symptomatology. Additional work in this area should attempt to measure alexithymia in a multimodal manner (e.g., simultaneously administering the TAS-8, a second self-report questionnaire such as the BVAQ [63] or PAQ [72], an observer-report measure such as the Observer Alexithymia Scale [163], and an interview measure such as the TSIA), as such multi-method studies are able to separate out the degree of variance in these measures due to alexithymia versus construct-irrelevant method factors (such as self-report questionnaire response styles).
Multi-method alexithymia work is almost entirely absent from the autism literature [164], although such work on a larger scale (i.e., with samples large enough to fit latent variable models) is necessary to determine which relationships between alexithymia and important covariates of interest (e.g., somatization, neuroticism, autism symptoms, emotion recognition, and psychopathology) are due to the latent alexithymia construct or measurement artifacts specific to certain alexithymia assessments or response modalities.

This work has meaningful implications for the study of alexithymia in the autistic population and in general, as it provides strong psychometric support for the TAS-8 questionnaire as a general-purpose measure of alexithymia across multiple clinical and non-clinical populations. These findings are particularly useful for autism research, as they indicate that the TAS-8 can be used to compare levels of alexithymia between autistic and general-population samples without concern that score differences are substantially biased by qualitative differences in the ways individuals in each group answer the questionnaire items. Moreover, the between-group difference in TAS-8 scores (d = 1.014) was approximately 15% larger than the same group difference in TAS-20 scores (d = 0.880), indicating that the TAS-8 discriminates between autistic and non-autistic adults better than its parent form. Although the current study did not validate this form for use in other clinical populations where alexithymia is a trait of interest (e.g., individuals with eating disorders, functional somatic syndromes, substance use disorders, or general medical conditions), future studies in these populations are warranted to determine whether the improved measurement properties of the TAS-8 also improve inferences about alexithymia in those groups.
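The "approximately 15% larger" figure is simply the ratio of the two standardized mean differences. The sketch below computes Cohen's d with a pooled standard deviation, which is an assumption here since the exact standardizer used in the study is not stated in this section, and then reproduces the relative increase from the two reported d values.

```python
import math
from statistics import mean, variance


def cohens_d(group1, group2):
    """Cohen's d using the pooled (n - 1 weighted) standard deviation."""
    n1, n2 = len(group1), len(group2)
    s1, s2 = variance(group1), variance(group2)  # sample variances (n - 1 denominator)
    pooled_sd = math.sqrt(((n1 - 1) * s1 + (n2 - 1) * s2) / (n1 + n2 - 2))
    return (mean(group1) - mean(group2)) / pooled_sd


# Reported between-group effect sizes (autistic vs. general population)
d_tas8, d_tas20 = 1.014, 0.880
pct_larger = (d_tas8 / d_tas20 - 1.0) * 100.0
print(f"TAS-8 effect is {pct_larger:.1f}% larger than the TAS-20 effect")
```

Because d is scale-free, this comparison is meaningful even though the two forms have different numbers of items and score ranges.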

Limitations

This study has a number of strengths, including its large and diverse sample of both autistic and non-autistic participants, robust statistical methodology, wide array of clinical measures with which to assess the validity of the TAS-8, and consideration of the role that neuroticism plays in explaining relationships between alexithymia and internalizing psychopathology. However, this investigation is not without limitations. Most notably, the two samples of participants (from SPARK and HPP, respectively), while both recruited online, were drawn from different studies with dissimilar protocols and different versions of the TAS questionnaire. The HPP sample completed the TAS-16 questionnaire, which omits four of the more poorly performing items of the original TAS-20. Thus, in order to estimate TAS-20 total scores in this group, we were required to impute those four items for all 721 participants, with an unknown degree of error. Interestingly, the HPP sample reported TAS-20 scores that were 1.5–6 points higher on average than those of previous large-scale general-population studies using the TAS-20 [18, 165], and it is thus unclear whether imputing four items using data from an autistic sample artificially inflated these scores. However, as the TAS-8 did not include any of the imputed items, we can be reasonably confident that scores on this measure reflect the underlying alexithymia levels in the current general population sample. Moreover, supplemental analyses using only the 16 items completed by both groups yielded results nearly identical to those obtained using the imputed scores, further supporting the validity of our conclusions.

An additional limitation is that the HPP sample was not screened for autism diagnoses, and there remains a possibility that some of these individuals could have met diagnostic criteria for autism or had a first-degree relative on the autism spectrum. However, previous studies have indicated that a small proportion of autistic individuals (i.e., approximately 2% per current prevalence estimates [91]) in an otherwise neurotypical sample is insufficient to substantially bias parameter estimates or attenuate differential item functioning [80], leading us to believe that the current group comparisons remain valid. A further limitation is that the HPP sample was assessed on only a small number of relevant demographic domains, leaving unanswered questions about the relationships between alexithymia as measured by the TAS-8 and many demographic and clinical variables of interest in general-population adults. Individuals in the HPP sample also did not complete measures of psychopathology or neuroticism, which may account for a substantial portion of the diagnostic group difference in alexithymia scores. Fortunately, as the TAS-8 score can be calculated from item-level TAS-20 data, many existing datasets can help answer these questions, further supporting or refuting the validity of the TAS-8 as a measure of alexithymia in the general population.

In addition to the limitations of the HPP sample, the better-characterized SPARK sample had several limitations of its own. As discussed in our previous work with this sample [80], it is not representative of the autistic population, having a higher proportion of females, a higher average education level, a later mean age of autism diagnosis, and a higher prevalence of co-occurring anxiety and depressive disorders than is expected in this population [166]. The sex ratio of this sample is particularly divergent from that seen in most clinical samples (i.e., a 3–4:1 male-to-female ratio [167]), and thus the over-representation of females may affect group-level parameters such as the mean alexithymia score modeled for the autistic population in this sample. Nevertheless, a strength of the IRT method is that unrepresentative samples can still provide unbiased item parameter estimates, provided that there is minimal I-DIF between subgroups of the population of interest [168]. As we found little meaningful I-DIF within autistic adults across numerous demographic and clinical groupings, we are confident that the parameter estimates generated in the current study will generalize well to future samples. In addition, as SPARK does not include data on cognitive functioning, we were unable to determine whether the TAS-8 demonstrated relationships with verbal IQ, as has been previously reported with TAS-20 scores in the autistic population [51]. It remains unclear whether this relationship is an artifact of the generally high reading level of the TAS-20 (which would ideally be attenuated using the TAS-8) or a manifestation of some other relationship between alexithymia and verbal intelligence (e.g., language impairment [reflected in reduced verbal intelligence] being a developmental precursor of alexithymia, as posited by the recently proposed “alexithymia–language hypothesis” [169]).
Future studies of alexithymia in the autistic population should incorporate measures of verbal and nonverbal cognitive performance, examining the relationships between these constructs and alexithymia and additionally testing whether self-report measures such as the TAS-8 function equivalently in autistic adults with higher and lower verbal abilities.

Another limitation concerns the correspondence of the TAS-8 to the theoretical alexithymia construct itself, as initially proposed by Sifneos and colleagues [1, 170]. As noted previously, alexithymia is made up of four interrelated facets: DIF, DDF, EOT, and difficulty fantasizing (DFAN), the latter two of which are not measured directly by the TAS-8. Because of this, the questionnaire arguably lacks content validity compared to the full TAS-20 or four-dimensional measures such as the TSIA. However, our results indicated that the EOT factor measured by the TAS was not highly correlated with the “general alexithymia” factor (which had its highest loadings on DIF/DDF items) and therefore does not adequately measure this facet of the alexithymia construct. Other measures, such as the PAQ [72], have found that a more restricted EOT factor (primarily reflecting one’s tendency to not focus attention on one’s own emotions) correlates much more highly with other measures of the alexithymia construct, likely representing a better operationalization of the EOT facet of alexithymia. In addition, items reflecting the DFAN dimension of alexithymia have displayed poor psychometric properties in both questionnaire and interview measures, and there is currently debate as to whether these items truly measure part of the alexithymia construct [2, 33, 171,172,173,174]. Moreover, studies in the autism population examining the correlates of alexithymia have found the DIF and DDF subscales to be most important in predicting clinically meaningful outcomes such as depression, anxiety, and social communication difficulties [59]. Thus, it is our belief that the “core” of alexithymia (consisting of difficulty identifying and describing emotional experiences) is likely sufficient to represent this construct, particularly when options to measure the EOT and DFAN facets are psychometrically inadequate. 
Although there is ongoing debate over whether the definition of alexithymia should be changed to exclude certain historically relevant facets of the construct [170, 174], we believe that construct definitions should change over time, incorporating relevant findings such as empirical tests of latent variable models. Future research in alexithymia would greatly benefit from additional psychometric studies that aim to generate optimal instruments to measure all facets of the alexithymia construct, coupled with tests of the incremental validity of the EOT/DFAN trait facets over and above a score composed of solely DIF/DDF items.

A final limitation of our study is that we were unable to test all meaningful psychometric properties of the TAS-8. In particular, our study was cross-sectional, which prevented us from assessing test–retest reliability, temporal stability, and I-DIF across repeated test administrations. Additionally, as alexithymia appears to be amenable to change with psychological interventions [175, 176], future studies should also investigate whether the TAS-8 latent trait score is sensitive to change and, if so, determine the minimal clinically important difference in this score. Additional psychometric characteristics that could be tested include convergent validity with other alexithymia measures such as the PAQ or TSIA, predictive validity for clinically meaningful outcomes, and I-DIF across language, culture, medium of administration (e.g., pen and paper vs. electronic), age group (e.g., adolescents vs. adults), and other diagnostic contrasts beyond the autism population. As inferences in psychological science are only as reliable and valid as the measures they utilize [177], we encourage autism researchers, and psychological scientists more broadly, to consider the importance of measurement in their work and to devote more effort to investigating and justifying the ways in which complex psychological constructs such as alexithymia are operationalized.

Conclusions

The TAS-20 is a widely used measure of alexithymia that has more recently become the de facto measure of choice for this construct in the autism literature. However, this measure has so far lacked robust psychometric evidence for its reliability and validity in the population of autistic adults. Leveraging two large datasets of autistic and general-population adults, we performed an in-depth investigation of the TAS-20 and its measurement properties in autistic adults, revealing several psychometric shortcomings of this commonly used questionnaire. By reducing the number of items on the measure, we were able to produce a unidimensional short form, the TAS-8, which exhibited psychometric properties superior to those of the TAS-20 in samples of both autistic and non-autistic adults. Furthermore, to allow others to utilize the population-normed latent trait scores generated by our IRT model, we have created a user-friendly online score calculator for the TAS-8 that is freely available to interested researchers (https://asdmeasures.shinyapps.io/TAS8_Score/). Although the measurement properties of the TAS-8 were strong in this study, we stress that this single measure should not be considered the “gold standard” of alexithymia measurement in autism or any other population. In agreement with the original authors of the TAS [2], we recommend that researchers interested in robustly measuring the alexithymia construct use multiple measures that include both self- and proxy-report questionnaires, ideally supplemented by observational or interview measures. Additional studies are still needed to fully explore the psychometric properties of the TAS-8, but in light of the current study, we believe that this revised questionnaire has the potential to greatly improve the measurement of alexithymia both within and outside the field of autism research.
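Latent trait scores of the kind returned by an IRT-based score calculator are commonly obtained by expected a posteriori (EAP) scoring. The sketch below assumes a Samejima graded response model, a standard-normal prior on the latent trait, and responses recoded to 0–4 (with any reverse-keyed items already reversed). The function names and all item parameters are hypothetical placeholders; they are not the published TAS-8 estimates and not the code behind the online calculator.

```python
import math


def grm_category_probs(theta, a, thresholds):
    """Graded response model: P(X = k | theta) for k = 0..len(thresholds)."""
    # Cumulative probabilities P(X >= k), padded with P(X >= 0) = 1 and P(X >= K + 1) = 0
    cum = [1.0] + [1.0 / (1.0 + math.exp(-a * (theta - b))) for b in thresholds] + [0.0]
    return [cum[k] - cum[k + 1] for k in range(len(thresholds) + 1)]


def eap_score(responses, items, n_points=81, lo=-4.0, hi=4.0):
    """EAP estimate of theta and its posterior SD via rectangular quadrature."""
    step = (hi - lo) / (n_points - 1)
    grid = [lo + i * step for i in range(n_points)]
    weights = []
    for theta in grid:
        w = math.exp(-0.5 * theta ** 2)  # unnormalized N(0, 1) prior density
        for x, (a, bs) in zip(responses, items):
            w *= grm_category_probs(theta, a, bs)[x]  # likelihood of observed category
        weights.append(w)
    total = sum(weights)
    post_mean = sum(t * w for t, w in zip(grid, weights)) / total
    post_var = sum((t - post_mean) ** 2 * w for t, w in zip(grid, weights)) / total
    return post_mean, math.sqrt(post_var)


# HYPOTHETICAL parameters for 8 items: (discrimination a, ordered thresholds b1 < b2 < b3 < b4)
ITEMS = [(1.8, [-1.5, -0.5, 0.5, 1.5])] * 8

theta_hat, theta_se = eap_score([2, 3, 1, 2, 4, 2, 3, 2], ITEMS)
print(f"theta = {theta_hat:.2f} (posterior SD = {theta_se:.2f})")
```

Unlike a sum score, the EAP estimate weights each response by the item's discrimination and thresholds, and its posterior SD provides a person-level standard error; both depend on the item parameters, which is why parameters free of I-DIF matter when scores are compared across groups.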

Availability of data and materials

Approved researchers can obtain the SPARK population data set described in this study by applying at https://base.sfari.org. Open data from the Human Penguin Project can be downloaded from Open Science Framework (https://osf.io/2rm5b/). Custom R code to perform the analyses in this paper can be found on the ResearchGate profile of the corresponding author (https://www.researchgate.net/profile/Zachary_Williams19/publications). The remainder of research materials can be obtained from the corresponding author upon request.

Change history

12 August 2021

Editor's Note: Readers are informed that the revised manuscript referred to in the retraction notice for this article is now published at https://doi.org/10.1186/s13229-021-00463-5

30 May 2021

A Correction to this paper has been published: https://doi.org/10.1186/s13229-021-00446-6

Abbreviations

- ADHD: Attention deficit hyperactivity disorder
- ASQoL: Autism Spectrum Quality of Life
- BDI-II: Beck Depression Inventory-II
- BVAQ: Bermond–Vorst Alexithymia Questionnaire
- BFNE-S: Brief Fear of Negative Evaluation-Short
- cML: Categorical maximum likelihood
- CFA: Confirmatory factor analysis
- CFI: Comparative fit index
- CrI: Credible interval
- I-DIF: Differential item functioning
- DDF: Difficulty describing feelings
- DFAN: Difficulty fantasizing
- DIF: Difficulty identifying feelings
- ESSD: Expected score standardized difference
- ETSSD: Expected test score standardized difference
- ECV: Explained common variance
- EOT: Externally oriented thinking
- FDR: False discovery rate