Abstract

Background

Large multicentre trials are complex and expensive projects. A key factor for their successful planning and delivery is how well sites meet their targets in recruiting and retaining participants, and in collecting high-quality, complete data in a timely manner. Collecting and monitoring easily accessible data relevant to performance of sites has the potential to improve trial management efficiency. The aim of this systematic review was to identify metrics that have either been proposed or used for monitoring site performance in multicentre trials.

Methods

We searched the Cochrane Library, five biomedical bibliographic databases (CINAHL, EMBASE, Medline, PsychINFO and SCOPUS) and Google Scholar for studies describing ways of monitoring or measuring individual site performance in multicentre randomised trials. Records identified were screened for eligibility. For included studies, data on study content were extracted independently by two reviewers, and disagreements resolved by discussion.

Results

After removing duplicate citations, we identified 3188 records. Of these, 21 were eligible for inclusion and yielded 117 performance metrics. The median number of metrics reported per paper was 8, range 1–16. Metrics broadly fell into six categories: site potential; recruitment; retention; data collection; trial conduct and trial safety.

Conclusions

This review identifies a list of metrics to monitor site performance within multicentre randomised trials. Those that would be easy to collect, and for which monitoring might trigger actions to mitigate problems at site level, merit further evaluation.

Similar content being viewed by others

Background

Multicentre randomised trials are complex and expensive projects. Improving the efficiency and quality of trial conduct is important, for patients, funders, researchers, clinicians and policy-makers [1]. A key factor in successful planning and delivery of multicentre trials is how well sites meet their targets in recruiting and retaining participants, and in collecting high-quality, complete data in a timely manner [2]. Collecting and monitoring easily accessible data relevant to performance of sites has the potential to improve the efficiency and success of trial management. Ideally, such performance metrics should provide information that quickly identifies potential problems so they can be mitigated or avoided, hence minimising their impact and improving the efficiency of trial conduct.

We are not aware of any standardised metrics for monitoring site performance in multicentre trials. A recent query to all UK Clinical Research Collaboration (UKCRC), registered Clinical Trials Units (CTUs) revealed that many units routinely collect and report data for each site in a trial; such as numbers randomised, case report forms (CRFs) returned, data quality, missing primary outcome data, and serious breaches. How such data are used to assess and manage performance varies widely however [3,4,5,6,7]. Agreeing a small number of metrics for site performance that could be easily collected, presented and monitored in a standardised way by a trial manager or trial co-ordinator would be a potentially useful tool to improve efficient trial conduct.

Currently, trial teams, sponsors, funders and oversight committees monitor site performance and trial conduct based primarily on recruitment [8]. Whilst clearly important, recruitment is not the only performance indicator that matters for a successful trial. Using a range of additional metrics that include data quality, protocol compliance and participant retention would give a better overall measure of the performance of each trial site, and the trial overall. To be low cost and efficient, the number of metrics monitored at any one time should be limited to no more than 8 to 12 [9]. We conducted a systematic review to identify performance metrics that have been used, or proposed, for monitoring or measuring performance at sites in multicentre randomised trials.

Methods

We performed a systematic review to identify metrics that have been used or proposed for monitoring or measuring performance at individual sites in multicentre randomised trials.

Criteria for potentially eligible studies

Studies were potentially eligible for inclusion if they:

-

Reported one or more site performance metric, either used or proposed for use, specifically for the purpose of measuring individual site performance

-

Were multicentre randomised trials, or concerning multicentre trials

-

Were published in English

-

Related to randomised trials involving humans

Studies where the strategy for monitoring site performance was randomly allocated were included. We anticipated that there might be studies where the adoption of an individual performance metric might have been tested by randomly allocating sites to using that particular metric or not. Studies relevant to both publically funded and industry-funded trials were included.

Search strategy

We searched the Cochrane Library and five biomedical bibliographic databases (CINAHL, Excerpta Medica database (EMBASE), Medical Literature Analysis and Retrieval System Online (Medline), Psychological Information Database (PsychINFO) and SCOPUS) and Google Scholar from 1980 to 2017 week 07. The search strategy is provided as an Appendix (Table 3).

Selection of studies

Two reviewers (KW, JT) independently assessed for inclusion the titles and abstracts identified by the search strategy. If there was disagreement about whether a record should be included, we obtained the full text.

We sought full-text copies for all potentially eligible records, and two reviewers (KW, JT) independently assessed these for inclusion. Disagreements were resolved by discussion, and if agreement could not be reached the study was independently assessed by a third reviewer (LD). Multiple reports of the same study were linked together.

Data extraction and data entry

Two reviewers (KW, JT) extracted data independently onto a specifically designed data extraction form. In the few cases where full text was not available (n = 9), data were extracted using the title and abstract only. Data were entered into an Excel spreadsheet, and checked.

Data were extracted on the design of the randomised trial (participants, intervention, control, number of sites and target sample size); whether the performance metric/s was theoretical or applied. For each performance metric we collected data that included: a verbatim description of the metric; how the metric was measured or expressed; timing of the measurement and during which phase of the study; who measured the metric; if a threshold exists to trigger action, what the threshold was and what action it triggers; and whether the metric was recommended by the authors.

Data analysis

We described the flow of studies through the review, with reasons for being removed or excluded, using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidance [10]. Characteristics of each study were described and tabulated. Analyses were descriptive only, with no statistical analyses anticipated.

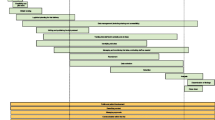

Results

The database search identified 3365 records, of which 177 were duplicates, leaving 3188 screened for eligibility (Fig. 1). At screening, we obtained full-text copies for 147 records to determine eligibility. For a further seven records full-text copies were unavailable, and so screened was based on the abstract only. Of those full-text copies and abstracts (for papers where the full text was unavailable), there was disagreement on three papers. Following discussion two papers were accepted for inclusion [11, 12] and one paper was excluded [13].

Twenty-one studies were agreed for inclusion, of which 14 were studies proposing performance metrics and seven were studies using performance metrics (Table 1). These 21 studies reported a total of 117 performance metrics. The median number of performance metrics reported per study was 8, with the range being 1–16. Those 117 metrics were then screened, to exclude any judged as: lacking sufficient clarity; being unrelated to individual site performance; being too specific to an individual trial methodology or pertaining to clinical outcomes not trial performance. This left 87 performance metrics to be considered for use in day-to-day trial management. The metrics broadly fell into six main categories: assessing site potential before recruitment starts; and monitoring recruitment, retention, quality of data collection, quality of trial conduct, and trial safety (Table 2).

Discussion

As far as we are aware, this is the first systematic review to identify and describe proposed or utilised metrics to monitor site performance in multicentre randomised trials. It provides a list of performance metrics, which can be used to contribute to developing and agreed a proposed set of performance metrics for use in day-to-day trial management. We identified 87 performance metrics which fell broadly into six main categories.

A strength of our study was the comprehensive search of the literature.

In planning this systematic review we envisaged that studies would be identified that had evaluated individual performance metrics either by implementation mid-way through a study, or ideally by randomising individual sites to use of a particular metric or not. Unfortunately, there was a paucity of such studies. Most studies suggested performance metrics on a purely theoretical basis, and did not provide data on the actual use of suggested metrics. The main limitations of our study were the lack of studies implementing performance metrics and reporting the effects of their utilisation, and that published work on this topic is limited, which is perhaps surprising as informal assessment of how sites perform in multicentre trials is common.

This list of performance metrics contributed to development of a Delphi survey sent to trial managers, UKCRC CTU directors and key clinical trial stakeholders, which is reported elsewhere. They were invited to participate through the UK Trial Managers’ Network (UK TMN) and UK Clinical Research Collaboration (UKCRC CTU) Network. Three Delphi rounds were used to steer the groups to consensus, refining the list of performance metrics. The reasons for their decisions were documented. Finally, data from the Delphi survey was presented to stakeholders in a priority setting expert workshop, providing participants with the opportunity to express their views, hear different perspectives and think more widely about monitoring of site performance. This was used to establish a consensus among experts on the top key performance metrics, expected to number around 8–12.

Conclusions

This study provides trialists for the first time with a comprehensive description of performance metrics described in the literature that have been proposed or used in the context of multicentre randomised trials. It will assist future work to develop a concise, practical list of performance metrics which could be used in day-to-day trial management to improve the performance of individual sites. This has the potential to reduce both the financial cost of delivering a multicentre trial, and the research waste and delay in scientific progress that results when trials fail to meet their recruitment target, are poorly conducted, or have inadequate data.

Abbreviations

- CINAHL:

-

Cumulative Index to Nursing and Allied Healthy Literature

- CRF:

-

Case report form

- CTUs:

-

Clinical Trials Units

- EMBASE:

-

Excerpta Medica database

- Medline:

-

Medical Literature Analysis and Retrieval System Online

- NIHR:

-

National Institute for Health Research

- PRISMA:

-

Preferred Reporting Items for Systematic Reviews and Meta-Analyses

- PsychINFO:

-

Psychological Information Database

- UK TMN:

-

UK Trial Managers’ Network

- UKCRC:

-

UK Clinical Research Collaboration

References

Duley L, Antman K, Arena J, Avezum A, Blumenthal M, Bosch J, Chrolavicius S, Li T, Ounpuu S, Perez AC, et al. Specific barriers to the conduct of randomized trials. Clin Trials. 2008;5(1):40–8.

Berthon-Jones N, Courtney-Vega K, Donaldson A, Haskelberg H, Emery S, Puls R. Assessing site performance in the Altair study, a multinational clinical trial. Trials. 2015;16(1):138 (no pagination).

Coleby D, Whitham D, Duley L. Can site performance be predicted? Results of an evaluation of the performance of a site selection questionnaire in five multicentre trials. Trials. 2015;16(Suppl 2):176.

Kirkwood AA, Cox T, Hackshaw A. Application of methods for central statistical monitoring in clinical trials. Clin Trials. 2013;10(5):783–806.

Bakobaki JM, Rauchenberger M, Joffe N, et al. The potential for central monitoring techniques to replace on-site monitoring: Findings from an international multi-centre clinical trial. Clin Trials 2012;9:257–64.

Timmermans C, Venet D, Burzykowski T. Data-driven risk identification in phase III clinical trials using central statistical monitoring. Int J Clin Oncol. 2016;21(1):38–45.

Tantsyura V, Dunn IM, Fendt K, Kim YJ, Waters J, Mitchel J. Risk-based monitoring: a closer statistical look at source document verification, queries, study size effects, and data quality. Ther Innov Regul Sci. 2015;49(6):903–10.

Smith B, Martin L, Martin S, Denslow M, Hutchens M, Hawkins C, Panier V, Ringel MS. What drives site performance in clinical trials? Nat Rev Drug Discov. 2018;17(6):389–90.

Dorricott K. Using metrics to direct performance improvement efforts in clinical trial management. Monitor. 2012;26(4):9–13.

Moher D, Liberati A, Tetzlaff J, Altman DG, Grp P. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: the PRISMA Statement. J Clin Epidemiol. 2009;62(10):1006–12.

Hanna M, Minga A, Fao P, Borand L, Diouf A, Mben JM, Gad RR, Anglaret X, Bazin B, Chene G. Development of a checklist of quality indicators for clinical trials in resource-limited countries: The French National Agency for Research on AIDS and Viral Hepatitis (ANRS) experience. Clin Trials. 2013;10(2):300–18.

Thom E. A center performance assessment tool in a multicenter clinical trials network. Clin Trials. 2011;8(4):519.

Hullsiek KH, Wyman N, Kagan J, Grarup J, Carey C, Hudson F, Finley E, Belloso W. Design of an international cluster-randomized trial comparing two data monitoring practices. Clin Trials. 2013;10:S32–3.

Bose A, Das S. Trial analytics—A tool for clinical trial management. Acta Poloniae Pharmaceutica - Drug Research. 2012;69(3):523–33.

Djali S, Janssens S, Van Yper S, Van Parijs J. How a data-driven quality management system can manage compliance risk in clinical trials. Drug Inform J. 2010;44(4):359–73.

Elsa VM, Jemma HC, Martin L, Jane A. A key risk indicator approach to central statistical monitoring in multicentre clinical trials: method development in the context of an ongoing large-scale randomized trial. Trials Conference: Clinical Trials Methodology Conference. 2011;12:1.

Glass HE, DiFrancesco JJ. Understanding site performance differences in multinational phase III clinical trials. Int J Pharmaceutical Med. 2007;21(4):279–86.

Jou JH, Sulkowski MS, Noviello S, Long J, Pedicone LD, McHutchison JG, Muir AJ. Analysis of site performance in academic-based and community-based centers in the IDEAL study. J Clin Gastroenterol. 2013;47(10):e91–5.

Khatawkar S, Bhatt A, Shetty R, Dsilva P. Analysis of data query as parameter of quality. Perspect Clin Res. 2014;5(3):121–4.

Lee HJ, Lee S. An exploratory evaluation framework for e-clinical data management performance. Drug Inf J. 2012;46(5):555–64.

Rojavin MA. Recruitment index as a measure of patient recruitment activity in clinical trials. Contemp Clin Trials. 2005;26(5):552–6.

Rosendorf LL, Dafni U, Amato DA, Lunghofer B, Bartlett JG, Leedom JM, Wara DW, Armstrong JA, Godfrey E, Sukkestad E, et al. Performance evaluation in multicenter clinical trials: Development of a model by the AIDS Clinical Trials Group. Control Clin Trials. 1993;14(6):523–37.

Sweetman EA, Doig GS. Failure to report protocol violations in clinical trials: a threat to internal validity? Trials. 2011;12:214 (no pagination).

Tudur Smith C, Williamson P, Jones A, Smyth A, Hewer SL, Gamble C. Risk-proportionate clinical trial monitoring: an example approach from a non-commercial trials unit. Trials. 2014;15(1):127 (no pagination).

Wilson B, Provencher T, Gough J, Clark S, Abdrachitov R, de Roeck K, Constantine SJ, Knepper D, Lawton A. Defining a central monitoring capability: sharing the experience of TransCelerate BioPharma's approach, Part 1. Ther Innov Regul Sci. 2014;48(5):529–35.

Katz N. Development and validation of a clinical trial data surveillance method to improve assay sensitivity in clinical trials. J Pain. 2015;1:S88.

Kim J, Zhao W, Pauls K, Goddard T. Integration of site performance monitoring module in web-based CTMS for a global trial. Clin Trials. 2011;8(4):450.

Rifkind BM. Participant recruitment to the coronary primary prevention trial. J Chronic Dis. 1983;36(6):451–65.

Saunders L, Clarke F, Hand L, Jakab M, Watpool I, Good J, Heels-Ansdell D. Screening weeks: a pilot trial management metric. Crit Care Med. 2015;1:330.

Sun J, Wang J, Liu G. Evaluation of the quality of investigative centers using clinical ratings and compliance data. Contemp Clin Trials. 2008;29(2):252–8.

Wear S, Richardson PG, Revta C, Vij R, Fiala M, Lonial S, Francis D, DiCapua Siegel DS, Schramm A, Jakubowiak AJ, et al. The multiple myeloma research consortium (MMRC) model: Reduced time to trial activation and improved accrual metrics. Blood Conference: 52nd Annual Meeting of the American Society of Hematology, ASH. 2010;116(21):3803.

Funding

This work was funded by NIHR CTU Support funding. The views expressed are those of the author(s) and not necessarily those of the National Health Service (NHS), the National Institute for Health Research (NIHR) or the Department of Health.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Author information

Authors and Affiliations

Contributions

LD and DW conceived the study. LD, DW, JT, KW and AM designed the study and wrote the protocol. JT and KW performed the search and collected the data. KW analysed the data and drafted the paper with input from LD and JT. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Walker, K.F., Turzanski, J., Whitham, D. et al. Monitoring performance of sites within multicentre randomised trials: a systematic review of performance metrics. Trials 19, 562 (2018). https://doi.org/10.1186/s13063-018-2941-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13063-018-2941-8