Abstract

Background

Quality improvement collaboratives are widely used to improve health care in both high-income and low- and middle-income settings. Teams from multiple health facilities share learning on a given topic and apply a structured cycle of change testing. Previous systematic reviews reported positive effects on target outcomes, but the roles of context and mechanisms of change are underexplored. This realist-inspired systematic review aims to analyse contextual factors influencing intended outcomes and to identify how quality improvement collaboratives may result in improved adherence to evidence-based practices.

Methods

We built an initial conceptual framework to drive our enquiry, focusing on three context domains: health facility setting; project-specific factors; wider organisational and external factors; and two further domains pertaining to mechanisms: intra-organisational and inter-organisational changes. We systematically searched five databases and grey literature for publications relating to quality improvement collaboratives in a healthcare setting and containing data on context or mechanisms. We analysed and reported findings thematically and refined the programme theory.

Results

We screened 962 abstracts of which 88 met the inclusion criteria, and we retained 32 for analysis. Adequacy and appropriateness of external support, functionality of quality improvement teams, leadership characteristics and alignment with national systems and priorities may influence outcomes of quality improvement collaboratives, but the strength and quality of the evidence is weak. Participation in quality improvement collaborative activities may improve health professionals’ knowledge, problem-solving skills and attitude; teamwork; shared leadership and habits for improvement. Interaction across quality improvement teams may generate normative pressure and opportunities for capacity building and peer recognition.

Conclusion

Our review offers a novel programme theory to unpack the complexity of quality improvement collaboratives by exploring the relationship between context, mechanisms and outcomes. There remains a need for greater use of behaviour change and organisational psychology theory to improve design, adaptation and evaluation of the collaborative quality improvement approach and to test its effectiveness. Further research is needed to determine whether certain contextual factors related to capacity should be a precondition to the quality improvement collaborative approach and to test the emerging programme theory using rigorous research designs.

Background

Improving quality of care is essential to achieve Universal Health Coverage [1]. One strategy for quality improvement is quality improvement collaboratives (QIC) defined by the Breakthrough Collaborative approach [2]. This entails teams from multiple health facilities working together to improve performance on a given topic supported by experts who share evidence on best practices. Over a short period, usually 9–18 months, quality improvement coaches support teams to use rapid cycle tests of change to achieve a given improvement aim. Teams also attend “learning sessions” to share improvement ideas, experience and data on performance [2,3,4]. Collaboration between teams is assumed to shorten the time required for teams to diagnose a problem and identify a solution and to provide an external stimulus for innovation [2, 3].

QICs are widely used in high-income countries and proliferating in low- and middle-income countries (LMICs), although solid evidence of their effectiveness is limited [5,6,7,8,9,10,11]. A systematic review on the effects of QICs, largely focused on high-income settings, found that three quarters of studies reported improvement in at least half of the primary outcomes [7]. A previous review suggested that evidence on QIC effectiveness is positive but highly contextual [5], and a review of the effects of QICs in LMICs reported a positive and sustained effect on most indicators [12]. However, there are important limitations. First, with one exception [11], systematic reviews define QIC effectiveness on the basis of statistically significant improvement in at least one, or at least half of “primary” outcomes [7, 12], neglecting the heterogeneity of outcomes and the magnitude of change. Second, studies included in the reviews are weak, most commonly before-after designs, while most randomised studies give insufficient detail of randomisation and concealment procedures [7], thus potentially overestimating the effects [13]. Third, most studies use self-reported clinical data, introducing reporting bias [8,9,10]. Fourth, studies generally draw conclusions based on facilities that completed the programme, introducing selection bias. Recent well-designed studies support a cautious assessment of QIC effectiveness: a stepped wedge randomised controlled trial of a QIC intervention aimed at reducing mortality after abdominal surgery in the UK found no evidence of a benefit on survival [14]. The most robust systematic review of QICs to date reports little effect on patient health outcomes (median effect size (MES) less than 2 percentage points), large variability in effect sizes for different types of outcomes, and a much larger effect if QICs are combined with training (MES 111.6 percentage points for patient health outcomes; and MES of 52.4 to 63.4 percentage points for health worker practice outcomes) [11]. A review of group problem-solving including QIC strategies to improve healthcare provider performance in LMICs, although mainly based on low-quality studies, suggested that these may be more effective in moderate-resource than in low-resource settings and their effect smaller with higher baseline performance levels [6].

Critiques of quality improvement suggest that the mixed results can be partly explained by a tendency to reproduce QIC activities without attempting to modify the functioning, interactions or culture in a clinical team, thus overlooking the mechanisms of change [15]. QIC implementation reports generally do not discuss how changes were achieved, and lack explicit assumptions on what contextual factors would enable them; the primary rationale for using a QIC often being that it has been used successfully elsewhere [7]. In view of the global interest in QICs, better understanding of the influence of context and of mechanisms of change is needed to conceptualise and improve QIC design and evaluation [6, 7]. In relation to context, a previous systematic review explored determinants of QIC success, reporting whether an association was found between any single contextual factor and any effect parameter. The evidence was inconclusive, and the review lacked an explanatory framework on the role of context for QIC success [16]. Mechanisms have been documented in single case studies [17] but not systematically reviewed.

In this review, we aim to analyse contextual factors influencing intended outcomes and to identify how quality improvement collaboratives may result in improved adherence to evidence-based practices, i.e. the mechanisms of change.

Methods

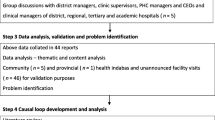

This review is inspired by the realist review approach, which enables researchers to explore how, why and in what contexts complex interventions may work (or not) by focusing on the relationships between context, mechanisms and outcomes [18,19,20]. The realist review process consists of 5 methodological steps (Fig. 1). We broadly follow this methodological guidance with some important points of departure from it. We had limited expert engagement in developing our theory of change, and our preliminary conceptual framework was conceived as a programme theory [21] rather than as a set of context-mechanism-outcomes configurations (step 1) [22]. We followed a systematic search strategy driven by the intervention definition with few iterative searches [19], and we included a quality appraisal of the literature because the body of evidence on our questions is generally limited by self-reporting of outcomes, selection and publication bias [7, 9, 15].

Fig. 1 Realist review process, adapted from Pawson R. et al. 2015 [18]

Clarifying scope of the review

We built an initial conceptual framework to drive our enquiry (Fig. 2) in the form of a preliminary programme theory [21, 23]. We adapted the Medical Research Council process evaluation framework [24] using findings from previous studies [8, 16, 25, 26] to conceptualise relationships between contextual factors, mechanisms of change and outcomes. We defined context as “factors external to the intervention which may influence its implementation” [24]. We drew from Kaplan’s framework to understand context for quality improvement (MUSIQ), which is widely used in high-income countries, and shows promise for LMIC settings [27, 28]. We identified three domains for analysis: the healthcare setting in which a quality improvement intervention is introduced; the project-specific context, e.g. characteristics of quality improvement teams, leadership in the implementing unit, nature of external support; and the wider organisational context and external environment [29].

We defined mechanisms of change as the “underlying entities, processes, or structures which operate in particular contexts to generate outcomes of interest” [30]. Our definition implies that mechanisms are distinct from, but linked to, intervention activities: intervention activities are a resource offered by the programme to which participants respond through cognitive, emotional or organisational processes, influenced by contextual factors [31]. We conceptualised the collaborative approach as a structured intervention or resource to embed innovative practices into healthcare organisations and accelerate diffusion of innovations based on seminal publications on QICs [2, 3]. Strategies described in relation to implementation of a change, e.g. “making a change the normal way” that an activity is done [3], implicitly relate to normalisation process theory [17, 32]. Spreading improvement is explicitly inspired by the diffusion of innovation theory, attributing to early adopters the role of assessing and adapting innovations to facilitate their spread, and the role of champions for innovation, exercising positive peer pressure in the collaborative [3, 17, 33]. Therefore, we identified two domains for analysis of mechanisms of change: we postulated that QIC outcomes may be generated by mechanisms activated within each organisation (intra-organisational mechanisms) and through their collaboration (inter-organisational mechanisms). When we refer to QIC outcomes, we refer to measures which an intervention aimed to influence, including measures of clinical processes, perceptions of care, patient recovery, or other quality measures, e.g. self-reported patient safety climate.

KZ and JS discussed the initial programme theory with two quality improvement experts acknowledged at the end of this paper. They suggested alignment with the MUSIQ framework and commented on the research questions, which were as follows:

Context

1. In what kind of health facility settings may QICs work (or not)? (focus on characteristics of the health facility setting)

2. What defines an enabling environment for QICs? (focus on proximate project-specific factors and on wider organisational context and external environment)

Mechanisms

3. How may engagement in QICs influence health workers and the organisational context to promote better adherence to evidence-based guidelines? (focus on intra-organisational mechanisms)

4. What is it about collaboration with other facilities that may lead to better outcomes? (focus on inter-organisational mechanisms)

Search strategy

The search strategy is outlined in Fig. 3 and detailed in Additional file 1. Studies were included if they (i) referred to the quality improvement collaborative approach [2, 5, 8, 16], defined in line with previous reviews as consisting of all the following elements: a specified topic; clinical and quality improvement experts working together; multi-professional quality improvement teams in multiple sites; using multiple rapid tests of change; and a series of structured collaborative activities in a given timeframe involving learning sessions and visits from mentors or facilitators; (ii) were published in English, French or Spanish, from 1997 to June 2018; and (iii) referred to a health facility setting, as opposed to community, administrative or educational setting.

Studies were excluded if they focused on a chronic condition, palliative care or administrative topics, or if they did not contain primary quantitative or qualitative data on the process of implementation, i.e. the search excluded systematic reviews, protocol papers, editorials, commentaries, methodological papers and studies reporting exclusively outcomes of QICs or exclusively describing implementation without consideration of context or mechanisms of change.

Screening

We applied inclusion and exclusion criteria to titles and abstracts and subsequently to the full text. We identified additional studies through references of included publications and backward and forward citation tracking.

Data collection

We developed and piloted data extraction forms in MS Excel. We classified studies based on whether they focused on context or mechanisms of change and captured qualitative and quantitative data under each component. Data extraction also captured the interaction between implementation, context and mechanisms, anticipating that factors may not fit neatly into single categories [18, 19].

KZ and MT independently conducted a structured quality appraisal using the STROBE checklist for quantitative observational studies, the Critical Appraisal Skills Programme checklist for qualitative studies and the Mixed Methods Appraisal Tool for mixed method studies [34,35,36,37], resolving disagreements by consensus. To aid comparability, given the heterogeneity of study designs, a score of 1 was assigned to each item in the checklist, and a total score was calculated for each paper. Quality was rated low, medium or high for papers scoring in the bottom half, between 50 and 80%, or above 80% of the maximum score, respectively. We did not exclude studies because of low quality: in all such cases, both authors agreed on the study’s relative contribution to the research questions [19, 38].
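The rating rule described above can be illustrated with a minimal sketch. The function name `rate_quality` and the handling of the exact boundaries (a score of exactly 50% or exactly 80% is treated as medium) are assumptions made for illustration; the text does not specify how boundary scores were classified.

```python
def rate_quality(items_met: int, items_total: int) -> str:
    """Map a checklist score (one point per item met) to low/medium/high.

    Hypothetical helper: thresholds follow the text (bottom half, 50-80%,
    above 80%); treating exactly 50% and exactly 80% as "medium" is an
    assumption, not something the source states.
    """
    if items_total <= 0:
        raise ValueError("items_total must be positive")
    if not 0 <= items_met <= items_total:
        raise ValueError("items_met must lie between 0 and items_total")
    # Integer comparisons avoid floating-point rounding at the thresholds.
    if items_met * 5 > items_total * 4:   # strictly above 80%
        return "high"
    if items_met * 2 >= items_total:      # at least 50%
        return "medium"
    return "low"


if __name__ == "__main__":
    # Example: a paper meeting 15 of 22 checklist items.
    print(rate_quality(15, 22))
```

Expressing each score as a share of the checklist maximum is what allows papers appraised with checklists of different lengths to be compared on a single scale.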

Synthesis and reporting of results

Analysis was informed by the preliminary conceptual framework (Fig. 2) and conducted thematically by framework domain by the lead author. We clustered studies into context and mechanism. Under context, we first analysed quantitative data to identify factors related to the framework and evidence of their associations with mechanisms and outcomes. Then, from the qualitative evidence, we extracted supportive or dissonant data on the same factors. Under mechanisms, we identified themes under the two framework domains using thematic analysis. We generated a preliminary coding framework for context and mechanism data in MS Excel. UB reviewed a third of included studies, drawn randomly from the list stratified by study design, and independently coded data following the same process. Disagreements were resolved through discussion. We developed a final coding framework, which formed the basis of our narrative synthesis of qualitative and quantitative data.

We followed the RAMESES reporting checklist, which is modelled on the PRISMA statement [39] and tailored for reviews aiming to highlight relationships between context, mechanisms and outcomes [40] (Additional file 2). All included studies reported having received ethical clearance.

Results

Search results

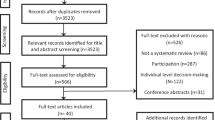

Searches generated 1,332 results. After removal of duplicates (370), 962 abstracts were screened of which 88 met the inclusion criteria. During the eligibility review process, we identified 15 papers through bibliographies of eligible papers and authors’ suggestions. Of the 103 papers reviewed in full, 32 met inclusion criteria and were retained for analysis (Table 1). Figure 4 summarises the search results.
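As a consistency check, the screening counts reported above can be reproduced with simple arithmetic. The sketch below uses only the figures stated in the text; the function and key names are hypothetical, and the number excluded at full-text stage is derived here rather than reported in the text.

```python
def screening_flow() -> dict:
    """Recompute the study-selection counts from the figures in the text."""
    records_identified = 1332
    duplicates_removed = 370
    abstracts_screened = records_identified - duplicates_removed

    met_criteria_at_abstract = 88
    added_from_bibliographies = 15  # bibliographies and authors' suggestions
    full_texts_reviewed = met_criteria_at_abstract + added_from_bibliographies

    included = 32
    # Derived value, not stated in the text.
    excluded_at_full_text = full_texts_reviewed - included

    return {
        "abstracts_screened": abstracts_screened,
        "full_texts_reviewed": full_texts_reviewed,
        "included": included,
        "excluded_at_full_text": excluded_at_full_text,
    }


if __name__ == "__main__":
    for stage, count in screening_flow().items():
        print(f"{stage}: {count}")
```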

Characteristics of included studies

Included studies comprised QIC process evaluations using quantitative, qualitative, and mixed methods designs, as well as case descriptions in the form of programme reviews by implementers or external evaluators, termed internal and independent programme reviews, respectively. While the application of QIC has grown in LMICs, evidence remains dominated by experiences from high-income settings: only 9 out of 32 studies were from an LMIC setting, of which 4 were in the grey literature (Table 2).

Most papers focused on mechanisms of change, either as a sole focus (38%) or in combination with implementation or contextual factors (72%); these mechanisms were explored mostly through qualitative studies or programme reviews. The relative paucity of evidence on the role of context in relation to QIC reflects the gaps identified by other systematic reviews [7]. We identified 15 studies containing data on context, of which 8 quantitatively tested the association between a single contextual factor and outcomes. Most studies were rated as medium quality (53%), with low ratings attributed to all internal and external programme reviews (Additional file 3). However, these were retained for analysis because of their rich accounts of the relationship between context, mechanisms and outcomes and the relative scarcity of higher quality evaluations taking into account this complexity [41].

Context

We present results by research question in line with the conceptual framework (Fig. 2). We identified two research questions to explore three types of contextual factors (Table 3).

In what kind of facility setting may QICs work (or not)?

The literature explored four healthcare setting characteristics: facility size, voluntary or compulsory participation in the QIC programme, baseline performance and factors related to health facility readiness. We found no conclusive evidence that facility size [42], voluntary or compulsory participation in the QIC programme [44], and baseline performance influence QIC outcomes [43]. For each of these aspects, we identified only one study, and those identified were not designed to demonstrate causality and lacked a pre-specified hypothesis on why the contextual factors studied would influence outcomes. As for health facility readiness, this encompassed multiple factors perceived as programme preconditions, such as health information systems [42, 45, 47], human resources [42, 45, 46, 48] and senior level commitment to the target [42, 45]. There was inconclusive evidence on the relationships between these factors and QIC outcomes: the studies exploring this association quantitatively had mixed results and generally explored one factor each. A composite organisational readiness construct, combining the above-mentioned programme preconditions, was investigated in two cross-sectional studies from the same collaborative in a high-income setting. No evidence of an association with patient safety climate and capability was found, but this may have been due to limitations of the statistical model or of data collection on the composite construct and outcome measures [42, 45]. However, qualitative evidence from programme reviews and mixed-methods process evaluations of QIC programmes suggests that negative perceptions of the adequacy of available resources, low staff morale and limited availability of relevant clinical skills may contribute to negative perceptions of organisational readiness, particularly in LMIC settings. High-intensity support and partnership with other programmes may be necessary to fill clinical knowledge gaps [46, 48]. Bottom-up leadership may foster positive perceptions of organisational readiness for quality improvement [42, 46, 48].

What defines an enabling environment for QICs?

This question explored two categories in our conceptual framework: project-specific and wider organisational contextual factors. Project-specific contextual factors relate to the immediate unit in which a QIC intervention is introduced, and the characteristics of the QIC intervention that may influence its implementation [29]. We found mixed evidence that adequacy and appropriateness of external support for QIC and functionality of quality improvement teams may influence outcomes.

Medium-high quality quantitative studies suggest that the quality, intensity and appropriateness of quality improvement support may contribute to perceived improvement of outcomes, but not, where measured, actual improvement [42, 46, 48,49,50,51]. This may be partly explained by the number of ideas for improvement tested [49]. In other words, the more quality improvement teams perceive the approach to be relevant, credible and adequate, the more they may be willing to use the quality improvement approach, which in turn contributes to a positive perception of improvement. In relation to attributes of quality improvement teams, studies stress the importance of team stability, multi-disciplinary composition, involvement of opinion leaders and previous experience in quality improvement, but there is inconclusive evidence that these attributes are associated with better outcomes [49, 52,53,54]. Particularly in LMICs, alignment with existing supervisory structures may be key to achieving a functional team [46, 48, 51, 57, 58].

Wider organisational contextual factors refer to characteristics of the organisation in which a QIC intervention is implemented, and the external system in which the facility operates [29]. Two factors emerge from the literature. Firstly, the nature of leadership has a key role in motivating health professionals to test and adopt new ideas and is crucial to develop “habits for improvement”, such as evidence-based practice, systems thinking and team problem-solving [49, 51, 54,55,56]. Secondly, alignment with national priorities, quality strategies, financial incentive systems or performance management targets may mobilise leadership and promote facility engagement in QIC programmes, particularly in LMIC settings [46, 48, 50, 51]; however, the quality of this evidence is medium-low.

Mechanisms of change

In relation to mechanisms of change, we identified two research questions to explore one domain each.

How may engagement in QICs influence health workers and the organisational context to promote better adherence to evidence-based practices?

We identified six mechanisms of change within an organisation (Table 4). First, participation in QIC activities may increase health workers’ commitment to change by increasing confidence in using data to make decisions and identifying clinical challenges and their potential solutions within their reach [17, 49, 51, 55, 56, 60,61,62]. Second, it may improve accountability by making standards explicit, thus enabling constructive challenge among health workers when these are not met [17, 62, 64,65,66]. A relatively high number of qualitative and mixed-methods studies of medium–high quality support these two themes. Other mechanisms, supported by fewer and lower quality studies, include improving health workers’ knowledge and problem-solving skills by providing opportunities for peer reflection [46, 48, 64, 67]; improving organisational climate by promoting teamwork, shared responsibility and bottom-up discussion [60,61,62, 67]; strengthening a culture of joint problem solving [48, 63]; and supporting an organisational cultural shift through the development of “habits for improvement” that promote adherence to evidence-based practices [17, 56, 62].

The available literature highlights three key contextual enablers of these mechanisms: the appropriateness of mentoring and external support, leadership characteristics and adequacy of clinical skills. The literature suggests that external mentoring and support is appropriate if it includes a mix of clinical and non-clinical coaching, which ensures the support is acceptable and valued by teams, and if it is highly intensive, particularly in low-income settings that are relatively new to using data for decision-making and may have low data literacy [46, 48, 51, 58]. For example, in Nigeria, Osibo et al. suggest that reducing resistance to use of data for decision-making may be an intervention in itself and a pre-condition for use of quality improvement methods [58]. As for leadership characteristics, the literature stresses the role of hospital leadership in fostering a culture of performance improvement and in promoting open dialogue and bottom-up problem solving, which may facilitate a collective sense of responsibility and engagement in quality improvement. Alignment with broader strategic priorities and previous success in quality improvement may further motivate leadership engagement [46, 48, 50, 51]. Adequacy of clinical skills emerges as an enabler particularly in LMICs, where implementation reports observed limited scope for problem-solving given the low competences of health workers [46] and the need for partnership with training programmes to address clinical skills gaps [48].

What is it about collaboration with other hospitals that may lead to better outcomes?

This question explored inter-organisational mechanisms of change. Four themes emerged from the literature (Table 5). Firstly, collaboration may create or reinforce a community of practice, which exerts a normative pressure on hospitals to engage in quality improvement [17, 46, 50, 63, 67,68,69]. Secondly, it may promote friendly competition and create isomorphic pressures on hospital leaders, i.e. pressure to imitate other facilities’ success because they would find it damaging not to. Conversely, sharing performance data with other hospitals offers a potential reputational gain for well-performing hospitals and for individual clinicians seeking peer recognition [17, 46, 63, 68, 69, 72]. A relatively high number of medium-high quality studies support these two themes. Thirdly, collaboration may provide a platform for capacity building by disseminating success stories and methodologies for improvement [51, 67,68,69,70]. Finally, collaboration with other hospitals may demonstrate the feasibility of improvement to both hospital leaders and health workers. This, in turn, may galvanise action within each hospital by reinforcing the intra-organisational change mechanisms outlined above [51, 63, 71]. However, evidence for this comes from low-quality studies.

Key contextual enablers for these inter-organisational mechanisms include adequate external support to facilitate sharing of success stories in contextually appropriate ways and alignment with systemic pressures on hospital leadership. For example, a study on a Canadian QIC in intensive care units found that pressure to centralise services undermined collaboration because hospitals’ primary goal and hidden agenda for collaboration were to access information on their potential competitors [72]. The activation of isomorphic pressures also assumes that a community of practice exists or can be created. This may not necessarily be the case, particularly in LMICs where isolated working is common: a study in Malawi attributed the disappointing QIC outcomes partly to the intervention’s inability to activate friendly competition mechanisms due to the weakness of clinical networks [46].

The relative benefit of collaboration was questioned in both high and low-income settings: less importance was attached to learning sessions than mentoring by participants in a study in Tanzania [57]. Hospitals may fear exposure and reputational risks [68], and high-performing hospitals may see little advantage in their participation in a collaborative [68, 72]. Hospitals may also make less effort when working collaboratively or use collaboration for self-interest as opposed to for sharing their learning [69].

Figure 5 offers a visual representation of the identified intra- and inter-organisational mechanisms of change and their relationship to the intervention strategy and expected outcomes.

Discussion

To the best of our knowledge, this is the first review to systematically explore the role of context and the mechanisms of change in QICs, which can aid their implementation design and evaluation. This is particularly important for a complex intervention, such as QICs, whose effectiveness remains to be demonstrated [6, 7, 11]. We offer an initial programme theory to understand whose behaviours ought to change, at what level, and how this might support the creation of social norms promoting adherence to evidence-based practice. Crucially, we also link intra-organisational change to the position that organisations have in a health system [33].

The growing number of publications on mechanisms of change highlights interest in the process of change. We found that participation in quality improvement collaborative activities may improve health professionals’ knowledge, problem-solving skills and attitude; teamwork; shared leadership and habits for improvement. Interaction across quality improvement teams may generate normative pressure and opportunities for capacity building and peer recognition. However, the literature generally lacks reference to any theory in the conceptualisation and description of mechanisms of change [7]. This is surprising given the clear theoretical underpinnings of the QIC approach, including normalisation process theory in relation to changes within each organisation, and diffusion of innovation theory in relation to changes arising from collaborative activities [32, 33]. We see three key opportunities to fill this theoretical gap. The first is more systematic application of the Theoretical Domains Framework in design and evaluation of QICs and in future reviews. This is a synthesis of over 120 constructs from 33 behaviour change theories and is highly relevant because the emerging mechanisms of change pertain to seven of its domains: knowledge, skills, reinforcement, intentions, behaviour regulation, social influences and environmental context and resources [73, 74]. Its use would allow specification of target behaviours to change, i.e. who should do what differently, where, how and with whom, to consider the influences on those behaviours, and to prioritise targeting behaviours that are modifiable as well as central to achieving change in clinical practice [75]. Second, we recognise that emphasis on individual behaviour change theories may mask the complexity of change [76]. Organisational and social psychology offer important perspectives for theory building, for example, postulating that motivation is the product of intrinsic and extrinsic factors [77, 78], or that group norms that discourage dissent, for example, by not encouraging or not rewarding constructive criticism, act as a key barrier to individual behaviour change [79]. This warrants further exploration. Third, engaging with the broader literature on learning collaboratives may also help develop the programme theory further and widen its application.

Our findings on contextual enablers complement previous reviews [16, 80]. We highlight that activating mechanisms of change may be influenced by the appropriateness of external support, leadership characteristics, quality improvement capacity and alignment with systemic pressures and incentives. This has important implications for QIC implementation. For example, for external support to be of high intensity, the balance of clinical and non-clinical support to quality improvement teams will need contextual adaptation, since different skills mixes will be acceptable and relevant in different clinical contexts. Particularly in LMICs, alignment with existing supervisory structures may be key to achieving a functional quality improvement team [46, 48, 51, 57, 58].

Our review offers a more nuanced understanding of the role of leadership in QICs compared to previous concepts [8, 25]. We suggest that the activation of the mechanisms of change, and therefore potentially QIC success, rests on the ability to engage leaders, so leadership engagement can be viewed as a key part of the QIC intervention package. In line with organisational learning theory, the leaders’ role is to facilitate a data-informed analysis of practice and act as “designers, teachers and stewards” to move closer to a shared vision [81]. This requires considerable new skills and a shift away from traditional authoritarian leadership models [81]. This may be more easily achieved where some of the “habits for improvement” already exist [13], or where organisational structures, for example, decentralised decision-making or non-hierarchical teams, allow bottom-up problem solving. Leadership engagement in QIC programmes can be developed through alignment with national priorities or quality strategies and alignment with financial incentive systems or facility performance management targets, particularly as external pressures may compete with QIC aims. Therefore, QIC design and evaluation would benefit from situating these interventions in the health system in which they occur.

Improving skills and competencies in using quality improvement methods is integral to the implementation of QIC interventions; however, the analysis of contextual factors suggests that efforts to strengthen quality improvement capacity may need to consider four further factors: first, the availability and usability of health information systems; second, health workers’ data literacy, i.e. their confidence, skills and attitudes towards the use of data for decision-making; third, the adequacy of health workers’ clinical competences; and fourth, leaders’ attitudes to team problem solving and open debate, particularly in settings where organisational culture may be a barrier to individual reflection and initiative. The specific contextual challenges emerging from studies from LMICs, such as low staffing levels and low competence of health workers, poor data systems, and lack of leadership, echo findings on the limitations of quality improvement approaches at facility level in resource-constrained health systems [1, 82]. These may explain why group problem-solving strategies, including QICs, may be more effective in moderate-resource than in low-resource settings, and their effect larger when combined with training [11]. The analysis of the role of context in activating mechanisms of change suggests the need for more explicit assumptions about context-mechanism-outcome relationships in QIC intervention design and evaluation [15, 83]. Further analysis is needed to determine whether certain contextual factors related to capacity should be a precondition to justify the QIC approach (an “investment viability threshold”) [84], and what aspects of quality improvement capacity a QIC intervention can realistically modify in the relatively short implementation timeframes available.

While we do not suggest that our programme theory is relevant to all QIC interventions, in realist terms it may be generalisable at the level of theory [18, 20], offering context-mechanism-outcome hypotheses that can inform QIC design and be tested through rigorous evaluations, for example, through realist trials [85, 86]. In particular, there is a need for quantitative analysis of hypothesised mechanisms of change of QICs, since the available evidence is primarily from qualitative or cross-sectional designs.

Our review balances principles of systematic reviews, including a comprehensive literature search, double abstraction, and quality appraisal, with the reflective realist review approach [19]. The realist-inspired search methodology allowed us to identify a higher number of papers than a previous review with similar inclusion criteria [16], through active search of qualitative studies and grey literature and inclusion of low-quality literature that would otherwise have been excluded [41]. This also allowed us to interrogate what did not work, as much as what did work [19, 22]. By reviewing literature with a wide range of designs against a preliminary conceptual framework, by including literature spanning both high- and low-resource settings and by exploring dissonant experiences, we contribute to understanding QICs as “disruptive events within systems” [87].

Our review may have missed some papers, particularly because QIC programme descriptions are often limited [7]; however, we used a stringent QIC definition aligned with previous reviews, and we are confident that thematic saturation was achieved with the available studies. We encountered a challenge in categorising data as “context” or “mechanism”. This is not unique and was anticipated [88]. Double review of papers in our research team minimised subjectivity of interpretation and allowed a deep reflection on the role of the factors that appeared under both dimensions.

Conclusion

We found some evidence that appropriateness of external support, functionality of quality improvement teams, leadership characteristics and alignment with national systems and priorities may influence QIC outcomes, but the strength and quality of the evidence is weak. We explored how QIC outcomes may be generated and found that health professionals’ participation in QIC activities may improve their knowledge, problem-solving skills and attitude; teamwork; shared leadership and the development of habits for improvement. Interaction across quality improvement teams may generate normative pressure and opportunities for capacity building and peer recognition. Activation of mechanisms of change may be influenced by the appropriateness of external support, leadership characteristics, the adequacy of clinical skills and alignment with systemic pressures and incentives.

There is a need for explicit assumptions about context-mechanism-outcome relationships in QIC design and evaluation. Our review offers an initial programme theory to aid this. Further research should explore whether certain contextual factors related to capacity should be a precondition to justify the QIC approach, test the emerging programme theory through empirical studies and refine it through greater use of individual behaviour change and organisational theory in intervention design and evaluation.

Abbreviations

- IQR: Inter-quartile range

- LMIC: Low and middle-income country

- MES: Median effect size

- MUSIQ: Model for understanding success in improving quality

- QIC: Quality improvement collaborative

- STROBE: Strengthening the reporting of observational studies in epidemiology

References

Kruk ME, Gage AD, Arsenault C, Jordan K, Leslie HH, Roder-DeWan S, et al. High-quality health systems in the Sustainable Development Goals era: time for a revolution. Lancet Glob Health. 2018;6(11):E1196–E252.

Kilo CM. A framework for collaborative improvement: lessons from the Institute for Healthcare Improvement's Breakthrough Series. Qual Manag Health Care. 1998;6(4):1–13.

Langley GJ, Moen R, Nolan KM, Nolan TW, Norman CL, Provost LP. The improvement guide: a practical approach to enhancing organizational performance: Wiley; 2009.

Wilson T, Berwick DM, Cleary PD. What do collaborative improvement projects do? Experience from seven countries. Jt Comm J Qual Saf. 2003;29(2):85–93.

Schouten LMT, Hulscher MEJL, van Everdingen JJE, Huijsman R, Grol RPTM. Evidence for the impact of quality improvement collaboratives: systematic review. BMJ. 2008;336(7659):1491.

Rowe AK, Rowe SY, Peters DH, Holloway KA, Chalker J, Ross-Degnan D. Effectiveness of strategies to improve health-care provider practices in low-income and middle-income countries: a systematic review. Lancet Glob Health. 2018;6(11):E1163–E75.

Wells S, Tamir O, Gray J, Naidoo D, Bekhit M, Goldmann D. Are quality improvement collaboratives effective? A systematic review. BMJ Qual Saf. 2018;27(3):226–40.

Øvretveit J, Bate P, Cleary P, Cretin S, Gustafson D, McInnes K, et al. Quality collaboratives: lessons from research. Qual Saf Health Care. 2002;11(4):345–51.

Shojania KG, Grimshaw JM. Evidence-based quality improvement: the state of the science. Health Aff. 2005;24(1):138–50.

Mittman BS. Creating the evidence base for quality improvement collaboratives. Ann Intern Med. 2004;140(11):897–901.

Garcia-Elorrio E, Rowe SY, Teijeiro ME, Ciapponi A, Rowe AK. The effectiveness of the quality improvement collaborative strategy in low- and middle-income countries: a systematic review and meta-analysis. PLoS One. 2019;14(10):e0221919.

Franco LM, Marquez L. Effectiveness of collaborative improvement: evidence from 27 applications in 12 less-developed and middle-income countries. BMJ Qual Saf. 2011;20(8):658–65.

Schulz KF, Chalmers I, Hayes RJ, Altman DG. Empirical evidence of bias. Dimensions of methodological quality associated with estimates of treatment effects in controlled trials. JAMA. 1995;273(5):408–12.

Peden CJ, Stephens T, Martin G, Kahan BC, Thomson A, Rivett K, et al. Effectiveness of a national quality improvement programme to improve survival after emergency abdominal surgery (EPOCH): a stepped-wedge cluster-randomised trial. Lancet. 2019.

Dixon-Woods M, Martin GP. Does quality improvement improve quality? Future Hosp J. 2016;3(3):191–4.

Hulscher MEJL, Schouten LMT, Grol RPTM, Buchan H. Determinants of success of quality improvement collaboratives: what does the literature show? BMJ Qual Saf. 2013;22(1):19–31.

Dixon-Woods M, Bosk CL, Aveling EL, Goeschel CA, Pronovost PJ. Explaining Michigan: developing an ex post theory of a quality improvement program. Milbank Q. 2011;89(2):167–205.

Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist review--a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy. 2005;10(Suppl 1):21–34.

Rycroft-Malone J, McCormack B, Hutchinson AM, DeCorby K, Bucknall TK, Kent B, et al. Realist synthesis: illustrating the method for implementation research. Implement Sci. 2012;7(1):33.

Pawson R, Tilley N. Realistic evaluation. London: Sage; 1997.

De Silva MJ, Breuer E, Lee L, Asher L, Chowdhary N, Lund C, et al. Theory of Change: a theory-driven approach to enhance the Medical Research Council’s framework for complex interventions. Trials. 2014;15.

Blamey A, Mackenzie M. Theories of Change and Realistic Evaluation. Evaluation. 2016;13(4):439–55.

Breuer E, Lee L, De Silva M, Lund C. Using theory of change to design and evaluate public health interventions: a systematic review. Implement Sci. 2016;11.

Moore GF, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350.

de Silva D. Improvement collaboratives in health care. Evidence scan July 2014. London: The Health Foundation; 2014. Available from: http://www.health.org.uk/publication/improvement-collaboratives-health-care.

Kringos DS, Sunol R, Wagner C, Mannion R, Michel P, Klazinga NS, et al. The influence of context on the effectiveness of hospital quality improvement strategies: a review of systematic reviews. BMC Health Serv Res. 2015;15:277.

Kaplan HC, Provost LP, Froehle CM, Margolis PA. The Model for Understanding Success in Quality (MUSIQ): building a theory of context in healthcare quality improvement. BMJ Qual Saf. 2012;21(1):13–20.

Reed J, Ramaswamy R, Parry G, Sax S, Kaplan H. Context matters: adapting the Model for Understanding Success in Quality Improvement (MUSIQ) for low and middle income countries. Implement Sci. 2017;12((Suppl 1)(48)):23.

Reed JE, Kaplan HC, Ismail SA. A new typology for understanding context: qualitative exploration of the model for understanding success in quality (MUSIQ). BMC Health Serv Res. 2018;18.

Astbury B, Leeuw FL. Unpacking black boxes: mechanisms and theory building in evaluation. Am J Eval. 2010;31(3):363–81.

Dalkin SM, Greenhalgh J, Jones D, Cunningham B, Lhussier M. What's in a mechanism? Development of a key concept in realist evaluation. Implement Sci. 2015;10.

May C, Finch T. Implementing, embedding, and integrating practices: an outline of normalisation process theory. Sociology. 2009;43.

Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82(4):581–629.

von Elm E, Altman DG, Egger M, Pocock SJ, Gotzsche PC, Vandenbroucke JP. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. Lancet. 2007;370(9596):1453–7.

Critical appraisal skills programme. CASP Qualitative Checklist 2007 [8th January 2019]. Available from: https://casp-uk.net/wp-content/uploads/2018/01/CASP-Qualitative-Checklist-2018.pdf.

Hong QN, Pluye P, Fàbregues S, Bartlett G, Boardman F, Cargo M, Dagenais P, Gagnon M-P, Griffiths F, Nicolau B, O’Cathain A, Rousseau M-C, Vedel I. Mixed Methods Appraisal Tool (MMAT), version 2018. Registration of Copyright (#1148552), Canadian Intellectual Property Office, Industry Canada 2018 [8th January 2019]. Available from: http://mixedmethodsappraisaltoolpublic.pbworks.com/w/file/fetch/127916259/MMAT_2018_criteria-manual_2018-08-01_ENG.pdf.

Hong QN, Gonzalez-Reyes A, Pluye P. Improving the usefulness of a tool for appraising the quality of qualitative, quantitative and mixed methods studies, the Mixed Methods Appraisal Tool (MMAT). J Eval Clin Pract. 2018;24(3):459–67.

Hannes K. Chapter 4: Critical appraisal of qualitative research. In: Noyes J, Booth A, Hannes K, Harden A, Harris J, Lewin S, Lockwood C, editors. Supplementary Guidance for Inclusion of Qualitative Research in Cochrane Systematic Reviews of Interventions Version 1 (updated August 2011): Cochrane Collaboration Qualitative Methods Group; 2011.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med. 2009;6(7):e1000100.

Wong G, Greenhalgh T, Westhorp G, Buckingham J, Pawson R. RAMESES publication standards: realist syntheses. BMC Med. 2013;11(1):21.

Pawson R. Digging for nuggets: how ‘bad’ research can yield ‘good’ evidence. Int J Soc Res Methodol. 2006;9(2):127–42.

Benn J, Burnett S, Parand A, Pinto A, Vincent C. Factors predicting change in hospital safety climate and capability in a multi-site patient safety collaborative: a longitudinal survey study. BMJ Qual Saf. 2012;21(7):559–68.

Linnander E, McNatt Z, Sipsma H, Tatek D, Abebe Y, Endeshaw A, et al. Use of a national collaborative to improve hospital quality in a low-income setting. Int Health. 2016;8(2):148–53.

McInnes DK, Landon BE, Wilson IB, Hirschhorn LR, Marsden PV, Malitz F, et al. The impact of a quality improvement program on systems, processes, and structures in medical clinics. Med Care. 2007;45(5):463–71.

Burnett S, Benn J, Pinto A, Parand A, Iskander S, Vincent C. Organisational readiness: exploring the preconditions for success in organisation-wide patient safety improvement programmes. Qual Saf Health Care. 2010;19(4):313–7.

Colbourn T, Nambiar B, Costello A. MaiKhanda - Final evaluation report. The impact of quality improvement at health facilities and community mobilisation by women’s groups on birth outcomes: an effectiveness study in three districts of Malawi. London: Health Foundation; 2013.

Amarasingham R, Pronovost PJ, Diener-West M, Goeschel C, Dorman T, Thiemann DR, et al. Measuring clinical information technology in the ICU setting: application in a quality improvement collaborative. J Am Med Inform Assoc. 2007;14(3):288–94.

Sodzi-Tettey S, Twum-Danso N, Mobisson-Etuk N, Macy LH, Roessner J, Barker PM. Lessons learned from Ghana’s Project Fives Alive! A practical guide for designing and executing large-scale improvement initiatives. Cambridge: Institute for Healthcare Improvement; 2015.

Duckers ML, Spreeuwenberg P, Wagner C, Groenewegen PP. Exploring the black box of quality improvement collaboratives: modelling relations between conditions, applied changes and outcomes. Implement Sci. 2009;4:74.

Catsambas TT, Franco LM, Gutmann M, Knebel E, Hill P, Lin Y-S. Evaluating health care collaboratives: the experience of the quality assurance project. Bethesda: USAID Health Care Improvement Project; 2008.

Marquez L, Holschneider S, Broughton E, Hiltebeitel S. Improving health care: the results and legacy of the USAID Health Care Improvement Project. Bethesda: University Research Co., LLC (URC). USAID Health Care Improvement Project; 2014.

Schouten LM, Hulscher ME, Akkermans R, van Everdingen JJ, Grol RP, Huijsman R. Factors that influence the stroke care team’s effectiveness in reducing the length of hospital stay. Stroke. 2008;39(9):2515–21.

Mills PD, Weeks WB. Characteristics of successful quality improvement teams: lessons from five collaborative projects in the VHA. Jt Comm J Qual Saf. 2004;30(3):152–62.

Carlhed R, Bojestig M, Wallentin L, Lindstrom G, Peterson A, Aberg C, et al. Improved adherence to Swedish national guidelines for acute myocardial infarction: the Quality Improvement in Coronary Care (QUICC) study. Am Heart J. 2006;152(6):1175–81.

Duckers MLA, Stegeman I, Spreeuwenberg P, Wagner C, Sanders K, Groenewegen PP. Consensus on the leadership of hospital CEOs and its impact on the participation of physicians in improvement projects. Health Policy. 2009;91(3):306–13.

Horbar JD, Plsek PE, Leahy K. NIC/Q 2000: establishing habits for improvement in neonatal intensive care units. Pediatrics. 2003;111(4 Pt 2):e397–410.

Baker U, Petro A, Marchant T, Peterson S, Manzi F, Bergstrom A, et al. Health workers’ experiences of collaborative quality improvement for maternal and newborn care in rural Tanzanian health facilities: a process evaluation using the integrated 'promoting action on research implementation in health services’ framework. PLoS One. 2018;13:12.

Osibo B, Oronsaye F, Alo OD, Phillips A, Becquet R, Shaffer N, et al. Using small tests of change to improve PMTCT services in northern Nigeria: experiences from implementation of a continuous quality improvement and breakthrough series program. J Acquir Immune Defic Syndr. 2017;75(Suppl 2):S165–S72.

Pinto A, Benn J, Burnett S, Parand A, Vincent C. Predictors of the perceived impact of a patient safety collaborative: an exploratory study. Int J Qual Health Care. 2011;23(2):173–81.

Benn J, Burnett S, Parand A, Pinto A, Iskander S, Vincent C. Perceptions of the impact of a large-scale collaborative improvement programme: experience in the UK Safer Patients Initiative. J Eval Clin Pract. 2009;15(3):524–40.

Rahimzai M, Naeem AJ, Holschneider S, Hekmati AK. Engaging frontline health providers in improving the quality of health care using facility-based improvement collaboratives in Afghanistan: case study. Confl Health. 2014;8:21.

Stone S, Lee HC, Sharek PJ. Perceived factors associated with sustained improvement following participation in a multicenter quality improvement collaborative. Jt Comm J Qual Patient Saf. 2016;42(7):309–15.

Feldman-Winter L, Ustianov J. Lessons learned from hospital leaders who participated in a national effort to improve maternity care practices and breastfeeding. Breastfeed Med. 2016;11(4):166–72.

Ament SM, Gillissen F, Moser A, Maessen JM, Dirksen CD, von Meyenfeldt MF, et al. Identification of promising strategies to sustain improvements in hospital practice: a qualitative case study. BMC Health Serv Res. 2014;14:641.

Duckers ML, Wagner C, Vos L, Groenewegen PP. Understanding organisational development, sustainability, and diffusion of innovations within hospitals participating in a multilevel quality collaborative. Implement Sci. 2011;6:18.

Parand A, Benn J, Burnett S, Pinto A, Vincent C. Strategies for sustaining a quality improvement collaborative and its patient safety gains. Int J Qual Health Care. 2012;24(4):380–90.

Jaribu J, Penfold S, Manzi F, Schellenberg J, Pfeiffer C. Improving institutional childbirth services in rural Southern Tanzania: a qualitative study of healthcare workers’ perspective. BMJ Open. 2016;6:9.

Nembhard IM. Learning and improving in quality improvement collaboratives: which collaborative features do participants value most? Health Serv Res. 2009;44(2 Pt 1):359–78.

Carter P, Ozieranski P, McNicol S, Power M, Dixon-Woods M. How collaborative are quality improvement collaboratives: a qualitative study in stroke care. Implement Sci. 2014;9(1):32.

Nembhard IM. All teach, all learn, all improve?: the role of interorganizational learning in quality improvement collaboratives. Health Care Manag Rev. 2012;37(2):154–64.

Duckers ML, Groenewegen PP, Wagner C. Quality improvement collaboratives and the wisdom of crowds: spread explained by perceived success at group level. Implement Sci. 2014;9:91.

Dainty KN, Scales DC, Sinuff T, Zwarenstein M. Competition in collaborative clothing: a qualitative case study of influences on collaborative quality improvement in the ICU. BMJ Qual Saf. 2013;22(4):317–23.

Michie S, Johnston M, Abraham C, Lawton R, Parker D, Walker A, et al. Making psychological theory useful for implementing evidence based practice: a consensus approach. Qual Saf Health Care. 2005;14(1):26–33.

Cane J, O’Connor D, Michie S. Validation of the theoretical domains framework for use in behaviour change and implementation research. Implement Sci. 2012;7.

Atkins L, Francis J, Islam R, O'Connor D, Patey A, Ivers N, et al. A guide to using the Theoretical Domains Framework of behaviour change to investigate implementation problems. Implement Sci. 2017;12.

Herzer KR, Pronovost PJ. Physician motivation: listening to what pay-for-performance programs and quality improvement collaboratives are telling us. Jt Comm J Qual Patient Saf. 2015;41(11):522–8.

Herzberg F. One more time - how do you motivate employees. Harv Bus Rev. 1987;65(5):109–20.

Nickelsen NCM. Five Currents of Organizational Psychology-from Group Norms to Enforced Change. Nord J Work Life Stud. 2017;7(1):87–106.

Dixon-Woods M. The problem of context in quality improvement. In: Health Foundation, editor. Perspectives on context. London: Health Foundation; 2014. p. 87–101.

Senge P. Building learning organizations. In: Pugh DS, editor. Organization Theory - Selected Classic Readings. 5th ed. London: Penguin; 2007. p. 486–514.

Waiswa P, Manzi F, Mbaruku G, Rowe AK, Marx M, Tomson G, et al. Effects of the EQUIP quasi-experimental study testing a collaborative quality improvement approach for maternal and newborn health care in Tanzania and Uganda. Implement Sci. 2017;12(1):89.

Rowe AK, Labadie G, Jackson D, Vivas-Torrealba C, Simon J. Improving health worker performance: an ongoing challenge for meeting the sustainable development goals. BMJ Br Med J. 2018;362.

Colbourn T, Nambiar B, Bondo A, Makwenda C, Tsetekani E, Makonda-Ridley A, et al. Effects of quality improvement in health facilities and community mobilization through women's groups on maternal, neonatal and perinatal mortality in three districts of Malawi: MaiKhanda, a cluster randomized controlled effectiveness trial. Int Health. 2013:iht011.

Bonell C, Warren E, Fletcher A, Viner R. Realist trials and the testing of context-mechanism-outcome configurations: a response to Van Belle et al. Trials. 2016;17(1):478.

Hanson C, Zamboni K, Prabhakar V, Sudke A, Shukla R, Tyagi M, et al. Evaluation of the Safe Care, Saving Lives (SCSL) quality improvement collaborative for neonatal health in Telangana and Andhra Pradesh, India: a study protocol. Glob Health Action. 2019;12(1):1581466.

Moore GF, Evans RE, Hawkins J, Littlecott H, Melendez-Torres GJ, Bonell C, et al. From complex social interventions to interventions in complex social systems: future directions and unresolved questions for intervention development and evaluation. Evaluation (Lond). 2019;25(1):23–45.

Moore GF, Evans RE. What theory, for whom and in which context? Reflections on the application of theory in the development and evaluation of complex population health interventions. SSM Popul Health. 2017;3:132–5.

Shaw J, Gray CS, Baker GR, Denis JL, Breton M, Gutberg J, et al. Mechanisms, contexts and points of contention: operationalizing realist-informed research for complex health interventions. BMC Med Res Methodol. 2018;18(1):178.

Acknowledgements

We thank Alex Rowe MD, MPH at the Centers for Disease Control and Prevention and Commissioner at the Lancet Global Health Commission for Quality Health Systems for the informal discussions that helped conceptualise the study and frame the research questions in the early phases of this work and for access to the Healthcare Provider Database. We also thank Will Warburton at the Health Foundation, London, UK, for his input in refining research questions in the light of QIC experience in the UK, and the Safe Care Saving Lives implementation team from ACCESS Health International for their reflections on the implementation of a quality improvement collaborative, which helped refine the theory of change.

Data availability statement

The datasets analysed during the current study are available in the LSHTM repository, Data Compass.

Funding

This research was made possible by funding from the Medical Research Council [Grant no. MR/N013638/1] and the Children’s Investment Fund Foundation [Grant no. G-1601-00920]. The funders had no role in the commissioning of the study; in the design, collection, analysis and interpretation of data; in the writing of the manuscript; or in the decision to submit this manuscript for publication.

Author information

Contributions

KZ, CH, ZH and JS conceived and designed the study. KZ performed the searches. KZ, UB and MT analysed data. MT and KZ completed quality assessment of included papers. KZ, UB, CH, MT, ZH and JS wrote the paper. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

N/A

Consent for publication

Consent for publication was received from all individuals mentioned in the acknowledgement section.

Competing interests

The authors declare that they have no competing interests.

Supplementary information

Additional file 1. Search terms used.

Additional file 2. Systematic review alignment with RAMESES publication standards checklist.

Additional file 3. Quality appraisal of included studies.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Zamboni, K., Baker, U., Tyagi, M. et al. How and under what circumstances do quality improvement collaboratives lead to better outcomes? A systematic review. Implementation Sci 15, 27 (2020). https://doi.org/10.1186/s13012-020-0978-z
