Abstract

Background

Few training opportunities are available for implementation practitioners; we designed the Practicing Knowledge Translation (PKT) to address this gap. The goal of PKT is to train practitioners to use evidence and apply implementation science in healthcare settings. The aim of this study was to describe PKT and evaluate participant use of implementation science theories, models, and frameworks (TMFs), knowledge, self-efficacy, and satisfaction and feedback on the course.

Methods

PKT was delivered to implementation practitioners between September 2015 and February 2016 through a 3-day workshop and 11 webinars. We assessed PKT using an uncontrolled before-and-after study design with convergent parallel mixed methods. The primary outcome was use of TMFs in implementation projects. Secondary outcomes were knowledge and self-efficacy across six core competencies, factors related to each of the outcomes, and satisfaction with the course. Participants completed online surveys at baseline, 3, 6, and 12 months and semi-structured interviews at 3, 6, and 12 months.

Results

Participants (n = 15) reported an increase in their use of implementation TMFs (mean = 2.11; estimate = 2.11; standard error (SE) = 0.4; p = 0.03). There was a significant increase in participants’ knowledge of developing an evidence-informed, theory-driven program (ETP) (estimate = 4.10; SE = 0.37; p = 0.002); evidence implementation (estimate = 2.68; SE = 0.42; p < 0.001); evaluation (estimate = 4.43; SE = 0.36; p < 0.001); sustainability, scale, and spread (estimate = 2.55; SE = 0.34; p < 0.001); and context assessment (estimate = 3.86; SE = 0.32; p < 0.001). There was a significant increase in participants’ self-efficacy in developing an ETP (estimate = 3.81; SE = 0.34; p < 0.001); implementation (estimate = 3.01; SE = 0.36; p < 0.001); evaluation (estimate = 3.83; SE = 0.39; p = 0.002); sustainability, scale, and spread (estimate = 3.06; SE = 0.46; p = 0.003); and context assessment (estimate = 4.05; SE = 0.38; p = 0.016).

Conclusion

Process and outcome measures indicated that PKT participants increased their use of, knowledge of, and self-efficacy in KT. Our findings highlight the importance of longitudinal evaluations of training initiatives to inform how to build capacity for implementers.

Background

Implementation science is a rapidly growing field in which significant advances in the methods and effectiveness of implementation interventions have been made [1,2,3]. To keep abreast of these rapid advances, there is a need to train implementers (i.e., those tasked with implementing healthcare evidence) to use the outputs of this science. While capacity building in implementation science is expanding, the targets of these courses are typically researchers and not implementers [4,5,6,7,8,9]. Courses that target implementers are largely focused on interpreting evidence and facilitating dissemination [10,11,12,13,14,15]. These courses do not address an expressed need: in one survey of implementers, nearly 80% of respondents wanted to improve knowledge and skills related to implementation [16]. In surveys, interviews, and an in-person meeting conducted with over 200 Canadian implementers [17], the key barriers to applying evidence in practice were lack of organizational capacity (i.e., knowledge and skills) and lack of organizational infrastructure to support implementation. Competency-based training for evidence implementers, which uses consistent standards and objective parameters to assess changes in participants’ knowledge, skills, and outcomes [18, 19], is one approach to meet the capacity demand. When we delivered this course, there were no existing core competencies for the practice of implementation. Various groups have identified core competencies for implementation science, which can serve as the basis for implementation practice core competencies [20,21,22,23,24]. More recently, the National Implementation Research Network developed a working draft of core competencies for an implementation specialist [25].

There is also a gap in the literature describing evaluations of training activities for implementation scientists and practitioners. In response to an editorial in Implementation Science that emphasized the need to rigorously evaluate capacity building initiatives to advance the science and practice of dissemination and implementation [26], several evaluations of training initiatives were published [8, 22, 24, 27]. However, to date, many of these studies evaluated training initiatives that targeted researchers, with fewer focused on evidence implementation [28].

Recognizing the need to provide training for implementers and the scarcity of courses, we developed and evaluated the Foundations in Knowledge Translation (KT) Training Initiative [29] and used the findings from its evaluation to inform ongoing KT training initiatives. KT is the term used in Canada to describe dissemination and implementation. We designed the Practicing Knowledge Translation (PKT) course to build on what was learned from the Foundations in KT Training Initiative, such as the importance of engaging teams, ensuring opportunities for long-term training and coaching, and tailoring the course to the needs of the team members. Furthermore, for the PKT course, we added modules with more detail on mapping interventions to meet participants’ needs that were identified in the Foundations in KT Training Initiative. The goal of the PKT course is to provide training in how to use evidence and apply implementation science in healthcare settings. The aim of this study was to describe the PKT course and evaluate participant use of implementation science theories, models, and frameworks (TMFs), knowledge of and self-efficacy in KT, and satisfaction and feedback on the course.

Methods

Intervention development

Course content

The goal of the course was for participants to increase their use of TMFs, knowledge, skills, and self-efficacy in KT intervention development and implementation. Course content was framed using the knowledge-to-action (KTA) [30] cycle, which guides implementation and incorporates behavior change theories, frameworks, and evaluation models [31]. The KTA cycle was selected because it was developed from a review of more than 30 planned action theories. PKT content was organized into four overarching topics: KT basics, developing an intervention, implementation, and evaluation and sustainability. Since operationalizing the KTA requires adding TMFs, we used Nilsen’s taxonomy as the foundation for understanding and appropriately applying TMFs to each stage of the KTA [31]. When possible, we used TMFs that were developed from reviews of the literature to inform course content. Additional file 1 presents the methods and results to develop core competencies in implementation practice, which were used to inform course content.

Course structure and delivery

The overall course structure and delivery were informed by behavior change theory (the capability, opportunity, motivation–behavior theory [32]), adult learning theory and practices [33,34,35,36,37], evidence of effectiveness from systematic reviews [38,39,40], and feedback from previous KT training initiatives [29]. We have provided a summary of course structure and delivery using components of the TIDieR framework [41] (Table 1). More information on the PKT course is publicly available online at https://knowledgetranslation.net/education-training/pkt/.

The course developers and facilitators had a wide range of experience, including training in the fields of medicine, public health, and adult education; expertise and academic training in conducting quantitative and qualitative research; and practical experience with implementing health and social services with multiple stakeholders in local, national, and international settings (Table 2).

Course participants and recruitment

The PKT course was advertised between June and August 2015 using recruitment emails shared with the course developers’ circle of contacts (e.g., implementation researchers, healthcare professionals, project and grant collaborators, participants of previous KT training initiatives). Recruitment ads were posted in online forums and newsletters (e.g., KT Canada Newsletter, Canadian Knowledge Transfer and Exchange Community of Practice, British Columbia Knowledge Translation Community of Practice etc.). Interested participants were invited to complete an application describing their roles, previous experience with implementation, and their interest in participating in PKT. They were also asked to briefly describe project(s) they worked on, their learning goals, and anticipated benefits of participating in PKT. Two course developers reviewed the 19 applications received to assess alignment of the course objectives with applicants’ learning goals and interest in participating, scope and relevance of the identified project(s), and applicants’ position to impact healthcare outcomes. Eligible participants included clinicians, researchers, healthcare managers, and policy makers.

Evaluation

The PKT course was offered between September 2015 and February 2016 and was assessed using an uncontrolled before and after study design and a process evaluation, using convergent parallel mixed methods [42, 43]. Quantitative and qualitative data were collected concurrently to converge findings and provide a robust analysis.

Outcomes

The primary outcome was participants’ use of TMFs in implementation projects. We developed a six-item questionnaire to assess participant use of implementation TMFs (Additional file 2: Part 3—Current KT practice). Each question aligned with one core competency (e.g., use behavior change theory to develop a program). Participants responded to questions using a 5-point scale from “never” to “always.” Secondary outcomes were knowledge and self-efficacy across the six core KT competencies (Additional file 2: Part 1—Knowledge; Part 2—Self-efficacy). These knowledge and self-efficacy questions mapped to the core competencies and indicators (Additional file 1). There were 4 to 11 questions per core competency; mean scores were created for each core competency at each time point. For each knowledge question, participants were asked to rate “I currently have a high level of knowledge in…” for each core competency indicator on a scale from 1 to 7 (strongly disagree to strongly agree). For self-efficacy questions, they were asked to respond to the statement “I am confident in my ability to do the following activities in practice” using a similar Likert scale from 1 to 7. Participants were asked seven questions about course satisfaction (Additional file 2: Part 4—Participant satisfaction).
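The scoring rule described above (one mean score per core competency at each time point) can be sketched as follows. This is a minimal illustration, not the authors' scoring script: the competency keys and the item responses are hypothetical, and the only details taken from the text are the 1 to 7 response scale and the use of a mean across the items mapped to each competency.

```python
from statistics import mean

# Hypothetical 1-7 Likert responses for one participant at one time point,
# grouped by core competency. Keys and values are illustrative only.
responses = {
    "developing_an_etp": [5, 6, 4, 5],
    "evaluation": [3, 4, 4, 5, 3],
}


def competency_means(items_by_competency):
    """Return the mean item score for each core competency."""
    scores = {}
    for competency, items in items_by_competency.items():
        # Reject values outside the 1-7 scale described in the text.
        if not all(1 <= item <= 7 for item in items):
            raise ValueError(f"{competency}: responses must be on the 1-7 scale")
        scores[competency] = mean(items)
    return scores


scores = competency_means(responses)
```

Repeating this calculation for each participant at each time point would give the repeated-measures scores that the longitudinal analysis takes as input.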

Data collection

Participants completed online surveys on FluidSurveys at baseline, 3, 6, and 12 months after the start of the course to assess use of TMFs, knowledge, self-efficacy, and factors affecting each of these outcomes, using Likert scales from 1 (strongly disagree) to 7 (strongly agree). To maximize response rates, reminders were sent at 1-, 3-, and 7-week intervals after the initial email [44]. Demographic data included the number of years participants had worked in healthcare (Table 3). Participants took part in semi-structured interviews at 3, 6, and 12 months after the start of the course to explore changes in use of TMFs, knowledge, and self-efficacy, and the factors related to these changes. The semi-structured interview guide was developed to elicit feedback on the participant’s implementation project, use of implementation TMFs, knowledge, self-efficacy, and course satisfaction (see Additional file 3). All interviews were audio recorded and transcribed; transcripts comprised the primary source of data.

Data analysis

Quantitative analyses were conducted using SPSS v 20 (Chicago, IL). To determine whether participant outcomes differed over time, we used multilevel modeling with a first-order autoregressive covariance matrix. Models were fitted using maximum likelihood estimation. To determine the correct error structure, we estimated multiple two-level models with “time” at level 1 and “between-person” variance at level 2; models were chosen based on Schwarz’s Bayesian criterion. Qualitative analyses were conducted with QSR NVivo 10 using a double-coded, thematic analysis approach [45]. Two independent analysts read four interview transcripts to familiarize themselves with the data and generated a list of initial codes, which formed the initial coding tree. The coding tree was then applied to the remaining transcripts and iteratively refined through discussion; if emergent themes appeared in the data, the coding tree was expanded. All data were double coded, and inter-rater reliability between the analysts was calculated using Cohen’s kappa. Any coding with a kappa value between −1 and 0.6 was discussed and resolved. Once all data were double coded, analysts used charting and visualization tools in NVivo to further explore the data and derive the main themes.
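For readers unfamiliar with the inter-rater statistic, the sketch below shows how Cohen’s kappa can be computed for two analysts and compared against the 0.6 threshold mentioned above. It is an illustration only: the study computed kappa in NVivo, the code labels and segment data here are hypothetical, and treating a value of exactly 0.6 as requiring discussion is an assumption.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same segments."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of segments")
    n = len(rater_a)
    # Observed agreement: share of segments given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Assumption: a kappa of 0.6 or lower triggers discussion between analysts.
DISCUSSION_THRESHOLD = 0.6

# Hypothetical codes applied by two analysts to six transcript segments.
analyst_1 = ["barrier", "barrier", "facilitator", "other", "barrier", "facilitator"]
analyst_2 = ["barrier", "facilitator", "facilitator", "other", "barrier", "facilitator"]

kappa = cohens_kappa(analyst_1, analyst_2)
needs_discussion = kappa <= DISCUSSION_THRESHOLD
```

In this made-up example the analysts agree on five of six segments, which gives a kappa of 17/23 (about 0.74), so the coding would not be flagged for discussion.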

Ethics approval

Ethics approval was obtained from the St. Michael’s Hospital Research Ethics Board (#15-204). Written consent to participate in the course evaluation was obtained from all participants before course onset. Participation in the course evaluation was voluntary and not connected to course performance; no monetary compensation was awarded.

Results

Evaluation

Participants

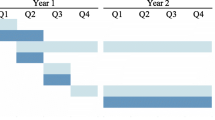

Of the 17 participants enrolled in the PKT course, 15 consented to participate in the course evaluation. The majority of evaluation participants had more than 10 years’ experience working in healthcare (53%), were female (73%), and based in Canada (67%) (Table 3). Participants’ attendance in webinars/workshops and assignment completion decreased over the course duration (Fig. 1).

Participants’ attendance and assignment completion over the PKT course duration. *Attendance reflects the number of “live” attendees during a webinar. It is possible that some course participants accessed the webinar recordings post-webinar and viewed them asynchronously; those numbers are not reflected in this figure

Response rates

Survey response rates among PKT participants decreased over the four time points; response was 80% at baseline (n = 12), 80% at 3 months (n = 12), 53% at 6 months (n = 8), and 40% at 12 months (n = 6). Of the survey participants, 87% completed surveys at two or more time points. Interview participation varied across the three time points: 40% at 3 months (n = 6), 53% at 6 months (n = 8), and 33% at 12 months (n = 5). Of the interview participants, 56% participated in an interview at more than one time point (Fig. 2).
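The percentages above are consistent with using the 15 consenting evaluation participants as the denominator at every time point. The short check below reproduces them from the reported counts; the choice of denominator is inferred from the figures rather than stated explicitly in the text.

```python
# Denominator inferred from the text: 15 participants consented to the evaluation.
CONSENTED = 15

# Respondent counts reported in the text.
survey_counts = {"baseline": 12, "3 months": 12, "6 months": 8, "12 months": 6}
interview_counts = {"3 months": 6, "6 months": 8, "12 months": 5}


def response_rates(counts, denominator=CONSENTED):
    """Return the response rate at each time point as a whole-number percentage."""
    return {label: round(100 * n / denominator) for label, n in counts.items()}


survey_rates = response_rates(survey_counts)
interview_rates = response_rates(interview_counts)
```

The computed values match the reported survey rates (80%, 80%, 53%, 40%) and interview rates (40%, 53%, 33%).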

Change in participants’ use of implementation TMFs

Participants reported an increase in their current use of implementation TMFs (mean = 2.11; estimate = 2.11; standard error (SE) = 0.4; p = 0.03). Through the interviews, participants described a change in the way they designed, implemented, and assessed interventions and that they approached these activities in a more rigorous and systematic way since participating in the course (Table 4). At 3 months, some participants described that they sought opportunities to apply KT concepts and use resources provided in the workshop in their work. At 6 months, some participants reported applying TMFs directly in their work. At 12 months, some participants conveyed that they were completing steps of the KTA cycle they had not previously performed (e.g., conducting barrier/facilitator assessments, using frameworks such as the Theoretical Domains Framework and the Consolidated Framework for Implementation Research, developing evidence-informed, theory-driven programs (ETPs) and KT tools etc.). They also described using course resources on a regular basis to inform their work.

Participants described assessing projects underway in their organizations to see if projects had been planned and implemented using implementation TMFs. Participants explained that they looked for ways to introduce KT concepts in their organization (e.g., through informal conversations, lunch and learn sessions, workshops). See Table 4 for examples of participants’ use of implementation TMFs at an organizational level.

Factors influencing participants’ use of implementation TMFs

Complexity of KT

Participants said that it was challenging to apply implementation TMFs, since it was a slow, iterative, and complex process. Participants described requiring dedicated time to think through and systematically apply KT content learned through the course. Additionally, they thought their environment was not always conducive to applying KT, as they worked in a healthcare system with constant pressure to deliver quickly, and to make quick decisions and trade-offs to optimize time and resources. In some cases, applying implementation TMFs to projects that were mid-stream required participants to change the original plan (i.e., going backwards before being able to move forward). Participants described KT concepts as being helpful for project planning, but they found practical application to be challenging. Despite these challenges, participants recognized the benefits of applying implementation TMFs and how using KT concepts helped them make progress with their project, which reinforced their intention to use these concepts over time.

Organization valuing KT

Using KT concepts within an organization required staff at home organizations, who did not participate in the course, to recognize the value of KT. A few participants mentioned challenges within their organizations, for example, feeling like they were a “lone ranger” as the level of receptivity and support for KT varied. The complexity of KT concepts meant it was challenging for participants to find a balance between sharing in-depth information about KT and providing sufficient information for others to use KT principles. Working with individuals in their organization who were knowledgeable about KT was perceived as being helpful in applying implementation TMFs at an organizational level.

Organizational support and resources

Use of implementation TMFs at the organizational level was influenced by the alignment of KT with organizational priorities and interest (e.g., whether the organization prioritized the use of evidence-based KT interventions, placed emphasis on performance measurements and evaluation, had a supportive learning culture emphasizing continued professional development etc.). Some organizations prioritized KT (e.g., were tasked with a provincial agenda to give people KT support as part of their organization’s portfolio). Other participants reported having support from their organization and leadership to use KT approaches, yet did not necessarily feel that there was understanding and support for the time and resources required to build KT capacity and infrastructure to conduct KT activities. Some participants realized that many of their organizations’ projects were focused on and/or were funded for dissemination rather than implementation. Others stated that their organization had competing priorities, such that non-KT projects took precedence.

Change in knowledge

Across all time points, there was a significant increase in participants’ knowledge in the following core competencies: developing an ETP (estimate = 4.10; SE = 0.37; p = 0.002); evidence implementation (estimate = 2.68; SE = 0.42; p < 0.001); evaluation (estimate = 4.43; SE = 0.36; p < 0.001); sustainability, scale, and spread (estimate = 2.55; SE = 0.34; p < 0.001); and context assessment (estimate = 3.86; SE = 0.32; p < 0.001; Table 5). Participants did not report an increase in knowledge in the core competency of stakeholder engagement and relationship building. During interviews, participants expressed that the course enabled them to deepen their understanding of KT (Table 4). At 3 months, participants described experiencing a deeper understanding of what KT is and how to approach it, particularly the KT steps. At 6 months, these participants continued to express an increase in knowledge, shifting from a theoretical understanding to a more practical understanding as they were now incorporating KT concepts into their work. They recognized the need to be more systematic in thinking through the complexities and planning for effective implementation. At 12 months, participants emphasized their increased knowledge and described how they better understood the importance of using a theory to underpin activities for behavior change and assessing context, and of developing relationships and engaging end-users when planning for implementation, when compared to course onset.

Factors influencing participants’ change in knowledge

During the interviews, a number of participants stated that they had no prior exposure to KT; some said that they had started the course not knowing what KT was, yet knowing that they needed to learn it. Others described having no previous knowledge of KT, yet were able to recognize how some of the concepts overlapped with their previous training and experience in other fields (e.g., continuing professional development, project management, quality improvement, change management, behavior psychology etc.). Most of the participants who were aware of KT mentioned how they were already aware of and/or applying some of these concepts (e.g., using high-quality evidence for program selection, using an evidence-based approach for program implementation, assessing context, and engaging stakeholders etc.) in their work but had not identified these as KT concepts or were more familiar with using other terms to describe these approaches. They were able to link PKT course content to their previous learning, which helped confirm and refine some of the work they were doing. A few participants had background knowledge of KT but described how they thought of KT in a slightly different way at course onset; for example, they had learned about KT from a theoretical perspective and were less familiar with its practical application. Participants who had received previous KT training mentioned that they had not been aware of the distinction between dissemination and implementation, and most of them were more knowledgeable about dissemination than implementation.

Change in self-efficacy

There was a significant increase in participants’ self-efficacy in developing an ETP (estimate = 3.81; SE = 0.34; p < 0.001); implementation (estimate = 3.01; SE = 0.36; p < 0.001); evaluation (estimate = 3.83; SE = 0.39; p = 0.002); sustainability, scale, and spread (estimate = 3.06; SE = 0.46; p = 0.003); and context assessment (estimate = 4.05; SE = 0.38; p = 0.016; Table 5). At 3 months, participants indicated that although they were comfortable with course concepts, they required opportunities for application of these concepts to enhance their confidence in applying TMFs. During the 6-month interviews, participants, particularly those who had identified as not having previous KT experience, noted an increase in confidence in their KT work. Some participants expressed that they did not yet feel comfortable leading a KT project on their own but would feel comfortable participating in conversations about KT and contributing ideas in meetings in their home organizations. At 12 months, participants indicated gaining confidence in performing KT activities and feeling like they were prepared to use KT concepts when needed. Participants anticipated gaining experiential self-efficacy through opportunities to apply their course learning to their work (Table 4).

Factors influencing participants’ change in self-efficacy

Although there was a reported increase in self-efficacy across all core competencies, participants reported needing direction and support from KT experts to reinforce confidence, particularly when building KT capacity in their home organizations. Participants indicated that their confidence in applying implementation TMFs was influenced by their roles (e.g., whether or not they had roles that involved applying implementation TMFs as part of their daily work), level of experience within their role (e.g., whether they were advanced practitioners or beginners), and scope of practice (e.g., whether they were frontline implementers themselves or responsible for supporting frontline implementers). For example, one participant described how her role was to be a KT resource person for her organization and participate in tasks such as providing feedback on KT sections of grant proposals; she perceived that this role helped to reinforce self-efficacy in providing a KT perspective on projects. The opportunity to apply KT concepts to specific implementation projects was similarly perceived to increase participants’ self-efficacy. Participants explained how participating in the course with a specific implementation project was helpful in allowing them to directly apply course concepts. Simple projects with a defined practice change and target audience were perceived to be easier than complex projects that required organizational and system-level change. Since having an active implementation project was recommended but not required for course participants, those participants without implementation projects said it would have been helpful to have an implementation project to make assignments more meaningful. These participants described how using hypothetical examples and hearing about other participants’ projects helped demonstrate how concepts could be applied to the projects they were envisioning. Similarly, exposure to a variety of projects at different stages of implementation, through their own and other participants’ projects, enabled participants to recognize how content could be applied at each stage and across different projects.

Course satisfaction

Participant satisfaction was high across all time points; means ranged from 6.25 to 6.63 on a 7-point scale. Participants highlighted key strengths of the course, including its practical lens on implementation, access to course facilitators, and the opportunity to network and learn from others. Participants expressed that they were highly satisfied with both the content and the format of the course, as the course allowed them to learn and feel prepared and confident to practice KT. They described that a key highlight of the course was the facilitators’ encouragement throughout the course and the use of examples and analogies to explain complex concepts so that they were understandable and applicable.

Participants reported that the webinars and bi-weekly assignments helped them to stay abreast of course content, although this was reported by those who continued to complete webinars and assignments. The online learning component was perceived to provide easy access to course materials. The course materials were described as being very helpful and a good balance between in-depth theory and practical examples. Access to an implementation facilitator was perceived to have been a critical support for directly applying implementation TMFs to participants’ own work. Participants appreciated feedback on assignments, particularly input on their ETP development and KT plans. Qualitative data on participant satisfaction are presented in Additional file 4.

Participants shared suggestions for improving the course (Table 6). Since delivering and evaluating this iteration of the PKT course, we have delivered the course seven times with an effort to address these improvement suggestions. Changes included an increased focus on engaging stakeholders, building relationships, and gaining implementation buy-in. We encouraged applicants from the same organizations to participate together to optimize impact within the organization. Within the larger workshops, we organized participants at similar levels and roles (e.g., policy makers vs. researchers vs. healthcare managers etc.) for small group activities to foster discussions based on collective experiences. We continue to identify ways to increase the affordability and sustainability of this course, such as providing online courses.

Discussion

Six core competencies were used to develop, deliver, and evaluate the PKT course (see Additional file 1). Participation in the PKT course was associated with increased use of implementation TMFs, knowledge, and self-efficacy over the duration of the course, and these changes were sustained 12 months after course onset. After the course was completed, we observed statistically significant differences in all measured core competencies except stakeholder engagement and relationship building. We likely did not see a change in stakeholder engagement and relationship building because this was the least covered topic in the course and the one with the fewest concrete approaches that result in positive outcomes. Our observed outcomes were influenced by factors such as participants’ roles in their organizations, the stage and scope of their projects, and the level of organizational and leadership support for using KT.

Findings from this evaluation are comparable with other studies evaluating dissemination and implementation training initiatives and identified a number of factors that can be leveraged to enhance future initiatives for implementers [22, 24, 27]. Course facilitators can consider participants’ previous experience with KT (e.g., beginner, intermediate, advanced) [46] and fit with participants’ previous learning and experience from other fields (e.g., terminology and concepts from physician education, project management, quality improvement, change management, behavior psychology etc.). Building upon previous knowledge can help scaffold and reinforce learning [34, 47]. To help increase self-efficacy in KT, training initiatives can link to participants’ scope of practice (e.g., whether they are intervention developers, frontline implementers, or responsible for supporting front-line implementers) and opportunities to apply KT concepts. This is consistent with findings from other evaluations of dissemination and implementation research training programs [2]. In addition, experiential learning techniques such as problem-based learning can be leveraged to maximize skill transfer [48, 49], and implementation researchers and practitioners appreciate an emphasis on the application of theories through problem-based scenarios [27]. This research is reflected in PKT course participants’ reports that it was helpful to have their own project to work on throughout the course.

Consistent with other studies evaluating capacity building programs [6, 24, 27], PKT participants valued informal opportunities to network with other implementers and navigate through challenges associated with using TMFs (e.g., how to identify and select appropriate TMFs, when and how to use them etc.) with others in the course. Several participants continued to connect and meet, and there was a course participant who switched organizations to work with another course participant. These networking opportunities can be used to leverage the knowledge and skills acquired through the course. Similar to other studies that have assessed the use of mentorship approaches in implementation science capacity building initiatives [6, 46, 50], PKT participants valued having access to an implementation facilitator to provide support in applying course content to their work through regular check-ins and feedback.

This evaluation was unique in that we assessed reported use of TMFs at the organizational level, going beyond individual measures of knowledge and self-efficacy. Although all participants had some level of organizational support (i.e., the organization paid for them to attend), there was variability in organizational learning and absorptive capacity [51, 52]. The absorptive capacity of the organizations (including organizational priorities, organizational readiness, and buy-in) in combination with the participants’ organization roles (e.g., being responsible for KT, having the influence or capacity to transform learning and processes embedded within the organization) affected participants’ experiences in championing KT within the organization. These findings have implications for which participants/organizations should be accepted and for organizations in how they support participants after PKT.

Some limitations should be noted. First, we had a small sample size; the number of course participants was intentionally small to allow for individualized learning, support, and coaching. Second, survey and interview response rates decreased over time, and not all participants completed the surveys or interviews. The response rate was highest at the 3-month time point, suggesting there was greater engagement immediately after the workshop. It is possible that participants who were less engaged in the course did not participate in the assignments, surveys, and interviews; as a result, their perspectives and outcomes were not captured. There was also attrition in survey and interview participation over time due to changes in participants’ roles in their organizations or in their employment status. Third, we used a quasi-experimental research design without a control group; for this reason, causal inferences regarding predictors and outcomes cannot be made. Fourth, our data were collected using self-report measures, some of which had not been assessed for validity, specifically those measuring knowledge, self-efficacy, and use of TMFs. However, the survey results were consistent with the interview results. Fifth, we assessed outcomes at the level of individual course participants; outcomes at an organizational level warrant further exploration.

As healthcare systems seek new approaches to increase capacity in effective evidence-based implementation, the PKT course can be used as a model to inform how to develop, deliver, and tailor continuing professional development initiatives to help build capacity at individual and organizational levels. The core competencies identified for the practice of implementation can help organizations and decision makers assess and monitor competencies of practitioners so they can identify and address capacity gaps and needs to enhance their implementation efforts. Next steps for future research include developing a valid and reliable measure of practitioners’ knowledge and self-efficacy and adapting valid, reliable tools to measure change in behavior, intention to use, and actual use of implementation science.

Conclusion

Process and outcome measures collected over 12 months indicated that participation in the PKT course increased participants’ knowledge, self-efficacy, and use of implementation TMFs, and identified factors that moderated these outcomes, such as participation in a network of learners. Our findings highlight the importance of conducting longitudinal evaluations of training initiatives to help inform how to build capacity for implementers.

Abbreviations

- ETP: Evidence-informed, theory-driven program

- KT: Knowledge translation

- KTA: Knowledge-to-action

- PKT: Practicing knowledge translation

- SE: Standard error

- TMFs: Theories, models, and frameworks

References

Norton WE, Lungeanu A, Chambers D, Contractor N. Mapping the growing discipline of dissemination and implementation science in health. 2017;112 https://doi.org/10.1007/s11192-017-2455-2.

Chambers DA, Proctor EK, Brownson RC, Straus SE. Mapping training needs for dissemination and implementation research: lessons from a synthesis of existing D&I research training programs. Translational Behavioral Medicine. 2017;7:593–601. https://doi.org/10.1007/s13142-016-0399-3.

Wensing M. Implementation science in healthcare: introduction and perspective. Z Evid Fortbild Qual Gesundhwes. 2015;109:97–102. https://doi.org/10.1016/j.zefq.2015.02.014.

Morrato EH, Rabin B, Proctor J, Cicutto LC, Battaglia CT, Lambert-Kerzner A, et al. Bringing it home: expanding the local reach of dissemination and implementation training via a university-based workshop. Implement Sci. 2015;10:94. https://doi.org/10.1186/s13012-015-0281-6.

Meissner HI, Glasgow RE, Vinson CA, Chambers D, Brownson RC, Green LW, et al. The U.S. training institute for dissemination and implementation research in health. Implement Sci. 2013;8:12. https://doi.org/10.1186/1748-5908-8-12.

Proctor EK, Landsverk J, Baumann AA, Mittman BS, Aarons GA, Brownson RC, et al. The implementation research institute: training mental health implementation researchers in the United States. Implement Sci. 2013;8:105. https://doi.org/10.1186/1748-5908-8-105.

What is the MT-DIRC. MT-DIRC. 2018. http://mtdirc.org/. Accessed 24 Nov 2017.

Marriott BR, Rodriguez AL, Landes SJ, Lewis CC, Comtois KA. A methodology for enhancing implementation science proposals: comparison of face-to-face versus virtual workshops. Implement Sci. 2016;11:62. https://doi.org/10.1186/s13012-016-0429-z.

Implementation Science Masterclass Tuesday 11 and Wednesday 12 July 2017. Collaboration for Leadership in Applied Health Research and Care South London. 2017. http://www.clahrc-southlondon.nihr.ac.uk/events/2017/implementation-science-masterclass-tuesday-11-and-wednesday-12-july-2017. Accessed 12 Nov 2017.

UCSF online implementation science courses and certificate program. Implementation Science News. 2017.

Brownson RC, Fielding JE, Green LW. Building capacity for evidence-based public health: reconciling the pulls of practice and the push of research. Annu Rev Public Health. 2017; https://doi.org/10.1146/annurev-publhealth-040617-014746.

Scientist knowledge translation training workshop. Knowledge Translation Programs. 2018 http://www.sickkids.ca/Learning/AbouttheInstitute/Programs/Knowledge-Translation/2-Day-Scientist-Knowledge-Translation-Training/index.html. Accessed 15 Feb 2018.

Dunagan PB, Kimble LP, Gunby SS, Andrews MM. Attitudes of prejudice as a predictor of cultural competence among baccalaureate nursing students. J Nurs Educ. 2014;53(6):320–8.

EBCP workshop. 2018. http://ebm.mcmaster.ca/. Accessed 15 Feb 2018.

Educational program & training. 2018. https://www.cebm.net/education-and-training/. Accessed 15 Feb 2018.

The Michael Smith Foundation for Health Research. Training and resources to support research use: a BC needs assessment; 2012.

Knowledge Translation Program. breaKThrough: a knowledge translation support service report on findings of a needs assessment and exploration of growth opportunities. Toronto: St. Michael’s Hospital; 2013.

Leung WC. Competency based medical training: review. BMJ. 2002;325:693–6.

Thacker SB, Brownson RC. Practicing epidemiology: how competent are we? Public Health Rep. 2008;123:4–5.

Padek M, Colditz G, Dobbins M, Koscielniak N, Proctor EK, Sales AE, et al. Developing educational competencies for dissemination and implementation research training programs: an exploratory analysis using card sorts. Implement Sci. 2015;10:114. https://doi.org/10.1186/s13012-015-0304-3.

Straus SE, Brouwers M, Johnson D, Lavis JN, Légaré F, Majumdar SR, et al. Core competencies in the science and practice of knowledge translation: description of a Canadian strategic training initiative. Implement Sci. 2011;6(1):127.

Ullrich C, Mahler C, Forstner J, Szecsenyi J, Wensing M. Teaching implementation science in a new Master of Science Program in Germany: a survey of stakeholder expectations. Implement Sci. 2017;12:55. https://doi.org/10.1186/s13012-017-0583-y.

Gonzales R, Handley MA, Ackerman S, O’Sullivan PS. Increasing the translation of evidence into practice, policy, and public health improvements: a framework for training health professionals in implementation and dissemination science. Acad Med. 2012;87:271–8. https://doi.org/10.1097/ACM.0b013e3182449d33.

Brownson RC, Proctor EK, Luke DA, Baumann AA, Staub M, Brown MT, et al. Building capacity for dissemination and implementation research: one university’s experience. Implement Sci. 2017;12:104. https://doi.org/10.1186/s13012-017-0634-4.

Metz A, Louison L, Ward C, Burke K. Global implementation specialist practice profile. 2018. https://nirn.fpg.unc.edu/resources/is-practice-profile.

Straus SE, Sales A, Wensing M, Michie S, Kent B, Foy R. Education and training for implementation science: our interest in manuscripts describing education and training materials. Implement Sci. 2015;10:136.

Carlfjord S, Roback K, Nilsen P. Five years’ experience of an annual course on implementation science: an evaluation among course participants. Implement Sci. 2017;12:101. https://doi.org/10.1186/s13012-017-0618-4.

EXTRA Program for Healthcare Improvement. Canadian Foundation for Healthcare Improvement (CFHI). 2018. https://www.cfhi-fcass.ca/WhatWeDo/extra. Accessed 15 Feb 2018.

Park JS, Moore JE, Sayal R, Holmes BJ, Scarrow G, Graham ID, et al. Evaluation of the “Foundations in Knowledge Translation” training initiative: preparing end users to practice KT. Implement Sci. 2018;13(1):63.

Graham ID, Logan J, Harrison MB, Straus SE, Tetroe J, Caswell W, et al. Lost in knowledge translation: time for a map? J Contin Educ Health Prof. 2006;26:13–24.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53.

Michie S, van Stralen MM, West R. The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implement Sci. 2011;6:42.

Mezirow J. Transformative dimensions of adult learning. San Francisco: Jossey-Bass; 1991.

Fosnot CT, Perry RS. Constructivism: theory, perspectives, and practice. Vol. 2. New York: Teachers College Press; 2005. p. 8–33.

Kolb DA. Experiential learning: experience as the source of learning and development. Englewood Cliffs: Prentice-Hall; 1984.

Conrad R-M, Donaldson JA. Continuing to engage the online learner: more activities and resources for creative instruction. Vol. 35. New York: Wiley; 2012.

Lehman RM, Conceição SC. Motivating and retaining online students: research-based strategies that work. San Francisco: Jossey-Bass; 2013.

Forsetlund L, Bjorndal A, Rashidian A, Jamtvedt G, O’Brien MA, Wolf F, et al. Continuing education meetings and workshops: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2009;2:CD003030.

Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. JAMA. 2008;300:1181–96.

Giguère A, Légaré F, Grimshaw J, Turcotte S, Fiander M, Grudniewicz A, et al. Printed educational materials: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;(10):CD004398.

Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687.

Palinkas LA, Aarons GA, Horwitz S, Chamberlain P, Hurlburt M, Landsverk J. Mixed method designs in implementation research. Admin Pol Ment Health. 2011;38(1):44–53.

Fetters MD, Curry LA, Creswell JW. Achieving integration in mixed methods designs—principles and practices. Health Serv Res. 2013;48(6pt2):2134–56.

Dillman DA. Mail and telephone surveys: the total design method. Vol. 19. New York: Wiley; 1978.

Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3:77–101.

Padek M, Mir N, Jacob RR, Chambers DA, Dobbins M, Emmons KM, et al. Training scholars in dissemination and implementation research for cancer prevention and control: a mentored approach. Implement Sci. 2018;13(1):18.

Salyers V, Carter L, Cairns S, Durrer L. The use of scaffolding and interactive learning strategies in online courses for working nurses: implications for adult and online education. 2014.

Hmelo-Silver CE. Problem-based learning: what and how do students learn? Educ Psychol Rev. 2004;16:235–66.

Furman N, Sibthorp J. Leveraging experiential learning techniques for transfer. New Directions for Adult and Continuing Education. 2013;2013:17–26.

Osanjo GO, Oyugi JO, Kibwage IO, Mwanda WO, Ngugi EN, Otieno FC, et al. Building capacity in implementation science research training at the University of Nairobi. Implement Sci. 2016;11:30. https://doi.org/10.1186/s13012-016-0395-5.

Hutchinson A, Estabrooks C. Theories of KT: educational theories. In: Straus SE, Tetroe J, Graham ID, editors. Knowledge translation in health care. Oxford: Wiley/Blackwell; 2009.

Heifetz R, Linsky M, Grashow A. Practice of adaptive leadership: tools and tactics for changing your organization and the world. Boston: Harvard Business Review Press; 2009.

Knowles MS, Holton EF, Holton E, Swanson RA. The adult learner: the definitive classic in adult education and human resource development. New York: Routledge; 2005.

Sachdeva AK. Use of effective feedback to facilitate adult learning. J Cancer Educ. 1996;11(2):106–18.

Wandersman A, Chien VH, Katz J. Toward an evidence-based system for innovation support for implementing innovations with quality: tools, training, technical assistance, and quality assurance/quality improvement. Am J Community Psychol. 2012;50:445–59.

Leeman J, Calancie L, Hartman MA, Escoffery CT, Herrmann AK, Tague LE, et al. What strategies are used to build practitioners’ capacity to implement community-based interventions and are they effective?: a systematic review. Implement Sci. 2015;10:80.

Practicing knowledge translation. 2015. Accessed 30 Mar 2018.

Bandura A. Social learning theory. General Learning Corporation. New York City: General Learning Press; 1971.

Acknowledgements

We would like to thank all of the participants in this study and would like to acknowledge Melissa and Sabrina Jassemi for all of their support and efforts in the organization of the “Practicing Knowledge Translation” course and in preparing the manuscript.

Funding

The project was funded by the Knowledge Translation Program.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Author information

Contributions

JEM and SS conceived the study. JEM, SK, and SS developed the PKT course. SR provided input on the study design. SR and JP completed the data collection and analysis. JEM, SR, and JP drafted and revised the manuscript. SK and SS reviewed and provided input to the manuscript. All authors reviewed and approved the final manuscript. This manuscript represents the views of the named authors only and not those of their organizations or institutions.

Ethics declarations

Authors’ information

SS holds a Tier 1 Canada Research Chair in Knowledge Translation and Quality of Care and the Mary Trimmer Chair in Geriatric Medicine at the University of Toronto.

Ethics approval and consent to participate

Ethics approval was obtained from the St. Michael’s Hospital research ethics board (#15-204).

Consent for publication

Written consent to participate in the course evaluation was obtained from all participants before the start of the course. Participation in the research evaluation was voluntary and not connected to course performance. No monetary compensation was awarded.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1: Implementation practice core competencies. (DOCX 22 kb)

Additional file 2: PKT evaluation survey. (DOCX 550 kb)

Additional file 3: PKT evaluation interview guide. (DOCX 29 kb)

Additional file 4: Course satisfaction—qualitative data. (DOCX 14 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Moore, J.E., Rashid, S., Park, J.S. et al. Longitudinal evaluation of a course to build core competencies in implementation practice. Implementation Sci 13, 106 (2018). https://doi.org/10.1186/s13012-018-0800-3

DOI: https://doi.org/10.1186/s13012-018-0800-3