Abstract

The theory of the Schur complement plays an important role in many fields, such as control theory and computational mathematics. In this paper, using properties of the Schur complement and some inequality techniques, we obtain new estimates of the diagonally dominant degree of the Schur complement of matrices, which improve some related results. As an application of these estimates, we present some distributions for the eigenvalues of the Schur complements. Finally, a numerical example is given to show the advantages of the derived results.

MSC: 15A45, 15A48.

1 Introduction

The Schur complement has proved to be a useful tool in many fields, such as numerical algebra and control theory. The authors of [1, 2] proposed a kind of iteration called the Schur-based iteration. With this method, large-scale linear systems can be solved by reducing their order through the Schur complement. In addition, when the conjugate gradient method is used to solve large-scale linear systems, the more concentrated the eigenvalues of the system matrix are, the faster the iteration converges (see, e.g., [3, pp. 312-317]). From [1, 2], it can be seen that, after the Schur-based iteration has been applied to reduce the order of a large-scale linear system, the system matrix of the reduced equations is the Schur complement of the original system matrix, and its eigenvalues are more concentrated than those of the original system matrix. As a result, the Schur-based conjugate gradient method computes faster than the ordinary conjugate gradient method.
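The order reduction described above can be illustrated with plain block elimination. The following is a minimal sketch only, not the Schur-based iteration of [1, 2]: it assumes a dense real matrix with a nonsingular leading block, and the function name `solve_via_schur`, the split index `k` and the test data are hypothetical choices made for illustration.

```python
import numpy as np

def solve_via_schur(A, b, k):
    """Solve A x = b by eliminating the first k unknowns (block elimination)."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    A11, A12 = A[:k, :k], A[:k, k:]
    A21, A22 = A[k:, :k], A[k:, k:]
    b1, b2 = b[:k], b[k:]

    # Reduced system matrix: the Schur complement of the leading block A11 in A.
    S = A22 - A21 @ np.linalg.solve(A11, A12)
    # Right-hand side of the reduced system.
    rhs = b2 - A21 @ np.linalg.solve(A11, b1)

    x2 = np.linalg.solve(S, rhs)               # solve the smaller system
    x1 = np.linalg.solve(A11, b1 - A12 @ x2)   # recover the eliminated unknowns
    return np.concatenate([x1, x2])

# Hypothetical test data (not taken from the paper).
A = np.array([[4.0, 1.0, 1.0],
              [2.0, 5.0, 1.0],
              [0.0, 1.0, 3.0]])
b = np.array([1.0, 2.0, 3.0])
x = solve_via_schur(A, b, k=1)
```

In an iterative setting, the direct solve with `S` would be replaced by an iterative method such as conjugate gradients applied to the reduced system, which is where the eigenvalue distribution of the Schur complement matters.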

Hence, it is always interesting to know whether some important properties of matrices are inherited by their Schur complements. Clearly, the Schur complements of positive semidefinite matrices are positive semidefinite; the same is true for M-matrices, H-matrices and inverse M-matrices (see [4, 5]). Carlson and Markham showed that the Schur complements of strictly diagonally dominant matrices are diagonally dominant (see [6]). Li, Tsatsomeros and Ikramov independently proved that the Schur complement of a strictly doubly diagonally dominant matrix is strictly doubly diagonally dominant (see [7, 8]). These properties have been used repeatedly to establish the convergence of iterations in numerical analysis and to derive matrix inequalities in matrix analysis (see [3, 9, 10]). More importantly, the distribution of the eigenvalues of the Schur complement is of great significance, as shown in [1, 2, 8, 11–17]. The aim of this paper is to study the distributions of the eigenvalues of the Schur complement of some diagonally dominant matrices.

Denote by the set of all complex matrices. Let . For (), assume

Set

Let us recall that A is a (row) diagonally dominant matrix () if

A is a doubly diagonally dominant matrix () if

A is a γ-diagonally dominant matrix () if there exists such that

A is a product γ-diagonally dominant matrix () if there exists such that

If all inequalities in (1.1)-(1.4) are strict, then A is said to be a strictly (row) diagonally dominant matrix (), a strictly doubly diagonally dominant matrix (), a strictly γ-diagonally dominant matrix () and a strictly product γ-diagonally dominant matrix (), respectively.
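The four dominance conditions can be checked numerically. The sketch below assumes the forms customary in the literature on these classes, which may differ in notation from (1.1)-(1.4): writing P_i(A) and S_i(A) for the sums of the absolute values of the off-diagonal entries in row i and column i, it tests |a_ii| ≥ P_i(A) for all i (row dominance), |a_ii||a_jj| ≥ P_i(A)P_j(A) for all i ≠ j (double dominance), |a_ii| ≥ γP_i(A) + (1-γ)S_i(A) (γ-dominance) and |a_ii| ≥ P_i(A)^γ S_i(A)^(1-γ) (product γ-dominance), with γ in [0, 1] supplied by the caller. All function names and the test matrix are hypothetical.

```python
import numpy as np

def abs_sums(A):
    """Return (|a_ii|, P_i(A), S_i(A)) as three 1-D arrays."""
    absA = np.abs(np.asarray(A, dtype=complex))
    d = np.diag(absA).copy()
    P = absA.sum(axis=1) - d   # off-diagonal absolute row sums
    S = absA.sum(axis=0) - d   # off-diagonal absolute column sums
    return d, P, S

def _dominates(lhs, rhs, strict):
    return bool(np.all(lhs > rhs)) if strict else bool(np.all(lhs >= rhs))

def is_dd(A, strict=False):
    d, P, _ = abs_sums(A)
    return _dominates(d, P, strict)

def is_doubly_dd(A, strict=False):
    d, P, _ = abs_sums(A)
    i, j = np.triu_indices(len(d), k=1)   # all index pairs with i < j
    return _dominates(d[i] * d[j], P[i] * P[j], strict)

def is_gamma_dd(A, gamma, strict=False):
    d, P, S = abs_sums(A)
    return _dominates(d, gamma * P + (1 - gamma) * S, strict)

def is_product_gamma_dd(A, gamma, strict=False):
    d, P, S = abs_sums(A)
    return _dominates(d, P**gamma * S**(1 - gamma), strict)

# Hypothetical example: not row diagonally dominant, but strictly
# gamma-diagonally dominant for gamma = 0.5.
B = np.array([[3.0, 4.0],
              [0.0, 3.0]])
```

The example matrix `B` illustrates that γ-diagonal dominance is a genuinely weaker requirement than row diagonal dominance: its first row violates |a_11| ≥ P_1(A), yet averaging row and column sums restores the inequality.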

Liu and Zhang in [14] have pointed out the fact as follows. If but , then there exists a unique index such that

As in [1, 2], for and , we call , and the i th (row) dominant degree, γ-dominant degree and product γ-dominant degree of A, respectively.
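As a small numerical illustration, the three dominant degrees can be computed row by row. The sketch assumes that they are the gaps in the corresponding dominance inequalities, namely |a_ii| - P_i(A), |a_ii| - γP_i(A) - (1-γ)S_i(A) and |a_ii| - P_i(A)^γ S_i(A)^(1-γ), where P_i(A) and S_i(A) are the off-diagonal absolute row and column sums; this is an assumption about the notation of [1, 2], and the function name and test matrix are hypothetical.

```python
import numpy as np

def dominant_degrees(A, gamma):
    """Return the row, gamma- and product gamma-dominant degrees of every row."""
    absA = np.abs(np.asarray(A, dtype=complex))
    d = np.diag(absA).copy()
    P = absA.sum(axis=1) - d   # off-diagonal absolute row sums
    S = absA.sum(axis=0) - d   # off-diagonal absolute column sums
    row_deg = d - P
    gamma_deg = d - (gamma * P + (1 - gamma) * S)
    product_deg = d - P**gamma * S**(1 - gamma)
    return row_deg, gamma_deg, product_deg

# Hypothetical test matrix (not taken from the paper).
B = np.array([[3.0, 4.0],
              [0.0, 3.0]])
row_deg, gamma_deg, product_deg = dominant_degrees(B, 0.5)
```

A negative entry in `row_deg` signals a row that is not diagonally dominant, while positive entries in `gamma_deg` or `product_deg` show that the matrix is still strictly dominant in the weaker sense.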

The comparison matrix of A, denoted by , is defined to be

A matrix A is an M-matrix if it can be written in the form $A = mI - P$ with $P$ being nonnegative and $m > \rho(P)$, where $\rho(P)$ denotes the spectral radius of P. A matrix A is an H-matrix if its comparison matrix is an M-matrix. We denote by and the sets of H- and M-matrices, respectively.
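These two definitions translate into a direct numerical test. The sketch below assumes the usual comparison matrix (|a_ii| on the diagonal, -|a_ij| off the diagonal) and tests the M-matrix property through a splitting sI - P with s taken as the largest diagonal entry; the function names, the tolerance `tol` and the test matrices are hypothetical.

```python
import numpy as np

def comparison_matrix(A):
    """Comparison matrix: |a_ii| on the diagonal, -|a_ij| elsewhere."""
    absA = np.abs(np.asarray(A, dtype=complex))
    M = -absA
    np.fill_diagonal(M, np.diag(absA))
    return M

def is_m_matrix(A, tol=1e-12):
    """Test whether the real matrix A equals s*I - P with P >= 0 and s > rho(P)."""
    A = np.asarray(A, dtype=float)
    off_diag = A - np.diag(np.diag(A))
    if np.any(off_diag > tol):           # off-diagonal entries must be nonpositive
        return False
    s = float(np.max(np.diag(A)))
    P = s * np.eye(A.shape[0]) - A       # entrywise nonnegative by construction
    rho = float(np.max(np.abs(np.linalg.eigvals(P))))
    return rho < s - tol

def is_h_matrix(A, tol=1e-12):
    return is_m_matrix(comparison_matrix(A), tol)

# Hypothetical test matrices (not taken from the paper).
T = np.array([[4.0, -1.0, 0.0],
              [-1.0, 4.0, -1.0],
              [0.0, -1.0, 4.0]])
H = np.array([[4.0, 1.0j, 0.0],
              [1.0, -4.0, 1.0],
              [0.0, -1.0, 4.0]])
C = np.array([[1.0, 2.0],
              [2.0, 1.0]])
```

Choosing s as the largest diagonal entry is enough here, because increasing s shifts both s and the spectral radius of the nonnegative matrix P by the same amount.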

For , denote by the cardinality of α and . If , then is the submatrix of A lying in the rows indicated by α and the columns indicated by β. In particular, is abbreviated to . Assume that is nonsingular. Then

is called the Schur complement of A with respect to .
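For experimentation, the Schur complement with respect to a principal submatrix can be computed as follows. The sketch assumes the standard formula, in which the block on the complementary index set α' is corrected by A(α', α)[A(α)]^{-1}A(α, α'); indices are 0-based here, unlike in the text, and the function name and test matrix are hypothetical.

```python
import numpy as np

def schur_complement(A, alpha):
    """Schur complement of A with respect to the principal submatrix A(alpha)."""
    A = np.asarray(A, dtype=complex)
    alpha = sorted(alpha)
    comp = [i for i in range(A.shape[0]) if i not in alpha]  # complement of alpha
    A_aa = A[np.ix_(alpha, alpha)]
    A_ac = A[np.ix_(alpha, comp)]
    A_ca = A[np.ix_(comp, alpha)]
    A_cc = A[np.ix_(comp, comp)]
    # Solve with A(alpha) instead of forming its inverse explicitly.
    return A_cc - A_ca @ np.linalg.solve(A_aa, A_ac)

# Hypothetical test matrix (not taken from the paper).
A = np.array([[4.0, 1.0, 1.0],
              [2.0, 5.0, 1.0],
              [0.0, 1.0, 3.0]])
S = schur_complement(A, [0])
```

A quick sanity check is the determinant identity det A = det A(α) · det(A/α), which holds whenever A(α) is nonsingular.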

The paper is organized as follows. In Section 2, we give several new estimates of the diagonally dominant degree of the Schur complement of matrices, which improve some related results. In Section 3, as an application of these results, distributions for the eigenvalues are obtained. In Section 4, we give a numerical example to show the advantages of the derived results.

2 The diagonally dominant degree for the Schur complement

In this section, we give several new estimates of the diagonally dominant degree of the Schur complement of matrices, which improve some related results.

Lemma 1 [4]

If A is an H-matrix, then .

Lemma 2 [4]

If or , then , i.e., .

Lemma 3 [11]

If or and , then the Schur complement of A is in or , where is the Schur complement of α in N and is the cardinality of .

Lemma 4 [1]

Let , , and . Then

Theorem 1 Let , , , and denote . Then for all ,

and

where

Proof According to Lemmas 1 and 2, we have . Thus, and ,

Denote

If

then

Choose such that

where we denote if . Set , where

Denote . If , then

otherwise,

Therefore, we have , and so . Note that . So,

Take in (2.5), then

Noting that , by (2.4) and (2.6), we have

Let , thus we easily get (2.1). Similarly, we obtain (2.2). □

Remark 1 Observe that

This means that Theorem 1 improves Theorem 1 of [14].

Theorem 2 Let , with the index satisfying (1.5), , and denote . Then for all ,

and

Proof For all , we have

Thus,

Take in (2.5). Then, by (2.6), inequality (2.7) follows. Similarly, we obtain (2.8). □

It is known that the Schur complements of diagonally dominant matrices are diagonally dominant (see [12, 13]). However, this property is not always true for γ-diagonally dominant matrices and for product γ-diagonally dominant matrices, as shown in [1].
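The inheritance of row diagonal dominance can be observed numerically. The following sketch builds a random strictly row diagonally dominant complex matrix and checks that its Schur complement is again strictly row diagonally dominant; the matrix size, the seed, the index set `alpha` (0-based) and the helper `row_degree` are hypothetical choices, and a single random trial is an illustration, not a proof.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 6
A = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))

# Overwrite the diagonal so that |a_ii| exceeds the off-diagonal row sum by 1.
off_sums = np.abs(A).sum(axis=1) - np.abs(np.diag(A))
A[np.diag_indices(n)] = off_sums + 1.0

alpha = [0, 2]                                   # rows and columns to eliminate
comp = [i for i in range(n) if i not in alpha]   # remaining indices
S = A[np.ix_(comp, comp)] - A[np.ix_(comp, alpha)] @ np.linalg.solve(
    A[np.ix_(alpha, alpha)], A[np.ix_(alpha, comp)])

def row_degree(M):
    """Row dominant degree |m_ii| minus the off-diagonal absolute row sum."""
    absM = np.abs(M)
    d = np.diag(absM)
    return d - (absM.sum(axis=1) - d)

deg_A = row_degree(A)   # equal to 1 in every row, up to rounding
deg_S = row_degree(S)   # expected to be positive in every row
```

Comparing `deg_S` with the entries of `deg_A` on the retained rows gives a feel for how much dominance the Schur complement gains, which is what the estimates in this section quantify.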

In the sequel, we obtain some disc separations for the γ-diagonally and product γ-diagonally dominant degree of the Schur complement, from which we show that the Schur complement of γ-diagonally and product γ-diagonally dominant matrices is also γ-diagonally dominant and product γ-diagonally dominant, respectively, under some restrictive conditions.

Theorem 3 Let , , , and denote . Then for all ,

and

where

Proof For all , we have

As in the proof of Theorem 1, we easily obtain

Similarly,

Set

From (2.11), (2.12), (2.13), using Lemma 4, we have

Thus we get (2.9). Similarly, we have (2.10). □

Remark 2 Observe that

This means that Theorem 3 improves Theorem 2 of [1].

In a similar way to the proof of Theorem 3, we get the following theorem immediately.

Theorem 4 Let , , , and denote . Then for all ,

and

Corollary 1 Let and . Then for any with ,

Proof By (2.14), we have

□

Corollary 2 Let and . Then for any with ,

3 Distribution for eigenvalues

In this section, as an application of our results in Section 2, we present some locations for the eigenvalues of the Schur complements.

Theorem 5 Let , , . Then for each eigenvalue λ of , there exists such that

Proof Set . Using the Gerschgorin circle theorem, we know there exists such that

Thus

Hence, it is not difficult to get by (2.6) that

i.e.,

□
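The starting point of this proof, the Gerschgorin circle theorem applied to the Schur complement itself, can be checked numerically. The sketch below verifies only that step: every eigenvalue of the Schur complement lies in at least one disc centred at a diagonal entry with radius equal to the off-diagonal absolute row sum. It does not implement the bound of Theorem 5, which is stated through the entries of the original matrix. The test matrix, the 0-based index set and the tolerance are hypothetical.

```python
import numpy as np

# Hypothetical test matrix (not taken from the paper).
A = np.array([[4.0, 1.0, 1.0],
              [2.0, 5.0, 1.0],
              [0.0, 1.0, 3.0]], dtype=complex)
alpha = [0]
comp = [1, 2]

# Schur complement of A with respect to A(alpha).
S = A[np.ix_(comp, comp)] - A[np.ix_(comp, alpha)] @ np.linalg.solve(
    A[np.ix_(alpha, alpha)], A[np.ix_(alpha, comp)])

centers = np.diag(S)                               # disc centres
radii = np.abs(S).sum(axis=1) - np.abs(centers)    # disc radii
eigs = np.linalg.eigvals(S)

# For each eigenvalue, test membership in at least one Gerschgorin disc.
in_some_disc = [bool(np.any(np.abs(lam - centers) <= radii + 1e-12))
                for lam in eigs]
```

Forming `S` explicitly is exactly the cost that the theorem avoids, since its discs are described by the entries of the original matrix alone.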

Remark 3 By Remark 2, it is obvious that Theorem 5 improves Theorem 3 of [1].

Corollary 3 Let , , . Then for each eigenvalue λ of , there exists such that

In a similar way to the proof of Theorem 5, we obtain the following theorem according to Theorem 2.

Theorem 6 Let , with the index satisfying (1.5), . Then for each eigenvalue λ of , there exists such that

Next, we obtain some distributions for the eigenvalues of the Schur complements of matrices under the conditions such as degree.

Lemma 5 [1]

Let and . Then for every eigenvalue λ of A, there exists such that

Theorem 7 Let , , , . Then for each eigenvalue λ of , there exists such that

Proof Set . From Lemma 5, we know that for each eigenvalue λ of , there exists such that

Hence,

From the proof of Theorem 3, we know

Therefore, from (3.6) we obtain

Thus (3.4) holds. □

Remark 4 By Remark 2, it is obvious that Theorem 7 improves Theorem 4 of [1].

Corollary 4 Let , , , . Then for each eigenvalue λ of , there exists such that

4 A numerical example

In this section, we illustrate the advantages of our results by estimating bounds for the eigenvalues of the Schur complement from the entries of the original matrix.

Example Let

If we estimated the bounds for the eigenvalues of by the elements of , a great deal of computation would be required. However, as

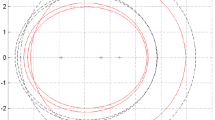

Since , according to Theorem 5, the eigenvalue z of satisfies

According to Theorem 3 in [1], the eigenvalue z of satisfies

Further, we use Figure 1 to illustrate (4.1) and (4.2).

It is clear that from both (4.1), (4.2) and Figure 1.

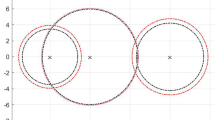

In addition, since , by taking in Theorem 7, the eigenvalue z of satisfies

According to Theorem 4 in [1], the eigenvalue z of satisfies

Further, we use Figure 2 to illustrate (4.3) and (4.4).

It is clear that from both (4.3), (4.4) and Figure 2.

References

1. Liu JZ, Huang ZJ: The Schur complements of γ-diagonally and product γ-diagonally dominant matrix and their disc separation. Linear Algebra Appl. 2010, 432: 1090–1104. 10.1016/j.laa.2009.10.021

2. Liu JZ, Huang ZJ, Zhang J: The dominant degree and disc theorem for the Schur complement. Appl. Math. Comput. 2010, 215: 4055–4066. 10.1016/j.amc.2009.12.063

3. Xiang SH, Zhang SL: A convergence analysis of block accelerated over-relaxation iterative methods for weak block H-matrices to partition π. Linear Algebra Appl. 2006, 418: 20–32. 10.1016/j.laa.2006.01.013

4. Horn RA, Johnson CR: Topics in Matrix Analysis. Cambridge University Press, New York; 1991.

5. Smith R: Some interlacing properties of the Schur complement of a Hermitian matrix. Linear Algebra Appl. 1992, 177: 137–144.

6. Carlson D, Markham T: Schur complements on diagonally dominant matrices. Czechoslov. Math. J. 1979, 29(104): 246–251.

7. Ikramov KD: Invariance of the Brauer diagonal dominance in Gaussian elimination. Moscow. Univ. Comput. Math. Cybern. 1989, 2: 91–94.

8. Li B, Tsatsomeros M: Doubly diagonally dominant matrices. Linear Algebra Appl. 1997, 261: 221–235. 10.1016/S0024-3795(96)00406-5

9. Golub GH, Van Loan CF: Matrix Computations. 3rd edition. Johns Hopkins University Press, Baltimore; 1996.

10. Kress R: Numerical Analysis. Springer, New York; 1998.

11. Liu JZ, Li JC, Huang ZH, Kong X: Some properties on Schur complement and diagonal-Schur complement of some diagonally dominant matrices. Linear Algebra Appl. 2008, 428: 1009–1030. 10.1016/j.laa.2007.09.008

12. Liu JZ, Huang YQ: The Schur complements of generalized doubly diagonally dominant matrices. Linear Algebra Appl. 2004, 378: 231–244.

13. Liu JZ, Huang YQ: Some properties on Schur complements of H-matrices and diagonally dominant matrices. Linear Algebra Appl. 2004, 389: 365–380.

14. Liu JZ, Zhang FZ: Disc separation of the Schur complements of diagonally dominant matrices and determinantal bounds. SIAM J. Matrix Anal. Appl. 2005, 27: 665–674. 10.1137/040620369

15. Liu JZ: Some inequalities for singular values and eigenvalues of generalized Schur complements of products of matrices. Linear Algebra Appl. 1999, 286: 209–221. 10.1016/S0024-3795(98)10156-8

16. Liu JZ, Zhu L: A minimum principle and estimates of the eigenvalues for Schur complements of positive semidefinite Hermitian matrices. Linear Algebra Appl. 1997, 265: 123–145. 10.1016/S0024-3795(96)00595-2

17. Liu JZ, Zhang J, Liu Y: The Schur complement of strictly doubly diagonally dominant matrices and its application. Linear Algebra Appl. 2012, 437: 168–183. 10.1016/j.laa.2012.02.001

Acknowledgements

The work was supported in part by the National Natural Science Foundation of China (10971176), the Program for Changjiang Scholars and Innovative Research Team in University of China (No. IRT1179), the Key Project of Hunan Provincial Natural Science Foundation of China (10JJ2002), the Key Project of Hunan Provincial Education Department of China (12A137), the Hunan Provincial Innovation Foundation for Postgraduate (CX2011B242) and the Aid Program for Science and Technology Innovative Research Team in Higher Educational Institutions of Hunan Province of China.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

JZ and JZL carried out the preparation, participated in the sequence alignment and drafted the manuscript. GT participated in its design and coordination. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Zhang, J., Liu, J. & Tu, G. The improved disc theorems for the Schur complements of diagonally dominant matrices. J Inequal Appl 2013, 2 (2013). https://doi.org/10.1186/1029-242X-2013-2

DOI: https://doi.org/10.1186/1029-242X-2013-2