Abstract

The split feasibility problem (SFP) is to find a point in a given closed convex subset of a Hilbert space such that its image under a bounded linear operator belongs to a given closed convex subset of another Hilbert space. The most popular iterative method is Byrne’s CQ algorithm. López et al. proposed a relaxed CQ algorithm for solving the SFP where the two closed convex sets are both level sets of convex functions. This algorithm can be implemented easily since it computes projections onto half-spaces and does not need to know a priori the norm of the bounded linear operator. However, their algorithm has only weak convergence in the setting of infinite-dimensional Hilbert spaces. In this paper, we introduce a new relaxed CQ algorithm for which strong convergence is guaranteed. Our result extends and improves the corresponding results of López et al. and some others.

MSC: 90C25, 90C30, 47J25.


1 Introduction

The split feasibility problem (SFP) was proposed by Censor and Elfving in [1]. It can be formulated mathematically as the problem of finding a point $x$ satisfying the property:

$$x \in C, \qquad Ax \in Q, \tag{1.1}$$

where $A$ is a given $M \times N$ real matrix (and $A^{*}$ is the transpose of $A$), and $C$ and $Q$ are nonempty, closed and convex subsets of $\mathbb{R}^N$ and $\mathbb{R}^M$, respectively. This problem has received much attention [2] due to its applications in signal processing and image reconstruction, with particular progress in intensity-modulated radiation therapy [3–5], and many other applied fields.

We assume that the SFP (1.1) is consistent, and let $S$ be the solution set, i.e.,

$$S = \{x \in C : Ax \in Q\}.$$

It is easily seen that $S$ is closed and convex. Many iterative methods can be used to solve the SFP (1.1); see [6–16]. Byrne [6, 17] was among the first to propose the so-called CQ algorithm, which is defined as follows:

$$x_{n+1} = P_C\bigl(x_n - \gamma A^{*}(I - P_Q)Ax_n\bigr), \tag{1.2}$$

where $0 < \gamma < 2/\|A\|^2$, and $P_C$ and $P_Q$ are the orthogonal projections onto the sets $C$ and $Q$, respectively. Compared with the algorithm of Censor and Elfving [1], Byrne’s algorithm is easy to execute since it only deals with orthogonal projections and does not need to compute matrix inverses.
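As an illustration, the CQ iteration can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the authors' implementation: the sets (a box for $C$, a ball for $Q$), the matrix, the fixed stepsize $\gamma = 1/\|A\|^2$ and all function names are hypothetical choices made only so that both projections have closed forms.

```python
import numpy as np

def project_box(x, lo=0.0, hi=1.0):
    """Orthogonal projection onto the box C = [lo, hi]^N (assumed example set)."""
    return np.clip(x, lo, hi)

def project_ball(y, center, radius):
    """Orthogonal projection onto the closed ball Q = B(center, radius)."""
    d = y - center
    dist = np.linalg.norm(d)
    return y if dist <= radius else center + (radius / dist) * d

def cq_algorithm(A, x0, proj_C, proj_Q, n_iter=1000):
    """CQ iteration x_{n+1} = P_C(x_n - gamma * A^T (I - P_Q) A x_n)."""
    # Any gamma in (0, 2/||A||^2) is admissible; 1/||A||^2 is one such choice.
    gamma = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.asarray(x0, dtype=float)
    for _ in range(n_iter):
        Ax = A @ x
        residual = Ax - proj_Q(Ax)              # (I - P_Q) A x_n
        x = proj_C(x - gamma * (A.T @ residual))
    return x

# Hypothetical consistent problem: C = [0, 1]^2, Q = ball of radius 0.5 around (1, 1).
A = np.array([[2.0, 1.0], [1.0, 3.0]])
center, radius = np.array([1.0, 1.0]), 0.5
x_cq = cq_algorithm(A, [1.0, 1.0], project_box,
                    lambda y: project_ball(y, center, radius))
print(x_cq, np.linalg.norm(A @ x_cq - center))
```

Note that the stepsize requires the spectral norm of $A$, which is cheap for a small matrix but is the quantity one would like to avoid computing in large-scale problems.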

The CQ algorithm (1.2) can be obtained from optimization. In fact, if we introduce the (convex) objective function

$$f(x) = \frac{1}{2}\|(I - P_Q)Ax\|^2 \tag{1.3}$$

and analyze the minimization problem

$$\min_{x \in C} f(x), \tag{1.4}$$

then the CQ algorithm (1.2) comes immediately as a special case of the gradient projection algorithm (GPA); for more details about the GPA, the reader is referred to [18]. Since the convex objective $f$ is differentiable and has a Lipschitz gradient, which is given by

$$\nabla f(x) = A^{*}(I - P_Q)Ax, \tag{1.5}$$

the GPA for solving the minimization problem (1.4) generates a sequence $\{x_n\}$ recursively as

$$x_{n+1} = P_C\bigl(x_n - \tau_n \nabla f(x_n)\bigr), \tag{1.6}$$

where the stepsize $\tau_n$ is chosen in the interval $(0, 2/L)$, and $L$ is the Lipschitz constant of $\nabla f$.
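The gradient formula and the Lipschitz constant $\|A\|^2$ behind this stepsize rule can be checked numerically. The sketch below is only a sanity check under assumptions: $Q$ is taken to be the unit ball in $\mathbb{R}^3$, $A$ is a random $3 \times 4$ matrix, and the helper names are hypothetical. It compares $A^{T}(I - P_Q)Ax$ with a central finite-difference gradient of $f(x) = \frac{1}{2}\|(I - P_Q)Ax\|^2$ and tests the Lipschitz bound on random pairs.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 4))
center, radius = np.zeros(3), 1.0        # assumed Q: unit ball in R^3

def project_ball(y):
    d = y - center
    dist = np.linalg.norm(d)
    return y if dist <= radius else center + (radius / dist) * d

def f(x):
    r = A @ x - project_ball(A @ x)       # (I - P_Q) A x
    return 0.5 * (r @ r)

def grad_f(x):
    Ax = A @ x
    return A.T @ (Ax - project_ball(Ax))  # A^T (I - P_Q) A x

def numerical_grad(func, x, h=1e-6):
    """Central finite differences, one coordinate at a time."""
    g = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        g[i] = (func(x + e) - func(x - e)) / (2.0 * h)
    return g

x = 3.0 * rng.standard_normal(4)
grad_error = np.linalg.norm(grad_f(x) - numerical_grad(f, x))

# Lipschitz check: ||grad f(u) - grad f(v)|| <= ||A||^2 ||u - v|| on random pairs.
L = np.linalg.norm(A, 2) ** 2
pairs = [(3.0 * rng.standard_normal(4), 3.0 * rng.standard_normal(4))
         for _ in range(100)]
lipschitz_ok = all(
    np.linalg.norm(grad_f(u) - grad_f(v)) <= L * np.linalg.norm(u - v) + 1e-12
    for u, v in pairs
)
print(grad_error, lipschitz_ok)
```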

Observe that in order to implement the CQ algorithm in the forms (1.2) and (1.6) above, one has to compute the operator (matrix) norm $\|A\|$, which is in general not an easy task in practice. To overcome this difficulty, some authors proposed adaptive ways of selecting the stepsize (see [6, 14, 19]). For instance, very recently López et al. introduced a new way of selecting the stepsize [19] as follows:

$$\tau_n = \frac{\rho_n f(x_n)}{\|\nabla f(x_n)\|^2}, \qquad 0 < \rho_n < 4.$$
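A self-adaptive stepsize of this kind can be sketched as follows. The sketch assumes the rule $\tau_n = \rho_n f(x_n)/\|\nabla f(x_n)\|^2$ with a constant $\rho_n = \rho \in (0, 4)$, a box for $C$ and a ball for $Q$; the test problem and function names are hypothetical. The point of the example is that $\|A\|$ is never computed.

```python
import numpy as np

def project_box(x, lo=0.0, hi=1.0):
    return np.clip(x, lo, hi)

def project_ball(y, center, radius):
    d = y - center
    dist = np.linalg.norm(d)
    return y if dist <= radius else center + (radius / dist) * d

def cq_adaptive(A, x0, proj_C, proj_Q, rho=1.0, n_iter=500):
    """CQ-type iteration with tau_n = rho * f(x_n) / ||grad f(x_n)||^2."""
    x = np.asarray(x0, dtype=float)       # x0 is assumed to lie in C
    for _ in range(n_iter):
        Ax = A @ x
        r = Ax - proj_Q(Ax)               # (I - P_Q) A x_n
        g = A.T @ r                       # grad f(x_n)
        g_norm2 = g @ g
        if g_norm2 == 0.0:
            break                         # gradient vanishes: stop
        tau = rho * (0.5 * (r @ r)) / g_norm2
        x = proj_C(x - tau * g)
    return x

# Hypothetical consistent problem: C = [0, 1]^2, Q = ball of radius 0.5 around (1, 1).
A = np.array([[2.0, 1.0], [1.0, 3.0]])
center, radius = np.array([1.0, 1.0]), 0.5
x_ad = cq_adaptive(A, [1.0, 1.0], project_box,
                   lambda y: project_ball(y, center, radius))
print(x_ad, np.linalg.norm(A @ x_ad - center))
```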

The computation of a projection onto a general closed convex subset is generally difficult. To overcome this difficulty, Fukushima [20] suggested a so-called relaxed projection method to calculate the projection onto a level set of a convex function by computing a sequence of projections onto half-spaces containing the original level set. In the setting of finite-dimensional Hilbert spaces, this idea was followed by Yang [13], who introduced the relaxed CQ algorithms for solving SFP (1.1) where the closed convex subsets C and Q are level sets of convex functions.

Recently, for the purpose of generality, the SFP (1.1) has been studied in a more general setting. For instance, Xu [12] and López et al. [19] considered the SFP (1.1) in infinite-dimensional Hilbert spaces (i.e., the finite-dimensional Euclidean spaces $\mathbb{R}^N$ and $\mathbb{R}^M$ are replaced with general Hilbert spaces). Very recently, López et al. proposed a relaxed CQ algorithm with a new adaptive way of determining the stepsize sequence for solving the SFP (1.1) where the closed convex subsets C and Q are level sets of convex functions. This algorithm can be implemented easily since it computes projections onto half-spaces and does not need to know a priori the norm of the bounded linear operator. However, their algorithm has only weak convergence in the setting of infinite-dimensional Hilbert spaces. In this paper, we introduce a new relaxed CQ algorithm for which strong convergence is guaranteed in infinite-dimensional Hilbert spaces. Our result extends and improves the corresponding results of López et al. and some others.

The rest of this paper is organized as follows. Some useful lemmas are listed in Section 2. In Section 3, the strong convergence of the new relaxed CQ algorithm of this paper is proved.

2 Preliminaries

Throughout the rest of this paper, we denote by $H$ or $K$ a Hilbert space, by $A$ a bounded linear operator from $H$ to $K$, and by $I$ the identity operator on $H$ or $K$. If $f: H \to \mathbb{R}$ is a differentiable function, then we denote by $\nabla f$ the gradient of the function $f$. We will also use the notations:

- → denotes strong convergence.

- ⇀ denotes weak convergence.

- $\omega_w(x_n) = \{x : \exists\, x_{n_j} \rightharpoonup x\}$ denotes the weak ω-limit set of $\{x_n\}$.

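Recall that a mapping $T: H \to H$ is said to be nonexpansive if

$$\|Tx - Ty\| \le \|x - y\|, \quad x, y \in H.$$

$T: H \to H$ is said to be firmly nonexpansive if, for $x, y \in H$,

$$\|Tx - Ty\|^2 \le \|x - y\|^2 - \|(I - T)x - (I - T)y\|^2.$$

Recall that a function $f: H \to \mathbb{R}$ is called convex if

$$f(\lambda x + (1 - \lambda) y) \le \lambda f(x) + (1 - \lambda) f(y), \quad \lambda \in (0, 1),\ x, y \in H.$$

A differentiable function $f$ is convex if and only if there holds the inequality:

$$f(z) \ge f(x) + \langle \nabla f(x), z - x\rangle, \quad x, z \in H.$$

Recall that an element $g \in H$ is said to be a subgradient of $f: H \to \mathbb{R}$ at $x$ if

$$f(z) \ge f(x) + \langle g, z - x\rangle, \quad z \in H.$$

This relation is called the subdifferential inequality.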

A function $f: H \to \mathbb{R}$ is said to be subdifferentiable at $x$ if it has at least one subgradient at $x$. The set of subgradients of $f$ at the point $x$ is called the subdifferential of $f$ at $x$, and it is denoted by $\partial f(x)$. A function $f$ is called subdifferentiable if it is subdifferentiable at all $x \in H$. If a function $f$ is differentiable and convex, then its gradient and subgradient coincide.

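A function $f: H \to \mathbb{R}$ is said to be weakly lower semi-continuous (w-lsc) at $x$ if $x_n \rightharpoonup x$ implies

$$f(x) \le \liminf_{n \to \infty} f(x_n).$$

We know that the orthogonal projection $P_C$ from $H$ onto a nonempty closed convex subset $C \subset H$ is a typical example of a firmly nonexpansive mapping, which is defined by

$$P_C x := \arg\min_{y \in C} \|x - y\|^2, \quad x \in H.$$

It is well known that $P_C x$ is characterized by the inequality (for $x \in H$)

$$\langle x - P_C x, z - P_C x\rangle \le 0, \quad z \in C. \tag{2.2}$$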
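Both properties of the projection are easy to probe numerically. The following sketch assumes $C$ is the closed unit ball of $\mathbb{R}^5$ (a hypothetical choice with a closed-form projection) and measures the largest violation of the variational characterization and of the firm nonexpansiveness inequality $\|P_C x - P_C y\|^2 \le \langle x - y, P_C x - P_C y\rangle$ over random samples; up to rounding, both should be nonpositive.

```python
import numpy as np

rng = np.random.default_rng(1)

def project_ball(x, radius=1.0):
    """Projection onto the closed ball of the given radius centred at 0."""
    n = np.linalg.norm(x)
    return x if n <= radius else (radius / n) * x

def max_characterization_violation(n_trials=1000, dim=5):
    """Largest observed value of <x - P_C x, z - P_C x> over z in C."""
    worst = -np.inf
    for _ in range(n_trials):
        x = 3.0 * rng.standard_normal(dim)
        z = project_ball(3.0 * rng.standard_normal(dim))   # some point of C
        px = project_ball(x)
        worst = max(worst, (x - px) @ (z - px))
    return worst

def max_firm_nonexpansive_violation(n_trials=1000, dim=5):
    """Largest observed value of ||Px - Py||^2 - <x - y, Px - Py>."""
    worst = -np.inf
    for _ in range(n_trials):
        x = 3.0 * rng.standard_normal(dim)
        y = 3.0 * rng.standard_normal(dim)
        px, py = project_ball(x), project_ball(y)
        worst = max(worst, (px - py) @ (px - py) - (x - y) @ (px - py))
    return worst

v_char = max_characterization_violation()
v_firm = max_firm_nonexpansive_violation()
print(v_char, v_firm)
```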

The following lemma is not hard to prove (see [17, 21]).

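Lemma 2.1 Let $f$ be given as in (1.3). Then

- (i) $f$ is convex and differentiable.

- (ii) $\nabla f(x) = A^{*}(I - P_Q)Ax$, $x \in H$.

- (iii) $f$ is w-lsc on $H$.

- (iv) $\nabla f$ is $\|A\|^2$-Lipschitz: $\|\nabla f(x) - \nabla f(y)\| \le \|A\|^2 \|x - y\|$, $x, y \in H$.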

The following are characterizations of firmly nonexpansive mappings (see [22]).

Lemma 2.2 Let $T: H \to H$ be an operator. The following statements are equivalent.

- (i) $T$ is firmly nonexpansive.

- (ii) $\|Tx - Ty\|^2 \le \langle x - y, Tx - Ty\rangle$, $x, y \in H$.

- (iii) $I - T$ is firmly nonexpansive.

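Lemma 2.3 [23]

Assume $\{a_n\}$ is a sequence of nonnegative real numbers such that

$$a_{n+1} \le (1 - \gamma_n) a_n + \gamma_n \delta_n, \quad n \ge 0,$$

where $\{\gamma_n\}$ is a sequence in $(0, 1)$, and $\{\delta_n\}$ is a sequence in ℝ such that

- (i) $\sum_{n=0}^{\infty} \gamma_n = \infty$.

- (ii) $\limsup_{n \to \infty} \delta_n \le 0$, or $\sum_{n=0}^{\infty} |\gamma_n \delta_n| < \infty$.

Then $\lim_{n \to \infty} a_n = 0$.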
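A small numerical illustration of this lemma: the sketch below runs a recursion of the form $a_{n+1} = (1 - \gamma_n) a_n + \gamma_n \delta_n$ (the equality case) with the hypothetical choices $\gamma_n = 1/(n+2)$, so that $\sum \gamma_n = \infty$, and $\delta_n = 1/(n+1) \to 0$. These sequences are assumptions made for the example only.

```python
def iterate_recursion(a0, gamma, delta, n_steps):
    """Run a_{n+1} = (1 - gamma(n)) * a_n + gamma(n) * delta(n) for n_steps steps."""
    a = a0
    for n in range(n_steps):
        a = (1.0 - gamma(n)) * a + gamma(n) * delta(n)
    return a

# gamma_n in (0, 1) with divergent sum; delta_n tends to 0.
a_final = iterate_recursion(
    a0=1.0,
    gamma=lambda n: 1.0 / (n + 2),
    delta=lambda n: 1.0 / (n + 1),
    n_steps=10_000,
)
print(a_final)
```

For these particular sequences, $(n+1) a_n$ grows only like a harmonic sum, so $a_n$ decays roughly like $(\log n)/n$: convergence to zero is guaranteed but can be slow.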

3 Iterative Algorithm

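In this section, we consider a new relaxed CQ algorithm in the setting of infinite-dimensional Hilbert spaces for solving the SFP (1.1), where the closed convex subsets $C$ and $Q$ have a particular structure, namely they are level sets of convex functions given as follows:

$$C = \{x \in H : c(x) \le 0\} \quad\text{and}\quad Q = \{y \in K : q(y) \le 0\}, \tag{3.1}$$

where $c: H \to \mathbb{R}$ and $q: K \to \mathbb{R}$ are convex functions. We assume that both $c$ and $q$ are subdifferentiable on $H$ and $K$, respectively, and that $\partial c$ and $\partial q$ are bounded operators (i.e., bounded on bounded sets). We mention in passing that every convex function defined on a finite-dimensional Hilbert space is subdifferentiable and its subdifferential operator is a bounded operator (see [24]).

Set

$$C_n = \{x \in H : c(x_n) \le \langle \xi_n, x_n - x\rangle\}, \tag{3.2}$$

where $\xi_n \in \partial c(x_n)$, and

$$Q_n = \{y \in K : q(Ax_n) \le \langle \eta_n, Ax_n - y\rangle\}, \tag{3.3}$$

where $\eta_n \in \partial q(Ax_n)$.

Obviously, $C_n$ and $Q_n$ are half-spaces, and it is easy to verify from the subdifferential inequality that $C \subset C_n$ and $Q \subset Q_n$ hold for every $n \ge 0$. In what follows, we define

$$f_n(x) = \frac{1}{2}\|(I - P_{Q_n})Ax\|^2,$$

where $Q_n$ is given as in (3.3). We then have

$$\nabla f_n(x) = A^{*}(I - P_{Q_n})Ax.$$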

Firstly, we recall the relaxed CQ algorithm of López et al. [19] for solving the SFP (1.1) where C and Q are given in (3.1) as follows.

Algorithm 3.1 Choose an arbitrary initial guess . Assume has been constructed. If , then stop; otherwise, continue and construct via the formula

where is given as (3.2), and

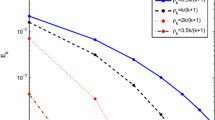

López et al. proved that under certain conditions the sequence $\{x_n\}$ generated by Algorithm 3.1 converges weakly to a solution of the SFP (1.1). Since the projections onto the half-spaces $C_n$ and $Q_n$ have closed forms and $\tau_n$ is obtained adaptively via the formula (3.4) (with no need to know a priori the norm of the operator $A$), the relaxed CQ Algorithm 3.1 is implementable. However, it is only known to converge weakly. To overcome this weakness, and inspired by Algorithm 3.1, we introduce a new relaxed CQ algorithm for solving the SFP (1.1), where $C$ and $Q$ are given in (3.1), so that strong convergence is guaranteed.
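To make the closed-form half-space projections concrete, here is a finite-dimensional sketch of a relaxed CQ iteration of this type. It rests on several assumptions that are not taken from the source: $c$ and $q$ are smooth (so gradients serve as subgradients), $\rho_n$ is constant, the test problem is hypothetical, and, instead of stopping when the gradient vanishes, the sketch simply skips the gradient step and still projects onto the current half-space.

```python
import numpy as np

def project_halfspace(v, a, beta):
    """Projection of v onto {x : <a, x> <= beta}.

    Assumes a != 0 whenever v violates the constraint, which holds when
    the underlying level set is nonempty.
    """
    excess = a @ v - beta
    if excess <= 0.0:
        return v
    return v - (excess / (a @ a)) * a

def relaxed_cq(A, x0, c, grad_c, q, grad_q, rho=1.0, n_iter=2000):
    x = np.asarray(x0, dtype=float)
    for _ in range(n_iter):
        Ax = A @ x
        eta = grad_q(Ax)
        # Q_n = {y : q(Ax_n) + <eta_n, y - Ax_n> <= 0}
        r = Ax - project_halfspace(Ax, eta, eta @ Ax - q(Ax))
        g = A.T @ r                       # gradient of f_n at x_n
        y = x
        if g @ g > 0.0:
            tau = rho * (0.5 * (r @ r)) / (g @ g)
            y = x - tau * g
        xi = grad_c(x)
        # C_n = {x' : c(x_n) + <xi_n, x' - x_n> <= 0}
        x = project_halfspace(y, xi, xi @ x - c(x))
    return x

# Hypothetical problem: C = unit disk, Q = unit disk around b, both as level sets.
A = np.array([[1.0, 0.5], [0.0, 1.0]])
b = np.array([0.5, 0.0])
c = lambda x: x @ x - 1.0
grad_c = lambda x: 2.0 * x
q = lambda y: (y - b) @ (y - b) - 1.0
grad_q = lambda y: 2.0 * (y - b)

x_rel = relaxed_cq(A, [3.0, -2.0], c, grad_c, q, grad_q)
print(x_rel, c(x_rel), q(A @ x_rel))
```

Because only half-spaces are projected onto, the iterates typically approach $C$ and $Q$ from outside, so feasibility holds up to a tolerance rather than exactly.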

It is well known that Halpern’s algorithm converges strongly to a fixed point of a nonexpansive mapping [25, 26]. We are now in a position to give our algorithm. The algorithm given below is referred to as a Halpern-type algorithm [27].

Algorithm 3.2 Let $u \in H$, and start with an arbitrary initial guess $x_0 \in H$. Assume that the $n$th iterate $x_n$ has been constructed. If $\nabla f_n(x_n) = 0$, then stop ($x_n$ is an approximate solution of SFP (1.1)). Otherwise, continue and calculate the $(n+1)$th iterate $x_{n+1}$ via the formula:

$$x_{n+1} = P_{C_n}\bigl(\alpha_n u + (1 - \alpha_n)(x_n - \tau_n \nabla f_n(x_n))\bigr), \tag{3.5}$$

where the sequence $\{\alpha_n\} \subset (0, 1)$, and $\{\tau_n\}$ and $\{\rho_n\}$ are given as in (3.4).
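A Halpern-type iteration of this form can be sketched in finite dimensions as follows. The sketch is illustrative only and makes assumptions beyond the text: $\alpha_n = 1/(n+2)$ (which tends to zero and has a divergent sum), constant $\rho_n = 1$, smooth $c$ and $q$ so that gradients act as subgradients, and a vanishing gradient is handled by skipping the gradient step rather than stopping. The test problem is hypothetical and chosen so that the projection of $u$ onto the solution set is known: with $C$ the unit disk, $Q = \{y : y_1 \le 0.5\}$, $A = I$ and $u = (3, 0)$, that projection is $(0.5, 0)$.

```python
import numpy as np

def project_halfspace(v, a, beta):
    """Projection of v onto {x : <a, x> <= beta} (a != 0 if v is infeasible)."""
    excess = a @ v - beta
    if excess <= 0.0:
        return v
    return v - (excess / (a @ a)) * a

def halpern_relaxed_cq(A, u, x0, c, grad_c, q, grad_q, rho=1.0, n_iter=5000):
    u = np.asarray(u, dtype=float)
    x = np.asarray(x0, dtype=float)
    for n in range(n_iter):
        alpha = 1.0 / (n + 2)             # assumed anchor weights
        Ax = A @ x
        eta = grad_q(Ax)
        r = Ax - project_halfspace(Ax, eta, eta @ Ax - q(Ax))
        g = A.T @ r                       # gradient of f_n at x_n
        y = x
        if g @ g > 0.0:
            tau = rho * (0.5 * (r @ r)) / (g @ g)
            y = x - tau * g
        xi = grad_c(x)
        # Anchor toward u, then project onto the half-space C_n.
        x = project_halfspace(alpha * u + (1.0 - alpha) * y, xi, xi @ x - c(x))
    return x

A = np.eye(2)
c = lambda x: x @ x - 1.0
grad_c = lambda x: 2.0 * x
q = lambda y: y[0] - 0.5
grad_q = lambda y: np.array([1.0, 0.0])

x_hal = halpern_relaxed_cq(A, [3.0, 0.0], [-1.0, 1.0], c, grad_c, q, grad_q)
print(x_hal)
```

The anchoring term $\alpha_n u$ is what selects a particular solution, at the price of a slow rate: in this example the distance to $(0.5, 0)$ decays roughly in proportion to $\alpha_n$.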

The convergence result of Algorithm 3.2 is stated in the next theorem.

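Theorem 3.3 Assume that $\{\alpha_n\}$ and $\{\rho_n\}$ satisfy the assumptions:

(a1) $\lim_{n \to \infty} \alpha_n = 0$ and $\sum_{n=0}^{\infty} \alpha_n = \infty$.

(a2) $\inf_n \rho_n (4 - \rho_n) > 0$.

Then the sequence $\{x_n\}$ generated by Algorithm 3.2 converges in norm to $P_S u$.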

Proof We may assume that the sequence $\{x_n\}$ is infinite, that is, Algorithm 3.2 does not terminate in a finite number of iterations. Thus $\nabla f_n(x_n) \ne 0$ for all $n \ge 0$. Recall that $S$ is the solution set of the SFP (1.1),

$$S = \{x \in C : Ax \in Q\}.$$

In the consistent case of the SFP (1.1), $S$ is nonempty, closed and convex. Thus, the metric projection $P_S$ is well-defined. We set $z = P_S u$. Since $z \in C \subset C_n$ and the projection operator $P_{C_n}$ is nonexpansive, we obtain

Note that $I - P_{Q_n}$ is firmly nonexpansive and $\nabla f_n(z) = 0$; it is therefore deduced from Lemma 2.2 that

which implies that

Thus, we have

Now we prove is bounded. Indeed, we have from (3.6) that

and consequently

It turns out that

and inductively

and this means that is bounded. Since , with no loss of generality, we may assume that there is so that for all n. Setting , from the last inequality of (3.6), we get the following inequality:

Now, following an idea in [28], we prove by distinguishing two cases.
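For orientation, the descent estimate for the gradient step that underlies both cases can be sketched as follows. This is a reconstruction under stated assumptions rather than a quotation of the numbered displays: it assumes $z \in S$, the function $f_n(x) = \frac{1}{2}\|(I - P_{Q_n})Ax\|^2$, the inclusion $Q \subset Q_n$, and the stepsize $\tau_n = \rho_n f_n(x_n)/\|\nabla f_n(x_n)\|^2$ with $0 < \rho_n < 4$.

```latex
\begin{aligned}
\langle \nabla f_n(x_n),\, x_n - z\rangle
  &= \langle (I - P_{Q_n})Ax_n - (I - P_{Q_n})Az,\; Ax_n - Az\rangle
     && (\text{since } Az \in Q \subset Q_n)\\
  &\ge \|(I - P_{Q_n})Ax_n\|^2 = 2 f_n(x_n)
     && (\text{Lemma 2.2 (ii) applied to } I - P_{Q_n}),\\[4pt]
\|x_n - \tau_n \nabla f_n(x_n) - z\|^2
  &= \|x_n - z\|^2 - 2\tau_n \langle \nabla f_n(x_n),\, x_n - z\rangle
     + \tau_n^2 \|\nabla f_n(x_n)\|^2\\
  &\le \|x_n - z\|^2 - 4\tau_n f_n(x_n) + \tau_n^2 \|\nabla f_n(x_n)\|^2\\
  &= \|x_n - z\|^2 - \rho_n (4 - \rho_n)\,
     \frac{f_n^2(x_n)}{\|\nabla f_n(x_n)\|^2}.
\end{aligned}
```

Under this reading, a positive lower bound on $\rho_n(4 - \rho_n)$ keeps the subtracted term from degenerating, which is what allows $f_n(x_n) \to 0$ to be extracted once $\|\nabla f_n(x_n)\|$ is known to be bounded.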

Case 1: is eventually decreasing (i.e. there exists such that holds for all ). In this case, must be convergent, and from (3.7) it follows that

where is a constant such that for all . Using the condition (a1), we have from (3.8) that . Thus, to verify that , it suffices to show that is bounded. In fact, it follows from Lemma 2.1 that (noting that due to )

This implies that is bounded and it yields , namely .

Since ∂q is bounded on bounded sets, there exists a constant such that for all . From (3.3) and the trivial fact that , it follows that

If , and is a subsequence of such that , then the w-lsc of q and (3.9) imply that

It turns out that . Next, we turn to prove . For convenience, we set . In fact, since $P_{C_n}$ is firmly nonexpansive, we conclude that

On the other hand, we have

and

Noting that is bounded, we have from (3.10)-(3.12) that

where M is some positive constant. Clearly, from (3.13), it turns out that

Thus, we assert that due to the fact that . Moreover, by the definition of , we obtain

where δ is a constant such that for all . The w-lsc of c then implies that

Consequently, , and hence . Furthermore, due to (2.2), we get

Taking (3.7) into account, we have

Applying Lemma 2.3 to (3.16), we obtain .

Case 2: is not eventually decreasing, that is, we can find an integer such that . Now we define

It is easy to see that is nonempty and satisfies . Let

It is clear that as (otherwise, is eventually decreasing). It is also clear that for all . Moreover,

In fact, if , then the inequality (3.17) is trivial; if , from the definition of , there exists some such that , and we deduce that

and the inequality (3.17) holds again. Since for all , it follows from (3.8) that

so that as (noting that is bounded). By the same argument as in the proof of Case 1, we have . On the other hand, noting again, we have from (3.12) and (3.14) that

where M is a positive constant. Letting yields that

from which one can deduce that

Since , it follows from (3.7) that

Combining (3.19) and (3.20) yields

and hence , which together with (3.18) implies that

which, together with (3.17), in turn implies that , that is, . □

Remark 3.4 Since $u$ can be chosen in $H$ arbitrarily, one can compute the minimum-norm solution of SFP (1.1), where $C$ and $Q$ are given in (3.1), by taking $u = 0$ in Algorithm 3.2, whether $0 \in C$ or $0 \notin C$.

References

Censor Y, Elfving T: A multiprojection algorithm using Bregman projection in product space. Numer. Algorithms 1994, 8(2–4):221–239.

López G, Martín-Márquez V, Xu HK: Iterative algorithms for the multiple-sets split feasibility problem. In Biomedical Mathematics: Promising Directions in Imaging, Therapy Planning and Inverse Problems. Edited by: Censor Y, Jiang M, Wang G. Medical Physics Publishing, Madison, WI; 2010:243–279.

Censor Y, Bortfeld T, Martin B, Trofimov A: A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 2006, 51: 2353–2365.

Censor Y, Elfving T, Kopf N, Bortfeld T: The multiple-sets split feasibility problem and its applications for inverse problem. Inverse Probl. 2005, 21: 2071–2084. 10.1088/0266-5611/21/6/017

López G, Martín-Márquez V, Xu HK: Perturbation techniques for nonexpansive mappings with applications. Nonlinear Anal., Real World Appl. 2009, 10: 2369–2383. 10.1016/j.nonrwa.2008.04.020

Byrne C: Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18: 441–453. 10.1088/0266-5611/18/2/310

Dang Y, Gao Y: The strong convergence of a KM-CQ-like algorithm for a split feasibility problem. Inverse Probl. 2011., 27: Article ID 015007

Qu B, Xiu N: A note on the CQ algorithm for the split feasibility problem. Inverse Probl. 2005, 21: 1655–1665. 10.1088/0266-5611/21/5/009

Schöpfer F, Schuster T, Louis AK: An iterative regularization method for the solution of the split feasibility problem in Banach spaces. Inverse Probl. 2008., 24: Article ID 055008

Wang F, Xu HK: Approximating curve and strong convergence of the CQ algorithm for the split feasibility problem. J. Inequal. Appl. 2010., 2010: Article ID 102085

Xu HK: A variable Krasnosel’skii-Mann algorithm and the multiple-set split feasibility problem. Inverse Probl. 2006, 22: 2021–2034. 10.1088/0266-5611/22/6/007

Xu HK: Iterative methods for the split feasibility problem in infinite-dimensional Hilbert spaces. Inverse Probl. 2010., 26: Article ID 105018

Yang Q: The relaxed CQ algorithm for solving the split feasibility problem. Inverse Probl. 2004, 20: 1261–1266. 10.1088/0266-5611/20/4/014

Yang Q: On variable-set relaxed projection algorithm for variational inequalities. J. Math. Anal. Appl. 2005, 302: 166–179. 10.1016/j.jmaa.2004.07.048

Zhao J, Yang Q: Generalized KM theorems and their applications. Inverse Probl. 2006, 22(3):833–844. 10.1088/0266-5611/22/3/006

Zhao J, Yang Q: Self-adaptive projection methods for the multiple-sets split feasibility problem. Inverse Probl. 2011., 27: Article ID 035009

Byrne C: A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 2004, 20(1):103–120. 10.1088/0266-5611/20/1/006

Figueiredo MA, Nowak RD, Wright SJ: Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 2007, 1: 586–598.

López G, Martín-Márquez V, Wang FH, Xu HK: Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl. 2012., 28: Article ID 085004. 10.1088/0266-5611/28/8/085004

Fukushima M: A relaxed projection method for variational inequalities. Math. Program. 1986, 35: 58–70. 10.1007/BF01589441

Aubin JP: Optima and Equilibria: An Introduction to Nonlinear Analysis. Springer, Berlin; 1993.

Goebel K, Kirk WA: Topics on Metric Fixed Point Theory. Cambridge University Press, Cambridge; 1990.

Xu HK: Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 2002, 66: 240–256. 10.1112/S0024610702003332

Bauschke HH, Borwein JM: On projection algorithms for solving convex feasibility problem. SIAM Rev. 1996, 38: 367–426. 10.1137/S0036144593251710

Suzuki T: A sufficient and necessary condition for Halpern-type strong convergence to fixed points of nonexpansive mappings. Proc. Am. Math. Soc. 2007, 135: 99–106.

Xu HK: Viscosity approximation methods for nonexpansive mappings. J. Math. Anal. Appl. 2004, 298: 279–291. 10.1016/j.jmaa.2004.04.059

Halpern B: Fixed points of nonexpanding maps. Bull. Am. Math. Soc. 1967, 73: 957–961. 10.1090/S0002-9904-1967-11864-0

Maingé PE: A hybrid extragradient-viscosity method for monotone operators and fixed point problems. SIAM J. Control Optim. 2008, 47: 1499–1515. 10.1137/060675319

Acknowledgements

The authors wish to thank the referees for their helpful comments, which notably improved the presentation of this manuscript. This work was supported by the Fundamental Research Funds for the Central Universities (ZXH2012K001) and in part by the Foundation of Tianjin Key Lab for Advanced Signal Processing.


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All the authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

He, S., Zhao, Z. Strong convergence of a relaxed CQ algorithm for the split feasibility problem. J Inequal Appl 2013, 197 (2013). https://doi.org/10.1186/1029-242X-2013-197


DOI: https://doi.org/10.1186/1029-242X-2013-197