Abstract

We present a new way of performing hypothesis tests on scattering data, by means of a perturbatively calculable classifier. This classifier exploits the “history tree” of how the measured data point might have evolved out of any simpler (reconstructed) points along classical paths, while explicitly keeping quantum–mechanical interference effects by copiously employing complete leading-order matrix elements. This approach extends the standard Matrix Element Method to an arbitrary number of final-state objects and to exclusive final states where reconstructed objects can be collinear or soft. We have implemented this method in the standalone package hytrees and have applied it to Higgs boson production in association with two jets, with subsequent decay into photons. hytrees allows one to construct an optimal classifier to discriminate this process from large Standard Model backgrounds. It further allows one to find the most sensitive kinematic regions that contribute to the classification.

1 Introduction

The separation of interesting signal events from large Standard-Model induced backgrounds is one of the biggest challenges in searches for new physics and when measuring particle properties at the LHC. This problem is magnified when the final states of interest have a large probability to be produced in proton–proton collisions according to the Standard Model. Typical classifications into signal and background events are based on observables that are characteristic of the quantum numbers of the particles involved in each hypothesis. For example, the quantum numbers (e.g. charges, spin and mass) of a resonance result in a specific radiation profile in the detector. The radiation induced by such a resonance is more likely to populate specific phase-space regions. Thus, to infer whether a process is induced by signal or by background, one wants to know how likely it is that the measured radiation profile was induced by each hypothesis, i.e. the probability \(\mathcal {P}(\{p_i\}|S)\) for signal and \(\mathcal {P}(\{p_i\}|B)\) for background, where \(\{p_i\}\) denotes the set of 4-momenta measured in the detector. The Neyman–Pearson Lemma shows [1] that the ratio between both probabilities

\[\chi = \frac{\mathcal {P}(\{p_i\}|S)}{\mathcal {P}(\{p_i\}|B)} \qquad (1)\]

yields an ideal classifier. This approach underlies the so-called Matrix Element Method (MEM) [2], which has been used in a large variety of contexts [3,4,5,6,7,8,9]. In the MEM, the probabilities \(\mathcal {P}(\{p_i\}|S)\) and \(\mathcal {P}(\{p_i\}|B)\) are calculated directly from the matrix elements of the respective “hard” processes. In [10, 11] the parton-level MEM has been extended to include the parton shower in the evaluation of the probabilities, and has been implemented in Shower [10,11,12] and Event [13,14,15] Deconstruction, thereby allowing for the analysis of an arbitrary number of final-state objects. Information from the parton shower is particularly important in jet-rich final states and in the comparison of the substructure of jets for classification. Here, exclusive fixed-order matrix elements do not provide a good description of nature, due to the appearance of collinear and soft divergences in the matrix elements.
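The likelihood-ratio test can be illustrated with a minimal sketch, assuming that the two probabilities for an event have already been obtained by some external calculation. The function names `likelihood_ratio` and `classify`, as well as the default threshold, are hypothetical and not part of any package discussed here.

```python
def likelihood_ratio(p_signal: float, p_background: float) -> float:
    """Return chi = P({p_i}|S) / P({p_i}|B) for a single event."""
    if p_signal < 0.0 or p_background <= 0.0:
        raise ValueError("need P(S) >= 0 and P(B) > 0")
    return p_signal / p_background


def classify(p_signal: float, p_background: float, threshold: float = 1.0) -> str:
    """Label an event by comparing chi with a chosen threshold (an assumption)."""
    chi = likelihood_ratio(p_signal, p_background)
    return "signal-like" if chi > threshold else "background-like"
```

In practice the threshold would be tuned to the desired signal efficiency and background rejection; the value used here is only a placeholder.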

Conversely, LHC signals and backgrounds are often predicted by using General-Purpose Event Generators (see e.g. [16]) to produce pseudo-data of scattering events. In this context, several frameworks to combine the parton shower with multiple hard matrix elements for multi-jet processes have been laid out [17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37]. Such merging schemes improve both the accuracy and the precision of event simulation tools. Double counting of jets generated during the parton-shower step and at the matrix-element level is avoided by explicit vetoes and the inclusion of Sudakov factors or no-emission probabilities, such that multiple jets can simultaneously be described with matrix-element accuracy in one inclusive sample.

We propose to combine techniques used traditionally for merging schemes inspired by the CKKW-L method [35], and techniques of the iterated matrix-element correction approach of [37], and then use the resulting procedure to construct sophisticated perturbative weights for an input event, so that the weights may facilitate the classification between signal and background. To calculate \(\mathcal {P}(\{p_i\}|S)\) and \(\mathcal {P}(\{p_i\}|B)\), one will need to evaluate all possible combinations of parton shower and hard process histories that can give rise to the final state \(\{p_i\}\). Conceptually, such an analysis method is suitable for any final state of interest consisting of reconstructed objects, i.e. arbitrary numbers of isolated leptons, photons and jets. The approach, dubbed hytrees, is in line with the Shower/Event deconstruction method, but goes beyond these by including hard matrix elements with multiple jet emissions to calculate the weights of the event histories. We describe here the first implementation of such a method and showcase it in the context of a concrete example which is highly relevant for Higgs phenomenology, i.e. \(pp \rightarrow (\mathrm {H}\rightarrow \gamma \gamma ) + \mathrm {jets}\).

The outline of the paper is as follows. In Sect. 2 we discuss the details of the hytrees algorithm. hytrees relies on the Dire parton shower [38] to calculate the weights of the event histories. For details on the splitting probabilities used in the Dire dipole shower we refer to Appendix A. In Sect. 3 we apply hytrees to the classification of the process \(pp \rightarrow (\mathrm {H}\rightarrow \gamma \gamma ) + \mathrm {jets}\) versus the processes without a Higgs boson that lead to \(pp \rightarrow \gamma \gamma +\mathrm {jets}\). We offer conclusions in Sect. 4.

2 Implementation of hytrees

The definition of the classifier \(\chi \) suggested in Eq. (1) is in principle very intuitive. A practical implementation, however, requires assumptions and abstractions before the classifier can be calculated on experimental data. Thus, to develop and test the classifier, we evaluate it on event-generator pseudo-data. To be concrete, we use realistic (showered and hadronised) events, i.e. each “event” consists of a collection of particles (photons, leptons, long-lived hadrons, etc.), with each particle represented by a 4-vector stored in the HepMC event format [39]. The hard processes underlying these events were generated using MadGraph [40], and showered and hadronised using Pythia [41].

These events are further processed to arrive at final states consisting of reconstructed objects, i.e. isolated leptons, isolated photons or jets. A lepton \((e,\mu )\) or photon is considered isolated if the total hadronic activity in a cone of radius \(R=0.3\) around the object amounts to less than \(10\%\) of its \(p_T\); in addition, the object is required to have \(p_T \ge 20\) GeV and \(|y|<2.5\). Jets are reconstructed using the anti-kT algorithm [42] as implemented in fastjet [43], with radius \(R=0.4\). We only consider events with at least two jets of \(p_{T,j} \ge 35\) GeV, since looser cuts are usually not considered in experimental analyses at the LHC. After these steps, the final state of interest is considerably simplified compared to the particle-level final state, consisting of only \(\mathcal {O}(10)\) reconstructed objects. On these states, we want to calculate \(\chi \) of Eq. (1) from first principles, relying on perturbative methods. Thus, we want to be as insensitive as possible to experimental or non-perturbative effects, such as hadronisation or pileup-induced soft scatterings. Using reconstructed objects as input to our calculation protects us to a large degree from contributions that are theoretically poorly controllable.
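The selection described above can be sketched as follows. This is an illustration only: it assumes that jet clustering and the hadronic cone sums have already been performed upstream, and the class `RecoObject` and the functions `is_isolated` and `select_event` are hypothetical names. Dropping jets below the threshold from the final state is likewise an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass
class RecoObject:
    kind: str                  # "photon", "lepton" or "jet"
    pt: float                  # transverse momentum in GeV
    y: float                   # rapidity
    cone_had_pt: float = 0.0   # hadronic pT inside a cone of R = 0.3 (unused for jets)


def is_isolated(obj, max_frac=0.10, min_pt=20.0, max_abs_y=2.5):
    """Isolation and acceptance criteria for leptons and photons."""
    return (obj.cone_had_pt < max_frac * obj.pt
            and obj.pt >= min_pt
            and abs(obj.y) < max_abs_y)


def select_event(objects, min_jet_pt=35.0, min_jets=2):
    """Return the reconstructed final state, or None if fewer than two hard jets exist."""
    jets = [o for o in objects if o.kind == "jet" and o.pt >= min_jet_pt]
    if len(jets) < min_jets:
        return None
    isolated = [o for o in objects
                if o.kind in ("photon", "lepton") and is_isolated(o)]
    return isolated + jets
```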

To allow the calculation of the classifier to be as detailed and physical as possible, we will directly use a parton shower to calculate the necessary factors. For this, we identify the reconstructed objects in the event with partons of a parton shower, i.e. with the perturbative part of the event generation before hadronisation. The first necessary step is to redistribute momenta to ensure that all jet momenta can be mapped to on-shell parton momenta, and then to add beam momenta defined by momentum conservation in the center-of-mass frame. Each of these events is then translated to all possible partonic pseudo-events, by assigning all possible parton flavors and all possible color connections to the jets (footnote 1). The resulting collection of events is then passed to the parton shower algorithm (footnote 2) to calculate all necessary weights.

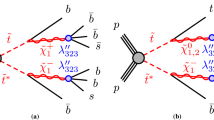

The general philosophy is illustrated in Fig. 1. A reasonable probability for the six configurations in the lowest layer should depend on the \(2\rightarrow 2\) matrix elements for particles connected to the “hard” scattering (grey blob). At the same time, the probability of the three configurations in the middle layer should be proportional to the \(2\rightarrow 3\) matrix elements for particles connected to the blob, and the overall probability of the top layer should be proportional to the \(2\rightarrow 4\) matrix elements. It is crucial to keep these conditions in mind when attempting a classification, since in general, the distinction between “hard scattering” and “subsequent radiation” is only well-defined in the phase-space region of ordered soft and/or collinear emissions. In such phase-space regions, the quantum–mechanical amplitudes factorize into independent building blocks (such as splitting functions or eikonal factors) that effectively make up a “classical” path. If the kinematics of the event is such that interference effects between the amplitudes for different paths (i.e. hypotheses) are sizable, then this needs to be reflected in the classifier. There should not be any discriminating power for such events. Here, we will build a classifier that does depend on assigning a classical path to phase-space points. The kinematics of each unique point will be used to calculate the rate of classical paths, such as the ones illustrated in Fig. 1. In phase-space regions that allow a (quantum–mechanically) sensible discrimination, the rates of the dominant paths will factorize into products of squared low-multiplicity matrix elements and approximate (splitting) kernels. In all other regions, we should be as agnostic as possible to the path. These two regions can be reconciled by always using the complete, non-factorized matrix elements to calculate the rate, and only employing the approximate (splitting) kernels to “project out” the rate of paths.
This will guarantee that we minimize the dependence on assigning classical paths in inappropriate phase-space regions. We can succeed in defining the rate by the full non-factorized matrix element, for events of varying multiplicity, by employing the iterated matrix-element correction probabilities derived in [37] [see Eq. (15) therein] when calculating the probability of each path. The simultaneous use of matrix elements for several different multiplicities is a significant improvement over traditional matrix-element methods, which only leverage matrix elements for a single fixed multiplicity at a time. Extensions of the MEM to NLO accuracy seem possible, and a worthwhile avenue to pursue [45, 46]. In this case, both Born-level and real-correction multiplicities can act in concert as a theoretically improved classifier for inclusive signal signatures.

The calculation of the classifier thus proceeds by constructing all possible ways in which the partonic input state could have evolved out of a sequence of lower-multiplicity partonic states, by explicitly constructing all lower-multiplicity intermediate states via successive recombination of three into two particles, until no further recombination is possible. This construction of all “histories” follows closely the ideas used in matrix-element and parton shower merging methods [35]. The probability of an individual recombination sequence relies on full matrix elements as much as possible. In particular, we ensure that not only the probability of the lowest-multiplicity state is given by leading-order matrix elements, but that the probability of higher-multiplicity states is simultaneously determined by leading-order matrix elements. Further improvements of the method to incorporate running coupling effects, rescaling of parton distributions due to changes in initial-state longitudinal momentum components, as well as all-order corrections for momentum configurations with large scale hierarchies are discussed below.
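The recursive construction of histories can be illustrated with a strongly simplified toy, which is not the hytrees implementation. It assumes final-state labels only, ignores momenta, color, beams and the recoiling third particle of each three-to-two recombination, and uses a hypothetical rule table `RULES` in which "a" denotes a photon, "g" a gluon, "q" a quark and "H" the Higgs boson. Recombination stops at a chosen minimal multiplicity or when no rule applies.

```python
from itertools import combinations

# Toy recombination rules (hypothetical): sorted pair of daughter labels -> parent.
RULES = {
    ("a", "a"): "H",   # H -> gamma gamma
    ("g", "g"): "g",   # g -> g g
    ("g", "q"): "q",   # q -> q g
    ("a", "q"): "q",   # q -> q gamma
    ("H", "g"): "g",   # g -> g H
    ("H", "q"): "q",   # q -> q H
}


def histories(state, min_size=2):
    """Yield every recombination sequence, as a list of states, starting from `state`."""
    state = tuple(state)
    recombined = False
    if len(state) > min_size:
        for i, j in combinations(range(len(state)), 2):
            parent = RULES.get(tuple(sorted((state[i], state[j]))))
            if parent is None:
                continue
            recombined = True
            rest = tuple(p for k, p in enumerate(state) if k not in (i, j))
            for tail in histories(rest + (parent,), min_size):
                yield [state] + tail
    if not recombined:
        yield [state]


def is_higgs_type(history):
    """Tag a history as Higgs-type if any intermediate state contains a Higgs boson."""
    return any("H" in s for s in history)
```

Particles with identical labels are kept as distinct entries, since in a real event they carry different momenta and therefore define different paths.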

Let us illustrate the calculation using the red paths in Fig. 1. One definite path (from dashed red through solid red to the top layer, e.g. following the rightmost lines in the figure) will contribute to the overall probability as

where \(P_{\text {X}}\) are approximate transition kernels, for example given by dipole splitting functions [47, 48]. The proof-of-principle implementation below uses the partial-fractioned dipole splitting kernels used in the Dire parton shower and documented in [38]. The necessary extensions of Dire to QED and Higgs splittings are outlined in Appendix A.

In order to construct the probabilities for the cases shown in Fig. 1, splitting functions for all QCD and QED vertices, as well as for Higgs–gluon, Higgs–fermion and Higgs–photon couplings have been calculated. When summing over the two dashed red paths, the full \(|\mathcal {M}({\mathrm {H}jj})|^2\) is recovered, while summing over the dashed green and dashed blue paths yields the full mixed QCD/QED matrix elements \(|\mathcal {M}({\gamma \gamma j})|^2\) and \(|\mathcal {M}({\gamma jj})|^2\), respectively. The total sum of the probabilities of all paths reduces to \(|\mathcal {M}({\gamma \gamma jj})|^2\), as desired. This discussion is complicated significantly by phase-space constraints, but can be generalized to an arbitrary multiplicity and to arbitrary splittings. We use the iterated ME correction approach of [37] in our proof-of-principle implementation below.

Note that it is straightforward to “tag” a path of recombinations as QCD-, QED- or Higgs-type by simply examining the intermediate configuration. The sum of all probabilities of all Higgs-type paths is an excellent measure of how Higgs-like the input state was, while the sum of all non-Higgs-type probabilities is an excellent measure of how background-like the input was. Following Eq. (1), it is thus natural to define the probability of the Higgs-hypothesis as

where the respective probabilities are defined as

A plethora of tags defining a hypothesis can be envisioned – once all paths of all intermediate states leading to the highest-multiplicity (input) state are known, it is straightforward to attribute a probability to each hypothesis. Of course, not all hypotheses are sensible from the quantum–mechanical perspective if interference effects are important. In this case, we expect that if the hypothesis is tested on pseudo-data with the hytrees method, the results are similar, irrespective of how the pseudo-data was generated. There should not be strong discrimination power for such problematic hypotheses.
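As a minimal sketch of how tagged path probabilities might be combined, assume that each path has already been assigned a tag ("H", "QCD" or "QED") and a non-negative weight. The normalisation to the sum over all tags and the use of the non-Higgs sum in the denominator of `chi_higgs` are assumptions of this sketch, chosen to mirror the signal-over-background ratio of Eq. (1); the function names are hypothetical.

```python
def hypothesis_probabilities(paths):
    """Sum path weights per tag and normalise to the total over all tags."""
    totals = {"H": 0.0, "QCD": 0.0, "QED": 0.0}
    for tag, weight in paths:
        totals[tag] += weight
    norm = sum(totals.values())
    if norm <= 0.0:
        raise ValueError("total path weight must be positive")
    return {tag: w / norm for tag, w in totals.items()}


def chi_higgs(paths):
    """Ratio of the Higgs-type probability to the non-Higgs-type probability."""
    probs = hypothesis_probabilities(paths)
    non_higgs = probs["QCD"] + probs["QED"]
    return float("inf") if non_higgs == 0.0 else probs["H"] / non_higgs
```

Other hypotheses can be tested in the same way by changing which tags enter the numerator and denominator.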

Finally, a discrimination based on matrix elements alone is likely to give an unreasonable probability for multi-jet hadronic states, since e.g. large hierarchies in jet transverse momenta will not be described by fixed-order matrix elements alone, and because the overall flux of initial-state partons is tied to changes in the parton distribution functions. Thus, we include the all-order effects of the evolution between intermediate states into the probability of each path. We expect that these improvements will ameliorate the over-sensitivity of fixed-order matrix-element methods to small event distortions due to multiple soft and/or collinear emissions that were e.g. observed in [46]. For a path p of intermediate states \(S_i^{(p)}, i\in [1,n^{(p)}]\) that transition to the next higher multiplicity at scales \(t_i^{(p)}\), all-order evolution effects can be included by correcting the probability of each path to

\(\Pi (S_{i-1}^{(p)}; t_{i-1}^{(p)},t_i^{(p)})\) is the no-branching probability of state \(S_{i-1}^{(p)}\) between scales \(t_{i-1}^{(p)}\) and \(t_i^{(p)}\), which is directly related to Sudakov form factors [49,50,51]. We have also introduced the placeholder \(\alpha ^{\text { FIX}}(S_i^{(p)})\) for the coupling constant of the branching producing state \(S_i^{(p)}\) out of state \(S_{i-1}^{(p)}\), and \(\alpha (S_i^{(p)},t_i^{(p)})\) as a placeholder for the same coupling evaluated taking the kinematics of state \(S_i^{(p)}\) into account (footnote 3). Finally, the parton luminosity appropriate for state \(S_{i-1}^{(p)}\), evaluated at longitudinal momentum fraction \(x_{i-1}^{(p)}\) and factorization scale \(t_{i-1}^{(p)}\), is collected in the factors \(f(S_{i-1}^{(p)}; x_{i-1}^{(p)},t_{i-1}^{(p)})\). Ratios of these factors account for the rescaling of the initial flux due to branchings. The weights \(w_p\) are also a key component of the CKKW-L algorithm, which employs trial showers to generate the no-branching probabilities, and attaches the PDF and \(\alpha _s\) ratios as event weights to pretabulated fixed-order input events.
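The structure of such a path weight can be sketched as a product over the transitions of a path. This follows the verbal description only: each step is assumed to contribute one no-branching probability, one ratio of running to fixed coupling, and one ratio of parton-luminosity factors, all supplied by the caller. The class `Step`, its field names and the function `path_weight` are hypothetical, and which scales enter the luminosity ratio is deliberately left to the caller.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class Step:
    no_branching: float    # Pi(S_{i-1}; t_{i-1}, t_i), a number between 0 and 1
    alpha_running: float   # alpha(S_i, t_i), coupling evaluated with kinematics
    alpha_fixed: float     # alpha^FIX(S_i), fixed coupling of the branching
    pdf_ratio: float       # ratio of parton-luminosity factors f for this step


def path_weight(steps):
    """Multiply the all-order correction factors of every step along one path."""
    return prod(
        s.no_branching * (s.alpha_running / s.alpha_fixed) * s.pdf_ratio
        for s in steps
    )
```

A path without any transition receives unit weight in this sketch, since the product over an empty sequence is one.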

In hytrees, we also invoke trial showers to generate the no-branching factors, i.e. the calculation of the weights \(w_p\) is performed by directly using a realistic parton shower, specifically the Dire plugin to Pythia. The trial shower algorithm is directly based on the CKKW-L merging implementation in Pythia, and is discussed in some detail in [36]. To correctly calculate \(w_{p}\) for all possible paths, we extend this parton shower to include QED radiation (so that the shower can give a sensible all-order QED-resummed weight for the green paths in Fig. 1) and to allow the transitions \(q\rightarrow qH, g\rightarrow g H\) and \(H\rightarrow \gamma \gamma \) (in order to correctly assign the red clustering paths in Fig. 1). Details on these improvements, and on the use of matrix-element corrections in Dire, are given in Appendix A.

Fig. 3 Non-normalized probabilities \(\mathcal {W}(\{p_i\}|\,\text {Hypothesis}) = \mathcal {P}(\{p_i\}|\,\text {Hypothesis}) \cdot \sum (\mathcal {P}_{\text {H}} + \mathcal {P}_{\text {QCD}} + \mathcal {P}_{\text {QED}})\) for Higgs and non-Higgs pseudo-data to be tagged as Higgs or non-Higgs configurations, using different values for the argument of the QCD running coupling, both in the evaluation of coupling factors and in the evaluation of no-branching probabilities

3 Application to \(\mathrm {H} \rightarrow \gamma \gamma \) + jets

To assess the performance of our approach in separating signal from background, and to showcase the scope of its potential applications, we study the signal process \(pp \rightarrow \mathrm {H}jj\) with subsequent decay of the Higgs boson into photons, \(\mathrm {H} \rightarrow \gamma \gamma \), at a center-of-mass energy of \(\sqrt{s} = 13\) TeV. This process is of importance in studying the quantum numbers of the Higgs boson, e.g. its couplings to other Standard Model particles [52,53,54,55] or its CP properties [56,57,58,59,60]. Just like for the Higgs discovery channel with an inclusive number of jets, \(pp \rightarrow (\mathrm {H} \rightarrow \gamma \gamma ) + X\), this channel suffers from a large Standard-Model continuum background. We generate signal and background events using MadGraph for the hard process cross section, and Pythia for showering and hadronisation. At the generation level, we apply minimal cuts on the photons (\(p_{T,\gamma } \ge 20\) GeV, \(|\eta | < 2.5\) and \(\Delta R_{\gamma \gamma } \ge 0.2\)), and on the final-state partons j (\(p_{T,j} \ge 30\) GeV, \(|\eta | \le 4.5\) and \(\Delta R_{jj} \ge 0.4\)). While we do not consider detector efficiencies for the jets, we simulate the detector response in the reconstruction of the photons by smearing their energy such that the Breit–Wigner distributed invariant mass \(m^2_{\gamma \gamma }= (p_{\gamma ,1} + p_{\gamma ,2})^2\) has a width of 2 GeV after reconstruction. Under such inclusive cuts, the signal process receives contributions from gluon fusion, as well as from weak-boson fusion [61, 62]. Standard approaches to exploit this signal process often rely on the application of weak-boson-fusion cuts [63, 64], which render gluon-fusion contributions sub-dominant. Here, instead, we focus on the gluon-fusion contributions exclusively, aiming to apply hytrees to discriminate the continuum di-photon background from the gluon-fusion induced Higgs signal (footnote 4).

In Fig. 2, we show \(\log _{10}(\chi _{\text {H}})\), as calculated according to Eqs. (1) and (2), for Higgs-signal pseudo-data (left) and non-Higgs background samples (right). It is apparent that the observable \(\chi \) can discriminate between signal and background events. Signal events have on average large \(\chi _{\text {H}}\), i.e. they result in a relatively large value for \(\mathcal {P}(\{p_i\}|S)\) in comparison to \(\mathcal {P}(\{p_i\}|B)\), and vice versa for background events. Since the hytrees method is based on calculating well-defined perturbative factors, it goes beyond many existing classification methods by also providing an estimate of theoretical uncertainties of the hypothesis-testing variable \(\chi _{\text {H}}\). An exhaustive definition of the uncertainty of hytrees is very similar to that of an event generator, in that both perturbative ambiguities (of fixed-order matrix elements as well as all-order resummation) and non-perturbative variations contribute to the overall uncertainty budget. In the context of event generators, uncertainties have recently received much attention (see e.g. the community effort [65, 66] or [34, 67,68,69]). No exhaustive uncertainty budget of both perturbative and non-perturbative components of event generators has been presented so far. Here, for our proof-of-principle implementation, we use perturbative scale variations, applied both to fixed-order and all-order components of the hytrees method, as one illustration of a source of theoretical uncertainty. We find that the theoretical uncertainty, estimated by varying the renormalisation scale in the range \(t/2 \le \mu _R \le 2 t\) (where t denotes the Dire parton-shower evolution variables given in Table 1, as necessary to evaluate running \(\alpha _s\) effects at the nodal splittings in the history tree, and to perform \(\mu _R\)-variations of the no-branching factors), is very small for \(\chi \) in our example.
This is somewhat remarkable, as signal and background enter to lowest order at \(\mathcal {O}(\alpha ^2_s)\) for the hard process. As shown in Fig. 3, \(\mathcal {P}(\{p_i\}|S)\) and \(\mathcal {P}(\{p_i\}|B)\) separately (and multiplied by the total probability to ensure that no artificial numerator–denominator cancellations occur) show a large sensitivity to scale variations, which cancels when taking the ratio to calculate \(\chi _{\text {H}}\). This can also be understood in terms of a cancellation for the performance of the classifier. In the calculation of both the signal and the background hypotheses, partons are interpreted as emitted from the initial-state partons, thus forming the final states with two (or more) jets. As the underlying dynamics is governed by QCD, this is very similar for signal and background, so that this part of the event does not contain much discriminative information. Furthermore, changing the argument of \(\alpha _s\) affects signal and background in a similar way.
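The cancellation can be illustrated with a toy calculation, assuming a one-loop running coupling with five flavours and treating t as a squared scale in GeV². The reference value \(\alpha _s(M_Z)=0.118\), the coefficients `c_sig` and `c_bkg`, and the function names are assumptions made for illustration. In this toy, both weights carry exactly the same factor \(\alpha _s^2\), so the ratio is exactly scale independent; in a realistic calculation the cancellation is only approximate.

```python
import math


def alpha_s_one_loop(mu2, alpha_mz=0.118, mz=91.1876, nf=5):
    """One-loop running QCD coupling at squared scale mu2 (GeV^2)."""
    b0 = (33.0 - 2.0 * nf) / (12.0 * math.pi)
    return alpha_mz / (1.0 + alpha_mz * b0 * math.log(mu2 / mz**2))


def scale_variation(t, factors=(0.5, 1.0, 2.0)):
    """Coupling values for each rescaling factor k, evaluated at k * t."""
    return {k: alpha_s_one_loop(k * t) for k in factors}


def toy_weights(t, k, c_sig=3.0, c_bkg=1.5):
    """Toy signal and background weights, both proportional to alpha_s^2."""
    a = alpha_s_one_loop(k * t)
    return c_sig * a**2, c_bkg * a**2
```

Evaluating `toy_weights` for the three rescaling factors shows each weight changing with the scale while their ratio stays fixed, which is the qualitative behaviour described above.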

This raises the question whether all information used in discriminating signal from background is in fact contained in the electroweak part of the event, and could e.g. be captured by analyzing the invariant mass distribution \(m_{\gamma \gamma }\). We can investigate the effect of a mass-window cut within experimental uncertainties by selecting signal and background events that satisfy \(|m_{\gamma \gamma } - 125~\mathrm {GeV}| < 2~\mathrm {GeV}\), in line with the way we smeared the energy of the photons. Figure 4 shows that, when a mass cut is applied, the normalised distributions of \(\chi _{\text {H}}\) overlap much more for signal and background samples, indicating that the very good separation observed in Fig. 2 rests largely on the fact that the photons in the signal arise from the decay of a narrow resonance. Still, the signal samples result on average in a larger value of \(\chi _{\text {H}}\) than the background samples, and thus S / B can be improved with a cut on \(\chi _{\text {H}}\).
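The mass-window selection can be sketched as follows, assuming photon 4-momenta given as tuples (E, p_x, p_y, p_z) in GeV. The function names are hypothetical.

```python
import math


def invariant_mass(p1, p2):
    """Invariant mass of two 4-momenta given as (E, px, py, pz) in GeV."""
    e, px, py, pz = (a + b for a, b in zip(p1, p2))
    m2 = e * e - px * px - py * py - pz * pz
    return math.sqrt(max(m2, 0.0))  # guard against small negative rounding


def in_mass_window(p1, p2, m_h=125.0, half_width=2.0):
    """True if |m_gamma_gamma - m_h| < half_width."""
    return abs(invariant_mass(p1, p2) - m_h) < half_width
```

For two back-to-back photons of 62.5 GeV each, the invariant mass is 125 GeV and the event passes the window.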

In order to construct the history tree for the hytrees method, it was necessary to introduce “Higgs splitting kernels” (cf. App. A) to define the probability of the \(\text {H}\rightarrow \gamma \gamma \) decay. In principle, it would be permissible to use the physical Higgs-boson width when calculating these splitting kernels. However, it is reasonable to expect that this might lead to an artificially strong discrimination power. Figure 4 shows that this is not the case, by varying the Higgs-boson width in the splitting kernel in a very large range.

The hytrees method effectively takes all possible observables into account to discriminate between two hypotheses. To investigate further how this relates to cutting on \(m_{\gamma \gamma }\), Fig. 5 shows the probabilities \(\mathcal {P}\) directly, binned in the differential distributions \(m_{\gamma \gamma }\) and \(m_{jj}\). This highlights that hytrees might also be useful to find optimal cuts in a cut-and-count analysis, since hytrees can quantify how much differential observables can discriminate between different hypotheses. As shown in Fig. 5, \(m_{jj}\) is very similar for signal and backgrounds, while \(m_{\gamma \gamma }\) is very discriminative. The sensitivity of any observable in classifying events can be studied in this way.

Classification with respect to Higgs or no-Higgs hypotheses is not the only application for hytrees in our example. One can imagine constructing different classification observables to test different hypotheses. For example, we could define \(\chi _{\text {QED}}\) and \(\chi _{\text {QCD}}\) in analogy to Eqs. (3) and (4), i.e.

with the probabilities

In Fig. 6, we show how the Higgs-signal and non-Higgs background samples fare regarding these three classification variables \(\chi _\text {H}\), \(\chi _\mathrm {QED}\) and \(\chi _\mathrm {QCD}\). The best discrimination between signal and background is observed in \(\chi _\text {H}\). This is not surprising, as \(\chi _\text {H}\) tests explicitly if there is a Higgs boson in the sample or not. \(\chi _\mathrm {QCD}\) and \(\chi _\mathrm {QED}\) perform as expected, yielding an on average larger value of \(\chi \) for the background sample, and smaller values for the events that do contain a Higgs boson. While \(\chi _\mathrm {QCD}\) retains some discriminative power between the Higgs and no-Higgs samples, the least discriminating variable is \(\chi _\mathrm {QED}\). Hence, with respect to the green path in Fig. 1, the signal and background samples provide very few separable kinematic features. The \(\mathrm {QED}\) hypothesis provides a very similar classifier, irrespective of the event sample, indicating that no “classical” path in the history tree is preferred, and that thus, interferences are relevant. It is comforting that in this case, the hytrees method does indeed, as desired, not produce an artificial discrimination power by referring to classical paths. In conclusion, by applying hytrees to known signal and background samples it is possible to optimise the discriminating observable, and to obtain an improved understanding of the kinematic features that allow a discrimination between signal and backgrounds.

4 Conclusions

The classification of events into signal and background is the basis for all searches and measurements at collider experiments. By building on the Event Deconstruction method [10, 13], CKKW-L merging [35] and the iterated matrix-element correction approach of [37], we have developed and implemented a novel way to classify realistic (i.e. fully showered and hadronised) final states according to different theory hypotheses. This method has been implemented in a standalone package, called hytrees, and will be made publicly available.

In principle this method is applicable to any final state and any theoretical hypothesis. However, there is a practical limitation due to the sharply increasing time it takes to evaluate complex final states with many (colored) particles. While invisible particles have not been implemented yet, approaches to take them into account in the hypothesis testing exist [15] and will be included in a future release of hytrees.

We have applied hytrees to the gluon-fusion induced production of \(\mathrm {H}jj\) with subsequent decay \(\mathrm {H} \rightarrow \gamma \gamma \). This process receives large backgrounds where the photons can either be produced in the hard interaction of the process \(pp \rightarrow \gamma \gamma jj\) or by being radiated off the final-state or initial-state quarks of the process \(pp \rightarrow jj\). Detector effects were rudimentarily taken into account by smearing the photon momenta. hytrees can directly calculate the probability that an event was produced through a transition of interest. We have shown that hytrees can confidently separate signal and background samples with respect to the Higgs or no-Higgs hypothesis. While the method takes into account all possible kinematic observables simultaneously to classify the event according to the hypotheses under consideration, it is also possible to study how much individual observables, or combinations of observables, contribute to the overall classification. Thus, hytrees can be used to optimise cuts for cut-and-count based analyses very efficiently. The flexible and first-principle calculation-based approach enables us to obtain an improved understanding of the kinematic features that allow us to discriminate between signal and backgrounds for very large classes of processes at any high-energy collider experiment.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The work is of theoretical nature, and thus no data will be deposited.]

Notes

1. We want to thank Valentin Hirschi for collaboration at an early stage of this project, and in particular for sharing a private code to generate all color connections in a parton ensemble.

2. These events are stored in Les Houches event files [44], and read by Pythia, which also acts as an interface to the parton shower.

3. For details on running coupling choices, see Appendix A.

References

1. F. James, Y. Perrin, L. Lyons (ed.) Workshop on Confidence Limits, CERN, Geneva, Switzerland, 17–18 Jan 2000: Proceedings (2000)

2. K. Kondo, J. Phys. Soc. Jpn. 57, 4126 (1988)

3. D0, V.M. Abazov et al., Nature 429, 638 (2004). arXiv:hep-ex/0406031

4. CDF, A. Abulencia et al., Phys. Rev. D 73, 092002 (2006). arXiv:hep-ex/0512009

5. K. Cranmer, T. Plehn, Eur. Phys. J. C 51, 415 (2007). arXiv:hep-ph/0605268

6. Y. Gao et al., Phys. Rev. D 81, 075022 (2010). arXiv:1001.3396

7. J.R. Andersen, C. Englert, M. Spannowsky, Phys. Rev. D 87, 015019 (2013). arXiv:1211.3011

8. T. Martini, P. Uwer, JHEP 09, 083 (2015). arXiv:1506.08798

9. A.V. Gritsan, R. Röntsch, M. Schulze, M. Xiao, Phys. Rev. D 94, 055023 (2016). arXiv:1606.03107

10. D.E. Soper, M. Spannowsky, Phys. Rev. D 84, 074002 (2011). arXiv:1102.3480

11. D.E. Soper, M. Spannowsky, Phys. Rev. D 87, 054012 (2013). arXiv:1211.3140

12. D. Ferreira de Lima, P. Petrov, D. Soper, M. Spannowsky, Phys. Rev. D 95, 034001 (2017). arXiv:1607.06031

13. D.E. Soper, M. Spannowsky, Phys. Rev. D 89, 094005 (2014). arXiv:1402.1189

14. C. Englert, O. Mattelaer, M. Spannowsky, Phys. Lett. B 756, 103 (2016). arXiv:1512.03429

15. D.E. Ferreira de Lima, O. Mattelaer, M. Spannowsky, Phys. Lett. B 787, 100 (2018). arXiv:1712.03266

16. A. Buckley et al., Phys. Rep. 504, 145 (2011). arXiv:1101.2599

17. S. Catani, F. Krauss, R. Kuhn, B.R. Webber, JHEP 11, 063 (2001). arXiv:hep-ph/0109231

18. M.L. Mangano, M. Moretti, R. Pittau, Nucl. Phys. B 632, 343 (2002). arXiv:hep-ph/0108069

19. S. Mrenna, P. Richardson, JHEP 05, 040 (2004). arXiv:hep-ph/0312274

20. J. Alwall et al., Eur. Phys. J. C 53, 473 (2008). arXiv:0706.2569

21. K. Hamilton, P. Richardson, J. Tully, JHEP 11, 038 (2009). arXiv:0905.3072

22. K. Hamilton, P. Nason, JHEP 06, 039 (2010). arXiv:1004.1764

23. S. Höche, F. Krauss, M. Schönherr, F. Siegert, JHEP 08, 123 (2011). arXiv:1009.1127

24. N. Lavesson, L. Lönnblad, JHEP 12, 070 (2008). arXiv:0811.2912

25. L. Lönnblad, S. Prestel, JHEP 02, 094 (2013). arXiv:1211.4827

26. N. Lavesson, L. Lönnblad, JHEP 07, 054 (2005). arXiv:hep-ph/0503293

27. S. Plätzer, JHEP 08, 114 (2013). arXiv:1211.5467

28. T. Gehrmann, S. Höche, F. Krauss, M. Schönherr, F. Siegert, JHEP 01, 144 (2013). arXiv:1207.5031

29. S. Höche, F. Krauss, M. Schönherr, F. Siegert, JHEP 04, 027 (2013). arXiv:1207.5030

30. L. Lönnblad, S. Prestel, JHEP 03, 166 (2013). arXiv:1211.7278

31. R. Frederix, S. Frixione, JHEP 12, 061 (2012). arXiv:1209.6215

32. S. Alioli et al., JHEP 09, 120 (2013). arXiv:1211.7049

33. J. Bellm, S. Gieseke, S. Plätzer, Eur. Phys. J. C 78, 244 (2018). arXiv:1705.06700

34. W.T. Giele, D.A. Kosower, P.Z. Skands, Phys. Rev. D 84, 054003 (2011). arXiv:1102.2126

35. L. Lönnblad, JHEP 05, 046 (2002). arXiv:hep-ph/0112284

36. L. Lönnblad, S. Prestel, JHEP 03, 019 (2012). arXiv:1109.4829

37. N. Fischer, S. Prestel, Eur. Phys. J. C 77, 601 (2017). arXiv:1706.06218

38. S. Höche, S. Prestel, Eur. Phys. J. C 75, 461 (2015). arXiv:1506.05057

39. M. Dobbs, J.B. Hansen, Comput. Phys. Commun. 134, 41 (2001)

40. J. Alwall et al., JHEP 07, 079 (2014). arXiv:1405.0301

41. T. Sjöstrand et al., Comput. Phys. Commun. 191, 159 (2015). arXiv:1410.3012

M. Cacciari, G.P. Salam, G. Soyez, JHEP 04, 063 (2008). arXiv:0802.1189

M. Cacciari, G.P. Salam, G. Soyez, Eur. Phys. J. C 72, 1896 (2012). arXiv:1111.6097

J. Alwall et al., Comput. Phys. Commun. 176, 300 (2007). arXiv:hep-ph/0609017

T. Martini, P. Uwer, JHEP 05, 141 (2018). arXiv:1712.04527

M. Kraus, T. Martini, P. Uwer (2019). arXiv:1901.08008

G. Gustafson, U. Pettersson, Nucl. Phys. B 306, 746 (1988)

S. Catani, M.H. Seymour, Nucl. Phys. B 485, 291 (1997). arXiv:hep-ph/9605323 [Erratum: Nucl. Phys. B 510, 503 (1998)]

V.V. Sudakov, Sov. Phys. JETP 3, 65 (1956)

V.V. Sudakov, Zh. Eksp. Teor. Fiz. 30, 87 (1956)

T. Sjöstrand, Phys. Lett. B 157, 321 (1985)

T. Corbett et al. (2015). arXiv:1511.08188

C. Englert, R. Kogler, H. Schulz, M. Spannowsky, Eur. Phys. J. C 76, 393 (2016). arXiv:1511.05170

C. Englert, R. Kogler, H. Schulz, M. Spannowsky, Eur. Phys. J. C 77, 789 (2017). arXiv:1708.06355

J. Ellis, C.W. Murphy, V. Sanz, T. You, JHEP 06, 146 (2018). arXiv:1803.03252

T. Plehn, D.L. Rainwater, D. Zeppenfeld, Phys. Rev. Lett. 88, 051801 (2002). arXiv:hep-ph/0105325

C. Englert, M. Spannowsky, M. Takeuchi, JHEP 06, 108 (2012). arXiv:1203.5788

C. Englert, D. Goncalves-Netto, K. Mawatari, T. Plehn, JHEP 01, 148 (2013). arXiv:1212.0843

F.U. Bernlochner et al. (2018). arXiv:1808.06577

C. Englert, P. Galler, A. Pilkington, M. Spannowsky (2019). arXiv:1901.05982

V. Del Duca, W. Kilgore, C. Oleari, C. Schmidt, D. Zeppenfeld, Phys. Rev. Lett. 87, 122001 (2001). arXiv:hep-ph/0105129

G. Klamke, D. Zeppenfeld, JHEP 04, 052 (2007). arXiv:hep-ph/0703202

D.L. Rainwater, D. Zeppenfeld, K. Hagiwara, Phys. Rev. D 59, 014037 (1998). arXiv:hep-ph/9808468

T. Figy, C. Oleari, D. Zeppenfeld, Phys. Rev. D 68, 073005 (2003). arXiv:hep-ph/0306109

J.R. Andersen et al., Les Houches 2015: Physics at TeV Colliders Standard Model Working Group Report, in 9th Les Houches Workshop on Physics at TeV Colliders (PhysTeV 2015) Les Houches, France, June 1–19, 2015 (2016). arXiv:1605.04692

Les Houches 2017: Physics at TeV Colliders Standard Model Working Group Report (2018). arXiv:1803.07977

E. Bothmann, M. Schönherr, S. Schumann, Eur. Phys. J. C 76, 590 (2016). arXiv:1606.08753

J. Bellm, G. Nail, S. Plätzer, P. Schichtel, A. Siódmok, Eur. Phys. J. C 76, 665 (2016). arXiv:1605.01338

S. Mrenna, P. Skands, Phys. Rev. D 94, 074005 (2016). arXiv:1605.08352

B.A. Kniehl, L. Lönnblad, Renormalization scales in electroweak physics; Photon radiation in the dipole model and in the Ariadne program, in Workshop on Photon Radiation from Quarks, Annecy, France, December 2–3, 1991, pp. 109–112 (1992)

M. Schönherr, Eur. Phys. J. C 78, 119 (2018). arXiv:1712.07975

R. Kleiss, R. Verheyen, JHEP 11, 182 (2017). arXiv:1709.04485

S. Höche, S. Schumann, F. Siegert, Phys. Rev. D 81, 034026 (2010). arXiv:0912.3501

L. Lönnblad, Eur. Phys. J. C 73, 2350 (2013). arXiv:1211.7204

S. Catani, M. Grazzini, Nucl. Phys. B 570, 287 (2000). arXiv:hep-ph/9908523

F. Dulat, S. Höche, S. Prestel, Phys. Rev. D 98, 074013 (2018). arXiv:1805.03757

W. Giele, S. Prestel (publication in preparation)

Acknowledgements

We thank Valentin Hirschi for collaboration during an early stage of this project, by sharing a private code to generate all color connections, and for longstanding help with using MadGraph to generate the C++ matrix element code employed for matrix element corrections. MS is grateful to Dave Soper for a longstanding collaboration on the Shower/Event Deconstruction approach. MS thanks the University of Tuebingen and the Humboldt Society for support and hospitality during the finalisation of parts of this work. SP would like to thank Walter Giele for collaboration on dipole showers for QED splittings.

QCD, QED and Higgs splittings in the Dire dipole shower

Realistic classifications of final states containing jets and photons according to a hypothesis require the construction of all possible branching histories that could have produced the final states. Thus, all possible ways of splitting or recombining the particles in the final state have to be considered. For the problem at hand, this requires a simultaneous description of QCD and QED branchings, at both fixed-order and all-order perturbative accuracy. If a hypothesis depends not only on the final-state particles but also on reconstructed intermediate states, such as Higgs bosons, it is also necessary to incorporate the relevant intermediate branchings.

The description of QCD splittings used in this publication is implemented in the Dire plugin to Pythia, and consists of a partial-fractioned dipole parton shower including mass effects [38]. With the definitions listed in Table 1, the (unregularized) splitting functions for final-state radiation are given by

with the mass of the radiator after branching \(m_Q\), and where \(v_{\widetilde{\imath \jmath },\tilde{k}}\) and \(v_{ij,k}\) are the relative velocities between the emitter and the recoiler before and after the branching, determined by

The Jacobian factors \(J(z,\kappa ^2)\) are unity except for final-state emissions with final-state recoilers, where

Initial-state radiation with final-state recoilers is governed by the splitting functions

with the recoiler mass \(m_k\) and \(u=\frac{p_jp_a}{p_ap_j+p_ap_k}\). Note that initial-state particles are treated as zero-mass particles in the splitting kernels. Thus, initial-state radiation with an initial-state recoiler is governed by massless kernels [38]. For QCD splittings, we evaluate the running coupling at the evolution scale of the splitting, i.e. \(\alpha (S_i^{(p)},t_i^{(p)}) = \alpha _{s}(t_i^{(p)})\).
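As an illustration of the variable \(u\) defined above, the following minimal Python sketch evaluates \(u = p_jp_a/(p_ap_j+p_ap_k)\) from four-momenta. The function names, the component ordering \((E, p_x, p_y, p_z)\) and the example momenta are assumptions made for this sketch only; they are not taken from the Dire or hytrees code.

```python
from typing import Sequence


def minkowski_dot(p: Sequence[float], q: Sequence[float]) -> float:
    """Minkowski product p.q for four-vectors ordered as (E, px, py, pz)."""
    return p[0] * q[0] - p[1] * q[1] - p[2] * q[2] - p[3] * q[3]


def recoil_fraction_u(p_a, p_j, p_k) -> float:
    """u = (p_j.p_a) / (p_a.p_j + p_a.p_k).

    p_a: initial-state radiator, p_j: emission, p_k: final-state recoiler.
    """
    pa_pj = minkowski_dot(p_a, p_j)
    pa_pk = minkowski_dot(p_a, p_k)
    return pa_pj / (pa_pj + pa_pk)


# Illustrative (hypothetical) massless momenta in GeV.
p_a = (100.0, 0.0, 0.0, 100.0)  # incoming parton along +z
p_j = (20.0, 12.0, 0.0, 16.0)   # emitted parton
p_k = (50.0, 0.0, 30.0, 40.0)   # final-state recoiler

u = recoil_fraction_u(p_a, p_j, p_k)
print(f"u = {u:.6f}")
```

For these momenta, \(p_ap_j = 400\,\mathrm{GeV}^2\) and \(p_ap_k = 1000\,\mathrm{GeV}^2\), so that \(u = 400/1400\).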

We implement QED emissions as an extension of the partial-fractioned dipole shower of Dire, using the same evolution and energy-sharing variables as well as kinematical splitting functions and mass corrections. The crucial difference to the treatment of QCD is that we allow all pairs of electric charges to form dipoles that coherently emit photons, similar to the ideas presented in [70] and more recently discussed in [71, 72]. In contrast to the latter, we split the soft-photon radiation pattern into two pieces, each assigned to one dipole splitting kernel. The color factors in the QCD splitting functions in [38] are further replaced by the electric (dipole) charge correlators (\(C_i\rightarrow \eta _{ij,k}= {Q_{ij}Q_{k}\theta _{ij}\theta _k} / {Q_{ij}^2}\) for photon emission, and \(\eta _{ij,k} = 1/[\)number of recoilers] for photon-to-fermion conversion), which can readily be negative. This inconvenience is addressed by using the weighted parton shower [73, 74] algorithm implemented in Dire. The assignment of recoilers for the \(\gamma \rightarrow f{\bar{f}}\) splitting takes guidance from the simultaneous emission of a soft quark pair in QCD (see e.g. [75]), which can be thought of as being emitted from a parent color dipole [76]. The latter calculation is of course not directly applicable to QED. Nevertheless, in the absence of other concrete ideas, we allow all electrically charged particles to act as spectators for the \(\gamma \rightarrow f{\bar{f}}\) splitting. For all QED branchings, we do not employ a running coupling and instead fix \(\alpha \) to the Thomson value (cf. [70]), i.e. \(\alpha (S_i^{(p)},t_i^{(p)}) = \alpha _{em}(0) = 0.00729735\). More details on the formalism of QED showers will be presented elsewhere [77].
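The charge correlators quoted above can be sketched in a few lines of Python. This is an illustration only: the function names are hypothetical, and the convention \(\theta = +1\) for final-state and \(\theta = -1\) for initial-state particles is an assumption of this sketch, since the text does not define \(\theta \) explicitly. Exact fractions are used so that the sign and size of \(\eta _{ij,k}\) are unambiguous.

```python
from fractions import Fraction

ALPHA_EM_0 = 0.00729735  # fixed QED coupling quoted in the text


def theta(is_final_state: bool) -> int:
    """ASSUMPTION of this sketch: +1 for final-state, -1 for initial-state."""
    return 1 if is_final_state else -1


def eta_photon_emission(q_emitter: Fraction, q_recoiler: Fraction,
                        emitter_final: bool, recoiler_final: bool) -> Fraction:
    """eta_{ij,k} = Q_ij Q_k theta_ij theta_k / Q_ij^2 for photon emission."""
    if q_emitter == 0:
        raise ValueError("a neutral particle cannot emit a photon")
    signs = theta(emitter_final) * theta(recoiler_final)
    return q_emitter * q_recoiler * signs / q_emitter**2


def eta_photon_conversion(n_recoilers: int) -> Fraction:
    """eta_{ij,k} = 1 / (number of recoilers) for photon-to-fermion conversion."""
    if n_recoilers < 1:
        raise ValueError("at least one recoiler is required")
    return Fraction(1, n_recoilers)


up, anti_up = Fraction(2, 3), Fraction(-2, 3)
print(eta_photon_emission(up, up, True, True))        # equal charges
print(eta_photon_emission(up, anti_up, True, True))   # opposite charges
print(eta_photon_emission(up, anti_up, True, False))  # one leg in the initial state
print(eta_photon_conversion(4))
print(1.0 / ALPHA_EM_0)
```

With these conventions the correlator changes sign between the equal-charge and opposite-charge final-state pairs, which illustrates why a weighted shower algorithm is needed to handle negative kernels.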

Since our QED splitting kernels can readily become negative, we expect that event-weight fluctuations due to the weighting algorithm can become a significant problem. This is, however, largely circumvented by including QCD and QED matrix-element corrections up to \(pp\rightarrow \gamma \gamma j j j\) in the formalism of [37] in the parton shower. Since the matrix-element corrections guarantee the correct radiation pattern irrespective of the splitting kernels, it is legitimate to enforce positive splitting kernels for splittings yielding states for which matrix-element corrections are available, which avoids large weight fluctuations.

To allow testing the hypothesis of an intermediate Higgs boson, we further include the emission rate \(g\rightarrow g\)H and the decay rate H \(\rightarrow \gamma \gamma \) directly in the parton shower evolution. The emission rate \(q\rightarrow q\)H is omitted, since its contribution is only present for heavy quarks and is, due to the quark masses, further suppressed by phase space. The evolution variable and phase space mapping for the emission rate \(g\rightarrow g\)H are identical to those of (massive) QCD or QED splittings, and the splitting function is a simple uniform weight \(\Gamma _{\text {H}\rightarrow gg}(m_\mathrm {H})\). This allows us to assign a probability to the production vertex of the Higgs boson, and is sufficient as long as the emission rate is effectively absent in the shower evolution. All gluons that can be reached by tracing leading-\(N_C\) color connections are possible spectators for this splitting. The coupling value \(\alpha (S_i^{(p)},t_i^{(p)})\) for the \(g\rightarrow g\)H emission is fixed to \(\alpha (S_i^{(p)},t_i^{(p)})=\Gamma _{\mathrm {H}\rightarrow gg}(m_\mathrm {H})\).
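One possible reading of the spectator assignment for \(g\rightarrow g\)H is a graph traversal over color tags. The sketch below assumes Les Houches style color/anticolor tags, treats all partons as final-state particles, and calls two partons connected if the color tag of one equals the anticolor tag of the other. The `Parton` class and the function names are hypothetical and do not reproduce the actual implementation.

```python
from collections import deque
from dataclasses import dataclass

GLUON_ID = 21  # PDG code of the gluon


@dataclass(frozen=True)
class Parton:
    index: int
    pdg_id: int
    col: int   # color tag, 0 if none
    acol: int  # anticolor tag, 0 if none


def color_connected(a: Parton, b: Parton) -> bool:
    """True if a color line runs directly between a and b (final state assumed)."""
    return (a.col != 0 and a.col == b.acol) or (a.acol != 0 and a.acol == b.col)


def gluon_spectators(partons, radiator_index):
    """Indices of gluons reachable from the radiator by tracing color lines."""
    by_index = {p.index: p for p in partons}
    seen = {radiator_index}
    queue = deque([by_index[radiator_index]])
    while queue:  # breadth-first traversal of the color-connection graph
        current = queue.popleft()
        for other in partons:
            if other.index not in seen and color_connected(current, other):
                seen.add(other.index)
                queue.append(other)
    return sorted(i for i in seen
                  if i != radiator_index and by_index[i].pdg_id == GLUON_ID)


# Two separate color singlets: q-g-g-qbar and q'-g-qbar'.
partons = [
    Parton(0, 1, 101, 0), Parton(1, 21, 102, 101),
    Parton(2, 21, 103, 102), Parton(3, -1, 0, 103),
    Parton(4, 2, 201, 0), Parton(5, 21, 202, 201), Parton(6, -2, 0, 202),
]
print(gluon_spectators(partons, 1))  # gluon 2 shares a color chain with gluon 1
print(gluon_spectators(partons, 5))  # no other gluon in the second singlet
```

In this toy configuration the gluon in the second singlet is never offered as a spectator for a gluon in the first, because no chain of color lines connects the two systems.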

The virtuality of the photon pair serves as evolution variable for the H \(\rightarrow \gamma \gamma \) decay. In this case, the splitting kernel is defined by

where \(\mathcal {S}\) is the number of possible recoilers for this splitting. In line with the reasoning for the \(\gamma \rightarrow f{\bar{f}}\) splitting above, we allow all gluons as spectators for this splitting. Again, it is worth noting that we employ matrix-element corrections for shower splittings that produce \(pp\rightarrow \gamma \gamma j j j\) or less complicated states, such that for the purposes of this publication, the concrete prescription for \(P_{\mathrm {H}\rightarrow \gamma \gamma }\) is of minor importance. The crucial point here is that the “estimate probability” \(P_{\mathrm {H}\rightarrow \gamma \gamma }\) will be replaced by the full tree-level matrix-element rate for the particle configuration (e.g. \(pp\rightarrow \gamma \gamma j j j\)) at hand, both in the cross-section and in the Sudakov-factor exponents. The coupling value \(\alpha (S_i^{(p)},t_i^{(p)})\) for the H \(\rightarrow \gamma \gamma \) decay is fixed to \(\alpha (S_i^{(p)},t_i^{(p)})=\Gamma _{\mathrm {H}\rightarrow \gamma \gamma }(m_\mathrm {H})\).
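The evolution variable used for the H \(\rightarrow \gamma \gamma \) decay, the virtuality of the photon pair, is simply the squared invariant mass of the two photons. A minimal Python sketch follows; the function name, the component ordering \((E, p_x, p_y, p_z)\) and the example momenta are assumptions for illustration.

```python
import math


def pair_virtuality(p1, p2) -> float:
    """Squared invariant mass (p1 + p2)^2 for four-vectors (E, px, py, pz)."""
    e = p1[0] + p2[0]
    px = p1[1] + p2[1]
    py = p1[2] + p2[2]
    pz = p1[3] + p2[3]
    return e * e - px * px - py * py - pz * pz


# Hypothetical back-to-back photons in the rest frame of a 125 GeV resonance.
photon_1 = (62.5, 62.5, 0.0, 0.0)
photon_2 = (62.5, -62.5, 0.0, 0.0)

t = pair_virtuality(photon_1, photon_2)
print(f"virtuality = {t} GeV^2, invariant mass = {math.sqrt(t)} GeV")
```

For a diphoton system produced by an on-shell Higgs boson, this variable is peaked at \(m_\mathrm {H}^2\), whereas photon pairs from QED radiation populate a broad range of virtualities.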

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Prestel, S., Spannowsky, M. HYTREES: combining matrix elements and parton shower for hypothesis testing. Eur. Phys. J. C 79, 546 (2019). https://doi.org/10.1140/epjc/s10052-019-7030-y
