Abstract

The MEG experiment, designed to search for the \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) decay, completed data-taking in 2013, reaching a sensitivity level of \({5.3\times 10^{-13}}\) for the branching ratio. In order to increase the sensitivity reach of the experiment by an order of magnitude, to the level of \(6\times 10^{-14}\), a complete upgrade involving substantial changes to the experiment, known as MEG II, has been undertaken. We present both the motivation for the upgrade and a detailed overview of the design of the experiment and of the expected detector performance.



1 Introduction

1.1 Status of the MEG experiment in the framework of charged Lepton Flavour Violation (cLFV) searches

The experimental upper limits established in searches for cLFV processes with muons, including the \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) decay, are shown in Fig. 1 as a function of the year of publication. Historically, the negative results of these experiments led to the empirical inclusion of lepton flavour conservation in the Standard Model (SM) of elementary particle physics. During the past 35 years the experimental sensitivity to the \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) decay has improved by almost three orders of magnitude, mainly due to advances in detector and beam technologies. In particular, ‘surface’ muon beams (i.e. beams of muons from the decay of \(\pi ^+\)s stopped in the surface layers of the pion production target), with virtually monochromatic momenta of \({\sim 29}\,{\hbox {MeV}/c}\), offer the highest muon stop densities obtainable at present in low-mass targets, allowing the best possible resolution in positron momentum and emission angle and suppressing photon background production. The current most stringent limit on the \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) decay branching ratio is set by the MEG experiment [1] at the Paul Scherrer Institute (PSI, Switzerland) [2]:

\[ \mathcal{B}(\mu ^+ \rightarrow \mathrm {e}^+ \gamma ) < 4.2\times 10^{-13} \]

at 90% confidence level (CL), based on the full data-set. The upgrade of the experiment, known as MEG II, is currently in preparation, aiming for a sensitivity enhancement of one order of magnitude compared to the final MEG result.

The signal of the two-body \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) decay at rest can be distinguished from the background by measuring the photon energy \(E_{\mathrm {\gamma }}\), the positron momentum \(p_{\mathrm {e}^{+}}\), their relative angle \(\varTheta _{\mathrm {e}^+ \gamma }\) and timing \(t_{\mathrm {e}^+ \gamma }\) with the best possible resolutions.
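For a decay at rest, two-body kinematics fixes the signal values of all four observables: \(E_{\mathrm {\gamma }} = p_{\mathrm {e}^{+}}\approx m_\mu /2 \approx 52.8\) MeV, \(\varTheta _{\mathrm {e}^+ \gamma } = 180^{\circ }\) and \(t_{\mathrm {e}^+ \gamma } = 0\). The short Python sketch below illustrates this; it assumes rounded PDG masses (external inputs, not values from this paper), neglects radiative effects, and the helper name is hypothetical.

```python
import math

# Rounded PDG masses in MeV/c^2 (external inputs, not from this paper)
M_MU = 105.6584
M_E = 0.5110


def two_body_momentum(m_parent, m1, m2):
    """Momentum (MeV/c) of either daughter in a two-body decay at rest."""
    lam = (m_parent**2 - (m1 + m2) ** 2) * (m_parent**2 - (m1 - m2) ** 2)
    return math.sqrt(lam) / (2.0 * m_parent)


# mu+ -> e+ gamma at rest: photon and positron are back to back with equal momenta
p_sig = two_body_momentum(M_MU, M_E, 0.0)
E_gamma = p_sig                       # massless photon: E = p c
E_pos = math.sqrt(p_sig**2 + M_E**2)  # positron total energy
theta_deg = 180.0                     # relative angle Theta_e+gamma
t_rel = 0.0                           # relative timing t_e+gamma

print(f"E_gamma = p_e+ = {p_sig:.3f} MeV, E_e+ = {E_pos:.3f} MeV")
```

Any event that deviates from these values by more than the detector resolutions is background-like, which is why the resolutions on all four quantities enter the background estimate.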

The background comes either from radiative muon decays (RMD) \({\mu ^+ \rightarrow \hbox {e}^+ \nu \bar{\nu }\gamma }\) in which the neutrinos carry away a small amount of energy or from an accidental coincidence of an energetic positron from Michel decay \(\mu ^+ \rightarrow \mathrm {e}^+ \nu \bar{\nu }\) with a photon coming from RMD, bremsstrahlung or positron annihilation-in-flight (AIF) \({\hbox {e}^+ \hbox {e}^- \rightarrow \gamma \gamma }\). In experiments using high intensity beams, such as MEG, this latter background is dominant.

The keys to achieving high sensitivity in \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) search experiments can be summarised as follows:

1. A high-intensity continuous surface muon beam, to accumulate statistics while minimising the accidental background rate.
2. A low-mass positron detector with high rate capability, to deal with the abundant positrons from muon decays.
3. A high-resolution photon detector, especially in the energy measurement, to suppress the high-energy random photon background.

The MEG experiment uses one of the world’s most intense continuous surface muon beams, with a maximum rate higher than \(10^{8}\,\upmu ^{+}\)/s, but, for reasons explained below, the stopping intensity is limited to \(3\times 10^{7}\,\upmu ^{+}\)/s. The muons are stopped in a thin polyethylene target placed at the centre of the experimental set-up, which includes a positron spectrometer and a photon detector, as shown schematically in Fig. 2.

The positron spectrometer consists of a set of drift chambers and scintillating timing counters located inside a superconducting solenoid, COBRA (COnstant Bending RAdius), which produces a gradient magnetic field along the beam axis ranging from 1.27 T at the centre to 0.49 T at either end, so that the bending radius of positrons depends only weakly on the polar angle. The gradient field is also designed to quickly sweep out of the spectrometer positrons that would otherwise spiral repeatedly inside it, reducing the track density in the tracking volume.

The photon detector, located outside the solenoid, is a homogeneous volume of liquid xenon (LXe) viewed by photomultiplier tubes (PMTs) submerged in the liquid, which detect the scintillation light from the LXe. The spectrometer measures the positron momentum vector and timing, while the LXe photon detector measures the photon energy as well as the position and time of its interaction in the LXe. The photon direction is determined by connecting the interaction vertex in the LXe photon detector with the positron vertex on the target, obtained by extrapolating the positron track. All signals are individually digitised by in-house designed waveform digitisers (DRS4) [3].

The number of expected signal events for a given branching ratio \( \mathcal{B} \) depends on the rate of stopping muons \(R_\mathrm {\mu ^+}\), the measurement time T, the solid angle \(\varOmega \) subtended by the photon and positron detectors, the efficiencies of these detectors (\(\epsilon _\mathrm {\gamma }, \epsilon _{\mathrm {e}^{+}}\)) and the efficiency of the selection criteria \(\epsilon _\mathrm {s}\)Footnote 1:

\[ N_\mathrm {sig} = R_\mathrm {\mu ^+} \times T \times \varOmega \times \mathcal{B} \times \epsilon _\mathrm {\gamma } \times \epsilon _{\mathrm {e}^{+}} \times \epsilon _\mathrm {s}. \tag{1} \]

The single event sensitivity (SES) is defined as the \( \mathcal{B} \) for which the experiment would expect one signal event. In principle the lowest SES, and therefore the largest possible \(R_\mathrm {\mu ^+}\), is desirable in order to be sensitive to the lowest possible \( \mathcal{B} \). The number of accidental coincidences \(N_\mathrm {acc}\), for given selection criteria, depends on the experimental resolutions (indicated as \(\varDelta \)) with which the four relevant quantities (\(E_{\mathrm {\gamma }}\), \(p_{\mathrm {e}^{+}}\), \(\varTheta _{\mathrm {e}^+ \gamma }\), \(t_{\mathrm {e}^+ \gamma }\)) are measured. By integrating the RMD photon and Michel positron spectra over the photon energy and positron momentum resolution intervals respectively, it can be shown that:

\[ N_\mathrm {acc} \propto R_\mathrm {\mu ^+}^2 \times \varDelta E_{\mathrm {\gamma }}^2 \times \varDelta p_{\mathrm {e}^{+}} \times \varDelta \varTheta _{\mathrm {e}^+ \gamma }^2 \times \varDelta t_{\mathrm {e}^+ \gamma } \times T. \tag{2} \]

The number of RMD background events \(N_\mathrm {RMD}\) can be calculated by integrating the SM calculation of the RMD differential branching ratio [4] over the appropriate kinematic intervals, but no simple closed-form expression exists for \(N_\mathrm {RMD}\). In MEG, \(N_\mathrm {RMD}\) was more than ten times smaller than \(N_\mathrm {acc}\) [2]. Because \(N_\mathrm {acc} \propto R_\mathrm {\mu ^+}^2\) whereas \(N_\mathrm {RMD} \propto R_\mathrm {\mu ^+}\), accidental coincidences will dominate even more strongly over RMD background events in MEG II, where \(R_\mathrm {\mu ^+}\) is about twice as large as in MEG.
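The different rate dependences can be made concrete with a short Python sketch. It holds resolutions, selection criteria and measurement time fixed, so that \(N_\mathrm {acc}/N_\mathrm {RMD} \propto R_\mathrm {\mu ^+}\), and it uses the statement that \(N_\mathrm {RMD}\) was more than ten times smaller than \(N_\mathrm {acc}\) in MEG as a lower bound; the stopping rates are those quoted for MEG and MEG II in this paper, and the helper name is hypothetical. Since MEG II also improves the resolutions, this captures only the rate-driven part of the change.

```python
# Illustrative scaling only: N_acc ~ R^2 and N_RMD ~ R at fixed resolutions,
# selection criteria and measurement time, so N_acc / N_RMD ~ R.
R_MEG = 3e7    # muon stopping rate in MEG (s^-1)
R_MEG2 = 7e7   # muon stopping rate in MEG II (s^-1)


def acc_to_rmd_ratio(rate, rate_ref, ratio_ref):
    """Scale N_acc / N_RMD from a reference stopping rate (hypothetical helper)."""
    return ratio_ref * rate / rate_ref


# Only N_acc / N_RMD > 10 is known for MEG, so 10 is used as a lower bound.
bound_meg2 = acc_to_rmd_ratio(R_MEG2, R_MEG, 10.0)
print(f"N_acc / N_RMD at the MEG II rate (MEG resolutions): > {bound_meg2:.0f}")
```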

It is clear from Eqs. (1) and (2) that, for fixed experimental resolutions, the muon stopping rate cannot be increased arbitrarily but must be chosen in order to keep a reasonable signal to background ratio.
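This trade-off can be sketched in Python directly from Eqs. (1) and (2). Only ratios are meaningful here: the normalisation of Eq. (2) is unknown, and the acceptance and efficiency values below are placeholders, not MEG or MEG II figures. The function names are hypothetical.

```python
def single_event_sensitivity(rate, time_s, omega, eff_gamma, eff_pos, eff_sel):
    """Branching ratio giving one expected signal event, i.e. Eq. (1) with N_sig = 1."""
    return 1.0 / (rate * time_s * omega * eff_gamma * eff_pos * eff_sel)


def accidental_scale(rate, d_egamma, d_ppos, d_theta, d_t, time_s):
    """Right-hand side of Eq. (2); its normalisation constant is unknown, so only
    ratios between configurations are meaningful."""
    return rate**2 * d_egamma**2 * d_ppos * d_theta**2 * d_t * time_s


# Placeholder acceptance/efficiencies (NOT MEG or MEG II values).
BASE = dict(time_s=1.0, omega=0.1, eff_gamma=0.6, eff_pos=0.7, eff_sel=0.9)
# Resolutions in arbitrary units relative to a reference configuration.
RES = dict(d_egamma=1.0, d_ppos=1.0, d_theta=1.0, d_t=1.0, time_s=1.0)

R = 3e7  # s^-1
ses_gain = single_event_sensitivity(R, **BASE) / single_event_sensitivity(2 * R, **BASE)
acc_growth = accidental_scale(2 * R, **RES) / accidental_scale(R, **RES)
s_over_b_change = ses_gain / acc_growth  # signal grows x2, accidentals x4
acc_better_egamma = accidental_scale(R, **{**RES, "d_egamma": 0.5}) / accidental_scale(R, **RES)

print(f"doubling R: signal x{ses_gain:.1f}, accidentals x{acc_growth:.1f}, "
      f"S/B x{s_over_b_change:.2f}")
print(f"halving the E_gamma window: accidentals x{acc_better_egamma:.2f}")
```

Doubling the stopping rate halves the SES but also halves the signal-to-accidental ratio, whereas improving a resolution that enters squared (photon energy or relative angle) reduces the accidentals quadratically at no cost in signal.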

After five years of MEG data-taking, further statistics would have brought only a limited gain in sensitivity, because the accidental background extends into the signal region. Data-taking therefore ended in 2013, allowing the upgrade program to proceed with full impetus.

Other cLFV channels, complementary to \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) and being actively pursued are: \({\mu ^-N \rightarrow \hbox {e}^-N}\), \({\mu \rightarrow \hbox {3e}}\), \(\tau \rightarrow \ell \gamma \) and \(\tau \rightarrow 3\ell \) (\(\ell = \mathrm {e}\) or \({\upmu }\)).

In the \({\mu ^-N \rightarrow \hbox {e}^-N}\) conversion experiments, negative muons are stopped in a thin target and form muonic atoms. The conversion of the muon into an electron in the field of the nucleus results in the emission of a monochromatic electron with a momentum of \({\sim }100\) MeV/c, the exact value depending on the target nucleus. The backgrounds to be rejected here are completely different from the \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) case: the dominant contributions are muon decay-in-orbit and backgrounds correlated with beam impurities, such as pions. To reduce these backgrounds, the experiments planned at Fermilab (Mu2e) [5, 6] and J-PARC (COMET [7, 8] and DeeMe [9]) will use pulsed proton beams to produce their muons. Since muonic atoms have lifetimes ranging from hundreds of nanoseconds up to the free muon lifetime at low Z, the conversion electrons can be searched for in the intervals between proton bunches.

The COMET collaboration plans to start the first phase of the experiment in 2018 with a sensitivity reach better than \(10^{-14}\), to be compared with the existing limit of \(7\times 10^{-13}\) [10], followed by a second phase aiming for a sensitivity of \(7\times 10^{-17}\), while the Mu2e experiment is foreseen to start in 2021 with a first-phase sensitivity goal of \(7\times 10^{-17}\). These experiments can in principle reach sensitivities below \(10^{-17}\) [11, 12].

The \({\mu \rightarrow \hbox {3e}}\) decay is being searched for in a new experiment proposed at PSI, Mu3e [13], which plans a staged approach to reach a target sensitivity of \(10^{-16}\), to be compared with the existing limit of \(1\times 10^{-12}\) [14]. The initial stage involves sharing part of the MEG beam line and seeks a three orders-of-magnitude improvement over the current limit, with a goal of \(10^{-15}\). The final stage foresees muon stopping rates of the order of \(10^{9}\,\upmu ^{+}\)/s.

\(\tau \rightarrow \ell \gamma \) and \(\tau \rightarrow 3\ell \) will be explored by the Belle II experiment at SuperKEKB [15, 16] and a proposed experiment at the super Charm-Tau factory [17, 18] where sensitivities of the order of \(10^{-9}\) to the branching ratios for these channels are expected.

A comparison between the sensitivity planned for MEG II and that envisaged for the other above mentioned cLFV processes will be discussed in the next section after a very short introduction to cLFV predictions in theories beyond the SM.

1.2 Scientific merits of the MEG II experiment

Although the SM has proved to be extremely successful in explaining a wide variety of phenomena at energy scales from sub-eV to \(\mathcal{O}({1}\,{\hbox {TeV}})\), it is widely considered a low-energy approximation of a more general theory. One of the attractive candidates for such a theory is the grand-unified theory (GUT) [19], which unifies all the SM gauge groups into a single group, as well as quarks and leptons into common multiplets of the group. In particular, the supersymmetric version (SUSY-GUT) has received a great deal of attention since the LEP experiments showed that a proper unification of the forces can be achieved at a scale of around \(M_\mathrm {GUT}{\sim } 10^{16}\hbox { GeV}\) if SUSY particles exist at a scale of \(\mathcal{O}({1}\,\hbox {TeV})\) [20]. The search for TeV-scale SUSY particles has been one of the goals of the LHC program. Results so far have been negative for masses up to 1–2 TeV [21, 22].

The experimentally measured phenomenon of neutrino oscillations [23,24,25] requires an extension of the SM. It demonstrates that lepton flavour is violated and that neutrinos have masses, albeit orders of magnitude smaller than those of quarks and charged leptons. An appealing extension of the SM consists of introducing Majorana masses for neutrinos, which naturally account for the tiny neutrino masses via the seesaw mechanism [26,27,28,29]. This approach predicts the existence of heavy right-handed Majorana neutrinosFootnote 2 in the range \(10^{9}\)–\(10^{15}\) GeV. This ultra-high mass scale may indicate a connection to SUSY-GUT (e.g. all the SM fermions of a generation plus the right-handed neutrino fit into a single multiplet in SO(10) GUT). Majorana neutrinos violate lepton number and may account for the matter–antimatter asymmetry of the Universe [31].

It is generally difficult to detect, even indirectly, the effects of such ultra-high energy scale physics. However, the situation changes with SUSY, and cLFV signals provide a general test of SUSY-GUT and SUSY-seesaw as discussed below.

It is well known that cLFV is sensitive to SUSY [32,33,34]; in fact the parameter space of the minimal SUSY extension of the SM (MSSM) has largely been constrained by flavour- and CP-violating processes involving charged leptons and quarks [35,36,37,38]. These experimental observations have led to the consideration of special SUSY-breaking mechanisms, requiring e.g. universal SUSY particle masses at some high scale. It was however shown that slepton mixing emerges unavoidably at low energy in SUSY-GUT [39] and SUSY-seesaw [40] models, even if lepton flavour is conserved at the high scale. This is because flavour-violation sources, i.e. at least the quark and/or neutrino Yukawa interactions, do exist in the theory and contribute radiatively to the slepton mass-squared matrices through renormalisation-group evolution.Footnote 3 As a result, \( \mathcal{B} ({\mu \rightarrow \hbox {e} \gamma })\) is predicted at an observable level of \({10^{-11}}\)–\({10^{-14}}\) [42,43,44,45,46,47,48,49]. This theoretical framework motivated the MEG and MEG II experiments.

In order to appreciate this, we recall that the SM, even introducing massive neutrinos, practically forbids any observable rate of cLFV (\( \mathcal{B} ({\mu \rightarrow \hbox {e} \gamma }) < 10^{-50}\)) [50, 51]. Processes with cLFV are therefore clean channels to look for possible new physics beyond the SM, for which a positive signal would be unambiguous evidence.

Over the last 5 years, two epoch-making developments took place in particle physics: the discovery of the Higgs boson [52, 53] and the measurement of the last unknown neutrino mixing angle \(\theta _{13}\) [54,55,56,57]. On the one hand, the rather light Higgs boson mass of 125 GeV [58] supports the SUSY-GUT scenario, since it lies in the predicted region [59]. On the other hand, this mass is relatively heavy for the MSSM and, together with the null results of direct searches at the LHC, suggests that SUSY particles are heavier than expected. This implies a smaller expected \( \mathcal{B} ({\mu \rightarrow \hbox {e} \gamma })\), because of the approximate dependence \(\propto 1/M_\mathrm {SUSY}^4\). This might explain why MEG did not detect the signal, as well as why other flavour observables, particularly \(\mathrm {b} \rightarrow \mathrm {s}\gamma \) [60] and \(B_\mathrm {s} \rightarrow \mu ^+ \mu ^-\) [61], have so far been measured to be consistent with the SM. In contrast, the observed large mixing angle \(\theta _{13} = {{8.3\pm 0.2}}^{\circ }\) [25] suggests a higher \( \mathcal{B} ({\mu \rightarrow \hbox {e} \gamma })\) in many physics scenarios, such as SUSY-seesaw.

Updated studies of SUSY-GUT/seesaw models taking these recent experimental results into account show that \( \mathcal{B} ({\mu \rightarrow \hbox {e} \gamma })\sim 10^{-13}\)–\(10^{-14}\) is possible for SUSY particle masses up to around 5–10 TeV [62,63,64,65,66,67,68,69,70], well beyond the reach of direct searches at the LHC (including the HL-LHC). In addition, cLFV searches are sensitive to particles without strong interactions (e.g. sleptons and electroweakinos in the MSSM), which are therefore not strongly constrained by the LHC results. In light of the above considerations, further exploration of the range \( \mathcal{B} ({\mu \rightarrow \hbox {e} \gamma })\sim \mathcal{O}(10^{-14})\) concurrently with the 14-TeV LHC run provides a unique and powerful probe of new physics, complementary and synergistic to the LHC.

So far, we discussed SUSY scenarios, the main motivation of MEG II, but many other scenarios, such as models with extra-dimensions [71,72,73], left-right symmetry [74,75,76,77], leptoquarks [78,79,80,81], and little Higgs [82,83,84,85], also predict observable rates of \({\mu \rightarrow \hbox {e} \gamma }\) within the reach of MEG II.

Comparison between different \(\mu \rightarrow \mathrm {e}\) transition processes can be done model independently by an effective-field-theory approach. Considering new physics, cLFV processes are generated by higher-dimensional operators; the lowest one that directly contributes to \({\mu \rightarrow \hbox {e} \gamma }\) is the following dimension-six (dipole-type) operator,

where \(\langle H \rangle \) is the vacuum expectation value of the Higgs field and \(F^{\mu \nu }\) is the field-strength tensor of the photon. This operator also induces \({\mu \rightarrow \hbox {3e}}\) and \({\mu ^-N \rightarrow \hbox {e}^-N}\) via the exchange of a virtual photon. Several other dimension-six operators also cause \(\mu \rightarrow \mathrm {e}\) transitions, and their relative contributions to each of the three processes are model-dependent.Footnote 4

In many models, in particular most SUSY models including the SUSY-GUT/seesaw models mentioned above, the operator (3) dominates the \(\mu \rightarrow \mathrm {e}\) transitions. In such cases, the following relations hold independently of the model parameters [4, 87]:

Therefore, a search for \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) with a sensitivity of \({\sim } 6\times 10^{-14}\), the target of MEG II, achievable on a much shorter timescale and with a far lower budget than other future projects, is competitive not only with the second phase of the Mu3e experiment [13] but also with the COMET [7] and Mu2e [6] experiments. Conversely, in case of a discovery, combining the results of these experiments would provide strong model-discriminating power: any observed deviation from the relations (4) and (5) would suggest contributions from operators other than (3).

The comparison between \(\mu \) and \(\tau \) processes is more model-dependent. In SUSY-seesaw models with and without GUT relations, the ratio \( \mathcal{B} (\tau \rightarrow \mu \gamma )/ \mathcal{B} ({\mu \rightarrow \hbox {e} \gamma })\) roughly ranges from 1 to \(10^{4}\).Footnote 5 Therefore, the present MEG bound on \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) already sets strong constraints on the \(\tau \rightarrow \ell \gamma \) rates to be measured in the coming experiments [16]. If \(\tau \rightarrow \ell \gamma \) is detected in these experiments without a discovery of \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) in MEG II, such models will be strongly disfavoured.

We finally note that MEG II will represent the best effort to search for the rare \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) decay with the available detector technology, coupled with the most intense continuous muon beam in the world. Experience shows that any significant improvement in this field takes several years (more than a decade was necessary to go from MEGA to MEG), and we therefore feel committed to pushing the sensitivity of the search to its ultimate limits.

1.3 Overview of the MEG II experiment

The MEG II experiment plans to continue the search for the \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) decay, aiming for a sensitivity enhancement of one order of magnitude compared to the final MEG result, i.e. down to \(6 \times 10^{-14}\) for \( \mathcal{B} ({\mu ^+ \rightarrow \hbox {e}^+ \gamma })\). Our proposal for upgrading MEG [88] was approved by the PSI research committee in 2013; the details of the technical design have since been fixed after intensive R&D and are reported in this paper.

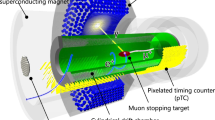

The basic idea of the MEG II experiment is to achieve the highest possible sensitivity by making maximum use of the available muon intensity at PSI with the basic principle of the MEG experiment but with improved detectors. A schematic view of MEG II is shown in Fig. 3.

A beam of surface \(\mathrm {\mu ^+}\) is extracted from the \(\pi \)E5 channel of the PSI high-intensity proton accelerator complex, as in MEG, but the intensity is increased to the maximum. After the MEG beam transport system, the muons are stopped in a target, which is thinner than the MEG one to reduce both multiple Coulomb scattering of the emitted positrons and photon background generated by them. The stopping rate becomes \(R_\mathrm {\mu ^+}= 7\times 10^{7}\hbox { s}^{-1}\), more than twice that of MEG (see Sect. 2).

The positron spectrometer uses the gradient magnetic field to sweep away low-momentum \({\mathrm {e}^{+}}\). The COBRA magnet is retained from MEG, while the positron detectors inside it are replaced by new ones. Positron tracks are measured by a newly designed single-volume cylindrical drift chamber (CDCH) able to sustain the required high rate. The resolution of the \({\mathrm {e}^{+}}\) momentum vector is improved by the larger number of hits per track provided by the high density of drift cells (see Sect. 4). The positron time is measured with improved accuracy by a new pixelated timing counter (pTC) based on scintillator tiles read out by SiPMs (see Sect. 5). The new spectrometer design increases the signal acceptance by more than a factor of 2, owing to the reduction of inactive material between the CDCH and the pTC.

The photon energy, interaction point position and time are measured by an upgraded LXe photon detector. The energy and position resolutions are improved with a more uniform collection of scintillation light achieved by replacing the PMTs on the photon entrance face with new vacuum-ultraviolet (VUV) sensitive 12 \(\times \) 12 \(\hbox {mm}^{2}\) SiPMs (see Sect. 6).

A novel device for active background suppression is introduced: the Radiative Decay Counter (RDC), which employs plastic scintillators for timing and scintillating crystals for energy measurement in order to identify low-momentum \({\mathrm {e}^{+}}\) associated with high-energy RMD photons (see Sect. 7).

The trigger and data-acquisition system (TDAQ) is also upgraded to meet the stringent requirements of the increased number of read-out channels and to provide the required bandwidth, by integrating analogue signal processing, SiPM biasing, high-speed waveform digitisation and trigger capability into one compact unit (see Sect. 8).

In rare decay searches the capability of improving the experimental sensitivity depends on the use of intense beams and high performance detectors, accurately calibrated and monitored. This is the only way to ensure that the beam characteristics and the detector performances are reached and maintained over the experiment lifetime. To that purpose several complementary approaches have been developed with some of the methods requiring dedicated beams and/or auxiliary detectors. Many of them have been introduced and commissioned in MEG and will be inherited by MEG II with some modifications to match the upgrade. In addition new methods are introduced to meet the increased complexity of the new experiment.

Finally, the sensitivity of MEG II with a running time of three years is estimated in Sect. 9.

2 Beam line

2.1 MEG beam line layout

The main beam requirements for a high rate, high sensitivity, ultra-rare decay coincidence experiment such as MEG are:

- high stopping intensity (\(R_\mathrm {\mu ^+}= 7\times 10^{7}\hbox { s}^{-1}\)) on target with high transmission optics,
- small beam spot to minimise the stopping target size,
- large momentum-byte \(\varDelta p_\mathrm {\mu ^+}/p_\mathrm {\mu ^+}\sim 7\%\) (FWHM) with an achromatic final focus, yielding an almost monochromatic beam with a high stop density for a thin target,
- minimal and well-separated beam-correlated backgrounds, such as positrons from Michel decay or \(\pi ^0\)-decay in the production target, or decay particles from along the beam line, and
- minimisation of the material budget along the beam line to suppress multiple Coulomb scattering and photon production, using vacuum or helium environments as far as possible.

Coupling the MEG COBRA spectrometer and LXe photon detector to the \(\pi \)E5 channel, which ends with the last dipole magnet ASC41 in the shielding wall, is achieved with a Wien filter (cross-field separator) and two sets of quadrupole triplet magnets, as shown in Fig. 4. These front-end elements of the MEG beam line provide maximal transmission through the separator, followed by an achromatic focus at the intermediate collimator system. There, an optimal separation can be achieved between surface muons and the eight times larger beam positron contamination from Michel positrons or positrons from \(\pi ^0\)-decay in the target that have the same momentum as the muons (see Fig. 5) [1]. The muon range-momentum adjustment is made at the centre of the superconducting beam transport solenoid BTS, where a Mylar® degrader system is placed at the central focus to minimise multiple Coulomb scattering. The degrader thickness of \(300\,\upmu \text {m}\) takes into account the remaining material budget of the vacuum window at the entrance to the COBRA magnet and of the helium atmosphere inside, thereby adjusting the residual range of the muons so that they stop at the centre of a \(205\,\upmu \text {m}\) thick polyethylene target placed at 20.5\(^{\circ }\) to the axis.

The residual polarisation of the muons, which are 100% polarised at production, has been estimated by considering depolarising effects at production, during propagation and during moderation in the stopping target. The net polarisation is visible as an asymmetry in the angular distribution of the Michel positrons emitted from the target. The estimate is consistent with measurements made using Michel positrons at the centre of the COBRA spectrometer [89], in which the energy-dependent angular distributions were analysed. A high residual polarisation of \(P_{\mu ^+} = -0.86\pm 0.02~\mathrm {(stat.)}~{}^{+0.06}_{-0.05}~\mathrm {(syst.)}\) was found, with the single largest depolarising contribution coming from the cloud muon content of the beam. Cloud muons originate from pion decay-in-flight in and around the production target and inherently have a low polarisation because of their widely differing acceptance kinematics. The cloud muon content of the \(28\) MeV/c surface muon beam was derived from measurements in which the muon momentum spectrum was fitted with a constant cloud muon contribution over the limited region around the kinematic edge of the spectrum at \(29.79\) MeV/c. This was cross-checked against measurements at \(28\) MeV/c using a negative muon beam. In this case there are no surface muons (stopped negative pions form pionic atoms instead of decaying), so a clean cloud muon signal can be measured. Comparing the cross-sections and kinematics of pions of both charge signs gives consistent results, with a ratio of \(\sim 1.2\)% of negative cloud muons to surface muons at \(28\) MeV/c. This situation is not expected to change significantly for MEG II, apart from the slightly larger divergences expected from the increased \(\varDelta p_\mathrm {\mu ^+}/p_\mathrm {\mu ^+}\) and a possible difference in the polarisation-quenching properties of the target material in a magnetic field [90], which is still under investigation.
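How the polarisation appears in the positron angular distribution can be sketched with the tree-level Michel spectrum. The Python sketch below assumes Standard Model V-A couplings, neglects the positron mass and radiative corrections, and measures \(\theta \) from the polarisation axis; it is not the analysis of [89], and the function names are hypothetical. At the spectrum end point (\(x = 1\)) the asymmetry coefficient equals \(P_{\mu ^+}\), while integrating over energy dilutes it to \(P_{\mu ^+}/3\).

```python
def michel_density(x, cos_theta, pol):
    """Unnormalised tree-level V-A Michel distribution for mu+ decay.

    x = E_e / E_max (positron mass neglected); cos_theta is measured from the
    polarisation axis; pol is the muon polarisation along that axis.
    """
    return x**2 * ((3.0 - 2.0 * x) + pol * cos_theta * (2.0 * x - 1.0))


def asymmetry_at_x(x, pol):
    """Coefficient A(x) in dN/dcos(theta) ~ 1 + A(x) cos(theta) at fixed x."""
    return pol * (2.0 * x - 1.0) / (3.0 - 2.0 * x)


def integrated_asymmetry(pol, n=100_000):
    """Energy-integrated asymmetry by the midpoint rule (analytic value: pol / 3)."""
    num = den = 0.0
    for i in range(n):
        x = (i + 0.5) / n
        num += x**2 * pol * (2.0 * x - 1.0)
        den += x**2 * (3.0 - 2.0 * x)
    return num / den


P_MU = -0.86  # central value of the measured residual polarisation
fwd = michel_density(1.0, 1.0, P_MU)
bwd = michel_density(1.0, -1.0, P_MU)
print(f"end point: forward/backward = {fwd:.3f}/{bwd:.3f}")
print(f"A(x=1) = {asymmetry_at_x(1.0, P_MU):+.3f}, A(x=0.5) = {asymmetry_at_x(0.5, P_MU):+.3f}")
print(f"integrated = {integrated_asymmetry(P_MU):+.4f} (P/3 = {P_MU / 3:+.4f})")
```

The sign change of \(A(x)\) at \(x = 1/2\) is why an energy-dependent analysis is more sensitive to \(P_{\mu ^+}\) than the energy-integrated asymmetry alone.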

2.2 Upgrade concept

The increased sensitivity sought in MEG II will be achieved partly by fully exploiting the available beam intensity and partly by improved detector performance, which keeps the most significant background, from overlapping accidental events, manageable at the level required for an order-of-magnitude gain in sensitivity. As outlined in Sect. 1.1, the accidental background depends quadratically on the muon stopping rate, whereas the signal is directly proportional to it. This places stringent limits on the material budget and on the suppression of beam-correlated backgrounds in the beam line, while the flexibility and versatility needed for the different beam modes required for calibration must be preserved. The three main modes required are:

- stopped surface muon beam for normal data-taking at \(28\) MeV/c,
- stopped negative pion beam of \(70.5\) MeV/c for charge-exchange \(\pi ^-\mathrm {p}\rightarrow \pi ^0\mathrm {n}\) (CEX) and radiative capture \(\pi ^-\mathrm {p}\rightarrow \gamma \mathrm {n}\) (RC) photons (see Sect. 6.4) and
- a monochromatic positron beam of \(53\) MeV/c for Mott scattering calibrations (see Sect. 4.6.1).

For MEG II, the beam line components and optics will stay the same as for MEG, apart from the introduction of extra beam monitoring tools (cf. Sect. 2.3.2). However, the increased muon rate for MEG II, while maintaining high-transmission optics, can only be achieved by increasing the momentum-byte \(\varDelta p_\mathrm {\mu ^+}/p_\mathrm {\mu ^+}\), i.e. by opening the \(\pi \)E5 channel momentum slits to their full extent. An increased \(\varDelta p_\mathrm {\mu ^+}\), however, implies an increased range straggling of the beam. A study undertaken for the MEG II upgrade proposal [88] examined various beam/target scenarios, comparing a surface muon beam of \(28\) MeV/c (mean range \(\sim 125\) mg cm\(^{-2}\)) with a sub-surface beam of \(25\) MeV/c (mean range \(\sim \) 85 mg cm\(^{-2}\)). As the name implies, sub-surface muons are also produced with the unique momentum of \(29.79\) MeV/c from stopped pion decay, but they originate from deeper within the production target and lose some of their energy on exiting.
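The \(29.79\) MeV/c kinematic edge follows from the two-body kinematics of \(\pi ^+ \rightarrow \mu ^+ \nu _\mu \) at rest. A short Python check is given below, assuming rounded PDG masses (external inputs, not values from this paper) and a massless neutrino; the helper name is hypothetical. It also prints the corresponding muon kinetic energy, which is only about 4 MeV.

```python
import math

# Rounded PDG masses in MeV/c^2 (external inputs, not from this paper)
M_PI = 139.5704
M_MU = 105.6584


def momentum_with_massless_partner(m_parent, m_daughter):
    """Daughter momentum (MeV/c) for a decay at rest when the other daughter is massless."""
    return (m_parent**2 - m_daughter**2) / (2.0 * m_parent)


# pi+ -> mu+ nu_mu at rest (neutrino mass neglected)
p_surface = momentum_with_massless_partner(M_PI, M_MU)
t_kin = math.sqrt(p_surface**2 + M_MU**2) - M_MU  # muon kinetic energy (MeV)
print(f"surface-muon momentum = {p_surface:.2f} MeV/c, kinetic energy = {t_kin:.2f} MeV")
```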

The potential advantage of such a sub-surface beam is then the reduced range straggling, which comprises two components (cf. Eq. (6)): the first from energy-loss straggling in the intervening material, which at these momenta amounts to about 9% (FWHM) of the range [91], and the second from the momentum-byte \(\varDelta p_\mathrm {\mu ^+}/p_\mathrm {\mu ^+}\). However, the range and the straggling vary most strongly with momentum, the range being proportional to \(a\times p^{3.5}\), where ‘a’ is a material constant:

\[ \varDelta R = \sqrt{\left(0.09\,R\right)^2 + \left(3.5\,R\,\frac{\varDelta p_\mathrm {\mu ^+}}{p_\mathrm {\mu ^+}}\right)^2}, \qquad R = a\times p_\mathrm {\mu ^+}^{3.5}. \tag{6} \]

Therefore, the most efficient way to reduce the range straggling is by reducing the momentum rather than the \(\varDelta p_\mathrm {\mu ^+}/p_\mathrm {\mu ^+}\).
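The scaling argument can be checked numerically with the Python sketch below. It assumes \(R = a\times p^{3.5}\), with ‘a’ fixed by the mean range of \(\sim 125\) mg cm\(^{-2}\) quoted above for \(28\) MeV/c, takes the 9% (FWHM) energy-loss straggling and the 7% momentum-byte from the text, and adds the two straggling contributions in quadrature (an independence assumption). The function names are hypothetical. At fixed \(\varDelta p_\mathrm {\mu ^+}/p_\mathrm {\mu ^+}\) the relative straggling is unchanged, so the absolute straggling, like the range, falls as \(p^{3.5}\).

```python
import math

# Assumed range-momentum law R = a * p^3.5, with 'a' calibrated so that
# R(28 MeV/c) = 125 mg/cm^2 (the mean range quoted in the text).
P_REF, R_REF = 28.0, 125.0
A_RANGE = R_REF / P_REF**3.5
ENERGY_LOSS_STRAGGLING = 0.09  # FWHM fraction of the range, from the text


def mean_range(p):
    """Mean muon range in mg/cm^2 for momentum p in MeV/c."""
    return A_RANGE * p**3.5


def range_straggling(p, dp_over_p):
    """FWHM range spread in mg/cm^2; the two contributions are assumed independent
    and added in quadrature. Since R ~ p^3.5, dR/R = 3.5 * dp/p."""
    rel = math.hypot(ENERGY_LOSS_STRAGGLING, 3.5 * dp_over_p)
    return mean_range(p) * rel


for p in (28.0, 25.0):
    print(f"p = {p:4.1f} MeV/c: R = {mean_range(p):5.1f} mg/cm^2, "
          f"FWHM straggling = {range_straggling(p, 0.07):4.1f} mg/cm^2")
```

The computed range at \(25\) MeV/c comes out close to the \(\sim 85\) mg cm\(^{-2}\) quoted above, which is a consistency check of the \(p^{3.5}\) law rather than an independent result.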

A momentum change has a direct impact on the target thickness, which is a balance between maximising the stop density and minimising both the multiple Coulomb scattering of the outgoing Michel positrons and the photon background produced in the target. Furthermore, the surface muon rate also decreases with \(p^{3.5}\), which ultimately limits how far the momentum can be lowered. This behaviour is shown in Fig. 6, where the measured muon momentum spectrum is fitted with a \(p^{3.5}\) power law, folded with a Gaussian momentum resolution equivalent to the momentum-byte, plus a constant cloud muon content. The blue and red (truncated) boxes show the \(\pm 3\sigma _{p_\mathrm {\mu ^+}}\) momentum acceptance for the surface and sub-surface beams, corresponding to \({\pm 2.7}\) and \({\pm 2.5}\) MeV/c respectively. The optimal momentum, yielding the highest intensity within the full momentum-byte, is centred around \(28.5\) MeV/c. For each data point the whole beam line must be optimised. The upgrade study [88] investigated various combinations of beam momentum and target parameters, with the thickness varied between 100 and 250 \({\upmu \hbox {m}}\) and the orientation angle between \({15.0}^{\circ }\) and \({20.5}^{\circ }\). This resulted in only one really viable solution that could yield the required muon stopping intensity of \(7\times 10^{7}\,\upmu ^{+}\)/s, suitable for achieving the goal sensitivity within a measuring period of \({\sim 3}\) years: a surface muon beam of \(28\) MeV/c with a polyethylene target of \(140\,\upmu \text {m}\) thickness, placed at an angle of 15.0\(^{\circ }\) to the axis.
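The fit model described above (a \(p^{3.5}\) power law cut off at the \(29.79\) MeV/c kinematic edge, folded with a Gaussian and with a constant cloud-muon term added) can be sketched in plain Python. The numerical convolution, the \(\pm 5\sigma \) integration window, the cloud level used in the printout and the parameter names are illustrative choices, not the fit code used for Fig. 6; \(\sigma = 0.9\) MeV/c corresponds to one third of the \(\pm 2.7\) MeV/c acceptance quoted for the surface beam.

```python
import math

P_EDGE = 29.79  # kinematic edge of the surface-muon spectrum (MeV/c)


def surface_spectrum(p):
    """Unsmeared shape: p^3.5 power law below the kinematic edge, zero above."""
    return p**3.5 if 0.0 < p <= P_EDGE else 0.0


def folded_rate(p, sigma, norm=1.0, cloud=0.0, n_steps=400):
    """Power law folded with a Gaussian of width sigma (MeV/c), plus a constant
    cloud-muon term. The convolution uses the trapezoid rule over +-5 sigma."""
    lo = p - 5.0 * sigma
    h = 10.0 * sigma / n_steps
    total = 0.0
    for i in range(n_steps + 1):
        q = lo + i * h
        weight = 0.5 if i in (0, n_steps) else 1.0
        gauss = math.exp(-0.5 * ((p - q) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))
        total += weight * surface_spectrum(q) * gauss
    return norm * total * h + cloud


SIGMA = 2.7 / 3.0  # +-3 sigma = +-2.7 MeV/c for the surface beam
for p in (26.0, 28.0, 29.79, 31.0):
    # cloud=50.0 is an arbitrary illustrative level, not a measured value
    print(f"p = {p:5.2f} MeV/c: model rate = {folded_rate(p, SIGMA, cloud=50.0):10.1f}")
```

Above the edge only the smeared tail and the flat cloud term survive, which is why the cloud content can be fitted in the limited region around the kinematic edge.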

The sub-surface beam option could meet the criteria only by increasing the target thickness to \(160\,\upmu \text {m}\), which negated its intended advantage. Hence the surface muon beam was chosen as the MEG II baseline, owing to its thinner target and higher achievable rate and their beneficial impact on the resolutions and background.

2.3 Beam monitoring

Two new detectors have been developed to measure the beam profile and rate: the sampling scintillating fibre beam monitor (sampling SciFi), mounted at the entrance to the spectrometer, and the luminophore foil detector (CsI on a Mylar support) coupled with a CCD camera, installed at the intermediate focus collimator system.

2.3.1 The sampling SciFi beam monitoring detector

This detector is a quasi non-invasive beam monitoring tool that sustains high rates and provides beam rate and profile measurements as well as particle identification in real time. It is based on scintillating fibres (SciFi) coupled to SiPMs; the use of SiPMs allows the detector to operate in high magnetic fields.

It consists of a grid of two orthogonal fibre layers: one with the fibres running along the x-axis and the other with the fibres along the y-axis. The detector is expected to be located at the end of the vacuum beam line, just in front of the spectrometer. A movable configuration allows the remote removal/insertion of the detector into the beam.

Figure 7 shows the full-scale prototype that was built and tested. We used Saint-Gobain BCF-12 double-cladding fibres with a 250 \(\times \) 250 \(\upmu \hbox {m}^{2}\) cross-section [92], each independently coupled at both ends to Hamamatsu Photonics S13360-1350CS SiPMs [93] (active area 1.3 \(\times \) 1.3 \(\hbox {mm}^{2}\), pixel size 50 \(\times \) 50 \(\upmu \hbox {m}^{2}\)). The distance between adjacent fibres in the same layer is 4.75 mm, a pitch that satisfies the requirements for a precise measurement of the beam profile and rate. Furthermore, a large detector transparency \(T > 92\%\) (where \(1-T\) is the fraction of incident particles hitting the fibres) is achieved with a relatively small number of channels (\(\approx 100\)). For this prototype we mounted 21 fibres per layer, each read out at both ends, giving a total of 84 channels. The signals are sent to the TDAQ prototype (see Sect. 8), which also includes the preamplifiers (with adjustable gain up to 100, the value used here) and the power supplies for the SiPMs (operated at \(\approx \) 55.6 V). The trigger used for the beam profile and rate measurements is the “OR” of all the “AND”s of the two SiPMs coupled to the same fibre, with a common threshold of 0.5 photoelectrons for all channels.
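The trigger condition described above reduces to a simple boolean expression. A minimal Python sketch, assuming per-channel amplitudes already expressed in photoelectrons; the function and argument names are hypothetical:

```python
from typing import Sequence

def fibre_trigger(end_a: Sequence[float], end_b: Sequence[float],
                  threshold_pe: float = 0.5) -> bool:
    """OR over all fibres of the AND of the two SiPMs reading the same fibre.

    end_a[i], end_b[i] -- amplitudes (photoelectrons) at the two ends of fibre i;
    the same threshold is applied to every channel.
    """
    if len(end_a) != len(end_b):
        raise ValueError("each fibre needs exactly two SiPM readings")
    return any(a >= threshold_pe and b >= threshold_pe
               for a, b in zip(end_a, end_b))
```

Requiring both ends of the same fibre is a common way to suppress uncorrelated single-SiPM dark counts, while the OR keeps the trigger efficient over the whole grid.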

Figure 8 shows the beam profile measured with the detector mounted along the \(\pi \)E5 beam line. The incident particles are positive muons with an initial momentum of \(28\) MeV/c, which have left the \(190\,\upmu \text {m}\) Mylar window at the end of the vacuum beam line and travelled some 15 cm in air before traversing the \(25\,\upmu \text {m}\) of Tedlar\(^{\textregistered }\) used as a light-tight shield. The measured total rate and beam profile widths were \(R_\mathrm {\mu ^+}(\mathrm {at}~I_{\mathrm {p}}= {2.2}\,{\hbox {mA}}) = (1.11\pm 0.011)\times 10^{8}{\mu ^{+}/{s}}\) and \((\sigma _x,\sigma _y)= (18.1 \pm 0.1, 17.8 \pm 0.1)\hbox { mm}\), respectively. These values agree to within 5% or better with those provided by our “standard” beam monitoring tools (2D x–y scanners using either a large depletion layer APD or a pill scintillator coupled to a miniature PMT). One of the most attractive features of this detector is its ability to provide a full beam characterisation in just tens of seconds, with all the associated benefits such as faster beam tuning, real-time feedback on malfunctions of the beam or apparatus, and reduced systematic uncertainties.

Figure 9 shows the detected charge associated with \(28\) MeV/c positrons and with muons stopping in the fibres. A clear separation between the positrons (minimum ionising particles, m.i.p.) and the low energy muons can be seen.

Finally, Fig. 10 shows the capability of the detector to distinguish between particle species at higher momentum (\(p=115\,\text {MeV}/\mathrm{c}\)) by plotting their measured charge versus their time of flight (the radio frequency of the main accelerator is used as the time reference). From left to right we have positrons, pions and muons.
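The time-of-flight separation follows from relativistic kinematics at fixed momentum. The sketch below computes flight times for positrons, muons and pions at 115 MeV/c over a hypothetical 10 m path and folds them modulo an assumed accelerator RF period of 19.75 ns (about 50.63 MHz); both the path length and the RF period are assumptions for illustration only.

```python
import math

C_M_PER_NS = 0.299792458  # speed of light in m/ns
MASSES_MEV = {"e+": 0.511, "mu+": 105.658, "pi+": 139.570}  # rest masses in MeV/c^2

def flight_time_ns(p_mev: float, mass_mev: float, path_m: float) -> float:
    """Flight time over path_m for momentum p (MeV/c) and mass (MeV/c^2)."""
    beta = p_mev / math.hypot(p_mev, mass_mev)  # beta = pc / E
    return path_m / (beta * C_M_PER_NS)

def rf_phase_ns(t_ns: float, rf_period_ns: float = 19.75) -> float:
    """Arrival time relative to the RF reference, i.e. modulo one (assumed) RF period."""
    return t_ns % rf_period_ns

PATH_M = 10.0  # hypothetical flight path, for illustration only
for name, mass in MASSES_MEV.items():
    t = flight_time_ns(115.0, mass, PATH_M)
    print(f"{name:4s} t = {t:6.2f} ns, RF phase = {rf_phase_ns(t):5.2f} ns")
```

Because the RF reference fixes times only modulo one period, and the particles may originate at different points along the beam line, the order of the peaks in such a plot need not follow the raw flight-time order.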

2.3.2 An ultra-thin CsI(Tl) luminophore foil beam monitor

A new in-situ, high-rate and non-destructive beam monitoring system based on a thin CsI(Tl) scintillation foil (luminophore) and a CCD camera system has been developed for MEG II. Initial tests with an external device, measuring both the beam intensity and the beam spot size quantitatively, led to a permanent installation inside the beam line vacuum at the MEG intermediate focus collimator system.

The advantages of such a system over the standard MEG pill-scintillator 2D x–y scanner are four-fold: (i) in-situ, non-destructive measurement of the beam characteristics, with no need to dismantle beam line components as with the pill-scintillator scanner; (ii) measurement in vacuum, so that no corrections for multiple Coulomb scattering in the vacuum window or air are needed; (iii) comparatively fast measurement, with multiple exposures of 10–100 s each, compared with 10 min for a pill-scintillator 2D “cross-scan” or 90 min for a 2D “raster-scan”; (iv) the possibility of continuous monitoring, allowing online re-centring if the beam is steered by changes of the proton beam position on the muon production target E.

2.3.2.1 CsI(Tl) foils and CCD camera system

CsI(Tl) is a well-known and common inorganic scintillator with a relatively high light yield of more than \({5\times 10^{4}}\) ph/MeV of deposited energy. Its peak emission at approximately 560 nm is well suited to visible-light imaging systems such as a CCD. The scintillation decay constants (\(\sim 1\) \(\upmu \)s) are rather long compared with fast organic scintillators, but this is not a problem for this application because the exposure times are much longer.

Four foils were constructed on a Lavsan (Mylar\(^{\textregistered }\) equivalent) base, onto which a thin layer of CsI(Tl) was applied by chemical vapour deposition. The CsI(Tl) layer thickness was varied between \(3.0\,\upmu \text {m}\) and \(5.2\,\upmu \text {m}\), allowing layer thicknesses to be compared and possibly optimised.

The imaging system used was a Hamamatsu ORCA FLASH4.0 camera providing 4.19 megapixels with 16 bit pixel depth [94]. An internal Peltier cooling device and an external water cooling system allow the sensor temperature to be reduced to \(-30^{\circ }\)C, significantly reducing the thermal noise. The sensor’s peak quantum efficiency matches the CsI(Tl) peak emission near 560 nm well.

2.3.2.2 Beam image analysis

Beam profile imaging consists of multi-frame exposures (typically 10 frames of 10 s each) together with an equivalent set of background exposures taken with the beam blocker closed, so that stray ambient light and the inherent thermal noise of the sensor are eliminated on subtraction.

All signal and background images are first summed and averaged and then subtracted to generate a calibrated signal image, from which a central region of interest is selected. This image is then fitted with a 2D correlated Gaussian function to obtain the beam position and the widths in x and y, as well as their correlation. The summed image intensity is normalised to the total proton current during the exposure. The proton-current scaler and the camera shutter are started simultaneously by a common external trigger. A typical image after processing is shown in Fig. 11.
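The processing chain above (average, subtract, select a region of interest, extract centroid, widths and correlation, normalise to the proton charge) can be sketched in pure Python. As a plainly named substitute for the 2D correlated Gaussian fit, this sketch uses weighted second moments, which give the same parameters for a Gaussian spot; the image layout and all names are assumptions.

```python
import math
from typing import Dict, List, Tuple

Image = List[List[float]]  # image[row][col]; rows map to y, columns to x (pixels)

def mean_image(frames: List[Image]) -> Image:
    """Pixel-wise average of equally sized frames (summed, then divided by the count)."""
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(cols)] for r in range(rows)]

def beam_moments(signal: List[Image], background: List[Image],
                 roi: Tuple[int, int, int, int], proton_charge: float) -> Dict[str, float]:
    """Centroid, widths, x-y correlation and normalised intensity of a beam spot.

    roi = (row_min, row_max, col_min, col_max) as half-open pixel ranges.
    Weighted moments stand in for the 2D correlated Gaussian fit used in the text.
    """
    sig, bkg = mean_image(signal), mean_image(background)
    r0, r1, c0, c1 = roi
    w_sum = sx = sy = sxx = syy = sxy = 0.0
    for r in range(r0, r1):
        for c in range(c0, c1):
            w = max(sig[r][c] - bkg[r][c], 0.0)  # clip negative noise after subtraction
            w_sum += w
            sx += w * c
            sy += w * r
            sxx += w * c * c
            syy += w * r * r
            sxy += w * c * r
    mx, my = sx / w_sum, sy / w_sum
    var_x, var_y = sxx / w_sum - mx ** 2, syy / w_sum - my ** 2
    cov = sxy / w_sum - mx * my
    return {"x": mx, "y": my,
            "sigma_x": math.sqrt(var_x), "sigma_y": math.sqrt(var_y),
            "rho": cov / math.sqrt(var_x * var_y),
            "intensity": w_sum / proton_charge}  # summed intensity per unit proton charge
```

For real images one would typically refine these moments with a least-squares fit (for example `scipy.optimize.curve_fit`), using them as starting values and converting pixels to millimetres with the calibration grid.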

2.3.2.3 Beam width

A comparison of the beam spots measured by the pill scintillator with those obtained from the x–y projections of the luminophore foil image is shown in Fig. 12, with good agreement in the fitted widths. The difference in the centroids is due to the different alignment of the two setups.

The spatial resolution of the luminophore foil system was determined by placing an Al grid just upstream of the foil while irradiating it with the muon beam. Fitting the grid edges in the resulting image with a step function convolved with a Gaussian resolution function yields an upper limit of \(650\,\upmu \text {m}\) on the combined foil, camera and beam resolution. Since this limit includes beam divergence and range straggling effects, the intrinsic spatial resolution of the foil is much smaller.
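A step convolved with a Gaussian is an error-function edge, so its width can be read off the 16%–84% rise distance, which equals \(2\sigma\). The sketch below uses that shortcut in place of the full fit described above; the 0.65 mm width, the sampling and the function names are illustrative assumptions.

```python
import math

def edge_profile(x: float, x0: float, sigma: float, amp: float, base: float) -> float:
    """Step at x0 convolved with a Gaussian of width sigma (an error-function edge)."""
    return base + amp * 0.5 * (1.0 + math.erf((x - x0) / (sigma * math.sqrt(2.0))))

def sigma_from_edge(xs, ys) -> float:
    """Estimate sigma as half the 15.87%-84.13% rise distance of a rising edge.

    xs must be increasing and ys must rise monotonically across the edge.
    """
    lo, hi = min(ys), max(ys)

    def crossing(frac: float) -> float:
        level = lo + frac * (hi - lo)
        for (x1, y1), (x2, y2) in zip(zip(xs, ys), zip(xs[1:], ys[1:])):
            if y1 <= level <= y2 and y2 > y1:
                return x1 + (level - y1) * (x2 - x1) / (y2 - y1)  # linear interpolation
        raise ValueError("level not crossed")

    return 0.5 * (crossing(0.8413) - crossing(0.1587))

# Synthetic edge sampled every 10 um over +/-3 mm (illustrative numbers)
xs = [i * 0.01 for i in range(-300, 301)]
ys = [edge_profile(x, 0.0, 0.65, 1000.0, 50.0) for x in xs]
print(f"estimated sigma = {sigma_from_edge(xs, ys):.3f} mm")
```

As stated above, a width obtained this way on beam data still contains divergence and straggling, so it only bounds the intrinsic resolution from above.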

2.3.2.4 Beam intensity

A beam intensity comparison between the luminophore system and the pill-scintillator system was made by symmetrically opening the \(\pi \)E5 FS41L/R slit system in small steps, thus scanning the beam intensity over an order of magnitude. The relative intensities, normalised to the proton beam intensity, are compared in Fig. 13. They agree at the 5\(\%\) level, and the residual difference can be understood from the different techniques: the pill-scintillator measurement samples only a 2 mm diameter portion of the beam on the beam axis, whereas the luminophore samples the entire beam spot, whose size changes at the 10\(\%\) level with slit opening over the entire range.

2.3.2.5 Beam line setup

The initially developed external system has since been incorporated into the beam line vacuum as part of the intermediate focus collimator system shown in Fig. 14. The foil frame is attached to a drive shaft and pulley system that allows the foil to be rotated in and out of the beam while under vacuum. A calibration grid attached to the surface of the frame allows a pixel-to-millimetre conversion. The foil and frame are viewed inside the beam pipe under vacuum and imaged with the CCD camera via a mirror system and a glass window on a side port. The interior of the vacuum pipe can be illuminated with a UV LED to perform calibration measurements of the foil and CCD system within the light-tight region.

An example of the usefulness of such a system can be seen in Fig. 15, which shows the separation quality between the muon and positron beam spots imaged at the collimator system with the luminophore foil. The separation quality has purposely been reduced by adjusting the parameters of the Wien filter so that both spots can be seen simultaneously in the image. The luminophore thus allows the spatial separation to be calibrated effectively online.

2.3.2.6 Conclusions

Thin CsI(Tl) luminophore foils offer fast, in-situ beam monitoring with negligible impact on beam rate and emittance. Combined with a cooled camera system of sufficient resolution, the foils reproduce the beam profile and rate measurements made with the scanning pill scintillator. A full 2D beam measurement can be made approximately ten times faster, while providing long-term non-destructive beam information. Furthermore, the system gives a direct measurement of the beam parameters without the need for multiple Coulomb scattering corrections for air or vacuum windows, and provides direct feedback on external influences on the beam position or intensity.

3 Target

The basic requirements for a MEG stopping target are six-fold:

-

a high muon stopping density over a limited axial region centred on the COBRA fiducial volume,

-

minimisation of multiple Coulomb scattering for the outgoing positrons,

-

minimisation of photon conversions from RMD in the target,

-

minimisation of positron AIF or bremsstrahlung with photons entering the detector acceptance,

-

allow reconstruction of the positron decay vertex on the target plane and of the initial positron direction at the vertex, and

-

be mechanically stable, with good planarity, and remotely movable for compatibility with calibrations requiring other targets.

Owing to the thinner target, the smaller target angle for MEG II and the increased \(\varDelta p_\mathrm {\mu ^+}/p_\mathrm {\mu ^+}\), the remaining variable material budget, consisting of the degrader and the COBRA helium environment, must be matched to give an optimal residual range at the target. Figure 16 shows the simulated stopping efficiency versus degrader thickness for the previous MEG \(205\,\upmu \text {m}\) thick polyethylene target. Two different He concentrations are shown, from which it can be seen that 1% of air is equivalent to \(\sim 10\,\upmu \text {m}\) of Mylar.

For MEG II a separate target study was undertaken to examine the possible materials for a target equivalent to the baseline \(140\,\upmu \text {m}\) polyethylene (CH\(_2\)) target placed at 15.0\(^{\circ }\) to the axis. The resulting candidate targets are listed in Table 1. Since all the targets have the same areal density (in g \(\hbox {cm}^{-2}\)), the residual range, and hence the degrader thickness, is also the same for all of them.
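Matching areal densities is a one-line calculation. The sketch below converts the 140 μm polyethylene reference into equivalent thicknesses of other candidate materials and into the material traversed along the beam, assuming the 15° is the angle between the foil plane and the beam axis. The densities are typical handbook values assumed for illustration, not the values used in Table 1.

```python
import math

# Assumed nominal densities in g/cm^3 (typical handbook values, for illustration)
DENSITY = {"polyethylene": 0.94, "Mylar": 1.39, "beryllium": 1.848,
           "PVT scintillator": 1.023, "diamond": 3.5}

def areal_density_mg_cm2(thickness_um: float, density_g_cm3: float) -> float:
    """Areal density in mg/cm^2 of a foil of given thickness (um) and density (g/cm^3)."""
    return thickness_um * 1e-4 * density_g_cm3 * 1e3

def equivalent_thickness_um(ref_um: float, ref_density: float, density: float) -> float:
    """Thickness giving the same areal density as the reference foil."""
    return ref_um * ref_density / density

def thickness_along_beam_um(thickness_um: float, angle_deg: float) -> float:
    """Material traversed along the beam axis by a foil inclined at angle_deg to it."""
    return thickness_um / math.sin(math.radians(angle_deg))

ref = DENSITY["polyethylene"]
print(f"140 um PE: {areal_density_mg_cm2(140.0, ref):.1f} mg/cm^2, "
      f"{thickness_along_beam_um(140.0, 15.0):.0f} um along the beam at 15 deg")
for name, rho in DENSITY.items():
    print(f"{name:17s} {equivalent_thickness_um(140.0, ref, rho):6.1f} um")
```

The inclined geometry is what makes a thin foil act as a much thicker stopping target along the beam while staying thin for positrons emitted at large angles.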

The main properties affecting tracking and background production, as well as the target stopping efficiency, show no dramatic differences between the candidates: the multiple Coulomb scattering estimates vary by less than 10% from the average, while the equivalent thickness in radiation lengths varies by about 15% from the average. A separate background study estimated the number of background photons with energy \(E_{\mathrm {\gamma }}> 48\,\text {MeV}\) produced in the fiducial volume of COBRA per incident muon and entering the LXe photon detector, giving values ranging from \({(1.14\pm 0.05)\times 10^{-6}}\,{\gamma /\mu ^{+}}\) for the scintillation target to \({(1.22\pm 0.05)\times 10^{-6}}\,{\gamma /\mu ^{+}}\) for the Mylar target. The corresponding simulated optimised stopping efficiency for the MEG II polyethylene target is shown in Fig. 17.

Table 1 shows that different materials perform best in different categories. In general, the beryllium target shows a good overall performance, but it is not favoured because of the thickness and size required, as well as for safety reasons. Diamond, which is mechanically stable and known to be more radiation tolerant, has the smallest radiation length and also has scintillation properties; however, it is currently not commercially available in the size required for a MEG II target. The scintillation target (BC400B) from Saint-Gobain lies in the mid-range of the performance span, though it gives the lowest number of accepted background photons per muon of all targets. A very important additional advantage over the non-scintillating targets is the possibility of non-destructive beam intensity and profile measurements using a CCD camera and optical system. This would allow the beam centring on the MEG muon target to be corrected during data-taking for shifts of the proton beam on the main pion production target. Two prototype targets, one of polyethylene (PE) and one of polyvinyltoluene (PVT), have so far been implemented for the 2015/2016 Pre-Engineering Runs. The prototype scintillation target (PVT) is shown in Fig. 18.

3.1 Scintillation target prototype

Fig. 18 (Left) The two sides of the prototype PVT target used during the 2016 Pre-Engineering Run; the calibration grid is used for the perspective transformation, and the carbon-fibre/Rohacell® foam frame can be seen from the other side. (Right) The CCD setup and Mylar mirror at the downstream side (DS) of the COBRA magnet, \(\sim \) 2.1 m DS of the target.

Figure 18 shows the two sides of the prototype target used in the 2016 Pre-Engineering Run. The downstream side, viewed by the CCD, has a calibration grid as part of the frame to ensure a correct perspective transformation of the beam image. The frame is a sandwich of carbon fibre and Rohacell foam, giving a lightweight yet strong construction, as can be seen from the lower image in Fig. 18 (left). The fiducial size of the scintillator, excluding the frame, is 260 \(\times \) 70 \(\hbox {mm}^{2}\).
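The perspective correction mentioned above can be illustrated with a standard four-point homography (direct linear transform with the last matrix element fixed to 1), mapping image pixels of the calibration grid to known target-plane coordinates. This is a generic sketch, not the analysis code used for the target images; with more grid points one would use a least-squares estimate, for example OpenCV's `findHomography`.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def homography(img: Sequence[Point], world: Sequence[Point]) -> List[float]:
    """3x3 perspective transform (row-major, h33 = 1) from 4 image points to 4 grid points."""
    a: List[List[float]] = []
    b: List[float] = []
    for (u, v), (x, y) in zip(img, world):
        a.append([u, v, 1.0, 0.0, 0.0, 0.0, -u * x, -v * x])
        b.append(x)
        a.append([0.0, 0.0, 0.0, u, v, 1.0, -u * y, -v * y])
        b.append(y)
    return solve_linear(a, b) + [1.0]

def map_point(h: Sequence[float], u: float, v: float) -> Point:
    """Map an image pixel (u, v) to calibrated target-plane coordinates."""
    w = h[6] * u + h[7] * v + h[8]
    return ((h[0] * u + h[1] * v + h[2]) / w, (h[3] * u + h[4] * v + h[5]) / w)
```

Once the transform is known from the grid corners, every pixel of the beam image can be mapped to millimetres on the target plane before the 2D Gaussian fit.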

The bare setup, consisting of a CCD camera, lens and thin Mylar mirror system placed \({\sim }2.1\) m from the target on the downstream side (DS) of the COBRA magnet, is shown in Fig. 18 (right). Beam images that were background-subtracted, perspective-corrected and fitted with a 2D Gaussian (see Fig. 19) show that, even with a non-ideal (uncooled) CCD camera and exposures of 100 s in the strong gradient field of the COBRA magnet, results comparable at the sub-millimetre level to the usual 2D APD “raster scans” performed at the centre of COBRA can be obtained in a fraction of the time. Furthermore, it was demonstrated that the beam intensity could be measured over a dynamic range of a factor of 50, reproducing the results measured independently with the “pill scintillator” scanner system, as shown in Fig. 20. The intensity was varied by adjusting the opening of the FS41L/R momentum slits of the channel, and good agreement is seen.

Finally, a first radiation damage study was undertaken during the 2016 run with about \(5.5\times 10^{13}\,\upmu ^{+}\) integrated, corresponding to an integrated dose of \(\sim 30\) kGy (3 Mrad). A loss in light yield was seen, though smaller than expected [95]; this may be understood from the way in which the scintillation light is collected, namely through the very thin scintillator, which makes it less sensitive to attenuation. A fit to the data with an exponential decay law gives a decay constant of \(D=(2.793\pm 0.041)\times 10^{14}\,\upmu ^{+}\), as shown in Fig. 21. Extrapolating this to the longest MEG beam run (2012) at the MEG II beam intensity measured above would reduce the light yield to \({\sim 14}\)% of its initial value by the end of a 1-year period, while still yielding measurable profiles and intensities as demonstrated above. However, normalising UV-LED measurements would be required for a corrected intensity measurement, and a new target would be needed each year. Further radiation tests are envisaged to study the effect on mechanical properties such as planarity before a final decision on the target material is taken. A new CCD camera system for imaging the beam on target has now been procured, including cooling and a mechanical shutter, which should significantly improve the image quality and the analysis procedure.
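The exponential damage model can be inverted to find the integrated number of stopped muons after which the light yield falls to a given fraction. The sketch assumes \(L/L_0 = \exp(-N/D)\) with the central value of \(D\) quoted above; the 7×10⁷ μ⁺/s rate used to convert to beam days is the MEG II design stopping rate and is only illustrative.

```python
import math

D_MU = 2.793e14  # fitted decay constant in stopped mu+ (central value quoted above)

def relative_light_yield(n_mu: float, d_mu: float = D_MU) -> float:
    """Light yield relative to the undamaged target, L/L0 = exp(-N/D)."""
    return math.exp(-n_mu / d_mu)

def muons_for_yield(fraction: float, d_mu: float = D_MU) -> float:
    """Integrated number of stopped muons after which L/L0 has fallen to `fraction`."""
    return -d_mu * math.log(fraction)

def beam_days(n_mu: float, rate_per_s: float) -> float:
    """Beam-on time in days needed to integrate n_mu muons at a constant rate."""
    return n_mu / rate_per_s / 86400.0

n14 = muons_for_yield(0.14)
print(f"L/L0 = 0.14 after {n14:.2e} mu+ ({beam_days(n14, 7e7):.0f} beam days at 7e7 mu+/s)")
print(f"after the 2016 exposure (5.5e13 mu+): L/L0 = {relative_light_yield(5.5e13):.2f}")
```

Under this model a residual yield of ~14% corresponds to about 5.5×10¹⁴ stopped muons.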

3.1.1 Target alignment

An important consideration for the target implementation is accurate knowledge of the target position, in particular of the target planarity and of its perpendicular distance from its nominal position. Errors in this coordinate introduce a systematic error in the positron direction at the target, due to the resulting error in the path length of the curved positron trajectory projected onto the target plane. An offset of the target plane by 1 mm introduces a systematic error of 7–12 mrad in the positron \(\phi \)-angle, comparable to the \(\phi \) angular resolution achieved by MEG [2]. In MEG, this position was monitored by imaging small holes in the target foil. This method was limited by statistics in its ability to track deformations of the target foil during the run, and the lack of precise target position and shape information introduced a significant contribution to the systematic uncertainties in the positron angle measurement. With the anticipated improvement in angular resolution in MEG II, better monitoring of the target position and shape is required, with the goal of monitoring the target planarity and transverse position to a precision \({<50}\,{\upmu \hbox {m}}\) and the axial position to a precision \({< 100}\,{\upmu \hbox {m}}\).

As in MEG, it is envisaged to implement both an optical survey to determine the target position, orientation and shape, and the software alignment method introduced above. The perpendicular distance of the target plane from the origin is determined by imaging the y-positions of a number of holes, since there is a deficit of trajectories originating from the hole positions. Any error in the perpendicular distance of the target from its nominal position makes the hole images vary systematically with \(\phi _{\mathrm {e}^{+}}\) (see [1] for a full description of this technique). An example of a reconstructed vertex plot of the target, from the 2011 run data, is shown in Fig. 22. As in MEG, this technique will be statistics limited and will not allow continuous monitoring of the target position and planarity.

A number of further improvements to the target and its optical imagery are planned and under study:

-

a distortion-free/distortion minimising target suspension system allowing minimal impact of the target frame on the target foil;

-

further investigations to understand the origin of the previous MEG target distortion (e.g. radiation damage, brittleness due to dry He-environment);

-

measurement of the target planarity both before and after exposure using a coordinate measuring machine with a precision better than 50 \({\upmu \hbox {m}}\);

-

determination of the target frame position in the experiment to a precision of \({{\sim }15}\,{\upmu \hbox {m}}\) using a laser survey technique with low-mass corner-cube reflectors mounted on the target frame;

-

photogrammetric monitoring of the target position, orientation and shape: a series of printed patterns (dots) is optically monitored by CCD cameras viewing the target from a direction close to the axis. Preliminary studies show that a precision of \({{\sim }10}\,{\upmu \hbox {m}}\) in the transverse coordinates (x–y) and \({{\sim }100}\,{\upmu \hbox {m}}\) in the axial coordinate can be achieved. The current scintillator target with its printed pattern is shown in Fig. 23.

4 Cylindrical drift chamber

4.1 Cylindrical drift chamber overview

The MEG II Cylindrical Drift Chamber (CDCH) is a single-volume detector whose design was optimised to satisfy the fundamental requirements of high transparency and low multiple Coulomb scattering contribution for 50 MeV positrons, sustainable occupancy (at \({\sim } 7\times 10^{7}\,\upmu ^{+}\)/s stopped on target) and fast electronics for cluster timing capabilities [96]. Although the acceptance of the MEG II apparatus is dictated by the C-shaped LXe photon detector (see Sect. 6), CDCH has full azimuthal coverage (\(2\pi \) in \(\phi \)) to avoid non-homogeneous and asymmetric electric fields.

The mechanical structure, shown in Fig. 24, consists of a 1.91 m long cylinder with an inner radius of 17 cm and an outer radius of 29 cm. It is composed of 10 concentric layers (see Fig. 25), each azimuthally divided into 12 identical 30\(^{\circ }\) sectors, 16 drift cells wide. Each drift cell layer consists of two criss-crossing planes of field wires enclosing a plane of sense wires. The stereo angles alternate in sign between contiguous layers (ranging approximately from 6.0\(^{\circ }\) to 8.5\(^{\circ }\) with increasing radius), allowing a precise reconstruction of the longitudinal z coordinate.

The double-ended readout of the wires, using the charge division and propagation time difference techniques, together with the ability to implement the cluster counting/timing technique [96], will further improve the measurement of the longitudinal coordinate.
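For an idealised wire, both double-readout estimators reduce to simple formulas. The Python sketch below assumes the two ends A and B at z = -L/2 and z = +L/2, a known signal propagation speed, a purely resistive wire (ideal charge division, negligible amplifier input impedance) and independent Gaussian errors for the combination; all numerical inputs are hypothetical, not CDCH parameters.

```python
def z_from_time_difference(t_a_ns: float, t_b_ns: float, v_mm_per_ns: float) -> float:
    """z (mm, 0 at wire centre) from arrival times at end A (z = -L/2) and end B (z = +L/2).

    t_a - t_b = 2 z / v, independent of the unknown hit time.
    """
    return 0.5 * v_mm_per_ns * (t_a_ns - t_b_ns)

def z_from_charge_division(q_a: float, q_b: float, length_mm: float) -> float:
    """Ideal charge division: each end collects charge inversely to its wire resistance."""
    return 0.5 * length_mm * (q_b - q_a) / (q_a + q_b)

def combine(z1: float, s1: float, z2: float, s2: float):
    """Inverse-variance weighted mean of two independent z estimates and its error."""
    w1, w2 = 1.0 / s1 ** 2, 1.0 / s2 ** 2
    return (w1 * z1 + w2 * z2) / (w1 + w2), (w1 + w2) ** -0.5

# Hypothetical hit at z = 300 mm on a 2 m wire, signal speed 200 mm/ns, hit time 5 ns
print(z_from_time_difference(11.5, 8.5, 200.0), z_from_charge_division(700.0, 1300.0, 2000.0))
```

The time-difference estimate does not depend on the unknown hit time, because the common offset cancels in \(t_A - t_B\).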

The stereo configuration of the wires gives the active volume a hyperbolic profile along the z-axis. The single drift cell (see Fig. 25) is approximately square, from 6.6 mm (innermost layer) to 9.0 mm (outermost layer) wide, with a \(20\,\upmu \text {m}\) diameter gold-plated W sense wire surrounded by \(40\,\upmu \text {m}\) diameter silver-plated Al field wires in a 5:1 field-to-sense ratio. To equalise the gains of the innermost and outermost layers, two layers of guard wires (\(50\,\upmu \text {m}\) silver-plated Al) have been added at appropriate radii and voltages. The total number of wires amounts to 13 056, for an equivalent radiation length per track turn of about \(1.58\times 10^{-3}\) X\(_{0}\) when the chamber is filled with an ultra-low-mass gas mixture of helium and isobutane (C\(_4\)H\(_{10}\)) in the ratio 90:10 (compared with \(2.0\times 10^{-3}\) X\(_{0}\) in the MEG DCH [1]). In each of the twelve sectors, between the spokes of the helm-shaped end-plate (see Fig. 26), the drift chamber is built by stacking along the radius, alternately, PC boards (PCBs), to which the ends of the wires are soldered, and PEEK® spacers, which set the proper cell width. A carbon fibre support structure guarantees the proper wire tension and encloses the gas volume. At the innermost radius, an aluminised Mylar foil separates the drift chamber gas volume from the helium-filled target region.
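The hyperbolic profile follows from simple geometry: a straight wire anchored at radius \(R\) on both end-plates and inclined by a stereo angle \(\varepsilon\) reaches its minimum radius \(r_0\) at mid-length, with \(r(z)^2 = r_0^2 + (z\tan\varepsilon)^2\). The sketch below evaluates this for a single hypothetical layer (radius, half-length and angle loosely based on the numbers above, not the actual layer parameters).

```python
import math

def stereo_wire_radius(z: float, r_end: float, half_length: float, stereo_deg: float) -> float:
    """Radial distance from the chamber axis of a straight stereo wire at position z.

    The wire is anchored at radius r_end on both end-plates (z = +/- half_length) and
    inclined by stereo_deg; r(z)^2 = r0^2 + (z tan e)^2 with r0 the mid-length radius.
    """
    t = math.tan(math.radians(stereo_deg))
    r0_sq = r_end ** 2 - (half_length * t) ** 2
    if r0_sq <= 0.0:
        raise ValueError("stereo angle too large for this radius and length")
    return math.sqrt(r0_sq + (z * t) ** 2)

def stereo_sag(r_end: float, half_length: float, stereo_deg: float) -> float:
    """Radial inward displacement of the wire at mid-length (z = 0)."""
    return r_end - stereo_wire_radius(0.0, r_end, half_length, stereo_deg)

# Hypothetical outer layer: anchored at 290 mm, half-length 955 mm, 8.5 deg stereo angle
print(f"mid-length radial sag: {stereo_sag(290.0, 955.0, 8.5):.1f} mm")
```

Opposite-sign stereo angles in neighbouring layers give crossing wire directions, which is what makes the z coordinate measurable.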

Prototypes have been built [97] to demonstrate that the design single-hit resolution of the chamber (\(\sigma _r\simeq 110\,\upmu \text {m}\)) can be reached and that the detector can be operated in the high particle flux environment of MEG II without significant ageing, as detailed in Sect. 4.7.

4.2 The choice of the filling gas

CDCH uses a helium based gas mixture. The choice of helium is very advantageous, because of its large radiation length (\(\hbox {X}_0 {\sim } 5300\,\hbox {m}\) at STP), which ensures a small contribution in terms of multiple Coulomb scattering, a very important feature in low momentum measurements.

A small amount (\(10\%\)) of isobutane is required as a quencher to avoid self-sustained discharge. Such a percentage is sufficient as it raises the number of primary ionisation pairs to \({\sim }\) 13 \(\hbox {cm}^{-1}\) [98] though lowers the mixture radiation length to \(\hbox {X}_0 {\sim } 1300\,\hbox {m}\). Unfortunately, the use of an organic quencher also results in additional problems after exposure to high radiation fluxes. The recombination of dissociated organic molecules results in the formation of solid or liquid polymers which accumulate on the anodes and cathodes, contributing to the ageing of the chamber.
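The quoted radiation lengths can be checked with the standard mass-weighted rule \(1/X_0 = \sum_i w_i/X_{0,i}\). The sketch below assumes ideal-gas behaviour at 0 °C and 1 atm and approximate tabulated radiation lengths (in g cm⁻²) for helium and butane; these inputs are assumptions, not values from the CDCH design.

```python
from typing import Dict

MOLAR_VOLUME_STP_CM3 = 22414.0  # ideal-gas molar volume at 0 degC and 1 atm, cm^3/mol

# (molar mass in g/mol, radiation length in g/cm^2): approximate tabulated values, assumed
GASES = {"He": (4.0026, 94.32), "iC4H10": (58.12, 45.23)}

def mixture_radiation_length_m(volume_fractions: Dict[str, float]) -> float:
    """Radiation length (m) of an ideal-gas mixture at STP.

    Volume fractions equal mole fractions for ideal gases; they are converted to mass
    fractions w_i, combined as 1/X0 = sum_i w_i / X0_i (in g/cm^2) and divided by the
    mixture density.
    """
    masses = {gas: frac * GASES[gas][0] for gas, frac in volume_fractions.items()}
    total_mass = sum(masses.values())  # grams per mole of mixture
    inv_x0 = sum((m / total_mass) / GASES[gas][1] for gas, m in masses.items())
    density = total_mass / MOLAR_VOLUME_STP_CM3  # g/cm^3
    return (1.0 / inv_x0) / density / 100.0  # cm -> m

print(f"He:              {mixture_radiation_length_m({'He': 1.0}):.0f} m")
print(f"He/iC4H10 90:10: {mixture_radiation_length_m({'He': 0.9, 'iC4H10': 0.1}):.0f} m")
```

With these inputs the estimate comes out at roughly 5300 m for pure helium and roughly 1300 m for the 90:10 mixture, in line with the values quoted above: the 10% isobutane carries most of the mass.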

The fairly constant drift velocity in helium-based gas mixtures ensures a linear time-distance relation up to very small distances from the sense wire. On the other hand, the high ionisation potential of helium (24.6 eV) means that a crossing particle produces only a small number of primary electron-ion pairs in a helium-based gas mixture. Combined with the small size of the drift cells, this increases the contribution of statistical fluctuations of the primary ionisation along the track to the spatial resolution, if only the first arriving electrons are timed. An improvement can be obtained with the cluster timing technique, i.e. by timing all arriving ionisation clusters and thus reconstructing their distribution along the ionisation track [96].
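The effect of primary-ionisation statistics can be illustrated with a toy Monte Carlo. The sketch assumes a straight track, Poisson-distributed primary clusters at about 13 cm⁻¹ (the value quoted above), no diffusion and an arbitrary 8 mm track segment; it shows that timing only the closest cluster systematically overestimates the impact parameter, most strongly near the wire, which is the bias the cluster timing technique aims to reduce.

```python
import math
import random

def nearest_cluster_distance(impact_mm: float, half_length_mm: float,
                             clusters_per_cm: float, rng: random.Random) -> float:
    """Distance to the wire of the closest primary cluster along a straight track.

    Clusters form a Poisson process along the track (exponential gaps); a point at
    track coordinate s lies at sqrt(b^2 + s^2) from the wire for impact parameter b.
    Returns math.inf if no cluster is produced within the segment.
    """
    lam = clusters_per_cm / 10.0  # clusters per mm
    s = -half_length_mm
    best = math.inf
    while True:
        s += rng.expovariate(lam)  # exponential gap to the next cluster
        if s > half_length_mm:
            return best
        best = min(best, math.hypot(impact_mm, s))

rng = random.Random(1)
for b in (0.5, 1.0, 2.0, 3.0):
    d = [nearest_cluster_distance(b, 4.0, 13.0, rng) for _ in range(10000)]
    d = [x for x in d if math.isfinite(x)]
    print(f"b = {b} mm: mean first-cluster bias = {sum(d) / len(d) - b:.3f} mm")
```

A full cluster-timing reconstruction would instead use all cluster arrival times to fit the track position, rather than the closest one alone.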

4.3 Electronics

In order to allow the detection of single ionisation clusters, the electronic read-out interface has to process high-speed signals. For this purpose, a dedicated high-performance 8-channel front-end electronics (FE) has been designed using commercial devices such as fast operational amplifiers. The FE was designed to provide a gain sufficient to produce a suitable read-out signal for further processing, low power consumption, a bandwidth adequate to the expected signal spectral density and a fast pulse rise time, in order to exploit the cluster timing technique [99, 100].

The FE single-channel schematic is shown in Fig. 27. The input network provides decoupling and protection, while signal amplification is realised with a double gain stage made of an ADA4927 and a THS4509. The Analog Devices op-amp ADA4927 [101] works as the first gain stage: it is a low-noise, ultra-low-distortion, high-speed, current feedback differential amplifier. The current feedback architecture provides a loop gain that is nearly independent of the closed-loop gain, achieving wide bandwidth, low distortion, low noise (input voltage noise of only 1.3 nV/\(\sqrt{\hbox {Hz}}\) at high gains) and lower power consumption than comparable voltage feedback amplifiers. The THS4509 [102] by Texas Instruments is used as the second gain stage and output driver. It is a wide-band, fully differential operational amplifier with very low noise (1.9 nV/\(\sqrt{\hbox {Hz}}\)) and extremely low harmonic distortion of \(-75\) dBc \(\mathrm {HD}_2\) and \(-80\) dBc \(\mathrm {HD}_3\) at 100 MHz. Its slew rate is 6600 V \(\upmu \hbox {s}^{-1}\), with a settling time of 2 ns to 1% for a 2 V step, making it well suited to pulsed applications. The output of the FE is differential, to improve noise immunity, and is connected to the WaveDREAM Board [103] through a 5 m long custom cable designed to have a stable, flat frequency response (Amphenol Spectra Strip SkewClear [104]). This cable consists of individually shielded parallel pairs with an overall ground jacket, and has a maximum attenuation of 0.75 dB \(\hbox {m}^{-1}\) at 625 MHz.

To compensate for the attenuation of the output cable, pre-emphasis has been implemented on both gain stages. The pre-emphasis introduces a high-frequency peak that compensates the output cable losses, resulting in a total bandwidth of nearly 1 GHz.
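For scale, the worst-case cable loss can be converted into an amplitude factor. The sketch below simply applies the maximum attenuation quoted at 625 MHz to the full 5 m length; the real loss is frequency dependent, so this only indicates the order of the boost the pre-emphasis must provide near that frequency.

```python
def cable_loss_db(length_m: float, atten_db_per_m: float) -> float:
    """Total attenuation in dB for a cable of given length, at a fixed frequency."""
    return length_m * atten_db_per_m

def amplitude_ratio(loss_db: float) -> float:
    """Output/input voltage ratio for a loss in dB: 10**(-dB/20)."""
    return 10.0 ** (-loss_db / 20.0)

loss = cable_loss_db(5.0, 0.75)  # worst-case attenuation quoted at 625 MHz
ratio = amplitude_ratio(loss)
print(f"{loss:.2f} dB -> amplitude x{ratio:.2f}; compensating boost ~x{1.0 / ratio:.2f}")
```

The total of 3.75 dB reduces the signal amplitude at that frequency to about 65%.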

The FE boards are placed in each sector of CDCH; Fig. 28 shows the mechanical scheme of the end-plate into which the boards are inserted. Because of the size of the FE output connector socket and the limited space available between the layers, three board versions have been designed, with the output connector on the right, in the centre and on the left, respectively.

The pre-amplified differential signals are subsequently digitised by the WaveDREAM board at a programmable speed of up to 2 GSPS (giga-samples per second) with an analogue bandwidth of 1 GHz [103].

The current consumption of each channel is 60 mA at a supply voltage of \(\pm \,2.5\) V; this corresponds to a total power dissipation of about 300 W per end-plate, so an appropriate cooling system, relying both on recirculation of a coolant fluid and on forced air, is foreseen.

4.4 The wiring procedure

A wiring robot [105] has been designed and assembled in the clean room (see Fig. 29). It automatically stretches the wires onto PCB frames while keeping the wire tension and pitch under control; moreover, the system fixes the wires on the PCBs by contact-less soldering. Since CDCH has a high wire density (12 wires/\(\hbox {cm}^{2}\)), the classical feed-through wire anchoring technique is hard to implement, so a new wiring strategy had to be developed.

The wiring robot has been designed with the following goals:

-

managing a very large number of densely spaced wires,

-

applying the wire mechanical tension and maintaining it constant and uniform throughout all the winding process,

-

monitoring the wire positions and their alignment to within a few tens of \(\upmu \hbox {m}\),

-

fixing the wires on the PCB with a contact-less soldering system and

-

monitoring the solder quality of the wires to the supporting PCBs.

These requirements are satisfied by the following three systems:

-

1.

A wiring system that uses a semi-automatic machine to stretch the multi-wire layer simultaneously, with a high degree of control on the wire mechanical tension (better than 0.2 g) and on the wire position (of the order of \(20\,\upmu \text {m}\)).

-

2.

A soldering system composed of an infrared (IR) laser soldering unit and a tin feeder.

-

3.

An automatic handling system which extracts the multi-wire layers from the wiring system and places them in a storage/transport frame.

Dedicated LabVIEW® software [105], running on a CompactRIO platform [106], controls the three systems simultaneously, sequencing and synchronising all the different operations.

4.4.1 Wiring system

The purpose of the wiring system is to wind a multi-wire layer consisting of 32 parallel wires at any stereo angle. To produce a multi-wire layer (see Fig. 30), two PCBs, aligned and oriented at the proper stereo angle, are placed back-to-back on the winding cylinder. The multi-wire layer is obtained in a single operation by winding the same wire 32 times around the cylinder along a helical path, with a pitch corresponding to the wire spacing on the PCBs. The correct pitch is achieved by a system of synchronised stepping motors, driven through the CompactRIO system and checked by a digital camera with a position accuracy of the order of \(20\,\upmu \text {m}\). The wire mechanical tension is monitored by a high-precision strain gauge and corrected by a real-time feedback system acting on the electromagnetic brake of the wire spool.

Because of mechanical tolerances, the wire tension varies by about \(8\%\) without the feedback system. The feedback system reduces these variations to about \(1\%\), achieving the values listed in Table 2 (see Fig. 31).
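A toy model shows how real-time feedback on the brake can suppress slow, tolerance-driven tension variations. It is a sketch under stated assumptions, not the actual controller:

- the disturbance is a sinusoid with an \(8\%\) amplitude;
- the strain gauge is noise-free;
- the brake correction is updated by a simple integral controller, whose gain and loop period are hypothetical.

```python
import math


def simulate_tension(nominal=1.0, disturbance=0.08, period=200,
                     gain=0.5, steps=1000, feedback=True):
    """Return the maximum relative tension deviation after settling.

    Toy model (assumption): mechanical tolerances add a periodic
    disturbance of relative amplitude `disturbance`; the brake correction
    `u` is updated by an integral controller driven by the strain-gauge
    error measured at the previous sample.
    """
    u = 0.0            # brake correction, in units of tension
    prev_error = 0.0   # nominal minus measured tension at previous sample
    worst = 0.0
    for n in range(steps):
        if feedback:
            u += gain * prev_error  # integral action on the brake
        d = disturbance * nominal * math.sin(2.0 * math.pi * n / period)
        tension = nominal + d + u   # value read by the strain gauge
        prev_error = nominal - tension
        if n >= steps // 2:         # skip the start-up transient
            worst = max(worst, abs(tension - nominal) / nominal)
    return worst


print("without feedback:", round(simulate_tension(feedback=False), 4))
print("with feedback:   ", round(simulate_tension(feedback=True), 4))
```

With these hypothetical settings, the maximum deviation drops from 8% to roughly 0.5%. The residual depends on the assumed gain and on how fast the disturbance varies compared with the loop rate, so it is not a prediction of the 1% quoted above.
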

4.4.2 Soldering system

The soldering is performed by an IR laser soldering system (LASCON Hybrid with a solder wire feeder [107]). Each wire is soldered at both ends while it is still held on the winding cylinder under its own tension. The laser system is controlled by the CompactRIO and synchronised with the positioning system through pattern-matching software that locates the soldering pads. All the soldering parameters (temperature, soldering time, solder wire length and feeding speed) are defined in a dedicated script.
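Locating a soldering pad by pattern matching can be sketched with zero-mean normalised cross-correlation (NCC), a standard template-matching score. This is a generic illustration that assumes a grey-scale image stored as nested lists. It is not the pattern-matching algorithm of the actual vision software, and the function name `locate_pad` is hypothetical.

```python
import math


def locate_pad(image, template):
    """Return ((row, col), score) of the best template match in `image`.

    Uses zero-mean normalised cross-correlation; both arguments are
    rectangular lists of lists of pixel intensities.
    """
    th, tw = len(template), len(template[0])
    t_vals = [v for row in template for v in row]
    t_mean = sum(t_vals) / len(t_vals)
    t_dev = [v - t_mean for v in t_vals]
    t_norm = math.sqrt(sum(v * v for v in t_dev))
    best_score, best_pos = -2.0, None
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            w_vals = [image[r + i][c + j] for i in range(th) for j in range(tw)]
            w_mean = sum(w_vals) / len(w_vals)
            w_dev = [v - w_mean for v in w_vals]
            w_norm = math.sqrt(sum(v * v for v in w_dev))
            if w_norm == 0.0 or t_norm == 0.0:
                continue  # flat patch: correlation is undefined
            score = sum(a * b for a, b in zip(t_dev, w_dev)) / (t_norm * w_norm)
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score


# Usage: a synthetic 10 x 12 image containing the pad pattern at row 3, column 4.
pad = [[1, 2], [3, 4]]
image = [[0] * 12 for _ in range(10)]
for i, row in enumerate(pad):
    for j, v in enumerate(row):
        image[3 + i][4 + j] = v
print(locate_pad(image, pad))
```

NCC is insensitive to uniform changes in brightness and contrast. That insensitivity is the usual reason it is preferred over a plain pixel difference when lighting varies.
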

4.4.3 Automatic handling system

For storage and transport, the layer of soldered wires wound around the cylinder is unrolled and detensioned by an automatic device. The first wire PCB is lifted off the cylinder surface by a linear actuator connected to a set of vacuum-operated suction cups and placed on the storage and transport frame. The layer is then unrolled by synchronising the cylinder rotation with the linear displacement of the frame. Once the layer is completely unrolled, the second wire PCB is lifted off the cylinder in the same way and placed on the frame. The frame hosts two polycarbonate supports that hold the wire PCBs in the correct position by means of nylon screws. One of the two supports can slide along the frame, driven by a longitudinal threaded rod, to adjust the wire length. The wiring information for each frame is stored in a database. The wires on the frame are then examined, stored and prepared for transport to the CDCH assembly station.
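Synchronising the cylinder rotation with the frame displacement amounts to matching the arc length unrolled from the cylinder, \(\Delta x = R\,\Delta \phi \) (equivalently \(v = \omega R\)), so that the wires are neither stretched nor slackened. The following is a minimal sketch; the 300 mm cylinder radius and 60 rpm speed in the usage lines are hypothetical values.

```python
import math


def frame_displacement_mm(radius_mm, angle_deg):
    """Frame displacement matching a cylinder rotation: arc length R * dphi."""
    return radius_mm * math.radians(angle_deg)


def frame_speed_mm_s(radius_mm, rpm):
    """Frame speed v = omega * R for a cylinder turning at `rpm`."""
    omega = 2.0 * math.pi * rpm / 60.0  # angular speed in rad/s
    return omega * radius_mm


# Usage with a hypothetical 300 mm cylinder radius.
print(frame_displacement_mm(300.0, 90.0))  # quarter turn
print(frame_speed_mm_s(300.0, 60.0))
```
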

4.5 The assembling procedure

The assembly of the drift chamber is as critical as the wiring phase and has to be performed under very carefully controlled conditions [108]. To reach the required accuracy on the drift chamber geometry and to avoid over-tensioning the wires, the positions of the end-plates must be measured to better than \(100\,\upmu \text {m}\). For example, an error of 1\(^{\circ }\) on the twist angle can correspond to an extra wire elongation of about 1 mm. Accurate position measurements over the whole chamber length of \({\sim }\) 2 m are therefore essential. For this reason, the assembly is performed on a coordinate measuring machine, a DEA Ghibli [109], with a maximum travel of 2500 mm \( \times \) 1500 mm \( \times \) 1000 mm and a nominal accuracy of \(5\,\upmu \text {m}\) with a contact measuring tool. The positions of the PCBs are measured with an optical tool that identifies the cross marks placed on the PCBs. The accuracy of the optical measurement is \({\sim } 20\,\upmu \text {m}\) in the horizontal plane. Along the vertical axis, where the focal distance of the optics is exploited, it is \({\sim } 40\,\upmu \text {m}\).
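The sensitivity of the wire length to the twist angle can be checked with simple geometry. Consider a wire joining two points at radius \(r\) on end-plates a distance \(L\) apart, rotated by a twist \(\Delta \phi \). Its length is \(\ell = \sqrt{L^2 + 2r^2(1-\cos \Delta \phi )}\). The sketch below uses \(L = 1.912\) m from the text, together with a hypothetical radius of 0.30 m and a hypothetical twist of \(60^{\circ }\).

```python
import math


def wire_length(length_m, radius_m, twist_rad):
    """Straight-line length of a wire between two end-plate points.

    The points sit at radius `radius_m` on end-plates `length_m` apart,
    rotated by `twist_rad` with respect to each other.
    """
    chord_sq = 2.0 * radius_m ** 2 * (1.0 - math.cos(twist_rad))
    return math.sqrt(length_m ** 2 + chord_sq)


def elongation_from_twist_error(length_m, radius_m, twist_rad, error_rad):
    """Extra wire length caused by a twist-angle error."""
    return (wire_length(length_m, radius_m, twist_rad + error_rad)
            - wire_length(length_m, radius_m, twist_rad))


# L from the text; radius and twist are hypothetical.
extra = elongation_from_twist_error(1.912, 0.30, math.radians(60.0),
                                    math.radians(1.0))
print(f"extra elongation: {extra * 1e3:.2f} mm")
```

For these assumed values, a \(1^{\circ }\) twist error lengthens the wire by roughly 0.7 mm. This is the same order of magnitude as the figure quoted above.
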

The first test on the wire trays is a quick measurement of the elongation-tension curve near the working point. In this test, the wire elongation is measured with the optical tool of the measuring machine, and the wire tension is measured with both an acoustic and an electrical method. In the acoustic method, an acoustic source excites the oscillation of the wires while an HV difference is applied between two adjacent wires. The readout circuit then detects a periodic signal at a frequency close to the wire resonance. This system can measure up to 16 wires simultaneously. In the electrical method, the wire oscillation is driven by an HV signal at a known frequency. During a scan of the HV frequency, an external self-oscillating circuit connected to the wires measures the variation of the mutual capacitance between two adjacent wires.
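Both tension measurements rely on the resonance of a stretched string. Its fundamental frequency is \(f = \sqrt{T/\mu }/(2L)\), with \(T\) the tension, \(\mu \) the mass per unit length and \(L\) the free length. The sketch below converts a measured resonance frequency into a tension. The \(20\,\upmu \text {m}\) tungsten wire, its 1.9 m free length and the 25 g tension are hypothetical example values. Plating and wire stiffness are ignored.

```python
import math

G = 9.80665  # standard gravity (m/s^2), to express tension in grams-force


def linear_density(diameter_m, density_kg_m3):
    """Mass per unit length (kg/m) of a solid round wire."""
    return density_kg_m3 * math.pi * diameter_m ** 2 / 4.0


def resonance_frequency(tension_n, length_m, mu_kg_m):
    """Fundamental frequency of a stretched string: f = sqrt(T/mu) / (2L)."""
    return math.sqrt(tension_n / mu_kg_m) / (2.0 * length_m)


def tension_from_frequency(freq_hz, length_m, mu_kg_m):
    """Invert f = sqrt(T/mu)/(2L), giving T = mu * (2 L f)^2 in newtons."""
    return mu_kg_m * (2.0 * length_m * freq_hz) ** 2


# Hypothetical example: 20 um tungsten wire (19300 kg/m^3), 1.9 m long, 25 g.
mu = linear_density(20e-6, 19300.0)
f = resonance_frequency(0.025 * G, 1.9, mu)
t_gf = tension_from_frequency(f, 1.9, mu) / G * 1000.0
print(f"resonance: {f:.1f} Hz, reconstructed tension: {t_gf:.2f} g")
```
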

The drift chamber assembly is performed in safe conditions with unstretched wires: the distance between the end-plates is fixed at 1906 mm, 6 mm less than the nominal length (1912 mm) and 2 mm less than the untensioned wire length. The positioning of the wire trays on the drift chamber is done in a well-constrained way using a rocker arm, shown in Fig. 26.

The wire tray is first engaged on the rocker arm by a clip and two precision pins, which fit into two holes in the PCB. The rocker arm is then mounted on a support that leaves it free to rotate, so that it can transfer the wire tray onto the end-plates between two spokes. The final positioning is done by hand with dedicated nippers. The wire PCBs are glued to the PEEK spacers with double-sided tape previously applied to the inner layer. The PEEK spacers keep the layers at the correct distance from each other. Two pressing arches ensure good adhesion of the tape.

Figure 32 shows the drift chamber after \(80\%\) of the layers had been assembled; the inset shows the crossing of the layers in the two stereo views. Figure 33 shows the hyperbolic profile of the drift chamber with all layers mounted.

4.6 Calibration and monitoring

Michel events, collected with dedicated prescaled triggers, provide a natural way to characterise the spectrometer continuously and completely. The Michel positrons at the upper edge of the continuous energy spectrum are used to align the spectrometer, to define the energy scale of the detector and to extract the resolutions of all the positron kinematic variables (energy, time and angles).
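A simplified model shows why the upper edge of the Michel spectrum is useful. At tree level and for unpolarised muons, the spectrum is proportional to \(x^2(3-2x)\), with \(x = E_{\mathrm {e}^{+}}/E_\mathrm {max}\) and \(E_\mathrm {max} \simeq m_\mu /2 \simeq 52.8\,\hbox {MeV}\). It ends abruptly at \(x=1\). After Gaussian smearing, the edge falls to about half of its plateau value at \(E_\mathrm {max}\). The edge position therefore fixes the energy scale, and its steepness the energy resolution. The sketch ignores radiative corrections, muon polarisation and detector acceptance. The 0.4 MeV resolution in the usage lines is a hypothetical value.

```python
import math

M_MU = 105.658        # muon mass in MeV/c^2
E_MAX = M_MU / 2.0    # Michel endpoint, electron mass neglected (~52.83 MeV)


def michel(x):
    """Tree-level Michel spectrum for unpolarised muons, x = E / E_MAX."""
    return x * x * (3.0 - 2.0 * x) if 0.0 <= x <= 1.0 else 0.0


def smeared_michel(e_mev, sigma_mev, n=2000):
    """Michel spectrum at reconstructed energy e_mev after Gaussian smearing."""
    h = E_MAX / n
    total = 0.0
    for i in range(n):
        e_true = (i + 0.5) * h  # midpoint rule over the true energy
        g = math.exp(-0.5 * ((e_mev - e_true) / sigma_mev) ** 2)
        total += michel(e_true / E_MAX) * g
    return total * h / (sigma_mev * math.sqrt(2.0 * math.pi))


for offset in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(f"E_max {offset:+.1f} MeV: {smeared_michel(E_MAX + offset, 0.4):.4f}")
```
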

4.6.1 The Mott monochromatic positron beam

Because the Michel positron spectrum is continuous, calibration with it is difficult and subject to significant systematic errors. A mono-energetic positron source would bring important advantages.

Positrons are an abundant component of the MEG/MEG II beam, eight times more intense than the surface \(\mathrm {\mu ^+}\) component, but they are normally separated and rejected. The muon beam line can be turned into a positron beam line, with the positron momentum tuned very close to the \({\mu ^+ \rightarrow \hbox {e}^+ \gamma }\) signal energy (\(p_{\mathrm {e}^{+}}{\sim } 53\,\text {MeV}/\mathrm{c}\)). The resulting intense, quasi-monochromatic beam (\(\sigma ^\mathrm {beam}_{p_\mathrm {e}^{+}} {\sim } 250\,\text {keV}/\mathrm{c}\), \(I_{\mathrm {e}^{+}} {\sim } 10^{7}\,\hbox {e}^{+}/\hbox {s}\)) can then be Mott-scattered off the light nuclei present in the muon stopping target. This provides a very useful \({\mathrm {e}^{+}}\) line for a full understanding of the spectrometer, from alignment to the resolutions of the positron kinematic variables.

The merits of the method, some of them unique, are the following:

-

Spectrometer absolute energy scale determination.

-

Spectrometer alignment: the alignment is performed iteratively on the residuals between the expected and measured track hits. It is carried out under normal running conditions (i.e. with the magnetic field on), using curved tracks of a single known energy, which simplifies the procedure.

-

Spectrometer checks: the well-known dependence of the Mott-scattered positron momentum on the angular variables \(\phi _{\mathrm {e}^{+}}\) and \(\theta _\mathrm {e}^{+}\) enables a detailed investigation of the spectrometer. Any distortion signals a deviation from the expected detector behaviour.

-

Spectrometer acceptance: the well-known Mott cross section allows a direct measurement of the spectrometer acceptance.

-

Independent check of the muon polarisation: comparing the Michel and Mott \(\theta _\mathrm {e}^{+}\) distributions, after accounting for the \(\theta \) dependence of the Mott cross section, provides a cross-check of the muon polarisation at the Mott positron energy.

-

Positron momentum and angular resolutions: these are extracted from double-turn track events. Each double-turn track is split into two independent tracks, both are propagated to the target, and the difference in the relevant observable (\(p_{\mathrm {e}^{+}}\), \(\phi _{\mathrm {e}^{+}}\) or \(\theta _\mathrm {e}^{+}\)) is computed.
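The kinematic and cross-section dependences behind the spectrometer checks and the acceptance measurement can be sketched as follows. The sketch assumes elastic scattering of an ultra-relativistic positron off a point-like, spinless \(^{12}\)C nucleus. The beam is taken along the \(z\) axis, so that the scattering angle coincides with \(\theta _{\mathrm {e}^{+}}\). The nuclear form factor, scattering off hydrogen and multiple scattering are neglected, and the cross section is given only up to a constant factor.

```python
import math

M_C12 = 11174.9  # approximate mass of a bare 12C nucleus, MeV/c^2


def scattered_momentum(p_mev, theta_rad, m_target=M_C12):
    """Momentum of an ultra-relativistic positron after elastic scattering
    by angle theta off a nucleus of mass m_target (recoil included)."""
    return p_mev / (1.0 + (p_mev / m_target) * (1.0 - math.cos(theta_rad)))


def mott_relative(p_mev, theta_rad, m_target=M_C12):
    """Mott dsigma/dOmega in arbitrary units: point-like spinless nucleus,
    beta ~ 1, including the recoil factor p'/p."""
    s2 = math.sin(theta_rad / 2.0) ** 2
    rutherford = 1.0 / (p_mev ** 2 * s2 ** 2)
    mott_factor = 1.0 - s2  # = cos^2(theta/2) for beta = 1
    recoil = scattered_momentum(p_mev, theta_rad, m_target) / p_mev
    return rutherford * mott_factor * recoil


p_beam = 53.0  # MeV/c, close to the signal momentum quoted in the text
ref = mott_relative(p_beam, math.radians(90.0))
for deg in (30, 60, 90, 120):
    th = math.radians(deg)
    print(deg, round(scattered_momentum(p_beam, th), 3),
          round(mott_relative(p_beam, th) / ref, 3))
```

In this simplified model, nuclear recoil lowers the momentum by about 250 keV/c at \(90^{\circ }\). A reconstructed momentum that does not follow this smooth angular dependence points to a detector or alignment problem.
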

Finally, the high Mott positron rate allows a fast calibration. The method also requires neither a dedicated target (the Mott target is the MEG II muon stopping target) nor additional beam infrastructure.

The potential of this method was demonstrated in dedicated beam tests performed in 2012 at the \(\pi \)E5 beam line (the MEG II beam line) with the MEG spectrometer. Figure 34 shows good agreement between the Mott \({\mathrm {e}^{+}}\) line (black points) and the Monte Carlo (MC) simulation prediction (red dashed area). The data are fitted with a double Gaussian function, one component describing the core of the distribution and the other the low-energy tail. With the beam momentum slits virtually “fully closed”, the line is centred at \(\hat{E}_{\mathrm {e}^{+}}= {(51.840\pm 0.003)}\,{\hbox {MeV}}\) with a core width \(\sigma _{E_{\mathrm {e}^{+}}}^{\mathrm {core}} = (412\pm 10)\,\text {keV}\).

Table 3 shows that the spectrometer alignment can be performed with consistent results. A set of Mott data taken in 2013 is reconstructed with the Michel-based alignment and with the Mott-based alignment, and both the mean energy and the width are compared. The two results are in good agreement. Because the two methods are affected by different systematic errors, comparing them allows such errors to be identified.
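The iterative residual-based alignment can be illustrated with a deliberately simplified toy. It uses straight tracks instead of helices in the magnetic field, and a single transverse offset per detector layer. Each iteration refits the tracks with the current corrections and adds the mean residual of each layer to its offset estimate. Keeping two reference layers fixed is an assumption of this sketch: track residuals alone cannot determine a global shift or tilt. All numbers are hypothetical.

```python
def fit_line(z, y):
    """Least-squares straight line y = a + b * z; returns (a, b)."""
    n = len(z)
    zm = sum(z) / n
    ym = sum(y) / n
    szz = sum((zi - zm) ** 2 for zi in z)
    szy = sum((zi - zm) * (yi - ym) for zi, yi in zip(z, y))
    b = szy / szz
    return ym - b * zm, b


def align(z, tracks, n_iter=60, fixed=(0, -1)):
    """Iteratively estimate per-layer offsets from track-hit residuals.

    Layers listed in `fixed` are reference layers kept at zero offset, which
    removes the global shift/tilt that residuals cannot constrain.
    """
    k = len(z)
    est = [0.0] * k
    fixed_idx = {i % k for i in fixed}
    for _ in range(n_iter):
        mean_res = [0.0] * k
        for hits in tracks:
            corrected = [h - e for h, e in zip(hits, est)]
            a, b = fit_line(z, corrected)
            for i in range(k):
                mean_res[i] += (corrected[i] - (a + b * z[i])) / len(tracks)
        for i in range(k):
            if i not in fixed_idx:
                est[i] += mean_res[i]
    return est


# Toy data: six layers, hypothetical offsets (mm), noise-free tracks.
z = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
true_offsets = [0.0, 0.05, -0.03, 0.02, 0.04, 0.0]
tracks = [[a + b * zi + d for zi, d in zip(z, true_offsets)]
          for a, b in [(1.0, 0.1), (-2.0, 0.3), (0.5, -0.2)]]
print([round(x, 4) for x in align(z, tracks)])
```
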

Similarly, the resolutions on \(p_{\mathrm {e}^{+}}\) and on the angular variables extracted with the double-turn track method were compared between the Mott and the Michel samples. An example of the \(\theta _\mathrm {e}^{+}\) distribution obtained from the Mott sample with the double-turn method is shown in Fig. 35. The double-turn resolutions on all positron variables measured with the Mott sample were found to be similar to, or even better (by up to \(20\%\)) than, those measured with the Michel data. The difference is understood in terms of the different pile-up conditions under which the spectrometer operates in the two cases. This is another example of independent methods complementing each other for a better understanding of the detector. Figure 36 shows that the method is very sensitive to misalignment. The red points show the expected dependence of the reconstructed \(E_{\mathrm {e}^{+}}\) on the reconstructed \(\phi _{\mathrm {e}^{+}}\). The green points show the same measurement when an erroneous set of survey data is used as input to the alignment procedure, and the plot clearly reveals the problem. The plot can also be reproduced in the simulation when inconsistent alignment data are used (yellow points). These results validate the method as a standard calibration tool for MEG II.
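The double-turn method relies on a simple relation. If the two halves of a double-turn track are independent measurements with the same Gaussian resolution \(\sigma \), their difference has width \(\sqrt{2}\,\sigma \). The sketch below estimates \(\sigma \) from the RMS of the differences, on toy data with a hypothetical 8 mrad resolution. The analysis described here instead fits a double Gaussian function, which also captures non-Gaussian tails.

```python
import math
import random


def single_track_resolution(first, second):
    """Estimate the single-track resolution from double-turn pairs.

    If both halves are independent with equal Gaussian resolution sigma,
    their difference has width sqrt(2) * sigma.
    """
    diffs = [a - b for a, b in zip(first, second)]
    mean = sum(diffs) / len(diffs)
    rms = math.sqrt(sum((d - mean) ** 2 for d in diffs) / (len(diffs) - 1))
    return rms / math.sqrt(2.0)


# Toy check with a hypothetical 8 mrad resolution on theta.
rng = random.Random(1)
sigma_true = 0.008
true_theta = [rng.uniform(1.2, 1.9) for _ in range(20000)]
theta1 = [t + rng.gauss(0.0, sigma_true) for t in true_theta]
theta2 = [t + rng.gauss(0.0, sigma_true) for t in true_theta]
print(single_track_resolution(theta1, theta2))
```
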

The distribution of \(\mathrm {\varDelta } \theta = \theta _1 - \theta _2\) obtained from the Mott data sample with the double-turn method, where \(\theta _1\) and \(\theta _2\) are the reconstructed \(\theta \)-angles associated with the first and second part of a double-turn track, respectively. The distribution is fitted with a double Gaussian function.

Reconstructed Mott positron energy versus reconstructed \(\phi \)-angle. Under normal functioning conditions the trend of energy versus the \(\phi \)-angle is flat (red points). If some distortions are present, deviations are observed, as shown in the case of green and yellow points. See the text for more details

4.7 Expected performances

As preliminary tests, the spatial resolution and the ageing properties of the chamber have been measured on prototypes. For a precise measurement of the single-hit resolution, several drift chamber prototypes were tested in a cosmic ray facility [97, 110]; an example result is shown in Fig. 37. In these tests, the waveform digitiser limited the total bandwidth to 700 MHz. The expected biases and resolution tails are observed, due to the poor ionisation statistics in the very light helium-based gas mixture. Despite these tails, the bulk of the resolution function is Gaussian, with a width of \(\sigma _r\simeq 110\,\upmu \text {m}\) averaged over a large range of angles and impact parameters. Since the longitudinal coordinate of the hits is determined by exploiting the stereo angle, the corresponding resolution is expected to be \(\sigma _z=\sigma _r/\sin \theta _\mathrm {s}\simeq 1\,\mathrm{mm}\). Further improvements are expected in the final chamber thanks to the new front-end electronics, whose 1 GHz bandwidth allows the cluster timing technique to be exploited.
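Inverting the relation between the transverse and longitudinal resolutions gives a quick consistency check on the stereo angle implied by the quoted (rounded) numbers:

\[ \sin \theta _\mathrm {s} = \frac{\sigma _r}{\sigma _z} \simeq \frac{110\,\upmu \text {m}}{1\,\text {mm}} = 0.11 \quad \Rightarrow \quad \theta _\mathrm {s} \simeq 6.3^{\circ }. \]

At fixed \(\sigma _r\), a larger stereo angle would therefore give a proportionally better \(\sigma _z\).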

CDCH single hit resolution function, measured on a prototype in a cosmic ray facility, as the difference between the measured drift distance x and the particle’s impact parameter b. A fit is performed with a Gaussian core function of mean \(\mu \) and width \(\sigma \), analytically matched with an exponential tail starting at \(\mu + \delta \) (see [97] for more details)
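A resolution function of this kind can be written down explicitly. The sketch assumes that the exponential tail matches the Gaussian in both value and first derivative at \(x = \mu + \delta \), which gives a tail slope of \(\delta /\sigma ^2\). The exact parametrisation used in [97] may differ. The \(110\,\upmu \text {m}\) width comes from the text, while \(\delta = 150\,\upmu \text {m}\) is a hypothetical value.

```python
import math


def resolution_shape(x, mu, sigma, delta):
    """Unnormalised Gaussian core with an exponential tail for x > mu + delta.

    The tail exp(delta^2/(2 sigma^2) - delta (x - mu)/sigma^2) matches the
    Gaussian in value and first derivative at x = mu + delta (delta > 0).
    """
    t = x - mu
    if t <= delta:
        return math.exp(-0.5 * (t / sigma) ** 2)
    return math.exp(0.5 * (delta / sigma) ** 2 - delta * t / sigma ** 2)


# Width from the text (um); delta is a hypothetical value.
mu, sigma, delta = 0.0, 110.0, 150.0
for x in (0.0, 150.0, 300.0, 450.0):
    gauss_only = math.exp(-0.5 * (x / sigma) ** 2)
    print(x, round(resolution_shape(x, mu, sigma, delta), 5), round(gauss_only, 5))
```

Beyond \(\mu + \delta \), the exponential tail falls off more slowly than the Gaussian. This is how it describes the excess of large residuals caused by the poor ionisation statistics.
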