Abstract

New agricultural systems are required to satisfy societal expectations such as higher quantity and quality of agricultural products, reduced environmental impacts, and more jobs. However, identifying and implementing more suitable agricultural systems is difficult due to conflicting objectives and to the wide diversity of scientific disciplines required to solve agricultural issues. Therefore, designing models to assess the sustainability of agricultural systems requires multi-criteria decision aid methods. The French agronomist community has recently developed 11 hierarchical and qualitative models to assess sustainability using the DEXi decision aid software. Here, we give guidelines to help designers build their own specific models. First, we present the principles and applications of the DEXi software. Then, we provide guidance on the following steps of model design: (1) initial analysis and planning of the design process, (2) selection and hierarchy of sustainability criteria, (3) indicator selection and building, (4) parameterization, (5) evaluation, and (6) model dissemination and uses. We then discuss advantages and drawbacks of this kind of modeling formalism, the role of a participatory approach, and the main properties to consider during the design process.

1 Introduction

Farmers, extension agents, and agricultural researchers encounter a growing number of challenges, such as coping with increasing market volatility, producing raw materials to feed the Earth’s projected nine billion inhabitants by 2050, and reducing the environmental impacts of agricultural practices (Tilman et al. 2002). To address these challenges, stakeholders from the agricultural sector have worked actively to implement more efficient production systems at multiple levels. A variety of agricultural systems resulted from this effort, including popular systems such as organic farming, conservation farming, integrated farming, and precision farming. These systems have their own internal consistency and include a wide diversity of technical orientations that seek the best local trade-off between the diverse performances desired. This increasing number of agricultural paradigms led agricultural sector stakeholders and society to question the effectiveness of these systems. What are the most interesting combinations of practices that should be encouraged? What are their respective strengths and weaknesses? In what context are they the most appropriate? Providing answers to such crucial questions is difficult due to the diversity of technical options, challenges, and contexts. To address these difficulties, integrative assessment of the potential sustainability of agricultural systems is increasingly viewed as a key approach to help shift towards more suitable systems.

Different definitions of sustainability have been provided for the agricultural sector (e.g., Hansen 1996; Rigby and Caceres 2001; Smith and McDonald 1998). For instance, according to Ikerd (1993), sustainable agriculture should be able to maintain productivity and usefulness to society in the long term. This implies that it must be environmentally sound, resource-conserving, economically viable, and socially acceptable. Because these definitions in their very nature remain conceptual and therefore impossible to apply per se, researchers and extension agents have worked together to translate them into a more operational framework, such as indicators or assessment methods based on indicators (Alkan Olsson et al. 2009; Bockstaller et al. 2009; Rigby and Caceres 2001). Given the importance and difficulty of this task, designing models to assess sustainability is increasingly regarded as a typical and local decision-making problem that requires multi-criteria decision aid (MCDA) methods (Mendoza and Prabhu 2003). MCDAs are generally used to assess decision options (or alternatives), such as agricultural systems, and include multiple and possibly conflicting criteria (Bouyssou et al. 2006; Zopounidis and Pardalos 2010). In a comparative review of the main types of MCDA that focused on their relevance for sustainability assessment, Sadok et al. (2008) suggested that decision rule-based methods that manage qualitative input information are particularly relevant for handling the multi-dimensional constraints of sustainability assessment (i.e., incomparability, non-compensation, and incommensurability of the economic, social, and environmental dimensions).

Software using these methods, such as DEXi (Bohanec et al. 2013; Bohanec 2013; Žnidaršič et al. 2006), has proved suitable for creating such models. Over the past few years, the agronomic research community has developed several different DEXi-based models to assess sustainability of agricultural systems, such as for genetically modified crop systems (Bohanec et al. 2008), innovative arable cropping systems (Bohanec et al. 2007; Craheix et al. 2012; Pelzer et al. 2012; Sadok et al. 2009; Vasileiadis et al. 2013), and apple orchards (Mouron et al. 2012). There is an increasing need to design assessment models for the agricultural sector, yet no methodological guidelines have been written for using qualitative MCDA software such as DEXi in this design phase. This lack was highlighted by Cerf et al. (2012), who show that an increasing number of decision-making models are developed by the agronomic research community, but few studies explain the design of these models.

This article aims to offer guidelines to help future designers build their own models according to their specific needs. They are based on comparative analysis of ten models developed by several groups of researchers over the past 8 years as well as information from scientific literature available on similar modeling issues. In the first section, we describe the DEXi software as a typical example of decision support system software that enables the design of hierarchical and qualitative multi-criteria models (Fig. 1). We also provide a short illustration of a hierarchical tree designed with DEXi (the MASC model; Craheix et al. 2012; Sadok et al. 2009) and introduce our general approach to building new qualitative and hierarchical assessment models. In the second section, we describe the design steps and focus on the main aspects considered. In the final section, we discuss the pros and cons of such qualitative models, the role and place of participatory approaches during the design process (Fig. 2), and the main features expected from these kinds of models to implement them in practice.

2 Material and methods

2.1 The DEXi decision support system software

DEXi (http://www-ai.ijs.si/MarkoBohanec/dexi.html) is a decision support system software based on DEX methodology, whose original feature is that it deals with qualitative multi-criteria models (Bohanec 2013; Bohanec et al. 2013). It combines a hierarchical decision model with an expert system approach based on qualitative criteria whose values are discrete and usually take the form of words rather than numbers (e.g., “Low”, “Medium”, “High”). DEX methodology makes it possible to break down a decisional problem (the most aggregated criterion, Y in Fig. 3) into smaller, less complex sub-problems represented by criteria (Xi in Fig. 3). These criteria are organized hierarchically so that those at higher levels depend on those at lower levels. Each decision alternative is represented by a set of criteria that are first evaluated individually and then aggregated by the model up to the most aggregated criterion. One can thus distinguish the basic criteria, the aggregate criteria, and the most aggregated criterion of the hierarchy. Figure 3 shows a simple model that consists of five basic criteria X1 to X5, an aggregate criterion X6, and the most aggregated criterion Y. Decision alternatives are represented by a vector of values (αi). Criteria are aggregated by a utility function (F). This function takes the form of a lookup table using “IF-THEN” decision rules such as IF {X6 is “high”} AND IF {X4 is “high”} AND IF {X5 is “medium”} THEN {Y is “high”} (Fig. 3). Each utility function can be filled in manually or by using a semi-automatic approach that directly assigns a weight (expressed in %) to each criterion. Both ways enable partial transformation between criteria weights and decision rules (Bohanec 2013; Bohanec et al. 2013): (1) weights are estimated from decision rules defined by linear approximation and (2) values of undefined decision rules are based on predefined rules and user-specified weights.

Typical structure and functioning of a decision rule-based, qualitative hierarchical multi-attribute model (adapted from Bohanec et al. 2000). The inset in the top right corner shows an example of how a utility function is developed in the decision support system DEXi
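The aggregation mechanism described above can be illustrated with a minimal Python sketch. It is not DEXi code and does not reproduce any published model: the three-class scale, the grouping of X1 to X3 under X6, and the two aggregation rules (`cautious_rule`, `majority_rule`) are assumptions chosen only to show how lookup tables of IF-THEN rules propagate qualitative values from basic criteria up to Y.

```python
from itertools import product

# Ordered qualitative scale shared by all criteria in this toy model (assumption).
SCALE = ("low", "medium", "high")


def build_rule_table(n_inputs, rule):
    """Enumerate every combination of input classes and store the output class."""
    return {combo: rule(combo) for combo in product(SCALE, repeat=n_inputs)}


def cautious_rule(combo):
    """Hypothetical rule: the output equals the worst input (no compensation)."""
    return min(combo, key=SCALE.index)


def majority_rule(combo):
    """Hypothetical rule: the output is the median class of the inputs."""
    ranked = sorted(combo, key=SCALE.index)
    return ranked[len(ranked) // 2]


# Assumed tree shape: X6 aggregates X1..X3; Y aggregates X6, X4, and X5.
F_X6 = build_rule_table(3, cautious_rule)
F_Y = build_rule_table(3, majority_rule)


def evaluate(alternative):
    """Aggregate basic criteria X1..X5 bottom-up to the root criterion Y."""
    x6 = F_X6[(alternative["X1"], alternative["X2"], alternative["X3"])]
    y = F_Y[(x6, alternative["X4"], alternative["X5"])]
    return {"X6": x6, "Y": y}


# One decision alternative, described by its vector of basic-criterion values.
alpha = {"X1": "high", "X2": "high", "X3": "high", "X4": "high", "X5": "medium"}
print(evaluate(alpha))
```

In this sketch, each table entry plays the role of one decision rule; for example, the entry for (“high”, “high”, “medium”) in `F_Y` corresponds to the rule quoted in the text. In practice, designers fill in such tables by hand or from weights rather than from a single formula.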

The DEXi software (Bohanec 2013), which embeds the DEX methodology, offers a user-friendly interface that supports five basic tasks (Fig. 4): (1) development of qualitative multi-criteria models, (2) application of models to evaluate and analyze decision alternatives, (3) what-if analysis to study how model output varies with changes in input, and (4) graphical and (5) textual presentation of the model and its simulations. DEXi can display comparisons of decision alternatives either graphically (e.g., radar plots) or as color-coded tables.

2.2 Example of the MASC model

MASC (“Multi-attribute Assessment of the Sustainability of Cropping systems”, Craheix et al. 2012; Sadok et al. 2009) is a model developed by researchers to assess sustainability of agricultural systems. Developed with the DEXi software, it can be used for both ex ante assessment (i.e., on newly designed or non-existent systems) and ex post assessment (i.e., on existing systems). Targeted end users are agricultural advisors working alone or with researchers. It operates at the cropping system level, defined as “a set of management procedures applied to a given, uniformly treated area, which may be a field or a group of fields” (Sebillotte 1990), and includes the crop sequence (rotation) and the management practices for each crop (e.g., tillage, sowing, fertilization, and protection).

MASC 2.0 (http://wiki.inra.fr/wiki/deximasc/Main/WebHome) is based on a hierarchical decision model with 39 basic criteria and 26 aggregated criteria. The most aggregated criterion (“Contribution to sustainable development”) is divided into three less aggregated criteria: “Economic Sustainability,” “Social Sustainability” and “Environmental Sustainability” (Fig. 5). Each criterion is qualified by three to seven classes that typically take the form of a “low→medium→high” progression with the addition of “very low” and “very high” in some cases. The basic criteria refer to elementary concerns of sustainable development (e.g., “profitability”) whose values are given by indicators proposed by the model designers (e.g., “economic gross margin” as a proxy of “profitability”). These indicators provide either qualitative or quantitative values and are based on operational models (e.g., INDIGO®; Bockstaller et al. 1997, 2009) or simple numerical algorithms (e.g., semi-net margin to fill the criterion “profitability”). Due to its target, indicators in MASC require detailed description of agricultural practices (new or existing) and technical and economic reference values. The indicators proposed in MASC are not mandatory.

The MASC 2.0 decision tree aims to assess overall sustainability of cropping systems. This complex decision problem is divided into economic (yellow), social (blue), and environmental (green) dimensions. Values in red boxes represent default aggregation weights (expressed in %) (from Craheix et al. 2012)

2.3 Review of DEXi-based assessment models

After an initial successful experience assessing ecological and economic impacts of genetically modified corn (the Grignon model; Bohanec and Zupan 2004; Bohanec et al. 2008), several models have been developed using the DEXi software (Table 1). They were developed to perform either ex post or ex ante assessments and address different agricultural systems (e.g., apple orchard, vineyards, rice, arable crops) at different locations (e.g., specific regions of France, French West Indies, Europe, Madagascar). The targeted end users were researchers, extension workers (from technical institutions, the agri-food chain, or resource managers), or farmers.

The models were designed by groups of varied composition that involved researchers and extension workers. Three methods were used to extend the models’ targets: (1) conducting expectation surveys to individually collect specific information from users, (2) obtaining users’ expectations through one or more consultation meetings, and (3) directly involving users during meetings to design the model.

Among these models, the number of basic criteria ranges from 20 to 61. Overall assessment is usually divided into three dimensions: economic, environmental, and social. Sometimes, the social dimension is removed, while in other models, a fourth dimension is added to represent soil fertility control. Basic criteria are given values via calculation, direct and qualitative expertise, or formal expertise based on a detailed disaggregation of basic criteria under the DEXi software.

Users have varying degrees of freedom to modify and calibrate the models: (1) modifying weights in the decision tree, (2) replacing proposed indicators to provide values for basic criteria, or (3) adapting threshold values to modify class settings.

This article uses these modeling experiences and other scientific literature available on sustainability assessment to offer guidelines that avoid being too normative, since design depends on local issues as well as research and stakeholder groups.

3 Methodological guidelines

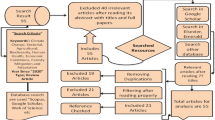

From our transversal analysis of these modeling experiences, we propose a six-step approach (Fig. 6). This step-by-step approach is largely iterative and involves trial and error that sometimes loops through certain steps many times (Jakeman et al. 2006; Prost et al. 2012).

3.1 Initial analysis and planning of the design process

The initial structure and set of problems addressed by the model are generally identified by an initial working group interested in improving a given type of production system (Colomb and Bergez 2013). Before starting to build the model, it is recommended to clarify issues that could strongly and permanently impact model design and its subsequent use.

3.1.1 Defining the different communities to involve

Insight from the literature, coupled with our experience using DEXi, confirms the value of establishing a transdisciplinary working arena that brings together diverse representatives of four main communities. We first distinguish designers and users of the model: in this article, designers are those who make modeling choices, while users deal with model application and results. We then distinguish experts and stakeholders: experts can provide reliable sources of scientific and/or technical knowledge, whereas stakeholders are those who can affect or are affected by implementation of the agricultural systems. It is necessary to identify the role of each person involved to clarify his or her membership in one or more of these communities. In our experience, this helps to identify which members of the working group should be involved at each stage of the design process according to the nature of the information used.

3.1.2 Determining interaction procedures between these communities

Various forms of participatory procedures should be considered, from top-down to bottom-up approaches. To this end, it is common to distinguish “contractual,” “consultative,” “collaborative,” and “collegiate” approaches (Biggs 1989). In the top-down approach, choices are made by a small group of designers composed of experts, while in the bottom-up approach, choices are made mainly by a larger group of potential users, including stakeholders (Pope et al. 2004; Singh et al. 2012). In the case studies reviewed (Table 1), users’ expectations were almost always considered, but the form and the degree of their involvement varied according to the type of users targeted, the sensitivity of designers to participatory approaches, and the closeness of the relationship between the working group and local stakeholders when the model was designed for a small agricultural region. According to several authors, it is important to involve potential users of an assessment model as much as possible in the working group. This allows consideration of local issues, which broadens the range of performances assessed, increases confidence in the results produced, and facilitates future acceptance of the model (Burford et al. 2013; Colomb et al. 2013; Goma et al. 2001). Although collective construction has much promise, engaging many people makes organizing regular design workshops difficult due to temporal and geographical constraints. This observation demonstrates the importance of avoiding building a group that is too large and too dispersed. According to our experience, a core design group of fewer than ten people is sufficient to make the main modeling decisions. A larger group of stakeholders, experts, and users can then be formed and occasionally consulted about specific modeling aspects. This kind of organization combines synchronous meetings (face-to-face design or consultative meetings) and asynchronous exchanges (expectation surveys, correspondence). Synchronous meetings are necessary for decisions that require debate and interpersonal contact, while asynchronous exchanges serve to reach absent members or to conduct bilateral exchanges intended to extract specific information.

3.1.3 Sharing the concept

At this early step, assessing sustainability is not always an explicit goal. The initial advice is to further ensure that the sustainability concept is sufficiently understood and accepted by all participants involved in the design process (Barcellini et al. 2015). In our experience, this conceptual framework is often well accepted for its integrative nature, which allows users to organize and address a wide variety of concerns.

3.1.4 Targeting the model: scope and use case situations

Determining future use situations enables designers to determine early the nature and boundaries of the systems to be evaluated and the domain of use of the model. Systems under evaluation should be defined according to the type of production (e.g., arable crops, apple orchards, vineyards) and the most appropriate spatial scale (e.g., plot, cluster of homogeneous plots, farm, territory) and temporal scale (e.g., annual, multi-annual). This has large consequences for the choice of criteria and indicators. At this stage, the designer group can either explicitly target particular production strategies to better represent their unique characteristics in the assessment (e.g., organic farming for the MASC-OF model; Colomb et al. 2013) or leave production strategies open for the user, to allow comparisons between different ones (e.g., MASC). The model should also be adapted to the geographical context in which and for which it will be designed (e.g., Northern Europe for MASC). Designers should also decide whether the model will be used for ex ante and/or ex post assessment. Some groups consider that the real interest lies in the process of model design rather than in its use and reuse to assess agricultural systems. In these “constructivist approaches,” designers prefer to take advantage of the design stage to facilitate group learning through participatory approaches involving local stakeholders as much as possible (Barcellini et al. 2015). For example, stakeholders were largely involved in the design of the MASC-Madagascar model, which was clearly designed for advisors and at a small geographical scale focused on Lake Alaotra.

3.2 Selection and hierarchy of sustainability criteria

As emphasized by several authors (Burford et al. 2013; Moldan et al. 2012; Singh et al. 2012), the choice of evaluation criteria and how to structure them into a hierarchical tree gradually led designers to define their own visions of sustainable development and its contextualization. Therefore, results cannot be normalized, which implies representing both stakeholder preferences and the diversity of concerns associated with the production system targeted.

3.2.1 Considering complementary approaches

As proposed by Bohanec (2013), criteria can be chosen and organized in a hierarchy by following two complementary approaches in which certain criteria are progressively grouped together or further disaggregated into more basic concerns. To this end, we distinguish:

1. The bottom-up approach, which gradually consolidates evaluation criteria into a single evaluation criterion, namely the sustainability of the production system.

2. The top-down approach, which gradually disaggregates sustainability into several sub-criteria that are easier to quantify or qualify.

In an initial step that can be associated with the bottom-up approach, the designer group can list all basic evaluation criteria, without restriction, to minimize the risk of neglecting the sometimes unspoken expectations of experts and/or stakeholders. This thorough inventory can be supplemented by state-of-the-art criteria chosen in similar assessment models and literature reviews.

With the top-down approach, sustainable development is generally broken down into subcomponents based either on properties associated with the systems evaluated (e.g., resiliency, adaptability, and reliability; Hansen 1996; López-Ridaura et al. 2005; Valentin and Spangenberg 2000) or on the three pillars of sustainable development: economic, social, and environmental (Ikerd 1993; United Nations 1996). Models presented in this study are based on the latter approach for its simplicity. Sometimes, one of the three dimensions is removed (e.g., the social dimension in “the Grignon model”; Bohanec et al. 2008) or another dimension is added (e.g., the agronomic dimension in MASC-OF (Colomb et al. 2013) or MASC-Mada (Sester et al. 2014)), depending on the emphasis on particular aspects of sustainability. Each main branch in the first level of disaggregation of the tree is then further divided into sub-branches that reflect stakeholder expectations and the impacts expected at various temporal scales, ranging from short term (e.g., nitrate losses) to long term (e.g., climate change).

3.2.2 Selecting the appropriate number of criteria

The number of basic criteria should be large enough to adequately represent the diversity of objectives of the systems evaluated. However, it should not be so large that it unnecessarily complicates use of the model and potentially reduces its ability to distinguish differences between systems (see section 3.5). To identify an appropriate number of criteria, it is recommended not to constrain participant creativity in the initial stage. One can develop different trees that represent the diversity of viewpoints or a tree with many branches (i.e., a heuristic model). In the second stage, it is advisable to focus on the main concerns to obtain a more functional model (see section 3.4).

3.2.3 Avoiding confusion between “criterion” and “indicator”

Difficulties may arise during group exchanges when criteria are not well formulated. Based on our experience, the most common source of misunderstanding between designers arises from confusion between “criterion,” which is a concern for sustainability (e.g., profitability), and “indicator,” which describes how to represent a basic criterion (e.g., the criterion “profitability” can be quantified by indicators of gross margin, semi-net margin, or wages). A particular concern reaches the status of a basic criterion when it cannot be further disaggregated into sub-concerns (e.g., profitability, nitrate losses, contribution to employment). López-Ridaura et al. (2005) indicate that it is important to first focus on the elementary concerns to stabilize the list of criteria. It is only during a second step that the designers search for suitable indicators to represent the basic criteria (see section 3.3). The evaluation criteria should be explained in the clearest way possible for future users who did not participate in the design group so that they can understand why they were chosen (Valentin and Spangenberg 2000).

3.2.4 Avoiding combinatory explosion

In such qualitative models, basic criteria are progressively aggregated in the tree using contingency tables, in which all combinations of values are analyzed to quantify or qualify the aggregated criteria. The number of decision rules that the user must define is the product (P) of the number of classes (n_s) of each criterion (s ∈ S) aggregated in a node:

$$P = \prod_{s \in S} n_s$$

To avoid “combinatory explosion,” it is important not to associate too many criteria with too many qualitative classes (Bohanec et al. 2008). For example, a contingency table for a criterion aggregating four sub-criteria, each with five classes, has 625 decision rules. In the models presented in this article, criteria were organized to ensure that contingency tables rarely exceed 64 decision rules (3 criteria with 4 classes each). However, the most aggregated criterion (i.e., overall sustainability) might have more classes to better differentiate results of the systems evaluated. In our DEXi model examples, overall sustainability is described by five to seven qualitative classes to increase the ability to distinguish systems. The structure of the decision tree thus results from a trade-off between model simplicity (e.g., the number of rules) and its ability to distinguish between similar systems.
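The rule counts quoted above can be checked with a short Python sketch. The function name `rule_count` and the two-level restructuring are illustrative assumptions, not part of DEXi or of the models reviewed; the restructuring only suggests why grouping criteria into intermediate nodes keeps contingency tables small.

```python
from math import prod


def rule_count(classes_per_criterion):
    """Number of decision rules in one node: product of input class counts."""
    return prod(classes_per_criterion)


# The two cases quoted in the text.
flat_node = rule_count([5, 5, 5, 5])   # four sub-criteria, five classes each
typical_node = rule_count([4, 4, 4])   # three sub-criteria, four classes each

# Hypothetical restructuring: group the four sub-criteria into two
# intermediate criteria (five classes each), then aggregate those two.
two_level_tree = (
    rule_count([5, 5])    # first intermediate node
    + rule_count([5, 5])  # second intermediate node
    + rule_count([5, 5])  # parent node combining the two intermediates
)

print(flat_node, typical_node, two_level_tree)
```

Under these assumptions, the flat node requires 625 rules, whereas the two-level arrangement requires 75 rules in total, at the cost of introducing two intermediate criteria that must themselves be meaningful to the design group.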

3.3 Selecting and building the indicators

According to several authors, an indicator is a piece of information that quantifies the degree to which a goal is achieved (Van der Werf and Petit 2002). It is also a variable that provides information about another variable that is more difficult to access and is used as a reference for decision-making (Gras et al. 1989). In the models considered in this article, indicators provide information about the basic criteria of the decision tree.

3.3.1 Identifying the most suitable formalism

Qualitative modeling makes it possible to convert quantitative data into qualitative data via threshold values. This flexibility takes advantage of several types of information, such as field measurements, predictions of simple or complex models, or empirical knowledge formulated directly into qualitative and linguistic values (Sadok et al. 2009). Consequently, designers are able to use various types of indicators and must pay attention when selecting or developing those most appropriate for their own evaluation. Clarification and recommendations are necessary for the three types of indicators in this modeling approach:

- Quantitative indicators are based on measurements or data obtained from technical and economic references (e.g., phosphorus balance, semi-net margin) or variables from other quantitative models (e.g., indicators from INDIGO®; Bockstaller et al. 1997, 2009). For this type of indicator, threshold values are needed to translate quantitative variables into qualitative variables. For example, the semi-net margin can be translated into a qualitative variable using threshold values of 200 €/ha and 600 €/ha according to IF-THEN-ELSE rules: IF semi-net margin ≤200 €/ha, THEN “profitability” is “low”; ELSE IF semi-net margin ≥600 €/ha, THEN “profitability” is “high”; ELSE “profitability” is “medium.” Quantitative indicators are often considered more objective and thus more accurate than qualitative indicators; however, they require collecting more detailed information about the system and its context (Floyd 1999). Quantitative indicators are better suited to ex post than to ex ante evaluations because more of the data required by the model are available for the former.

- Qualitative indicators are developed from information gathered by experts or a qualitative description of the agricultural system and its environment. Designers often indicate the main elements to consider to help users assign qualitative classes during evaluations (e.g., to qualify the basic criterion “work difficulty,” designers of MASC listed agricultural practices known to improve difficult working conditions). This type of indicator is particularly useful for socioeconomic concerns when no mathematical calculation is available or when more subjective information is required (e.g., criteria such as “contribution to emergence of new industries” or “complexity of implementation”).

- Mixed indicators are developed in hierarchical and qualitative decision support systems, such as DEXi, by disaggregating a criterion into easier-to-solve sub-criteria. This type of indicator creates a new independent tree (a “satellite tree”) that is used to fill in basic criteria (Fig. 5). As before, inputs of satellite trees are filled in with qualitative and/or quantitative variables. These indicators are used for ex post evaluations to represent complex issues for which no readily available indicators exist. They are also used in ex ante evaluations when innovative systems are tested and no quantitative data are available. In this case, the satellite trees developed are entirely filled in by qualitative variables. Although DEXi is technically able to directly link these indicators to the basic criteria by extending the branches, it is advisable to separate them from the main tree in the software. This separation helps users participate in model parameterization (see section 3.4) by preserving the homogeneity and consistency of each distinct entity (i.e., sustainability criteria vs. indicators).
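The threshold-based translation described for quantitative indicators can be sketched in Python as follows. The function name `classify` and its parameter names are hypothetical; the 200 €/ha and 600 €/ha thresholds are those of the semi-net margin example in the text, and the class labels follow its “low”/“medium”/“high” scale.

```python
def classify(value, low_max, high_min):
    """Translate a quantitative indicator value into a qualitative class.

    Values at or below low_max are "low", values at or above high_min are
    "high", and everything in between is "medium".
    """
    if low_max >= high_min:
        raise ValueError("low_max must be smaller than high_min")
    if value <= low_max:
        return "low"
    if value >= high_min:
        return "high"
    return "medium"


# Semi-net margins in euros per hectare (illustrative values, not real data).
for margin in (150, 200, 400, 600, 850):
    print(margin, classify(margin, low_max=200, high_min=600))
```

Keeping the thresholds as parameters rather than constants reflects the parameterization freedom discussed later: the same rule can be reused with locally adapted values.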

3.3.2 Seeking the best trade-off between accuracy and ease of use

One of the major challenges in developing an evaluation method is identifying the proper trade-off between the conflicting demands for simple analysis and for accurate results. To illustrate this trade-off, Van der Werf and Petit (2002) distinguished “means-based indicators” from “effect-based indicators.” Means-based indicators describe agricultural practices (e.g., the amount of nitrogen applied to assess groundwater quality). They are easy to implement but generally not highly accurate. Conversely, effect-based indicators represent effects of agricultural practices according to the context in which they are implemented. They are more accurate but require more information about the production system, soil, climate, and sometimes the socioeconomic context (e.g., reflecting nitrate in the soil and losses to groundwater). In the models presented in this article and in agreement with Bockstaller et al. (2008), designers used effect-based indicators as much as possible by taking advantage of the available knowledge while maintaining relative simplicity in their use. Levels of precision (and complexity) that can be considered when looking for effect-based indicators are presented in the typology of Bockstaller et al. (2008). It is based on a cause-effect chain that characterizes the degree of process integration that connects practices to final impact.

3.4 Parameterization process

Designers of DEXi models have a certain degree of freedom to facilitate users’ contextualization and ownership of assessments. Local adaptations of parameter settings allow users to integrate soil, climate, and socioeconomic characteristics, as well as their own visions of sustainable development. The structure of the previously established criteria tree (see section 3.2) is generally viewed as generic, while the choice of indicators and thresholds and criteria weighting are mainly locally based references allowing contextual use of the model tree.

3.4.1 Flexibility in indicators

Models presented in this article generally allow users to replace default indicators with other indicators if they consider them better suited to climatic and soil conditions or more compatible with the information available (e.g., the shift between ex ante and ex post indicators). In this case, separating the satellite tree (i.e., mixed indicator) from the main tree (composed of criteria that refer to sustainability concerns) in the software makes user modification easier without removing sustainability criteria.

3.4.2 Flexibility in threshold values

Threshold values greatly influence the qualitative value (e.g., “favorable”/“unfavorable”) assigned to calculated or measured values (Lancker and Nijkamp 2000; Riley and Fielding 2001). Designers use two types of threshold values (Acosta-Alba and van der Werf 2011; Bockstaller et al. 2008):

- Normative values assess systems using predefined values from scientific or policy references (e.g., nitrate losses to water). These values should be set by designers rather than locally adapted by users.

- Relative values must be locally adapted by users, either because the performance evaluated is inherently subjective and thus involves considering stakeholder preferences (e.g., threshold values for profitability), or because there is no standard value and the performance is strongly associated with the assessment context (e.g., threshold values for irrigation pressure on local water resources). In the latter case, threshold values are set by positioning the performance among those observed locally or in similar systems.
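
The distinction between the two types of thresholds can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the function names, the class labels, and the numeric values do not come from any of the models reviewed here, and the equal-frequency quantile rule is only one possible way of “positioning the performance among those locally observed.”

```python
from bisect import bisect_right
from statistics import quantiles


def classify(value, thresholds, labels):
    """Map a quantitative value to a qualitative class.

    thresholds: ascending cut points; labels: one more item than thresholds,
    ordered from the class given to the lowest values to the highest.
    """
    if len(labels) != len(thresholds) + 1:
        raise ValueError("need exactly one more label than thresholds")
    return labels[bisect_right(thresholds, value)]


def relative_thresholds(local_values, n_classes=3):
    """Derive cut points from locally observed performances.

    Assumed rule: equal-frequency quantiles of the local sample.
    """
    return quantiles(local_values, n=n_classes)


# Normative thresholds: fixed by designers (illustrative numbers only).
NITRATE_CUTS = [20.0, 40.0]  # hypothetical nitrate loss, kg N/ha/year
NITRATE_LABELS = ["favorable", "medium", "unfavorable"]  # low loss is good

# Relative thresholds: derived from a hypothetical local sample of margins.
local_margins = [310, 420, 450, 520, 580, 640, 700, 810, 900]  # EUR/ha
margin_cuts = relative_thresholds(local_margins)
MARGIN_LABELS = ["unfavorable", "medium", "favorable"]  # high margin is good

nitrate_class = classify(35.0, NITRATE_CUTS, NITRATE_LABELS)
margin_class = classify(750, margin_cuts, MARGIN_LABELS)
print(nitrate_class, margin_class)
```

In this sketch, the normative cut points are constants that users would not edit, whereas the relative cut points are recomputed whenever the local reference sample changes.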

3.4.3 Flexibility in the aggregation process

In a DEXi model, the relative importance of each criterion to overall sustainability can be set by adjusting its weight. Because DEXi can express weights as percentages, it is strongly advised to check the underlying aggregation decision rules that correspond to this weighting in the contingency tables (see Fig. 1 and section 3.5). To facilitate future use of these models, default contingency tables usually place equal importance on each sub-criterion (e.g., 33 % for each main branch representing a pillar of sustainable development). However, as pointed out in Table 1 and by several authors (Andreoli and Tellarini 2000; Riley 2001), it is important not to impose standard weights, so that stakeholders can participate and integrate their perception of sustainable development in the parameterization process. According to Sadok et al. (2009) and Pelzer et al. (2012), users should be prevented from modifying weights in two locations: (1) sustainability dimensions (no null values), to avoid distorting the sustainability intended by model designers, and (2) “satellite trees”, especially those that assess a biophysical process, since they were previously defined through expert/scientific knowledge and are considered independent of the preferences of stakeholders and users. Producing a “satellite tree” to separate the main decision tree from its indicators in the same decision support system makes it easier for users to modify weights in the main tree (Fig. 7).
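
Why the decision rules behind a set of percentage weights deserve checking can be shown with a small Python sketch. It relies on a simplifying assumption that is not taken from DEXi: each rule's outcome is the weighted mean of the input class ranks, rounded to the nearest class. DEXi's own procedure for deriving rules from weights may differ, and the criterion names and weights below are illustrative only.

```python
from itertools import product

SCALE = ["low", "medium", "high"]  # ordinal scale shared by all criteria (assumption)


def rule_table(weights):
    """Build a contingency table (one rule per input combination) from weights.

    Assumed rule: weighted mean of input ranks, rounded half up.
    """
    total = sum(weights.values())
    names = list(weights)
    table = {}
    for combo in product(range(len(SCALE)), repeat=len(names)):
        score = sum(weights[n] * r for n, r in zip(names, combo)) / total
        out = int(score + 0.5)  # round half up to the nearest class rank
        table[tuple(SCALE[r] for r in combo)] = SCALE[out]
    return names, table


# Default parameterization: equal importance for the three pillars.
names, equal = rule_table({"economic": 1, "social": 1, "environmental": 1})

# Hypothetical stakeholder preference: environmental pillar weighted at 50 %.
_, env_first = rule_table({"economic": 25, "social": 25, "environmental": 50})

# Rules whose outcome differs between the two weightings.
changed = {k: (equal[k], env_first[k]) for k in equal if equal[k] != env_first[k]}
for inputs, (before, after) in sorted(changed.items()):
    print(dict(zip(names, inputs)), before, "->", after)
```

Listing the rules that change makes the practical meaning of a weight visible: a percentage only matters through the combinations of sub-criterion values whose aggregated class it alters.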

3.4.4 Paying attention to partners’ viewpoints

The parameterization process, whether performed by designers or users, is usually based on consensus. Unfinished or poorly managed parameterization can yield parameter values that represent stakeholders’ preferences inconsistently. If consensus is not possible, different parameter sets can be tested to represent the diversity of viewpoints (Colomb et al. 2013; Munda et al. 1994). Regardless of the choices made at this stage, procedural transparency is absolutely necessary to avoid criticism during the assessment phase.

3.5 Evaluation

A variety of methods can be used to verify the relevance and quality of a parameterized model with respect to its objectives (Wallach et al. 2014). Although sustainability assessment models, mainly because of their inherent subjectivity, cannot be assessed in the same way as conventional simulation models, analysis of their quality is nonetheless important. In agreement with Bockstaller and Girardin (2003), we propose a three-step procedure that evaluates the model’s (1) structure, (2) outputs, and (3) usefulness to users.

3.5.1 Evaluating the relevance of model structure

The first evaluation step is to check that potential users who were not involved in the design process understand and accept the model. A second step is to use sensitivity analysis to clarify the effects of a tree’s structure on final results and to assess its ability to distinguish the performance of production systems. Sensitivity analyses are one way to gain confidence in models and increase their transparency (Bergez 2013; Singh et al. 2012). To perform this step, sensitivity analysis tools adapted to hierarchical qualitative models built in DEXi were recently developed (Bergez 2013; Carpani et al. 2012) and are available to designers and users (http://wiki.inra.fr/wiki/deximasc/Interface+IZI-EVAL/Accueil). Although results of this type of analysis require case-by-case interpretation, some generic lessons can be drawn from their use on existing models: (1) the greater the number of criteria, the lower the influence of individual criteria on the overall result; (2) the greater the number of hierarchical levels, the lower the influence of the basic criteria on the overall result. Thus, a model will be more sensitive to changes in input criteria values if its structure is simple and focused on the criteria of interest. In addition, the way in which utility functions are filled in also plays an important role. For a specific set of weights, vetoes can prevent one criterion’s high value from compensating for another criterion’s low value. When dealing with social choices, the use of vetoes for low values is common when one considers the degree to which a goal is satisfied (Tsoukiàs et al. 2002). For example, Bockstaller et al. (1997) pointed out that there is no reason for a low value for one criterion (e.g., water quality) to be offset by a high value for another (e.g., air quality). However, while vetoes can express legitimate preferences when parameterizing a DEXi model, they should not be overused; otherwise, overall sustainability will always receive a low value as soon as one sub-criterion has a low value. This illustrates that the design of a sustainability assessment model results from a trade-off between considering stakeholder preferences and preserving the model’s ability to distinguish systems.
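
The generic lessons above can be reproduced on toy trees with a short Python sketch. It is not the IZI-EVAL tool, nor the method of Carpani et al. (2012) or Bergez (2013): it simply enumerates every input combination and counts how often changing one input changes the root class. The three-level scale, the rounded-mean aggregation rule, the veto rule, and all function names are assumptions made for illustration.

```python
from itertools import product

LEVELS = 3  # class ranks: 0 (low), 1 (medium), 2 (high)


def mean_rule(children):
    """Compensatory rule: mean of child ranks, rounded half up."""
    return int(sum(children) / len(children) + 0.5)


def veto_rule(children):
    """Non-compensatory rule: any 'low' child forces a 'low' result."""
    return 0 if 0 in children else mean_rule(children)


def flat(x):
    """One-level tree: the root aggregates all basic criteria directly."""
    return mean_rule(x)


def flat_veto(x):
    """Same structure as flat, but every criterion carries a veto."""
    return veto_rule(x)


def nested(x):
    """Two-level tree: x[0] feeds the root; the other inputs pass through a sub-node."""
    return mean_rule((x[0], mean_rule(x[1:])))


def influence(model, n_inputs, i):
    """Share of one-at-a-time changes to input i that change the root class."""
    changed = total = 0
    for x in product(range(LEVELS), repeat=n_inputs):
        base = model(x)
        for v in range(LEVELS):
            if v == x[i]:
                continue
            y = x[:i] + (v,) + x[i + 1:]
            total += 1
            changed += model(y) != base
    return changed / total


def share_low(model, n_inputs):
    """Share of all input combinations for which the root class is 'low'."""
    configs = list(product(range(LEVELS), repeat=n_inputs))
    return sum(model(x) == 0 for x in configs) / len(configs)


# Lesson 1: more criteria under a node, less influence for each of them.
infl_flat2 = influence(flat, 2, 0)
infl_flat4 = influence(flat, 4, 0)

# Lesson 2: a criterion placed deeper in the tree has less influence.
infl_top = influence(nested, 3, 0)   # input attached directly to the root
infl_deep = influence(nested, 3, 1)  # input one level further down

# Vetoes: overuse pushes most systems into the lowest class.
low_mean = share_low(flat, 4)
low_veto = share_low(flat_veto, 4)

print(infl_flat2, infl_flat4, infl_top, infl_deep, low_mean, low_veto)
```

Exhaustive enumeration is only feasible for small trees; for models with many basic criteria, sampling-based approaches such as those cited above are more realistic.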

3.5.2 Evaluating the relevance of model outputs

Users’ full acceptance of outputs is based mainly on comparing them with ex post assessments of well-known systems by experts or stakeholders. For large and inexplicable discrepancies, one should review model structure and/or parameterization. This increases confidence in the model and acceptance of results for innovative, less well-known systems.
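
A comparison of this kind can be organized as a simple agreement check, sketched below in Python. The five-class scale, the system names, the class values, and the one-class tolerance used to flag “large” discrepancies are all hypothetical.

```python
SCALE = ["very low", "low", "medium", "high", "very high"]
RANK = {label: i for i, label in enumerate(SCALE)}


def compare(model_out, expert_out, max_gap=1):
    """Compare model classes with expert classes for the same systems.

    Returns the share of exact agreements and the systems whose classes
    differ by more than max_gap classes (candidates for model review).
    """
    gaps = {s: RANK[model_out[s]] - RANK[expert_out[s]] for s in model_out}
    agreement = sum(g == 0 for g in gaps.values()) / len(gaps)
    flagged = sorted(s for s, g in gaps.items() if abs(g) > max_gap)
    return agreement, flagged


# Hypothetical ex post assessments of four well-known systems.
model_out = {"A": "high", "B": "medium", "C": "low", "D": "very high"}
expert_out = {"A": "high", "B": "high", "C": "low", "D": "medium"}

agreement, flagged = compare(model_out, expert_out)
print(agreement, flagged)
```

Systems flagged in this way are those for which the structure or parameterization of the model would be re-examined with the experts concerned.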

It is important to focus on more than just the relevance of the value of the model’s most aggregated criterion. The model also provides outputs for each aggregated criterion of the decision tree. Analyzing outputs of aggregated and basic criteria helps designers understand results of the most aggregated criteria and evaluate model quality. This in-depth analysis also provides an opportunity to study and compare strengths and weaknesses of systems.

As with other decision support tools, evaluating the relevance of model outputs increases the credibility, saliency, legitimacy, and transparency of the whole process (Cash et al. 2003).

3.5.3 Evaluating model usefulness in real situations

Beyond the model’s ability to provide assessments consistent with those of experts or stakeholders, one must ensure that it is actually used and that its use supports users’ work. This latter form of evaluation requires that designers ask for feedback from users (whether or not they participated in the design process) to analyze their feelings, requirements, and questions about the evaluation method. To facilitate acquisition of feedback, most designers release prototypes of their models. Collection and analysis of feedback from MASC’s first users (Craheix et al. 2011) led to significant changes in MASC’s second version, such as a broader scope of model use (e.g., for ex post evaluation), more evaluation criteria, and more flexibility in parameterization to better reflect both stakeholder preferences and socioeconomic and contextual characteristics.

3.6 Model dissemination and uses

Dissemination and use approaches are often related to the design approach (Tsoukiàs et al. 2002). Models based on a constructivist approach are usually intended to be used only by those involved in the design process. The interest of such an approach lies in the design process itself, which strongly focuses on sharing knowledge and contextualizing preferences between participants. Conversely, in approaches that aim for more generic modeling, dissemination of the models developed depends on the type and potential number of users and on the resources available to provide access to the models. Models developed for researchers (e.g., DEXi-PM) are often disseminated less than those developed for extension agents (e.g., MASC). For the latter, diffusion is often accompanied by companion tools (e.g., website, license, training, user-friendly interfaces, tutorials). The models presented in this article were often used in applications more diverse than those originally envisaged by the designers (Craheix et al. 2012). Thus, while MASC 1.0 was initially developed to select sustainable systems before testing them in the field, the model was used much more often in ex post assessment, for instance to diagnose agricultural systems as part of an improvement process, to communicate results of field experiments, to find innovative systems in a given territory, or to identify potential barriers to their adoption. This reinforces the importance of not standardizing model use and of leaving a degree of freedom in model parameterization to facilitate its adaptation to different contexts (see section 3.4).

4 General considerations

4.1 Advantages/drawbacks of qualitative methods to assess agricultural system sustainability

By analyzing the many and diverse uses of this qualitative decision aid method, this article confirms its overall relevance for assessing sustainability of agricultural systems. The main features that motivated the selection of qualitative methods, according to Sadok et al. (2008), proved effective in real-life situations. Unlike most simulation models or decision support methods, models such as those designed with DEXi can include and aggregate both qualitative and quantitative values after discretization. These features make qualitative decision aid methods highly suitable for designing sustainability assessment models by:

1. Capturing uncertainties inherent in sustainability assessment, especially ex ante;

2. More realistically integrating the decision-maker’s own views, since they are not necessarily expressed with formal, quantitative models (Dent et al. 1995; Munda 2005);

3. Handling incomparability, incommensurability, and non-compensation between sustainability dimensions more effectively than other, more “classic” MCDA approaches frequently used to assess sustainability, such as ELECTRE (Loyce et al. 2002; Mazzetto and Bonera 2003), AHP (Shrestha et al. 2004) or multi-objective optimization models (Dogliotti et al. 2004; Meyer-Aurich 2005).

However, the design of qualitative models can still be challenged. For instance, models based on qualitative and discrete variables are less sensitive than models based on quantitative and continuous variables (Bohanec et al. 2008). This indicates the need for additional vigilance when building models to limit this loss of sensitivity. The use of discrete variables sometimes causes abrupt transitions between classes, which can lead to ranking agricultural systems with similar performances differently. Correctly choosing the thresholds between qualitative classes is a major difficulty in this type of modeling.
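
One possible way to exercise this vigilance is to flag values that sit close to a class boundary, as in the Python sketch below. The check shown (shifting every threshold by ±5 % and testing whether the class changes) is an assumption introduced here for illustration, not a procedure described in the models reviewed; thresholds and performance values are hypothetical.

```python
from bisect import bisect_right

LABELS = ["unfavorable", "medium", "favorable"]


def classify(value, cuts):
    """Assign a qualitative class from ascending cut points."""
    return LABELS[bisect_right(cuts, value)]


def is_borderline(value, cuts, tolerance=0.05):
    """True if shifting all cut points by +/- tolerance changes the class."""
    base = classify(value, cuts)
    lower = [c * (1 - tolerance) for c in cuts]
    upper = [c * (1 + tolerance) for c in cuts]
    return any(classify(value, shifted) != base for shifted in (lower, upper))


cuts = [500.0, 800.0]              # hypothetical thresholds
system_a, system_b = 495.0, 505.0  # two systems with similar performance

# Similar performances, different classes: the abrupt transition in question.
print(classify(system_a, cuts), classify(system_b, cuts))

# Both values are flagged, whereas a value well inside a class is not.
print(is_borderline(system_a, cuts), is_borderline(system_b, cuts),
      is_borderline(650.0, cuts))
```

Flagged values can then be discussed with stakeholders before the difference in class is interpreted as a real difference in performance.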

4.2 Role and place of the participatory approach during the design process

An extensive body of literature addresses the participatory processes involved in designing models for decision support, especially when the models aim for more sustainable development (Etienne et al. 2011; Valentin and Spangenberg 2000). Regarding decision support software in general, several authors point to users’ lack of involvement in the design process to explain the infrequent adoption of models developed by agronomists acting as decision support system designers (Cerf et al. 2012; McCown et al. 1996; Prost et al. 2012). The most common reasons cited for these failures are inadequate consideration of the concerns and challenges users encounter, their technical or organizational constraints, and their expectations about the accuracy of results for decision-making. These observations argue for stronger dialogue between designers and users as early as possible in the design process.

Regarding sustainability assessment models, the literature is much more nuanced about the suitable degree of stakeholder involvement during the design process. Designing a method to assess sustainability involves combining both scientific and more subjective knowledge. The use of scientific norms is necessary to consider the requirements (economic, social, and environmental) for the long-term continuity of the system and the levels of organization with which it interacts. It is also essential to use more subjective information such as cultural, political, and ethical references when choosing the proper criteria, their relative importance, and how to evaluate them (Burford et al. 2013; Rametsteiner et al. 2011; Singh et al. 2012). The inevitable tension between expert knowledge and stakeholders’ preferences argues for a collaborative approach that lies between a “top-down” approach, which leaves decision-making power to experts, and a “bottom-up” approach, which leaves decision-making power to locally involved stakeholders.

More generally, and as illustrated by Funtowicz and Ravetz (1994), when one encounters a decision problem characterized by strong social challenges and great uncertainty, as is the case for sustainability assessment, the quality of the process leading to the decision is as important as the decision itself. The model may act as a boundary object by creating a connection between the stakeholders involved in its design. Co-learning, which the model can facilitate, involves reframing beliefs, assumptions, and expectations about the problem and allows those involved to arrive at an increasingly shared understanding of it (Jakku and Thorburn 2010; Voinov and Bousquet 2010). The design process can be seen as an occasion for stakeholders to learn from each other and to better understand their interdependency.

4.3 Simplicity/flexibility/transparency

As noted above, a sustainability assessment model is more likely to be used if it is designed with a participatory approach. To this end, simplicity, flexibility, and transparency are key principles that must be considered as early as possible and at all stages of the design process (e.g., choice of computer formalism for decision support, choice of indicators, number and type of criteria to be considered).

Simplification, which is the very basis of the modeling exercise, plays an even more important role when designing a sustainability assessment model. In our experience, after gathering as much available knowledge as possible in the assessment, design groups generally refocus on the most important aspects. This search for simplicity is motivated by the desire to produce a “readable artifact” that will enable designers and users to better understand system complexity and guide their actions (Beguin et al. 2010). As mentioned by Bell and Morse (2008), modeling of sustainability must be a trade-off between necessary simplification and, at the same time, having models that are meaningful. The search for simplicity should also be considered when choosing the decision support system. To this end, DEXi software, with its user-friendly interface and simple aggregation approach, allows designers to quickly gain confidence in their ability to design new models (Colomb et al. 2013) and greatly facilitates model dissemination.

Flexibility in parameterization allows the model to better fit the characteristics of the assessment exercise. This principle must be carefully considered when the assessment model is not designed for a single purpose but rather for use in different contexts (Jakku and Thorburn 2010). To this end and in agreement with Torres (2002), it is important to select parameters that refer to the mandatory dimensions of sustainability to guarantee continuity of the system and its environment over time. Conversely, everything that refers to stakeholder preferences and broader contextualization of the assessment must remain adaptable. This aspect is essential because priority issues differ among agricultural regions and are not expressed with the same intensity. A given performance (measured or calculated) can be judged differently depending on local potential and the room for improvement. Lastly, as observed in our diverse experiences, flexibility contributes strongly to the potential involvement of stakeholders in the evaluation process.

The search for maximum procedural transparency is a fundamental ethical principle (Valentin and Spangenberg 2000; Voinov and Bousquet 2010), especially when it is a collective modeling process based on subjectivity. In this respect, it is important to record the reasons behind modeling choices and voluntarily provide them for collective evaluation by designers and users. This reduces the suspicion of manipulation, increases stakeholder understanding, and promotes their involvement, particularly when they can modify some input values of the assessment in the event of disagreement (Etienne 2011). Transparency tends to improve the overall quality of the process by encouraging designers to formalize their thoughts more clearly.

5 Conclusions

Given the diversity and complexity of the challenges facing agriculture, sustainability assessment models are increasingly regarded as a suitable means for identifying pathways of progress. By reviewing multiple sustainability assessments from the literature, this article offers an approach to facilitate development of new models designed with the DEXi software. Its recommendations on the design process are also relevant for other development software. According to this article, constructing a normative and universal assessment model of sustainability is clearly not possible because of the inherently abstract, context-dependent, and subjective nature of sustainability. These features, which are also the strength of sustainability, argue for creating design groups composed of experts and stakeholders with diverse knowledge and viewpoints. The resulting intersubjective nature of the model will then more easily counter the critique of subjectivity that is usually associated with model design. In this respect, the models and their results should not be presented as intangible material but rather as a medium for reflection and knowledge sharing that will guide changes.

References

Acosta-Alba I, van der Werf HMG (2011) The use of reference values in indicator-based methods for the environmental assessment of agricultural systems. Sustainability 3:424–442. doi:10.3390/su3020424

Alkan Olsson J, Bockstaller C, Stapleton L, Knapen R, Therond O, Turpin N, Geniaux G, Bellon S, Pinto Correia T, Bezlepkina I, Taverne M, Ewert F (2009) Indicator frameworks supporting ex-ante impact assessment of new policies for rural systems; a critical review of a goal oriented framework and its indicators. Environ Sci Policy 12:562–572

Andreoli M, Tellarini V (2000) Farm sustainability evaluation: methodology and practice. Agric Ecosyst Environ 77:43–52. doi:10.1016/S0167-8809(99)00091-2

Barcellini F, Prost L, Cerf M (2015) Designers’ and users’ roles in participatory design: what is actually co-designed by participants? Appl Ergon 50:31–40. doi:10.1016/j.apergo.2015.02.005

Beguin P, Cerf M, Prost L (2010) Co-design as a distributed dialogical design. BOKU - University of Natural Resources and Applied Life Sciences, Vienna, Austria

Bell S, Morse S (2008) Sustainability indicators: measuring the immeasurable? Earthscan

Bergez JE (2013) Using a genetic algorithm to define worst-best and best-worst options of a DEXi-type model: application to the MASC model of cropping-system sustainability. Comput Electron Agric 90:93–98. doi:10.1016/j.compag.2012.08.010

Biggs S (1989) Resource-poor farmer participation in research: a synthesis of experiences from nine national agricultural research systems. OFCOR comparative study paper, vol. 3. International Service for National Agricultural Research, The Hague.

Bockstaller C, Girardin P (2003) How to validate environmental indicators. Agric Syst 76:639–653. doi:10.1016/S0308-521X(02)00053-7

Bockstaller C, Girardin P, van der Werf HM (1997) Use of agro-ecological indicators for the evaluation of farming systems. Eur J Agron 7:261–270. doi:10.1016/S1161-0301(97)00041-5

Bockstaller C, Guichard L, Makowski D, Aveline A, Girardin P, Plantureux S (2008) Agri-environmental indicators to assess cropping and farming systems. A review. Agron Sustain Dev 28:11. doi:10.1051/agro:2007052

Bockstaller C, Guichard L, Keichinger O, Girardin P, Galan M-B, Gaillard G (2009) Comparison of methods to assess the sustainability of agricultural systems. A review. Agron Sustain Dev 29:13. doi:10.1051/agro:2008058

Bohanec M (2013) DEXi: Program for multi-criteria decision making, user’s manual, Version 4.0. IJS Report DP-11340, Jožef Stefan Institute, Ljubljana. Available at: http://kt.ijs.si/MarkoBohanec/pub/DEXiManual400.pdf

Bohanec M, Zupan B (2004) A function-decomposition method for development of hierarchical multi-attribute decision models. Decis Support Syst 36(3):215–233. doi:10.1016/S0167-9236(02)00148-3

Bohanec M, Zupan B, Rajkovic V (2000) Applications of qualitative multi attribute decision models in health care. Int J Med Informat 58–59:191–205.

Bohanec M, Cortet J, Griffiths B, Žnidaršič M, Debeljak M, Caul S, Thompson J, Krogh PH (2007) A qualitative multi-attribute model for assessing the impact of cropping systems on soil quality. Pedobiologia 51:239–250. doi:10.1016/j.pedobi.2007.03.006

Bohanec M, Messéan A, Scatasta S, Angevin F, Griffiths B, Krogh PH, Žnidaršič M, Džeroski S (2008) A qualitative multi-attribute model for economic and ecological assessment of genetically modified crops. Ecol Model 215:247–261. doi:10.1016/j.ecolmodel.2008.02.016

Bohanec M, Rajkovič V, Bratko I, Zupan B, Žnidaršič M (2013) DEX methodology: three decades of qualitative multi-attribute modelling. Informatica 37:49–54

Bouyssou D, Marchant T, Pirlot M, Tsoukiàs A, Vincke P (2006) Evaluation and decision models with multiple criteria: stepping stones for the analyst. 1st Edition. International Series in Operations Research and Management Science, Volume 86. Boston

Burford G, Hoover E, Velasco I, Janoušková S, Jimenez A, Piggot G, Podger D, Harder MK (2013) Bringing the “missing pillar” into sustainable development goals: towards intersubjective values-based indicators. Sustainability 5:3035–3059. doi:10.3390/su5073035

Carpani M, Bergez JE, Monod H (2012) Sensitivity analysis of a hierarchical qualitative model for sustainability assessment of cropping systems. Environ Model Softw 27(2):15–22. doi:10.1016/j.envsoft.2011.10.002

Cash DW, Clark WC, Alcock F, Dickson NM, Eckley N, Guston DH, Jäger J, Mitchell RB (2003) Knowledge systems for sustainable development. Proc Natl Acad Sci U S A 100:8086–8091

Cerf M, Jeuffroy MH, Prost L, Meynard JM (2012) Participatory design of agricultural decision support tools: taking account of the use situations. Agron Sustain Dev 32:899–910. doi:10.1007/s13593-012-0091-z

Colomb B, Bergez JE (2013) Construire une image globale des performances des systèmes de cultures par le biais d’une évaluation multicritère. Buts, principes généraux et exemple. Innovations Agronomiques, 31: 45-60. http://prodinra.inra.fr/record/253059

Colomb B, Carof M, Aveline A, Bergez JE (2013) Stockless organic farming: strengths and weaknesses evidenced by a multicriteria sustainability assessment model. Agron Sustain Dev 33:593–608. doi:10.1007/s13593-012-0126-5

Craheix D, Angevin F, Bergez JE, Bockstaller C, Colomb B, Guichard L, Reau R, Doré T (2011) MASC, a model to assess the sustainability of cropping systems: taking advantage of feedback from the first users. ESA 2012, Helsinki, Finland (2012-08-21 - 2012-08-24).

Craheix D, Angevin F, Bergez JE, Bockstaller C, Colomb B, Guichard L, Reau R, Doré T (2012) MASC 2.0, un outil d’évaluation multicritère pour estimer la contribution des systèmes de culture au développement durable. Innovations Agronomiques 20: 35-48. http://www.inra.fr/ciag/revue/volume_20_juillet_2012. Accessed 15 Oct 2012

Decourtyre A, Gayrard M, Chabert A, Requier F, Rollin O, Odoux J-F, Henry M, Allier F, Cerrutti N, Chaigne G, Petrequin P, Plantureux S, Gaujour E, Emonet E, Bockstaller C, Aupinel P, Michel N, Bretagnolle V (2014) Concevoir des systèmes de cultures innovants favorables aux abeilles. Innovations Agronomiques 34:19–34

Dent JB, Edwards-Jones G, McGregor MJ (1995) Simulation of ecological, social and economic factors in agricultural systems. Agric Syst 49:337–351

Dogliotti S, Rossing W, Van Ittersum M (2004) Systematic design and evaluation of crop rotations enhancing soil conservation, soil fertility and farm income: a case study for vegetable farms in South Uruguay. Agric Syst 80:277–302

Dubuc M, Gary C, Métral R, Sauvage D (2014) DEXIPM Vigne, un outil pour aider les viticulteurs dans leurs démarches d’innovations techniques. Vinopôle Sud-Bourgogne, p. 21

Etienne M (Ed.) (2011) Companion modelling. A participatory approach to support sustainable development. Versailles: Quæ. 368pp. ISBN: 978-2-7592-0922-4

Etienne M, Du Toit DR, Pollard S (2011) ARDI: a co-construction method for participatory modeling in natural resources management. Ecol Soc 16:44

Floyd (1999) When is quantitative data collection appropriate in farmer participatory research and development? Who should analyze the data and how? Farmer participatory research and development. Agricultural research and extension network. Network Paper No. 92: 8-14

Funtowicz SO, Ravetz JR (1994) Uncertainty, complexity and post-normal science. Environ Toxicol Chem 13:1881–1885. doi:10.1002/etc.5620131203

Goma H, Rahim K, Nangendo G, Riley J, Stein A (2001) Participatory studies for agro-ecosystem evaluation. Agric Ecosyst Environ 87:179–190. doi:10.1016/S0167-8809(01)00277-8

Gras R, Benoit M, Deffontaines JP, Duru M, Lafarge M, Langlet A, Osty PL (1989) Le fait technique en agronomie. Activité agricole, concepts et méthodes d’étude. Institut National de la Recherche Agronomique, L’Harmattan, Paris, France.

Hansen J (1996) Is agricultural sustainability a useful concept? Agric Syst 50:117–143

Ikerd JE (1993) The need for a system approach to sustainable agriculture. Agric Ecosyst Environ 46:147–160. doi:10.1016/0167-8809(93)90020-P

Jakeman AJ, Letcher RA, Norton JP (2006) Ten iterative steps in development and evaluation of environmental models. Environ Model Softw 21:602–614. doi:10.1016/j.envsoft.2006.01.004

Jakku E, Thorburn PJ (2010) A conceptual framework for guiding the participatory development of agricultural decision support systems. Agric Syst 103:675–682. doi:10.1016/j.agsy.2010.08.007

Lancker E, Nijkamp P (2000) A policy scenario analysis of sustainable agricultural development options: a case study for Nepal. Impact Assess Project Appraisal 18:111–124. doi:10.3152/147154600781767493

López-Ridaura S, Keulen HV, Ittersum MK, Leffelaar PA (2005) Multiscale methodological framework to derive criteria and indicators for sustainability evaluation of peasant natural resource management systems. Environ Dev Sustain 7:51–69. doi:10.1007/s10668-003-6976-x

Loyce C, Rellier J, Meynard J (2002) Management planning for winter wheat with multiple objectives (1): the BETHA system. Agric Syst 72:9–31

Mazzetto F, Bonera R (2003) MEACROS: a tool for multi-criteria evaluation of alternative cropping systems. Eur J Agron 18:379–387. doi:10.1016/S1161-0301(02)00127-2

McCown R, Hammer G, Hargreaves J, Holzworth D, Freebairn D (1996) APSIM: a novel software system for model development, model testing and simulation in agricultural systems research. Agric Syst 50:255–271

Mendoza GA, Prabhu R (2003) Qualitative multi-criteria approaches to assessing indicators of sustainable forest resource management. Forest Ecol Manag 174:329–343. doi:10.1016/S0378-1127(02)00044-0

Meyer-Aurich A (2005) Economic and environmental analysis of sustainable farming practices—a Bavarian case study. Agric Syst 86:190–206. doi:10.1016/j.agsy.2004.09.007

Moldan B, Janoušková S, Hák T (2012) How to understand and measure environmental sustainability: indicators and targets. Ecol Indic 17:4–13. doi:10.1016/j.ecolind.2011.04.033

Mouron P, Heijne B, Naef A, Strassemeyer J, Hayer F, Avilla J, Alaphilippe A, Höhn H, Hernandez J, Mack G, Gaillard G, Solé J, Sauphanor B, Patocchi A, Samietz J, Bravin E, Lavigne C, Bohanec M, Golla B, Scheer C, Aubert U, Bigler F (2012) Sustainability assessment of crop protection systems: SustainOS methodology and its application for apple orchards. Agric Syst 113:1–15. doi:10.1016/j.agsy.2012.07.004

Munda G (2005) Multiple criteria decision analysis and sustainable development, in: Multiple Criteria Decision Analysis: State of the Art Surveys, International Series in Operations Research & Management Science. Springer New York, pp. 953–986

Munda G, Nijkamp P, Rietveld P (1994) Qualitative multicriteria evaluation for environmental management. Ecol Econ 10:97–112

Pelzer E, Fortino G, Bockstaller C, Angevin F, Lamine C, Moonen C, Vasileiadis V, Guérin D, Guichard L, Reau R, Messéan A (2012) Assessing innovative cropping systems with DEXiPM, a qualitative multi-criteria assessment tool derived from DEXi. Ecol Indic 18:171–182. doi:10.1016/j.ecolind.2011.11.019

Pope J, Annandale D, Morrison-Saunders A (2004) Conceptualising sustainability assessment. Environ Impact Assess Rev 24:595–616. doi:10.1016/j.eiar.2004.03.001

Prost L, Cerf M, Jeuffroy MH (2012) Lack of consideration for end-users during the design of agronomic models. A review. Agron Sustain Dev 32. doi:10.1007/s13593-011-0059-4

Rametsteiner E, Pülzl H, Alkan-Olsson J, Frederiksen P (2011) Sustainability indicator development—science or political negotiation? Ecol Indic 11:61–70. doi:10.1016/j.ecolind.2009.06.009

Rigby D, Caceres D (2001) Organic farming and the sustainability of agricultural systems. Agric Syst 68:21–40

Riley J (2001) Multidisciplinary indicators of impact and change: key issues for identification and summary. Agric Ecosyst Environ, Papers from the European Union Concerted Action: Unification of Indicator Quality for the Assessment of Impact of Multidisciplinary Systems (UNIQUAIMS) 87:245–259. doi:10.1016/S0167-8809(01)00282-1

Riley J, Fielding WJ (2001). An illustrated review of some farmer participatory research techniques. Journal of Agricultural, Biological, and Environmental Statistics 6. doi:10.1198/108571101300325210

Sadok W, Angevin F, Bergez JE, Bockstaller C, Colomb B, Guichard L, Reau R, Doré T (2008) Ex ante assessment of the sustainability of alternative cropping systems: implications for using multi-criteria decision-aid methods. A review. Agron Sustain Dev 28:163–174. doi:10.1051/agro:2007043

Sadok W, Angevin F, Bergez JE, Bockstaller C, Colomb B, Guichard L, Reau R, Messéan A, Doré T (2009) MASC, a qualitative multi-attribute decision model for ex ante assessment of the sustainability of cropping systems. Agron Sustain Dev 29:447–461. doi:10.1051/agro/2009006

Salles P (2007) Conception d’un outil d’évaluation multicritère de la durabilité des successions en systèmes légumiers de plein champ : DEXI-Légumes. Rennes, Agrocampus.

Sebillotte M (1990) Système de culture, un concept opératoire pour les agronomes. In: Combe L, Picard D (eds) Le point sur… les systèmes de culture. INRA Editions, Paris, pp 165–196

Sester M, Craheix D, Daudin G, Sirdey N, Scopel E, Angevin F (2015) Évaluer la durabilité de systèmes de culture en agriculture de conservation à Madagascar (région du lac Alaotra) avec MASC-Mada. Cahiers Agricultures 24:123–133

Shrestha RK, Alavalapati JRR, Kalmbacher RS (2004) Exploring the potential for silvopasture adoption in south-central Florida: an application of SWOT–AHP method. Agric Syst 81:185–199. doi:10.1016/j.agsy.2003.09.004

Singh RK, Murty HR, Gupta SK, Dikshit AK (2012) An overview of sustainability assessment methodologies. Ecol Indic 15:281–299. doi:10.1016/j.ecolind.2011.01.007

Smith C, McDonald G (1998) Assessing the sustainability of agriculture at the planning stage. J Environ Manage 52:15–37. doi:10.1006/jema.1997.0162

Tilman D, Cassman KG, Matson PA, Naylor R, Polasky S (2002) Agricultural sustainability and intensive production practices. Nature 418:671–677. doi:10.1038/nature01014

Tirolien J, Blazy JM (2010) Multi-attribute assessment of the sustainability of innovative banana cropping systems in Guadeloupe: adaptation and implementation of the MASC method. Poster, 46th Annual meeting of the Caribbean Food Crops Society, Boca Chica, Dominican Republic, 11-17

Torres E (2002) Adapter localement la problématique du développement durable : rationalité procédurale et démarche-qualité. Développement durable et territoires. Économie, géographie, politique, droit, sociologie. doi:10.4000/developpementdurable.878

Tsoukiàs A, Perny P, Vincke P (2002) From concordance/discordance to the modelling of positive and negative reasons in decision aiding. In: Bouyssou D, Jacquet-Lagrèze E, Perny P, Slowinski R, Vanderpooten D, Vincke P (eds) Aiding decisions with multiple criteria: essays in honor of Bernard Roy. Kluwer, Dordrecht, pp 147–174

United Nations (1996) Indicators of sustainable development, frameworks and methodologies. United Nations, New York (sales no. E.96.II.A.16). ISBN 9211044707

Valentin A, Spangenberg JH (2000) A guide to community sustainability indicators. Environ Impact Assess Rev 20:381–392. doi:10.1016/S0195-9255(00)00049-4

Van der Werf HM, Petit J (2002) Evaluation of the environmental impact of agriculture at the farm level: a comparison and analysis of 12 indicator-based methods. Agric Ecosyst Environ 93:131–145. doi:10.1016/S0167-8809(01)00354-1

Vasileiadis VP, Moonen AC, Sattin M, Otto S, Pons X, Kudsk P, Veres A, Dorner Z, van der Weide R, Marraccini E, Pelzer E, Angevin F, Kiss J (2013) Sustainability of European maize-based cropping systems: economic, environmental and social assessment of current and proposed innovative IPM-based systems. Eur J Agron 48:1–11

Voinov A, Bousquet F (2010) Modelling with stakeholders. Environ Model Softw Thematic Issue Model Stakeholders 25:1268–1281. doi:10.1016/j.envsoft.2010.03.007

Wallach D, Makowski D, Jones JW, Brun F (eds) (2014) Working with dynamic crop models, 2nd edn. Elsevier, Amsterdam, The Netherlands. ISBN 978-0-444-52135-4

Žnidaršič M, Bohanec M, Zupan B (2006) ProDEX—a DSS tool for environmental decision-making. Environ Model Softw 21:1514–1516. doi:10.1016/j.envsoft.2006.04.003

Zopounidis C, Pardalos PM (2010) Handbook of multicriteria analysis. Springer, Berlin, Heidelberg

Acknowledgements

This research received funds from the European Union Sixth and Seventh Framework Programmes under grant agreements 031499 (ENDURE network) and 265865 (PURE project), from the French National Research Agency (ANR-05-PADD-004), and from the French group of scientific interest “GCHP2E”. The authors also thank those who contributed as designers of the models presented in this article; their work made this article possible.

Additional information

Damien Craheix and Jacques-Eric Bergez contributed equally to this work.

About this article

Cite this article

Craheix, D., Bergez, JE., Angevin, F. et al. Guidelines to design models assessing agricultural sustainability, based upon feedbacks from the DEXi decision support system. Agron. Sustain. Dev. 35, 1431–1447 (2015). https://doi.org/10.1007/s13593-015-0315-0

DOI: https://doi.org/10.1007/s13593-015-0315-0