Abstract

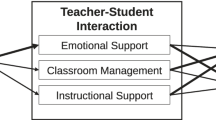

This mixed methods study examines one teacher preparation program’s use of Danielson’s 2007 Framework for Professional Practice, with an emphasis on how different stakeholders in the traditional student teaching triad rated student teachers, called residents, and justified their ratings. Data sources include biannual self-assessments by each resident as well as assessments by the residents’ cooperating teachers and university supervisors based on the Framework, including both a numerical score for each of the 22 indicators and a written justification for the highest and lowest scores in each of the four domains. Findings show significant differences in how stakeholders rated residents’ teaching practice. The variation in scores and rationales raises questions about the reliability and validity of the Framework’s results when it is used as a tool to evaluate student teachers. Implications for practice include the need to consider multiple and potentially conflicting roles, such as providing feedback while also evaluating student teachers. In addition, we consider the costs and benefits of more extensive training on the Framework within teacher preparation, in case a lack of expertise with the rubric caused the variation. Finally, we consider implications for student teachers of the different messages they may be receiving about what it means to learn to teach.

Notes

We could also have used the terms formative and summative to distinguish between assessment and evaluation; however, we chose assessment and evaluation to clarify the distinction between the underlying purposes of different uses of frameworks such as Danielson’s.

The views are those of the authors and not of the USDOE.

The IEA program prepares secondary teachers with special education certification to provide a range of services in various contexts with an emphasis on an inclusive approach to heterogeneous classes, including racial, ethnic, ability, linguistic, and other heterogeneities.

In Danielson’s Framework, the scale includes 1—unsatisfactory, 2—basic, 3—proficient, and 4—distinguished. The program chose to reword the descriptors to recognize that residents were learning these skills, and also eliminated the “distinguished” category because it was seen as unlikely for novice teachers to achieve this level of performance.

The interactive journal is an online document in which residents and supervisors communicated, at minimum, on a bimonthly basis.

References

About edTPA (n.d.). Washington, DC: American Association of Colleges for Teacher Education. Retrieved from http://edtpa.aacte.org/about-edtpa.

Benjamin, W. J. (2002). Development and validation of student teaching performance assessment based on Danielson’s Framework for Teaching. Paper presented at the annual meeting for the American Educational Research Association, New Orleans, LA, 1–5 April 2002.

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education, 5(1), 7–74.

Borko, H., & Mayfield, V. (1995). The roles of the cooperating teacher and university supervisor in learning to teach. Teaching and Teacher Education, 11(5), 501–518.

Bullough, R. V., & Draper, R. J. (2004). Making sense of a failed triad: mentors, university supervisors, and positioning theory. Journal of Teacher Education, 55(5), 407–420.

Clarke, A., Triggs, V., & Nielsen, W. (2014). Cooperating teacher participation in teacher education: a review of the literature. Review of Educational Research, 84(2), 163–202.

Danielson, C. (1996). Enhancing professional practice: a framework for teaching. Alexandria, VA: ASCD.

Danielson, C. (2007a). Enhancing professional practice: a framework for teaching (2nd ed.). Alexandria, VA: ASCD.

Danielson, C. (2007b). The framework for teaching: evaluation instrument. Princeton: The Danielson Group.

Darling-Hammond, L., & Snyder, J. (2000). Authentic assessment of teaching in context. Teaching and Teacher Education, 16(5), 523–545.

Feiman-Nemser, S. (1996). Teacher mentoring: a critical review. ERIC Digest. ED397060.

Feiman-Nemser, S. (2001). From preparation to practice: designing a continuum to strengthen and sustain teaching. The Teachers College Record, 103(6), 1013–1055.

Field, A. (2013). Discovering statistics using IBM SPSS statistics. Thousand Oaks: Sage.

Goodwin, A. L., & Oyler, C. (2008). Teacher educators as gatekeepers: deciding who is ready to teach. In M. Cochran-Smith, S. Feiman-Nemser, & J. McIntyre (Eds.), Handbook of research on teacher education: enduring questions in changing contexts (3rd ed., pp. 468–490). NY: Routledge/Taylor & Francis & Association of Teacher Educators.

Graham, M., Milanowski, A., & Miller, J. (2012). Measuring and promoting inter-rater agreement of teacher and principal performance ratings. Center for Educator Compensation Reform. Retrieved from http://www.educationalimpact.com/resources/TEPC/pdf/TEPC_6_MeasuringandPromotingInterRaterAgreement.pdf.

Hartmann, D. P. (1977). Considerations in the choice of interobserver reliability measures. Journal of Applied Behavior Analysis, 10, 103–116.

Hays, R. D., & Revicki, D. A. (2005). Reliability and validity (including responsiveness). In P. M. Fayers & R. D. Hays (Eds.), Assessing quality of life in clinical trials: methods and practice. New York: Oxford University Press.

Heneman, H. G., III, & Milanowski, A. T. (2003). Continuing assessment of teacher reactions to a standards-based teacher evaluation system. Journal of Personnel Evaluation in Education, 17(2), 173–195.

Hoover, N. L., & O’Shea, L. J. (1987). The influence of a criterion checklist on supervisors’ and interns’ conceptions of teaching. Paper presented at the annual meeting of the American Educational Research Association, Washington, DC.

Kane, T. J., & Staiger, D. O. (2012). Gathering feedback for teaching: combining high-quality observations with student surveys and achievement gains. Policy and Practice Brief. MET Project. Seattle: Bill & Melinda Gates Foundation.

Kane, T. J., Taylor, E. S., Tyler, J. H., & Wooten, A. L. (2010). Identifying effective classroom practices using student achievement data (NBER Working Paper 15803). Retrieved from National Bureau of Economic Research website: http://www.nber.org/papers/w15803.pdf.

Marzano, R. J. (2011). The Marzano Teacher Evaluation Model. Retrieved from http://pages.solution-tree.com/rs/solutiontree/images/MarzanoTeacherEvaluationModel.pdf.

Milanowski, A. (2004). The relationship between teacher performance evaluation scores and student achievement: evidence from Cincinnati. Peabody Journal of Education, 79(4), 33–53.

Milanowski, A. T. (2011). Validity research on teacher evaluation systems based on the Framework for Teaching. Paper presented at the annual meeting of the American Educational Research Association, New Orleans, LA. Abstract retrieved from http://eric.ed.gov/?id=ED520519.

New York State Education Department (2013). Teacher and principal practice rubrics. Retrieved from http://usny.nysed.gov/rttt/teachers-leaders/practicerubrics/#ATPR.

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric theory (3rd ed.). New York: McGraw-Hill.

Odden, A. (2004). Lessons learned about standards-based teacher evaluation systems. Peabody Journal of Education, 79(4), 126–137.

Sartain, L., Stoelinga, S. R., & Krone, E. (2010). Rethinking teacher evaluation: findings from the first year of the excellence in teaching project in Chicago Public Schools. Consortium on Chicago School Research.

Sartain, L., Stoelinga, S. R., & Brown, E. R. (2011). Rethinking teacher evaluation in Chicago: lessons learned from classroom observations, principal-teacher conferences, and district implementation. Research Report. Consortium on Chicago School Research. 1313 East 60th Street, Chicago, IL 60637.

Scriven, M. (1991). Evaluation thesaurus. Thousand Oaks: Sage.

Shepard, L. A. (2000). The role of assessment in a learning culture. Educational Researcher, 29(7), 4–14.

Slick, S. K. (1997). Assessing versus assisting: the supervisor’s roles in the complex dynamics of the student teaching triad. Teaching and Teacher Education, 13(7), 713–726.

Song, K. H. (2006). A conceptual model of assessing teaching performance and intellectual development of teacher candidates: a pilot study in the US. Teaching in Higher Education, 11(2), 175–190.

Soslau, E., & Lewis, K. (2014). Leveraging data sampling and practical knowledge: field instructors’ perceptions about inter-rater reliability data. Action in Teacher Education, 36(1), 20–44.

Stemler, S. E. (2004). A comparison of consensus, consistency, and measurement approaches to estimating interrater reliability. Practical Assessment, Research & Evaluation, 9(4). Retrieved from http://pareonline.net/getvn.asp?v=9&n=4.

Stufflebeam, D. (2001). Evaluation models. New Directions for Evaluation, 89, 7–98.

Tillema, H. H. (2009). Assessment for learning to teach: appraisal of practice teaching lessons by mentors, supervisors, and student teachers. Journal of Teacher Education, 60(2), 155–167.

US Department of Education (2009). Race to the top: executive summary. Retrieved from http://www2.ed.gov/programs/racetothetop/executive-summary.pdf.

Appendix

1.1 Assessment of clinical practice (ACP) domains and indicators

Domain 1: planning and preparation

1A: demonstrating knowledge of content and pedagogy

1B: demonstrating knowledge of students

1C: setting instructional outcomes

1D: demonstrating knowledge of resources

1E: designing coherent instruction

1F: designing student assessments

Domain 2: the classroom environment

2A: creating an environment of respect and rapport

2B: establishing a culture for learning

2C: managing classroom procedures

2D: managing student behavior

2E: organizing physical space

Domain 3: instruction

3A: communicating with students

3B: using questioning and discussion techniques

3C: engaging students in learning

3D: using assessment in instruction

3E: demonstrating flexibility and responsiveness

Domain 4: professional responsibilities

4A: reflecting on teaching

4B: maintaining accurate records

4C: communicating with families

4D: participating in a professional community

4E: growing and developing professionally

4F: showing professionalism

Cite this article

Roegman, R., Goodwin, A.L., Reed, R. et al. Unpacking the data: an analysis of the use of Danielson’s (2007) Framework for Professional Practice in a teaching residency program. Educ Asse Eval Acc 28, 111–137 (2016). https://doi.org/10.1007/s11092-015-9228-3

DOI: https://doi.org/10.1007/s11092-015-9228-3