Abstract

We address a numerical methodology for the approximation of coarse-grained stable and unstable manifolds of saddle equilibria/stationary states of multiscale/stochastic systems for which a macroscopic description does not exist analytically in a closed form. Thus, the underlying hypothesis is that we have a detailed microscopic simulator (Monte Carlo, molecular dynamics, agent-based model etc.) that describes the dynamics of the subunits of a complex system (or a black-box large-scale simulator) but we do not have explicitly available a dynamical model in a closed form that describes the emergent coarse-grained/macroscopic dynamics. Our numerical scheme is based on the equation-free multiscale framework, and it is a three-tier procedure including (a) the convergence on the coarse-grained saddle equilibrium, (b) its coarse-grained stability analysis, and (c) the approximation of the local invariant stable and unstable manifolds; the later task is achieved by the numerical solution of a set of homological/functional equations for the coefficients of a polynomial approximation of the manifolds.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The computation of invariant manifolds of dynamical systems is very important for a series of system-level tasks, particularly for the bifurcation analysis and control. For example, the detection of stable manifolds of saddle points allows the identification of the boundary between different basins of attraction, while the intersection of stable and unstable manifolds most often leads to complex dynamical behavior such as chaotic dynamics [10, 49]. Their computation is also central to the control of nonlinear systems and especially in the control of chaos [11, 33, 39, 49]. However, their computation is not trivial: even for relatively simple low-dimensional ODEs, their analytical derivation is most of the times an overwhelming difficult task. Thus, one has to resort to their numerical approximation. However, this task is not easy; at the beginning of the 1990s only one-dimensional global invariant manifolds of vector fields could be computed. Guckenheimer and Worfolk [18] proposed an algorithm for converging on the stable manifold of saddles based on geodesics emanating from the saddle by iteratively rescaling the radial part of the vector field on the submanifold. Johnson et al. [20] introduced a numerical scheme to reconstruct two-dimensional stable and unstable manifolds of saddles. The proposed method starts with the creation of a ring of points on the local-linear eigenspace and successively creates circles of points that are then connected by a triangular mesh. The appropriate points are selected through time integration so that the velocity of the vector field is similar in an arc-length sense for all trajectories. Krauskopf and Osinga [26] developed a numerical method based on geodesics; the manifold is evolved iteratively by hyperplanes perpendicular to a previous detected geodesic circle. Krauskopf et al. [27] addressed a numerical method for the approximation of two-dimensional stable and unstable manifolds which incorporates the solution of a boundary value problem; the method performs a continuation of a family of trajectories possessing the same arc-length. For a survey of methods for the numerical computation of stable and unstable manifolds see also Krauskopf et al. [27]. In the above methods, the stable manifold is computed as the unstable manifold of the inverse map, i.e., by following the flow of the vector field backward in time [8]. Thus, an explicit knowledge of the vector field and its inverse is required which however is not always available. England et al. [8] presented an algorithm for computing one-dimensional stable manifolds for planar maps when an explicit expression for the inverse map is not available and/or even the map is not invertible. Triandaf et al. [47] proposed a procedure for approximating stable and unstable manifolds given only experimental data based on time-delay embeddings of a properly selected data set of initial conditions. Another approach to compute invariant manifolds, the so-called parametrization method has been introduced by Cabre et al. [5,6,7]. This is a numerical-assisted approach based on functional analysis tools for deriving analytical expressions of the local invariant manifolds. This involves the expansion of the invariant manifold as series and the construction of a system of homological equations for the coefficients of the series. Based on this approach, Haro et al. [19] addressed a numerical approach for the computation of the coefficients of high-order power series expansions of parametrizations of two-dimensional invariant manifolds. Breden et al. [4] employed the parametrization method to compute stable and unstable manifolds of vectors fields. For the implementation of the method it is assumed that the vector field is explicitly available in a closed form. Finally, focusing on singularly perturbed systems, Zagaris et al. [51] and Kristiansen et al. [28] extended the Computational Singular Perturbation (CSP) algorithm of Lam and Goussis [17, 29] to approximate stable and unstable fiber directions on the slow manifold.

However, for many complex systems of contemporary interest, the equations that can describe adequately the dynamics at the macroscopic-continuum scale are not explicitly available in the form of ODEs or PDEs in a closed form. Take for example the case where the laws that govern the dynamics of the interactions between the subunits that constitute the complex system may be known in the form of, e.g., molecular dynamics, Brownian dynamics, agent-based modeling, and Monte Carlo, but a macroscopic description (ODEs or PDEs) is not available in a closed form. The lack of such a macroscopic description hinders the systematic numerical analysis, optimization and control. In general, two paths are traditionally followed for the numerical analysis of the emergent dynamics. The first one is the simple brute-force simulation in time. An ensemble of many initial condition configurations would be set up; then a large enough number of microscopic runs would be performed; some of the parameters would be modified and finally the statistics of the detailed simulations would be computed. However, this practice is not appropriate for the systematic numerical analysis (for example one cannot find saddles with temporal simulations). The second path follows the statistical-mechanics/assisted approach where one aims at extracting closures between the moments of the microscopic distributions. For example, for Monte Carlo Markovian models a Master equation is usually derived for a few moments of the underlying probability distribution. However, these equations usually involve higher order moments whose evolution dynamics are functions of higher order moments. This leads to an infinite hierarchy of evolution equations and at some point these higher order moments have to be expressed as functions of the lower order moments in order to “close” the system of equations. However, the assumptions that underlie such “closures” may introduce biases to the analysis of the “actual” dynamics (see for example the discussion in [36]).

The equation-free approach addressed by Kevrekidis et al. [24, 25, 31, 45], a multiscale numerical-assisted framework allows the bridging between traditional continuum numerical analysis methods, and microscopic/stochastic simulation of complex/multiscale systems bypassing the explicit derivation of moment closures. The equation-free approach identifies “on-demand” the necessary quantities for the numerical analysis of the emergent dynamics and has been used for the bifurcation analysis, control, optimization, rare-events analysis of a wide range of microscopic models and problems. Regarding the computation of coarse-grained invariant manifolds, Gear and Kevrekidis [14] introduced a method for the convergence on the coarse-grained slow manifolds of legacy simulators by requiring that the change in the “fast” variables (i.e., the variables that are quickly “slaved” to the variables that parametrize the slow manifold) is zero. Gear et al. [13] computed coarse-grained slow manifolds by restricting the derivatives of the “fast” variables to zero. Zagaris et al. [50] performed a systematic analysis of the accuracy and convergence of equation-free projection to the slow manifold. Frewen et al. [12] traced within the equation-free framework two-dimensional slow manifolds to get out of potential wells. Finally, Quinn et al. [34] have exploited the concept of equation-free approach to compute the one-dimensional stable manifold of a one-dimensional delay differential equation.

Here, building up on a previous work for the computation of coarse-grained center manifolds of microscopic simulators [43], we present a new numerical method based on the equation-free approach for the computation of coarse-grained stable and unstable manifolds of saddles of microscopic dynamical simulators (and in general large-scale discrete-time black-box simulators). The approximation of the coarse-grained stable and unstable manifolds is achieved using a polynomial approximation; the coefficients of the polynomials are computed iteratively by a Newton-Raphson scheme applied on a coarse-grained map of the microscopic simulator. Thus, the proposed numerical method involves a three-step procedure for the equation-free: (a) detection of the coarse-grained saddle, (b) computation of the coarse-grained Jacobian on the saddle and the computation of the corresponding eigenmodes, (c) identification of the coefficients of the polynomials that provide an approximation of the coarse-grained stable and unstable manifolds; this step involves: (i) the numerical construction of a back-box coarse-grained map for the coefficients of the polynomial approximation, and (ii) the iterative estimation of the polynomial coefficients by applying Newton’s method around the constructed coarse-grained map. The method is illustrated through two examples whose stable and unstable manifolds are also approximated analytically. The first example is a simple toy discrete-time map and the second one is a Gillespie-Monte Carlo realization of a simple catalytic reaction scheme describing the dynamics of CO oxidation on catalytic surfaces. This is the first work that addresses the equation-free computation of both coarse-grained stable and unstable manifolds of saddles of microscopic simulators.

2 Computation of stable and unstable manifolds of saddles for discrete-time models

We will first present the basis for approximating the local stable and unstable manifolds of a saddle point of discrete-time systems when the equations are given explicitly. Then, in Section 3, we will show how this can be exploited within the equation-free framework for the approximation of coarse-grained stable and unstable manifolds, when equations are not given explicitly, thus when one has a large-scale black-box simulator. The later case includes both large-scale black-box simulators of let’s say systems of ODEs and particularly microscopic/stochastic multiscale models, which is the focus of this work.

Let us begin by considering a discrete-time model given by:

where \(\boldsymbol {F}:\mathbb {R}^{n} \times \mathbb {R}^{p} \rightarrow \mathbb {R}^{n}\) is a smooth multivariable, vector-valued time-evolution operator that takes as initial condition at time tk = (kT), \(\boldsymbol {x}_{\mathrm {k}} \in \mathbb {R}^{n}\) and after some time horizon (sampling time) T reports the evolved state \(\boldsymbol {x}_{\mathrm {k}+1}, \in \mathbb {R}^{n}\) at time tk + 1 = (k + 1)T; \(\boldsymbol {p} \in \mathbb {R}^{p}\) denotes the vector of parameters.

Regarding the computation of the local stable and unstable manifolds of a saddle point of the above system, the following theorem can be easily proven:

Theorem 1

Let us denote by (x∗,p∗) a saddle point of the discrete-time model (1) which satisfies x∗ = F(x∗,p∗). Let us also assume that the Jacobian ∇F(x∗,p∗) is diagonalizable/block-diagonalizable. Let Vs be the n × l matrix whose l columns are the l eigevectors of ∇F(x∗,p∗) that correspond to the l eigenvalues lying inside the unit circle, and Vu be the n × n − l matrix whose columns are the eigevectors of ∇F(x∗,p∗) that correspond to the n − l eigenvalues lying outside the unit circle. Let us also define \(\boldsymbol {z}_{\mathrm {s}} \in \mathbb {R}^{l}\) and \(\boldsymbol {z}_{\mathrm {u}} \in \mathbb {R}^{n-l}\) by the transformation

where \(\boldsymbol {x}^{\prime }_{\mathrm {k}}=\boldsymbol {x}_{\mathrm {k}}-\boldsymbol {x}^{*}\). Then the fixed point \(\boldsymbol {x}^{\prime *}=\boldsymbol {0}\) has: (A1) a Cr l-dimensional local stable manifold Ws(0) tangent to the subspace spanned by the columns of Vs at the origin defined by:

where \(\boldsymbol {h}_{s}:\mathbb {R}^{l}\rightarrow \mathbb {R}^{n-l}\) is a Cr function which satisfies hs(0) = 0 and \(\nabla _{\boldsymbol {z}_{\mathrm {s}}}h_{\mathrm {s}\mathrm {j}}\equiv \left (\frac {\partial h_{\mathrm {s}\mathrm {j}}}{\partial z_{\mathrm {s}1}},\frac {\partial h_{\mathrm {s}\mathrm {j}}}{\partial z_{\mathrm {s}2}},\dots \frac {\partial h_{\mathrm {s}\mathrm {j}}}{\partial z_{\text {sl}}}\right )\vert _{\boldsymbol {z}_{\mathrm {s}}=\boldsymbol {0}}=\boldsymbol {0}\), \(\forall h_{\mathrm {s}\mathrm {j}}, \mathrm {j}=1,2,{\dots } n-l\); hsj(zs) is the j-th component of hs(zs).(A2) a Cr n − l-dimensional local unstable manifold Wu(0) tangent to the subspace spanned by the columns of Vu at the origin defined by:

where \(\boldsymbol {h}_{\mathrm {u}}:\mathbb {R}^{n-l}\rightarrow \mathbb {R}^{l}\) is a Cr function which satisfies hu(0) = 0 and \(\nabla _{\boldsymbol {z}_{\mathrm {u}}}h_{\mathrm {u}\mathrm {j}}\equiv \left (\frac {\partial h_{\mathrm {u}\mathrm {j}}}{\partial z_{\mathrm {u1}}},\frac {\partial h_{\mathrm {u}\mathrm {j}}}{\partial z_{\mathrm {u2}}},{\dots } \frac {\partial h_{\mathrm {u}\mathrm {j}}}{\partial z_{\mathrm {u1}}}\right )\vert _{\boldsymbol {z}_{\mathrm {u}}=\boldsymbol {0}}=\boldsymbol {0}\), \(\forall h_{\mathrm {u}\mathrm {j}}, \mathrm {j}=1,2,{\dots } l\); huj(zu) is the j-th component of hu(zu).(B1) On the stable manifold the following system of functional equations hold:

(B2) On the unstable manifold the following system of functional equations hold:

In the above, (5) and (6) provide the equations of the invariant manifolds in diagonalized coordinates. Λs is the l × l (block) diagonal matrix containing the l eigenvalues with |λi| < 1 and Λu is the (n − l) × (n − l) (block) diagonal matrix containing the (n − l) eigenvalues with |λi| > 1; gs and gu are l and (n − l) vector-valued functions, respectively, obtained by

where \(\boldsymbol {g}(\boldsymbol {x}^{\prime },\boldsymbol {x}^{*},\boldsymbol {p}^{*})\) corresponds to the n-vector-valued nonlinear function:

containing all, but the linearization around the saddle, nonlinear terms of F(xk,p), satisfying: \( \left \lVert \boldsymbol {g}(\boldsymbol {x}_{\mathrm {k}}^{\prime },\boldsymbol {x}^{*}, \boldsymbol {p}^{*})\right \rVert \leq c(\boldsymbol {x}^{*}) \left \lVert \boldsymbol {x}^{\prime }_{\mathrm {k}} \right \rVert ^{2}\).

Proof

Equations (5) and (6) are derived by re-writing (1) using the transformation given by (2) as:

and

Then, by taking (3), Eq. (10) reads:

Finally, by (11) and (9) we obtain:

with hs(0) = 0.

In a similar manner, it can be shown that (6) in (B2) holds true on the unstable manifold. □

2.1 Local approximation of the stable and unstable manifolds with truncated polynomial sequence

As by Theorem 1, the local stable and unstable manifolds are smooth nonlinear functions of zs and zu, respectively, then according to the Stone-Weierstrass theorem [37] they can be approximated by any accuracy around (x∗,p∗) by a sequence of polynomial functions of zs and zu, respectively.

For example, for the stable manifold (and similarly for the unstable manifold), \(\forall z_{\mathrm {u}\mathrm {j}}=h_{\mathrm {s}\mathrm {j}}(\boldsymbol {z}_{\mathrm {s}}), \mathrm {j}=1,2,{\dots } n-l\) we can write:

where \(P_{\mathrm {k_{i}}}\), i = 1, 2, .. l are polynomials (e.g., Chebyshev polynomials) of degree ki.

Truncating at a degree, say, M, we get the following truncated polynomial approximation:

A simple choice would be to take as polynomials the power series expansion of zs. For example, if l = 2, M = 2, (14) results to an expression with (M + 1)l = 9 terms (including the constant term which under the above formulation should be zero):

The existence of a local analytic solution for the form of the nonlinear functional (14) is guaranteed by the following theorem [46] (see also [21]):

Theorem 2

Consider the following system of nonlinear functional equations:

where \(\boldsymbol {\phi }:\mathbb {R}^{n} \rightarrow \mathbb {R}^{m}\) is an unknown function. Then if:

-

1.

\(\boldsymbol {f}:\mathbb {R}^{n}\rightarrow \mathbb {R}^{n}, \boldsymbol {w}:\mathbb {R}^{n} \times \mathbb {R}^{m} \rightarrow \mathbb {R}^{m}\) are analytic functions such that f(0) = 0 and w(0,0) = 0.

-

2.

The function ϕ admits a formal power series solution.

-

3.

The fixed point that satisfies f(0) = 0 is a hyperbolic point, i.e., none of the eigenvalues of the Jacobian ∇zf(z = 0) is on the unit circle.

Then, the above system admits a unique solution (which satisfies ϕ(0) = 0).

Thus, by introducing the polynomial approximation given by (14) into (5), we get ∀k, \(\forall h_{\mathrm {s}\mathrm {j}}(\boldsymbol {z}_{\mathrm {s}}), \mathrm {j}=1,2,{\dots } \mathrm {n}-l\), the following system for the stable manifold (see also (10), (12)):

where \(\boldsymbol {\hat {z}}_{\mathrm {s}}=\{\hat {z}_{\mathrm {s}1},{\dots } \hat {z}_{\text {sl}}\}\) are nonlinear functions of \(\boldsymbol {z}_{s}=\{{z}_{\mathrm {s}1},{\dots } {z}_{\text {sl}}\}\):

Note that in general, both the left-hand side and the right-hand side of (17) contain higher order terms than M due to (5) and the nonlinearities in guj. By equating both sides of (17) the terms up to an order r <= M with respect to \(\{{z}_{\mathrm {s}1},{\dots } {z}_{\mathrm {s}l}\}\), we get the following coupled system of nonlinear homological equations with respect to the (n − l) ⋅ (r + 1)l polynomial coefficients \(a_{\mathrm {k}_{1},\mathrm {k}_{2},\dots ,\mathrm {k}_{l}}^{(\mathrm {j})},\{\mathrm {j}=1,2, {\dots } n-l\}, \{\mathrm {k}_{1},\mathrm {k}_{2},...,\mathrm {k}_{l}=0,1,{\dots } r\}\):

with \({\mathrm {j}=1,2,{\dots } (n-l)},{\mathrm {i}=1,2,{\dots } (r+1)^{l}}\).

The above system constitutes a nonlinear (in general) system of (n − l) ⋅ (r + 1)l unknowns with (n − l) ⋅ (r + 1)l equations that can be solved iteratively, e.g., using the Newton-Raphson algorithm.

What is described above, forms the basis for the numerical algorithm presented in Section 3 for the equation-free computation of coarse-grained stable and unstable manifolds of saddles of microscopic simulators.

2.2 An illustrative example

Let us consider the following discrete dynamical system:

The above system can be written as:

It can be shown that the local stable manifold of the saddle (0,0,0) is given by \(h_{\mathrm {s}}(x_{1},x_{2})=-\frac {4}{7}{x}_{2}^{2}+\frac {32}{119}{x}_{1}^{2}x_{2} +O\left ({x}_{1}^{2}{x}_{2}^{2}\right )\) as follows. Let us choose a power series expansion up to order two (i.e., M = 2) of the stable manifold around the fixed point \(x_{1}^{*}=x_{2}^{*}=x_{3}^{*}=0\). Hence, an approximation of the stable manifold is given by:

Here, \(\boldsymbol {V}=\left [\begin {array}{cccc} 1 &0 &0\\0 &1 &0\\0 &0 &1 \end {array}\right ]\). Hence, from (5) we get:

or

or

Thus, from (22) we have:

or

By equating the coefficients of the corresponding power series up to order two, we get the following system of equations:

From the above system we get:

Thus, an approximation of the stable manifold around the saddle point is given by:

It can be shown, that the unstable manifold is the trivial solution x1 = 0, x2 = 0 as follows. Let us again choose a power series expansion up to order two (i.e., M = 2) of the unstable manifold around the saddle. Hence, an approximation of the stable manifold is given by:

Hence, from (6) we get:

For the above system, it can be easily verified that the unstable manifold is the one with x1 = 0, x2 = 0.

3 Numerical approximation of the stable and unstable manifolds of microscopic-stochastic multiscale and black-box simulators

Let us now assume that explicit model equations (such as the ones given by (1)) for the macroscopic (emergent) dynamics are not available in a closed form. Under this hypothesis, we cannot follow the procedure for the analytical approximation of the invariant manifolds as one needs to explicitly know the operator F (i.e., gs and gu in (7)). Thus, when explicit macroscopic equations are not available in a closed form, but a microscopic dynamical simulator is available, the approximation of the invariant stable and unstable manifolds at the macroscopic (the coarse-grained) level requires: (a) the bridging of the micro and macro scale, and (b) the numerical approximation of the coarse-grained manifolds. In what follows, we address a new multiscale numerical method for the approximation of the local stable and unstable manifolds based on the equation-free framework. Thus, let us assume that we have a microscopic (such as a Brownian dynamics, Monte Carlo, molecular dynamics, agent-based) computational model that, given a microscopic/detailed distribution of states

at time tk = kTU, will report the values of the evolved microscopic/detailed distribution after a time horizon TU:

\(\boldsymbol {{{\varPhi }}}_{T_{U}}:\mathbb {R}^{N} \times \mathbb {R}^{p} \rightarrow \mathbb {R}^{N}\) is the time-evolution microscopic operator, \(\boldsymbol {p} \in \mathbb {R}^{p}\) is the vector of the parameters.

A key hypothesis for the implementation of the equation-free numerical framework is that after some time t ≫ TU the emergent macroscopic dynamics can be described by a few observables, say, \(\boldsymbol {x} \in \mathbb {R}^{n}, n\ll N\). Usually these “few” observables are the first moments of the underlying microscopic distribution. This implies that there is a slow coarse-grained manifold that can be parametrized by x. The hypothesis of the existence of a slow coarse-grained manifold dictates that the higher order moments, say, \(\boldsymbol {y} \in \mathbb {R}^{N-n}\), of the microscopic distribution U become, relatively fast over the macroscopic time, functions of the n lower order moments. At the moments-space, this dependence can be written as a singularly perturbed system of the form:

where 𝜖 > 0 is a sufficiently small number. Under the above description and assumptions, Fenichels’ theorem for continuous systems [9] can be extended to the following theorem that guarantees the existence of an invariant low-dimensional “slow” manifold on which evolve the coarse grained dynamics of the discrete system (35) (see also [3]).

Theorem 3

Let us assume that the functions \(\boldsymbol {X}:\mathbb {R}^{n} \times \mathbb {R}^{N-n} \times \mathbb {R}^{p} \rightarrow \mathbb {R}^{n}\), \(\boldsymbol {Y}:\mathbb {R}^{n} \times \mathbb {R}^{N-n} \times \mathbb {R}^{p} \rightarrow \mathbb {R}^{N-n}\) \(\in C^{r}, r<\infty \) in an open set around a hyperbolic fixed point. Then the dynamics of the system given by (35) can be reduced to:

on a smooth manifold defined by:

The manifold M𝜖 is diffeomorphic and O(𝜖) close to the M0 manifold defined for 𝜖 = 0. Moreover, the manifold M𝜖 is locally invariant under the dynamics given by (35).

M𝜖 defines the “slow” manifold on which the dynamics of the system evolve after a short (in the macroscopic scale) time horizon.Under this perspective and under the above theorem assumptions, let us define the coarse-grained map:

where \(\boldsymbol {F}_{T}:\mathbb {R}^{n} \times \mathbb {R}^{p} \rightarrow \mathbb {R}^{n}\) is a smooth multivariable, vector-valued function having xk as initial condition at time tk = kT, where T is a macroscopic reporting time horizon with T >> TU.

The above coarse-grained map, which describes the system dynamics on the slow coarse-grained manifold M𝜖 can be obtained by finding χ that relates the higher order moments of the microscopic distribution Uk to the lower order moments x that describe the macroscopic observations/dynamics. The equation-free approach, through the concept of the coarse timestepper, bypasses the need to extract such a relation analytically which in most of the cases is an “overwhelming” difficult task and can introduce modeling biases (see the critical discussion in [36]). The equation-free approach provides such relations in a numerical way: relatively short calls of the detailed simulator provide this closure (refer to [24, 25, 45] for more detailed discussions). Briefly, the coarse timestepper consists of the following basic steps: Given the set of the macroscopic variables at time t0:

-

(a)

Set the coarse-grained initial conditions xk = 0 ≡x0.

-

(b)

Transform the coarse-grained initial conditions to consistent microscopic distributions U0 = μx0, where μ is a lifting operator.

-

(c)

Run the microscopic/detailed simulator for a short macroscopic interval T to get the resulting microscopic distributions Uk + 1. The choice of T is associated with the (estimated) gap of the eigenspectrum of the Jacobian of the unavailable closed macroscopic equations around the stationary state.

-

(d)

Compute the values of the coarse-grained variables using a restriction operator M: xk + 1 = MUk + 1.

The above steps constitute the black box coarse timestepper, which, given an initial coarse-grained state of the system {xk,p}, at time tk = kT will report the result of the integration of the microscopic rules after a given time-horizon T (at time tk + 1), i.e., xk + 1 = FT(xk,p).

At this point, one can use iterative linear algebra numerical methods such as the Newton-Raphson method (for low-order systems) to converge to the coarse-grained fixed points and to perform bifurcation analysis. For large-scale systems, one can also resort to matrix-free methods such as the Newton-GMRES [23] to find the coarse-grained fixed points and the Arnoldi method [38] to analyze the stability of the coarse-grained fixed points of the unavailable macroscopic evolution equations.

The coarse-grained Jacobian \(\nabla \boldsymbol {F}_{T}(\boldsymbol {x}^{*}, \boldsymbol {p}^{*})\) can be computed by appropriately perturbing the coarse-grained initial conditions fed to the coarse timestepper (38). For low to medium dimensions, the i-th column of the Jacobian matrix can be evaluated numerically, e.g., using central finite differences as:

where ei is the unit vector with one at the i-th component and zero in all other components.

Then, one can solve the eigenvalue problem

with direct solvers.

The tracing of solution branches of saddles around turning points can be achieved by standard continuation techniques such as the pseudo-arc-length continuation [22].

For the above procedure to be accurate, one should perform the required computations when the system lies on the slow manifold. If the gap between the fast and slow time scales is very big then the time required for trajectories starting off the slow manifold to reach the slow manifold will be small compared to T; hence, we expect that the coarse-grained computations will not be significantly affected for any practical means. As also discussed in Kevrekidis et al. [25], under the “strong assumption” of a big separation of time scales, the fast off-the slow manifold dynamics will lead to quick “healing” of the lifting error. Nevertheless, one can relax the above “strong” (and “vague” [25]) assumption and enhance the computing accuracy by producing lifting operators that bring the system on the slow manifold (using for example the algorithms presented in [13, 14, 32, 44, 50]).

Returning back to the problem of numerical approximation of the stable and unstable manifolds, as now there are no analytical expressions, due to the nonlinear dependence of gs and gu on zs and zu, the coefficients a of the polynomial approximation of the stable zu = h(zs) (and correspondingly of the unstable) manifold can be determined numerically by solving in an iterative way the system of nonlinear homological equations given by (19).

Here, we solve the above task through the concept of the coarse-timestepper as described in the following steps (in what follows, we present the algorithm for the computation of the stable manifold; the procedure for the computation of the unstable manifold is analogous):

-

1.

Construct the coarse-timestepper given by the map (38) using appropriate lifting μ and restricting M operators of the microscopic evolved distributions.

-

2.

“Wrap” around the coarse-timestepper a continuation technique (e.g., the pseudo-arc-length continuation) to converge to a saddle fixed point (x∗,p∗).

-

3.

Compute the coarse-grained Jacobian \(\nabla \boldsymbol {F}_{T}(\boldsymbol {x}^{*}, \boldsymbol {p}^{*})\) and solve the eigenvalue problem \(\nabla \boldsymbol {F}_{T}(\boldsymbol {x}^{*}, \boldsymbol {p}^{*}) \boldsymbol {V}=\boldsymbol {{{\varLambda }}}\boldsymbol {V}\). Find the l stable and n − l unstable eigenmodes. Rearrange V as \(\boldsymbol {V}=\left [\begin {array}{ll} \boldsymbol {V}_{\mathrm {s}} & \boldsymbol {V}_{\mathrm {u}} \end {array}\right ]\) with Vs being the n × l matrix whose columns are the eigevectors of the Jacobian that correspond to the l eigenvalues lying inside the unit circle, Vu is a n × n − l matrix whose columns are the eigevectors of the Jacobian that correspond to the n − l eigenvalues lying outside the unit circle.

-

4.

Choose a certain set of polynomials as well as their maximum order M for the numerical approximation of the j-th element, say hjs of the stable manifold in the form of:

$$ \begin{aligned} z_{\text{ju}}= h_{\text{js}}(\boldsymbol{z}_{\mathrm{s}})= \sum\limits_{k_{1}=0}^{M}\sum\limits_{k_{2}=0}^{M} {\dots} \sum\limits_{k_{l}=0}^{M} {a}_{\mathrm{k}_{1},\mathrm{k}_{2},\dots,\mathrm{k}_{l}}^{(j)} P_{\mathrm{k}_{1}}(z_{1\mathrm{s}}) \dotsm P_{\mathrm{k}_{l}}(z_{l\mathrm{s}}),\\ \mathrm{j}=1,2,{\dots} n-l, \end{aligned} $$(41)where, the variables zs,zu are defined by the transformation (2).

-

5.

Let us denote with q(j), \(\mathrm {j}=1,2,{\dots } n-l\), the column vector of dimension ((M + 1)l − 1) × 1 containing the unknown polynomial coefficients \(a_{\mathrm {k}_{1},\mathrm {k}_{2},\dots ,\mathrm {k}_{l}}^{(\mathrm {j})}\) of the j-th element (hjs) of the stable manifold as approximated by (41). Set an initial guess at time t = 0, for each one of the unknown coefficients of the (expansion) contained in q(j), say \(\boldsymbol {q}^{(\mathrm {j})}_{0}\). Form \(\boldsymbol {Q}_{0}=[\boldsymbol {q}^{(\mathrm {1})}_{0}, \boldsymbol {q}^{(\mathrm {2})}_{0}, {\dots } \boldsymbol {q}^{(\mathrm {j})}_{0}, {\dots } \boldsymbol {q}^{({n-l})}_{0}]'\), i.e., the column vector of dimension ((M + 1)l − 1) ⋅ (n − l) containing all the unknown coefficients.

-

6.

Create a grid of np initial conditions zs,0 within a certain distance B around (\(\boldsymbol {z}_{\mathrm {s}}=0, \boldsymbol {p}^{*}\)) where an approximation of the stable manifold is sought, and at a certain distance from it, i.e., \( \epsilon _{d}<\left \lVert \boldsymbol {z}_{\mathrm {s,0}}\right \rVert <B\).

-

7.

Set convergence tolerance, say, tol, for the approximation of the polynomial coefficients. Set the number of time steps kmax for calling the timestepper (38) and define \(\left \lVert \boldsymbol {dQ} \right \rVert =\left \lVert \boldsymbol {Q}_{\mathrm {k_{max}}} -\boldsymbol {Q}_{0}\right \rVert _{L_{2}}\).

-

8.

Do until convergence (\(\left \lVert \boldsymbol {dQ}\right \rVert >tol\)):

-

Use the coarse-timestepper (38) to construct the map:

$$ \boldsymbol{Q}_{\mathrm{k}_{\max}}=\boldsymbol{{{\varXi}}}(\boldsymbol{Q}_{0}) $$(42)over the kmax time steps. The map \(\boldsymbol {{{\varXi }}}: \mathbb {R}^{((M+1)^{l}-1)\cdot (n - l)} \longrightarrow \mathbb {R}^{((M+1)^{l}-1)\cdot (n - l)} \) is constructed using the coarse-timestepper as follows:

For \(i=1,2,\dots , n_{p}\) initial conditions \(\boldsymbol {z}^{(i)}_{\mathrm {s},0}\):

-

Use (41) to find \({z}_{\mathrm {u}\mathrm {j},0}^{(i)}, (\mathrm {j}=1,2,\dots ,n-l)\).

-

Use Q0 with the aid of (2) to find \(\boldsymbol {x}^{(i)}_{0}\).

-

For \(\mathrm {k}=0,1,{\dots } \mathrm {k}_{\max \limits }-1\) time steps

-

End For

End For

Based on all np simulations construct the matrix A that contains all the values of each one of the polynomials \(P_{\mathrm {k}_{1}}(z_{1\mathrm {s}}) \dotsm P_{\mathrm {k}_{l}}(z_{l\mathrm {s}})\), \(\mathrm {k}_{1},\mathrm {k}_{2},{\dots } \mathrm {k}_{l} =0,1,{\dots } M\). Thus, the above procedure will result to a matrix A of dimension (np ⋅ (kmax + 1)) × ((M + 1)l − 1) (the constant terms are set equal to zero).

Find \(\boldsymbol {q}^{(\mathrm {j})}_{\mathrm {k}_{\max \limits }}, (\mathrm {j}=1,2,\dots ,n-l)\), by solving the linear least squares problem:

$$ \underset{\boldsymbol{q}^{(\mathrm{j})}_{\mathrm{k}_{\max}}}{\min} \left\lVert \boldsymbol{A}\boldsymbol{q}^{(\mathrm{j})}_{\mathrm{k}_{\max}}-\boldsymbol{b}^{(\mathrm{j})}\right\rVert_{L_{2}}, $$(43)where, \(\boldsymbol {b}^{(\mathrm {j})}=\left [\begin {array}{llllll} {z}_{\mathrm {uj,0}}^{(1)} & {z}_{\mathrm {u}\mathrm {j},1}^{(1)} &{\dots } {z}_{\mathrm {u}\mathrm {j},\mathrm {k}_{\max \limits }}^{(1)} &{\dots } {z}_{\mathrm {u}\mathrm {j},0}^{(np)} & {z}_{\mathrm {u}\mathrm {j},1}^{(np)} &{\dots } {z}_{\mathrm {u}\mathrm {j},\mathrm {k}_{\max \limits }}^{(np)} \end {array}\right ]^{\prime }\) is a column vector of dimension \(\mathrm {n}_{\mathrm {p}} \cdot (\mathrm {k}_{\max \limits }+1)\times 1\).

The optimal solution of the above linear least squares problem is given by the solution of

$$ \boldsymbol{A}^{\prime}\boldsymbol{A} \boldsymbol{q}^{(\mathrm{j})}_{\mathrm{k}_{\max}}=\boldsymbol{A}^{\prime}\boldsymbol{b}^{(\mathrm{j})}. $$(44)If the matrix \(\boldsymbol {A}^{\prime }\boldsymbol {A}\) is of full rank, then the above system has a unique solution given by:

$$ \boldsymbol{q}^{(\mathrm{j})}_{\mathrm{k}_{\max}}=(\boldsymbol{A}^{\prime}\boldsymbol{A})^{-1}\boldsymbol{A}^{\prime}\boldsymbol{b}^{(\mathrm{j})}. $$(45)Note that if the initial points \(\boldsymbol {x}^{(i)}_{0}\) are chosen close enough to the fixed point x∗ and/or the number of time-steps kmax are relatively large then as \(\boldsymbol {z}_{\mathrm {s}} \rightarrow 0\), the matrix \(\boldsymbol {A}^{\prime }\boldsymbol {A}\) will not be of full rank. In that case one could use the Moore-Penrose pseudo-inverse of \(\boldsymbol {A}^{\prime }\boldsymbol {A}\) to solve (44) and the solution reads:

$$ \boldsymbol{q}^{(\mathrm{j})}_{\mathrm{k}_{\max}}=A^{+}\boldsymbol{b}^{(\mathrm{j})}, $$(46)where, the pseudo-inverse matrix A+ that is obtain by the Singular Value Decomposition (SVD) of the matrix A:

$$ \boldsymbol{A}^{+}=\boldsymbol{V}\boldsymbol{{{\varSigma}}}^{+}\boldsymbol{U}^{\prime}, $$(47)where, Σ+ is the inverse of sub-block diagonal matrix containing the non-zero singular values of the SVD decomposition of A.

Form \(\boldsymbol {Q}_{\mathrm {k}_{\max \limits }}=\left [\boldsymbol {q}_{\mathrm {k}_{\max \limits }}^{(\mathrm {1})}, \boldsymbol {q}_{\mathrm {k}_{\max \limits }}^{(\mathrm {2})}, \dots \boldsymbol {q}_{\mathrm {k}_{\max \limits }}^{(\mathrm {j})}, {\dots } \boldsymbol {q}_{\mathrm {k}_{\max \limits }}^{({n-l})}\right ]'\).

Thus the above procedure creates the map:

$$ \boldsymbol{Q}_{\mathrm{k}_{\max}}=\boldsymbol{{{\varXi}}}(\boldsymbol{Q}_{0}) $$(48) -

-

Update the values of the polynomials coefficients by using, e.g., the Newton-Raphson scheme around the map given by (42):

-

Set f = Q0 −Ξ(Q0)

-

Compute the Jacobian ∇Ξ(Q0) by perturbing appropriately Q0 (thus, repeating the pipeline described in step 8 above, ((M + 1)l − 1) ⋅ (n − l) times, thus starting simulations using Q0 ± 𝜖ei (where \(\boldsymbol {e}_{i}, i=1,2,\dots , ((M+1)^{l}-1)\cdot (n - l)\) is the unit vector with one at the i-th component and zero in all other components of Q0) for an approximation of the Jacobian Ξ(Q0) with central differences).

-

Solve the linear system

$$ \left[\begin{array}{ll} \boldsymbol{I} -\nabla \boldsymbol{{{\varXi}}}(\boldsymbol{Q}_{0}) \end{array}\right]\cdot \boldsymbol{dQ}=-\boldsymbol{f} $$(49)to get dQ.

-

Update the estimates for the polynomial coefficients:

$$ \boldsymbol{Q}_{0}=\boldsymbol{Q}_{0}+\boldsymbol{dQ}. $$(50)

End Do }.

-

-

Note, that the above scheme can be seen as a Gauss-Newton-like scheme for the estimation of the unknown coefficients of the series expansion by the aid of the coarse-timestepper. While the existence of a unique solution is on the one hand guaranteed by Theorems 1 and 2, on the other hand, the convergence of the scheme depends on the choice of the distance B around the saddle point, where the approximation of the invariant manifolds in sought, the initial guesses for the polynomial coefficients, the level of noise due to the microscopic (stochastic) simulations, as well as the “quality” of the lifting operator (see the discussion for equation-free computations, e.g., in [13, 14, 25, 31, 32, 42, 44, 50, 51]). An initial guess for the polynomial coefficients could be the values of the coefficients of the linear terms of the polynomial expansion that reconstruct the subspace spanned by the columns of Vs or Vu. The convergence properties of the above scheme with respect to the above factors will be studied more thoroughly in a future work.

4 The illustrative examples: numerical results

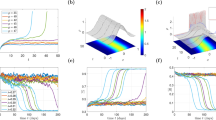

The proposed approach is illustrated through two examples: (a) the toy model (20) and (b) a Monte Carlo simulation of a catalytic reaction on a lattice presented in [31], for which we have also derived analytically an approximation of the stable and unstable manifolds based on the mean field model.

4.1 The toy model

We have shown that the stable manifold of the discrete time model given by (20) is given by

Here, we will derive a numerical approximation of the stable manifold by assuming that the equations of the model are not explicitly known. Our assumption is that we have a black-box model that given initial conditions (x1,0,x2,0,x3,0) it outputs (x1,k,x2,k,x3,k), \(\mathrm {k}=1,2,\dots \). The saddle point is the \((\boldsymbol {x}_{1}^{*},\boldsymbol {x}_{2}^{*}, \boldsymbol {x}_{3}^{*})=(0,0,0)\). The Jacobian on the saddle is approximated by central finite differences with 𝜖 = 0.01 as perturbation on the initial conditions and running the simulator for one step. By doing so, the numerical approximation of the Jacobian actually coincides for any practical means with the analytical one. The eigenvalues are λ1 = − 0.5, λ2 = − 0.5, λ3 = 2 and the eigenvectors are given by ei, i.e., the unit vectors with one at the i-th component and zero in all other components. From the above, it is clear that z1s = x1, z2s = x2, z1u = x3. Thus, we chose a power series expansion of the manifold around the saddle as

For the construction of the map (see (42)) we have used the following parameters: kmax = 3, np = 4, zs1,0 = (− 0.1,0.1), zs2,0 = (− 0.1,0.1), and central finite differences with 𝜖 = 0.01, for the numerical approximation of the Jacobian ∇Q that is required for the Newton-Raphson iterations; the tolerance was set to tol = 1E-04, and the initial guess of the power expansion coefficients was set as q0 ≡ (a0,1,a0,2,a1,0,a1,1,a1,2,a2,0,a2,1,a2,2)=(0,0,0,0,0,0,0,0).

The algorithm converged in two Newton iterations to the following expression for the stable manifold:

The numerical scheme outputs also a non-zero coefficient for a2,2 which is not present in the analytical approximation. This is due to the truncation of the power expansion to second-order terms: when equating the terms on both sides of (27), higher order powers than three are set to zero. One can confirm the contribution of this extra term found by the numerical scheme by simple simulations. For example, by setting as initial conditions x1,0 = 0.2, x2,0 = 0.2 and \(x_{3,0}=-\frac {4}{7}x_{2,0}^{2}+\frac {32}{119}x_{1,0}^{2}x_{2,0}\), we get the results shown in Table 1.

Note that x3,k goes to zero and then after k = 3 it diverges due to the (truncated) approximation of the manifold. This is what should be expected: the lower order model results in a trajectory that is a little bit off the stable manifold; thus at the beginning, the system approaches the saddle (see the first two time steps) and then diverges away from it as expected. In fact, if we add the extra term found with the numerical scheme, and start with the same initial conditions for x1,0 and x2,0, but with \(x_{3,0}=-\frac {4}{7}x_{2,0}^{2}+\frac {32}{119}{x}_{1,0}^{2}x_{2,0}-0.2598x_{1,0}^{2}x_{2,0}^{2}\), we get the results shown in Table 2, that is after k = 3 the system converges to the saddle.

4.2 Kinetic monte carlo simulation of CO oxidation on a catalyst

The proposed numerical approach is illustrated through a kMC microscopic model describing the dynamics of CO oxidation on a catalyst [31]. The species react adsorbed or desorbed on a finite lattice with periodic boundary conditions. At each time instant, the sites of the lattice are considered to be either vacant or occupied by the reaction species. The system dynamics are described by the following chemical master equation:

where, P(x,t) is the probability that the system will be in state x at time t and Q(y,x) is the probability for the transition from state y to x (and vice versa) per unit time. The summation runs over all possible transitions (reactions). Here, the numerical simulation of the above stochastic equation was realized using the Gillespie kMC algorithm [15, 16, 31]. The reaction mechanism can be schematically described by the following elementary steps (for more details see in [30]):

-

(1)

\(CO_{gas} +*_{i} \leftrightarrow CO_{ads,i}\)

-

(2)

\(O_{2,gas}+*_{i}+*_{j} \leftrightarrow O_{ads,i} +O_{ads,j}\)

-

(3)

\(CO_{ads,i}+O_{ads,j} \rightarrow CO_{2,gas}+*_{i}+*_{j}\)

where i, j are neighbor sites on the square lattice, ∗ denotes a site with a vacant adsorption site, while “ads” denotes adsorbed particles. By adding an inert site-blocking adsorbate with a reversible adsorption step the mean field approximation can be derived by the master (54) and is given by the following system of ordinary differential equations [31]:

where, 𝜃i represent the coverages of species (i = A,B,C, resp. CO, O and inert species C) on the catalytic surface; μ denotes C adsorption and η C desorption rate. For α = 1.6, γ = 0.04, kr = 1, η = 0.016, μ = 0.36 and treating β as the bifurcation parameter the mean field model (22) exhibits two Andronov-Hopf points at β ≈ 20.3 and β ≈ 21.2 and \((\theta _{A}^{*},\theta _{B}^{*},\theta _{C}^{*},\beta ^{*})_{2} \approx (0.1895, 0.0575, 0.7207, 21.2779)\). Between the two Andronov-Hopf points, the equilibria are saddles.

For the time integration of the mean field model (55), we used the Matlab ode suite function “ode15s” [40], a variable-step, variable-order solver with absolute and relative tolerances set to 1E-03. For the kMC simulations the number of the sites (system size) and the number of runs—consistent to the mean values of the distribution on the lattice realizations—were chosen to be Nsize = 2000 × 2000 and Nr = 2000, respectively. The value of the time horizon was selected as T = 0.05 (for a discussion on the appropriate choice of the reporting horizon T and as well as the system size and time realization please refer to [31]). The coarse-grained bifurcation diagram was obtained by applying the equation-free approach upon convergence of the Newton-Raphson to a residual of O(10− 3) for 𝜖 ≈ 10− 2. We have chosen this model as for big enough lattice realizations and runs, the coarse-grained bifurcation diagram and stability practically coincides with the one obtained from the mean filed model [31]; thus one can perform a direct comparison of the numerical approximation of the stable manifold obtained with the kMC simulator and the one derived analytically from the mean field model.

Here, we have chosen to find the stable and unstable manifolds at β = 20.7. For this value of the bifurcation parameter and the choice of the values of T, Nr and N, the coarse-grained fixed point is found to be \((\theta _{A}^{*},\theta _{B}^{*},\theta _{C}^{*})\approx (0.294, 0.029, 0.648)\) and the corresponding coarse-grained Jacobian is \(\left [\begin {array}{llll} 0.924&-0.121&-0.068\\ -0.108&0.845&-0.104\\ -0.016&-0.015&-0.597 \end {array}\right ]\) (compare this with the one that is obtained from the T-map of the mean field model: (\(\theta _{A}^{*},\theta _{B}^{*},\theta _{C}^{*})\approx (0.2924, 0.0294, 0.6492)\) and the corresponding Jacobian: \(\left [\begin {array}{ccc} 0.9244&-0.1202&-0.0684\\ -0.1088&0.8466&-0.1038\\ -0.0161&-0.0151&-0.597830 \end {array}\right ]\)). The coarse-grained eigenvalues and corresponding eigenvectors are:

λ1 ≈ 0.752, \(\boldsymbol {v}_{1}=\left (\begin {array}{c} -0.597\\-0.796\\-0.094 \end {array} \right )\), λ2,3 ≈ 1 ± 0.0013i, \(\boldsymbol {v}_{2,3}= \left (\begin {array}{c} -0.796\\0.181 \pm 0.064i\\ 0.565 \mp 0.098i \end {array} \right )\).

4.2.1 Numerical approximation of the stable manifold

For our illustrations, we have chosen to provide a third-order approximation of the stable manifold for the mean-field model (55) and its corresponding kMC simulation. Thus we sought for an approximation of the stable manifold as:

Following the approach described in Section 2, one obtains a nonlinear system of six algebraic equations (see A) which was solved for the unknown coefficients with Newton-Raphson. Here, the convergence tolerance was of the order of 10− 6, while the perturbation for computing the Jacobian matrix with finite differences was of the order of 10− 3. The initial guess of the coefficients was set as q0 = (0,0,0,0,0,0)′. In this case, the expression for the approximation of the stable manifold is given by:

Next, we applied the proposed numerical method, treating the mean-field timestepper-resulting from the integration of the system of ODEs (55) with ode15s-as a black-box with a reporting time horizon T = 0.05. We have set np = 6 and a grid of initial conditions zs,0 : {− 0.02,− 0.01,− 0.005,0.005,0.01,0.02} around the saddle and \(\mathrm {k}_{\max \limits }=6\). Again, the convergence tolerance was set to 10− 6, while the perturbation for computing the Jacobian matrix with finite differences was of the order of 10− 2. Starting the algorithm with zeros as initial guesses for all the unknown coefficients (i.e., by setting q0 = {0,0,0,0,0,0}), the algorithm results to the following expression:

The scheme converged within 3 iterations (the sequence of the convergence criterion \(\left \lVert \boldsymbol {dq}^{(\mathrm {j})} \right \rVert \) \(\sim \) was (after the first iteration) \(\sim \) O(0.1), \(\sim \) O(10− 7)).

By selecting a grid closer to the equilibrium as zs,0 : {− 0.005,− 0.003,− 0.001, 0.001,0.003,0.005}, the algorithm results to the following expression:

By comparing (57) and (59), we see that the numerical approximation of the coarse-grained manifold as obtained by “wrapping” the proposed algorithm around the black-box ODEs timestepper is almost identical with the one computed using Newton-Raphson for the solution of the analytically derived nonlinear homological equations (given in Appendix A). A relatively small deviation in the coefficients of the 3rd-order terms in (58) which was computed with the “wider” grid is due to the larger truncation error.

Finally, we applied the proposed numerical approach to the kMC timestepper with Nsize = 2000 × 2000, Nr = 2000 and a reporting time horizon T = 0.05. In this case, the convergence tolerance for the kMC simulations was set 10− 3, while the perturbation for computing the Jacobian matrix with finite differences was of the order of 10− 2. We have set np = 6 and a grid of initial conditions zs,0 : {− 0.02,− 0.01,− 0.005,0.005,0.01,0.02} around the coarse-grained saddle, and kmax = 6. Starting again the algorithm with zeros as initial guesses for all the unknown coefficients (i.e., by setting q(0) = {0,0,0,0,0,0}, the algorithm results to the following expression for the coarse-grained stable manifold:

In this case, the scheme converged within 4 iterations (the sequence of the convergence criterion \(\left \lVert \boldsymbol {dq}^{(\mathrm {j})} \right \rVert \) was (after the first iteration) \(\sim \) O(0.1), \(\sim \) O(0.01), \(\sim \) O(0.001)). The computation time for one Newton iteration was of the order of 50 min on a INTEL Xeon CPU E5-2630, 2.2GHz with 64GB RAM.

Taking Nsize = 4000 × 4000, Nr = 2000, and leaving everything else the same, the algorithm results to:

In this case, the scheme converged again within 4 iterations (the sequence of the convergence criterion \(\left \lVert \boldsymbol {dq}^{(\mathrm {j})} \right \rVert \) was (after the first iteration) \(\sim \) O(0.1), \(\sim \) O(0.01), \(\sim \) O(0.001)). At this point we should note that by taking larger lattice sizes Nsize and more runs Nr, the convergence to the mean-field results will increase. On the contrary, for small sizes and number of runs, the algorithm fails to converge. For example if one takes, Nsize = 200 × 200, Nr = 2000, the algorithm does not converge.

For the particular problem of kMC simulations, a more thorough discussion on the interplay between the level of noise, the selection of the amplitude of the perturbation for the estimation of the coarse-grained Jacobian, the “healing” assumption of the equation-free approach due to the lifting operation and the choice of the reporting horizon T can be found in [30, 31] where the equation-free approach has been addressed to construct the coarse-grained bifurcation diagram of the same model. For example, if the time reporting horizon T is too short, the errors that are introduced due to the lifting do not have the time to “heal” down to the slow manifold. If on the other hand, one selects a reporting horizon T too long, then the quantification of the Jacobian is inaccurate, thus introducing biases in the computations model.

4.2.2 Numerical approximation of the unstable manifold

Here, we seek for the following approximation of the unstable manifold

Following the approach described in the A, we obtained analytically seven algebraic equations, which were solved for the unknown coefficients with Newton-Raphson; the convergence tolerance was set 10− 6 while the perturbation for computing the Jacobian matrices was of the order of 10− 2. In this case, the approximation of the unstable manifold is given by:

Again, we first applied the proposed numerical method to the mean-field timestepper-resulting from the integration of the system of ODEs (55) with ode15s-as a black-box- with a reporting time horizon T = 0.05. We have set np = 4 and a grid of initial conditions zu1,0, zu2,0: {− 0.02,− 0.01,0.01,0.02} around the saddle setting kmax = 6. Again, the convergence tolerance was set 10− 6, while the perturbation for computing the Jacobian matrix with finite differences was of the order of 10− 3. Starting the algorithm with zeros as initial guesses for all the unknown coefficients (i.e., by setting q0 = {0,0,0,0,0,0,0}), the algorithm results to the following expression for the unstable manifold:

The scheme converged within 3 iterations (the sequence of the convergence criterion \(\left \lVert \boldsymbol {dq}^{(\mathrm {j})} \right \rVert \) was \(\sim \) O(10− 1), \(\sim \) O(10− 2), \(\sim \) O(10− 6)).

By selecting a grid closer to the equilibrium as zu1,0,zu2,0: {− 0.005,− 0.003, 0.003,0.005}, the algorithm results to the following expression for the unstable manifold:

By comparing (64) and (65) with (63), we see that the numerical approximation of the coarse-grained manifold as obtained by “wrapping” the proposed algorithm around the black-box ODEs timestepper is almost identical with the one computed using Newton-Raphson for the solution of the analytically derived nonlinear homological equations (given in Appendix A).

Finally, we applied the proposed numerical approach to the kMC timestepper with Nsize = 2000 × 2000, Nr = 200 and a reporting time horizon T = 0.05. In this case, the convergence tolerance for the kMC simulations was of the order of 10− 3, while the perturbation for computing the Jacobian matrix with finite differences was of the order of 10− 2. We have set np = 4 and a grid of initial conditions zu1,0,zu2,0: {− 0.02,− 0.01,0.01,0.02} around the coarse-grained saddle setting kmax = 6. Starting again the algorithm with zeros as initial guesses for all the unknown coefficients (i.e., by setting q(0) = {0,0,0,0,0,0,0}, the algorithm results to the following expression for the coarse-grained unstable manifold:

In this case, the scheme converged within 3 iterations (the sequence of the convergence criterion \(\left \lVert \boldsymbol {dq}^{(\mathrm {j})} \right \rVert \) was \(\sim \) O(0.1), \(\sim \) O(0.01), \(\sim \) O(0.001)). The computation time for one Newton iteration was of the order of 3.5 h on a INTEL Xeon CPU E5-2630, 2.2GHz with 64GB RAM.

Taking Nsize = 4000 × 4000, Nr = 2000, and leaving everything else the same, the algorithm results to:

In this case, the scheme converged again within 3 iterations (the sequence of the convergence criterion \(\lVert \boldsymbol {dq}^{(\mathrm {j})} \rVert \) was \(\sim \) O(0.1), \(\sim \) O(0.01), \(\sim \) O(0.001)).

By comparing the expressions (66) and (67) with (63), we see that the numerical approximation of the coarse-grained manifold of the kMC simulator is in a fair agreement with the one obtained by the mean field model.

5 Conclusions

We propose a numerical method for the approximation of the local coarse-grained stable and unstable manifolds of saddle points of microscopic simulators when macroscopic models in a closed form in the form of ODEs are not explicitly available. The methodology is based on the equation-free multiscale framework. The proposed numerical algorithm consists of three steps: (a) detection of the coarse-grained saddle by constructing the coarse-timestepper of the microscopic dynamics, (b) estimation of the coarse-grained Jacobian and evaluation of its eigenvalues and eigenvectors, and (c) estimation of the coefficients of the polynomial approximation of the invariant manifolds. The later step involves the construction of a map for the coefficients of the polynomial expansion of the manifolds. The key hypothesis is that a macroscopic model in the form of ODEs can in principle describe the emerging macroscopic dynamics but it is not available in a closed form. The proposed numerical approach was illustrated through two examples, a toy model treated as a black-box time-stepper and a kinetic Monte Carlo simulator of a simple catalytic reaction. For the kMC simulator a mean field model in the form of ODEs was also given. For both models, we have also derived analytically the parametrization of the invariant manifolds for comparison purposes. As we show, the proposed numerical method approximates fairly well the analytical approximation when considering the vector fields as known.

The proposed numerical method provides a polynomial approximation of the local stable and unstable manifolds in a neighborhood of the coarse-grained saddle, based on appropriately initialized temporal simulations of the microscopic simulator. It should be noted that the task of finding even linear stable manifolds from brute-force simulations (and thus physical experiments) is itself a non-trivial task [1, 47]. In physical experiments the additional difficulty is that most often one cannot set the initial conditions at will; towards this aim, one could resort to control-based continuation methods [1, 41, 45]. In a future work, we aim at extending the proposed numerical method to perform a piece-wise approximation of the global manifold. This could be done for example by coupling the proposed algorithm with parameter-continuation of the polynomial coefficients as we move far from the equilibrium. Of course the computation of global manifolds, especially of two-dimensional stable and unstable manifolds constitutes a much more difficult task. Towards this direction, Quinn et al. [34] have exploited the equation-free approach to compute the one-dimensional stable manifold of an one-dimensional delay differential equation. Other points that require further investigation are the analysis of the convergence properties of the algorithm, the numerical convergence properties of the scheme with respect to the amplitude of the noise to stochasticity, the sensitivity of the approximation with respect to the discretization of the domain around the saddle as well as the issue of finding confidence intervals for the coefficients of the polynomial expansion. Another extremely interesting problem is that of equation-free uncertainty quantification (UQ) [2, 35, 48, 52], an approach that can be exploited to deal with the inherent stochasticity of the microscopic simulations and to provide “on demand” the appropriate coefficients of generalized polynomial chaos expansions.

References

Barton, D.A.: Control-based continuation: bifurcation and stability analysis for physical experiments. Mech. Syst. Signal Process. 84, 54–64 (2017)

Bold, K.A., Zou, Y., Kevrekidis, I.G., Henson, M.A.: An equation-free approach to analyzing heterogeneous cell population dynamics. J. Math. Biol. 55(3), 331–352 (2007)

Bouyekhf, R., El Moudni, A.: On analysis of discrete singularly perturbed non-linear systems: Application to the study of stability properties, vol. 334, pp 199–212 (1997). https://doi.org/10.1016/S0016-0032(96)00076-2. http://www.sciencedirect.com/science/article/pii/S0016003296000762

Breden, M., Lessard, J.P., James, J.D.M.: Computation of maximal local (un)stable manifold patches by the parameterization method. Indag. Math. 27(1), 340–367 (2016). https://doi.org/10.1016/j.indag.2015.11.001

Cabré, X., Fontich, E., de la Llave, R.: The parameterization method for invariant manifolds i: Manifolds associated to non-resonant subspaces. Indiana Univ. Math. J. 52, 283–328 (2003)

Cabré, X., Fontich, E., de la Llave, R.: The parameterization method for invariant manifolds ii: regularity with respect to parameters. Indiana Univ. Math. J. 52, 329–360 (2003)

Cabré, X., Fontich, E., de la Llave, R.: The parameterization method for invariant manifolds III: overview and applications. J Differ Equ 218(2), 444–515 (2005). https://doi.org/10.1016/j.jde.2004.12.003

England, J.P., Krauskopf, B., Osinga, H.M.: Computing one-dimensional stable manifolds and stable sets of planar maps without the inverse. SIAM J. Appl. Dyn Syst 3(2), 161–190 (2004). https://doi.org/10.1137/030600131

Fenichel, N.: Geometric singular perturbation theory for ordinary differential equations. J Diff Equ 31(1), 53–98 (1979). https://doi.org/10.1016/0022-0396(79)90152-9

Feudel, F., Witt, A., Gellert, M., Kurths, J., Grebogi, C., Sanjuȧn, M.: Intersections of stable and unstable manifolds: the skeleton of lagrangian chaos. Chaos, Solitons &, Fractals 24(4), 947–956 (2005). https://doi.org/10.1016/j.chaos.2004.09.059

Flaßkamp, K., Ansari, A.R., Murphey, T.D.: Hybrid control for tracking of invariant manifolds. Nonlinear Anal. ybri. Syst. 25, 298–311 (2017). https://doi.org/10.1016/j.nahs.2016.08.002

Frewen, T.A., Hummer, G., Kevrekidis, I.G.: Exploration of effective potential landscapes using coarse reverse integration. J. Chem. Phys. 131(13), 10B603 (2009)

Gear, C.W., Kaper, T.J., Kevrekidis, I.G., Zagaris, A.: Projecting to a slow manifold: Singularly perturbed systems and legacy codes. SIAM J. Appl. Dyn. Syst. 4(3), 711–732 (2005). https://doi.org/10.1137/040608295

Gear, C.W., Kevrekidis, I.G.: Constraint-defined manifolds: a legacy code approach to low-dimensional computation. J. Sci. Comput. 25(1), 17–28 (2005). https://doi.org/10.1007/s10915-004-4630-x

Gillespie, D.T.: A general method for numerically simulating the stochastic time evolution of coupled chemical reactions. J. Comput. Phys. 22(4), 403–434 (1976). https://doi.org/10.1016/0021-9991(76)90041-3

Gillespie, D.T.: Exact stochastic simulation of coupled chemical reactions. J. Phys. Chem. 81(25), 2340–2361 (1977). https://doi.org/10.1021/j100540a008

Goussis, D.A., Najm, H.N.: Model reduction and physical understanding of slowly oscillating processes: The circadian cycle. Multiscale Model. Sim. 5(4), 1297–1332 (2006). https://doi.org/10.1137/060649768

Guckenheimer, J., Worfolk, P.: Dynamical systems: Some computational problems. In: Bifurcations and Periodic Orbits of Vector Fields. https://doi.org/10.1007/978-94-015-8238-4-5, pp 241–277. Springer, Netherlands (1993)

Haro, À., Mondelo, J.M.: Seminumerical Algorithms for Computing Invariant Manifolds of Vector Fields at Fixed Points, pp 29–73. Springer International Publishing, Cham (2016). https://doi.org/10.1007/978-3-319-29662-3-2

Johnson, M.E., Jolly, M.S., Kevrekidis, I.G.: Two-dimensional invariant manifolds and global bifurcations: some approximation and visualization studies. Numerical Algorithms 14(1/3), 125–140 (1997). https://doi.org/10.1023/a:1019104828180

Kazantzis, N.: On the existence and uniqueness of locally analytic invertible solutions of a system of nonlinear functional equations. J. Comput. Appl. Math. 146(2), 301–308 (2002). https://doi.org/10.1016/s0377-0427(02)00362-x

Keller, H.B.: Numerical solution of bifurcation and nonlinear eigenvalue problems. Appl. Bifurcation Theory 359–384 (1977)

Kelley, C.T.: Iterative methods for linear and nonlinear equations. Society for Industrial and Applied Mathematics. https://doi.org/10.1137/1.9781611970944 (1995)

Kevrekidis, I.G., Gear, C.W., Hummer, G.: Equation-free: The computer-aided analysis of complex multiscale systems. AIChE J 50(7), 1346–1355 (2004). https://doi.org/10.1002/aic.10106

Kevrekidis, I.G., Gear, C.W., Hyman, J.M., Kevrekidis, P.G., Runborg, O., Theodoropoulos, C.: Equation-free, coarse-grained multiscale computation: Enabling mocroscopic simulators to perform system-level analysis. Commun. Math. Sci. 1(4), 715–762 (2003). https://doi.org/10.4310/cms.2003.v1.n4.a5

Krauskopf, B., Osinga, H.: Two-dimensional global manifolds of vector fields. Chaos: An Interdisciplinary J Nonlinear Sci 9(3), 768–774 (1999). https://doi.org/10.1063/1.166450

Krauskopf, B., Osinga, H.M., Doedel, E.J., Henderson, M.E., Guckenheimer, J., Vladimirsky, A., Dellnitz, M., Junge, O.: A survey of methods for computing (un)stable manifolds of vector fields. Int J Bifurc Chaos 15 (03), 763–791 (2005). https://doi.org/10.1142/s0218127405012533

Kristiansen, K.U., Brøns, M., Starke, J.: An iterative method for the approximation of fibers in slow-fast systems. SIAM J. Appl. Dyn. Syst. 13(2), 861–900 (2014). https://doi.org/10.1137/120889666

Lam, S.H., Goussis, D.A.: The CSP method for simplifying kinetics. Int. J. Chem. Kinetics 26(4), 461–486 (1994). https://doi.org/10.1002/kin.550260408

Makeev, A.G., Kevrekidis, I.G.: Coarse-graining the computations of surface reactions: Nonlinear dynamics from atomistic simulators. Surface Sci. 603(10-12), 1696–1705 (2009)

Makeev, A.G., Maroudas, D., Kevrekidis, I.G.: “coarse” stability and bifurcation analysis using stochastic simulators: Kinetic monte carlo examples. J. Chem. Phys. 116(23), 10083–10091 (2002). https://doi.org/10.1063/1.1476929

Marschler, C., Sieber, J., Berkemer, R., Kawamoto, A., Starke, J.: Implicit methods for equation-free analysis: Convergence results and analysis of emergent waves in microscopic traffic models. SIAM J. Appl. Dyn. Syst. 13(3), 1202–1238 (2014)

Ott, E., Grebogi, C., Yorke, J.A.: Controlling chaos. Phys. Rev. Lett. 64(11), 1196–1199 (1990). https://doi.org/10.1103/physrevlett.64.1196

Quinn, C., Sieber, J., von der Heydt, A.S.: Effects of periodic forcing on a paleoclimate delay model. SIAM J. Appl. Dyn. Syst. 18(2), 1060–1077 (2019)

Rajendran, K., Tsoumanis, A.C., Siettos, C.I., Laing, C.R., Kevrekidis, I.G.: Modeling heterogeneity in networks using polynomial chaos. Int. J. Multiscale Comput. Eng. 14(3) (2016)

Reppas, A.I., Decker, Y.D., Siettos, C.I.: On the efficiency of the equation-free closure of statistical moments: dynamical properties of a stochastic epidemic model on erdös-rényi networks. J. Stat.Mech. Theory Exp 2012(08), P08020 (2012). https://doi.org/10.1088/1742-5468/2012/08/p08020

Rudin, W.: Principles of mathematical analysis. McGraw-Hill Inc (1976)

Saad, Y.: Numerical methods for large eigenvalue problems. Society for Industrial and Applied Mathematics. https://doi.org/10.1137/1.9781611970739 (2011)

Schiff, S.J., Jerger, K., Duong, D.H., Chang, T., Spano, M.L., Ditto, W.L.: Controlling chaos in the brain. Nature 370(6491), 615–620 (1994). https://doi.org/10.1038/370615a0

Shampine, L.F., Reichelt, M.W.: The matlab ode suite. SIAM J. Scient. Comput. 18(1), 1–22 (1997)

Sieber, J., Gonzalez-Buelga, A., Neild, S., Wagg, D., Krauskopf, B.: Experimental continuation of periodic orbits through a fold. Phys. Rev. Lett. 100(24), 244101 (2008)

Sieber, J., Marschler, C., Starke, J.: Convergence of equation-free methods in the case of finite time scale separation with application to deterministic and stochastic systems. SIAM J. Appl. Dyn. Syst. 17(4), 2574–2614 (2018)

Siettos, C.: Equation-free computation of coarse-grained center manifolds of microscopic simulators. J. Comput. Dyn. 1, 377 (2014). https://doi.org/10.3934/jcd.2014.1.377. http://aimsciences.org//article/id/a0ecbc85-b3d4-4277-8dc2-0f06648e2181

Siettos, C.I.: Equation-free multiscale computational analysis of individual-based epidemic dynamics on networks. Appl. Math. Comput. 218 (2), 324–336 (2011). https://doi.org/10.1016/j.amc.2011.05.067

Siettos, C.I., Graham, M.D., Kevrekidis, I.G.: Coarse brownian dynamics for nematic liquid crystals: Bifurcation, projective integration, and control via stochastic simulation. J. Chem. Phys. 118(22), 10149–10156 (2003). https://doi.org/10.1063/1.1572456

Smajdor, W.: Local analytic solutions of the functional equation \(\approxeq \shortparallel \eqsim \beth (\text {z})=\text {h}(\text {z}\approxeq \shortparallel \eqsim \beth \) [f(z)]) in multidimensional spaces. Aequationes Mathematicae 1(1-2), 20–36 (1968). https://doi.org/10.1007/bf01817555

Triandaf, I., Bollt, E.M., Schwartz, I.B.: Approximating stable and unstable manifolds in experiments.Phys. Rev. E 67(3). https://doi.org/10.1103/physreve.67.037201 (2003)

Xiu, D., Ghanem, R., Kevrekidis, I.: An equation-free approach to uncertain quantification in dynamical systems. IEEE Comput. Sci. Eng. J.(CiSE) 7(3), 16–23 (2005)

Yagasaki, K.: Chaos in a pendulum with feedback control. Nonlinear Dyn. 6(2), 125–142 (1994). https://doi.org/10.1007/bf00044981

Zagaris, A., Gear, C. W., Kaper, T. J., Kevrekidis, Y. G.: Analysis of the accuracy and convergence of equation-free projection to a slow manifold. ESAIM: Math. Model. Numer. Anal. 43(4), 757–784 (2009). https://doi.org/10.1051/m2an/2009026

Zagaris, A., Kaper, H.G., Kaper, T.J.: Fast and slow dynamics for the computational singular perturbation method. Multiscale Model. Simul. 2(4), 613–638 (2004). https://doi.org/10.1137/040603577

Zou, Y., Kevrekidis, I. G.: Uncertainty quantification for atomistic reaction models: An equation-free stochastic simulation algorithm example. Multiscale Model. Simul. 6(4), 1217–1233 (2008)

Funding

Open access funding provided by Università degli Studi di Napoli Federico II within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix.: Extraction of the stable and unstable manifolds for the mean field model of ODEs

Appendix.: Extraction of the stable and unstable manifolds for the mean field model of ODEs

Let us assume a continuous model in the following form of ODEs:

where f is considered to be sufficiently smooth.

To determine the local stable and unstable manifold of a saddle fixed point (x∗,p∗), the following linear transformation is introduced:

where V is the matrix with columns the eigenvectors vj of the Jacobian ∇xf(x,p) computed at (x∗,p∗). As in Section 2 expanding the right-hand side of (A.1) around (x∗,p∗) and introducing (A.2) we get:

g(V z,p) contains the higher order terms with respect to x.

By rearranging appropriately the columns of V, the Jacobian J ≡V− 1∇xf(x,p)V can be written in a block form as \(\boldsymbol {J}= \left (\begin {array}{cc} \boldsymbol {{{\varLambda }}}_{s} & \boldsymbol {0}\\ \boldsymbol {0} & \boldsymbol {{{\varLambda }}}_{u} \end {array} \right )\), where Λs is the l × l (diagonal/block diagonal) matrix whose eigenvalues are the l eigenvalues with negative real parts and Λu is the n − l × n − l (diagonal/block diagonal) matrix whose eigenvalues are the n − l eigenvalues with positive real parts.Thus, the system given by (A.3) can be written as:

where,

Vs and Vu are the sub-matrices of dimensions n × l and n × n − l, whose columns contain the eigenvectors corresponding to the eigenvalues with negative and positive real parts, respectively.

A stable manifold is given by the following equation:

while an unstable manifold is given by the following equation:

The dynamics on the stable manifold can be computed by differentiating (A.6) with respect to time to get:

Accordingly, the dynamics on the unstable manifold can can be computed by differentiating (A.7) with respect to time to get:

As described in Section 2, the stable and unstable manifolds can be approximated by polynomials, and the coefficients of the terms of the same order in both sides in (A.11) are equated. This leads to a system of (nonlinear) algebraic equations (homological equations) to be solved for the unknown polynomial coefficients.

As described in Section 4.2 we aim at computing the stable and unstable manifolds of the mean field model of CO oxidation given by (55) at β = 20.7. The fixed point is \(({\theta }_{A}^{*},{\theta }_{B}^{*},{\theta }_{C}^{*})\approx (0.2924, 0.0294, 0.6492)\) and the corresponding Jacobian of the right-hand side of (55) is

The eigenvalues and corresponding eigenvectors of \(\boldsymbol {J}(\theta _{A}^{*},\theta _{B}^{*},\theta _{C}^{*})\) are: λ1 ≈− 5.7148, \(\boldsymbol {v}_{1}=\left (\begin {array}{c} -0.5961\\-0.7973\\-0.0939 \end {array} \right )\), λ2,3 ≈ 0.0110 ± 0.0300i, \(\boldsymbol {v}_{2,3}= \left (\begin {array}{c} -0.7964\\0.1851\pm 0.0729i\\ 0.5600 \mp 0.1112i \end {array} \right )\).

1.1 A.1. Approximation of the stable manifold of the mean field model of co oxidation on catalytic surfaces

For the mean field model of CO oxidation given by (55), we used a third-order approximation of the stable manifold around \((\theta _{A}^{*},\theta _{B}^{*},\theta _{C}^{*})\) given by:

Introducing (A.13) into (A.11) and equating the terms up to third order of both sides, we get the following set of six nonlinear equations:

The above system of nonlinear algebraic equations is solved using Newton-Raphson. Below, we provide the Matlab code.

a0=[0;0;0;0;0;0]; eps=0.01; tol=1E-06; error1=10; kiter=0; while error1>tol F0= model3coefsnewtonanalytical(a0); for i=1:6 a=a0; a(i)=a0(i)+eps; Fplus = model3coefsnewtonanalytical(a); a(i)=a0(i)-eps; Fminus = model3coefsnewtonanalytical(a); FJac(:,i)=(Fplus-Fminus)/(2*eps); end dx=-FJac\F0; a0=a0+dx; error1=norm(dx); kiter=kiter+1; [kiter error1] end function [F] = model3coefsnewtonanalytical(a) a1=a(1);a2=a(2);a3=a(3);a4=a(4);a5=a(5);a6=a(6); F=[(0.011005*a1 + 0.030017*a4 + 2.0464e-12)-(a1*(9.6634e-13*a1 + 4.4054e-13*a4 - 5.7148) - 9.5641e-8*a2); (2*a2*(9.6634e-13*a1 + 4.4054e-13*a4 - 5.7148) - a1*(0.8705*a1^2 + 0.43711*a1*a4 + 2.1719*a1 + 0.047872*a4^2 + 3.9445*a4 -9.6634e-13*a2 - 4.4054e-13*a5 + 69.473) - 1.4346e-7 *a3)-... (- 0.088879*a1^2 + 0.0356*a1*a4 + 4.2609*a1 + 0.035831 *a4^2 + 2.7342*a4 + 0.011005*a2 + 0.030017*a5 +54.386); (4.2609*a2 + 0.011005*a3 + 2.7342*a5 + 0.030017*a6 - 0.17776* a1*a2 + 0.0356*a1*a5 + 0.0356*a2*a4 + 0.071662*a4*a5)-... (3*a3*(9.6634e-13*a1 + 4.4054e-13*a4 - 5.7148) - 2*a2*(0.8705 *a1^2 + 0.43711*a1*a4 + 2.1719*a1 + 0.047872*a4^2 + 3.9445*a4 - 9.6634e-13*a2 - 4.4054e-13*a5 + 69.473) - a1*(2.1719*a2 - 9.6634e-13*a3 + 3.9445*a5 - ... 4.4054e-13*a6 + 1.741*a1*a2 + 0.43711*a1*a5 + 0.43711*a2*a4 + 0.095744*a4*a5)); (a4*(9.6634e-13*a1 + 4.4054e-13*a4- 5.7148) - 9.5641e-8*a5)- (0.011005*a4 - 0.030017*a1 - 1.8879e-11); (0.28781*a1^2 + 0.54843*a1*a4 + 23.286*a1 + 0.22083*a4^2 + 17.097*a4 - 0.030017*a2 + 0.011005*a5 + 332.49)-... (2*a5*(9.6634e-13*a1 + 4.4054e-13*a4 - 5.7148) - a4*(0.8705*a1^2 + 0.43711*a1*a4 + 2.1719*a1 + 0.047872*a4^2 + 3.9445*a4 - 9.6634e-13*a2... - 4.4054e-13*a5 + 69.473) - 1.4346e-7*a6); (3*a6*(9.6634e-13*a1 + 4.4054e-13*a4 - 5.7148) - 2*a5*(0.8705*a1^2 + 0.43711*a1*a4 + 2.1719*a1 + 0.047872*a4^2 + 3.9445*a4 - 9.6634e-13*a2 - 4.4054e -13*a5 + 69.473) - a4*(2.1719*a2 - 9.6634e-13*a3 + 3.9445*a5 - 4.4054e-13*a6 + 1.741*a1*a2 + 0.43711*a1*a5 + 0.43711*a2*a4 + 0.095744*a4*a5))-... (23.286*a2 - 0.030017*a3 + 17.097*a5 + 0.011005*a6 + 0.57562*a1*a2 + 0.54843*a1*a5 + 0.54843*a2*a4 + 0.44166*a4*a5)]; end

1.2 A.2 Approximation of the unstable manifold of the mean field model of CO oxidation on catalytic surfaces

We approximate the unstable manifold of the mean field model (55) around \((\theta _{A}^{*},\theta _{B}^{*},\theta _{C}^{*})\) using the following series expansion:

Introducing (A.20) into (A.13) and equating the terms up to second order of both sides we get the following set of seven nonlinear equations:

The above system of nonlinear algebraic equations is solved with Newton-Raphson. Below, we provide the Matlab code.

a0=[0;0;0;0;0;0;0]; eps=0.001; tol=1E-06; error1=10; kiter=0; while error1>tol F0= model3unstablecoefsnewtonanalytical(a0); for i=1:7 a=a0; a(i)=a0(i)+eps; Fplus = model3unstablecoefsnewtonanalytical(a); a(i)=a0(i)-eps; Fminus = model3unstablecoefsnewtonanalytical(a); FJac(:,i)=(Fplus-Fminus)/(2*eps); end dx=-FJac\F0; a0=a0+dx; error1=norm(dx); kiter=kiter+1; [kiter error1] end function [F] = model3unstablecoefsnewtonanalytical(a) a110=a(1); a120=a(2); a101=a(3); a102=a(4); a111=a(5); a112=a(6); a121=a(7); F=[(a110*(2.0464e-12*a110 + 0.011005) - 1.9143e-9*a120 - 1.6761e-10*a111 - a101*(1.8879e-11*a110 + 0.030017))-... (9.6634e-13 - 5.7148*a110); (a110*(2.0464e-12*a101 + 0.030017) - 9.5717e-10*a111 - a101*(1.8879e-11*a101 - 0.011005) - 3.3523e-10*a102)-... (4.4054e-13 - 5.7148*a101); (a101*(332.49*a110^2 + 23.286*a110 - 1.8879e-11*a120 + 0.28781) - 1.6761e-10*a121+ a110*(54.386*a110^2 + 4.2609*a110 + 2.0464e-12*a120 - 0.088879) + 2*a120*(2.0464e-12*a110 + 0.011005) - a111*(1.8879e-11*a110 + 0.030017))-... (- 69.473*a110^2 - 2.1719*a110 - 5.7148*a120 - 0.8705); (a110*(54.386*a101^2 + 2.7342*a101 + 2.0464e-12*a102 + 0.035831) - 9.5717e-10*a112 + a101*(332.49 *a101^2 + 17.097*a101 - 1.8879e-11*a102 + 0.22083) - 2*a102*(1.8879e-11*a101 - 0.011005) + a111*(2.0464e-12*a101 + 0.030017))-... (- 69.473*a101^2 - 3.9445*a101 - 5.7148*a102 - 0.047872); (a101*(23.286*a101 + 17.097*a110 - 1.8879e-11*a111 + 664.98*a101*a110 + 0.54843) - 1.9143e-9*a121 - 3.3523e-10*a112+ a110*(4.2609*a101 + 2.7342*a110 + 2.0464e-12 *a111 + 108.77*a101*a110 + 0.0356)+ a111*(2.0464e-12*a110 + 0.011005) - a111*(1.8879e-11*a101 - 0.011005) + 2*a120*(2.0464e-12*a101 + 0.030017) - 2*a102*(1.8879e-11*a110 + 0.030017))-... (- 2.1719*a101 - 3.9445*a110 - 5.7148*a111 - 138.95*a101*a110 - 0.43711); (2*a102*(23.286*a101 + 17.097*a110 - 1.8879e-11 *a111 + 664.98*a101*a110 + 0.54843) + 2*a120*(54.386*a101^2 + 2.7342*a101 + 2.0464e-12*a102 + 0.035831) + a111*(4.2609*a101 + 2.7342*a110 + 2.0464e-12*a111 + 108.77*a101 *a110 + 0.0356) + a111*(332.49*a101^2 + 17.097*a101 - 1.8879e -11*a102 + 0.22083) + a101*(23.286*a102 + 17.097*a111 - 1.8879e-11*a112 + 664.98*a101*a111 + 664.98*a102*a110) + a110*(4.2609*a102 +2.7342*a111 + 2.0464e-12*a112 + 108.77 *a101*a111 + 108.77*a102*a110) + a112*(2.0464e-12*a110 + 0.011005) - 2*a112*(1.8879e-11*a101 - 0.011005) + 2*a121*(2.0464e-12*a101 + 0.030017))-... (- 2.1719*a102 - 3.9445*a111 - 5.7148*a112 - 138.95*a101*a111 - 138.95*a102*a110); (2*a102*(332.49*a110^2 + 23.286*a110 - 1.8879e-11*a120 + 0.28781) + a111*(23.286*a101 + 17.097*a110 - 1.8879e-11*a111 + 664.98*a101*a110 + 0.54843) + a111*(54.386*a110^2 + 4.2609*a110 + 2.0464e-12*a120 - 0.088879) + 2*a120*(4.2609*a101 + 2.7342*a110 + 2.0464e-12 *a111 + 108.77*a101*a110 + 0.0356)+ a101*(23.286*a111 + 17.097*a120 - 1.8879e-11*a121 + 664.98*a101*a120 + 664.98*a110*a111) + a110*(4.2609*a111 + 2.7342*a120 + 2.0464e -12*a121 + 108.77*a101*a120 + 108.77*a110*a111) + 2*a121*(2.0464e-12*a110 + 0.011005) - a121*(1.8879e-11*a101 - 0.011005) - 2*a112*(1.8879e-11*a110 + 0.030017))-... (- 2.1719*a111 - 3.9445*a120 - 5.7148*a121 - 138.95*a101*a120 - 138.95*a110*a111)]; end

the matlab code that implements the identification of the stable manifold of the toy discrete time model is available at: https://github.com/csiettos/equation-free-stable-unstable-manifolds

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article