Abstract

In this paper, we provide further illustrations of prolate interpolation and pseudospectral differentiation from the barycentric perspective. The convergence rates of the barycentric prolate interpolation and pseudospectral differentiation are derived. Furthermore, we propose a new preconditioner, which leads to a well-conditioned prolate collocation scheme. Numerical examples are included to show the high accuracy of the new method. We apply this approach to solve a second-order boundary value problem and a Helmholtz problem.

1 Introduction

In many cases, we are confronted with wave phenomena, such as wave scattering, signal processing, and antenna theory, which are characterized by bandlimited functions (whose Fourier transforms are compactly supported) [3, 14]. It is well known that the natural tool for effectively representing bandlimited functions on an interval is prolate spheroidal wave functions (PSWFs) [7, 28, 32]. Hence, there has been a growing interest in developing prolate spheroidal wave functions, which also offer an alternative to Chebyshev and other orthogonal polynomials for pseudospectral/collocation and spectral-element algorithms [5].

It is well known that a simple way of approximating a function f(x) is to choose a sequence of points \(\{x_{j}\}_{j=0}^{n}\) and determine the function P(x) from the values of f(x) at these interpolation nodes, i.e., set P(xj) = f(xj), 0 ≤ j ≤ n. The standard tools for interpolation and approximation algorithms were investigated in [15, 32]. A very popular alternative nowadays is the barycentric interpolation formula, whose favourable numerical properties are summarized by Berrut and Trefethen [1, 24]. The related issues are therefore worthy of investigation.

The purpose of this paper is to gain new insights into prolate interpolation and pseudospectral differentiation based on the Prolate-Gauss-Lobatto points. The proposed numerical method is inspired by the remarkable advantages of the barycentric interpolation formula [1]. The main contributions are as follows.

- We give the barycentric prolate interpolation and differentiation formulas, which enjoy more stable approximability and efficiency than the formulas given earlier [32, 34].

- We give the error analysis of the barycentric prolate interpolation and differentiation based on the error analysis of the standard prolate interpolation and differentiation [22].

- We offer a preconditioning matrix that nearly inverts the second-order prolate pseudospectral differentiation matrix, leading to a well-conditioned collocation approach for second-order boundary value problems.

The structure of the paper is as follows. In Section 2, we review some results on PSWFs, prolate interpolation, and pseudospectral differentiation. Section 3 combines the barycentric form with the prolate interpolation, which yields the new barycentric prolate interpolation and pseudospectral differentiation scheme, and gives the corresponding convergence analysis. In Section 4, we introduce the preconditioning matrix that nearly inverts the second-order barycentric prolate differentiation matrix. Sections 5 and 6 demonstrate the analysis via several numerical experiments and apply the method to a second-order boundary value problem and a Helmholtz problem.

2 Prolate interpolation and pseudospectral differentiation

2.1 Preliminaries

The PSWFs were introduced in the 1960s by Slepian et al. in a series of papers [20, 21]. We first briefly recall some preliminary properties of the PSWFs. All of these can be found in [2, 6, 13–16, 18, 20, 21, 30, 31, 34].

Prolate spheroidal wave functions of order zero are the eigenfunctions ψj(x) of the Helmholtz equation in prolate spheroidal coordinates:

for x ∈ (− 1,1) and c ≥ 0. A series of papers [18, 32, 34] have shown that the ψj(x) are also the eigenfunctions of the following integral eigenvalue problem:

Here, \(\{\chi _{j}:=\chi _{j}(c)\}_{j=0}^{\infty }\) and \(\{\lambda _{j}:=\lambda _{j}(c)\}_{j=0}^{\infty }\) are the associated eigenvalues corresponding to the differential operator and integral operator, and the constant c is known as the “bandwidth parameter.” The eigenvalues \(\{\lambda _{j}\}_{j=0}^{\infty }\) satisfy |λ0| > |λ1| > |λ2| > … > 0, which decay exponentially to nearly 0. Specifically, based on the work of Wang and Zhang [31], we have:

where the notation \(A\simeq B\) means that for B≠ 0, the ratio \(\frac {A}{B}\rightarrow 1\) in the sense of some limiting process.
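For orientation, the two eigenproblems referred to above are commonly written in the following form. This is the normalization usually found in the PSWF literature (for instance in [32]); it is stated here as an assumption about the convention in use, not as a quotation of the numbered displays.

```latex
\partial_{x}\Big((1-x^{2})\,\partial_{x}\psi_{j}(x)\Big)+\big(\chi_{j}-c^{2}x^{2}\big)\,\psi_{j}(x)=0,
\qquad x\in(-1,1),
\\[4pt]
\lambda_{j}\,\psi_{j}(x)=\int_{-1}^{1}e^{\mathrm{i}cxt}\,\psi_{j}(t)\,dt,
\qquad x\in(-1,1).
```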

An important issue related to the PSWFs is the choice of bandlimit parameter c. For general functions, we do not have a simple optimal c. This is due to the fact that an arbitrary function has many different modes and each mode has a distinct optimal c [7]. Regardless of whether the function being represented is bandlimited or not, all the useful choices of c must satisfy [5]:

As recommended in [30, 32], in practice, a quite safe choice is \(c=\frac {N}{2}\). With this in mind, we choose \(c=\frac {N}{2}\). Guidelines on the suitable choice of c can be found in [3]. The practical rule for pairing up (c,N) has been given in [13, 32].

We call the zeros of \((1-x^{2})\psi_{n}(x)\) the Prolate-Gauss-Lobatto points (PGL points). For their computation, Boyd [6] described Newton’s iteration method with some care in selecting initial guesses. More generally, [12] gives an efficient algorithm for computing zeros of special functions, such as PSWFs. With the Prolate-Gauss-Lobatto points at our disposal, we introduce the prolate interpolation and pseudospectral differentiation.

2.2 Prolate interpolation and pseudospectral differentiation

In this subsection, we first review some facts about prolate interpolation and pseudospectral differentiation.

The key idea for interpolation is to seek the prolate cardinal functions ℓi(x) := ℓi(x;c), which are designed to satisfy the interpolation property:

Then, the function f(x) is approximated by

The standard route to get the derivatives is by directly differentiating the prolate cardinal basis ℓi(x).

Generally, we define the prolate cardinal functions ℓi(x) as

where \(\{x_{k}\}_{k=0}^{N}\) are the Prolate points, which are zeros of sp(x). It follows that the standard interpolation is [15, 32]:

The standard differentiation generated from the cardinal basis (2.7) can be computed by:

Differentiating \(s_{p}(x)=s_{p}^{\prime }(x_{j})(x-x_{j})\ell _{j}(x)\) twice yields

In the following, we consider the prolate interpolation and pseudospectral differentiation scheme through the barycentric form.

3 Barycentric prolate interpolation and differentiation

In this section, we start with the barycentric interpolation [1, 9, 11], which is an important piece of the puzzle for our new approach, and then give new insights into prolate interpolation, leading to what we call the barycentric prolate interpolation. The differentiation matrices are derived through the barycentric interpolation formula. Then, the convergence analysis of barycentric prolate interpolation and differentiation is given.

3.1 Barycentric interpolation formula

Let \(\{x_{j}\}_{j=0}^{N}\) be a set of distinct nodes in [−1,1], arranged in ascending order, together with corresponding values f(xj). We assume that the nodes are real and that they are zeros of the function s(x), i.e., s(xj) = 0 for 0 ≤ j ≤ N. Thus, the Lagrange interpolating basis is defined by:

Accordingly, the interpolation in Lagrange form for the function f(x) is

The barycentric formula is an alternative form of the Lagrange interpolant. For computations, it is generally recommended to use the barycentric interpolation formula [1, 11], whose numerical stability proves advantageous in applications. The barycentric interpolation is defined as

where \(\{w_{j}\}_{j=0}^{N}\) are the barycentric weights. The barycentric weights admit an equivalent expression in terms of s(x). As in polynomial interpolation [1, 24], s(x) can be written as:

such that barycentric weights become

For certain special sets of nodes \(\{x_{j}\}_{j=0}^{n}\), explicit expressions of the barycentric weights wj are available in [1, 26, 27]. For general point sets, the barycentric weights wj can be evaluated by the fast multipole method [23]. These observations lead to an efficient method for computing prolate interpolants based on the Prolate-Gauss-Lobatto points through a new definition of non-zero barycentric weights.
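The barycentric evaluation described in this subsection can be sketched in a few lines. The sketch below is a minimal illustration under stated assumptions: it uses the polynomial case, where s(x) is the node polynomial and the weights are 1/s′(xj), and it uses Chebyshev-Lobatto points as stand-in nodes because computing Prolate-Gauss-Lobatto points requires a PSWF solver. The function names `barycentric_weights` and `barycentric_eval` are hypothetical.

```python
from math import cos, pi, sin

def barycentric_weights(nodes):
    """Weights w_j = 1 / prod_{k != j} (x_j - x_k), i.e. 1 / s'(x_j)
    when s(x) = prod_k (x - x_k). Direct O(N^2) product, no FMM."""
    weights = []
    for j, xj in enumerate(nodes):
        prod = 1.0
        for k, xk in enumerate(nodes):
            if k != j:
                prod *= xj - xk
        weights.append(1.0 / prod)
    return weights

def barycentric_eval(x, nodes, values, weights):
    """Evaluate sum_j [w_j f_j / (x - x_j)] / sum_j [w_j / (x - x_j)]."""
    num = 0.0
    den = 0.0
    for xj, fj, wj in zip(nodes, values, weights):
        if x == xj:
            # x coincides with a node: return the data value directly
            return fj
        t = wj / (x - xj)
        num += t * fj
        den += t
    return num / den

# Assumption: Chebyshev-Lobatto nodes (ascending) stand in for PGL nodes.
N = 16
nodes = [-cos(pi * j / N) for j in range(N + 1)]
weights = barycentric_weights(nodes)
values = [sin(3.0 * xj) for xj in nodes]
approx = barycentric_eval(0.3, nodes, values, weights)
cubic = barycentric_eval(0.3, nodes, [xj ** 3 for xj in nodes], weights)
```

Swapping in other nodes and weights only changes the two lists passed to `barycentric_eval`; the evaluation routine itself is unchanged.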

3.2 Barycentric prolate interpolation and pseudospectral differentiation

Using the barycentric form, this subsection gives a new definition of barycentric prolate weights, which leads to remarkably simple and efficient schemes for the construction of a rational barycentric interpolant, which we call the barycentric prolate interpolation.

The first question is how to choose the barycentric weights. In a similar manner to deriving the barycentric formula (3.13) from the Lagrange interpolation (3.11), since the Prolate-Gauss-Lobatto points are the roots of \((1-x^{2})\psi_{N-1}(x)\), it is natural to let \(s(x):=s_{p}(x)=(1-x^{2})\psi_{N-1}(x)\). Correspondingly, we define the prolate barycentric weights to be

According to the foregoing observations, it is desirable to define a new interpolation which is called barycentric prolate interpolation. Moreover, the interpolation property is stable with respect to the nonzero weights, as noticed in [33].

Definition 3.1

The barycentric prolate interpolation can be expressed as

where \(\{x_{j}\}_{j=0}^{n}\) are the Prolate-Gauss-Lobatto points and \(w_{j}=\frac {1}{-2x_{j}\psi _{N-1}(x_{j})+(1-{x_{j}^{2}})\psi ^{\prime }_{N-1}(x_{j})}\).
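For reference, the barycentric form that Definition 3.1 instantiates can be written out as below. This is the general barycentric quotient of Section 3.1 with the prolate weights inserted; it is given as an assumed reconstruction rather than a quotation of (3.15). The second equality uses the notation Pn[·] of the proof of Theorem 3.1 for the standard prolate interpolant (2.8).

```latex
G_{n}(x)=\frac{\displaystyle\sum_{j=0}^{N}\frac{w_{j}}{x-x_{j}}\,f(x_{j})}
              {\displaystyle\sum_{j=0}^{N}\frac{w_{j}}{x-x_{j}}}
        =\frac{P_{n}[f](x)}{P_{n}[1](x)},
\qquad
w_{j}=\frac{1}{s_{p}^{\prime}(x_{j})}.
```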

The error analysis is given in the next subsection. The numerical evidence in Figs. 3 and 4 indicates that the barycentric prolate interpolation gives better approximations.

Remark 1

The barycentric prolate interpolation enjoys several advantages, which make it very efficient in practice. (i) The barycentric prolate interpolation is scale-invariant (a common factor in the weights cancels between numerator and denominator), thus avoiding problems of underflow and overflow. (ii) Once the wj are computed, evaluating the interpolant at any point x takes only \(\mathcal {O}(N)\) floating point operations.
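The scale invariance in point (i) is easy to check numerically. The following minimal sketch multiplies all weights by a tiny common factor and evaluates the interpolant again. It assumes Chebyshev-Lobatto nodes with their known simplified weights as a stand-in for the prolate nodes and weights; `bary_eval` is a hypothetical helper name.

```python
from math import cos, exp, pi

def bary_eval(x, nodes, values, weights):
    """Barycentric quotient; costs O(N) operations per evaluation point."""
    num = den = 0.0
    for xj, fj, wj in zip(nodes, values, weights):
        if x == xj:
            return fj
        t = wj / (x - xj)
        num += t * fj
        den += t
    return num / den

# Assumption: Chebyshev-Lobatto nodes; weights (-1)^j, halved at the endpoints.
N = 20
nodes = [-cos(pi * j / N) for j in range(N + 1)]
weights = [(-1.0) ** j * (0.5 if j in (0, N) else 1.0) for j in range(N + 1)]
values = [exp(xj) for xj in nodes]

# Rescale every weight by the same factor: the interpolant should not change.
scaled = [1.0e-30 * wj for wj in weights]
p_original = bary_eval(0.123, nodes, values, weights)
p_scaled = bary_eval(0.123, nodes, values, scaled)
```

Because only ratios of weights enter the quotient, the weights can be normalized freely before evaluation.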

Remark 2

The barycentric formula is naturally suited to the fast multipole method (FMM) [1], which is a useful and efficient tool for reducing the complexity of certain sums such as (3.15) from \(\mathcal {O}(N^{2})\) to \(\mathcal {O}(N)\). The idea of using the FMM to accelerate interpolation and pseudospectral differentiation can be traced back to [4, 8], and in [15] the FMM was used to accelerate the standard prolate interpolation and differentiation. It is worth pointing out that the new scheme (3.15) can also be accelerated by the FMM through a process very similar to that in [17].

Remark 3

In the standard interpolation formula (2.8), we have to evaluate \(s_{p}(x)=(1-x^{2})\psi_{N-1}(x)\). This is complicated and time-consuming, since \(\psi _{N}(x)={\sum }_{k}{\alpha ^{N}_{k}}\overline {P}_{k}(x)\), where \(\overline {P}_{k}(x)\) is the normalized Legendre polynomial and the coefficients \(\alpha^{N}_{k}\) are the entries of an eigenvector of a matrix. In contrast, the factor sp(x) drops out of (3.15), and this feature has practical consequences.

Furthermore, we define the cardinal basis function of the barycentric prolate interpolation (3.15) as

This leads to the differentiation matrices

which have the explicit formulas [23]:

where \(\{x_{j}\}_{j=0}^{n}\) are the Prolate-Gauss-Lobatto points and \(w_{j}=\frac {1}{-2x_{j}\psi _{N-1}(x_{j})+(1-{x_{j}^{2}})\psi ^{\prime }_{N-1}(x_{j})}\).
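As an illustration of how differentiation matrices follow from nodes and barycentric weights alone, the sketch below uses the classical formulas for barycentric interpolants: off-diagonal first-order entries (wj/wi)/(xi − xj), off-diagonal second-order entries built from the first-order ones, and diagonals fixed by zero row sums. These are assumed here to correspond to (3.18)–(3.19), which are not quoted. Chebyshev-Lobatto nodes and weights are a stand-in for the prolate ones, and `bary_diff_matrices` is a hypothetical name.

```python
from math import cos, pi

def bary_diff_matrices(nodes, weights):
    """First- and second-order differentiation matrices of a barycentric
    interpolant, using only the nodes and the barycentric weights."""
    n = len(nodes)
    D1 = [[0.0] * n for _ in range(n)]
    D2 = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if j != i:
                D1[i][j] = (weights[j] / weights[i]) / (nodes[i] - nodes[j])
        # diagonal from the zero-row-sum property (constants have zero derivative)
        D1[i][i] = -sum(D1[i][j] for j in range(n) if j != i)
        for j in range(n):
            if j != i:
                D2[i][j] = 2.0 * D1[i][j] * (D1[i][i] - 1.0 / (nodes[i] - nodes[j]))
        D2[i][i] = -sum(D2[i][j] for j in range(n) if j != i)
    return D1, D2

def matvec(A, v):
    return [sum(a * b for a, b in zip(row, v)) for row in A]

# Assumption: Chebyshev-Lobatto nodes and weights stand in for PGL ones.
N = 8
nodes = [-cos(pi * j / N) for j in range(N + 1)]
weights = [(-1.0) ** j * (0.5 if j in (0, N) else 1.0) for j in range(N + 1)]
D1, D2 = bary_diff_matrices(nodes, weights)

f = [x ** 3 for x in nodes]
d1f = matvec(D1, f)   # should approximate 3 x^2 at the nodes
d2f = matvec(D2, f)   # should approximate 6 x at the nodes
```

No derivative of a cardinal function is evaluated anywhere; only differences of nodes and ratios of weights appear.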

Remark 4

The standard differentiation method (2.10) involves the first-order derivative values \(\ell ^{\prime }_{j}(x_{i})\) (2.9), which causes error propagation for large N. The barycentric prolate differentiation involves only the barycentric weights. Hence, the barycentric prolate differentiation form is stable even for large N, as shown in Fig. 6.

3.3 Convergence properties of barycentric prolate interpolation and differentiation

Results can also be obtained for the convergence properties of barycentric prolate interpolation and differentiation.

Lemma 3.1

[22] Let f be an entire function, ΓR be the boundary of the square [−RK,RK] × [−i ⋅ RK,i ⋅ RK], \(R_{K}>\frac {\pi }{2c}+\frac {8(c+1)}{c\cdot \lambda _{n}}\), ψn(RK)≠ 0, \(C_{1}=\max \limits _{z\in {\Gamma }_{R}}|f(z)|\). Suppose Pn(x) is the interpolant of f(x) at the Prolate-Gauss-Lobatto points (2.8). Then, for \(\chi_{n}>c^{2}\) and −1 < x < 1, it follows that

where \(\widetilde {C}\) is a constant.

Remark 5

We remark that the assumption in Lemma 3.1 that f is entire can be relaxed: it suffices that f be analytic in a region bounded by the square [−RK,RK] × [−i ⋅ RK,i ⋅ RK] [18, 35].

Theorem 3.1

Let f be analytic in a region bounded by the square [−RK,RK] × [−i ⋅ RK,i ⋅ RK], ΓR be the boundary of this square, \(R_{K}>\frac {\pi }{2c}+\frac {8(c+1)}{c\cdot \lambda _{n}}\), ψn(RK)≠ 0. Suppose Pn(x) and Gn(x) are the interpolants of f(x) at the Prolate-Gauss-Lobatto points given by formulas (2.8) and (3.15), respectively. Then, for \(\chi_{n}>c^{2}\) and −1 < x < 1, it follows that

where \(\varepsilon _{n}:=\frac {2\cdot |\lambda _{n}|}{{R^{2}_{K}}-1}\left (1+4\cdot c\cdot R_{K}\cdot e^{-c\cdot R_{K}}\right )\) and \(\varepsilon _{n}^{\prime }=\left (2+\widetilde {C}\cdot \sqrt {2}\cdot n^{3}+\frac {1}{R_{K}}\right )\frac {2\cdot |\lambda _{n}|}{{R^{2}_{K}}-1}\left (1+4\cdot c\cdot R_{K}\cdot e^{-cR_{K}}\right )\).

Proof

Let Pn[1](x) denote the interpolant of the constant function f(x) = 1 and write Pn[1](x) = 1 + En(x). By Lemma 3.1, the error satisfies

It follows that

Then, we have:

and

Combining with (3.25), we obtain:

and

where εn and \(\varepsilon _{n}^{\prime }\) are defined in (3.24). The proof is completed. □

Remark 6

Theorem 3.1 shows a close connection between the barycentric prolate interpolation (3.15) and the standard prolate interpolation (2.8). Roughly speaking, since λn satisfies (2.3), |f(x) − Gn(x)| decays exponentially with respect to n when c satisfies (2.4).

Remark 7

A function f may be less smooth than in the case we have considered; numerical results suggest that fast convergence may still be observed. However, it remains open exactly how the convergence rates of barycentric prolate interpolation depend on the degree of smoothness of f.

4 A well-conditioned prolate-collocation method

It is well known that the second-order prolate differentiation matrix becomes unstable even for moderately large N [19]. Fortunately, Wang et al. [32] offered a new basis leading to well-conditioned collocation linear systems. In this section, we give a different way to evaluate the Birkhoff interpolation basis, which generates the preconditioner Pin, such that the eigenvalues of \(P_{in}D_{in}^{(2)}\) are concentrated near one.

Consider the second-order BVPs with Dirichlet boundary conditions:

Following the work of Wang [32], the Birkhoff interpolation p(x) of f(x) can be uniquely determined by:

where \(\{B_{j}\}_{j=0}^{N}\) are the Birkhoff interpolation basis and satisfy:

Proposition 4.1

Let \(\{x_{j}\}_{j=0}^{n}\) be a set of Prolate-Gauss-Lobatto points. The Birkhoff interpolation basis \(\{B_{j}\}_{j=0}^{N}\) defined in (4.28)–(4.30) is given by:

where \(\{\widetilde {h}_{j}\}_{j=1}^{N-1}\) are the prolate barycentric interpolation basis at \(\{x_{j}\}_{j=1}^{N-1}\)

and \(\lambda _{j}=\{\frac {1}{\psi ^{\prime }_{N-1}(x_{j})}\}_{j=1}^{N-1}\). What’s more,

We omit the proof, since it is very similar to that in [29]. To avoid the instability and low efficiency of the Lagrange form, the barycentric form recommended in [1] is used.

To construct the Birkhoff interpolation basis, we give the numerical scheme for integral (4.32) at xi

Introducing the change of variable

allows us to rewrite the definite integrals (4.34) further as

The integrals in (4.36) can be computed exactly using Gauss quadrature at Legendre points. Based on the fast \(\mathcal {O}(N)\) algorithm for computing Gaussian quadrature nodes and weights due to Hale and Townsend [10], we obtain a fast scheme for the Birkhoff interpolation basis \(\{B_{j}\}_{j=0}^{N}\) and \(\{B^{(1)}_{j}\}_{j=0}^{N}\).
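The change of variable followed by Gauss quadrature can be sketched as below. The sketch assumes integrals of the generic form ∫ from −1 to xi of g(t) dt and the affine map t = (xi + 1)/2 · s + (xi − 1)/2; the exact integrands of (4.34)–(4.36) are not reproduced. The nodes and weights are computed by a plain Newton iteration on the Legendre polynomial, not by the fast algorithm of Hale and Townsend [10]. The names `legendre_pm`, `gauss_legendre` and `integrate_to` are hypothetical.

```python
from math import cos, exp, pi

def legendre_pm(m, x):
    """Return P_m(x) and P_m'(x) via the three-term recurrence (m >= 1, |x| < 1)."""
    p0, p1 = 1.0, x
    for k in range(2, m + 1):
        p0, p1 = p1, ((2 * k - 1) * x * p1 - (k - 1) * p0) / k
    return p1, m * (x * p1 - p0) / (x * x - 1.0)

def gauss_legendre(m):
    """Nodes and weights of the m-point Gauss-Legendre rule on [-1, 1]."""
    nodes, weights = [], []
    for i in range(1, m + 1):
        x = cos(pi * (i - 0.25) / (m + 0.5))   # classical initial guess
        for _ in range(50):                    # Newton iteration on P_m
            p, dp = legendre_pm(m, x)
            dx = p / dp
            x -= dx
            if abs(dx) < 1e-15:
                break
        _, dp = legendre_pm(m, x)
        nodes.append(x)
        weights.append(2.0 / ((1.0 - x * x) * dp * dp))
    return nodes, weights

def integrate_to(g, xi, m=20):
    """Approximate the integral of g over [-1, xi] after mapping to [-1, 1]."""
    s_nodes, s_weights = gauss_legendre(m)
    half = 0.5 * (xi + 1.0)    # Jacobian of the affine map
    shift = 0.5 * (xi - 1.0)
    return half * sum(w * g(half * s + shift) for s, w in zip(s_nodes, s_weights))

val_cubic = integrate_to(lambda t: t ** 3, 0.5)
val_exp = integrate_to(exp, 0.5)
```

The same quadrature nodes on [−1, 1] are reused for every upper limit xi; only the affine map changes.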

Let \(b^{(k)}_{ij}:=B^{(k)}_{j}(x_{i})\), and define the matrices

Due to (4.27), hk(x) in (3.16) can be approximated by

Since hj(x) satisfies hj(xi) = δij, it follows that

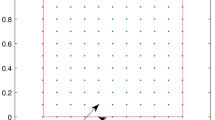

where IM is an M × M identity matrix, and the matrix \(\textbf {D}_{in}^{(2)}\) is the same as in (3.17). We depict in Fig. 1 the distribution of the largest and smallest eigenvalues of \(\textbf {B}_{in}\textbf {D}_{in}^{(2)}\) at the Prolate-Gauss-Lobatto points. This agrees with (4.39).

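The usual collocation scheme is to find \(\mathbf{f}=(f(x_{1}),\ldots,f(x_{N-1}))^{t}\) by solving

where \(\mathbf{g}=(g(x_{1}),\ldots,g(x_{N-1}))^{t}\), \(\Lambda_{r}=\mathrm{diag}(r(x_{1}),\ldots,r(x_{N-1}))\), \(\Lambda_{s}=\mathrm{diag}(s(x_{1}),\ldots,s(x_{N-1}))\),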

It is well known that the coefficient matrix of the usual collocation method has a high condition number. Below, let us consider the preconditioning method for solving BVP. On the one hand, due to \(B_{in}D_{in}^{(2)}=I_{N-1}\), the matrix Bin can be used to precondition the ill-conditioned system by:

where

On the other hand, recall the formula (4.27): one can directly use {Bk} as basis. Then, the collocation scheme of BVP can be expressed as:

where \(\mathbf {u}=(f^{\prime \prime }_{N}(x_{1}),f^{\prime \prime }_{N}(x_{2}),\ldots ,f^{\prime \prime }_{N}(x_{N-1}))^{T}\), and

We can obtain u by solving the system, and then recover f = (fN(x1),…,fN(xN− 1))T from

where bj = (Bj(x1),Bj(x2),…,Bj(xN− 1))T for j = 0,N.

Remark 8

Obviously, the new system (4.42) does not involve the direct multiplication of the preconditioner, and the round-off errors in forming differentiation matrices can be alleviated.

Remark 9

The use of Birkhoff interpolation as basis functions for deriving the preconditioner mimics the preconditioning technique in [32]. However, [32] searches for the Birkhoff interpolation basis {Bj(x)} through an expansion in a different finite dimensional space and then solves for the coefficients using the interpolation conditions. This process involves inverting a matrix of PSWF values, which is time-consuming. Our idea of constructing the basis {Bj(x)} in (4.31)–(4.32) is inspired by the polynomial-based algorithms in [29], and the new insights reside in two aspects. First, to avoid the instability of the Lagrange interpolation, the barycentric form is used. Second, after a change of variable, the integrals in (4.32) are computed by the fast Gauss quadrature proposed by Hale and Townsend.

5 Numerical tests

In this section, we present numerical results. All computations were carried out in Matlab R2014a on a desktop (4.0 GB RAM, 2 Core2 (64 bit) processors at 3.17 GHz) running the Windows 7 operating system.

Example 1

Figure 2 illustrates the convergence of the barycentric prolate interpolation formulas for the two analytic functions:

and

For each n, the error is defined by

which is measured at 1000 random points in [− 1,1]. As we can see, the convergence is exponential and is almost indistinguishable for different c. Moreover, it is shown that the optimal c depends on the function being approximated [7]. In the following, we will take c = n/2 for general functions, which is recommended in [30, 32].

Example 2

For the functions:

and

we focus on the comparison of the new barycentric prolate interpolation (c = n/2) (3.15) with the standard interpolation (2.8) in terms of the approximation error in \(L^{\infty }\) norm, which is measured at 1000 random points in [− 1,1]. Numerical results are shown in Figs. 3 and 4. It is seen that the errors for these approaches decrease very fast. Furthermore, the barycentric prolate interpolation has better stability than that of the Lagrange formulation for a large number of points.

Example 3

For the wave functions \(f(x)=\frac {\sin (25x)}{2-x^{2}}\) and \(f(x)=(\cos (25x)+\sin (x))/(1+4x^{2})\), we compare the barycentric prolate interpolation (c = n/2) with the barycentric interpolation in the polynomial case, whose nodes and barycentric weights are computed in the chebfun system by the command legpts [24]. Figure 5 illustrates that the barycentric prolate interpolation yields spectrally accurate results using even fewer points than barycentric interpolation in the polynomial case.
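The error measurement used in these examples (maximum error over 1000 random points in [−1,1]) can be sketched for the polynomial reference case. The sketch assumes Chebyshev-Lobatto nodes with their simplified barycentric weights rather than the Legendre points returned by legpts, and it does not compute the prolate interpolant. The helper names and the fixed random seed are hypothetical choices.

```python
import random
from math import cos, pi, sin

def bary_eval(x, nodes, values, weights):
    """Barycentric quotient evaluated at a single point x."""
    num = den = 0.0
    for xj, fj, wj in zip(nodes, values, weights):
        if x == xj:
            return fj
        t = wj / (x - xj)
        num += t * fj
        den += t
    return num / den

def f(x):
    # first test function of Example 3
    return sin(25.0 * x) / (2.0 - x * x)

def max_error(n, n_samples=1000, seed=0):
    """Maximum of |f - p_n| over random sample points in [-1, 1]."""
    # Assumption: Chebyshev-Lobatto nodes, weights (-1)^j halved at the ends.
    nodes = [-cos(pi * j / n) for j in range(n + 1)]
    weights = [(-1.0) ** j * (0.5 if j in (0, n) else 1.0) for j in range(n + 1)]
    values = [f(xj) for xj in nodes]
    rng = random.Random(seed)
    samples = [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]
    return max(abs(f(x) - bary_eval(x, nodes, values, weights)) for x in samples)

errors = {n: max_error(n) for n in (20, 40, 80)}
```

With only 21 points the oscillation sin(25x) is not resolved, so the error for n = 20 is expected to be far larger than for n = 80.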

Example 4

We compare the absolute errors of the derivatives for

at Prolate-Gauss-Lobatto points by the barycentric prolate differentiation (3.18)–(3.19) and the standard method (2.9)–(2.10). Results of these calculations are shown in Fig. 6. As can be seen, since the standard method (2.10) involves the first-order derivative values, it causes error propagation for large n. The prolate barycentric differentiation performs well, which motivates the applications below.

6 Application

Unlike the usual collocation scheme using the standard Lagrange differentiation, barycentric prolate differentiation (3.18)–(3.19), combined with the usual spectral collocation method and GMRES, has been implemented and tested on a highly oscillatory problem and a two-dimensional Helmholtz problem. The comparison with the CC points-based method is reminiscent when the solution is highly oscillatory.

Example 5

The second example is one where the solution is very oscillatory

The exact solution is

The behavior of the prolate barycentric differentiation matrix is demonstrated in Fig. 7. It is clear that this method is rapidly convergent and stable, which is better than the usual collocation method based on CC points.

Example 6

We extend the barycentric prolate pseudospectral method to 2D Helmholtz problem [25], which arises in the analysis of wave propagation:

where u = 0 on the boundary and k is a real parameter. For such a problem, we set up a grid based on Prolate-Gauss-Lobatto points independently in each direction, called a tensor product grid. We solve the problem for the particular choices k = 9 and \(f(x,y)=\exp (-10[(y-1)^{2}+(x-1/2)^{2}])\). The solution appears as a mesh plot in Fig. 8. Whereas the value u(0,0) computed on the Chebyshev grid [25] is accurate to nine digits when N = 24, the new barycentric prolate differentiation scheme (3.19) achieves eleven digits of accuracy with the same number of points. On the right side of Fig. 8, the absolute error at u(0,0) is illustrated for N = 4 : 2 : 38, which shows the fast convergence rate at Prolate-Gauss-Lobatto points.
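The tensor product construction can be illustrated by applying the discrete Helmholtz operator to grid values. The sketch below is a minimal illustration under stated assumptions: it uses Chebyshev-Lobatto nodes and weights instead of prolate ones, it only applies the operator (no boundary conditions, no linear solve, no GMRES), and the names `second_diff_matrix` and `helmholtz_apply` are hypothetical.

```python
from math import cos, pi

def second_diff_matrix(nodes, weights):
    """Second-order differentiation matrix from nodes and barycentric weights."""
    n = len(nodes)
    D2 = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # off-diagonal first-derivative entries of row i
        d1 = [0.0 if j == i else (weights[j] / weights[i]) / (nodes[i] - nodes[j])
              for j in range(n)]
        d1_ii = -sum(d1)
        for j in range(n):
            if j != i:
                D2[i][j] = 2.0 * d1[j] * (d1_ii - 1.0 / (nodes[i] - nodes[j]))
        D2[i][i] = -sum(D2[i])   # diagonal entry is still zero at this point
    return D2

def helmholtz_apply(U, D2, k):
    """Return u_xx + u_yy + k^2 u on the tensor grid, with U[i][j] = u(x_i, y_j)."""
    n = len(D2)
    out = [[k * k * U[i][j] for j in range(n)] for i in range(n)]
    for i in range(n):
        for j in range(n):
            out[i][j] += sum(D2[i][m] * U[m][j] for m in range(n))   # u_xx
            out[i][j] += sum(D2[j][m] * U[i][m] for m in range(n))   # u_yy
    return out

# Assumption: the same Chebyshev-Lobatto nodes are used in both directions.
N = 6
nodes = [-cos(pi * j / N) for j in range(N + 1)]
weights = [(-1.0) ** j * (0.5 if j in (0, N) else 1.0) for j in range(N + 1)]
D2 = second_diff_matrix(nodes, weights)

k = 9.0
U = [[x * x * y * y for y in nodes] for x in nodes]   # u(x, y) = x^2 y^2
LU = helmholtz_apply(U, D2, k)
```

For u(x,y) = x²y² the exact result is 2y² + 2x² + k²x²y², which gives a quick consistency check of the two-directional application.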

Example 7

We consider

with the exact solution \(u(x)=e^{(x^{2}-1)/2}\). Table 1 compares the condition numbers and errors of the spectral collocation (SC) scheme (4.40), the direct preconditioned (M-PC) scheme (4.41), and the new basis preconditioned collocation (B-PC) scheme (4.42). We also show the number of iterations needed to solve the systems by GMRES. Table 1 clearly indicates that the two preconditioned schemes are well-conditioned and that the new basis preconditioned collocation (B-PC) scheme performs as desired.

7 Conclusion

In this paper, we have developed a new scheme for prolate interpolation and prolate spectral differentiation. The solver is based on barycentric interpolation, which allows for stable approximation, and the error analysis of barycentric prolate interpolation and differentiation is given. Moreover, a new preconditioning technique is proposed for the usual prolate-collocation scheme. The numerical examples demonstrate the performance of the proposed algorithms.

References

1. Berrut, J., Trefethen, L.: Barycentric Lagrange interpolation. SIAM Rev. 46(3), 501–517 (2004)

2. Beylkin, G., Monzon, L.: On generalized Gaussian quadratures for exponentials and their applications. Appl. Comput. Harmon. Anal. 12(3), 332–373 (2002)

3. Beylkin, G., Sandberg, K.: Wave propagation using bases for bandlimited functions. Wave Motion 41(3), 263–291 (2005)

4. Boyd, J.: Multipole expansions and pseudospectral cardinal functions: a new generalization of the fast Fourier transform. J. Comput. Phys. 103, 184–186 (1992)

5. Boyd, J.: Prolate spheroidal wavefunctions as an alternative to Chebyshev and Legendre polynomials for spectral element and pseudospectral algorithms. J. Comput. Phys. 199(2), 688–716 (2004)

6. Boyd, J.: Algorithm 840: computation of grid points, quadrature weights and derivatives for spectral element methods using prolate spheroidal wave functions - prolate elements. ACM Trans. Math. Software 31(1), 149–165 (2005)

7. Chen, Q., Gottlieb, D., Hesthaven, J.: Spectral methods based on prolate spheroidal wave functions for hyperbolic PDEs. SIAM J. Numer. Anal. 43(5), 1912–1933 (2005)

8. Dutt, A., Gu, M., Rokhlin, V.: Fast algorithms for polynomial interpolation, integration, and differentiation. SIAM J. Numer. Anal. 33(5), 1689–1711 (1996)

9. Floater, M., Hormann, K.: Barycentric rational interpolation with no poles and high rates of approximation. Numer. Math. 107(2), 315–331 (2007)

10. Hale, N., Townsend, A.: Fast and accurate computation of Gauss-Legendre and Gauss-Jacobi quadrature nodes and weights. SIAM J. Sci. Comput. 35(2), A652–A674 (2013)

11. Higham, N.: The numerical stability of barycentric Lagrange interpolation. IMA J. Numer. Anal. 24(4), 547–556 (2004)

12. Glaser, A., Liu, X., Rokhlin, V.: A fast algorithm for the calculation of the roots of special functions. SIAM J. Sci. Comput. 29(4), 1420–1438 (2007)

13. Kong, W., Rokhlin, V.: A new class of highly accurate differentiation schemes based on the prolate spheroidal wave functions. Appl. Comput. Harmon. Anal. 33(2), 226–260 (2012)

14. Kovvali, N., Lin, W., Carin, L.: Pseudospectral method based on prolate spheroidal wave functions for frequency-domain electromagnetic simulations. IEEE Trans. Antennas Propag. 53(12), 3990–4000 (2005)

15. Kovvali, N., Lin, W., Zhao, Z., Couchman, L., Carin, L.: Rapid prolate pseudospectral differentiation and interpolation with the fast multipole method. SIAM J. Sci. Comput. 28(2), 485–497 (2006)

16. Lin, W.: Theory and Applications of Biorthogonal Ridgelets and Prolate Spheroidal Wave Functions. Ph.D. thesis, Duke University, Durham NC (2005)

17. Liu, G., Xiang, S.: Fast multipole methods for approximating a function from sampling values. Numer. Algor. 76(3), 727–743 (2017)

18. Osipov, A., Rokhlin, V., Xiao, H.: Prolate Spheroidal Wave Functions of Order Zero. Springer, Berlin (2013)

19. Shen, J., Tang, T., Wang, L.: Spectral Methods: Algorithms, Analysis and Applications. Springer, Berlin (2011)

20. Slepian, D.: Prolate spheroidal wave functions, Fourier analysis, and uncertainty - V: the discrete case. Bell System Tech. J. 57(5), 1371–1430 (1978)

21. Slepian, D., Pollak, H.O.: Prolate spheroidal wave functions, Fourier analysis and uncertainty - I. Bell System Tech. J. 40(1), 43–63 (1961)

22. Tian, Y., Xiang, S.: On convergence rates of prolate interpolation and differentiation. Appl. Math. Lett. 94, 250–256 (2019)

23. Tian, Y., Xiang, S., Liu, G.: Fast computation of the spectral differentiation by the fast multipole method. Comput. Math. Appl. 78(1), 240–253 (2019)

24. Trefethen, L.: Approximation Theory and Approximation Practice. SIAM, Philadelphia (2013)

25. Trefethen, L.: Spectral Methods in MATLAB. SIAM, Philadelphia (2000)

26. Wang, H., Huybrechs, D., Vandewalle, S.: Explicit barycentric weights for polynomial interpolation in the roots or extrema of classical orthogonal polynomials. Math. Comp. 83(290), 2893–2914 (2014)

27. Wang, H., Xiang, S.: On the convergence rates of Legendre approximation. Math. Comp. 81(278), 861–877 (2012)

28. Wang, L.: Analysis of spectral approximations using prolate spheroidal wave functions. Math. Comp. 79(270), 807–827 (2009)

29. Wang, L., Samson, M., Zhao, X.: A well-conditioned collocation method using a pseudospectral integration matrix. SIAM J. Sci. Comput. 36(3), A907–A929 (2014)

30. Wang, L.: A review of prolate spheroidal wave functions from the perspective of spectral methods. J. Math. Study 50(2), 101–143 (2017)

31. Wang, L., Zhang, J.: A new generalization of the PSWFs with applications to spectral approximations on quasi-uniform grids. Appl. Comput. Harmon. Anal. 29(3), 303–329 (2010)

32. Wang, L., Zhang, J., Zhang, Z.: On hp-convergence of prolate spheroidal wave functions and a new well-conditioned prolate-collocation scheme. J. Comput. Phys. 268, 377–398 (2014)

33. Werner, W.: Polynomial interpolation: Lagrange versus Newton. Math. Comp. 43(167), 205–217 (1984)

34. Xiao, H., Rokhlin, V., Yarvin, N.: Prolate spheroidal wavefunctions, quadrature and interpolation. Inverse Problems 17(4), 805–838 (2001)

35. Zhang, Z.: Superconvergence points of polynomial spectral interpolation. SIAM J. Numer. Anal. 50(6), 2966–2985 (2012)

Acknowledgments

I am deeply grateful to Prof. Li-Lian Wang, Prof. Shuhuang Xiang and Prof. Hui-yuan Li for valuable comments on the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China with grant No. U1930402.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tian, Y. Barycentric prolate interpolation and pseudospectral differentiation. Numer Algor 88, 793–811 (2021). https://doi.org/10.1007/s11075-020-01057-7

DOI: https://doi.org/10.1007/s11075-020-01057-7