Abstract

A recently developed algorithm for computing consistent initial values and Taylor coefficients for DAEs using projector-based constrained optimization opens new possibilities to apply Taylor series integration methods. In this paper, we show how corresponding projected explicit and implicit Taylor series methods can be adapted to DAEs of arbitrary index. Owing to our formulation as a projected optimization problem constrained by the derivative array, no explicit description of the inherent dynamics is necessary, and various Taylor integration schemes can be defined in a general framework. In particular, we address higher-order Padé methods that stand out due to their stability. We further discuss several aspects of our prototype implemented in Python using Automatic Differentiation. The methods have been successfully tested on examples arising from multibody systems simulation and a higher-index DAE benchmark arising from servo-constraint problems.

1 Introduction

Higher-index DAEs represent not only integration problems but also differentiation problems (see, e.g., [4, 22, 23, 28]). Therefore, it seems worthwhile to solve an associated ODE with classical integration schemes and the differentiation problems using Automatic Differentiation (AD). However, depending on the structure, differentiations and integrations may be intertwined in a complex manner, such that this plausible idea may be difficult to realize in general.

In this context, different approaches have been considered for DAEs in order to combine AD with ODE integration schemes. By construction, the approaches are based on corresponding index definitions and lead, therefore, to quite different algorithms.

- In [26, 27] and the related work, the structural index was used to determine the degree of freedom.

- In [13], we used the tractability matrix sequence to solve the inherent ODE for DAEs of index up to two. The generalization to higher-index DAEs seemed rather complicated.

- In [17], we described briefly how an approach based on the differentiation index defined in [14, 16] leads to explicit Taylor series methods for DAEs. An analysis of the corresponding projected explicit ODE can be found in [18]. These methods can be considered as projected Taylor series methods.

In this paper, we analyze more general classes of the last-mentioned projected Taylor series methods. In particular, we discuss how projected implicit Taylor series methods can be defined for DAEs, generalizing the approach from [17]. Here we focus on the methods from [7, 21].

The main idea in this context is that the computation of Taylor coefficients of a solution of an implicit ODE can be considered as the solution of a nonlinear system of equations. In this sense, we will see that a generalization for DAEs can be obtained by solving a nonlinear optimization problem [16]. The obtained solution corresponds to a projected method. There are several advantages of this approach:

- We assume weak structural properties of the DAEs (1), such that ODEs and semi-explicit DAEs are simple special cases. Theoretically, we can consider DAEs of any index.

- An explicit description of the inherent dynamics is not required for the algorithmic realization. Indeed, due to our formulation as an optimization problem, implicitly we consider the orthogonally projected explicit ODE introduced in [18].

- We can use higher-order integration schemes, also for stiff ODEs/DAEs.

The described methods were implemented in a prototype and first numerical tests for DAEs up to index 5 and integration schemes up to order 8 were successful.

The purpose of our implementation is the improvement of our diagnosis software [17]. Therefore, we do not focus on high performance, but on information about the reliability of the numerical result, especially with regard to higher-index issues and the diagnosis of singularities as in [19]. Due to their stability and order properties, the new higher-order methods presented here are a considerable improvement in comparison to [17], where only explicit Taylor series methods were considered.

The paper is organized as follows. In Section 2, we introduce DAEs and summarize some of our previous results that are crucial for the approach presented here.

The properties of Taylor coefficients in the derivative array of a DAE are discussed in Section 3. Using these properties, in Section 4, we define the general projected explicit/implicit Taylor series methods, which are, indeed, a generalization of the explicit method from [17].

Within this framework, in Section 5, we present four different types of projected methods: explicit methods, fully implicit methods, two-halfstep (TH) schemes, and higher-order Padé (HOP) schemes.

The properties of the considered optimization problems that provide the projection are discussed in Section 6 and some practical considerations for the implementation are addressed in Section 7.

Our prototype implementation of the proposed projected methods for DAEs is tested on several well-known examples and benchmarks from the literature in Section 8. An outlook discussing directions for further investigations concludes this paper.

For completeness, in the Appendix, we summarize the stability functions and stability regions for the considered Taylor series methods, since they are essential for the development of HOP methods. We also summarize some linear algebra results for decoupling DAEs and provide the DAE formulation of the tested examples resulting from servo-constraint problems for multi-body systems.

2 DAEs: index, consistent values, and decoupling

In this article, we consider general DAEs:

$$ f(x^{\prime}(t), x(t), t) = 0 \tag{1} $$

for \(f: \mathcal G_{f} \rightarrow {\mathbb {R}}^{n}\), \(\mathcal G_{f} \subset {\mathbb {R}}^{n} \times {\mathbb {R}}^{n} \times {\mathbb {R}}\), where the partial Jacobian \(f_{x^{\prime }}\) is singular and \(\ker f_{x^{\prime }}(x^{\prime },x,t)\) is constant. For our purposes, we define the constant orthogonal projector Q onto \(\ker f_{x^{\prime }}\) as well as the complementary orthogonal projector P := I − Q.

Recall that the singularity of \( f_{x^{\prime }}\) means that (1) contains derivative-free equations, called explicit constraints, and that the differentiation of (1) may lead to further derivative-free equations, called hidden constraints. A consistent initial value x0 has to fulfill all explicit and hidden constraints. The characterization of all these constraints motivated the following definition for the differentiation index, cf. [4].

Definition 1

[14] The differentiation index is the smallest integer μ such that:

$$ f(x^{\prime},x,t) = 0, \tag{2} $$

$$ \frac{\mathrm{d}}{\mathrm{d}t} f(x^{\prime},x,t) = 0, \tag{3} $$

$$ \vdots $$

$$ \frac{\mathrm{d}^{\mu-1}}{\mathrm{d}t^{\mu-1}} f(x^{\prime},x,t) = 0 \tag{4} $$

uniquely determines Qx as a function of (Px,t).

If μ is the differentiation index according to Definition 1, then the conventional differentiation index (see, e.g., [4]) is μ as well. According to this definition, in the following we will never prescribe initial values for Qx0, since we may compute Qx0 by evaluating a function at (Px0,t0). Moreover, in the higher-index case, Eqs. (2)–(4) contain explicit and hidden constraints that restrict the choice for Px0.

According to [16], for an initial guess \(\alpha \in {\mathbb {R}}^{n}\), consistent initial values x0 can be computed solving the following constrained optimization problem:

Equivalently, we can solve the system of equations:

for a suitable orthogonal projector π with rank π = d ≤ rank P, where d is the degree of freedom of (1), cf. [15]. However, in particular for nonlinear DAEs, π may be difficult to compute and therefore, in practice, (5) may be more convenient than (7), cf. the Appendix. The approach (5)–(6) was implemented in InitDAE, a Python program to determine the index and consistent initial values for DAEs [12, 17].

Furthermore, for linear DAEs of the form:

in [18], it was shown that using the derivative array (2)–(4), the decoupling:

can be obtained for suitable functions φ1,φ2, where (10) is an ODE in the invariant subspace im π. Therefore, theoretically, we can set up (10) and solve it with an integration scheme for ODEs. Subsequently, (I −π)x can be computed at each time point using (11). Notice that in doing so, the error in (I −π)x depends only on the error made solving (10) and the properties of φ2 from (11). Moreover, the values of (I −π)x at previous time points do not influence (10).

For nonlinear DAEs, analogous considerations can be undertaken considering the linearization along a solution. However, of course, the properties of f are decisive in practice. Since a detailed analysis for the nonlinear case goes far beyond the scope of this article, we focus on a general formulation of projected Taylor methods using (5)–(6), having in mind that at present, the theoretical basis has been developed for linear DAEs only. At least the numerical tests from Section 8 suggest the applicability for some classes of nonlinear DAEs.

3 Taylor series and DAEs

Since we wish to analyze one-step methods, we consider the computation of an approximation of the solution x(t) of the ODE/DAE (1) at time \(t_{j+1}\), given an approximation of the solution at time-point \(t_{j}\). Consequently, in order to describe our method in terms of Taylor expansion coefficients, for \(k_{c} \in {\mathbb {N}}\), we suppose that a suitable approximation:

is given and that we look at adequate methods to compute:

If we suppose that the ODE/DAE is described by (1), we require that:

are fulfilled. For our purposes, given K ≥ 1, we further consider the order (K − 1) derivative array [4] containing (1) and K − 1 derivatives of (1):

Therefore, we suppose that for the corresponding function:

it holds:

and

In practice, the function r can be provided by automatic differentiation (AD) [17, 31].
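To illustrate how Taylor coefficients of a residual can be propagated, the following minimal sketch uses hand-written truncated Taylor arithmetic on the scalar ODE \(x^{\prime} = x^{2}\). It is not the AD tool used in the prototype; the helper names `taylor_mul`, `taylor_derivative`, and `residual` are hypothetical, and the example equation is chosen only because its solution \(x(t) = 1/(1-t)\) has the simple coefficients \(c_{k} = 1\) at t = 0.

```python
from fractions import Fraction

def taylor_mul(a, b):
    """Cauchy product of two truncated Taylor series of equal length."""
    n = len(a)
    return [sum(a[i] * b[k - i] for i in range(k + 1)) for k in range(n)]

def taylor_derivative(c):
    """Coefficients of x'(t) from those of x(t): (x')_k = (k + 1) * c_{k+1}."""
    return [(k + 1) * c[k + 1] for k in range(len(c) - 1)]

def residual(c):
    """Taylor coefficients of f(x', x) = x' - x*x along the series with coefficients c.

    With K + 1 coefficients c_0..c_K this gives K entries, i.e. f and its
    first K - 1 total derivatives, each scaled by 1/k!.
    """
    dx = taylor_derivative(c)             # length K
    xx = taylor_mul(c, c)[:len(dx)]       # truncate to the same length
    return [d - s for d, s in zip(dx, xx)]

K = 5
c_exact = [Fraction(1)] * (K + 1)         # x(t) = 1/(1 - t) expanded at t = 0
r_exact = residual(c_exact)               # all entries vanish

c_bad = list(c_exact)
c_bad[2] = Fraction(3, 2)                 # perturb one coefficient
r_bad = residual(c_bad)                   # now some entries are nonzero
```

Exact rational arithmetic is used here only to make the vanishing residual visible without rounding effects.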

Using this notation, the index from Definition 1 is the smallest integer μ such that for K = μ, the derivative array r uniquely determines Qc0 as a function of (Pc0,t). For this purpose, the 1-fullness of the Jacobian of r is verified in [16, 17], cf. the Appendix. We emphasize that the main difference to the conventional differentiation index [4] is precisely that for 1-fullness the columns corresponding to c0 (and not c1) are considered. With this index definition in mind, we can define consistency for the Taylor coefficients.

Definition 2

For K ≥ μ and 0 ≤ kc ≤ K − μ, the Taylor coefficients up to kc are consistent if they are in the set:

Note that \(\mathcal {T}_{0}^{0}\) corresponds to the set of consistent initial values and that if sufficient smoothness of f is given, we can suppose that for all \(c_{0} \in \mathcal {T}_{0}^{0}\) in regularity regions, there is a unique solution of the initial value problem. For a discussion of regularity regions and singularities within a projector-based analysis, we refer to [14, 23].

For sufficiently smooth regular linear DAEs (9), Theorem 1 from [18] implies that for any \(c_{0} \in \mathcal {T}_{0}^{0}\), there is a unique solution fulfilling x(t0) = c0. Analogously, for \([(c_{0})_{j}, (c_{1})_{j},\ldots , (c_{k_{c}})_{j} ] \in \mathcal {T}_{k_{c}}^{j}\), there exists a unique solution x(t) such that \( \frac {x^{(k)}}{k !}(t_{j}) =(c_{k})_{j}\), 0 ≤ k ≤ kc. Indeed, Theorem 1 from [18] provides a general description of the inherent dynamics in terms of the associated orthogonally projected explicit ODE (10).

Let us focus on the relation between kc, K, the DAE-index μ and the computation of consistent initial values and Taylor coefficients at a particular t0 considering:

- For initial value problems x(t0) = c0 for ODEs \(g(x^{\prime }(t),x(t),t)=0\) with regular \(g_{x^{\prime }}\), if we consider (17), then we can compute K consistent coefficients:

  $$ (c_{1})_{0}, (c_{2})_{0}, (c_{3})_{0},\ldots, (c_{K})_{0}, $$

  at t0, since c0 is given. In the above notation, the maximal value for kc is kc = K.

- If we consider a uniquely solvable nonlinear time-dependent equation g(x(t),t) = 0 with regular gx and a corresponding system of equations (17), then, at t0, we cannot prescribe c0 and therefore compute the K consistent coefficients:

  $$ (c_{0})_{0}, (c_{1})_{0}, (c_{2})_{0},\ldots, (c_{K-1})_{0}. $$

  In this case, the maximal value is kc = K − 1. For the coefficients (cK)0, no equations are given, since in this particular case, they do not appear in (17). Note that in principle, g(x,t) = 0 can be considered an index-1 DAE. In this sense, it fits into the case below.

- For DAEs (1), if we consider (17) and fix the free initial conditions of c0, then in general, we may compute K + 1 − μ consistent coefficients:

  $$ (c_{0})_{0}, (c_{1})_{0}, (c_{2})_{0},\ldots, (c_{K-\mu})_{0}, \tag{18} $$

  cf. [17]. In this case, we have at most kc = K − μ. In general, the coefficients cK+1−μ,…,cK cannot be computed considering (17). Another crucial aspect is that not all components of c0 can be prescribed, since all the constraints have to be satisfied.

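Note that according to (5)–(6), for an arbitrary initial guess α that, in general, may be not consistent, the optimization problem:

$$ \min \quad \left\| P\left( (c_{0})_{0}-\alpha\right) \right\|_{2} \tag{19} $$

$$ \text{subject to} \quad r((c_{0})_{0}, (c_{1})_{0}, (c_{2})_{0},\ldots, (c_{K})_{0},t_{0})=0 \tag{20} $$

provides consistent initial values (18). Moreover, in terms of (7)–(8), this minimization problem is equivalent to the system of equations: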

where π describes an appropriate orthogonal projector, and the rank of π coincides with the number of degrees of freedom of the DAE [15, 18]. Note further that, in general, the coefficients [(cK+1−μ)0,…,(cK)0] are uniquely determined neither by (19)–(20) nor by (21)–(22). In our implementation from [17], the minimum norm solution \([(\tilde {c}_{K+1-\mu })_{0},\ldots , (\tilde {c}_{K})_{0} ]\) is computed.

Example 1

Consider the index-4 DAE:

with the general solution:

For this clearly structured example, the projector-based approach will lead to:

This means that Qx corresponds to x2 and according to the notation introduced in [18], the EOPE-ODE (the essential orthogonally projected ODE describing the dynamics) that corresponds to (10) will be formulated in terms of x1.

Let us take a closer look at the derivative array with respect to the index determination and the computation of consistent initial values, both related to the computation of π, see the Appendix.

According to (12), for n = 5 and K = 4, we consider:

such that the equations on the left, that are formulated for functions as described in (13), correspond to the equations on the right, that can be formulated at some t = tj for the scalar numbers \(c_{k \ell }=\frac {x_{k}^{(\ell )}(t_{j};C)}{\ell !}\) (cf. (14)):

The colors visualize the chains of calculation by which each entry of (I −π)c0 is uniquely determined by the equations r = 0, cf. (15). In particular, the red equations permit the computation of Qc0, since:

Since no representation of c20 is possible with less differentiations, the index is μ = 4. Moreover, the violet, blue, and green expressions provide values for components of Pc0, in particular c50, c40, and c30, respectively. This means that we cannot prescribe initial values for (P −π)c0.

Indeed, the EOPE-ODE reads:

Summarizing, we see that to compute (c0)0, we have to prescribe a value for x1(t0) and consider at least derivatives of order up to three (with K = μ = 4) in the derivative array, i.e., r((c0)0,…,(c3)0,(c4)0,t0) = 0.

We emphasize that the gray items must vanish in order to satisfy r = 0, but for K = μ − 1, they do not uniquely determine all coefficients of (cℓ)0 for any ℓ > 0. This means that the value for kc from Definition 2 is kc = 0 = K − μ.

If we increase the number of derivatives with K = μ + 1 = 5 and consider r((c0)0,(c1)0,(c2)0,…,(c5)0,t0) = 0 together with an initial value for c10, then correct values for (c0)0 and (c1)0 can be computed. In general, for K ≥ μ, consistent (c0)0,…,(cK−μ)0 can be obtained.

Since the approach (19)–(20) does not compute the projector π explicitly and the resulting nonlinear systems of equations are under-determined, they are solved in a minimum-norm sense. Therefore, the solver used obtains values for all higher derivatives, whereas we cut off \((\tilde {c}_{K-\mu +1})_{0}, \ldots , (\tilde {c}_{K})_{0}\), since only (c0)0,…,(cK−μ)0 are consistent in the sense of Definition 2.

In Table 1, we present the results of the computation of consistent initial values with InitDAE [12] that solves (19)–(20). For the considered initial value x1(0) = 1, the solution is \(x_{1}(t)=\cosh (t)\). We can see that for K = 5, only the Taylor coefficients (c0)0 and (c1)0 are consistent, i.e., kc = 1 = K − μ. Increasing K, correspondingly more consistent Taylor coefficients could be computed.

The numerical solution delivered by the methods defined in the following corresponds to:

- the numerical solution obtained by Taylor series methods applied to the projected explicit ODE (10) for πx, and

- corresponding values for the components (I −π)x that result from (11).

Therefore, the stability and order properties of the integration methods defined below can be transferred from ODEs to DAEs. Due to the formulation as an optimization problem, the inherent dynamics of the DAE that can be expressed in terms of πx is not considered explicitly, but implicitly.

4 General definition of explicit/implicit methods

Recall that:

- P = π = I holds for ODEs and that, therefore, for ODEs, the approach (19)–(20) means to compute the Taylor coefficients if c0 is prescribed.

- We assumed that \( \ker f_{x^{\prime }}(c_{1},c_{0},t) \) and therefore also P do not depend on (c1,c0,t). Therefore, the Taylor coefficients of Px(t) at tj correspond to:

  $$ [P(c_{0})_{j}, P(c_{1})_{j}, P(c_{2})_{j},\ldots, P(c_{k_{c}})_{j} ]. $$

With these two properties in mind, we can present a very general formulation for implicit and explicit Taylor series methods for ODEs and DAEs defining suitable objective functions instead of (19).

In a first step, we focus on consistency.

Lemma 1

Consider:

\(k_{e}, k_{i} \in {\mathbb {N}}\), 0 ≤ ke,ki ≤ K and weights \(\omega _{\ell _{e}}^{e}, \omega _{\ell _{i}}^{i} \in {\mathbb {R}}\) to define the objective function

Then for any solution:

of the minimization problem:

the values:

are consistent at tj+ 1 for all ki ≤ K − μ.

Proof

According to Definition 2, the coefficients (29) are consistent, since the constraints (28) are fulfilled. Recall further that, under suitable assumptions, the solvability of (27)–(28) follows from the Definition 1 of the index μ and has been discussed in [16]. □

Corollary 1

Consider linear DAEs (9) and suppose that consistent values:

are given. Consider further an integration method defined by (27)–(28) for suitable weights \(\omega _{\ell _{e}}^{e}, \omega _{\ell _{i}}^{i}\). Then the following two approaches provide the same consistent results (29):

- the solution (27)–(28) for the ODE (10) that is invariant in the subspace im π, and the subsequent computation of the remaining components according to (11).

Proof

On the one hand, according to (21)–(22), the solution of (27)–(28) for the original DAE delivers the same result (29) as:

On the other hand, the derivative array (31) for the DAE contains the derivative array of the ODE (10) and the nonlinear equations (11). □

With the notation of the objective function, different Taylor integration methods can be described with corresponding weights \(\omega _{\ell _{e}}^{e}\), \(\omega _{\ell _{i}}^{i}\). This provides a very flexible way to implement schemes with different order and stability properties.

5 Projected Taylor integration methods

5.1 Explicit Taylor series method for DAEs

In terms of the above notation, the explicit Taylor series method for ODEs corresponds to ke ≥ 1, \(\omega _{\ell _{e}}^{e}=1\) for 0 ≤ ℓe ≤ ke, ki = 0, \({\omega _{0}^{i}}=1\). Recall that the approach from [17] for DAEs consists of the following steps:

- Initialization: Solve the optimization problem:

  $$ \min \quad \left\| P\left( (c_{0})_{0}-\alpha\right) \right\|_{2} \tag{32} $$

  $$ \text{subject to} \quad r((c_{0})_{0}, (c_{1})_{0}, (c_{2})_{0},\ldots, (c_{K})_{0},t_{0})=0, \tag{33} $$

  for an initial guess α.

- For time-points \(t_{j+1}\), j ≥ 0, \(h_{j} = t_{j+1} - t_{j}\): Solve the optimization problems:

  $$ \min \quad \Big\| P \Big((c_{0})_{j+1}-\underbrace{\sum\limits_{\ell=0}^{k_{e}} (c_{\ell})_{j} h_{j}^{\ell}}_{\approx x(t_{j}+h_{j})}\Big) \Big\|_{2} , \tag{34} $$

  $$ \text{subject to} \quad r((c_{0})_{j+1}, (c_{1})_{j+1}, (c_{2})_{j+1},\ldots, (c_{K})_{j+1},t_{j+1})=0 , \tag{35} $$

  successively for ke ≤ K − μ, where ke is the order of the series in tj.

This method is called explicit, since (34) is an explicit equation for \((c_{0})_{j+1}\) that does not involve any value \((c_{\ell})_{j+1}\) for ℓ ≥ 1. In contrast to explicit ODEs, where Taylor coefficients may be obtained by function evaluation, with this approach for DAEs in general, we have to solve a nonlinear system of equations. Therefore, it seems reasonable to consider also implicit Taylor approximations in the integration scheme.
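For the ODE special case P = I, the minimizer of the explicit objective is simply the truncated Taylor sum, so the step can be sketched without any optimizer. The following illustration, assuming the scalar test equation \(x^{\prime} = \lambda x\) and hypothetical helper names, performs explicit Taylor steps of order ke and estimates the observed order from two stepsizes. It does not cover the DAE case, where the constrained problem has to be solved.

```python
import math

def taylor_coeffs_linear(lam, x0, k):
    """Taylor coefficients c_l = x^(l)/l! of the solution of x' = lam*x through x0."""
    c = [x0]
    for l in range(k):
        c.append(lam * c[l] / (l + 1))    # from (l + 1) * c_{l+1} = lam * c_l
    return c

def explicit_taylor(lam, x0, t_end, n_steps, ke):
    """Integrate x' = lam*x on [0, t_end] with constant stepsize and order ke."""
    h = t_end / n_steps
    x = x0
    for _ in range(n_steps):
        c = taylor_coeffs_linear(lam, x, ke)
        x = sum(c[l] * h**l for l in range(ke + 1))   # (c_0)_{j+1} for P = I
    return x

lam, x0, t_end, ke = -1.0, 1.0, 1.0, 3
exact = x0 * math.exp(lam * t_end)
err_h = abs(explicit_taylor(lam, x0, t_end, 10, ke) - exact)
err_h2 = abs(explicit_taylor(lam, x0, t_end, 20, ke) - exact)
observed_order = math.log2(err_h / err_h2)   # expected to be close to ke
```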

5.2 Fully implicit Taylor series methods for DAEs

The implicit counterpart of the explicit Taylor series method for ODEs corresponds to ki ≥ 1, \(\omega _{\ell _{i}}^{i}=1\) for 0 ≤ ℓi ≤ ki, ke = 0, \({\omega _{0}^{e}}=1\). Our generalization for DAEs consists, therefore, of the following steps.

- For time-points \(t_{j+1}\), j ≥ 0, \(h_{j} = t_{j+1} - t_{j}\): Solve the optimization problems:

  $$ \min \quad \Big\| P \Big(\underbrace{\sum\limits_{\ell=0}^{k_{i}} (c_{\ell})_{j+1} (-h_{j})^{\ell}}_{\approx x(t_{j+1}-h_{j})}-(c_{0})_{j}\Big) \Big\|_{2} \tag{36} $$

  $$ \text{subject to} \quad r((c_{0})_{j+1}, (c_{1})_{j+1}, (c_{2})_{j+1},\ldots, (c_{K})_{j+1},t_{j+1})=0 , \tag{37} $$

  successively for ki ≤ K − μ, where ki is the order at \(t_{j+1}\).

Obviously, if, instead of (34) and (36), more general conditions of the type (27) are considered, then the dimension of the system that has to be solved remains equal. Therefore, it seems natural to consider more general schemes with better convergence and stability properties than the explicit and the fully implicit Taylor series methods.
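The difference between the explicit and the fully implicit variant can be made concrete on the scalar test equation \(x^{\prime} = \lambda x\) with P = I. The sketch below is an illustration under that assumption (function names are hypothetical): inserting \(c_{\ell} = \lambda^{\ell} c_{0}/\ell!\) into the two objectives gives one amplification factor per step for each method, evaluated here for a stiff choice of \(z = h\lambda\).

```python
import math

def explicit_factor(z, ke):
    """x_{j+1} / x_j for the explicit Taylor method of order ke, z = h*lam."""
    return sum(z**l / math.factorial(l) for l in range(ke + 1))

def implicit_factor(z, ki):
    """x_{j+1} / x_j for the fully implicit Taylor method of order ki.

    From sum_l (c_l)_{j+1} (-h)^l = x_j with (c_l)_{j+1} = lam^l x_{j+1} / l!.
    """
    return 1.0 / sum((-z)**l / math.factorial(l) for l in range(ki + 1))

lam, h, k = -1000.0, 0.01, 2
z = h * lam                              # z = -10
amp_explicit = explicit_factor(z, k)     # 1 - 10 + 50 = 41, grows each step
amp_implicit = implicit_factor(z, k)     # 1 / (1 + 10 + 50) = 1/61, decays
```

For this stepsize the explicit step amplifies the solution although the exact solution decays, while the implicit step damps it.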

5.3 Two-halfstep explicit/implicit schemes

One straightforward combination of the explicit and implicit integration schemes is to approximate x(tj + σhj) = x(tj+ 1 − (1 − σ)hj) for 0 ≤ σ ≤ 1 as follows:

and equate the expressions from both right-hand sides. The properties of the methods (38)–(39) are described in [21]. The choice \(\sigma = \frac {1}{2}\), which can be interpreted as a generalization of the trapezoidal rule, turns out to be convenient. For ke = ki, \(\sigma = \frac {1}{2}\), it coincides with the one tested in [2, 9].

Remark 1

Note that another closely related implicit/explicit scheme is described in the literature, see, e.g., [29]. There, the first step is implicit and the second one explicit, in contrast to the approach from above. According to the extensive analysis from [29], \(\sigma = \frac {1}{2}\) is convenient also in that case. However, for 0 < σ < 1, these methods are less suitable for our DAE-scheme since the Taylor coefficients would be considered at tj + σhj, whereas the constraints have to be fulfilled at tj and tj+ 1.

In the notation from Section 4, choosing \(\sigma = \frac {1}{2}\) in (38)–(39) means to consider:

for 0 ≤ ke,ki ≤ K − μ, i.e.:

For shortness, we denote these two-halfstep methods by (ke,ki)-TH.

Recall that, in general, the stability function R(z) (cf. the Appendix) of a (ke,ki)-TH method is not a Padé approximation of the exponential function. Consequently, the maximally achievable order of the integration method for fixed ke and ki is not given for these particular weights in general. Therefore, further higher-order schemes for stiff ODEs have been developed, namely the HOP-methods described in [7].
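The loss of order can be checked with exact rational arithmetic. The sketch below assumes that, for \(\sigma = \frac{1}{2}\) and ke = ki, the two half steps contribute the weights \((1/2)^{\ell}\), so that on \(x^{\prime} = \lambda x\) the stability function is the quotient of two truncated exponential series in \(\pm z/2\). This is an inference from the two-halfstep description, not a formula reproduced from the text, and the helper names are hypothetical. The code counts how many power-series coefficients of R(z) agree with those of \(e^{z}\).

```python
from fractions import Fraction
from math import factorial

def th_polys(ke, ki):
    """Numerator/denominator coefficients of R(z), assuming weights (1/2)**l."""
    num = [Fraction(1, 2**l * factorial(l)) for l in range(ke + 1)]
    den = [Fraction((-1)**l, 2**l * factorial(l)) for l in range(ki + 1)]
    return num, den

def series_quotient(num, den, n):
    """First n + 1 power-series coefficients of num(z)/den(z), den[0] != 0."""
    num = num + [Fraction(0)] * (n + 1 - len(num))
    den = den + [Fraction(0)] * (n + 1 - len(den))
    q = []
    for k in range(n + 1):
        s = num[k] - sum(den[i] * q[k - i] for i in range(1, k + 1))
        q.append(s / den[0])
    return q

def matching_order(ke, ki, n=10):
    """Largest p such that R(z) agrees with exp(z) through the term z**p."""
    q = series_quotient(*th_polys(ke, ki), n)
    p = 0
    while p + 1 <= n and q[p + 1] == Fraction(1, factorial(p + 1)):
        p += 1
    return p

# Orders for ke = ki = 1, 2, 3 under the stated weight assumption.
orders = {k: matching_order(k, k) for k in (1, 2, 3)}
```

Under this assumption the (2,2) scheme matches the exponential only through \(z^{2}\), whereas a (2,2)-Padé approximation matches through \(z^{4}\).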

5.4 Higher-order Padé methods

According to [7], HOP may be interpreted as Hermite-Obrechkoff-Padé or higher-order Padé. The corresponding integration schemes may be considered as implicit Taylor series methods based on Hermite quadratures.

In our notation, a (ke,ki)-HOP scheme means choosing:

These coefficients correspond to the (ke,ki)-Padé approximation of the exponential function such that the stability function R(z) is precisely this approximation, see the Appendix. Indeed, (ke,ki)-HOP methods have the following properties, cf. [7]:

- the order of consistency is ke + ki,

- the order of the local error is ke + ki + 1,

- they are A-stable for ki − 2 ≤ ke ≤ ki,

- they are L-stable for ki − 2 ≤ ke ≤ ki − 1.

Note that also in this case, the trapezoidal rule corresponds to ke = ki = 1 and the implicit Euler method to ke = 0, ki = 1. In this sense, the methods with ke = ki could be viewed as a generalization of the trapezoidal rule and those with ke = ki − 1 as a generalization of the implicit Euler method, cf. [7].
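The special cases named above can be reproduced from the standard closed form of the Padé approximants of the exponential function. The sketch below assumes that the HOP weights, written for scaled Taylor coefficients \(c_{\ell} = x^{(\ell)}/\ell!\), are \(\omega_{\ell} = \frac{k!\,(k_{e}+k_{i}-\ell)!}{(k_{e}+k_{i})!\,(k-\ell)!}\) with k = ke or k = ki. This is the textbook Padé formula with the factor \(1/\ell!\) absorbed into \(c_{\ell}\), stated here as an assumption; `pade_weights` and `stability_function` are hypothetical names.

```python
from fractions import Fraction
from math import factorial

def pade_weights(ke, ki):
    """Assumed weights for (c_l)_j * h**l (explicit) and (c_l)_{j+1} * (-h)**l (implicit)."""
    s = ke + ki
    omega_e = [Fraction(factorial(ke) * factorial(s - l),
                        factorial(s) * factorial(ke - l)) for l in range(ke + 1)]
    omega_i = [Fraction(factorial(ki) * factorial(s - l),
                        factorial(s) * factorial(ki - l)) for l in range(ki + 1)]
    return omega_e, omega_i

def stability_function(ke, ki, z):
    """R(z) on x' = lam*x with z = h*lam, using c_l * h**l = z**l / l! * x."""
    omega_e, omega_i = pade_weights(ke, ki)
    num = sum(w * z**l / factorial(l) for l, w in enumerate(omega_e))
    den = sum(w * (-z)**l / factorial(l) for l, w in enumerate(omega_i))
    return num / den

trapezoidal = stability_function(1, 1, Fraction(1, 2))   # (1 + z/2) / (1 - z/2)
implicit_euler = stability_function(0, 1, Fraction(-3))  # 1 / (1 - z)
damping_12 = abs(stability_function(1, 2, -1.0e6))       # tends to 0 for ke < ki
damping_22 = abs(stability_function(2, 2, -1.0e6))       # tends to 1 for ke = ki
```

The last two values illustrate the different behavior for large negative z that separates L-stable from merely A-stable schemes.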

In Section 8, we numerically verify the outstanding properties of these methods.

6 Properties of the minimization problems

In [16], we analyzed the properties of the minimization problem obtained when computing consistent initial values. That analysis can directly be applied to the explicit Taylor series method, cf. [17]. To appreciate the properties for implicit methods (i.e., ki > 0), we define, for \(k \geq \max \limits \left \{k_{e},k_{i}\right \}\), the matrices:

assuming \(\omega _{\ell _{i}}=0\) for ℓi > ki and \(\omega _{\ell _{e}}=0\) for ℓe > ke, and the vectors:

With this notation, we write:

Therefore, as in [16], for α := TeXj, X := Xj+ 1, we consider the objective function:

Observe that the matrix:

is, by construction, positive semi-definite. However, it is not an orthogonal projector in general. Therefore, Theorem 1 and Corollary 1 of [16] cannot be applied directly. Hence, the solvability of the optimization problem is more difficult than for explicit Taylor methods. More precisely, we want to emphasize that, for:

the nullspaces:

may be different. However, since \(\widetilde {P}_{i}\) depends on hj, it is reasonable to assume that a suitable stepsize hj can be found such that the optimization problem becomes solvable in the sense discussed in [16].

7 Some practical considerations

7.1 Dimension of the nonlinear systems solved in each step

For a given \(K \in {\mathbb {N}}\), the Lagrange approach for solving (27)–(28) leads to a nonlinear system of equations of dimension 2n ⋅ (K + 1), cf. [16]. Thereby, consistent coefficients:

are obtained. In contrast, the coefficients cK−μ+ 1,…,cK will not be consistent in general and the introduced Lagrange-multipliers are not even of interest.

However, increasing K by one means solving a nonlinear system containing 2n additional variables and equations.

7.2 Setting ke and ki in a simple implementation

Dealing with automatic differentiation (AD), the number \(K \in {\mathbb {N}}\) has to be prescribed a priori in order to consider (K + 1) Taylor coefficients. Since 0 ≤ ke,ki ≤ K − μ must be given in general, for the (ke,ki) TH and HOP methods, we set:

by default. We further tested ki := K − μ, ke := ki − 1 for HOP methods. So far, we considered schemes with constant order and stepsize only.

7.3 Jacobian matrices

To solve the optimization problems (27)–(28) numerically, we provide the corresponding Jacobians.

- The Jacobian of the constraints (28) is described in [17], since it is also used for the computation of consistent initial values.

- To describe the Jacobian of the objective function (27), which is a gradient, we define:

  $$ q\left( (c_{0})_{j+1},\ldots, (c_{k_{i}})_{j+1}\right):= \left\|p \left( (c_{0})_{j+1},\ldots, (c_{k_{i}})_{j+1}\right)\right\|_{2} $$

  and realize that:

  $$ \frac{\partial q}{ \partial (c_{\ell_{i}})_{j+1}} = \frac{1}{q\left( (c_{0})_{j+1},\ldots, (c_{k_{i}})_{j+1}\right)}\left( p\left( (c_{0})_{j+1},\ldots, (c_{k_{i}})_{j+1}\right)\right)^{T} \cdot \omega_{\ell_{i}}^{i}\cdot \left( -h_{j} \right)^{\ell_{i}}, $$

  for q ≠ 0, 0 ≤ ℓi ≤ ki.
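The gradient formula can be checked against a finite difference. The sketch below assumes that p has the form \(p = P\big(\sum_{\ell} \omega^{i}_{\ell} (c_{\ell})_{j+1} (-h)^{\ell} - \sum_{\ell} \omega^{e}_{\ell} (c_{\ell})_{j} h^{\ell}\big)\) with a diagonal 0/1 projector P. All data values and function names are hypothetical and serve only to exercise the formula.

```python
import math

def p_vec(c_new, c_old, w_i, w_e, h, P_diag):
    """Projected defect p for a diagonal 0/1 projector given by P_diag."""
    out = []
    for m, proj in enumerate(P_diag):
        s = sum(w_i[l] * c_new[l][m] * (-h) ** l for l in range(len(w_i)))
        s -= sum(w_e[l] * c_old[l][m] * h ** l for l in range(len(w_e)))
        out.append(proj * s)
    return out

def q_and_grad(c_new, c_old, w_i, w_e, h, P_diag):
    """q = ||p||_2 and dq/d(c_l)_{j+1} = p^T * w_i[l] * (-h)^l / q, for q != 0."""
    p = p_vec(c_new, c_old, w_i, w_e, h, P_diag)
    q = math.sqrt(sum(v * v for v in p))
    if q == 0.0:
        raise ValueError("gradient formula requires q != 0")
    grad = [[p[m] * w_i[l] * (-h) ** l / q for m in range(len(p))]
            for l in range(len(w_i))]
    return q, grad

# Hypothetical data: n = 2, second component lies in the kernel of P.
P_diag = [1.0, 0.0]
w_i, w_e, h = [1.0, 0.5], [1.0, 0.5], 0.1
c_old = [[1.0, 2.0], [0.5, -1.0]]
c_new = [[1.2, 0.3], [0.4, 0.7]]
q, grad = q_and_grad(c_new, c_old, w_i, w_e, h, P_diag)

# Central finite difference with respect to the first component of (c_1)_{j+1}.
eps = 1e-6
c_plus = [row[:] for row in c_new]
c_minus = [row[:] for row in c_new]
c_plus[1][0] += eps
c_minus[1][0] -= eps
fd = (q_and_grad(c_plus, c_old, w_i, w_e, h, P_diag)[0]
      - q_and_grad(c_minus, c_old, w_i, w_e, h, P_diag)[0]) / (2 * eps)
```

Because p already contains the projector, components in the kernel of P contribute zero entries to the gradient.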

8 Numerical tests

8.1 Order validation

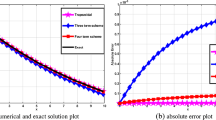

To visualize the order of the methods, we integrate Example 1 in the interval \(\left [0,1 \right ]\) with different stepsizes. The results can be found in Fig. 1. On the left-hand side, we show the results for the index-4 DAE. On the right-hand side, we report the results obtained for the corresponding ODE described in (25).

Fig. 1 Stepsize-error diagram for the error \(\left |x_{1}(1)-\cosh (1)\right |\) for the DAE (left) and the essential ODE (right) corresponding to Example 1 for different methods and ftol for the module minimize from SciPy. For ke, ki = 2, we included graphs of \(Ch^{p}\) for p = 2,3,4 to show the order of the methods. The crossing of the (4,4)-HOP and (3,4)-HOP methods for the DAE (left) is probably due to rounding errors, since the error is about \(10^{-14}\)

Summarizing, we observe that:

- For ki,ke ≤ 1, the methods coincide with the explicit and implicit Euler methods or the trapezoidal rule. Therefore, the graphs coincide up to effects resulting from rounding errors.

- The similarity of the overall behavior for the DAE and the ODE is remarkable.

- As expected, the HOP methods are considerably more accurate due to the higher order.

- For small h and large ke,ki, scaling and rounding errors impede more accurate results, depending on the tolerance ftol from the module minimize from SciPy.

8.2 Numerical test for examples from the literature

8.2.1 Validation of known results

We report numerical results obtained by the methods (3,3)-HOP and (4,4)-HOP for the following examples from the literature:

- Extended mass-on-car from [25], see the Appendix,

- Pendulum index 3, which can be found in almost all introductions to DAEs, in the form reduced to first order, with the positive y axis pointing upwards and the parameters m = 1.0, l = 1.0, and g = 1.0. (x1,x2) are the coordinates, (x3,x4) the corresponding velocities and x5 the Lagrange parameter. In our computation, the system starts from rest at 45 degrees to the vertical.

- Car axis index 3 formulation with all parameters as given in [24]. In order to avoid a disadvantageous scaling of the Taylor coefficients, we changed the independent variable t to τ = 10t. This is advantageous, since the time-dependent input-function is \(y_{b}=0.1\sin \limits (10 \ t)\). For large K, the corresponding higher Taylor coefficients lead to considerable scaling differences that are avoided by the substitution with τ. For the details of our reformulation, we refer to [17].
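The scaling effect of the substitution τ = 10t can be seen directly on the input function. The sketch below is an illustration only (the expansion point 0.3 and the function name are hypothetical): it compares the Taylor coefficients of \(0.1\sin(10t)\) expanded in t with those of the same function expanded in τ.

```python
import math

def sin_taylor(amplitude, omega, t0, K):
    """Taylor coefficients c_k = y^(k)(t0)/k! of y(t) = amplitude*sin(omega*t)."""
    return [amplitude * omega**k * math.sin(omega * t0 + k * math.pi / 2)
            / math.factorial(k) for k in range(K + 1)]

K = 8
c_t = sin_taylor(0.1, 10.0, 0.3, K)     # expansion in t at t0 = 0.3
c_tau = sin_taylor(0.1, 1.0, 3.0, K)    # same function in tau = 10 t at tau0 = 3

max_t = max(abs(v) for v in c_t)        # grows like 0.1 * 10**k / k!
max_tau = max(abs(v) for v in c_tau)    # bounded by 0.1
```

Each coefficient in t is \(10^{k}\) times the corresponding coefficient in τ, which explains the scaling differences for large K.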

For all examples (see also Table 2), we used ftol for the tolerance of the module minimize from SciPy. To estimate the error, we considered the difference between the results obtained by (3,3)-HOP and (4,4)-HOP.

All tests confirmed the applicability of the method. The solution graphs look identical to those given in the literature [24, 25, 30]. The graphs of the estimated errors of the (3,3)-HOP methods in Fig. 2 confirm the order expectations.

Since it is obvious that our implementation is not competitive with respect to runtime (see Table 3), we have not made a systematic comparison with other solvers here.

8.2.2 A challenging index 5 DAE

We consider now the index-5 example from [26] resulting from a model of two pendula, where the Lagrangian multiplier λ1 of the first one controls the length of the second one:

Note that in this formulation from [26] the positive y axis is pointing downwards. The DAE has index 5 and four degrees of freedom. For the numerical tests, we use the gravity constant g = 1, the length of the first pendulum L = 1, the parameter c = 0.1 and the interval [0,80]. In [26], this example was integrated with constant stepsize h = 0.05 and order k = 7 as well as h = 0.025 and order k = 8. For the component x2, the two solutions were very close until about t = 30 and clearly diverging from there to about t = 50 and totally unrelated from t = 55 on.

Our implementation leads to very good results in the sense that the two solutions differ only slightly up to much larger t. We compare the solutions for K = 9 (with HOP method ki = ke = 4 and order 8) and K = 8 (with HOP method ki = ke = 3 and order 6) for the (numerically) consistent initial value:

The quality of our results is visualized for x2 in Fig. 3. For slightly perturbed initial values for x2 and y2, the obtained solutions behave analogously. For arbitrarily perturbed initial values, convergence difficulties during the computation of consistent initial values may appear.

Fig. 3 Difference of the result obtained with (3,3)-HOP with h = 0.05 and (4,4)-HOP with h = 0.025 for the component x2 of the index-5 example from Section 8.2.2 in the interval [0,40] (left) and [0,80] (right)

8.2.3 Andrews squeezing mechanism

Finally, we want to report here the behavior we obtained for a well-known index-3 benchmark problem with an extreme scaling. According to [20, 24], the problem is of the form:

for \(q \in {\mathbb {R}}^{7}, \lambda \in {\mathbb {R}}^{6}\). To our surprise, using for α the initial value given in the literature, which leads there to a dynamic behavior, our computation of consistent initial values delivers a stationary solution, such that all our integration methods provide the same constant solution for:

and all other components are (numerically) equal to zero. Therefore, we explain here why this happened.

First of all, we want to mention that the indicated condition number, introduced in [14], corresponding to the DAE at α is about \(10^{11}\), such that a clear hint to the scaling difficulties is given. In contrast, at the given stationary solution, the condition number is about \(10^{6}\).

To simplify further considerations, we notice that the last four equations of g(p) = 0 are used to compute Φ,δ,Ω,𝜖, such that we can neglect them and consider only g1,2 and f1,2,3 to determine β,Θ,γ,λ1,2. For a constant solution v = w = 0, the relevant equations are therefore:

At the equilibrium point corresponding to (41)–(42), the constant drive torque mom, the spring force f3, and the Lagrangian forces are equalized.

Therefore, it only remains to explain why the approach (19)–(20) delivers a stationary solution. Due to the high condition number, the Lagrange multipliers λ are numerically difficult to compute. In fact, for other numerical computations, the accuracy for λ is not controlled [20, p. 536ff]. In contrast, if no (numerical) full row rank of the Jacobian \(\tilde {G}\) is given, then (19)–(20) computes a minimal norm solution [15], which in this case minimizes the values for λ, leading to the stationary solution. To our knowledge, this stationary solution was not reported before in the DAE literature.
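How a minimal norm solution favors small values for badly scaled components can be seen in a toy analogy that is unrelated to the actual equations of the squeezing mechanism. The sketch below assumes a single linear equation y + ελ = 1 with a tiny coefficient ε. The function `min_norm_solution` and all values are hypothetical.

```python
def min_norm_solution(a, b):
    """Minimum-norm solution of the single equation sum(a[i] * x[i]) == b,
    i.e. x = a * b / (a . a) (pseudoinverse of a one-row matrix)."""
    aa = sum(ai * ai for ai in a)
    return [ai * b / aa for ai in a]

# Toy equation y + eps * lam = 1 with a badly scaled multiplier column.
eps = 1e-8
y, lam = min_norm_solution([1.0, eps], 1.0)
print(y, lam)   # lam is of size eps, although (y, lam) = (0, 1/eps) solves the equation too
```

Among all solutions of the underdetermined equation, the one of minimal norm assigns almost nothing to the component that enters with a tiny coefficient.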

We plan further investigations on this unexpected behavior. Indeed, for some perturbed initial values, we obtained a solution that converges towards the stationary solution (41)–(42). Moreover, with scaled equations and very different initial values, a nonconstant solution that behaves like the one described in [20, 24] has been obtained.

9 Summary and future work

In this article, we presented a projection approach that permits the extension of explicit/implicit Taylor integration schemes from ODEs to DAEs. As a result, we obtained higher-order methods that can directly be applied also to higher-index DAEs. The methods are relatively easy to implement using InitDAE and convenient since, thanks to the formulation as an optimization problem, the inherent dynamics of the DAE are considered indirectly. We analyzed in detail explicit, fully implicit, two-halfstep (TH) and higher-order-Padé (HOP) methods. In particular, HOP methods show excellent stability and order properties.

The results obtained by a prototype in Python that is based on InitDAE [12] exceed our expectations, in particular with regard to the accuracy for higher-index DAEs, cf. Section 8.2.2. Until now, our focus was on the extension from ODEs to DAEs in order to use higher-order and A-stable methods with InitDAE for our diagnosis purposes during the integration to monitor singularities [17]. With these promising first results, we think that further developments of these projected methods are worthwhile.

In fact, at present, our implementation is not competitive by far. One reason is that setting up the nonlinear equations (27)–(28) and the corresponding Jacobians with AlgoPy, cf. [31], is still very costly. If equations (27)–(28) and the corresponding Jacobians are supplied in a more efficient way, competitive solvers might be achieved. At present, we do not even consider the sparsity of matrices. Furthermore, an improvement seems likely if we take advantage of specific structural properties, e.g., solving subsystems step-by-step, cf. [10, 11]. Another reason for our high computational costs is that the package minimize from SciPy often performs more iterations than we expected (often more than 30), although a good initial guess computed with the explicit Taylor series method is given in general. This behavior has to be inspected in more detail. For linear systems, a direct implementation considering the projector π from (21) (or, more precisely, a corresponding basis) should deliver an efficient algorithm. This could be of interest, e.g., for the applications from [25, 30]. Last but not least, competitive solvers require adaptive order and stepsize strategies—a broad field for future work.

Although these algorithms open new possibilities to integrate higher-index DAEs, we want to emphasize that, in practice, a high index is often due to modelling assumptions that should be considered very carefully. The dependencies on higher derivatives should always be well founded.

Change history

16 February 2021

Family name presentation of the 1st Author

References

Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions with Formulas Graphs and Mathematical Tables. Dover, New York (1972)

Akishin, P.G., Puzynin, I.V., Vinitsky, S.I.: A hybrid numerical method for analysis of dynamics of the classical Hamiltonian systems. Comput. Math. Appl. 34(2-4), 45–73 (1997)

Barrio, R.: Performance of the Taylor series method for ODEs/DAEs. Appl. Math. Comput. 163(2), 525–545 (2005)

Brenan, K.E., Campbell, S.L., Petzold, L.R.: Numerical Solution of Initial-Value Problems in Differential-Algebraic Equations. Unabridged, corr. republ. Classics in Applied Mathematics, vol. 14. SIAM Society for Industrial and Applied Mathematics, Philadelphia (1996)

Campbell, S.L.: The numerical solution of higher index linear time varying singular systems of differential equations. SIAM J. Sci. Stat. Comput. 6, 334–348 (1985)

Campbell, S.L., Gear, C.W.: The index of general nonlinear DAEs. Numer. Math. 72(2), 173–196 (1995)

Corliss, G.F., Griewank, A., Henneberger, P., Kirlinger, G., Potra, F.A., Stetter, H.J.: High-order stiff ODE solvers via automatic differentiation and rational prediction. In: Vulkov, L., Waśniewski, J., Yalamov, P. (eds.) Numerical Analysis and Its Applications. WNAA 1996. Lecture Notes in Computer Science, vol. 1196, pp 114–124 (1997)

Deuflhard, P., Bornemann, F.: Numerical mathematics 2. Ordinary differential equations. (Numerische Mathematik 2 Gewöhnliche Differentialgleichungen.) 4th revised and augmented ed. de Gruyter Studium, Berlin (2013)

Dimova, S.N., Hristov, I.G., Hristova, R.D., Puzynin, I.V., Puzynina, T.P., Sharipov, Z.A., Shegunov, N.G., Tukhliev, Z.K.: Combined explicit-implicit Taylor series methods. In: Proceedings of the VIII International Conference “Distributed Computing and Grid-technologies in Science and Education” (GRID 2018), Dubna, Moscow region, Russia, September 10–14 (2018)

Estévez Schwarz, D.: A step-by-step approach to compute a consistent initialization for the MNA. Int. J. Circuit Theory Appl. 30(1), 1–6 (2002)

Estévez Schwarz, D.: Consistent initialization for DAEs in Hessenberg form. Numer. Algorithms 52(4), 629–648 (2009)

Estévez Schwarz, D., Lamour, R.: InitDAE’s documentation. Available from: https://www.mathematik.hu-berlin.de/~lamour/software/python/InitDAE/html/

Estévez Schwarz, D., Lamour, R.: Projector based integration of DAEs with the Taylor series method using automatic differentiation. J. Comput. Appl. Math. 262, 62–72 (2014)

Estévez Schwarz, D., Lamour, R.: A new projector based decoupling of linear DAEs for monitoring singularities. Numer. Algorithms 73(2), 535–565 (2016)

Estévez Schwarz, D., Lamour, R.: Consistent initialization for higher-index DAEs using a projector based minimum-norm specification. Technical Report 1. Institut für Mathematik, Humboldt-Universität zu Berlin (2016)

Estévez Schwarz, D., Lamour, R.: A new approach for computing consistent initial values and Taylor coefficients for DAEs using projector-based constrained optimization. Numer. Algorithms 78(2), 355–377 (2018)

Estévez Schwarz, D., Lamour, R.: InitDAE: Computation of consistent values, index determination and diagnosis of singularities of DAEs using automatic differentiation in Python. J. Comput. Appl. Math. https://doi.org/10.1016/j.cam.2019.112486 (2019)

Estévez Schwarz, D., Lamour, R.: A projector based decoupling of DAEs obtained from the derivative array. In: Progress in Differential-Algebraic Equations II, Differential-Algebraic Equations Forum (DAE-F) (2020)

Estévez Schwarz, D., Lamour, R., März, R.: Singularities of the Robotic Arm DAE. Progress in Differential-Algebraic Equations II, Differential-Algebraic Equations Forum (DAE-F) (2020)

Hairer, E., Wanner, G.: Solving Ordinary Differential Equations II. Springer (1996)

Kirlinger, G., Corliss, G.F.: On implicit Taylor series methods for stiff ODEs. In: Computer Arithmetic and Enclosure Methods. Proceedings of the 3rd International IMACS-GAMM Symposium on Computer Arithmetic and Scientific Computing (SCAN-91), Oldenburg, Germany, 1-4 October 1991, pp 371–379, Amsterdam (1992)

Kunkel, P., Mehrmann, V.: Differential-Algebraic Equations - Analysis and Numerical Solution. EMS Publishing House, Zürich (2006)

Lamour, R., März, R., Tischendorf, C.: Differential-Algebraic Equations: A Projector Based Analysis. Differential-Algebraic Equations Forum, vol. 1. Springer, Berlin (2013)

Mazzia, F., Magherini, C.: Test set for initial value problems, release 2.4. Technical report, Department of Mathematics, University of Bari and INdAM, Research Unit of Bari, February 2008. Available from: http://pitagora.dm.uniba.it/~testset

Otto, S., Seifried, R.: Applications of Differential-Algebraic Equations: Examples and Benchmarks, chapter Open-loop Control of Underactuated Mechanical Systems Using Servo-constraints: Analysis and Some Examples. Differential-Algebraic Equations Forum. Springer, Cham (2019)

Pryce, J.D.: Solving high-index DAEs by Taylor series. Numer. Algorithms 19(1–4), 195–211 (1998)

Pryce, J.D., Nedialkov, N.S., Tan, G., Li, X.: How AD can help solve differential-algebraic equations. Optim. Methods Softw. 33(4–6), 729–749 (2018)

Riaza, R.: Differential-Algebraic Systems. Analytical Aspects and Circuit Applications. World Scientific, Hackensack (2008)

Scott, J.R.: Solving ODE Initial Value Problems with Implicit Taylor Series Methods. Technical report NASA/TM-2000-209400 (2000)

Seifried, R., Blajer, W.: Analysis of servo-constraint problems for underactuated multibody systems. Mech. Sci. 4, 113–129 (2013)

Walter, S.F., Lehmann, L.: Algorithmic differentiation in Python with AlgoPy. J. Comput. Sci. 4(5), 334–344 (2013)

Funding

Open Access funding enabled and organized by Projekt DEAL


Appendices

Appendix A: Stability functions and stability regions of Taylor series methods

A.1 Stability functions

The general definition (27)–(28) allows for a straightforward description of the stability function. Applied to ODEs (and therefore P = I), the stability function \(R: \mathbb {C}\rightarrow \mathbb {C}\) results if we consider the test-ODE:

and describe the numerical method for constant h = hj in terms of:

For ODEs, the methods described in Section 4 imply:

and, for the test-equation (43), we obtain from:

the relationship:

i.e., for \(z=h_{j} \lambda \in {\mathbb {C}}\):

A.2 Stability regions

The corresponding stability regions can thus be characterized by:

For the methods discussed in this article, we obtain:

-

Explicit Taylor:

$$ R^{E}_{k_{e},0}(z)=\sum_{\ell_{e}=0}^{k_{e}}\frac{z^{\ell_{e}}}{\ell_{e} !}. $$

The corresponding stability regions are illustrated in Fig. 4 (top), cf. also [3, 20].

-

Fully Implicit Taylor:

$$ R^{FI}_{0,k_{i}}(z)=\frac{1}{\sum_{\ell_{i}=0}^{k_{i}}(-1)^{\ell_{i}}\frac{z^{\ell_{i}}}{\ell_{i}!}}. $$

The corresponding stability regions are illustrated in Fig. 4 (bottom).

-

For the two-halfstep explicit/implicit schemes (40), we obtain:

$$ R^{TH}_{k_{e},k_{i}}(z)=\frac{\sum\limits_{\ell_{e}=0}^{k_{e}} \frac{1}{\ell_{e} !} \left( \frac{z}{2}\right)^{\ell_{e}} } {\sum\limits_{\ell_{i}=0}^{k_{i}} \frac{1}{\ell_{i} !} \left( -\frac{z}{2} \right)^{\ell_{i} }}. $$

The corresponding stability regions for ke,ki = 0,…,6 are represented in Fig. 5.

Fig. 4 Stability regions of the explicit (top) and fully implicit (bottom) Taylor methods

Fig. 5 Colored stability regions S of two-halfstep schemes considering \(R_{k_{e},k_{i}}\) for all combinations of ke,ki = 0,…,6, where ki corresponds to the rows and ke to the columns. The symmetry with respect to the main diagonal of the images results from (44). We observe that, for ki = ke = 5,6, the schemes are not A-stable. This contradicts the statement in [21].

Note that the symmetry is due to:

$$ R^{TH}_{k_{e},k_{i}}(-z)=\frac{1}{R^{TH}_{k_{i},k_{e}}(z)}. \tag{44} $$

The schemes with ke ≤ ki seem to be A-stable or A(α)-stable for moderate α. Indeed, according to [2, 9], the schemes provide good results for Hamiltonian systems if ke = ki. Furthermore, A-stable schemes with ke < ki are L-stable, since:

$$ \lim_{z \rightarrow -\infty } \left| R^{TH}_{k_{e},k_{i}}(z) \right| =0 \quad \text{for} \quad k_{e} < k_{i}. $$

-

For HOP-methods, from:

$$ \begin{array}{@{}rcl@{}} \omega^{e}_{\ell_{e}}&:=& \frac{k_{e} ! (k_{e}+k_{i}-\ell_{e})!}{(k_{e}+k_{i})!(k_{e}-\ell_{e})!}, \quad \ell_{e} =0, \ldots, k_{e},\\ \omega^{i}_{\ell_{i}}&:=& \frac{k_{i} ! (k_{e}+k_{i}-\ell_{i})!}{(k_{e}+k_{i})!(k_{i}-\ell_{i})!}, \quad \ell_{i} =0, \ldots, k_{i}, \end{array} $$

we obtain:

$$ R^{\text{HOP}}_{k_{e},k_{i}}(z)=\frac{k_{e} !}{k_{i} !} \frac{\sum\limits_{\ell_{e}=0}^{k_{e}} \frac{1}{\ell_{e} !} \frac{ (k_{e}+k_{i}-\ell_{e})!}{(k_{e}-\ell_{e})!} z^{\ell_{e}}} {\sum\limits_{\ell_{i}=0}^{k_{i}} (-1)^{\ell_{i}} \frac{1}{\ell_{i} !} \frac{(k_{e}+k_{i}-\ell_{i})!}{(k_{i}-\ell_{i})!} z^{\ell_{i}}}. \tag{45} $$

Moreover, analogously as before, we have:

$$ R^{\text{HOP}}_{k_{e},k_{i}}(-z)=\frac{1}{R^{\text{HOP}}_{k_{i},k_{e}}(z)}. \tag{46} $$

Therefore, in Fig. 6, we again obtain symmetric stability regions that are in accordance with the stability properties reported in Section 5.4.
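The weights and the resulting stability function of the HOP methods can be checked with exact rational arithmetic. The following minimal sketch assumes only the formulas for \(\omega^{e}_{\ell_e}\), \(\omega^{i}_{\ell_i}\) and (45) stated above. The function names `hop_weights` and `r_hop` are hypothetical and not part of our prototype.

```python
from fractions import Fraction
from math import factorial

def hop_weights(ke, ki):
    """Weights omega^e_l (l = 0..ke) and omega^i_l (l = 0..ki) as exact fractions."""
    def omega(k, l):
        return Fraction(factorial(k) * factorial(ke + ki - l),
                        factorial(ke + ki) * factorial(k - l))
    return ([omega(ke, l) for l in range(ke + 1)],
            [omega(ki, l) for l in range(ki + 1)])

def r_hop(z, ke, ki):
    """R^HOP_{ke,ki}(z) assembled from the weights; equivalent to (45)."""
    we, wi = hop_weights(ke, ki)
    num = sum(w * z**l / factorial(l) for l, w in enumerate(we))
    den = sum(w * (-z)**l / factorial(l) for l, w in enumerate(wi))
    return num / den

we, wi = hop_weights(3, 3)
print(we)                                  # weights of the (3,3)-HOP method
z = Fraction(1, 3)
print(r_hop(-z, 2, 1) * r_hop(z, 1, 2))    # symmetry (46): the product is exactly 1
```

For ke = ki = 1, the sketch reproduces the stability function (1 + z/2)/(1 − z/2) of the trapezoidal rule.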

Fig. 6 Colored stability regions S of HOP-methods considering \(R_{k_{e},k_{i}}\) for all combinations of ke,ki = 0,…,6, where ki corresponds to the rows and ke to the columns. The symmetry with respect to the main diagonal of the images results from (46). For ke = ki, we observe \(S={\mathbb {C}}^{-}\)

Since we obtained (44) and (46) for TH and HOP methods with k = ke = ki, it holds for these methods that:

According to Lemma 6.20 from [8] and to the representations of the stability regions from Fig. 5, \(R_{k,k}^{TH}\) seems to have poles in \({\mathbb {C}}^{-}\) in general.
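The different behavior of the two-halfstep schemes for k = ke = ki can be made plausible with a crude numerical scan. The sketch below evaluates \(|R^{TH}_{k,k}|\) on the imaginary axis and on a coarse grid in the left half-plane. The grid, the box, and the function names are arbitrary illustrative choices, and such a scan is of course not a proof.

```python
from math import factorial

def taylor_exp(z, k):
    """Degree-k Taylor polynomial of exp(z), i.e. R^E_{k,0}(z)."""
    return sum(z**l / factorial(l) for l in range(k + 1))

def r_th(z, ke, ki):
    """Two-halfstep stability function R^TH_{ke,ki}(z)."""
    return taylor_exp(z / 2, ke) / taylor_exp(-z / 2, ki)

def max_abs_in_left_half_plane(k, step=0.05, re_min=-2.0, im_max=8.0):
    """Largest |R^TH_{k,k}(z)| on a grid with re_min <= Re z < 0 and
    0 <= Im z <= im_max (the lower half follows by conjugation)."""
    n_re, n_im = round(-re_min / step), round(im_max / step)
    return max(abs(r_th(complex(-step * i, step * j), k, k))
               for i in range(1, n_re + 1) for j in range(n_im + 1))

for k in range(1, 7):
    on_axis = abs(r_th(3.0j, k, k))        # modulus at one point of the imaginary axis
    print(k, on_axis, max_abs_in_left_half_plane(k))
```

For k = 5, the scan finds values far above 1 close to a pole in the left half-plane, while for k = 4 all sampled values stay below 1.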

Appendix B: Linear algebra toolbox for DAEs

According to [6], a linear system of equations:

is 1-full with respect to s1, if s1 is uniquely determined for any consistent b, cf. also [5]. Since we focus on properties of the matrix \(\mathcal {A}\), according to [16], we prefer the following equivalent formulation:

For our analysis of DAEs, we focus on 1-full matrices \(\mathcal {A}\) of the following particular form:

for an orthogonal projector P. For DAEs, \(\mathcal {G}_{1}, \mathcal {G}_{2}\) result from the derivative array and P is the orthogonal projector introduced in Section 2.

Let W2 denote an arbitrary matrix fulfilling \(\ker W_{2} = \text {im } \mathcal {G}_{2}\). Then, for \(N:=W_{2} \mathcal {G}_{1}\), the 1-fullness of (48) implies:

For linear DAEs, N results from the constraints.

Let further W denote an arbitrary matrix fulfilling \(\ker W = \text {im } NQ\), such that WN = WNP. Then, the orthogonal projector π onto:

fulfills \(\pi = P - (WN)^{+}(WN)\).

For linear DAEs, this projector π allows for the formulation of the orthogonally projected explicit ODE (10) in the invariant subspace im π, cf. Theorem 1 in [18]. In practice, we can avoid the explicit computation of the orthogonal projector π by considering the constrained optimization problem (5)–(6) instead of (7)–(8).

Appendix C: Linear examples from Section 8.2

The following two examples, which result from servo-constraint problems for multibody systems, are linear DAEs of the form:

C.1 Example: mass-on-car

The DAE resulting from the spring-mass system mounted on a car from [30] corresponds to:

for \(x=(x_{1},s,v_{x_{1}},v_{s},F)\). We used the parameters m1 = 1.0, m2 = 2.0, k = 5.0, d = 1.0, \(\alpha =\frac {5}{180}\pi \). yd is a predefined trajectory for the position of the mass m2 and reads:

for y0 = 0.5, yf = 2.5, \(t_{\max}=6.0\), and the polynomial p9 described in Appendix C.3.

For \( 0 <\alpha < \frac {\pi }{2}\), the DAE-index is 3 and the projector π from (21) reads:

i.e., it depends on α only and is independent of the other parameters.

C.2 Example: extended mass-on-car system

The DAE resulting from the extension of the mass-on-car system described in [25] corresponds to:

for \(x=(x_{1},s_{1},s_{2}, v_{x_{1}}, v_{s_{1}},v_{s_{2}}, F)\) and with

for z0 = 1.0, zf = 4.0, \(t_{\max}=15.0\), and the polynomial p15 described in Appendix C.3. We used the parameters m1 = 1.0, m2 = 1.0, m3 = 2.0, k1 = 5.0, k2 = 5.0, d1 = 1.0, d2 = 1.0, \(\alpha =\frac {\pi }{4}\). In this case, the index (and therefore also the rank and shape of π) depends on the parameters α, d1, and d2.

C.3 About formulas for the prescribed path

In the examples of Sections C.1 and C.2, the prescribed path is described by a polynomial that turns out to be the incomplete regularized beta function.

For a > 0,b > 0, the incomplete beta function is defined by:

for 0 < x < 1 and

Moreover, the incomplete regularized beta function reads:

and has the obvious properties:

For \(n \in {\mathbb {N}}\), a = b = n + 1, the polynomial \(p_{2n+1}(x) := I_{x}(n+1,n+1)\) has degree 2n + 1 and can be represented with the binomial expansion:

Although this formulation has often been used in literature, from a numerical point of view, it is inconvenient due to the alternating sign.

Since the polynomial \(p_{2n+1}(x)-\frac {1}{2}\) has a zero at \(\frac {1}{2}\), it can be shown that for h0 = 1, the polynomials:

allow the representation:

Moreover, using the formula for the relation between the incomplete regularized beta function and the binomial expansion for integer a from [1, p. 263], the same polynomial can also be described by:

Of course, the polynomials described by (49), (50), and (51) are identical, but the formulas from (50) and (51) have the clear advantage that they are completely numerically stable since for 0 < x < 1 all the terms are positive. The error that is caused by formulation (49) is visualized in Fig. 7.
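The effect of the alternating signs can be reproduced with a short experiment. The sketch below does not reproduce (49)–(51) literally. It assumes two standard representations of \(I_{x}(n+1,n+1)\) for integer n: the termwise integrated binomial expansion of \(t^{n}(1-t)^{n}\), whose signs alternate, and the binomial tail sum from [1, p. 263], whose terms are nonnegative on [0,1]. The function names, the evaluation point x = 0.9, and the stress-test value n = 25 are illustrative assumptions.

```python
from fractions import Fraction
from math import comb, factorial

def p_alternating(x, n):
    """p_{2n+1}(x) = I_x(n+1, n+1) via termwise integration of t^n (1 - t)^n;
    the signs of the terms alternate."""
    scale = factorial(2 * n + 1) // factorial(n) ** 2        # 1 / B(n+1, n+1)
    return scale * sum((-1) ** k * comb(n, k) * x ** (n + 1 + k) / (n + 1 + k)
                       for k in range(n + 1))

def p_positive(x, n):
    """The same polynomial as a binomial tail sum; every term is >= 0 on [0, 1]."""
    m = 2 * n + 1
    return sum(comb(m, j) * x ** j * (1 - x) ** (m - j) for j in range(n + 1, m + 1))

x = 0.9
for n in (4, 7, 25):                      # n = 4, 7 give p9, p15; n = 25 is only a stress test
    exact = p_positive(Fraction(x), n)    # exact rational reference for the float x
    print(n, abs(p_alternating(x, n) - exact), abs(p_positive(x, n) - exact))
```

With rational arguments, both functions return identical values, whereas in floating-point arithmetic the alternating form loses accuracy as n grows.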

In particular, for n = 4, we obtain:

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Estévez Schwarz, D., Lamour, R. Projected explicit and implicit Taylor series methods for DAEs. Numer Algor 88, 615–646 (2021). https://doi.org/10.1007/s11075-020-01051-z
