Abstract

In this paper, a stable collocation method for solving nonlinear fractional delay differential equations is proposed by constructing a new set of multiscale orthonormal bases of \(W^{1}_{2,0}\). Error estimates for the approximate solutions are given, and the highest convergence order can reach four in the sense of the norm of \(W_{2,0}^{1}\). To handle the nonlinearity, we use Newton’s method to transform the nonlinear equation into a sequence of linear equations. For the linear equations, a rigorous theory is given for obtaining their ε-approximate solutions by solving a system of equations or by searching for a minimum value. A stability analysis is also given. Some examples are discussed to illustrate the efficiency of the proposed method.

1 Introduction

Fractional differential equations have become a hot topic in the field of differential equations because of their widespread applications in many fields of science [1, 2]. Among them, fractional delay differential equations have begun to attract the attention of many researchers. These equations also have many applications in areas such as control theory, biology, and economics [3, 4]. Since some models depend strongly on past states, the insertion of a time delay makes these models more realistic. Therefore, the development of theory and numerical algorithms for fractional delay differential equations is important. For example, Hu et al. [5] give a condition for the asymptotic stability of a nonlinear fractional system with distributed time delay by utilizing monotonicity properties of functions and the stability theorem for fractional linear systems. Pimenov and Hendy [6] use a BDF difference scheme based on approximations of Clenshaw and Curtis type to get the numerical solution of the following equation:

For (1), Moghaddam and Mostaghim [4] use the fractional finite difference method to obtain its numerical solutions. Saeed et al. [7] use the method of steps and the Chebyshev wavelet method to solve the nonlinear fractional delay differential equation:

and obtain approximate solutions. However, to the best of our knowledge, there are few articles on fractional delay differential equations, especially nonlinear ones.

Newton’s iterative method is a very powerful tool for solving nonlinear problems, and many researchers have studied and generalized it. Deuflhard [8], in his monograph, constructs adaptive Newton algorithms for some specific nonlinear problems. Krasnosel’skii et al. [9], in their monograph, study the Newton-Kantorovich method, give a modified Newton-Kantorovich method, and solve the problem of choosing initial approximations. Xu et al. [10] use a quasi-Newton method to linearize a nonlinear operator equation. Theoretical analysis shows that the convergence order of Newton’s iterative formula is 2.

Motivated by the abovementioned works, in this paper we deal with the nonlinear fractional delay differential equation (2) in the case p = 1, that is, the following equation:

where f has continuous second-order partial derivatives, and \(g(x)\in C[0, 1]\) and \(y_{0}(x)\in C^{2}[-\tau ,0]\) are known functions. τ > 0 is a constant delay. The fractional derivative is in the sense of Caputo and \(y(x)\in C^{1}[-\tau ,1]\) is the unknown function.

In this article, we develop a stable and effective collocation method to solve (3). The collocation method is one of the most efficient methods for obtaining accurate numerical solutions of differential equations, including equations with variable coefficients and nonlinear differential equations [11,12,13,14]. The stability of collocation methods has always been an important topic. Here, a collocation method is called stable if, when there are many collocation nodes, the resulting equations are not ill conditioned and the results remain valid. For a stable collocation method, we can improve the accuracy by increasing the number of approximation terms. Accordingly, it is particularly important to establish a high-precision and stable collocation method.

The choice of bases of a space is important for a collocation method. Approximate solutions with different accuracy can be derived by using different bases for the same equation. To obtain more accurate solutions of the nonlinear fractional differential equation, we construct a new set of multiscale orthonormal bases of \(W^{1}_{2,0}\), give error estimates for the approximate solutions, and prove in Section 3 that the highest convergence order can reach four in the sense of \(W_{2,0}^{1}\).

Since the problem of selecting the initial value for Newton’s iterative method has been well solved in [9], in this paper we transform (3) into a sequence of linear equations by using Newton’s iterative method. Then a new stable collocation method is proposed to solve these equations. The final numerical experiments show that our method performs better than that of [15] on this kind of equation.

The remainder of this paper is organized as follows: In Section 2, some relevant definitions and properties of the fractional calculus and the spaces \({W_{2}^{1}}\) and \(W_{2,0}^{1}\) are introduced. In Section 3, we construct a set of multiscale orthonormal bases of \(W^{1}_{2,0}\) and give an error estimate for the approximate solution. In Section 4, we construct the ε-approximate solution method and apply this method to solve the linear fractional delay differential equation. In Section 5, we analyze the stability of the ε-approximate solution method. In Section 6, we make use of Newton’s method to transform the nonlinear equation into a sequence of linear equations. In Section 7, we give the algorithm implementation of Newton’s iterative formula for solving the nonlinear fractional delay differential equation. In Section 8, three numerical examples are given to demonstrate the effectiveness of the algorithm. In the last section, the conclusions are presented.

2 Preliminaries and notations

In this section, some preliminary results about the fractional calculus operators and reproducing kernel spaces are recalled [16,17,18].

Definition 2.1

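The Riemann-Liouville (R-L) fractional integral operator \(J_{0}^{\alpha }\) is given by

\(J_{0}^{\alpha }u(x)=\frac {1}{\Gamma (\alpha )}{{\int \limits }_{0}^{x}}(x-t)^{\alpha -1}u(t){\mathrm {d}}t,\)

where \({\Gamma }(\alpha )={\int \limits }_{0}^{\infty }x^{\alpha -1}e^{-x}\mathrm {d}x.\)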

Definition 2.2

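The Caputo fractional differential operator \(D_{C}^{\alpha }\) is given by

\(D_{C}^{\alpha }u(x)=\frac {1}{\Gamma (2-\alpha )}{{\int \limits }_{0}^{x}}(x-t)^{1-\alpha }u^{\prime \prime }(t){\mathrm {d}}t,\)

which is the form used in Section 4.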
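To make the two operators concrete, the following minimal sketch evaluates them numerically. It assumes the forms written out in Section 4, namely \(J_{0}^{\alpha }v(x)=\frac {1}{\Gamma (\alpha )}{\int }_{0}^{x}(x-t)^{\alpha -1}v(t)\mathrm {d}t\) and, for 1 < α < 2, \(D_{C}^{\alpha }v=J_{0}^{2-\alpha }v^{\prime \prime }\). The function names, the midpoint rule, and the substitution used to remove the weak singularity are illustrative choices, not part of the method of this paper.

```python
import math

def rl_integral(u, alpha, x, n=4000):
    """Approximate J_0^alpha u(x) = 1/Gamma(alpha) * int_0^x (x-t)^(alpha-1) u(t) dt.

    The substitution s = (x - t)^alpha removes the weak singularity: the
    integral becomes (1/alpha) * int_0^{x^alpha} u(x - s^(1/alpha)) ds, which
    is approximated here by the composite midpoint rule with n cells.
    """
    upper = x ** alpha
    h = upper / n
    total = 0.0
    for i in range(n):
        s = (i + 0.5) * h
        total += u(x - s ** (1.0 / alpha))
    return total * h / (alpha * math.gamma(alpha))

def caputo_derivative(d2u, alpha, x, n=4000):
    """Caputo derivative for 1 < alpha < 2, written as J_0^(2-alpha) applied to u''."""
    return rl_integral(d2u, 2.0 - alpha, x, n)

# Closed forms for monomials, used only as a reference:
#   J^alpha t^p   = Gamma(p+1)/Gamma(p+1+alpha) * x^(p+alpha)
#   D_C^alpha t^p = Gamma(p+1)/Gamma(p+1-alpha) * x^(p-alpha),  p >= 2
alpha, x = 1.5, 1.0
j_num = rl_integral(lambda t: t ** 2, alpha, x)
j_ref = math.gamma(3) / math.gamma(3 + alpha) * x ** (2 + alpha)
d_num = caputo_derivative(lambda t: 6.0 * t, alpha, x)   # u = t^3, u'' = 6t
d_ref = math.gamma(4) / math.gamma(4 - alpha) * x ** (3 - alpha)
print(j_num, j_ref, d_num, d_ref)
```

The numerical and closed-form values should agree to several digits; the midpoint rule is only a convenient stand-in for a more careful quadrature.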

Theorem 2.1

Let u(x) be the exact solution of (3), then \(D^{\alpha }_{C}u(x)\in C[0, 1].\)

Proof

By the assumption, one has

Since integrability of a function is equivalent to its absolute integrability, one has

Taking x = 1, we obtain \(u^{\prime \prime }(x)\in L^{1}[0, 1]\). Thus, u(x) and \(u^{\prime }(x)\) are absolutely continuous on [0, 1]. Hence, \(D^{\alpha }_{C}u=g(x)+f[x,u(x),u^{\prime }(x),u(x-\tau ),u^{\prime }(x-\tau )]\in C[0, 1]\). □

Definition 2.3

Assume that n is a positive integer, then we denote

The inner product (⋅,⋅)1 and the norm ∥⋅∥1 of \( {W^{1}_{2}}\) are given by

It is easy to see that \({W^{1}_{2}}\subset C[0, 1]\). Similar to [17], we can prove that \({W^{1}_{2}}\) is not only a Hilbert space, but also a reproducing kernel space with the reproducing kernel

Definition 2.4

The inner product space \(W^{1}_{2,0}\) is defined as \( W^{1}_{2,0}\triangleq W^{1}_{2,0}[0, 1]=\{u(x)\in {W^{1}_{2}}|u(0)=0 \}\) with the inner product

Lemma 2.1

For any \(u(x)\in {W^{1}_{2}}\), \(\|u(x)\|_{C}\leq \sqrt {2}\|u(x)\|_{1}\), where \(\|u(x)\|_{C}\triangleq \max \limits _{x\in [0, 1]}|u(x)|.\)

Proof

For any \(u(x)\in {W^{1}_{2}}\), we have

Thus \(\|u(x)\|_{C}\leq \sqrt {2}\|u(x)\|_{1}.\)□

The following definition will be used in Section 6.

Definition 2.5

The inner product space \(W^{\alpha }_{2}\) is defined as \( W^{\alpha }_{2}\triangleq W^{\alpha }_{2}[0, 1]=\{J_{0}^{\alpha }u(s)|u(s)\in W^{1}_{2,0}[0, 1]\}\). And the inner product and the norm of \(W^{\alpha }_{2}\) are given by

where \(u,v\in W^{\alpha }_{2}.\)

Remark 2.1

Similar to [19], one can prove that \(W^{\alpha }_{2}\) is not only a Hilbert space, but also a reproducing kernel space.

3 Construction of the multiscale orthonormal bases of \(W^{1}_{2,0}\) and error estimations

In this section, we construct the multiscale orthonormal bases of \(W^{1}_{2,0}\) by means of the well-known Legendre multiwavelets, and give error estimates for the approximate solutions.

Define the cubic Legendre scaling functions in the interval [0, 1] [20],

and then cubic Legendre multiwavelets are given as

where i = 1, 2, ⋯ , k = 0, 1, 2, ⋯ , \(2^{i-1}-1\).

Denote

Lemma 3.1

\(\{\varphi _{i}(x)\}^{\infty }_{i=1}\) is a set of multiscale orthonormal bases of \(L^{2}[0, 1]\).

Proof

For the proof one can refer to [21]. □

Theorem 3.1

\(\{\psi _{i}(x)\}^{\infty }_{i=1}\!\triangleq \!\{{J_{0}^{1}}\varphi _{i}(x)\}^{\infty }_{i=1}=\{{J_{0}^{1}}\eta ^{0}(x),{J_{0}^{1}}\eta ^{1}(x),{J_{0}^{1}}\eta ^{2}(x),{J_{0}^{1}}\eta ^{3}(x)\), \({J_{0}^{1}}\phi ^{0}(x)\), \({J_{0}^{1}}\phi ^{1}(x)\), \({J_{0}^{1}}\phi ^{2}(x)\), \({J_{0}^{1}}\phi ^{3}(x)\), \({J_{0}^{1}}\phi ^{0}_{20}(x)\), \({J_{0}^{1}}\phi ^{0}_{21}(x)\), \({J_{0}^{1}}\phi ^{1}_{20}(x)\), \({J_{0}^{1}}\phi ^{1}_{21}(x)\), \({J_{0}^{1}}\phi ^{2}_{20}(x),{J_{0}^{1}}\phi ^{2}_{21}(x),{J_{0}^{1}}\phi ^{3}_{20}(x)\), \({J_{0}^{1}}\phi ^{3}_{21}(x),{J_{0}^{1}}\phi ^{0}_{30}(x),\cdots \}\) is a set of orthonormal bases of \(W^{1}_{2,0}[0, 1]\).

Proof

Firstly, we show that \(\{\psi _{i}(x)\}^{\infty }_{i=1}\) is orthonormal in \(W^{1}_{2,0}\).

where \(\delta _{ij}=\left \{ \begin {array}{ll} 1, & i=j\\ 0, & i\neq j \end {array} \right .\). So \(\{\psi _{i}(x)\}^{\infty }_{i=1}\) is orthonormal in \(W^{1}_{2,0}\).

Next, we prove that \(\{\psi _{i}(x)\}^{\infty }_{i=1}\) is complete in \(W^{1}_{2,0}\). Let \(\xi (x)\in W^{1}_{2,0}\) and \((\xi (x),\psi _{i}(x))_{1}=0\), i = 1, 2, ⋯ . That is,

By Lemma 3.1, \(\{\varphi _{i}(x)\}^{\infty }_{i=1}\) is a set of multiscale orthonormal bases of \(L^{2}[0, 1]\), and we obtain \(\xi ^{\prime }(x)=0\). Noting that \(\xi (x)\in W^{1}_{2,0}\), we have ξ(0) = 0. So \(\xi (x)=\xi (0)+{{{\int \limits }_{0}^{x}}\xi ^{\prime }(t)\mathrm {d}t}=0\). Thus, \(\{\psi _{i}(x)\}^{\infty }_{i=1}\) is complete in \(W^{1}_{2,0}\) and \(\{\psi _{i}(x)\}^{\infty }_{i=1}\) is a set of orthonormal bases of \(W^{1}_{2,0}\). □
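The orthonormality argument of Theorem 3.1 can be checked numerically for the first four basis functions. The sketch below rests on two assumptions that are not spelled out in the text here: that the cubic Legendre scaling functions \(\eta ^{0},\cdots ,\eta ^{3}\) are the orthonormal shifted Legendre polynomials of degree 0 to 3 on [0, 1], and that the inner product of \(W^{1}_{2,0}\) reduces to \((u,v)_{1}={\int }_{0}^{1}u^{\prime }v^{\prime }\mathrm {d}x\). All helper names are hypothetical.

```python
import math

# Assumed scaling functions: orthonormal shifted Legendre polynomials of
# degree 0..3 on [0, 1], stored as ascending coefficient lists.
ETA = [
    [1.0],
    [math.sqrt(3) * c for c in (-1, 2)],
    [math.sqrt(5) * c for c in (1, -6, 6)],
    [math.sqrt(7) * c for c in (-1, 12, -30, 20)],
]

def antiderivative(p):
    """Coefficients of J_0^1 p(x) = int_0^x p(t) dt (the result vanishes at 0)."""
    return [0.0] + [c / (k + 1) for k, c in enumerate(p)]

def derivative(p):
    """Coefficients of p'(x)."""
    return [k * c for k, c in enumerate(p)][1:]

def integral_of_product(p, q):
    """Exact value of int_0^1 p(x) q(x) dx for polynomial coefficient lists."""
    return sum(a * b / (i + j + 1) for i, a in enumerate(p) for j, b in enumerate(q))

def inner_w(u, v):
    """Assumed inner product of W^1_{2,0}: (u, v)_1 = int_0^1 u'(x) v'(x) dx."""
    return integral_of_product(derivative(u), derivative(v))

PSI = [antiderivative(p) for p in ETA]          # psi_i = J_0^1 eta^i
gram = [[inner_w(u, v) for v in PSI] for u in PSI]
for row in gram:
    print(["%.3f" % g for g in row])
```

Up to rounding, the printed Gram matrix should be the 4 × 4 identity, which is the finite-dimensional counterpart of the first step of the proof.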

Remark 3.1

Noting that

we can see that \({J_{0}^{1}}\phi ^{m}(x),~m=0,1,2,3\), are multiwavelets in \(W^{1}_{2,0}.\)

Definition 3.1

Let \(\mu (x)\in L^{2}[0, 1]\). If μ(x) satisfies the following conditions

then we say that the Vanishing Moment of μ(x) is r + 1.

Property 3.1

The Vanishing Moment of \(\phi ^{i}(x)\) is i + 4, i = 0, 1, 2, 3.

Proof

By direct calculation, we find that

and \({{\int \limits }_{0}^{1}}\phi ^{i}(x)\cdot x^{i+4}{\mathrm {d}}x\neq {0,~i}=0,1,2,3\). So the conclusion holds. □

Remark 3.2

According to Property 3.1, we obtain that the Vanishing Moment of \(\{\phi ^{i}(x)\}_{i=0}^{3}\) is at least 4.
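The counting behind Definition 3.1 and Property 3.1 can be illustrated with exact rational arithmetic. The sketch below assumes that the conditions of Definition 3.1 are the usual moment conditions \({\int }_{0}^{1}x^{j}\mu (x)\mathrm {d}x=0\), j = 0, 1, ⋯ , r, and it uses the shifted Legendre polynomial of degree 4 as a stand-in test function; this polynomial is not one of the multiwavelets \(\phi ^{i}\) of this paper, and the helper names are hypothetical.

```python
from fractions import Fraction

def moment(coeffs, j):
    """Exact int_0^1 x^j * p(x) dx for p given by ascending coefficients."""
    return sum(Fraction(c) / (j + k + 1) for k, c in enumerate(coeffs))

def vanishing_moments(coeffs, max_order=20):
    """Number of leading moments int_0^1 x^j p(x) dx, j = 0, 1, ..., that are zero."""
    count = 0
    while count < max_order and moment(coeffs, count) == 0:
        count += 1
    return count

# Illustrative test function (not one of the paper's multiwavelets):
# the shifted Legendre polynomial of degree 4 on [0, 1],
# P_4(x) = 70x^4 - 140x^3 + 90x^2 - 20x + 1.
p4 = [1, -20, 90, -140, 70]
print(vanishing_moments(p4))   # 4: moments of order 0..3 vanish, order 4 does not
print(moment(p4, 4))           # 1/630, the first nonzero moment
```

In the language of Definition 3.1 this corresponds to r = 3, that is, a Vanishing Moment of 4.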

Property 3.2

For any \(v(x)\in W_{2,0}^{1}[0, 1]\) and \(N\in \mathbb {N}_{+}\), we have

where \(a_{i}=(v(x),\psi _{i}(x))_{1}\).

Proof

According to Theorem 3.1, \(\{\psi _{i}(x)\}^{\infty }_{i=1}\) is a set of orthonormal bases of \(W^{1}_{2,0}[0, 1]\). Hence,

where \(a_{i}=(v(x),\psi _{i}(x))_{1}\). Thus,

□

Let y(x) and \(y_{J}(x)\) be an exact solution and an approximate solution of (3), respectively. Denote

where \(a_{i}=(y(x),{J_{0}^{1}}\eta ^{i}(x))_{1}\), \(c_{ik}^{l}=(y(x),{J_{0}^{1}}\phi _{ik}^{l}(x))_{1}\).

Lemma 3.2

Let \(y(x)\in W_{2,0}^{1},\mid y^{(j)}(x)\mid \leq M,~{\forall ~x}\in [0, 1], {\text {for some } }j\in \{2,3,4,5\}\). Then \(|c_{ik}^{l}|\leq 2^{-(i-1)(j-1/2)}AM,~l=0,1,2,3\) where A is a constant.

Proof

Without loss of generality, we first consider \(|c_{ik}^{0}|\).

Expand \(y^{\prime }(x)\) at \(x=\frac {k}{2^{i-1}}\):

Thus, we have

The first integral of the above inequality

by using Property 3.1.

Similarly, one can obtain that

while

where \(A=\max \limits _{l=0,1,2,3}\left \{\frac {1}{(j-1)!}{{\int \limits }_{0}^{1}}t^{j-1}\big |\phi ^{l}(t)\big |\mathrm {d}t\right \}\). One has

In the same way, one can derive that \(|c_{ik}^{l}|\leq 2^{-(i-1)(j-1/2)}AM,~l=1,2,3\). So the conclusion holds. □

Theorem 3.2

Assume \(y(x)\in W_{2,0}^{1},~|y^{(j)}(x)|\leq M,~\forall ~x\in [0, 1], {\text { for some }}~j\in \{2,3,4,5\}\). Then \(\|y(x)-y_{J}(x)\|_{1}\leq C\cdot 2^{-(j-1)J}\), where C is a constant.

Proof

Using Property 3.2 and Lemma 3.2, we can derive

where \(C=AM\sqrt {\frac {4}{1-2^{-2(j-1)}}}\). □
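The rate in Theorem 3.2 with j = 5 can be observed on a simple example. The sketch below is built on assumptions rather than on the multiwavelet formulas themselves: it assumes that truncating the expansion at level J amounts to the \(L^{2}\) projection of \(y^{\prime }\) onto piecewise cubic polynomials on the \(2^{J}\) dyadic subintervals of [0, 1], and that \(\|\cdot \|_{1}\) on \(W^{1}_{2,0}\) is the \(L^{2}\) norm of the derivative. With \(y(x)=x^{5}/5\), so that \(y^{\prime }(x)=x^{4}\), everything can be computed in exact rational arithmetic; the helper names are hypothetical.

```python
from fractions import Fraction
from math import comb, sqrt

# Shifted Legendre polynomials P_0..P_3 on [0, 1] (ascending coefficients);
# they are orthogonal with int_0^1 P_m(s)^2 ds = 1 / (2m + 1).
LEGENDRE = [[1], [-1, 2], [1, -6, 6], [-1, 12, -30, 20]]

def integrate01(p):
    """Exact int_0^1 p(s) ds for ascending coefficients."""
    return sum(Fraction(c) / (k + 1) for k, c in enumerate(p))

def multiply(p, q):
    """Coefficient list of the product polynomial p * q."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += Fraction(a) * b
    return out

def local_error_sq(a, h):
    """Squared L^2 error on [a, a+h] of the best cubic approximation to g(x) = x^4."""
    # g(a + h s) expanded in powers of s via the binomial theorem.
    g = [comb(4, k) * a ** (4 - k) * h ** k for k in range(5)]
    residual = list(g)
    for m, p in enumerate(LEGENDRE):
        coeff = integrate01(multiply(g, p)) * (2 * m + 1)   # (g, P_m) / (P_m, P_m)
        for k, c in enumerate(p):
            residual[k] -= coeff * c
    return h * integrate01(multiply(residual, residual))

def projection_error(level):
    """|| g - P_J g ||_{L^2[0,1]} for piecewise cubics on 2^level dyadic cells."""
    h = Fraction(1, 2 ** level)
    total = sum(local_error_sq(k * h, h) for k in range(2 ** level))
    return sqrt(total)

errors = [projection_error(J) for J in range(1, 5)]
ratios = [errors[i] / errors[i + 1] for i in range(3)]
print(errors, ratios)
```

Each refinement level should reduce the error by a factor of 16, that is, by \(2^{4}=2^{j-1}\) with j = 5, in agreement with the bound \(C\cdot 2^{-(j-1)J}\).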

4 Linear fractional delay differential equations

In this section, we construct the ε-approximate solution method for solving (3) with f being linear, i.e.,

and analyze the convergence order of the ε-approximate solutions. Here \(a(x),b(x),c(x),d(x),h(x)\in {W^{1}_{2}}[0, 1]\) and \(y_{0}(x)\in C^{2}[-\tau ,0]\) are known functions, and y(x) is an unknown function. τ > 0 is a constant delay. Similar to Theorem 2.1, we can prove \(D^{\alpha }_{C} y(x)\in C[0, 1]\). We further assume \(D^{\alpha }_{C}y(x)\in {W^{1}_{2}}\).

Let

and

Then (5) is equivalently transformed into

where

Introducing the symbols \({~}^{-\tau }D_{C}^{\alpha }\) and \(J_{-\tau }^{\alpha }\), we have \(^{-\tau }D_{C}^{\alpha }=D_{C}^{\alpha },~J_{-\tau }^{\alpha }=J_{0}^{\alpha }\) due to the fact that \({~}^{-\tau }D_{C}^{\alpha }v(x)=\frac {1}{\Gamma (2-\alpha )}{\int \limits }_{-\tau }^{x}(x-t)^{1-\alpha }v^{\prime \prime }(t){\mathrm {d}}t=\frac {1}{\Gamma (2-\alpha )}{{\int \limits }_{0}^{x}}(x-t)^{1-\alpha }v^{\prime \prime }(t){\mathrm {d}}t=D_{C}^{\alpha }v(x)\) and \(J_{-\tau }^{\alpha }v(x)=\frac {1}{\Gamma (\alpha )}{\int \limits }_{-\tau }^{x}(x-t)^{\alpha -1}v(t){\mathrm {d}}t=\frac {1}{\Gamma (\alpha )}{{\int \limits }_{0}^{x}}(x-t)^{\alpha -1}v(t){\mathrm {d}}t=J_{0}^{\alpha }v(x)\). So we use the denotation \(D_{C}^{\alpha }\) and \(J_{0}^{\alpha }\) below.

Let \(\omega (x)={~}^{-\tau }\!D^{\alpha }_{C}v(x)=D^{\alpha }_{C}v(x)\). Thus, (6) is transformed into

And \(v(x)=J^{\alpha }_{-\tau }{{~}^{-\tau }\!D}^{\alpha }_{C}v(x)=J^{\alpha }_{-\tau }\omega (x)=J^{\alpha }_{0}\omega (x).\)

Defining an operator \(L: W^{1}_{2,0}\rightarrow W^{1}_{2,0}\),

then (6) is equivalent to

Therefore, the solution y(x) of (5) can be obtained by

We need the following lemmas.

Lemma 4.1

\((J_{0}^{\alpha }\omega (x))_{x}^{\prime }\in L^{1}[0, 1]\) for any \(\omega (x)\in W^{1}_{2,0}[0, 1]\) with α > 0.

Proof

It is easy to see that

So we can derive

Thus,

This implies \((J_{0}^{\alpha }\omega (x))_{x}^{\prime }\in L^{1}[0, 1].\)□

Lemma 4.2

Let α > 0. Then

hold for any \(\omega (x)\in W^{1}_{2,0}\) where \(\tilde {M}_{\alpha }=\frac {1}{\alpha ^{2}{\Gamma }^{2}(\alpha )}\).

Proof

We can find that

hold for any \(\omega (x)\in W^{1}_{2,0}\). On the other hand, we obtain

by using the integration by parts. Hence, we have \(J_{0}^{\alpha }\omega (x)={{\int \limits }_{0}^{x}}(J_{0}^{\alpha }\omega )_{t}^{\prime }{\mathrm {d}}t\) and \(J_{0}^{\alpha }\omega (x)\in AC[0, 1].\)

Moreover, we have

Let \(\tilde {M}_{\alpha }=\frac {1}{\alpha ^{2}{\Gamma }^{2}(\alpha )}\). Then

holds. So \((J_{0}^{\alpha }\omega (x))_{x}^{\prime }\in L^{2}[0, 1]\). Noting that \(J_{0}^{\alpha }\omega (0)=0\), we have \(J_{0}^{\alpha }\omega (x)\in W^{1}_{2,0}\) and

□
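A quick sanity check of the constant \(\tilde {M}_{\alpha }\) is possible on monomials, for which everything is available in closed form. The sketch below assumes that the bound of Lemma 4.2 reads \(\|J_{0}^{\alpha }\omega \|_{1}^{2}\leq \tilde {M}_{\alpha }\|\omega \|_{1}^{2}\) (the form in which it is applied in Lemma 6.1) and that \(\|u\|_{1}^{2}={\int }_{0}^{1}(u^{\prime })^{2}\mathrm {d}x\) on \(W^{1}_{2,0}\). The test functions \(\omega (x)=x^{p}\) and the function names are illustrative.

```python
import math

def lhs_norm_sq(p, alpha):
    """|| J_0^alpha x^p ||_1^2 under the assumed norm.

    J_0^alpha x^p = Gamma(p+1)/Gamma(p+1+alpha) * x^(p+alpha), whose derivative
    is Gamma(p+1)/Gamma(p+alpha) * x^(p+alpha-1).
    """
    c = math.gamma(p + 1) / math.gamma(p + alpha)
    return c * c / (2 * p + 2 * alpha - 1)

def rhs_bound(p, alpha):
    """M_alpha * || x^p ||_1^2 with M_alpha = 1 / (alpha^2 Gamma(alpha)^2)."""
    m_alpha = 1.0 / (alpha * math.gamma(alpha)) ** 2
    return m_alpha * p * p / (2 * p - 1)

checks = []
for alpha in (0.5, 1.0, 1.5):
    for p in range(1, 6):
        lhs, rhs = lhs_norm_sq(p, alpha), rhs_bound(p, alpha)
        checks.append(lhs <= rhs)
        print(alpha, p, lhs, rhs)
```

For every pair (α, p) tried here the left-hand side stays below the right-hand side; this is only a spot check on a few test functions, not a substitute for the proof.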

Lemma 4.3

Let \(a(x)\in {W^{1}_{2}}[0, 1],\omega (x)\in W^{1}_{2,0}[0, 1],\alpha >0.\) Then

holds.

Proof

Using Lemmas 2.1 and 4.2, we have

So the conclusion holds. □

Theorem 4.1

The operator L defined by (7) is a bounded linear operator from \(W^{1}_{2,0}\) to \(W^{1}_{2,0}\).

Proof

Obviously, L is a linear operator. Noting that \(a(x),b(x),c(x),d(x)\in {W^{1}_{2}}[0, 1]\), thus we know a(x), b(x), c(x), d(x) ∈ AC[0, 1]. Denote \(M_{1}=\max \limits \{\|a(x)\|_{1},\|b(x)\|_{1},\|c(x)\|_{1},\|d(x)\|_{1}\}.\)

Let \(\omega (x)\in W^{1}_{2,0}[0, 1]\). According to Lemmas 4.2 and 4.3, we have \(J_{0}^{\alpha }\omega \in W^{1}_{2,0}\), and there exists an \(\tilde {M}_{\alpha }\) such that

Similarly, there exists an \(\tilde {M}_{\alpha -1}\) such that

Therefore,

which implies L is a bounded linear operator from \(W^{1}_{2,0}\) to \(W^{1}_{2,0}\). □

Theorem 4.2

Suppose there exists a unique solution of the equation defined by (8). Then L is a bijection from \(W^{1}_{2,0}\) to \(L(W^{1}_{2,0})\), and \(L^{-1}:L(W^{1}_{2,0})\rightarrow W^{1}_{2,0}\) exists and is bounded.

Remark 4.1

It is easy to see that L is a bijection from \(W^{1}_{2,0}\) to \(L(W^{1}_{2,0})\). For the latter part of the conclusion, we can define an operator \(J:W^{1}_{2,0}\rightarrow W^{1}_{2,0},~J(\omega (x))=J^{\alpha }_{0}\omega (x),~\omega (x)\in W^{1}_{2,0}\). One can prove that the operator J is compact. Since the sum of finite compact operators is still compact and \(\{(I-J)\omega (x)|\omega (x)\in W^{1}_{2,0}\}\) is closed, one can obtain that \(L^{-1}:L(W^{1}_{2,0})\rightarrow W^{1}_{2,0}\) is bounded by the Inverse Operator Theorem in the Banach spaces.

Definition 4.1

For a given ε > 0, y(x) is called an ε-approximate solution of (8) if \(\|L(y)-g\|_{1}<\varepsilon \).

Theorem 4.3

For any ε > 0, there exists a positive integer N such that for every fixed n ≥ N, \(\omega ^{*}_{n}(x)={\sum }^{n}_{i=0}c_{i}^{*}\psi _{i}(x)\) is an ε-approximate solution of (8), where \(\{c_{i}^{*}\}_{i=0}^{n}\) satisfies

and

Proof

Suppose ω(x) is the exact solution of (8). By Theorem 3.1, there exists a positive integer N such that for any n > N, there exists \(\omega _{n}(x)={\sum }_{i=0}^{n}c_{i}\psi _{i}(x)\) such that

So we can derive

Noting that L(ω(x)) = g(x), we have \(\|g(x)-L(\omega _{n}(x))\|_{1}=\|g(x)-{\sum }_{i=0}^{n}c_{i}f_{i}(x)\|_{1}<\varepsilon .\) Thus, we obtain

That is, \(\omega ^{*}_{n}(x)\) is an ε-approximate solution of (8). □

Next, we find the ε-approximate solution of (8). Denote

According to the norm definition, we have

To obtain the minimum of \(S(c_{0},c_{1},\cdots ,c_{n})\), we solve the normal equations of (12)

Theorem 4.4

The system of normal equations (13) has a unique solution.

Proof

The system of normal equations (13) can be rewritten as:

Denote

then G is a Gram matrix. So G is a nonsingular matrix if and only if f0(x), f1(x), ⋯ , fn(x) are linearly independent.

We prove that f0(x), f1(x), ⋯ , fn(x) are linearly independent. Let

We derive \({\sum }_{i=0}^{n}l_{i}L(\psi _{i}(x))\equiv 0\), or \(L({\sum }_{i=0}^{n}l_{i}\psi _{i}(x))\equiv 0.\) Since L is injective, \({\sum }_{i=0}^{n}l_{i}\psi _{i}(x)\equiv 0.\) Noting that \(\{\psi _{i}(x)\}_{i=0}^{n}\) are linearly independent, we obtain \(l_{i}=0\), i = 0, 1, ⋯ , n. Therefore, \(f_{0}(x),f_{1}(x),\cdots ,f_{n}(x)\) are linearly independent, G is nonsingular, and the system of normal equations (13) has a unique solution. □

Remark 4.2

The unique solution of the normal equations (13) is denoted by \((c_{0}^{*},c_{1}^{*},\cdots ,c_{n}^{*})\). Similar to [22], we can prove \(S(c_{0},c_{1},\cdots ,c_{n})\geq S(c_{0}^{*},c_{1}^{*},\cdots ,c_{n}^{*}).\) Thus, (10) has a unique solution determined by (13). The desired approximate solution \(y^{*}(x)\) of (5) can be obtained by (9).
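The mechanics of minimizing S through the normal equations can be sketched on a toy problem. In the sketch below the functions \(f_{i}=L(\psi _{i})\) and the right-hand side g are replaced by simple polynomials, and the inner product \((\cdot ,\cdot )_{1}\) is replaced by the plain \(L^{2}[0, 1]\) inner product; both substitutions, as well as the helper names, are assumptions made only so that the example is self-contained and exactly checkable.

```python
from fractions import Fraction

def inner(p, q):
    """Stand-in inner product: exact int_0^1 p(x) q(x) dx for coefficient lists."""
    return sum(Fraction(a) * b / (i + j + 1)
               for i, a in enumerate(p) for j, b in enumerate(q))

def solve(G, b):
    """Solve G c = b by Gauss-Jordan elimination in exact rational arithmetic."""
    n = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(G, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        M[col] = [v / M[col][col] for v in M[col]]
        for r in range(n):
            if r != col:
                M[r] = [vr - M[r][col] * vc for vr, vc in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]

# Hypothetical images f_i = L(psi_i) and right-hand side g (toy polynomials).
f = [[1], [0, 1], [0, 0, 1]]      # 1, x, x^2
g = [0, 0, 0, 1]                  # x^3

G = [[inner(fi, fj) for fj in f] for fi in f]   # Gram matrix, G_ij = (f_i, f_j)
b = [inner(fi, g) for fi in f]                  # right-hand side, b_i = (f_i, g)
c_star = solve(G, b)
print(c_star)   # coefficients 1/20, -3/5, 3/2
```

Because the toy functions are linearly independent, the Gram matrix is nonsingular and the minimizer is unique, which mirrors the argument of Theorem 4.4.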

Theorem 4.5

Assume that \(\omega ^{*}_{n}(x)={\sum }_{i=0}^{3}a_{i}{J_{0}^{1}}\eta ^{i}(x)+{\sum }_{i=1}^{n}{\sum }_{l=0}^{3}{\sum }_{k=0}^{2^{i-1}-1}c_{ik}^{l}{J_{0}^{1}}\phi _{ik}^{l}(x)\) obtained by Theorem 4.3 is an ε-approximate solution of (8), ω(x) is the exact solution of (8), and \(|\omega ^{(j)}(x)|\leq M\), ∀ x ∈ [0, 1], for some j ∈ {2, 3, 4, 5}. Then \(\parallel \omega (x)-\omega ^{*}_{n}(x)\parallel _{1}\leq C\cdot 2^{-(j-1)n}\), where C is a constant.

Proof

According to Theorem 3.2, one has \(\parallel \omega (x)-\omega _{n}(x)\parallel _{1}\leq \bar {C}\cdot 2^{-(j-1)n}\) where \(\bar {C}\) is a constant. Thus, one can derive that

where \(C=\bar {C}\|L^{-1}\|\|L\|\). □

5 Stability analysis

In this section, we consider the stability of our proposed method.

Assume λ is an eigenvalue of the matrix G which is defined in the proof of Theorem 4.4, that is, there exists an \(X=[x_{0},x_{1},\cdots ,x_{n}]^{T}\in \mathbb {R}^{n+1},~X\neq 0,\) such that GX = λX. Hence,

So we derive that

and

Thus, we have

or

Noting that \(\{\psi _{i}(x)\}^{\infty }_{i=0}\) is a set of orthonormal bases of \(W^{1}_{2,0}\), we have

Hence, we can obtain that

Thus, the largest eigenvalue \(\lambda _{max}\) of G satisfies \(\lambda _{max}\leq \|L\|^{2}\).

On the other hand, we prove that the least eigenvalue of G satisfies \(\lambda _{min}\geq \frac {1}{\|L^{-1}\|^{2}}.\)

Otherwise, there exists \(\{u_{n}\}\) with \(\|u_{n}\|_{1}=1\) such that \(\|L(u_{n})\|_{1}<\frac {1}{\|L^{-1}\|}\).

Denote \(\omega _{n}=L(u_{n})\). Hence, \(\|\omega _{n}\|_{1}<\frac {1}{\|L^{-1}\|}\) and \(u_{n}=L^{-1}(\omega _{n})\). So we can derive

This is a contradiction. So we obtain \(\lambda _{min}\geq \frac {1}{\|L^{-1}\|^{2}}.\)

Therefore, we have

That is, \(\text {Cond}(G)_{2}\leq (\|L\|\cdot \|L^{-1}\|)^{2}\), which implies that the spectral condition number [23] of the matrix G is bounded, and the algorithm is stable.
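The eigenvalue bounds can be illustrated in a finite-dimensional toy setting. In the sketch below the operator L is replaced by a 3 × 3 matrix A = DQ, where D = diag(1, 2, 4) and Q is an orthogonal Householder reflection, so that ∥A∥ = 4 and \(\|A^{-1}\|=1\) are known exactly; G is then the Gram matrix of the images of the first two orthonormal basis vectors. The matrix, the dimension, and the variable names are assumptions made for illustration only.

```python
import math

# Toy stand-in for L on a 3-dimensional space: A = D Q with D = diag(1, 2, 4)
# and Q an orthogonal Householder reflection, so ||A|| = 4 and ||A^{-1}|| = 1.
d = [1.0, 2.0, 4.0]
v = [1 / 3, 2 / 3, 2 / 3]                      # unit vector defining Q = I - 2 v v^T
Q = [[(1.0 if i == j else 0.0) - 2 * v[i] * v[j] for j in range(3)] for i in range(3)]
A = [[d[i] * Q[i][j] for j in range(3)] for i in range(3)]

def column(M, j):
    return [row[j] for row in M]

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

# Gram matrix of the images of the first n = 2 orthonormal basis vectors,
# G_ij = (A e_i, A e_j).
n = 2
G = [[dot(column(A, i), column(A, j)) for j in range(n)] for i in range(n)]

# Eigenvalues of the symmetric 2x2 matrix G in closed form.
trace = G[0][0] + G[1][1]
det = G[0][0] * G[1][1] - G[0][1] * G[1][0]
disc = math.sqrt(trace * trace / 4 - det)
lam_min, lam_max = trace / 2 - disc, trace / 2 + disc

norm_A, norm_A_inv = max(d), 1 / min(d)
cond_G = lam_max / lam_min
bound = (norm_A * norm_A_inv) ** 2
print(lam_min, lam_max, cond_G, bound)
```

Both eigenvalues fall between \(1/\|A^{-1}\|^{2}=1\) and \(\|A\|^{2}=16\), so the condition number of G stays below the bound 16 regardless of which orthonormal vectors are selected.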

6 Nonlinear fractional delay differential equations

In this section, we solve (3) when f is nonlinear. In order to obtain high-accuracy approximate solutions, we employ the Fréchet derivative (F-derivative) and Newton’s iterative formula.

Similar to the linear case, we denote

and

Then, (3) can be written as

Hence, there is no harm in supposing that the equation to be solved is

Let u be the exact solution of the above equation and assume that \(D^{\alpha }_{C}u(x)=\omega (x)\). At the beginning of Section 4, we have proved that \(u(x)=J_{0}^{\alpha } \omega (x)\) and \(\omega \in W_{2,0}^{1}\). So \(u(x)\in W_{2}^{\alpha }\).

Define an operator \(F: W_{2}^{\alpha }[0, 1]\rightarrow {W_{2}^{1}}[0, 1]\),

(14) is equivalent to the following equation

Lemma 6.1

Take any \(h\in W_{2}^{\alpha }\), then \(\|h\|_{1}\leq \sqrt {\tilde {M}_{\alpha }}\|h\|_{\alpha }\) holds, where \(\tilde {M}_{\alpha }=\frac {1}{\alpha ^{2}{\Gamma }^{2}(\alpha )}\).

Proof

From the definition of \(W_{2}^{\alpha }\), \(h=J_{0}^{\alpha } h_{1}\) with \(h_{1}\in W_{2,0}^{1}\). By Lemma 4.2, \(\|h\|_{1}=\|J_{0}^{\alpha } h_{1}\|_{1}\leq \sqrt {\tilde {M}_{\alpha }}\|h_{1}\|_{1}=\sqrt {\tilde {M}_{\alpha }}\|h\|_{\alpha }\). □

Theorem 6.1

Suppose that F is defined by (15), then

where \(F^{\prime }(u)\) refers to the Fréchet derivative [10].

Proof

Let \(F(u)=D^{\alpha }_{C}u-g(x)-f[x,u(x),u^{\prime }(x),u(x-\tau ),u^{\prime }(x-\tau )]\triangleq F_{1}(u)-F_{2}(u)\), here \(F_{1}(u)=D^{\alpha }_{C}u-g(x)\), \(F_{2}(u)=f[x,u(x),u^{\prime }(x),u(x-\tau ),u^{\prime }(x-\tau )].\)

According to the definition of F-derivative and property of Caputo derivatives, we have

So we can conclude that \(F_{1}^{\prime }(u)h=D^{\alpha }_{C}h\), that is \(F_{1}^{\prime }(u)=D^{\alpha }_{C}\). On the other hand,

Substituting \(u(x)+h(x), u^{\prime }(x)+h^{\prime }(x), u(x-\tau )+h(x-\tau ), u^{\prime }(x-\tau )+h^{\prime }(x-\tau )\) for y, z, p, q and \(u(x), u^{\prime }(x), u(x-\tau ), u^{\prime }(x-\tau )\) for y0, z0, p0, q0 in the above equation, we get

Therefore, ∃M > 0,

\(\leq M \| h\|_{1}^{2}/\| h\|_{\alpha }\leq M\tilde {M}_{\alpha }{\| h\|_{\alpha }^{2}}/\| h\|_{\alpha }\leq M\tilde {M}_{\alpha }\|h\|_{\alpha }\rightarrow 0,~(\|h\|_{\alpha }\rightarrow 0),\) where Lemma 6.1 is used. Thus,

Since \(F^{\prime }(u)h=F_{1}^{\prime }(u)h+F_{2}^{\prime }(u)h\), then the conclusion holds. □
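A derivative formula of this kind can be checked pointwise by comparing it with a difference quotient. The sketch below uses an illustrative nonlinearity \(f(y,z)=y^{2}+\sin z\) without delay terms, which is an assumption and not one of the examples of this paper; for it, the candidate derivative of \(F_{2}\) at u in the direction h is \(f_{y}h+f_{z}h^{\prime }=2uh+\cos (u^{\prime })h^{\prime }\). The function names are hypothetical.

```python
import math

# Illustrative nonlinearity (an assumption, not taken from the paper's examples):
# f(y, z) = y^2 + sin(z), so F2(u)(x) = u(x)^2 + sin(u'(x)).
def F2(u, du, x):
    return u(x) ** 2 + math.sin(du(x))

def F2_prime(u, du, h, dh, x):
    """Candidate Frechet derivative applied to h: f_y * h + f_z * h'."""
    return 2 * u(x) * h(x) + math.cos(du(x)) * dh(x)

u, du = (lambda x: x ** 2), (lambda x: 2 * x)      # base point u(x) = x^2
h, dh = (lambda x: x), (lambda x: 1.0)             # direction h(x) = x

x0 = 0.5
exact = F2_prime(u, du, h, dh, x0)
gaps = []
for t in (1e-1, 1e-2, 1e-3):
    u_t = lambda x, t=t: u(x) + t * h(x)           # perturbed function u + t h
    du_t = lambda x, t=t: du(x) + t * dh(x)        # and its derivative
    quotient = (F2(u_t, du_t, x0) - F2(u, du, x0)) / t
    gaps.append(abs(quotient - exact))
print(gaps)   # the gap should shrink roughly in proportion to t
```

The linear decay of the gap reflects the second-order remainder in the Taylor expansion that is used in the proof above.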

Remark 6.1

An inequality used here is \(\|h^{2}\|_{1}\leq 2\sqrt {2}\|h\|_{1}^{2}\). In fact, according to the definition of the norm ∥⋅∥1 of \( {W^{1}_{2}}\) and Lemma 2.1, we can obtain

Squaring both sides of the inequality, we see that \(\|h^{2}\|_{1}\leq 2\sqrt {2}\|h\|_{1}^{2}\) holds.

Newton’s iterative formula for solving F(u) = 0 defined by (16) is

with initial selection \(u_{0}=0\), where \(F^{\prime }(u)\) is defined by (17). Equation (18) can be transformed into

which are linear fractional delay differential equations of the form (5), so we can solve them by the ε-approximate solution method constructed in Section 4.

Remark 6.2

One can refer to [9] for the method of selecting an initial value and for the convergence of Newton’s iterative formula.

7 Algorithm implementation of Newton’s iterative formula

In this section, we describe the algorithm implementation of Newton’s iterative formula in detail.

Assume

where \(\{c_{k,i}\}\), \(\{d_{k,i}\}\) are unknown. It is not difficult to see that

Using (17) and (20), we have \(F^{\prime }(u_{k})(u_{k+1}-u_{k})\)

Denote

Substituting (19), (23), and (24) into (25), we solve the following equations to obtain \(\{d_{k,i}\}\):

To sum up, the algorithm is as follows:

Step 1. Homogenize the equation as (14).

Step 2. Input \(\{\psi _{i}(x)\}\), and compute \(\{J_{0}^{\beta }\psi _{i}(x)\}\) for any β > 0.

Step 3. Input α. Input the number of approximation terms n. Set \(c_{0,i}=0\).

Step 4. Begin the iterative procedure. From k = 0, do

(1) Compute \(u_{k}\) by (19).

(2) Compute \(F(u_{k})\) and \(F^{\prime }(u_{k})(u_{k+1}-u_{k})\) according to (23) and (24).

(3) Compute R(x) by (25), solve the equations (26), and obtain \(\{d_{k,i}\}\).

(4) Compute \(c_{k+1,i}=c_{k,i}+d_{k,i}\) (i = 0, 1, 2, ⋯ , n).

(5) If \(\|u_{k+1}-u_{k}\|_{C}\geq \varepsilon \), replace k by k + 1 and go to (1); otherwise go to Step 5.

Step 5. Output the last approximate solution \(u_{k+1}={\sum }_{i=0}^{n}c_{k+1,i}J_{0}^{\alpha }\psi _{i}(x)\).

Using the software Mathematica, we can get the approximate solution \(u_{k+1}(x)\). Not many iterations are needed to reach the desired approximate solutions because of the high efficiency of Newton’s iterative method.
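The control flow of Step 4 (solve a linear correction equation, update the coefficients, test the size of the update) can be sketched independently of the fractional operators. The example below is a generic Newton iteration on a hypothetical two-dimensional algebraic system, written in Python rather than Mathematica; the system, the starting point, the tolerance, and all names are assumptions for illustration and do not reproduce equations (19) to (26).

```python
def newton(F, J, c0, eps=1e-12, max_iter=50):
    """Newton iteration for a 2-dimensional system F(c) = 0.

    Each pass solves the linear correction equation J(c_k) d_k = -F(c_k)
    (by Cramer's rule here), updates c_{k+1} = c_k + d_k, and stops once the
    largest component of d_k falls below eps.
    """
    c = list(c0)
    history = [tuple(c)]
    for _ in range(max_iter):
        f0, f1 = F(c)
        (a, b), (p, q) = J(c)
        det = a * q - b * p
        d = [(-f0 * q + b * f1) / det, (-a * f1 + p * f0) / det]
        c = [c[0] + d[0], c[1] + d[1]]
        history.append(tuple(c))
        if max(abs(d[0]), abs(d[1])) < eps:
            break
    return c, history

# Toy system (hypothetical): c0^2 + c1^2 = 1 and c0 = c1.
F = lambda c: (c[0] ** 2 + c[1] ** 2 - 1.0, c[0] - c[1])
J = lambda c: ((2 * c[0], 2 * c[1]), (1.0, -1.0))

root, history = newton(F, J, [1.0, 0.5])
print(root, len(history) - 1)
```

Starting from (1, 0.5), the first two iterates are (0.75, 0.75) and approximately (0.7083, 0.7083), and the error roughly squares at each step, which is the second-order convergence mentioned in the Introduction.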

Remark 7.1

The algorithm can be extended to the case m − 1 < α ≤ m (\(m\in \mathbb {N}_{+}\)) for the fractional delay differential equation (3).

8 Numerical examples

In this section, the algorithm presented above is applied to solve linear and nonlinear fractional delay differential equations. Three examples are considered to illustrate the efficiency of the suggested algorithm. The relative error here is defined as \(R_{n}=\frac {u-u_{n}}{\|u\|_{C}}\), with the exact solution u and an approximate solution \(u_{n}\) obtained by Theorem 4.3. Based on the numerical results, we adopt the formula \(\log _{2}[R_{n}/R_{2n-1}]\) to estimate the convergence order.
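The order estimate \(\log _{2}[R_{n}/R_{2n-1}]\) is straightforward to automate. The sketch below applies it to synthetic error values, chosen as \((n-1)^{-4}\) so that the expected order is known in advance; these numbers are not results from this paper, and the function name is hypothetical. Scalar error magnitudes (for example, maximum norms of \(R_{n}\)) are assumed as input.

```python
import math

def convergence_orders(ns, errors):
    """Estimate log2(R_n / R_{2n-1}) for consecutive pairs (n, 2n - 1)."""
    orders = []
    for (n1, e1), (n2, e2) in zip(zip(ns, errors), zip(ns[1:], errors[1:])):
        if n2 != 2 * n1 - 1:
            raise ValueError("expected consecutive sizes n and 2n - 1")
        orders.append(math.log2(e1 / e2))
    return orders

# Synthetic errors (NOT results from the paper): R_n = (n - 1)^(-4),
# chosen so that the estimator should return order 4.
ns = [65, 129, 257, 513]
errors = [(n - 1) ** -4.0 for n in ns]
orders = convergence_orders(ns, errors)
print(orders)
```

With n − 1 doubling from one entry to the next, each estimate compares errors on consecutive refinement levels, which is why the pairing (n, 2n − 1) is used.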

Example 8.1

Consider the linear fractional differential equation with delay

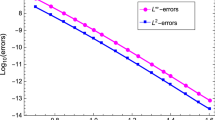

where g(x) is chosen such that the exact solution is \(x^{2.3}\) or \(x^{4.1}\). When the exact solution is \(x^{2.3}\), we solve the equation with n = 65, 257, 513, respectively, and obtain the corresponding approximate solutions. The relative errors between the exact solution and the approximate solutions are displayed in Fig. 1. When the exact solution of this example is chosen to be \(x^{4.1}\), which is smoother, the relative errors are displayed in Fig. 2. One can see that the relative errors decrease as n increases. The results show that the error decreases faster when the solution is smoother. In Table 1, we provide some numerical results illustrating that the convergence order improves as the smoothness of the solution improves. Even if the solution of the equation is less smooth, the computing error is still acceptable for engineering purposes. Therefore, the algorithm is stable, reliable, and adaptive.

Example 8.2

Let us consider the nonlinear fractional differential equation with delay

where g(x) is chosen such that the exact solution of this example is \(x^{2.3}\) or \(x^{4.3}\). The relative errors between the exact solution and the approximate solutions for different numbers of iterations are displayed in Figs. 3 and 4, with n = 65, 129, 257 in both cases. Figs. 3 and 4 show the errors as semi-log plots. In Table 2, we compare the infinity-norm of relative errors at the discrete points and estimates of convergence order for both solution cases. The results show that our method is still valid, stable, and adaptive when solving nonlinear problems.

Example 8.3

Consider the linear fractional differential equation with delay [15]

The exact solution of this example is \(x^{2}\). The relative errors between the exact solution and the approximate solutions are displayed in Fig. 5 with n = 65, 257, 513, 1025, respectively. One can see that the relative errors decrease as n increases. We compare the infinity-norm of absolute errors at the discrete points with that from Ref. [15] in Table 3. The numerical results show that our method has higher accuracy in this example and that the computing errors may be acceptable for engineering.

9 Conclusion

In this paper, we construct a new stable collocation method for solving a class of nonlinear fractional delay differential equations. More suitable multiscale orthonormal bases of \(W^{1}_{2,0}\) are constructed, error estimates for the approximate solutions are given, and the highest convergence order can reach four in the sense of the norm of \(W_{2,0}^{1}\). Newton’s iterative formula is used to linearize the nonlinear equation, and for the resulting linear equations we develop an ε-approximate solution method based on multiscale orthonormal bases. A concrete algorithm implementation is given. Numerical examples show that, compared with [15], the presented method is more accurate in dealing with this kind of equation.

References

[1] Sun, H.G., Zhang, Y., Baleanu, D., Chen, W., Chen, Y.Q.: A new collection of real world applications of fractional calculus in science and engineering. Commun. Nonlinear Sci. Numer. Simulat. 64, 213–231 (2018)

[2] Xu, W.X., Sun, H.G., Chen, W., Chen, H.S.: Transport properties of concrete-like granular materials interacted by their microstructures and particle components. Int. J. Modern Phys. B 32(18), 1840011 (2018)

[3] Bhrawy, A.H., Taha, T.M., Machado, J.A.T.: A review of operational matrices and spectral techniques for fractional calculus. Nonlinear Dyn. https://doi.org/10.1007/s11071-015-2087-0

[4] Moghaddam, B.P., Mostaghim, Z.S.: A novel matrix approach to fractional finite difference for solving models based on nonlinear fractional delay differential equations. Ain Shams Eng. J. 5(2), 585–594 (2014)

[5] Hu, J.B., Zhao, L.D., Lu, G.P., Zhang, S.B.: The stability and control of fractional nonlinear system with distributed time delay. Appl. Math. Model. 40, 3257–3263 (2016)

[6] Pimenov, V.G., Hendy, A.S.: BDF-type shifted Chebyshev approximation scheme for fractional functional differential equations with delay and its error analysis. Appl. Numer. Math. 118, 266–276 (2017)

[7] Saeed, U., Rehman, M.U., Iqbal, M.A.: Modified Chebyshev wavelet methods for fractional delay-type equations. Appl. Math. Comput. 264, 431–442 (2015)

[8] Deuflhard, P.: Newton methods for nonlinear problems: affine invariance and adaptive algorithms. Springer, Berlin (2004)

[9] Krasnosel’skii, M.A., Vainikko, G.M., Zabreiko, P.P., Rutitskii, Y.B., Stetsenko, V.Y.: Approximate solution of operator equations. Springer, Dordrecht (1972)

[10] Xu, M.Q., Niu, J., Lin, Y.Z.: An efficient method for fractional nonlinear differential equations by quasi-Newton’s method and simplified reproducing kernel method. Math. Meth. Appl. Sci. 41, 5–14 (2018)

[11] Bhrawy, A.H.: An efficient Jacobi pseudospectral approximation for nonlinear complex generalized Zakharov system. Appl. Math. Comput. 247, 30–46 (2014)

[12] Salehi, R.: A meshless point collocation method for 2-D multi-term time fractional diffusion-wave equation. Numer. Algor. 74, 1145–1168 (2017)

[13] Zhao, J.J., Xiao, J.Y., Ford, N.J.: Collocation methods for fractional integro-differential equations with weakly singular kernels. Numer. Algor. 65, 723–743 (2014)

[14] Patel, V.K., Singh, S., Singh, V.K.: Two-dimensional shifted Legendre polynomial collocation method for electromagnetic waves in dielectric media via almost operational matrices. Math. Meth. Appl. Sci. 40, 3698–3717 (2017)

[15] Morgado, M.L., Ford, N.J., Lima, P.M.: Analysis and numerical methods for fractional differential equations with delay. J. Comput. Appl. Math. 252, 159–168 (2013)

[16] Diethelm, K., Ford, N.J.: Analysis of fractional differential equations. J. Math. Anal. Appl. 265(2), 229–248 (2002)

[17] Chen, Z., Lin, Y.Z.: The exact solution of a linear integral equation with weakly singular kernel. J. Math. Anal. Appl. 344, 726–734 (2008)

[18] Chen, J., Huang, Y., Rong, H.W., Wu, T.T., Zeng, T.S.: A multiscale Galerkin method for second-order boundary value problems of Fredholm integro-differential equation. J. Comput. Appl. Math. 290, 633–640 (2015)

[19] Chen, Z., Wu, L.B., Lin, Y.Z.: Exact solution of a class of fractional integro-differential equations with the weakly singular kernel based on a new fractional reproducing kernel space. Math. Meth. Appl. Sci. 41, 3841–3855 (2018)

[20] Alpert, B., Beylkin, G., Gines, D., Vozovoi, L.: Adaptive solution of partial differential equations in multiwavelet bases. J. Comput. Phys. 182, 149–190 (2002)

[21] Lakestani, M., Saray, B.N., Dehghan, M.: Numerical solution for the weakly singular Fredholm integro-differential equations using Legendre multiwavelets. J. Comput. Appl. Math. 235, 3291–3303 (2011)

[22] Cheng, X., Chen, Z., Zhang, Q.P.: An approximate solution for a neutral functional-differential equation with proportional delays. Appl. Math. Comput. 260, 27–34 (2015)

[23] Li, Q.Y., Wang, N.C., Yi, D.Y.: Numerical analysis. Tsinghua University Press, Beijing (2001)

Funding

The work was supported by the National Natural Science Foundation of China (Grant No. 11501150).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shi, L., Chen, Z., Ding, X. et al. A new stable collocation method for solving a class of nonlinear fractional delay differential equations. Numer Algor 85, 1123–1153 (2020). https://doi.org/10.1007/s11075-019-00858-9

DOI: https://doi.org/10.1007/s11075-019-00858-9