Abstract

This paper addresses the stability analysis and the estimation of the domain of attraction of the endemic equilibrium for a class of susceptible-exposed-infected-quarantined (SEIQ) epidemic models. First, we establish the global stability of the disease-free equilibrium and the local stability of the endemic equilibrium in the feasible region D of the model. Second, we use a geometric approach to prove the global stability of the endemic equilibrium in a positively invariant region \(D_s(\subset D)\). Furthermore, we estimate the domain of attraction of the endemic equilibrium with a sum of squares (SOS) optimization method, obtaining the optimal estimate by solving a semidefinite programming problem with SOS polynomial constraints. Finally, numerical simulations demonstrate the feasibility and effectiveness of the results.


1 Introduction

Since the susceptible-infected-susceptible (SIS) and susceptible-infected-removed (SIR) models were established in [1, 2], mathematical epidemiology has grown rapidly. A large variety of mathematical models [3–7] have been formulated as dynamical systems of differential equations and applied to analyze how fast infectious diseases spread and how to control them, such as the susceptible-infected-removed-susceptible (SIRS), susceptible-exposed-infected (SEI), susceptible-exposed-infected-susceptible (SEIS) and susceptible-exposed-infected-removed (SEIR) models. Quarantine [8, 9] has been widely used to control infectious diseases such as SARS, AIDS, H7N9, H5N6 and H1N1 and to prevent them from spreading broadly. Following the Kermack-McKendrick compartmental approach, we can introduce a class of quarantined individuals, denoted Q, consisting of people who are quarantined once they are found to be infected in the exposed or infectious stage. Epidemic models with quarantine have attracted increasing attention [10–15]. Hethcote et al. [10] investigated susceptible-infected-quarantined-susceptible (SIQS) and susceptible-infected-quarantined-removed (SIQR) epidemic models to study the effect of quarantine. Wang et al. [11] proposed a class of epidemic models with quarantine and message delivery. Quarantine models with multiple disease stages and with an imperfect vaccine were discussed in [12] and [13], respectively. Dobay et al. [14] studied an SIR model with quarantine by analyzing an epidemic of syphilis.

In view of the potentially dramatic social impact of epidemic diseases, investigating the feasible steady states of epidemic models and their stability properties is of great importance; in particular, such analysis helps forecast how an infection and the whole outbreak will develop. In recent years, the stability analysis of epidemic models with quarantine has attracted considerable attention [15–20]. Zhang et al. [15] introduced a class of deterministic and stochastic SIQS models and gave conditions for the global asymptotic stability of the disease-free equilibrium and of the unique endemic equilibrium. Liu et al. [16] discussed the stability of an SIQS model with transport-related infection and exit-entry screenings, and showed that the disease-free equilibrium is globally asymptotically stable when the basic reproduction number is less than unity, and that an endemic equilibrium is locally asymptotically stable when the reproduction number is greater than unity. Similar results were obtained in the following works. In [17, 18], the asymptotic dynamics of epidemic models with quarantine and isolation were studied. Sahu and Dhar [19] studied the dynamics of a susceptible-exposed-quarantined-infectious-hospitalized-recovered-susceptible (SEQIHRS) epidemic model and obtained a globally asymptotically stable disease-free equilibrium and a unique locally asymptotically stable endemic equilibrium. Zhao [20] analyzed the global dynamics of an SIQR model with pulse vaccination.

As the works above show, the local stability of the endemic equilibrium plays an important role in epidemiological systems, and investigating it inevitably raises the question of how to characterize the domain of attraction (DOA) of the endemic equilibrium. Obtaining the exact DOA of a nonlinear system is generally difficult [21], so estimating it (i.e., computing invariant subsets of the DOA) is a valuable problem. The fundamental approach is to find the largest sublevel set of a valid Lyapunov function that lies inside the DOA, which leads to an optimization problem. Many computational techniques have been used to solve it, for example Zubov's method [22], the trajectory reversing method [23], LaSalle's invariance principle [24] and LMI optimization methods [25–28]. In recent years, sum of squares (SOS) optimization [29–31], which combines SOS decompositions of polynomials with semidefinite programs (SDPs), has been successfully applied to estimate the DOA of nonlinear systems. Chesi et al. [32] were the first to employ SOS decompositions together with LMI methods to solve convex optimization problems. Since then, several results on stability and DOA estimation via SOS optimization have been presented (see, e.g., [31, 33, 34] and the references therein). Chesi [31] studied the estimation and control of the DOA using SOS programming and, for the first time, showed its application to a variety of nonlinear systems. Topcu et al. [33] computed bounds on the DOA of polynomial systems with the SOS method. Franzè et al. [34] estimated the DOA of a class of nonlinear polynomial time-delay systems with an SOS approach. Jarvis-Wloszek [30] obtained valuable results on the local stability of polynomial systems based on SOS optimization, and Tan [35] used SOS programming to address nonlinear control problems in his Ph.D. dissertation.

In light of these investigations, how to estimate the DOA of epidemic models with suitable optimization methods has become a main research challenge, because it is important to understand how the spread of an infection depends on the initial population distribution. Zhang et al. [36] formulated an LMI optimization problem with polynomial constraints to estimate the DOA of a class of SIRS epidemic models. Matallana et al. [37] used a Lyapunov-based approach to estimate the DOA of a class of SIR models with isolation of infected individuals. Li et al. [38] used LMI methods based on the theory of moments to estimate the DOA of an SIR epidemic model. Jing et al. [39] and Chen et al. [40] estimated the DOA of SIRS and SEIR models, respectively, via the SOS method, and their results showed that SOS optimization is more effective for estimating the DOA of some epidemic models.

Our work is motivated by the results presented in the literature above. Solutions to the problem investigated here were given for SIRS and SEIR models in [37, 40]; here we extend those results to SEIQ epidemic models. In this paper, we investigate the stability of a class of susceptible-exposed-infected-quarantined (SEIQ) epidemic models, built from the characteristics of infectious diseases at different stages and the effects of quarantine, and estimate the DOA of the locally stable endemic equilibrium with the SOS optimization method. Using LaSalle's invariance principle and the geometric approach of [41, 42], we analyze the stability of the equilibria of the SEIQ model: the global stability of the disease-free equilibrium, the local stability of the endemic equilibrium, and the global stability of the endemic equilibrium in a positively invariant region. Furthermore, we use convex optimization via the SOS method to obtain the largest estimate of the DOA of the locally stable endemic equilibrium by finding the largest level set of a Lyapunov function.

2 Stability analysis of an SEIQ epidemic model

Following these works, in this section we consider an SEIQ epidemic model that divides the total population into four classes: susceptible (S), exposed (E), infected (I) and quarantined (Q). In our model, some exposed and infected individuals are quarantined once they are found to be infected, and some exposed and quarantined individuals recover. Figure 1 shows a diagram of the interactions between S, E, I and Q in the SEIQ epidemic model. Here S(t), E(t), I(t) and Q(t) are the numbers of susceptible, exposed, infectious and quarantined individuals in the total population N(t) at time t, and \(\beta SI\) is the bilinear incidence rate, where \(\beta \) is the transmission rate at which susceptible individuals enter the exposed class; A is the constant recruitment rate of the population, \(\mu \) is the natural mortality rate of all classes, \(\alpha \) is the disease-induced mortality rate of infected and quarantined individuals, \(\varepsilon \) is the rate at which exposed individuals become infective, c is the rate at which infected individuals recover and return to the susceptible class, and \(\sigma _1\) and \(\sigma _2\) are the quarantine rates of exposed and infected individuals, respectively. \(\gamma _1\), \(\gamma _2\) and \(\gamma _3\) are the recovery rates of exposed, infected and quarantined individuals, respectively.

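The diagram leads to the following SEIQ model:

\[ \begin{aligned} \dot S &= A - \beta S I - \mu S + c I,\\ \dot E &= \beta S I - (\mu + \varepsilon + \sigma _1 + \gamma _1) E,\\ \dot I &= \varepsilon E - (\mu + \alpha + c + \sigma _2 + \gamma _2) I,\\ \dot Q &= \sigma _1 E + \sigma _2 I - (\mu + \alpha + \gamma _3) Q, \end{aligned} \tag{1} \]

where all the parameters are strictly positive constants.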
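
As a rough numerical illustration, the sketch below integrates model (1) with a fixed-step fourth-order Runge-Kutta scheme. It assumes that (1) reads \(\dot S = A - \beta SI - \mu S + cI\), \(\dot E = \beta SI - (\mu+\varepsilon+\sigma_1+\gamma_1)E\), \(\dot I = \varepsilon E - (\mu+\alpha+c+\sigma_2+\gamma_2)I\), \(\dot Q = \sigma_1 E + \sigma_2 I - (\mu+\alpha+\gamma_3)Q\), which agrees with the parameter descriptions above and with the values of \(R_0\) and the equilibria reported in Examples I and II. The parameter values are those of Example I; the names `SEIQParams`, `seiq_rhs` and `rk4` are illustrative and not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SEIQParams:
    A: float        # recruitment rate
    beta: float     # transmission rate
    mu: float       # natural mortality rate
    alpha: float    # disease-induced mortality rate (I and Q)
    eps: float      # rate E -> I
    c: float        # rate I -> S
    sigma1: float   # quarantine rate of E
    sigma2: float   # quarantine rate of I
    gamma1: float   # recovery rate of E
    gamma2: float   # recovery rate of I
    gamma3: float   # recovery rate of Q


def seiq_rhs(p, x):
    """Right-hand side of the (assumed) SEIQ model (1)."""
    S, E, I, Q = x
    M = p.mu + p.eps + p.sigma1 + p.gamma1           # total exit rate from E
    N = p.mu + p.alpha + p.c + p.sigma2 + p.gamma2   # total exit rate from I
    return (
        p.A - p.beta * S * I - p.mu * S + p.c * I,
        p.beta * S * I - M * E,
        p.eps * E - N * I,
        p.sigma1 * E + p.sigma2 * I - (p.mu + p.alpha + p.gamma3) * Q,
    )


def rk4(p, x0, t_end, dt=0.01):
    """Classical fixed-step RK4; returns the state at time t_end."""
    def shift(x, k, h):
        return tuple(xi + h * ki for xi, ki in zip(x, k))

    x = tuple(x0)
    for _ in range(round(t_end / dt)):
        k1 = seiq_rhs(p, x)
        k2 = seiq_rhs(p, shift(x, k1, dt / 2))
        k3 = seiq_rhs(p, shift(x, k2, dt / 2))
        k4 = seiq_rhs(p, shift(x, k3, dt))
        x = tuple(xi + dt / 6 * (a + 2 * b + 2 * c + d)
                  for xi, a, b, c, d in zip(x, k1, k2, k3, k4))
    return x


# Example I parameter values.
example1 = SEIQParams(A=2, beta=0.5, mu=0.25, alpha=0.5, eps=0.5, c=0.25,
                      sigma1=0.125, sigma2=0.25, gamma1=0.125, gamma2=0.25,
                      gamma3=0.5)
final = rk4(example1, (7.0, 0.5, 0.2, 0.0), t_end=200.0)
print([round(v, 6) for v in final])
```

Starting near the endemic equilibrium, the trajectory should settle at approximately \((6, 6/11, 2/11, 1/11)\); the first three values are the equilibrium reported in Example I, and \(Q^* = 1/11\) follows from the Q-equation.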

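From (1) and \(N(t) = S(t) + E(t) + I(t) + Q(t)\), the derivative of N is

\[ \dot N = A - \mu N - \gamma _1 E - (\alpha + \gamma _2) I - (\alpha + \gamma _3) Q \le A - \mu N, \]

with equality only when \(E=I=Q=0\). Hence \(\limsup _{t \rightarrow \infty } N(t) \le \frac{A}{\mu }\), and \(\dot{N} < 0\) whenever \(N > A/\mu \), so the feasible region D of (1) is

\[ D = \left\{ (S,E,I,Q) \in {\mathbb {R}}^4_{+} : S + E + I + Q \le \frac{A}{\mu } \right\} . \]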

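Every solution of (1) that starts in D remains in D, and solutions starting outside D approach it, so D is a positively invariant set for system (1). We define the basic reproduction number \(R_{0}\) as

\[ R_0 = \frac{\beta A \varepsilon }{\mu (\mu + \varepsilon + \sigma _1 + \gamma _1)(\mu + \alpha + c + \sigma _2 + \gamma _2)}, \]

and it is then straightforward to obtain the equilibria \(P_{0}\left( A/\mu ,0,0,0\right) \) and \( P^* \left( {S^*, E^*, I^*, Q^* } \right) \), where

\[ S^* = \frac{A}{\mu R_0},\quad I^* = \frac{\varepsilon \mu S^* (R_0 - 1)}{(\mu + \varepsilon + \sigma _1 + \gamma _1)(\mu + \alpha + c + \sigma _2 + \gamma _2) - c\varepsilon },\quad E^* = \frac{(\mu + \alpha + c + \sigma _2 + \gamma _2) I^*}{\varepsilon },\quad Q^* = \frac{\sigma _1 E^* + \sigma _2 I^*}{\mu + \alpha + \gamma _3}. \]

Since \(\mu + \varepsilon + \sigma _1 + \gamma _1 > \varepsilon \) and \(\mu + \alpha + c + \sigma _2 + \gamma _2 > c\), the denominator of \(I^*\) is positive, so \(I^* > 0\) if and only if \(R_0 > 1\). Hence, when \(R_0 \le 1\), system (1) has the unique equilibrium \(P_{0}\), i.e., the disease-free equilibrium; when \(R_0 > 1\), it has the equilibrium \(P_{0}\) and a unique endemic equilibrium \(P^*\in D_s\), where \(D_s\) is a positively invariant subset of D.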
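
The threshold \(R_0\) and the endemic equilibrium can be checked in exact rational arithmetic. The sketch below assumes \(R_0 = \beta A\varepsilon/(\mu M N)\) with \(M = \mu+\varepsilon+\sigma_1+\gamma_1\) and \(N = \mu+\alpha+c+\sigma_2+\gamma_2\), and obtains \(P^*\) by setting the right-hand side of (1) to zero; it uses Python's `fractions.Fraction`, and the function name `r0_and_equilibria` is illustrative. With the parameter sets of Examples I and II it gives \(R_0 = 4/3\) and the endemic states \((6, 6/11, 2/11)\) and \((6, 6/5, 2/5)\) reported there, plus the \(Q^*\) values the examples do not list.

```python
from fractions import Fraction as F


def r0_and_equilibria(A, beta, mu, alpha, eps, c, sigma1, sigma2,
                      gamma1, gamma2, gamma3):
    """Return (R0, P0, P*) for the assumed SEIQ model (1); P* is None if R0 <= 1."""
    M = mu + eps + sigma1 + gamma1            # exit rate from E
    N = mu + alpha + c + sigma2 + gamma2      # exit rate from I
    R0 = beta * A * eps / (mu * M * N)
    P0 = (A / mu, 0, 0, 0)
    if R0 <= 1:
        return R0, P0, None
    S = A / (mu * R0)                         # equivalently M*N / (beta*eps)
    I = eps * (A - mu * S) / (M * N - c * eps)  # S-equation with beta*S = M*N/eps
    E = N * I / eps                           # from the I-equation
    Q = (sigma1 * E + sigma2 * I) / (mu + alpha + gamma3)
    return R0, P0, (S, E, I, Q)


example1 = dict(A=F(2), beta=F(1, 2), mu=F(1, 4), alpha=F(1, 2), eps=F(1, 2),
                c=F(1, 4), sigma1=F(1, 8), sigma2=F(1, 4), gamma1=F(1, 8),
                gamma2=F(1, 4), gamma3=F(1, 2))
example2 = dict(A=F(4), beta=F(1, 2), mu=F(1, 2), alpha=F(1, 6), eps=F(1, 2),
                c=F(1, 2), sigma1=F(0), sigma2=F(1, 6), gamma1=F(0),
                gamma2=F(1, 6), gamma3=F(1, 2))

for name, params in [("Example I", example1), ("Example II", example2)]:
    R0, P0, Pstar = r0_and_equilibria(**params)
    print(name, "R0 =", R0, "P* =", tuple(str(v) for v in Pstar))
```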

Now we discuss the stability of the equilibria \(P_{0}\) and \(P^* \) of system (1). The main results are as follows.

Theorem 1

When \(R_0 \le 1\), the unique disease-free equilibrium \(P_{0}\left( A/\mu ,0,0,0\right) \) is globally asymptotically stable in D, and when \(R_{0}>1\), \(P_{0}\) is unstable in D.

Proof

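Consider the Lyapunov function

\[ V = \varepsilon E + (\mu + \varepsilon + \sigma _1 + \gamma _1) I . \]

Along solutions of (1), its derivative is

\[ \dot V = \varepsilon \beta S I - (\mu + \varepsilon + \sigma _1 + \gamma _1)(\mu + \alpha + c + \sigma _2 + \gamma _2) I = (\mu + \varepsilon + \sigma _1 + \gamma _1)(\mu + \alpha + c + \sigma _2 + \gamma _2)\left( \frac{\mu R_0 S}{A} - 1\right) I . \]

When \(R_0 \le 1\), since \(S \le A/\mu \) in D, we have \(\dot V \le 0\), and \(\dot V = 0\) only if \(I = 0\) (or, when \(R_0 = 1\), \(S = A/\mu \)); the largest invariant set in \(\{\dot V = 0\}\) is \(\{P_0\}\). By LaSalle's invariance principle, \(P_{0}\left( A/\mu ,0,0,0\right) \) is globally asymptotically stable in D.

When \(R_{0}>1\), \(\dot V > 0\) whenever \(I > 0\) and \(S > \frac{A}{R_{0}\mu }\). Since \(\frac{A}{R_0 \mu } < \frac{A}{\mu }\), this holds at every point of D near \(P_0\) with \(I > 0\), so solutions starting in D near \(P_{0}\) move away from \(P_{0}\); hence \(P_{0}\) is unstable in D. \(\square \)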

Remark 1

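The Routh-Hurwitz stability criterion can also be used to obtain the local version of Theorem 1. At \(P_{0}\), the Jacobian matrix of (1) is

\[ J(P_0) = \begin{pmatrix} -\mu & 0 & c - \frac{\beta A}{\mu } & 0\\ 0 & -M & \frac{\beta A}{\mu } & 0\\ 0 & \varepsilon & -N & 0\\ 0 & \sigma _1 & \sigma _2 & -(\mu + \alpha + \gamma _3) \end{pmatrix}, \]

where \(M = \mu + \varepsilon + {\sigma _1} + {\gamma _1}\) and \(N = \mu + \alpha + c + {\sigma _2} + {\gamma _2}\), and the eigenvalue equation is

\[ (\lambda + \mu )(\lambda + \mu + \alpha + \gamma _3)\left[ \lambda ^2 + (M + N)\lambda + MN(1 - R_0)\right] = 0 . \]

Hence, when \(R_{0}<1\), all eigenvalues have negative real parts and \(P_{0}\left( A/\mu ,0,0,0\right) \) is locally asymptotically stable, while when \(R_{0}>1\) the quadratic factor has a positive root and \(P_{0}\) is unstable in D. Unlike the Lyapunov argument above, this criterion yields only local stability.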
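
For model (1) in the form used throughout, expanding \(\det(\lambda I - J(P_0))\) along its first and last columns gives the factors \(\lambda+\mu\) and \(\lambda+\mu+\alpha+\gamma_3\) together with the quadratic \(\lambda^2 + (M+N)\lambda + MN(1-R_0)\) (our computation). The sketch below, with the illustrative name `p0_eigenvalues`, computes all four eigenvalues with `cmath`. With Example I's values \(M = 1\), \(N = 3/2\) and \(R_0 = 4/3\) one eigenvalue is positive, so \(P_0\) is unstable; a hypothetical \(R_0 = 0.5\) with the same M and N makes every real part negative.

```python
import cmath


def p0_eigenvalues(mu, alpha, gamma3, M, N, R0):
    """Eigenvalues of J(P0): -mu, -(mu+alpha+gamma3) and the two roots of
    lambda**2 + (M + N)*lambda + M*N*(1 - R0) = 0."""
    b = M + N
    c0 = M * N * (1 - R0)
    disc = cmath.sqrt(b * b - 4 * c0)
    return [complex(-mu), complex(-(mu + alpha + gamma3)),
            (-b + disc) / 2, (-b - disc) / 2]


# Example I: M = 1, N = 3/2, R0 = 4/3.
eigs = p0_eigenvalues(mu=0.25, alpha=0.5, gamma3=0.5, M=1.0, N=1.5, R0=4 / 3)
lead = max(e.real for e in eigs)
print("largest real part:", lead)   # positive, so P0 is unstable

# Same rates with a hypothetical R0 = 0.5 < 1.
stable = p0_eigenvalues(mu=0.25, alpha=0.5, gamma3=0.5, M=1.0, N=1.5, R0=0.5)
print("all real parts negative:", all(e.real < 0 for e in stable))
```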

Theorem 2

When \(R_0 > 1\), the unique endemic equilibrium \( P^* \left( {S^*, E^*, I^*, Q^* } \right) \) is locally asymptotically stable in D for system (1).

Proof

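First, the Jacobian matrix of system (1) at \( P^* \left( {S^*, E^*, I^*, Q^* } \right) \) is

\[ J = \begin{pmatrix} -\beta I^* - \mu & 0 & c - \beta S^* & 0\\ \beta I^* & -M & \beta S^* & 0\\ 0 & \varepsilon & -N & 0\\ 0 & \sigma _1 & \sigma _2 & -(\mu + \alpha + \gamma _3) \end{pmatrix}, \]

with M and N as in Remark 1. Using \(\beta \varepsilon S^* = MN\), the eigenvalue equation \(\det ( {\lambda I - J})= 0\) becomes

\[ (\lambda + \mu + \alpha + \gamma _3)\left( \lambda ^3 + a_1 \lambda ^2 + a_2 \lambda + a_3\right) = 0, \tag{8} \]

with

\[ a_1 = M + N + \beta I^* + \mu ,\quad a_2 = (M + N)(\beta I^* + \mu ),\quad a_3 = \beta I^* (MN - c\varepsilon ). \]

For Eq. (8), since \({\mu +\alpha + {\gamma _3}}>0\), it suffices to discuss the equation

\[ \lambda ^3 + a_1 \lambda ^2 + a_2 \lambda + a_3 = 0 . \]

When \(R_0 > 1\), we have \(I^* > 0\); moreover, \(M > \varepsilon \) and \(N > c\) give \(MN - c\varepsilon > 0\). Hence \(a_1 > 0\) and \(a_3 > 0\), and

\[ a_1 a_2 > (M + N)^2 \beta I^* \ge 4MN\beta I^* > \beta I^* (MN - c\varepsilon ) = a_3 . \]

According to the Routh-Hurwitz stability criterion, all roots of (8) have negative real parts, so \( P^* \left( {S^*, E^*, I^*, Q^* } \right) \) is locally asymptotically stable in D for system (1) when \(R_{0}>1\). \(\square \)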
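
The Routh-Hurwitz conditions at \(P^*\) can be checked exactly for Example I. The sketch below assumes the cubic coefficients \(a_1 = M+N+\beta I^*+\mu\), \(a_2 = (M+N)(\beta I^*+\mu)\), \(a_3 = \beta I^*(MN - c\varepsilon)\) derived from model (1) with \(\beta\varepsilon S^* = MN\), and cross-checks them against \(\det(\lambda I - J)\) for the \((S, E, I)\) block of the Jacobian at four values of \(\lambda\), which pins down a cubic. The helper names `det3` and `endemic_cubic` are illustrative.

```python
from fractions import Fraction as F


def det3(m):
    """Determinant of a 3x3 matrix (cofactor expansion along the first row)."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def endemic_cubic(beta, mu, eps, c, M, N, I_star):
    """Coefficients of lambda^3 + a1 lambda^2 + a2 lambda + a3 at P* and the
    Routh-Hurwitz test a1 > 0, a3 > 0, a1*a2 > a3 (uses beta*eps*S* = M*N)."""
    a1 = M + N + beta * I_star + mu
    a2 = (M + N) * (beta * I_star + mu)
    a3 = beta * I_star * (M * N - c * eps)
    return (a1, a2, a3), (a1 > 0 and a3 > 0 and a1 * a2 > a3)


# Example I: beta = 1/2, mu = c = 1/4, eps = 1/2, M = 1, N = 3/2, P* = (6, 6/11, 2/11).
beta, mu, eps, c, M, N = F(1, 2), F(1, 4), F(1, 2), F(1, 4), F(1), F(3, 2)
S_star, I_star = F(6), F(2, 11)
(a1, a2, a3), hurwitz = endemic_cubic(beta, mu, eps, c, M, N, I_star)

# Cross-check: the cubic must equal det(lambda*Id - J) for the (S, E, I) block of J(P*).
J = [[-beta * I_star - mu, 0, c - beta * S_star],
     [beta * I_star, -M, beta * S_star],
     [0, eps, -N]]
for lam in (F(0), F(1), F(-3, 7), F(5, 2)):
    char = det3([[(lam if i == j else 0) - J[i][j] for j in range(3)]
                 for i in range(3)])
    assert char == lam**3 + a1 * lam**2 + a2 * lam + a3
print(a1, a2, a3, hurwitz)
```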

Remark 2

By Theorem 2, \( P^*\) is locally asymptotically stable in D, so its basin of attraction is an open subset of the feasible region D containing a neighborhood of \( P^*\). It is then natural to ask whether \( P^*\) is globally asymptotically stable with respect to an open subset \(D_s (\subset D)\) in which \(P^*\) is the unique equilibrium.

In [41], Li and Muldowney proposed a geometric method for this global stability problem based on the Bendixson and Dulac criteria; its core result is as follows. Consider a dynamical system \(\dot{x} =f(x)\), where \(f: \varLambda \rightarrow {{\mathbb {R}}^n}\) is defined on a region \(\varLambda \subset {{\mathbb {R}}^n}\), with initial condition \(x(0)=x_0\) and solutions \(x(t,x_0)\). Assume that \(\bar{x}\) is a locally asymptotically stable equilibrium in \(\varLambda \). Lemma 1 gives the basic criterion under which the local stability of \(\bar{x}\) implies its global stability with respect to \(\varLambda \).

Lemma 1

[41] Assume that the region \(\varLambda \) is simply connected and that there is a compact absorbing set \(\varLambda _0 (\subset \varLambda )\) in which \(\bar{x}\) is the only equilibrium. If the quantity \(\bar{q}_2\) satisfies

\[ \bar{q}_2 = \limsup _{t \rightarrow \infty } \sup _{x_0 \in \varLambda _0} \frac{1}{t}\int _0^t \rho \big ( B(x(s,x_0))\big ) \,\mathrm{d}s < 0, \]

where \(B = {Z_f}{Z^{ - 1}} + Z\frac{{\partial {f^{[2]}}}}{{\partial x}}{Z^{- 1}}\), Z(x) is a nonsingular matrix-valued function, \({Z_f}\) is the matrix with entries \(\frac{{\partial {Z_{ij}}}}{{\partial x}}f\) (the derivatives of \(Z_{ij}\) along f), \(\rho (B) = \mathop {\lim }\limits _{h \rightarrow {0^ + }} \frac{{\left\| {{I_0} + hB} \right\| - 1}}{h}\) is the Lozinskiĭ measure of B with respect to a vector norm \(\left\| \cdot \right\| \) (\(I_0\) is the identity matrix), and \(\frac{{\partial {f^{[2]}}}}{{\partial x}}\) is the second additive compound matrix of \(\frac{{\partial {f}}}{{\partial x}}\), then the equilibrium \(\bar{x}\) is globally asymptotically stable in \(\varLambda _0\).
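
For n = 3, the second additive compound matrix has a well-known closed form, and for the max-norm \(\|x\|_\infty = \max_i |x_i|\) the Lozinskiĭ measure reduces to \(\max_i \big(b_{ii} + \sum_{j\ne i}|b_{ij}|\big)\). The sketch below implements both and compares the closed form with the defining limit at a small h. The max-norm is our choice for illustration (the norm used in the proof of Theorem 3 is not reproduced here), and the example matrix is the Jacobian of the \((S, E, I)\) subsystem at Example I's endemic equilibrium, computed by us from model (1).

```python
def second_additive_compound(a):
    """Second additive compound A^[2] of a 3x3 matrix A (Li and Muldowney)."""
    return [
        [a[0][0] + a[1][1], a[1][2], -a[0][2]],
        [a[2][1], a[0][0] + a[2][2], a[0][1]],
        [-a[2][0], a[1][0], a[1][1] + a[2][2]],
    ]


def lozinskii_inf(b):
    """Lozinskii measure for the max-norm: max_i (b_ii + sum_{j != i} |b_ij|)."""
    n = len(b)
    return max(b[i][i] + sum(abs(b[i][j]) for j in range(n) if j != i)
               for i in range(n))


def lozinskii_inf_by_definition(b, h=1e-8):
    """(||I + h B||_inf - 1) / h for small h, i.e. the defining limit."""
    n = len(b)
    norm = max(sum(abs((1.0 if i == j else 0.0) + h * b[i][j]) for j in range(n))
               for i in range(n))
    return (norm - 1.0) / h


# Jacobian of the (S, E, I) subsystem at Example I's endemic equilibrium.
J = [[-15 / 44, 0.0, -11 / 4],
     [1 / 11, -1.0, 3.0],
     [0.0, 0.5, -1.5]]
J2 = second_additive_compound(J)
print(lozinskii_inf(J2), lozinskii_inf_by_definition(J2))
```

For this norm the measure of \(J^{[2]}\) itself is positive, which illustrates why the choice of Z and of the norm matters when bounding \(\bar{q}_2\).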

Using Lemma 1, Li and Muldowney [41] proved the global stability of the endemic equilibrium of an SEIR epidemic model. Huan et al. [42] gave sufficient conditions for the global stability of a dynamic model of hepatitis B via the geometric method. Lan [43] also obtained global stability results for several epidemic models using Lemma 1 in her dissertation. Zhou and Cui [45] proved the global stability of a susceptible-exposed-infectious-vaccinated (SEIV) model via the Poincaré-Bendixson criterion. We now use these results to show that the endemic equilibrium \(P^*\) is globally asymptotically stable in \(D_s\).

Theorem 3

When \(R_0 > 1\), the endemic equilibrium \(P^*\) of system (1) is globally asymptotically stable in \(D_s (\subset D)\).

Proof

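First, there exists a compact absorbing set \(D_s (\subset D)\) in which \(P^*\) is the only equilibrium, so by Lemma 1 it suffices to prove that \(\bar{q}_2<0\). Because the first three equations of (1) do not contain the state Q, we consider the subsystem

\[ \begin{aligned} \dot S &= A - \beta S I - \mu S + c I,\\ \dot E &= \beta S I - (\mu + \varepsilon + \sigma _1 + \gamma _1) E,\\ \dot I &= \varepsilon E - (\mu + \alpha + c + \sigma _2 + \gamma _2) I. \end{aligned} \tag{14} \]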

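Then the Jacobian matrix of system (14) at a point (S, E, I) is

\[ J = \begin{pmatrix} -\beta I - \mu & 0 & c - \beta S\\ \beta I & -M & \beta S\\ 0 & \varepsilon & -N \end{pmatrix}, \]

with M and N as in Remark 1, and its second additive compound matrix is

\[ J^{[2]} = \begin{pmatrix} -\beta I - \mu - M & \beta S & \beta S - c\\ \varepsilon & -\beta I - \mu - N & 0\\ 0 & \beta I & -M - N \end{pmatrix}. \]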

Choosing \(Z = \mathrm{diag}\left( 1, \frac{E^*}{I^*}, \frac{E^*}{I^*}\right) \), we have

and one has

where

The Lozinskiĭ measure \(\rho (B)\) with respect to the vector norm \(\left\| \cdot \right\| \) in \({\mathbb {R}}^3 \cong {\mathbb {R}}^{\binom{3}{2}}\) can be estimated as follows

where

According to system (14), we know that

and there exists \(t^*\) such that \(\beta S(t)-c>0\) for all \(t > t^*\); then

So for \(t > t^*\), we get

Substituting (18) into the quantity \(\bar{q}_2\), we have

Because subsystem (14) is uniformly persistent and its solutions are bounded, \(\frac{1}{t}\ln {{E(t)}} < \frac{\mu }{2}\) for all sufficiently large \(t>t^*\); then

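which shows that \(\bar{q}_2 < 0\). Hence the equilibrium \((S^*,E^*,I^*)\) is globally asymptotically stable for system (14) in \(D_s \). Next, we solve the remaining equation of (1),

\[ \dot Q = \sigma _1 E(t) + \sigma _2 I(t) - kQ, \tag{20} \]

whose solution is

\[ Q(t) = Q(0)e^{-kt} + \int _0^t e^{-k(t-s)}\big ( \sigma _1 E(s) + \sigma _2 I(s)\big ) \,\mathrm{d}s, \]

where

\[ k = \mu + \alpha + \gamma _3 > 0 . \]

Since \(E(t) \rightarrow E^*\) and \(I(t) \rightarrow I^*\), this implies that, as \(t \rightarrow \infty \),

\[ Q(t) \rightarrow \frac{\sigma _1 E^* + \sigma _2 I^*}{\mu + \alpha + \gamma _3} = Q^* . \]

Therefore, when \(R_0 > 1\), the endemic equilibrium \(P^*\) of system (1) is globally asymptotically stable in \(D_s (\subset D)\). \(\square \)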

Remark 3

By Theorems 2 and 3, the endemic equilibrium \(P^*\) of system (1) is globally asymptotically stable in \(D_s \) and locally asymptotically stable in D, where \(D_s\) is a compact absorbing subset of D in which \(P^*\) is the only equilibrium. It is therefore natural to ask how to characterize as large a stable region \(D_s\) containing \(P^*\) as possible. The estimation of the domain of attraction \(D_s\) of \(P^*\) is discussed in the next section.

3 Estimation of the domain of attraction for the endemic equilibrium

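In system (1), the variable Q does not appear in the first three equations, and Q depends only on E and I, so it suffices to analyze the behavior of S, E and I in subsystem (14); Q is then determined by them. For subsystem (14), the feasible region \(D_0\) is

\[ D_0 = \left\{ (S,E,I) \in {\mathbb {R}}^3_{+} : S + E + I \le \frac{A}{\mu } \right\} , \]

and when \(R_{0}>1\), the locally stable endemic equilibrium in \(D_0\) is \( P^*_0 \left( {S^*, E^*, I^* } \right) \). Let \( x_1 = S - S^*\), \(x_2 = E - E^* \) and \(x_3 = I - I^* \); then system (14) can be rewritten as

\[ \begin{aligned} \dot x_1 &= -(\beta I^* + \mu ) x_1 - (\beta S^* - c) x_3 - \beta x_1 x_3,\\ \dot x_2 &= \beta I^* x_1 - (\mu + \varepsilon + \sigma _1 + \gamma _1) x_2 + \beta S^* x_3 + \beta x_1 x_3,\\ \dot x_3 &= \varepsilon x_2 - (\mu + \alpha + c + \sigma _2 + \gamma _2) x_3, \end{aligned} \tag{21} \]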

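The endemic equilibrium \( P^*_0 \) is thereby moved to the origin (0, 0, 0), and the feasible region becomes

\[ D_0 = \left\{ X \in {\mathbb {R}}^3 : x_1 \ge -S^*,\ x_2 \ge -E^*,\ x_3 \ge -I^*,\ x_1 + x_2 + x_3 \le \frac{A}{\mu } - S^* - E^* - I^* \right\} , \]

where \(X=({{x_1},{x_2},{x_3}})\). Let \(V_0(x_1,x_2,x_3)\) be a Lyapunov function defined on \(D_0\), positive definite with respect to the origin. Then any bounded connected region containing the origin of the form

\[ \Omega _c = \left\{ X \in D_0 : V_0(X) \le c \right\} \quad \text{with } \dot V_0(X) < 0 \text{ for all } X \in \Omega _c \setminus \{0\} \]

is contained in the domain of attraction of (21), i.e., it is an estimate of the DOA.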
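
The sublevel-set idea can be illustrated without an SDP solver. The sketch below is not the SOS procedure used in this paper: it fixes a quadratic \(V_0(X) = X^{\top} P X\), where P solves the Lyapunov equation \(A^{\top}P + PA = -I\) for the linearization A of the shifted Example I system at the origin, and then bisects on the level c, accepting a level only if \(\dot V_0 < 0\) and membership in the feasible region hold at a fixed random sample of points with \(V_0 \le c\). Sampling cannot certify anything, so `c_max` is only a heuristic estimate; the shifted vector field and the region bounds are our own computation from (1) with Example I's parameters, and all names (`lyapunov_matrix`, `level_ok`, ...) are illustrative.

```python
import math
import random


# Shifted Example I subsystem (our computation): x = (S - 6, E - 6/11, I - 2/11).
def f(x):
    x1, x2, x3 = x
    return (-15 / 44 * x1 - 11 / 4 * x3 - 0.5 * x1 * x3,
            1 / 11 * x1 - x2 + 3 * x3 + 0.5 * x1 * x3,
            0.5 * x2 - 1.5 * x3)


A = [[-15 / 44, 0.0, -11 / 4], [1 / 11, -1.0, 3.0], [0.0, 0.5, -1.5]]  # Df(0)


def solve(mat, rhs):
    """Dense Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [b] for row, b in zip(mat, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            fac = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= fac * m[col][k]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def lyapunov_matrix(a):
    """Solve A^T P + P A = -I; unknown P[i][j] is stored at index 3*i + j."""
    K = [[0.0] * 9 for _ in range(9)]
    for i in range(3):
        for j in range(3):
            for k in range(3):
                K[3 * i + j][3 * k + j] += a[k][i]  # (A^T P)_ij = sum_k A_ki P_kj
                K[3 * i + j][3 * i + k] += a[k][j]  # (P A)_ij   = sum_k P_ik A_kj
    p = solve(K, [-1.0 if i == j else 0.0 for i in range(3) for j in range(3)])
    return [p[0:3], p[3:6], p[6:9]]


P = lyapunov_matrix(A)


def quad(x, y):
    """Bilinear form x^T P y."""
    return sum(x[i] * P[i][j] * y[j] for i in range(3) for j in range(3))


def in_region(x):
    """Feasible region of the shifted Example I system."""
    return x[0] >= -6 and x[1] >= -6 / 11 and x[2] >= -2 / 11 and sum(x) <= 14 / 11


# Fixed sample: random directions scaled so that V(u) = 1, plus radial fractions.
rng = random.Random(0)
units = []
for _ in range(400):
    d = [rng.gauss(0.0, 1.0) for _ in range(3)]
    s = math.sqrt(quad(d, d))
    units.append([di / s for di in d])
radial = [0.2, 0.4, 0.6, 0.8, 1.0]


def level_ok(c):
    """Check Vdot = 2 x^T P f(x) < 0 and feasibility at sampled x with V(x) = r^2 c."""
    for u in units:
        for r in radial:
            x = [r * math.sqrt(c) * ui for ui in u]
            if 2 * quad(x, f(x)) >= 0 or not in_region(x):
                return False
    return True


lo, hi = 0.0, 1e-3
while level_ok(hi) and hi < 1e6:   # grow the level until a check fails
    lo, hi = hi, 2 * hi
for _ in range(30):                # bisect between the last pass and the first fail
    mid = (lo + hi) / 2
    lo, hi = (mid, hi) if level_ok(mid) else (lo, mid)
c_max = lo
print("heuristic level estimate:", c_max)
```

Unlike the SOS approach, this keeps the shape of \(V_0\) fixed and only scales the level, which is exactly the limitation that the iterative search below is designed to remove.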

Following the expanding interior algorithm based on SOS programming [30], we first restrict \(V_0(x)\in \mathfrak {R}_3\) with \(V_0(0)=0\), where \( \mathfrak {R}_3\) is the set of all polynomials in three variables with real coefficients, and we define a variable-sized region

\[ P_\varrho = \left\{ X \in {\mathbb {R}}^3 : P(x_1,x_2,x_3) \le \varrho \right\} , \]

where \(P\left( {{x_1},{x_2},{x_3}} \right) \in {\varSigma _3}\) satisfies the Lyapunov conditions and \({\varSigma _3}\) is the set of all sum of squares polynomials in three variables. Now we assume

then \(P_\varrho \subset D_0\).

We can now state the SOS-based optimization problem for estimating the DOA: the task is to maximize \(\varrho \) subject to the following constraints

Replacing the constraints \(({x_1},{x_2},{x_3}) \ne 0\) by \(l_1\left( {x_1},{x_2},{x_3}\right) \ne 0\) and \(l_2\left( {{x_1},{x_2},{x_3}} \right) \ne 0\) with \(l_1, l_2 \in \varSigma _3\), (22) can be rewritten as

Using the Positivstellensatz [29, 30], (23) can be converted into the following optimization problem with equality constraints,

where the s's and l's are sum of squares polynomials, all of even degree, \( d_{V_0}\) is even, \( d_{V_0}= d_{l_1 }\), \( \deg (ps_6 ) \ge d_{V_0}\), \( \deg (V{_0}s_8 ) \ge \deg (\dot{V_0}s_9 )\) and \( \deg ({V_0}s_8 ) \ge d_{l_2 }\).

To solve (24) with the SOSTOOLS toolbox [44], we choose \(k_1 = k_2 = k_3 = 1\), set \(s_2 = l_1\) and factor \(l_1\) out of \(s_1\), set \(s_3 = s_4 = 0\) and factor out \((V_0 - 1)\), and set \(s_{10} = 0\) and factor out \(l_2\); this yields

The expanding interior algorithm [30] is used to solve (25). Let i be the iteration index. We choose an initial Lyapunov function \(V^{(0)}_0 \in \mathfrak {R}_3\), set \(\varrho ^{(0)}=0\), and fix a tolerance \(\varpi \) and the degrees of \(V_0,l_1 ,l_2 ,s_6 ,s_8 ,s_9 \). At iteration i, with \(V_0=V_0^{(i-1)}\), \(s_8=s_8^{(i)}\) and \(s_9=s_9^{(i)}\), we solve the line search (25) on \(\varrho \), where \({s_6} \in {\varSigma _{3,{d_{{s_6}}}}}\), \({s_8} \in {\varSigma _{3,{d_{{s_8}}}}}\), \({s_9} \in {\varSigma _{3,{d_{{s_9}}}}}\), \({V_0} \in {\mathfrak {R}_3}\) and \(V_0(0) = 0\). After finitely many iterations, once \(|\varrho ^{(i)}-\varrho ^{(i - 1)}|\le \varpi \), \( \varrho ^{(i)}\) and \(V_0^{(i)}\) are the optimal solutions of (25), and the set \(D_{\varrho }:= {\{({x_1},{x_2},{x_3}) \in {\mathbb {R}}^3 | V_0^{(i)} ({x_1},{x_2},{x_3}) \le 1\}}\) is the optimal estimate of the DOA for the endemic equilibrium \( P^*_0 \) of (21).
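
The alternation described above can be summarized by a schematic outer loop. This is a sketch only: `solve_level_step` and `solve_lyapunov_step` are hypothetical placeholders for the two SOS programs (one with \(V_0\) fixed, one with the multipliers fixed), which in practice would be set up with a toolbox such as SOSTOOLS [44]; no real toolbox API is implied. The toy callables at the end are not SOS programs and only exercise the stopping rule \(|\varrho^{(i)} - \varrho^{(i-1)}| \le \varpi\).

```python
from typing import Any, Callable, Tuple


def expanding_interior(
    v0: Any,
    solve_level_step: Callable[[Any], Tuple[float, Any]],
    solve_lyapunov_step: Callable[[Any, float, Any], Any],
    tol: float = 0.01,
    max_iter: int = 100,
) -> Tuple[float, Any]:
    """Alternate two (hypothetical) SOS subproblems until rho stops growing.

    solve_level_step(V) -> (rho, multipliers): V fixed, line search on rho.
    solve_lyapunov_step(V, rho, multipliers) -> new V: multipliers fixed.
    """
    rho_prev = 0.0          # rho^(0) = 0
    v = v0                  # V_0^(0)
    for _ in range(max_iter):
        rho, multipliers = solve_level_step(v)
        if abs(rho - rho_prev) <= tol:
            return rho, v   # converged: |rho^(i) - rho^(i-1)| <= tol
        v = solve_lyapunov_step(v, rho, multipliers)
        rho_prev = rho
    raise RuntimeError("expanding interior iteration did not converge")


# Toy stand-ins (not SOS programs): "V" is an integer and rho grows towards 2.
def toy_level_step(v):
    return 2 - 1 / (v + 1), None


def toy_lyapunov_step(v, rho, multipliers):
    return v + 1


rho, v = expanding_interior(0, toy_level_step, toy_lyapunov_step, tol=0.01)
print(rho, v)
```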

Remark 4

To solve (25), the line search on \(\varrho \) can be carried out through the following optimization problems

and

Iterating (26) and (27) finitely many times until \(|\varrho ^{(i)}-\varrho ^{(i - 1)}|\) is less than \(\varpi \) yields the optimal results, namely the largest estimate of the DOA and the corresponding Lyapunov function.

Based on the discussion above, we use two examples to demonstrate the feasibility and effectiveness of the SOS-based method for estimating the DOA.

Example I

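Assume that the parameters of system (1) are \(A = 2,\beta = \frac{1}{2},\mu = c = \frac{1}{4}, \varepsilon = \frac{1}{2},{\sigma _1} = {\gamma _1} = \frac{1}{8},\alpha = \frac{1}{2},{\sigma _2} = {\gamma _2} = \frac{1}{4},{\gamma _3} = \frac{1}{2}\); then the system becomes

\[ \begin{aligned} \dot S &= 2 - \tfrac{1}{2}SI - \tfrac{1}{4}S + \tfrac{1}{4}I,\\ \dot E &= \tfrac{1}{2}SI - E,\\ \dot I &= \tfrac{1}{2}E - \tfrac{3}{2}I,\\ \dot Q &= \tfrac{1}{8}E + \tfrac{1}{4}I - \tfrac{5}{4}Q. \end{aligned} \]

The subsystem containing S, E and I is

\[ \dot S = 2 - \tfrac{1}{2}SI - \tfrac{1}{4}S + \tfrac{1}{4}I,\quad \dot E = \tfrac{1}{2}SI - E,\quad \dot I = \tfrac{1}{2}E - \tfrac{3}{2}I. \tag{29} \]

The basic reproduction number is \(R_{0}=\frac{4}{3}>1\), and the locally stable endemic equilibrium of (29) is \((6,\frac{6}{11},\frac{2}{11})\). Setting \(S=x_1+6,E=x_2+\frac{6}{11},I=x_3+\frac{2}{11}\), (29) is converted to

\[ \begin{aligned} \dot x_1 &= -\tfrac{15}{44}x_1 - \tfrac{11}{4}x_3 - \tfrac{1}{2}x_1 x_3,\\ \dot x_2 &= \tfrac{1}{11}x_1 - x_2 + 3x_3 + \tfrac{1}{2}x_1 x_3,\\ \dot x_3 &= \tfrac{1}{2}x_2 - \tfrac{3}{2}x_3. \end{aligned} \tag{30} \]

The feasible region \(D_{01}\) of (30) is

\[ D_{01} = \left\{ X \in {\mathbb {R}}^3 : x_1 \ge -6,\ x_2 \ge -\tfrac{6}{11},\ x_3 \ge -\tfrac{2}{11},\ x_1 + x_2 + x_3 \le \tfrac{14}{11} \right\} . \]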
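
A coordinate shift like the one above is easy to get wrong by hand. The sketch below spot-checks it in exact rational arithmetic: it verifies that \((6, 6/11, 2/11)\) is an equilibrium of the Example I subsystem and that the shifted polynomial field agrees with the original one under \(S = x_1 + 6\), \(E = x_2 + 6/11\), \(I = x_3 + 2/11\) at a few arbitrary points. Both fields are written out in the code from our own expansion of (1) with Example I's parameters; the function names are illustrative.

```python
from fractions import Fraction as F


def subsystem(S, E, I):
    """Example I (S, E, I) equations: A = 2, beta = 1/2, mu = c = 1/4, M = 1, N = 3/2."""
    return (2 - F(1, 2) * S * I - F(1, 4) * S + F(1, 4) * I,
            F(1, 2) * S * I - E,
            F(1, 2) * E - F(3, 2) * I)


def shifted(x1, x2, x3):
    """The same field in x = (S - 6, E - 6/11, I - 2/11)."""
    return (-F(15, 44) * x1 - F(11, 4) * x3 - F(1, 2) * x1 * x3,
            F(1, 11) * x1 - x2 + 3 * x3 + F(1, 2) * x1 * x3,
            F(1, 2) * x2 - F(3, 2) * x3)


star = (F(6), F(6, 11), F(2, 11))
print("equilibrium:", subsystem(*star) == (0, 0, 0))
samples = [(F(1), F(-1, 3), F(2, 7)), (F(-5, 2), F(4), F(1, 9)), (F(3), F(1), F(-1))]
print("shift matches:", all(
    shifted(*x) == subsystem(*(s + xi for s, xi in zip(star, x))) for x in samples))
```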

Setting the tolerance \(\varpi = 0.01\) and choosing \( d_{V_0} = 2,d_{s_6 } = d_{s_8 } = 2,d_{s_9 } = 0,d_{l_1 } = 2,d_{l_2 } = 4\), we solve the optimization problems (22)–(25) within the SOS framework and obtain the Lyapunov function \(V_{01}(x,y,z)\) as follows

and \(\varrho _{max}=0.0694\). The resulting estimate of the domain of attraction of (30) obtained with the SOS method is

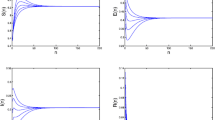

Fig. 2 shows the estimated domain of attraction of (30), where \(x_1=x\), \(x_2=y\) and \(x_3=z\); it illustrates the feasibility of estimating the DOA with the SOS method.

Fig. 2 The largest estimate of the DOA for the locally stable equilibrium of (30) using the SOS method

Example II

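In this example, we assume that \(A = 4, \beta = \frac{1}{2}, \mu = c = \frac{1}{2}, \varepsilon = \frac{1}{2},{\sigma _1} = {\gamma _1} ={0}, \alpha = \frac{1}{6}, {\sigma _2} = {\gamma _2} = \frac{1}{6},{\gamma _3} = \frac{1}{2}\); the subsystem for this model is

\[ \dot S = 4 - \tfrac{1}{2}SI - \tfrac{1}{2}S + \tfrac{1}{2}I,\quad \dot E = \tfrac{1}{2}SI - E,\quad \dot I = \tfrac{1}{2}E - \tfrac{3}{2}I. \tag{32} \]

The basic reproduction number is \(R_{0}=\frac{4}{3}>1\), and the locally stable endemic equilibrium of (32) is \(\left( 6, \frac{6}{5},\frac{2}{5}\right) \). Setting \(S=x_1+6,E=x_2+\frac{6}{5},I=x_3+\frac{2}{5}\), (32) is transformed into

\[ \begin{aligned} \dot x_1 &= -\tfrac{7}{10}x_1 - \tfrac{5}{2}x_3 - \tfrac{1}{2}x_1 x_3,\\ \dot x_2 &= \tfrac{1}{5}x_1 - x_2 + 3x_3 + \tfrac{1}{2}x_1 x_3,\\ \dot x_3 &= \tfrac{1}{2}x_2 - \tfrac{3}{2}x_3. \end{aligned} \tag{33} \]

The feasible region \(D_{02}\) is

\[ D_{02} = \left\{ X \in {\mathbb {R}}^3 : x_1 \ge -6,\ x_2 \ge -\tfrac{6}{5},\ x_3 \ge -\tfrac{2}{5},\ x_1 + x_2 + x_3 \le \tfrac{2}{5} \right\} . \]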

As in Example I, we use the same tolerance and degrees; the optimal solutions are

and \(\varrho _{max} =0.6330\). The largest estimate of the domain of attraction of (33) is

Fig. 3 compares two estimates of the DOA of (33) obtained with different optimization methods: the smaller ellipsoid is obtained with the method based on moment matrices [27, 38], and the larger one with SOS optimization. The comparison illustrates the effectiveness of the proposed approach.

Remark 5

In fact, the SOS method provides an iterative line-search process that searches simultaneously for a suitable Lyapunov function and the largest estimate of the DOA; it is therefore more effective than some previous optimization methods [27, 28, 38], which use a fixed, preselected Lyapunov function.

Fig. 3 The largest estimates of the DOA for the locally stable equilibrium of (33) using the SOS and moment methods

4 Conclusions

This paper has analyzed the stability of a class of SEIQ epidemic models and estimated the domain of attraction of the locally stable endemic equilibrium with the SOS optimization method. Based on the stability analysis of the equilibria, we have proved that the locally stable endemic equilibrium is globally asymptotically stable in a positively invariant subset of the feasible region. In addition, we used the SOS optimization technique to obtain an optimal estimate of the domain of attraction of the endemic equilibrium. Two numerical examples illustrate the feasibility and effectiveness of both the theoretical results and the computational method. Some researchers are currently studying how the domain of attraction depends on the emergence of chaos [46] and how it relates to the Hamilton energy function [47, 48]; applying the SOS optimization technique to these topics is left for future work.

References

Kermack, W.O., McKendrick, A.G.: A contribution to the mathematical theory of epidemics. Proc. R. Soc. Lond. A 115(772), 700–721 (1927)

Kermack, W.O., McKendrick, A.G.: Contributions to the mathematical theory of epidemics. II. The problem of endemicity. Proc. R. Soc. Lond. A 138(834), 55–82 (1932)

Diekmann, O., Heesterbeek, J.A.P.: Mathematical Epidemiology of Infectious Diseases: Model Building, Analysis and Interpretation. Wiley, New York (2000)

Brauer, F., Castillo-Chavez, C.: Mathematical Models in Population Biology and Epidemiology. Springer, New York (2001)

Ma, Z.E., Zhou, Y.A., Wang, W.D.: Mathematical Modeling and Research of Epidemic Dynamical Systems. Science Press, Beijing (2004)

Xu, R., Ma, Z.E.: Global stability of a delayed SEIRS epidemic model with saturation incidence rate. Nonlinear Dyn. 61, 229–239 (2010)

Yuan, Z.H., Ma, Z.J., Tang, X.H.: Global stability of a delayed HIV infection model with nonlinear incidence rate. Nonlinear Dyn. 68, 207–214 (2012)

Gensini, G.F., Yacoub, M.H., Conti, A.A.: The concept of quarantine in history: from plague to SARS. J. Infect. 49(4), 257–261 (2004)

Hsieh, Y.H., King, C.C., Chen, C.W.S., Ho, M.S., et al.: Impact of quarantine on the 2003 SARS outbreak: a retrospective modeling study. J. Theor. Biol. 244, 729–736 (2007)

Hethcote, H., Ma, Z., Liao, S.: Effects of quarantine in six endemic models for infectious diseases. Math. Biosci. 180, 141–160 (2002)

Wang, X.Y., Zhao, T.F., Qin, X.M.: Model of epidemic control based on quarantine and message delivery. Phys. A Stat. Mech. Appl. 458, 168–178 (2016)

Safi, M.A., Gumel, A.B.: Qualitative study of a quarantine/isolation model with multiple disease stages. Appl. Math. Comput. 218(5), 1941–1961 (2011)

Safi, M.A., Gumel, A.B.: Mathematical analysis of a disease transmission model with quarantine, isolation and an imperfect vaccine. Comput. Math. Appl. 61(10), 3044–3070 (2011)

Dobay, A., Gall, G.E.C., Rankin, D.J., Bagheri, H.C.: Renaissance model of an epidemic with quarantine. J. Theor. Biol. 317(21), 348–358 (2013)

Zhang, X.B., Huo, H.F., Xiang, H., Meng, X.Y.: Dynamics of the deterministic and stochastic SIQS epidemic model with non-linear incidence. Appl. Math. Comput. 243(15), 546–558 (2014)

Liu, X.N., Chen, X.P., Takeuchi, Y.: Dynamics of an SIQS epidemic model with transport-related infection and exit-entry screenings. J. Theor. Biol. 285(1), 25–35 (2011)

Safi, M.A., Gumel, A.B.: Global asymptotic dynamics of a model for quarantine and isolation. Discrete Contin. Dyn. Syst. Ser. B 14, 209–231 (2010)

Safi, M.A., Gumel, A.B.: Dynamics of a model with quarantine-adjusted incidence and quarantine of susceptible individuals. J. Math. Anal. Appl. 399, 565–575 (2013)

Sahu, G.P., Dhar, J.: Dynamics of an SEQIHRS epidemic model with media coverage, quarantine and isolation in a community with preexisting immunity. J. Math. Anal. Appl. 421, 1651–1672 (2015)

Zhao, W.C.: Global dynamics behaviors of an SIQR epidemic disease model with quarantine and pulse vaccination. J. Math. Pract. Theory 39(17), 78–85 (2009)

Khalil, H.K.: Nonlinear Systems, 3rd edn. Prentice Hall, Upper Saddle River (2002)

Zubov, V.I.: Methods of A.M. Lyapunov and their application. Izdatel’stvo Leningradsky University (1961)

Loccufier, M., Noldus, E.: A new trajectory reversing method for estimating stability regions of autonomous nonlinear systems. Nonlinear Dyn. 21(3), 265–288 (2000)

LaSalle, J.P., Lefschetz, S.: Stability by Liapunov’s Direct Method with applications. Academic Press, New York (1961)

Chesi, G.: Estimating the domain of attraction for non-polynomial systems via LMI optimizations. Automatica 45, 1536–1541 (2009)

Chesi, G.: Estimating the domain of attraction via union of continuous families of Lyapunov estimates. Syst. Control Lett. 56, 326–333 (2007)

Hachicho, O., Tibken, B.: Estimating domains of attraction of a class of nonlinear dynamical systems with LMI methods based on the theory of moments. In: Proc. IEEE Conf. Decision and Control, Las Vegas, Nevada, pp. 3150–3155 (2002)

Hachicho, O.: A novel LMI-based optimization algorithm for the guaranteed estimation of the domain of attraction using rational Lyapunov functions. J. Frankl. Inst. 344, 535–552 (2007)

Parrilo, P.A.: Structured Semidefinite Programs and Semialgebraic Geometry Methods in Robustness and Optimization. Ph.D. dissertation. (2000)

Jarvis-Wloszek, Z.W.: Lyapunov based analysis and controller synthesis for polynomial systems using sum-of-squares optimization. Ph.D. dissertation (2003)

Chesi, G.: Domain of Attraction: Analysis and Control Via SOS Programming. Springer, New York (2011)

Chesi, G., Tesi, A., Vicino, A., Genesio, R.: On convexification of some minimum distance problems. In: Proc. 5th European Control Conference, pp. 1446–1451 (1999)

Topcu, U., Packard, A., Seiler, P.: Local stability analysis using simulations and sum-of-squares programming. Automatica 44, 2669–2675 (2008)

Franzè, G., Famularo, D., Casavola, A.: Constrained nonlinear polynomial time-delay systems: a sum-of-squares approach to estimate the domain of attraction. IEEE Trans. Autom. Control 57(10), 2673–2679 (2012)

Tan, W.H.: Nonlinear control analysis and synthesis using sum-of-squares programming, Ph.D. dissertation. (2006)

Zhang, Z.H., Wu, J.H., Suo, Y.H., Song, X.Y.: The domain of attraction for the endemic equilibrium of an SIRS epidemic model. Math. Comput. Simul. 81, 1697–1706 (2011)

Matallana, L.G., Blanco, A.M., Bandoni, J.A.: Estimation of domains of attraction in epidemiological models with constant removal rates of infected individuals. In proc. of the 16th argentine bioengineering congress and the 5th conference of clinical engineering. J. Phys. Conf. Ser. 90, 012052 (2007)

Li, C.J., Ryoo, C.S., Li, N., Cao, L.: Estimating the domain of attraction via moment matrices. Bull. Korean Math. Soc. 46, 1237–1248 (2009)

Jing, Y.W., Chen, X.Y., Li, C.J., Ojleska, V.M., Dimirovski, G.M.: Domain of attraction estimation for SIRS epidemic models via sum-of-square optimization. In: Proc. 18th IFAC World Congress, pp. 14289–14294 (2011)

Chen, X.Y., Li, C.J., Lü, J.F., Jing, Y.W.: The domain of attraction for a SEIR epidemic model based on sum of square optimization. Bull. Korean Math. Soc. 49(3), 517–528 (2012)

Li, M.Y., Muldowney, J.S.: A geometric approach to global-stability problems. SIAM J. Math. Anal. 27, 1070–1083 (1996)

Huan, Q., Ning, P., Ding, W.: Global stability for a dynamic model of hepatitis B with antivirus treatment. J. Appl. Anal. Comput. 3(1), 37–50 (2013)

Lan, X.J.: The study for several epidemic models with a varying total population size. Dissertation (2008)

Papachristodoulou, A., et al.: SOSTOOLS version 3.00 sum of squares optimization toolbox for MATLAB. arXiv preprint (2013). arXiv:1310.4716

Zhou, X.Y., Cui, J.A.: Analysis of stability and bifurcation for an SEIV epidemic model with vaccination and nonlinear incidence rate. Nonlinear Dyn. 63, 639–653 (2011)

Song, X.L., Jin, W.Y., Ma, J.: Energy dependence on the electric activities of a neuron. Chin. Phys. B 24, 128710 (2015)

Li, F., Yao, C.G.: The infinite-scroll attractor and energy transition in chaotic circuit. Nonlinear Dyn. 84, 2305–2315 (2016)

Sarasola, C., Torrealdea, F.J., d’Anjou, A., Moujahid, A., et al.: Energy balance in feedback synchronization of chaotic systems. Phys. Rev. E 69, 011606 (2004)

Acknowledgments

All authors would like to express their sincere appreciation to Jos F. Sturm, who developed the widely used optimization software package SeDuMi, and to A. Papachristodoulou, J. Anderson, G. Valmorbida, S. Prajna, P. Seiler and Pablo A. Parrilo for writing and maintaining the SOSTOOLS software. We would also like to thank the referees, the associate editor and the editor-in-chief for their useful comments on our work. This work was supported by the Applied Mathematics Enhancement Program (AMEP) of Linyi University and the National Natural Science Foundation of China under Grants 61273012, 61304023 and 61403179, by the International Cooperation and Training Project of Youth Teachers in Colleges and Universities in Shandong Province of 2015, and by a Project of the Postdoctoral Sustentation Fund of Jiangsu Province under Grant 1402042B.


Cite this article

Chen, X., Cao, J., Park, J.H. et al. Stability analysis and estimation of domain of attraction for the endemic equilibrium of an SEIQ epidemic model. Nonlinear Dyn 87, 975–985 (2017). https://doi.org/10.1007/s11071-016-3092-7
