Abstract

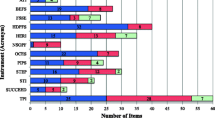

Ambitious efforts are under way to implement a new vision for science education in the United States, both in states that have adopted the Next Generation Science Standards (NGSS) and in states creating their own, often related, standards. In-service and pre-service teacher educators are supporting teachers as they shift their practice toward the new standards. As these efforts proceed, it will be important to document shifts in science instruction toward the goals of NGSS and broader science education reform. Survey instruments are often used to capture instructional practices; however, existing surveys primarily measure inquiry based on previous definitions and standards and, with a few exceptions, disregard key instructional practices considered outside the scope of inquiry. A comprehensive survey and a clearly defined set of items do not exist. Moreover, items specific to the NGSS Science and Engineering Practices have not yet been tested. To address this need, we developed and validated a Science Instructional Practices survey instrument that is appropriate for NGSS and other related science standards. Survey construction was based on a literature review establishing key areas of science instruction, followed by a systematic process for identifying and creating items. Instrument validity and reliability were then tested through a procedure that included cognitive interviews, expert review, exploratory and confirmatory factor analysis (using independent samples), and analysis of criterion validity. Based on these analyses, the final subscales are: Instigating an Investigation, Data Collection and Analysis, Critique, Explanation and Argumentation, Modeling, Traditional Instruction, Prior Knowledge, Science Communication, and Discourse.

Notes

Although it is difficult to precisely measure the cognitive level in any given task in a survey instrument (Tekkumru Kisa et al., 2015), the delineations described in this instrument represent a reasonable step toward attending to levels of cognitive involvement in the practices examined.

References

Abd-El-Khalick, F., Boujaoude, S., Duschl, R. A., Lederman, N. G., Mamlok-Naaman, R., Hofstein, A., & Tuan, H.-L. (2004). Inquiry in science education: International perspectives. Science Education, 88, 397–419.

Allen, M. J., & Yen, W. M. (1979). Introduction to measurement theory. Monterey, CA: Brooks-Cole.

Anderson, R. D. (2002). Reforming science teaching: What research says about inquiry. Journal of Science Teacher Education, 13, 1–12.

Au, W. (2007). High-stakes testing and curricular control: A qualitative metasynthesis. Educational Researcher, 36(5), 258–267.

Bandalos, D. L., & Finney, S. J. (2010). Factor analysis: Exploratory and confirmatory. In G. R. Hancock & R. O. Mueller (Eds.), The reviewer’s guide to quantitative methods in the social sciences (pp. 93–114). New York: Routledge.

Banilower, E. R., Heck, D. J., & Weiss, I. R. (2007). Can professional development make the vision of the standards a reality? The impact of the National Science Foundation’s Local Systemic Change Through Teacher Enhancement initiative. Journal of Research in Science Teaching, 44, 375–395.

Banilower, E. R., Smith, S. P., Weiss, I. R., Malzahn, K. A., Campbell, K. M., & Weis, A. M. (2013). Report of the 2012 national survey of science and mathematics education. Chapel Hill, NC: Horizon Research.

Bartholomew, H., Osborne, J., & Ratcliffe, M. (2004). Teaching students “ideas-about-science”: Five dimensions of effective practice. Science Education, 88, 655–682.

Burstein, L., McDonnell, L. M., Van Winkle, J., Ormseth, T., Mirocha, J., & Guitón, G. (1995). Validating national curriculum indicators. Santa Monica, CA: RAND Corporation.

Calabrese Barton, A. (2002). Learning about transformative research through others’ stories: What does it mean to involve “others” in science education reform? Journal of Research in Science Teaching, 39, 110–113.

Calabrese Barton, A., Tan, E., & Rivet, A. (2008). Creating hybrid spaces for engaging school science among urban middle school girls. American Educational Research Journal, 45(1), 68–103.

Campbell, T., Abd-Hamid, N. H., & Chapman, H. (2010). Development of instruments to assess teacher and student perceptions of inquiry experiences in science classrooms. Journal of Science Teacher Education, 21, 13–30.

Canale, M., & Swain, M. (1980). Theoretical bases of communicative approaches to second language teaching and testing. Applied Linguistics, 1, 1–47.

Capps, D. K., & Crawford, B. A. (2013). Inquiry-based instruction and teaching about nature of science: Are they happening? Journal of Science Teacher Education, 24, 497–526.

Center on Education Policy. (2007). Choices, changes, and challenges: Curriculum and instruction in the NCLB era. Washington, DC: Center on Education Policy.

Crocker, L., & Algina, J. (1986). Introduction to classical and modern test theory. Orlando, FL: Holt, Rinehart and Winston.

Cuban, L. (2013). Inside the black box of classroom practice: Change without reform in American education. Cambridge, MA: Harvard Education Press.

Desimone, L. M., & Le Floch, K. C. (2004). Are we asking the right questions? Using cognitive interviews to improve surveys in education research. Educational Evaluation and Policy Analysis, 26, 1–22.

Desimone, L. M., Porter, A. C., Garet, M. S., Yoon, K. S., & Birman, B. F. (2002). Effects of professional development on teachers’ instruction: Results from a three-year longitudinal study. Educational Evaluation and Policy Analysis, 24, 81–112.

Dorph, R., Shields, P., Tiffany-Morales, J., Hartry, A., & McCaffrey, T. (2011). High hopes-few opportunities: The status of elementary science education in California. Sacramento, CA: The Center for the Future of Teaching and Learning at WestEd.

Driver, R., Asoko, H., Leach, J., Scott, P., & Mortimer, E. (1994). Constructing scientific knowledge in the classroom. Educational Researcher, 23(7), 5–12.

Driver, R., Newton, P., & Osborne, J. (2000). Establishing the norms of scientific argumentation in classrooms. Science Education, 84, 287–312.

Duschl, R. A., & Osborne, J. (2002). Supporting and promoting argumentation discourse in science education. Studies in Science Education, 38, 39–72.

Forbes, C. T., Biggers, M., & Zangori, L. (2013). Investigating essential characteristics of scientific practices in elementary science learning environments: The practices of science observation protocol (P-SOP). School Science and Mathematics, 113, 180.

Garet, M. S., Birman, B. F., Porter, A. C., Desimone, L., & Herman, R. (1999). Designing effective professional development: Lessons from the Eisenhower Program [and] technical appendices. Jessup, MD: Editorial Publications Center, US Department of Education.

Germuth, A., Banilower, E., & Shimkus, E. (2003). Test-retest reliability of the Local Systemic Change teacher questionnaire. Chapel Hill, NC: Horizon Research.

Gogol, K., Brunner, M., Goetz, T., Martin, R., Ugen, S., Keller, U., … Preckel, F. (2014). “My Questionnaire is Too Long!” The assessments of motivational-affective constructs with three-item and single-item measures. Contemporary Educational Psychology, 34, 188–205.

Hayes, K. N., & Trexler, C. J. (2016). Testing predictors of instructional practice in elementary science education: The significant role of accountability. Science Education. (in press).

Hill, L., & Betz, D. (2005). Revisiting the retrospective pretest. American Journal of Evaluation, 26(4), 501–517.

Hogan, K., Nastasi, B. K., & Pressley, M. (1999). Discourse patterns and collaborative scientific reasoning in peer and teacher-guided discussions. Cognition and Instruction, 17, 379.

Hu, L., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6, 1–55.

Huffman, D., Thomas, K., & Lawrenz, F. (2003). Relationship between professional development, teachers’ instructional practices, and the achievement of students in science and mathematics. School Science and Mathematics, 103, 378–387.

Jiménez-Aleixandre, M. P., & Erduran, S. (2007). Argumentation in science education: An overview. In S. Erduran & M. P. Jiménez-Aleixandre (Eds.), Argumentation in science education: Perspectives from classroom-based research (pp. 3–27). Berlin: Springer.

Klein, S., Hamilton, L., McCaffrey, D., Stecher, B., Robyn, A., & Burroughs, D. (2000). Teaching practices and student achievement: Report of first-year findings from the “Mosaic” study of Systemic Initiatives in Mathematics and Science. Santa Monica, CA: RAND Corporation.

Krathwohl, D. R. (2002). A revision of Bloom’s taxonomy: An overview. Theory into Practice, 41(4), 212–218.

Kuhn, D. (2015). Thinking together and alone. Educational Researcher, 44, 46–53.

Lee, O., Luykx, A., Buxton, C., & Shaver, A. (2007). The challenge of altering elementary school teachers’ beliefs and practices regarding linguistic and cultural diversity in science instruction. Journal of Research in Science Teaching, 44, 1269–1291.

Lee, O., Maerten-Rivera, J., Buxton, C., Penfield, R., & Secada, W. G. (2009). Urban elementary teachers’ perspectives on teaching science to English language learners. Journal of Science Teacher Education, 20, 263–286.

Lemke, J. L. (2001). Articulating communities: Sociocultural perspectives on science education. Journal of Research in Science Teaching, 38(3), 296–316.

Lemke, J. (2004). The literacies of science. In E. W. Saul (Ed.), Crossing borders in literacy and science instruction: Perspectives on theory and practice. Newark, DE: International Reading Association.

Llewellyn, D. (2013). Teaching high school science through inquiry and argumentation. Thousand Oaks, CA: Corwin.

Marsh, H. W. (1986). Global self-esteem: Its relation to specific facets of self-concept and their importance. Journal of Personality and Social Psychology, 51(6), 1224.

Marsh, H. W., Hau, K.-T., & Wen, Z. (2004). In search of golden rules: Comment on hypothesis-testing approaches to setting cutoff values for fit indexes and dangers in overgeneralizing Hu and Bentler’s (1999) findings. Structural Equation Modeling, 11, 320–341.

Marshall, J. C., Smart, J., & Horton, R. M. (2009). The design and validation of EQUIP: An instrument to assess inquiry-based instruction. International Journal of Science and Mathematics Education, 8, 299–321.

Matsunaga, M. (2010). How to factor-analyze your data right: Do’s, don’ts, and how-to’s. International Journal of Psychological Research, 3(1), 97–110.

McGinn, M. K., & Roth, W. M. (1999). Preparing students for competent scientific practice: Implications of recent research in science and technology studies. Educational Researcher, 28, 14–24.

McNeill, K. L., & Krajcik, J. (2008). Scientific explanations: Characterizing and evaluating the effects of teachers’ instructional practices on student learning. Journal of Research in Science Teaching, 45, 53–78.

Moll, L., Amanti, C., Neff, D., & Gonzalez, N. (1992). Funds of knowledge for teaching: Using a qualitative approach to connect homes and classrooms. Theory into Practice, 31(2), 132–141.

National Research Council. (1996). National science education standards. Washington, DC: The National Academies Press.

National Research Council (NRC). (2012). A framework for K-12 science education: Practices, crosscutting concepts, and core ideas. Washington, DC: The National Academies Press.

National Research Council (NRC). (2013). Next generation science standards: For states, by states. Washington, DC: The National Academies Press.

Norris, S., Philips, L., & Osborne, J. (2008). Scientific inquiry: The place of interpretation and argumentation. In J. Luft, R. L. Bell, & J. Gess-Newsome (Eds.), Science as inquiry in the secondary setting (pp. 87–98). Arlington, VA: NSTA Press.

President’s Council of Advisors on Science and Technology (PCAST). (2010). Report to the President: Prepare and inspire: K-12 education in science, technology, engineering, and mathematics (STEM) for America’s future. Washington, DC: Executive Office of the President. http://www.whitehouse.gov/sites/default/files/microsites/ostp/pcast-stemed-report.pdf

Rea, L. M., & Parker, R. A. (2005). Designing and conducting survey research: A comprehensive guide. San Francisco: Jossey-Bass.

Richmond, G., & Striley, J. (1996). Making meaning in classrooms: Social processes in small-group discourse and scientific knowledge building. Journal of Research in Science Teaching, 33(8), 839–858.

Rosebery, A. S., Warren, B., & Conant, F. R. (1992). Appropriating scientific discourse: Findings from language minority classrooms. The Journal of the Learning Sciences, 2, 61–94.

Schwarz, C. V., Reiser, B. J., Davis, E. A., Kenyon, L., Achér, A., Fortus, D., … Krajcik, J. (2009). Developing a learning progression for scientific modeling: Making scientific modeling accessible and meaningful for learners. Journal of Research in Science Teaching, 46(6), 632–654.

Smith, M. S. (2000). Balancing old and new: An experienced middle school teacher’s learning in the context of mathematics instructional reform. The Elementary School Journal, 100(4), 351–375.

Smolleck, L. D., Zembal-Saul, C., & Yoder, E. P. (2006). The development and validation of an instrument to measure preservice teachers’ self-efficacy in regard to the teaching of science as inquiry. Journal of Science Teacher Education, 17, 137–163.

Stapleton, L. M. (2010). Survey sampling, administration, and analysis. In G. R. Hancock & R. O. Mueller (Eds.), The reviewer’s guide to quantitative methods in the social sciences (pp. 399–412). New York: Routledge.

Stewart, J., Cartier, J. L., & Passmore, C. M. (2005). Developing understanding through model-based inquiry. In S. Donovan & J. D. Bransford (Eds.), How students learn: Science in the classroom (pp. 515–565). Washington, DC: National Academies Press.

Supovitz, J. A., & Turner, H. M. (2000). The effects of professional development on science teaching practices and classroom culture. Journal of Research in Science Teaching, 37(9), 963–980.

Tekkumru Kisa, M. T., & Stein, M. K. (2015). Learning to see teaching in new ways: A foundation for maintaining cognitive demand. American Educational Research Journal, 52(1), 105–136.

Tekkumru Kisa, M., Stein, M. K., & Schunn, C. (2015). A framework for analyzing cognitive demand and content-practices integration: Task analysis guide in science. Journal of Research in Science Teaching, 52, 659–685.

Thorndike, R. M., & Thorndike-Christ, T. (2010). Measurement and evaluation in psychology and education (8th ed.). Boston: Pearson, Merrill.

Walczyk, J. J., & Ramsey, L. L. (2003). Use of learner-centered instruction in college science and mathematics classrooms. Journal of Research in Science Teaching, 40, 566–584.

Wertsch, J. V. (1985). Vygotsky and the social formation of mind. Cambridge, MA: Harvard University Press.

Zimmerman, C. (2007). The development of scientific thinking skills in elementary and middle school. Developmental Review, 27, 172–223.

Acknowledgments

This work was supported by the National Science Foundation Grant No. 0962804.

Author information

Authors and Affiliations

Corresponding author

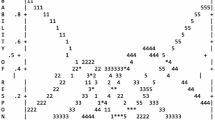

Appendix: Final Survey

Instructional approaches

| How often do your students do each of the following in your science classes? | Never | Rarely (a few times a year) | Sometimes (once or twice a month) | Often (once or twice a week) | Daily or almost daily |
|---|---|---|---|---|---|
| 1. Generate questions or predictions to explore | 1 | 2 | 3 | 4 | 5 |
| 2. Identify questions from observations of phenomena | 1 | 2 | 3 | 4 | 5 |
| 3. Choose variables to investigate (such as in a lab setting) | 1 | 2 | 3 | 4 | 5 |
| 4. Design or implement their OWN investigations | 1 | 2 | 3 | 4 | 5 |
| 5. Make and record observations | 1 | 2 | 3 | 4 | 5 |
| 6. Gather quantitative or qualitative data | 1 | 2 | 3 | 4 | 5 |
| 7. Organize data into charts or graphs | 1 | 2 | 3 | 4 | 5 |
| 8. Analyze relationships using charts or graphs | 1 | 2 | 3 | 4 | 5 |
| 9. Analyze results using basic calculations | 1 | 2 | 3 | 4 | 5 |
| 10. Write about what was observed and why it happened | 1 | 2 | 3 | 4 | 5 |
| 11. Present procedures, data and conclusions to the class (either informally or in formal presentations) | 1 | 2 | 3 | 4 | 5 |
| 12. Read from a science textbook or other hand-outs in class | 1 | 2 | 3 | 4 | 5 |
| 13. Critically synthesize information from different sources (i.e., text or media) | 1 | 2 | 3 | 4 | 5 |
| 14. Create a physical model of a scientific phenomenon (like creating a representation of the solar system) | 1 | 2 | 3 | 4 | 5 |
| 15. Develop a conceptual model based on data or observations (model is not provided by textbook or teacher) | 1 | 2 | 3 | 4 | 5 |
| 16. Use models to predict outcomes | 1 | 2 | 3 | 4 | 5 |
| 17. Explain the reasoning behind an idea | 1 | 2 | 3 | 4 | 5 |
| 18. Respectfully critique each others’ reasoning | 1 | 2 | 3 | 4 | 5 |
| 19. Supply evidence to support a claim or explanation | 1 | 2 | 3 | 4 | 5 |
| 20. Consider alternative explanations | 1 | 2 | 3 | 4 | 5 |
| 21. Make an argument that supports or refutes a claim | 1 | 2 | 3 | 4 | 5 |

| How often do you do each of the following in your science instruction? | Never | Rarely (a few times a year) | Sometimes (once or twice a month) | Often (once or twice a week) | Daily or almost daily |
|---|---|---|---|---|---|
| 1. Provide direct instruction to explain science concepts | 1 | 2 | 3 | 4 | 5 |
| 2. Demonstrate an experiment and have students watch | 1 | 2 | 3 | 4 | 5 |
| 3. Use activity sheets to reinforce skills or content | 1 | 2 | 3 | 4 | 5 |
| 4. Go over science vocabulary | 1 | 2 | 3 | 4 | 5 |
| 5. Apply science concepts to explain natural events or real-world situations | 1 | 2 | 3 | 4 | 5 |
| 6. Talk with your students about things they do at home that are similar to what is done in science class (e.g., measuring, boiling water) | 1 | 2 | 3 | 4 | 5 |
| 7. Discuss students’ prior knowledge or experience related to the science topic or concept | 1 | 2 | 3 | 4 | 5 |
| 8. Use open-ended questions to stimulate whole class discussion (most students participate) | 1 | 2 | 3 | 4 | 5 |
| 9. Have students work with each other in small groups | 1 | 2 | 3 | 4 | 5 |
| 10. Encourage students to explain concepts to one another | 1 | 2 | 3 | 4 | 5 |
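
For readers who want to score responses collected with the two tables above, the following is a minimal sketch, not the authors' analysis procedure. It assumes each answer is coded 1 to 5 as printed in the column order, averages a respondent's answers over a group of items, and computes Cronbach's alpha as one common internal-consistency estimate. The grouping `MODELING_ITEMS` (student items 14 to 16), the function names, and the four sample respondents are hypothetical illustrations; this appendix does not state the item-to-subscale mapping.

```python
from statistics import mean, variance

# Hypothetical grouping: student items 14-16 all mention models.
# The actual item-to-subscale mapping is NOT given in this appendix.
MODELING_ITEMS = [14, 15, 16]

# Invented responses for four teachers (item number -> code 1..5),
# where 1 = Never and 5 = Daily or almost daily.
responses = [
    {14: 2, 15: 1, 16: 2},
    {14: 3, 15: 3, 16: 4},
    {14: 4, 15: 4, 16: 5},
    {14: 1, 15: 2, 16: 1},
]


def subscale_score(response, items):
    """Mean of one respondent's coded answers over the given items."""
    return mean(response[i] for i in items)


def cronbach_alpha(all_responses, items):
    """Cronbach's alpha: k/(k-1) * (1 - sum(item variances) / variance(totals))."""
    k = len(items)
    if k < 2 or len(all_responses) < 2:
        raise ValueError("need at least two items and two respondents")
    item_vars = [variance([r[i] for r in all_responses]) for i in items]
    total_var = variance([sum(r[i] for i in items) for r in all_responses])
    if total_var == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    for r in responses:
        print(round(subscale_score(r, MODELING_ITEMS), 2))
    print(round(cronbach_alpha(responses, MODELING_ITEMS), 3))
```

A mean rather than a sum keeps each subscale score on the original 1 to 5 frequency scale, which makes groups with different numbers of items easier to compare.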

About this article

Cite this article

Hayes, K.N., Lee, C.S., DiStefano, R. et al. Measuring Science Instructional Practice: A Survey Tool for the Age of NGSS. J Sci Teacher Educ 27, 137–164 (2016). https://doi.org/10.1007/s10972-016-9448-5

DOI: https://doi.org/10.1007/s10972-016-9448-5