Abstract

Providing partial preference information for multiple criteria ranking or sorting problems results in the indetermination of the preference model. Investigating the influence of this indetermination on the suggested recommendation, we may obtain the necessary, possible, and extreme results confirmed by, respectively, all, at least one, or the most and least advantageous preference model instances compatible with the input preference information. We propose a framework for answering questions regarding the stability of these results. In particular, we investigate the minimal improvement that warrants feasibility of some currently impossible outcome, as well as the maximal deterioration by which some already attainable result still holds. Taking into account the setting of multiple criteria ranking and sorting problems, we consider such questions in view of pairwise preference relations, attaining some rank, or attaining some assignment. The improvement or deterioration of an alternative's standing is quantified by the change in its performances on particular criteria and/or in its comprehensive score. The proposed framework is useful for design, planning, formulating guidelines, or defining future performance targets. It is also important for robustness concerns, because it reveals which parts of the recommendation are robust or sensitive with respect to modifications of the alternatives’ performance values or scores. Application of the proposed approach is demonstrated on the problem of assessing the environmental impact of major European cities.


1 Introduction

The concept of criterion plays a fundamental role in decision aiding. Serving as a tool for evaluating and comparing alternatives, a criterion represents a specific point of view on the impact and quality of alternative decisions. As noted by Hyde and Maier [17], the performance values that are assigned to each alternative for every criterion are obtained from models, available data, or by expert judgment based on previous knowledge or experience.

In the presence of multiple conflicting criteria, decision aiding is performed with the use of explicit, usually formalized, models and methods. A crucial element of these methods is how they deal with the preferences of the Decision Maker (DM), so that the recommended decision is as consistent as possible with the DM’s objectives and value system.

Indirect and imprecise preference information The majority of the recently proposed Multiple Criteria Decision Aiding (MCDA) methods admit partial preference information provided by the DM at the input. In particular, one may elicit holistic judgments, such as pairwise comparisons of alternatives or criteria (see, e.g., [12, 29]), assignment-based pairwise comparisons [19], assignment examples (see, e.g., [9, 33]), rank-related requirements [22], or desired class-cardinalities (see, e.g., [23, 42]). Furthermore, one may also specify some imprecise statements, like lower and upper bounds for comprehensive scores (see, e.g., [40]) or preference ratios (see, e.g., [28]). In any case, elicitation of partial preferences requires less cognitive effort on the part of the DM than providing precise values for the preference model parameters.

Robustness analysis in multiple criteria decision aiding When using partial preference information, the DM’s preference model is defined imprecisely. Depending on the selected compatible model instance, the recommendation for the set of alternatives may vary significantly. To investigate its robustness, several approaches have been proposed. In the context of ranking problems, they indicate the range of ranks attained by each alternative (see [21]), verify the possibility and necessity of the pairwise preference relations by checking if they hold for at least one or all compatible preference model instances, respectively (see, e.g., [12, 16, 28]), or indicate various intensity measures of the dominance relation (see, e.g., [2]). For multiple criteria sorting, analysis in terms of the possible and the necessary may be referred to class assignments (see [13, 27, 33]) or assignment-based preference relations (see [26]). Methods that employ the whole set of compatible preference model instances usually admit incremental specification of preference information in the course of an interactive procedure. Indeed, analysis of the robust results prompts a reaction from the DM, who may add new preference information or revise previously provided statements. As noted by Corrente et al. [8], such an interactive process ends when the yielded necessary, possible, or extreme recommendation is decisive and convincing for the DM.

The core of post factum analysis In this paper, we propose a methodology of post factum analysis that is designed for use once a recommendation has been worked out. Without loss of generality, we focus on an additive value function preference model, which is of particular interest in MCDA because of the intuitive interpretation of the numerical scores of alternatives and the straightforward impact of pieces of preference information on the final result. Thus, in our case, a single preference model instance is a value function composed of marginal value functions with a precisely established course (shape).

Knowledge about the necessary, possible, and extreme consequences of the provided preference information may prompt further questions from the DM. Indeed, knowing the performances of alternatives on the considered criteria, as well as the ranges of their scores over all compatible preference model instances, the DM may wonder how improving or deteriorating some performances or scores would influence the standing of an alternative in the obtained recommendation. On the one hand, if some result concerning a given alternative is currently false, the DM may wish to know the minimal improvement that would make the investigated part of the recommendation feasible. On the other hand, if some result is already attained by an alternative for the current set of compatible preference model instances, the DM may be interested in the maximal deterioration for which this outcome still holds. In the setting of multiple criteria ranking and sorting, such questions may concern pairwise preference relations, or attaining a particular rank or assignment. Given the plurality of compatible preference model instances, reaching or preserving the target may be investigated for all or for at least one compatible model instance. In this way, post factum analysis offers a rich framework addressing multivariate robustness and sensitivity concerns. Exemplary questions that can be dealt with within this framework are the following:

-

in case a is not even possibly preferred to b, what is the minimal improvement of performances of a that guarantees the truth of the possible preference;

-

in case a is possibly assigned to classes \(C_2\)–\(C_4\), what is the minimal improvement of the comprehensive value (score) of a that warrants that it is assigned to a class at least as good as \(C_3\) for all compatible value functions;

-

in case a is ranked first for the most advantageous value function, what is the maximal deterioration of its performances on criteria \(g_1\) and \(g_2\), such that a is still possibly ranked at the very top;

-

in case a is assigned to a class at least as good as b for at least one compatible value function, what is the maximal deterioration of the comprehensive value (score) of a for which this possible assignment-based preference relation is still satisfied.

The discovered modifications indicate the strategy for achieving or maintaining the target. They may be useful in formulating guidelines, design, planning, and defining performance targets. This kind of analysis is also important for robustness concerns, because it reveals which parts of the computed necessary, possible, and extreme results are robust. If only a small improvement is required or only a small deterioration is allowed, the result can be regarded as sensitive, whereas if the required or allowed modification of performance values is large, the DM can be more confident of the validity of the result. This is particularly useful from the perspective of co-constructive decision aiding [8]. Indeed, indicating the most or the least robust results may stimulate the DM to further analysis, to revising or enriching her assessments, or to re-evaluating the performance values that are the most critical for the ranking or assignment of the alternatives. The latter is important if the performances of some alternatives are uncertain. Other approaches in this spirit aim at justifying the suggested recommendation in terms of the minimal pieces of preference information that make it true (called preferential reducts) [20], or by stochastic analysis estimating the probability of a possible preference relation, of attaining some rank [25], or of attaining some assignment [37].

We perceive the proposed post factum analysis as a methodology complementary to MCDA which, until now, has concentrated mainly on providing a recommendation based on a preference model compatible with the preference information provided by the DM. While robustness analysis of the obtained recommendations concerned the validity of conclusions with respect to allowed changes of either performances or preference model parameters, post factum analysis extends the concept of such analysis to other useful questions, like “what improvement on all or some performances of a given alternative should be made, so that it achieves a better result in the recommendation obtained with a set of compatible preference models?”, or “what is the margin of safety in some or all performances of a given alternative, within which it can maintain the same rank or class assignment as in the obtained robust recommendation?”. Answering questions of this type is very useful for a DM who wants to assess the opportunities and threats for particular alternatives. Such a preoccupation is typical for environmental management, engineering design, business consulting, marketing, and public sector institutions.

Relation with data envelopment analysis Investigating the improvement and deterioration of an alternative’s performances and/or comprehensive value that grant, respectively, achievement or maintenance of some target result has received limited attention in MCDA (see [5, 17, 39, 41], and the review in Sect. 4). Moreover, none of these approaches investigates the impact of changing the alternatives’ performances or scores on the robustness of the delivered recommendation.

Nevertheless, this type of analysis is at the core of Data Envelopment Analysis (DEA) (see [6]). DEA is a technique for measuring the relative efficiency of Decision Making Units (DMUs) that use similar inputs to produce similar outputs, where the multiple inputs and outputs are incommensurate in nature. On the one hand, the basic algorithms for checking whether a DMU is efficient measure a “radial distance” of the DMU from an efficient frontier. This distance can be interpreted as the coefficient by which the DMU’s outputs should be multiplied (or its inputs divided) in order to make it efficient (see [3]). In this way, DEA provides a measure of efficiency for each DMU, at the same time indicating for the non-efficient ones their “efficient peers”, i.e., the best practices to follow. Recently, DEA has been generalized to Ratio-based Efficiency Analysis (REA), which derives its results from all feasible output and input weights (see [34]). REA extends conventional efficiency scores by computing efficiency bounds, pairwise dominance relations, ranking intervals, and performance targets. The latter are closely related to the necessary and possible improvements that we consider in view of multiple criteria ranking. On the other hand, if some DMU is efficient, neither an increase of any output nor a decrease of any input can change its efficiency status. Nevertheless, it can afford a limited increase in inputs or decrease in outputs and still remain efficient after the change. Similar concerns have been raised with respect to uncertain coefficients, by computing stability intervals that guarantee that the results do not change. Robustness analysis of this type has been conducted by, e.g., [11, 35, 43, 44].

Organization of the paper The organization of the paper is as follows. In the next section, we introduce the basic concepts and notation used in the paper. In Sect. 3, we recall selected value-based multiple criteria ranking and sorting methods based on partial preference information. In Sect. 4, we review the existing multiple criteria sensitivity analysis methods that investigate the impact of varying the performance values on the delivered recommendation. Moreover, we refer to a few management and environmental applications to which our post factum analysis can be applied. Section 5 introduces the framework for post factum analysis. For the purpose of illustration, in Sect. 6, we consider the problem of evaluating the environmental impact of major European cities. The last section concludes the paper.

2 Notation

We shall use the following notation:

-

\(A=\{ a_1, a_2, \ldots , a_i, \ldots , a_n \}\)—a finite set of n alternatives;

-

\(G = \{ g_1, g_2,\ldots , g_j, \ldots , g_m \}\)—a finite set of m performance (evaluation) criteria, \(g_j: A \rightarrow \mathbb {R}\) for all \(j \in J=\{ 1,2, \ldots , m \}\);

-

\(A^R = \{ a^{*}, b^{*}, \ldots \}\)—a finite set of reference alternatives; in general, \(A^R\) may consist of past decision alternatives or fictitious alternatives, consisting of performances on the criteria which can be easily judged by the DM to express his/her comprehensive judgment; we assume, however, that \(A^R \subseteq A\) is a subset of alternatives relatively well-known to the DM on which (s)he accepts to express holistic preferences;

-

\(C_h, \ h=1, \ldots , p\)—pre-defined preference ordered classes such that \(C_{h+1}\) is preferred to \(C_h\), \(h=1, \ldots , p-1\); \(H=\{1, 2, \ldots , p\}\);

-

\(X_j = \{ x_j \in \mathbb {R}: g_j(a_i)=x_j, a_i \in A \}\)—the set of all different performance values on \(g_j\), \(j \in J\); we assume, without loss of generality, that the greater \(g_j(a_i)\), the better alternative \(a_i\) on criterion \(g_j\), for all \(j \in J\);

-

\(x_j^1, x_j^2, \ldots , x_j^{n_j(A)}\)—the ordered values of \(X_j\), \(x_j^k < x_j^{k+1}, k=1, \ldots , n_j(A)-1\), where \(n_j(A) = |X_j|\) and \(n_j(A) \le n\); consequently, \(X =\prod _{j=1}^m X_j\) is the performance space;

-

\(g_{j,*}\) and \(g_{j}^{*}\) are, respectively, the worst and the best possible performances on \(g_j\); \(g_{j,*} = x_j^1\) and \(g_j^* = x_j^{n_j(A)}\) or, if provided, \(g_{j,*}\) and \(g_j^*\) are, respectively, the lower and upper bounds for the performance scale on \(g_j\).

In order to represent the DM’s preferences, we shall use a model in the form of an additive value function:

\[
U(a) = \sum _{j=1}^{m} u_j(g_j(a)), \quad a \in A, \tag{1}
\]

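where the marginal value functions \(u_j\), \(j \in J\), are monotone, non-decreasing and their sum is normalized, so that the additive value (1) is bounded within the interval \([0, 1]\). Note that for simplicity of notation, one can write \(u_j(a)\), \(j \in J\), instead of \(u_j(g_j(a))\). Consequently, the basic set of constraints defining general additive value functions has the following form:

\[
E^{A^R}_{\textit{BASE}}: \left\{ \begin{array}{l}
u_j(x_j^k) - u_j(x_j^{k+1}) \le 0, \ k = 1, \ldots , n_j(A)-1, \ j \in J, \\
u_j(x_j^1) = 0, \ j \in J, \\
\sum _{j \in J} u_j(x_j^{n_j(A)}) = 1.
\end{array} \right.
\]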

Instead of general monotone marginal value functions, with all criterion values as their characteristic points, we can use piecewise linear or even linear ones. As indicated by Spliet and Tervonen [36], this is desirable for practical decision support. For the formulation of the monotonicity constraints for piecewise linear marginal value functions, see, e.g., [20]. Of course, the fewer linear pieces are permitted for such marginal value functions, the smaller the capacity of the value function (1) to represent preferences.
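As a minimal sketch (not part of the original method), the Python code below evaluates the additive value function for marginal value functions given by hypothetical characteristic points, interpolated linearly between them, and checks the monotonicity and normalization conditions for that single instance. The function names and the list-of-pairs representation are illustrative assumptions.

```python
from bisect import bisect_right


def marginal_value(x, points):
    """Piecewise-linear u_j(x) interpolated between characteristic points.

    `points` is a list of (performance, marginal value) pairs sorted by
    performance; values outside the covered range are clamped to the ends.
    """
    xs = [p for p, _ in points]
    vs = [v for _, v in points]
    if x <= xs[0]:
        return vs[0]
    if x >= xs[-1]:
        return vs[-1]
    k = bisect_right(xs, x) - 1  # segment [xs[k], xs[k + 1]] containing x
    share = (x - xs[k]) / (xs[k + 1] - xs[k])
    return vs[k] + share * (vs[k + 1] - vs[k])


def additive_value(performances, marginals):
    """U(a) = sum over criteria of u_j(g_j(a))."""
    return sum(marginal_value(x, pts) for x, pts in zip(performances, marginals))


def satisfies_base(marginals, tol=1e-9):
    """Monotonicity, u_j(worst) = 0, and sum of u_j(best) = 1 for one instance."""
    monotone = all(
        v1 <= v2 + tol
        for pts in marginals
        for (_, v1), (_, v2) in zip(pts, pts[1:])
    )
    worst_is_zero = all(abs(pts[0][1]) <= tol for pts in marginals)
    best_sums_to_one = abs(sum(pts[-1][1] for pts in marginals) - 1.0) <= tol
    return monotone and worst_is_zero and best_sums_to_one


# Two criteria with hypothetical characteristic points.
marginals = [[(0, 0.0), (5, 0.2), (10, 0.6)], [(0, 0.0), (10, 0.4)]]
print(satisfies_base(marginals), round(additive_value((7.5, 5), marginals), 6))
# prints: True 0.6
```

Restricting the number of characteristic points per criterion is exactly what limits the expressiveness of the model, as noted above.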

3 Reminder on the necessary, possible, and extreme results

When using partial preference information, there is usually more than a single model compatible with the DM’s judgments. To avoid any arbitrary selection, the prevailing trend in MCDA consists in taking into account the whole set of compatible instances. Applying them to the set of alternatives A leads to identifying the necessary and possible consequences, confirmed by all or at least one compatible preference model instance, respectively. Additionally, one may compute the extreme recommendation for each alternative, indicating the result observed for it in the most and least advantageous cases. While partial preference information may take several forms, without loss of generality, in this section we refer only to the basic holistic judgments: pairwise comparisons for multiple criteria ranking and assignment examples for sorting problems. In particular, we recall the basic ideas of multiple criteria ranking and sorting with a set of value functions.

3.1 Multiple criteria ranking with a set of value functions

Pairwise comparisons When dealing with multiple criteria ranking problems, the basic indirect preference information that may be provided by the DM has the form of pairwise comparisons for some reference alternatives. The comparison of a pair \((a^*,b^*) \in B^R \subseteq A^R \times A^R \) may state the strict preference, weak preference, or indifference denoted by \(a^* \succ _{DM} b^*\), \(a^* \succsim _{DM} b^*\), and \(a^* \sim _{DM} b^*\), respectively. Let each pairwise comparison from \(B^R\) be denoted by \(B^R_k\), \(k=1,\ldots ,|B^R|\). The transition from such a reference preorder to a value function is done in the following way:

\[
\left. \begin{array}{l}
U(a^*) \ge U(b^*) + \varepsilon , \ \text{if } a^* \succ _{DM} b^*, \\
U(a^*) \ge U(b^*), \ \text{if } a^* \succsim _{DM} b^*, \\
U(a^*) = U(b^*), \ \text{if } a^* \sim _{DM} b^*,
\end{array} \right\} \ B^R_k, \ k = 1, \ldots , |B^R|,
\]

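where \(\varepsilon \) is an arbitrarily small positive value. Then, a set of value functions \(\mathcal U^{A^R}_{\textit{RANK}}\) that are able to reconstruct these judgments is defined by the following set of constraints:

\[
E^{A^R}_{\textit{RANK}}: \left\{ \begin{array}{l}
B^R_k, \ k = 1, \ldots , |B^R|, \\
E^{A^R}_{\textit{BASE}},
\end{array} \right.
\]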

where \(E^{A^R}_{\textit{BASE}}\) is the basic set of monotonicity and normalization constraints defining general additive value functions (see Sect. 2). If \(\varepsilon ^* = max \ \varepsilon \), s.t. \(E^{A^R}_{\textit{RANK}}\), is greater than 0 and \(E^{A^R}_{\textit{RANK}}\) is feasible, the set of compatible value functions is non-empty. Otherwise, the provided preference information is inconsistent with the assumed preference model.
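Determining \(\varepsilon ^*\) requires solving an LP over the whole set of value functions. For one fixed candidate value function, however, checking whether it reconstructs the DM’s pairwise comparisons is elementary. The sketch below assumes that the scores \(U(a^*)\) of the reference alternatives are already computed; the relation labels and the default value of \(\varepsilon \) are hypothetical choices.

```python
def reproduces_comparisons(values, comparisons, eps=1e-4, tol=1e-9):
    """Check the constraints B^R_k for one value function.

    values: dict mapping reference alternative -> U(a*).
    comparisons: list of (a, b, relation) with relation in
    {"strict", "weak", "indiff"}, standing for a > b, a >= b, a ~ b.
    """
    for a, b, relation in comparisons:
        diff = values[a] - values[b]
        if relation == "strict":
            ok = diff >= eps - tol
        elif relation == "weak":
            ok = diff >= -tol
        elif relation == "indiff":
            ok = abs(diff) <= tol
        else:
            raise ValueError(f"unknown relation: {relation!r}")
        if not ok:
            return False
    return True


def strict_margin(values, comparisons):
    """Smallest U(a*) - U(b*) over strict comparisons, i.e. the largest epsilon
    supported by this single instance (the LP maximizes it over all instances)."""
    diffs = [values[a] - values[b] for a, b, rel in comparisons if rel == "strict"]
    return min(diffs) if diffs else float("inf")
```

If no candidate instance yields a positive margin while satisfying the remaining constraints, that alone does not prove inconsistency; only the LP over all value functions does.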

Necessary and possible preference relations Applying all compatible value functions \(\mathcal U^{A^R}_{\textit{RANK}}\), we may define two binary relations in the set of alternatives A (see [12]):

-

Necessary weak preference relation, \(\succsim ^N\), that holds for a pair of alternatives \((a,b) \in A \times A\), in case \(U(a) \ge U(b)\) for all compatible value functions;

-

Possible weak preference relation, \(\succsim ^P\), that holds for a pair of alternatives \((a,b) \in A \times A\), in case \(U(a) \ge U(b)\) for at least one compatible value function.

The linear programs (LPs) that need to be solved to assess whether these relations hold are given by Corrente et al. [8] and Greco et al. [12].
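The exact test of \(\succsim ^N\) and \(\succsim ^P\) solves an LP for each pair. As a rough illustration only, the sketch below replaces the set of compatible value functions with a finite sample: linear marginal value functions \(u_j(x) = w_j x\) on performances rescaled to \([0, 1]\), with weights taken from a regular grid on the simplex and kept when they reproduce the DM’s strict preferences. A sample can exhibit a witness for a possible relation, but a “necessary” verdict obtained from a sample is only indicative. All names and the grid resolution are assumptions.

```python
from itertools import product


def simplex_grid(m, steps):
    """Weight vectors with entries in {0, 1/steps, ..., 1} that sum to 1."""
    for counts in product(range(steps + 1), repeat=m):
        if sum(counts) == steps:
            yield tuple(c / steps for c in counts)


def sampled_compatible_scores(perf, strict_prefs, steps=20, eps=1e-3):
    """Scores of all grid instances reproducing the DM's strict preferences.

    perf: dict alternative -> performances rescaled to [0, 1], so that
    U(a) = sum_j w_j * x_j is a normalized additive value function with
    linear marginal value functions.
    """
    m = len(next(iter(perf.values())))
    compatible = []
    for w in simplex_grid(m, steps):
        u = {a: sum(wj * xj for wj, xj in zip(w, x)) for a, x in perf.items()}
        if all(u[a] >= u[b] + eps for a, b in strict_prefs):
            compatible.append(u)
    return compatible


def necessary_possible(instances, a, b, tol=1e-9):
    """(a >=^N b, a >=^P b) evaluated on the sampled instances only."""
    holds = [u[a] >= u[b] - tol for u in instances]
    return all(holds), any(holds)
```

For instance, with a = (1, 0), b = (0, 1), c = (0.5, 0.5), d = (0.6, 0.3) and the statement a ≻ b, d is possibly but not necessarily preferred to c: \(d \succsim c\) requires \(w_1 \ge 2/3\), whereas the compatible weights only satisfy \(w_1 > 0.5\).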

Extreme ranks By considering all complete preorders established by value functions compatible with the preference information, we may also determine the best \(P^{*}(a)\) and the worst \(P_{*}(a)\) ranks attained by each alternative \(a \in A\). Identification of these extreme ranks requires solving Mixed-Integer Linear Programming (MILP) problems presented in [21].
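Exact extreme ranks call for the MILP formulations of [21]. Given a finite collection of compatible value functions (e.g., a sample), the sketch below computes the best rank with ties resolved in favour of a and the worst rank with ties resolved against it; this tie convention is an assumption. On a sample, the best rank found is never better than the true \(P^{*}(a)\), and the worst rank found is never worse than the true \(P_{*}(a)\).

```python
def best_rank(u, a, tol=1e-9):
    """1 + number of alternatives strictly better than a (ties favour a)."""
    return 1 + sum(1 for b, v in u.items() if b != a and v > u[a] + tol)


def worst_rank(u, a, tol=1e-9):
    """1 + number of other alternatives at least as good as a (ties against a)."""
    return 1 + sum(1 for b, v in u.items() if b != a and v >= u[a] - tol)


def extreme_ranks(instances, a):
    """(P^*(a), P_*(a)) over a finite collection of score dictionaries."""
    return (min(best_rank(u, a) for u in instances),
            max(worst_rank(u, a) for u in instances))


instances = [{"a": 0.6, "b": 0.5, "c": 0.4}, {"a": 0.5, "b": 0.5, "c": 0.7}]
print(extreme_ranks(instances, "a"))  # (1, 3)
```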

3.2 Multiple criteria sorting with a set of value functions

When it comes to methods designed for dealing with multiple criteria sorting problems, let us focus on a threshold-based sorting procedure, where the limits between consecutive classes \(C_h\), \(h=1,\ldots , p\), are defined by a vector of thresholds \(\mathbf t = \{ t_1, \ldots , t_{p-1} \}\) such that \(0 < t_1 < \ldots < t_{p-1} < 1 \), and \(t_{h-1}\) and \(t_h\) are, respectively, the lower and the upper threshold of class \(C_h, \ h =2, \ldots , p-1\) (see, e.g., [45]). These thresholds concern the scores assigned to alternatives by a value function. Note that \(t_1\) is the upper threshold of class \(C_1\), whose lower threshold is \(t_0 = 0\), and \(t_{p-1}\) is the lower threshold of class \(C_p\), whose upper threshold \(t_p\) is greater than 1. Thus, the DM’s preferences are represented with a pair \((U,\mathbf t )\), where U is an additive value function and \(\mathbf t \) is a vector of thresholds delimiting the classes. Alternative a is assigned to class \(C_h\) \((a \rightarrow C_h)\) iff \(t_{h-1} \le U(a) < t_{h}\).
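The assignment rule for a single pair \((U, \mathbf t)\) can be sketched in a few lines of Python. The sketch assumes half-open class intervals \(t_{h-1} \le U(a) < t_h\), with \(t_0 = 0\) and \(t_p > 1\), so that every score falls into exactly one class; the function name is hypothetical.

```python
from bisect import bisect_right


def assign(score, thresholds):
    """Index h of the class C_h with t_{h-1} <= score < t_h.

    thresholds: increasing list [t_1, ..., t_{p-1}] inside (0, 1); a score
    of 1 (or any score >= t_{p-1}) lands in the best class C_p.
    """
    return bisect_right(thresholds, score) + 1


t = [0.3, 0.6]  # three classes C_1, C_2, C_3
print([assign(s, t) for s in (0.0, 0.3, 0.59, 0.6, 1.0)])  # [1, 2, 2, 3, 3]
```

Using `bisect_right` places a score equal to a threshold \(t_h\) in the better class \(C_{h+1}\), matching the half-open convention.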

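Assignment examples The basic indirect preference information that may be provided by the DM dealing with multiple criteria sorting has the form of possibly imprecise assignment examples. Each assignment example consists of a reference alternative \(a^* \in A^R\) and its desired assignment \([L_{DM}(a^*), R_{DM}(a^*)]\), with \(L_{DM}(a^*) \le R_{DM}(a^*)\). Let each assignment example be denoted by \(A^R_k\), \(k=1, \ldots , |A^R|\). These assignment examples are translated to the following constraints:

\[
E^{A^R}_{\textit{SORT}}: \left\{ \begin{array}{l}
\left. \begin{array}{l} U(a^*) \ge t_{L_{DM}(a^*)-1}, \\ U(a^*) + \varepsilon \le t_{R_{DM}(a^*)}, \end{array} \right\} \ A^R_k, \ k = 1, \ldots , |A^R|, \\
t_1 \ge \varepsilon , \quad t_{h-1} + \varepsilon \le t_h, \ h = 2, \ldots , p-1, \quad t_{p-1} + \varepsilon \le 1, \\
E^{A^R}_{\textit{BASE}},
\end{array} \right.
\]

where \(t_0 = 0\) and the upper bound is omitted when \(R_{DM}(a^*) = p\).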

The set of pairs \((\mathcal U,\mathbf t )^{A^R}_{\textit{SORT}}\) compatible with the provided assignment examples is non-empty, if \(\varepsilon ^* = max \ \varepsilon \), s.t. \(E^{A^R}_{\textit{SORT}}\), is greater than 0 and \(E^{A^R}_{\textit{SORT}}\) is feasible.

Necessary and possible assignments Given a set \(A^R\) of assignment examples and a corresponding set of compatible pairs \((\mathcal U,\mathbf t )^{A^R}_{\textit{SORT}}\), for each alternative \(a \in A\) (see [13, 26]):

-

The possible assignment \(C_P(a) = [L_P(a), R_P(a)]\) is defined as the set of indices of classes \(C_h\) for which there exists at least one compatible pair \((U,\mathbf t )\) assigning a to \(C_h\) (denoted by \(a \rightarrow ^P C_h\));

-

The necessary assignment \(C_N(a) = [L_N(a), R_N(a)]\) is defined as the set of indices of classes \(C_h\) for which all compatible pairs \((U,\mathbf t )\) assign a to \(C_h\) (denoted by \(a \rightarrow ^N C_h\)).

The linear programs for computing the possible and necessary assignments are given by Greco et al. [13] and Kadziński and Tervonen [26].
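The possible and necessary assignments are computed exactly with the LPs of [13, 26]. Over a finite collection of compatible pairs \((U, \mathbf t)\), such as a sample, they reduce to collecting the classes each pair assigns to a, as in the sketch below. It includes a hypothetical assignment rule with half-open class intervals; with one precise class per pair, the necessary set is either a single class or empty. On a sample, the possible interval may be too narrow and the necessary set too large.

```python
from bisect import bisect_right


def assign(score, thresholds):
    """Class index h with t_{h-1} <= score < t_h (half-open intervals)."""
    return bisect_right(thresholds, score) + 1


def possible_necessary_assignment(instances, a):
    """Possible interval [L_P(a), R_P(a)] and necessary classes of a.

    instances: list of (scores, thresholds) pairs, one per compatible (U, t),
    where scores maps each alternative to its value U(.).
    """
    classes = {assign(scores[a], t) for scores, t in instances}
    possible = (min(classes), max(classes))
    # A class is necessary only if every pair assigns a to it.
    necessary = set(classes) if len(classes) == 1 else set()
    return possible, necessary
```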

Necessary and possible assignment-based preference relations Given a set of compatible preference model instances, the possible assignment-based preference relation \(a \succsim ^{\rightarrow ,P} b\) holds if a is assigned to a class at least as good as the class of b for at least one compatible model, and the necessary assignment-based preference relation \(a \succsim ^{\rightarrow ,N} b\) is true if a is assigned to a class at least as good as the class of b for all compatible models. To verify the truth or falsity of these relations, we need to consider the MILP problems presented by Kadziński and Tervonen [26].
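Exact verification requires the MILP problems of [26]; on a finite collection of compatible pairs \((U, \mathbf t)\), the two relations reduce to the check sketched below. The half-open assignment rule and the function names are assumptions made for illustration.

```python
from bisect import bisect_right


def assign(score, thresholds):
    """Class index h with t_{h-1} <= score < t_h (half-open intervals)."""
    return bisect_right(thresholds, score) + 1


def assignment_based_preference(instances, a, b):
    """(a >=^{->,N} b, a >=^{->,P} b) on a finite collection of (U, t) pairs.

    instances: list of (scores, thresholds) pairs; scores maps alternatives
    to their values U(.) under the corresponding value function.
    """
    holds = [assign(scores[a], t) >= assign(scores[b], t) for scores, t in instances]
    return all(holds), any(holds)
```

Note that a and b are compared under the same pair \((U, \mathbf t)\) in each check, which is what distinguishes this relation from simply comparing the possible assignments of a and b.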

4 Review of multiple criteria sensitivity analysis methods investigating the impact of varying the performance values on the delivered recommendation

The majority of multiple criteria sensitivity analysis methods assess the impact of uncertainty in the preference model parameters (e.g., criteria weights) on the delivered recommendation [7]. In this section, we review a few existing approaches that investigate the influence of variability in the performance values on the ranking of alternatives. Then, we refer to their real-world applications.

The early sensitivity analysis methods in this stream are limited to varying the alternative’s performance value on a single criterion. In particular, when using the PROMETHEE method, Wolters and Mareschal [41] verified how much a selected value needs to be improved to make the considered alternative ranked first. Considering three different multiple criteria aggregation procedures (the weighted sum model, the weighted product model, and the Analytic Hierarchy Process (AHP)), Triantaphyllou and Sanchez [39] investigated the minimum change in a performance value that leads to an inversion of the currently observed preference relation for a pair of alternatives. These results were further used to indicate how critical the various performance values (on a single criterion at a time) are for the rank attained by the alternative.
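For the weighted sum model only, the single-criterion question has a closed form: since U is linear, changing \(g_j(a)\) by \(\delta \) changes U(a) by \(w_j \delta \), so the change that brings a level with b is \(\delta _j = (U(b) - U(a))/w_j\). The sketch below computes this in absolute units and picks the criterion needing the smallest absolute change. It illustrates the idea and is not the exact procedure of [39]; the function names are hypothetical.

```python
def single_criterion_change(perf_a, perf_b, weights, j):
    """Change of a's performance on criterion j alone that makes U(a) = U(b)
    under the weighted sum U(x) = sum_j w_j * x_j; None if w_j == 0.

    A positive result is the improvement a needs to catch up with b; a
    negative one is how much a can lose on g_j before reaching a tie.
    """
    if weights[j] == 0:
        return None
    u_a = sum(w * x for w, x in zip(weights, perf_a))
    u_b = sum(w * x for w, x in zip(weights, perf_b))
    return (u_b - u_a) / weights[j]


def most_critical_criterion(perf_a, perf_b, weights):
    """Criterion on which the smallest absolute change produces a tie."""
    changes = {
        j: single_criterion_change(perf_a, perf_b, weights, j)
        for j in range(len(weights))
        if weights[j] > 0
    }
    return min(changes, key=lambda j: abs(changes[j]))
```

With weights (0.5, 0.3, 0.2), a = (6, 4, 5) and b = (5, 7, 4), U(a) = 5.2 and U(b) = 5.4, so a needs +0.4 on \(g_1\), about +0.67 on \(g_2\), or +1.0 on \(g_3\) to tie with b.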

The more recent methods for sensitivity analysis admit simultaneous variation of several performance values. Hyde and Maier [17, 18] determined the minimum modification of the performances that improves the score of an alternative so that it becomes weakly preferred to another alternative. This result is derived by solving an optimization problem that minimizes the distance between the original and the optimized performance values. Both PROMETHEE and the weighted sum method were considered in this setting. Moreover, the user could choose among different distance metrics, including the Euclidean, Manhattan, and Kullback-Leibler distances. In the same spirit, Beynon and Barton [4] and Beynon and Wells [5] identified the minimum (lean) changes to the criteria values of a lower ranked alternative that improve its rank to that of a comparatively higher ranked alternative. Precisely, they minimized a Euclidean distance in the context of PROMETHEE.
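When the weighted sum is combined with the Euclidean distance and the bounds of the performance scales are ignored, the minimum-modification problem has a closed-form solution: the set of performance vectors x with \(\sum _j w_j x_j \ge U(b)\) is a half-space, so the nearest point is the orthogonal projection of a onto its boundary, i.e., \(\delta = (U(b) - U(a))\, w / \Vert w \Vert ^2\). The sketch below covers only this special case; the cited methods solve general optimization problems, including for PROMETHEE and other distances.

```python
def minimal_euclidean_change(perf_a, perf_b, weights):
    """Smallest (Euclidean) change of all of a's performances such that
    U(a) >= U(b) under the weighted sum U(x) = sum_j w_j * x_j.

    The feasible set {x : w.x >= U(b)} is a half-space, so the optimum is the
    orthogonal projection of a onto its boundary; bounds of the performance
    scales are ignored here.
    """
    u_a = sum(w * x for w, x in zip(weights, perf_a))
    u_b = sum(w * x for w, x in zip(weights, perf_b))
    gap = u_b - u_a
    if gap <= 0:
        return [0.0] * len(perf_a)  # a is already weakly preferred to b
    norm_sq = sum(w * w for w in weights)
    return [gap * w / norm_sq for w in weights]
```

For weights (0.5, 0.3, 0.2), a = (6, 4, 5) and b = (5, 7, 4), the gap is U(b) - U(a) = 0.2 and the total Euclidean change is \(0.2/\sqrt{0.38} \approx 0.32\), smaller than the change of 0.4 required if only \(g_1\) is modified.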

Yet another stream of algorithms admits variation of original performance values and investigates the consequence of thus imposed uncertainty using Monte Carlo simulation (see, e.g., [17, 32]). In this way, one can check the synthetic effect of varying different performance values (within some admissible pre-defined range or with respect to an assumed distribution) on attaining some target rank or comprehensive score.
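A minimal Monte Carlo sketch in this spirit: every performance is perturbed by uniform noise within a hypothetical ±spread, alternatives are scored with a weighted sum, and the share of runs in which the target alternative is ranked first (ties counted as success) estimates how stable that top rank is. The weighted sum, the uniform distribution, and the fixed seed are assumptions made for illustration.

```python
import random


def weighted_sum(x, weights):
    """Comprehensive score of a performance vector under fixed weights."""
    return sum(w * xj for w, xj in zip(weights, x))


def probability_of_top_rank(perf, weights, target, spread, trials=10_000, seed=1):
    """Share of simulations in which `target` has the highest weighted-sum
    score after every performance is perturbed by Uniform(-spread, spread)."""
    rng = random.Random(seed)  # fixed seed for reproducible estimates
    hits = 0
    for _ in range(trials):
        scores = {
            alt: weighted_sum([x + rng.uniform(-spread, spread) for x in xs], weights)
            for alt, xs in perf.items()
        }
        if scores[target] >= max(scores.values()):
            hits += 1
    return hits / trials
```

With spread set to 0 the estimate collapses to 0 or 1, i.e., to the deterministic ranking; increasing the spread shows how quickly a top rank erodes under uncertain performances.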

The above-mentioned approaches have been used in a few management and environmental applications. These can be treated as exemplary real-world problems to which our post factum analysis can be applied. We divide these applications into two groups depending on the type of a ranking-specific target they considered:

-

Attaining a particular rank (either the top rank or an arbitrarily selected position):

-

Wolters and Mareschal [41] considered different designs of a heat exchanger network in terms of modifying the underlying investment costs. The results were used for granting additional funds to a specific alternative.

-

Beynon and Wells [5] considered a set of motor vehicles in terms of the minimum necessary engineering performance modification in their emission levels. The results were interpreted as the guidelines to be attained by future engineering work in the process of engine calibration and other adjustments.

-

Beynon and Barton [4] considered a set of individual police forces in the United Kingdom in terms of the minimum changes to sanction detection levels (clear-up rates) that need to be attained. The outcomes were useful for strategy planning, offering quantitative evidence for a course of action.


-

Inversion of a pairwise preference relation (attaining a rank equivalence):

-

Hyde and Maier [17] considered alternative water management options in terms of changing their environmental (e.g., efficient water use and reuse), social (e.g., clean industry and employment), and economic (e.g., true or full cost pricing) factors. Their results were helpful in determining the development strategy that offers a more sustainable future for the area.

-

Ravalico et al. [31] considered different locations of a salt interception scheme (SIS) on the Murray River in terms of changing the parameters related to the travel time and dead storage in the reaches of the river between some locks. This allowed them to assess the sensitivity of the preferred location of each SIS and to give the DMs a level of confidence in the ranking of these alternatives.

-

Chen et al. [7] considered a set of management options of diffuse pollution in the Taman river catchment in terms of adjusting the application of fertilisers, stocking density, and land use distribution, restoring wetland area, and increasing the area of non-farmed land. The outcomes were used to judge the robustness of the original results with respect to variation of performance values.

-

Rocco and Tarantola [32] considered a set of public investments in refineries, bridges, or petroleum exploration in terms of modifying their financial, economic, and social aspects (e.g., benefit-cost ratio, compensation of employees or remunerations, and employment).


5 Post factum analysis

As said before, exploiting the set of preference model instances compatible with the DM’s preferences results in the necessary, possible, and extreme recommendations. Their analysis may stimulate questions concerning the stability of the provided outcomes and the conditions under which some parts of the considered recommendation become or remain true. We call the proposed framework “post factum analysis”, because it should be employed when some recommendation has already been produced as a result of an interaction between the DM (possibly assisted by an analyst) and the method.

In this section, we discuss different elements of this framework corresponding to a variety of targets to be achieved by the alternatives. These targets are distinguished by the type of recommendation considered, the certainty level of the results, and the currently observed outcomes. As far as the first aspect is concerned, we propose to analyze conditions granting the following targets:

-

in case of multiple criteria ranking:

-

the truth of a preference relation for an ordered pair of alternatives: \(a \succsim b\),

-

the truth of a preference of an alternative with respect to a group of at least two other alternatives: \(a \succsim b, \forall b \in A'\),

-

attaining a particular rank: \(P^*(a) \le k\) or \(P_*(a) \le k\),


-

in case of multiple criteria sorting:

-

the truth of an assignment-based preference relation for an ordered pair of alternatives: \(a \succsim ^{\rightarrow } b\),

-

attaining a particular class assignment: \(L_P(a) \ge k\) or \(R_P(a) \ge k\).


As far as the certainty level of the results is concerned, we will consider the above five targets in view of their achievement for at least one or for all preference model instances compatible with the DM’s preference information. Obviously, achieving a certain target in the possible sense is easier than in the necessary sense. Finally, depending on the currently delivered recommendation, real-world experience indicates interest in two types of questions. The first one deals with quantifying either the improvement that needs to be made or the gap that has to be covered to reach a certain target. The other one is about estimating the potential deterioration that could be afforded, or measuring an existing redundancy, in case some target is already attained.
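The common-factor idea borrowed from DEA’s radial measures can be sketched for the target \(a \succsim b\) as follows. For each value function in a finite collection (e.g., a sample of compatible instances), bisection finds the smallest factor s by which all performances of a must be multiplied so that \(U(a) \ge U(b)\); s > 1 is a required improvement and s ≤ 1 an admissible deterioration. Over the collection, the possible factor is the minimum and the necessary factor the maximum. The sketch assumes non-negative performances on gain criteria and non-decreasing value functions, so that the condition is monotone in s; the bound `s_max` and all names are hypothetical, and this sampled version only approximates the procedures of Sects. 5.2.1 and 5.2.3.

```python
import math


def threshold_factor(u, perf_a, perf_b, s_max=10.0, iters=60):
    """Smallest s in [0, s_max] with u(s * g(a)) >= u(g(b)); inf if none.

    Assumes u is non-decreasing in each performance and performances are
    non-negative, so the condition is monotone in s and bisection applies.
    """
    target = u(perf_b)

    def reached(s):
        return u([s * x for x in perf_a]) >= target

    if not reached(s_max):
        return math.inf
    if reached(0.0):
        return 0.0
    lo, hi = 0.0, s_max  # invariant: not reached(lo), reached(hi)
    for _ in range(iters):
        mid = (lo + hi) / 2
        if reached(mid):
            hi = mid
        else:
            lo = mid
    return hi


def possible_necessary_factor(instances, perf_a, perf_b):
    """(possible, necessary) common factors for a >= b over the instances."""
    factors = [threshold_factor(u, perf_a, perf_b) for u in instances]
    return min(factors), max(factors)


# Two hypothetical compatible value functions (weighted sums).
instances = [lambda x: 0.7 * x[0] + 0.3 * x[1], lambda x: 0.3 * x[0] + 0.7 * x[1]]
print(possible_necessary_factor(instances, (4, 2), (5, 5)))
```

Here the possible factor is 5/3.4 ≈ 1.47 and the necessary one 5/2.6 ≈ 1.92: improving all performances of a by about 47% suffices for one of the two functions, and by about 92% for both.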

We will analyze conditions that need to be satisfied to achieve or preserve some target from two perspectives. Firstly, we will take into account performances of alternatives. Secondly, we will investigate the change of a comprehensive value (score) of an alternative rather than direct improvement or deterioration of its performances. These complementary perspectives offer a diverse view on the robustness of the delivered recommendation.
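For the score perspective, a minimal sketch over a finite collection of compatible value functions is given below for the target \(a \succsim b\). It assumes that the score of a is shifted by the same amount under every instance; the signed margin \(U(a) - U(b)\) is then split into a missing value (score a lacks) and a surplus (score a can lose while the target still holds). The possible level uses the most favourable instance and the necessary level the least favourable one; the function name is hypothetical.

```python
def missing_and_surplus(instances, a, b):
    """Comprehensive-score view of the target a >= b.

    instances: list of dicts mapping alternatives to U(.) for a finite
    collection of compatible value functions.
    """
    margins = [u[a] - u[b] for u in instances]
    levels = {"possible": max(margins), "necessary": min(margins)}
    return {
        level: {"missing": max(0.0, -m), "surplus": max(0.0, m)}
        for level, m in levels.items()
    }
```

For instance, with the two score vectors {a: 0.4, b: 0.5} and {a: 0.6, b: 0.5}, a ≿ b holds possibly with a surplus of 0.1, while the necessary relation misses 0.1 of score.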

In this section, we formalize the framework of post factum analysis by introducing the notions of the possible and necessary improvement, deterioration, missing value, and surplus value. Let us emphasize that all definitions are conditioned on the use of both a particular preference model and the preference information provided by the DM. We also present the procedures for computing these measures for each of the five considered targets, referring to the truth of a preference relation, an assignment-based preference relation, or a group of preference relations, as well as to attaining some rank or assignment.

By proposing the framework of post factum analysis, we generalize the existing approaches which investigate the impact of a change in performance values on the delivered recommendation (see Sect. 4) in the following aspects:

-

We consider a set of preference model instances compatible with the DM’s indirect preference information for deriving the base recommendation (see Sect. 3) and for subsequently formulating the targets to be attained. In contrast, the vast majority of existing approaches employ just a single preference model instance with precise parameter values, thus failing to investigate the impact of varying the alternatives’ performances or scores on the robustness of the delivered recommendation.

-

We formulate a wide spectrum of targets, taking into account the specificity of both multiple criteria ranking and sorting problems. We extend the types of targets that can be considered in the context of the former, and propose targets that are of interest in the context of the latter.

-

Apart from investigating the improvement that needs to be made to attain some target (see Sects. 5.2.1, 5.3.1), we enrich the existing methods for sensitivity analysis by considering the admissible deterioration under which an already attained target is maintained (see Sects. 5.2.3, 5.3.2).

-

We investigate two scenarios of attaining or maintaining the targets: the necessary one (for all compatible preference model instances) and the possible one (for at least one compatible preference model instance). A related setting has been discussed in [31], where the question of changing the rank of an alternative is considered by dividing the space of compatible preference model instances into three regions: one where the preference relation is unaffected, one where it is inverted, and the boundary region separating them.

-

We discuss different procedures for changing the alternative’s performance values (see Sect. 5.2.2), primarily focusing on adapting the approach postulated in DEA which consists in multiplying the performances by a common factor (see Sects. 5.2.1, 5.2.3).

-

Apart from assessing the impact of varying the performance values (see Sect. 5.2), we show how to attain or maintain some target by directly changing the alternative’s comprehensive score (value) (see Sect. 5.3). Such a change may be either treated as a result per se or further decomposed into the required or admissible variation of performance values. A related algorithm, which admits varying a marginal score on a single criterion only, has been proposed in [7].

5.1 Notation used in post factum analysis

We will use the following notation for the results of post factum analysis:

\(W^{X}_{Y}(Z),\)

where:

-

\(W \in \{ \rho , u \}\), with \(\rho \) representing the change of alternative’s performances and u representing the change of alternative’s comprehensive value;

-

\(X \in \{P, N\}\), with P and N representing, respectively, the possibility or the necessity of achieving/preserving a target;

-

\(Y \in \{>, <\}\), with \(>\) representing investigation of an improvement that needs to be made in case some target has to be achieved, and \(<\) representing investigation of a deterioration that can be afforded in case some target needs to be preserved;

-

\(Z \in \{(a \succsim b), (a \succsim b, \forall b \in A'), (P^*(a) \le k), (P_*(a) \le k), (R_P(a) \ge k), (L_P(a) \ge k), (a \succsim ^{\rightarrow } b) \}\) represents the target under consideration.

For example:

-

\(\rho ^P_{>}(P^*(a) \le k)\) is the rank-related possible improvement, i.e., the improvement \((>)\) of the performances of a \((\rho )\) that is required so that its best rank \((P^*(a))\) is not worse than k for at least one compatible value function (P);

-

\(u^N_{<}(a \succsim b)\) is the preference-related necessary surplus value, i.e., the maximal value (u) that, when subtracted \((<)\) from the comprehensive value of a, still admits a being preferred to b \((a \succsim b)\) for all compatible value functions (N).

5.2 Changing performances of an alternative

In this subsection, we will analyze the change of alternative’s performances that needs to be made to achieve or preserve some target. On one hand, when a certain target needs to be achieved, it means that current performances of an alternative on all or some criteria are not sufficiently good and should be improved. Obviously, we are interested in the minimal improvement that guarantees achieving a designated target. On the other hand, if some target has been already acquired, an alternative can possibly afford some deterioration of its performances not exerting an influence on the target maintenance. In this case, the maximal deterioration for which a target is still preserved is of interest to the DM.

Although, in general, one may consider different means for quantifying the improvement or deterioration, we believe that the answer provided to the DM should be as simple as possible. Thus, we focus on considering radial increases and decreases of the performances on different criteria by multiplying them by a common factor, respectively, greater or less than one. This approach has been postulated in DEA where the DMU’s outputs or inputs are multiplied by the common coefficient. Its value indicates whether the unit is efficient, i.e., if it is ranked first for some feasible output and input weights. Nevertheless, we also discuss some other distance metrics that can be employed within the proposed framework.

5.2.1 Possible and necessary improvement

Definition 1

Assume that some target is not attained by an alternative \(a \in A\) in the set of preference model instances compatible with DM’s preference information. A comprehensive possible (necessary) improvement for a in view of acquiring this target is the minimal real number greater than one by which the performances of a on all criteria need to be multiplied so that the target is achieved for at least one (all) compatible preference model instance. Depending on the type of considered target, we distinguish:

-

preference-related possible (necessary) improvement \(\rho ^P_{>}(a \succsim b)\) \((\rho ^N_{>}(a \succsim b))\) in case \(not(a \succsim ^P~b)\) \((not(a \succsim ^N b))\), i.e., a is not possibly (necessarily) preferred to b, and it needs to improve its performances so that \(a \succsim ^P b\) \((a \succsim ^N b)\);

-

group preference-related possible (necessary) improvement \(\rho ^P_{>}(a \succsim b, \forall b \in A')\) \((\rho ^N_{>}(a \succsim ~b, \forall b \in ~A'))\) in case \(\exists b \in A' \subseteq A,\) \(not(a \succsim ^P b)\) \((not(a \succsim ^N b))\), i.e., a is not possibly (necessarily) preferred to at least one alternative b from a subset of alternatives \(A' \subseteq A\), and it needs to improve its performances so that \(\forall b \in A'\), \(a \succsim ^P b\) \((a \succsim ^N b)\);

-

rank-related possible (necessary) improvement \(\rho ^P_{>}(P^*(a) \le k)\) \((\rho ^N_{>}(P_*(a) \le k))\) in case \(P^*(a) >~k\) \((P_*(a) > k)\), i.e., the best (worst) rank of a is worse than k, and a needs to improve its performances so that \(P^*(a) \le k\) \((P_*(a) \le k)\);

-

assignment-related possible (necessary) improvement \(\rho ^P_{>}(R_P(a) \ge k)\) \((\rho ^N_{>}(L_P(a)\ge k))\) in case \(R_P(a) < k\) \((L_P(a) < k)\), i.e., the best (worst) possible class of a is worse than \(C_k\), and a needs to improve its performances so that \(R_P(a) \ge k\) \((L_P(a) \ge k)\);

-

preference-related assignment-based possible (necessary) improvement \(\rho ^P_{>}(a~\succsim ^{\rightarrow }~b)\) \((\rho ^N_{>}(a \succsim ^{\rightarrow }~b))\) in case \(not(a \succsim ^{P,\rightarrow } b)\) \((not(a \succsim ^{N, \rightarrow } b))\), i.e., a is not possibly (necessarily) assigned to a class at least as good as b, and it needs to improve its performances so that \(a \succsim ^{P, \rightarrow } b\) \((a \succsim ^{N, \rightarrow } b)\).

Computation of the comprehensive possible improvement requires solving the following non-linear optimization problem:

s.t.:

where \(E(A^{\rho }_{a})\) is the constraint set defining compatible preference model instances when considering the set of alternatives \(A^{\rho }_{a} = A \cup \{a^{\rho }\} \setminus \{a\}\), such that \(a^{\rho }\) is obtained by multiplying the performances of a on all criteria by \(\rho \). We need to consider \(E(A^{\rho }_{a})\) instead of E(A), because a is replaced with a new alternative \(a^{\rho }\), whose marginal values need to satisfy the monotonicity and normalization constraints as well as the constraints corresponding to the DM’s preference information. Thus, the non-linearity of the above optimization problem stems from the fact that we multiply the performances of a, which are subsequently transformed into the respective marginal values. Constraints related to achieving particular targets are the following:

-

for preference-related possible improvement: \(U(a^{\rho }) \ge U(b)\), which guarantees \(a^{\rho } \succsim ^P b\);

-

for group preference-related possible improvement: for all \(b \in A'\), \(U(a^{\rho }) \ge U(b)\), which guarantees \(a^{\rho } \succsim ^P b\) for all \(b \in A'\);

-

for rank-related possible improvement: constraint \(U(a^{\rho }) \ge U(b)\) can be relaxed only for up to \(k-1\) alternatives \(b \in A \setminus \{a\}\), which guarantees that at most \(k-1\) alternatives are at the same time ranked better than \(a^{\rho }\), i.e., \(a^{\rho }\) attains at least k-th rank;

-

for assignment-related possible improvement: \(U(a^{\rho }) \ge b_{k-1}\), which guarantees that \(a^{\rho }\) is assigned to a class not worse than \(C_k\);

-

for preference-related assignment-based possible improvement: \(U(a^{\rho }) \ge b_{h-1}\) and \(U(b) < b_{h}\) for some \(h \in \{1, \ldots , p\}\), which guarantees \(a^{\rho } \succsim ^{P,\rightarrow } b\).
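To make the target constraints listed above concrete, the sketch below evaluates each target for one fixed value function and one fixed set of class thresholds. It is a minimal illustration under stated assumptions, not the optimization itself: the function names and all numbers are hypothetical, the thresholds are passed as the inner values \(b_1 < \ldots < b_{p-1}\), and the rank counts only strictly better alternatives. For a single instance, the condition "\(U(a) \ge b_{h-1}\) and \(U(b) < b_h\) for some h" is equivalent to comparing the classes of a and b, which is what `assignment_based_preference` does.

```python
from typing import Dict, List, Sequence

# Hypothetical helpers, for illustration only: one fixed compatible instance is
# represented by the comprehensive values U[x] of all alternatives and, for
# sorting, by the inner class thresholds b_1 < ... < b_{p-1}.


def assigned_class(u: float, thresholds: Sequence[float]) -> int:
    """Class index h (1 = worst) such that b_{h-1} <= u < b_h."""
    return 1 + sum(1 for t in thresholds if u >= t)


def preference(U: Dict[str, float], a: str, b: str) -> bool:
    """Preference-related target: U(a) >= U(b)."""
    return U[a] >= U[b]


def group_preference(U: Dict[str, float], a: str, others: List[str]) -> bool:
    """Group preference-related target: U(a) >= U(b) for all b in A'."""
    return all(U[a] >= U[b] for b in others)


def rank(U: Dict[str, float], a: str) -> int:
    """Rank of a: one plus the number of alternatives with a strictly greater value."""
    return 1 + sum(1 for x, v in U.items() if x != a and v > U[a])


def class_at_least(U: Dict[str, float], a: str,
                   thresholds: Sequence[float], k: int) -> bool:
    """Assignment-related target: U(a) >= b_{k-1} (trivially true for k = 1)."""
    return k == 1 or U[a] >= thresholds[k - 2]


def assignment_based_preference(U: Dict[str, float], a: str, b: str,
                                thresholds: Sequence[float]) -> bool:
    """a is assigned to a class at least as good as the class of b."""
    return assigned_class(U[a], thresholds) >= assigned_class(U[b], thresholds)


U = {"a": 0.45, "b": 0.40, "c": 0.20, "d": 0.80}   # hypothetical values
thresholds = [0.3, 0.5, 0.7]                        # hypothetical b_1, b_2, b_3
print(assigned_class(U["a"], thresholds), rank(U, "a"))   # 2 2
```

In the actual procedure these conditions appear as constraints on the value function and thresholds, which are decision variables rather than fixed numbers.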

Problem (4), being non-linear, cannot be solved efficiently with contemporary solvers. Nevertheless, it can be easily solved using the binary search (bisection) method (see Algorithm 1 for the pseudo-code). It repeatedly bisects the interval \([1, \text{ max }_{j \in J} \{g_j^*/g_j(a)\}]\) and selects the subinterval in which the sought minimal \(\rho ^P_{>}\) must lie for further processing. This is achieved by testing whether \(E^{possible}_{improvement}(a, \rho )\) is feasible for the midpoint \(\rho \) of the current interval. If so, this \(\rho \) suffices to attain the target, and the search continues in the lower subinterval; otherwise, it continues in the upper one. The binary search identifies the solution with the required precision \(\gamma \). For some recent uses of the binary search in optimization, see, e.g., [14, 15].

Although different methods could be used to solve this problem, the binary search has been selected because it is guaranteed to converge, the error of the identified solution can be controlled, and the algorithm can be easily explained to non-specialists in computer science and mathematics. Moreover, its application is supported by specific characteristics of the considered problem. First, if the target under consideration can be achieved, there exists only a single solution. As a result, the binary search is guaranteed to identify it, and there is no need for methods that detect multiple solutions. Second, even though the convergence of the binary search is generally slow, in post factum analysis the targets to be analyzed should be indicated by the DM, and in most real-world problems only a limited number of such targets would be of particular interest to her/him.
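The bisection step described above can be sketched as follows, assuming that feasibility is monotone in \(\rho \) (once the target is attainable, it remains attainable for any larger multiplier). The callable `feasible` is a hypothetical stand-in for an LP feasibility check of \(E^{possible}_{improvement}(a, \rho )\); it is not part of any existing library, and the sample performances are invented.

```python
from typing import Callable


def minimal_multiplier(feasible: Callable[[float], bool],
                       lo: float, hi: float, gamma: float = 1e-6) -> float:
    """Bisection for the smallest rho in [lo, hi] such that feasible(rho) holds.

    Assumes feasibility is monotone in rho. `feasible` is a hypothetical
    stand-in for an LP feasibility check of E_improvement^possible(a, rho).
    """
    if not feasible(hi):
        raise ValueError("target not attainable even at the upper bound")
    while hi - lo > gamma:
        mid = (lo + hi) / 2.0
        if feasible(mid):
            hi = mid   # mid suffices: the minimum lies in [lo, mid]
        else:
            lo = mid   # mid is too small: the minimum lies in [mid, hi]
    return hi          # at most gamma above the true minimum


# Upper bound max_j g_j^* / g_j(a) for hypothetical performances g(a) and maxima g^*.
g_a, g_star = [4.0, 5.0], [10.0, 8.0]
upper = max(gs / ga for gs, ga in zip(g_star, g_a))   # 2.5
rho = minimal_multiplier(lambda r: r >= 1.37, 1.0, upper)
```

Returning the upper end of the final interval keeps the answer on the feasible side. The same routine could serve the necessary improvement by passing a predicate that returns True when the opposite-target constraint set is infeasible.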

Let us provide a few important remarks concerning the computation of the possible comprehensive improvement. First of all, the need for identifying the improvement exists iff some target is not attained with the current performance vector. That is why the lower bound for the search can be set to one, and the search is guaranteed to return \(\rho ^P_{>} > 1\) as the solution. Secondly, when multiplying an evaluation \(g_j(a)\) by a value \(\rho ^P_{>} > 1\), it is possible that \(\rho ^P_{>} \cdot g_j(a) > g_j^*\). We assume, however, that an alternative cannot reach an evaluation greater than \(g_j^*\). Thus, when \(\rho ^P_{>} \cdot g_j(a) > g_j^*\), we assume that \(u_j(\rho ^P_{>} \cdot g_j(a))\) is equal to the maximal marginal value on criterion \(g_j\), \(u_j(g_j^*)\).
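A minimal sketch of how the capped comprehensive value \(U(a^{\rho })\) could be evaluated, assuming linear marginal value functions with hypothetical weights (the general model admits other monotone shapes). Performances multiplied beyond \(g_j^*\) are clipped, as stated above; clipping at \(g_{j,*}\) is also included so that the same helper serves deteriorations. The optional `improved` argument restricts the multiplication to a subset of criteria, as needed for the partial improvement discussed next.

```python
from typing import Optional, Sequence, Set


def value_of_improved(g: Sequence[float], rho: float,
                      g_min: Sequence[float], g_max: Sequence[float],
                      weights: Sequence[float],
                      improved: Optional[Set[int]] = None) -> float:
    """U(a^rho) with capped performances, assuming linear marginal value functions.

    u_j(x) = w_j * (x - g_{j,*}) / (g_j^* - g_{j,*}); the weights w_j are
    hypothetical and sum to one. Only criteria whose index is in `improved`
    are multiplied by rho (all criteria when `improved` is None).
    """
    total = 0.0
    for j, (gj, lo, hi, w) in enumerate(zip(g, g_min, g_max, weights)):
        x = gj * rho if improved is None or j in improved else gj
        x = min(max(x, lo), hi)            # stay within [g_{j,*}, g_j^*]
        total += w * (x - lo) / (hi - lo)  # linear marginal value u_j(x)
    return total


print(value_of_improved([4.0, 8.0], 1.5, [2.0, 2.0], [10.0, 10.0], [0.5, 0.5]))  # 0.75
```

In the example, the second performance (8 multiplied by 1.5) is clipped at 10, so it contributes its full weight of 0.5.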

Apart from considering the comprehensive improvement of the performances on all criteria, it may be interesting for the DM to compute a partial possible improvement \(\rho ^P_{>, G_{\rho }}\) on a selected subset of criteria \(G_{\rho } \subseteq G\) only. The algorithm for identifying such a partial improvement is the same as for the comprehensive one, with the proviso that the comprehensive value of \(a^{\rho }\) is expressed as:

$$\begin{aligned} U(a^{\rho }) = \sum _{g_j \in G_{\rho }} u_j(\rho \cdot g_j(a)) + \sum _{g_j \in G \setminus G_{\rho }} u_j(g_j(a)). \end{aligned}$$

Obviously, there is no guarantee that improving performances only on criteria from subset \(G_{\rho }\) would be sufficient for achieving the target. In this case, it may be interesting to indicate all minimal subsets of criteria whose improvement guarantees such an achievement. Let us call them possible improvement reducts. They can be identified with Algorithm 2. It progressively tests subsets of criteria, starting from the smallest ones, and removes from the test list all proper supersets of the subsets that have already been found to imply the achievement of the target.
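The reduct search can be sketched as below. This is an illustrative reading of the procedure, not the authors' Algorithm 2 verbatim: `achievable(subset)` is a hypothetical oracle reporting whether the target can be reached by improving only the criteria in `subset` (for instance, a feasibility check at the largest admissible multiplier). Subsets are tested by increasing size, so any proper superset of an already found reduct is skipped without calling the oracle.

```python
from itertools import combinations
from typing import Callable, FrozenSet, List, Sequence


def improvement_reducts(criteria: Sequence[str],
                        achievable: Callable[[FrozenSet[str]], bool]
                        ) -> List[FrozenSet[str]]:
    """All minimal subsets of criteria whose improvement allows reaching the target."""
    reducts: List[FrozenSet[str]] = []
    for size in range(1, len(criteria) + 1):
        for subset in map(frozenset, combinations(criteria, size)):
            # A proper superset of a known reduct cannot be minimal: skip it.
            if any(r < subset for r in reducts):
                continue
            if achievable(subset):
                reducts.append(subset)
    return reducts


def oracle(s: FrozenSet[str]) -> bool:
    """Hypothetical oracle: improving g3 alone, or g1 together with g2, suffices."""
    return "g3" in s or {"g1", "g2"} <= s


print(improvement_reducts(["g1", "g2", "g3", "g4"], oracle))
```

With this oracle the search returns the two reducts {g3} and {g1, g2}; every other subset that reaches the target contains one of them.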

Remark 1

For any subsets of criteria \(G_1 \subset G_2 \subseteq G\) allowing achievement of the target, \(\rho ^P_{>, G_1} \ge \rho ^P_{>, G_2}.\)

Computation of the comprehensive necessary improvement requires identifying the minimal real number greater than one by which the performances of a on all criteria need to be multiplied so that the target is achieved for all compatible preference model instances. However, when there exists at least one compatible preference model instance, there are, in general, infinitely many of them, and they cannot be checked one by one. To circumvent this, we compute the maximal multiplier for which the opposite target can still be attained for at least one compatible preference model instance. This can be done by solving the following non-linear optimization problem:

s.t.:

Using the suitably adapted binary search method (see Algorithm 1), we need to identify the minimal \(\rho \) by which \(E^{necessary}_{improvement}(a,\rho )\) is infeasible (it is just greater than the maximal \(\rho \) by which \(E^{necessary}_{improvement}(a,\rho )\) is feasible). Infeasibility of \(E^{necessary}_{improvement}(a,\rho )\) ensures that the opposite target cannot be attained for any compatible preference model instance, which implies the necessary achievement of the target under consideration. Constraints modeling the targets opposite to the ones that should be acquired are the following:

-

for preference-related necessary improvement, \(U(a^{\rho }) < U(b)\); if this cannot be satisfied, \(a^{\rho } \succsim ^N b\);

-

for group preference-related necessary improvement, \(\exists b \in A', U(a^{\rho }) < U(b)\); if this cannot be satisfied, \(a^{\rho } \succsim ^N b\) for all \(b \in A'\);

-

for rank-related necessary improvement, constraint \(U(a^{\rho }) < U(b)\) holds for at least k alternatives \(b \in A \setminus \{a\}\); if this cannot be satisfied, there are at most \(k-1\) alternatives \(b \in A \setminus \{a\}\) ranked better than \(a^{\rho }\), i.e., \(a^{\rho }\) attains rank not worse than k for all compatible preference model instances;

-

for assignment-related necessary improvement, \(U(a^{\rho }) < b_{k-1}\); if this cannot be satisfied, \(a^{\rho }\) is assigned to a class at least as good as \(C_k\) for all compatible preference model instances;

-

for preference-related assignment-based necessary improvement, \(U(b) \ge b_{h}\) and \(U(a^{\rho }) < b_{h}\) for some \(h \in \{1, \ldots , p-1\}\); if \(U(b) \ge b_h > U(a^{\rho })\) cannot be satisfied for any considered h, then \(a^{\rho } \succsim ^{N, \rightarrow } b\).

Remark 2

For any target under consideration, the necessary comprehensive improvement is not less than the corresponding possible improvement, i.e., \(\rho ^N_{>} \ge \rho ^P_{>}.\)
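Remark 2 can be checked on a toy example. The real set of compatible instances is, in general, infinite, which is precisely why the necessary improvement is computed via the opposite target; here, purely for illustration, a hypothetical finite sample of weight vectors for linear marginal value functions stands in for that set. For each instance, the smallest multiplier reaching \(U(a^{\rho }) \ge U(b)\) is found by bisection; the possible improvement is then the minimum over the instances and the necessary one the maximum, so \(\rho ^N_{>} \ge \rho ^P_{>}\) holds by construction.

```python
import math


def capped_value(g, rho, g_min, g_max, w):
    """U_w(a^rho): linear marginal value functions, performances clipped to the scale."""
    return sum(wj * (min(max(gj * rho, lo), hi) - lo) / (hi - lo)
               for gj, lo, hi, wj in zip(g, g_min, g_max, w))


def instance_multiplier(g_a, g_b, g_min, g_max, w, gamma=1e-7):
    """Smallest rho >= 1 with U_w(a^rho) >= U_w(b) for one instance w (bisection)."""
    target = capped_value(g_b, 1.0, g_min, g_max, w)
    lo = 1.0
    hi = max(gs / ga for gs, ga in zip(g_max, g_a))
    if capped_value(g_a, hi, g_min, g_max, w) < target:
        return math.inf                   # unattainable for this instance
    while hi - lo > gamma:
        mid = (lo + hi) / 2.0
        if capped_value(g_a, mid, g_min, g_max, w) >= target:
            hi = mid
        else:
            lo = mid
    return hi


# Hypothetical data: performances of a and b, scale bounds, and a finite sample
# of weight vectors standing in for the (infinite) set of compatible instances.
g_a, g_b = [3.0, 4.0], [6.0, 5.0]
g_min, g_max = [1.0, 1.0], [10.0, 10.0]
instances = [[0.5, 0.5], [0.2, 0.8], [0.8, 0.2]]

per_instance = [instance_multiplier(g_a, g_b, g_min, g_max, w) for w in instances]
rho_possible = min(per_instance)    # target reached for at least one instance
rho_necessary = max(per_instance)   # target reached for every instance
```

With these data the per-instance multipliers are 11/7, 5.2/3.8 and 5.8/3.2, so the possible improvement is about 1.368 and the necessary one is 1.8125.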

5.2.2 Remarks on standardization of performance values, distance measures, and performance scales

Standardization Before applying post factum analysis that changes the performances of an alternative, one may need to standardize the performance values to commensurable units, so that the scales of different criteria do not influence the optimization. Such standardization may consist in dividing each performance value by the standard deviation \(\sigma _j\) of the set of performances on \(g_j\), i.e.:

$$\begin{aligned} g_j^{st_1}(a) = \frac{g_j(a)}{\sigma _j}. \end{aligned}$$

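This approach has been used in [5, 17]. Another approach, called feature scaling or unity-based normalization, has been employed in [7, 31]. It relates the distance of each performance value \(g_j(a)\) from the worst performance to the difference between the extreme performances observed or allowed on \(g_j\), i.e.:

$$\begin{aligned} g_j^{st_2}(a) = \frac{g_j(a) - g_{j,*}}{g_j^* - g_{j,*}}. \end{aligned}$$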

Thus transformed, \(g_j^{st_2}(a)\) can be interpreted in terms of the achievement level (e.g., when considering scale \([2, 10]\), \(g_j(a) = 4\) corresponds to 25 % achievement level on \(g_j\)).
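Both standardizations can be sketched in a few lines. The function names are hypothetical, and the use of the population standard deviation in `standardize_by_std` is an assumption, since the text does not specify which estimator is meant.

```python
from statistics import pstdev
from typing import List, Sequence


def standardize_by_std(values: Sequence[float]) -> List[float]:
    """g_j^{st_1}: divide each performance on g_j by the standard deviation on g_j.

    The population standard deviation is assumed; a sample estimate would
    only rescale all values by a common constant.
    """
    sigma = pstdev(values)
    return [v / sigma for v in values]


def unity_based(value: float, g_worst: float, g_best: float) -> float:
    """g_j^{st_2}: achievement level of `value` on the scale [g_{j,*}, g_j^*]."""
    return (value - g_worst) / (g_best - g_worst)


print(unity_based(4, 2, 10))   # 0.25, i.e., the 25 % achievement level from the text
```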

Measures of required improvement When multiplying the original or standardized performance values by a common factor \(\rho \), we investigate the minimal relative change needed for reaching the target. Then:

$$\begin{aligned} g_j(a^{\rho }) = \rho \cdot g_j(a), \quad j = 1, \ldots , m. \end{aligned}$$

Alternatively, we may determine the minimal absolute improvement \(\rho ^{abs}\) of each performance value that is required for achieving the pre-defined target:

$$\begin{aligned} g_j(a^{\rho }) = g_j(a) + \rho ^{abs}, \quad j = 1, \ldots , m. \end{aligned}$$

Obviously, one may differentiate the improvements that need to be made on each individual criterion. Let us denote the relative (absolute) improvement of \(a \in A\) on \(g_j \in G\) by \(\rho _j = g_j(a^\rho ) / g_j(a)\) (\(\rho ^{abs}_j = g_j(a^\rho ) - g_j(a)\)). Then, one may consider the minimization of the following distance measures:

-

the Euclidean distance \(d^{E,\rho ^{abs}}_a\) (used, e.g., in [5, 17, 31]):

$$\begin{aligned} d^{E,\rho ^{abs}}_a = \sqrt{\sum \nolimits _{j=1}^m (\rho ^{abs}_j)^2}; \end{aligned}$$(10)

-

the Manhattan distance \(d^{M,\rho ^{abs}}_a\) (used, e.g., in [17]; note that in our case \(\rho ^{abs}_j\) is never negative, so no absolute values are needed):

$$\begin{aligned} d^{M,\rho ^{abs}}_a = \sum _{j=1}^m \rho ^{abs}_j; \end{aligned}$$(11)

-

the Chebyshev distance \(d^{Ch,\rho ^{abs}}_a\):

$$\begin{aligned} d^{Ch,\rho ^{abs}}_a = \max _{j=1,\ldots ,m} \rho ^{abs}_j; \end{aligned}$$(12)

-

the sum of relative improvements \(d^{R,\rho }_a\):

$$\begin{aligned} d^{R,\rho }_a = \sum _{j=1}^m \rho _j; \end{aligned}$$(13)

-

the Kullback-Leibler distance \(d^{KL,\rho }_a\) (i.e., a relative entropy; used, e.g., in [17]):

$$\begin{aligned} d^{KL,\rho }_a = \sum _{j=1}^m g_j(a^{\rho }) \cdot \ln (\rho _j). \end{aligned}$$(14)
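Given an original and an improved performance vector, the measures (10) to (14) can be evaluated as in the sketch below. It only scores a candidate improvement; finding the improvement that minimizes one of these measures is the harder optimization problem discussed next. The function name and the sample vectors are hypothetical.

```python
import math
from typing import Dict, Sequence


def improvement_distances(g_a: Sequence[float],
                          g_a_rho: Sequence[float]) -> Dict[str, float]:
    """Distance measures (10)-(14) between original and improved performances.

    Relative improvements require strictly positive original performances.
    """
    abs_imp = [new - old for old, new in zip(g_a, g_a_rho)]    # rho_j^abs
    rel_imp = [new / old for old, new in zip(g_a, g_a_rho)]    # rho_j
    return {
        "euclidean": math.sqrt(sum(d * d for d in abs_imp)),   # (10)
        "manhattan": sum(abs_imp),                              # (11)
        "chebyshev": max(abs_imp),                              # (12)
        "relative_sum": sum(rel_imp),                           # (13)
        "kullback_leibler": sum(new * math.log(r)               # (14)
                                for new, r in zip(g_a_rho, rel_imp)),
    }


d = improvement_distances([2.0, 4.0], [3.0, 5.0])   # hypothetical performances
```

Here both absolute improvements equal one, so the Manhattan distance is 2, the Chebyshev distance is 1, and the sum of relative improvements is 1.5 + 1.25 = 2.75.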

Criteria scales The use of the proposed framework for post factum analysis is straightforward in case performances are expressed on a ratio scale. We may account for the interval scales by considering the objective functions (9–12). Moreover, these functions need to be considered when at least one of the performances to be improved is equal to zero. Since in this case it is impossible to determine the relative improvement, only the absolute one may be of interest to the DM.

Note, however, that the optimization problems with non-linear objective functions (10–14) are even more difficult to solve than those discussed in Sect. 5.2.1. Thus, to differentiate the improvements that need to be made on each criterion, one needs to use more advanced heuristic optimization methods than the binary search. For a discussion on employing genetic algorithms in this particular context, see, e.g., [5, 17].

When an ordinal performance scale is employed, neither ratios nor differences between the performances or their codifications can be interpreted. Since in this case linear interpolation between the characteristic points is not meaningful, such criteria should be modeled with general marginal value functions whose characteristic points correspond to all distinct performance values. Moreover, they need to be excluded from changing the performances by means of multiplication or addition. Instead, one can quantify the modification of performance values only in terms of the required change of performance levels (the rank positions by which the performances are sorted). A single “shift” corresponds to changing the alternative’s evaluation to the next performance level above the original one. In this setting, post factum analysis may indicate, e.g., the need for increasing the criteria values by one performance level (e.g., from “average” to “good”) on \(g_1\) and \(g_2\) for reaching the first rank. This is a rough measure, because shifts between different performance values (e.g., from “bad” to “medium” and from “medium” to “good” on \(g_1\)) and on different criteria (e.g., from “bad” to “medium” on \(g_1\) and \(g_2\)) are not comparable. Alternatively, one can focus on computing the missing value, as described in Sect. 5.3.1.

All remarks presented in this subsection can be formulated analogously for the case of deteriorating the performance values so as to maintain an already achieved target.

5.2.3 Possible and necessary deterioration

Definition 2

Assume that some target is attained by an alternative \(a \in A\) in the set of preference model instances compatible with DM’s preference information. A comprehensive possible (necessary) deterioration for a in view of maintaining this target is the minimal real number not greater than one by which the performances of a on all criteria can be multiplied so that the target is still achieved for at least one (all) compatible preference model instance(s).

Comprehensive possible and necessary deteriorations in view of specific targets are defined analogously to the corresponding improvements in Definition 1. For example, the preference-related possible (necessary) deterioration \(\rho ^P_{<}(a \succsim b)\) \((\rho ^N_{<}(a \succsim b))\) is considered in case \(a \succsim ^P b\) \((a \succsim ^N b)\), i.e., a is possibly (necessarily) preferred to b, and a can afford deteriorating its performances while still \(a \succsim ^P b\) \((a \succsim ^N b)\). The algorithms for computing the possible and necessary deteriorations are formulated in the same way as for the improvements. Obviously, the upper bound for the search can be set to one (thus, we are guaranteed that \(\rho \le 1\) will be indicated as the solution) and the lower bound can be set to \(\text{ min }_{j \in J} \{g_{j,*}/g_j(a)\}\). We also assume that in case \(\rho \cdot g_j(a) < g_{j,*}\), \(u_j(\rho \cdot g_j(a))\) is equal to the minimal marginal value on criterion \(g_j\), i.e., \(u_j(g_{j,*})\). Apart from considering comprehensive deteriorations, we can also refer to the partial possible and necessary deteriorations, defined analogously to the partial improvements.
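The deterioration search mirrors the improvement search with the bounds stated above: the multiplier is sought in \([\min _{j} g_{j,*}/g_j(a), 1]\), and a successful test moves the search towards a stronger deterioration. A minimal sketch, assuming monotone feasibility and a hypothetical oracle `holds`; the performances and bounds in the usage line are invented.

```python
from typing import Callable, Sequence


def minimal_deterioration_multiplier(holds: Callable[[float], bool],
                                     g_a: Sequence[float],
                                     g_worst: Sequence[float],
                                     gamma: float = 1e-6) -> float:
    """Smallest rho in [min_j g_{j,*}/g_j(a), 1] for which the target still holds.

    Assumes monotonicity: if the target holds after multiplying by rho, it also
    holds for every larger rho <= 1. `holds` is a hypothetical feasibility
    oracle (e.g., an LP check for the possible or the necessary setting).
    """
    lo = min(gw / ga for gw, ga in zip(g_worst, g_a))
    hi = 1.0
    if not holds(hi):
        raise ValueError("target is not attained with the current performances")
    if holds(lo):
        return lo      # the target survives even the largest deterioration
    while hi - lo > gamma:
        mid = (lo + hi) / 2.0
        if holds(mid):
            hi = mid   # still attained: try a stronger deterioration
        else:
            lo = mid   # lost: the sought multiplier lies above mid
    return hi


rho = minimal_deterioration_multiplier(lambda r: r >= 0.62, [4.0, 8.0], [1.0, 2.0])
```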

Remark 3

For any subsets of criteria \(G_1 \subset G_2 \subseteq G\) which admit deterioration of the performances not exerting an influence on the target maintenance, \(\rho ^P_{<, G_1} \le \rho ^P_{<, G_2}.\)

Remark 4

For any target under consideration, the necessary comprehensive deterioration is not less than the corresponding possible deterioration, i.e., \(\rho ^N_{<} \ge \rho ^P_{<}.\)

5.3 Changing comprehensive value of an alternative

In this subsection, we investigate the change of a comprehensive value (score) of an alternative rather than direct improvement or deterioration of its performances. In particular, we may analyze what minimal value is missing to attain some target or what maximal value an alternative has in stock when this target is already acquired. Let us emphasize that such investigation is meaningful for the considered additive representation of preference, because the scale of the marginal value functions is a conjoint interval scale. Consequently, the difference between two values has the meaning of preference intensity. Thus, for example, a low missing or surplus value indicates the need for small improvement or a small margin for deterioration, respectively. In any case, such analysis aims at indicating the most (least) favorable compatible value function for an alternative in view of achieving (preserving) a target under consideration with the least improvement (the greatest deterioration) of its comprehensive value.

Note that the possible and necessary missing or surplus values may be treated per se as outcomes of post factum analysis. Nevertheless, the most (least) favorable compatible value functions can be further analyzed to derive the underlying required improvement (allowed deterioration) in the performance values for reaching (maintaining) the target. To decompose the missing or surplus values, one can apply the approaches discussed in Sect. 5.2. Then, the necessary and possible outcomes derived from the analysis of a single compatible value function would be equivalent.

5.3.1 Possible and necessary missing value

Definition 3

Assume that some target is not attained by an alternative \(a \in A\) in the set of preference model instances compatible with DM’s preference information. A possible (necessary) missing value for a in view of achieving this target is the minimal positive value (score) that added to the comprehensive value (score) of a allows achieving the target for at least one (all) compatible preference model instance.

Possible and necessary missing values for some specific targets are defined analogously to the possible and necessary improvements in Definition 1. For example, preference-related possible (necessary) missing value \(u^P_{>}(a \succsim b)\) \((u^N_{>}(a \succsim b))\) is considered in case \(not(a \succsim ^P b)\) \((not(a \succsim ^N b))\), i.e., a is not possibly (necessarily) preferred to b, and a needs to increase its comprehensive value so that \(a \succsim ^P b\) \((a \succsim ^N b)\). Computation of the possible missing value requires solving the following linear optimization problem:

s.t.:

Note that E(A) in the above constraint set implies that we exploit the original set of compatible value functions. The explanation of constraints related to achieving particular targets is analogous to \(E^{possible}_{improvement}(a, \rho )\). The analysis aims at identifying the most advantageous value function in terms of satisfying the target in a possible sense. Subsequently, the DM may analyze both the comprehensive values, to judge how large the missing gap is, and the shape of the marginal value functions, to examine the conditions under which a is the closest to achieving the target.

Computation of the necessary missing value requires solving the following linear optimization problem:

s.t.:

It identifies the most advantageous value function in terms of satisfying the target in a necessary sense. Again, the underlying idea is that the necessary missing value is equal to the minimal value by which the target opposite to the one under consideration is not attained.
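For linear marginal value functions, as used in the illustrative study, the missing values for the preference-related target \(a \succsim b\) can be sketched without an LP solver under one extra assumption: the compatible set of weight vectors is given by its vertices (hypothetical here). Because \(U(b) - U(a)\) is linear in the weights, its minimum and maximum over the polytope are attained at vertices, giving the possible and the necessary missing value, respectively. This is a simplification for illustration, not the general linear programs (15) and (16).

```python
from typing import Optional, Sequence, Tuple


def value(w: Sequence[float], x: Sequence[float]) -> float:
    """U_w(x) = sum_j w_j x_j for performances already scaled to [0, 1]."""
    return sum(wj * xj for wj, xj in zip(w, x))


def missing_values(x_a: Sequence[float], x_b: Sequence[float],
                   vertices: Sequence[Sequence[float]]
                   ) -> Tuple[Optional[float], Optional[float]]:
    """Possible and necessary missing values for the target U(a) >= U(b).

    None means that the target is already attained in that sense.
    """
    gaps = [value(w, x_b) - value(w, x_a) for w in vertices]
    possible = min(gaps)    # the most advantageous instance for a
    necessary = max(gaps)   # even the least advantageous instance must be covered
    return (possible if possible > 0 else None,
            necessary if necessary > 0 else None)


# Hypothetical vertices of the compatible weight polytope and scaled performances.
vertices = [[0.5, 0.5], [0.2, 0.8], [0.8, 0.2]]
print(missing_values([0.2, 0.4], [0.5, 0.5], vertices))
```

Here the gaps at the three vertices are 0.2, 0.14 and 0.26, so the possible missing value is 0.14 and the necessary one is 0.26 (up to floating-point rounding).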

5.3.2 Possible and necessary surplus value

Definition 4

Assume that some target is attained by an alternative \(a \in A\) in the set of preference model instances compatible with DM’s preference information. A possible (necessary) surplus value for a in view of maintaining this target is the maximal positive value (score) that, subtracted from the comprehensive value (score) of a, still allows achieving the target for at least one (all) compatible preference model instance(s).

The possible surplus value can be computed by solving problem (15) with the target function

and with the inverted sign before the subtracted value in the constraints corresponding to the targets, i.e., \((-u^P_{<})\) rather than \((+u^P_{>})\).

The necessary surplus value requires solving problem (16) with the target function

and with the inverted sign before the subtracted value in the constraints corresponding to the targets, i.e., \((-u^N_{<})\) rather than \((+u^N_{>})\).
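Under simplifying assumptions (linear marginal value functions, a compatible set given by hypothetical vertex weight vectors), the surplus values for \(a \succsim b\) follow from the margins \(U(a) - U(b)\) at the vertices: the possible surplus value is the largest margin and the necessary one is the smallest. `None` marks a target that is not attained in the corresponding sense. This sketch does not reproduce the linear programs referred to above.

```python
from typing import Optional, Sequence, Tuple


def value(w: Sequence[float], x: Sequence[float]) -> float:
    """U_w(x) = sum_j w_j x_j for performances already scaled to [0, 1]."""
    return sum(wj * xj for wj, xj in zip(w, x))


def surplus_values(x_a: Sequence[float], x_b: Sequence[float],
                   vertices: Sequence[Sequence[float]]
                   ) -> Tuple[Optional[float], Optional[float]]:
    """Possible and necessary surplus values for the target U(a) >= U(b)."""
    margins = [value(w, x_a) - value(w, x_b) for w in vertices]
    possible = max(margins)    # keep a >= b for at least one instance
    necessary = min(margins)   # keep a >= b for every instance
    return (possible if possible >= 0 else None,
            necessary if necessary >= 0 else None)


# Hypothetical vertices of the compatible weight polytope and scaled performances.
vertices = [[0.5, 0.5], [0.2, 0.8], [0.8, 0.2]]
print(surplus_values([0.6, 0.7], [0.5, 0.5], vertices))
```

Here the margins are 0.15, 0.18 and 0.12, so a could lose up to 0.18 and still be possibly preferred to b, but only up to 0.12 to remain necessarily preferred.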

6 Illustrative study: application of post factum analysis for assessing the environmental impact of cities

To illustrate the use of post factum analysis, we will refer to the problem of assessing the environmental impact of cities, which was originally considered by the Economist Intelligence Unit [10]. The proposed European Green City Index aims at measuring and rating the environmental performance of 30 leading European cities, both overall and across a range of specific areas. By doing so, it offers a tool to enhance the understanding and decision-making abilities of all those interested in environmental performance. The index takes into account individual indicators per city concerning a wide range of environmental areas, and the resulting performances allow for direct comparison between cities. We will reconsider this problem, taking into account the following four criteria with an increasing direction of preference:

-

\(\hbox {CO}_2\) \((g_1)\), representing the intensity of CO\(_2\) emissions (the observed performances are between 2.49 and 9.58);

-

energy \((g_2)\), representing the intensity of energy consumption (the observed performances are between 1.50 and 8.71);

-

water \((g_3)\), representing water efficiency and treatment policy (the observed performances are between 1.83 and 9.21);

-

waste and land use \((g_4)\), representing waste reduction and treatment policy (the observed performances are between 1.43 and 8.98).

Cities’ performances on these criteria are provided in Table 1. All criteria are expressed on a ratio scale. A detailed description of the indicators used to assign performance values to the considered cities is provided in [10] (p. 20). For example, \(g_1\) involves CO\(_2\) emissions, intensity, and reduction strategy. In what follows, we assume the use of linear marginal value functions.
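Under linear marginal value functions, a city's comprehensive value is a weighted sum of its normalized performances. The sketch below additionally assumes that each marginal value function spans the observed range listed above and uses hypothetical weights; in the study, the weights are not fixed but vary over the set of compatible instances, and no city data from Table 1 are reproduced here.

```python
from typing import Sequence, Tuple

# Observed performance ranges (g_{j,*}, g_j^*) quoted in the text for g_1..g_4.
RANGES: Tuple[Tuple[float, float], ...] = (
    (2.49, 9.58),   # CO2
    (1.50, 8.71),   # energy
    (1.83, 9.21),   # water
    (1.43, 8.98),   # waste and land use
)


def comprehensive_value(perf: Sequence[float], weights: Sequence[float]) -> float:
    """U(a) = sum_j w_j (g_j(a) - g_{j,*}) / (g_j^* - g_{j,*}).

    Assumes the linear marginal value functions span the observed ranges and
    that the (hypothetical) weights are non-negative and sum to one.
    """
    return sum(w * (g - lo) / (hi - lo)
               for g, w, (lo, hi) in zip(perf, weights, RANGES))
```

With this normalization, a city with the best observed performance on every criterion would obtain a comprehensive value of one, and one with the worst observed performances would obtain zero.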

Since all applications mentioned in Sect. 4 focus on attaining ranking-specific targets, for illustrative purposes we will formulate our problem in terms of multiple criteria sorting, with the aim of assigning the cities to one of four classes \(C_1\)-\(C_4\), such that \(C_1\) is the worst class and \(C_4\) is the best one. We assume that the DM provided preference information in the form of 12 assignment examples (3 per class), given in Table 2.

The possible and the necessary results

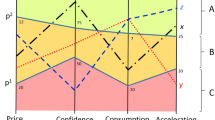

The possible assignments and the Hasse diagram of the necessary assignment-based preference relation are presented in Fig. 1. Recall that, when using a threshold-based sorting procedure, if the possible assignment is precise, it is equivalent to the necessary assignment; otherwise, the necessary assignment is empty (see [26]). Obviously, the 12 reference alternatives are assigned to their desired classes by all compatible preference model instances. Another 6 cities (Zurich, Bucharest, Tallinn, Belgrade, Sofia, and Kiev) are necessarily assigned to a single class. For the remaining 12 cities, the necessary assignment is empty, and the possible assignment is imprecise: 3 cities are possibly assigned to three consecutive classes (Madrid, Bratislava, and Ljubljana), and 9 cities have a possible assignment to two classes (e.g., Copenhagen, Dublin, and Istanbul).

When it comes to the necessary assignment-based preference relation, cities necessarily assigned to the same class are indifferent. Thus, they are grouped within a single node in Fig. 1 (e.g., Oslo, Stockholm, Zurich, and Brussels are all assigned to \(C_4\) by all compatible preference model instances). Moreover, \(\succsim ^{\rightarrow ,N}\) is transitive; thus, e.g., Amsterdam \(\succsim ^{\rightarrow ,N}\) Rome and Rome \(\succsim ^{\rightarrow ,N}\) Dublin imply Amsterdam \(\succsim ^{\rightarrow ,N}\) Dublin (note that the arcs obtainable by transitive closure are omitted in the figure). On the one hand, for pairs of cities (a, b) connected by an arc in Fig. 1, the necessary assignment-based strict preference relation holds, i.e., \(a \succ ^{\rightarrow ,N} b\) iff \(a \succsim ^{\rightarrow ,N} b\) and \(not(b \succsim ^{\rightarrow ,N} a)\). Note that this does not exclude \(b \succsim ^{\rightarrow ,P} a\). On the other hand, pairs of alternatives that are not connected by arcs in the figure are incomparable in terms of \(\succsim ^{\rightarrow ,N}\). This means that with some compatible value functions and class thresholds one of them is assigned to a class strictly better than the other, while with some other compatible preference model instances the order of classes is inverse. This holds, e.g., for Paris and Vienna or for Amsterdam and London.

Within the framework of post factum analysis, we offer a set of tools for answering different questions regarding the robustness of the provided recommendation. In our view, the targets to be analyzed should be indicated by the DM. This means that we do not analyze all possible targets, but only those preference relations, ranks, or assignments which are of particular interest to the DM. Let us illustrate the types of questions that can be answered within this framework, starting with the analysis of possible improvements and missing values. When investigating the required or allowed modification of performance values, we focus on radial improvements and deteriorations, i.e., all performances of an alternative are multiplied by the same factor.
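For orientation, the quantities reported in the remainder of this section can be summarized as follows. This is a hedged paraphrase, not the formal definitions given earlier in the paper: it assumes gain-type criteria (a larger \(g_j\) is better), writes \(\Omega\) for the set of compatible preference model instances (an additive value function \(U\) together with class thresholds), and denotes by \(t_{h-1}\) the threshold separating \(C_{h-1}\) from \(C_h\). Both \(\Omega\) and \(t_{h-1}\) are notation introduced here only for illustration.

\[
\begin{aligned}
g^{\rho}(a) &= \big(\rho\, g_1(a), \ldots, \rho\, g_m(a)\big), \qquad \rho > 1 \text{ (improvement)}, \quad \rho < 1 \text{ (deterioration)},\\
\rho^P_{>}\big(R_P(a) \ge h\big) &= \min\big\{\rho \ge 1 : U(g^{\rho}(a)) \ge t_{h-1} \text{ for at least one } (U, t) \in \Omega\big\},\\
\rho^N_{>}\big(L_P(a) \ge h\big) &= \min\big\{\rho \ge 1 : U(g^{\rho}(a)) \ge t_{h-1} \text{ for all } (U, t) \in \Omega\big\},\\
u^P_{>}\big(R_P(a) \ge h\big) &= \min\big\{u \ge 0 : U(a) + u \ge t_{h-1} \text{ for at least one } (U, t) \in \Omega\big\},\\
u^N_{>}\big(L_P(a) \ge h\big) &= \min\big\{u \ge 0 : U(a) + u \ge t_{h-1} \text{ for all } (U, t) \in \Omega\big\}.
\end{aligned}
\]

Deteriorations \(\rho_{<}\) and surplus values \(u_{<}\) mirror these expressions: the smallest \(\rho \le 1\), respectively the largest \(u \ge 0\) subtracted from \(U(a)\), for which the target still holds. Since a condition required for all instances implies the same condition for at least one, \(\rho^P_{>} \le \rho^N_{>}\) and \(u^P_{>} \le u^N_{>}\) whenever both are defined.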

Possible assignment-related improvements and missing values

First, we focus on the 11 non-reference cities whose best possible class is not better than \(C_3\). We are interested in the improvements they need to make to be assigned to \(C_4\) by at least one compatible preference model instance. In Table 3, we provide the respective comprehensive assignment-related possible improvements \(\rho ^P_{>}(R_P(a) \ge 4)\) as well as possible missing values \(u^P_{>}(R_P(a) \ge 4)\). On the one hand, the smallest improvement in terms of both \(\rho ^P_{>}(R_P(a) \ge 4)\) and \(u^P_{>}(R_P(a) \ge 4)\) is needed by Ljubljana, Riga, and Dublin. These cities are possibly assigned to \(C_3\) with their current performance vectors. On the other hand, the greatest improvement in view of a possible assignment to \(C_4\) is needed by Kiev, Sofia, and Bucharest, which are currently necessarily assigned to \(C_1\). The marginal value functions that are the most advantageous in terms of a possible assignment to \(C_4\) for Dublin, Warsaw, and Bucharest are presented in Fig. 2.

The most advantageous marginal value functions in terms of possible assignment to \(C_4\) for Dublin (solid line; \(U(\hbox {Dublin}) = 0.486\); \(u^P_{>}(R_P(\hbox {Dublin}) \ge 4) = 0.2450\)), Warsaw (dashed line; \(U(\hbox {Warsaw}) = 0.407\); \(u^P_{>}(R_P(\hbox {Warsaw}) \ge 4) = 0.3082\)), and Bucharest (dotted line; \(U(\hbox {Bucharest}) = 0.209\); \(u^P_{>}(R_P(\hbox {Bucharest}) \ge 4) = 0.4799\))
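A comprehensive possible improvement for a single, fixed model instance can be found by bisection, because with non-decreasing marginal value functions \(U(\rho \cdot g(a))\) is non-decreasing in \(\rho\). The Python sketch below assumes gain-type criteria, piecewise-linear marginal value functions evaluated with `numpy.interp`, and invented performances, breakpoints, and thresholds; `required_factor` is a hypothetical helper. The paper computes \(\rho ^P_{>}\) over all compatible instances by mathematical programming, whereas here the minimum is taken over two sampled instances, which only gives an upper bound on the exact value.

```python
import numpy as np

def comprehensive_value(perf, breakpoints, values):
    # Additive value U(a) = sum_j u_j(g_j(a)); each u_j is piecewise linear and
    # non-decreasing (gain-type criterion). np.interp clamps outside the scale.
    return sum(float(np.interp(x, bp, v)) for x, bp, v in zip(perf, breakpoints, values))

def required_factor(perf, breakpoints, values, threshold, rho_max=10.0, tol=1e-9):
    """Smallest rho >= 1 with U(rho * g(a)) >= threshold under ONE instance;
    None if the target is out of reach even for rho_max."""
    def reaches(rho):
        return comprehensive_value([rho * x for x in perf], breakpoints, values) >= threshold
    if reaches(1.0):
        return 1.0                      # target already attained
    if not reaches(rho_max):
        return None                     # values saturate below the threshold
    low, high = 1.0, rho_max            # invariant: not reaches(low), reaches(high)
    while high - low > tol:
        mid = (low + high) / 2
        if reaches(mid):
            high = mid
        else:
            low = mid
    return high

# Hypothetical instances: (breakpoints, marginal values, threshold of the target class).
perf = [40.0, 50.0]
instances = [
    ([[0, 50, 100], [0, 100]], [[0, 0.3, 0.6], [0, 0.4]], 0.55),
    ([[0, 100], [0, 100]], [[0, 0.5], [0, 0.5]], 0.60),
]
factors = [required_factor(perf, bp, v, t) for bp, v, t in instances]
# Possible improvement over this sample: the most advantageous instance decides.
possible = min(f for f in factors if f is not None)
print([round(f, 4) for f in factors], round(possible, 4))  # [1.25, 1.3333] 1.25
```

Returning `None` when the value saturates corresponds to targets that cannot be reached whatever the improvement.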

Let us now illustrate that the more demanding the target (i.e., the better the class to be attained), the greater the improvement needed to attain it for at least one compatible preference model instance. For example:

- Warsaw, whose best possible class is \(C_2\), needs to improve its performances 1.0139 or 1.4514 times to be possibly assigned to, respectively, \(C_3\) or \(C_4\); the respective missing values are 0.0095 and 0.3082;

- Bucharest, which is both possibly and necessarily assigned to \(C_1\), needs to improve its performances 1.1240, 1.3541, or 1.9322 times to be possibly assigned to, respectively, \(C_2\), \(C_3\), or \(C_4\); the respective missing values are 0.0641, 0.1812, and 0.4799.
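The monotonicity stated above can be checked mechanically on the reported figures. The short Python snippet only re-encodes the numbers quoted in the list (target class mapped to the pair of improvement factor and missing value); `is_monotone` is a hypothetical helper that tests whether both quantities grow with the target class.

```python
# Possible improvements and missing values quoted above, keyed by target class.
reported = {
    "Warsaw":    {3: (1.0139, 0.0095), 4: (1.4514, 0.3082)},
    "Bucharest": {2: (1.1240, 0.0641), 3: (1.3541, 0.1812), 4: (1.9322, 0.4799)},
}

def is_monotone(results):
    """True if both the factor and the missing value increase with the target class."""
    ordered = [results[h] for h in sorted(results)]
    return all(a[0] < b[0] and a[1] < b[1] for a, b in zip(ordered, ordered[1:]))

for city, results in reported.items():
    print(city, is_monotone(results))  # prints "Warsaw True", then "Bucharest True"
```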

Instead of investigating a simultaneous improvement of all performances needed to reach a specified possible assignment, it may be interesting to consider only some subsets of criteria. In Table 4, we present partial assignment-related possible improvements \(\rho ^P_{>, G_j}(R_P(a) \ge 4)\) for all subsets of criteria \(G_j \subseteq G\) in view of the possible assignment of Riga to \(C_4\). They confirm that for any subsets of criteria \(G_1 \subset G_2 \subseteq G\) allowing achievement of the target, the improvement required on \(G_2\) is not greater than the respective improvement on \(G_1\). A detailed analysis reveals that the minimal subsets of criteria which, when improved, guarantee the possible assignment of Riga to \(C_4\) are \(\{g_1\}\) and \(\{g_2, g_4\}\) (these were called possible improvement reducts in Sect. 5.2.1). Further, whatever the improvement on \(\{g_2\}\), \(\{g_3\}\), \(\{g_4\}\), \(\{g_2, g_3\}\), or \(\{g_3, g_4\}\), the investigated target cannot be reached. Finally, the following strategies seem to be the best for Riga: improving its performance on \(g_1\) by 1.3672 times, or a slightly smaller simultaneous improvement on \(g_1\) and \(g_3\) or on \(g_1\) and \(g_4\).
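Because enlarging the set of improved criteria never makes a target harder to reach, the reducts are exactly the feasible subsets with no feasible proper subset. The Python sketch below enumerates all subsets of \(\{g_1, \ldots, g_4\}\) and extracts the reducts. The feasibility predicate simply hard-codes the pattern reported for Riga and \(C_4\); in an actual analysis each subset would require solving its own optimization problem, and `reaches_target` is a hypothetical stand-in for that step.

```python
from itertools import combinations

CRITERIA = ("g1", "g2", "g3", "g4")

def reaches_target(subset):
    # Stand-in for one optimization per subset; encodes the reported result:
    # Riga can reach C4 iff the improved subset contains g1, or both g2 and g4.
    return "g1" in subset or {"g2", "g4"} <= subset

def all_subsets(items):
    return [frozenset(c) for r in range(1, len(items) + 1) for c in combinations(items, r)]

feasible = [s for s in all_subsets(CRITERIA) if reaches_target(s)]
# A reduct is a feasible subset none of whose proper subsets is feasible.
reducts = [s for s in feasible if not any(t < s for t in feasible)]
infeasible = [s for s in all_subsets(CRITERIA) if not reaches_target(s)]
print(sorted(sorted(s) for s in reducts))     # [['g1'], ['g2', 'g4']]
print(sorted(sorted(s) for s in infeasible))  # [['g2'], ['g2', 'g3'], ['g3'], ['g3', 'g4'], ['g4']]
```

The infeasible subsets coincide with the five subsets listed in the text.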

Possible preference-related assignment-based improvements and missing values

As far as the possible assignment-based preference relation is concerned, we investigate the improvement needed to make a currently false relation true. For example, Kiev, which is assigned to a class worse than Athens by all compatible preference model instances, needs to improve its performances 1.3904 times so that Kiev \(\succsim ^{\rightarrow ,P}\) Athens; the respective missing value is 0.1684. When considering Warsaw and Rome, the corresponding results are 1.0139 (for the possible improvement) and 0.0095 (for the possible missing value).
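For a preference-related target, only a's performances change, so b keeps its class under each instance and a must reach the lower threshold of that class. The Python sketch below illustrates this under two loudly stated assumptions: linear marginal value functions with \(u_j(0) = 0\), so that \(U(\rho \cdot g(a)) = \rho \cdot U(a)\), and a hypothetical sample of instances given as (U(a), U(b), thresholds). Taking the minimum over instances corresponds to \(\succsim ^{\rightarrow ,P}\), and the maximum to \(\succsim ^{\rightarrow ,N}\); both are only sample-based approximations.

```python
from bisect import bisect_right

def class_of(value, thresholds):
    # Threshold-based sorting: C_h with thresholds[h-2] <= value < thresholds[h-1].
    return bisect_right(thresholds, value) + 1

def preference_improvement(instances, necessary=False):
    """instances: (U_k(a), U_k(b), thresholds_k) triples. Smallest rho >= 1 such
    that a is assigned to a class at least as good as b by at least one
    (possible) or every (necessary) instance, assuming U_k(rho*g(a)) = rho*U_k(a)."""
    factors = []
    for u_a, u_b, thr in instances:
        h_b = class_of(u_b, thr)                   # b's class is unaffected
        lower = 0.0 if h_b == 1 else thr[h_b - 2]  # lower threshold of C_{h_b}
        factors.append(max(1.0, lower / u_a))
    return max(factors) if necessary else min(factors)

# Hypothetical sample: b falls into C3 under the first instance and C2 under the second.
instances = [(0.20, 0.55, [0.25, 0.50, 0.75]), (0.20, 0.45, [0.25, 0.55, 0.80])]
print(round(preference_improvement(instances), 4))                  # 1.25
print(round(preference_improvement(instances, necessary=True), 4))  # 2.5
```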

Necessary assignment-related improvements and missing values

Now, let us illustrate how the framework can be used to investigate the necessary improvements. First, we focus on the 6 cities which are possibly, but not necessarily, assigned to \(C_4\). We are interested in the improvements they need to make to be assigned to the best class by all compatible preference model instances. The results of this analysis are provided in Table 5. On the one hand, only a very slight improvement of the performances or the comprehensive value would grant Copenhagen the necessary assignment to \(C_4\). This indicates that its current possible assignment to \(C_3\) (and hence the lack of a necessary assignment to \(C_4\)) is very sensitive to its current performance vector. On the other hand, the effort needed by Amsterdam and Madrid to reach the specified target is much greater. The marginal value functions that are the least advantageous in terms of the necessary assignment to \(C_4\) for Copenhagen, Paris, and Madrid are presented in Fig. 3.

The least advantageous marginal value functions in terms of necessary assignment to \(C_4\) for Copenhagen (solid line; \(U(\hbox {Copenhagen}) = 0.871\); \(u^N_{>}(L_P(\hbox {Copenhagen}) \ge 4) = 0.0030\)), Paris (dashed line; \(U(\hbox {Paris}) = 0.696\); \(u^N_{>}(L_P(\hbox {Paris}) \ge 4) = 0.104\)), and Madrid (dotted line; \(U(\hbox {Madrid}) = 0.638\); \(u^N_{>}(L_P(\hbox {Madrid}) \ge 4) = 0.1455\))
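The contrast between possible and necessary improvements can be made concrete with a small Python sketch. It assumes, for illustration only, linear marginal value functions with \(u_j(0) = 0\) (so \(U(\rho \cdot g(a)) = \rho \cdot U(a)\) and no scale saturation) and a hypothetical sample of (U(a), threshold) pairs, one per instance, for which the target is not yet attained. The possible quantities come from the most advantageous instance and the necessary ones from the least advantageous instance. Over a sample, the necessary values are only lower bounds on the exact ones.

```python
def improvement_bounds(instances):
    """instances: (U_k(a), t_k) pairs with U_k(a) > 0, where t_k is the threshold
    of the target class under instance k. Returns ((rho_P, rho_N), (u_P, u_N))."""
    factors = [max(1.0, t / u) for u, t in instances]  # required rho per instance
    missing = [max(0.0, t - u) for u, t in instances]  # required increase of U per instance
    # Possible: one instance suffices -> the most advantageous one (minimum).
    # Necessary: all instances must agree -> the least advantageous one (maximum).
    return (min(factors), max(factors)), (min(missing), max(missing))

sample = [(0.50, 0.55), (0.45, 0.60), (0.48, 0.54)]  # hypothetical (U_k(a), t_k)
(rho_p, rho_n), (u_p, u_n) = improvement_bounds(sample)
print(round(rho_p, 4), round(rho_n, 4), round(u_p, 4), round(u_n, 4))  # 1.1 1.3333 0.05 0.15
```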

Again, let us illustrate that the more demanding the target, the greater the improvement needed to achieve it for all compatible preference model instances. For example:

- Madrid, which is possibly assigned to \([C_2, C_4]\), needs to improve its performances 1.0402 or 1.1686 times to be necessarily assigned to a class at least as good as, respectively, \(C_3\) or \(C_4\); the respective missing values are 0.0346 and 0.1455;

- Bratislava, which is possibly assigned to \([C_1, C_3]\), needs to improve its performances 1.0287 or 1.5016 times to be necessarily assigned to a class at least as good as, respectively, \(C_2\) or \(C_3\); the respective missing values are 0.0194 and 0.3337.

In Table 6, we present partial assignment-related necessary improvements \(\rho ^N_{>, G_j}(L_P(\hbox {Istanbul}) \ge 2)\) for the necessary assignment of Istanbul to \(C_2\). The analysis confirms that Istanbul may attain this target by improving its performances on any subset of criteria. Nevertheless, the following strategies seem to be the best for Istanbul: improving \(g_1(\hbox {Istanbul})\) by 1.3659 times, or a simultaneous improvement on \(g_1\) and \(g_3\), on \(g_3\) and \(g_4\), or on \(g_1\), \(g_3\), and \(g_4\).

Let us also illustrate that achieving a certain target for at least one compatible preference model instance is easier than achieving it for all of them. For example, Bratislava, whose best possible class is \(C_3\), needs to improve its performances 1.4482 or 1.8304 times to be assigned to \(C_4\) by, respectively, at least one or all compatible preference model instances; the respective missing values are 0.2647 and 0.5124. Similarly, Istanbul, whose best possible class is \(C_2\), would be possibly or necessarily assigned to \(C_3\) if its performances were improved, respectively, 1.0062 or 1.4797 times, or if its comprehensive value were increased by 0.0042 or 0.3249.
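The inequality between possible and necessary requirements can be verified on the figures quoted above. The Python snippet below re-encodes only those numbers, checks that the necessary factor and missing value are never smaller than the possible ones, and reports how much more demanding the necessary target is (as a ratio of factors and a difference of values).

```python
# (possible, necessary) improvement factors and missing values quoted above.
reported = {
    ("Bratislava", "C4"): ((1.4482, 1.8304), (0.2647, 0.5124)),
    ("Istanbul", "C3"):   ((1.0062, 1.4797), (0.0042, 0.3249)),
}
for (city, target), ((rho_p, rho_n), (u_p, u_n)) in reported.items():
    # A target required for all instances cannot be cheaper than for at least one.
    assert rho_p <= rho_n and u_p <= u_n
    print(f"{city} -> {target}: factor ratio {rho_n / rho_p:.4f}, extra value {u_n - u_p:.4f}")
```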

Necessary preference-related assignment-based improvements and missing values

As regards the improvement making an exemplary necessary assignment-based preference relation true, Madrid, which is not assigned to a class at least as good as London by all compatible preference model instances, needs to improve its performances 1.3904 times so that Madrid \(\succsim ^{\rightarrow ,N}\) London; the respective missing value is 0.1684. The necessary improvement and missing value needed to make London \(\succsim ^{\rightarrow ,N}\) Madrid true are equal to 1.0139 and 0.0095, respectively. Further, Warsaw needs to improve its performances 1.3347 times (or its comprehensive value by 0.2450) to be necessarily assigned to a class at least as good as Athens.

Possible assignment-related deteriorations and surplus values

Recall that the possible or necessary improvement or missing value is computed when some target is not attained with the current performance vector. Otherwise, one may investigate the possible or necessary deterioration or surplus value for which the target is still preserved. First, let us focus on the deterioration of the performances and the comprehensive values of selected alternatives that would still grant their possible assignment to a class at least as good as \(C_3\). In Table 7, we provide the respective comprehensive assignment-related possible deteriorations \(\rho ^P_{<}(R_P(a) \ge 3)\) and surplus values \(u^P_{<}(R_P(a) \ge 3)\) for the 11 alternatives which attain this target with their current performance vectors. On the one hand, Zurich, Copenhagen, Paris, Vienna, London, Amsterdam, and Madrid can afford a significant deterioration of both their performances and their comprehensive values while still being possibly assigned to \(C_3\) or better. On the other hand, Riga, Dublin, and Bratislava can afford only a very slight deterioration to maintain their assignment to \(C_3\) by at least one compatible preference model instance. In particular, the possible assignment of Bratislava to \(C_3\) is very sensitive to its current performance vector.
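A deterioration is found the same way as an improvement, only in the interval \([0, 1]\): the smallest factor \(\rho\) for which the target still holds. The Python sketch below does this by bisection for one hypothetical model instance with piecewise-linear marginal value functions (evaluated with `numpy.interp`) and gain-type criteria. The performances, breakpoints, threshold, and the helper `allowed_deterioration` are illustrative assumptions. The surplus value printed alongside is the drop of \(U(a)\) the instance tolerates, so a possible surplus would be the largest such value over compatible instances.

```python
import numpy as np

def value(perf, breakpoints, values):
    # Additive model with piecewise-linear, non-decreasing marginals (gain criteria).
    return sum(float(np.interp(x, bp, v)) for x, bp, v in zip(perf, breakpoints, values))

def allowed_deterioration(perf, breakpoints, values, threshold, tol=1e-9):
    """Smallest rho in [0, 1] with U(rho * g(a)) >= threshold under one instance;
    None if the target is not attained at rho = 1."""
    def holds(rho):
        return value([rho * x for x in perf], breakpoints, values) >= threshold
    if not holds(1.0):
        return None                 # nothing to preserve: compute an improvement instead
    if holds(0.0):
        return 0.0
    low, high = 0.0, 1.0            # invariant: not holds(low), holds(high)
    while high - low > tol:
        mid = (low + high) / 2
        if holds(mid):
            high = mid
        else:
            low = mid
    return high

bp = [[0, 100], [0, 100]]
vals = [[0, 0.5], [0, 0.5]]         # hypothetical linear marginals
perf = [80.0, 60.0]                 # U(a) = 0.4 + 0.3 = 0.7
rho = allowed_deterioration(perf, bp, vals, threshold=0.56)
surplus = value(perf, bp, vals) - 0.56
print(round(rho, 4), round(surplus, 4))  # 0.8 0.14
```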

Obviously, the looser the target, i.e., the worse the class to be maintained, the greater the deterioration allowed to keep it for at least one compatible preference model instance. For example:

- Madrid, which is possibly assigned to \([C_2, C_4]\), can afford a deterioration of its performances to 0.9513, 0.6590, or 0.5454 times their current values to possibly maintain the assignment to, respectively, \(C_4\), \(C_3\), or \(C_2\); the respective surplus values are 0.3633, 0.5454, and 0.6590;

- Riga, which is possibly assigned to \([C_2, C_3]\), can afford a deterioration of its performances to 0.8833 or 0.7374 times their current values to possibly maintain the assignment to, respectively, \(C_3\) or \(C_2\); the respective surplus values are 0.0879 and 0.2069.

Possible preference-related assignment-based deteriorations and surplus values

As regards the deterioration for an exemplary possible assignment-based preference relation, Riga, which is assigned to a class at least as good as Dublin by at least one compatible preference model instance, may deteriorate its performances to 0.7374 times their current values while Riga \(\succsim ^{\rightarrow ,P}\) Dublin still holds; the respective surplus value is 0.2069. The possible deterioration and surplus value for the relation Dublin \(\succsim ^{\rightarrow ,P}\) Riga are equal to 0.8281 and 0.1246, respectively. Thus, the performances and the comprehensive value of Riga are less sensitive with respect to the truth of the possible assignment-based preference over Dublin than vice versa.

Necessary assignment-related deteriorations and surplus values

As far as the necessary deterioration and surplus value are concerned, let us first focus on alternatives which are necessarily assigned to a class at least as good as \(C_3\). We wish to investigate what deterioration of their performances or what decrease of their comprehensive value they can afford while still being assigned to \(C_3\) or better by all compatible preference model instances. The results of this analysis are provided in Table 8. On the one hand, Paris, London, and Amsterdam can afford only a very slight deterioration of their performances or comprehensive values. On the other hand, the necessary assignment of Zurich and Copenhagen to a class not worse than \(C_3\) is much less sensitive to changes in their performances or comprehensive values.
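Deteriorations and surplus values mirror the improvement case: the possible variant is decided by the most advantageous instance, and the necessary variant by the least advantageous one. The Python sketch below again assumes, only for illustration, linear marginal value functions with \(u_j(0) = 0\) (so \(U(\rho \cdot g(a)) = \rho \cdot U(a)\)) and a hypothetical sample of (U(a), threshold) pairs under which the target is currently attained. With a sample rather than all compatible instances, the necessary values are approximations.

```python
def deterioration_bounds(instances):
    """instances: (U_k(a), t_k) pairs with U_k(a) >= t_k > 0 for every k (target
    currently attained). Returns ((rho_P, rho_N), (surplus_P, surplus_N))."""
    factors = [t / u for u, t in instances]    # smallest admissible rho per instance
    surpluses = [u - t for u, t in instances]  # largest admissible drop of U per instance
    # Possible: one instance suffices -> smallest factor, largest surplus.
    # Necessary: all instances must agree -> largest factor, smallest surplus.
    return (min(factors), max(factors)), (max(surpluses), min(surpluses))

sample = [(0.70, 0.56), (0.66, 0.60), (0.75, 0.50)]  # hypothetical (U_k(a), t_k)
(rho_p, rho_n), (s_p, s_n) = deterioration_bounds(sample)
print(round(rho_p, 4), round(rho_n, 4), round(s_p, 4), round(s_n, 4))  # 0.6667 0.9091 0.25 0.06
```

A necessary factor close to 1 together with a small necessary surplus signals the kind of sensitivity reported above for Paris, London, and Amsterdam.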

Again, the less strict the target, the greater the deterioration allowed to maintain it for all compatible preference model instances. For example:

- Zurich, which is necessarily assigned to \(C_4\), can afford a deterioration of its performances to 0.9913 or 0.8607 times their current values to remain assigned to, respectively, \(C_4\) or a class at least as good as \(C_3\) by all compatible preference model instances; the respective surplus values are 0.0103 and 0.1650;

- Amsterdam, which is never assigned to a class worse than \(C_3\), can afford a deterioration of its performances to 0.9632 or 0.7218 times their current values to remain assigned to a class at least as good as, respectively, \(C_3\) or \(C_2\) by all compatible preference model instances; the respective surplus values are 0.0391 and 0.3149.

Necessary preference-related assignment-based deteriorations and surplus values

Referring to an exemplary necessary assignment-based preference relation, Madrid can afford a deterioration of its performances to 0.7218 times their current values, or a decrease of its comprehensive value by 0.3149, while Madrid \(\succsim ^{\rightarrow ,N}\) Budapest still holds. When comparing Dublin with Vilnius, the corresponding results are 0.9921 (for the necessary deterioration) and 0.0064 (for the necessary surplus value).