Abstract

We study a general class of infimal convolution type regularisation functionals suitable for applications in image processing. These functionals combine the total variation seminorm with \(\mathrm {L}^{p}\) norms. A unified well-posedness analysis is presented. We also perform a detailed study of the one-dimensional model, including the computation of exact solutions of the corresponding denoising problem for \(p=2\). Furthermore, we study how the regularisation properties of this infimal convolution approach depend on the choice of p. It turns out that in the case \(p=2\) this regulariser is equivalent to the Huber-type variant of total variation regularisation. We provide numerical examples for image decomposition as well as for image denoising. We show that our model is capable of eliminating the staircasing effect, a well-known disadvantage of total variation regularisation. Moreover, as p increases we obtain almost piecewise affine reconstructions, which also leads to a better preservation of hat-like structures.


1 Introduction

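In this paper, we introduce a family of novel \(\mathrm {TV}\)–\(\mathrm {L}^{p}\) infimal convolution type functionals with applications in image processing:

\[ \mathrm {TVL}_{\alpha ,\beta }^{p}(u):=\min _{w\in \mathrm {L}^{p}(\Omega )}\ \alpha \Vert Du-w\Vert _{\mathcal {M}}+\beta \Vert w\Vert _{\mathrm {L}^{p}(\Omega )}. \tag{1.1} \]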

Here \(\Vert \cdot \Vert _{\mathcal {M}}\) denotes the Radon norm of a measure. The functional (1.1) is suitable as a regulariser for variational non-smooth regularisation in imaging applications. We study the properties of (1.1) and its regularising mechanism for different values of p, and we apply it successfully to image denoising.

1.1 Context

After the introduction of the total variation (\(\mathrm {TV}\)) for image reconstruction purposes [38], non-smooth regularisers have become increasingly popular during the last decades (cf. [7]). They are typically used in the context of variational regularisation, where the reconstructed image is obtained as a solution of a minimisation problem of the type

\[ \min _{u}\ \frac{1}{s}\Vert f-Tu\Vert _{\mathrm {L}^{s}(\Omega )}^{s}+\Psi (u). \tag{1.2} \]

The regulariser is denoted here by \(\Psi \). We assume that the data f, defined on an open, bounded and connected domain \(\Omega \subset \mathbb {R}^{2}\), have been corrupted through a bounded, linear operator T and additive (random) noise. Different values of s can be considered for the first term of (1.2), the fidelity term. For example, models incorporating an \(\mathrm {L}^{2}\) fidelity term (resp. \(\mathrm {L}^{1}\)) have been shown to be efficient for the restoration of images corrupted by Gaussian noise (resp. impulse noise). Of course, other types of noise can also be considered and in those cases the form of the fidelity term is adjusted accordingly. Typically, one or more parameters within \(\Psi \) balance the strength of regularisation against the fidelity term in the minimisation (1.2).

The advantage of using non-smooth regularisers is that the regularised images have sharp edges (discontinuities). For instance, it is well known that \(\mathrm {TV}\) regularisation promotes piecewise constant reconstructions and thus preserves discontinuities. However, this also leads to blocky artefacts in the reconstructed image, an effect known as staircasing. Recall at this point that for two-dimensional images \(u\in \mathrm {L}^{1}(\Omega )\), the total variation functional is defined as

\[ \mathrm {TV}(u)=\sup \left\{ \int _{\Omega }u\,\mathrm {div}\phi \,\mathrm {d}x:\ \phi \in C_{c}^{1}(\Omega ,\mathbb {R}^{2}),\ \Vert \phi \Vert _{\infty }\le 1\right\} . \tag{1.3} \]

The total variation uses only first-order derivative information in the regularisation process. This can be seen from the fact that for \(\mathrm {TV}(u)<\infty \) the distributional derivative Du is a finite Radon measure and \(\mathrm {TV}(u)=\Vert Du\Vert _{\mathcal {M}}\). Moreover, if \(u\in \mathrm {W}^{1,1}(\Omega )\) then \(\mathrm {TV}(u)=\int _{\Omega }|\nabla u|\,\mathrm{d}x\), i.e. the total variation is the \(\mathrm {L}^{1}\) norm of the gradient of u. Higher-order extensions of the total variation functional have been widely explored in the literature, e.g. [4, 5, 9, 11, 12, 27, 29, 30, 34]. The incorporation of second-order derivatives has been shown to reduce or even eliminate the staircasing effect. The most successful regulariser of this kind is the second-order total generalised variation (TGV) introduced by Bredies et al. [5]. Its definition reads

\[ \mathrm {TGV}_{\beta ,\alpha }^{2}(u)=\min _{w\in \mathrm {BD}(\Omega )}\ \alpha \Vert Du-w\Vert _{\mathcal {M}}+\beta \Vert \mathcal {E}w\Vert _{\mathcal {M}}. \tag{1.4} \]

Here \(\alpha ,\beta \) are positive parameters and \(\mathrm {BD}(\Omega )\) is the space of functions of bounded deformation. This is the space of all \(\mathrm {L}^{1}(\Omega )\) functions w whose symmetrised distributional derivative \(\mathcal {E}w\) is a finite Radon measure. It is a less regular space than the usual space \(\mathrm {BV}(\Omega )\) of functions of bounded variation, for which the full gradient Du is required to be a finite Radon measure. Note that if the variable w in the definition (1.4) is forced to be the gradient of another function, we obtain the classical infimal convolution regulariser of Chambolle–Lions [9]. In that sense, \(\mathrm {TGV}\) can be seen as a particular instance of infimal convolution, optimally balancing first- and second-order information.

In the discrete formulation of \(\mathrm {TGV}\) (as well as of \(\mathrm {TV}\)) the Radon norm is interpreted as an \(\mathrm {L}^{1}\) norm. The motivation for the current paper and the follow-up paper [8] is to explore the capabilities of \(\mathrm {L}^{p}\) norms within first-order regularisation functionals designed for image processing. \(\mathrm {L}^{p}\) norms for \(p>1\) have been exploited in different contexts, e.g. the infinity Laplacian and the p-Laplacian (cf. [16] and [26], respectively).
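To make the discrete interpretation concrete, here is a minimal sketch of the isotropic discrete total variation as an \(\mathrm {L}^{1}\) norm of the gradient. It rests on several assumptions of ours, not on the paper's discretisation: a uniform grid of spacing h on the unit square, forward differences with the difference leaving the grid set to zero, and the hypothetical helper name `total_variation_2d`. The example also shows that a unit jump and a unit ramp have almost the same total variation. This is one way to see that TV alone does not favour smooth transitions over edges.

```python
import numpy as np

def total_variation_2d(u, h):
    """Isotropic discrete TV: h^2 * sum_ij |grad u|_ij with forward differences.

    Assumption (illustration only): the difference leaving the grid is set to
    zero, a Neumann-type boundary choice.
    """
    u = np.asarray(u, dtype=float)
    dx = np.zeros_like(u)
    dy = np.zeros_like(u)
    dx[:, :-1] = (u[:, 1:] - u[:, :-1]) / h  # horizontal forward differences
    dy[:-1, :] = (u[1:, :] - u[:-1, :]) / h  # vertical forward differences
    return float(np.sum(np.sqrt(dx**2 + dy**2)) * h**2)

n = 100
h = 1.0 / n
step = np.zeros((n, n))
step[:, n // 2:] = 1.0                     # unit jump along a vertical line
ramp = np.tile(np.arange(n) * h, (n, 1))   # u(x, y) = x, rising by (n - 1) * h

tv_step = total_variation_2d(step, h)
tv_ramp = total_variation_2d(ramp, h)
print(f"TV(step) = {tv_step:.4f}, TV(ramp) = {tv_ramp:.4f}")
```

Both values are close to 1, the height of the transition times the length of the domain, regardless of how steep the transition is.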

1.2 Our Contribution

Comparing the definition (1.1) with the definition of \(\mathrm {TGV}\) in (1.4), we see that the Radon norm of the symmetrised gradient of w has been replaced by the \(\mathrm {L}^{p}\) norm of w, thus reducing the order of regularisation. To our knowledge, this is the first paper that provides a thorough analysis of \(\mathrm {TV}\)–\(\mathrm {L}^{p}\) infimal convolution models (1.1) in this generality. We show that the minimisation in (1.1) is well-defined and that \(\mathrm {TVL}_{\alpha ,\beta }^{p}(u)<\infty \) if and only if \(\mathrm {TV}(u)<\infty \). Hence \(\mathrm {TVL}_{\alpha ,\beta }^{p}\) regularised images belong to \(\mathrm {BV}(\Omega )\), as desired.

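In order to gain more insight into the regularising mechanism of the \(\mathrm {TVL}_{\alpha ,\beta }^{p}\) functional, we provide a detailed and rigorous analysis of the one-dimensional version of the corresponding \(\mathrm {L}^{2}\) fidelity denoising problem

\[ \min _{u\in \mathrm {BV}(\Omega )}\ \frac{1}{2}\Vert f-u\Vert _{\mathrm {L}^{2}(\Omega )}^{2}+\mathrm {TVL}_{\alpha ,\beta }^{p}(u),\qquad \Omega \subset \mathbb {R}. \tag{1.5} \]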

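For the denoising problem (1.5) with \(p=2\), we also compute exact solutions for simple one-dimensional data. We show that the obtained solutions are piecewise smooth, in contrast to \(\mathrm {TV}\) (piecewise constant) and \(\mathrm {TGV}\) (piecewise affine) solutions. Moreover, we show that for \(p=2\) the 2-homogeneous analogue of the functional (1.1)

\[ \mathrm {TVL}_{\alpha ,\beta }^{2-hom}(u):=\min _{w\in \mathrm {L}^{2}(\Omega )}\ \alpha \Vert Du-w\Vert _{\mathcal {M}}+\frac{\beta }{2}\Vert w\Vert _{\mathrm {L}^{2}(\Omega )}^{2} \tag{1.6} \]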

is equivalent to a variant of Huber \(\mathrm {TV}\) [24], with the functional (1.6) having a close connection to (1.1) itself. Huber total variation is a smooth approximation of total variation and even though it has been widely used in the imaging and inverse problems community, it has not been analysed adequately. Hence, as a by-product of our analysis, we compute exact solutions of the one-dimensional Huber TV denoising problem. An analogous connection of the \(\mathrm {TVL}_{\alpha ,\beta }^{p}\) functional with a generalised Huber \(\mathrm {TV}\) regularisation is also established for general p.

We proceed with extensive numerical experiments focusing on (1.5). The numerical results agree with the analytical ones, which confirms our analysis. Furthermore, we observe that even though a first-order regularisation functional is used, we are capable of eliminating the staircasing effect, similar to Huber \(\mathrm {TV}\). By using the Bregman iteration version of our method [32], we are also able to enhance the contrast of the reconstructed images, obtaining results very similar in quality to the \(\mathrm {TGV}\) ones. We observe numerically that high values of p promote almost affine structures, similar to second-order regularisation methods. We shed more light on this behaviour in the follow-up paper [8], where we study the case \(p=\infty \) in depth. Let us finally note that we also consider a modified version of the functional (1.1) where w is restricted to be the gradient of another function, leading to the more classical infimal convolution setting. Even though this modified model is not as successful in staircasing reduction, it is effective in decomposing an image into piecewise constant and smooth parts.

1.3 Organisation of the Paper

We introduce our model in Sect. 2. There we prove the well-posedness of (1.1), provide an equivalent definition and prove its Lipschitz equivalence with the \(\mathrm {TV}\) seminorm. We finish that section with a well-posedness result for the corresponding \(\mathrm {TVL}_{\alpha ,\beta }^{p}\) regularisation problem, obtained using standard tools.

In Sect. 3 we establish a link between the \(\mathrm {TVL}_{\alpha ,\beta }^{p}\) functional and its p-homogeneous analogue (using the p-th power of \(\Vert \cdot \Vert _{\mathrm {L}^{p}(\Omega )}\)). The p-homogeneous functional (for \(p=2\)) is further shown to be equivalent to Huber total variation, while analogous results are obtained for \(p\ne 2\).

We study the corresponding one-dimensional model in Sect. 4 focusing on the \(\mathrm {L}^{2}\) fidelity denoising case. More specifically, after deriving the optimality conditions using Fenchel–Rockafellar duality in Sect. 4.1, we explore the structure of solutions in Sect. 4.2. In Sect. 4.3 we compute exact solutions for the case \(p=2\), considering a simple step function as data.

In Sect. 5 we present a variant of our model suitable for image decomposition purposes, i.e. geometric decomposition into piecewise constant and smooth structures.

Section 6 focuses on numerical experiments. The one-dimensional analytical results are confirmed numerically in Sect. 6.2. Two-dimensional denoising experiments are performed in Sect. 6.3 using the split Bregman method. There, we show that our approach can eliminate the staircasing effect. We also show that a Bregmanised version can enhance the contrast, achieving results very close to \(\mathrm {TGV}\), a method considered state of the art in the context of variational regularisation. We finish that section with some image decomposition examples, and we summarise our results in Sect. 7.

In the appendix, we remind the reader of some basic facts from the theory of Radon measures and \(\mathrm {BV}\) functions.

2 Basic Properties of the \(\mathrm {TVL}_{\alpha ,\beta }^{p}\) Functional

In this section, we introduce the \(\mathrm {TVL}_{\alpha ,\beta }^{p}\) functional (1.1) as well as some of its main properties. For \(\alpha ,\beta >0\) and \(1<p\le \infty \), we define \(\mathrm {TVL}^{p}_{\alpha ,\beta }: \mathrm {L}^{1}(\Omega )\rightarrow \overline{\mathbb {R}}\) (where \(\overline{\mathbb {R}}:=\mathbb {R}\cup \{+\infty \}\)) as follows:

\[ \mathrm {TVL}^{p}_{\alpha ,\beta }(u):=\min _{w\in \mathrm {L}^{p}(\Omega )}\ \alpha \Vert Du-w\Vert _{\mathcal {M}}+\beta \Vert w\Vert _{\mathrm {L}^{p}(\Omega )}. \]

While in the present paper we mainly focus on the finite p case, the results of this section are stated and proved for \(p=\infty \) as well, since the proofs are similar.

The next proposition asserts that the minimisation in (1.1) is indeed well-defined. We omit the proof, which is based on standard coercivity and weak lower semicontinuity techniques:

Proposition 2.1

Let \(u\in \mathrm { BV(\Omega )}\) with \(1< p\le \infty \) and \(\alpha ,\beta >0\). Then the minimum in the definition (1.1) is attained.

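Another useful formulation of the definition (1.1) is the dual formulation:

\[ \mathrm {TVL}^{p}_{\alpha ,\beta }(u)=\sup \left\{ \int _{\Omega }u\,\mathrm {div}\phi \,\mathrm {d}x:\ \phi \in C_{c}^{1}(\Omega ,\mathbb {R}^{2}),\ \Vert \phi \Vert _{\infty }\le \alpha ,\ \Vert \phi \Vert _{\mathrm {L}^{q}(\Omega )}\le \beta \right\} , \tag{2.1} \]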

where q denotes here the conjugate exponent of p, see (8.4). The following proposition shows that the two expressions indeed coincide. Recall first that for a functional \(F:X\rightarrow \overline{\mathbb {R}}\) the effective domain is defined as \(\mathrm{dom}F = \left\{ x\in X: F(x)<\infty \right\} \), while the indicator and characteristic functions of \(A\subseteq X\) are defined as

\[ \mathcal {I}_{A}(x)=\begin{cases}0, & x\in A,\\ +\infty , & x\notin A,\end{cases}\qquad \mathcal {X}_{A}(x)=\begin{cases}1, & x\in A,\\ 0, & x\notin A,\end{cases} \]

respectively. As usual, we denote by \(\langle \cdot ,\cdot \rangle \) the duality pairing of X and its dual \(X^{*}\). Finally, recall that the convex conjugate \(F^{*}:X^{*}\rightarrow \overline{\mathbb {R}}\) of F is defined as \(F^{*}(x^{*})=\sup _{x\in X}\left\{ \langle x^{*},x\rangle -F(x)\right\} \).
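As a quick numerical illustration of the convex conjugate, one can check two standard scalar facts. First, the conjugate of \(x\mapsto \frac{1}{p}|x|^{p}\) is \(y\mapsto \frac{1}{q}|y|^{q}\). Second, the conjugate of \(x\mapsto \alpha |x|\) is the indicator function of \([-\alpha ,\alpha ]\), which is the mechanism that turns a weighted norm into a constraint in a dual formulation. This is a sketch only. Approximating the supremum by a maximum over a finite grid is our own simplification, and `conjugate_on_grid` is a hypothetical helper.

```python
import numpy as np

def conjugate_on_grid(F, y, grid):
    """Approximate F*(y) = sup_x (y*x - F(x)) by a maximum over a finite grid.

    Simplification (assumption): where F*(y) = +inf, the grid maximum is only a
    large finite number that grows with the range of the grid.
    """
    y = np.atleast_1d(np.asarray(y, dtype=float))
    return np.max(np.outer(y, grid) - F(grid)[None, :], axis=1)

grid = np.linspace(-10.0, 10.0, 200001)
p = 3.0
q = p / (p - 1.0)                 # conjugate exponent: 1/p + 1/q = 1
ys = np.linspace(-2.0, 2.0, 9)

power_conj = conjugate_on_grid(lambda x: np.abs(x) ** p / p, ys, grid)
expected = np.abs(ys) ** q / q    # (1/p)|x|^p  <->  (1/q)|y|^q

alpha = 1.0
abs_conj = conjugate_on_grid(lambda x: alpha * np.abs(x), [0.5, 2.0], grid)
# abs_conj[0] is 0 because |0.5| <= alpha; abs_conj[1] is (2 - alpha) * 10,
# the grid's finite stand-in for +infinity.
print(np.max(np.abs(power_conj - expected)), abs_conj)
```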

Proposition 2.2

Let \(u\in \mathrm { BV(\Omega )}\) and \(1<p\le \infty \) then

Proof

First notice that in (2.1) we can replace \(C^1_c(\Omega )\) by \(C^1_0(\Omega )\), since \(\overline{C^1_c(\Omega )}=C^1_0(\Omega )\), with the closure taken with respect to the \(C^{1}\) norm. We define

Then, we can rewrite (2.1) as

We can establish the following relation

i.e. the absence of duality gap between the primal and the dual problems [15, Chapter III], provided that the set

is a closed vector space [2]. This is indeed true since on one hand we have

and on the other hand, for every \(\phi \in X\), we can write \(\phi =\lambda (\lambda ^{-1}\phi -0)\) for a suitable \(\lambda >0\), with \(0\in \mathrm{dom}F_{1}\). Since \(u\in \mathrm {BV}(\Omega )\), \(\mathrm {TVL}^{p}_{\alpha ,\beta }(u)\) is finite, see also Proposition 2.4. Hence \(F_{1}^{*}(w)<\infty \), \(F_{2}^{*}(w)<\infty \) and

where we have used the fact that, by a density argument, the functional \(w: C_{0}^{1}\rightarrow \mathbb {R}\) can be extended to the whole of \(\mathrm {L}^{q}(\Omega )\) as a bounded linear functional. By the Riesz representation theorem, it is then an \(\mathrm {L}^{p}\) function. Similarly, we have

Thus the desired equality is proven.\(\square \)

Remark 2.3

Note that using the dual formulation of \(\mathrm {TVL}^{p}_{\alpha ,\beta }\) one can easily derive that the functional is lower semicontinuous with respect to the strong \(\mathrm {L}^{1}\) topology since it is a pointwise supremum of continuous functions.

The following proposition shows that the \(\mathrm {TVL}^{p}_{\alpha ,\beta }\) functional is Lipschitz equivalent to the total variation.

Proposition 2.4

Let \(u\in \mathrm {L^{1}(\Omega )}\) and \(1<p\le \infty \). Then \(\mathrm {TVL}^{p}_{\alpha ,\beta }(u)<\infty \) if and only if \(u\in \mathrm {BV}(\Omega )\). Moreover there exist constants \(C_{1}=\alpha \) and \(C_{2}=(C\tilde{C})^{-1}\), where \(C=\max \big (1,|\Omega |^{\frac{1}{q}}\big )\) and \(\tilde{C}=\max \big (\frac{1}{\alpha },\frac{1}{\beta }\big )\) such that

Finally in the special case where

then

Proof

Let \(u\in \mathrm {BV}(\Omega )\). Using the definition (1.1) we have that

for every \(w\in \mathrm {L}^{p}(\Omega )\). Setting \(w=0\) and \(C_{1}=\alpha \), we obtain

For the other direction, for any \(w\in \mathrm {L}^{p}(\Omega )\subset \mathrm {L^{1}(\Omega )}\), by the triangle inequality we get

with \(C=\max \big (1,|\Omega |^{\frac{1}{q}} \big )\). By setting \(C_{2}\) as in the statement of the proposition, we obtain

which, by minimising over w, yields the left-hand side inequality in (2.2).

Finally, observe simply that if (2.3) holds then from (2.6) we get

and thus, minimising again over w and combining with (2.5), we get (2.4).\(\square \)

Notice that when (2.3) holds, the above proposition implies that \(w=0\) is a minimiser in the definition of \(\mathrm {TVL}_{\alpha ,\beta }^{p}(u)\), i.e.

However, in general we cannot prove that this solution is unique.
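In one dimension, the minimisation over w in (1.1) can be carried out almost explicitly for a discretised u. This gives a way to observe Proposition 2.4 numerically. The sketch is our own illustration, not an algorithm from this paper, and it rests on the following assumptions:

- the grid is uniform with spacing h, and g denotes the forward differences of u;
- the discrete norms are \(\Vert v\Vert _{p}=(h\sum _i|v_i|^{p})^{1/p}\);
- the helper name `tvlp_1d` is hypothetical.

The first-order conditions for \(\min _w \alpha h\sum _i|g_i-w_i|+\beta \Vert w\Vert _{p}\) with \(1<p<\infty \) show that a nonzero minimiser is \(w=\mathrm {clip}(g,-c,c)\), where \(c=(\alpha /\beta )^{1/(p-1)}\Vert w\Vert _{p}\). The scalar c can be found by bisection, because \(\Vert \mathrm {clip}(g,-c,c)\Vert _{p}/c\) is nonincreasing in c. When \((h\cdot \#\{g_i\ne 0\})^{1/p}\le (\beta /\alpha )^{1/(p-1)}\), the minimiser is \(w=0\) and the value is \(\alpha \,\mathrm {TV}(u)\). This is in line with the last statement of Proposition 2.4.

```python
import numpy as np

def weighted_lp(v, p, h):
    """Discrete L^p norm on a uniform grid: (h * sum |v_i|^p)^(1/p)."""
    return (h * np.sum(np.abs(v) ** p)) ** (1.0 / p)

def tvlp_1d(u, alpha, beta, p, h, tol=1e-13):
    """Hypothetical helper: discrete 1D analogue of TVL^p_{alpha,beta}(u), 1 < p < inf.

    Solves min_w alpha*h*sum|g - w| + beta*||w||_p, g = forward differences of u,
    using the (assumed) clipping structure of the minimiser. Returns (value, w).
    """
    g = np.diff(np.asarray(u, dtype=float)) / h
    target = (beta / alpha) ** (1.0 / (p - 1.0))
    # ratio(c) = ||clip(g, -c, c)||_p / c decreases from (h * #{g != 0})^(1/p).
    ratio = lambda c: weighted_lp(np.clip(g, -c, c), p, h) / c
    if (h * np.count_nonzero(g)) ** (1.0 / p) <= target:
        w = np.zeros_like(g)  # w = 0 is optimal, so the value is alpha * TV(u)
    else:
        lo, hi = 0.0, weighted_lp(g, p, h) / target  # ratio(hi) <= target
        while hi - lo > tol * hi:                    # bisection on c
            mid = 0.5 * (lo + hi)
            lo, hi = (mid, hi) if ratio(mid) > target else (lo, mid)
        w = np.clip(g, -hi, hi)
    value = alpha * h * np.sum(np.abs(g - w)) + beta * weighted_lp(w, p, h)
    return float(value), w

x = np.linspace(0.0, 1.0, 201)
h = x[1] - x[0]
u = np.sin(2 * np.pi * x) + (x > 0.5)     # smooth part plus a unit jump
tv = h * np.sum(np.abs(np.diff(u) / h))   # discrete TV(u)
for beta in (0.2, 0.5, 2.0):
    val, w = tvlp_1d(u, alpha=1.0, beta=beta, p=2.0, h=h)
    print(f"beta = {beta}: TVL = {val:.4f} <= alpha*TV = {tv:.4f}, w == 0: {not w.any()}")
```

For large \(\beta \), the run reports \(w=0\) and the value coincides with \(\alpha \,\mathrm {TV}(u)\). For small \(\beta \), part of the gradient is absorbed by w and the value drops below \(\alpha \,\mathrm {TV}(u)\).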

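Having shown the basic properties of the \(\mathrm {TVL}^{p}_{\alpha ,\beta }\) functional, we can use it as a regulariser for variational imaging problems of the type

\[ \min _{u\in \mathrm {L}^{s}(\Omega )}\ \frac{1}{s}\Vert f-Tu\Vert _{\mathrm {L}^{s}(\Omega )}^{s}+\mathrm {TVL}_{\alpha ,\beta }^{p}(u), \tag{2.7} \]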

where \(T: \mathrm {L}^{s}(\Omega )\rightarrow \mathrm {L}^{s}(\Omega )\) is a bounded, linear operator and \(f\in \mathrm {L}^{s}(\Omega )\). We conclude our analysis with existence and uniqueness results for the minimisation problem (2.7).

Theorem 2.5

Let \(1<p\le \infty \) and \(f\in \mathrm {L}^{s}(\Omega )\). If \(T(\mathcal {X}_{\Omega })\ne 0\) then there exists a solution \(u\in \mathrm {L}^{s}(\Omega )\cap \mathrm {BV}(\Omega )\) for the problem (2.7). If \(s>1\) and T is injective then the solution is unique.

Proof

The proof is a straightforward application of the direct method of the calculus of variations. We simply use the inequality (2.2) and the compactness theorem in \(\mathrm {BV}(\Omega )\) (see Appendix), together with the lower semicontinuity of \(\mathrm {TVL}^{p}_{\alpha ,\beta }\). We also refer the reader to the corresponding proofs in [34, 39].\(\square \)

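Since we are mainly interested in studying the regularising properties of \(\mathrm {TVL}^{p}_{\alpha ,\beta }\), in what follows we focus on the case where \(s=2\) and T is the identity operator (denoising task), for which a rigorous analysis can be carried out. From now on, we also restrict to finite p, as the case \(p=\infty \) is studied in the follow-up paper [8]. We thus define the following problem

\[ \min _{u\in \mathrm {BV}(\Omega )}\ \frac{1}{2}\Vert f-u\Vert _{\mathrm {L}^{2}(\Omega )}^{2}+\mathrm {TVL}_{\alpha ,\beta }^{p}(u), \tag{$\mathcal {P}$} \]

or equivalently

\[ \min _{u\in \mathrm {BV}(\Omega ),\ w\in \mathrm {L}^{p}(\Omega )}\ \frac{1}{2}\Vert f-u\Vert _{\mathrm {L}^{2}(\Omega )}^{2}+\alpha \Vert Du-w\Vert _{\mathcal {M}}+\beta \Vert w\Vert _{\mathrm {L}^{p}(\Omega )}. \]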

3 The p-Homogeneous Analogue and Relation to Huber TV

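Before we proceed to a detailed analysis of the one-dimensional version of \((\mathcal {P})\), in this section we consider its p-homogeneous analogue

\[ \min _{u\in \mathrm {BV}(\Omega ),\ w\in \mathrm {L}^{p}(\Omega )}\ \frac{1}{2}\Vert f-u\Vert _{\mathrm {L}^{2}(\Omega )}^{2}+\alpha \Vert Du-w\Vert _{\mathcal {M}}+\frac{\beta }{p}\Vert w\Vert _{\mathrm {L}^{p}(\Omega )}^{p}. \tag{$\mathcal {P}_{p-hom}$} \]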

We show in Proposition 3.2 that there is a strong connection between the models \((\mathcal {P})\) and \((\mathcal {P}_{p-hom})\). The reason for introducing \((\mathcal {P}_{p-hom})\) is that, in certain cases, it is technically easier to derive exact solutions for \((\mathcal {P}_{p-hom})\) than for \((\mathcal {P})\) directly, see Sect. 4.3. Moreover, we can guarantee the uniqueness of the optimal \(w^{*}\) in \((\mathcal {P}_{p-hom})\), since

and thus \(w^{*}\) is unique as a minimiser of a strictly convex functional. The next proposition states that, unless f is constant, the optimal \(w^{*}\) in \((\mathcal {P}_{p-hom})\) cannot be zero, but it nonetheless converges to zero as \(\beta \rightarrow \infty \). In essence, this means that one cannot obtain \(\mathrm {TV}\) type solutions with the p-homogeneous model.

Proposition 3.1

Let \(1<p<\infty \), \(f\in \mathrm {L}^{2}(\Omega )\) and let \((w^{*},u^{*})\) be an optimal solution pair of the p-homogeneous problem \((\mathcal {P}_{p-hom})\). Then \(w^{*}=0\) if and only if f is a constant function. For general data f, we have that \(w^{*}\rightarrow 0\) in \(\mathrm {L}^{p}(\Omega )\) when \(\beta \rightarrow \infty \).

Proof

It follows immediately that if f is constant, then (0, f) is the optimal pair for \((\mathcal {P}_{p-hom})\). Suppose that \((w^{*},u^{*})\) solves \((\mathcal {P}_{p-hom})\). Notice that in this case we also have

Suppose now that \(w^{*}=0\). Then (3.1) becomes

Furthermore, since \((0,u^{*})\) solves \((\mathcal {P}_{p-hom})\), for every \(u\in C_{c}^{\infty }(\Omega )\) and \(\epsilon >0\) the pair \((\epsilon \nabla u,u^{*}+\epsilon u )\) is suboptimal for \((\mathcal {P}_{p-hom})\), i.e.

from which we obtain

Dividing the last inequality by \(\epsilon \) and letting \(\epsilon \rightarrow 0\), we obtain \(\int _{\Omega }(f-u^{*})u\,\mathrm{d}x\le 0\). Considering the analogous perturbations \(u^{*}-\epsilon u\), we similarly obtain \(\int _{\Omega }(f-u^{*})u\,\mathrm{d}x\ge 0\), and thus

Hence \(u^{*}=f\), and the optimality condition of (3.2) then implies that \(Df=0\), i.e. f is a constant function.

For the last part of the proposition, (supposing \(f\ne 0\)), simply observe that for every \(u\in \mathrm {BV}(\Omega )\) and \(w\in \mathrm {L}^{p}(\Omega )\) we have that

and by setting \(u=w=0\), we obtain

and thus \(\Vert w^{*}\Vert _{\mathrm {L}^{p}(\Omega )}^{p}\rightarrow 0\) when \(\beta \rightarrow \infty \).\(\square \)

We further establish a connection between the 1-homogeneous \((\mathcal {P})\) and the p-homogeneous model \((\mathcal {P}_{p-hom})\):

Proposition 3.2

Let \(1<p<\infty \) and let \(f\in \mathrm {L}^{2}(\Omega )\) be non-constant. A pair \((w^{*},u^{*})\) is a solution of \((\mathcal {P}_{p-hom})\) with parameters \((\alpha ,\beta _{p-hom})\) if and only if it is also a solution of \((\mathcal {P})\) with parameters \((\alpha ,\beta _{1-hom})\), where \(\beta _{1-hom}=\beta _{p-hom}\Vert w^{*}\Vert _{\mathrm {L}^{p}(\Omega )}^{p-1}\).

Proof

Since f is not constant, the previous proposition gives \(w^{*}\ne 0\). Note that for an arbitrary function \(u\in \mathrm {BV}(\Omega )\):

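This means that \(w^{*}\) is an admissible solution for both problems \((\mathcal {P})\) and \((\mathcal {P}_{p-hom})\), with the corresponding sets of parameters \((\alpha ,\beta _{1-hom})\) and \((\alpha ,\beta _{p-hom})\), respectively. That the same holds for \(u^{*}\) follows from the fact that, for fixed \(w^{*}\), in both problems we have

\[ u^{*}\in \mathop {\mathrm {argmin}}_{u\in \mathrm {BV}(\Omega )}\ \frac{1}{2}\Vert f-u\Vert _{\mathrm {L}^{2}(\Omega )}^{2}+\alpha \Vert Du-w^{*}\Vert _{\mathcal {M}}. \]

\(\square \)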
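For a fixed discretised u, the w-part of Proposition 3.2 can be checked numerically. The sketch rests on our own assumptions:

- the grid is one-dimensional and uniform with spacing h;
- g stands for the discrete derivative of u;
- the discrete norms are \(\Vert v\Vert _{p}=(h\sum _i|v_i|^{p})^{1/p}\);
- the p-homogeneous term is \(\frac{\beta }{p}\Vert w\Vert _{p}^{p}\);
- the helper names are hypothetical.

With this term, the w-subproblem decouples pointwise and is solved by clipping g at \((\alpha /\beta _{p-hom})^{1/(p-1)}\). With \(\beta _{1-hom}=\beta _{p-hom}\Vert w^{*}\Vert _{p}^{p-1}\), the same \(w^{*}\) should also minimise the 1-homogeneous w-subproblem. We test this by random perturbations.

```python
import numpy as np

def weighted_lp(v, p, h):
    """Discrete L^p norm on a uniform grid: (h * sum |v_i|^p)^(1/p)."""
    return (h * np.sum(np.abs(v) ** p)) ** (1.0 / p)

def phom_optimal_w(g, alpha, beta_p, p):
    """Minimiser of alpha*h*sum|g - w| + (beta_p/p)*h*sum|w|^p (decouples pointwise).

    Each entry minimises alpha*|g_i - w_i| + (beta_p/p)*|w_i|^p, which gives
    g_i clipped to [-t, t] with t = (alpha/beta_p)^(1/(p-1)).
    """
    t = (alpha / beta_p) ** (1.0 / (p - 1.0))
    return np.clip(g, -t, t)

def one_hom_objective(w, g, alpha, beta_1, p, h):
    """alpha*h*sum|g - w| + beta_1*||w||_p: w-part of the 1-homogeneous model."""
    return alpha * h * np.sum(np.abs(g - w)) + beta_1 * weighted_lp(w, p, h)

rng = np.random.default_rng(1)
h, p, alpha, beta_p = 0.01, 3.0, 1.0, 2.0
g = rng.normal(scale=3.0, size=100)                       # stand-in for u'
w_star = phom_optimal_w(g, alpha, beta_p, p)
beta_1 = beta_p * weighted_lp(w_star, p, h) ** (p - 1.0)  # relation of Proposition 3.2
J_star = one_hom_objective(w_star, g, alpha, beta_1, p, h)
worst = min(
    one_hom_objective(w_star + 1e-3 * rng.normal(size=g.shape), g, alpha, beta_1, p, h) - J_star
    for _ in range(500)
)
print(f"beta_1-hom = {beta_1:.4f}, smallest change under perturbation = {worst:.2e}")
```

A nonnegative smallest change indicates that no perturbation improved the 1-homogeneous objective, as expected if \(w^{*}\) is its minimiser.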

Finally, it turns out that for \(p=2\) the problem \((\mathcal {P}_{p-hom})\) is essentially equivalent to the widely used Huber total variation regularisation [24]. In fact, we can show that for \(1<p<\infty \), \((\mathcal {P}_{p-hom})\) is equivalent to a generalised Huber total variation regularisation, see also [23]. This is proved in the next proposition.

Proposition 3.3

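Let \(1<p<\infty \) and consider the functional \(\mathrm {TVL}_{\alpha ,\beta }^{p-hom}:\mathrm {BV}(\Omega )\rightarrow \mathbb {R}\) with

\[ \mathrm {TVL}_{\alpha ,\beta }^{p-hom}(u)=\min _{w\in \mathrm {L}^{p}(\Omega )}\ \alpha \Vert Du-w\Vert _{\mathcal {M}}+\frac{\beta }{p}\Vert w\Vert _{\mathrm {L}^{p}(\Omega )}^{p}. \]

Then

\[ \mathrm {TVL}_{\alpha ,\beta }^{p-hom}(u)=\int _{\Omega }\varphi _{p}(\nabla u)\,\mathrm {d}x+\alpha |D^{s}u|(\Omega ), \]

where \(\varphi _{p}:\mathbb {R}^{d}\rightarrow \mathbb {R}\) with

\[ \varphi _{p}(x)=\begin{cases}\dfrac{\beta }{p}|x|^{p}, & |x|<\left( \dfrac{\alpha }{\beta }\right) ^{\frac{1}{p-1}},\\[2mm] \alpha |x|-\dfrac{1}{q}\dfrac{\alpha ^{q}}{\beta ^{q-1}}, & |x|\ge \left( \dfrac{\alpha }{\beta }\right) ^{\frac{1}{p-1}},\end{cases} \]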

and \(D^{s}u\) denotes the singular part of the measure Du, cf. Appendix.

Proof

We have

Thus we can focus on the minimisation problem

Bearing in mind that (as can easily be checked) for \(c\in \mathbb {R}^{d}\) and \(\lambda >0\),

and

it is straightforward to verify, setting \(\lambda =\beta /\alpha \), that the function

belongs to \(\mathrm {L}^{\infty }(\Omega )\subset \mathrm {L}^{p}(\Omega )\) and solves (3.4) with optimal value equal to \(\frac{1}{\alpha }\int _{\Omega }\varphi _{p}(\nabla u)\,\mathrm{d}x\).\(\square \)

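Note that in the special case \(p=2\) we recover the classical Huber total variation regularisation, since

\[ \varphi _{2}(x)=\begin{cases}\dfrac{\beta }{2}|x|^{2}, & |x|<\dfrac{\alpha }{\beta },\\[2mm] \alpha |x|-\dfrac{\alpha ^{2}}{2\beta }, & |x|\ge \dfrac{\alpha }{\beta },\end{cases} \]

i.e. in that case we have quadratic penalisation for small gradients (p-th power penalisation for general \(1<p<\infty \)) and linear penalisation for large gradients.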

For the reader’s convenience, we plot some of the functions \(\varphi _{p}\) in Fig. 1 to illustrate how their shape changes as the parameters vary. Note, for instance, in Fig. 1a how \(\varphi _{2}\) approaches the absolute value function \(\alpha |\cdot |\) as \(\beta \) becomes large, i.e. the regulariser approaches total variation. This is consistent with Proposition 3.1, where the optimal variable w converges to 0 as \(\beta \rightarrow \infty \). On the other hand, when p becomes large (Fig. 1b), small gradients are essentially not penalised at all. This allows the gradient to be almost constant, equal to its maximum value, which leads to piecewise affine structures. We refer to some of the numerical examples in Sect. 6.2 and to the second part of this paper [8], where the case \(p=\infty \) is examined in detail.

Fig. 1 Illustration of the forms of the Huber-type functions \(\varphi _{p}\) of Proposition 3.3. Their linear and p-th power parts are plotted in blue and red, respectively. a Huber functions \(\varphi _{2}\) with fixed \(p=2\), \(\alpha =1\) and varying \(\beta \). b Generalised Huber functions \(\varphi _{p}\) with fixed \(\alpha =1\), \(\beta =2\) and varying p (Color figure online)

4 The One-Dimensional Case

In order to gain more insight into the structure of solutions of the problem \((\mathcal {P})\), in this section we study its one-dimensional version. As above, we focus on the finite p case, i.e. \(1<p<\infty \). The case \(p=\infty \) leads to several additional complications and will be the subject of a forthcoming paper [8]. In this section \(\Omega \subset \mathbb {R}\) is an open and bounded interval, i.e. \(\Omega =(a,b)\). Our analysis closely follows that of [6] and [33], where the one-dimensional \(\mathrm {L}^{1}\)–\(\mathrm {TGV}\) and \(\mathrm {L}^{2}\)–\(\mathrm {TGV}\) problems are studied, respectively.

4.1 Optimality Conditions

In this section, we derive the optimality conditions for the one-dimensional problem \((\mathcal {P})\). We start by defining the predual problem \((\mathcal {P}^{*})\) and proving existence and uniqueness of its solutions. We then employ the Fenchel–Rockafellar duality theory again to connect the solutions of the predual and primal problems.

We define the predual problem \((\mathcal {P}^{*})\) as

where as always q is the conjugate exponent of p. Existence and uniqueness for the solutions of \((\mathcal {P}^{*})\) can be verified by standard arguments:

Proposition 4.1

For \(f \in \mathrm {L}^2(\Omega )\), the predual problem \((\mathcal {P}^{*})\) admits a unique solution in \(\mathrm {H}_{0}^{1}(\Omega ).\)

The next proposition justifies the term predual for the problem \((\mathcal {P}^{*})\).

Proposition 4.2

The dual problem of \((\mathcal {P}^{*})\) is equivalent to the problem \((\mathcal {P})\) in the sense that (w, u) is a solution of the dual of \((\mathcal {P}^{*})\) if and only if \((w,u)\in \mathrm {L}^{p}(\Omega )\times \mathrm {BV}(\Omega )\) and solves \((\mathcal {P})\).

Proof

Observe that we can also write down the predual problem \((\mathcal {P}^{*})\) using the following equivalent formulation:

where \(X=\mathrm {H_{0}^{1}(\Omega )}\times \mathrm {H_{0}^{1}(\Omega )}\), \(Y=\mathrm {H_{0}^{1}(\Omega )}\times \mathrm {L^{2}(\Omega )}\) and

We denote the infimum in \((\mathcal {P}^{*})\) by \(\inf \mathcal {P}^{*}\). Then, it is immediate that

The dual problem of (4.1), see [15], is defined as

where \(K^{\star }\) here denotes the adjoint of K. Let \((\sigma ,\tau )\) be elements of \(X^{*}=\mathrm {H}_{0}^{1}(\Omega )^{*}\times \mathrm {H}_{0}^{1}(\Omega )^{*}\). The convex conjugate of \(F_{1}\) can be written as

By standard density arguments and using the Riesz representation theorem we have

Moreover, we have

Since \(F_{1}^{*}(-K^{\star }(w,u))<\infty \), \(F_{2}^{*}(w,u)<\infty \), we obtain that

and

\(\square \)

We next verify that there is no duality gap between the two minimisation problems \((\mathcal {P})\) and \((\mathcal {P}^{*})\). The proof of the following proposition follows that of the corresponding proposition in [6], slightly modified for our setting.

Proposition 4.3

Let \(F_{1}, F_{2}, K, X, Y\) be defined as in (4.2). Then

and hence it is a closed vector space. Thus [2]

Proof

Let \((v,\psi )\in Y\) and define \(\psi _{0}(x)=c_{1}\), where

Now let \(\xi (x)=\int _{a}^{x}(\psi _{0}-\psi )(y) \,dy\). Since by construction, \(\xi '=\psi _{0}-\psi \in \mathrm {L^{2}(\Omega )}\) with \(\xi (a)=\xi (b)=0 \), we have that \(\xi \in \mathrm {H}_{0}^{1}(\Omega )\). Furthermore, let \(\phi = - v+\xi \in \mathrm {H}_{0}^{1}(\Omega )\) and \((\phi ,\xi )\in X\) with

Choosing \(\lambda >0\) appropriately, we can write

with \(\mathrm{dom}F_{2} = \{0\} \times \mathrm {L^{2}(\Omega )}\). Since \((v,\psi )\in Y\) was chosen arbitrarily, (4.8) holds.\(\square \)
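The construction of \(\xi \) in the proof of Proposition 4.3 can be mimicked on a grid. The sketch makes the following choices, which are ours and not the paper's:

- the constant \(c_{1}\) is taken to be the mean value of \(\psi \) over (a, b), since \(\xi (b)=(b-a)c_{1}-\int _a^b\psi \,\mathrm {d}y\) vanishes exactly for this choice;
- the grid, the trapezoidal rule, the example \(\psi \) and the helper names are all hypothetical.

```python
import numpy as np

def cumulative_trapezoid(f, x):
    """Cumulative integral int_{x_0}^{x_k} f for every k (trapezoidal rule, starts at 0)."""
    increments = 0.5 * (f[1:] + f[:-1]) * np.diff(x)
    return np.concatenate(([0.0], np.cumsum(increments)))

def xi_from_psi(psi, x):
    """xi(x) = int_a^x (psi_0 - psi)(y) dy with psi_0 = c_1 = mean value of psi (assumed)."""
    Psi = cumulative_trapezoid(psi, x)
    c1 = Psi[-1] / (x[-1] - x[0])     # mean value, which forces xi(b) = 0
    return c1 * (x - x[0]) - Psi, c1

a, b = -1.0, 2.0
x = np.linspace(a, b, 3001)
psi = np.exp(x) * np.cos(3 * x)       # an arbitrary smooth (hence L^2) example
xi, c1 = xi_from_psi(psi, x)
print(f"c_1 = {c1:.6f}, xi(a) = {xi[0]:.1e}, xi(b) = {xi[-1]:.1e}")
```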

Since there is no duality gap, we can find a relationship between the solutions of \((\mathcal {P}^{*})\) and \((\mathcal {P})\) via the following optimality conditions.

Theorem 4.4

(Optimality conditions) Let \(1<p\le \infty \) and \(f\in \mathrm {L}^{2}(\Omega )\). A pair \((w,u)\in \mathrm {L}^{p}(\Omega ) \times \mathrm {BV}(\Omega )\) is a solution of \((\mathcal {P})\) if and only if there exists a function \(\phi \in \mathrm {H_{0}^{1}(\Omega )}\) such that

and

Proof

Since there is no duality gap, the optimality conditions read [15, Prop. 4.1(III)]:

for every \((\phi ,\xi )\) and (w, u) that solve \((\mathcal {P}^{*})\) and \((\mathcal {P})\), respectively. Hence, for every \((\sigma ,\tau )\in X^{*}\), we have the following:

Since \(\xi \in \mathrm {H_{0}^{1}(\Omega )}\subset \mathrm {C}_{0}(\Omega )\) in one dimension, we can make use of the fact that

see (8.1), and write the expressions in (4.15) as

and

Indeed, the \(\mathrm {L}^{p}\) norm is a one-homogeneous functional and thus its subdifferential reads

Note that for \(w=0\) the above expression reduces to \(\int _{\Omega }z\sigma \,\mathrm {d}x\le \Vert \sigma \Vert _{\mathrm {L}^{p}(\Omega )}\) for all \(\sigma \in \mathrm {L}^{p}(\Omega )\). This is valid for any \(z\in \mathrm {L}^{q}(\Omega )\) with \(\Vert z\Vert _{\mathrm {L}^{q}(\Omega )}\le 1\), i.e. the subdifferential at 0 is the unit ball of \(\mathrm {L}^{q}(\Omega )\). If \(w\ne 0\) (and \(1<p<\infty \)), then the subdifferential is simply the Gâteaux derivative of the \(\mathrm {L}^{p}\) norm, i.e. \(\partial \Vert w\Vert _{\mathrm {L}^{p}(\Omega )}=\big \{|w|^{p-2}w/\Vert w\Vert _{\mathrm {L}^{p}(\Omega )}^{p-1}\big \}\). Finally, from (4.14) we have for every \((\hat{w},\hat{u})\in Y^{*}\)

Combining all the above results, we obtain the optimality conditions (4.11) and (4.12).
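The subdifferential formula of the \(\mathrm {L}^{p}\) norm at \(w\ne 0\) used in the proof of Theorem 4.4 can be checked on a grid. The sketch makes three assumptions of its own: uniform spacing h, the discrete pairing \(\langle z,\sigma \rangle =h\sum _i z_i\sigma _i\), and hypothetical helper names. It compares \(z=|w|^{p-2}w/\Vert w\Vert _{p}^{p-1}\) with a central finite difference of \(\epsilon \mapsto \Vert w+\epsilon \sigma \Vert _{p}\). It also verifies the two properties that characterise the subdifferential of a one-homogeneous functional: \(\Vert z\Vert _{q}=1\) and \(\langle z,w\rangle =\Vert w\Vert _{p}\).

```python
import numpy as np

def weighted_lp(v, p, h):
    """Discrete L^p norm on a uniform grid: (h * sum |v_i|^p)^(1/p)."""
    return (h * np.sum(np.abs(v) ** p)) ** (1.0 / p)

def lp_norm_gradient(w, p, h):
    """Gateaux derivative |w|^(p-2) w / ||w||_p^(p-1) of the L^p norm at w != 0.

    Written as sign(w)*|w|^(p-1) so that zero entries do not give 0 * inf for p < 2.
    """
    return np.sign(w) * np.abs(w) ** (p - 1.0) / weighted_lp(w, p, h) ** (p - 1.0)

def check_subgradient(w, sigma, p, h, eps=1e-6):
    """Return (finite-difference derivative, <z, sigma>, ||z||_q, <z, w> - ||w||_p)."""
    q = p / (p - 1.0)
    z = lp_norm_gradient(w, p, h)
    fd = (weighted_lp(w + eps * sigma, p, h) - weighted_lp(w - eps * sigma, p, h)) / (2 * eps)
    return fd, h * np.sum(z * sigma), weighted_lp(z, q, h), h * np.sum(z * w) - weighted_lp(w, p, h)

rng = np.random.default_rng(2)
h = 0.01
w = rng.normal(size=100)
sigma = rng.normal(size=100)
results = {p: check_subgradient(w, sigma, p, h) for p in (1.5, 3.0)}
for p, (fd, pairing, zq, gap) in results.items():
    print(f"p={p}: derivative {fd:.8f} vs <z,sigma> {pairing:.8f}, ||z||_q = {zq:.12f}, gap = {gap:.1e}")
```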

Remark 4.5

We observe that if \(w=0\) then the conditions (4.11) coincide with the optimality conditions for the \(\mathrm {L}^{2}\)–\(\mathrm {TV}\) minimisation problem (ROF) with parameter \(\alpha \), i.e.

see also [37]. On the other hand, when \(w\ne 0\), the additional condition (4.12) depends on the value of p and, as we will see later, it allows a certain degree of smoothness in the final solution u.

4.2 Structure of the Solutions

The optimality conditions (4.11) and (4.12) can help us explore the structure of the solutions for the problem \((\mathcal {P})\) and how this structure is determined by the regularising parameters \(\alpha , \beta \) and the value of p.

We first discuss the cases where the solution u of \((\mathcal {P})\) is a solution of a corresponding ROF minimisation problem, i.e. \(w=0\). Note that the following proposition holds for \(p=\infty \) as well.

Proposition 4.6

(ROF solutions) Let q be the conjugate exponent of \(p\in (1,\infty ]\) as defined in (8.4). If

then (0, u) is a solution pair for \((\mathcal {P})\) where u solves the ROF minimisation problem (4.18).

Proof

The proof follows immediately from Proposition 2.4.

Proposition 4.6 is valid in any dimension \(d\ge 1\). It provides a rough threshold for obtaining ROF type solutions in terms of the regularising parameters \(\alpha ,\beta \) and the image domain \(\Omega \). However, the condition is not sharp in general since, as we will see in the following sections, a sharper estimate can be obtained for specific data f.

The following proposition in the spirit of [6, 33] gives more insight into the structure of solutions of \((\mathcal {P})\).

Proposition 4.7

Let \(f\in \mathrm {BV(\Omega )}\) and suppose that \((w,u)\in \mathrm {L}^{p}(\Omega )\times \mathrm {BV}(\Omega )\) is a solution pair for \((\mathcal {P})\) with \(p\in (1,\infty ]\). If \(u>f\) (or \(u<f\)) on an open interval \(I\subset \Omega \), then \((Du-w)\lfloor I = 0\), i.e. \(u'=w\) on I and \(|D^{s}u|(I)=0\).

The above proposition is formulated rigorously via good representatives of \(\mathrm {BV}\) functions, see [1], but for the sake of simplicity we do not go into the details here. Instead, we refer the reader to [6, 33], where analogous propositions are shown for \(\mathrm {TGV}\) regularised solutions, with proofs similar to that of Proposition 4.7.

We now examine the case where the solution is constant in \(\Omega \), which in fact coincides with the mean value \(\tilde{f}\) of the data f:

Proposition 4.8

(Mean value solution) If the following conditions hold

then the solution of \((\mathcal {P})\) is constant and equal to \(\tilde{f}\).

Proof

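Clearly, if u is a constant solution of \((\mathcal {P})\), then \(Du=0\) and from inequality (2.2) we get \(\mathrm {TVL}_{\alpha ,\beta }^{p}(u)=0\). Hence only the fidelity term remains, and since the constant c minimising \(\Vert f-c\Vert _{\mathrm {L}^{2}(\Omega )}\) is the mean value of f, we have \(u=\tilde{f}\).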

In general, in order to have \(u=\tilde{f}\), from the optimality conditions (4.11) and (4.12), it suffices to find a function \(\phi \in \mathrm {H}^{1}_{0}(\Omega )\) such that

Letting \(\phi (x)=\int _{a}^{x} (f(s)-\tilde{f}) \,ds\), we obviously have \(\phi \in \mathrm {H}^{1}_{0}(\Omega )\), since \(\phi (a)=0\) and \(\phi (b)=0\), the latter because \(f-\tilde{f}\) has zero mean, and

Therefore,  . Also, since \(\mathrm {L}^{\infty }(\Omega )\subset \mathrm {L}^{q}(\Omega )\) on the bounded domain \(\Omega \), we obtain


Hence, it suffices to choose \(\alpha \) and \(\beta \) as in (4.20).

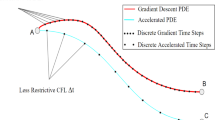

In Fig. 2, we summarise our results so far. There, we have partitioned the set \(\{\alpha >0,\beta >0\}\) into different areas that correspond to different types of solutions of the problem \((\mathcal {P})\). The red area, arising from thresholds (4.20), corresponds to the choices of \(\alpha \) and \(\beta \) that produce constant solutions, while the blue area corresponds to ROF type solutions, according to threshold (2.3). Therefore, we can determine the area where non-trivial solutions, i.e. \(w\ne 0\), are obtained, see the purple region. Note that since the conditions (2.3) and (4.20) are not sharp, the blue/red areas are potentially larger, and the purple area potentially smaller, than shown in Fig. 2.

Characterisation of solutions of \((\mathcal {P})\) for any data f: The blue/red areas correspond to the ROF type solutions (\(w=0\)) and the purple area corresponds to the \(\mathrm {TVL}^{p}\) solutions (\(w\ne 0\)) for \(1<p<\infty \). We note that the blue/red areas are potentially larger, and the purple area potentially smaller, as the conditions we have derived are not sharp (Color figure online)

Propositions 4.6 and 4.8 tell us how the solutions u behave when \(\beta /\alpha \) or one of the parameters \(\alpha \) and \(\beta \) is large. The other limiting case is also of interest, i.e. when the parameters are small. The analogous questions have been examined in [35] for the \(\mathrm {TGV}\) case in arbitrary dimension. There it is shown that whenever \(\beta \rightarrow 0\) while keeping \(\alpha \) fixed or \(\alpha \rightarrow 0\) while keeping \(\beta \) fixed, the corresponding \(\mathrm {TGV}\) solutions converge to the data f strongly in \(\mathrm {L}^{2}\). The same result holds for the \(\mathrm {TVL}^{p}\) regularisation. The proof from [35] can be straightforwardly adapted to our case.

The following proposition reveals more information about the structure of solutions in the case \(w \ne 0\).

Proposition 4.9

(\(\mathrm {TVL}^p\) solutions) Let \(f\in \mathrm {BV(\Omega )}\) and suppose that \((w,u)\in \mathrm {L}^{p}(\Omega )\times \mathrm {BV}(\Omega )\) is a solution pair for \((\mathcal {P})\) with \(p\in (1,\infty )\) and \(w\ne 0\). If \(u>f\) (or \(u<f\)) on an open interval \(I\subset \Omega \), then on I the solution u of \((\mathcal {P})\) is obtained by

Proof

Since \(1<p<\infty \) and \(w\ne 0\), Proposition 4.7 and the second optimality condition of (4.12) give

Hence, using (4.11) we obtain (4.21) where  .\(\square \)


Let us make a few remarks regarding equation (4.21), which is in fact the p-Laplace equation. One cannot write down a priori the boundary conditions associated with this equation on an interval I where \(u>f\) (or \(u<f\)), as they depend on the data and on the type of solution we are looking for; see for instance (4.31) for the kind of boundary conditions that might arise when seeking a particular exact solution. A general statement about the solvability of the equation cannot be made either. If the equation, coupled with the boundary conditions that arise when looking for a specific solution u, has a solution, then u is a candidate minimiser. On the other hand, if this boundary value problem has no solution, then the function u that imposed the corresponding boundary conditions cannot be a minimiser. For more details on the p-Laplace equation and its solvability, we refer the reader to [28] and the references therein.

4.3 Exact Solutions of \((\mathcal {P})\) for a Step Function

In what follows we compute explicit solutions of the \(\mathrm {TVL}^{p}\) denoising model \((\mathcal {P})\) for the case \(p=2\) for a simple data function. We define the step function in \(\Omega =(-L,L)\), \(L>0\) as

We first investigate conditions under which we obtain ROF type solutions, that is \(w=0\).

4.3.1 ROF Type Solutions

We note that in this case we can derive conditions for every \(1<p\le \infty \). We are initially interested in solutions that respect the discontinuity at \(x=0\) and are piecewise constant. From the optimality conditions (4.11)–(4.12), it suffices to find a function \(\phi \in \mathrm {H}^{1}_{0}(\Omega )\) which, apart from \(\phi (-L)=\phi (L)=0,\) also satisfies

and is piecewise affine. It is easy to see that by setting \(\phi (x)=\frac{\alpha }{L}(L-|x|)\), the conditions (4.23) are satisfied and the solution u is piecewise constant. The first condition of (4.12) implies that  and provides a necessary and sufficient condition that needs to be fulfilled in order for u to be piecewise constant, that is to say


A special case of the ROF type solution is when u is constant, i.e. when \(u=\tilde{f}\), the mean value of f. We define \(\phi (x)=\frac{h}{2}(L-|x|)\) and in that case we have that  and

and  . This implies that


Using (4.24)–(4.25), we can now draw the exact regions in the quadrant \(\{\alpha >0,\beta >0\}\) that correspond to these two types of solutions, see the left graph in Fig. 4 for the special case \(p=2\). Notice that in these regions \(w=0\).
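The ROF (\(w=0\)) profiles of Sect. 4.3.1 can be checked with a few lines of NumPy. The sketch assumes that the step (4.22) is \(f=0\) on \((-L,0]\) and \(f=h\) on \((0,L)\), which is consistent with the symmetry \(u(x)=h-u(-x)\) and the mean value \(\frac{h}{2}\) used in Sect. 4.3.2. It returns \(\frac{\alpha }{L}\) and \(h-\frac{\alpha }{L}\) on the two halves when \(\alpha <\frac{hL}{2}\) (dual certificate \(\phi (x)=\frac{\alpha }{L}(L-|x|)\)) and the mean value \(\frac{h}{2}\) otherwise, and compares these values with a brute-force minimisation of \(\frac{1}{2}\Vert u-f\Vert ^{2}+\alpha |Du|(\Omega )\) over piecewise constant functions with a jump at \(x=0\). It does not encode the \(\beta \)-thresholds of (4.24)–(4.25), and the function names are hypothetical.

```python
import numpy as np

def rof_step(alpha, h, L):
    """ROF (w = 0) solution for the step f = h * 1_{x > 0} on (-L, L) (assumed form of (4.22)).

    Returns (u_left, u_right, phi_max); phi_max = max |phi| must not exceed alpha.
    """
    if alpha < h * L / 2:
        # jump kept but reduced; certificate phi(x) = (alpha / L) * (L - |x|)
        return alpha / L, h - alpha / L, alpha
    # mean value solution; certificate phi(x) = (h / 2) * (L - |x|)
    return h / 2, h / 2, h * L / 2

def rof_energy(c1, c2, alpha, h, L):
    # 1/2 ||u - f||^2 + alpha |Du|(Omega) for u = c1 on (-L, 0], u = c2 on (0, L)
    return 0.5 * L * c1 ** 2 + 0.5 * L * (h - c2) ** 2 + alpha * np.abs(c2 - c1)

# brute-force check on a grid, with the values h = 100, L = 1 and alpha = 15, 60 of Fig. 6
c = np.linspace(0.0, 100.0, 1001)
C1, C2 = np.meshgrid(c, c, indexing="ij")
for alpha in (15.0, 60.0):
    E = rof_energy(C1, C2, alpha, 100.0, 1.0)
    i, j = np.unravel_index(np.argmin(E), E.shape)
    print(alpha, c[i], c[j], rof_step(alpha, 100.0, 1.0))
```

Since the energy is strictly convex in \((c_1,c_2)\), the grid minimiser coincides with the closed-form values whenever these lie on the grid.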

4.3.2 \(\mathrm {TVL}^2\) Type Solutions

For simplicity, we examine here only the case \(p=2\) with \(w\ne 0\) in \(\Omega \); we refer the reader to Sect. 6.2, where solutions for \(p\ne 2\) are computed numerically. Using Proposition 4.9, we observe that the solution is given by the following second-order differential equation:

Even though the solution of (4.26) is of exponential form, the constraint on C depends on the solution w, which makes recovering u analytically difficult. To overcome this obstacle, we consider the one-dimensional version of the 2-homogeneous analogue of \((\mathcal {P})\) that was introduced in Sect. 3:

One can derive the optimality conditions for (4.27) similarly to Sect. 4.1. A pair (w, u) is a solution of (4.27) if and only if there exists a function \(\phi \in \mathrm {H}^{1}_{0}(\Omega )\) such that

In view of Proposition 3.2, in order to recover analytically the solutions of \((\mathcal {P})\) for \(p=2\) and determine exactly the purple region in Fig. 2, it suffices to solve the equivalent model (4.27), in which \(w\ne 0\) always holds. We may restrict our computations to \((-L,0]\subset \Omega \), since by symmetry the solution on (0, L) is given by \(u(x)=h-u(-x)\). The optimality condition (4.28) results in

Then we get \(u(x)=c_{1}e^{kx}+c_{2}e^{-kx}\) with \(\phi (x)=\frac{c_{1}}{k}e^{kx}-\frac{c_{2}}{k}e^{-kx}+c_{3}\) for all \(x\in (-L,0]\). First, we examine continuous solutions, which by symmetry must satisfy \(u(0)=\frac{h}{2}\). Since \(\phi \in \mathrm {H}^{1}_{0}(-L,L)\), we have \(\phi (-L)=0\), and also \(u'(-L)=0\). Finally, we require that \(\phi (0)<\alpha \). After some computations, we conclude that

where \(c_{1}=c_{2}e^{2kL}\), \(c_{2}=\frac{h}{2(e^{2kL}+1)}\) and \(k=\frac{1}{\sqrt{\beta }}\).
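The continuous solution (4.30) and its dual variable can be evaluated directly from these coefficients. A short computation with them gives \(c_{3}=0\) (from \(\phi (-L)=0\)), \(\phi (0)=\frac{h}{2}\sqrt{\beta }\tanh (\frac{L}{\sqrt{\beta }})\), and the equivalent form \(u(x)=\frac{h\cosh (k(x+L))}{2\cosh (kL)}\) on \((-L,0]\); hence the requirement \(\phi (0)<\alpha \) is exactly the condition \(g(\beta )<\frac{2\alpha }{h}\) that appears below. The sketch, with hypothetical function names, uses \(h=100\), \(L=1\), \(\beta =450\) only as sample values.

```python
import numpy as np

def _coeffs(h, L, beta):
    # coefficients of (4.30): c2 = h / (2 (e^{2kL} + 1)), c1 = c2 e^{2kL}, k = 1 / sqrt(beta)
    k = 1.0 / np.sqrt(beta)
    c2 = h / (2.0 * (np.exp(2.0 * k * L) + 1.0))
    return c2 * np.exp(2.0 * k * L), c2, k

def u_continuous(x, h, L, beta):
    """Exponential on (-L, 0], extended to (0, L) by the symmetry u(x) = h - u(-x)."""
    c1, c2, k = _coeffs(h, L, beta)
    left = lambda s: c1 * np.exp(k * s) + c2 * np.exp(-k * s)
    x = np.asarray(x, dtype=float)
    return np.where(x <= 0, left(x), h - left(-x))

def phi_left(x, h, L, beta):
    """Dual variable on (-L, 0]; the constant c3 vanishes because phi(-L) = 0."""
    c1, c2, k = _coeffs(h, L, beta)
    return c1 / k * np.exp(k * x) - c2 / k * np.exp(-k * x)

h, L, beta = 100.0, 1.0, 450.0
print(float(u_continuous(0.0, h, L, beta)))   # approximately h / 2
print(float(phi_left(0.0, h, L, beta)))       # (h / 2) * sqrt(beta) * tanh(L / sqrt(beta))
```

Letting \(\beta \) grow in this sketch drives u towards the constant \(\frac{h}{2}\), in line with the limiting case discussed below.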

On the other hand, in order to get solutions that preserve the discontinuity at \(x=0\), we require the following:

Then we get

where \(c_{1}=c_{2}e^{2kL}\), \(c_{2}=\frac{\alpha k}{e^{2kL}-1}\) and \(k=\frac{1}{\sqrt{\beta }}\). Notice that the conditions on \(\alpha \) and \(\beta \) in (4.30) and (4.32) are complementary, and thus only these two types of solutions can occur; see the quadrant \(\{\alpha >0, \beta >0\}\) as presented in Fig. 3. Letting \(g(\beta )=\sqrt{\beta }\tanh {(\frac{L}{\sqrt{\beta }})}\), if \(g(\beta )<\frac{2\alpha }{h}\), then the solution is of the form (4.30), see the blue region in Fig. 3. On the other hand, in the complementary green region we obtain the solution (4.32). In the extreme case \(\beta \rightarrow \infty \), i.e. \(k\rightarrow 0\), we obtain \(g(\beta )=\frac{\tanh (kL)}{k}\rightarrow L\), which means that the curve \(g(\beta )=\frac{2\alpha }{h}\) separating the two regions has an asymptote at \(\alpha =\frac{hL}{2}\). Although the inverse of the hyperbolic tangent is known in closed form, we cannot compute the inverse \(g^{-1}\) analytically. However, we can obtain an approximation using a Taylor expansion, which leads to

where \(\alpha >0\) and \(\alpha \ne \frac{hL}{2}\).
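Instead of a Taylor approximation of \(g^{-1}\), the boundary between the blue and green regions of Fig. 3 can also be located numerically. Since \(g(\beta )=\frac{\tanh (kL)}{k}\) increases from 0 to L as \(\beta \) goes from 0 to \(\infty \), bisection on \(g(\beta )=\frac{2\alpha }{h}\) yields a unique threshold \(\beta ^{*}\) whenever \(\alpha <\frac{hL}{2}\); for \(\alpha \ge \frac{hL}{2}\) only the continuous solution (4.30) occurs. This is an alternative numerical route, not the approximation used in the text, and the function names are hypothetical.

```python
import numpy as np

def g(beta, L):
    """g(beta) = sqrt(beta) * tanh(L / sqrt(beta)); increases from 0 (beta -> 0) to L (beta -> inf)."""
    s = np.sqrt(beta)
    return s * np.tanh(L / s)

def solution_type(alpha, beta, h, L):
    # (4.30) continuous if g(beta) < 2 alpha / h, otherwise (4.32) discontinuous
    return "continuous" if g(beta, L) < 2.0 * alpha / h else "discontinuous"

def beta_threshold(alpha, h, L, iters=200):
    """Bisection for beta* with g(beta*) = 2 alpha / h; np.inf if alpha >= hL/2."""
    target = 2.0 * alpha / h
    if target >= L:
        return np.inf              # g < L for every beta: always the continuous solution
    lo, hi = 0.0, 1.0
    while g(hi, L) < target:       # terminates because g -> L > target
        hi *= 2.0
    for _ in range(iters):         # g is increasing, so plain bisection suffices
        mid = 0.5 * (lo + hi)
        if g(mid, L) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

b = beta_threshold(20, 100, 1)     # alpha = 20, h = 100, L = 1
print(b, solution_type(20, 450, 100, 1), solution_type(60, 450, 100, 1))
```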

Finally, we describe the solution in the limiting case \(\beta \rightarrow \infty \). Letting \(\beta \rightarrow \infty \) in (4.30), we have that \(c_{1},c_{2}\rightarrow \frac{h}{4}\) and \(u(x)\rightarrow \frac{h}{2}\) for every \(x\in \Omega \), which is in fact the mean value solution of \((\mathcal {P})\). For the discontinuous solutions, we have that \(c_{1},c_{2}\rightarrow \frac{\alpha }{2L}\) and

i.e. the limiting solution is (4.24). We also get that

with  and \(c_{2}\) given either by (4.30) or (4.32). Then, in both cases, \(w\rightarrow 0\) as \(k\rightarrow 0\). Observe that the product of  is bounded as \(\beta _{2-hom}\rightarrow \infty \) for both types of solutions and in fact corresponds to the bounds found in (4.24) and (4.25). Indeed, since

if \(\alpha >\frac{hL}{2}\) then

while if \(\alpha \le \frac{hL}{2}\)

The last result is yet another verification of Proposition 3.2, and it shows that there is a one-to-one correspondence between  and  . The solutions that belong to the purple region of Fig. 4 are the same as the solutions shown in Fig. 3.

In the next proposition, we summarise the types of solutions of \((\mathcal {P})\) for the step function:

Characterisation of solutions of \((\mathcal {P})\) for \(p=2\) for data f being a step function. The solutions in the purple region of the left graph are exactly the solutions obtained for the 2-homogeneous problem (4.27), shown in the right graph (Color figure online)

Proposition 4.10

There are four different types of solutions for the problem \((\mathcal {P})\) with \(p=2\) taking the step function (4.22) as data:

(1) A piecewise constant solution given in (4.24) (blue region in Fig. 4).

(2) A constant solution, equal to the mean value of the data, given in (4.25) (brown region in Fig. 4).

(3) A continuous exponential solution given in (4.30) (light blue region in Fig. 4).

(4) A discontinuous piecewise exponential solution given in (4.32) (green region in Fig. 4).

Furthermore, there is a one-to-one correspondence between the purple and the green/light blue regions in Fig. 4.

5 An Image Decomposition Approach

In this section, we present another formulation of the problem \((\mathcal {P})\), where we decompose an image into a \(\mathrm {BV}\) part (piecewise constant) and a part that belongs to \(W^{1,p}(\Omega )\) (smooth). Let \(1<p\le \infty \) and \(\Omega \subset \mathbb {R}^{d}\) and consider the following minimisation problem:

In this way, we can decompose our image into two components of different structure. The second term captures the piecewise constant structures in the image, whereas the third term captures smooth structures whose character depends on the value of p. In the one-dimensional setting, we can prove that the problems \((\mathcal {P})\) and (5.1) are equivalent.

Proposition 5.1

Let \(\Omega =(a,b)\subset \mathbb {R}\) and \(1<p\le \infty \). Then a pair \((v^{*},u^{*})\in \mathrm {W}^{1,p}(\Omega )\times \mathrm {BV}(\Omega ) \) is a solution of (5.1) if and only if \((\nabla v^{*}, u^{*}+v^{*})\in \mathrm {L}^p(\Omega )\times \mathrm {BV}(\Omega )\) is a solution of \((\mathcal {P})\).

Proof

Let \(\overline{u}=u+v\). Then we have the following

However, the constraints \(w=\nabla v\), \(v\in \mathrm {W}^{1,p}(\Omega )\) can simply be replaced by \(w\in \mathrm {L}^{p}(\Omega )\), since

Indeed, let \(w\in \mathrm {L}^{p}(\Omega )\subset \mathrm {L}^{1}(\Omega )\) for \(p\in (1,\infty )\) and define \(v(x)=\int _{a}^{x}w(s)\,ds\) for \(x\in \Omega \subset \mathbb {R}\). Clearly, \(v'=w\) a.e. and by Hölder’s inequality we have for every \(x\in (a,b)\)

Thus \(v\in \mathrm {W}^{1,p}(\Omega )\) for \(p\in (1,\infty )\). If \(p=\infty \), suppose again \(w\in \mathrm {L}^{\infty }(\Omega )\) and let \(C>0\) be a constant such that \(|w(x)|\le C\) a.e. on \(\Omega \). In that case we have \(|v(x)|\le \int _{a}^{x}|w(s)|\,ds\le C|\Omega |<\infty \), i.e. \(v\in \mathrm {L}^{\infty }(\Omega )\) and hence \(v\in \mathrm {W}^{1,\infty }(\Omega )\) since \(v'=w\). Therefore,

where \(\overline{u^{*}}=u^{*}+v^{*}\) and \(w^{*}=\nabla v^{*}\).
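The construction used in the proof, \(v(x)=\int _{a}^{x}w(s)\,ds\), together with a Hölder bound of the form \(|v(x)|\le |\Omega |^{1/q}\Vert w\Vert _{\mathrm {L}^{p}(\Omega )}\) (presumably the estimate used above), can be checked numerically. In this sketch the integrals are approximated with SciPy's trapezoidal rule, and \(w=\cos (3x)\) on \((-1,1)\) with \(p=3\) is an arbitrary illustrative choice; the function names are hypothetical.

```python
import numpy as np
from scipy.integrate import cumulative_trapezoid, trapezoid

def antiderivative(w, x):
    # v(x) = int_a^x w(s) ds via the trapezoidal rule, so that v(a) = 0 and v' = w
    return cumulative_trapezoid(w, x, initial=0.0)

def lp_norm(w, x, p):
    # L^p(a, b) norm approximated with the same trapezoidal weights
    return trapezoid(np.abs(w) ** p, x) ** (1.0 / p)

a, b, p = -1.0, 1.0, 3.0
q = p / (p - 1.0)                       # conjugate exponent
x = np.linspace(a, b, 2001)
w = np.cos(3 * x)
v = antiderivative(w, x)
bound = (b - a) ** (1.0 / q) * lp_norm(w, x, p)
print(np.max(np.abs(v)) <= bound)       # True
```

Because the trapezoidal weights are positive, the discrete version of Hölder's inequality holds exactly, so the check does not depend on the grid resolution.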

Even though for \(d=1\) every \(\mathrm {L}^{p}\) function is the derivative of a \(\mathrm {W}^{1,p}\) function, the analogous statement fails in higher dimensions, where not every \(\mathrm {L}^{p}\) vector field is a gradient. In fact, as we show in the following sections, this constraint is quite restrictive; for example, the staircasing effect cannot always be eliminated in the denoising process, see for instance Fig. 20.

The existence of minimisers of (5.1) can be shown using the same techniques as in Theorem 2.5. Moreover, due to the strict convexity of the fidelity term in (5.1), one can prove that for a solution \((u,v)\in \mathrm {BV}(\Omega )\times \mathrm {W}^{1,p}(\Omega )\) the sum \(u+v\in \mathrm {BV(\Omega )}\) is unique. This reflects the uniqueness of \(\overline{u}\) for the problem \((\mathcal {P})\). However, one cannot show in general that the pair (u, v) itself is unique. Nevertheless, something more can be said: if \((u_{1},v_{1}), (u_{2},v_{2})\) are two minimisers of (5.1), then from the convexity of L(u, v) we have, for \(0\le \lambda \le 1\),

Since \((u_{1},v_{1})\) and \((u_{2},v_{2})\) are both minimisers, the above inequality is in fact an equality. Using \(u_{1}+v_{1}=u_{2}+v_{2}\), we obtain

If we assume that

then the equality in (5.3) is contradicted. Hence, equality holds in the Minkowski inequality, i.e.

which is equivalent to the existence of \(\mu \ge 0\) such that \(\nabla v_{2}=\mu \nabla v_{1}\). In other words, we have proved the following proposition, which was also shown in [25] in a similar context:

Proposition 5.2

Let \((u_{1},v_{1}), (u_{2},v_{2})\) be two minimisers of (5.1). Then

6 Numerical Experiments

In this section we present our numerical simulations for the problem \((\mathcal {P})\). We begin with the one-dimensional case where we verify numerically the analytical solutions obtained in Sect. 4.3. Through examples we also investigate the type of structures that are promoted for different values of p. Finally, we proceed to the two-dimensional case where we focus on image denoising tasks and in particular on the elimination of the staircasing effect.

We start by defining the discretised version of problem \((\mathcal {P})\)

Here \(\mathrm {TVL}_{\alpha ,\beta }^{p}:\mathbb {R}^{n\times m}\rightarrow \mathbb {R}\) is defined as

where for \(x\in \mathbb {R}^{n\times m}\), we set  and for \(x=(x_{1},x_{2})\in (\mathbb {R}^{n\times m})^2\) we define


We denote by \(\nabla =(\nabla _{1},\nabla _{2})\) the discretised gradient with forward differences and zero Neumann boundary conditions defined as

The discrete divergence is defined such that its negative is the adjoint of the discrete gradient. That is, for every \(w=(w_{1},w_{2})\in (\mathbb {R}^{n\times m})^2\) and \(u\in \mathbb {R}^{n\times m}\), we have \(\left\langle -\mathrm{div}w,u\right\rangle _{}=\left\langle w,\nabla u\right\rangle _{}\) with

We solve the minimisation problem (6.1) in two ways. The first uses the CVX optimisation package with the MOSEK solver (interior point methods) [19]. This approach is efficient for small- to medium-scale optimisation problems and is thus a suitable choice for reproducing the one-dimensional solutions. On the other hand, we solve large-scale two-dimensional versions of (6.1) with the split Bregman method [18], which has been widely used for the fast solution of non-smooth minimisation problems.
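For reference, the discrete gradient and divergence introduced above can be sketched in NumPy. Their explicit formulas are not reproduced in this text, so the code assumes that \(\nabla _{1}\) acts along the first array axis, \(\nabla _{2}\) along the second, and \(n,m\ge 2\); the function names are hypothetical. The last lines check the adjoint relation \(\left\langle -\mathrm{div}w,u\right\rangle =\left\langle w,\nabla u\right\rangle \) on random data.

```python
import numpy as np

def grad(u):
    """Forward differences with zero Neumann boundary conditions (last row/column set to 0)."""
    g1 = np.zeros_like(u)
    g2 = np.zeros_like(u)
    g1[:-1, :] = u[1:, :] - u[:-1, :]
    g2[:, :-1] = u[:, 1:] - u[:, :-1]
    return g1, g2

def div(w1, w2):
    """Discrete divergence defined by <-div w, u> = <w, grad u> (requires n, m >= 2)."""
    d1 = np.zeros_like(w1)
    d2 = np.zeros_like(w2)
    d1[0, :] = w1[0, :]
    d1[1:-1, :] = w1[1:-1, :] - w1[:-2, :]
    d1[-1, :] = -w1[-2, :]
    d2[:, 0] = w2[:, 0]
    d2[:, 1:-1] = w2[:, 1:-1] - w2[:, :-2]
    d2[:, -1] = -w2[:, -2]
    return d1 + d2

rng = np.random.default_rng(0)
u = rng.standard_normal((5, 7))
w1, w2 = rng.standard_normal((2, 5, 7))
g1, g2 = grad(u)
lhs = np.sum(-div(w1, w2) * u)
rhs = np.sum(w1 * g1 + w2 * g2)
print(np.isclose(lhs, rhs))   # True
```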

6.1 Split Bregman for L\(\mathbf {^{2}}\)–TVL\(\mathbf {^{p}}\)

In this section we describe how we adapt the split Bregman algorithm to our discrete model (6.1). We first transform the unconstrained problem (6.1) into a constrained one by setting \(z=\nabla u -w\):

Replacing the constraint by a quadratic penalty term with parameter \(\lambda \), we obtain the following unconstrained formulation:

The Bregman iteration [32] corresponding to the minimisation (6.6) leads to the following two-step algorithm:

Since solving (6.7) at once is difficult, we employ a splitting technique and minimise alternately with respect to u, z and w. This yields the split Bregman iteration for our method:

Next, we discuss how we solve each of the subproblems (6.9)–(6.11). The first-order optimality condition of (6.9) results in the following linear system:

Here A is a sparse, symmetric, positive definite and strictly diagonally dominant matrix; thus (6.13) can easily be solved with an iterative solver such as conjugate gradients or Gauss–Seidel. However, due to the zero Neumann boundary conditions, the matrix A can be efficiently diagonalised by the two-dimensional discrete cosine transform,

where \(W_{nm}\) is the two-dimensional discrete cosine transform matrix and \(D=diag(\mu _{1},\cdots ,\mu _{nm})\) is the diagonal matrix of the eigenvalues of A. In that case, A has the particular structure of a block symmetric Toeplitz-plus-Hankel matrix with Toeplitz-plus-Hankel blocks, and the solution of (6.9) can be obtained by three operations involving the two-dimensional discrete cosine transform [20], as follows. First, we calculate the eigenvalues of A by multiplying (6.14) from the left by \(W_{nm}\) and from the right by \(e_{1}=(1,0,\cdots ,0)^{\intercal }\); using the fact that \(W_{nm}^{\intercal }W_{nm}=W_{nm}W_{nm}^{\intercal }=I_{nm}\), we get

Then, the solution of (6.9) is computed exactly by

The solution of the subproblem (6.10) is obtained in a closed form via the following shrinkage operator:

Finally, we discuss the solution of the subproblem (6.11). In the spirit of [40], we solve (6.11) by a fixed-point iteration scheme. Letting \(\kappa =\frac{\beta }{\lambda }\) and \(\eta =-b^{k}-z^{k+1}+\nabla u^{k+1}\), the first-order optimality condition of (6.11) becomes

For given \(w^{k}\), we obtain \(w^{k+1}\) by the following fixed-point iteration

under the convention that \(0/0=0\). We can also consider solving the p-homogeneous analogue \((\mathcal {P}_{p-hom})\), for which, for certain values of p, e.g. \(p=2\), the corresponding version of (6.11) can be solved exactly, since in that case \(w_{i}^{k+1}=\frac{\eta _{i}}{\kappa +1}\). However, we have observed empirically that there is no significant computational difference between these two methods.
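The three subproblems can be sketched in NumPy as follows. Because (6.13)–(6.19) are not reproduced here, the sketch rests on stated assumptions: the u-update is taken to be \(Au=\text {rhs}\) with \(A=I+\lambda \nabla ^{\intercal }\nabla \) (forward differences, zero Neumann conditions), the z-update a standard isotropic shrinkage with some threshold t (presumably \(\alpha /\lambda \)), and the w-update the proximal problem \(\min _{w}\kappa \Vert w\Vert _{p}+\frac{1}{2}\Vert w-\eta \Vert ^{2}\) for \(1<p<\infty \). The orthonormal DCT-II of `scipy.fft.dctn` plays the role of \(W_{nm}\), and the eigenvalues are obtained as above by dividing \(W_{nm}Ae_{1}\) by \(W_{nm}e_{1}\) entrywise. The w-update first checks whether \(w=0\) is optimal via the dual norm \(\Vert \eta \Vert _{q}\le \kappa \) and otherwise runs one possible fixed-point iteration on its optimality condition; it is not necessarily the scheme (6.19), and its convergence is not guaranteed in general. All function names are hypothetical.

```python
import numpy as np
from scipy.fft import dctn, idctn

# u-subproblem: A u = rhs with A = I + lam * grad^T grad (assumed form)
def neumann_laplacian(u):
    """grad^T grad u (= -div grad u) for forward differences with zero Neumann BCs."""
    out = np.zeros_like(u)
    d1 = u[1:, :] - u[:-1, :]
    d2 = u[:, 1:] - u[:, :-1]
    out[:-1, :] -= d1
    out[1:, :] += d1
    out[:, :-1] -= d2
    out[:, 1:] += d2
    return out

def solve_u(rhs, lam):
    """Exact solve using A = W^T D W with W the orthonormal 2D DCT-II."""
    e1 = np.zeros_like(rhs)
    e1[0, 0] = 1.0
    A_e1 = e1 + lam * neumann_laplacian(e1)
    eig = dctn(A_e1, norm="ortho") / dctn(e1, norm="ortho")   # D = (W A e1) / (W e1)
    return idctn(dctn(rhs, norm="ortho") / eig, norm="ortho")

# z-subproblem: isotropic shrinkage with threshold t
def shrink(x1, x2, t):
    mag = np.sqrt(x1 ** 2 + x2 ** 2)
    scale = np.maximum(mag - t, 0.0) / np.where(mag > 0, mag, 1.0)   # 0/0 = 0
    return scale * x1, scale * x2

# w-subproblem: argmin_w kappa * ||w||_p + 0.5 * ||w - eta||^2, 1 < p < inf
def solve_w(eta1, eta2, kappa, p, iters=500, tol=1e-12):
    q = p / (p - 1.0)
    eta_mag = np.sqrt(eta1 ** 2 + eta2 ** 2)
    if np.sum(eta_mag ** q) ** (1.0 / q) <= kappa:      # dual-norm test: w = 0 is optimal
        return np.zeros_like(eta1), np.zeros_like(eta2)
    w1, w2 = eta1.copy(), eta2.copy()
    for _ in range(iters):
        # fixed point of w_i * (1 + kappa |w_i|^(p-2) / ||w||_p^(p-1)) = eta_i
        mag = np.sqrt(w1 ** 2 + w2 ** 2)
        norm_p = np.sum(mag ** p) ** (1.0 / p)
        with np.errstate(divide="ignore"):
            weight = mag ** (p - 2.0)                    # inf where mag == 0 and p < 2
        factor = 1.0 + kappa * weight / norm_p ** (p - 1.0)
        new1, new2 = eta1 / factor, eta2 / factor        # eta / inf = 0
        step = np.sqrt(np.sum((new1 - w1) ** 2 + (new2 - w2) ** 2))
        w1, w2 = new1, new2
        if step <= tol * (1.0 + np.sqrt(np.sum(w1 ** 2 + w2 ** 2))):
            break
    return w1, w2
```

For \(p=2\) the fixed-point iteration reproduces the closed-form group shrinkage \(w=(1-\kappa /\Vert \eta \Vert _{2})\eta \), which gives a simple consistency check.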

Since we do not solve all the subproblems (6.9)–(6.11) exactly in every iteration, we cannot guarantee convergence of our version of the split Bregman iteration. Moreover, convergence of the split Bregman algorithm when more than two splittings are performed has not yet been fully established, even though this has lately been an active field of research; see for instance [13, 17, 31]. Let us note that the three subproblems in the split Bregman algorithm can be reduced to two subproblems (inexact linearised ADMM) at a small cost in the speed of convergence, see for instance [14, 21, 22]. In practice, however, the algorithm converges to the correct solutions. This claim is supported by the study presented in Fig. 5, where the solutions of the split Bregman iteration are compared to the corresponding solutions obtained with the CVX package, for which we have convergence guarantees. There, we have solved the \(\mathrm {TVL}^{p}\) minimisations that correspond to Figs. 13d–f and 14d, i.e. for \(p=\frac{3}{2},2,3,7\), using both split Bregman and CVX. We plot the relative differences between the split Bregman iterates \(u^{k}\) and the CVX solution \(u_{\text {CVX}}\) until they are sufficiently close to each other, i.e.

In all the plots of Fig. 5, we observe that the split Bregman iterates converge in practice to the CVX solution. Their relative difference reaches the order of \(10^{-5}\) in around 100 iterations, except for \(p=7\) (Fig. 5d), where the tolerance is still reached, but only after approximately 10000 iterations.

In Table 1, we compare the computational times of the split Bregman algorithm until (6.20) is satisfied with the computational times of CVX for the same examples as in Fig. 5. The implementations were done in MATLAB (2013) on a 2.4 GHz Intel Core 2 Duo with 2 GB of memory. Notice that, except for \(p=2\) (second line of Table 1), CVX needs more than an hour to converge, in contrast to split Bregman, which requires only a few seconds for small values of p. Note that the split Bregman algorithm is significantly slower for large values of p, e.g. \(p=7\) (fourth line of Table 1), mainly due to the fixed-point iteration (6.19) for the w-subproblem. We would like to point out that the computational time can be significantly reduced in the case \(p=\infty \), since the corresponding subproblem is solved exactly, see [8, 36], while results similar to those for high values of p are obtained.

Plots of the relative differences between the split Bregman iterates and the CVX solutions until (6.20) is true with tol \(= 10^{-5}\), for the examples in Figs. 13d–f and 14d. In all cases the split Bregman algorithm converges to the solutions given by CVX. a Relative residual error between the split Bregman iterates and the CVX solution for the example in Fig. 13d. b Relative residual error between the split Bregman iterates and the CVX solution for the example in Fig. 13e. c Relative residual error between the split Bregman iterates and the CVX solution for the example in Fig. 13f. d Relative residual error between the split Bregman iterates and the CVX solution for the example in Fig. 14d

6.2 One-Dimensional Results

In this section, we present some numerical results in dimension one, i.e. \(m=1\), \(u\in \mathbb {R}^{n\times 1}\) and \(w\in \mathbb {R}^{n\times 1}\). Initially, we compare our numerical solutions with the analytical ones obtained in Sect. 4.3 for the step function. We set \(p=2\), \(h=100\), \(L=1\) and \(\Omega =(-1,1)\), which is discretised into 2000 points. We first examine the cases where ROF solutions are obtained, i.e. the parameters \(\alpha \) and \(\beta \) are selected according to the conditions (4.24) and (4.25). This is shown in Fig. 6, where the analytical solutions coincide with the numerical ones.

Step function: comparison between numerical solutions of \((\mathcal {P})\) and the corresponding analytical solutions obtained in Sect. 4.3. The parameters \(\alpha \) and \(\beta \) are chosen so that conditions (4.24) and (4.25) are satisfied which result in ROF solutions. a Original data. b \(\mathrm {TVL}^{2}\): \(\alpha =15\), \(\beta =500\). c \(\mathrm {TVL}^{2}\): \(\alpha =60\), \(\beta =1300\) (Color figure online)

We proceed by computing the non-ROF solutions. The numerical solutions are computed using the 2-homogeneous analogue (4.27), since we have proved that the 1-homogeneous and p-homogeneous problems are equivalent modulo an appropriate rescaling of the parameter \(\beta \), see Proposition 3.2. Indeed, as described in Fig. 4, in order to obtain solutions from the purple region, it suffices to seek solutions of the 2-homogeneous problem (4.27). Recall also that these solutions are exactly the solutions of a Huber TV problem, see Proposition 3.3. The analytical solutions are given in (4.30) and (4.32) and are compared to the numerical ones in Fig. 7, where we observe that they coincide. We also verify the equivalence between the 1-homogeneous and 2-homogeneous problems, where \(\alpha \) is fixed and \(\beta \) is obtained from Proposition 3.2, see Fig. 7c.

Step function: comparison between numerical and analytical solutions obtained in Sect. 4.3, by solving the 2-homogeneous problem (4.27). The parameters \(\alpha \) and \(\beta \) are chosen so that conditions (4.30) and (4.32) are satisfied which result in non-ROF solutions. The last plot shows the equivalence between the 1-homogeneous problem \((\mathcal {P})\) and 2-homogeneous (4.27). a \(\mathrm {TVL^{2}}\): \(\alpha =20\), \(\beta _{2-hom}=450\). b \(\mathrm {TVL^{2}}\): \(\alpha =60\), \(\beta _{2-hom}=450\). c Equivalence of 1 and 2-homogeneous models: \(\alpha =15\), \(\beta _{2-hom}=450\),  (Color figure online)

We continue our experiments for general values of p, focusing on the structure of the solutions as p increases. In order to compare the solutions for different values \(p\in (1,\infty )\), we fix the parameter \(\alpha \) and choose appropriate values of \(\beta \). Since we are mainly interested in non-ROF solutions, we choose \(\alpha \) and \(\beta \) so that they belong to the purple region of Fig. 4, i.e. \(\beta <(\frac{2L}{q+1})^{\frac{1}{q}}\alpha \) and \(\beta <\frac{h}{2} (\frac{2L^{q+1}}{q+1})^{\frac{1}{q}}\). We set \(p=\{\frac{4}{3}, \frac{3}{2}, 2, 3, 4, 10\}\) and, in order to get solutions that preserve the discontinuity, we set \(\beta =\{72, 140, 430, 1350, 2400, 6800\}\) with fixed \(\alpha =20\), see Fig. 8a. In order to obtain continuous solutions, we set \(\alpha =60\) and \(\beta =\{50, 110, 430, 1700, 3000, 9500\}\), see Fig. 8b. We observe that for \(p=\frac{4}{3}\), the solution behaves similarly to the case \(p=2\), but with a steeper gradient at the discontinuity point, and it becomes almost constant near the boundary of \(\Omega \). On the other hand, as we increase p, the slope of the solution near the discontinuity point decreases and the solution becomes almost linear, with a relatively small constant part near the boundary.

Step function: the types of solutions for the problem \((\mathcal {P})\) for different values of p. a \(\mathrm {TVL}^p\) discontinuous solutions for \(p=\{\frac{4}{3}, \frac{3}{2}, 2, 3, 4, 10\}\). b \(\mathrm {TVL}^p\) continuous solutions for \(p=\{\frac{4}{3}, \frac{3}{2}, 2, 3, 4, 10\}\) (Color figure online)

The linear structure of the solutions that appears for large p motivates us to examine the case of piecewise affine data f defined as

see Fig. 9. We again set \(\Omega =(-1,1)\) and \(\lambda =\frac{1}{10}\). As we observe, the reconstruction for \(p=15\) behaves almost linearly everywhere in \(\Omega \) except near the boundary. In the follow-up paper [8], where the case \(p=\infty \) is examined in detail and exact solutions are computed for the data (6.21), the occurrence of this linear structure is also justified analytically.

In the last part of this section, we present some numerical examples of the image decomposition approach presented in Sect. 5. We use as data a more complicated one-dimensional noiseless signal with piecewise constant, affine and quadratic components, and solve the discretised version of (5.1) using \(\mathrm {CVX}\). In Fig. 10, we verify numerically the equivalence between (5.1) and \((\mathcal {P})\) for \(p=2\), i.e. we show that \((\nabla v,u+v)\) corresponds to \((w,\overline{u})\), where (v, u) and \((w,\overline{u})\) are the solutions of (5.1) and \((\mathcal {P})\), respectively. We also compare the decomposed parts u, v for \(p=\frac{4}{3}\) and \(p=10\). For a fair comparison of the corresponding solutions, the parameters \(\alpha , \beta \) are selected such that the residual  is the same for both values of p. As we observe, the v component for \(p=\frac{4}{3}\) exhibits some flatness compared to \(p=2\), compare Figs. 10b and 11a. On the other hand, for \(p=10\), the v component again consists of almost affine structures, Fig. 11b. Notice that in both cases the v components are continuous. This is expected since in dimension one we have \(\mathrm {W}^{1,p}(\Omega )\subset C(\overline{\Omega })\) for every \(1<p<\infty \).


Decomposition of the data in Fig. 10a into u, v parts for \(p=\frac{4}{3}\) and \(p=10\). The value \(p=\frac{4}{3}\) produces a v component with flat structures while \(p=10\) produces a component with almost affine structures. In both cases we have  . a Decomposition of the data in Fig. 10a for \(p=\frac{4}{3}\). b Decomposition of the data in Fig. 10a for \(p=10\) (Color figure online)


6.3 Two-Dimensional Results

In this section we consider the two-dimensional case where \(u\in \mathbb {R}^{n\times m}\), \(w\in (\mathbb {R}^{n\times m})^2\) with \(m>1\) and \(\Omega \) is a rectangular image domain. We focus on image denoising tasks and on eliminating the staircasing effect for different values of p.

We start with the image in Fig. 12, i.e. a square with piecewise affine structures. The image size is \(200\times 200\) pixels with intensities in the range [0, 1]. The noisy image, Fig. 12b, is a corrupted version of the original image, Fig. 12a, with Gaussian noise of zero mean and standard deviation \(\sigma =0.01\).

In Fig. 13, we present the best reconstruction results in terms of two quality measures, the classical Peak Signal to Noise Ratio (PSNR) and the Structural Similarity Index (SSIM), see [41] for the definition of the latter. In each case, the values of \(\alpha \) and \(\beta \) are selected to optimise PSNR and SSIM, respectively. We use here the split Bregman algorithm as described in Sect. 6.1. Our stopping criterion is the relative residual error becoming less than \(10^{-6}\), i.e.

For computational efficiency, we set \(\lambda =10\alpha \) when \(1<p<4\) and \(\lambda =1000\alpha \) when \(p\ge 4\) (empirical rule).
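The PSNR used in this comparison and a relative-residual stopping test can be sketched as follows. The PSNR formula \(10\log _{10}(\text {peak}^{2}/\text {MSE})\), with peak 1 for the [0, 1] intensity range, is standard; the exact form of the relative residual is not reproduced here, so \(\Vert u^{k+1}-u^{k}\Vert /\Vert u^{k+1}\Vert \) is an assumption. The function names are hypothetical.

```python
import numpy as np

def psnr(u, ref, peak=1.0):
    """Peak signal-to-noise ratio in dB for intensities in [0, peak]."""
    mse = np.mean((u - ref) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

def relative_residual(u_new, u_old):
    """||u^{k+1} - u^k|| / ||u^{k+1}|| (assumed form of the stopping test)."""
    return np.linalg.norm(u_new - u_old) / np.linalg.norm(u_new)

ref = np.zeros((4, 4))
print(psnr(np.full((4, 4), 0.1), ref))                  # about 20 dB (MSE = 0.01)
print(relative_residual(2 * np.ones(3), np.ones(3)))    # 0.5, far above the 1e-6 tolerance
```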

Best reconstructions in terms of PSNR and SSIM for \(p=\frac{3}{2}\), 2, 3. a \(\mathrm {TVL}^{\frac{3}{2}}\): \(\alpha \,=\,0.1\), \(\beta =2.5\), PSNR \(=\) 33.63. b \(\mathrm {TVL}^{2}\): \(\alpha \,=\,0.1\), \(\beta =13.5\), PSNR \(=\) 33.68. c \(\mathrm {TVL}^{3}\): \(\alpha \,=\,0.1\), \(\beta =76\), PSNR \(=\) 33.70. d \(\mathrm {TVL}^{\frac{3}{2}}\): \(\alpha =0.3\), \(\beta =7.7\), SSIM \(=\) 0.9669. e \(\mathrm {TVL}^{2}\): \(\alpha =0.3\), \(\beta =34\), SSIM \(=\) 0.9706. f \(\mathrm {TVL}^{3}\): \(\alpha =0.3\), \(\beta =182\), SSIM \(=\) 0.9709

Observe that the best reconstructions in terms of PSNR are visually indistinguishable for \(p=\frac{3}{2}\), 2 and 3, and all of them exhibit staircasing, Fig. 13a–c. This is one more indication that the PSNR, which is based on the squares of the pointwise differences between the ground truth and the reconstruction, does not correspond to the optimal visual results. The best reconstructions in terms of SSIM are visually better: staircasing is significantly reduced for \(p=\frac{3}{2}\) and \(p=3\) and essentially absent for \(p=2\), see Fig. 13d–f.

Staircasing can be eliminated entirely by choosing higher values of the parameters \(\alpha \) and \(\beta \), as we show in Fig. 14. There, one observes two effects. On the one hand, almost affine structures are promoted as p increases (see the middle-row profiles in Fig. 14). On the other hand, these choices of \(\alpha , \beta \) produce a serious loss of contrast, which, however, can easily be treated via the Bregman iteration that we briefly discuss next.

Staircasing elimination for \(p=\frac{3}{2}\), 2, 3 and 7. High values of p promote almost affine structures. a \(\mathrm {TVL}^{\frac{3}{2}}\): \(\alpha =1\), \(\beta =25\), SSIM \(=\) 0.9391. b \(\mathrm {TVL}^{2}\): \(\alpha =1\), \(\beta =116\), SSIM \(=\) 0.9433. c \(\mathrm {TVL}^{3}\): \(\alpha =1\), \(\beta =438\), SSIM \(=\) 0.9430. d \(\mathrm {TVL}^{7}\): \(\alpha =2\), \(\beta =5000\), SSIM \(=\) 0.9001

Contrast enhancement via Bregman iteration was introduced in [32]; see also [3] for an application to higher-order models. It involves solving a modified version of the minimisation problem. Setting \(u^{0}=f\), one solves for \(k=1,2,\ldots \):

Instead of solving (6.1) once for fixed \(\alpha \) and \(\beta \), we solve a sequence of similar problems, adding the noisy residual back to the data in each iteration. For stopping criteria for the Bregman iteration we refer to [32].
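The add-back-the-residual form of the Bregman iteration of [32] can be sketched as follows. The sketch assumes that (6.1) is a denoising problem with a squared \(\mathrm {L}^{2}\) fidelity term. The exact form of (6.23), including its initialisation, is not reproduced here. The wrapper is generic: `denoise` (a hypothetical callable) stands for any solver of that problem for fixed \(\alpha \) and \(\beta \). In the toy usage, a linear shrinkage towards the mean plays the role of the denoiser, which makes the contrast recovery easy to see: the k-th iterate restores a fraction \(1-2^{-k}\) of the deviation from the mean.

```python
import numpy as np


def bregman_iteration(f, denoise, n_iter):
    """Bregman iteration in add-back-the-residual form.

    f_1 = f;  u^k = denoise(f_k);  f_{k+1} = f_k + (f - u^k).
    Returns the iterates u^1, ..., u^n_iter. In practice one stops early,
    since late iterates start to reintroduce noise.
    """
    f = np.asarray(f, dtype=float)
    f_k = f.copy()
    iterates = []
    for _ in range(n_iter):
        u = denoise(f_k)
        iterates.append(u)
        f_k = f_k + (f - u)  # add the residual back to the data
    return iterates


def shrink_to_mean(g):
    """Toy stand-in for a denoiser: halves every deviation from the mean."""
    return g.mean() + 0.5 * (g - g.mean())


f = np.array([0.0, 1.0, 2.0, 3.0])
its = bregman_iteration(f, shrink_to_mean, 4)
# the residual ||u^k - f|| halves at every iteration
print([float(np.linalg.norm(u - f)) for u in its])
```

In the imaging experiments, `denoise` would be a \(\mathrm {TVL}^{p}\), \(\mathrm {TV}\) or \(\mathrm {TGV}\) solver, and the iteration index reported in the figure captions is the one at which the SSIM is best.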

In Fig. 15 we present our best Bregman iteration results for \(p=2\) in terms of SSIM, together with the corresponding \(\mathrm {TV}\) and \(\mathrm {TGV}\) results. For a fair comparison, the Bregman iteration has also been employed for these two models. We also show the best SSIM results obtained without Bregman iteration. We solve the \(\mathrm {TGV}\) minimisation problem using the Chambolle–Pock primal-dual method [10]. The Bregman iteration version of \(\mathrm {TVL}^{2}\) leads to a significant contrast improvement; compare, for instance, Fig. 15b and e. In fact, it achieves a reconstruction that is visually close to the Bregman iteration version of \(\mathrm {TGV}\); compare Fig. 15e and f.
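As a simplified illustration of the Chambolle–Pock primal-dual method [10], the following sketch applies it to one-dimensional \(\mathrm {TV}\) denoising, \(\min _{u}\frac{1}{2}\Vert u-f\Vert _{2}^{2}+\alpha \Vert Du\Vert _{1}\). It is not the \(\mathrm {TGV}\) solver used for Fig. 15. The step sizes \(\tau =\sigma =0.45\) are our own choice. They satisfy \(\tau \sigma \Vert D\Vert ^{2}\le 0.81<1\) because \(\Vert D\Vert ^{2}\le 4\) for forward differences, which is the step-size condition of the method.

```python
import numpy as np


def forward_diff(x):
    """D x with (D x)_i = x_{i+1} - x_i."""
    return np.diff(x)


def forward_diff_adjoint(y):
    """D^T y, the adjoint of forward_diff."""
    return np.concatenate(([-y[0]], y[:-1] - y[1:], [y[-1]]))


def tv_denoise_cp(f, alpha, iters=1000, tau=0.45, sigma=0.45):
    """Chambolle-Pock iteration for min_u 0.5*||u - f||^2 + alpha*||D u||_1 (1D)."""
    f = np.asarray(f, dtype=float)
    u = f.copy()
    u_bar = u.copy()
    y = np.zeros(f.size - 1)  # dual variable, constrained to |y_i| <= alpha
    for _ in range(iters):
        # dual step: the prox of the conjugate of alpha*||.||_1 is a projection
        y = np.clip(y + sigma * forward_diff(u_bar), -alpha, alpha)
        # primal step: prox of tau * 0.5*||. - f||^2
        u_new = (u - tau * forward_diff_adjoint(y) + tau * f) / (1.0 + tau)
        # over-relaxation with theta = 1
        u_bar = 2.0 * u_new - u
        u = u_new
    return u


rng = np.random.default_rng(2)
clean = np.repeat([0.0, 1.0], 50)
noisy = clean + 0.05 * rng.standard_normal(clean.size)
u = tv_denoise_cp(noisy, alpha=0.1)
```

A \(\mathrm {TGV}\) version follows the same pattern with an additional primal variable and a second dual variable for the symmetrised gradient.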

First row: best reconstructions in terms of SSIM for \(\mathrm {TV}\), \(\mathrm {TVL}^{2}\) and \(\mathrm {TGV}\). Second row: best reconstructions in terms of SSIM for the Bregman iteration versions of \(\mathrm {TV}\), \(\mathrm {TVL}^{2}\) and \(\mathrm {TGV}\). a \(\mathrm {TV}\): \(\alpha =0.2\), SSIM \(=\) 0.9387. b \(\mathrm {TVL}^{2}\): \(\alpha =1\), \(\beta =116\), SSIM \(=\) 0.9433. c \(\mathrm {TGV}\): \(\alpha =0.12\), \(\beta =0.55\), SSIM \(=\) 0.9861. d \(\mathrm {TV}\) Bregman iteration: \(\alpha =1\), SSIM \(=\) 0.9401, 4th iteration. e \(\mathrm {TVL}^{2}\) Bregman iteration: \(\alpha =2\), \(\beta =220\), SSIM \(=\) 0.9778, 4th iteration. f \(\mathrm {TGV}\) Bregman iteration: \(\alpha =2\), \(\beta =10\), SSIM \(=\) 0.9889, 8th iteration

We continue our demonstration with a radially symmetric image, see Fig. 16. As in the previous example, we can achieve staircasing-free reconstructions using \(\mathrm {TVL}^{p}\) regularisation for different values of p, see Fig. 17. In fact, as we increase p, we obtain results that preserve the spike in the centre of the circle, see the corresponding middle-row slice in Fig. 17d. This provides another motivation for examining the case \(p=\infty \) in [8]. The loss of contrast can again be treated using the Bregman iteration (6.23). The best results of the latter in terms of SSIM are presented in Fig. 18 for \(p=2\), 4 and 7, where they are also compared with the corresponding Bregman iteration version of \(\mathrm {TGV}\). We observe that we can obtain reconstructions that are visually close to the \(\mathrm {TGV}\) ones; in fact, for \(p=7\) the spike in the centre of the circle is better reconstructed than with \(\mathrm {TGV}\), see also the surface plots in Fig. 19.

Better preservation of spike-like structures for large values of p. a \(\mathrm {TVL}^{\frac{3}{2}}\): \(\alpha =0.8\), \(\beta =17\), SSIM \(=\) 0.8909. b \(\mathrm {TVL}^{2}\): \(\alpha =0.8\), \(\beta =79\), SSIM \(=\) 0.8998. c \(\mathrm {TVL}^{3}\): \(\alpha =0.8\), \(\beta =405\), SSIM \(=\) 0.9019. d \(\mathrm {TVL}^{7}\): \(\alpha =0.8\), \(\beta =3700\), SSIM \(=\) 0.9024

Best reconstructions in terms of SSIM for the Bregman iteration versions of \(\mathrm {TV}\), \(\mathrm {TVL}^{2}\), \(\mathrm {TVL}^{4}\), \(\mathrm {TVL}^{7}\) and \(\mathrm {TGV}\). a \(\mathrm {TV}\) Bregman iteration: \(\alpha =2\), SSIM \(=\) 0.8912, 6th iteration. b \(\mathrm {TVL^{2}}\) Bregman iteration: \(\alpha =5\), \(\beta =625\), SSIM \(=\) 0.9718, 12th iteration. c \(\mathrm {TVL^{4}}\) Bregman iteration: \(\alpha =5\), \(\beta =8000\), SSIM \(=\) 0.9802, 13th iteration. d \(\mathrm {TVL^{7}}\) Bregman iteration: \(\alpha =3\), \(\beta =15000\), SSIM \(=\) 0.9807, 9th iteration. e \(\mathrm {TGV}\) Bregman iteration: \(\alpha =2\), \(\beta =10\), SSIM \(=\) 0.9913, 8th iteration

Surface plots of the images in Fig. 18. Notice how high values of p can better preserve the sharp spike in the middle of the image. a Original. b \(\mathrm {TVL}^{2}\) Bregman iteration. c \(\mathrm {TVL}^{7}\) Bregman iteration. d \(\mathrm {TGV}\) Bregman iteration. e Original: central part zoom. f \(\mathrm {TVL}^{2}\) Bregman iteration: central part zoom. g \(\mathrm {TVL}^{7}\) Bregman iteration: central part zoom. h \(\mathrm {TGV}\) Bregman iteration: central part zoom (Color figure online)

We conclude with numerical results for the image decomposition approach of Sect. 5, which we again solve using the split Bregman algorithm. Recall that in dimension two, the solutions of (5.1) do not necessarily coincide with those of \((\mathcal {P})\). In fact, we observe that (5.1) cannot always eliminate the staircasing, see for instance Fig. 20. As we have already seen, \(\mathrm {TVL}^{p}\) regularisation eliminates the staircasing both in the square and in the circle, Fig. 20b, d. Solving (5.1), however, does not yield equally satisfactory results. With the latter we can get rid of the staircasing in the circle, Fig. 20c, but not in the square, Fig. 20a. There, after extensive experimentation, we observe that no values of \(\alpha \) and \(\beta \) lead to staircasing elimination. This is analogous to the difference between \(\mathrm {TGV}\) and the \(\mathrm {TV}\)–\(\mathrm {TV}^{2}\) infimal convolution of Chambolle–Lions [9].

Comparison between the model (5.1) for \(p=2\) and \(\mathrm {TVL^{2}}\): staircasing cannot always be eliminated by the decomposition approach (5.1). a Solution \(u+v\) of (5.1): \(p=2\), \(\alpha =0.8\), \(\beta =120\), SSIM \(=\) 0.9268. b \(\mathrm {TVL}^{2}\): \(\alpha =1\), \(\beta =116\), SSIM \(=\) 0.9433. c Solution \(u+v\) of (5.1): \(p=2\), \(\alpha \,=\,0.8\), \(\beta =70\), SSIM \(=\) 0.8994. d \(\mathrm {TVL^{2}}\): \(\alpha =0.8\), \(\beta =79\), SSIM \(=\) 0.8998

However, the strength of the formulation (5.1) lies in its ability to efficiently decompose an image into piecewise constant and smooth parts. We illustrate this in Fig. 21, where we show the components u and v of the result in Fig. 20c.

7 Conclusion

We have introduced a novel first-order, one-homogeneous \(\mathrm {TV}\)–\(\mathrm {L}^{p}\) infimal convolution type functional suitable for variational image regularisation. The \(\mathrm {TVL}^{p}\) functional constitutes a very general class of regularisation functionals exhibiting diverse smoothing properties for different choices of p. In the case \(p=2\) the well-known Huber \(\mathrm {TV}\) regulariser is recovered.

We studied the corresponding one-dimensional denoising problem, focusing on the structure of its solutions, and computed exact solutions for the case \(p=2\) and simple one-dimensional data. As an additional novelty, we thereby presented exact solutions of the one-dimensional Huber \(\mathrm {TV}\) denoising problem.

Numerical experiments for several values of p indicate that our model eliminates the staircasing effect. We showed that the results can be further enhanced by increasing the contrast via a Bregman iteration scheme, thus obtaining results of similar quality to those of \(\mathrm {TGV}\). Furthermore, as p increases, the structure of the solutions changes from piecewise smooth to piecewise linear, and the model, in contrast to \(\mathrm {TGV}\), is capable of preserving sharp spikes in the reconstruction. This observation motivates a more detailed study of the \(\mathrm {TVL}^{p}\) functional for large p and in particular for the case \(p=\infty \).

This concludes the first part of our study of the \(\mathrm {TVL}^{p}\) model, which treats the case \(p< \infty \). The second part [8] is devoted to the case \(p=\infty \). There we further explore, at both the analytical and the experimental level, the capability of the \(\mathrm {TVL}^{\infty }\) model to promote affine and spike-like structures in the reconstructed image, and we discuss several applications.

References

Ambrosio, L., Fusco, N., Pallara, D.: Functions of Bounded Variation and Free Discontinuity Problems. Oxford Science Publications, Oxford (2000)

Attouch, H., Brezis, H.: Duality for the sum of convex functions in general Banach spaces. North-Holland Mathematical Library 34, 125–133 (1986)

Benning, M., Brune, C., Burger, M., Müller, J.: Higher-order TV methods—enhancement via Bregman iteration. J. Sci. Comput. 54(2–3), 269–310 (2013). doi:10.1007/s10915-012-9650-3

Bergounioux, M., Piffet, L.: A second-order model for image denoising. Set-Valued Var. Anal. 18(3–4), 277–306 (2010). doi:10.1007/s11228-010-0156-6

Bredies, K., Kunisch, K., Pock, T.: Total generalized variation. SIAM J. Imaging Sci. 3(3), 492–526 (2010). doi:10.1137/090769521

Bredies, K., Kunisch, K., Valkonen, T.: Properties of L\(^1\)-TGV\(^{\,2}\): the one-dimensional case. J. Math. Anal. Appl. 398(1), 438–454 (2013). doi:10.1016/j.jmaa.2012.08.053

Burger, M., Osher, S.: A guide to the TV zoo. Level Set and PDE Based Reconstruction Methods in Imaging, pp. 1–70. Springer, Berlin (2013)

Burger, M., Papafitsoros, K., Papoutsellis, E., Schönlieb, C.-B.: Infimal convolution regularisation functionals of \({\rm BV}\) and \({\rm L}^{p}\) spaces. The case \(p=\infty \). Submitted (2015)

Chambolle, A., Lions, P.L.: Image recovery via total variation minimization and related problems. Numer. Math. 76, 167–188 (1997). doi:10.1007/s002110050258

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011). doi:10.1007/s10851-010-0251-1

Chan, T., Marquina, A., Mulet, P.: High-order total variation-based image restoration. SIAM J. Sci. Comput. 22(2), 503–516 (2001). doi:10.1137/S1064827598344169

Chan, T.F., Esedoglu, S., Park, F.E.: Image decomposition combining staircase reduction and texture extraction. J. Vis. Commun. Image Represent. 18(6), 464–486 (2007). doi:10.1016/j.jvcir.2006.12.004

Chen, C., He, B., Ye, Y., Yuan, X.: The direct extension of ADMM for multi-block convex minimization problems is not necessarily convergent. Math. Program. 1–23 (2014)

Dai, Y., Han, D., Yuan, X., Zhang, W.: A sequential updating scheme of Lagrange multiplier for separable convex programming. Math. Comput., to appear (2013)

Ekeland, I., Témam, R.: Convex Analysis and Variational Problems. SIAM, Philadelphia (1999)

Elion, C., Vese, L.: An image decomposition model using the total variation and the infinity Laplacian. Proc. SPIE 6498, 64980W–64980W-10 (2007). doi:10.1117/12.716079

Esser, E.: Applications of Lagrangian-based alternating direction methods and connections to split Bregman. CAM Rep. 9, 31 (2009)

Goldstein, T., Osher, S.: The split Bregman method for \(L_{1}\)-regularized problems. SIAM J. Imaging Sci. 2, 323–343 (2009). doi:10.1137/080725891

Grant, M., Boyd, S.: CVX: Matlab software for disciplined convex programming, version 2.1 (2014)

Hansen, P.: Discrete Inverse Problems. SIAM, Philadelphia (2010). doi:10.1137/1.9780898718836

He, B., Hou, L., Yuan, X.: On full Jacobian decomposition of the augmented Lagrangian method for separable convex programming. Preprint (2013)

He, B., Tao, M., Yuan, X.: Convergence rate and iteration complexity on the alternating direction method of multipliers with a substitution procedure for separable convex programming. Math. Oper. Res., under revision 2 (2012)

Hintermüller, M., Stadler, G.: An infeasible primal-dual algorithm for total bounded variation-based inf-convolution-type image restoration. SIAM J. Sci. Comput. 28(1), 1–23 (2006). doi:10.1137/040613263

Huber, P.J.: Robust regression: asymptotics, conjectures and Monte Carlo. Ann. Stat. 1, 799–821 (1973). http://www.jstor.org/stable/2958283

Kim, Y., Vese, L.: Image recovery using functions of bounded variation and Sobolev spaces of negative differentiability. Inverse Probl. Imaging 3, 43–68 (2009). doi:10.3934/ipi.2009.3.43

Kuijper, A.: \(p\)-Laplacian driven image processing. IEEE Int. Conf. Image Process. 5, 257–260 (2007). doi:10.1109/ICIP.2007.4379814

Lefkimmiatis, S., Bourquard, A., Unser, M.: Hessian-based norm regularization for image restoration with biomedical applications. IEEE Trans. Image Process. 21, 983–995 (2012). doi:10.1109/TIP.2011.2168232

Lindqvist, P.: Notes on the \(p\)-Laplace equation. University of Jyväskylä (2006)

Lysaker, M., Lundervold, A., Tai, X.C.: Noise removal using fourth-order partial differential equation with applications to medical magnetic resonance images in space and time. IEEE Trans. Image Process. 12(12), 1579–1590 (2003). doi:10.1109/TIP.2003.819229