Abstract

There has been a burst of work in the last couple of decades on mechanistic explanation, as an alternative to the traditional covering-law model of scientific explanation. That work makes some interesting claims about mechanistic explanations rendering phenomena ‘intelligible’, but does not develop this idea in great depth. There has also been a growth of interest in giving an account of scientific understanding, as a complement to an account of explanation, specifically addressing a three-place relationship between explanation, world, and the scientific community. The aim of this paper is to use the contextual theory of scientific understanding to build an account of understanding phenomena using mechanistic explanations. This account will be developed and illustrated by examining the mechanisms of supernovae, which will allow synthesis of treatment of the life sciences and social sciences on the one hand, where many accounts of mechanisms were originally developed, and treatment of physics on the other hand, where the contextual theory drew its original inspiration.

1 Introduction: What is the Question?

There are two important areas of debate in philosophy of science which both concern intelligible explanations, but are not yet connected. First, various authors in the mechanisms literature claim that good mechanistic explanations are ‘intelligible’, although they do not say much about what this means. Accounts of mechanistic explanation have been developed over the last two decades in a literature that provided an alternative to covering law explanation, and primarily studied cases from the life sciences. Secondly, over the last decade there has been an emergence of interest in characterising scientific understanding, where that is explicitly conceived of as an attempt to elucidate a three-place relation between world, explanation, and the scientific community (see papers in de Regt et al. 2009 and de Regt 2017). Mieke Boon summarises the view the three-place approach is reacting to:

[M]ost authors reject it [sc. understanding] as a notion of philosophical interest because they assume that it is a mere psychological surplus of explaining. [...] The tradition in which Trout rejects the importance of scientific understanding presupposes that a philosophical account of science can be explicated in terms of a two-placed relation between world and knowledge. (Boon 2009, 250)

The idea is that previous treatment of understanding has largely either considered it to be little more than possession of, beliefs about, or perhaps communication of, an explanation,Footnote 1 or considered it to be a purely subjective and not very important phenomenological state, leaving no space for any substantive and scientifically important account of understanding.Footnote 2 The views I will examine reject this. Specifically, in one of the first papers in this tradition, ‘A contextual approach to scientific understanding’, de Regt and Dieks (2005) claim that intelligibility is a value that scientists in a particular community at a particular time confer on theories they can use. De Regt and Dieks draw paradigm cases from physics, but other philosophers, such as Leonelli (2009), who also approaches understanding in broadly this way, draw ideas from the life sciences.

In this paper, I will apply the contextual theory to the understanding of phenomena gained from mechanistic explanations. This account will be developed by discussing our mechanistic understanding of the phenomenon of supernovae, specifically following SN1987A, spotted in 1987 and still one of the most important supernovae ever studied. Consider the following claim from Nature at the end of 1987:

The neutrinos detected from the recent supernova 1987A (SN1987A) in the Large Magellanic Cloud by two large water Cerenkov detectors run by collaborations at Kamiokande and at IMB confirm dramatically our understanding of the generic mechanism of formation of type II supernovae: the gravitational collapse of a massive stellar core to form a neutron star or black hole. (Walker 1987, 609)

Illari and Williamson (2012) have already argued that the mechanisms of supernovae fit the core three-part account of mechanisms and mechanistic explanation that is becoming consensus in the mechanisms literature: “A mechanism for a phenomenon consists of entities and activities organized in such a way that they are responsible for the phenomenon” (Illari and Williamson 2012, 120). Illari and Williamson explain how this consensus account captures work of many major mechanists, and so I will use this account to build an account of understanding phenomena mechanistically. This will allow me to address the enormous variety of things that are done in explaining supernovae mechanistically, and to tease out two things in this story: first, the place of entities and activities, and, second, the interdependent nature of our understanding of mechanisms, models, and more basic physical theory.Footnote 3

I will begin, in Sect. 2, by bringing the claims of mechanists together with the contextual theory of scientific understanding to build a theoretical account of understanding phenomena using mechanistic explanations, and setting up some interesting questions that arise about virtues of mechanistic explanations. In Sect. 3, I will develop this account by applying it to understanding the mechanisms of supernovae. It will turn out that the mechanisms of supernovae are particularly interesting for this purpose, as they enable a creative synthesis between the use of enormously general theories, and the use of multiple models, even down to multiple models of a particular supernova: SN1987A. As a teaser, consider the following from an astrophysics textbook:

Astrophysics does not deal with a special, distinct class of effects and processes, as do the basic fields of physics. [...] astrophysics deals with complex phenomena, which involve processes of many different kinds. It has to lean, therefore, on all the branches of physics, and this makes for its special beauty. The theory of the structure and evolution of stars presents a unique opportunity to bring separate, seemingly unconnected physical theories under one roof. (Prialnik 2010, 28–29)

Section 3 will show that understanding mechanistic explanations of type II supernovae requires modelling activities, entities and their organization in a way that requires distinctively mechanistic modes of virtues. It further requires a surrounding architecture of at least theories and laws, models and simulations: in this case at least our understanding of theory and mechanisms are interdependent. I will finish by drawing some wider conclusions, in Sect. 4, reflecting on what this means for the contextual theory of scientific understanding.

2 Understanding Phenomena

In this section I will apply the contextual theory of scientific understanding to develop an account of understanding mechanistic explanations of phenomena, before going on in Sect. 3 to apply the account to SN1987A.

2.1 Intelligible Mechanisms

The new mechanist literature arose alongside the realisation that traditional philosophy of science, focusing primarily on laws and theories, had limited application to the life sciences, which study a domain that is considerably more local and diverse than some areas of physics. Although the biggest burst of work was only initiated by Machamer, Darden and Craver’s famous paper (Machamer et al. 2000, now commonly known as “MDC”), the mechanisms literature has become very influential. While there has been a great deal of progress on what a mechanistic explanation is, there has been little on what it means to understand a mechanism, or to understand a phenomenon mechanistically.Footnote 4

In addressing the question of understanding or intelligibility, I hope to show here a different mode for the development of many of the ideas concerning representation and communication of mechanistic explanations currently of interest. Ideas bearing on understanding actually arose early in the mechanisms literature. MDC write: “Descriptions of mechanisms render the end stage intelligible by showing how it is produced by bottom out entities and activities” (Machamer et al. 2000, 21). For MDC, what they call “bottom out” activities and entities are those which are regarded as unproblematic by a scientific field at a particular time. They say that in molecular biology bottom-out activities are generally those of macromolecules, smaller molecules, and ions. These activities are usually geometrico-mechanical, electro-chemical, energetic, or electro-magnetic (Machamer et al. 2000, 14). Scientists in the field see no need to explain these activities further, although of course they may be targets of explanations in other fields. We will see that the bottom-out entities and activities of supernovae vary widely.

Although intelligibility is not their primary concern, MDC mention it often (pp. 3, 12, 21–23). It is fairly clear that they take mechanistic explanations to be intelligible in a way in which explanations subsuming the phenomenon under a covering law are not. The idea is that unlike laws, mechanisms explain how the phenomenon is produced, by identifying the entities and their activities that are responsible for the phenomenon, often laying out stages in that production. They write: “Productive continuities are what make the connections between stages intelligible” (Machamer et al. 2000, 3). It is important to MDC that these connections be uninterrupted: “In a complete description of mechanism, there are no gaps that leave specific steps unintelligible; the process as a whole is rendered intelligible in terms of entities and activities that are acceptable to a field at a time” (Machamer et al. 2000, 12). So bottom out entities and activities are regarded as the stopping place for mechanistic explanations generated by that field at that time, since they are regarded as needing no further explanation, although this can change over time, and such entities and activities may be targets of explanation in other fields. This is related to mechanistic explanations being in some sense continuous. Secondly, MDC also clearly think that mechanisms offer an intelligible explanation in a way in which regularities do not. They write:

We should not be tempted to follow Hume and later logical empiricists into thinking that the intelligibility of activities (or mechanisms) is reducible to their regularity. Descriptions of mechanisms render the end stage intelligible by showing how it is produced by bottom out entities and activities. To explain is not merely to redescribe one regularity as a series of several. (Machamer et al. 2000, 21–22)

These two claims are not surprising given the view popular within the mechanisms literature that mechanistic explanation is a much-needed alternative to laws-explanation, but a final claim is more striking in that context. For MDC, understanding is not an ontic matter, in the sense of Salmon (1998). They write:

The understanding provided by a mechanistic explanation may be correct or incorrect. Either way, the explanation renders a phenomenon intelligible. Mechanism descriptions show how possibly, how plausibly, or how actually things work. Intelligibility arises not from an explanation’s correctness, but rather from an elucidative relation between the explanans (the set-up conditions and intermediate entities and activities) and the explanandum (the termination condition or the phenomenon to be explained). (Machamer et al. 2000, 21)Footnote 5

They seem open to an account of understanding that is independent, then, of the influential insistence of Salmon on the need for ontic explanations, to capture causal explanation. Notice that the intelligibility they are concerned with seems to be of both the mechanism itself and thereby also of the phenomenon explained.

I lack space to examine even major figures in the mechanisms literature such as Bechtel (2007) and Glennan (2017) in depth, but I hope that it is at least plausible that MDC’s group of concerns extends more widely within that literature. For example, Bechtel, falling firmly on the side of epistemic explanations, is not likely to disagree. Nevertheless, after this fascinating beginning, major mechanists say little more about intelligibility directly.

MDC do not themselves return to the idea to give an account of how such bottom out activities and entities yield intelligibility. But since they cite Anscombe (1975) as the source of ideas concerning activities and causality, it is not unreasonable to suppose that they believe that the Anscombian “thick causal terms” that MDC call activities—such as binding, bonding, and folding—are intuitively more intelligible to us than the “thin” and relatively uninformative concept of causality itself (Bogen 2008). So a reasonable interpretation of what they mean is that the understanding of activity-terms and entity-terms gives scientists a grasp of what is happening, in a way in which using very abstract concepts such as causality—or laws—does not.

On this view, for example, we manage to explain a phenomenon such as protein synthesis in terms of the chemical binding and bonding already known to the field, thus yielding a grasp of what MDC call the productive continuity of the whole mechanism. However, there are questions to ask about how and why the complete explanation yields understanding. In extensive work on mechanism discovery, Bechtel, Craver, and Darden have all produced work that has implications for this issue, but do not synthesize it to address intelligibility directly (Bechtel and Richardson 2010; Bechtel and Abrahamsen 2010; Craver 2007; Darden 2002, 2006). I contribute to that broader project, but using the contextual theory of scientific understanding.

In Sect. 3, I will show, first, that what counts as bottom-out entities and activities for supernovae varies widely; secondly, that what counts as productive continuity, or as a gap, in a mechanistic explanation in astrophysics also varies, although we can identify some recognisable cases; and, thirdly, that mechanistic explanations (alongside many other things) are nevertheless needed to give an intelligible explanation of supernovae.

2.2 Understanding Theories

Before that, I need to examine the contextual theory of scientific understanding, which arose in a different literature, within a movement rejecting the idea that explanation can be fully characterised as a two-place relationship between an explanation and the world (see de Regt et al. 2009). De Regt and Dieks (2005) primarily draw cases from physics, and, in that context, one reason the question of understanding arose is because of the apparent unintelligibility of theories such as quantum mechanics, which seem to require a counterintuitive and mind-boggling effort to grasp.

According to the contextual theory of scientific understanding, intelligibility is a value that scientists in a particular community at a particular time project onto theories that they can use (de Regt and Dieks 2005; de Regt 2009a, 2014, 2017). In defending this account, de Regt and Dieks (2005) first dissociate scientific understanding from the feeling or ‘sense’ of understanding rejected by many philosophers of science, notably Trout (2002), as being too purely subjective to be an aim of science. For example, de Regt writes: “I do agree with Trout that the phenomenology of understanding has no epistemic function: the experience of a feeling of understanding (an ‘aha’ experience) is neither necessary nor sufficient for scientific understanding of a phenomenon” (de Regt 2009a, 25). I suspect that a substantive account of a phenomenology of understanding might go considerably beyond the relatively simple “sense of understanding” (Trout 2002) which de Regt and Dieks are rejecting, and so have some very interesting epistemic functions.Footnote 6 However, I will focus on the positive claim of the contextual theory, with which I agree: that usability is more substantive than a purely subjective and individual feeling of understanding.Footnote 7

De Regt and Dieks argue that scientists must be able to use theories to construct and evaluate explanations in a way that is essential to the epistemic aims of science, and cannot be supposed to be a mere pragmatic afterthought to the ‘real explanation’. For de Regt and Dieks, understanding a phenomenon requires having the skills to use the theory of that phenomenon. Theories have virtues, and they mention as examples visualisability and simplicity (de Regt and Dieks 2005, 142), but these virtues are relative to the skills that scientists have to use the theories. Theories are regarded as intelligible when they have a (or the) cluster of virtues that allows scientists to use them. These skills cannot be acquired purely from textbooks, but need practice, because skills cannot—or cannot all—be translated into an explicit set of rules. Since these skills are essential to the construction and evaluation of explanations, which are essential to the epistemic aims of science, epistemic and pragmatic aspects of explanation are not separable in practice, in spite of being analytically distinguishable. So an account of the epistemic aims of science cannot ignore understanding. De Regt and Dieks accept the implication that theories cannot be uniquely dependent on a direct evidential relation to the phenomena they explain. Note that if they are right, then we have not really given a full account of explanation until we address the aspects of usability that yield understanding. This means that an account of what it is for mechanistic explanations to be intelligible is a more urgent part of an account of mechanistic explanation than might have initially appeared.

So on this view the intelligibility of a theory is contextual because it is relative to the skills of a community of scientists at a time, in a way that goes beyond an individual, subjective feeling of understanding. De Regt and Dieks address a possible wish for objective features of explanation by suggesting that we could still more-or-less-objectively test for understanding. They suggest one test or indicator of usability of a theory, and so of its intelligibility, is scientists’ ability to recognise qualitatively characteristic consequences of the theory without performing exact calculations. They call this “CIT” (de Regt and Dieks 2005), and their discussion makes it clear that they are trying to use CIT to capture the case where, in using a theory, scientists progress from being able to use a theory to make accurate calculations that don’t make a lot of sense to them, to coming to have a ‘feel’ for the theory. According to this view, intelligibility is relative to a scientific community at a time, but is crucial to scientific explanation, as it is important to the fruitfulness of scientific theories. De Regt writes more recently: “So intelligibility is a measure of the fruitfulness of a theory, but it is a contextual measure: a theory can be fruitful for scientists in one context and less so for scientists in other contexts” (de Regt 2014, 381).

Since the contextual theory offers an account of understanding phenomena with intelligible theories, with one test being the shift from quantitative use to qualitative understanding, the application to the intelligibility of mechanistic explanations and so understanding the phenomena they explain is not yet clear. Nevertheless there is much of interest here. As a route to synthesising the two approaches, it is important that de Regt later makes it even clearer that he takes CIT to be one indicator among others: “There may be different ways to test whether a theory is intelligible for scientists, and not all of them may be applicable in all cases or for all disciplines” (de Regt 2009a, 32). He suggests a theory might also be considered intelligible if it can be used to build models, and his current work focuses on this.

I shall not attempt to provide tests of intelligibility, objective or not. What I will be focusing on is the importance of scientists’ abilities to use mechanistic explanations to the epistemic aims of science. However, in Sect. 3 we will see that even understanding a theory might require very much more than CIT, ranging over building models, mechanistic explanations, and application to a case (SN1987A) to gain empirical evidence.

2.3 Understanding Phenomena with Intelligible Mechanistic Explanations

I will here finish the theoretical merging of the contextual theory of scientific understanding with work on mechanistic explanation to develop and defend the view that:

A phenomenon is mechanistically understood when scientists have an intelligible mechanistic explanation for the phenomenon; i.e. a mechanistic explanation that they can use.Footnote 8

I argue here that these two approaches can go together, and that their combination raises some interesting questions. Mechanistic explanations are usually directly of a phenomenon, where in places the contextual theory offers an account of understanding a phenomenon, using a theory (de Regt 2009a, 251). Laws and theories are not usually given the same kind of importance in mechanistic explanation, but we will see their importance in understanding supernovae.

Notice that for MDC, if a mechanistic explanation is intelligible, the phenomenon it explains is also thereby intelligible. De Regt and Dieks (2005) focus on the intelligibility of theories, as the way in which we understand phenomena. However, de Regt (2009b), having made a careful distinction, then writes: “Understanding in the sense of UP (having an appropriate explanation of the phenomenon) is an epistemic aim of science, but this aim can be achieved only by means of pragmatic understanding UT (the ability to use the relevant theory)” (de Regt 2009b, 591–592). I agree with both MDC and de Regt that we can talk of understanding phenomena, and of understanding theory, particularly when we appreciate the full complexity of scientific practice, which will emerge in the case of SN1987A in Sect. 3. However, to keep things clear, I will write of understanding phenomena using intelligible mechanistic explanations.

In spite of considerable surface differences, the two approaches can be aligned. In accord with the contextual theory, the usability of a mechanistic explanation goes beyond a simple feeling of a “sense of understanding”. On this view, we can think of mechanistic explanations as having virtues. However, the intelligibility of a mechanistic explanation is not intrinsic to it, but projected onto it by the community of scientists who can use it, for the purposes of their practice. This use is essential for the success of their practice, their epistemic aims, but it is dependent on the skills of scientists.

A significant difference between laws explanation and mechanistic explanation is the importance of parts. Bechtel and Richardson (2010) suggested very early in the new mechanist literature that the mark of mechanistic explanation is the heuristic strategies of decomposition and localisation, which concern parts. However, they now offer other heuristics, so I suggest we turn instead to the simpler: “Mechanistic explanation is inherently componential” (Craver 2007, 131). Note that all major mechanists recognise both what I shall call entities (the parts themselves) and activities (what the parts do), following Machamer et al. (2000) and Illari and Williamson (2012). I have explained in Sect. 2.1 that MDC take these to be crucial to intelligibility. But there is enormous variation in entities and activities studied in current work, and indeed parts have rarely been rigidly defined and restricted even in the history of mechanism, which is lengthy and rich. (Nicholson 2012, 454 also notes this.)

Thinkers such as Descartes, often considered a paradigmatically reductive mechanist, actually have more complex views than they are often presented as having. Descartes often writes about parts in his explanations, or what the mechanisms literature now calls entities, but the parts Descartes uses in his explanations are not all like little particles. Some of the most famous parts Descartes writes about are the fluid “animal spirits” of his account of reflexes (Roux 2017, 64). Barnaby Hutchins argues that, while the received view of Descartes’s mechanicism comes from his physics, such as his treatment of light, Descartes’s physiology is notably different. Hutchins studies Descartes’s explanation of the heartbeat in depth, and his explanations of muscular movement and nutrition more briefly, to show that his explanations of physiological phenomena look far more systemic and multi-level than traditionally reductive (Hutchins 2015, 65). So we should not be surprised to find great variation in kinds of mechanistic explanation, and in the kinds of entities and activities they appeal to.

It is true that grasping a mechanistic explanation, in the sense of knowing something about entities, activities and their organization by which the phenomenon is produced, may generate a feeling of understanding. But applying the contextual theory generates further questions. It suggests we turn our attention to examining the practices of a community of scientists using a mechanistic explanation, to assess whether they can use it well for the purposes of the kind of community-based practice suggested by de Regt and Dieks.

We should expect that it is within the context of the skills involved in that kind of practice that a community will say a mechanistic explanation has virtues, and value it as intelligible. Laws and theories skate over parts, and we can see why CIT, considering mathematically expressed laws, prioritised the skill of manipulation of equations. Mechanistic explanations may share, presumably, very general virtues of explanations, such as the visualisability and simplicity mentioned by de Regt and Dieks (2005). For distinctively mechanistic virtues, we need to consider the role of identifying parts and what they can do, and forms of organization, in the uses to which mechanistic explanations are put.

Specifically for supernovae, I will show that one of the uses for which mechanistic explanations are recruited is building simulations of the phenomenon that help us understand it, particularly in application to SN1987A. Information about mechanisms, rather than just laws, is crucial for building and using such simulations, because attention to parts is needed. A covering law explanation in a broadly Hempelian style is not sufficient; instead we see a great deal of reasoning about entities such as the star core, and activities, particularly the ‘bounce’.

Note that both the approach of MDC to intelligibility and the contextual theory of understanding indicate that pragmatics will be crucial. Consider:

What is taken to be intelligible (and the different ways of making things intelligible) changes over time as different fields within science bottom out their descriptions of mechanisms in different entities and activities that are taken as, or have come to be, unproblematic. This suggests quite plausibly that intelligibility is historically constituted and disciplinarily relative (which is nonetheless consistent with there being universal general characteristics of intelligibility). (Machamer et al. 2000, 22)

MDC seem here to be open to the relativity to a discipline at a time embedded in the contextual theory.Footnote 9

This will be particularly clear in mechanistic explanations using bottom-out entities and activities. Consider:

Bottoming out is relative: Different types of entities and activities are where a given field stops when constructing mechanisms. The explanation comes to an end, and description of lower-level mechanisms would be irrelevant to their interests. Also, scientific training is often concentrated at or around certain levels of mechanisms. (Machamer et al. 2000, 13)

This last idea is developed in some detail in Glennan (2017).

I will go on now to develop this account with respect to supernovae, particularly SN1987A, a type II supernova, in Sect. 3. I will look at bottom-out entities and activities, and critically examine MDC’s claims about gaps and productive continuity.

3 Understanding Supernovae

The exact nature of stars, and why some of them explode, has been a major concern for theoretical development, modelling and the search for observational data in astrophysics. Astrophysicists seek to identify the different ‘mechanisms of supernovae’. As argued elsewhere, and further explained below, these explanations broadly fit the view of what mechanisms are that has been developed in the mechanisms literature (Illari and Williamson 2012). In brief, mechanisms of supernovae explain the observed phenomena of ‘new stars’ or brighter stars, in terms of the activities of entities, such as accumulation of iron at the star core, their organisation, such as passing the Chandrasekhar limit, and further activities such as collapse and bounce. To develop my view of mechanistic understanding, I will now investigate how mechanistic explanations of supernovae are used, allowing us to understand supernovae. This case is novel as it is unusual in both of the literatures or views that I discuss: the mechanisms literature doesn’t usually look to physics; while the contextual theory has so far focused on theory.

Within this argument, use will be important in two places. First, I will show that understanding supernovae is a remarkably complex affair. Stellar astrophysics seeks to explain multiple things (including at least the famous “H–R diagram” I will come to in a moment, main sequence burning, different types of supernovae, and the peculiarities of an individual supernova), and multiple things are used (including at least physical theories, stellar structure models, and mechanisms of supernovae). So I will show quite generally that the contextual theory needs to expand to consider the many things that are used, and the many different uses to which they are put. And while laws are indeed used to construct explanations, yielding understanding of phenomena, in this kind of case they are used in alliance with many other things, including models and mechanistic explanations.

Second, within this surrounding complex architecture, I will examine how mechanistic explanations are used to generate understanding of supernovae, and pull out features more distinctive of mechanistic explanations. We will examine the variety of bottom-out entities and activities for supernovae, and home in on when a mechanistic explanation is regarded as involving a problem or anomaly: the neutrino bounce. I will show that this isn’t a ‘gap’ in any simple sense, and productive continuity is also difficult to see. Nevertheless usable entities, activities and organisation are needed for an intelligible mechanistic explanation of supernovae. Further, successful use of mechanistic explanations of supernovae, i.e. understanding supernovae, requires the surrounding architecture of laws and models and the various practices which use them. We will also see that understanding supernovae is an immensely creative activity, in the sense developed by Bailer-Jones (1999) with respect to extragalactic radio sources.

Within this surrounding architecture, mechanistic explanation has a special place in understanding supernovae. Nevertheless, I will argue that there is no serious question of mechanistic explanation versus laws explanation for understanding supernovae. The two are deeply interdependent, not in competition. Physical law is vital (some but not all of the laws used are candidates for ‘fundamental’ physical law, if there is such a thing), as is its application to particular entities and their activities and organisation. We would have no understanding of stars without fundamental theory—and no fundamental theory, much less intelligible fundamental theory, without cases like this that require a complex architecture of modelling, simulation, and mechanistic explanations involving entities, activities and their organisation.

3.1 Stellar Structure Models

We must begin the story of supernovae with our understanding of stars. This is not dependent on a particular physical theory of stars. It depends, rather, on multiple important theories in physics, including at least general relativity, quantum field theory, thermodynamics, and theories of light, or more generally radiative transfer. Chemistry is also important to stellar evolution.Footnote 10 In so far as there is a ‘theory’ particular to stars, this consists in stellar structure models. These each consist of a cluster of equations describing the crucial elements of stars, and are used to explain their various properties, including the evolution of their burning. Crudely, each equation is designed to model a particular factor affecting stars, perhaps, in the simplest models, one governing dynamical or structural changes, one governing thermal changes, and one the nuclear processes (all of which characteristically operate on different timescales and draw on different background theories), so that when the equations are solved simultaneously, or simulated, the interactions between these factors can be effectively seen. Considerable simplifying assumptions are typically used, such as that stars are isolated; that they have a uniform initial composition (70% hydrogen, 25–30% helium, and trace amounts of heavier elements); and that they are spherically symmetric. Note that this work is needed to get theories to successfully describe a particular kind of entity.
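To give a sense of what such a ‘cluster of equations’ can look like, here is one common textbook form for a spherically symmetric star in equilibrium. It is offered only as an illustrative sketch. The symbols are assumptions introduced here and are not drawn from the sources discussed: \(r\) is radius, \(m\) the mass enclosed within \(r\), \(\rho\) density, \(P\) pressure, \(L\) luminosity, \(T\) temperature, \(\varepsilon\) the energy generation rate per unit mass, \(\kappa\) the opacity, \(a\) the radiation constant, \(G\) the gravitational constant, and \(c\) the speed of light. The last equation assumes energy is transported by radiation alone.

\[
\begin{aligned}
\frac{dm}{dr} &= 4\pi r^{2}\rho, \\
\frac{dP}{dr} &= -\frac{G m \rho}{r^{2}}, \\
\frac{dL}{dr} &= 4\pi r^{2}\rho\,\varepsilon, \\
\frac{dT}{dr} &= -\frac{3\kappa\rho L}{16\pi a c\, r^{2} T^{3}}.
\end{aligned}
\]

Roughly, the first two equations govern structure, the third the nuclear energy source, and the fourth the thermal behaviour, which is one way of seeing why the factors must be solved together.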

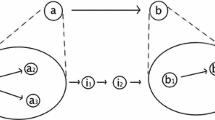

A crucial and lengthy activity of that entity is main sequence burning. Understanding that has been a major achievement of the use of physical theory to build stellar structure models. A striking example of explanatory success is explaining features of the Hertzsprung–Russell (H–R) diagram, which summarises observations of stars. If a cluster of observed stars are plotted on a diagram where one axis is decreasing surface temperature, and the other axis is increasing luminosity, this produces a characteristic scattering of the stars.Footnote 11 The idea in a nutshell is that, first, stellar structure models explain why stars in main sequence burning are located on the central diagonal according to their initial mass, and that is why most of the stars we observe lie on that; secondly, stellar structure models alongside the mechanisms of supernovae explain the features of some stars that lie off that line, and why there are so few of them, as supernovae happen much faster than main sequence burning; thirdly, both of these explain that, although there are probably many white dwarfs, they should be very difficult to detect, so few will show on the diagram. Other massive stars do also show (Fig. 1). The H–R diagram is a highly processed summary of empirical evidence concerning stars, but, nevertheless, one major unifying use of stellar structure models is to explain this diagram, which thereby offers significant support for stellar structure models.

This, then, is a brief summary of how stellar astrophysics uses physical theory to generate a cluster of models which helps explain what stars are, and their key activity of main sequence burning.

3.2 Mechanisms of Supernovae

Stellar structure models are also used to work out what will happen at the end of the life of a star, after main sequence burning exhausts the nuclear fuel which has been the source of energy supporting the star against gravitational collapse.

There are two major types of mechanism of supernovae now generally accepted: type I and type II (with subspecies type Ia, Ib and Ic; see also Murdin 1993). However, we will begin with type II, also called ‘core collapse’ or ‘iron disintegration’ supernovae. This only happens to high mass stars, much bigger than our sun. In brief, the iron cores of massive stars which have exhausted their nuclear fuel become too large to withstand gravity and collapse, releasing an enormous amount of gravitational potential energy, which is carried away, primarily by neutrinos, igniting nuclear burning in the envelope which contains the rest of the mass of the star.Footnote 12

During their main sequence life, stars burn hydrogen, and their composition is approximately homogenous: 70% hydrogen, most of the rest helium with small amounts of heavier elements. The centre of the star is hotter and denser due to gravity, so hydrogen burning is more intense in the core. As hydrogen nears exhaustion, things change and the core becomes increasingly differentiated from the rest of the star. A hydrogen-exhausted helium core grows gradually in mass, as hydrogen burning continues in a shell around that core. The helium core contracts, while the envelope (the rest of the mass of the star) expands in response to maintain energy equilibrium. On contraction, the core heats up, which triggers the next round of burning, and this process repeats through helium, then carbon, oxygen and silicon burning, until eventually the core of the star is iron, gradually increasing in mass.

Iron can no longer undergo nuclear burning to release energy that can support the core against gravity, because making iron into a heavier element takes up energy rather than releasing it. Ultimately an iron core that can no longer undergo nuclear burning can only be supported by what is called “electron degeneracy pressure”, and modelling this is where quantum mechanics becomes essential. Electrons are fermions and so must obey Pauli’s exclusion principle, which means that only two electrons (one spin up, one spin down) can occupy the lowest energy state, and only two the next, and so on, so that high lying energy states need to be filled. Some electrons must therefore be quite high energy, and this creates pressure, the electron degeneracy pressure which supports the star core. However, in 1931 Chandrasekhar calculated the upper limit to the mass of stars that can be supported by electron degeneracy pressure. It is known as the Chandrasekhar limit and is about \(1.46\,M_{\odot}\), i.e. 1.46 solar masses, although collapse can begin sooner, depending on the star’s rotation.
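As a rough illustration of where a figure like 1.46 solar masses can come from, the following minimal sketch uses a standard textbook approximation, \(M_{\mathrm{Ch}} \approx 5.836/\mu_e^{2}\) solar masses, where \(\mu_e\) is the mean number of nucleons per electron. The function name, the coefficient, and the choice of \(\mu_e\) are assumptions introduced here for illustration, and the approximation ignores rotation and other corrections.

```python
def chandrasekhar_mass_solar(mu_e: float) -> float:
    """Approximate Chandrasekhar mass in solar masses.

    Assumes the textbook scaling M_Ch ~ 5.836 / mu_e**2, where mu_e is the
    mean number of nucleons per electron (an illustrative simplification).
    """
    if mu_e <= 0:
        raise ValueError("mu_e must be positive")
    return 5.836 / mu_e ** 2


if __name__ == "__main__":
    # mu_e = 2 corresponds to equal numbers of protons and neutrons.
    print(f"mu_e = 2.0: {chandrasekhar_mass_solar(2.0):.2f} solar masses")
```

With \(\mu_e = 2\) this gives roughly 1.46 solar masses. A more neutron-rich composition has a larger \(\mu_e\) and so a lower limit, which is one reason quoted values vary.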

When the iron core passes the Chandrasekhar limit, it collapses rapidly, through various phases. Ultimately the iron nuclei break back into protons and neutrons, and the free protons capture free electrons and turn into neutrons, each releasing a neutrino. As long as the total mass is still below the Tolman-Oppenheimer-Volkoff limit, neutron degeneracy pressure can halt the collapse, forming a neutron star which is basically one huge nucleus, about 20 km or so in diameter. The infalling envelope of the star bounces off this incredibly dense matter, and the released neutrinos blast out of the core during the bounce, the whole carrying off a vast amount of energy, and also blasting away much of the envelope, igniting nuclear burning there. If the star is symmetrical, this explosion should be symmetrical, but we will see shortly, regarding SN1987A, that this is not always so.
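To indicate the scale of the energy involved, here is a back-of-the-envelope sketch. It treats the collapsed core as a uniform-density sphere, whose gravitational binding energy is \(\tfrac{3}{5}GM^{2}/R\). The uniform-density assumption, the function name, and the sample values (1.4 solar masses, 10 km radius, matching a diameter of about 20 km) are illustrative assumptions, not figures from the sources discussed.

```python
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30   # solar mass, kg


def binding_energy_joules(mass_solar: float, radius_km: float) -> float:
    """Gravitational binding energy of a uniform-density sphere, in joules.

    Uses E = (3/5) * G * M**2 / R. Real neutron stars are not uniform,
    so this is an order-of-magnitude estimate only.
    """
    mass_kg = mass_solar * M_SUN
    radius_m = radius_km * 1e3
    return 0.6 * G * mass_kg ** 2 / radius_m


if __name__ == "__main__":
    energy = binding_energy_joules(1.4, 10.0)
    print(f"Approximate energy released: {energy:.1e} J")
```

Under these assumptions the estimate comes out at a few times \(10^{46}\) joules, which is the kind of energy budget that any proposed mechanism has to account for.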

With the mechanism for type II supernovae agreed, it is much easier to explain type Ia supernovae. Smaller stars such as our sun, which are much less massive, will not undergo core collapse as the entire star has less mass than the Chandrasekhar limit. Instead, such stars collapse to form white dwarfs after the end of nuclear burning, but these remain small enough to be supported against gravitational collapse by electron degeneracy pressure while they still contain some usable nuclear fuel, such as carbon and oxygen, and the star does not collapse far enough to heat up enough to ignite their burning and so exhaust available nuclear fuel. Unlike our sun, many stars are not isolated, but exist in binary star systems, which characteristically involve the two stars exchanging mass. Type Ia supernovae result when a white dwarf remnant is tipped over the Chandrasekhar limit by accreting material from a binary companion star. Collapse begins while the star still contains nuclear material, significantly heating the core, and thereby triggering runaway nuclear reactions in the remaining nuclear material which blow the star completely apart, leaving no remnant.

These two types of supernovae are different kinds of explosions of star cores with different compositions, depending crucially on the Chandrasekhar mass. One major empirical reason to support these two separate mechanisms is that there are two distinctively different kinds of ‘light curves’ generated when we observe and plot light and other radiation received on Earth from supernovae: intensity of light emitted over time and elements found in the spectra. Indeed, the distinction between type Ia and type II supernovae began as a purely phenomenal one, mostly in terms of the presence of hydrogen lines in the spectra of type II supernovae, but not of type I. But this distinction is now agreed to match these two kinds of mechanisms. This agreement is so established that type Ia supernovae are now used as ‘standard candles’ to calculate such things as the distances to galaxies, and ultimately the speed of expansion of the universe. Since type Ia supernovae occur at approximately the same mass as each other, they should have approximately the same luminosity at source, so a dimmer type Ia supernova must be further away from us. Mechanisms of supernovae have become a vital tool in astrophysics.
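The standard-candle reasoning can be sketched with the distance modulus relation, \(m - M = 5\log_{10}(d) - 5\), where \(m\) is apparent magnitude, \(M\) absolute magnitude, and \(d\) distance in parsecs. The peak absolute magnitude used below is an assumed representative value chosen for illustration, and the sketch ignores dust extinction and cosmological corrections that matter in practice.

```python
import math

# Assumed representative peak absolute magnitude for a type Ia supernova;
# an illustrative value, not taken from the sources discussed.
ASSUMED_PEAK_ABS_MAG = -19.3


def distance_parsecs(apparent_mag: float,
                     absolute_mag: float = ASSUMED_PEAK_ABS_MAG) -> float:
    """Distance in parsecs implied by the distance modulus m - M."""
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)


def distance_modulus(distance_pc: float) -> float:
    """Distance modulus m - M for a source at the given distance in parsecs."""
    return 5.0 * math.log10(distance_pc) - 5.0


if __name__ == "__main__":
    # A larger apparent magnitude means a dimmer source, hence a larger distance.
    for m in (15.0, 20.0):
        print(f"m = {m}: about {distance_parsecs(m):.2e} pc")
```

Because the absolute magnitude is taken to be roughly the same for every type Ia event, the only free quantity is the distance, which is why a dimmer event is inferred to be further away.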

Notice three things about this understanding of supernovae. First, it is generated by focusing on entities and activities and their organisation, most obviously the star core (entity), the ‘collapse’ and ‘bounce’ (activities) and the critical place of the Chandrasekhar limit (organisation). Second, it is deeply dependent on the understanding gained by building stellar structure models. We begin to model supernovae by realising that various assumptions that hold during main sequence burning will no longer hold. Most notably, the star does not remain approximately homogeneous, nor does the proportion of gases in the star continue to remain roughly constant. Instead, heavier elements begin to accrue at the star core. Relaxing the assumptions of homogeneity and allowing the proportion of elements to change allows for the simulation of explosions, as we will see in Sect. 3.3. Finally, our understanding of supernovae draws on physical theory to solve problems, to allow us to model entities, and calculate possible activities—in ways that can then be built into simulations. This is clearly seen in the use of Pauli’s exclusion principle to explain electron degeneracy pressure, and help to calculate the Chandrasekhar limit. These activities are organised and coordinated, but this does not mean that they are not creative. Bailer-Jones shows that this is possible by showing how visualisations were used to put sub-models back together in a coordinated but creative way to model extended extragalactic radio sources (Bailer-Jones 1999, 384ff.).

It is generally agreed in the current mechanisms literature that a mechanism’s activities and entities are not something decided in advance of investigation, but are chosen in the process of mechanism discovery. There is therefore no bar to a mechanistic explanation including such wildly divergent entities as ‘electrons’, ‘a Fermi sea’, and ‘a star’s core’; and such imaginative organisation and activities as ‘passing the Chandrasekhar limit’ and ‘collapsing’. It is a slightly different thing how entities and activities are chosen that require no further explanation. Recall from Sect. 2 that MDC call such entities and activities “bottom-out”, which means they are regarded as unproblematic by a particular field at a particular time. So long as the explanations of supernovae bottom out in entities and activities that are regarded by astrophysicists as unproblematic, then the mechanistic explanation will also be regarded by astrophysicists as intelligible.

In the discussion above we can see this in how electron degeneracy pressure is important in answering the mystery of how a star core is supported against gravity when it can no longer be supported by nuclear burning. Pauli’s exclusion principle is regarded as unproblematic in astrophysics, although that is not true in other areas of physics. There are still complications, however, because what is regarded as unproblematic changes over time. We will see in Sect. 3.3 when something is problematic, how that changes, and how that is related to ‘gaps’ in mechanistic explanations.

Recall that I said at the beginning of Sect. 3 that use would prove to be important to understanding supernovae in two main ways. These should now have begun to become clearer as the full complexity of our understanding of supernovae is emerging. The ability of scientists to generate mechanisms of supernovae is dependent on physical theory, and stellar structure models, which are used to generate mechanistic explanations of different types of supernovae consistent with two distinct empirically observed phenomena. The mechanistic explanations of both major types of supernovae remain embedded in this context, allowing among other things the use of type Ia supernovae as standard candles. This shows that mechanistic explanations of supernovae depend at least on our ability to use fundamental physical theory and to build and use stellar structure models—modifying their assumptions for the end of a star’s life. Laws explanation and mechanistic explanation are not in competition, but interdependent and embedded also in other scientific practices. What we know about the entities and activities of stars is used to adapt models, make simulations, and work out which laws to apply and how.

This means that the first place in which use is important is in the myriad uses of myriad scientific creations to understand supernovae. The contextual theory of scientific understanding needs to expand, even in its account of how laws are used to understand phenomena, to allow that laws are used alongside many other things like models and mechanistic explanations in this case and cases like it. Happily it seems the contextual theory has the resources to do this. I began that setup in Sects. 2.2 and 2.3, but here we can see that the main claims of the contextual theory seem to be right: that use is important, and that these uses are essential to the epistemic aims of science, rather than a mere afterthought.

The second place in which use is important shows when we home in on how the mechanistic explanations are used in understanding supernovae. This case is not really a case where there are different explanations of supernovae, and the mechanistic explanation is distinct in kind from the others. Instead, it is not clear this understanding could be achieved any other way; mechanistic explanation is a part, but an important part, of the wider architecture of laws and stellar structure models used to understand stars. General physical theory is important, but cannot be used alone. The process of getting it to apply to the special entity ‘star’ is itself an immensely creative, empirically informed endeavour. We have to turn to trying to model the parts, in accord with such empirical data as we can gather. Mechanistic explanation is essential to the process. We will see shortly an instance of such a mechanistic explanation achieving success in deepening our understanding of type II supernovae.

Within this architecture of laws, models, and mechanisms, I suggest that these combinations of uses constitute understanding of various phenomena, rather than just identifying when understanding occurs, or offering a test for it. Unlike a purely subjective feeling of understanding of the kind criticised by Trout, these uses can explain why we value understanding, because they exist when an explanation is fruitful, as de Regt says. These uses may be accompanied by phenomenology, but any such phenomenology is not the whole story. Further, it is becoming increasingly clear how deeply intertwined explanation and understanding are. In the kinds of cases that de Regt and Dieks originally consider, it is possible for scientists to know a theory, and only later come to develop the skills to use it, and so have understanding. But having an explanation and using it are far harder to separate in a practice such as stellar astrophysics. Analytically we can separate knowing a mechanistic explanation and being able to use it, but in the practice itself these are very difficult to separate. Using the explanation is often part of how we get it, and it is always involved in developing and improving an existing explanation.

The next section will examine how we take a general model of a stable entity and build a simulation of a particular explosion, thereby also gaining empirical evidence both for a crucial piece of the mechanism of type II supernovae, and for particle physics.

3.3 Supernova SN1987A

For various reasons, we have still more work to do to understand an individual supernova, and I will show that this work is both a vital use of the mechanism of type II supernovae, and crucial to letting empirical evidence impact on the surrounding architecture of laws and stellar structure models that I have described.

SN1987A is one of the most important type II supernovae ever observed. It was the core collapse of a massive star (Sanduleak-69 202, approximately \(18\,M_{\odot}\)) in the Large Magellanic Cloud. It was spotted in 1987, and, while not in our galaxy, the Large Magellanic Cloud is an unobscured close companion galaxy, making SN1987A at its time one of the closest supernovae in several hundred years. It was also the first supernova to be observed with many of our modern capabilities, including neutrino detectors at Kamiokande and IMB, and later space telescopes like Hubble and Chandra. It was also the first supernova where the progenitor star was already known.Footnote 13 It also had some fascinating peculiarities that manifested in a non-standard light-curve, and a distinctive asymmetric squashed-figure-8 shape, and these are still being investigated.

SN1987A is particularly illuminating for our purposes because its most immediate impact was to generate for a time considerable agreement about the mechanism of type II supernovae. The neutrino blast which had been posited as the major means of carrying away the energy of collapse was very controversial, constituting what MDC would probably recognise as a ‘gap’ in the mechanism, or a failure of ‘productive continuity’, and therefore intelligibility. For the first time scientists were able to detect two bursts of neutrinos from SN1987A, one each by Kamiokande and IMB (a third burst was reported at the time but later withdrawn). This generated significant consensus in the astrophysics community about the role of a neutrino burst. Further, particle physics met astrophysics as the first detection of neutrinos from such a source energised a great deal of work on both those bursts and more theoretical understanding of neutrinos in general (Walker 1987).

SN1987A also involved other mysteries that are fascinating for our purposes. That the supernova would be of type II was not a surprise: as Sanduleak-69 202 is estimated to have begun its life at approximately \(20\,M_{\odot}\), its core was very likely to pass the Chandrasekhar limit at some point, in spite of an estimated \(2\,M_{\odot}\) lost due to stellar winds. However, there was significant debate, particularly from 1987 to 1989, about which was the progenitor star (Fabian et al. 1987; Joss et al. 1988; Podsiadlowski and Joss 1989; Chevalier 1992). Sanduleak-69 202 was a blue supergiant, and the community was surprised that it would be ready to explode. Not until the brightness of the supernova dimmed enough for it to be seen that Sanduleak-69 202 had gone was there agreement that it was indeed the progenitor. It is thought that SN1987A being the explosion of a blue supergiant was the main reason for the second mystery, its non-standard light-curve (Chevalier 1992).

The final feature of SN1987A I will discuss is its distinctive squashed-figure-8 appearance, composed of a bright central ring and two larger, dimmer rings.Footnote 14 To us on Earth, it appeared as a bright centre, surrounded by an even brighter central ring (Fig. 2). This is common with a supernova, with the centre being thought to be the supernova remnant lit by radioactive decay, and the ring indicating nuclear burning in the envelope ignited by the blast. But this ring of SN1987A showed significant asymmetries, which have been understood using simulations from early years (Papaliolios et al. 1989). SN1987A also had two much larger, fainter rings, overlapping so as to look from Earth like a squashed-up figure 8. These are more mysterious, and were thought to indicate the ends of two cones expanding violently in opposite directions from the central explosion, showing that there was asymmetry in the explosion. This has been understood in many ways, mostly by running supernova simulations including significant asymmetric effects (Sato et al. 1996). More recently, the rings have been suggested to be the result of outflow from the progenitor while still in its red supergiant phase (de Grijs 2015).

In these three examples, the mechanism of type II supernovae was used, but adapted to account for the peculiarities of SN1987A. These uses constitute understanding of the more general mechanism, while in turn further uses in the astrophysics of supernovae show understanding of the particular event SN1987A. So here the mechanism of type II supernovae as so far known was the primary thing used. It is clear that a general understanding of a kind of supernova, which was all we had before spotting SN1987A, was applied to understand a particular phenomenon—a case of type II supernovae that was distinctive in various ways. But the general mechanism of type II supernovae was not used in a vacuum. First, it was supplemented by filling in a gap. Second, against the background of laws and stellar structure models, it was used alongside other things, specifically simulations, to understand why SN1987A appears to us as it does.

This required modelling what was peculiar about the particular entities and activities of SN1987A. So simulations were used to explore what kinds of asymmetries in a star might lead to the kind of asymmetric explosion we seemed to see, or whether outflow was more likely. Speculation about what kind of star might have led to such an explosion, with the right kind of light curve, was also important until it was clear that Sanduleak-69 202 had gone. These uses were important to help us use the crucial empirical evidence that we got from SN1987A.

This was particularly important to allow the consensus about the mechanism of type II supernovae. They had been regarded as a problem because there was no general agreement prior to 1987 about a crucial activity. We could calculate the enormous amount of energy which would be released on core collapse, but there was no consensus about where it went. Missing energy seems to be a clear failure of productive continuity. It is also an important gap in a mechanism, when a crucial activity is unknown, even mysterious. Neutrinos are extremely difficult to detect, and prior to 1987 a neutrino burst was one posited means of carrying away the energy. But once two different neutrino detectors reported a neutrino burst from a visible supernova, the community rapidly converged on a neutrino burst during the bounce as the missing activity that was needed. The gap that had been regarded as problematic was no more.

It should become clear here that mechanistic explanation, and understanding phenomena using a mechanistic explanation, are deeply intertwined. Recall that MDC say that mechanism descriptions show how possibly, how plausibly, or how actually things work. They also say that intelligibility doesn’t require a how-actually explanation, but merely an elucidative relationship (Machamer et al. 2000, 21). Before 1987, type II supernovae had some possible explanations. However, in achieving consensus, a gap in the explanation was filled in, and intelligibility of the mechanism was increased. So, as I noted in Sect. 2.3, in cases like this we don’t get the explanation, and afterwards work on using it and understanding the phenomenon. Use of the explanation is already ongoing in the practice. So here, we improve our explanation, and improve intelligibility and understanding, together.

This is important, but one of the lessons of SN1987A is that it is very particular. What a community regards as unproblematic is very dependent on the state of that community at that time—and very particularly on what else they know and what tools they have. I am not giving an account of explanation, but of understanding, and I take the community being able to use the explanation to be constitutive of understanding. I shall continue to develop this last idea in the rest of this section.

The community clearly regarded gaps, or breaks in productive continuity of this kind, to be a problem, and indeed to impede use. After 1987 they had a preferred mechanism of type II supernovae that they could use. But note that I chose this as a clear case. Much of the other work that was done on SN1987A was very exploratory, and could have led to very different results. At the time, for example, the non-standard light-curve might have proven to be of a hitherto unseen type of supernovae. Therefore, much of the empirical data could have turned out to indicate different gaps or problems of productive continuity.

The lesson for what the virtues of mechanistic explanations are is also one of particularity. Recall that according to the contextual theory I have been developing for mechanistic explanations, virtues are projected by scientists onto mechanistic explanations they can use. We can see here some general virtues that have been advanced of scientific work in general, including coherence with other things we know, and fit with empirical data.

However, these virtues show here their very particular facets. Here, coherence with what else we know cannot easily be formalised.Footnote 15 It involves the creative embedding in the whole architecture I have described above, where first physical law is used to make stellar structure models, and then these are adapted to generate mechanisms of supernovae. Fit with empirical data is extremely particular, and in some cases such as SN1987A this needs customised modelling and simulation. Far from being merely ad hoc and overfitted, models of individual cases are essential. Distinctively mechanistic virtues appear in the homing in on modelling a particular entity, and a particular instance of an activity. And this is the only way to get evidence that bears on the more general mechanism, as we discover more about what can happen with those kinds of entities and activities.

This whole process of modelling SN1987A and fitting it into what else we know about stellar structure models and type II supernovae is creative as above, active in the sense of being an ongoing process, and is highly skilled. I take this to be constitutive of understanding, rejecting the view of understanding as a phenomenological feeling, where that is construed as something more passively received. Note what “coherence” and “fit” mean here: a very active and creative process was required to model SN1987A and fit it into the surrounding architecture of what we already knew, and also with the empirical data we were constantly getting from our observations. The multiple tasks involved are highly skilled and shared across a large community, highly active, not a passive, wholly subjective feeling. The more distinctively mechanistic virtues include the role of identifying parts and what they can do, and the identification of forms of organisation and their place in the explanation of the phenomenon.

It would be extremely difficult to extract simple tests of scientists’ understanding of these various scientific items. No one particular skill or ability to use mechanistic explanations of supernovae is likely to indicate understanding of supernovae in any particularly reliable way. Instead, it seems that the shared abilities of the community to use the mechanistic explanations of supernovae in many different ways, embedded in the ability to use other things such as stellar structure models, constitutes the scientific community understanding supernovae. Many different abilities may indicate understanding on the part of individual scientists—but that will be a matter for the community to judge. It seems to me to make no particular sense to try to specify a bar for the understanding of an individual scientist. Instead, understanding is a multifaceted thing, had by a community, and any understanding individual scientists have of type II supernovae, and SN1987A in particular, is dependent upon the skills of the community.

4 Conclusion

I have developed and defended the views of de Regt and Dieks, to show that understanding type II supernovae required the use of many different kinds of things, involving both theoretical and embodied skills, and that the resulting understanding is primarily the achievement of a community. This use, and so the understanding that I have claimed it constitutes, is an active and ongoing thing, an activity of a community, rather than a passive achievement of individuals. It offers an account of intelligibility of mechanisms which is a useful contribution to the mechanisms literature, as is the application to the unusual case of SN1987A.

I will finish with some reflections on the contextual theory I have applied. In de Regt and Dieks’ initial work, CIT as a test, or the ability of scientists to recognise qualitatively characteristic consequences of the theory without performing exact calculations, was important. No doubt many scientists became comfortable with quantitative models of SN1987A, but even this part of their work did not involve using a single theory, but building many models and simulations. Even in far more recent work, de Regt still focuses on prediction of consequences, although he construes it as a skill: “I will develop the idea that understanding phenomena consists in the ability to use a theory to generate predictions of the target systems behavior” (de Regt 2015, 3781). It is the ability, not a correct outcome, which is de Regt’s focus, and I have shown that this is an important difference.

Further, I have shown that even in the project of using theory to understand a phenomenon, the uses and skills required go far beyond those envisaged by de Regt and Dieks (2005). To understand supernovae, astrophysicists drew on physical theory and on clusters of stellar structure models constituted by groups of equations, and built theoretically possible mechanisms of explosion, along with increasingly specialised models and simulations ultimately designed for explaining features of individual stars. In explaining SN1987A, simulations were used to explain features like its asymmetry: in a way that involves reproducing the behaviour, of course, but simulations are much richer than prediction. In stellar astrophysics generally, new phenomena are even created. In the H–R diagram, we have the construction of a complex new phenomenon that is explained successfully with stellar structure models.Footnote 16 So the turn towards models since de Regt (2015) is welcome.

I accepted de Regt and Dieks’ claim that scientific understanding goes significantly beyond a purely subjective and possibly individual ‘sense’ of understanding of the kind criticised by Trout (2002). I hold that such a sense could still have some important epistemic roles, and I noted that, according to current embodied or enactive views of mental states, any sharp dichotomy between thinking of understanding as a mental state and thinking of it as involving skills is rather artificial. Nevertheless, I have offered even more reason for rejecting the purely subjective sense of understanding that Trout criticises as the whole story of understanding. While scientists might also experience phenomenological responses to this achievement, and these activities, feeling that in using them extensively they have developed a more intuitive grasp of them, there is a great deal more going on here if we pay attention to use.

I am willing to accept the consequence, which some might find counterintuitive, that a scientist could in principle count as understanding a theory even in the absence of her—or even the whole community—‘feeling’ that they understand it. On this view we might just be disappointed in what we subjectively wanted when we embarked on a project. This seems to agree with a remark by Hasok Chang:

With these insights, I can finally tackle something that has been an enduring puzzle to me: what is the difference between simply applying an algorithm to solve a problem, and doing the same with a sense of understanding? Simply following an algorithm provides no relevant understanding to someone who is interested in some other epistemic activity, for example, visualizing what is going on, or giving a mechanical explanation. But for someone whose goal is to derive a prediction, there is surely the relevant sense of understanding in knowing how to apply the right tricks to derive the answer. (When I was an undergraduate, learning physics in the standard way gave me no sense of understanding because I went into the enterprise expecting something else. It was my mistake to think that my classmates who were not dissatisfied like me were in some way shallow or unreflective; they were simply happy with the epistemic activity they were engaged in.) (Chang 2009, 76)

I am also happy with the various controversial implications of the view that I have been noting throughout. Understanding or intelligibility is a value that the scientific community at a particular time confers on theories, models and mechanisms that they can use. Understanding is therefore something had by a community, and only derivatively by individuals. Finally, the complexity of the multiple uses and skills involved in understanding, and the purposes of theories, mechanisms and models, means that understanding will rarely if ever be an all-or-nothing matter. Understanding comes in greater and lesser degrees, and since it involves multiple skills, it may lie on a multidimensional continuum. That there are no sharp distinctions here still does not imply that it is an arbitrary matter.

I wish to finish by raising a concern for the new view. This account sets the bar for understanding very high, in the sense that only members of the relevant scientific community will have access to the training needed to acquire the theoretical skills required to use scientific theories, models, and mechanisms. While these kinds of skills are crucial, this account would seem to bar anyone other than active experts from understanding the scientific phenomena we think we have explained. How others understand is not a simple issue, and I reserve it for further work: roughly, though, I expect that the very different kinds of uses people outwith the relevant community have for scientific knowledge might well be key to that story.

Notes

For recent work that takes this kind of approach, see Strevens (2009) and Potochnik (2011). For extended defence of this view, see Khalifa (2012, 2015), and for criticism see Newman (2014). Khalifa thinks the skill condition in de Regt’s work is either unnecessary or trivial. It will become clear that I hold it to be both necessary and substantive, as I argue for this extensively, thoroughly agreeing with Newman (2014) that scientific understanding requires a significant amount of non-propositional knowledge not captured by logical relations.

In current work, most notably Trout (2002).

I will not address how scientific knowledge is held in society, nor will I treat intelligibility historically (for an excellent book see Dear 2006). Note finally that I am not directly addressing the ontic-epistemic debate concerning mechanistic explanation (see Illari 2013), but providing an account of understanding phenomena mechanistically that I think could in principle be used by both sides of the ontic-epistemic divide.

Work sometimes bears on this obliquely, such as the debate about whether mechanisms are ontic (the mechanism itself explains; Craver 2014), or epistemic (some form of description of the mechanism explains; Wright and Bechtel 2007; Wright 2012). In Illari (2013) I have attempted to argue that this disagreement is not so deep as it appears.

It is difficult to classify MDC clearly as holding an ontic or an epistemic view of mechanistic explanation. Craver has extensively defended the ontic conception (Craver 2014), while Darden and Machamer have remained largely silent, although many of their comments, particularly in the MDC paper, seem to suggest an ontic view. While the comments from the MDC paper that I have quoted above read as epistemic, they seem to concern intelligibility, rather than mechanistic explanation itself.

Which may involve aesthetics: for one argument, see Kosso (2002).

Note also that what Trout seems to have in mind is a mental state. Now, on a traditional understanding of mental states, they involve qualitative or phenomenal feel, while use might or might not involve phenomenal feel. However, the rise of externalist views of mental states, and particularly the flourishing literature on embodied, enactive and distributed cognition, has a lot in common with the view I am advocating. See Ylikoski (2014) and Toon (2015) for direct application of this view to understanding.

Note that I don’t claim mechanistic explanation is the only form of explanation in the life sciences, nor does my account require a specific account of mechanistic explanation, so long as it accepts the importance of parts and what the parts do, as I will show.

I thank an anonymous reviewer for pressing me to include this.

This is an endlessly reproduced diagram, including various levels of detail. See Prialnik (2010, 9ff.) for a textbook presentation. It is also available openly in many places on the internet. For example, see https://en.wikipedia.org/wiki/Hertzsprung-Russell_diagram.

The precise mechanism of type II supernovae remains controversial, but they are associated with neutrino bursts. I focus on the state of the art in 1987, surrounding work on SN1987A. See McCray (1997).

In 2010 it remained the only such supernova (Prialnik 2010).

Images are widely available. See, for example, Hubble’s image http://www.spacetelescope.org/images/potw1142a/, or https://en.wikipedia.org/wiki/SN_1987A.

Ruphy (2011) offers us an important warning that models created from this kind of simulation process may exhibit what she calls permanent incompatible pluralism. She writes: “And the point is that those alternative pictures would be equally plausible in the sense that they would also be consistent both with the observations at hand and with our current theoretical knowledge” (Ruphy 2011, 184). Even worse, in some cases this kind of incompatibility will not be temporary, but permanent, in the sense that we cannot expect the incompatible plurality to go away when new data come in.

See Boon (2009) for discussion of this in another context.

References

Anscombe, G. (1975). Causality and determination. In E. Sosa (Ed.), Causation and conditionals (pp. 63–81). Oxford: Oxford University Press.

Bailer-Jones, D. M. (1999). Creative strategies employed in modelling: A case study. Foundations of Science, 4(4), 375–388.

Bechtel, W. (2007). Biological mechanisms: Organized to maintain autonomy. In F. C. Boogerd, F. J. Bruggeman, J.-H. S. Hofmeyr, & H. V. Westerhoff (Eds.), Systems biology: Philosophical foundations (pp. 269–302). Amsterdam: Elsevier.

Bechtel, W., & Abrahamsen, A. (2010). Dynamic mechanistic explanation: Computational modeling of circadian rhythms as an exemplar for cognitive science. Studies in History and Philosophy of Science, 41(3), 321–333.

Bechtel, W., & Richardson, R. (2010). Discovering complexity. Cambridge: MIT Press.

Bogen, J. (2008). Causally productive activities. Studies in History and Philosophy of Science, 39(1), 112–123.

Boon, M. (2009). Understanding in the engineering sciences: Interpretive structures. In H. de Regt, S. Leonelli, & K. Eigner (Eds.), Scientific understanding: Philosophical perspectives (pp. 249–270). Pittsburgh, PA: University of Pittsburgh Press.

Chang, H. (2009). Ontological principles and the intelligibility of epistemic activities. In H. de Regt, S. Leonelli, & K. Eigner (Eds.), Scientific understanding: Philosophical perspectives (pp. 64–82). Pittsburgh, PA: University of Pittsburgh Press.

Chevalier, R. A. (1992). SN1987A at 5 years of age. Nature, 355(6362), 691–696.

Craver, C. (2007). Explaining the brain. Oxford: Clarendon Press.

Craver, C. (2014). The ontic account of scientific explanation. In M. I. Kaiser, O. R. Scholz, D. Plenge, & A. Hüttemann (Eds.), Explanation in the special sciences: The case of biology and history (pp. 27–52). Dordrecht: Springer.

Darden, L. (2002). Strategies for discovering mechanisms: Schema instantiation, modular subassembly, forward/backward chaining. Philosophy of Science, 69(S3), S354–S365.

Darden, L. (2006). Reasoning in biological discoveries. Cambridge: Cambridge University Press.

de Grijs, R. (2015). Turning off the lights. Nature Physics, 11(8), 623–624.

de Regt, H. (2009a). Understanding and scientific explanation. In H. de Regt, S. Leonelli, & K. Eigner (Eds.), Scientific understanding: Philosophical perspectives (pp. 21–42). Pittsburgh, PA: University of Pittsburgh Press.

de Regt, H. W. (2009b). The epistemic value of understanding. Philosophy of Science, 76(5), 585–597.

de Regt, H. W. (2014). Visualization as a tool for understanding. Perspectives on Science, 22(3), 377–396.

de Regt, H. W. (2015). Scientific understanding: Truth or dare? Synthese, 192(12), 3781–3797.

de Regt, H. (2017). Understanding scientific understanding. Oxford: Oxford University Press.

de Regt, H. W., & Dieks, D. (2005). A contextual approach to scientific understanding. Synthese, 144(1), 137–170.

de Regt, H., Leonelli, S., & Eigner, K. (Eds.). (2009). Scientific understanding: Philosophical perspectives. Pittsburgh, PA: University of Pittsburgh Press.

Dear, P. (2006). The intelligibility of nature: How science makes sense of the world. Chicago: University of Chicago Press.

Fabian, A. C., Rees, M. J., van den Heuvel, E. P. J., & van Paradijs, J. (1987). An interacting binary model for SN1987A. Nature, 328(6128), 323–324.

Glennan, S. (2017). The new mechanical philosophy. Oxford: Oxford University Press.

Hutchins, B. R. (2015). Descartes, corpuscles and reductionism: Mechanism and systems in Descartes’ physiology. The Philosophical Quarterly, 65(261), 669–689.

Illari, P. (2013). Mechanistic explanation: Integrating the ontic and epistemic. Erkenntnis, 78(S2), 237–255.

Illari, P. M., & Williamson, J. (2012). What is a mechanism? Thinking about mechanisms across the sciences. European Journal for Philosophy of Science, 2(1), 119–135.

Joss, P. C., Podsiadlowski, P., Hsu, J. J. L., & Rappaport, S. (1988). Is supernova 1987A a stripped asymptotic-branch giant in a binary system? Nature, 331(6153), 327–340.

Khalifa, K. (2012). Inaugurating understanding or repackaging explanation? Philosophy of Science, 79(1), 15–37.

Khalifa, K. (2015). EMU defended: Reply to Newman (2014). European Journal for Philosophy of Science, 5(3), 377–385.

Kosso, P. (2002). The omniscienter: Beauty and scientific understanding. International Studies in the Philosophy of Science, 16(1), 39–48.

Leonelli, S. (2009). Understanding in biology: The impure nature of biological knowledge. In H. de Regt, S. Leonelli, & K. Eigner (Eds.), Scientific understanding: Philosophical perspectives (pp. 189–209). Pittsburgh, PA: University of Pittsburgh Press.

Machamer, P., Darden, L., & Craver, C. (2000). Thinking about mechanisms. Philosophy of Science, 67(1), 1–25.

McCray, R. (1997). SN1987A enters its second decade. Nature, 386(6624), 438–439.

Murdin, P. (1993). Supernovae cannot be typecast. Nature, 363(6431), 668–669.

Newman, M. (2014). EMU and inference: What the explanatory model of scientific understanding ignores. European Journal for Philosophy of Science, 4(1), 55–74.

Nicholson, D. J. (2012). The concept of mechanism in biology. Studies in History and Philosophy of Biological and Biomedical Sciences, 43(1), 152–163.

Papaliolios, C., Karovska, M., Koechlin, L., Nisenson, P., Standley, C., & Heathcote, S. (1989). Asymmetry of the envelope of supernova 1987A. Nature, 338(6216), 565–566.

Podsiadlowski, P., & Joss, P. C. (1989). An alternative binary model for SN1987A. Nature, 338(6214), 401–403.

Potochnik, A. (2011). Explanation and understanding: An alternative to Strevens’ Depth. European Journal for Philosophy of Science, 1(1), 29–38.

Prialnik, D. (2010). An introduction to the theory of stellar structure and evolution (2nd ed.). Cambridge: Cambridge University Press.

Roux, S. (2017). From the mechanical philosophy to early modern mechanisms. In S. Glennan, & P. Illari (Eds.), The Routledge handbook of mechanisms and the mechanical philosophy (pp. 26–45). Oxford: Routledge.

Ruphy, S. (2011). Limits to modeling: Balancing ambition and outcome in astrophysics and cosmology. Simulation & Gaming, 42(2), 177–194.

Salmon, W. C. (1998). Scientific explanation: Three basic conceptions. In Causality and explanation (pp. 320–332). Oxford University Press.

Sato, K., Shimizu, T., & Yamada, S. (1996). Explosion mechanism of collapse-driven supernovae. Nuclear Physics A, 588(1), C345–C356.

Strevens, M. (2009). Depth: An account of scientific explanation. Cambridge, MA: Harvard University Press.

Toon, A. (2015). Where is the understanding? Synthese, 192(12), 3859–3875.

Trout, J. D. (2002). Scientific explanation and the sense of understanding. Philosophy of Science, 69(2), 212–233.

Walker, T. P. (1987). Making the most of SN1987A. Nature, 330(6149), 609–610.

Wright, C. D. (2012). Mechanistic explanation without the ontic conception. European Journal for Philosophy of Science, 2(3), 375–394.

Wright, C., & Bechtel, W. (2007). Mechanisms and psychological explanation. In P. Thagard (Ed.), Philosophy of psychology and cognitive science (pp. 31–79). Amsterdam: Elsevier.

Ylikoski, P. (2014). Agent-based simulation and sociological understanding. Perspectives on Science, 22(3), 318–335.

Acknowledgements