Abstract

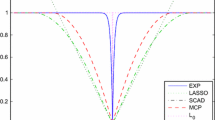

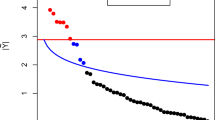

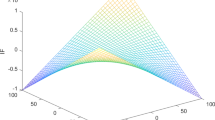

Non-concave penalized maximum likelihood methods are widely used because they are more efficient than the Lasso. They include a tuning parameter that controls the penalty level, and several information criteria have been developed for selecting it. While these criteria ensure model selection consistency, there is no appropriate rule for choosing one criterion from the class of information criteria that satisfy this asymptotic property. In this paper, we derive an information criterion based on the original definition of the AIC by considering minimization of the prediction error rather than model selection consistency. Specifically, we derive a function of the score statistic that is asymptotically equivalent to the non-concave penalized maximum likelihood estimator and then, based on this function, provide an estimator of the Kullback–Leibler divergence between the true distribution and the estimated distribution whose bias converges in mean to zero.

References

Akaike, H. (1973). Information theory and an extension of the maximum likelihood principle. In B. N. Petrov, F. Csaki (Eds.), Proceedings of the 2nd international symposium on information theory (pp. 267–281). Budapest: Akademiai Kiado.

Andersen, P. K., Gill, R. D. (1982). Cox’s regression model for counting processes: A large sample study. The Annals of Statistics, 10, 1100–1120.

Beck, A., Teboulle, M. (2009). A fast iterative shrinkage–thresholding algorithm for linear inverse problems. SIAM Journal on Imaging Sciences, 2, 183–202.

Dicker, L., Huang, B., Lin, X. (2012). Variable selection and estimation with the seamless-\({L}_0\) penalty. Statistica Sinica, 23, 929–962.

Efron, B., Hastie, T., Johnstone, I., Tibshirani, R. (2004). Least angle regression. The Annals of Statistics, 32, 407–499.

Fan, J., Li, R. (2001). Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association, 96, 1348–1360.

Fan, Y., Tang, C. Y. (2013). Tuning parameter selection in high dimensional penalized likelihood. Journal of the Royal Statistical Society: Series B, 75, 531–552.

Frank, L. E., Friedman, J. H. (1993). A statistical view of some chemometrics regression tools. Technometrics, 35, 109–135.

Hjort, N. L., Pollard, D. (1993). Asymptotics for minimisers of convex processes. arXiv preprint arXiv:1107.3806.

Knight, K., Fu, W. (2000). Asymptotics for lasso-type estimators. The Annals of Statistics, 28, 1356–1378.

Konishi, S., Kitagawa, G. (2008). Information criteria and statistical modeling. Springer Series in Statistics. New York: Springer.

Kullback, S., Leibler, R. A. (1951). On information and sufficiency. The Annals of Mathematical Statistics, 22, 79–86.

Masuda, H., Shimizu, Y. (2017). Moment convergence in regularized estimation under multiple and mixed-rates asymptotics. Mathematical Methods of Statistics, 26, 81–110.

Mazumder, R., Friedman, J. H., Hastie, T. (2011). SparseNet: Coordinate descent with nonconvex penalties. Journal of the American Statistical Association, 106, 1125–1138.

McCullagh, P., Nelder, J. A. (1989). Generalized linear models, monographs on statistics and applied probability. London: Chapman & Hall.

Meinshausen, N., Bühlmann, P. (2010). Stability selection. Journal of the Royal Statistical Society: Series B, 72, 417–473.

Ninomiya, Y., Kawano, S. (2016). AIC for the LASSO in generalized linear models. Electronic Journal of Statistics, 10, 2537–2560.

Pollard, D. (1991). Asymptotics for least absolute deviation regression estimators. Econometric Theory, 7, 186–199.

Radchenko, P. (2005). Reweighting the lasso. In 2005 Proceedings of the American Statistical Association [CD-ROM].

Rockafellar, R. T. (1970). Convex analysis, Princeton mathematical series. New Jersey: Princeton University Press.

Rockafellar, R. T. (1976). Augmented Lagrangians and applications of the proximal point algorithm in convex programming. Mathematics of Operations Research, 1, 97–116.

Shiryaev, A. N. (1996). Probability, volume 95 of graduate texts in mathematics (2nd ed.). New York: Springer.

Stein, C. M. (1981). Estimation of the mean of a multivariate normal distribution. The Annals of Statistics, 9, 1135–1151.

Stone, M. (1974). Cross-validatory choice and assessment of statistical predictions. Journal of the Royal Statistical Society: Series B, 36, 111–147.

Sugiura, N. (1978). Further analysis of the data by Akaike’s information criterion and the finite corrections. Communications in Statistics-Theory and Methods, 7, 13–26.

Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B, 58, 267–288.

Wang, H., Li, R., Tsai, C.-L. (2007). Tuning parameter selectors for the smoothly clipped absolute deviation method. Biometrika, 94, 553–568.

Wang, H., Li, B., Leng, C. (2009). Shrinkage tuning parameter selection with a diverging number of parameters. Journal of the Royal Statistical Society: Series B, 71, 671–683.

Yoshida, N. (2011). Polynomial type large deviation inequalities and quasi-likelihood analysis for stochastic differential equations. Annals of the Institute of Statistical Mathematics, 63, 431–479.

Yuan, M., Lin, Y. (2007). Model selection and estimation in the Gaussian graphical model. Biometrika, 94, 19–35.

Zhang, C.-H. (2010). Nearly unbiased variable selection under minimax concave penalty. The Annals of Statistics, 38, 894–942.

Zhang, Y., Li, R., Tsai, C.-L. (2010). Regularization parameter selections via generalized information criterion. Journal of the American Statistical Association, 105, 312–323.

Zou, H., Hastie, T., Tibshirani, R. (2007). On the “degrees of freedom” of the lasso. The Annals of Statistics, 35, 2173–2192.

Additional information

The work of Y. Ninomiya (corresponding author) was partially supported by a Grant-in-Aid for Scientific Research (16K00050) from the Ministry of Education, Culture, Sports, Science and Technology of Japan. The works of H. Masuda and Y. Shimizu were partially supported by JST CREST Grant Number JPMJCR14D7, Japan.

Proofs

1.1 Proof of Lemma 2

From (R1), the first term in the right-hand side of (3) converges in probability to \(h(\varvec{\beta })\) for each \(\varvec{\beta }\). In addition, from the convexity of \(g_i(\varvec{\beta })\) with respect to \(\varvec{\beta }\), we have

for any compact set K (Andersen and Gill 1982; Pollard 1991). Accordingly, we have

Note that in the following inequality,

the argmin of the right-hand side is the maximum likelihood estimator and is \(\mathrm{O}_{\mathrm{p}}(1)\). Also, note that for some \(M\ (>0)\),

because \(p_{\lambda }(0)=0\) from (C5). Therefore, we have

The last equality holds from (1). \(\square \)

1.2 Proof of (8)

Let \(\varvec{u}=\tilde{\varvec{u}}_{n}+l\varvec{w}\), where \(\varvec{w}\) is a unit vector, and let \(l\in (\delta ,\xi )\). The strong convexity of \(\eta _{n}(\varvec{u})\) implies

and we thus have

Since it follows that

we obtain from (6) and (7) that, for any \(\varepsilon \;(>0)\),

for sufficiently large n and sufficiently small \(\gamma \). If \(2\varDelta _{n}(\delta )+\varepsilon <\varUpsilon _{n}(\delta )\), then \(\nu _{n}(\varvec{u})\ge \nu _{n}(\tilde{\varvec{u}}_{n})\) for any \(\varvec{u}\) such that \(|\varvec{u}^{\dagger }|\le \gamma \) and \(\delta \le |\varvec{u}-\tilde{\varvec{u}}_{n}|\le \xi \). This means \(\varvec{u}_{n}\) must satisfy \(|\varvec{u}_{n}^{\dagger }|> \gamma \) or \(|\varvec{u}_{n}-\tilde{\varvec{u}}_{n}|\not \in [\delta ,\xi ]\) in order for \(\varvec{u}_{n}\) to be the argmin of \(\nu _{n}(\varvec{u})\). Hence, we obtain (8). \(\square \)
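The role of strong convexity in this argument can be illustrated numerically. The sketch below is not the display used in the proof; it assumes a quadratic stand-in for \(\eta _{n}\) of the form \(-\varvec{u}^{\mathrm{T}}\varvec{s}+\varvec{u}^{\mathrm{T}}\varvec{J}\varvec{u}/2\) with a positive definite \(\varvec{J}\), and checks that moving a distance \(l\) from the minimizer along a unit vector \(\varvec{w}\) raises the objective by at least \(\rho l^{2}\), where \(\rho \) is half the smallest eigenvalue of \(\varvec{J}\). The function names, the matrix `J`, and the vector `s` are hypothetical choices made only for this illustration.

```python
import numpy as np

def quadratic_eta(u, s, J):
    """Strongly convex quadratic -u^T s + u^T J u / 2 (illustrative stand-in)."""
    return -u @ s + 0.5 * u @ J @ u

def growth_gap(s, J, l, w):
    """Return eta(u_tilde + l*w) - eta(u_tilde) and the lower bound rho * l**2."""
    u_tilde = np.linalg.solve(J, s)        # unique minimizer of the quadratic
    rho = 0.5 * np.linalg.eigvalsh(J)[0]   # half the smallest eigenvalue of J
    w = w / np.linalg.norm(w)              # normalize w to a unit vector
    gap = quadratic_eta(u_tilde + l * w, s, J) - quadratic_eta(u_tilde, s, J)
    return gap, rho * l**2

# Hypothetical inputs: a random symmetric positive definite J and a random s.
rng = np.random.default_rng(0)
A = rng.normal(size=(4, 4))
J = A @ A.T + np.eye(4)
s = rng.normal(size=4)
gap, bound = growth_gap(s, J, l=0.7, w=rng.normal(size=4))
```

For this quadratic the gap equals \(l^{2}\varvec{w}^{\mathrm{T}}\varvec{J}\varvec{w}/2\), so the bound holds for every unit vector \(\varvec{w}\); this is the kind of lower bound away from the minimizer that a quantity such as \(\varUpsilon _{n}(\delta )\) is compared against.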

1.3 Proof of (17)

Let us consider a random function \(\mu _{n}(\varvec{\beta })\) in (3). Since \(p_{\lambda }(0)=0\) from (C5), we have

where \(\tilde{\varvec{\beta }}\) is a vector on the segment from \(\hat{\varvec{\beta }}_{\lambda }\) to \(\varvec{\beta }^{*}\). Then, we have

because \(\varvec{s}_{n}=\mathrm{O}_{\mathrm{p}}(1)\). From (C2) and (C3), \(\varvec{J}_{n}(\tilde{\varvec{\beta }})\) is positive definite for sufficiently large n, and therefore, it follows that

Let us express \(\mu _{n}(\varvec{\beta })\) by \(\mu _{n}(\varvec{\beta }^{(1)},\varvec{\beta }^{(2)})\). Because \(0\ge \mu _{n}(\hat{\varvec{\beta }}_{\lambda }^{(1)},\hat{\varvec{\beta }}_{\lambda }^{(2)})-\mu _{n}(\varvec{0},\hat{\varvec{\beta }}_{\lambda }^{(2)})\), we see that

is non-positive. Here, we use the fact that \(\sum _{j\in \mathcal{J}^{(1)}}p_{\lambda }(\hat{\beta }_{\lambda ,j})\) reduces to \(\lambda \Vert \hat{\varvec{\beta }}_{\lambda }^{(1)}\Vert _{q}^{q}\{1+\mathrm{o}_{\mathrm{p}}(1)\}\) from (C5) and (39) and that \(\varvec{J}_{n}(\tilde{\varvec{\beta }})\) is positive definite for sufficiently large n. Accordingly, we have

and thus \(\Vert \hat{\varvec{\beta }}_{\lambda }^{(1)}\Vert _{q}^{q}=\mathrm{O}_{\mathrm{p}}(|\hat{\varvec{\beta }}_{\lambda }^{(1)}|)\) since the first term on the left-hand side is nonnegative. Hence, we have

because \(0<q<1\) and \(\hat{\varvec{\beta }}_{\lambda }^{(1)}=\mathrm{o}_{\mathrm{p}}(1)\). This implies the former in (17). Since \(\tilde{\varvec{u}}_{n}^{(2)}\) is trivially \(\mathrm{O}_{\mathrm{p}}(1)\), we obtain the latter of (17) from (39) and (40). \(\square \)
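The final step above rests on how \(|b|^{q}\) compares with \(|b|\) near zero when \(0<q<1\). As a minimal scalar sketch (the helper name `bridge_ratio` and the grid of values are assumptions made for illustration, not part of the original argument), the ratio \(|b|^{q}/|b|=|b|^{q-1}\) grows without bound as \(b\rightarrow 0\):

```python
def bridge_ratio(b, q):
    """Return |b|**q / |b| = |b|**(q - 1) for a nonzero scalar b and 0 < q < 1."""
    if b == 0 or not (0 < q < 1):
        raise ValueError("need b != 0 and 0 < q < 1")
    return abs(b) ** (q - 1)

# Ratios along b = 10^-1, ..., 10^-5 with q = 0.5; each is larger than the last.
ratios = [bridge_ratio(10.0 ** (-k), q=0.5) for k in range(1, 6)]
```

Read this way, a bound of the form \(\Vert \hat{\varvec{\beta }}_{\lambda }^{(1)}\Vert _{q}^{q}=\mathrm{O}_{\mathrm{p}}(|\hat{\varvec{\beta }}_{\lambda }^{(1)}|)\) is hard to reconcile with a coefficient that is small but nonzero, which appears to be the tension the last step exploits.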

1.4 Proof of (19) and (20)

Let \(\eta _{n}(\varvec{u}^{(1)},\varvec{u}^{(2)})\) be the function in (9) with \(q=1\), and let \(\tilde{\eta }_{n}(\varvec{u}^{(1)},\varvec{u}^{(2)})=-\varvec{u}^{\mathrm{T}}\varvec{s}_{n}+\varvec{u}^{\mathrm{T}}\varvec{J}\varvec{u}/2\) in place of (10). Then, we can obtain \(\eta _{n}(\varvec{u}^{(1)},\varvec{u}^{(2)})=\tilde{\eta }_{n}(\varvec{u}^{(1)},\varvec{u}^{(2)})+\mathrm{o}_{\mathrm{p}}(1)\) by taking a Taylor expansion around \((\varvec{u}^{(1)},\varvec{u}^{(2)})=(\varvec{0},\varvec{0})\). In addition, let \(\phi _{n}(\varvec{u})\) and \(\phi (\varvec{u})\) be \(\phi _{n}(\varvec{u})+\psi _{n}(\varvec{u}^{\dagger })\) and \(\phi (\varvec{u})+\psi (\varvec{u}^{\dagger })\) with \(q=1\) in (11), (12), and (13), let \(\varvec{u}^{\dagger }\) be an empty vector so that \(\psi _{n}(\varvec{u}^{\dagger })=\psi (\varvec{u}^{\dagger })=0\), and redefine \(\nu _{n}(\varvec{u}^{(1)},\varvec{u}^{(2)})=\eta _{n}(\varvec{u}^{(1)},\varvec{u}^{(2)})+\phi _{n}(\varvec{u})+\psi _{n}(\varvec{u}^{\dagger })\) and \(\tilde{\nu }_{n}(\varvec{u}^{(1)},\varvec{u}^{(2)})=\tilde{\eta }_{n}(\varvec{u}^{(1)},\varvec{u}^{(2)})+\phi (\varvec{u})+\psi (\varvec{u}^{\dagger })\). Here, note that

Next, because

we see by using \(\hat{\varvec{u}}_{n}^{(1)}\) in (18) that

where we have denoted \(\varvec{x}^{\mathrm{T}}A\varvec{x}\) by \(\Vert \varvec{x}\Vert _{A}^{2}\) for an appropriate size of matrix A and vector \(\varvec{x}\). Now, we apply Lemma 3 and evaluate the right-hand side in (4). In the same way as in (15), it follows that \(\varDelta _{n}(\delta )\) converges in probability to 0. Next, the definition of \(\tilde{\varvec{u}}_{n}^{(1)}\) ensures that

where \(\varvec{\gamma }\) is a \(|\mathcal{J}^{(1)}|\)-dimensional vector such that \(\gamma _{j}=1\) when \(\hat{u}_{n,j}^{(1)}>0\), \(\gamma _{j}=-1\) when \(\hat{u}_{n,j}^{(1)}<0\), and \(\gamma _{j}\in [-1,1]\) when \(\hat{u}_{n,j}^{(1)}=0\). Thus, noting that \(\tilde{\varvec{u}}_{n}^{(1)\mathrm{T}}\varvec{\gamma }=\Vert \tilde{\varvec{u}}_{n}^{(1)}\Vert _{1}\), we can write \(\tilde{\nu }_{n}(\varvec{u}^{(1)},\varvec{u}^{(2)})-\tilde{\nu }_{n}(\tilde{\varvec{u}}_{n}^{(1)},\tilde{\varvec{u}}_{n}^{(2)})\) as

after a simple calculation. Let \(\varvec{w}_{1}\) and \(\varvec{w}_{2}\) be unit vectors such that \(\varvec{u}^{(1)}=\tilde{\varvec{u}}_{n}^{(1)}+\zeta \varvec{w}_{1}\) and \(\varvec{u}^{(2)}=\tilde{\varvec{u}}_{n}^{(2)}+(\delta ^{2}-\zeta ^{2})^{1/2}\varvec{w}_{2}\), where \(0\le \zeta \le \delta \). Then, letting \(\rho ^{(22)}\) and \(\rho ^{(1|2)}\;(>0)\) be half the smallest eigenvalues of \(\varvec{J}^{(22)}\) and \(\varvec{J}^{(1|2)}\), respectively, it follows that

because the second term in (41) is nonnegative. Hence, the first term on the right-hand side in (4) converges to 0. In addition, because \((\varvec{u}_{n}^{(1)},\varvec{u}_{n}^{(2)})\) is \(\mathrm{O}_{\mathrm{p}}(1)\) from (39) and \((\tilde{\varvec{u}}_{n}^{(1)},\tilde{\varvec{u}}_{n}^{(2)})\) is also \(\mathrm{O}_{\mathrm{p}}(1)\), the second term on the right-hand side in (4) can be made arbitrarily small by considering a sufficiently large \(\xi \). Thus, we have \(|\varvec{u}-\tilde{\varvec{u}}_{n}|=\mathrm{o}_{\mathrm{p}}(1)\), and as a consequence, we obtain (19) and (20). \(\square \)
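The sign vector \(\varvec{\gamma }\) used in this proof is a subgradient of the \(\ell _{1}\) norm, and the two properties the argument relies on can be checked directly. The following is a minimal sketch under that reading; the helper `sign_subgradient`, its `at_zero` argument, and the example vectors are hypothetical and chosen only for illustration.

```python
import numpy as np

def sign_subgradient(u, at_zero=0.0):
    """A subgradient of the l1 norm at u: sign(u_j) where u_j != 0, and a
    caller-chosen value in [-1, 1] (here `at_zero`) where u_j == 0."""
    if not -1.0 <= at_zero <= 1.0:
        raise ValueError("at_zero must lie in [-1, 1]")
    u = np.asarray(u, dtype=float)
    return np.where(u != 0, np.sign(u), at_zero)

u_tilde = np.array([1.5, 0.0, -0.3])
gamma = sign_subgradient(u_tilde, at_zero=0.4)

# Property 1: u_tilde^T gamma equals the l1 norm of u_tilde.
inner = gamma @ u_tilde
l1_norm = np.abs(u_tilde).sum()

# Property 2: ||u||_1 - gamma^T u is nonnegative for any other vector u.
u = np.array([-2.0, 0.7, 0.1])
slack = np.abs(u).sum() - gamma @ u
```

The second property holds because every entry of `gamma` lies in \([-1,1]\); a nonnegative term of this type is what allows the remaining quadratic terms to be bounded from below by the eigenvalue constants \(\rho ^{(22)}\) and \(\rho ^{(1|2)}\).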

1.5 Proof of (26)

Because \(n^{1/2}\hat{\varvec{\beta }}_{\lambda }^{(1)}=\hat{\varvec{u}}_{n}^{(1)}+\mathrm{o}_{\mathrm{p}}(1)\) from Theorem 1, the terms including \(\hat{\varvec{\beta }}_{\lambda }^{(1)}\) do not reduce to \(\mathrm{o}_{\mathrm{p}}(1)\) in this case. Therefore, (24) is expressed as

and this converges in distribution to

In the same way, (25) is expressed as

and this converges in distribution to

where \(\tilde{\varvec{s}}_{n}^{(1)},\;\tilde{\varvec{s}}^{(2)},\;\tilde{\varvec{s}}^{(1|2)}\), and \(\tilde{\varvec{s}}^{(2)}\) are copies of \(\varvec{s}_{n}^{(1)},\;\varvec{s}^{(2)},\;\varvec{s}^{(1|2)}\), and \(\varvec{s}^{(2)}\), respectively. Thus, we see that

Since \(\tilde{\varvec{s}}\) and \(\varvec{s}\) are independently distributed according to \(\mathrm{N}(\varvec{0},\varvec{J}^{(22)})\), the asymptotic bias reduces to

As a result, we obtain (26). \(\square \)
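The reduction of the asymptotic bias uses two standard facts about Gaussian quadratic forms: for \(\varvec{s}\sim \mathrm{N}(\varvec{0},\varvec{J})\), the expectation of \(\varvec{s}^{\mathrm{T}}\varvec{J}^{-1}\varvec{s}\) equals the dimension of \(\varvec{s}\), whereas the cross term \(\tilde{\varvec{s}}^{\mathrm{T}}\varvec{J}^{-1}\varvec{s}\) with an independent copy \(\tilde{\varvec{s}}\) has expectation zero. A small Monte Carlo sketch of these facts is given below; the \(3\times 3\) matrix `J`, the number of draws, and the function name are assumptions made for illustration and do not reproduce the exact expressions in (24) and (25).

```python
import numpy as np

def quadratic_form_means(J, n_draws=200_000, seed=0):
    """Monte Carlo means of s^T J^{-1} s and s_copy^T J^{-1} s, where s and
    s_copy are independent draws from N(0, J)."""
    rng = np.random.default_rng(seed)
    p = J.shape[0]
    s = rng.multivariate_normal(np.zeros(p), J, size=n_draws)
    s_copy = rng.multivariate_normal(np.zeros(p), J, size=n_draws)
    J_inv = np.linalg.inv(J)
    same = np.einsum("ni,ij,nj->n", s, J_inv, s).mean()        # about p
    cross = np.einsum("ni,ij,nj->n", s_copy, J_inv, s).mean()  # about 0
    return same, cross

# Hypothetical positive definite covariance (strictly diagonally dominant).
J = np.array([[2.0, 0.5, 0.0],
              [0.5, 1.0, 0.3],
              [0.0, 0.3, 1.5]])
same, cross = quadratic_form_means(J)
```

With this `J`, `same` should come out near 3 and `cross` near 0, up to simulation error. Only the term built from the same score vector contributes to the bias, which is why a dimension-type quantity remains after taking expectations.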

About this article

Cite this article

Umezu, Y., Shimizu, Y., Masuda, H. et al. AIC for the non-concave penalized likelihood method. Ann Inst Stat Math 71, 247–274 (2019). https://doi.org/10.1007/s10463-018-0649-x

DOI: https://doi.org/10.1007/s10463-018-0649-x