Abstract

Consider the following generalized linear model (GLM)

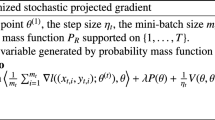

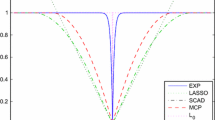

where \(h(\cdot )\) is a continuously differentiable function and \(\{e_i\}\) are independent and identically distributed (i.i.d.) random variables with zero mean and known variance \(\sigma ^2\). We extend the penalized Lq-likelihood method, originally developed for linear regression models, to the GLM and investigate the oracle properties of the resulting penalized Lq-likelihood estimator (PLqE). To assess the robustness of the PLqE, we study its influence function. Simulation results support the validity of our approach. Furthermore, we show that the PLqE is robust, whereas the penalized maximum likelihood estimator is not.
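As a rough illustration of the kind of objective a penalized Lq-likelihood estimator maximizes, the sketch below assumes the Lq function of Ferrari and Yang (2010), a normal error density, and an L1 penalty scaled by n. None of these choices is fixed by the abstract, and the names `lq`, `normal_pdf`, `l1_penalty`, and `penalized_lq_objective` are hypothetical.

```python
import math

def lq(u, q):
    """Lq function: log(u) at q = 1, otherwise (u**(1-q) - 1) / (1-q)."""
    if q == 1.0:
        return math.log(u)
    return (u ** (1.0 - q) - 1.0) / (1.0 - q)

def normal_pdf(e, sigma=1.0):
    """Density of N(0, sigma^2); an assumed choice for the error density f."""
    return math.exp(-0.5 * (e / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def l1_penalty(theta, lam):
    """Illustrative penalty p_lambda(theta) = lam * |theta| (an assumption)."""
    return lam * abs(theta)

def penalized_lq_objective(beta, xs, ys, h, q, lam,
                           pdf=normal_pdf, penalty=l1_penalty):
    """Sum of Lq(f(y_i - h(x_i^T beta))) minus n times the summed penalty."""
    n = len(ys)
    fit = 0.0
    for x, y in zip(xs, ys):
        eta = sum(xj * bj for xj, bj in zip(x, beta))  # linear predictor x_i^T beta
        fit += lq(pdf(y - h(eta)), q)
    return fit - n * sum(penalty(b, lam) for b in beta)
```

Setting `q = 1.0` recovers an ordinary penalized log-likelihood, which is a convenient sanity check when experimenting with values of q below 1.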

References

Araveeporn A (2021) The higher-order of adaptive lasso and elastic net methods for classification on high dimensional data. Mathematics 9:1091

Cao X, Lee K (2020) Variable selection using nonlocal priors in high-dimensional generalized linear models with application to FMRI data analysis. Entropy 22:807

Chang J, Tang C, Wu Y (2013) Marginal empirical likelihood and sure independence feature screening. Ann Stat 41:2123–2148

Chu T, Zhu J, Wang H (2011) Penalized maximum likelihood estimation and variable selection in geostatistics. Ann Stat 39(5):2607–2625

Cox DD, O’Sullivan F (1996) Penalized likelihood-type estimators for generalized nonparametric regression. J Multivariate Anal 56(2):185–206

Fan J (1997) Comments on wavelets in statistics: a review by A. Antoniadis. J Ital Stat Assoc 6:131–138

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc 96:1348–1360

Fan J, Lv J (2008) Sure independence screening for ultrahigh dimensional feature space (with discussion). J R Stat Soc B 70:849–911

Fan J, Song R (2010) Sure independent screening in generalized linear models with NP-dimensionality. Ann Stat 38:3567–3604

Ferrari D, Yang Y (2010) Maximum Lq-likelihood estimation. Ann Stat 38:753–783

Gao Q, Zhu C, Du X et al (2021) The asymptotic properties of Scad penalized generalized linear models with adaptive designs. J Syst Sci Complex 34(2):759–773

Giuzio M, Ferrari D, Paterlini S (2016) Sparse and robust normal and t-portfolios by penalized Lq-likelihood minimization. Eur J Oper Res 250:251–261

Goeman J, Van Houwelingen H, Finos L (2011) Testing against a high-dimensional alternative in the generalized linear model: asymptotic type I error control. Biometrika 98:381–390

Guo B, Chen S (2016) Tests for high dimensional generalized linear models. J R Stat Soc B 78:1079–1102

Hu H, Zeng Z (2021) Penalized Lq-likelihood estimators and variable selection in linear regression. Commun Stat Theory Methods 51(17):5957–5970

Huang H, Gao Y, Zhang H, Li B (2021) Weighted lasso estimates for sparse logistic regression: non-asymptotic properties with measurement errors. Acta Math Sci 41B(1):207–230

Kakhki F, Freeman S, Mosher G (2018) Analyzing large workers’ compensation claims using generalized linear models and Monte Carlo simulation. Safety 4:57

Kwon S, Kim Y (2012) Large sample properties of the scad-penalized maximum likelihood estimation on high dimensions. Stat Sin 22(2):629–653

Liu R, Zhao S, Hu T, Sun J (2022) Variable selection for generalized linear models with interval-censored failure time data. Mathematics 10:763

Öllerer V (2015) Robust and sparse estimation in high-dimensions. KU Leuven, Leuven

van de Geer SA (2008) High-dimensional generalized linear models and the lasso. Ann Stat 36:614–645

Wang M, Tian G (2019) Adaptive group Lasso for high-dimensional generalized linear models. Stat Pap 60:1469–1486

Wang M, Wang X (2014) Adaptive lasso estimators for ultrahigh dimensional generalized linear models. Stat Probab Lett 89:41–50

Wang L, You Y, Lian H (2015) Convergence and sparsity of Lasso and group Lasso in high-dimensional generalized linear models. Stat Pap 56(3):819–828

Wang M, Song L, Wang X (2010) Bridge estimation for generalized linear models with a diverging number of parameters. Stat Probab Lett 80(21):1584–1596

Xia X (2003) Simultaneous diagonalization two matrices. J Nanchang Inst Aeronaut Technol (Nat Sci Ed) 17(03):26–32

Yin Z (2020) Variable selection for sparse logistic regression. Metrika 83:821–836

Zou H (2006) The adaptive LASSO and its oracle properties. J Am Stat Assoc 101:1418–1429

Acknowledgements

We thank the Editor and the anonymous referees for carefully reading the article and providing valuable comments.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

7. Appendix: Proofs of Lemmas and Theorems

In this section, we prove the technical lemmas, Theorems 3.1 and 4.1, and Corollary 4.2.

Proof of Lemma 3.1

We need only prove that, for any given \(\varepsilon >0\), there exists a large constant C such that

When \(q_n=1\), the proof of (7.1) is similar to that of Theorem 2.1 of Wang and Tian (2019) or Wang and Wang (2014). In the following, we consider only the case \(q_n\ne 1\). Note that \(p_{\lambda _n}(\cdot )\ge 0\) and \(p_{\lambda _n}(0)=0\), and by Taylor expansion, we have

where \({\tilde{h}}(x_i^T\beta _0)\) lies between \(h(x_i^T(\beta _0+\alpha _nu))\) and \(h(x_i^T\beta _0)\), and \({\tilde{\beta }}\) and \(\beta ^*\) lie between \(\beta _0\) and \(\beta _0+\alpha _nu\).

Note that \(E(f^{-q_n}(e_i)f'(e_i))^2<\infty \). By condition (A) and the weak law of large numbers, we easily obtain

Since f, \(f'\), \(f''\) and h are continuous, we have

Thus, (7.1) follows from (7.2)–(7.5). \(\square \)

Proof of Lemma 3.2

It is sufficient to show, for \(j=s+1,\ldots ,d\),

By Taylor's expansion, we have

where \(e_{i0}=y_i-h(x_i^T\beta _0)\), \(e^*_i=y_i-h(x_i^T\beta ^*)\), \(\beta ^*\) is between \(\beta _0\) and \(\beta \). Observe that we have

By \(n^{-\frac{1}{2}}\lambda _n\rightarrow 0\), we have

Note that \(\liminf _{n\rightarrow \infty }\liminf _{\theta \rightarrow 0^+}\frac{p'_{\lambda _n}(\theta )}{\lambda _n}>0\), and \(\liminf _{n\rightarrow \infty }\liminf _{\theta \rightarrow 0^-}\frac{p'_{\lambda _n}(\theta )}{\lambda _n}<0\). Hence, (7.6) follows from (7.13). \(\square \)
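To make the sign condition on the penalty derivative concrete, consider the L1 penalty as an illustrative case (an assumption made here; the proof does not fix a specific penalty). With \(p_{\lambda _n}(\theta )=\lambda _n|\theta |\), we have \(p'_{\lambda _n}(\theta )=\lambda _n\,\mathrm{sgn}(\theta )\) for \(\theta \ne 0\), so

\[
\liminf _{n\rightarrow \infty }\liminf _{\theta \rightarrow 0^+}\frac{p'_{\lambda _n}(\theta )}{\lambda _n}=1>0,
\qquad
\liminf _{n\rightarrow \infty }\liminf _{\theta \rightarrow 0^-}\frac{p'_{\lambda _n}(\theta )}{\lambda _n}=-1<0.
\]

The SCAD penalty of Fan and Li (2001) behaves the same way near the origin, since its derivative equals \(\lambda _n\) for \(0<\theta \le \lambda _n\).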

Proof of Theorem 3.1

By Lemma 3.2, it remains only to prove the asymptotic normality.

For \(j=1,2,\ldots ,s\), we have

By (7.10), we have

For any \(u=(u_1,u_2,\ldots ,u_s)\) such that \(\Vert u\Vert =1\), we will prove that

Since \(\left\{ \sum _{j=1}^su_jx_{ij}f^{-q_n}(e_{i0})f'(e_{i0}),\quad i=1,2,\ldots ,n \right\} \) are independent random variables, we have

and

Note that

By the central limit theorem, we have

Thus (7.12) follows from \(E(f^{-q_n}(e_{i0})f'(e_{i0}))=0\) and (7.15). Note that

and

By (7.11), (7.12), (7.16) and (7.17), we complete the proof. \(\square \)

Proof of Theorem 4.1

The population-level counterpart of the objective function of the PLqE is given by

After differentiating with respect to \(\beta \) and setting its derivative equal to zero (the solution is denoted as \(\beta _{PL_qE}(F_\varepsilon )\)), we get

Differentiating (7.23) with respect to \(\varepsilon \) and taking the limit as \(\varepsilon \rightarrow 0\) yields

Note that

we easily obtain Theorem 4.1. \(\square \)

To verify Corollary 4.2, we first give a lemma (see Xia 2003).

Lemma 7.1

Let A and B be real symmetric matrices of order n, with A positive definite. Then A and B can be simultaneously diagonalized by congruence; that is, there exists an invertible matrix Q such that both \(Q^TAQ\) and \(Q^TBQ\) are diagonal.
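One standard way to construct such a matrix Q numerically is sketched below. It is not taken from Xia (2003); it assumes the usual route via a Cholesky factor of A followed by an orthogonal eigendecomposition, and the function name `simultaneous_diagonalize` is hypothetical.

```python
import numpy as np

def simultaneous_diagonalize(A, B):
    """Return (Q, d) with Q.T @ A @ Q = I and Q.T @ B @ Q = diag(d).

    Assumes A is symmetric positive definite and B is symmetric.
    """
    L = np.linalg.cholesky(A)        # A = L @ L.T with L lower triangular
    L_inv = np.linalg.inv(L)
    C = L_inv @ B @ L_inv.T          # congruent to B, still symmetric
    C = (C + C.T) / 2.0              # remove rounding asymmetry before eigh
    d, V = np.linalg.eigh(C)         # C = V @ diag(d) @ V.T with V orthogonal
    Q = L_inv.T @ V                  # invertible, since L and V are
    return Q, d

# Small usage example with an arbitrary positive definite A and symmetric B.
A = np.array([[4.0, 1.0], [1.0, 3.0]])
B = np.array([[1.0, 2.0], [2.0, -1.0]])
Q, d = simultaneous_diagonalize(A, B)
```

In this construction \(Q^TAQ\) is the identity matrix, which is a special case of the diagonal form in the lemma.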

Proof of Corollary 4.2

Let \(B=xx^T-b^{-1}U, A=bB^{-1}=(a_{ij})_{d\times d}\), \(l(e_0)=f^{-q}(e_0)f'(e_0)\), \(l(e)=f^{-q}(e)f'(e)\), \(D=l(e_0)x_0-E_{F_0}l(e)x\). Then

Note that

By Lemma 7.1, there exists an invertible matrix Q such that

Thus

Since \(b\ne w_i\), the matrix B is invertible. Therefore,

Obviously,

Since \(l(e)=(2\pi )^{\frac{q-1}{2}}\exp \{\frac{q-1}{2}e^2\}e\) is an odd function of e,

Thus, by (7.28) and (7.29), we have

The corollary follows from (7.25), (7.27) and (7.30). \(\square \)
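The properties of \(l(e)=f^{-q}(e)f'(e)\) used in this proof can be checked numerically. The sketch below assumes that f is the standard normal density, as the displayed form of \(l(e)\) suggests, and evaluates \(l\) directly from its definition; doing so gives the closed form with a leading minus sign, which does not affect oddness or the zero mean. The helper names are hypothetical.

```python
import math

def normal_pdf(e):
    """Standard normal density f(e)."""
    return math.exp(-0.5 * e * e) / math.sqrt(2.0 * math.pi)

def normal_pdf_prime(e):
    """Derivative f'(e) = -e * f(e)."""
    return -e * normal_pdf(e)

def l(e, q):
    """l(e) = f(e)**(-q) * f'(e), evaluated from the definition."""
    return normal_pdf(e) ** (-q) * normal_pdf_prime(e)

def expectation_under_normal(g, lo=-10.0, hi=10.0, steps=20000):
    """Trapezoidal approximation of E[g(e)] for e ~ N(0, 1)."""
    width = (hi - lo) / steps
    total = 0.0
    for k in range(steps + 1):
        e = lo + k * width
        weight = 0.5 if k in (0, steps) else 1.0
        total += weight * g(e) * normal_pdf(e)
    return total * width

q = 0.9
odd_gap = l(1.3, q) + l(-1.3, q)                       # zero for an odd function
mean_l = expectation_under_normal(lambda e: l(e, q))   # approximately zero
tail_ratio = abs(l(10.0, q)) / abs(l(3.0, q))          # below 1: l redescends
```

For q < 1 the factor \(\exp \{\frac{q-1}{2}e^2\}\) damps large residuals, so \(|l(e)|\) stays bounded, whereas at q = 1 it reduces to \(|e|\) and grows without bound. This is consistent with the robustness contrast between the PLqE and the penalized maximum likelihood estimator.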

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hu, H., Liu, M. & Zeng, Z. Penalized Lq-likelihood estimator and its influence function in generalized linear models. Metrika (2024). https://doi.org/10.1007/s00184-023-00943-z

DOI: https://doi.org/10.1007/s00184-023-00943-z