Abstract

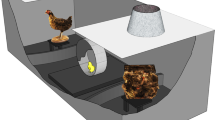

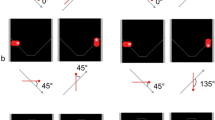

The purpose of the present study is to examine whether a computer-generated, virtual animal can be used to study animal cognition. Pigeons were trained to discriminate between movies of a real pigeon and a rat. They were then tested with movies of a computer-generated (CG) pigeon. The subjects generalized to the CG pigeon; however, they also responded to modified versions in which the CG pigeon showed impossible movement, namely hopping and walking without its head bobbing. Hence, the pigeons did not attend to these particular details of the display. When they were trained to discriminate between the normal and the modified version of the CG pigeon, they were able to learn the discrimination. The results of an additional partial occlusion test suggest that the subjects used head movement as a cue for the usual vs. unusual CG pigeon discrimination.

References

Adret P (1997) Discrimination of video images by zebra finches (Taeniopygia guttata): direct evidence from song performance. J Comp Psychol 111:115–125

Cook RG (2000) The comparative psychology of avian visual cognition. Curr Direct Psychol Sci 9:83–89

Cook RG, Shaw R, Blaisdell AP (2001) Dynamic object perception by pigeons: discrimination of action in video presentations. Anim Cogn 4:137–146

D’Eath RB (1998) Can video images imitate real stimuli in animal behavior experiments? Biol Rev Cambridge Philos Soc 73:267–292

D’Eath RB, Dawkins MS (1996) Laying hens do not discriminate between video images of conspecifics. Anim Behav 52:903–912

Dittrich WH, Lea SEG (1993) Motion as a natural category for pigeons: generalization and a feature-positive effect. J Exp Anal Behav 59:115–129

Dittrich WH, Lea SEG, Barrett J, Gurr PR (1998) Categorization of natural movements by pigeons: visual concept discrimination and biological motion. J Exp Anal Behav 70:281–299

Evans CS, Marler P (1991) On the use of video images as social stimuli in birds: audience effects on alarm calling. Anim Behav 41:17–26

Goto K, Lea SEG, Dittrich WH (2002) Discrimination of intentional and random motion paths by pigeons. Anim Cogn 5:119–127

Jitsumori M, Natori M, Okuyama K (1999) Recognition of moving video images of conspecifics by pigeons: effects of individuals, static and dynamic motion cues, and movement. Anim Learn Behav 27:303–315

Johansson G (1973) Visual perception of biological motion and a model for its analysis. Percept Psychophys 14:201–211

Partan S, Yelda S, Price V, Shimizu T (2005) Female pigeons, Columba livia, respond to multisensory audio/video playbacks of male courtship behaviour. Anim Behav 70:957–966

Patterson-Kane E, Nicol CJ, Foster TM, Temple W (1997) Limited perception of video images by domestic hens. Anim Behav 53:951–963

Powell RW (1967) The pulse-to-cycle fraction as a determinant of critical flicker fusion in the pigeon. Psychol Rec 17:151–160

Remy M, Emmerton J (1989) Behavioral spectral sensitivities of different retinal areas in pigeons. Behav Neurosci 103:170–177

Ryan CME, Lea SEG (1994) Images of conspecifics as categories to be discriminated by pigeons and chickens: slides, video tapes, stuffed birds and live birds. Behav Proc 33:155–175

Shimizu T (1998) Conspecific recognition in pigeons (Columba livia) using dynamic video images. Behav 135:43–53

Tinbergen N (1951) The study of instinct. Oxford Univ Press, London

Watanabe S (1993) Object-picture equivalence in the pigeon: An analysis with natural concept and pseudoconcept discrimination. Behav Proc 30:225–232

Watanabe S (1997) Visual discrimination of real objects and pictures in pigeons. Anim Learn Behav 25:185–192

Watanabe S (2001) Van Gogh, Chagall and pigeons: picture discrimination in pigeons and humans. Anim Cogn 4:147–151

Watanabe S (2002) Preference for mirror images and video image in Java sparrows (Padda oryzivora). Behav Proc 60:35–39

Watanabe S, Furuya I (1997) Video display for study of avian visual cognition: from psychophysics to sign language. Intl J Comp Psychol 10:111–127

Watanabe S, Huber L (2006) Animal logics: decisions in the absence of human language. Anim Cogn, DOI 10.1007/s10071-006-0043-6

Watanabe S, Jian T (1993) Visual and auditory cues in conspecific discrimination learning in Bengalese finches. J Eth 11:111–116

Yamazaki Y, Shinohara N, Watanabe S (2004) Visual discrimination of normal and drug induced behavior in quails (Coturnix coturnix japonica). Anim Cogn 7:128–132

Acknowledgements

This research was supported by the 21st Century Center of Excellence Program (D-1) and the Volkswagen Foundation. We are also grateful to Alias|Wavefront, who supplied us with a research donation of their software Maya. Treatment of the animals used in testing was in accordance with the Guidelines of Animal Experiments (Keio University).

Additional information

This contribution is part of the special issue ‘Animal Logics’ (Watanabe and Huber 2006).

Cite this article

Watanabe, S., Troje, N.F. Towards a “virtual pigeon”: A new technique for investigating avian social perception. Anim Cogn 9, 271–279 (2006). https://doi.org/10.1007/s10071-006-0048-1

DOI: https://doi.org/10.1007/s10071-006-0048-1