Abstract

The main result of this paper is that, for \(\kappa \in (0,4]\), whole-plane SLE\(_\kappa \) satisfies reversibility, which means that the time-reversal of a whole-plane SLE\(_\kappa \) trace is still a whole-plane SLE\(_\kappa \) trace. In addition, we find that the time-reversal of a radial SLE\(_\kappa \) trace for \(\kappa \in (0,4]\) is a disc SLE\(_\kappa \) trace with a marked boundary point. The main tool used in this paper is a stochastic coupling technique, which is used to couple two whole-plane SLE\(_\kappa \) traces so that they overlap. Another tool used is the Feynman–Kac formula, which is used to solve a PDE. The solution of this PDE is then used to construct the above coupling.


1 Introduction

The stochastic Loewner evolution (SLE), introduced by Oded Schramm [1], describes random fractal curves in plane domains that satisfy conformal invariance and the domain Markov property. These two properties make SLEs the most natural candidates for the scaling limits of many two-dimensional lattice models at criticality, and many such models have been proved or conjectured to converge to SLE with different parameters (e.g., [2–7]). For the basics of SLE, the reader may refer to [8] and [9].

There are several versions of SLE, of which chordal SLE and radial SLE are the best known. A chordal or radial SLE trace is a random fractal curve that grows in a simply connected plane domain from a boundary point. A chordal SLE trace ends at another boundary point, and a radial SLE trace ends at an interior point. The behavior of both depends on a positive parameter \(\kappa \). When \(\kappa \in (0,4]\), both traces are simple curves, and all points on the trace other than the initial and final points lie inside the domain. When \(\kappa >4\), the traces have self-intersections.

A stochastic coupling technique was introduced in [10] to prove that, for \(\kappa \in (0,4]\), chordal SLE\(_\kappa \) satisfies reversibility: if \(\beta \) is a chordal SLE\(_\kappa \) trace in a domain \(D\) from \(a\) to \(b\), then, after a time-change, the time-reversal of \(\beta \) is a chordal SLE\(_\kappa \) trace in \(D\) from \(b\) to \(a\). The technique was later used [11, 12] to prove Duplantier’s duality conjecture, which says that, for \(\kappa >4\), the boundary of the hull generated by a chordal SLE\(_\kappa \) trace looks locally like an SLE\(_{16/\kappa }\) trace. It was also used to prove that radial or chordal SLE\(_2\) can be obtained by erasing loops on a planar Brownian motion [13], and that the chordal SLE\((\kappa ,\rho )\) introduced in [2] satisfies reversibility for \(\kappa \in (0,4]\) and \(\rho \ge \kappa /2-2\) [14].

Since the initial and final points of a radial SLE are topologically different, the time-reversal of a radial SLE trace cannot be a radial SLE trace. However, we may consider whole-plane SLE instead, which describes a random fractal curve in the Riemann sphere \(\widehat{\mathbb {C}}=\mathbb {C}\cup \{\infty \}\) that grows from one interior point to another. Whole-plane SLE is related to radial SLE as follows: conditioned on an initial part of a whole-plane SLE\(_\kappa \) trace, the remaining part of the trace has the distribution of a radial SLE\(_\kappa \) trace that grows in the complement of that initial part. The main result of this paper is the following theorem.

Theorem 1.1

Whole-plane SLE\(_\kappa \) satisfies reversibility for \(\kappa \in (0,4]\).

The case \(\kappa =2\) of the theorem was proved in [15]. That proof used the reversibility of loop-erased random walk (LERW for short, see [16]) and the convergence of LERW to whole-plane SLE\(_2\). In this paper we obtain a slightly more general result, stated as Theorem 7.1: the reversibility of the whole-plane SLE\((\kappa ,s)\) process, which is defined by adding a constant drift to the driving function of the whole-plane SLE\(_\kappa \) process.

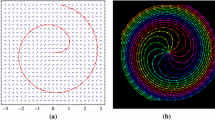

To give some idea of the proof, let us first review the proof of the reversibility of chordal SLE\(_\kappa \) in [10]. We constructed a pair of chordal SLE\(_\kappa \) traces \(\gamma _1\) and \(\gamma _2\) in a simply connected domain \(D\), where \(\gamma _1\) grows from a boundary point \(a_1\) to another boundary point \(a_2\), \(\gamma _2\) grows from \(a_2\) to \(a_1\), and the two traces commute in the following sense. Fix \(j\ne k\in \{1,2\}\). If \(T_k\) is a stopping time for \(\gamma _k\), then, conditioned on \(\gamma _k(t)\), \(t\le T_k\), the part of \(\gamma _j\) before hitting \(\gamma _k((0,T_k])\) has the distribution of a chordal SLE\(_\kappa \) trace that grows from \(a_j\) to \(\gamma _k(T_k)\) in \(D_k(T_k)\), which is a component of \(D{\setminus }\gamma _k((0,T_k])\). In the case \(\kappa \le 4\), a.s. \(\gamma _j\) hits \(\gamma _k((0,T_k])\) exactly at \(\gamma _k(T_k)\), so \(\gamma _j\) visits \(\gamma _k(T_k)\) before any \(\gamma _k(t)\), \(t<T_k\). Since this holds for every stopping time \(T_k\) for \(\gamma _k\), the two traces a.s. overlap, which implies reversibility.

To prove the reversibility of whole-plane SLE\(_\kappa \), we want to construct two whole-plane SLE\(_\kappa \) traces in \(D=\widehat{\mathbb {C}}\), namely \(\gamma _1\) from \(a_1\) to \(a_2\) and \(\gamma _2\) from \(a_2\) to \(a_1\), so that \(\gamma _1\) and \(\gamma _2\) commute. Here we cannot expect them to commute in exactly the same sense as in the previous paragraph. Conditioned on \(\gamma _k(t)\), \(t\le T_k\), the part of \(\gamma _j\) before hitting \(\gamma _k(t)\), \(t\le T_k\), cannot have the distribution of a whole-plane SLE\(_\kappa \) trace in \(D_k(T_k)\) from \(a_j\) to \(\gamma _k(T_k)\), because the complementary domain \(D_k(T_k)\) is now topologically different from \(\widehat{\mathbb {C}}\), while whole-plane SLEs are defined only in \(\widehat{\mathbb {C}}\). Since the conditional curve grows from an interior point to a boundary point, it is neither a radial SLE trace nor a chordal SLE trace.

Thus, we need to define SLE traces in simply connected domains that grow from an interior point to a boundary point. We adapt the idea of defining whole-plane SLE through radial SLE. The situation here is a little different: after an initial segment of positive duration, the remaining part of the curve grows in a doubly connected domain. Another difference is that there is a marked point on the boundary of the initial domain. In this paper, we use the annulus Loewner equation introduced in [17], together with an annulus drift function \(\Lambda =\Lambda (t,x)\), to define the so-called annulus SLE\((\kappa ,\Lambda )\) process in a doubly connected domain \(D\), which starts from a point \(a\in \partial D\) and whose growth is affected by a marked point \(b\in \partial D\). When \(a\) and \(b\) lie on different boundary components, shrinking the boundary component containing \(a\) to a single point gives the so-called disc SLE\((\kappa ,\Lambda )\), which describes a random curve that grows in a simply connected domain from an interior point.

We find that if \(\Lambda =\kappa \frac{\Gamma '}{\Gamma }\), where \(\Gamma \) is a positive differentiable function on \((0,\infty )\times \mathbb {R}\) that solves a linear PDE and satisfies a periodicity condition [see (4.1) and (4.2)], then the coupling technique yields a coupling of two whole-plane SLE\(_\kappa \) traces \(\gamma _1\) and \(\gamma _2\) that commute in the following sense: conditioned on one curve up to a finite stopping time \(T\), the other curve is a disc SLE\((\kappa ,\Lambda )\) trace in the remaining domain, whose marked point is the tip of the first curve at time \(T\).

The main new idea in the current paper is the use of a Feynman–Kac representation to obtain a formal solution of the PDE for \(\Gamma \) in the case \(\kappa \in (0,4]\). Using Fubini’s theorem, Itô’s formula, and some estimates, we prove that the formal solution \(\Gamma _\kappa \) is smooth and solves the PDE. We then show that \(\Lambda _\kappa {:=}\kappa \frac{\Gamma _\kappa '}{\Gamma _\kappa }\) has the property that the marked point of an annulus or disc SLE\((\kappa ,\Lambda _\kappa )\) process is a subsequential limit point of the trace. This property implies that, if two whole-plane SLE\(_\kappa \) traces commute as in the previous paragraph, then they must overlap, which proves the main theorem. Moreover, from the relation between whole-plane SLE\(_\kappa \) and radial SLE\(_\kappa \), we conclude that, for \(\kappa \in (0,4]\), the time-reversal of a radial SLE\(_\kappa \) trace is a disc SLE\((\kappa ,\Lambda _\kappa )\) trace.

The marked point and the initial point of an annulus SLE\((\kappa ,\Lambda )\) process may also lie on the same boundary component. In this case, if \(\Lambda =\kappa \frac{\Gamma '}{\Gamma }\) and \(\Gamma \) satisfies a similar linear PDE [see (4.48)], then for a doubly connected domain \(D\) with two boundary points \(a_1\) and \(a_2\) on the same boundary component, we can construct a pair of annulus SLE\((\kappa ,\Lambda )\) traces \(\gamma _1\) and \(\gamma _2\) in \(D\) that commute with each other. If an SLE process in a doubly connected domain is the scaling limit of some lattice random path that is reversible at the discrete level, then that SLE should also satisfy reversibility. We hope that the work in this paper will shed some light on the study of these processes.

The study of commutation relations of SLE in doubly connected domains continues the work of Dubédat [18], who used tools from Lie algebra to obtain commutation conditions for SLE in simply connected domains. The annulus SLE\((\kappa ,\Lambda _{\kappa })\) process used to prove the reversibility of whole-plane SLE\(_\kappa \) was later studied in [19]. When \(\kappa =8/3\), the process satisfies a restriction property similar to the restriction property of chordal SLE\(_{8/3}\) (see [2]). For \(\kappa \in (0,4]{\setminus }\{8/3\}\), it satisfies a “weak” restriction property.

Lawler [20] used a different method, based on Brownian loop measures, to define annulus SLE\(_\kappa \) processes for \(\kappa \in (0,4]\), which agree with our annulus SLE\((\kappa ,\Lambda _{\kappa })\) processes. The “strong” (\(\kappa =8/3\)) and “weak” (\(\kappa \ne 8/3\)) restriction properties of Lawler’s annulus SLE processes are immediate from the definition, and the reversibility of these processes follows from chordal reversibility. However, the reversibility of whole-plane SLE is not proved in [20]; obtaining it from Lawler’s work would require additional arguments. In this paper, the reversibility of annulus SLE\((\kappa ,\Lambda _{\kappa })\) and the reversibility of whole-plane SLE\(_\kappa \) are proved separately; both proofs use the coupling technique and are similar.

Miller and Sheffield [21] recently proved the reversibility of whole-plane SLE for all \(\kappa \in [0,8]\). Their proof uses the imaginary geometry of the Gaussian free field developed in their earlier papers (cf. [22]).

This paper is organized as follows. In Sect. 2, we introduce some symbols and notations. In Sect. 3, we review several versions of Loewner equations. In Sect. 3.4, we define annulus SLE\((\kappa ,\Lambda )\) and disc SLE\((\kappa ,\Lambda )\) processes, whose growth is affected by one marked boundary point. In Sect. 4 we prove that when \(\Gamma \) solves (4.1) or (4.48), there is a commutation coupling of two annulus SLE\((\kappa ,\Lambda )\) processes, where \(\Lambda =\kappa \frac{\Gamma '}{\Gamma }\). In Sect. 5, we construct a coupling of two whole-plane SLE processes, which is similar to the coupling in the previous section. In Sect. 6, we solve PDE (4.1) using a Feynman–Kac expression, and the solution is then used in Sect. 7 to prove the reversibility of whole-plane SLE\(_\kappa \) process. In fact, we obtain a slightly more general result: the reversibility of skew whole-plane SLE\(_\kappa \) processes for \(\kappa \in (0,4]\). In the last section, we find some solutions to the PDE for \(\Gamma \) and \(\Lambda \) when \(\kappa \in \{0,2,3,4,16/3\}\), which can be expressed in terms of well-known special functions.

2 Preliminary

2.1 Symbols

Throughout this paper, we will use the following symbols. Let \(\widehat{\mathbb {C}}=\mathbb {C}\cup \{\infty \}\), \(\mathbb {D}=\{z\in \mathbb {C}:|z|<1\}\), \(\mathbb {T}=\{z\in \mathbb {C}:|z|=1\}\), and \(\mathbb {H}=\{z\in \mathbb {C}:{{\mathrm{Im }}}z>0\}\). For \(p>0\), let \(\mathbb {A}_p=\{z\in \mathbb {C}:1>|z|> e^{-p}\}\) and \(\mathbb {S}_p=\{z\in \mathbb {C}:0< {{\mathrm{Im }}}z<p\}\). For \(p\in \mathbb {R}\), let \(\mathbb {T}_p=\{z\in \mathbb {C}:|z|=e^{-p}\}\) and \(\mathbb {R}_p=\{z\in \mathbb {C}:{{\mathrm{Im }}}z=p\}\). Then \(\partial \mathbb {D}=\mathbb {T}\), \(\partial \mathbb {H}=\mathbb {R}\), \(\partial \mathbb {A}_p=\mathbb {T}\cup \mathbb {T}_p\), and \(\partial \mathbb {S}_p=\mathbb {R}\cup \mathbb {R}_p\). Let \(e^i\) denote the map \(z\mapsto e^{iz}\). Then \(e^i\) is a covering map from \(\mathbb {H}\) onto \(\mathbb {D}\), and from \(\mathbb {S}_p\) onto \(\mathbb {A}_p\); and it maps \(\mathbb {R}\) onto \(\mathbb {T}\) and maps \(\mathbb {R}_p\) onto \(\mathbb {T}_p\). For a doubly connected domain \(D\), we use \({{\mathrm{mod}}}(D)\) to denote its modulus. For example, \({{\mathrm{mod}}}(\mathbb {A}_p)=p\).

A conformal map in this paper is a univalent analytic function. A conjugate conformal map is defined to be the complex conjugate of a conformal map. Let \(I_0(z)=1/\overline{z}\) be the reflection w.r.t. \(\mathbb {T}\). Then \(I_0\) is a conjugate conformal map from \(\widehat{\mathbb {C}} \) onto itself, fixes \(\mathbb {T}\), and interchanges \(0\) and \(\infty \). Let \(\widetilde{I}_0(z)=\overline{z}\) be the reflection w.r.t. \(\mathbb {R}\). Then \(\widetilde{I}_0\) is a conjugate conformal map from \(\mathbb {C}\) onto itself and satisfies \(e^i\circ \widetilde{I}_0=I_0\circ e^i\). For \(p>0\), let \(I_p(z){:=}e^{-p}/\overline{z}\) and \(\widetilde{I}_p(z)=ip+\overline{z}\). Then \(I_p\) and \(\widetilde{I}_p\) are conjugate conformal automorphisms of \(\mathbb {A}_p\) and \(\mathbb {S}_p\), respectively. Moreover, \(I_p\) interchanges \(\mathbb {T}_p\) and \(\mathbb {T}\), \(\widetilde{I}_p\) interchanges \(\mathbb {R}_p\) and \(\mathbb {R}\), and \(I_p\circ e^i =e^i\circ \widetilde{I}_p\).
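The covering relations above are easy to confirm numerically. The following Python sketch (standard library only; the helper names `e_i`, `I_p`, `I_tilde_p`, and `in_annulus` are ours, not the paper's) samples points of \(\mathbb {S}_p\), checks that \(e^i\) sends them into \(\mathbb {A}_p\), and checks \(I_p\circ e^i=e^i\circ \widetilde{I}_p\). The identity holds because both sides equal \(e^{-p}e^{i\overline{z}}\).

```python
import cmath
import math

def e_i(z: complex) -> complex:
    """The covering map e^i: z -> e^{iz}."""
    return cmath.exp(1j * z)

def I_p(z: complex, p: float) -> complex:
    """Reflection I_p(z) = e^{-p} / conj(z), a conjugate automorphism of A_p."""
    return math.exp(-p) / z.conjugate()

def I_tilde_p(z: complex, p: float) -> complex:
    """Reflection I~_p(z) = ip + conj(z), a conjugate automorphism of S_p."""
    return 1j * p + z.conjugate()

def in_annulus(w: complex, p: float) -> bool:
    """Membership test for A_p = {e^{-p} < |w| < 1}."""
    return math.exp(-p) < abs(w) < 1

p = 1.5
# Every sample lies in the strip S_p = {0 < Im z < p}.
samples = [complex(x, y) for x in (-4.0, 0.3, 2.0, 7.1) for y in (0.1, 0.75, 1.4)]
for z in samples:
    assert in_annulus(e_i(z), p)  # |e^{iz}| = e^{-Im z} lies in (e^{-p}, 1)
    assert abs(I_p(e_i(z), p) - e_i(I_tilde_p(z, p))) < 1e-12
print("covering relations verified at", len(samples), "points")
```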

We will frequently use functions \(\cot (z/2)\), \(\tan (z/2)\), \(\coth (z/2)\), \(\tanh (z/2)\), \(\sin (z/2)\), \(\cos (z/2)\), \(\sinh (z/2)\), and \(\cosh (z/2)\). For simplicity, we write \(2\) as a subscript. For example, \(\cot _2(z)\) means \(\cot (z/2)\), and we have \(\cot _2'(z)=-\frac{1}{2}\sin _2^{-2}(z)\).

An increasing function in this paper is always strictly increasing. For a real interval \(J\), we use \(C(J)\) to denote the space of real continuous functions on \(J\). The maximal solution of an ODE or SDE with a given initial value is the solution with the largest domain of definition.

Many functions in this paper depend on two variables. For some of these functions, the first variable represents time or modulus, and the second variable does not. In this case, we use \(\partial _t\) and \(\partial _t^{n}\) to denote the partial derivatives w.r.t. the first variable, and use \('\), \(''\), and the superscripts \((h)\) to denote the partial derivatives w.r.t. the second variable. We say that such a function has period \(r\) (resp. is even or odd) if it has period \(r\) (resp. is even or odd) in the second variable when the first variable is fixed. Some functions in Sects. 4 and 5 depend on two variables, \(t_1\) and \(t_2\), which both represent time. In this case we use \(\partial _j\) to denote the partial derivative w.r.t. the \(j\)-th variable, \(j=1,2\).

In this paper, a domain is a connected open subset of \(\widehat{\mathbb {C}}\), and a continuum is a connected compact subset of \(\widehat{\mathbb {C}}\) that contains more than one point. A continuum \(K\) is called a hull in \(\mathbb {C}\) if \(K\subset \mathbb {C}\) and \(\widehat{\mathbb {C}}{\setminus }\! K\) is connected. In this case, there is a unique conformal map \(f_K\) from \(\widehat{\mathbb {C}}{\setminus }\overline{\mathbb {D}}\) onto \(\widehat{\mathbb {C}}{\setminus }\! K\) that satisfies \(\lim _{z\rightarrow \infty } f_K(z)/z=a_K\) for some positive number \(a_K\). Then \(a_K\) is called the capacity of \(K\), and is denoted by \({{\mathrm{cap}}}(K)\).
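A standard example of this capacity: for the segment \(K=[-2,2]\), the Joukowski map \(w\mapsto w+1/w\) sends \(\widehat{\mathbb {C}}{\setminus }\overline{\mathbb {D}}\) conformally onto \(\widehat{\mathbb {C}}{\setminus }K\) with \(f_K(z)/z\rightarrow 1\), so \({{\mathrm{cap}}}([-2,2])=1\); likewise, a closed disk of radius \(r\) centered at \(0\) has capacity \(r\). The Python sketch below, whose helper names are illustrative choices of ours, checks the map and its inverse numerically.

```python
import cmath
import math

def f_K(w: complex) -> complex:
    """Joukowski map: exterior of the closed unit disk -> complement of K = [-2, 2]."""
    return w + 1 / w

def g_K(z: complex) -> complex:
    """Inverse of f_K off the segment: the root of w^2 - z w + 1 = 0 with |w| > 1."""
    s = cmath.sqrt(z * z - 4)
    w1, w2 = (z + s) / 2, (z - s) / 2  # w1 * w2 = 1, so exactly one has modulus > 1
    return w1 if abs(w1) >= abs(w2) else w2

# The unit circle T is folded onto the segment: f_K(e^{i theta}) = 2 cos(theta).
for k in range(12):
    theta = 2 * math.pi * k / 12
    assert abs(f_K(cmath.exp(1j * theta)) - 2 * math.cos(theta)) < 1e-12

# f_K(z)/z -> 1 at infinity, hence cap([-2, 2]) = 1.
big = 1e8
print("f_K(z)/z at z = 1e8:", f_K(big) / big)

# Round trip through the inverse map at a few points off the segment.
for z in (3.0, -5.0 + 0.1j, 0.5 + 2j, 1j):
    assert abs(f_K(g_K(z)) - z) < 1e-12 and abs(g_K(z)) > 1
```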

A doubly connected domain in this paper is a domain whose complement is a disjoint union of two continua. Let \(D\) be a doubly connected domain. If \(K\) is a relatively closed subset of \(D\) that has positive distance from one boundary component of \(D\), and \(D{\setminus }K\) is also doubly connected, then we call \(K\) a hull in \(D\), call the number \({{\mathrm{mod}}}(D)-{{\mathrm{mod}}}(D{\setminus }K)\) the capacity of \(K\) in \(D\), and denote it by \({{\mathrm{cap}}}_{D}(K)\).

2.2 Brownian motions

Throughout this paper, a Brownian motion means a standard one-dimensional Brownian motion, and \(B(t)\), \(0\le t<\infty \), will always be used to denote a Brownian motion. This means that \(B(t)\) is continuous, \(B(0)=0\), and \(B(t)\) has independent increments with \(B(t)-B(s)\sim N(0,t-s)\) for \(t\ge s\ge 0\). For \(\kappa \ge 0\), the rescaled Brownian motion \(\sqrt{\kappa }B(t)\) will be used to define annulus SLE\(_\kappa \). The symbols \(B_*(t)\), \(\widehat{B}_*(t)\), or \(\widetilde{B}_*(t)\) will also be used to denote a Brownian motion, where \(*\) stands for a subscript. Let \(({\mathcal {F}}_t)_{t\ge 0}\) be a filtration. By saying that \(B(t)\) is an \(({\mathcal {F}}_t)\)-Brownian motion, we mean that \((B(t))\) is \(({\mathcal {F}}_t)\)-adapted, and for any fixed \(t_0\ge 0\), \(B(t_0+t)-B(t_0)\), \(t\ge 0\), is a Brownian motion independent of \({\mathcal {F}}_{t_0}\).

Definition 2.1

Let \(\kappa >0\) and \(({\mathcal {F}}_t)_{t\in \mathbb {R}}\) be a right-continuous filtration. A process \(B^{(\kappa )}(t)\), \(t\in \mathbb {R}\), is called a pre-\(({\mathcal {F}}_t)\)-\((\mathbb {T};\kappa )\)-Brownian motion if \((e^i(B^{(\kappa )}(t)))\) is \(({\mathcal {F}}_t)\)-adapted, and for any \(t_0\in \mathbb {R}\),

\[B_{t_0}(t):=\frac{B^{(\kappa )}(t_0+t)-B^{(\kappa )}(t_0)}{\sqrt{\kappa }},\quad t\ge 0, \tag{2.1}\]

is an \(({\mathcal {F}}_{t_0+t})\)-Brownian motion. If \(({\mathcal {F}}_t)\) is generated by \((e^i(B^{(\kappa )}(t)))\), then we simply call \((B^{(\kappa )}(t))\) a pre-\((\mathbb {T};\kappa )\)-Brownian motion.

Remark

The name of the pre-\((\mathbb {T};\kappa )\)-Brownian motion comes from the fact that \(B_{\mathbb {T}}(t):=e^i(B^{(\kappa )}(t))\), \(t\in \mathbb {R}\), is a Brownian motion on \(\mathbb {T}\) with speed \(\kappa \): for every \(t_0\in \mathbb {R}\), \(B_{\mathbb {T}}(t_0)\) is uniformly distributed on \(\mathbb {T}\); and \(B_{\mathbb {T}}(t_0+t)/B_{\mathbb {T}}(t_0)\), \(t\ge 0\), has the distribution of \(e^i(\sqrt{\kappa }B(t))\), \(t\ge 0\), and is independent of \(B_{\mathbb {T}} (t)\), \(t\le t_0\). One may construct \(B^{(\kappa )}(t)\) as follows. Let \(B_+(t)\) and \(B_-(t)\), \(t\ge 0\), be two independent Brownian motions. Let \(\mathbf{x}\) be a random variable uniformly distributed on \([0,2\pi )\), which is independent of \((B_\pm (t))\). Let \(B^{(\kappa )}(t) =\mathbf{x}+\sqrt{\kappa }B_{{{\mathrm{sign}}}(t)}(|t|)\) for \(t\in \mathbb {R}\). Then \(B^{(\kappa )}(t)\), \(t\in \mathbb {R}\), is a pre-\((\mathbb {T};\kappa )\)-Brownian motion.
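The construction in the Remark can be simulated directly. The sketch below is a discretized version under our own assumptions: the Brownian motions \(B_\pm \) are replaced by Gaussian random walks on a grid of mesh \(L/n\), and the function name `pre_T_brownian_motion` is hypothetical. It returns samples of \(B^{(\kappa )}(t)\) for \(t\in [-L,L]\); the point \(e^i(B^{(\kappa )}(t))\) is then the driving point on \(\mathbb {T}\).

```python
import math
import random

def pre_T_brownian_motion(kappa: float, L: float, n: int, seed: int = 0):
    """Sample B^(kappa)(t) at t_j = j*L/n, j = -n..n, following the Remark:
    B^(kappa)(t) = x + sqrt(kappa) * B_{sign(t)}(|t|), with x ~ Uniform[0, 2*pi).
    B_+ and B_- are approximated by independent Gaussian random walks."""
    rng = random.Random(seed)
    dt = L / n
    x = rng.uniform(0.0, 2 * math.pi)

    def walk():
        path = [0.0]
        for _ in range(n):
            path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))
        return path

    b_plus, b_minus = walk(), walk()
    times = [j * dt for j in range(-n, n + 1)]
    values = [x + math.sqrt(kappa) * (b_plus[j] if j >= 0 else b_minus[-j])
              for j in range(-n, n + 1)]
    return times, values

times, values = pre_T_brownian_motion(kappa=2.0, L=1.0, n=1000, seed=42)
# Driving points e^{i B^(kappa)(t)} on the unit circle T.
driving_points = [complex(math.cos(v), math.sin(v)) for v in values]
```

Forward increments over one grid step should have variance close to \(\kappa L/n\) on both sides of \(t=0\), since for \(t<0\) they are increments of the time-reversed walk \(B_-\), which is again a random walk with the same step distribution.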

Definition 2.2

Let \(B^{(\kappa )}(t)\), \(t\in \mathbb {R}\), be a pre-\(({\mathcal {F}}_t)\)-\((\mathbb {T};\kappa )\)-Brownian motion, where \(({\mathcal {F}}_t)\) is right-continuous, and every \({\mathcal {F}}_t\) contains all negligible events w.r.t. the process \((e^i(B^{(\kappa )}(t)))\). Suppose \(T\) is an \(({\mathcal {F}}_t)\)-stopping time, and \(T>t_0\) for a deterministic number \(t_0\in \mathbb {R}\). We say that \(X(t)\) satisfies the \(({\mathcal {F}}_t)\)-adapted SDE

\[dX(t)=a(t)\,dB^{(\kappa )}(t)+b(t)\,dt,\quad -\infty <t<T,\]

if \(e^i(X(t))\), \(a(t)\), and \(b(t)\) are continuous and \(({\mathcal {F}}_t)\)-adapted, and if for any deterministic number \(t_0\) with \(t_0<T\), \(X_{t_0}(t) \,{:=}\,X(t_0+t)-X(t_0)\) satisfies the following \(({\mathcal {F}}_{t_0+t})_{t\ge 0}\)-adapted SDE in the traditional sense (cf. Chapter IV, Section 3 of [23]):

\[dX_{t_0}(t)=\sqrt{\kappa }\,a_{t_0}(t)\,dB_{t_0}(t)+b_{t_0}(t)\,dt,\quad 0\le t<T-t_0,\]

where \(B_{t_0}(t)\) is given by (2.1), \(a_{t_0}(t)\,{:=}\,a({t_0}\!+\!t)\), and \(b_{t_0}(t)\,{:=}\,b({t_0}\!+\!t)\). Note that \(B_{t_0}(t)\) is an \(({\mathcal {F}}_{{t_0}\!+\!t})_{t\ge 0}\)-Brownian motion, and \(X_{t_0}(t)\), \(a_{t_0}(t)\), and \(b_{t_0}(t)\) are all \(({\mathcal {F}}_{{t_0}\!+\!t})_{t\ge 0}\)-adapted.

2.3 Special functions

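We now introduce some functions that will be used to define annulus Loewner equations. For \(t>0\), define

\[\mathbf{S}(t,z)=\lim _{M\rightarrow \infty }\sum _{k=-M}^{M}\frac{e^{2kt}+z}{e^{2kt}-z},\qquad \mathbf{H}(t,z)=-i\,\mathbf{S}(t,e^{iz}).\]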

Then \(\mathbf{H}(t,\cdot )\) is a meromorphic function in \(\mathbb {C}\), whose poles are \(\{2m\pi +i2kt:m,k\in \mathbb {Z}\}\), which are all simple poles with residue \(2\). Moreover, \(\mathbf{H}(t,\cdot )\) is an odd function and takes real values on \(\mathbb {R}{\setminus }\{\text{ poles }\}\); \({{\mathrm{Im }}}\mathbf{H}(t,\cdot )\equiv -1\) on \(\mathbb {R}_t\); \(\mathbf{H}(t,z+2\pi )=\mathbf{H}(t,z)\) and \(\mathbf{H}(t,z+i2t)=\mathbf{H}(t,z)-2i\) for any \(z\in \mathbb {C}{\setminus }\!\{\text{ poles }\}\).

The Laurent expansion of \(\mathbf{H}(t,\cdot )\) near \(0\) is

\[\mathbf{H}(t,z)=\frac{2}{z}+\mathbf{r}(t)\,z+O(z^3), \tag{2.2}\]

where \( \mathbf{r}(t)=\sum _{k=1}^\infty \sinh ^{-2}(kt)-\frac{1}{6}\). As \(t\rightarrow \infty \), \(\mathbf{S}(t,z)\rightarrow \frac{1+z}{1-z}\), \(\mathbf{H}(t,z)\rightarrow \cot _2(z)\), and \(\mathbf{r}(t)\rightarrow -\frac{1}{6}\). So we define \(\mathbf{S}(\infty ,z)=\frac{1+z}{1-z}\), \(\mathbf{H}(\infty ,z)=\cot _2(z)\), and \(\mathbf{r}(\infty )=-\frac{1}{6}\). Then \(\mathbf{r}\) is continuous on \((0,\infty ]\), and (2.2) still holds when \(t=\infty \). In fact, we have \(\mathbf{r}(t)-\mathbf{r}(\infty ) =O(e^{-t})\) as \(t\rightarrow \infty \), so we may define \(\mathbf{R}\) on \((0,\infty ]\) by \( \mathbf{R}(t) =-\int _t^\infty (\mathbf{r}(s)-\mathbf{r}(\infty ))ds\). Then \(\mathbf{R}\) is continuous on \((0,\infty ]\), \(\mathbf{R}(t)=O(e^{-t})\) as \(t\rightarrow \infty \), and for \(0<t<\infty \),

\[\mathbf{R}'(t)=\mathbf{r}(t)+\frac{1}{6}. \tag{2.3}\]
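The series for \(\mathbf{H}\) converges fast enough to check (2.2) numerically. The sketch below assumes the standard annulus-Loewner definitions \(\mathbf{S}(t,z)=\lim _{M\rightarrow \infty }\sum _{k=-M}^{M}\frac{e^{2kt}+z}{e^{2kt}-z}\) and \(\mathbf{H}(t,z)=-i\mathbf{S}(t,e^{iz})\), which agree with the limits \(\mathbf{S}(\infty ,z)=\frac{1+z}{1-z}\) and \(\mathbf{H}(\infty ,z)=\cot _2(z)\) stated above; the truncation level and the helper names are our own choices. It compares \((\mathbf{H}(t,z)-2/z)/z\) at small \(z\) with \(\mathbf{r}(t)\).

```python
import cmath
import math

def S(t: float, z: complex) -> complex:
    """Symmetric partial sum of S(t, z) = lim_M sum_{k=-M}^{M} (e^{2kt} + z) / (e^{2kt} - z)."""
    M = max(1, math.ceil(30.0 / t))  # outer terms k and -k cancel to within about e^{-60}
    total = 0j
    for k in range(-M, M + 1):
        a = math.exp(2 * k * t)
        total += (a + z) / (a - z)
    return total

def H(t: float, z: complex) -> complex:
    """H(t, z) = -i S(t, e^{iz})."""
    return -1j * S(t, cmath.exp(1j * z))

def r(t: float) -> float:
    """r(t) = sum_{k >= 1} sinh(kt)^{-2} - 1/6, truncated once kt exceeds 300."""
    K = max(1, int(300.0 / t))
    return sum(1.0 / math.sinh(k * t) ** 2 for k in range(1, K + 1)) - 1.0 / 6

t, z = 1.0, 1e-3
# By (2.2), (H(t, z) - 2/z) / z = r(t) + O(z^2).
print(((H(t, z) - 2 / z) / z).real, r(t))
```

The same helpers can be used to spot-check the other properties listed above, such as oddness, realness on \(\mathbb {R}\), \(\mathbf{H}(t,z+i2t)=\mathbf{H}(t,z)-2i\), and the limit \(\cot _2\) for large \(t\).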

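Let \(\mathbf{S}_I(t,z)=\mathbf{S}(t,e^{-t}z)-1\) and \(\mathbf{H}_I(t,z)=-i\mathbf{S}_I(t,e^{iz})=\mathbf{H}(t,z+it)+i\). It is easy to check:

\[\mathbf{S}_I(t,z)=\lim _{M\rightarrow \infty }\sum _{k=-M}^{M-1}\frac{e^{(2k+1)t}+z}{e^{(2k+1)t}-z},\qquad \mathbf{H}_I(t,z)=\sum _{k=0}^{\infty }\frac{2\sin z}{\cosh ((2k+1)t)-\cos z}. \tag{2.4}\]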

So \(\mathbf{H}_I(t,\cdot )\) is a meromorphic function in \(\mathbb {C}\) with poles \(\{2m\pi +i(2k+1)t:m,k\in \mathbb {Z}\}\), which are all simple poles with residue \(2\); \(\mathbf{H}_I(t,\cdot )\) is an odd function and takes real values on \(\mathbb {R}\); and \(\mathbf{H}_I(t,z+2\pi )=\mathbf{H}_I(t,z)\), \(\mathbf{H}_I(t,z+i2t)=\mathbf{H}_I(t,z)-2i\) for any \(z\in \mathbb {C}{\setminus }\!\{\text{ poles }\}\).
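Pairing the indices \(2k+1\) and \(-(2k+1)\) in the sum for \(\mathbf{S}_I\) (our own rewriting, assuming the symmetric-sum definition of \(\mathbf{S}\)) gives the real form \(\mathbf{H}_I(t,z)=\sum _{k\ge 0}\frac{2\sin z}{\cosh ((2k+1)t)-\cos z}\), from which oddness, realness on \(\mathbb {R}\), the location of the poles, and the decay as \(t\rightarrow \infty \) can be read off. The sketch below checks numerically that this series agrees with \(\mathbf{H}(t,z+it)+i\); the helper names are hypothetical.

```python
import cmath
import math

def H(t: float, z: complex) -> complex:
    """H(t, z) = -i S(t, e^{iz}), with S summed symmetrically over k = -M..M."""
    w = cmath.exp(1j * z)
    M = max(1, math.ceil(30.0 / t))
    return -1j * sum((math.exp(2 * k * t) + w) / (math.exp(2 * k * t) - w)
                     for k in range(-M, M + 1))

def H_I_from_H(t: float, z: complex) -> complex:
    """H_I(t, z) = H(t, z + it) + i, as stated in the text."""
    return H(t, z + 1j * t) + 1j

def H_I_series(t: float, z: complex) -> complex:
    """Real-form series from pairing the indices 2k+1 and -(2k+1) in S_I (our rewriting)."""
    K = max(1, int(300.0 / t))  # keeps cosh((2k+1)t) below float overflow
    return sum(2 * cmath.sin(z) / (math.cosh((2 * k + 1) * t) - cmath.cos(z))
               for k in range(K))

t, z = 1.2, 0.4 + 0.3j
print(H_I_from_H(t, z), H_I_series(t, z))  # the two evaluations should agree
```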

It is possible to express \(\mathbf{H}\) and \(\mathbf{H}_I\) using classical functions. Let \(\theta (\nu ,\tau )\) and \(\theta _k(\nu ,\tau )\), \(k=1,2,3\), be the Jacobi theta functions defined in Chapter V, Section 3 of [24]. Define \(\Theta (t,z)=\theta \left( \frac{z}{2\pi },\frac{it}{\pi }\right) \) and \(\Theta _I(t,z)=\theta _2\left( \frac{z}{2\pi },\frac{it}{\pi }\right) \). Then \(\Theta _I\) has period \(2\pi \), \(\Theta \) has antiperiod \(2\pi \), and

\[\mathbf{H}(t,z)=2\,\frac{\Theta '(t,z)}{\Theta (t,z)},\qquad \mathbf{H}_I(t,z)=2\,\frac{\Theta _I'(t,z)}{\Theta _I(t,z)}.\]

These follow from the product representations of \(\Theta \) and \(\Theta _I\). For example,

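Both \(\Theta \) and \(\Theta _I\) solve the heat equation

\[\partial _t\Theta =\Theta '',\qquad \partial _t\Theta _I=\Theta _I''.\]

So \(\mathbf{H}\) and \(\mathbf{H}_I\) solve the PDE:

\[\partial _t\mathbf{H}=\mathbf{H}''+\mathbf{H}\mathbf{H}',\qquad \partial _t\mathbf{H}_I=\mathbf{H}_I''+\mathbf{H}_I\mathbf{H}_I'.\]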
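If \(\mathbf{H}=2\Theta '/\Theta \) and \(\partial _t\Theta =\Theta ''\), then \(u=\log \Theta \) satisfies \(\partial _t u=u''+(u')^2\), and differentiating in \(z\) gives \(\partial _t\mathbf{H}=\mathbf{H}''+\mathbf{H}\mathbf{H}'\) for \(\mathbf{H}=2u'\); the same computation applies to \(\mathbf{H}_I\) and \(\Theta _I\). The Python sketch below tests both steps for real arguments under two assumptions of ours: \(\Theta \) is taken as the \(\theta _1\)-type series \(\sum _{n\ge 0}(-1)^ne^{-t(n+1/2)^2}\sin ((n+\frac{1}{2})z)\), whose normalization constant cancels in \(\Theta '/\Theta \), and \(\mathbf{H}\) is evaluated through the paired series \(\cot _2(z)+\sum _{k\ge 1}\frac{2\sin z}{\cosh (2kt)-\cos z}\). Derivatives in the PDE check are central finite differences, so agreement is only up to \(O(h^2)\).

```python
import math

def H(t: float, x: float) -> float:
    """H(t, x) for real x, via cot(x/2) + sum_{k>=1} 2 sin x / (cosh(2kt) - cos x)."""
    K = max(1, int(300.0 / t))  # stop before cosh(2kt) approaches float overflow
    tail = sum(2 * math.sin(x) / (math.cosh(2 * k * t) - math.cos(x)) for k in range(1, K + 1))
    return 1.0 / math.tan(x / 2) + tail

def theta_ratio(t: float, x: float, terms: int = 60) -> float:
    """2 Theta'/Theta for Theta(t, x) = sum_{n>=0} (-1)^n e^{-t (n+1/2)^2} sin((n+1/2) x)."""
    num = sum((-1) ** n * (n + 0.5) * math.exp(-t * (n + 0.5) ** 2) * math.cos((n + 0.5) * x)
              for n in range(terms))
    den = sum((-1) ** n * math.exp(-t * (n + 0.5) ** 2) * math.sin((n + 0.5) * x)
              for n in range(terms))
    return 2 * num / den

def pde_residual(t: float, x: float, h: float = 1e-3) -> float:
    """Central-difference residual of d_t H - (H'' + H H'); it should be O(h^2)."""
    d_t = (H(t + h, x) - H(t - h, x)) / (2 * h)
    d_x = (H(t, x + h) - H(t, x - h)) / (2 * h)
    d_xx = (H(t, x + h) - 2 * H(t, x) + H(t, x - h)) / h ** 2
    return d_t - (d_xx + H(t, x) * d_x)

print(theta_ratio(1.0, 1.0) - H(1.0, 1.0))  # agreement of 2 Theta'/Theta with H
print(pde_residual(1.0, 1.0))               # limited by the finite-difference step
```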

We rescale the functions \(\mathbf{H}\) and \(\mathbf{H}_I\) as follows. For \(t>0\) and \(z\in \mathbb {C}\), let

Since \(\widehat{\mathbf{H}}\) and \(\widehat{\mathbf{H}}_I\) have period \(2\pi \),

From the identities for \(\theta \) in [24] or formula (3) in [25], we see \(\mathbf{H}(t,z)=i\frac{\pi }{t}\mathbf{H}\left( \frac{\pi ^2}{t},i\frac{\pi }{t} z\right) -\frac{z}{t}\). So

Since \(\mathbf{H}_I(t,z)=\mathbf{H}(t,z+it)+i\),

From (2.8) and (2.9) we may check that

From (2.11) and (2.12) we see that \(\widehat{\mathbf{H}}(t,\cdot )\rightarrow \coth _2\) and \(\widehat{\mathbf{H}}_I(t,\cdot )\rightarrow \tanh _2\) as \(t\rightarrow \infty \).

From (2.4) we see that, as \(t\rightarrow \infty \), \(\mathbf{H}_I(t,z)\rightarrow 0\), so its derivatives w.r.t. \(z\) also tend to \(0\). The following lemma gives quantitative estimates of these limits.

Lemma 2.1

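If \(|{{\mathrm{Im }}}z|<t\), then

\[|\mathbf{H}_I(t,z)|\le \frac{4e^{|{{\mathrm{Im }}}z|-t}}{(1-e^{|{{\mathrm{Im }}}z|-t})^2(1-e^{2(|{{\mathrm{Im }}}z|-t)})}. \tag{2.14}\]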

If \(t\ge |{{\mathrm{Im }}}z|+2\), then \(|\mathbf{H}_I(t,z)|< 5.5 e^{|{{\mathrm{Im }}}z|-t}\). For any \(h\in \mathbb {N}\), if \(t\ge |{{\mathrm{Im }}}z|+h+2\), then \(|\mathbf{H}_I^{(h)}(t,z)|< 15\sqrt{h} e^{|{{\mathrm{Im }}}z|-t}\).

Proof

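From (2.4), if \(|{{\mathrm{Im }}}z|<t\), then

\[|\mathbf{H}_I(t,z)|\le \sum _{k=0}^{\infty }\frac{2e^{|{{\mathrm{Im }}}z|}}{\cosh ((2k+1)t)-\cosh (|{{\mathrm{Im }}}z|)}. \tag{2.15}\]

Here we use the facts that \(|\sin (z)| \le e^{|{{\mathrm{Im }}}z|}\) and \(|\cos (z)|\le \cosh (|{{\mathrm{Im }}}z|)<\cosh (t)\). Let \(h_0=t-|{{\mathrm{Im }}}z|>0\). Then for \(k\ge 0\),

\[\cosh ((2k+1)t)-\cosh (|{{\mathrm{Im }}}z|)=\frac{e^{(2k+1)t}}{2}\big (1-e^{|{{\mathrm{Im }}}z|-(2k+1)t}\big )\big (1-e^{-|{{\mathrm{Im }}}z|-(2k+1)t}\big )\ge \frac{e^{(2k+1)t}}{2}(1-e^{-h_0})^2.\]

So the RHS of (2.15) is not bigger than

\[\sum _{k=0}^{\infty }\frac{4e^{|{{\mathrm{Im }}}z|-(2k+1)t}}{(1-e^{-h_0})^2}=\frac{4e^{|{{\mathrm{Im }}}z|-t}}{(1-e^{-h_0})^2(1-e^{-2t})}\le \frac{4e^{|{{\mathrm{Im }}}z|-t}}{(1-e^{-h_0})^2(1-e^{-2h_0})}.\]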

So we proved (2.14).

If \(t\ge |{{\mathrm{Im }}}z|+2\), then \(4/({(1-e^{|{{\mathrm{Im }}}z|-t})^2(1-e^{2(|{{\mathrm{Im }}}z|-t)})})\le 4/((1-e^{-2})^2(1-e^{-4}))<5.5\). From (2.14) we have \(|\mathbf{H}_I(t,z)|< 5.5 e^{|{{\mathrm{Im }}}z|-t}\). Now we assume \(h\in \mathbb {N}\) and \(t\ge |{{\mathrm{Im }}}z|+h+2\). Then for any \(w\in \mathbb {C}\) with \(|w-z|=h\), we have \(t\ge |{{\mathrm{Im }}}w|+2\), so \(|\mathbf{H}_I(t,w)|< 5.5 e^{|{{\mathrm{Im }}}w|-t}\le 5.5 e^he^{|{{\mathrm{Im }}}z|-t}\). From Cauchy’s integral formula and Stirling’s formula, we have

\[|\mathbf{H}_I^{(h)}(t,z)|\le \frac{h!}{h^h}\max _{|w-z|=h}|\mathbf{H}_I(t,w)|<5.5\,\frac{h!\,e^h}{h^h}\,e^{|{{\mathrm{Im }}}z|-t}\le 5.5\,e\sqrt{h}\,e^{|{{\mathrm{Im }}}z|-t}<15\sqrt{h}\,e^{|{{\mathrm{Im }}}z|-t}.\]

\(\square \)
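The two bounds of Lemma 2.1 can be spot-checked numerically. In the sketch below (a test harness of our own, not part of the proof), \(\mathbf{H}_I\) is evaluated through the real-form series \(\sum _{k\ge 0}\frac{2\sin z}{\cosh ((2k+1)t)-\cos z}\), and \(\mathbf{H}_I^{(h)}\) through the trapezoidal rule applied to Cauchy’s integral formula on a circle of radius \(1/2\), which converges geometrically for analytic integrands. Sample points that violate the lemma’s hypotheses are skipped.

```python
import cmath
import math

def H_I(t: float, z: complex) -> complex:
    """H_I(t, z) via sum_{k>=0} 2 sin z / (cosh((2k+1)t) - cos z) (our real-form rewriting)."""
    K = max(1, int(300.0 / t))
    return sum(2 * cmath.sin(z) / (math.cosh((2 * k + 1) * t) - cmath.cos(z))
               for k in range(K))

def nth_derivative(f, z: complex, h: int, radius: float = 0.5, n: int = 64) -> complex:
    """h-th derivative of analytic f at z: trapezoidal rule for Cauchy's integral on |w - z| = radius."""
    total = 0j
    for j in range(n):
        omega = cmath.exp(2j * math.pi * j / n)
        total += f(z + radius * omega) * omega ** (-h)
    return math.factorial(h) * total / (n * radius ** h)

def worst_ratio(ts, zs, orders=(1, 2, 3)) -> float:
    """Largest |H_I(t, z)| e^{t - |Im z|} over admissible samples; asserts both bounds of Lemma 2.1."""
    worst = 0.0
    for t in ts:
        for z in zs:
            y = abs(z.imag)
            if t >= y + 2:
                ratio = abs(H_I(t, z)) * math.exp(t - y)
                assert ratio < 5.5
                worst = max(worst, ratio)
            for h in orders:
                if t >= y + h + 2:
                    d = nth_derivative(lambda w: H_I(t, w), z, h)
                    assert abs(d) < 15 * math.sqrt(h) * math.exp(y - t)
    return worst

print(worst_ratio((3.0, 5.0, 8.0), (0.3, 1.0 + 0.5j, -2.0 + 1.0j, 3.0 - 0.8j)))
```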

3 Loewner equations

3.1 Whole-plane Loewner equation

To motivate the definition of the whole-plane Loewner equation, let us start with the well-known radial Loewner equation with reflection about the unit circle \(\mathbb {T}\). Let \(T\in (0,\infty ]\). Let \(\beta _I:[0,T)\rightarrow \mathbb {C}\) be a simple curve with \(\beta _I(0)\in \mathbb {T}\) and \(\beta _I(t)\in \mathbb {C}{\setminus }\overline{\mathbb {D}}\) for \(t\in (0,T)\). Let \(K_I(t)=\overline{\mathbb {D}}\cup \beta _I((0,t])\), \(0\le t<T\). Then each \(K_I(t)\) is a hull in \(\mathbb {C}\), and the capacity increases continuously in \(t\). After a time-change, we may assume that \({{\mathrm{cap}}}(K_I(t))=e^t\), \(0\le t<T\). Let \(g_I(t,\cdot )\) be the unique conformal map from \(\mathbb {C}{\setminus }K_I(t)\) onto \(\mathbb {C}{\setminus }\overline{\mathbb {D}}\) with normalization \(\lim _{z\rightarrow \infty } z/g_I(t,z)=e^t\). It turns out that there is \(\xi \in C([0,T))\) such that \(g_I(t,\cdot )\) satisfies the radial Loewner equation

\[\partial _t g_I(t,z)=g_I(t,z)\,\frac{e^{i\xi (t)}+g_I(t,z)}{e^{i\xi (t)}-g_I(t,z)}, \tag{3.1}\]

with initial value \(g_I(0,z)=z\). In fact, each \(g_I(t,\cdot )^{-1}\) extends continuously to \(\mathbb {T}\), and maps \(e^{i\xi (t)}\) to \(\beta _I(t)\), and the function \(\xi \) is determined by \(\beta _I\) up to an integer multiple of \(2\pi \).

Let \(a\in \mathbb {R}\) and \(T\in (a,\infty ]\). Now suppose a simple curve \(\beta _I:[a,T)\rightarrow \mathbb {C}\) satisfies \(\beta _I(a)\in e^a\mathbb {T}\) and \(\beta _I(t)\in \mathbb {C}{\setminus }e^a\overline{\mathbb {D}}\) for \(t\in (a,T)\). Let \(K_I(t)=e^a\overline{\mathbb {D}}\cup \beta _I((a,t])\), \(a\le t<T\). Assume that \({{\mathrm{cap}}}(K_I(t))=e^t\), \(a\le t<T\). Then the mappings \(g_I(t,\cdot )\) determined by \(K_I(t)\) also satisfy (3.1) for some \(\xi \in C([a,T))\), and the initial value is now \(g_I(a,z)=e^{-a}z\). As \(a\) tends to \(-\infty \), the initial point of \(\beta _I\) approaches \(0\). So let us consider a simple curve \(\beta _I:[-\infty ,T)\rightarrow \mathbb {C}\) with \(\beta _I(-\infty )=0\). Let \(K_I(t)=\beta _I([-\infty ,t])\), \(-\infty <t< T\). Assume that \({{\mathrm{cap}}}(K_I(t))=e^t\), \(-\infty < t<T\). Then the mappings \(g_I(t,\cdot )\) determined by \(K_I(t)\) still satisfy (3.1) for some \(\xi \in C((-\infty ,T))\), and they have an asymptotic initial value at \(t=-\infty \):

\[\lim _{t\rightarrow -\infty } e^{t}g_I(t,z)=z,\quad z\in \mathbb {C}{\setminus }\{0\}. \tag{3.2}\]

For this reason, we also call (3.1) the whole-plane Loewner equation.

We now reverse the above process. Let \(T\in (-\infty ,\infty ]\) and \(\xi \in C((-\infty ,T))\). Let \(g_I(t,\cdot )\), \(-\infty <t<T\), be the solution of the whole-plane Loewner equation (3.1) with the asymptotic initial value (3.2). Note that for each fixed \(z\), (3.1) is an ODE in \(t\). For each \(t\in (-\infty ,T)\), let \(K_I(t)\) be the set of \(z\in \mathbb {C}\) at which \(g_I(t,\cdot )\) is not defined. Then \(K_I(t)\) and \(g_I(t,\cdot )\), \(-\infty <t<T\), are called the whole-plane Loewner hulls and maps driven by \(\xi \).
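For each fixed \(z\), (3.1) can be integrated numerically. The Python sketch below (a hypothetical helper, not from the paper) uses classical RK4 and, as an approximation we introduce, replaces the asymptotic initial value at \(-\infty \) by \(g_I(a,z)=e^{-a}z\) at a very negative time \(a\); the resulting \(O(1)\) error at time \(a\) decays roughly like \(e^{a-t}\). For the constant driving function \(\xi \equiv 0\), one can check directly that \(K_I(t)=[0,4e^t]\) and that \(g_I(t,\cdot )\) inverts \(w\mapsto e^t(w+2+1/w)\) on \(|w|>1\), which gives an exact value to compare against.

```python
import cmath
from typing import Callable

def loewner_rhs(g: complex, xi: float) -> complex:
    """Right-hand side of (3.1): d/dt g = g (e^{i xi} + g) / (e^{i xi} - g)."""
    u = cmath.exp(1j * xi)
    return g * (u + g) / (u - g)

def whole_plane_map(z: complex, t_end: float,
                    xi: Callable[[float], float] = lambda t: 0.0,
                    t_start: float = -12.0, n_steps: int = 20000) -> complex:
    """Approximate g_I(t_end, z) with RK4, replacing the asymptotic initial value
    e^t g_I(t, z) -> z (t -> -infinity) by g_I(t_start, z) = e^{-t_start} z."""
    h = (t_end - t_start) / n_steps
    t, g = t_start, cmath.exp(-t_start) * z
    for _ in range(n_steps):
        k1 = loewner_rhs(g, xi(t))
        k2 = loewner_rhs(g + 0.5 * h * k1, xi(t + 0.5 * h))
        k3 = loewner_rhs(g + 0.5 * h * k2, xi(t + 0.5 * h))
        k4 = loewner_rhs(g + h * k3, xi(t + h))
        g += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return g

# With xi = 0, K_I(0) = [0, 4] and g_I(0, .) inverts w -> w + 2 + 1/w on |w| > 1,
# so g_I(0, 10) should be close to 4 + sqrt(15).
print(whole_plane_map(10.0, 0.0))
```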

Remark

Since an asymptotic initial value is used, the existence and uniqueness of the solution are not trivial. From Proposition 4.21 in [8] we know that \(K_I(t)\) and \(g_I(t,\cdot )\) exist and are determined by \(e^{i\xi (s)}\), \(-\infty <s\le t\). Moreover, each \(g_I(t,\cdot )\) maps \(\widehat{\mathbb {C}}{\setminus }K_I(t)\) conformally onto \(\widehat{\mathbb {C}}{\setminus }\overline{\mathbb {D}}\) and fixes \(\infty \), and \(g_I(t,z)=e^{-t}z+O(1)\) near \(\infty \). So each \(K_I(t)\) is a hull in \(\mathbb {C}\) with \({{\mathrm{cap}}}(K_I(t))=e^t\). The whole-plane Loewner equation can be viewed as a mapping which takes the driving function \(\xi \) to a family of hulls \((K_I(t))\) or conformal maps \((g_I(t,\cdot ))\). The family \((K_I(t))\) increases in \(t\), but the hulls need not be simple curves.

We say that \(\xi \) generates a whole-plane Loewner trace \(\beta _I\) if

\[\beta _I(t):=\lim _{\mathbb {C}{\setminus }\overline{\mathbb {D}}\ni z\rightarrow e^{i\xi (t)}} g_I(t,\cdot )^{-1}(z)\]

exists for \(t\in (-\infty ,T)\), and if \(\beta _I(t)\), \(-\infty \le t<T\), is a continuous curve in \(\mathbb {C}\). Such a trace, if it exists, starts from \(0\), i.e., \(\beta _I(-\infty )\,{:=}\,\lim _{t\rightarrow -\infty } \beta _I(t)=0\). The trace is called simple if \(\beta _I(t)\), \(-\infty \le t<T\), has no self-intersection. If \(\xi \) generates a whole-plane Loewner trace \(\beta _I\), then for each \(t\), \(\mathbb {C}{\setminus }K_I(t)\) is the unbounded component of \(\mathbb {C}{\setminus }\beta _I([-\infty ,t])\). In particular, if \(\beta _I\) is simple, then \(K_I(t)=\beta _I([-\infty ,t])\) for each \(t\), and we recover the picture described at the beginning of this subsection.

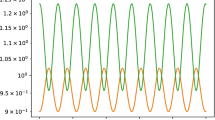

Let \(\kappa >0\). A pre-\((\mathbb {T};\kappa )\)-Brownian motion a.s. generates a whole-plane Loewner trace, which is called a standard whole-plane SLE\(_\kappa \) trace. The trace goes from \(0\) to \(\infty \), i.e., \(\lim _{t\rightarrow \infty }\beta _I(t)=\infty \). If \(\kappa \in (0,4]\), the trace is simple. If the driving function is the sum of a pre-\((\mathbb {T};\kappa )\)-Brownian motion and \(s_0t\) for some constant \(s_0\in \mathbb {R}\), then we also get a whole-plane Loewner trace, which is called a standard whole-plane SLE\((\kappa ,s_0)\) trace. The trace still goes from \(0\) to \(\infty \) as \(t\rightarrow \infty \), and is simple when \(\kappa \le 4\). For any \(z_1\ne z_2\in \widehat{\mathbb {C}}\), we may define whole-plane SLE\(_\kappa \) and SLE\((\kappa ,s_0)\) traces from \(z_1\) to \(z_2\) via Möbius transformations.

Remark

Whole-plane SLE\(_\kappa \) is related to radial SLE in the way that, if \(T\in \mathbb {R}\) is fixed, then conditioned on \(K_I(t)\), \(-\infty <t\le T\), the curve \(\beta _I(T+t)\), \(t\ge 0\), is the radial SLE\(_\kappa \) trace in \(\widehat{\mathbb {C}}{\setminus }K_I(T)\) from \(\beta _I(T)\) to \(\infty \). Whole-plane SLE\((\kappa ,s_0)\) is related to radial SLE\((\kappa ,-s_0)\) (the radial Loewner process driven by \(\sqrt{\kappa }B(t)-s_0t\)) in a similar way. The additional negative sign is due to the reflection about \(\mathbb {T}\).

We will need the following inverted whole-plane Loewner process, which grows from \(\infty \). For \(-\infty <t<T\), let \(K(t)=I_0(K_I(t))\) and \(g(t,\cdot )=I_0\circ g_{I}(t,\cdot )\circ I_0\). Then for each \(t\), \(g(t,\cdot )\) maps \(\widehat{\mathbb {C}}{\setminus }K(t)\) conformally onto \(\mathbb {D}\) and fixes \(0\). Moreover, \(g(t,\cdot )\) satisfies (3.1) with some initial value at \(-\infty \). We call \(K(t)\) and \(g(t,\cdot )\) the inverted whole-plane Loewner hulls and maps driven by \(\xi \). If \(\xi \) generates a whole-plane Loewner trace \(\beta _I\), then \(\beta (t)\,{:=}\,I_0\circ \beta _I(t)\) is a continuous curve in \(\widehat{\mathbb {C}}\) that satisfies \(\beta (-\infty )=\infty \) and \(\beta (t)=\lim _{\mathbb {D}\ni z\rightarrow e^{i\xi (t)}} g(t,\cdot )^{-1}(z)\), \(-\infty <t<T\). We call \(\beta \) the inverted whole-plane Loewner trace driven by \(\xi \).

Let \(K_I(t)\) and \(g_I(t,\cdot )\), \(-\infty <t<T\), be as before. Let \(\widetilde{K}_I(t)=(e^i)^{-1}(K_I(t))\), \(-\infty <t<T\). It is easy to see that there exists a unique family \(\widetilde{g}_I(t,\cdot )\), \(-\infty <t<T\), such that, \(\widetilde{g}_I(t,\cdot )\) maps \(\mathbb {C}{\setminus }\widetilde{K}_I(t)\) conformally onto \(-\mathbb {H}\), \(e^i\circ \widetilde{g}_I(t,\cdot )=g_I(t,\cdot )\circ e^i\), and \(\widetilde{g}_I\) satisfies

and the initial value at \(-\infty \):

Then we call \(\widetilde{K}_I(t)\) and \(\widetilde{g}_I(t,\cdot )\) the covering whole-plane Loewner hulls and maps driven by \(\xi \).

For \(-\infty <t<T\), let \(\widetilde{K}(t)=\widetilde{I}_0(\widetilde{K}_I(t))\) and \(\widetilde{g}(t,\cdot )=\widetilde{I}_0\circ \widetilde{g}_I(t,\cdot )\circ \widetilde{I}_0\). Then \(\widetilde{K}(t)=(e^i)^{-1}(K(t))\) and \(e^i\circ \widetilde{g}(t,\cdot )=g(t,\cdot )\circ e^i\). We call \(\widetilde{K}(t)\) and \(\widetilde{g}(t,\cdot )\) the inverted covering whole-plane Loewner hulls and maps driven by \(\xi \). Then for each \(t\in (-\infty ,T)\), \(\widetilde{g}(t,\cdot )\) maps \(\mathbb {C}{\setminus }\widetilde{K}(t)\) conformally onto \(\mathbb {H}\), and satisfies (3.3) for \(t\in (-\infty ,T)\) and the initial value at \(-\infty \):

3.2 Annulus Loewner equation

The annulus Loewner equation was introduced in [17] to describe curves in a doubly connected domain. Let \(p\in (0,\infty )\). To motivate the definition, we consider a simple curve \(\beta (t)\), \(0\le t<T\), with \(\beta (0)\in \mathbb {T}\) and \(\beta (t)\in \mathbb {A}_p\), \(0<t<T\). Let \(K(t)=\beta ((0,t])\), \(0\le t<T\). Then each \(K(t)\) is a hull in \(\mathbb {A}_p\), and \({{\mathrm{cap}}}_{\mathbb {A}_p}(K(t))\) increases continuously. After a time-change, we may assume that \({{\mathrm{cap}}}_{\mathbb {A}_p}(K(t))=t\) for all \(t\). For each \(t\), there exists \(g(t,\cdot )\) that maps \(\mathbb {A}_p{\setminus }K(t)\) conformally onto \(\mathbb {A}_{p-t}\) and maps \(\mathbb {T}_p\) onto \(\mathbb {T}_{p-t}\). Such \(g(t,\cdot )\) is unique only up to a rotation, and there are different ways to make it unique. For example, we may fix a point \(w_0\in \mathbb {T}_p\) and require that \(e^{-t} g(t,\cdot )\) fix \(w_0\). The normalization used here does not have a clear geometric meaning. The work in [17] shows that \(g(t,\cdot )\) can be chosen to satisfy the annulus Loewner equation of modulus \(p\) for some \(\xi \in C([0,T))\):

We now reverse the above process. Let \(\xi \in C([0,T))\) where \(0<T\le p\). Let \(g(t,\cdot )\) be the solution of the ODE (3.5). For \(0\le t<T\), let \(K(t)\) denote the set of \(z\in \mathbb {A}_p\) such that the solution \( g(s,z)\) blows up before or at time \(t\). We call \(K(t)\) and \( g(t,\cdot )\), \(0\le t<T\), the annulus Loewner hulls and maps of modulus \(p\) driven by \(\xi \). It turns out that, for each \(t\), \(K(t)\) is a hull in \(\mathbb {A}_p\) with \({{\mathrm{cap}}}_{\mathbb {A}_p}(K(t))=t\), and \(g(t,\cdot )\) maps \(\mathbb {A}_p{\setminus }K(t)\) conformally onto \(\mathbb {A}_{p-t}\) and maps \(\mathbb {T}_p\) onto \(\mathbb {T}_{p-t}\). To see that \(g(t,\cdot )\) maps \(\mathbb {T}_p\) onto \(\mathbb {T}_{p-t}\), note that (3.5) implies \(\partial _t \ln |g(t,z)|={{\mathrm{Re }}}\mathbf{S}(p-t, g(t,z)/e^{i\xi (t)})\), and \({{\mathrm{Re }}}\mathbf{S}(r,\cdot )\equiv 1\) on \(\mathbb {T}_{r}\) because \({{\mathrm{Im }}}\mathbf{H}(r,\cdot )\equiv -1\) on \(\mathbb {R}_{r}\) and \(\mathbf{H}(r,z)=-i\mathbf{S}(r,e^i(z))\); hence \(|g(t,z)|=e^{t-p}\) for \(z\in \mathbb {T}_p\).
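The two boundary identities used above can be checked numerically. The Python sketch below assumes the series definition \(\mathbf{S}(p,z)=\lim _{M\rightarrow \infty }\sum _{k=-M}^{M}\frac{e^{2kp}+z}{e^{2kp}-z}\) for the annulus Schwarz kernel (it is not restated in this section), takes \(e^i(z)=e^{iz}\) and \(\mathbb {R}_r=\{{{\mathrm{Im }}}\,z=r\}\), and truncates the series at an illustrative level `M`; the function names are hypothetical.

```python
import cmath
import math


def schwarz_kernel(p, z, M=40):
    """Truncated symmetric series for the annulus Schwarz kernel S(p, z).

    Assumed definition (not restated in this section):
    S(p, z) = lim_{M -> inf} sum_{k=-M}^{M} (e^{2kp} + z) / (e^{2kp} - z).
    """
    total = 0j
    for k in range(-M, M + 1):
        a = math.exp(2 * k * p)
        total += (a + z) / (a - z)
    return total


def covering_kernel(r, z, M=40):
    """H(r, z) = -i S(r, e^{iz}), the relation quoted in the text."""
    return -1j * schwarz_kernel(r, cmath.exp(1j * z), M)


if __name__ == "__main__":
    r = 0.7
    for theta in (0.3, 1.5, 2.9, 4.0):
        on_T = cmath.exp(1j * theta)      # point of the unit circle T
        on_Tr = math.exp(-r) * on_T       # point of T_r = {|z| = e^{-r}}
        print(round(schwarz_kernel(r, on_T).real, 12),             # ~ 0
              round(schwarz_kernel(r, on_Tr).real, 12),            # ~ 1
              round(covering_kernel(r, theta + 1j * r).imag, 12))  # ~ -1
```

With the symmetric truncation, the terms \(k\) and \(-k\) cancel exactly in real part on \(\mathbb {T}\), while on \(\mathbb {T}_r\) the terms \(k\) and \(-k-1\) cancel and only the \(k=M\) term survives, which tends to \(1\); this is why a modest `M` already gives near machine precision.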

We say that \(\xi \) generates an annulus Loewner trace \(\beta \) of modulus \(p\) if

exists for all \(t\in [0,T)\), and if \(\beta (t)\), \(0\le t<T\), is a continuous curve. The curve lies in \(\mathbb {A}_p\cup \mathbb {T}\) and starts from \(e^{i\xi (0)}\in \mathbb {T}\). The trace is called simple if \(\beta \) has no self-intersection and stays inside \(\mathbb {A}_p\) for \(t>0\). In that case, we have \(K(t)=\beta ((0,t])\) for each \(t\), and recover the picture at the beginning of this subsection.

Remark

1. If \(\xi \) generates an annulus Loewner trace \(\beta \), then for each \(t\), \(\mathbb {A}_p{\setminus }K(t)\) is the component of \(\mathbb {A}_p{\setminus }\beta ((0,t])\) whose boundary contains \(\mathbb {T}_p\). If the trace is simple, then \(K(t)=\beta ((0,t])\) for each \(t\).

2. Let \(\beta (t)\), \(0\le t<T\), be a simple curve with \(\beta (0)\in \mathbb {T}\) and \(\beta (t)\in \mathbb {A}_p\) for \(0<t<T\). If it is parameterized by capacity in \(\mathbb {A}_p\), i.e., \({{\mathrm{cap}}}_{\mathbb {A}_p}(\beta ((0,t]))=t\) for each \(t\), then it is an annulus Loewner trace of modulus \(p\). In the general case, let \(u(t)={{\mathrm{cap}}}_{\mathbb {A}_p}(\beta ((0,t]))\). Then \(\beta (u^{-1}(t))\) is an annulus Loewner trace of modulus \(p\).

3. If \(\xi (t)=\sqrt{\kappa }B(t)\), \(0\le t<p\), then a.s. \(\xi \) generates an annulus Loewner trace. If \(\kappa \in (0,4]\), the trace is simple. By Girsanov's theorem, these statements still hold if \(\xi \) is a semimartingale whose stochastic part is \(\sqrt{\kappa }B(t)\) and whose drift part is a continuously differentiable function.

The covering annulus Loewner equation of modulus \(p\) driven by the above \(\xi \) is

For \(0\le t<T\), let \(\widetilde{K}(t)\) denote the set of \(z\in \mathbb {S}_p\) such that the solution \(\widetilde{g}(s,z)\) blows up before or at time \(t\). Then for \(0\le t<T\), \(\widetilde{g}(t,\cdot )\) maps \(\mathbb {S}_p{\setminus }\widetilde{K}(t)\) conformally onto \(\mathbb {S}_{p-t}\) and maps \(\mathbb {R}_p\) onto \(\mathbb {R}_{p-t}\). We call \(\widetilde{K}(t)\) and \(\widetilde{g}(t,\cdot )\), \(0\le t<T\), the covering annulus Loewner hulls and maps of modulus \(p\) driven by \(\xi \). Let \(K(t)\) and \( g(t,\cdot )\) be as above. Then \(\widetilde{K}(t)=(e^i)^{-1}(K(t))\) and \(e^i\circ \widetilde{g}(t,\cdot ) = g(t,\cdot )\circ e^i\) for \(0\le t<T\).

Since \(\widetilde{g}(t,\cdot )\) maps \(\mathbb {R}_p\) onto \(\mathbb {R}_{p-t}\) and \(\mathbf{H}_I(t,z)=\mathbf{H}(t,z+it)+i\), we have

Differentiating the above formula w.r.t. \(z\), we obtain

If \(\xi \) generates an annulus Loewner trace \(\beta \) of modulus \(p\), then a.s.

exists for \(0\le t<T\), and \(\widetilde{\beta }(t)\), \(0\le t<T\), is a continuous curve in \(\mathbb {S}_p\cup \mathbb {R}\) started from \(\widetilde{\beta }(0)=\xi (0)\in \mathbb {R}\). Such a curve \(\widetilde{\beta }\) is called the covering annulus Loewner trace of modulus \(p\) driven by \(\xi \), and we have \(\beta =e^i\circ \widetilde{\beta }\). If \(\beta \) is a simple trace, then \(\widetilde{\beta }\) has no self-intersection, stays inside \(\mathbb {S}_p\) for \(t>0\), and \(\widetilde{K}(t)=\widetilde{\beta }((0,t])+2\pi \mathbb {Z}\) for each \(t\).

Let \(K_I(t)=I_p(K(t))\), \(g_I(t,\cdot )=I_{p-t}\circ g(t,\cdot )\circ I_p\), \(\widetilde{K}_I(t)=\widetilde{I}_p(\widetilde{K}(t))\), and \(\widetilde{g}_I(t,\cdot )=\widetilde{I}_{p-t}\circ \widetilde{g}(t,\cdot )\circ \widetilde{I}_p\). Then \(K_I(t)\) is a hull in \(\mathbb {A}_p\) with \({{\mathrm{cap}}}_{\mathbb {A}_p}(K_I(t))=t\), and \( g_I(t,\cdot )\) maps \(\mathbb {A}_p{\setminus }K_I(t)\) conformally onto \(\mathbb {A}_{p-t}\) and maps \(\mathbb {T}\) onto \(\mathbb {T}\). Moreover, \(\widetilde{K}_I(t)=(e^i)^{-1}(K_I(t))\), \(\widetilde{g}_I(t,\cdot )\) maps \(\mathbb {S}_p{\setminus }\widetilde{K}_I(t)\) conformally onto \(\mathbb {S}_{p-t}\), maps \(\mathbb {R}\) onto \(\mathbb {R}\), satisfies \(e^i\circ \widetilde{g}_I(t,\cdot )=g_I(t,\cdot )\circ e^i\), and the equation

We call \(K_I(t)\) and \( g_I(t,\cdot )\) (resp. \(\widetilde{K}_I(t)\) and \(\widetilde{g}_I(t,\cdot )\)) the inverted annulus (resp. inverted covering annulus) Loewner hulls and maps of modulus \(p\) driven by \(\xi \). The inverted hulls grow from the smaller circle \(\mathbb {T}_p\) instead of the unit circle \(\mathbb {T}\).
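The kernel \(\mathbf{H}_I(t,z)=\mathbf{H}(t,z+it)+i\) is real and odd on \(\mathbb {R}\), which is used in Sect. 4.2. For real \(x\) it equals \({{\mathrm{Im }}}\,\mathbf{S}(t,e^{-t+ix})\), because \({{\mathrm{Re }}}\,\mathbf{S}(t,\cdot )\equiv 1\) on \(\mathbb {T}_t\), and the real coefficients of \(\mathbf{S}\) then make it odd. The Python sketch below checks this numerically. It assumes the series definition \(\mathbf{S}(p,z)=\lim _{M\rightarrow \infty }\sum _{k=-M}^{M}\frac{e^{2kp}+z}{e^{2kp}-z}\) (not restated in this section) together with \(\mathbf{H}(r,z)=-i\mathbf{S}(r,e^{iz})\), and truncates the series at a hypothetical level `M=40`; all function names are illustrative.

```python
import cmath
import math


def schwarz_kernel(p, z, M=40):
    """Truncated series sum_{k=-M}^{M} (e^{2kp} + z) / (e^{2kp} - z) (assumed definition of S)."""
    return sum((math.exp(2 * k * p) + z) / (math.exp(2 * k * p) - z)
               for k in range(-M, M + 1))


def H(r, z):
    """H(r, z) = -i S(r, e^{iz})."""
    return -1j * schwarz_kernel(r, cmath.exp(1j * z))


def H_I(t, z):
    """H_I(t, z) = H(t, z + it) + i; for real z this evaluates S on T_t."""
    return H(t, z + 1j * t) + 1j


def central_difference(f, x, h=1e-4):
    """Symmetric difference quotient approximating f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


if __name__ == "__main__":
    t = 0.8
    for x in (0.4, 1.1, 2.5):
        print(H_I(t, x), H_I(t, -x))  # real values of opposite sign
```

Because the central difference of an odd function is itself even, the check that \(\mathbf{H}_I'\) is even holds up to rounding, independently of the step size.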

3.3 Disc Loewner equation

We now review the definition of the disc Loewner equation, which is used to describe a simple curve in a simply connected domain started from an interior point. The relation between the disc Loewner equation and the annulus Loewner equation is similar to that between the whole-plane Loewner equation and the radial Loewner equation. Intuitively, one considers the inverted annulus Loewner equations of modulus \(p\) so that the hulls start from \(\mathbb {T}_p\), and then lets \(p\rightarrow \infty \).

Let \(T\in (-\infty ,0]\) and \(\xi \in C((-\infty ,T))\). Let \(g_I(t,\cdot )\), \(-\infty <t<T\), be the solution of

For each \(t\in (-\infty ,T)\), let \(K_I(t)\) be the set of \(z\in \mathbb {D}\) at which \(g_I(t,\cdot )\) is not defined. Then \(K_I(t)\) and \(g_I(t,\cdot )\), \(-\infty <t<T\), are called the disc Loewner hulls and maps driven by \(\xi \).

Remark

From Propositions 4.1 and 4.2 in [17], we know that \(K_I(t)\) and \(g_I(t,\cdot )\) exist and are determined by \(e^{i\xi (s)}\), \(-\infty <s\le t\). Moreover, each \(g_I(t,\cdot )\) maps \(\mathbb {D}{\setminus }K_I(t)\) conformally onto \(\mathbb {A}_{-t}\) and maps \(\mathbb {T}\) onto \(\mathbb {T}\).

We say that \(\xi \) generates a disc Loewner trace \(\beta \) if

exists for every \(t\in (-\infty ,T)\), and if \(\beta _I(t)\), \(-\infty \le t<T\), is a continuous curve in \(\mathbb {D}\) with \(\beta _I(-\infty )=0\). The trace is called simple if it has no self-intersection. If \(\xi \) generates a disc Loewner trace \(\beta _I\), then for each \(t\), \(\mathbb {C}{\setminus }K_I(t)\) is the unbounded component of \(\mathbb {C}{\setminus }\beta _I([-\infty ,t])\). In particular, if \(\beta _I\) is simple, then \(K_I(t)=\beta _I([-\infty ,t])\) for each \(t\).

Let \(\beta _I(t)\), \(-\infty \le t<T\), be a simple curve in \(\mathbb {D}\) with \(\beta _I(-\infty )=0\). If it is parameterized by capacity in \(\mathbb {D}\), i.e., \({{\mathrm{mod}}}(\mathbb {D}{\setminus }\beta _I([-\infty ,t]))=-t\) for each \(t\), then \(\beta _I\) is a disc Loewner trace. In the general case, let \(u(t)=-{{\mathrm{mod}}}(\mathbb {D}{\setminus }\beta _I([-\infty ,t]))\), then \(\beta _I(u^{-1}(t))\) is a disc Loewner trace.

We will need the following inverted disc Loewner process, which grows from \(\infty \). For \(-\infty <t<T\), let \(K(t)=I_0(K_I(t))\) and \(g(t,\cdot )=I_{-t}\circ g_I(t,\cdot )\circ I_0\). Then each \(g(t,\cdot )\) maps \(\widehat{\mathbb {C}}{\setminus }\overline{\mathbb {D}}{\setminus }K(t)\) conformally onto \(\mathbb {A}_{-t}\) and maps \(\mathbb {T}\) onto \(\mathbb {T}_{-t}\). Moreover, \(g(t,\cdot )\) satisfies (3.10) with \(\mathbf{S}_I\) replaced by \(\mathbf{S}\). We call \(K(t)\) and \(g(t,\cdot )\), \(-\infty <t<T\), the inverted disc Loewner hulls and maps driven by \(\xi \). If \(\xi \) generates a disc Loewner trace \(\beta _I\), then \(\beta {:=}I_0\circ \beta _I\) is called the inverted disc Loewner trace driven by \(\xi \).

The covering disc Loewner hulls and maps are defined as follows. Let \(\widetilde{K}_I(t)=(e^i)^{-1}(K_I(t))\), \(-\infty <t<T\). There is a unique family \(\widetilde{g}_I(t,\cdot )\), \(-\infty <t<T\), such that, for each \(t\), \(\widetilde{g}_I(t,\cdot )\) maps \(\mathbb {H}{\setminus }\widetilde{K}_I(t)\) conformally onto \(\mathbb {S}_{-t}\) and maps \(\mathbb {R}\) onto \(\mathbb {R}\), \(e^i\circ \widetilde{g}_I(t,\cdot )=g_I(t,\cdot )\circ e^i\), and the following hold:

We call \(\widetilde{K}_I(t)\) and \(\widetilde{g}_I(t,\cdot )\) the covering disc Loewner hulls and maps driven by \(\xi \). Let \(\widetilde{K}(t)=\widetilde{I}_0(\widetilde{K}_I(t))\) and \(\widetilde{g}(t,\cdot )=\widetilde{I}_{-t}\circ \widetilde{g}_I(t,\cdot )\circ \widetilde{I}_0\). Then \(\widetilde{g}(t,\cdot )\) maps \(-\mathbb {H}{\setminus }\widetilde{K}(t)\) conformally onto \(\mathbb {S}_{-t}\), maps \(\mathbb {R}\) onto \(\mathbb {R}_{-t}\), \(e^i\circ \widetilde{g}(t,\cdot )=g(t,\cdot )\circ e^i\), and satisfies \(\partial _t{\widetilde{g}}(t,z)=\mathbf{H}(-t,\widetilde{g}(t,z)-\xi (t))\). We call \(\widetilde{K}(t)\) and \(\widetilde{g}(t,\cdot )\) the inverted covering disc Loewner hulls and maps driven by \(\xi \).

Remark

Now we summarize the conformal maps that have appeared in this section so far. The relations between an (inverted) whole-plane, annulus, or disc Loewner map \(g(t,\cdot )\) or \(g_I(t,\cdot )\) and its corresponding covering map \(\widetilde{g}(t,\cdot )\) or \(\widetilde{g}_I(t,\cdot )\) are \(g(t,\cdot )\circ e^i=e^i\circ \widetilde{g}(t,\cdot )\) and \(g_I(t,\cdot )\circ e^i=e^i\circ \widetilde{g}_I(t,\cdot )\). The relation between the inverted pair \(\widetilde{g}(t,\cdot )\) and \(\widetilde{g}_I(t,\cdot )\) depends on the three cases. For the whole-plane Loewner maps,

For the annulus Loewner maps of modulus \(p\),

For the disc Loewner maps,

The relation between \(g(t,\cdot )\) and \(g_I(t,\cdot )\) depends on the three cases in a similar way.

3.4 SLE with marked points

Definition 3.1

A covering crossing annulus drift function is a real-valued \(C^{0,1}\) differentiable function defined on \((0,\infty )\times \mathbb {R}\). A covering crossing annulus drift function with period \(2\pi \) is called a crossing annulus drift function.

Definition 3.2

Suppose \(\Lambda \) is a covering crossing annulus drift function. Let \(\kappa > 0\), \(p>0\), and \(x_0,y_0\in \mathbb {R}\). Let \(\xi (t)\), \(0\le t< p\), be the maximal solution to the SDE

where \(\widetilde{g}(t,\cdot )\), \(0\le t<p\), are the covering annulus Loewner maps of modulus \(p\) driven by \(\xi \). Then the covering annulus Loewner trace of modulus \(p\) driven by \(\xi \) is called the covering (crossing) annulus SLE\((\kappa ,\Lambda )\) trace in \(\mathbb {S}_p\) started from \(x_0\) with marked point \(y_0+p i\).

Definition 3.3

Suppose \(\Lambda \) is a crossing annulus drift function. Let \(\kappa > 0\), \(p>0\), \(a\in \mathbb {T}\) and \(b\in \mathbb {T}_p\). Choose \(x_0,y_0\in \mathbb {R}\) such that \(a=e^{ix_0}\) and \(b=e^{-p+iy_0}\). Let \(\xi (t)\), \(0\le t< p\), be the maximal solution to (3.13). The annulus Loewner trace \(\beta \) driven by \(\xi \) is called the (crossing) annulus SLE\((\kappa ,\Lambda )\) trace in \(\mathbb {A}_p\) started from \(a\) with marked point \(b\).

The above definition does not depend on the choices of \(x_0\) and \(y_0\) because \(\Lambda \) has period \(2\pi \), and for any \(n\in \mathbb {Z}\), the annulus Loewner objects driven by \(\xi (t)+2n\pi \) agree with those driven by \(\xi (t)\).

A covering chordal-type annulus drift function is a real-valued \(C^{0,1}\) differentiable function defined on \((0,\infty )\times (\mathbb {R}{\setminus }2\pi \mathbb {Z})\). The word “covering” is omitted if the function has period \(2\pi \). If \(\Lambda \) is a chordal-type annulus drift function, the same idea defines annulus SLE\((\kappa ,\Lambda )\) processes, where the initial point \(a=e^{ix_0}\) and the marked point \(b=e^{iy_0}\) both lie on \(\mathbb {T}\) and are distinct. The driving function \(\xi \) is the solution to (3.13) with \({{\mathrm{Re }}}\widetilde{g}(t,y_0+ pi)\) replaced by \(\widetilde{g}(t,y_0)\).

Via conformal maps, we can then define annulus SLE\((\kappa ,\Lambda )\) processes and traces in any doubly connected domain, started from one boundary point with another boundary point marked. Here \(\Lambda \) is a chordal-type or crossing annulus drift function depending on whether or not the initial point and the marked point lie on the same boundary component. Let \(\Lambda _I(p,x)=-\Lambda (p,-x)\); then \(\Lambda _I\) is called the dual of \(\Lambda \). If \((K(t))\) is an annulus SLE\((\kappa ,\Lambda )\) process in \(\mathbb {A}_p\) started from \(a\) with marked point \(b\), and \(W\) is a conjugate conformal map of \(\mathbb {A}_p\), then \((W(K(t)))\) is an annulus SLE\((\kappa ,\Lambda _I)\) process in \(W(\mathbb {A}_p)\) started from \(W(a)\) with marked point \(W(b)\).
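The dual operation is an involution, and when a drift function has the form \(\Lambda =\kappa \frac{\Gamma '}{\Gamma }\) (as in Theorem 4.1 below), its dual is \(\kappa \frac{\Gamma _2'}{\Gamma _2}\) with \(\Gamma _2(t,x)=\Gamma (t,-x)\). The Python sketch below checks both facts using central differences in \(x\). The test function `gamma` is hypothetical: it is positive but is not claimed to satisfy any PDE from this paper, and `KAPPA` is an illustrative value.

```python
import math

KAPPA = 2.0  # illustrative value of kappa


def gamma(t, x):
    """Hypothetical positive test function standing in for Gamma.

    It is NOT claimed to satisfy (4.1); it only exercises the algebra.
    """
    return (1.0 + t) * math.exp(math.cos(x) + 0.3 * x)


def drift_from_gamma(gam, kappa, h=1e-5):
    """Return Lambda(t, x) = kappa * Gamma'(t, x) / Gamma(t, x), where ' = d/dx
    is approximated by a central difference of step h."""
    def lam(t, x):
        dgam = (gam(t, x + h) - gam(t, x - h)) / (2 * h)
        return kappa * dgam / gam(t, x)
    return lam


def dual(lam):
    """Dual drift function Lambda_I(p, x) = -Lambda(p, -x)."""
    return lambda p, x: -lam(p, -x)


if __name__ == "__main__":
    lam = drift_from_gamma(gamma, KAPPA)
    reflected = drift_from_gamma(lambda t, x: gamma(t, -x), KAPPA)
    p, x = 1.0, 0.4
    print(dual(lam)(p, x), reflected(p, x))  # agree up to rounding
```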

Definition 3.4

Let \(\kappa \ge 0\), \(b\in \mathbb {T}\), and \(\Lambda \) be a crossing annulus drift function. Choose \(y_0\in \mathbb {R}\) such that \(e^{iy_0}=b\). Let \(B_*^{(\kappa )}(t)\), \(t\in \mathbb {R}\), be a pre-\((\mathbb {T};\kappa )\)-Brownian motion. Suppose \(\xi (t)\), \(-\infty <t<0\), satisfies the following SDE with the meaning in Definition 2.2:

where \(\widetilde{g}_I(t,\cdot )\) are the covering disc Loewner maps driven by \(\xi \). Then we call the disc Loewner trace driven by \(\xi \) the disc SLE\((\kappa ,\Lambda )\) trace in \(\mathbb {D}\) started from \(0\) with marked point \(b\).

Via conformal maps, we can define disc SLE\((\kappa ,\Lambda )\) traces in any simply connected domain started from an interior point with a marked boundary point.

4 Coupling of two annulus SLE traces

The goal of this section is to prove Theorem 4.1 below, which says that when a certain PDE is satisfied, two annulus SLE\((\kappa ,\Lambda )\) processes can be coupled so that they commute with each other. Although this result is not used directly in the proof of whole-plane reversibility, we prove it for two reasons: the result may be useful in the future, and its proof serves as a template for the more complicated proof of the theorem about coupling two whole-plane SLE processes.

After some preparation in Sect. 4.1, the construction formally starts in Sect. 4.2, which resembles Section 3 of [10]; the extra complexity comes from the appearance of covering maps and inverted maps. In Sect. 4.3 we construct a two-dimensional local martingale \(M\), which resembles Section 4 of [10], and derive the boundedness of \(M\) when the two processes are stopped at suitable exit times. In Sect. 4.4, we first construct local commutation couplings using \(M\), then construct the global commutation coupling using the coupling technique, and finish the proof.

Theorem 4.1

Let \(\kappa >0\) and \(s_0\in \mathbb {R}\). Suppose \(\Gamma \) is a positive \(C^{1,2}\) differentiable function on \((0,\infty )\times \mathbb {R}\) that satisfies

We call \(\Gamma \) a partition function following Gregory Lawler’s terminology in [20]. Let \(\Lambda =\kappa \frac{\Gamma '}{\Gamma }\). Then \(\Lambda \) is a crossing annulus drift function. Let \(\Lambda _1=\Lambda \) and \(\Lambda _2\) be the dual of \(\Lambda \). Then for any \(p>0\), \(a_{1},a_{2}\in \mathbb {T}\), there is a coupling of two curves: \(\beta _{1}(t)\), \(0\le t<p\), and \(\beta _{2}(t)\), \(0\le t<p\), such that for \(j\ne k\in \{1,2\}\), the following hold.

(i) \(\beta _j\) is an annulus SLE\((\kappa ,\Lambda _j)\) trace in \(\mathbb {A}_p\) started from \(a_{j}\) with marked point \(a_{I,k}{:=}I_p(a_{k})\).

(ii) If \(t_k<p\) is a stopping time w.r.t. \((\beta _{k}(t))\), then conditioned on \(\beta _k(t)\), \(0\le t\le t_k\), after a time-change, \(\beta _j(t)\), \(0\le t<T_j(t_k)\), is the annulus SLE\((\kappa ,\Lambda _j)\) process in a connected component of \(\mathbb {A}_p{\setminus }I_p(\beta _k((0,t_k]))\) started from \(a_j\) with marked point \(I_p(\beta _k(t_k))\), where \(T_j(t_k)\) is the first time that \(\beta _j\) visits \(I_p\circ \beta _k((0,t_k])\) (set to \(p\) if no such time exists).

Remark

1. \(\Lambda \) satisfies the PDE

$$\begin{aligned} \partial _t \Lambda =\frac{\kappa }{2} \Lambda ''+\Big (3-\frac{\kappa }{2}\Big )\mathbf{H}_I''+\Lambda \mathbf{H}_I'+\mathbf{H}_I\Lambda '+\Lambda \Lambda '. \end{aligned}\tag{4.3}$$

On the other hand, if \(\Lambda \) satisfies (4.3), then there is \(\Gamma \) such that \(\Lambda =\kappa \frac{\Gamma '}{\Gamma }\) and (4.1) holds.

2. The theorem also holds for \(\kappa =0\) if \(\Lambda \) satisfies (4.3) with \(\kappa =0\).
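One way to see where the coefficient \(3-\frac{\kappa }{2}\) in (4.3) comes from is the following computation, carried out under an assumption: suppose, for illustration only (the display (4.1) is not reproduced here), that \(\Gamma \) satisfies an equation of the form \(\partial _t\Gamma =\frac{\kappa }{2}\Gamma ''+\mathbf{H}_I\Gamma '+c_1\mathbf{H}_I'\Gamma +c_0(t)\Gamma \) with a constant \(c_1\) and a function \(c_0\) of \(t\) alone. Put \(L=\Gamma '/\Gamma =\Lambda /\kappa \), so that \(\Gamma ''/\Gamma =L'+L^2\). Dividing by \(\Gamma \), differentiating in \(x\), and multiplying by \(\kappa \) gives

$$\begin{aligned} \partial _t\ln \Gamma &=\frac{\kappa }{2}\big (L'+L^2\big )+\mathbf{H}_IL+c_1\mathbf{H}_I'+c_0(t),\\ \partial _tL&=\frac{\kappa }{2}L''+\kappa LL'+\mathbf{H}_I'L+\mathbf{H}_IL'+c_1\mathbf{H}_I'',\\ \partial _t\Lambda &=\frac{\kappa }{2}\Lambda ''+\kappa c_1\mathbf{H}_I''+\Lambda \mathbf{H}_I'+\mathbf{H}_I\Lambda '+\Lambda \Lambda '. \end{aligned}$$

Under this assumption, (4.3) holds exactly when \(\kappa c_1=3-\frac{\kappa }{2}\), i.e., \(c_1=\frac{3}{\kappa }-\frac{1}{2}\); the term \(c_0(t)\Gamma \) does not affect \(\Lambda \).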

4.1 Transformations of PDE

Lemma 4.1

Let \(\sigma ,s_0\in \mathbb {R}\). Suppose \(\Gamma \), \(\Psi \), and \(\Psi _{s_0}\) are functions defined on \((0,\infty )\times \mathbb {R}\), which satisfy \(\Psi =\Gamma \Theta _I^{\frac{2}{\kappa }}\), \(\Psi _s=\Gamma _s\Theta _I^{\frac{2}{\kappa }}\), and \(\Psi _{s_0}(t,x)=e^{-\frac{s_0x}{\kappa }-\frac{s_0^2t}{2\kappa }}\Psi (t,x)\). Then the following PDEs are equivalent:

Proof

This follows from (2.5), (2.7), and some straightforward computations. \(\square \)
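The role of the exponential factor in Lemma 4.1 can be seen from a short computation. Assume, for illustration only (the displays (4.5) and (4.6) are not reproduced here), that \(\Psi \) solves an equation of the form \(\partial _t\Psi =\frac{\kappa }{2}\Psi ''+V\Psi \) for some function \(V(t,x)\) containing no derivatives of \(\Psi \). Writing \(\Psi =E\,\Psi _{s_0}\) with \(E(t,x)=e^{\frac{s_0x}{\kappa }+\frac{s_0^2t}{2\kappa }}\), we have

$$\begin{aligned} \partial _t\Psi &=E\Big (\partial _t\Psi _{s_0}+\frac{s_0^2}{2\kappa }\Psi _{s_0}\Big ),\\ \frac{\kappa }{2}\Psi ''&=E\Big (\frac{\kappa }{2}\Psi _{s_0}''+s_0\Psi _{s_0}'+\frac{s_0^2}{2\kappa }\Psi _{s_0}\Big ), \end{aligned}$$

so the terms \(\frac{s_0^2}{2\kappa }\Psi _{s_0}\) cancel and \(\partial _t\Psi _{s_0}=\frac{\kappa }{2}\Psi _{s_0}''+s_0\Psi _{s_0}'+V\Psi _{s_0}\). The factor thus adds a constant drift \(s_0\): the operator on the right is the generator of \(x+\sqrt{\kappa }B(t)+s_0t\) plus the potential \(V\), which is what makes a Feynman–Kac representation with such a diffusion available.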

Remark

When \(\sigma =\frac{4}{\kappa }-1\), (4.4) agrees with (4.1).

Lemma 4.2

Let \(\sigma ,s_0\in \mathbb {R}\). Suppose \(\Psi _{s_0}\) is positive, has period \(2\pi \), and solves (4.6) in \((0,\infty )\times \mathbb {R}\). Then \(\Psi _{s_0}(t,x)\rightarrow C\) as \(t\rightarrow \infty \) for some constant \(C>0\), uniformly in \(x\in \mathbb {R}\).

Proof

Fix \(t_0>0\) and \(x_0\in \mathbb {R}\). For \(0\le t<t_0\), let \(X_{x_0}(t)=x_0+\sqrt{\kappa }B(t)+s_0t\) and

From (4.6) and Itô’s formula, \(M(t)\), \(0\le t<t_0\), is a local martingale. Since \(\Psi _{s_0}\) and \(\mathbf{H}_I'\) are continuous on \((0,\infty )\times \mathbb {R}\) and have period \(2\pi \), we see that, for any \(t_1\in (0,t_0]\), \(M(t)\), \(0\le t\le t_0-t_1\), is uniformly bounded, so it is a bounded martingale. Thus,

Now suppose \(t_0>t_1\ge 3\). From Lemma 2.1, we see that,

Let \(\varepsilon >0\). Choose \(t_1\ge 3\) such that \(15\sigma e^{-t_1}<\varepsilon /3\). For \(t\in [t_1,\infty )\) and \(x\in \mathbb {R}\), define

As \(t\rightarrow \infty \), the distribution of \(e^i(X_x(t-t_1))\) tends to the uniform distribution on \(\mathbb {T}\). Since \(\Psi _{s_0}\) is positive, continuous, and has period \(2\pi \), we see that \(\Psi _{s_0,t_1}(t,x)\rightarrow \frac{1}{2\pi } \int _0^{2\pi } \Psi _{s_0}(t_1,y)dy>0\) as \(t\rightarrow \infty \), uniformly in \(x\in \mathbb {R}\). Thus, \(\ln (\Psi _{s_0,t_1}(t,x))\) converges as \(t\rightarrow \infty \), uniformly in \(x\in \mathbb {R}\). So there is \(t_2>t_1\) such that if \(t_a,t_b\ge t_2\) and \(x_a,x_b\in \mathbb {R}\), then \(|\ln (\Psi _{s_0,t_1}(t_a,x_a))-\ln (\Psi _{s_0,t_1}(t_b,x_b))|<\varepsilon /3\). From (4.7) and (4.8) we see that

Thus, \(|\ln (\Psi _{s_0}(t_a,x_a))-\ln (\Psi _{s_0}(t_b,x_b))|<\varepsilon \) if \(t_a,t_b\ge t_2\) and \(x_a,x_b\in \mathbb {R}\). Hence \(\ln (\Psi _{s_0}(t,x))\) converges as \(t\rightarrow \infty \), uniformly in \(x\in \mathbb {R}\), to a limit that does not depend on \(x\), which implies the conclusion of the lemma. \(\square \)
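The equidistribution step in this proof can be made quantitative when the periodic function is a trigonometric polynomial. For \(X(t)=x+\sqrt{\kappa }B(t)+\mu t\) with a constant drift \(\mu \), one has \(\mathbb {E}[e^{inX(t)}]=e^{in(x+\mu t)-\kappa n^2t/2}\), so \(\mathbb {E}f(X(t))\) approaches the mean \(\frac{1}{2\pi }\int _0^{2\pi }f\) uniformly in \(x\), at rate \(e^{-\kappa t/2}\). The Python sketch below evaluates this exactly. The coefficients are illustrative, and the function \(\Psi _{s_0}(t_1,\cdot )\) in the proof is only assumed continuous, not a trigonometric polynomial, so this only illustrates the mechanism.

```python
import math


def expected_trig_poly(a, b, x, t, kappa, drift):
    """Exact E[f(X(t))] for X(t) = x + sqrt(kappa) B(t) + drift * t, where
    f(y) = a[0] + sum_{n>=1} (a[n] cos(n y) + b[n] sin(n y)) and len(a) == len(b).

    Uses E[exp(i n X(t))] = exp(i n (x + drift * t) - kappa n^2 t / 2); b[0] is ignored.
    """
    m = x + drift * t
    total = a[0]
    for n in range(1, len(a)):
        damping = math.exp(-kappa * n * n * t / 2)
        total += damping * (a[n] * math.cos(n * m) + b[n] * math.sin(n * m))
    return total


def uniform_gap_bound(a, b, t, kappa):
    """Bound on sup_x |E f(X(t)) - a[0]|; it decays like exp(-kappa t / 2)."""
    return sum(math.exp(-kappa * n * n * t / 2) * (abs(a[n]) + abs(b[n]))
               for n in range(1, len(a)))


if __name__ == "__main__":
    a, b = [2.0, 0.5, -0.3], [0.0, 0.2, 0.1]
    for t in (0.0, 1.0, 5.0, 20.0):
        print(t, uniform_gap_bound(a, b, t, kappa=2.0))
```

The bound does not depend on \(x\) or on the drift, which is the uniformity in \(x\in \mathbb {R}\) that the proof needs.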

Lemma 4.3

Let \(s_0\in \mathbb {R}\). Suppose \(\Gamma \) is positive, satisfies (4.2), and solves (4.4). Then there is \(C>0\) such that \(\Gamma _{s_0}(t,x){:=}C^{-1}e^{-\frac{s_0x}{\kappa }-\frac{s_0^2t}{2\kappa }}\Gamma (t,x)\) has period \(2\pi \) and satisfies \(\lim _{t\rightarrow \infty }\Gamma _{s_0}(t,x)= 1\), uniformly in \(x\in \mathbb {R}\).

Proof

Let \(\Psi _{s_0}\) be given by Lemma 4.1. Since \(\Theta _I>0\), \(\Psi _{s_0}\) is positive and solves (4.6). Since \(\Gamma \) satisfies (4.2) and \(\Theta _I\) has period \(2\pi \), \(\Psi _{s_0}\) also has period \(2\pi \). From Lemma 4.2, there is \(C>0\) such that \(\Psi _{s_0}\rightarrow C\) as \(t\rightarrow \infty \), uniformly in \(x\in \mathbb {R}\). Let \(\Gamma _{s_0}(t,x){:=}C^{-1}e^{-\frac{s_0x}{\kappa }-\frac{s_0^2t}{2\kappa }}\Gamma (t,x)\). Then \(\Gamma _{s_0}=C^{-1}\Psi _{s_0}\Theta _I(t,x)^{-\frac{2}{\kappa }}\). From (2.6), \(\Theta _I\rightarrow 1\) as \(t\rightarrow \infty \), uniformly in \(x\in \mathbb {R}\). Since \(\Theta _I\) has period \(2\pi \), we get the desired conclusion. \(\square \)

4.2 Ensemble

Let \(p>0\) and \(\xi _1,\xi _2\in C([0,p))\). For \(j=1,2\), let \(g_j(t,\cdot )\) (resp. \(g_{I,j}(t,\cdot )\)), \(0\le t<p\), be the annulus (resp. inverted annulus) Loewner maps of modulus \(p\) driven by \(\xi _j\). Let \(\widetilde{g}_j(t,\cdot )\) and \(\widetilde{g}_{I,j}(t,\cdot )\), \(0\le t<p\), \(j=1,2\), be the corresponding covering Loewner maps. Suppose \(\xi _j\) generates a simple annulus Loewner trace of modulus \(p\): \(\beta _j\), \(j=1,2\). Let \(\beta _{I,j}=I_p\circ \beta _j\), \(j=1,2\), be the inverted trace. Define

For \((t_1,t_2)\in {\mathcal {D}}\), we define

Fix any \(j\ne k\in \{1,2\}\) and \(t_k\in [0,p)\). Let \(T_j(t_k)\) be the maximal number such that for any \(t_j<T_j(t_k)\), we have \((t_1,t_2)\in {\mathcal {D}}\). As \(t_j\rightarrow T_j(t_k)^-\), the spherical distance between \(\beta _j((0,t_j])\) and \(\beta _{I,k}((0,t_k])\) tends to \(0\), so \({{\mathrm{m}}}(t_1,t_2)\rightarrow 0\). For \(0\le t_j<T_j(t_k)\), let \(\beta _{j,t_k}(t_j)= g_{I,k}(t_k,\beta _j(t_j))\). Then \(\beta _{j,t_k}(t_j)\), \(0\le t_j<T_j(t_k)\), is a simple curve that starts from \(g_{I,k}(t_k,e^{i\xi _j(0)})\in \mathbb {T}\) and stays inside \(\mathbb {A}_{p-t_k}\) for \(t_j>0\). Let

Then \(v_{j,t_k}\) is continuous and increasing and maps \([0,T_j(t_k))\) onto \([0,S_{j,t_k})\) for some \(S_{j,t_k}\in (0,p-t_k]\). Since \({{\mathrm{m}}}\rightarrow 0\) as \(t_j\rightarrow T_j(t_k)\), \(S_{j,t_k}=p-t_k\). Then \(\gamma _{j,t_k}(t){:=} \beta _{j,t_k}(v_{j,t_k}^{-1}(t))\), \(0\le t<p-t_k\), is the annulus Loewner trace of modulus \(p-t_k\) driven by some \(\zeta _{j,t_k}\in C([0,p-t_k))\). Let \(\gamma _{I,j,t_k}(t)\) be the corresponding inverted annulus Loewner trace. Let \( h_{j,t_k}(t,\cdot )\) and \(h_{I,j,t_k}(t,\cdot )\) be the corresponding annulus and inverted annulus Loewner maps, and let \(\widetilde{h}_{j,t_k}(t,\cdot )\) and \(\widetilde{h}_{I,j,t_k}(t,\cdot )\) be the corresponding covering maps.

For \(0\le t_j<T_j(t_k)\), let \(\xi _{j,t_k}(t_j)\), \(\beta _{I,j,t_k}(t_j)\), \(g_{j,t_k}(t_j,\cdot )\), \(g_{I,j,t_k}(t_j,\cdot )\), \(\widetilde{g}_{j,t_k}(t_j,\cdot )\), and \(\widetilde{g}_{I,j,t_k}(t_j,\cdot )\) be the time-changes of \(\zeta _{j,t_k}(t)\), \(\gamma _{I,j,t_k}(t)\), \( h_{j,t_k}(t,\cdot )\), \(h_{I,j,t_k}(t,\cdot )\), \(\widetilde{h}_{j,t_k}(t,\cdot )\), and \(\widetilde{h}_{I,j,t_k}(t,\cdot )\), respectively, via the map \(v_{j,t_k}\). For example, this means that \(\xi _{j,t_k}(t_j)=\zeta _{j,t_k}(v_{j,t_k}(t_j))\) and \(g_{j,t_k}(t_j,\cdot )=h_{j,t_k}(v_{j,t_k}(t_j),\cdot )\).

For \(0\le t_j<T_j(t_k)\), let

Then \(G_{I,k,t_k}(t_j,\cdot )\) maps \(\mathbb {A}_{p-t_j}{\setminus }g_j(t_j,\beta _{I,k}((0,t_k]))\) conformally onto \( \mathbb {A}_{{{\mathrm{m}}}(t_1,t_2)}\) and maps \(\mathbb {T}\) onto \(\mathbb {T}\); \(e^i\circ \widetilde{G}_{I,k,t_k}(t_j,\cdot )=G_{I,k,t_k}(t_j,\cdot )\circ e^i\); and \(\widetilde{G}_{I,k,t_k}(t_j,\cdot )\) maps \(\mathbb {R}\) onto \(\mathbb {R}\). Since \(\gamma _{j,t_k}(t)= \beta _{j,t_k}(v_{j,t_k}^{-1}(t))\), from (3.6) and a similar formula for \(\gamma \), we find that \(e^{i\xi _{j,t_k}(t_j)}=G_{I,k,t_k}(t_j,e^{i\xi _j(t_j)})\) for \(0\le t_j<T_j(t_k)\). So there is \(n\in \mathbb {Z}\) such that \(\widetilde{G}_{I,k,t_k}(t_j,\xi _j(t_j))=\xi _{j,t_k}(t_j)+2n\pi \) for \(0\le t_j<T_j(t_k)\). Since \(\zeta _{j,t_k}+2n\pi \) generates the same annulus Loewner hulls as \(\zeta _{j,t_k}\), we may choose \(\zeta _{j,t_k}\) such that for \(0\le t_j<T_j(t_k)\),

For \(0\le t_j<T_j(t_k)\), let

Then \(A_{j,S}(t_1,t_2)\) is the Schwarzian derivative of \(\widetilde{G}_{I,k,t_k}(t_j,\cdot )\) at \(\xi _j(t_j)\). A standard argument using Lemma 2.1 in [17] shows that, for \(0\le t_j<T_j(t_k)\),

so from (4.11) we have

Moreover, for \(0\le t_j<T_j(t_k)\),

From (4.13) we have

Differentiate (4.21) w.r.t. \(t_j\). Let \(w=\widetilde{g}_j(t_j,z)\rightarrow \xi _j(t_j)\). From (3.7), (4.14), (4.19), and (2.2) we get

Differentiate (4.21) w.r.t. \(t_j\) and \(z\), and let \(w=\widetilde{g}_j(t_j,z)\rightarrow \xi _j(t_j)\). Then we get

Note that both \(G_{I,k,t_k}(t_j,\cdot )\) and \(g_{I,k,t_j}(t_k,\cdot )\) map \(\mathbb {A}_{p-t_j}{\setminus }\beta _{I,k,t_j}((0,t_k])\) conformally onto \(\mathbb {A}_{{{\mathrm{m}}}(t_1,t_2)}\) and map \(\mathbb {T}\) onto \(\mathbb {T}\). So they differ by a multiplicative constant of modulus \(1\). Thus, there is \(C_k(t_1,t_2)\in \mathbb {R}\) such that

Interchanging \(j\) and \(k\) in (4.24), we see that there is \(C_j(t_1,t_2)\in \mathbb {R}\) such that

From (4.13) we have

From the definition of inverted annulus Loewner maps, we have

From (4.27) and the above formulas, we get \(\widetilde{g}_{k,t_j}(t_k,\cdot )\circ \widetilde{g}_{I,j}(t_j,\cdot )+C_k=\widetilde{g}_{I,j,t_k}(t_j,\cdot )\circ \widetilde{g}_k(t_k,\cdot )\). Comparing this formula with (4.26), we see that \( C_1+C_2\equiv 0\). Now we define \(X_1\) and \(X_2\) on \({\mathcal {D}}\) by

From (4.24), (4.25), and \(C_1+C_2\equiv 0\), we have

Since \(\mathbf{H}_I'''\) is even, we may define \(Q\) on \({\mathcal {D}}\) by

Differentiate (4.20) w.r.t. \(z\) twice. We get

Let \(z=\xi _k(t_k)\) in (4.20), (4.31), and (4.32). Since \(\mathbf{H}_I\) and \(\mathbf{H}_I''\) are odd and \(\mathbf{H}_I'\) is even, from (4.25) and (4.28) we have

Differentiate (4.32) w.r.t. \(z\) again, and let \(z=\xi _k(t_k)\). Since \(\mathbf{H}_I'''\) is even, we get

which together with (4.30) and (4.35) implies that

Define \(F\) on \({\mathcal {D}}\) by

Since \(\widetilde{g}_{I,j,t_k}(0,\cdot )=\widetilde{h}_{I,j,t_k}(0,\cdot )={{\mathrm{id}}}\), when \(t_j=0\), we have \(A_{k,1}=1\), \(A_{k,2}=A_{k,3}=0\), hence \(A_{k,S}=0\). From (4.36), we see that

Remark

There is an explanation of \(F\) in terms of Brownian loop measure. If \(R\) is a function on \((0,\infty )\) that satisfies \(R'(t)=\mathbf{r}(t)+\frac{1}{t}\), then

is the Brownian loop measure of the loops in \(\mathbb {A}_p\) that intersect both \(\beta _1([0,t_1])\) and \(\beta _{I,2}([0,t_2])\).

4.3 Martingales in two time variables

Let \(a_{1},a_{2}\in \mathbb {T}\) be as in Theorem 4.1. Let \(a_{I,j}=I_p(a_{j})\in \mathbb {T}_p\), \(j=1,2\). Choose \(x_1,x_2\in \mathbb {R}\) such that \(a_j=e^{ix_j}\), \(j=1,2\). Let \(B_1(t)\) and \(B_2(t)\) be two independent Brownian motions. For \(j=1,2\), let \(({\mathcal {F}}^j_t)\) be the complete filtration generated by \((B_j(t))\). Let \(\Gamma \), \(\Lambda \), \(\Lambda _1\), and \(\Lambda _2\) be as in Theorem 4.1. Since \(\Gamma \) satisfies (4.2), \(\Lambda _1\) and \(\Lambda _2\) have period \(2\pi \), so they are crossing annulus drift functions. For \(j=1,2\), let \(\xi _j(t_j)\), \(0\le t_j<p\), be the solution to the SDE:

Then \((\xi _1)\) and \((\xi _2)\) are independent. For simplicity, suppose \(\kappa \in (0,4]\) (for the case \(\kappa >4\), we may work with Loewner chains and apply Proposition 2.1 in [17]). Then for \(j=1,2\), a.s. \((\xi _j)\) generates a simple annulus Loewner trace \(\beta _j\), which is an annulus SLE\((\kappa ,\Lambda _j)\) trace in \(\mathbb {A}_p\) started from \(a_{j}\) with marked point \(a_{I,3-j}\). We may therefore apply the results of the previous subsection.

As the annulus Loewner objects driven by \(\xi _j\), \(\beta _j\), \(\beta _{I,j}=I_p\circ \beta _j\), \((g_{I,j}(t_j,\cdot ))\), \((\widetilde{g}_j(t_j,\cdot ))\), and \((\widetilde{g}_{I,j}(t_j,\cdot ))\) are all \(({\mathcal {F}}^j_{t_j})\)-adapted. Fix \(j\ne k\in \{1,2\}\). Since \(\beta _j\) is \(({\mathcal {F}}^j_{t_j})\)-adapted and \((g_{I,k}(t_k,\cdot ))\) is \(({\mathcal {F}}^k_{t_k})\)-adapted, we see that \((t_1,t_2)\mapsto \beta _{j,t_k}(t_j)=g_{I,k}(t_k,\beta _j(t_j))\) defined on \({\mathcal {D}}\) is \(({\mathcal {F}}^1_{t_1}\times {\mathcal {F}}^2_{t_2})\)-adapted. Since \(\widetilde{g}_{j,t_k}(t_j,\cdot )\) and \(\widetilde{g}_{I,j,t_k}(t_j,\cdot )\) are determined by \(\beta _{j,t_k}(s_j)\), \(0\le s_j\le t_j\), they are \(({\mathcal {F}}^1_{t_1}\times {\mathcal {F}}^2_{t_2})\)-adapted. From (4.13), \((\widetilde{G}_{I, k,t_k}(t_j,\cdot ))\) is \(({\mathcal {F}}^1_{t_1}\times {\mathcal {F}}^2_{t_2})\)-adapted. From (4.14), \((\xi _{j,t_k}(t_j))\) is also \(({\mathcal {F}}^1_{t_1}\times {\mathcal {F}}^2_{t_2})\)-adapted. From (4.10), (4.28), (4.15), and (4.16), we see that \(({{\mathrm{m}}})\), \((X_j)\), \((A_{j,h})\), \(h=1,2,3\), and \((A_{j,S})\) are all \(({\mathcal {F}}^1_{t_1}\times {\mathcal {F}}^2_{t_2})\)-adapted.

Fix \(j\ne k\in \{1,2\}\) and any \(({\mathcal {F}}^k_t)\)-stopping time \(t_k\in [0,p)\). Let \({\mathcal {F}}^{j,t_k}_{t_j}={\mathcal {F}}^j_{t_j}\times {\mathcal {F}}^k_{t_k}\), \(0\le t_j<p\). Then \(({\mathcal {F}}^{j,t_k}_{t_j})_{0\le t_j<p}\) is a filtration. Since \((B_j(t_j))\) is independent of \({\mathcal {F}}^k_{t_k}\), it is also an \(({\mathcal {F}}^{j,t_k}_{t_j})\)-Brownian motion. Thus, (4.39) is an \(({\mathcal {F}}^{j,t_k}_{t_j})\)-adapted SDE. From now on, we will apply Itô’s formula repeatedly; all SDEs will be \(({\mathcal {F}}^{j,t_k}_{t_j})\)-adapted, and \(t_j\) ranges in \([0,T_j(t_k))\).

From (4.22), (4.28), (4.15), and (4.33), we see that \(X_j\) satisfies

Let \(\Gamma _{1}=\Gamma \) and \(\Gamma _{2}(t,x)=\Gamma (t,-x)\). Then for \(j=1,2\), \(\Lambda _j=\frac{\Gamma _j'}{\Gamma _j}\) and \(\Gamma _{j}\) satisfies (4.1). From (4.29), we may define \(Y\) on \({\mathcal {D}}\) by

From (4.1), (4.18), (4.40), and (4.41), we have

From (4.23) we have

Let

Here \(c\) is the central charge of SLE\(_\kappa \). We then compute

Recall the \(\mathbf{R}\) defined in Sect. 2.3. Define \(\widehat{M}\) on \({\mathcal {D}}\) by

Then \(\widehat{M}\) is positive. From (2.3), (4.18), (4.34), (4.38), (4.42), and (4.43), we have

When \(t_k=0\), we have \(A_{j,1}=1\), \(A_{j,2}=0\), \({{\mathrm{m}}}=p-t_j\), and \(X_j=\xi _j(t_j)-\widetilde{g}_{I,j}(t_j,x_k)\), so the RHS of (4.45) becomes \(\frac{1}{\kappa }\Lambda _{j}(p-t_j,\xi _j(t_j)-\widetilde{g}_{I,j}(t_j,x_k))\partial \xi _j(t_j)\). Define \(M\) on \({\mathcal {D}}\) by

Then \(M\) is also positive, and \(M(\cdot ,0)\equiv M(0,\cdot )\equiv 1\). From (4.39) and (4.45) we have

So when \(t_k\in [0,p)\) is a fixed \(({\mathcal {F}}^k_t)\)-stopping time, \(M\) is a local martingale in \(t_j\).

Let \(\mathcal {J}\) denote the set of Jordan curves in \(\mathbb {A}_p\) that separate \(\mathbb {T}\) and \(\mathbb {T}_p\). For \(J\in \mathcal {J}\) and \(j=1,2\), let \(T_j(J)\) be the first time that \(\beta _j\) visits \(J\). It is also the first time that \(\beta _{I,j}\) visits \(I_p(J)\). Let \({{\mathrm{JP}}}\) denote the set of pairs \((J_1,J_2)\in {\mathcal {J}}^2\) such that \(I_p(J_1)\cap J_2=\emptyset \) and \(I_p(J_1)\) is surrounded by \(J_2\). This condition is equivalent to \(I_p(J_2)\cap J_1=\emptyset \) and \(I_p(J_2)\) being surrounded by \(J_1\). Then for every \((J_1,J_2)\in {{\mathrm{JP}}}\), \(\beta _{I,1}((0,t_1])\cap \beta _{2}((0,t_2])=\emptyset \) when \(t_1\le T_{1}(J_1)\) and \(t_2\le T_2(J_2)\), so \([0,T_{1}(J_1)]\times [0,T_{2}(J_2)]\subset {\mathcal {D}}\).

Lemma 4.4

There are positive continuous functions \(N_L(p)\) and \(N_S(p)\) defined on \((0,\infty )\) such that \(N_L(p),N_S(p)=O(pe^{-p})\) as \(p\rightarrow \infty \) and the following holds. Suppose \(K\) is an interior hull in \(\mathbb {D}\) containing \(0\), \(g\) maps \(\mathbb {D}{\setminus }K\) conformally onto \(\mathbb {A}_p\) for some \(p\in (0,\infty )\) and maps \(\mathbb {T}\) onto \(\mathbb {T}\), and \(\widetilde{g}\) is an analytic function that satisfies \(e^i\circ \widetilde{g}=g\circ e^i\). Then for any \(x\in \mathbb {R}\), \(|\ln (\widetilde{g}'(x))|\le N_L(p)\) and \(|S\widetilde{g}(x)|\le N_S(p)\), where \(S\widetilde{g}(x)\) is the Schwarzian derivative of \(\widetilde{g}\) at \(x\), i.e., \(S\widetilde{g}(x)=\widetilde{g}'''(x)/\widetilde{g}'(x)-\frac{3}{2} (\widetilde{g}''(x)/\widetilde{g}'(x))^2\).
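As a numerical illustration of the Schwarzian derivative appearing in Lemma 4.4, the following Python sketch estimates \(S\widetilde{g}\) by central finite differences. The lift \(\widetilde{g}(x)=x+\varepsilon \sin x\) is a hypothetical toy example, chosen only because it satisfies \(\widetilde{g}(x+2\pi )=\widetilde{g}(x)+2\pi \) like the lifts in the lemma; it is not the conformal map attached to an interior hull, and the function names are illustrative. The sketch checks the classical values \(S\exp \equiv -1/2\) and \(S\tan \equiv 2\), and shows \(\sup |\ln \widetilde{g}'|\) and \(\sup |S\widetilde{g}|\) shrinking as \(\widetilde{g}\) approaches the identity, which is the kind of smallness the lemma quantifies through \(N_L(p)\) and \(N_S(p)\).

```python
import math


def derivatives(f, x, h=1e-3):
    """Central finite-difference estimates of f'(x), f''(x), f'''(x)."""
    d1 = (f(x + h) - f(x - h)) / (2 * h)
    d2 = (f(x + h) - 2 * f(x) + f(x - h)) / h**2
    d3 = (f(x + 2 * h) - 2 * f(x + h) + 2 * f(x - h) - f(x - 2 * h)) / (2 * h**3)
    return d1, d2, d3


def schwarzian(f, x, h=1e-3):
    """Estimate S f(x) = f'''(x)/f'(x) - (3/2) * (f''(x)/f'(x))**2."""
    d1, d2, d3 = derivatives(f, x, h)
    return d3 / d1 - 1.5 * (d2 / d1) ** 2


def toy_lift(eps):
    """Hypothetical lift x -> x + eps*sin(x); it satisfies g(x + 2*pi) = g(x) + 2*pi."""
    return lambda x: x + eps * math.sin(x)


# Sup of |ln g'| and |S g| over one period, sampled on a uniform grid.
grid = [2 * math.pi * k / 200 for k in range(200)]
bounds = {}
for eps in (0.1, 0.01):
    g = toy_lift(eps)
    sup_log = max(abs(math.log(derivatives(g, x)[0])) for x in grid)
    sup_s = max(abs(schwarzian(g, x)) for x in grid)
    bounds[eps] = (sup_log, sup_s)
    print(f"eps={eps}: sup|ln g'|={sup_log:.4f}, sup|S g|={sup_s:.4f}")

print(f"S exp(0.3) = {schwarzian(math.exp, 0.3):.4f}")
print(f"S tan(0.3) = {schwarzian(math.tan, 0.3):.4f}")
```

For this toy lift both suprema are attained near \(x=\pi \), where \(\widetilde{g}'=1-\varepsilon \) and \(S\widetilde{g}=\varepsilon /(1-\varepsilon )\), so they are of order \(\varepsilon \).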

Proof

Let \(f=g^{-1}\) and \(\widetilde{f}=\widetilde{g}^{-1}\). Then \(e^i\circ \widetilde{f}=f\circ e^i\). Since \(\widetilde{f}'(\widetilde{g}(x))=1/\widetilde{g}'(x)\) and \(S\widetilde{f}(\widetilde{g}(x))=-S\widetilde{g}(x)/\widetilde{g}'(x)^2\), it suffices to prove analogous bounds for \(\widetilde{f}\). Let \(P(p,z)=-{{\mathrm{Re }}}\mathbf{S}_I(p,z)-\ln |z|/p\) and \(\widetilde{P}(p,z)=P(p,e^{iz})={{\mathrm{Im }}}\mathbf{H}_I(p,z)+{{\mathrm{Im }}}z/p\). Then \(P(p,\cdot )\) vanishes on \(\mathbb {T}\) and \(\mathbb {T}_p{\setminus }\{e^{-p}\}\) and is harmonic inside \(\mathbb {A}_p\). Moreover, when \(z\in \mathbb {A}_p\) is near \(e^{-p}\), \(P(p,z)\) behaves like \(-{{\mathrm{Re }}}(\frac{e^{-p}+z}{e^{-p}-z})+O(1)\). Thus, \(-P(p,\cdot )\) is a renormalized Poisson kernel in \(\mathbb {A}_p\) with the pole at \(e^{-p}\). Since \(\ln |f|\) is negative and harmonic in \(\mathbb {A}_p\) and vanishes on \(\mathbb {T}\), there is a positive measure \(\mu _K\) on \([0,2\pi )\) such that

which implies that

So for any \(x\in \mathbb {R}\) and \(h=1,2,3\), \(\widetilde{f}^{(h)}(x)=\int \frac{\partial ^{h}}{\partial x^{h-1}\partial y}\widetilde{P}(p,x-\xi )d\mu _K(\xi )\). Let

We have \(0<m_p<M_p<\infty \) and \(m_p|\mu _K|\le \widetilde{f}'\le M_p |\mu _K|\) on \(\mathbb {R}\). Since \(\widetilde{f}(2\pi )=\widetilde{f}(0)+2\pi \), we get \(1/M_p\le |\mu _K|\le 1/m_p\). Thus, \(m_p/M_p\le \widetilde{f}'\le M_p/m_p\) and \(|\widetilde{f}^{(h)}|\le M^{(h)}_p/m_p\), \(h=2,3\), from which it follows that \(|S\widetilde{f}| \le \frac{M_p^{(3)}M_p}{m_p^2}+\frac{3}{2}\left( \frac{M_p^{(2)}M_p}{m_p^2}\right) ^2\) on \(\mathbb {R}\). Since \(\widetilde{P}(p,z)={{\mathrm{Im }}}\mathbf{H}_I(p,z)+{{\mathrm{Im }}}z/p\), we see that \( \frac{\partial }{\partial y}\widetilde{P}(p,x)=\mathbf{H}_I'(p,x)+\frac{1}{p}\) and \(\frac{\partial ^h}{\partial x^{h-1}\partial y}\widetilde{P}(p,x)=\mathbf{H}_I^{(h)}(p,x)\), \(h=2,3\). From Lemma 2.1, \(M_p,m_p=\frac{1}{p}+O(e^{-p})\) and \(M_p^{(h)}=O(e^{-p})\), \(h=2,3\), as \(p\rightarrow \infty \). So we have \(\ln (M_p/m_p)=O(pe^{-p})\) and \(\frac{M_p^{(3)}M_p}{m_p^2}+\frac{3}{2}\left( \frac{M_p^{(2)}M_p}{m_p^2}\right) ^2=O(pe^{-p})\). \(\square \)
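The two steps of this proof that are stated only briefly can be written out as below. The reduction to \(\widetilde{f}\) uses the chain rule for the Schwarzian derivative, \(S(F\circ G)=(SF\circ G)\,(G')^2+SG\), applied to \(\widetilde{f}\circ \widetilde{g}=\mathrm{id}\); because \(\widetilde{g}\) maps \(\mathbb {R}\) onto \(\mathbb {R}\), a bound \(|\ln \widetilde{f}'|\le N\) on \(\mathbb {R}\) gives \(|\ln \widetilde{g}'|\le N\), and the Schwarzian bound for \(\widetilde{g}\) picks up at most the factor \(\widetilde{g}'^2\le e^{2N}\), which is \(O(1)\) here. The asymptotics use only the inequalities \(m_p/M_p\le \widetilde{f}'\le M_p/m_p\) and \(|\widetilde{f}^{(h)}|\le M^{(h)}_p/m_p\) obtained above; the symbols \(N\), \(a_p\), \(b_p\) are introduced only for this display, with \(a_p,b_p\) the error terms from Lemma 2.1.

\[
\begin{aligned}
&\widetilde{f}'(\widetilde{g}(x))\,\widetilde{g}'(x)=1,\qquad
0=S(\mathrm{id})(x)=S\widetilde{f}(\widetilde{g}(x))\,\widetilde{g}'(x)^2+S\widetilde{g}(x),\\
&\text{so}\quad |\ln \widetilde{g}'(x)|=|\ln \widetilde{f}'(\widetilde{g}(x))|\le \ln \frac{M_p}{m_p},\qquad
|S\widetilde{g}(x)|=\widetilde{g}'(x)^2\,|S\widetilde{f}(\widetilde{g}(x))|;\\[4pt]
&|S\widetilde{f}|\le \frac{|\widetilde{f}'''|}{\widetilde{f}'}+\frac{3}{2}\Big(\frac{\widetilde{f}''}{\widetilde{f}'}\Big)^2
\le \frac{M_p^{(3)}/m_p}{m_p/M_p}+\frac{3}{2}\Big(\frac{M_p^{(2)}/m_p}{m_p/M_p}\Big)^2
=\frac{M_p^{(3)}M_p}{m_p^2}+\frac{3}{2}\Big(\frac{M_p^{(2)}M_p}{m_p^2}\Big)^2;\\[4pt]
&M_p=\tfrac{1}{p}(1+pa_p),\quad m_p=\tfrac{1}{p}(1+pb_p),\quad a_p,b_p=O(e^{-p})
\;\Longrightarrow\;
\ln \frac{M_p}{m_p}=\ln (1+pa_p)-\ln (1+pb_p)=O(pe^{-p}),\quad \frac{M_p}{m_p^2}=\frac{p(1+pa_p)}{(1+pb_p)^2}=O(p).
\end{aligned}
\]

Hence \(\frac{M_p^{(3)}M_p}{m_p^2}=O(e^{-p})\cdot O(p)=O(pe^{-p})\) and \(\big(\frac{M_p^{(2)}M_p}{m_p^2}\big)^2=O(p^2e^{-2p})\), which is also \(O(pe^{-p})\).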

Proposition 4.1

(Boundedness) Fix \((J_1,J_2)\in {{\mathrm{JP}}}\). Then \(|\ln (M)|\) is bounded on \([0,T_1(J_1)]\times [0,T_2(J_2)]\) by a constant depending only on \(J_1\) and \(J_2\).

Proof

In this proof, we say a function is uniformly bounded if its values on \([0,T_1(J_1)]\times [0,T_2(J_2)]\) are bounded in absolute value by a constant depending only on \(p\), \(J_1\), and \(J_2\). If there is no ambiguity, let \(\Omega (A,B)\) denote the domain bounded by sets \(A\) and \(B\), and let \({{\mathrm{mod}}}(A,B)\) denote the modulus of this domain if it is doubly connected. Let \(J_{I,2}=I_p(J_2)\) and \(p_0={{\mathrm{mod}}}(J_1,J_{I,2})>0\). If \(t_1\le T_1(J_1)\) and \(t_2\le T_2(J_2)\), then, since \(\Omega (J_1,J_{I,2})\) separates \(K_1(t_1)\) from \(K_{I,2}(t_2)\) in \(\mathbb {A}_p\), we have \({{\mathrm{m}}}(t_1,t_2)\ge p_0\). Since \({{\mathrm{m}}}\le p\) always holds, \({{\mathrm{m}}}\in [p_0,p]\) on \([0,T_{1}(J_1)]\times [0,T_{2}(J_2)]\). Since \(\mathbf{R}\) is continuous on \((0,\infty )\), \(\mathbf{R}({{\mathrm{m}}})\) is uniformly bounded. Since \(Q=\mathbf{H}_I'''({{\mathrm{m}}},X_1)\) and \(\mathbf{H}_I'''\) is continuous and has period \(2\pi \), \(Q\) is uniformly bounded. From Lemma 4.4, for \(j=1,2\), \(|\ln (A_{j,1})|\le N_L({{\mathrm{m}}})\), so it is uniformly bounded. From (4.38), \(\ln (F)\) is uniformly bounded. Let \(s_0\in \mathbb {R}\) be as in Theorem 4.1. Let \(\Gamma _{s_0}>0\) be defined by Lemma 4.3, and \(Y_{s_0}=\Gamma _{s_0}(X_1)\). Then \(\Gamma _{s_0}\) has period \(2\pi \), so \(\ln (Y_{s_0})\) is uniformly bounded. Define \(\widehat{M}_{s_0}\) and \(M_{s_0}\) using (4.44) and (4.46) with \(Y\) and \(\widehat{M}\) replaced by \(Y_{s_0}\) and \(\widehat{M}_{s_0}\), respectively. Then \(\ln (\widehat{M}_{s_0})\) and \(\ln (M_{s_0})\) are uniformly bounded because their factors are. Now it suffices to show that \(\ln (M)-\ln (M_{s_0})\) is uniformly bounded. We have

The second term on the RHS of the above formula is uniformly bounded because \({{\mathrm{m}}}\in [p_0,p]\). So it suffices to show that \( X_1(t_1,t_2)-X_1(t_1,0)-X_1(0,t_2)+X_1(0,0)\) is uniformly bounded. Let

From (4.28) we have \(X_1=\widetilde{G}-\widetilde{g}\). So it suffices to show that \( \widetilde{G}(t_1,t_2)-\widetilde{G}(t_1,0)-\widetilde{G}(0,t_2)+\widetilde{G}(0,0)\) and \( \widetilde{g}(t_1,t_2)-\widetilde{g}(t_1,0)-\widetilde{g}(0,t_2)+\widetilde{g}(0,0)\) are both uniformly bounded. From (4.20) we have \(\partial _1 \widetilde{g}(t_1,t_2)=A_{1,1}^2\mathbf{H}_I({{\mathrm{m}}}(t_1,t_2),\widetilde{g}(t_1,t_2)-\xi _{1,t_2}(t_1))\). Since \(A_{1,1}^2\) is uniformly bounded, \({{\mathrm{m}}}\in [p_0,p]\), and \(\mathbf{H}_I\) is continuous and has period \(2\pi \), \(\widetilde{g}(t_1,t_2)-\widetilde{g}(0,t_2)\) is uniformly bounded. Thus, \( \widetilde{g}(t_1,t_2)-\widetilde{g}(t_1,0)-\widetilde{g}(0,t_2)+\widetilde{g}(0,0)\) is uniformly bounded. Let \(\widetilde{G}_d(t_1,t_2)=\widetilde{G}(t_1,t_2)-\xi _1(t_1)\). Then \( \widetilde{G}(t_1,t_2)-\widetilde{G}(t_1,0)-\widetilde{G}(0,t_2)+\widetilde{G}(0,0)= \widetilde{G}_d(t_1,t_2)-\widetilde{G}_d(t_1,0)-\widetilde{G}_d(0,t_2)+\widetilde{G}_d(0,0)\). To finish the proof it suffices to show that \(\widetilde{G}_d\) is uniformly bounded.
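In the shorthand \(\Delta _\square F:=F(t_1,t_2)-F(t_1,0)-F(0,t_2)+F(0,0)\) (introduced only for this remark), the reductions in the preceding paragraph can be written as follows. Here \(C_{\mathbf{H}}:=\sup \{|\mathbf{H}_I(q,x)|:q\in [p_0,p],\,x\in \mathbb {R}\}\), which is finite by the continuity and \(2\pi \)-periodicity of \(\mathbf{H}_I\) used above, and the middle line also uses the identity \(\int _0^{t_1}A_{1,1}(s,t_2)^2ds={{\mathrm{m}}}(0,t_2)-{{\mathrm{m}}}(t_1,t_2)\) that appears later in this proof, together with \({{\mathrm{m}}}\in [p_0,p]\).

\[
\begin{aligned}
\Delta _\square \widetilde{g}&=\big[\widetilde{g}(t_1,t_2)-\widetilde{g}(0,t_2)\big]-\big[\widetilde{g}(t_1,0)-\widetilde{g}(0,0)\big],\\
\big|\widetilde{g}(t_1,s)-\widetilde{g}(0,s)\big|&\le \int _0^{t_1}A_{1,1}(r,s)^2\,\big|\mathbf{H}_I\big({{\mathrm{m}}}(r,s),\widetilde{g}(r,s)-\xi _{1,s}(r)\big)\big|\,dr\le C_{\mathbf{H}}\,(p-p_0),\qquad s\in \{0,t_2\},\\
\Delta _\square \widetilde{G}&=\Delta _\square \widetilde{G}_d+\big[\xi _1(t_1)-\xi _1(t_1)-\xi _1(0)+\xi _1(0)\big]=\Delta _\square \widetilde{G}_d,\qquad |\Delta _\square \widetilde{G}_d|\le 4\sup |\widetilde{G}_d|.
\end{aligned}
\]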

Let \(J\) be a Jordan curve which is disjoint from \(J_1\) and \(I_p(J_2)\), and separates these two curves. Let \(\widetilde{J}=(e^i)^{-1}(J)\). Since \(\widetilde{G}_d(t_1,t_2)=\widetilde{G}_{I,2,t_2}(t_1,\xi _1(t_1))-\xi _1(t_1)\), by the maximum principle it suffices to show that \(\sup _{z\in \widetilde{g}_1(t_1,\widetilde{J})} (\widetilde{G}_{I,2,t_2}(t_1,z)-z)\) is uniformly bounded. Recall from (4.13) that \(\widetilde{G}_{I,2,t_2}(t_1,\cdot )=\widetilde{g}_{1,t_2}(t_1,\cdot )\circ \widetilde{g}_{I,2}(t_2,\cdot )\circ \widetilde{g}_1(t_1,\cdot )^{-1}\). So it suffices to show that the following three quantities are uniformly bounded:

The uniform boundedness of these three quantities follows from similar arguments. We only treat the last one, since it is the hardest. From (4.19) we have

Since \(\int _0^{t_1}A_{1,1}(s,t_2)^2ds={{\mathrm{m}}}(0,t_2)-{{\mathrm{m}}}(t_1,t_2)\) is uniformly bounded, it suffices to show that

is uniformly bounded. By the properties of \(\mathbf{H}\), it suffices to show that there is a constant \(h>0\) such that \({{\mathrm{Im }}}\widetilde{g}_{1,t_2}(t_1,\cdot )\circ \widetilde{g}_{I,2}(t_2,z)\ge h\) for any \(z\in \widetilde{J}\). This is equivalent to \(|g_{1,t_2}(t_1,\cdot )\circ g_{I,2}(t_2,z)|\le e^{-h}\) for any \(z\in J\). It suffices to show that the extremal distance (cf. [26]) between \(\mathbb {T}\) and \(g_{1,t_2}(t_1,\cdot )\circ g_{I,2}(t_2,J)\) is bounded below by a positive constant depending only on \(p\), \(J\), \(J_1\) and \(J_2\). By conformal invariance, this equals the extremal distance between \(J\) and \(\mathbb {T}\cup \beta _1((0,t_1])\), which is at least the extremal distance between \(J\) and \(J_1\), since \(J_1\) separates \(J\) from \(\mathbb {T}\cup \beta _1((0,t_1])\) when \(t_1\le T_1(J_1)\). So we are done. \(\square \)

4.4 Local couplings and global coupling

Let \(\mu _j\) denote the distribution of \((\xi _j)\), \(j=1,2\). Let \(\mu =\mu _1\times \mu _2\). Then \(\mu \) is the joint distribution of \((\xi _1)\) and \((\xi _2)\), since \(\xi _1\) and \(\xi _2\) are independent. Fix \((J_1,J_2)\in {{\mathrm{JP}}}\). From the local martingale property of \(M\) and Proposition 4.1, we have \( \mathbf{E}\,_\mu [M(T_1(J_1),T_2(J_2))]=M(0,0)=1\). Define \(\nu _{J_1,J_2}\) by \(d\nu _{J_1,J_2}/d\mu =M(T_1(J_1),T_2(J_2))\). Then \(\nu _{J_1,J_2}\) is a probability measure. Let \(\nu _1\) and \(\nu _2\) be the two marginal measures of \(\nu _{J_1,J_2}\). Then \(d\nu _1/d\mu _1=M(T_1(J_1),0)=1\) and \(d\nu _2/d\mu _2=M(0,T_2(J_2))=1\), so \(\nu _j=\mu _j\), \(j=1,2\). Suppose temporarily that the joint distribution of \((\xi _1)\) and \((\xi _2)\) is \(\nu _{J_1,J_2}\) instead of \(\mu \). Then the distribution of each \((\xi _j)\) is still \(\mu _j\).
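The marginal computation can be unpacked as follows; this is a sketch combining the local martingale property of \(M\) with Proposition 4.1. Take \(j=2\), \(k=1\) and \(t_1=T_1(J_1)\) in the filtration \({\mathcal {F}}^{2,t_1}_{t_2}={\mathcal {F}}^2_{t_2}\times {\mathcal {F}}^1_{t_1}\) introduced earlier. Then \(t_2\mapsto M(T_1(J_1),t_2)\), \(0\le t_2\le T_2(J_2)\), is a local martingale which is bounded by Proposition 4.1, hence a true martingale, so optional stopping applies at \(T_2(J_2)\). For any event \(E\in {\mathcal {F}}^1_{T_1(J_1)}\), using \(M(\cdot ,0)\equiv 1\),

\[
\nu _{J_1,J_2}(E)=\mathbf{E}\,_\mu \big[\mathbf{1}_E\,M(T_1(J_1),T_2(J_2))\big]
=\mathbf{E}\,_\mu \big[\mathbf{1}_E\,\mathbf{E}\,_\mu [M(T_1(J_1),T_2(J_2))\mid {\mathcal {F}}^1_{T_1(J_1)}\times {\mathcal {F}}^2_0]\big]
=\mathbf{E}\,_\mu \big[\mathbf{1}_E\,M(T_1(J_1),0)\big]=\mu _1(E).
\]

Taking \(E\) to be the whole sample space recovers \(\mathbf{E}\,_\mu [M(T_1(J_1),T_2(J_2))]=1\), and exchanging the roles of the indices gives \(\nu _2=\mu _2\).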

Fix an \(({\mathcal {F}}^2_t)\)-stopping time \(t_2\le T_2(J_2)\). From (4.39), (4.47), and Girsanov’s theorem (cf. Chapter VIII, Section 1 of [23]), under the probability measure \(\nu _{J_1,J_2}\), there is an \(({\mathcal {F}}^1_{t_1}\times {\mathcal {F}}^2_{t_2})_{t_1\ge 0}\)-Brownian motion \(\widetilde{B}_{1,t_2}(t_1)\) such that \(\xi _1(t_1)\), \(0\le t_1\le T_1(J_1)\), satisfies the \(({\mathcal {F}}^1_{t_1}\times {\mathcal {F}}^2_{ t_2})_{t_1\ge 0}\)-adapted SDE:

which together with (4.14), (4.22), and Itô’s formula implies that

Recall that \(\zeta _{1,t_2}(s_1)=\xi _{1,t_2}(v_{1,t_2}^{-1}(s_1))\) and \(\widetilde{h}_{I,1,t_2}(s_1,\cdot )=\widetilde{g}_{I,1,t_2}(v_{1,t_2}^{-1}(s_1),\cdot )\). So from (4.11) and (4.17), there is another Brownian motion \(\widehat{B}_{1,t_2}(s_1)\) such that for \(0\le s_1\le v_{1,t_2}(T_1(J_1))\),

Moreover, the initial value is \( \zeta _{1,t_2}(0)=\xi _{1,t_2}(0)=\widetilde{G}_{I,2,t_2}(0,x_1)=\widetilde{g}_{I,2}(t_2,x_1)\). Thus, after a time-change, \(g_{I,2}(t_2,\beta _{1}(t_1))\), \(0\le t_1\le T_1(J_1)\), is a partial annulus SLE\((\kappa ,\Lambda _1)\) trace in \(\mathbb {A}_{p-t_2}\) started from \(g_{I,2}(t_2,a_{1})\) with marked point \(I_{p-t_2}\circ e^i(\xi _2(t_2))\). This means that, conditioned on \({\mathcal {F}}^2_{t_2}\), after a time-change, \(\beta _1(t_1)\), \(0\le t_1\le T_1(J_1)\), is a partial annulus SLE\((\kappa ,\Lambda _1)\) trace in \(\mathbb {A}_p{\setminus }\beta _{I,2}((0,t_2])\) started from \(a_1\) with marked point \(\beta _{I,2}(t_2)\). Similarly, the above statement holds if the subscripts “\(1\)” and “\(2\)” are exchanged.
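The change of measure in this step is an instance of Girsanov’s theorem: weighting by a positive martingale density changes the drift of the driving Brownian motion. The Python sketch below illustrates only this generic mechanism in the simplest toy setting, a constant drift \(\theta \) with density \(\exp (\theta B_T-\theta ^2T/2)\); it does not implement the density \(M\) or the SDE (4.39), and the function name and parameters are hypothetical. Reweighting samples of \(B_T\) drawn under the original measure should give an average weight close to \(1\) and a weighted mean close to \(\theta T\), consistent with \(B_t-\theta t\) being a Brownian motion under the new measure.

```python
import math
import random


def girsanov_toy(theta, T=1.0, n=100_000, seed=0):
    """Sample B_T ~ N(0, T) under the original measure and reweight each sample
    by the density exp(theta*B_T - theta**2*T/2).

    Returns (average weight, weighted average of B_T). By Girsanov's theorem
    the first should be close to 1 and the second close to theta*T.
    """
    rng = random.Random(seed)  # fixed seed for reproducibility
    total_w = 0.0
    total_wb = 0.0
    for _ in range(n):
        b = rng.gauss(0.0, math.sqrt(T))
        w = math.exp(theta * b - 0.5 * theta**2 * T)
        total_w += w
        total_wb += w * b
    return total_w / n, total_wb / n


mean_w, mean_b = girsanov_toy(theta=0.5)
print(f"average weight = {mean_w:.3f}, reweighted mean of B_T = {mean_b:.3f}")
```

In the argument above, the role of these weights is played by \(M(T_1(J_1),T_2(J_2))\); its boundedness (Proposition 4.1) is what makes the local martingale a true martingale, so that the reweighted measure is a probability measure.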

The joint distribution \(\nu _{J_1,J_2}\) is a local coupling under which the desired properties in the statement of Theorem 4.1 hold up to the stopping times \(T_1(J_1)\) and \(T_2(J_2)\). We can then apply the coupling technique developed in Section 7 of [10] to construct a global coupling from the local couplings for different pairs \((J_1,J_2)\).