Abstract

In this paper we introduce an efficient fat-tail measurement framework that is based on the conditional second moments. We construct a goodness-of-fit statistic that has a direct interpretation and can be used to assess the impact of fat-tails on central data conditional dispersion. Next, we show how to use this framework to construct a powerful normality test. In particular, we compare our methodology to various popular normality tests, including the Jarque–Bera test that is based on third and fourth moments, and show that in many cases our framework outperforms all others, both on simulated and market stock data. Finally, we derive asymptotic distributions for conditional mean and variance estimators, and use this to show asymptotic normality of the proposed test statistic.

1 Introduction

It has recently been shown in Jaworski and Pitera (2016) that, for a normal random variable and a unique ratio close to 20/60/20, the conditional dispersion in the tail sets is the same as in the central set. In other words, if we split a large normal sample into three sets (one corresponding to the worst 20% of outcomes, one to the middle 60%, and one to the best 20%), then the conditional variances on those subsets are approximately the same.

In this paper we show that this property could be used to construct an efficient goodness-of-fit testing framework that has a direct (financial) interpretation. The impact of tail dispersion on central dispersion is a natural measure of tail heaviness and can serve as an alternative to other methods which are typically based on tail limit analysis or higher order moments; see Alexander (2009) and Jarque and Bera (1980). In particular, in contrast to the Jarque–Bera normality test that is based on third and fourth moments, our test relies on the conditional second moments which are often easier to estimate.

Testing for normality has a long history and many remarkable methods have been developed. These include general distribution-fit frameworks such as the Anderson–Darling test, based on the distance between the theoretical and empirical distribution functions (Anderson and Darling 1954), and the Shapiro–Wilk test, which relies on a regression coefficient (Wilk and Shapiro 1965); see Madansky (2012), Henze (2002) or Thode (2002) for a comprehensive overview of normality testing procedures.

Most empirical studies suggest that the normality tests should be chosen carefully as their statistical power varies depending on the context; see e.g. Thadewald and Büning (2007), Romão et al. (2010) or Brockwell and Davis (2016). This is why the existing procedures are constantly refined and new ones are being developed; for example, a recent revision of the Jarque–Bera testing framework based on the second-order analogue of skewness and kurtosis could be found in Desgagné and Lafaye de Micheaux (2018).

The approach presented in this paper draws attention to an interesting and previously unexploited aspect of normal distributions that could be used for efficient normality testing. In particular, we show that our approach outperforms and/or complements multiple benchmark normality testing frameworks when popular (financial) alternative distributions, such as Student’s t or logistic, are considered. More explicitly, we show that our test usually has the best power if one expects that a sample comes from a symmetric distribution which has heavier (or lighter) tails than the normal distribution. We illustrate that with a financial market data example; see Sect. 6 for details.

Finally, it is worth mentioning that the 20/60/20 division leads to a very accurate data clustering when it comes to the tail assessment performed in reference to the central set normality assumption. Consequently, our method could be embedded into data analytics frameworks based on cluster analysis, help to refine data mining techniques, etc; see Romesburg (2004), Kaufman and Rousseeuw (2009) and Hair et al. (2013) for an overview. In fact, the good performance of our test statistic on market data could be linked to a popular financial stylised fact saying that typical financial asset returns can be seen as normal, but the extreme returns are more frequent and with greater magnitude than the ones resulting from the normal fit; see Cont (2001) and Sheikh and Qiao (2010) for details.

This paper is organised as follows: In Sect. 2 we briefly recall the concept of the 20-60-20 Rule, while in Sect. 3 we outline the construction of the test statistic and discuss its basic properties. Section 4 provides a high-level discussion of the test power, and Sect. 5 discusses the mathematical background in detail, including the derivation of the asymptotic distribution of the proposed test statistic. Next, in Sect. 6, we present a simple market data case study and discuss the application of our framework in the financial context. We conclude in Sect. 7. For brevity, we moved the closed-form formula for the normalising constant introduced in Sect. 3 to Appendix A.

2 The 20-60-20 rule for the univariate normal distribution

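Let us assume that X is a normally distributed random variable. We define left, middle, and right partitioning sets of X by

\[ L:=\left( -\infty ,F_X^{-1}(0.2)\right] ,\quad M:=\left( F_X^{-1}(0.2),F_X^{-1}(0.8)\right) ,\quad R:=\left[ F_X^{-1}(0.8),+\infty \right) , \tag{1} \]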

where \(F_X^{-1}(\alpha )\) is the \(\alpha \)-quantile of X. It has been shown in Jaworski and Pitera (2016) that

\[ \sigma ^2_L=\sigma ^2_M=\sigma ^2_R \tag{2} \]

for this unique 20/60/20 ratio, where \(\sigma ^2_A\) denotes the conditional variance of X on set A.

This specific division, together with the associated set of equalities in (2), creates a dispersion balance for the conditioned populations. This property might be linked to the statistical phenomenon known as the 20-60-20 Rule: a principle that is widely recognised by practitioners and used e.g. for efficient management or clustering. In fact, a similar statement is true in the multivariate case: the conditional covariance matrices of a multivariate normal vector are equal to each other when the conditioning is based on the values of any linear combination of the margins and the 20/60/20 ratio is maintained. For more details, see Jaworski and Pitera (2016) and references therein.
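The dispersion balance can be checked numerically. The sketch below is not taken from the paper: it uses the textbook closed-form variance of a truncated standard normal and the Python standard library, and the helper name `truncated_variance` is hypothetical. It evaluates the conditional variances for an exact 20/60/20 split.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1


def truncated_variance(lower_p, upper_p):
    """Variance of a standard normal variable conditioned on lying between
    its lower_p- and upper_p-quantiles (0 <= lower_p < upper_p <= 1)."""

    def boundary_terms(p):
        # Return (x * phi(x), phi(x)) at the p-quantile x; both vanish at +-infinity.
        if p <= 0.0 or p >= 1.0:
            return 0.0, 0.0
        x = STD_NORMAL.inv_cdf(p)
        density = STD_NORMAL.pdf(x)
        return x * density, density

    a_term, a_pdf = boundary_terms(lower_p)
    b_term, b_pdf = boundary_terms(upper_p)
    mass = upper_p - lower_p                 # probability of the conditioning set
    mean = (a_pdf - b_pdf) / mass            # conditional mean
    return 1.0 + (a_term - b_term) / mass - mean ** 2


var_left = truncated_variance(0.0, 0.2)
var_middle = truncated_variance(0.2, 0.8)
var_right = truncated_variance(0.8, 1.0)
print(f"L: {var_left:.4f}  M: {var_middle:.4f}  R: {var_right:.4f}")
```

With the rounded 20/60/20 levels the three values are close but not identical, which is in line with the statement that the balancing ratio is only close to 20/60/20.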

3 Test statistic

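Let us assume we have a sample from X at hand. Then, based on (2), we define a test statistic

\[ N:=\frac{1}{\rho }\left( \frac{{{\hat{\sigma }}}^2_L-{{\hat{\sigma }}}^2_M}{{{\hat{\sigma }}}^2}+\frac{{{\hat{\sigma }}}^2_R-{{\hat{\sigma }}}^2_M}{{{\hat{\sigma }}}^2}\right) \sqrt{n}, \tag{3} \]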

where \({{\hat{\sigma }}}^2\) is the sample variance, \({{\hat{\sigma }}}^2_A\) is the conditional sample variance on set A (where the conditioning is based on empirical quantiles), n is the sample size, and \(\rho \approx 1.8\) is a fixed normalising constant; see Fig. 1 for the R implementation code. We refer to Sect. 5 for more details including rigorous definitions of conditional variance \({{\hat{\sigma }}}^2_A\), constant \(\rho \), etc.
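As a rough illustration, the statistic can be sketched in Python (the paper's own implementation is in R and is not reproduced here). The sketch assumes the values \({\tilde{q}}\approx 0.19809\) and \(\rho \approx 1.7885\) quoted in Sect. 5, floor-based index limits, and division by the subset size; the function names are hypothetical.

```python
import math
import random

Q_TILDE = 0.19809  # partition parameter quoted in Sect. 5
RHO = 1.7885       # normalising constant quoted in Sect. 5


def conditional_variance(sorted_x, alpha, beta):
    """Variance of the order statistics with ranks floor(n*alpha)+1 .. floor(n*beta)."""
    n = len(sorted_x)
    lo, hi = math.floor(n * alpha), math.floor(n * beta)
    subset = sorted_x[lo:hi]  # 0-based slice covering ranks lo+1 .. hi
    mean = sum(subset) / len(subset)
    return sum((v - mean) ** 2 for v in subset) / len(subset)


def n_statistic(sample):
    """Scaled difference between tail and central conditional variances."""
    xs = sorted(sample)
    var_all = conditional_variance(xs, 0.0, 1.0)
    var_l = conditional_variance(xs, 0.0, Q_TILDE)
    var_m = conditional_variance(xs, Q_TILDE, 1.0 - Q_TILDE)
    var_r = conditional_variance(xs, 1.0 - Q_TILDE, 1.0)
    return math.sqrt(len(xs)) * (var_l + var_r - 2.0 * var_m) / (RHO * var_all)


rng = random.Random(7)
normal_sample = [rng.gauss(0.0, 1.0) for _ in range(2000)]
# The difference of two independent Exp(1) draws follows a standard Laplace law.
laplace_sample = [rng.expovariate(1.0) - rng.expovariate(1.0) for _ in range(2000)]
print("normal :", round(n_statistic(normal_sample), 3))
print("Laplace:", round(n_statistic(laplace_sample), 3))
```

Because every variance in the ratio scales by the same factor under an affine change of the data, the value returned for a sample and for any shifted and rescaled copy of it should coincide up to rounding.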

It is not hard to see that under the normality assumption N is a pivotal quantity. Furthermore, in Sect. 5 we show that the distribution of N is asymptotically normal; see Theorem 1 therein. In Fig. 2, we illustrate this by computing the Monte Carlo density of N under the normality assumption for samples of size 50, 100, and 250.

Fig. 2 The distribution of N under the normality assumption for \(n=50, 100, 250\), based on a strong Monte Carlo sample of size 10,000,000. The obtained empirical density (solid curve) is very close to the standard normal density (dashed curve); the table compares selected empirical quantiles with theoretical normal quantiles

Test statistic N has a clear interpretation: the difference between tail and central conditional variances could be seen as a measure of tail fatness, i.e. the bigger the value of N, the fatter the tails.

To illustrate this we compute the values of N for a larger sample size, \(n=500\), for three different fat-tail distributions and three different slim-tail distributions. For fat-tail comparison we picked logistic, Student’s t with five degrees of freedom, and Laplace distributions, while for slim-tail comparison we considered the generalised normal distribution with shape parameter \(s\in \{2.5, 3, 5\}\); the (standardised) generalised normal density for \(s\in {\mathbb {R}}_{+}\) is given by

\[ f_s(x):=\frac{s}{2\varGamma (1/s)}e^{-|x|^s},\quad x\in {\mathbb {R}}; \tag{4} \]

we refer to Nadarajah (2005) or Tumlinson et al. (2016) for more details. The results presented in Fig. 3 confirm that the behaviour of N is as expected.
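For readers who want to reproduce such experiments, generalised normal variates can be simulated with the standard library alone. The sketch below is an illustration rather than the authors' procedure: it assumes a unit-scale density proportional to \(e^{-|x|^s}\) and relies on the standard fact that \(|X|^s\) then follows a Gamma distribution with shape \(1/s\); the function names are hypothetical.

```python
import math
import random


def sample_generalised_normal(shape, size, rng):
    """Draw `size` variates with density proportional to exp(-|x|**shape)."""
    draws = []
    for _ in range(size):
        # |X|**shape ~ Gamma(1/shape, 1), so |X| is the shape-th root of a Gamma draw.
        magnitude = rng.gammavariate(1.0 / shape, 1.0) ** (1.0 / shape)
        sign = 1.0 if rng.random() < 0.5 else -1.0
        draws.append(sign * magnitude)
    return draws


def generalised_normal_variance(shape):
    """Variance of the unit-scale density: Gamma(3/s) / Gamma(1/s)."""
    return math.gamma(3.0 / shape) / math.gamma(1.0 / shape)


rng = random.Random(11)
raw = sample_generalised_normal(5.0, 20000, rng)
scale = math.sqrt(generalised_normal_variance(5.0))
standardised = [x / scale for x in raw]  # rescaled to (theoretical) unit variance
empirical_variance = sum(x * x for x in standardised) / len(standardised)
print(f"empirical variance after rescaling: {empirical_variance:.3f}")
```

Dividing by the theoretical standard deviation mirrors the rescaling to zero mean and unit variance mentioned for the boxplots.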

Based on the values of statistic N, one can construct a one-sided or two-sided statistical test with normality (\(N=0\)) as a null hypothesis. For brevity, we refer to such a test as the N normality test or simply the N test.

Fig. 3 Boxplots of N for samples from different distributions. The results for three fat-tailed distributions (logistic, Student’s t with \(v=5\), and Laplace) are presented in the left plot. The results for three slim-tailed distributions (generalised normal with \(s\in \{2.5,3,5\}\)) are presented in the right plot. For transparency, we rescaled all distributions to have zero mean and unit variance, and added the results for the normal distribution. The boxplots are based on a strong Monte Carlo sample of size 10,000, where each simulation is of size \(n=500\)

4 Power of the test

In this section we check the power of the proposed N test in a controlled environment. We focus on symmetric distributional alternatives (used e.g. in finance) when one wants to abandon the normality assumption due to fat-tail or slim-tail phenomena. Namely, we consider the Cauchy distribution, the Logistic distribution, the Laplace distribution, the Student’s t distribution with \(v\in \{2, 5, 10, 20, 30\}\) degrees of freedom parameter, and the generalised normal distribution (GN) with the shape parameter \(s\in \{1.5,2.5,3,5,10\}\). Note that GN with \(s=1\) and \(s=2\) correspond to Laplace and normal distribution, respectively; see (4) for the GN density definition. In all cases, the location parameter is set to 0 and the scale parameter is set to 1.

For completeness, we compare the N test results with well-established benchmark normality tests: the Jarque–Bera test, the Anderson–Darling test, and the Shapiro–Wilk test. It should be noted that, in contrast to these frameworks, statistic N allows one to consider a specific heavy-tail (or slim-tail) alternative, i.e. positive (or negative) values of N point to a heavy-tail (or slim-tail) alternative hypothesis. Consequently, we decided to construct a right-sided (or left-sided) critical region for the fat-tailed (or slim-tailed) distributions. Nevertheless, for completeness, we include the results for two-sided critical regions in all cases.

For all alternative distribution choices we consider four different sample sizes, i.e. \(n=20,50,100,250\). For each n, we simulate a strong Monte Carlo sample of size 2,000,000 and check for what proportion of simulations the tests reject normality at significance level \(\alpha =5\%\).

All computations are performed in R 3.5.2. For benchmark normality testing we use multiple add-on R packages including gnorm (for GN simulation), stats (for the Shapiro–Wilk test), nortest (for the Anderson–Darling test), and tseries (for the Jarque–Bera test). For better comparability, we used test-wise simulated rejection thresholds instead of the theoretical p-values returned by R functions; for these computations, we used a large strong Monte Carlo sample of size 10,000,000. In particular, note that while the Jarque–Bera test statistic has an asymptotic \(\chi ^2\) distribution (with 2 degrees of freedom) under normality, this approximation may be inaccurate for small samples and lead to non-meaningful (non-adjusted) p-values.
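The idea of simulated rejection thresholds can be sketched as follows. This is a simplified Python illustration, not the R code used for the reported results: the sample excess kurtosis serves as a stand-in statistic, the replication counts are far smaller than those in the study, and all function names are hypothetical.

```python
import random


def excess_kurtosis(sample):
    """Stand-in statistic (not the paper's N): sample kurtosis minus 3."""
    n = len(sample)
    mean = sum(sample) / n
    m2 = sum((v - mean) ** 2 for v in sample) / n
    m4 = sum((v - mean) ** 4 for v in sample) / n
    return m4 / (m2 * m2) - 3.0


def simulated_threshold(statistic, n, alpha, reps, rng):
    """Empirical (1 - alpha)-quantile of `statistic` over normal samples of size n."""
    values = sorted(statistic([rng.gauss(0.0, 1.0) for _ in range(n)])
                    for _ in range(reps))
    return values[min(reps - 1, int((1.0 - alpha) * reps))]


def rejection_rate(statistic, sampler, n, threshold, reps, rng):
    """Share of simulated samples whose statistic exceeds the threshold."""
    hits = sum(statistic([sampler(rng) for _ in range(n)]) > threshold
               for _ in range(reps))
    return hits / reps


def laplace(rng):
    # The difference of two independent Exp(1) draws is standard Laplace.
    return rng.expovariate(1.0) - rng.expovariate(1.0)


def normal(rng):
    return rng.gauss(0.0, 1.0)


rng = random.Random(2024)
cutoff = simulated_threshold(excess_kurtosis, n=100, alpha=0.05, reps=2000, rng=rng)
size = rejection_rate(excess_kurtosis, normal, n=100, threshold=cutoff, reps=2000, rng=rng)
power = rejection_rate(excess_kurtosis, laplace, n=100, threshold=cutoff, reps=2000, rng=rng)
print(f"threshold={cutoff:.3f}  size={size:.3f}  power vs Laplace={power:.3f}")
```

Calibrating the threshold by simulation keeps the empirical size close to the nominal level at every sample size, so power figures for different tests are compared on an equal footing.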

It should be noted that our framework specification is consistent with the one presented in Desgagné and Lafaye de Micheaux (2018), where a comprehensive comparison of normality tests is made. In particular, the results presented in Appendix C therein are perfectly consistent with the results presented here (for all benchmark tests).

For transparency, we have decided to consider the fat-tail and slim-tail cases separately. The results for fat-tailed distributions are presented in Table 1. The right-sided N test shows the best performance for almost all considered distributions. The fatter the tails, the bigger the absolute difference between the power of the N test and the power of the JB test, which could be considered the second-best choice. To check whether test statistic N brings some novel results, we decided to check the proportion of simulations on which the normality assumption was rejected uniquely by N among all considered tests; for comparison purposes, we checked the same for all other tests. The results for three selected distributions are presented in Table 2. It can be observed that the unique rejection proportion for test N is the highest among all tests, which points to the fact that statistic N takes into account sample properties that are not exploited by other tests. Finally, it should be noted that the performance of the two-sided N test is also quite good: the test outperforms both AD and SW in most of the considered cases.

The results for slim-tailed distributions are presented in Table 3. Both the left-sided and the two-sided tests based on the N statistic substantially outperform all other tests on all datasets. This suggests that the proposed framework is also suitable for assessing slim-tailed distributions.

5 Mathematical framework and asymptotic results

In this section, we provide the explicit formulas for the conditional variance estimators, study their asymptotic behaviour, and show that N is asymptotically normal.

First, we introduce the basic notation and provide more explicit formulas for sets L, M, and R that were given in Sect. 2; see (1).

We assume that \(X\sim {\mathcal {N}}(\mu ,\sigma )\) for mean parameter \(\mu \) and standard deviation parameter \(\sigma \). We use \(F_X\) to denote the distribution of X, \(\varPhi \) to denote the standard normal distribution, and \(\phi \) to denote the standard normal density. Following the usual convention, for any \(n\in {\mathbb {N}}\), we use \((X_1, \ldots , X_n)\) to denote the random sample from X and for \(i=1,\ldots , n\), we use \(X_{(i)}\) to denote the sample ith order statistic.

For fixed partition parameters \(\alpha ,\beta \in {\mathbb {R}}\), where \(0\le \alpha <\beta \le 1\), we define the conditioning set

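For brevity and with slight abuse of notation, we often write A instead of \(A[\alpha ,\beta ]\). Then, the explicit formulas for sets L, M, and R given in (1) are

\[ L:=A[0,{\tilde{q}}],\quad M:=A[{\tilde{q}},1-{\tilde{q}}],\quad R:=A[1-{\tilde{q}},1], \tag{5} \]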

where \({\tilde{q}}:=\varPhi (x),\) and x is the unique negative solution of the equation

\[ -x\varPhi (x)-\phi (x)\left( 1-2\varPhi (x)\right) =0. \tag{6} \]

The approximate value of \({\tilde{q}}\) is 0.19809; we refer to (Jaworski and Pitera 2016, Lemma 3.3) for details. Note that (6) could be seen as a specific form of differential equation \(-xy-y'(1-2y)=0\), where \(y(x):=\varPhi (x)\); this could be used to determine similar ratios for other distributions.
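The quoted value of \({\tilde{q}}\) can be reproduced numerically. Substituting \(y=\varPhi \) and \(y'=\phi \) into the differential-equation form above gives \(-x\varPhi (x)-\phi (x)(1-2\varPhi (x))=0\); the sketch below solves it by bisection with the Python standard library. The bracketing interval \([-2,-0.1]\) is an assumption chosen by inspecting the sign of the left-hand side, and the function names are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def balance_equation(x):
    """Left-hand side of -x*Phi(x) - phi(x)*(1 - 2*Phi(x)) = 0."""
    cdf = STD_NORMAL.cdf(x)
    return -x * cdf - STD_NORMAL.pdf(x) * (1.0 - 2.0 * cdf)


def solve_negative_root(lower=-2.0, upper=-0.1, tol=1e-12):
    """Bisection on an interval where the left-hand side changes sign."""
    f_lower = balance_equation(lower)
    while upper - lower > tol:
        mid = 0.5 * (lower + upper)
        f_mid = balance_equation(mid)
        if (f_mid < 0.0) == (f_lower < 0.0):
            lower, f_lower = mid, f_mid   # root lies in the upper half
        else:
            upper = mid                   # root lies in the lower half
    return 0.5 * (lower + upper)


x_root = solve_negative_root()
q_tilde = STD_NORMAL.cdf(x_root)
print(f"x = {x_root:.5f}, q_tilde = {q_tilde:.5f}")
```

Note that \(x=0\) is a trivial root of the same equation, which is why the search is restricted to strictly negative values.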

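Next, we give the exact definition of the conditional sample variance. For a fixed set A, where \(A=A[\alpha ,\beta ]\), the conditional variance estimator on the set A is given by

\[ {\hat{\sigma }}^2_A:=\frac{1}{[n\beta ]-[n\alpha ]}\sum _{i=[n\alpha ]+1}^{[n\beta ]}\left( X_{(i)}-{\overline{X}}_A\right) ^2, \tag{7} \]

where \( [x]:=\max \{k\in {\mathbb {Z}}:k\le x\} \) denotes the floor of \(x\in {\mathbb {R}}\) and

\[ {\overline{X}}_A:=\frac{1}{[n\beta ]-[n\alpha ]}\sum _{i=[n\alpha ]+1}^{[n\beta ]}X_{(i)} \tag{8} \]

is the conditional sample mean. In particular, we set \( {\hat{\sigma }}^2:={\hat{\sigma }}^2_{A[0,1]}. \) Recall that the test statistic N is given by

\[ N:=\frac{1}{\rho }\left( \frac{{\hat{\sigma }}^2_L-{\hat{\sigma }}^2_M}{{\hat{\sigma }}^2}+\frac{{\hat{\sigma }}^2_R-{\hat{\sigma }}^2_M}{{\hat{\sigma }}^2}\right) \sqrt{n}, \tag{9} \]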

where the normalising constant \(\rho \) in (9) is approximately equal to 1.7885; we refer to Appendix A for the closed form formula for \(\rho \). Now, we are ready to state the main result of this section, i.e. Theorem 1.

Theorem 1

Let \(X\sim N(\mu ,\sigma )\). Then,

\[ N\xrightarrow {\,d\,}{\mathcal {N}}(0,1),\quad n\rightarrow \infty , \]

where N is given in (9), and \(\rho \) is a fixed normalising constant independent of \(\mu \), \(\sigma \), and n.

Before we present the proof of Theorem 1 let us introduce a series of Lemmas and additional notation; proof techniques are partially based on those introduced in Stigler (1973). To ease the notation, for a fixed set A, where \(A=A[\alpha ,\beta ]\), we define

Additionally, we set

where \(\mathbb {1}_{C}\) is the indicator function of set C. It is useful to note that \(A_n\) and \(B_n\) follow the binomial distributions \(B(n,\alpha )\) and \(B(n,\beta )\), respectively; note that for \(\alpha =0\) and \(\beta =1\) the distributions are degenerate with \(A_n\equiv 0\) and \(B_n \equiv n\).

Finally, for any sequence \((a_i)\) we introduce the notation of the directed sum that is given by

In Lemma 1, we show the consistency of the conditional sample expectation. Note that the statement of Lemma 1 does not explicitly rely on the normality assumption. In fact, the proof is true under very weak conditions imposed on X (e.g. continuity of the distribution function of X); a similar statement is true for the other lemmas presented in this section. Also, it should be noted that Lemmas 1 and 2 show the consistency and asymptotic distribution of the standard non-parametric Expected Shortfall estimator; see e.g. McNeil et al. (2010) for details.

Lemma 1

For any \(A=A[\alpha ,\beta ]\), it follows that \( {\overline{X}}_{A}\xrightarrow {\,{\mathbb {P}}\,}\mu _A,\quad n\rightarrow \infty . \)

Proof

Let \(A=A[\alpha ,\beta ]\). For any \(n\in {\mathbb {N}}\), we get

Now, we show that

Due to the consistency of the empirical quantiles, we get \(X_{([n\alpha ])}\xrightarrow {{\mathbb {P}}}a\) and \(X_{(A_n)}\xrightarrow {{\mathbb {P}}}a\), as \(n\rightarrow \infty \). Thus, using inequality

to prove (10), it is sufficient to show that \( \left| \frac{A_n-[n\alpha ]}{m_n}\right| \xrightarrow {\,{\mathbb {P}}\,}0. \) Noting that

where

and, by the Law of Large Numbers, \( \left( \tfrac{1}{n}A_n-\alpha \right) \xrightarrow {\,{\mathbb {P}}\,}0, \) we conclude the proof of (10). The proof of

is similar to the proof of (10) and is omitted for brevity.

Next, observe that

Consequently, noting that \( \mu _A=\frac{{\mathbb {E}}[X\mathbb {1}_{\{X\in A\}}]}{\beta -\alpha }, \) and using the Law of Large Numbers, we get

Combining (10), (12), and (13), we conclude the proof. \(\square \)

Next, we focus on the asymptotic distribution of the conditional sample mean; note that Lemma 2 is a slight modification of the result of Stigler (1973) for trimmed means. For completeness, we present the full proof.

Lemma 2

For any \(A=A[\alpha ,\beta ]\), it follows that

for some constant \(0<\eta _A<\infty \).

Proof

For brevity, we assume that \(\alpha >0\) and \(\beta <1.\) The remaining degenerate cases could be treated in a similar manner. Let \(A=A[\alpha ,\beta ]\). Define

As in the proof of Lemma 1, observe that

Now, we show that

Due to the consistency of the empirical quantiles, we get

as \(n\rightarrow \infty \). Thus, using inequality

it is sufficient to show that \(\frac{A_n-[n\alpha ]}{m_n/\sqrt{n}}\) converges in distribution to some non-degenerate distribution. Note that

where

and, by the Central Limit Theorem applied to \(A_n\sim B(n,\alpha )\), we get

Thus, using Slutsky’s Theorem (see e.g. Ferguson 1996, Theorem 6’), we get

which concludes the proof of (15). Similarly, one can show that

Combining (15) with (17), and noting that

we can rewrite (14) as

where \(r_n\xrightarrow {{\mathbb {P}}}0\). Next, we have

where for \(i=1,\ldots ,n\), we set

Finally, noting that for \(n\rightarrow \infty \) we get \( \frac{n(\beta -\alpha )}{m_n}\xrightarrow {\,{\mathbb {P}}\,}1, \) and combining the Central Limit Theorem applied to \((Z^A_i)\) with Slutsky’s Theorem, we conclude the proof; note that \((Z^A_i)\) are i.i.d. with zero mean and finite variance. \(\square \)

Next, we show that for the conditional variance estimator one can substitute the sample mean with the true mean without impacting the asymptotics. For any A, where \(A=A[\alpha ,\beta ]\), the conditional variance estimator with known mean is given by

Lemma 3

For any \(A=A[\alpha ,\beta ]\), it follows that \(\sqrt{n}\left( {\hat{\sigma }}^2_{A}-{\hat{s}}^2_{A}\right) \xrightarrow {\,{\mathbb {P}}\,}0\), \(n\rightarrow \infty \).

Proof

As in the proof of Lemma 2, we focus on the case \(0<\alpha<\beta <1\). Let \(A=A[\alpha ,\beta ]\) and note that

where the last summand equals 0 since

Consequently, we get \( \sqrt{n}\left( {\hat{s}}^2_{A}-{\hat{\sigma }}^2_{A}\right) =\sqrt{n}\left( {\overline{X}}_{A}-\mu _A\right) ^2. \) Thus, using Lemma 1 combined with Lemma 2, we conclude the proof. \(\square \)

Now, we study the asymptotic behaviour of the conditional variance estimator; this is a key lemma that will be used in the proof of Theorem 1. Moreover, this result may be of independent interest since it allows one to construct the asymptotic confidence interval for the conditional variance.

Lemma 4

For any \(A=A[\alpha ,\beta ]\) it follows that

where

Proof

Due to Lemma 3, it is enough to consider \({\hat{s}}^2_{A}\) instead of \({\hat{\sigma }}^2_{A}\). For

we get

By the arguments similar to the ones presented in the proof of Lemma 2, we get

and

Thus, recalling (18), we can rewrite (20) as

where \(r_n\xrightarrow {{\mathbb {P}}}0\). Next, for \(i=1,\ldots ,n\), we set

and by straightforward computations get \( {\mathbb {E}}[Y^A_i]=0\) and \(D^2[Y^A_i]=(\beta -\alpha )^2 \tau ^2_A\). Consequently, noting that

and using the Central Limit Theorem combined with Slutsky’s Theorem, we conclude the proof. \(\square \)

Finally, we are ready to show the proof of Theorem 1.

Proof (of Theorem 1)

For conditioning sets L, M, and R given in (5), we define the associated sequences of random variables \((Y_i^L)\), \((Y_i^M)\), \((Y_i^R)\) using (21). For any \(n\in {\mathbb {N}}\), we set

where \({\tilde{q}}\) is defined via (6). By the multivariate Central Limit Theorem (cf. (Ferguson 1996, Theorem 5)), we get \( Z_n\xrightarrow {\,d\,}{\mathcal {N}}_3(0,\varSigma ), \) where

Now, let \( S_n :=\sqrt{n}\left( {\hat{\sigma }}^2_L+{\hat{\sigma }}^2_R-2{\hat{\sigma }}^2_M\right) . \) Using (2), it is easy to see that

Consequently, by the arguments similar to the ones presented in the proof of Lemma 4 (see (22)), we can rewrite (23) as \( S_n=M_n Z_n +r_n, \) where \(r_n\xrightarrow {\,{\mathbb {P}}\,}0\) and

Next, observing that \(M_n\xrightarrow {{\mathbb {P}}} [1,-2,1]\) and using the multivariate Slutsky’s Theorem (cf. Ferguson 1996, Theorem 6), we get \( S_n\xrightarrow {\,d\,} {\mathcal {N}}(0,\tau ), \) where

Let \( \rho :=\frac{\tau }{\sigma ^2} \) and \( N_n:=\frac{1}{\rho }\frac{S_n}{{{\hat{\sigma }}}^2}. \) Observing that \(\sigma ^2 / {{\hat{\sigma }}}^2\xrightarrow {\,{\mathbb {P}}\,}1\), and again using Slutsky’s Theorem, we get \( N_n\xrightarrow {\,d\,} {\mathcal {N}}(0,1). \) To conclude the proof of Theorem 1, we need to show that \(\rho \) is independent of \(\mu \) and \(\sigma \).

To do so, let us first show that for any A, where \(A=A[\alpha ,\beta ]\), and the corresponding random variable \(Y^A_1\) given in (21), we get

where \({{\tilde{X}}}_1 := (X_1-\mu )/\sigma \) and \( \psi :{\mathbb {R}}\times [0,1]\times [0,1] \rightarrow {\mathbb {R}}\) is some fixed measurable function. From (Johnson et al. 1994, Sect. 13.10.1), we know that

Consequently, the standardised mean \({{\tilde{\mu }}}_A :=\frac{\mu _A-\mu }{\sigma }\) and variance \({{\tilde{\sigma }}}_A^2:=\frac{\sigma ^2_A}{\sigma ^2}\) depend only on \(\alpha \) and \(\beta \). Recalling (21), we get

Combining this with equalities

we conclude the proof of (25).

Now, using (25) for L, M, and R, and expressing \(\varSigma / \sigma ^4\) as

we see that \(\varSigma / \sigma ^4\) does not depend on \(\mu \) and \(\sigma \).

Finally, recalling (24) and the definition of \(\rho \), we conclude the proof of Theorem 1; we refer to Appendix A for the closed-form formula for \(\rho \). \(\square \)

The results presented in this section could be directly applied to various other non-parametric quantile estimators and to the unbiased variance estimators. This is summarised in the next two remarks.

Remark 1

The standard formula for the whole sample (unbiased) variance uses \(n-1\) instead of n in the denominator. In the conditional case, this would be reflected in a different formula for (7), where \(m_n\) is replaced by \(m_n-1\). Note that the statement of Theorem 1 remains valid for the modified conditional variance estimator due to the combination of Slutsky’s Theorem and the fact that \((m_n-1)/m_n\rightarrow 1\).

Remark 2

When defining the conditional sample variance (7), we used \([n\alpha ]+1\) and \([n\beta ]\) as the limits of the summation in (7) and (8). This choice corresponds to the non-parametric \(\alpha \)-quantile estimator given by \(X_{([n\alpha ])}\).

In the literature there exist many different formulas for non-parametric quantile estimators, most of which are bounded by the nearest order statistics; see Hyndman and Fan (1996) for details. It is relatively easy to show that all results presented in this section hold true if we replace \([n\alpha ]\) and \([n\beta ]\) by suitably chosen sequences that correspond to different empirical quantile choices. For completeness, we provide a more detailed description of this statement.

Consider sequences \((\alpha _n)\) and \((\beta _n)\) such that \(n\alpha -\alpha _n\) and \(\beta _n-n\beta \) are bounded, and define \({\tilde{m}}_n:=\beta _n-\alpha _n\). The corresponding conditional sample mean and variance are given by

Then, we can replace \({\overline{X}}_A\) and \({\hat{\sigma }}^2_A\) by \({\bar{X}}_{A}^{*}\) and \({\hat{\sigma }}^{2,*}_{A}\) in Theorem 1 as well as in all lemmas presented in the section.

Instead of showing a full proof, we briefly comment on how to show the consistency of the quantile estimators and on the counterparts of (11) and (16). All proofs could be translated using very similar logic.

First, noting that for some \(k\in {\mathbb {N}}\) we get \(X_{([n\alpha ]-k)}\le X_{(\alpha _n)}\le X_{([n\alpha ]+k)}\) and \(X_{([n\beta ]-k)}\le X_{(\beta _n)}\le X_{([n\beta ]+k)}\), it is straightforward to check that \(X_{(\alpha _n)}\) and \(X_{(\beta _n)}\) are consistent \(\alpha \)-quantile and \(\beta \)-quantile estimators; see e.g. (Serfling 1980, Sect. 2.3). Second, to show the analogue of (11), it is enough to use the boundedness of \(n\alpha -\alpha _n\) and \(\beta _n-n\beta \), and note that \(\frac{n\alpha -\alpha _n}{n}\rightarrow 0\) and \(\frac{\beta _n-n\beta }{n}\rightarrow 0\). Third, to show (16), it is enough to use the boundedness of \(n\alpha -\alpha _n\) and note that for some \(k\in {\mathbb {N}}\) we get \( \frac{\left| n\alpha -\alpha _n\right| }{{\tilde{m}}_n/\sqrt{n}}\le \frac{k}{{\tilde{m}}_n}\sqrt{n}=\frac{n}{{\tilde{m}}_n}\frac{k}{\sqrt{n}} \), which tends to zero because \(n/{\tilde{m}}_n\) stays bounded.

6 Empirical example: case study of market stock returns

In this section we apply the proposed framework to stock market returns performing a basic sanity-check verification. Before we do that, let us comment on the connection between the 20-60-20 Rule and financial time series.

Assuming that X describes financial asset return rates, we can split the population using the 20/60/20 ratio and check the behaviour of returns within each subset. If non-normal perturbations are observed only for extreme events, the 20/60/20 break might identify the regime switch and provide a good spatial clustering; this could be linked to a popular financial stylised fact saying that average financial asset returns tend to be normal, but the extreme returns are not; see Cont (2001) and Sheikh and Qiao (2010) for details. It should be emphasised that, to the best of the authors’ knowledge, the link between this property and data non-normality has not been discussed in the literature before.

The easiest way to verify this hypothesis is to take stock return samples for different periods, make the quantile–quantile plots (with standard normal as a reference distribution) and check if the clustering is accurate. In Fig. 4, we present exemplary results for two major US stocks, namely GOOGL and AAPL, and two major stock indices, namely S&P500 and DAX; we took time series of length 250 for different time intervals spanning the period from 10/2015 to 01/2018.
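The coordinates behind such a quantile–quantile plot, together with the 20/60/20 labels, can be computed as in the following sketch. It is only an illustration on simulated data: the plotting position \((i-0.5)/n\), the standardisation by the sample mean and standard deviation, and the function name are assumptions, not details taken from the paper.

```python
import random
from statistics import NormalDist, mean, pstdev


def qq_points_with_clusters(returns, q=0.2):
    """Return (theoretical quantile, standardised observation, label) triples."""
    n = len(returns)
    centre, spread = mean(returns), pstdev(returns)
    tail_size = int(n * q)  # number of observations in each tail set
    std_normal = NormalDist()
    points = []
    for rank, value in enumerate(sorted(returns), start=1):
        prob = (rank - 0.5) / n  # plotting position (an assumed convention)
        if rank <= tail_size:
            label = "L"
        elif rank > n - tail_size:
            label = "R"
        else:
            label = "M"
        points.append((std_normal.inv_cdf(prob), (value - centre) / spread, label))
    return points


rng = random.Random(3)
simulated_returns = [rng.gauss(0.0, 0.01) for _ in range(250)]  # placeholder data
points = qq_points_with_clusters(simulated_returns)
counts = {lab: sum(1 for p in points if p[2] == lab) for lab in ("L", "M", "R")}
print(counts)
```

Plotting the first coordinate against the second, coloured by label, gives a picture of the kind described for Fig. 4; points in the M group lying on the diagonal while L and R points drift away would indicate the pattern discussed in the text.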

From Fig. 4 we see that this division is surprisingly accurate: a very good normal fit is observed in the M set (middle 60% of observations), while the fit in the tail sets L and R (bottom and top \(20\%\) of observations) is bad. By taking different sample sizes, different time-horizons, and different stocks we can confirm that this property is systematic, i.e. the results are almost always similar to the ones presented in Fig. 4.

While the presence of fat-tails in asset return distributions is a well-known observation in the financial world, it is quite surprising to note that the non-normal behaviour could be seen for approximately 40% of data. Also, test statistic N can be used to formally quantify this phenomenon and to measure tail heaviness: the bigger the conditional standard deviation in the tails (in reference to the central part), the fatter the tails.

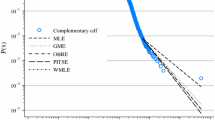

In the following, we focus on assessing the performance of the test statistic N on market data. We perform a simple empirical study and take returns of all stocks listed in S&P500 index on 16.06.2018 that have full historical data in the period from 01.2000 to 05.2018. This way we get full data (4610 daily adjusted close price returns) for 381 stocks. Next, for a given sample size \(n\in \{50, 100, 250\}\) we split the returns into disjoint sets of length n, and for each subset we compare the value of N with the corresponding empirical quantiles presented in Fig. 2. More precisely, using N we perform a right-sided statistical test and reject normality (null) hypothesis if the computed value is greater than the empirical value \(F^{-1}_{n}(1-\alpha )\), for \(\alpha \in \{1\%, 2.5\%, 5\%\}\).
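The splitting step can be sketched as below. The text does not say how an incomplete trailing block is handled, so dropping it is an assumption of this sketch, and the function name is hypothetical.

```python
def disjoint_blocks(returns, block_size):
    """Split a return series into consecutive non-overlapping blocks;
    an incomplete trailing block is dropped (an assumption of this sketch)."""
    full_blocks = len(returns) // block_size
    return [returns[i * block_size:(i + 1) * block_size] for i in range(full_blocks)]


series_length = 4610  # daily returns per stock, as quoted in the text
placeholder_series = list(range(series_length))  # stands in for real returns
blocks_per_stock = {
    block_size: len(disjoint_blocks(placeholder_series, block_size))
    for block_size in (50, 100, 250)
}
print(blocks_per_stock)
```

Under this assumption, \(n=250\) gives 18 blocks per stock, i.e. 6858 subsamples for 381 stocks, which is roughly consistent with 262 samples corresponding to about \(3.8\%\) of the population.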

To assess test performance, we compare the results with other benchmark normality tests: Jarque–Bera test, Anderson–Darling test, and Shapiro–Wilk test. While the non-normality of returns is a well-known fact, and all testing frameworks should show good performance, we want to check if our framework leads to some new interesting results. We check the normality hypothesis and compute two supplementary metrics that are used for performance assessment:

- Statistic T gives the total rejection ratio of a given test. It corresponds to the proportion of data on which the normality assumption was rejected at a given significance level; it is the ratio of rejected data subsamples to all data subsamples.

- Statistic U gives the unique rejection ratio of a given test. It corresponds to the proportion of data on which the normality assumption was rejected at a given significance level only by the considered test (among all four tests); it is the ratio of uniquely rejected data subsamples to all data subsamples.

The combined results for all values of n and \(\alpha \) are presented in Table 4.
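The two metrics can be computed from a table of rejection decisions, as in the sketch below. The input layout (a dictionary mapping each test name to one boolean per subsample) and the toy data are illustrative assumptions, not results from the study.

```python
def rejection_ratios(rejections):
    """`rejections` maps a test name to a list of booleans, one per subsample.
    Returns the total (T) and unique (U) rejection ratios for every test."""
    names = list(rejections)
    total = len(rejections[names[0]])
    ratios = {}
    for name in names:
        rejected = rejections[name]
        # A unique rejection: this test rejects and no other test does.
        unique = sum(
            rejected[i] and not any(rejections[other][i] for other in names if other != name)
            for i in range(total)
        )
        ratios[name] = {"T": sum(rejected) / total, "U": unique / total}
    return ratios


toy_decisions = {  # made-up decisions for five subsamples
    "N":  [True, True, False, True, False],
    "JB": [True, False, False, False, False],
    "AD": [True, False, False, False, True],
    "SW": [False, False, False, False, False],
}
ratios = rejection_ratios(toy_decisions)
for name, values in ratios.items():
    print(name, values)
```

By construction U can never exceed T for the same test, and the U values of different tests count disjoint sets of subsamples.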

One can see that the statistic N performs very well and gives the best results for all choices of n. Surprisingly, our testing framework allows one to detect non-normal behaviour in cases when other tests fail: the outcomes of measure U are material in all cases. For example, for \(n=50\) and \(\alpha =5\%\), the value of U was equal to \(5.1\%\); this corresponds to almost \(11\%\) of all rejected samples. The results are especially striking for \(n=250\), where the normality assumption was rejected in almost all cases (ca. 90%). While one might think that for such a big sample size the three classical tests should detect all abnormalities, our test still uniquely rejected normality in multiple cases. For \(\alpha =1\%\), normality was rejected for an additional 262 samples (\(3.8\%\) of the population). For transparency, in Fig. 5 we show an exemplary data subset for which this happened.

7 Concluding remarks and other applications

In this paper we have shown that the test statistic N introduced in (3) could be used to measure the heaviness of the tails in reference to the central part of the distribution and could serve as an efficient goodness-of-fit normality test statistic. Test statistic N is based on the conditional second moments, performs quite well on market financial data, and allows one to detect non-normal behaviour where other benchmark tests fail.

As mentioned in the introduction, most empirical studies suggest that normality tests should be chosen carefully as their statistical power varies depending on the context. Our proposal proves to have the best power in cases when the true distribution is assumed to be symmetric and to have tails that are fatter or slimmer than the normal one. It should be noted that our test is in fact based on an implicit distribution symmetry assumption. Indeed, in (3), the impacts of the left and right tails are taken with the same weight. Nevertheless, this could be easily generalised, e.g. by considering only one of the tail variances; we comment on that later.

In Theorem 1 we proved that the asymptotic distribution of N is normal under the normality null hypothesis. This allows us to study the shape of rejection intervals for sufficiently large samples. To obtain this result, in Lemma 4 we derived the asymptotic distribution of the conditional sample variance.

Also, we showed that the 20-60-20 Rule explains the financial stylised fact related to tail non-normal behaviour and provides surprisingly accurate clustering of asset return time series. Notably, non-normality is visible for almost 40% of the observations.

In summary, we believe that tail-impact tests based on the conditional second moments are very promising and provide a nice alternative to the classical framework based e.g. on the third and fourth moments.

For example, the multivariate extension of the test statistic N could be defined using the results presented in Jaworski and Pitera (2016), e.g. to assess the adequacy of using the correlation structure for dependence modelling. Also, this could be extended to any multivariate elliptical distribution using the results from Jaworski and Pitera (2017).

The construction of N shows how to use conditional second moments for statistical purposes. In fact, one might introduce various other statistics that test underlying distributional assumptions. Let us present a couple of examples:

- We can test only the (left) low-tail impact on the central part by considering one of the test statistics

  $$\begin{aligned} N_1:= \left( \frac{{{\hat{\sigma }}}^2_L-{{\hat{\sigma }}}^2_M}{{{\hat{\sigma }}}^2}\right) \sqrt{n},\quad N_2:= \left( \frac{{{\hat{\sigma }}}^2_L-{{\hat{\sigma }}}^2_M}{{{\hat{\sigma }}}_{M}^2}\right) \sqrt{n}. \end{aligned}$$

- For any quantile-based conditioning sets A and B, and any elliptical distribution, one can introduce the statistic

  $$\begin{aligned} N_3 := \left( \frac{{{\hat{\sigma }}}^2_A}{{{\hat{\sigma }}}^2_B}-\lambda \right) \sqrt{n}, \end{aligned}$$

  where \(\lambda \in {\mathbb {R}}\) is a constant depending on the quantiles that define the conditioning sets and on the underlying distribution. Assuming that \(A=L\) and \(B={\mathbb {R}}\) (whole space), we get the proportion between the tail dispersion and the overall dispersion. In this specific case, in the normal framework, we get

  $$\begin{aligned} \lambda =1-\tfrac{\varPhi ^{-1}(0.2)\phi (\varPhi ^{-1}(0.2))}{0.2}-\tfrac{(\phi (\varPhi ^{-1}(0.2)))^2}{0.2^2}; \end{aligned}$$

  see (Jaworski and Pitera 2016, Sect. 3) for details.
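As a quick numerical illustration, the constant \(\lambda \) for \(A=L\) and \(B={\mathbb {R}}\) can be evaluated directly from the formula above. The sketch below uses only the Python standard library; the function name `tail_to_total_variance_ratio` and the parameter `q` are our own labels and do not come from the paper.

```python
from statistics import NormalDist


def tail_to_total_variance_ratio(q: float = 0.2) -> float:
    """Variance of a standard normal conditioned on its lowest q-fraction,
    divided by the unconditional variance (which equals 1)."""
    std = NormalDist()            # standard normal: mean 0, sd 1
    a = std.inv_cdf(q)            # a = Phi^{-1}(q)
    hazard = std.pdf(a) / q       # phi(a) / Phi(a)
    return 1.0 - a * hazard - hazard ** 2


lam = tail_to_total_variance_ratio(0.2)
```

For \(q=0.2\) this evaluates to roughly 0.22, i.e. the lower 20% tail of a normal sample carries about a fifth of the overall variance.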

Note that under the normality assumption all proposed statistics are pivotal quantities, which facilitates easy and efficient hypothesis testing; the asymptotic distribution for all statistics could be derived using reasoning similar to that presented in Theorem 1.
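The statistics \(N_1\), \(N_2\), and \(N_3\) (with \(A=L\), \(B={\mathbb {R}}\)) can be sketched as follows. This is only an illustration under stated assumptions: the conditioning sets are taken as the lowest 20% and the middle 60% of the ordered sample, the split index is `int(n * q)`, and plain population variances are used on each subset; the estimators used in the paper may differ in these details. The helper name `tail_statistics` is hypothetical.

```python
import math
import random
from statistics import NormalDist, pvariance


def tail_statistics(sample, q=0.2):
    """Return (N1, N2, N3) for the lower tail L, the central set M, and the whole sample."""
    x = sorted(sample)
    n = len(x)
    k = int(n * q)                      # assumed size of each tail subset
    low, mid = x[:k], x[k:n - k]        # lowest 20% and middle 60% (for q = 0.2)

    var_l = pvariance(low)              # conditional sample variance on L
    var_m = pvariance(mid)              # conditional sample variance on M
    var_all = pvariance(x)              # unconditional sample variance
    root_n = math.sqrt(n)

    n1 = (var_l - var_m) / var_all * root_n
    n2 = (var_l - var_m) / var_m * root_n

    # lambda for A = L, B = R under normality.
    a = NormalDist().inv_cdf(q)
    hazard = NormalDist().pdf(a) / q
    lam = 1.0 - a * hazard - hazard ** 2
    n3 = (var_l / var_all - lam) * root_n
    return n1, n2, n3


# Location-scale invariance check on simulated normal data.
rng = random.Random(0)
base = [rng.gauss(0.0, 1.0) for _ in range(1000)]
shifted = [5.0 + 3.0 * v for v in base]
stats_base = tail_statistics(base)
stats_shifted = tail_statistics(shifted)
```

Because every statistic is a ratio of variances computed on order-statistic subsets, an affine transformation with positive slope leaves the values unchanged up to rounding, which is the practical meaning of the pivotal property here.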

Notes

In fact, this equality is true for the ratio very close to 20/60/20, i.e. for upper and lower quantiles equal to approximately 0.198. For transparency, we have decided to use the rounded numbers here; see Sect. 5 for details.
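The value 0.198 can be checked numerically. The sketch below solves, by bisection, for the tail probability at which the conditional variance of a standard normal on the lower tail equals its conditional variance on the symmetric central set; the function names and the bracketing interval are our own choices and are not taken from the paper.

```python
from statistics import NormalDist

STD = NormalDist()  # standard normal


def tail_minus_central_variance(q: float) -> float:
    """Conditional variance on the lower q-tail minus that on the central (1 - 2q) set."""
    a = STD.inv_cdf(q)                  # lower cut-off; the upper cut-off is -a
    phi_a = STD.pdf(a)
    tail = 1.0 - a * phi_a / q - (phi_a / q) ** 2
    central = 1.0 + 2.0 * a * phi_a / (1.0 - 2.0 * q)   # conditional mean is 0 by symmetry
    return tail - central


def balanced_quantile(lo: float = 0.05, hi: float = 0.45, tol: float = 1e-10) -> float:
    """Bisection on (lo, hi); the difference is negative at lo and positive at hi."""
    f_lo = tail_minus_central_variance(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = tail_minus_central_variance(mid)
        if (f_mid < 0.0) == (f_lo < 0.0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


q_balanced = balanced_quantile()
```

The root lies close to 0.198, in line with the rounded 20/60/20 split used in the text.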

Please note that results presented for Asymmetric Power Distribution (APD) with symmetry parameter \(\alpha =0.5\) correspond to results presented for Generalised Normal (GN) distribution; we refer to Section 2 in Desgagné and Lafaye de Micheaux (2018) for details.

The results for other distributions are consistent with those presented in Table 2 and can be obtained from the authors upon request.

Note that for degenerate cases \(\alpha =0\) and \(\beta =1\), we get \(a=-\infty \) and \(b=\infty \), respectively. In those cases, the convention \(0\cdot \infty =0\) should be used.

For \(\alpha =0\) or \(\beta =1\) the convention \(0\cdot \pm \infty =0\) is used.

In particular, this refers to the estimator implemented via the quantile function in R that was used in Fig. 1.

Data were downloaded from Yahoo Finance via the R tidyquant package.

Recall that for \(\alpha =0\) or \(\beta =1\) we follow the convention \(0\cdot \pm \infty =0.\)

References

Alexander C (2009) Market risk analysis, quantitative methods in finance, vol I. Wiley, Hoboken

Anderson TW, Darling DA (1954) A test of goodness of fit. J Am Stat Assoc 49(268):765–769

Brockwell P, Davis R (2016) Introduction to time series and forecasting. Springer, New York

Cont R (2001) Empirical properties of asset returns: stylized facts and statistical issues. Quant Finan 1(2):223–236

Desgagné A, Lafaye de Micheaux P (2018) A powerful and interpretable alternative to the Jarque-Bera test of normality based on 2nd-power skewness and kurtosis, using the Rao’s score test on the APD family. J Appl Stat 45(13):2307–2327

Ferguson T (1996) A course in large sample theory. Springer, Dordrecht

Hair J, Black W, Babin B, Anderson R (2013) Multivariate data analysis: Pearson new international edition. Pearson Education Limited, London

Henze N (2002) Invariant tests for multivariate normality: a critical review. Stat Pap 43(4):467–506

Hyndman RJ, Fan Y (1996) Sample quantiles in statistical packages. Am Stat 50(4):361–365

Jarque CM, Bera AK (1980) Efficient tests for normality, homoscedasticity and serial independence of regression residuals. Econom Lett 6(3):255–259

Jaworski P, Pitera M (2016) The 20-60-20 rule. Discrete Contin Dyn Syst Ser B 21(4)

Jaworski P, Pitera M (2017) A note on conditional covariance matrices for elliptical distributions. Stat Probabil Lett 129:230–235

Johnson NL, Kotz S, Balakrishnan N (1994) Continuous univariate distributions, vol 1. Wiley, New York

Kaufman L, Rousseeuw PJ (2009) Finding groups in data: an introduction to cluster analysis, vol 344. Wiley, Hoboken

Madansky A (2012) Prescriptions for working statisticians. Springer, New York

McNeil AJ, Frey R, Embrechts P (2010) Quantitative risk management: concepts, techniques, and tools. Princeton University Press, Princeton

Nadarajah S (2005) A generalized normal distribution. J Appl Stat 32(7):685–694

Romão X, Delgado R, Costa A (2010) An empirical power comparison of univariate goodness-of-fit tests for normality. J Stat Comput Simul 80(5):545–591

Romesburg C (2004) Cluster analysis for researchers. Lulu Press, Morrisville

Serfling R (1980) Approximation theorems of mathematical statistics. Wiley series in probability and mathematical statistics. Wiley, New York

Sheikh A, Qiao H (2010) Non-normality of market returns: a framework for asset allocation decision making. J Altern Invest 12(3):8–35

Stigler SM (1973) The asymptotic distribution of the trimmed mean. Ann Stat 1(3):472–477

Thadewald T, Büning H (2007) Jarque-Bera test and its competitors for testing normality—a power comparison. J Appl Stat 34(1):87–105

Thode HC (2002) Testing for normality. Marcel Dekker, New York

Tumlinson S, Keating J, Balakrishnan N (2016) Linear estimation for the extended exponential power distribution. J Stat Comput Simul 86(7):1392–1403

Wilk MB, Shapiro SS (1965) An analysis of variance test for normality (complete samples). Biometrika 52(3–4):591–611

Acknowledgements

Jagiellonian University in Krakow.


Part of the work of the second author was supported by the National Science Centre, Poland, via project 2016/23/B/ST1/00479.

The authors would like to thank the anonymous referees for their helpful comments and suggestions which improved greatly the final manuscript.

Closed-form formula for the normalising constant

In this section, we present the closed-form formula for the normalising constant \(\rho \) from Theorem 1. For brevity, we omit detailed calculations and only present the outcome.

To ease the notation, for any \(\gamma \in [0,1]\), we set \( x_{\gamma } :=\varPhi ^{-1}(\gamma ). \) Then, for any \(A=A[\alpha ,\beta ]\), the standardised second, third, and fourth conditional central moments are given by (see Footnote 8)

Moreover, the standardised conditional mean, conditional variance, and conditional kurtosis are equal to

Also, recall that \({\tilde{q}}=\varPhi (x)\), where x is the unique negative solution of the equation

Now, we are ready to present the closed-form formula for \(\rho \); see Corollary 1.

Corollary 1

The normalising constant \(\rho \) from Theorem 1 is given by

where for \(A\in \{L,M,R\}\) we have

and constants \(C_1\), \(C_2\), and \(C_3\) are given by

Approximately, the value of \(\rho \) is equal to 1.7885.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jelito, D., Pitera, M. New fat-tail normality test based on conditional second moments with applications to finance. Stat Papers 62, 2083–2108 (2021). https://doi.org/10.1007/s00362-020-01176-2

DOI: https://doi.org/10.1007/s00362-020-01176-2