Abstract

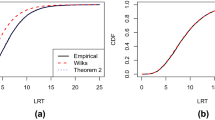

For the special case of balanced one-way random effects ANOVA, it has been established that the generalized likelihood ratio test (LRT) and Wald’s test are largely equivalent for testing the variance component. We extend these results to explore the relationships between Wald’s F test and the LRT for a much broader class of linear mixed models: the generalized split-plot models. In particular, we explore when the two tests are equivalent and prove that, when they are not equivalent, Wald’s F test is more powerful, which makes the LRT inadmissible. We show that inadmissibility arises in realistic situations with common numbers of degrees of freedom. Further, we derive the statistical distribution of the LRT statistic under both the null and alternative hypotheses, \(H_0\) and \(H_1\), where \(H_0\) is the hypothesis that the between variance component is zero. Providing an exact distribution of the LRT statistic in these models will help in calculating more accurate p-values than those traditionally derived from large-sample chi-square mixture approximations.
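In its most common form, for a single variance component tested on the boundary of the parameter space (Self and Liang 1987), the large-sample approximation treats the LRT statistic \(-2\log\Lambda\) under \(H_0\) as a 50:50 mixture of \(\chi^2_0\) (a point mass at zero) and \(\chi^2_1\). The following is a minimal Python sketch of that approximate p-value only, not of the exact distribution derived in the paper; the function names are hypothetical.

```python
import math


def chi2_1_sf(t: float) -> float:
    """Upper-tail probability P(chi^2_1 > t) for t >= 0.

    A chi^2_1 variable is Z**2 with Z ~ N(0, 1), so
    P(Z**2 > t) = P(|Z| > sqrt(t)) = erfc(sqrt(t / 2)).
    """
    return math.erfc(math.sqrt(t / 2.0))


def mixture_pvalue(lrt_stat: float) -> float:
    """Approximate p-value of -2 log(Lambda) under 0.5*chi^2_0 + 0.5*chi^2_1.

    chi^2_0 is a point mass at zero, so for an observed statistic t > 0
    only the chi^2_1 half contributes: p = 0.5 * P(chi^2_1 > t).
    For t == 0 the p-value P(T >= 0) is 1.
    """
    if lrt_stat < 0:
        raise ValueError("-2 log(Lambda) cannot be negative")
    if lrt_stat == 0:
        return 1.0
    return 0.5 * chi2_1_sf(lrt_stat)


# 2.705543 is (to six decimals) the 0.90 quantile of chi^2_1, so the
# mixture p-value is about 0.05 while the naive chi^2_1 p-value is about 0.10.
t_obs = 2.705543
print(mixture_pvalue(t_obs), chi2_1_sf(t_obs))
```

Because half of the null mass sits at zero, the mixture p-value is half the naive \(\chi^2_1\) p-value for any positive observed statistic.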


References

Castellacci G (2012) A formula for the quantiles of mixtures of distributions with disjoint supports. http://ssrn.com/abstract=2055022. Accessed 15 April 2013

Christensen R (1984) A note on ordinary least squares methods for two-stage sampling. J Am Stat Assoc 79:720–72

Christensen R (1987) The analysis of two-stage sampling data by ordinary least squares. J Am Stat Assoc 82:492–498

Christensen R (2011) Plane answers to complex questions: the theory of linear models, 4th edn. Springer, New York

Christensen R (2019) Advanced linear modeling: statistical learning and dependent data, 3rd edn. Springer, New York

Cloud MJ, Drachman BC (1998) Inequalities: with applications to engineering, 1st edn. Springer, New York

Crainiceanu CM, Ruppert D (2004) Likelihood ratio tests in linear mixed models with one variance component. J R Stat Soc Ser B 66:165–185

Cuyt A, Petersen VB, Verdonk B, Waadeland H, Jones WB (2008) Handbook of continued fractions for special functions, pp 319–341

Dutka J (1981) The incomplete beta function: a historical profile. Arch Hist Exact Sci 24:11–29

Graybill FA (1961) An introduction to linear statistical models, vol I. McGraw Hill, New York

Greven S, Crainiceanu CM, Küchenhoff H, Peters A (2008) Restricted likelihood ratio testing for zero variance components in linear mixed models. J Comput Graph Stat 17(4):870–891

Harville DA (1997) Matrix algebra from a statistician’s perspective, 1st edn. Springer, New York

Herbach LH (1959) Properties of model II-type analysis of variance tests, a: optimum nature of the \(F\)-test for model II in the balanced case. Ann Math Stat 30(4):939–959

Johnson RA, Wichern DW (2002) Applied multivariate statistical analysis. Prentice Hall, Upper Saddle River, NJ

Lavine M, Bray A, Hodges J (2015) Approximately exact calculations for linear mixed models. Electron J Stat 9(2):2293–2323

Miller SS, Mocanu PT (1990) Univalence of Gaussian and confluent hypergeometric functions. Proc Am Math Soc 110(2):333–342

Molenberghs G, Verbeke G (2007) Likelihood ratio, score, and Wald tests in a constrained parameter space. Am Stat 61(1):22–27

O’Connor AN (2011) Probability distributions used in reliability engineering. RIAC, Maryland

Qeadan F, Christensen R (2014) New stochastic inequalities involving the F and gamma distributions. J Inequal Spec Funct 5(4):22–33

Scheipl F, Greven S, Küchenhoff H (2008) Size and power of tests for a zero random effect variance or polynomial regression in additive and linear mixed models. Comput Stat Data Anal 52(7):3283–3299

Self SG, Liang KY (1987) Asymptotic properties of maximum likelihood estimators and likelihood ratio tests under nonstandard conditions. J Am Stat Assoc 82(398):605–610

Wiencierz A, Greven S, Küchenhoff H (2011) Restricted likelihood ratio testing in linear mixed models with general error covariance structure. Electron J Stat 5:1718–1734

Yan L, Zhang G (2010) The equivalence between likelihood ratio test and F-test for testing variance component in a balanced one-way random effects model. J Stat Comput Simul 80:443–450

Acknowledgements

We thank the reviewers for the valuable time they spent reviewing the paper.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1

The supplementary material includes (i) a proof of the PPO properties in (15), (ii) an illustration of the proof of Lemma 2, and (iii) a proof of Lemma 3.

1.1 Proof of the PPO properties in (15)

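Firstly, \({\tilde{M}}=M_{*}+M_2\): This result is an immediate consequence of conditions (b) and (c) of Sect. 1.3. In particular, since \(C({\tilde{X}})= C(X_{*}, (I-M_1)X_2)\), the PPO \(\tilde{M}\) onto \(C(\tilde{X})\) is also the PPO onto \(C(X_{*}, (I-M_1)X_2)\), and by the definition of a PPO it can be expanded as in (83).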

The equality in the third line of (83) is due to condition (b): since \(C(X_{*})\subset C(X_1)\) and \((I-M_1)X_1=0\), we have \((I-M_1)X_{*}=0\) and, by transposition, \(X_{*}'(I-M_1)=0\).
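A sketch of how such an expansion can be written out, assuming the standard forms \(M_{*}=X_{*}(X_{*}'X_{*})^{-}X_{*}'\) and \(M_2=(I-M_1)X_2[X_2'(I-M_1)X_2]^{-}X_2'(I-M_1)\) (the PPO onto \(C((I-M_1)X_2)\)), and using the fact that a PPO does not depend on the choice of generalized inverse. This layout is a reconstruction, not necessarily the original display; in it, the third line is where condition (b) removes the off-diagonal blocks:

\[
\begin{aligned}
\tilde{M} &= [X_{*},\,(I-M_1)X_2]\,\big([X_{*},\,(I-M_1)X_2]'[X_{*},\,(I-M_1)X_2]\big)^{-}\,[X_{*},\,(I-M_1)X_2]'\\
&= [X_{*},\,(I-M_1)X_2]\begin{bmatrix} X_{*}'X_{*} & X_{*}'(I-M_1)X_2\\ X_2'(I-M_1)X_{*} & X_2'(I-M_1)X_2\end{bmatrix}^{-}[X_{*},\,(I-M_1)X_2]'\\
&= [X_{*},\,(I-M_1)X_2]\begin{bmatrix} (X_{*}'X_{*})^{-} & 0\\ 0 & [X_2'(I-M_1)X_2]^{-}\end{bmatrix}[X_{*},\,(I-M_1)X_2]'\\
&= X_{*}(X_{*}'X_{*})^{-}X_{*}' + (I-M_1)X_2[X_2'(I-M_1)X_2]^{-}X_2'(I-M_1)\\
&= M_{*}+M_2 .
\end{aligned}
\]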

Secondly, \(M_{*}M_1=M_{*}\): This result is an immediate consequence of condition (b). In particular, since \(C(X_{*})\subset C(X_1)\), we have \(X_{*}=X_1B\) for some matrix B and therefore, by the definition of a PPO, \(M_{*}=X_{*}(X_{*}'X_{*})^{-}X_{*}'=X_1B(B'X_1'X_1B)^{-}B'X_1'\).

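Thus, using \(M_{*}\) from (84) and \(M_1\) from (13), together with \(X_1'M_1=X_1'\) (because \(M_1X_1=X_1\) and \(M_1\) is symmetric), gives \(M_{*}M_1=X_1B(B'X_1'X_1B)^{-}B'X_1'M_1=X_1B(B'X_1'X_1B)^{-}B'X_1'=M_{*}\).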

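Thirdly, \(M_{1}M_2=0\): This result follows directly from multiplying \(M_1\) from (13) and \(M_2\) from (14): \(M_2\), the PPO onto \(C((I-M_1)X_2)\), has \((I-M_1)\) as its leftmost factor, and \(M_1(I-M_1)=M_1-M_1^2=0\).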

Fourthly, \(M=M_1+M_2\): Let \(X=[X_1, X_2]\) and let M be the PPO onto C(X). Since \(M_1M_2=0\), we have \(C(M_1)\perp C(M_2)\), and hence \(M_1+M_2\) is the PPO onto \(C(M_1,M_2)\) by Theorem B.45 of Christensen (2011). Moreover, \(C(M_1,M_2)=C(X_1,(I-M_1)X_2)\) because \(C(M_1)=C(X_1)\) and \(C(M_2)=C((I-M_1)X_2)\). Since the PPO onto a given space is unique, it remains to prove that \(C(X_1,X_2)=C(X_1,(I-M_1)X_2)\). To do so, we use the fact that \(C(A_1) = C(A_2)\) if and only if there exist matrices \(B_1\) and \(B_2\) such that \(A_1 = A_2 B_2\) and \(A_2=A_1 B_1\), as follows.

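\[
[X_1,\,(I-M_1)X_2]=[X_1,\,X_2]\begin{bmatrix} I & -(X_1'X_1)^{-}X_1'X_2\\ 0 & I\end{bmatrix}
\]
and
\[
[X_1,\,X_2]=[X_1,\,(I-M_1)X_2]\begin{bmatrix} I & (X_1'X_1)^{-}X_1'X_2\\ 0 & I\end{bmatrix},
\]
where both identities use \(M_1X_2=X_1(X_1'X_1)^{-}X_1'X_2\).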

That is, \(C(X_1, (I-M_1)X_2)\subset C(X_1, X_2)\) and \(C(X_1, X_2)\subset C(X_1, (I-M_1)X_2)\) so that \(C(X_1,X_2)=C(X_1,(I-M_1)X_2)\) as desired. \(\square \)
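The four PPO properties can also be checked numerically on a small example. The sketch below uses exact rational arithmetic and a hypothetical design (two groups of three observations, one covariate \(X_2\), and \(X_{*}=X_1B\) equal to the intercept, so condition (b) holds); it builds each PPO as \(X(X'X)^{-1}X'\), which assumes full column rank. It is an illustration under these assumptions, not part of the proof.

```python
from fractions import Fraction


def mat(rows):
    """Build a matrix (list of rows) of exact rationals."""
    return [[Fraction(v) for v in row] for row in rows]


def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sub(a, b):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def hstack(a, b):
    """Column-bind [A, B]."""
    return [ra + rb for ra, rb in zip(a, b)]


def inverse(a):
    """Gauss-Jordan inverse of a nonsingular square matrix."""
    n = len(a)
    aug = [row[:] + e for row, e in zip(a, identity(n))]
    for c in range(n):
        p = next(r for r in range(c, n) if aug[r][c] != 0)  # pivot row
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [v / piv for v in aug[c]]
        for r in range(n):
            if r != c and aug[r][c] != 0:
                f = aug[r][c]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]


def ppo(x):
    """PPO onto C(X) for a full-column-rank X: X (X'X)^{-1} X'."""
    xt = transpose(x)
    return matmul(matmul(x, inverse(matmul(xt, x))), xt)


def check_ppo_properties():
    # Hypothetical design: two groups of three, covariate X_2,
    # and X_* = X_1 B (the intercept), so C(X_*) lies inside C(X_1).
    x1 = mat([[1, 0], [1, 0], [1, 0], [0, 1], [0, 1], [0, 1]])
    x2 = mat([[1], [2], [3], [1], [2], [4]])
    b = mat([[1], [1]])
    n = len(x1)
    m1 = ppo(x1)
    m = ppo(hstack(x1, x2))
    r_x2 = matmul(sub(identity(n), m1), x2)  # (I - M_1) X_2
    m2 = ppo(r_x2)
    x_star = matmul(x1, b)
    m_star = ppo(x_star)
    m_tilde = ppo(hstack(x_star, r_x2))      # PPO onto C(X_*, (I - M_1) X_2)
    zero = [[0] * n for _ in range(n)]
    return {
        "Mtilde = M* + M2": m_tilde == add(m_star, m2),
        "M* M1 = M*": matmul(m_star, m1) == m_star,
        "M1 M2 = 0": matmul(m1, m2) == zero,
        "M = M1 + M2": m == add(m1, m2),
    }


print(check_ppo_properties())
```

Exact fractions are used so that each identity is checked as an equality rather than up to floating-point tolerance.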

Appendix 2

2.1 Illustration of the proof of Lemma 2

Let \(\lambda =-\,\frac{a}{b}\). Then

Now, the determinant \(|P-\lambda I_n|\) in (88) is the characteristic polynomial of P, which equals (89) because the eigenvalues of P are 1 and 0, with multiplicities r(P) and \(n - r(P)\), respectively.

Hence, substituting (89) into (88) gives the desired result

\(\square \)
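For reference, a sketch of the computation, assuming Lemma 2 concerns the determinant \(|aI_n+bP|\) for a PPO P with \(b\neq 0\). The lemma's statement is not reproduced in this appendix, so this form is inferred from \(\lambda=-a/b\) and the eigenvalue argument above:

\[
\begin{aligned}
|aI_n+bP| &= \left|b\left(P+\tfrac{a}{b}I_n\right)\right| = b^{n}\,|P-\lambda I_n|,\\
|P-\lambda I_n| &= (1-\lambda)^{r(P)}(-\lambda)^{n-r(P)} = \left(\tfrac{a+b}{b}\right)^{r(P)}\left(\tfrac{a}{b}\right)^{n-r(P)},\\
|aI_n+bP| &= b^{n}\left(\tfrac{a+b}{b}\right)^{r(P)}\left(\tfrac{a}{b}\right)^{n-r(P)} = (a+b)^{r(P)}\,a^{\,n-r(P)}.
\end{aligned}
\]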

Appendix 3

3.1 Proof of Lemma 3

When \(x_2> x_1> 0\), we have a standard maximization problem for a function of two variables. Setting the partial derivatives to zero gives

and

Let \(g_{x_ix_j}=\frac{\partial }{\partial x_j}\left( \frac{\partial }{\partial x_i}g(x_1,x_2)\right) \) for \(i,j\in \{1,2\}\). Then, by the second derivative test, we have

with \(D(Q_1, Q_2)=\frac{q_1q_2}{Q_1^2Q_2^2}>0\) and \(g_{x_1x_1}(Q_1, Q_2)=\frac{-q_1}{Q_1^2}<0\), so that \((x_1, x_2)=(Q_1, Q_2)\) is a local maximum point. Thus, if \(Q_2>Q_1>0\), the point \((Q_1,Q_2)\) lies in the interior of the domain and is a local maximum of g there.
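As an illustration only: Lemma 3's statement is not reproduced in this appendix, but the second derivatives quoted above are consistent with the assumed form \(g(x_1,x_2)=-q_1\log x_1-\frac{q_1Q_1}{x_1}-q_2\log x_2-\frac{q_2Q_2}{x_2}\) (up to an additive constant), with \(q_1,q_2>0\) on the domain \(0<x_1\le x_2\). Under that assumption, the first- and second-order conditions read:

\[
\begin{aligned}
g_{x_1}(x_1,x_2) &= -\frac{q_1}{x_1}+\frac{q_1Q_1}{x_1^{2}} = 0 \iff x_1=Q_1,\\
g_{x_2}(x_1,x_2) &= -\frac{q_2}{x_2}+\frac{q_2Q_2}{x_2^{2}} = 0 \iff x_2=Q_2,\\
g_{x_1x_1}(Q_1,Q_2) &= \frac{q_1}{Q_1^{2}}-\frac{2q_1Q_1}{Q_1^{3}} = -\frac{q_1}{Q_1^{2}},\qquad g_{x_2x_2}(Q_1,Q_2) = -\frac{q_2}{Q_2^{2}},\qquad g_{x_1x_2}\equiv 0,\\
D(Q_1,Q_2) &= g_{x_1x_1}g_{x_2x_2}-g_{x_1x_2}^{2} = \frac{q_1q_2}{Q_1^{2}Q_2^{2}}.
\end{aligned}
\]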

When \(x_1=x_2:=x\), using direct substitution, the problem reduces to maximizing the function of one variable

over \(\mathbb{R}^{+}\). Setting the derivative of g(x) to zero gives

Now, using the second derivative test, we have

with

so that \((x_1, x_2)=\left( \frac{q_1Q_1+q_2Q_2}{q_1+q_2}, \frac{q_1Q_1+q_2Q_2}{q_1+q_2}\right) \) is a maximum point on the boundary of the domain.
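Under the assumed form \(g(x_1,x_2)=-q_1\log x_1-\frac{q_1Q_1}{x_1}-q_2\log x_2-\frac{q_2Q_2}{x_2}\) (an inference from the derivatives quoted in this proof, not a statement of Lemma 3), the boundary problem and the two candidate maximum values work out as follows, writing \(\bar{Q}=\frac{q_1Q_1+q_2Q_2}{q_1+q_2}\) for brevity:

\[
\begin{aligned}
g(x) &= -(q_1+q_2)\log x-\frac{q_1Q_1+q_2Q_2}{x}, \qquad g'(x) = -\frac{q_1+q_2}{x}+\frac{q_1Q_1+q_2Q_2}{x^{2}} = 0 \iff x=\bar{Q},\\
g''(\bar{Q}) &= \frac{q_1+q_2}{\bar{Q}^{2}}-\frac{2(q_1Q_1+q_2Q_2)}{\bar{Q}^{3}} = -\frac{q_1+q_2}{\bar{Q}^{2}}<0,\\
g(Q_1,Q_2) &= -q_1\log Q_1-q_2\log Q_2-(q_1+q_2), \qquad g(\bar{Q}) = -(q_1+q_2)\log\bar{Q}-(q_1+q_2).
\end{aligned}
\]

Hence \(g(Q_1,Q_2)>g(\bar{Q})\) if and only if \(\frac{q_1\log Q_1+q_2\log Q_2}{q_1+q_2}<\log\bar{Q}\), which is a Jensen-type inequality for the concave logarithm.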

Now, we show that if \(Q_2>Q_1>0\) then the maximum in the interior is a global maximum. Note that when the maximum is in the interior at \((Q_1,Q_2)\) it attains the value

Further, when the maximum is on the boundary at \(\left( \frac{q_1Q_1+q_2Q_2}{q_1+q_2}, \frac{q_1Q_1+q_2Q_2}{q_1+q_2}\right) \) it attains the value

Showing that \(g(Q_1,Q_2)>g\left( \frac{q_1Q_1+q_2Q_2}{q_1+q_2}\right) \) is the same as showing

which is true due to Jensen’s Inequality:

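Let Q be a random variable such that \(P(Q=Q_1)=\frac{q_1}{q_1+q_2}\) and \(P(Q=Q_2)=\frac{q_2}{q_1+q_2}\). Since log is strictly concave and \(Q_1\neq Q_2\), Jensen’s inequality gives \(E[\log Q]<\log E[Q]\), that is, \(\frac{q_1\log Q_1+q_2\log Q_2}{q_1+q_2}<\log \frac{q_1Q_1+q_2Q_2}{q_1+q_2}\).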

Now, we show that if \(Q_1>Q_2>0\), then the maximum on the boundary is the global maximum. If \(Q_1> Q_2>0\), the only critical point, \((Q_1,Q_2)\), violates \(x_1\le x_2\), so g has no critical points in the interior. Further, \(g(x_1,x_2)\to -\infty \) as either \(x_1\) or \(x_2\) tends to 0 or to \(\infty \), which forces the global maximum to lie on the boundary, at \(\left( \frac{q_1Q_1+q_2Q_2}{q_1+q_2}, \frac{q_1Q_1+q_2Q_2}{q_1+q_2}\right) \). \(\square \)
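A brute-force numerical check of the two cases, assuming the form \(g(x_1,x_2)=-q_1\log x_1-\frac{q_1Q_1}{x_1}-q_2\log x_2-\frac{q_2Q_2}{x_2}\) inferred from the derivatives in the proof (Lemma 3 itself is not restated here). The helper names, the parameter values, and the grid settings are hypothetical, and the grid search only locates the maximizer to within about one grid step.

```python
import math


def g(x1, x2, q1, q2, Q1, Q2):
    """Assumed objective: -q1*log(x1) - q1*Q1/x1 - q2*log(x2) - q2*Q2/x2."""
    return (-q1 * math.log(x1) - q1 * Q1 / x1
            - q2 * math.log(x2) - q2 * Q2 / x2)


def lemma3_maximizer(q1, q2, Q1, Q2):
    """Maximizer over 0 < x1 <= x2 as described in the proof."""
    if Q2 > Q1:
        return (Q1, Q2)                      # interior critical point
    qbar = (q1 * Q1 + q2 * Q2) / (q1 + q2)   # weighted mean, on x1 = x2
    return (qbar, qbar)


def grid_maximizer(q1, q2, Q1, Q2, lo=0.05, hi=10.0, steps=400):
    """Brute-force maximizer of g over grid points with x1 <= x2."""
    h = (hi - lo) / steps
    pts = [lo + i * h for i in range(steps + 1)]
    best = None
    for i, x1 in enumerate(pts):
        for x2 in pts[i:]:                   # enforce the constraint x1 <= x2
            val = g(x1, x2, q1, q2, Q1, Q2)
            if best is None or val > best[0]:
                best = (val, x1, x2)
    return best[1], best[2]


for Q1, Q2 in [(1.0, 2.0), (2.0, 1.0)]:     # interior case, then boundary case
    print((Q1, Q2), lemma3_maximizer(3, 5, Q1, Q2), grid_maximizer(3, 5, Q1, Q2))
```

With \(q_1=3\) and \(q_2=5\), the case \((Q_1,Q_2)=(1,2)\) should give the interior point \((1,2)\), and \((2,1)\) the boundary point \((1.375, 1.375)\); the grid search should agree with both to within about one grid step.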


About this article

Cite this article

Qeadan, F., Christensen, R. On the equivalence between the LRT and F-test for testing variance components in a class of linear mixed models. Metrika 84, 313–338 (2021). https://doi.org/10.1007/s00184-020-00777-z
