Abstract

In this work, the Chebyshev collocation scheme is extended to Volterra integro-differential equations of pantograph type. First, we construct the operational matrices of pantograph and derivative based on shifted Chebyshev polynomials. The obtained operational matrices are then used to approximate the derivatives of the unknown function. Furthermore, a detailed convergence analysis is carried out in the weighted \(L^{2}\)-norm. Numerical experiments are conducted to verify the performance of the proposed approach, and the results show that the scheme is accurate.


1 Introduction

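The main objective of this study is to extend a Chebyshev collocation scheme to the following Volterra integro-differential equation of pantograph type:

\[ y'(t)=a_{1}(t)y(t)+a_{2}(t)y(qt)+b(t)+\int_{0}^{t}k_{1}(t,s)y(s)\,ds+\int_{0}^{qt}k_{2}(t,s)y(s)\,ds,\quad t\in[0,T], \tag{1} \]
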
with \(y(0)=y_{0}\), where \(0< q<1\). The kernels \(k_{1}(t,s)\) and \(k_{2}(t,s)\) are defined on \(D=\{(t,s): 0\leq t \leq T, 0\leq s \leq t\}\) and \(D_{q}=\{(t,s): 0\leq t \leq T, 0\leq s \leq qt\}\), respectively. Assume that the given functions satisfy \(a_{1}(t), a_{2}(t), b(t)\in C^{m}([0,T])\), \(k_{1}(t,s) \in C^{m}(D)\), and \(k_{2}(t,s) \in C^{m}(D_{q})\) for some \(m\geq 0\). Then, for each initial value \(y_{0}\), problem (1) has a unique solution \(y(t)\in C^{m+1}([0,T])\) [1].

These equations arise in many fields of science, including biology, physics, and finance [2]. Over the past few decades, integro-differential equations have attracted widespread attention. Since analytical solutions are generally unavailable, many researchers have sought effective approximation methods for this class of problems, and several types of integro-differential equations have recently been treated numerically by different techniques. In [3, 4], the authors developed classical Runge–Kutta methods for these problems. The authors of [5, 6] studied them by means of finite element methods. There is also a large body of work on spectral methods for the aforementioned problems; see, for example, [7] and [8]. The authors of [9, 10] proposed Legendre collocation methods for differential and integral equations with multiple delays. Wei and Chen [11] successfully extended this approach to Volterra integro-differential equations with proportional delays. In addition, Zhao et al. [12] applied a similar technique based on Legendre polynomials to nonlinear Volterra integro-differential equations with nonvanishing delays. In [13], the authors combined sinc functions with a collocation method to treat Volterra integro-differential equations with proportional delay. Recently, many researchers have used operational matrix methods to solve related problems [14–16], and the authors of [17, 18] developed this approach for fractional-order partial integro-differential equations. More recently, the operational matrix approach combined with the tau method was used to solve Volterra integro-differential equations with pantograph delay [19].
Yang et al. [20] and Deng et al. [21] employed Jacobi polynomials and Galerkin methods to solve related equations with noncompact and singular kernels, respectively. In [22], generalized mapped Laguerre collocation methods were implemented for such problems with noncompact kernels.

In this paper, we develop an improved Chebyshev operational matrix method for problem (1). First, we construct the operational matrix of pantograph based on shifted Chebyshev polynomials. Then, we construct a discrete scheme in which the variable coefficients are not approximated. Finally, we provide a detailed convergence analysis in the weighted \(L^{2}\)-norm.

2 Shifted Chebyshev operational matrix of derivative

Let \(T^{*}_{n}(t)\) denote the shifted Chebyshev polynomials on \([0,T]\), defined by

\[ T^{*}_{n}(t)=T_{n}\Bigl(\frac{2t}{T}-1\Bigr),\quad t\in[0,T],\ n\in\mathbb{N}_{0}, \]

where \(T_{n}(t)\) are the standard Chebyshev polynomials of the first kind, \(\mathbb{N}_{0}=\mathbb{N}\cup \{0\}\), and \(\mathbb{N}\) denotes the set of positive integers. Equivalently, \(T^{*}_{n}(t)\) can be generated by the three-term recurrence

\[ T^{*}_{n+1}(t)=2\Bigl(\frac{2t}{T}-1\Bigr)T^{*}_{n}(t)-T^{*}_{n-1}(t),\quad n\in\mathbb{N}, \]

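with \(T^{*}_{0}(t)=1\) and \(T^{*}_{1}(t)=2t/T-1\). Moreover, the family \(\{T^{*}_{n}(t)\}_{n\in \mathbb{N}_{0}}\) satisfies the discrete orthogonality relation

\[ \sum_{k=0}^{N}{}^{\prime\prime}\,T^{*}_{i}(t_{k})T^{*}_{j}(t_{k})=\begin{cases}0, & i\neq j,\\ N/2, & 0<i=j<N,\\ N, & i=j\in\{0,N\},\end{cases}\qquad 0\leq i,j\leq N, \tag{2} \]

where \(t_{k}=\frac{T}{2}(1-\cos (k\pi /N))\), \(k=0,1,\ldots ,N\), are the shifted Chebyshev Gauss–Lobatto points, and the double prime indicates that the first and last terms of the sum are halved.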
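As a quick sanity check of the recurrence and the discrete orthogonality relation, the following NumPy sketch builds \(T^{*}_{n}(t_{k})\) at the shifted Gauss–Lobatto points and forms the double-prime Gram matrix. The helper names are our own, and the normalization checked here (diagonal entries \(N\) for \(n=0,N\) and \(N/2\) otherwise) is the standard one for double-prime Gauss–Lobatto sums; it is our assumption about the convention behind (2).

```python
import numpy as np

def shifted_chebyshev(n_max, t, T):
    """Return V with V[i, n] = T*_n(t_i), n = 0..n_max, built from the recurrence."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    x = 2.0 * t / T - 1.0                       # map [0, T] onto [-1, 1]
    V = np.ones((t.size, n_max + 1))            # column 0: T*_0(t) = 1
    if n_max >= 1:
        V[:, 1] = x                             # T*_1(t) = 2t/T - 1
    for n in range(1, n_max):
        V[:, n + 1] = 2.0 * x * V[:, n] - V[:, n - 1]
    return V

def gauss_lobatto_nodes(N, T):
    """Shifted Chebyshev Gauss-Lobatto points t_k = T/2 (1 - cos(k pi / N))."""
    return 0.5 * T * (1.0 - np.cos(np.arange(N + 1) * np.pi / N))

N, T = 8, 2.0
t = gauss_lobatto_nodes(N, T)
V = shifted_chebyshev(N, t, T)
w = np.ones(N + 1)
w[[0, N]] = 0.5                                 # double prime: halve first and last terms
gram = V.T @ (w[:, None] * V)                   # gram[i, j] = sum''_k T*_i(t_k) T*_j(t_k)
print(np.round(gram, 10))
```

The off-diagonal entries vanish up to rounding, which is what makes the coefficient formula that follows a simple weighted sum.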

Any function \(y(t)\in C[0,T]\) can be approximated as

\[ y(t)\approx y_{N}(t)=\sum_{k=0}^{N}{}^{\prime\prime}\,c_{k}T^{*}_{k}(t). \tag{3} \]

By the discrete orthogonality property (2), the coefficients \(c_{k}\) are given by

\[ c_{k}=\frac{2}{N}\sum_{j=0}^{N}{}^{\prime\prime}\,y(t_{j})T^{*}_{k}(t_{j}),\quad k=0,1,\ldots ,N. \tag{4} \]

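The coefficient formula and the expansion can be sketched as follows, assuming the double-prime convention (first and last terms halved) in both the expansion of \(y_{N}\) and the coefficient sum; with that convention \(y_{N}\) interpolates \(y\) at the points \(t_{k}\). The helpers coefficients and evaluate are hypothetical names, and the test function \(1-e^{-t}\) is borrowed from Example 7.1 purely for illustration.

```python
import numpy as np

def shifted_chebyshev(n_max, t, T):
    """V[i, n] = T*_n(t_i), n = 0..n_max (three-term recurrence)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    x = 2.0 * t / T - 1.0
    V = np.ones((t.size, n_max + 1))
    if n_max >= 1:
        V[:, 1] = x
    for n in range(1, n_max):
        V[:, n + 1] = 2.0 * x * V[:, n] - V[:, n - 1]
    return V

def halving_weights(N):
    """Weights of the double-prime sum: 1/2 at both ends, 1 elsewhere."""
    w = np.ones(N + 1)
    w[[0, N]] = 0.5
    return w

def coefficients(y_nodes, T):
    """c_k = (2/N) sum''_j y(t_j) T*_k(t_j) at t_j = T/2 (1 - cos(j pi / N))."""
    N = len(y_nodes) - 1
    t = 0.5 * T * (1.0 - np.cos(np.arange(N + 1) * np.pi / N))
    return (2.0 / N) * shifted_chebyshev(N, t, T).T @ (halving_weights(N) * y_nodes)

def evaluate(c, t, T):
    """y_N(t) = sum''_k c_k T*_k(t)."""
    N = len(c) - 1
    return shifted_chebyshev(N, t, T) @ (halving_weights(N) * c)

T, N = 1.0, 12
t_nodes = 0.5 * T * (1.0 - np.cos(np.arange(N + 1) * np.pi / N))
c = coefficients(1.0 - np.exp(-t_nodes), T)     # samples of y(t) = 1 - e^{-t}
t_fine = np.linspace(0.0, T, 201)
print(np.max(np.abs(evaluate(c, t_fine, T) - (1.0 - np.exp(-t_fine)))))
```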
Combining (3) and (4), we can rewrite \(y_{N}(t)\) in matrix form

in which

and

Hence, the derivative values of \(y_{N}(t)\) are computed by

where

\(\boldsymbol{D^{(1)}}\) is the operational matrix of derivative (see [23]).
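A nodal version of a derivative operational matrix can be sketched as below: it maps the vector of values \(y_{N}(t_{j})\) to the values \(y_{N}'(t_{i})\) by composing the coefficient map with derivatives of \(T^{*}_{n}\), obtained by differentiating the three-term recurrence. This is our own construction under the double-prime convention; the paper's \(\boldsymbol{D^{(1)}}\) from [23] may act on a different representation (for example, on coefficients rather than nodal values), and derivative_matrix is a hypothetical name.

```python
import numpy as np

def basis_and_derivative(n_max, t, T):
    """V[i, n] = T*_n(t_i) and dV[i, n] = d/dt T*_n(t_i) for n = 0..n_max (n_max >= 1)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    x = 2.0 * t / T - 1.0
    V = np.zeros((t.size, n_max + 1))
    dV = np.zeros_like(V)
    V[:, 0], V[:, 1], dV[:, 1] = 1.0, x, 1.0
    for n in range(1, n_max):
        V[:, n + 1] = 2.0 * x * V[:, n] - V[:, n - 1]
        # differentiate the recurrence: T'_{n+1} = 2 T_n + 2x T'_n - T'_{n-1}
        dV[:, n + 1] = 2.0 * V[:, n] + 2.0 * x * dV[:, n] - dV[:, n - 1]
    return V, (2.0 / T) * dV                    # chain rule: d/dt = (2/T) d/dx

def derivative_matrix(N, T):
    """D with (D @ Y)[i] = y_N'(t_i), where Y[j] = y_N(t_j) at the Gauss-Lobatto points."""
    t = 0.5 * T * (1.0 - np.cos(np.arange(N + 1) * np.pi / N))
    w = np.ones(N + 1)
    w[[0, N]] = 0.5
    V, dV = basis_and_derivative(N, t, T)
    P = np.diag(w) @ ((2.0 / N) * V.T @ np.diag(w))   # nodal values -> halved coefficients
    return t, dV @ P

t, D = derivative_matrix(16, 1.0)
print(np.max(np.abs(D @ np.exp(t) - np.exp(t))))
```

Working with nodal values keeps the unknown vector equal to the solution values at the collocation points, which makes imposing the initial condition straightforward.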

3 Operational matrix of pantograph

In a manner similar to the operational matrix of derivative, we have

where

and

Here, \(\boldsymbol{Q}\) denotes the operational matrix of pantograph based on shifted Chebyshev polynomials.
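Analogously, a pantograph matrix can be sketched by evaluating the interpolant at the delayed points \(qt_{i}\) instead of \(t_{i}\). As with the derivative case, pantograph_matrix is a hypothetical name and the nodal form is our assumption, not necessarily the form of the matrix used in the paper.

```python
import numpy as np

def shifted_chebyshev(n_max, t, T):
    """V[i, n] = T*_n(t_i), n = 0..n_max (three-term recurrence)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    x = 2.0 * t / T - 1.0
    V = np.ones((t.size, n_max + 1))
    if n_max >= 1:
        V[:, 1] = x
    for n in range(1, n_max):
        V[:, n + 1] = 2.0 * x * V[:, n] - V[:, n - 1]
    return V

def pantograph_matrix(N, T, q):
    """Q with (Q @ Y)[i] = y_N(q t_i), where Y[j] = y_N(t_j) at the Gauss-Lobatto points."""
    t = 0.5 * T * (1.0 - np.cos(np.arange(N + 1) * np.pi / N))
    w = np.ones(N + 1)
    w[[0, N]] = 0.5
    P = np.diag(w) @ ((2.0 / N) * shifted_chebyshev(N, t, T).T @ np.diag(w))
    return t, shifted_chebyshev(N, q * t, T) @ P   # evaluate y_N at the delayed points

q = 0.3
t, Q = pantograph_matrix(N=16, T=2.0, q=q)
print(np.max(np.abs(Q @ np.exp(t) - np.exp(q * t))))
```

Because \(qt_{0}=t_{0}=0\), the first row of the matrix reduces to the unit vector, which is a convenient check.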

Next, we consider the integral terms in (1):

Then, applying (5), we get

Thus, the first integral term is approximated by

where

and

where

We now compute the element \(\boldsymbol{G}_{ij}=\int _{0}^{t_{i}}k_{1}(t_{i},s)T^{*}_{j}(s)\,ds \) of the matrix \(\boldsymbol{G}\). Using a Gauss-type quadrature formula, we have

where \(\{\omega _{r}\}_{r=0}^{N}\) are the Chebyshev weights. Similarly, the second integral term in (1) can be approximated as

By (5) and the Gauss quadrature formula, we obtain the following operational matrix \(\boldsymbol{F_{q}}\):

where

and

where

and

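The two integral matrices can be sketched as follows. For simplicity this sketch uses Gauss–Legendre quadrature (numpy.polynomial.legendre.leggauss) on each interval \([0,t_{i}]\) and \([0,qt_{i}]\), which is a swapped-in rule: the text above uses a Chebyshev-weighted Gauss-type rule instead. The kernels \(k_{1}(t,s)=ts\) and \(k_{2}(t,s)=e^{t-s}\), the helper integral_matrix, and the nodal-to-coefficient map P are illustrative choices of ours, picked because the integrals against \(y(t)=e^{t}\) have closed forms.

```python
import numpy as np

def shifted_chebyshev(n_max, t, T):
    """V[i, n] = T*_n(t_i), n = 0..n_max (three-term recurrence)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    x = 2.0 * t / T - 1.0
    V = np.ones((t.size, n_max + 1))
    if n_max >= 1:
        V[:, 1] = x
    for n in range(1, n_max):
        V[:, n + 1] = 2.0 * x * V[:, n] - V[:, n - 1]
    return V

def integral_matrix(kernel, nodes, upper_limits, N, T, m):
    """M[i, j] ~ int_0^{upper_limits[i]} kernel(nodes[i], s) T*_j(s) ds (m-point Gauss-Legendre)."""
    theta, omega = np.polynomial.legendre.leggauss(m)
    M = np.zeros((len(nodes), N + 1))
    for i, (ti, ui) in enumerate(zip(nodes, upper_limits)):
        s = 0.5 * ui * (1.0 + theta)              # map [-1, 1] onto [0, ui]
        M[i] = 0.5 * ui * (omega * kernel(ti, s)) @ shifted_chebyshev(N, s, T)
    return M

N, T, q = 12, 1.0, 0.5
t = 0.5 * T * (1.0 - np.cos(np.arange(N + 1) * np.pi / N))
w = np.ones(N + 1)
w[[0, N]] = 0.5
V = shifted_chebyshev(N, t, T)
P = np.diag(w) @ ((2.0 / N) * V.T @ np.diag(w))   # nodal values -> halved coefficients
G = integral_matrix(lambda ti, s: ti * s, t, t, N, T, m=N + 2)               # k1(t, s) = t s
Fq = integral_matrix(lambda ti, s: np.exp(ti - s), t, q * t, N, T, m=N + 10)  # k2(t, s) = e^{t-s}
Y = np.exp(t)                                     # nodal values of y(t) = e^t
first = G @ P @ Y      # ~ int_0^{t_i} t_i s e^s ds = t_i ((t_i - 1) e^{t_i} + 1)
second = Fq @ P @ Y    # ~ int_0^{q t_i} e^{t_i - s} e^s ds = q t_i e^{t_i}
```

With a polynomial kernel such as \(ts\), \(N+2\) Gauss–Legendre points already integrate \(k_{1}T^{*}_{j}\) exactly; a non-polynomial kernel simply needs a few more points.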
4 Proposed numerical scheme

In this section, we solve problem (1) using the operational matrices derived above. First, substituting the matrix relations (6), (7), (8), and (9) into (1), we have

where

Denoting the expression in parentheses in (10) by C, we can write system (10) as

where

Then, incorporating the initial condition \(y(0)=y_{0}\) into (10), we obtain

Thus, the unknown vector Y, and hence \(y_{N}(t)\), is obtained by solving the above linear system.
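To show how the pieces fit together, here is an end-to-end sketch of a collocation solver for (1), assuming (1) reads \(y'=a_{1}y+a_{2}y(qt)+b+\int_{0}^{t}k_{1}y\,ds+\int_{0}^{qt}k_{2}y\,ds\) and using nodal versions of the derivative, pantograph, and integral matrices. Several details are our assumptions rather than the paper's: the nodal double-prime convention, Gauss–Legendre quadrature for the integrals, and imposing \(y(0)=y_{0}\) by overwriting the collocation equation at \(t_{0}=0\). The test problem is manufactured, not one of the paper's examples: \(b(t)\) is chosen so that \(y(t)=e^{t}\) is exact for \(q=1/2\), \(a_{1}=1\), \(a_{2}(t)=t\), \(k_{1}=1\), \(k_{2}(t,s)=e^{t-s}\). The name solve_pvide is hypothetical.

```python
import numpy as np

def basis(n_max, t, T):
    """V[i, n] = T*_n(t_i) and dV[i, n] = d/dt T*_n(t_i), n = 0..n_max (n_max >= 1)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    x = 2.0 * t / T - 1.0
    V = np.zeros((t.size, n_max + 1))
    dV = np.zeros_like(V)
    V[:, 0], V[:, 1], dV[:, 1] = 1.0, x, 1.0
    for n in range(1, n_max):
        V[:, n + 1] = 2.0 * x * V[:, n] - V[:, n - 1]
        dV[:, n + 1] = 2.0 * V[:, n] + 2.0 * x * dV[:, n] - dV[:, n - 1]
    return V, (2.0 / T) * dV

def solve_pvide(N, T, q, y0, a1, a2, b, k1, k2, m=None):
    """Collocate y' = a1 y + a2 y(qt) + b + int_0^t k1 y + int_0^{qt} k2 y at t_0..t_N."""
    m = m or N + 10                                   # Gauss-Legendre points per integral
    t = 0.5 * T * (1.0 - np.cos(np.arange(N + 1) * np.pi / N))   # t_0 = 0
    w = np.ones(N + 1)
    w[[0, N]] = 0.5
    V, dV = basis(N, t, T)
    P = np.diag(w) @ ((2.0 / N) * V.T @ np.diag(w))  # nodal values -> halved coefficients
    D = dV @ P                                       # y_N'(t_i)
    Q = basis(N, q * t, T)[0] @ P                    # y_N(q t_i)
    theta, omega = np.polynomial.legendre.leggauss(m)
    G = np.zeros((N + 1, N + 1))
    F = np.zeros((N + 1, N + 1))
    for i, ti in enumerate(t):
        for upper, kern, mat in ((ti, k1, G), (q * ti, k2, F)):
            s = 0.5 * upper * (1.0 + theta)          # Gauss-Legendre nodes mapped to [0, upper]
            mat[i] = 0.5 * upper * (omega * kern(ti, s)) @ basis(N, s, T)[0]
    A = D - np.diag(a1(t)) - np.diag(a2(t)) @ Q - (G + F) @ P
    rhs = np.array(b(t), dtype=float)
    A[0, :] = 0.0                                    # replace the t_0 = 0 equation by y(0) = y0
    A[0, 0] = 1.0
    rhs[0] = y0
    return t, np.linalg.solve(A, rhs)

# Manufactured test problem (our own): exact solution y(t) = e^t
q, T = 0.5, 1.0
a1 = lambda t: np.ones_like(t)
a2 = lambda t: t
k1 = lambda t, s: np.ones_like(s)
k2 = lambda t, s: np.exp(t - s)
b = lambda t: 1.0 - np.exp(t) - t * np.exp(q * t) - q * t * np.exp(t)
for N in (4, 8, 16):
    t, Y = solve_pvide(N, T, q, 1.0, a1, a2, b, k1, k2)
    print(N, np.max(np.abs(Y - np.exp(t))))
```

On this smooth problem the maximum nodal error should drop rapidly as N grows, consistent with the spectral behavior the convergence analysis is aiming for.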

5 Some useful notations and lemmas

First, we introduce some notation and lemmas needed for the error analysis. Let \(L_{\omega ^{\alpha ,\beta}}^{2}(I)\) denote the space of functions u for which \(\|u\|_{L_{\omega ^{\alpha ,\beta}}^{2}}<\infty \) with

where \(\omega ^{\alpha ,\beta}(x)=(1-x)^{\alpha}(1+x)^{\beta}\) is the Jacobi weight function on \(I:=(-1,1)\). For \(m\in \mathbb{N}_{0}\), define

and

In particular, \(\omega (x)=(1-x^{2})^{-1/2}\) denotes the Chebyshev weight function, corresponding to \(\alpha =\beta =-1/2\).

For a given \(N\in \mathbb{N}\), let \(\mathbb{P}_{N}\) denote the set of all real polynomials of degree at most N. For any function \(u\in C[-1,1]\), the Lagrange interpolation polynomial of u is

which satisfies

where \(\{F_{i}(x)\}_{i=0}^{N}\) are the Lagrange basis polynomials and \(\{x_{i}\}_{i=0}^{N}\) are the Chebyshev Gauss–Lobatto points.
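The interpolation operator \(I_{N}\) can be evaluated stably with the barycentric formula, which for Chebyshev Gauss–Lobatto points uses the weights \((-1)^{j}\), halved at \(j=0\) and \(j=N\). The sketch below is our own, with an arbitrary smooth test function, and prints the maximum interpolation error for a few N so that the rapid decay described by estimates such as Lemma 1 can be observed.

```python
import numpy as np

def cgl_points(N):
    """Chebyshev Gauss-Lobatto points x_j = cos(j pi / N), j = 0..N."""
    return np.cos(np.arange(N + 1) * np.pi / N)

def interpolate(u_nodes, x_nodes, x):
    """Evaluate I_N u at x by the barycentric formula (weights (-1)^j, halved at the ends)."""
    N = len(x_nodes) - 1
    lam = (-1.0) ** np.arange(N + 1)
    lam[[0, N]] *= 0.5
    diff = np.asarray(x, dtype=float)[:, None] - x_nodes[None, :]
    hit = diff == 0.0
    diff[hit] = 1.0                       # placeholder; exact hits are patched below
    terms = lam / diff
    p = (terms @ u_nodes) / terms.sum(axis=1)
    rows, cols = np.nonzero(hit)
    p[rows] = u_nodes[cols]               # x equals a node: I_N u(x_i) = u(x_i)
    return p

u = lambda x: np.exp(x) * np.cos(3 * x)
x_fine = np.linspace(-1.0, 1.0, 1001)
for N in (4, 8, 16):
    xn = cgl_points(N)
    err = np.max(np.abs(interpolate(u(xn), xn, x_fine) - u(x_fine)))
    print(N, err)
```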

Lemma 1 ([24])

Let \(u\in H^{m}_{\omega}(I)\) with \(m\geq 1\), and let \(I_{N}u\) be its interpolation polynomial. Then the following estimates hold:

Using Lemma 1, one can prove the following inequality for Gauss-type quadrature formulas:

where \(u\in H^{m}_{\omega}(I)\), \(\phi \in \mathbb{P}_{N}\), and \((u,\phi )_{N}\) is the discrete inner product associated with a Gauss-type quadrature.
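The quadrature inequality above rests on the fact that the Chebyshev Gauss–Lobatto rule with \(N+1\) points integrates polynomials of degree up to \(2N-1\) exactly against \(\omega (x)\). A short check of that exactness, comparing the rule with the exact moments \(\int_{-1}^{1}x^{k}\omega (x)\,dx\), is sketched below; the helper names are ours. At degree \(2N\) the rule is no longer exact, and the error equals \(2^{1-2N}\pi\).

```python
import math
import numpy as np

def cgl_quadrature(N):
    """Nodes and weights of the Chebyshev Gauss-Lobatto rule for the weight (1-x^2)^(-1/2)."""
    x = np.cos(np.arange(N + 1) * np.pi / N)
    w = np.full(N + 1, np.pi / N)
    w[[0, N]] = np.pi / (2 * N)
    return x, w

def chebyshev_moment(k):
    """Exact value of the integral of x^k (1-x^2)^(-1/2) over [-1, 1]."""
    if k % 2:
        return 0.0
    return math.pi * math.comb(k, k // 2) / 4 ** (k // 2)

N = 6
x, w = cgl_quadrature(N)
for k in range(2 * N + 1):
    err = abs(w @ x**k - chebyshev_moment(k))
    print(k, err)   # rounding-level for k <= 2N - 1, clearly nonzero at k = 2N
```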

Lemma 2 ([25])

For a bounded function \(u(x)\), we have the following inequality:

where \(\{F_{i}(x)\}_{i=0}^{N}\) are the Lagrange interpolation polynomials.

Lemma 3

Suppose that \(0\leq M_{1}, M_{2}<+\infty \) and

Assume further that \(E(x)\) and \(H(x)\) are nonnegative integrable functions. Then

6 Convergence analysis

Consider (1) again. The change of variable \(t=T(1+x) /2\) maps the interval \([0,T]\) onto \([-1,1]\), so (1) can be rewritten as follows:

where

where \(x \in [-1,1] \). Furthermore, using the linear transformations

equation (12) can be written as follows:

where

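As a check on the change of variables, the following sketch carries out the computation, assuming (1) reads \(y'(t)=a_{1}(t)y(t)+a_{2}(t)y(qt)+b(t)+\int_{0}^{t}k_{1}(t,s)y(s)\,ds+\int_{0}^{qt}k_{2}(t,s)y(s)\,ds\). The tilde quantities are our own shorthand and may differ from the notation of (12) and (13); the two maps of \(\theta\in[-1,1]\) are one standard choice of the linear transformations mentioned above.

```latex
% Substitution t = T(1+x)/2, s = T(1+\tau)/2, and u(x) := y(T(1+x)/2)
\begin{align*}
u'(x) &= \tfrac{T}{2}\,y'(t), \qquad y(qt) = u(qx+q-1), \qquad ds = \tfrac{T}{2}\,d\tau,\\
u'(x) &= \tilde a_{1}(x)\,u(x) + \tilde a_{2}(x)\,u(qx+q-1) + \tilde b(x)
        + \int_{-1}^{x}\tilde k_{1}(x,\tau)\,u(\tau)\,d\tau
        + \int_{-1}^{qx+q-1}\tilde k_{2}(x,\tau)\,u(\tau)\,d\tau,\\
\tilde a_{i}(x) &= \tfrac{T}{2}\,a_{i}\bigl(\tfrac{T}{2}(1+x)\bigr),\quad
\tilde b(x) = \tfrac{T}{2}\,b\bigl(\tfrac{T}{2}(1+x)\bigr),\quad
\tilde k_{i}(x,\tau) = \bigl(\tfrac{T}{2}\bigr)^{2}
   k_{i}\bigl(\tfrac{T}{2}(1+x),\,\tfrac{T}{2}(1+\tau)\bigr),\\
% Maps of theta in [-1,1] onto the two integration intervals
\tau &= \tfrac{1+x}{2}\,\theta + \tfrac{x-1}{2} \in [-1,x],
   \qquad d\tau = \tfrac{1+x}{2}\,d\theta,\\
\tau &= \tfrac{q(1+x)}{2}\,(1+\theta) - 1 \in [-1,\,qx+q-1],
   \qquad d\tau = \tfrac{q(1+x)}{2}\,d\theta.
\end{align*}
```

The second map follows from \(qt=\tfrac{T}{2}\bigl(1+(qx+q-1)\bigr)\), which is why the delayed argument becomes \(qx+q-1\) on the reference interval.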
Theorem 4

Let \(u_{N}(x)\) and \(u(x)\) be the approximate and exact solutions of (12) with (13), respectively, and assume that \(u(x)\) is sufficiently smooth. Then

for N sufficiently large, where \(M=\max_{x\in [-1,1]}|K_{1}(x,t)|_{H_{\omega}^{m;N}(I)}+ \max_{x\in [-1,1]}|K_{2}(x,t)|_{H_{\omega}^{m;N}(I)}\).

Proof

First, we insert the Gauss–Lobatto collocation points \(\{x_{i}\}_{i=0}^{N}\) into (14) and obtain

and

We use \(u_{N}(x_{i})\) to approximate \(u(x_{i})\) and

where \(F_{i}(x)\) are the Lagrange basis polynomials. Next, we consider the integral terms involved in (15):

where \(\{\tau _{r}\}_{r=0}^{N}\) coincides with the collocation points \(\{x_{i}\}_{i=0}^{N}\). In a similar way we deal with

So, our numerical scheme can be reformulated as

For ease of analysis, the above numerical scheme can be rewritten as follows:

where

and

Applying the Lagrange interpolation operator to (18) yields

where

Obviously, by (14),

By subtracting (19) from (20), we can obtain

where \(e'_{N}(x)=u'(x)-u'_{N}(x)\). Meanwhile, subtracting (17) from (16) yields

Similarly, applying the Lagrange interpolation operator to (22) yields

Consequently, we rewrite (21) as

where

Substituting (25) into the first integral term of (24) and applying Dirichlet’s formula \(\int _{-1}^{x}\int _{-1}^{\tau}\phi (\tau ,s)\,ds\,d\tau =\int _{-1}^{x} \int _{s}^{x}\phi (\tau ,s)\,d\tau \,ds\), we obtain

For the second integral term of (24), we have

To apply Dirichlet’s formula, we rewrite the above equation as

Substituting (26) and (28) into (24), provided the integral exists, we obtain

where

and

By Lemma 3,

Using (25) gives

Then, by (30), (31), and \(C_{1}C_{2}<1\), we have

First, Lemma 2 and (11) yield

Next, by Lemma 1, we have

In addition, by Lemma 1 for \(m=1\), we find that

Therefore, combining the estimates for \(J_{i}\), \(i=1,2,\ldots ,9\), yields

where \(M=\max_{x\in [-1,1]}|K_{1}(x,t)|_{H_{\omega}^{m;N}(I)}+ \max_{x\in [-1,1]}|K_{2}(x,t)|_{H_{\omega}^{m;N}(I)}\). □
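The convergence analysis measures the error in the weighted norm \(\|\cdot\|_{L^{2}_{\omega}}\). When reproducing such error measurements, this norm can be approximated with \(M\)-point Chebyshev–Gauss quadrature, which is exact when the squared integrand is a polynomial of degree at most \(2M-1\). A minimal sketch, with a hypothetical helper name and checks against the known values \(\|T_{0}\|^{2}_{L^{2}_{\omega}}=\pi\) and \(\|T_{n}\|^{2}_{L^{2}_{\omega}}=\pi/2\) for \(n\geq 1\):

```python
import numpy as np

def weighted_l2_norm(f, M=64):
    """||f||_{L^2_omega(-1,1)} with omega(x) = (1 - x^2)^(-1/2), via M-point Chebyshev-Gauss
    quadrature (nodes cos((2r+1) pi / (2M)), equal weights pi / M)."""
    x = np.cos((2 * np.arange(M) + 1) * np.pi / (2 * M))
    return np.sqrt(np.pi / M * np.sum(f(x) ** 2))

# Sanity checks against ||T_0||^2 = pi and ||T_n||^2 = pi/2 (n >= 1)
T0 = lambda x: np.ones_like(x)
T5 = lambda x: np.cos(5 * np.arccos(x))
print(weighted_l2_norm(T0) ** 2 / np.pi, weighted_l2_norm(T5) ** 2 / np.pi)
```

For an error function \(e_{N}=u-u_{N}\), one would pass a callable that evaluates both the exact and the approximate solution on \([-1,1]\).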

7 Numerical experiments

In this section, we apply the proposed computational scheme to problems of the form (1).

Example 7.1

First, we investigate the problem as follows:

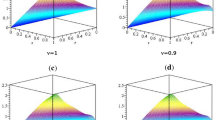

The analytical solution is \(y(t)=1-e^{-t}\). We apply the proposed scheme to this problem with various polynomial degrees N. Figure 1 illustrates the absolute error function \(|e_{N}(x)|=|u(x)-u_{N}(x)|\) for \(N=8,16\), and Table 1 reports the computational results on the interval \([0, 10]\). These results indicate that the proposed scheme is highly accurate.

Fig. 1 Absolute errors for \(N=8,16\) on the interval \([0, 1]\) for Example 7.1

Example 7.2

We consider

where

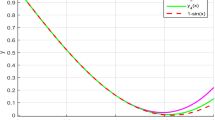

and the analytical solution is \(y(t)=e^{t}-1\). Likewise, we apply the proposed scheme to this problem with \(4\leq N\leq 20\) for \(T=1\) and \(16\leq N\leq 40\) for \(T=10\). The resulting \(L^{2}\)-errors are plotted in Fig. 2, which shows that the proposed scheme is effective. We also compare the maximum absolute errors of our approach with those of the sinc collocation method [13]; the results, listed in Table 2, confirm the accuracy of the proposed scheme.

Fig. 2 \(L^{2}\)-errors obtained by the proposed method for \(T=1\) and \(T=10\) in Example 7.2

8 Conclusion

We proposed a computational scheme for solving a class of Volterra integro-differential equations of pantograph type. The scheme is derived from Chebyshev operational matrices and the Gauss quadrature formula, and its convergence is analyzed in detail. We compared the computational results obtained in this work with those of other approximation methods; the comparison indicates that our approach is more accurate and efficient. Moreover, the proposed scheme can be extended to nonlinear problems of pantograph type.

Availability of data and materials

Not applicable.

References

Brunner, H.: Collocation Methods for Volterra Integral and Related Functional Differential Equations, vol. 15. Cambridge University Press, Cambridge (2004)

Atkinson, K.E.: The Numerical Solution of Integral Equations of the Second Kind. Cambridge University Press, Cambridge (1997)

Zhang, C., Vandewalle, S.: Stability analysis of Runge–Kutta methods for nonlinear Volterra delay-integro-differential equations. IMA J. Numer. Anal. 24(2), 193–214 (2004)

Wang, W., Li, S.: Convergence of Runge–Kutta methods for neutral Volterra delay-integro-differential equations. Front. Math. China 4(1), 195–216 (2009)

Brunner, H., Hu, Q.: Optimal superconvergence results for delay integro-differential equations of pantograph type. SIAM J. Numer. Anal. 45(3), 986–1004 (2007)

Ming, W., Huang, C., Zhao, L.: Optimal superconvergence results for Volterra functional integral equations with proportional vanishing delays. Appl. Math. Comput. 320, 292–301 (2018)

Brunner, H.: Recent advances in the numerical analysis of Volterra functional differential equations with variable delays. J. Comput. Appl. Math. 228(2), 524–537 (2009)

Brunner, H.: Current work and open problems in the numerical analysis of Volterra functional equations with vanishing delays. Front. Math. China 4(1), 3–22 (2009)

Ali, I., Brunner, H., Tang, T.: Spectral methods for pantograph-type differential and integral equations with multiple delays. Front. Math. China 4(1), 49–61 (2009)

Sheng, C., Wang, Z., Guo, B.: An hp-spectral collocation method for nonlinear Volterra functional integro-differential equations with delays. Appl. Numer. Math. 105, 1–24 (2016)

Wei, Y., Chen, Y.: Legendre spectral collocation methods for pantograph Volterra delay-integro-differential equations. J. Sci. Comput. 53(3), 672–688 (2012)

Zhao, J., Cao, Y., Xu, Y.: Legendre spectral collocation methods for Volterra delay-integro-differential equations. J. Sci. Comput. 67(3), 1110–1133 (2016)

Zhao, J., Cao, Y., Xu, Y.: Sinc numerical solution for pantograph Volterra delay-integro-differential equation. Int. J. Comput. Math. 94(5), 853–865 (2017)

Tohidi, E.: Application of Chebyshev collocation method for solving two classes of non-classical parabolic PDEs. Ain Shams Eng. J. 6(1), 373–379 (2015)

Singh, S., Patel, V.K., Singh, V.K., Tohidi, E.: Numerical solution of nonlinear weakly singular partial integro-differential equation via operational matrices. Appl. Math. Comput. 298, 310–321 (2017)

Hosseinpour, S., Nazemi, A., Tohidi, E.: Müntz–Legendre spectral collocation method for solving delay fractional optimal control problems. J. Comput. Appl. Math. 351, 344–363 (2019)

Dehestani, H., Ordokhani, Y., Razzaghi, M.: Pseudo-operational matrix method for the solution of variable-order fractional partial integro-differential equations. Eng. Comput. (2020). https://doi.org/10.1007/s00366-019-00912-z

Aslan, E., Kürkçü, Ö.K., Sezer, M.: A fast numerical method for fractional partial integro-differential equations with spatial-time delays. Appl. Numer. Math. 161, 525–539 (2021)

Zhao, J., Cao, Y., Xu, Y.: Tau approximate solution of linear pantograph Volterra delay-integro-differential equation. Comput. Appl. Math. 39, 46 (2020)

Yang, Y., Tohidi, E., Ma, X., Kang, S.: Rigorous convergence analysis of Jacobi spectral Galerkin methods for Volterra integral equations with noncompact kernels. J. Comput. Appl. Math. 366, 112403 (2019)

Deng, G., Yang, Y., Tohidi, E.: High accurate pseudo-spectral Galerkin scheme for pantograph type Volterra integro-differential equations with singular kernels. Appl. Math. Comput. 396, 135866 (2021)

Tang, Z., Tohidi, E., He, F.: Generalized mapped nodal Laguerre spectral collocation method for Volterra delay integro-differential equations with noncompact kernels. Comput. Appl. Math. 39(4), 1–22 (2020)

Yang, C.: Modified Chebyshev collocation method for pantograph-type differential equations. Appl. Numer. Math. 134, 132–144 (2018)

Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods: Fundamentals in Single Domains. Springer, Berlin (2006)

Nevai, P.: Mean convergence of Lagrange interpolation. III. Trans. Am. Math. Soc. 282(2), 669–698 (1984)

Author information


Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ji, T., Hou, J. & Yang, C. The operational matrix of Chebyshev polynomials for solving pantograph-type Volterra integro-differential equations. Adv Cont Discr Mod 2022, 57 (2022). https://doi.org/10.1186/s13662-022-03729-1
