Abstract

Several studies in recent years have explored the factors that influence self-efficacy, as well as its contribution to academic development, in online learning environments. However, little research has investigated the effect of a web-based learning environment on enhancing students’ self-efficacy beliefs for learning. This gap is especially noticeable in the field of online distributed programming. Online learning environments for programming education need to be designed so that they foster both students’ self-efficacy for programming learning and the added value that students perceive in the tool as a successful learning environment. To that end, we conducted a quantitative study, collecting and analyzing data from students using an online Distributed Systems Laboratory (DSLab) in an authentic, long-term online educational experience. The results indicate that (1) our distributed programming learning tool provides an environment that increases students’ belief in their programming self-efficacy; (2) the students’ experience with the tool strengthens their belief in the intrinsic value of the tool; however, (3) the relationship between students’ belief in the tool’s intrinsic value and their self-efficacy is inconclusive. This study provides relevant implications for teachers of online distributed (or general) programming courses who seek to increase students’ engagement, learning and performance in this field.


Introduction

One of the major concerns of distributed programming course teachers in distance learning is how to support and promote an engaging online learning experience and thereby improve students’ learning and performance in distributed programming. Indeed, a major challenge in online courses is enhancing students’ engagement, which is crucial to student success (Kehrwald, 2008; Olivier et al., 2020). At the same time, it is necessary to create an online learning environment that is cohesive and interactive (Gaytan & McEwen, 2007). This is even more important in online environments that support distributed programming learning, where students face more complex programming assignments and algorithms (Pettit et al., 2015).

On the one hand, academic self-efficacy (SE) refers to students’ beliefs about their ability to successfully attain educational goals (Elias & MacDonald, 2007). In their systematic literature review, Honicke & Broadbent (2016) showed that a substantial body of literature highlights the importance of SE for learning and academic performance. Recent research on e-learning tools and SE reveals the need to develop artifacts that foster SE in online learning environments (Chen & Su, 2019; Fryer et al., 2020; Valencia-Vallejo et al., 2019). In his recent review, Yokoyama (2019) analyzed several studies that examined the relationship between SE and academic performance in online learning. His review shows that SE tends to correlate with academic performance, as in general learning environments, although the specific characteristics of the online learning environment may affect this relationship.

On the other hand, instructors commonly examine the added value of e-learning tools in order to enhance students’ learning of course concepts (Florenthal, 2016). In addition, features of successful online learning environments, such as interactivity, allow learners to actively engage with course content (Chou et al., 2010). When students feel engaged with an online learning environment, they also feel more motivated and challenged to spend more time on online assignments (Northey et al., 2015; Thai et al., 2020). In online computer programming courses, despite the growing number of automated assessment tools in recent years, assessing programming assignments remains a complex endeavor (Gordillo, 2019).

In the field of learning sciences, a wealth of research has shown that students’ SE and their perceived value of the online learning environment constitute critical factors driving students’ academic success (Bandura, 1993; Clayton et al., 2018; Jones & Jones, 2005; Moore & Wang, 2021; Wei & Chou, 2020). Yet, as far as we know, hardly any study has investigated students’ beliefs regarding both programming SE and the intrinsic value of online learning environments for programming education. The online environment used in this work is an online Distributed Systems Laboratory (DSLab), which is based on the work of Marquès et al., (2020). The objective of this study is to examine whether students’ active involvement in deploying and executing their programming assignments in DSLab results in an experience that enhances their beliefs in both the intrinsic value of the tool and their programming SE.

Indeed, students can freely use the DSLab platform to submit each phase of their assignment and execute it as many times as they wish in order to assess the correctness of their code. As soon as students achieve correct code, they can move on to submit the next phase, until they complete the assignment. Students can use DSLab feedback from a previous execution to explore its logs, review their code for possible errors and thus improve their program. Immediate feedback constitutes an important feature of DSLab. Figure 1 presents the result of all submissions of a group of students over a certain period of time.

The rest of the paper is organized as follows. First, we review the literature regarding students’ beliefs about SE and the perceived value of online learning environments; then we propose a model, defining the purpose of our study and the research questions. In the following section, we describe the methodology used in this study. Subsequently, we present the results, and in the next section we discuss and analyze these results with respect to the research questions. Finally, we present the conclusions and future work.

Literature review and research questions

Students’ self-efficacy (SE) in the context of online learning environments

In the first place, we noticed a lack of previous research on students’ SE beliefs in online distributed programming environments, that is, online learning environments specifically employed for programming education. For this reason, we explore this issue in general online learning settings, that is, online learning environments that are not designed for a specific course or subject but support multiple courses across the full range of the academic program. A recent literature review on academic SE and academic performance in online learning identifies several factors that influence students’ SE positively or negatively (Yokoyama, 2019). Mastery experiences, observing someone succeed, and social persuasion (such as direct encouragement) may have a positive effect on students’ SE, whereas physiological factors such as perceptions of pain, fatigue and fear may have a negative effect.

Moreover, in online settings, attitude and digital literacy have a significant positive effect on SE (Prior et al., 2016); Lim et al., (2016) also found that SE is affected by content quality and system quality. Peechapol et al., (2018) argue that online learning does not readily foster opportunities for observing peers’ actions, performance and success. In their survey, they found that SE would be reduced if learners failed to communicate and to keep up with the performance of others. Consequently, an online learning system should give students indirect experience by letting them observe peers’ behaviors and actions when performing a task. This is also related to the effect of social influence on SE. However, more systematic research is needed to thoroughly explore the relationship between SE and students’ performance in online learning settings and to identify the influential factors in this relationship.

Several studies explored different types of SE regarding technology (Tsai et al., 2011): Computer SE, which is defined as students’ perceived confidence regarding their ability to use a computer (Chen, 2017; Wolverton et al., 2020); Internet SE, which describes students’ perceptions about their own abilities to use the Internet (Jokisch et al., 2020); and, Internet-based Learning SE, which represents students’ confidence and self-belief in their ability to master an online course or an online learning activity (Lee et al., 2020). In their study, Johnson & Lockee (2018) stated that in online learning environments there is a gap in research that seeks to enhance an online learner’s SE with the goal of increasing academic achievement.

In online environments used for programming learning, Yukselturk and Bulut’s (2007) study revealed that SE correlated positively with students’ performance and identified a mastery-approach goal and intrinsic motivation as additional influencing factors. In a more recent study, Tek et al., (2018) combined measurements from implicit theories and SE to examine student effort and performance in an introductory programming course. However, their model failed to explain more than 10% of the variance in course grades. Moreover, several researchers have developed computer programming SE scales to explore students’ SE beliefs towards programming (Korkmaz & Altun, 2014; Tsai et al., 2019).

Finally, the growth in recent years of systems for the automatic assessment of programming assignments provides several advantages, such as standard compiler/interpreter feedback and tracking of students’ actions. However, no systematic attempt has been made to examine students’ SE for learning in these environments (Bey et al., 2018; Delgado-Pérez & Medina‐Bulo, 2020; Restrepo-Calle et al., 2019).

Students’ perceived value of online learning environments

We use the term intrinsic value of an online learning environment to denote the added value that students perceive in the environment when they consider it a successful learning environment. This perception influences how students interact with the environment, actively engage with course assignments, feel motivated and challenged to spend more time in it, and ultimately learn effectively.

Again, no systematic studies exist that explore the intrinsic value of online environments used for distributed programming learning or for the automatic assessment of distributed programming assignments. Consequently, we first explore perceived value in general online learning settings. Then, we focus on online programming courses and examine the usefulness of the automated assessment tools (AATs) that support these courses. We consider AATs in this review because these systems share similar features with our DSLab system, although they differ in focus (general vs. distributed programming).

Florenthal (2016) examined students’ perceived value of interactive assignments in an online learning environment. She concluded that the perceived value of interactive assignments is positively associated with attitudes toward their completion as well as with satisfaction when completing them. However, this study did not include an assessment of SE. Students’ perceptions of instructional quality may also have an impact on the acceptance and use of an online learning environment (Larmuseau et al., 2019). Moreover, Rejón-Guardia et al., (2020) explored the role of subjective norms and social image in students’ acceptance of a personal learning environment based on Google apps. Social image proved to have a significant positive direct effect on perceived usefulness. Jeno et al., (2017) found that their mobile application tool contributes to higher perceived competence and intrinsic motivation compared to a traditional learning environment, which in turn enhances students’ belief in the intrinsic value of the tool. This was achieved because the tool provided interest, support for autonomy (i.e. choice) and competence (i.e. feedback), while matching the challenge to each student’s skills. We take similar indicators into account in order to define the intrinsic value of our DSLab tool.

We now turn to students’ perceived value of existing online environments used for programming learning or for the automatic assessment of programming assignments. Few studies have thus far explored this issue, and those that did have focused mainly on the extrinsic value of the tool (perceived usefulness and perceived ease of use).

In an initial survey of automated assessment tools (AATs), Pettit et al., (2015) analyzed the real usefulness of AATs in programming courses. The results of this survey indicate that students’ perceptions of the usefulness of AATs are inconclusive. Hence, in order to reduce negative student perceptions, the authors emphasize the need for more systematic experimental research that takes students’ opinions into account when determining the factors and features necessary to improve AATs’ design and use. Some assessment tools highlight the use and importance of feedback when analyzing and assessing programming assignments (Rubio-Sanchez et al., 2014; Stajduhar & Mausa, 2015; Vujošević-Janičić et al., 2013). However, none of these systems provides a systematic way to explore the variety of attributes and factors that can promote the development of a programming assessment tool that matches the needs and interests of both teachers and students with regard to its real usefulness.

More recent research on automated assessment tools has focused on providing automated formative and formal summative assessment or customized contextual instructor guidance. Other functionalities include tracking of students’ actions, with students’ perceived usability and perceived effectiveness examined to some extent (Bakar et al., 2018; Carbonaro, 2019; Cardoso et al., 2020; Robinson & Carroll, 2017). Yet, an in-depth evaluation of users’ perceptions of these systems’ utility is needed in order to estimate the real extrinsic or intrinsic value of these tools.

Certainly, several relevant variables and salient factors influence the value that students attribute to an e-learning tool. Given its importance for student learning, one of the aims of this work is to explore the intrinsic value of the DSLab tool, a study that is missing from the current literature. Specific focus is given to whether the students’ experience with the DSLab tool enhances their belief in the intrinsic value of the tool.

Proposed model

This work aims to cover two important aspects based on the student’s technical and programming competencies as well as his/her fruitful engagement with the DSLab tool:

A. Self-evaluation of students’ programming self-efficacy (critical reflection on the students’ innate ability to use the tool effectively in order to perform the practical assignment successfully).

B. Evaluation of the intrinsic value of the DSLab tool (critical evaluation of the students’ experience with the tool, delineated by the students’ perceptions of the practical importance, interest and challenging opportunities of the online learning environment they experienced).

As regards (A), self-efficacy (SE) is the main aspect of Bandura’s social cognitive theory and can be developed from external experiences (EE) and self-perception (SP) (Bandura, 1988). As concerns the former (EE), the role of observational learning and social experience is crucial, since students can also learn by observing others’ behaviors (Bandura, 1993). Besides, students’ social behaviors are influenced by the actions they have observed in others (Mischel & Shoda, 1995). As concerns the latter (SP), students’ cognitive processes are affected by their self-perception of their innate abilities, which endows them with high SE; this, in turn, enables them to exhibit coping behavior (goal striving) and thus make a specific effort to achieve successful outcomes (Stajkovic & Luthans, 1998). This combines determination with perseverance to overcome obstacles and achieve goals (Maddux, 2009; Oettingen & Gollwitzer, 2007; Zimmerman et al., 1992). In online learning environments, students’ SE beliefs can play a major role in how they manage goals, tasks and challenges (Johnson & Lockee, 2018; Luszczynska & Schwarzer, 2005).

As regards (B), the intrinsic value scale of the tool was constructed by taking into account the following indicators: intrinsic interest in the tool (Davis et al., 1992); the perceived importance of working in the DSLab environment (Eccles, 1983); and the preference for challenge and mastery goals (Harter, 1981). Mastery goals involve the aim of improving one’s own performance and gaining task mastery, i.e., maintaining a mastery-oriented focus on learning (Wolters, 1998). These types of goals are situated within broader motivational theory (Ames, 1992).

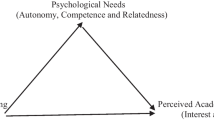

Based on the preceding discussion and our literature review, we propose a research model and research questions for this study. Figure 2 depicts the research model to be tested and analyzed. The links between the concepts of the model represent the possible relationships and paths that lead to the three research questions we aimed to answer in this study (see Sect. 2.4).

Specifically, the upper part of the model is composed of the independent variables Students’ External Experiences based on Observation (EEO), Students’ Self-Perception of Determination (SPD) and Students’ Self-Perception of Goal striving (SPG), as well as the dependent variable Students’ Self-Efficacy (SE). The lower part of the model is composed of the independent variables intrinsic interest in the tool (INT), perceived importance of working in the DSLab environment (IMP) and preference for challenge and mastery goals (CH-MG), as well as the dependent variable Tool Intrinsic Value (TIV). Moreover, TIV also acts as a moderating variable for SE.

Purpose of the study

This study aimed to examine how the variables of external observation, determination and goal striving, as well as interest, importance of the working environment and a mastery-oriented focus on learning, influence students to change their beliefs positively, including their SE for programming learning and their belief about the intrinsic value (and acquired benefits) of the environment they use.

In view of the lack of such systematic studies in online distributed programming environments, this work examines the proposed model based on the analysis of students’ perceptions which are captured through a questionnaire and analyzed quantitatively in an authentic online learning situation. The following research questions (RQ) guided the study:

- RQ1 – Does the DSLab tool provide an environment that increases students’ belief in their programming SE?
- RQ2 – Has the students’ experience with the DSLab tool strengthened their belief in the intrinsic value of the tool?
- RQ3 – Has the students’ belief in the intrinsic value of the DSLab tool had a positive influence on their SE for programming learning?

Methods

Participants and experimental procedure

Our case study involved undergraduate students in an online Distributed Systems course who had to carry out a programming assignment comprising four consecutive implementation phases (phases 1, 2, 3 and 4.1). The online course lasted seven weeks and involved 132 adult students. All participants performed the same assignment, which could be carried out either individually or in pairs (formed by the students themselves). Students could choose whichever option they preferred, since the same task and assessment criteria applied in both cases.

To facilitate students’ external experience based on observing others’ activity in the DSLab environment, we implemented a specific feature, depicted in Fig. 3. On the DSLab home page, a student can observe the aggregated activity and behavior of his/her classmates in every implementation phase. This graphical representation is generated every time the student enters DSLab, so each new page load shows up-to-date, real-time data on students’ activity in every implementation phase.

Taking a closer look at Fig. 3, 132 students participated in phase 1 (marked with a red ellipse labeled A). Phase 1 is a compulsory activity performed individually. Of these students, 99 (75%), shown in the green part of the horizontal bar, have performed at least one execution (a submission to be evaluated by DSLab) that functioned correctly (succeeded). In this particular phase, no red section appears, since all students (99) who submitted their assignment for execution in DSLab achieved at least one correct assessment by DSLab. The grey section of the bar indicates students who have not yet sent any assignment to be evaluated; there were 132 − 99 = 33 such students.

During phases 2, 3 and 4.1 (marked with a blue ellipse labeled B), students formed groups of one or two members. Since 72 groups participated, the activity result is shown per group in each of these phases. In phase 2, 67 groups (93%), shown in the green part of the horizontal bar, have performed at least one execution (a submission to be evaluated by DSLab) that functioned correctly (succeeded). Here, the red section of the bar indicates the groups that have not achieved any correct execution so far, whereas the grey part shows the groups that have not performed any execution in this phase so far. The same reasoning applies to the remaining phases.
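The classification behind each horizontal bar (green, red, grey) can be sketched in a few lines. This is a minimal illustration, not DSLab’s implementation: it assumes that every execution is logged as a pass/fail flag per student (phase 1) or per group (phases 2 to 4.1), and the names `PhaseSummary` and `summarize_phase` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PhaseSummary:
    """Counts behind one horizontal bar of the progress view (hypothetical)."""
    succeeded: int      # green: at least one correct execution
    failed_only: int    # red: executions submitted, none correct yet
    not_submitted: int  # grey: no execution in this phase yet

    @property
    def total(self) -> int:
        return self.succeeded + self.failed_only + self.not_submitted

    def percent_succeeded(self) -> float:
        return 100.0 * self.succeeded / self.total


def summarize_phase(participants, executions):
    """Classify each participant (student or group) for one phase.

    participants: ids of the students or groups taking part in the phase.
    executions: dict id -> list of booleans, one per execution, True when
                DSLab judged that execution correct (assumed log format).
    """
    succeeded = failed_only = not_submitted = 0
    for pid in participants:
        results = executions.get(pid, [])
        if not results:
            not_submitted += 1
        elif any(results):
            succeeded += 1
        else:
            failed_only += 1
    return PhaseSummary(succeeded, failed_only, not_submitted)


# Illustrative data shaped like phase 1: 132 students, 99 with a correct run.
runs = {s: [False, True] for s in range(99)}
phase1 = summarize_phase(range(132), runs)
print(phase1, round(phase1.percent_succeeded()))
# PhaseSummary(succeeded=99, failed_only=0, not_submitted=33) 75
```

A student counts as green as soon as any one execution succeeds, which matches the description above that earlier failed runs do not move a student into the red section once a correct execution exists.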

Students answered a questionnaire at the end of the learning experience. We designed a customized questionnaire specifically to address our RQs, which allowed us to collect quantitative data for our analysis. The questionnaire was based on and adapted from the Motivated Strategies for Learning Questionnaire (MSLQ), which Pintrich & De Groot (1990) used to measure students’ motivational beliefs. The adaptation of the original MSLQ questions was shaped by the particular context of our study, such as the observation feature of DSLab, as shown in the next section.

Data collection

The questionnaire was answered by 115 students, that is, more than 87% of the participants, and aimed to examine students’ perceptions of programming SE (RQ1) and of the intrinsic value of the DSLab tool (RQ2). It therefore included 18 items divided into two sections (one for each RQ), as shown in Table 1.

As regards RQ1, we associated a set of questions with the three variables we defined for measuring programming SE. More specifically:

- EEO: Students’ External Experiences based on Observation of others’ behavior is related to questions 1, 4, 7 and 8 (EEO1-EEO4).
- SPD: Students’ Self-Perception of Determination is related to questions 2, 5 and 9 (SPD1-SPD3).
- SPG: Students’ Self-Perception of Goal striving is related to questions 3 and 6 (SPG1-SPG2).

Since a particular observation feature of DSLab was used, question items 1, 4, 7 and 8 were adapted from the MSLQ to reflect the specific characteristics of this feature. Question 1 was adapted to capture the idea that seeing that other students have advanced could motivate some students to think that they could perform as well as their classmates. Question 4 implies that students who have achieved correct assessments in every phase, where others have not, could see themselves much closer to good academic performance. For those who have not succeeded in all phases, the tool shows them the level they have reached relative to their classmates. Question 7 implies that as a student successfully advances through each phase, he/she forms a view of his/her computing level relative to other classmates. Similarly, question 8 implies that if a student progresses adequately through all phases of the assignment, his/her belief in the potential knowledge he/she is acquiring grows.

In any case, more information is needed to ascertain that a student has acquired a good level of computing skills. There is most probably a correlation between having a good computing level and completing more implementation phases; however, in an online university there are also other factors that may prevent a student with good computing skills from completing all phases (such as lack of time or being content with a lower mark). Consequently, the adaptation and interpretation of all questions (regarding both RQ1 and RQ2) must take this limitation into account.

As regards RQ2, we associated a set of questions with the three variables we defined for measuring the intrinsic value of the DSLab tool. More specifically:

- INT: Students’ intrinsic interest in the tool is related to questions 12, 14 and 17 (INT1-INT3).
- IMP: Students’ perceived importance of working in the DSLab environment is related to questions 11, 16 and 18 (IMP1-IMP3).
- CH-MG: Students’ preference for challenge and mastery goals is related to questions 10, 13 and 15 (CH-MG1 to CH-MG3).

A five-point Likert-type scale was used, ranging from 1 (Strongly disagree) to 5 (Strongly agree), which yields quantitative data.
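The item-to-variable mapping listed above, together with the agreement percentages reported in the results, can be expressed as a small lookup table and a frequency count. This sketch assumes that answers 4 and 5 count as agreement, 3 as neutral, and 1 and 2 as disagreement; the text does not state how the five points were grouped, and the names `CONSTRUCTS` and `response_shares` are hypothetical.

```python
from collections import Counter

# Questionnaire items per variable (question numbers), as listed above.
CONSTRUCTS = {
    "EEO": [1, 4, 7, 8],
    "SPD": [2, 5, 9],
    "SPG": [3, 6],
    "INT": [12, 14, 17],
    "IMP": [11, 16, 18],
    "CH-MG": [10, 13, 15],
}

# Sanity check: each of the 18 items is assigned to exactly one variable.
assert sorted(q for qs in CONSTRUCTS.values() for q in qs) == list(range(1, 19))


def response_shares(answers):
    """Percentages of disagreement (1-2), neutrality (3) and agreement (4-5).

    Grouping the five Likert points into three bands is an assumption.
    """
    if not answers:
        raise ValueError("no answers")
    counts = Counter(answers)  # missing scores count as 0
    n = len(answers)
    return {
        "disagree": 100.0 * (counts[1] + counts[2]) / n,
        "neutral": 100.0 * counts[3] / n,
        "agree": 100.0 * (counts[4] + counts[5]) / n,
    }


print(response_shares([5, 4, 4, 3, 2]))
# {'disagree': 20.0, 'neutral': 20.0, 'agree': 60.0}
```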

Data analysis

Data were analyzed using descriptive statistics, the one-sample Kolmogorov-Smirnov test and frequency tables. In addition, we calculated Pearson correlations between certain variables of our study in order to provide a more comprehensive answer to our research questions. Cronbach’s alpha coefficient was computed on the student data to ensure the reliability of data collection. For the questionnaire data, we obtained a Cronbach’s alpha of 0.81 which, being higher than the commonly used threshold of 0.70, supports the reliability of our items. Table 2 shows the reliability statistics regarding RQ1, whereas Table 7 shows the equivalent Cronbach’s alpha coefficient for RQ2.
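For a scale of k items, Cronbach’s alpha is α = k/(k − 1) · (1 − Σ σᵢ² / σ_X²), where σᵢ² is the variance of item i and σ_X² is the variance of the summed scale score. The sketch below computes it from a matrix of Likert answers; it is an illustrative reimplementation, not the software used in the study, and the respondent data in the example are invented.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents x items matrix of scores.

    rows: one list per respondent, items in the same order for everyone.
    Sample variances are used throughout; the n-1 factors cancel in the ratio.
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    columns = list(zip(*rows))                      # per-item score columns
    item_var_sum = sum(variance(col) for col in columns)
    total_var = variance([sum(r) for r in rows])    # variance of scale totals
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Five invented respondents answering three Likert items.
demo = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2], [4, 4, 3]]
alpha = cronbach_alpha(demo)
print(round(alpha, 2), alpha > 0.70)  # 0.91 True
```

Because alpha grows as the items co-vary more strongly relative to their individual spread, a value above 0.70 is read as evidence that the items measure a common construct.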

In addition, we examined the coefficients of multivariate skewness and kurtosis to assess multivariate normality. Critical values of all test statistics were calculated. The results showed that the data could be treated as normally distributed, since the absolute values of skewness and kurtosis did not exceed the allowed maxima (2.0 for univariate skewness and 7.0 for univariate kurtosis). These results are shown in Table 3 for RQ1 and in Table 8 for RQ2. The statistical results are presented in detail in the following section.
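A per-item screening against the limits quoted above can be sketched as follows. It uses plain moment estimators (skewness g1 = m3/m2^1.5 and excess kurtosis g2 = m4/m2² − 3, so a normal distribution scores 0 on both) and checks each item separately. Treating the 7.0 limit as applying to excess kurtosis is an assumption; statistical packages often apply small-sample corrections that shift the values slightly, and this univariate sketch does not reproduce the multivariate coefficients reported in the study.

```python
def central_moments(xs):
    """Second, third and fourth central moments (divisor n)."""
    n = len(xs)
    mean = sum(xs) / n
    devs = [x - mean for x in xs]
    m2 = sum(d ** 2 for d in devs) / n
    m3 = sum(d ** 3 for d in devs) / n
    m4 = sum(d ** 4 for d in devs) / n
    return m2, m3, m4


def skew_kurtosis(xs):
    """Moment skewness g1 and excess kurtosis g2 (both 0 for a normal)."""
    m2, m3, m4 = central_moments(xs)
    if m2 == 0:
        raise ValueError("all answers identical: skewness/kurtosis undefined")
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


def within_limits(xs, max_skew=2.0, max_kurt=7.0):
    """True if |g1| <= max_skew and |g2| <= max_kurt (limits from the text)."""
    g1, g2 = skew_kurtosis(xs)
    return abs(g1) <= max_skew and abs(g2) <= max_kurt


print(within_limits([1, 2, 3, 4, 5]))   # True
print(within_limits([1] * 19 + [5]))    # False: skewness ≈ 4.1, kurtosis ≈ 15.1
```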

Results

The results with regard to the RQ1

We analyzed students’ perceptions of programming SE in relation to three parameters: (1) EEO: Students’ External Experiences based on Observation of others’ behavior, which is related to questions 1, 4, 7 and 8 (EEO1-EEO4); (2) SPD: Students’ Self-Perception of Determination, which is related to questions 2, 5 and 9 (SPD1-SPD3); and (3) SPG: Students’ Self-Perception of Goal striving, which is related to questions 3 and 6 (SPG1-SPG2).

The corresponding descriptive statistics for these items are presented in Tables 3 and 4.

Tables 3 and 4 indicate that the most significant values were obtained for variables SPD (mainly SPD2 and SPD3) and SPG. Variable EEO obtained either neutral (EEO1, EEO2) or non-significant values (EEO3, EEO4). As concerns EEO1, the possibility of observing the activity of other students in the DSLab environment did not sufficiently support students’ expectations of doing well in their assignments: 47% of them expressed agreement, while nearly 40% were neutral. Even more students (51%) were neutral about whether observing the activity of others in the DSLab environment would give them the impression of being good students and thus able to succeed in their task (EEO2).

Students could also observe more specific characteristics of others’ activity in the DSLab environment, such as the state of their classmates’ assignment executions (EEO3) or their classmates’ progress toward accomplishing the assignment phases (EEO4). In this case, fewer than 20% of students agreed that this type of experience could give them the sense that their computing skills were excellent or that they had the knowledge needed to advance and complete the assignment successfully. These results show that the observation of others’ activity offered by the DSLab tool was not a crucial feature for enhancing students’ belief in their self-efficacy for programming learning.

As regards students’ self-perception of determination (SPD), at the initial phase of the assignment, students’ perceptions of the understandability of the tool feedback (SPD1) were divided. The same proportion of students (37%) expressed agreement as expressed disagreement, which is not a strong indicator of support for students’ feeling of determination. If students feel that the tool feedback is not comprehensible enough, it will most probably not assist their determination and perseverance in overcoming obstacles. However, most students (68%) felt that their determination to achieve successful outcomes increased as they advanced through the phases of the assignment (SPD2), and even more students (78%) stated that they would be able to learn both the necessary theoretical foundations and the programming skills for this course (SPD3). Despite the shortcomings of the tool feedback, these results show that DSLab generally provided an environment that fostered students’ belief in their conative strengths, such as determination, a factor that plays a significant role in enhancing students’ belief in their SE for programming learning.

As regards students’ self-perception of goal striving (SPG), the majority of students (88%) agreed that DSLab offered an environment that promoted effort and dedication, which are key to achieving their goals (SPG1). Moreover, 75% of students believed that they could achieve good academic performance by receiving a good grade in the course (SPG2). These results show that DSLab provided an environment that strengthened students’ belief in their innate abilities, which help them exhibit coping behavior and sustained effort and, in turn, increase their expectations of SE and goal achievement.

In conclusion, two of the three indicators that affect students’ SE (self-perception of determination, SPD, and self-perception of goal striving, SPG) seem to be sufficiently supported by the DSLab environment, although improving the tool feedback could substantially strengthen students’ belief in their determination. Regarding these two aspects, then, the DSLab tool seems to provide an environment that increases students’ belief in their programming SE. However, students’ SE could be further reinforced by implementing in DSLab a more innovative and useful functionality for observing others’ activity.

Furthermore, we provide the Pearson correlations between the variable EEO (Students’ External Experiences based on Observation of others’ behavior) and the variables SPD (Students’ Self-Perception of Determination) and SPG (Students’ Self-Perception of Goal striving), in order to identify whether external observation has any significant influence on determination and goal striving (Table 5). We also provide the Pearson correlations between variables SPD and SPG (Table 6). We analyze these results in the Discussion section in order to obtain a more complete and knowledge-grounded answer regarding students’ perceptions of programming SE when using the DSLab tool.
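The correlations in Tables 5 and 6 relate variables made up of several items, so each variable first needs a single score per respondent. The sketch below assumes that a variable’s score is the mean of its items (the text does not say how item scores were combined) and computes Pearson’s r directly; `composite` and `pearson_r` are hypothetical helper names, the respondent data are invented, and significance testing is left out.

```python
from math import sqrt

# Question numbers per variable, as listed in the questionnaire description.
EEO_ITEMS = [1, 4, 7, 8]
SPD_ITEMS = [2, 5, 9]


def composite(responses, items):
    """Mean Likert score over `items` for each respondent.

    responses: list of dicts mapping question number -> answer (1-5).
    Averaging the items is an assumption made for illustration.
    """
    return [sum(r[q] for q in items) / len(items) for r in responses]


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples of size >= 2")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Three invented respondents, only to show the call sequence.
answers = [
    {1: 3, 4: 3, 7: 2, 8: 2, 2: 4, 5: 4, 9: 5},
    {1: 4, 4: 3, 7: 3, 8: 3, 2: 5, 5: 4, 9: 5},
    {1: 2, 4: 2, 7: 2, 8: 1, 2: 3, 5: 3, 9: 4},
]
eeo = composite(answers, EEO_ITEMS)
spd = composite(answers, SPD_ITEMS)
print(round(pearson_r(eeo, spd), 3))
```

A correlation computed this way describes association only; on its own it cannot show that observation influences determination or goal striving.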

The results with regard to the RQ2

Here, we analyzed students’ perceptions of the intrinsic value of the DSLab tool in relation to three parameters: (1) INT: Students’ intrinsic interest in the tool, which is related to questions 12, 14 and 17 (INT1-INT3); (2) IMP: Students’ perceived importance of working in the DSLab environment, which is related to questions 11, 16 and 18 (IMP1-IMP3); and (3) CH-MG: Students’ preference for challenge and mastery goals, which is related to questions 10, 13 and 15 (CH-MG1 to CH-MG3). The corresponding descriptive statistics for these items are presented in Tables 8 and 9.

Tables 8 and 9 indicate that all three variables obtained favorable values, with some exceptions concerning items INT3 and IMP2. Variable CH-MG obtained moderately acceptable values for items CH-MG1 and CH-MG2 and high values for CH-MG3. As concerns students’ intrinsic interest in the tool (INT), a large number of students (70%) agreed that they liked what they had learned in the DSLab environment (INT1). Even more students (82%) affirmed that they had chosen the current course because they were very interested in courses that make use of innovative tools, like DSLab, even if these require more work (INT2). Yet, some students expected more from the tool: more than a third (36%) did not feel that the tool captivated their attention or maintained their motivation and engagement throughout the course (INT3), and another third (33%) expressed neutrality on this matter.

These results show that DSLab provided an environment that generally captured students’ intrinsic interest. However, future versions of the tool should improve the tool feedback and add other features aimed at enhancing students’ motivation and engagement, so that working with the tool is an interesting experience for all students.

Regarding students’ perceived importance of working in the DSLab environment (IMP), again many students (72%) affirmed that it was important for them to work on and learn what they had been asked to do in the DSLab environment (IMP1). The majority of students (86%) considered it very important to have a real environment that allowed them to validate the correct functioning of their code and thus learn how to program distributed systems in authentic settings (IMP3). Yet, a considerable number of students (31%) thought that working with the tool was often a waste of time, meaning that not everything they did in that environment was really useful to them (IMP2).

These results show that, although students in general appreciated the importance of working in the DSLab environment, a fair number of them wanted a more useful and practical experience with the tool. Time is an issue for online adult students: for instance, spending time untangling the tool feedback in order to correct coding errors may not be acceptable to everybody. Better scaffolding and monitoring of students’ work is therefore needed.

As concerns students’ preference for challenge and mastery goals (CH-MG), although nearly a third of them (32%) were neutral, half of the students (50%) clearly preferred carrying out the assignment through the tool because they considered it challenging and focused on new goals (CH-MG1, mean 3.64). Likewise, half of the students (50%) thought that they could use the knowledge and skills obtained from using the tool in their professional career (CH-MG2, mean 3.66). Yet, the most important challenge for the majority of students (85%) arose when they did poorly in an assignment phase. In that case, they used the tool repeatedly to improve their own performance (learning from their mistakes) and gain task mastery; i.e., they maintained a more mastery-oriented focus on learning (Wolters, 1998) (CH-MG3, mean 4.36). These results show that students generally expressed their preference for challenge and mastery goals by using the tool. Even though there is still room for improvement in the current environment (as mentioned above), the overall endeavor appears promising.
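The agreement percentages and means quoted for each item (e.g. CH-MG1, mean 3.64) are standard Likert descriptives. The sketch below shows one minimal way to compute such figures. It assumes a 5-point scale on which 4 and 5 count as agreement and 3 as neutral; the scale anchors are not restated in this section, so this is an assumption. `describe_item` and the ten responses are hypothetical.

```python
from statistics import mean


def describe_item(responses, points=5):
    """Summarise one Likert item: % disagree, % neutral, % agree, and the mean.

    Assumption: answers above the scale midpoint (4-5 on a 5-point scale) count
    as agreement, the midpoint (3) as neutral, and the rest as disagreement.
    """
    n = len(responses)
    if n == 0:
        raise ValueError("no responses")
    if any(not 1 <= r <= points for r in responses):
        raise ValueError("response outside the scale")
    midpoint = (points + 1) / 2
    agree = sum(r > midpoint for r in responses)
    neutral = sum(r == midpoint for r in responses)
    disagree = n - agree - neutral
    return {
        "disagree_%": round(100 * disagree / n),
        "neutral_%": round(100 * neutral / n),
        "agree_%": round(100 * agree / n),
        "mean": round(mean(responses), 2),
    }


# Hypothetical answers to a CH-MG-style item from ten students (NOT study data).
item = [5, 4, 3, 4, 5, 3, 2, 4, 3, 5]
print(describe_item(item))
```

Reporting the neutral share alongside the agreement share, as the text does for CH-MG1 (32% neutral), makes clear that "half agreed" does not imply "half disagreed".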

In conclusion, having examined three aspects that affect the tool's intrinsic value (interest, working environment importance, and mastery-oriented focus on learning), we can argue that the students’ experience with the DSLab tool seems to strengthen their belief in the intrinsic value of the tool. Yet, our analysis identified some deficiencies which, if adequately addressed, could add greater value to the tool.

Along these lines, we provide the Pearson correlations between the variable INT (students’ intrinsic interest in the tool) and the variables IMP (students’ perceived importance of working in the DSLab environment) and CH-MG (students’ preference for challenge and mastery goals), to identify whether interest is significantly related to working environment importance and mastery-oriented focus on learning (Table 10). We also provide the Pearson correlations between variables IMP and CH-MG (Table 11). We analyze these results in the Discussion section.

The results with regard to RQ3

Having presented the results concerning students’ beliefs about both programming SE (RQ1) and the intrinsic value of the DSLab tool (RQ2), we complete the study by examining whether students’ belief in the intrinsic value of the DSLab tool has any positive influence on their SE for programming learning (RQ3). To that end, we provide the Pearson correlations between the variable TIV (Tool Intrinsic Value) and the variable SE (students’ Self-Efficacy) in Table 12. We analyze these results in the Discussion section.

Discussion

In the previous section, based on descriptive statistics, we found that the DSLab tool provides an environment that contributes to increasing students’ belief of programming SE (RQ1) by satisfying two of the three indicators that affect students’ SE. We also showed that the students’ experience with the DSLab tool strengthened their belief in the intrinsic value of the tool (RQ2), since all the indicators we examined presented fairly acceptable values. Moreover, our analysis revealed important shortcomings in certain tool functionalities (namely, the observation and feedback features) which, if improved, could further increase both students’ programming SE and their perceptions of the intrinsic value of the tool. On the basis of these results, in this section we delve more deeply into research questions RQ1 and RQ2 and analyze RQ3, comparing our results with relevant findings from the existing literature.

Analysis for RQ1

Our previous analysis indicated that students held a strong belief in their particular conative strengths, such as determination and goal striving, when working in the DSLab environment to execute and assess their distributed programming assignments. When students are able to exhibit coping behavior and sustain effort in the face of obstacles, they are more likely to maintain high SE and achieve successful outcomes (Hill & Aita, 2018; Luszczynska & Schwarzer, 2005; Stajkovic & Luthans, 1998). In our study, students’ SE beliefs were significantly related to students’ self-perception of determination and goal striving. This finding is consistent with previous research. For instance, House (2000) found that SE beliefs were significantly related to grade performance and persistence in science, engineering, and mathematics. Brown & Lent (2006) associated SE with key motivation constructs such as optimism and achievement goal orientation, among others. Setting challenging goals also had a significant effect on SE in computer programming courses (Law et al., 2010).

Our study also showed that students’ self-perception of determination was limited by their difficulty in understanding the tool feedback. Providing elaborate feedback has been shown to be significantly related to the development of students’ SE in web-based learning (Wang & Wu, 2008). This suggests that the feedback component of the DSLab tool needs improvement, which could further enhance students’ programming SE.

Most importantly, our study revealed that an important feature of the DSLab tool, the observation of others’ activity, did not support students’ SE as expected. Students build their efficacy beliefs through the vicarious experience of observing others, and they often gauge their capabilities in relation to the performance of others (Usher & Pajares, 2008). In their review of SE, Usher and Pajares state that when students are uncertain about their own abilities or have limited experience with the academic task at hand, social models play a powerful role in the development of SE. This is further supported by the notion of coping models (Schunk & Pajares, 2002): learners who struggle through problems until they reach a successful outcome. Such models are the most likely to be followed by observers, which suggests how the observation feature of DSLab could be further developed and improved.

But why can observation be considered an important factor for enhancing students’ SE for learning in our study? This can be explained by analyzing the Pearson correlations between the variable EEO (students’ External Experiences based on Observation of others’ behavior) and the variables SPD (students’ Self-Perception of Determination) and SPG (students’ Self-Perception of Goal striving) presented in Table 5 (Results section).

These correlations indicate a strong positive relationship between the observation items and the determination and goal-striving items; the only weaker relationships occur between items EEO2/EEO3 and SPD1. In fact, this reflects the feedback deficiency of the tool discussed above. The inability of some students to understand the feedback (logs) provided by the tool (SPD1) does not necessarily mean that these students are not good students (EEO2) or that they lack the computing skills to carry out the assignment successfully (EEO3). In other words, it is the tool feedback that needs improvement, as we also showed previously. Consequently, in our online Distributed Systems Laboratory (DSLab) environment, observation is an important factor for supporting students’ belief in their particular conative strengths, such as determination and goal striving, and thus students’ programming SE. It should therefore be developed accordingly.
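This section does not state the criterion used to call a correlation "strong" or "weaker". One conventional option, sketched below as an assumption rather than the authors' procedure, is to label |r| with Cohen's rule-of-thumb thresholds (.10 small, .30 medium, .50 large). The sketch also reports an approximate confidence interval through the Fisher z-transform, z = atanh(r), whose standard error is 1/sqrt(n - 3). The function names and the values r = 0.62 and n = 40 are hypothetical.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist


def fisher_ci(r, n, level=0.95):
    """Approximate confidence interval for a Pearson r via the Fisher z-transform."""
    if not -1 < r < 1 or n <= 3:
        raise ValueError("need |r| < 1 and n > 3")
    z = atanh(r)                                   # Fisher transform of r
    se = 1 / sqrt(n - 3)                           # standard error of z
    crit = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for 95%
    return tanh(z - crit * se), tanh(z + crit * se)


def cohen_label(r):
    """Cohen's conventional labels for |r|: .10 small, .30 medium, .50 large."""
    a = abs(r)
    if a >= 0.5:
        return "large"
    if a >= 0.3:
        return "medium"
    if a >= 0.1:
        return "small"
    return "negligible"


# Hypothetical correlation (NOT a value from Table 5): r = 0.62 with n = 40.
low, high = fisher_ci(0.62, 40)
print(cohen_label(0.62), round(low, 2), round(high, 2))
```

The interval is asymmetric around r because the tanh back-transform compresses values near plus or minus 1. A wide interval from a small sample is a reminder that a "strong" point estimate can still be compatible with a modest true correlation.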

Finally, the Pearson correlations between variables SPD and SPG (Table 6) reveal that students’ self-perception of determination is strongly correlated with their self-perception of goal striving, except for item SPD1. Again, this discordance appears to stem from the feedback deficiency of the tool. Consequently, determination can also be considered an important factor for enhancing students’ programming SE.

These findings are broadly consistent with previous research showing that students become more engaged observers when they observe classmates who exhibit coping behavior and sustained effort until they achieve their goals (Schunk & Hanson, 1985; Stevens et al., 2006; Yukselturk & Bulut, 2007). In these studies, vicarious experience (observation) proved to be a considerable predictor of SE. Hodges (2008) reports this as well in his review of SE in the context of online learning environments, where he notes that including vicarious experiences in online courses could be challenging. Nevertheless, to our knowledge, our findings are unique in the field of distributed programming supported by an automated programming evaluation tool, where students’ SE had not yet been explored.

Analysis for RQ2

Our descriptive statistics analysis showed that students generally had a positive experience working in the DSLab environment. As an innovative tool in the field, it captivated their interest (though it did not hold their full attention, motivation or engagement throughout the course). They recognized the tool's importance for learning and for validating the distributed programming assignments they were given (though a number of students spent too much time coping with the tool feedback). Moreover, half of the students clearly considered the tool challenging, focused on new goals and skills, and linked to their professional career. Most importantly, the majority of students preferred to use the tool for challenge and mastery goals, learning from their mistakes to gain task mastery; i.e., they maintained a more mastery-oriented focus on learning. These results lead us to consider that the students’ experience with the DSLab tool has, in principle, strengthened their belief in the intrinsic value of the tool.

The study of these three parameters (interest, working environment importance, and mastery-oriented focus on learning) echoes findings from other studies analyzing students’ belief in the intrinsic or extrinsic value of an online learning environment. More specifically, interest and mastery-oriented focus on learning are implicit factors in the mobile-application tool of Jeno et al. (2017). These researchers explored biology students’ belief in the intrinsic value of the tool by examining whether the tool matched each student’s intrinsic motivation, skills and challenge. A mastery-oriented focus on learning is also implicit in Florenthal’s (2016) study, where the perceived value of interactive assignments was positively associated with attitude toward their completion as well as with satisfaction when completing them. Students’ implicit interest in using mobile learning (Park et al., 2012) or a tablet-based environment (Kim & Jang, 2015) was also studied by examining the relationship between students’ behavioral intention, or their satisfaction and desire to use the environment, and the perceived usefulness and perceived ease of use of the environment (the extrinsic value of the tool).

However, our study makes an important contribution to the literature as a first attempt to analyze the intrinsic value of an automated assessment tool for distributed programming, taking three parameters (interest, working environment importance, and mastery-oriented focus on learning) explicitly into consideration. A further analysis of the Pearson correlations between these three variables (Tables 10 and 11 in the Results section) shows a strong positive relationship between all of them. This reinforces our initial result that the students’ experience with the DSLab tool strengthened their belief in the intrinsic value of the tool. Building on these premises, future studies should examine whether these parameters are truly meaningful in relation to other relevant factors that affect the intrinsic value of e-learning tools, so as to investigate this matter in more depth.

Analysis for RQ3

Since the students’ belief in the intrinsic value of the DSLab tool was positive, it is worth analyzing whether this belief also had a positive influence on students’ SE for programming learning. To that end, we analyzed the Pearson correlations between variable TIV (Tool Intrinsic Value) and variable SE (students’ Self-Efficacy), reported in Table 12 in the Results section.

As concerns students’ intrinsic interest in the tool (INT), this parameter seems to be an influential factor for students’ SE, though not a strong one. Both INT1 and INT2 have a positive relationship, to a greater or lesser extent, with most SE items, especially INT2 (students’ interest in innovative tools). Yet, the fact that the tool ultimately did not captivate students’ attention or maintain their motivation and engagement throughout the course as much as they expected (INT3) appears to have been a negative factor for their SE.

As regards students’ perceived importance of working in the DSLab environment (IMP), this parameter also seems to be an influential factor for students’ SE, though not a strong one. Both IMP1 and IMP2 have a positive relationship, to a greater or lesser extent, with most SE items, especially IMP2 (students appreciated a really useful experience with the tool). Yet, the possibility to validate the correct functioning of students’ code and thus learn how to program distributed systems (IMP3) was reduced by the rather poor functioning of the tool's observation and feedback features, which influenced their belief of SE negatively.

As concerns students’ preference for challenge and mastery goals (CH-MG), only item CH-MG3 seems to be important. By being able to learn from their mistakes (CH-MG3), students considerably increased their self-perception of goal striving (SPG) and improved their self-perception of determination (SPD) in some aspects (mainly their possibility to learn both the necessary theoretical bases and programming skills); however, this did not have any substantial positive influence on their external experiences based on observation of others’ behavior. Regarding the other items (CH-MG1, CH-MG2), it is interesting to note that students considered using the tool feedback (SPD1) challenging and useful for their professional career, despite the problems they had in understanding it. Students also found it challenging to observe the activity of their classmates in the DSLab environment to check whether they were doing well or not (EEO1).

All in all, students’ belief in the intrinsic value of the DSLab tool could, in principle, be a factor for increasing students’ SE for programming learning. In our study, however, the results are positive in some respects but not in others, so they cannot yet be considered conclusive. More research is certainly needed to examine whether these relationships are truly meaningful and positive, since they depend on the functioning of important features of the tool, such as observation, feedback and probably others. The literature confirms this need. More specifically, regarding the relationship between SE and the extrinsic value (perceived usefulness and perceived ease of use) or intrinsic value (perceived enjoyment, satisfaction, interest, importance or challenge) of e-learning tools, several studies have examined the effect of SE on the tool's extrinsic or intrinsic value.

Among them, the study of Lee & Mendlinger (2011) showed that university students’ perceived SE had a positive and significant effect on the extrinsic value of online classes (both perceived ease of use and perceived usefulness), but not the same positive effect on students’ perceived satisfaction (intrinsic value). Park et al. (2012) found that SE had the largest effect on perceived ease of use, but a weaker effect on perceived usefulness, in an m-learning environment. They also noted that SE supported students’ attitude toward m-learning (intrinsic value).

In contrast, few studies have examined the effect of the tool's extrinsic or intrinsic value on SE in generic or specific online learning environments (as we did in this study). More specifically, Lee & Lee (2008) showed that e-learners’ SE is more sensitive to the effect of the perceived usefulness of the online learning environment they use. Kim & Jang (2015) showed that students’ perceived usefulness and perceived ease of use had an indirect positive effect on SE in a tablet-based environment. They also found that the desire to learn using tablets in the classroom (intrinsic value) had a positive influence on SE. However, satisfaction with using tablets (intrinsic value), as a single variable, was not found to support SE. In fact, the perceptions of tablet use (ease of use, usefulness and satisfaction) significantly predicted positive beliefs about SE only for those students who strongly wished to learn with tablets.

Our research model revisited

Based on the presentation and discussion of the results, we summarize our findings, revisiting our research model and validating the existing relationships among the different variables we considered in our study. The results of our analysis of the research model are presented in Fig. 4.

The results in Fig. 4 show that:

1. The observation of others’ activity (EEO) feature offered by the DSLab tool was not a crucial factor in enhancing students’ belief of self-efficacy (SE) for programming learning.
2. DSLab generally provided an environment that fostered students’ belief in their particular conative strengths, such as determination (SPD), a factor that plays a significant role in enhancing students’ belief of SE for programming learning.
3. DSLab provided an environment that strengthened students’ belief in their innate abilities, which helped them exhibit coping behavior and sustained effort (SPG) and, in turn, increased their expectations of SE and goal achievement.
4. DSLab provided an environment that generally captured students’ intrinsic interest (INT), a factor that strengthened students’ belief in the intrinsic value of the tool (TIV).
5. Students in general appreciated the importance of working in the DSLab environment (IMP), which in turn had a positive influence on their belief in the intrinsic value of the tool (TIV).
6. Students generally showed their preference for challenge and mastery goals by using DSLab (CH-MG), which had a significant direct effect on their positive belief in the intrinsic value of the tool (TIV).
7. There is a strong positive relationship between students’ external experiences based on observation of others’ behavior (EEO) and their self-perception of determination (SPD) and goal striving (SPG).
8. Students’ self-perception of determination (SPD) is positively correlated with their self-perception of goal striving (SPG).
9. Students’ intrinsic interest in DSLab (INT) had a positive influence on their perceived importance of working in the DSLab environment (IMP) as well as on their preference for challenge and mastery goals (CH-MG).
10. There is a strong positive relationship between students’ perceived importance of working in the DSLab environment (IMP) and their preference for challenge and mastery goals (CH-MG).
11. Students’ belief in the intrinsic value of the DSLab tool (TIV) had a moderate influence on their self-efficacy for programming learning (SE).

Conclusion and Limitations

This work analyzed the effect of an online Distributed Systems Laboratory (DSLab) on students’ beliefs regarding programming self-efficacy (SE) and the intrinsic value of the tool. After testing DSLab in an authentic learning experience, the eleven (11) conclusions listed above (Sect. 5.4) provide relevant implications both for online distributed (or general) programming course teachers and for designers of automated assessment tools (AAT) that support these courses. The ultimate goal is to increase students’ engagement, learning and performance in the field of programming. In practice, teachers can use DSLab as an AAT, allowing their students to deploy and execute their programming assignments in DSLab as many times as they wish. During this process, teachers can monitor the correctness of students’ code. Although students are able to use DSLab feedback, explore its logs and review their code for possible errors, teachers themselves can intervene and complement DSLab assistance with more formative feedback, which can lead to greater improvement of students’ programs. The result is an experience that can enhance students’ beliefs both in the intrinsic value of the tool and in their programming SE. In addition, AAT designers can take DSLab features into account in order to improve their own AATs. Immediate feedback is an important feature of DSLab. The observation of others’ activity is another DSLab feature, which our results indicate should be further improved in order to influence students’ SE more effectively. Possible improvements to this feature are discussed among the limitations later in this section. In general, the insights gained from this experience can provide valuable directions for improving the design of both DSLab and other AATs, with the aim of enhancing tool intrinsic value.
Possible directions include incorporating specific notifications and recommendations for students, and even integrating the tool with an educational chatbot for more efficient and purposeful communication with students (Quiroga et al., 2020). Finally, regarding students, as noted by Johnson & Lockee (2018), “although online learning research has helped to identify connections between online learners’ SE perceptions and success in online coursework, there are few studies designed to purposefully affect students’ SE perceptions while in their online learning environment”. Our study makes an important contribution to the literature by providing an environment that affects not only the way students elaborate and evaluate their assignments but also their perceptions of SE and of the intrinsic value of the tool.

Limitations of the current work reveal the need for future research. First, research on the use and effectiveness of online assessment tools for distributed programming is very new and, to our knowledge, there has so far been no other attempt to assess students’ motivational beliefs when using such tools. As such, our research model was based on the original MSLQ (Pintrich & De Groot, 1990) in order to avoid complexity at this stage of research and to provide the necessary insights for improving our tool design. In this study, we used only part of the MSLQ's potential: our analysis was concerned with students’ motivational beliefs as an important component of their academic performance. According to the learning model of Pintrich & De Groot (1990), students’ motivational beliefs are linked to their self-regulated learning. A challenging next step could therefore be to explore the self-regulated learning strategies that students employ while carrying out the practical assignment with the DSLab tool to improve learning and performance. Second, research with DSLab could proceed further by including the more advanced conceptual framework for assessing motivation and self-regulated learning (Pintrich, 2004), which goes beyond the simple social cognitive and information processing perspective of the MSLQ. This would make it possible to explore the regulation of different cognitive, motivational/affective, behavioral, or contextual features. Third, at a technical level, this study revealed the need to improve the observation of others’ activity feature offered by DSLab, a parameter that influences students’ SE. Possible improvements include adding new graphical facilities for tracking class behavior with regard to the current activity (implementation phase) of the assignment, including “warning” signals when students have sent the activity for assessment a maximum number of times N without achieving a correct assessment.
Moreover, specific graphical information could be given anonymously for each group and each activity phase, indicating the state of the group with respect to the number of executions tried and of successful or unsuccessful tries. Finally, also at a technical level, an issue related to the intrinsic value of the DSLab tool is its usability. Examining the tool's usability could lead not only to improvements at the design level but also to identifying how new DSLab features may affect and support students’ self-regulated learning, optimal task realization and goal achievement.
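The proposed "warning" signals and per-group, per-phase summaries could be derived from a log of assessment attempts. The Python sketch below is purely illustrative. The `Submission` record, its fields and `phase_summary` are hypothetical and are not part of DSLab. The sketch counts tries, successes and failures per anonymized group and phase, and raises a warning when a group has used at least N (`max_tries`) attempts in a phase without a correct assessment.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Submission:
    """One hypothetical assessment attempt (field names are assumptions)."""
    group_id: str
    phase: int
    success: bool


def phase_summary(submissions, max_tries):
    """Per (anonymous group, phase): tries, successes, failures and a warning flag.

    The warning is raised when a group has used at least `max_tries` (the N in
    the text) attempts in a phase without any successful assessment. Real group
    ids are replaced by "Group 1", "Group 2", ... in first-seen order.
    """
    aliases = {}
    counts = defaultdict(lambda: [0, 0])  # (alias, phase) -> [tries, successes]
    for s in submissions:
        alias = aliases.setdefault(s.group_id, f"Group {len(aliases) + 1}")
        entry = counts[(alias, s.phase)]
        entry[0] += 1
        entry[1] += int(s.success)
    return {
        key: {
            "tries": tries,
            "successes": ok,
            "failures": tries - ok,
            "warning": tries >= max_tries and ok == 0,
        }
        for key, (tries, ok) in counts.items()
    }


# Hypothetical attempt log (NOT DSLab data).
log = [
    Submission("g-17", 1, False), Submission("g-17", 1, False),
    Submission("g-17", 1, False), Submission("g-42", 1, False),
    Submission("g-42", 1, True),
]
for (group, phase), stats in phase_summary(log, max_tries=3).items():
    print(group, phase, stats)
```

Replacing group identifiers with labels such as "Group 1" keeps the class view anonymous, as suggested above. A real implementation would also need to decide whether N applies per phase, as assumed here, or per assignment.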

References

Ames, C. (1992). Classrooms: Goals, structures, and student motivation. Journal of Educational Psychology, 84, 261–271

Bakar, M. A., Esa, M. I., Jailani, N., Mukhtar, M., Latih, R., & Zin, A. M. (2018). Auto-marking system: A support tool for learning of programming. International Journal on Advanced Science, Engineering and Information Technology, 8(4), 1313–1320

Bandura, A. (1988). Organizational Application of Social Cognitive Theory. Australian Journal of Management, 13(2), 275–302

Bandura, A. (1993). Perceived Self-Efficacy in Cognitive Development and Functioning. Educational Psychologist, 28(2), 117–148

Bey, A., Jermann, P., & Dillenbourg, P. (2018). A Comparison between Two Automatic Assessment Approaches for Programming: An Empirical Study on MOOCs. Educational Technology & Society, 21(2), 259–272

Brown, S. D., & Lent, R. W. (2006). Preparing adolescents to make career decisions: A social cognitive perspective. In F. Pajares, & T. Urdan (Eds.), Adolescence and education: Vol. 5. Self-efficacy beliefs of adolescents (pp. 201–223). Greenwich, CT: Information Age

Carbonaro, A. (2019). Good practices to influence engagement and learning outcomes on a traditional introductory programming course. Interactive Learning Environments, 27(7), 919–926

Cardoso, M., Marques, R., Vieira de Castro, A., & Rocha, A. (2020). Using Virtual Programming Lab to improve learning programming: The case of Algorithms and Programming. Expert Systems, e12531. https://doi.org/10.1111/exsy.12531

Chen, I. S. (2017). Computer self-efficacy, learning performance, and the mediating role of learning engagement. Computers in Human Behavior, 72, 362–370

Chen, C., & Su, C. (2019). Using the BookRoll E-Book System to Promote Self-Regulated Learning, Self-Efficacy and Academic Achievement for University Students. Journal of Educational Technology & Society, 22(4), 33–46

Chou, S. W., Min, H. T., Chang, Y. C., & Lin, C. T. (2010). Understanding continuance intention of knowledge creation using extended expectation-confirmation theory: An empirical study of Taiwan and China online communities. Behaviour & Information Technology, 29(6), 557–570

Clayton, K. E., Blumberg, F. C., & Anthony, J. A. (2018). Linkages between course status, perceived course value, and students’ preference for traditional versus non-traditional learning environments. Computers & Education, 125, 175–181

Davis, F., Bagozzi, R. P., & Warshaw, P. R. (1992). Extrinsic and intrinsic motivation to use computers in the workplace. Journal of Applied Social Psychology, 22, 1111–1132

Delgado-Pérez, P., & Medina-Bulo, I. (2020). Customizable and scalable automated assessment of C/C++ programming assignments. Computer Applications in Engineering Education, 28(6), 1449–1466. https://doi.org/10.1002/cae.22317

Eccles, J. (1983). Expectancies, values and academic behaviors. In J. T. Spence (Ed.), Achievement and achievement motives (pp. 75–146). San Francisco: Freeman

Elias, S. M., & MacDonald, S. (2007). Using past performance, proxy efficacy, and academic self-efficacy to predict college performance. Journal of Applied Social Psychology, 37, 2518–2531

Florenthal, B. (2016). The value of interactive assignments in the online learning environment. Marketing Education Review, 26(3), 154–170

Fryer, L. K., Thompson, A., Nakao, K., Howarth, M., & Gallacher, A. (2020). Supporting self-efficacy beliefs and interest as educational inputs and outcomes: Framing AI and Human partnered task experience. Learning and Individual Differences, 80, 101850. https://doi.org/10.1016/j.lindif.2020.101850

Gaytan, J., & McEwen, B. C. (2007). Effective online instructional and assessment strategies. The American Journal of Distance Education, 21(3), 117–132

Gordillo, A. (2019). Effect of an Instructor-Centered Tool for Automatic Assessment of Programming Assignments on Students’ Perceptions and Performance. Sustainability, 11(20), 5568. https://doi.org/10.3390/su11205568

Harter, S. (1981). A new self-report scale of intrinsic versus extrinsic orientation in the classroom: Motivational and informational components. Developmental Psychology, 17, 300–312

Hill, B. D., & Aita, S. L. (2018). The positive side of effort: A review of the impact of motivation and engagement on neuropsychological performance. Applied Neuropsychology: Adult, 25(4), 312–317

Hodges, C. (2008). Self-Efficacy in the Context of Online Learning Environments: A Review of the Literature and Directions for Research. Performance Improvement Quarterly, 20(3–4), 7–25

Honicke, T., & Broadbent, J. (2016). The Relation of Academic Self-Efficacy to University Student Academic Performance: A Systematic Review. Educational Research Review, 17, 63–84

House, J. (2000). Academic background and self-beliefs as predictors of student grade performance in science, engineering and mathematics. International Journal of Instructional Media, 27(2), 207–220

Jeno, L. M., Grytnes, J. A., & Vandvik, V. (2017). The effect of a mobile-application tool on biology students’ motivation and achievement in species identification: A Self-Determination Theory perspective. Computers & Education, 107, 1–12

Johnson, A. L., & Lockee, B. B. (2018). Self-Efficacy Research in Online Learning. In C. Hodges (Ed.), Self-Efficacy in Instructional Technology Contexts (pp. 3–13). Cham: Springer

Jokisch, M. R., Schmidt, L. I., Doh, M., Marquard, M., & Wahl, H. W. (2020). The role of internet self-efficacy, innovativeness and technology avoidance in breadth of internet use: Comparing older technology experts and non-experts. Computers in Human Behavior, 111(10), 106408. https://doi.org/10.1016/j.chb.2020.106408

Jones, G. H., & Jones, B. H. (2005). A comparison of teacher and student attitudes concerning use and effectiveness of web-based course management software. Educational Technology & Society, 8(2), 125–135

Kehrwald, B. (2008). Understanding social presence in text-based online learning environments. Distance Education, 29(1), 89–106

Kim, H. J., & Jang, H. Y. (2015). Factors influencing students’ beliefs about the future in the context of tablet-based interactive classrooms. Computers & Education, 89, 1–15

Korkmaz, Ö., & Altun, H. (2014). Adapting computer programming self-efficacy scale and engineering students’ self-efficacy perceptions. Participatory Educational Research, 1(1), 20–31

Larmuseau, C., Desmet, P., & Depaepe, F. (2019). Perceptions of instructional quality: impact on acceptance and use of an online learning environment. Interactive Learning Environments, 27(7), 953–964

Law, K. M. Y., Lee, V. C. S., & Yu, Y. T. (2010). Learning motivation in e-learning facilitated computer programming courses. Computers & Education, 55, 218–228

Lee, J. K., & Lee, W. K. (2008). The relationship of e-Learner’s self-regulatory efficacy and perception of e-Learning environmental quality. Computers in Human Behavior, 24, 32–47

Lee, J. W., & Mendlinger, S. (2011). Perceived Self-Efficacy and Its Effect on Online Learning Acceptance and Student Satisfaction. Journal of Service Science and Management, 4, 243–252

Lee, D., Watson, S., & Watson, W. (2020). The Relationships Between Self-Efficacy, Task Value, and Self-Regulated Learning Strategies in Massive Open Online Courses. International Review of Research in Open and Distributed Learning, 21(1), 23–39

Lim, K., Kang, M., & Park, S. Y. (2016). Structural relationships of environments, Individuals, and learning outcomes in Korean online university settings. International Review of Research in Open and Distributed Learning, 17(4), 315–330

Luszczynska, A., & Schwarzer, R. (2005). Social cognitive theory. In M. Conner, & P. Norman (Eds.), Predicting health behaviour (pp. 127–169). Buckingham, England: Open University Press

Maddux, J. E. (2009). Self-efficacy: the power of believing you can. In S. J. Lopez, & C. R. Snyder (Eds.), Handbook of positive psychology (pp. 277–287). Oxford, UK: Oxford University Press

Marquès, J. M., Daradoumis, T., Calvet, L., & Arguedas, M. (2020). Fruitful Student Interactions and Perceived Learning Improvement in DSLab: a Dynamic Assessment Tool for Distributed Programming. British Journal of Educational Technology, 51(1), 53–70

Mischel, W., & Shoda, Y. (1995). A cognitive-affective system theory of personality: Reconceptualizing situations, dispositions, dynamics, and invariance in personality structure. Psychological Review, 102(2), 246–268

Moore, R. L., & Wang, C. (2021). Influence of learner motivation dispositions on MOOC completion. Journal of Computing in Higher Education, 33, 121–134

Northey, G., Bucic, T., Chylinski, M., & Govind, R. (2015). Increasing student engagement using asynchronous learning. Journal of Marketing Education, 37(3), 171–180

Oettingen, G., & Gollwitzer, P. (2007). Goal Setting and Goal Striving. In A. Tesser & N. Schwarz (Eds.), Blackwell Handbook of Social Psychology: Intraindividual Processes (pp. 329–347). Wiley Blackwell

Olivier, E., Galand, B., Hospel, V., & Dellisse, S. (2020). Understanding behavioural engagement and achievement: The roles of teaching practices and student sense of competence and task value. British Journal of Educational Psychology, 90(4), 887–909. https://doi.org/10.1111/bjep.12342

Park, S. Y., Nam, M. W., & Cha, S. B. (2012). University students’ behavioral intention to use mobile learning: Evaluating the technology acceptance model. British Journal of Educational Technology, 43(4), 592–605

Peechapol, C., Na-Songkhla, J., Sujiva, S., & Luangsodsai, A. (2018). An Exploration of Factors Influencing Self-Efficacy in Online Learning: A Systematic Review. International Journal of Emerging Technologies in Learning, 13(9), 64–86

Pettit, R. S., Homer, J. D., Holcomb, K. M., Simone, N., & Mengel, S. A. (2015). Are automated assessment tools helpful in programming courses? In Proceedings of the 122nd ASEE Annual Conference & Exposition (pp. 1–20). Seattle, WA

Pintrich, P. R., & De Groot, E. V. (1990). Motivational and Self-Regulated Learning Components of Classroom Academic Performance. Journal of Educational Psychology, 82(1), 33–40

Pintrich, P. R. (2004). A conceptual framework for assessing motivation and self-regulated learning in college students. Educational Psychology Review, 16, 385–407

Prior, D. D., Mazanov, J., Meacheam, D., Heaslip, H., & Hanson, J. (2016). Attitude, digital literacy and self-efficacy: Flow-on effects for online learning behavior. The Internet and Higher Education, 29, 91–97

Quiroga, J., Daradoumis, T., & Marquès, J. M. (2020). Re-Discovering the use of chatbots in education: A systematic literature review. Computer Applications in Engineering Education, 28(6), 1549–1565

Rejón-Guardia, F., Polo-Peña, A. I., & Maraver-Tarifa, G. (2020). The acceptance of a personal learning environment based on Google apps: the role of subjective norms and social image. Journal of Computing in Higher Education, 32, 203–233

Restrepo-Calle, F., Ramírez-Echeverry, J. J., & González, F. A. (2019). Continuous assessment in a computer programming course supported by a software tool. Computer Applications in Engineering Education, 27(1), 80–89

Robinson, P. E., & Carroll, J. (2017). An Online Learning Platform for Teaching, Learning, and Assessment of Programming. In Proceedings of the IEEE Global Engineering Education Conference (pp. 547–556). Athens, Greece: IEEE

Rubio-Sanchez, M., Kinnunen, P., Pareja-Flores, C., & Velázquez-Iturbide, A. (2014). Student perception and usage of an automated programming assessment tool. Computers in Human Behavior, 31, 453–460

Schunk, D. H., & Hanson, A. R. (1985). Peer models: Influence on children’s self-efficacy and achievement. Journal of Educational Psychology, 77, 313–322

Schunk, D. H., & Pajares, F. (2002). The development of academic self-efficacy. In A. Wigfield, & J. Eccles (Eds.), Development of achievement motivation (pp. 15–31). San Diego, CA: Academic Press

Stajduhar, I., & Mausa, G. (2015). Using string similarity metrics for automated grading of SQL statements. In Proceedings of the 38th IEEE International Convention on Information and Communication Technology, Electronics and Microelectronics (pp. 1250–1255). Opatija, Croatia: IEEE

Stajkovic, A. D., & Luthans, F. (1998). Self-efficacy and work-related performance: A meta-analysis. Psychological Bulletin, 124(2), 240–261

Stevens, T., Olivárez, A., Jr., & Hamman, D. (2006). The role of cognition, motivation, and emotion in explaining the mathematics achievement gap between Hispanic and White students. Hispanic Journal of Behavioral Sciences, 28, 161–186

Tek, F. B., Benli, K. S., & Deveci, E. (2018). Implicit Theories and Self-efficacy in an Introductory Programming Course. IEEE Transactions on Education, 61(3), 218–225

Thai, N. T. T., De Wever, B., & Valcke, M. (2020). Face-to-face, blended, flipped, or on-line learning environment? Impact on learning performance and student cognitions. Journal of Computer Assisted Learning, 36(3), 397–411

Tsai, C. C., Chuang, S. C., Liang, J. C., & Tsai, M. J. (2011). Self-efficacy in Internet-based Learning Environments: A Literature Review. Educational Technology & Society, 14(4), 222–240

Tsai, M. J., Wang, C. Y., & Hsu, P. F. (2019). Developing the computer programming self-efficacy scale for computer literacy education. Journal of Educational Computing Research, 56(8), 1345–1360

Usher, E. L., & Pajares, F. (2008). Sources of Self-Efficacy in School: Critical Review of the Literature and Future Directions. Review of Educational Research, 78(4), 751–796

Valencia-Vallejo, N., López-Vargas, O., & Sanabria-Rodríguez, L. (2019). Effect of a metacognitive scaffolding on self-efficacy, metacognition, and achievement in e-learning environments. Knowledge Management & E-Learning, 11(1), 1–19

Vujošević-Janičić, M., Nikolić, M., Tošić, D., & Kuncak, V. (2013). Software verification and graph similarity for automated evaluation of students’ assignments. Information and Software Technology, 55(6), 1004–1016

Wang, S. L., & Wu, P. Y. (2008). The role of feedback and self-efficacy on web-based learning: The social cognitive perspective. Computers & Education, 51, 1589–1598

Wei, H., & Chou, C. (2020). Online learning performance and satisfaction: Do perceptions and readiness matter? Distance Education, 41(1), 48–69

Wolters, C. (1998). Self-regulated learning and college students’ regulation of motivation. Journal of Educational Psychology, 90, 224–235

Wolverton, C. C., Guidry Hollier, B. N., & Lanier, P. A. (2020). The Impact of Computer Self-Efficacy on Student Engagement and Group Satisfaction in Online Business Courses. The Electronic Journal of e-Learning, 18(2), 175–188

Yokoyama, S. (2019). Academic Self-Efficacy and Academic Performance in Online Learning: A Mini Review. Frontiers in Psychology, 9, 2794

Yukselturk, E., & Bulut, S. (2007). Predictors for student success in an online course. Educational Technology & Society, 10(2), 71–83

Zimmerman, B. J., Bandura, A., & Martinez-Pons, M. (1992). Self-motivation for academic attainment: The role of self-efficacy beliefs and personal goal setting. American Educational Research Journal, 29(3), 663–676

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

This article does not contain any studies with human participants or animals performed by any of the authors. The human participants (university students) merely answered an agreed-upon questionnaire anonymously; no personal data of the participants were used or recorded.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Daradoumis, T., Marquès Puig, J.M., Arguedas, M. et al. Enhancing students’ beliefs regarding programming self-efficacy and intrinsic value of an online distributed Programming Environment. J Comput High Educ 34, 577–607 (2022). https://doi.org/10.1007/s12528-022-09310-9

DOI: https://doi.org/10.1007/s12528-022-09310-9