Abstract

A key challenge in CSCL research is to find ways to support learners in becoming effective collaborators. While the effectiveness of external collaboration scripts is well established, there is a need for research into support that acknowledges learners’ autonomy during collaboration. In the present study, we compare an external collaboration script and a reflection scaffold to a control condition and examine their effects on learners’ knowledge about effective collaboration and on their groups’ interaction quality. In an experimental study that employed a one-factorial design with three conditions, 150 university students collaborated in groups of three to solve two information pooling problems. These groups received either an external collaboration script during collaboration, no support during collaboration but a reflection scaffold before beginning to collaborate on the second problem, or no support for their collaboration. Multilevel modeling suggests that learners in the reflection condition gained more knowledge about effective collaboration than learners who collaborated guided by an external collaboration script or learners who did not receive any support. However, we found no effect of the script or the reflection scaffold on the quality of interaction in the subsequent collaboration. Exploratory analyses suggest that learners acquired knowledge particularly about those interactions that are required for solving information pooling tasks (e.g., sharing information). We discuss our findings by contrasting the design of the external collaboration script and the reflection scaffold to identify potential mechanisms behind scripting and collaborative reflection and to examine to what extent these forms of support foster collaboration skills and engagement in productive interaction.

Introduction

The ability to solve problems collaboratively is widely recognized to be of critical importance (Graesser, Fiore et al., 2018a; Graesser, Foltz et al., 2018b; Hesse et al., 2015; van Laar et al., 2017). While the effects of collaborative learning on domain-specific knowledge have been studied extensively, ways to systematically foster collaboration skills have received far less attention. Yet, because many jobs require profound collaboration skills, their development and dedicated training deserve more attention.

In the field of the learning sciences, one of the most prominent methods for supporting collaboration and the acquisition of collaboration skills is through the use of external collaboration scripts, which provide learners with external scaffolds that enable them to engage in and practice productive interaction (Fischer et al., 2013). As a result, over time, learners construct internal representations of these interactions. These internal collaboration scripts then enable learners to engage in productive interaction without external scaffolding. Meta-analytical evidence suggests that external collaboration scripts are effective in supporting the acquisition of collaboration skills, such as argumentation (Vogel et al., 2022) or interprofessional collaboration (Rummel & Spada, 2005, 2007). However, some argue that external collaboration scripts have shortcomings. For instance, tailoring the external collaboration script to the learner’s current skills to reduce the negative effects of the learner’s prior knowledge about collaboration can be challenging (Dillenbourg, 2002). Furthermore, external scripts may overly restrict learners’ autonomy, especially when the script prescribes specific tasks, roles, and resources for the group, which hampers natural interaction among learners (Provocation 2 in Wise & Schwarz, 2017).

In the present study, we address this strand of the discussion and explore the effects of an alternative to explicitly guiding learners’ interaction. Collaborative reflection (Phielix et al., 2011; Yukawa, 2006) may be a less directive approach to facilitating collaboration and conveying knowledge about effective collaboration. However, little is known about whether reflection on the interaction promotes the acquisition of collaboration skills and improves the quality of further interaction. Therefore, this study investigates how an external collaboration script and a reflection scaffold affect learners’ knowledge about productive collaboration, and how these forms of support affect the quality of interaction in the group during a subsequent collaboration. Notably, these two types of collaboration support leverage different learning mechanisms to foster collaboration. By comparing them to a control condition that did not receive support, we aim to gain insights into the acquisition of knowledge about collaboration and into interaction quality.

Theoretical background

Acquiring skills for effective collaboration

Several attempts to define the skills that are necessary for effective collaboration can be found in research studies (Rummel et al., 2009), in domain-specific models such as the framework to improve interprofessional collaboration in health education and care (FINCA, Witti et al., 2023), and in frameworks for large-scale assessment such as the Programme for International Student Assessment (PISA) in 2015 (Graesser, Foltz, et al., 2018b) or the Assessment and Teaching of 21st Century Skills Framework (ACT21S) (Hesse et al., 2015). Based on seminal research in the learning sciences and in team science, the framework underlying PISA (Graesser, Foltz, et al., 2018b) proposes that collaborative problem solving requires three skills: establishing and maintaining a shared understanding, taking appropriate action to solve the problem (i.e., problem solving actions and communication), and establishing and maintaining group organization. Similarly, the ACT21S framework (Hesse et al., 2015) specifies five problem solving processes (i.e., problem identification, problem representation, planning, executing, monitoring) and two sets of skills, namely social skills (collaboration, i.e., participation, perspective taking, social regulation) and cognitive skills (problem solving, i.e., planning, executing, monitoring, flexibility, learning). Furthermore, research studies in the field of collaborative learning and CSCL have synthesized evidence about beneficial interaction during collaboration, which can be formulated as skills that are necessary for effective collaboration. For example, Saab et al. (2007) derived the Respect, Intelligent collaboration, Deciding together, and Encouraging (RIDE) rules from previous studies. 
The RIDE rules are further detailed in sub-rules: negative individual evaluation and symmetric communication for the rule “respect”; informative responses, asking for understanding, argumentation, and informative activities for the rule “intelligent collaboration”; asking for action, confirmation and acceptance, asking for agreement, and coordinated off-task talk for the rule “deciding together”; and asking open questions, asking critical questions, asking after incomprehension, and positive individual evaluation for the rule “encouraging.” A similar but finer-grained framework of interactions that benefit collaboration was presented by Meier et al. (2007). Their rating schema was developed on the basis of an extensive literature review (e.g., Meier, 2005; Meier et al., 2007) and has been used to assess the quality of interaction in groups. The authors identified five aspects of successful computer-supported interdisciplinary collaborative problem-solving processes: communication, coordination, joint information processing, relationship management, and motivation. These five aspects are further subdivided into nine dimensions of collaboration processes: sustaining mutual understanding, dialog management, information pooling, reaching consensus, task division, time management, technical coordination, reciprocal interaction, and individual task orientation. While the original goal of the rating schema is to assess the quality of the interaction in a group, it can also be used to derive the skills that are necessary to achieve a high level of collaboration quality.
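
To make the structure of the rating schema more concrete, the following Python sketch encodes the five aspects and nine dimensions named above and averages one group's dimension ratings within each aspect. It is an illustration under stated assumptions, not the original instrument: the assignment of dimensions to aspects reflects our reading of Meier et al. (2007), while the rating range of −2 to +2 and the names `RATING_SCHEME` and `aspect_scores` are hypothetical choices made for this example.

```python
from statistics import fmean

# Aspects and dimensions of collaboration quality, grouped as we read
# Meier et al. (2007); check the grouping against the original rating handbook.
RATING_SCHEME = {
    "communication": ["sustaining mutual understanding", "dialog management"],
    "joint information processing": ["information pooling", "reaching consensus"],
    "coordination": ["task division", "time management", "technical coordination"],
    "relationship management": ["reciprocal interaction"],
    "motivation": ["individual task orientation"],
}

# Assumed rating range for this illustration: -2 (very poor) to +2 (very good).
SCALE_MIN, SCALE_MAX = -2, 2


def aspect_scores(ratings):
    """Average one group's dimension ratings within each aspect.

    `ratings` maps each of the nine dimension names to an integer rating.
    """
    dimensions = [d for dims in RATING_SCHEME.values() for d in dims]
    missing = [d for d in dimensions if d not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    for dim in dimensions:
        if not SCALE_MIN <= ratings[dim] <= SCALE_MAX:
            raise ValueError(f"rating for {dim!r} is outside the scale")
    return {aspect: fmean(ratings[d] for d in dims)
            for aspect, dims in RATING_SCHEME.items()}


if __name__ == "__main__":
    example = {
        "sustaining mutual understanding": 1, "dialog management": 0,
        "information pooling": 2, "reaching consensus": 1,
        "task division": -1, "time management": 0, "technical coordination": 1,
        "reciprocal interaction": 2, "individual task orientation": 1,
    }
    print(aspect_scores(example))
```

Averaging within aspects is only one illustrative way of aggregating the ratings; the nine dimension-level ratings can equally be analyzed directly.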

All these frameworks show great overlap. For example, they highlight the importance of establishing and maintaining mutual understanding in the group (i.e., common ground, Baker et al., 1999; Clark & Brennan, 1991), the use of unshared information (i.e., information pooling, Deiglmayr & Spada, 2011; Stasser & Titus, 1985), joint decision making (Brodbeck et al., 2007), as well as joint monitoring and regulation (Järvelä et al., 2018; Sobocinski et al., 2022). For the present study we adopt the perspective of the rating schema proposed by Meier et al. (2007) as our model of productive collaboration and collaboration skills because it encompasses the skills described by Saab et al. (2007), PISA 2015, and ACT21S and also describes how these skills can be observed and rated on different levels of quality. In comparison to the framework by Witti et al. (2023), the rating schema is domain agnostic and can be adjusted to the specific collaboration tasks (e.g., Kahrimanis et al., 2012; Martinez-Maldonado et al., 2013; Rummel et al., 2011; Schneider & Pea, 2017).

Scripting collaboration

According to the script theory of guidance (Fischer et al., 2013), knowledge about collaboration is encoded in internal collaboration scripts. Analogously to cognitive scripts for everyday situations (e.g., the “restaurant script”, Abelson, 1981), internal collaboration scripts encompass knowledge and expectations regarding the structure of the situation, phases, roles, as well as the actions necessary to accomplish the goal of the current collaboration (Kollar et al., 2006). If learners lack effective internal scripts, one way to promote productive engagement in collaborative learning settings is to provide external collaboration scripts (Fischer et al., 2013). External collaboration scripts draw on Vygotsky’s notion of a zone of proximal development and on research on cognitive scripts and instructional scaffolding (e.g., Pea, 2004; Tabak, 2018) to guide the interaction in groups that might otherwise engage in less beneficial interaction. Specifically, external collaboration scripts are textual or graphical representations of beneficial collaborative practice that aim at scaffolding interaction by facilitating beneficial interactions or by inhibiting undesired interaction patterns (Fischer et al., 2013; Vogel et al., 2021). To this end, external collaboration scripts specify sequences of actions or roles that facilitate effective socio-cognitive processes (Vogel et al., 2017). Thus, external collaboration scripts include information on processes that are beneficial for learning during collaboration and problem solving. When a group is instructed to follow an external collaboration script, group members are presumed to follow the script and engage in the suggested interactions, such as argumentation, providing peer feedback, or engaging in joint regulation (Kollar et al., 2018). In this way, the external collaboration script scaffolds beneficial interaction patterns.
Through repeated collaboration practice that is guided by an external collaboration script, learners build up the components of an effective internal collaboration script (Kollar et al., 2018). It is worth noting that the internal collaboration script that learners develop is not a direct replication of the external collaboration script. Instead, the external collaboration script affords interaction patterns, which then either induce a functional configuration of the internal collaboration script or help learners modify their existing internal collaboration script in accordance with the behavior presented in the external collaboration script (Fischer et al., 2013).

To benefit from an external collaboration script, the learners in a group need to actively process the script (e.g., action sequences) and enact the scripted actions. Indeed, a wealth of research has shown that groups can leverage external collaboration scripts and engage in the scripted patterns of interaction, which affects the quality of the interaction in the group and supports learners in internalizing the external collaboration script (e.g., Kollar et al., 2007; Tsovaltzi et al., 2014; cf. Rummel et al., 2009 for a different result). Meta-analyses on the effectiveness of external collaboration scripts have found medium (Radkowitsch et al., 2020) to large positive effects (Vogel et al., 2017) on collaboration skills in general, and medium to large effects on specific skills such as negotiation, information sharing, coordination, or a combination of different collaboration skills. Considering these results, external collaboration scripts can be seen as an effective means of fostering productive interaction. Their effectiveness for the acquisition of a broader set of collaboration skills, however, deserves further attention.

Despite, or because of, their pervasive use, there is an ongoing debate regarding the degree to which external collaboration scripts may be overly coercive and limit opportunities for self-expression. This raises questions about the compatibility of external collaboration scripts with a fundamental concept of collaborative learning, namely providing learners with autonomy over their learning (see Provocation 2 “prioritize learner agency over collaborative scripting” in Wise & Schwarz, 2017). One of the main concerns regarding external collaboration scripts is their potential negative impact on learners' motivation. However, a recent meta-analysis by Radkowitsch et al. (2020) found no evidence for the claim that learners are less motivated when collaborating with an external collaboration script. Another persistent challenge is the alignment between learners' internal collaboration scripts and the external collaboration script. If learners have well-developed internal collaboration scripts, a detailed external collaboration script may cause process losses that negatively affect their performance (overscripting, Dillenbourg, 2002; expertise-reversal effect, Kalyuga, 2007). Conversely, a vague external collaboration script may not offer enough guidance, resulting in suboptimal interaction among group members, especially if they have insufficient prior knowledge about collaboration (Dillenbourg, 2002; Vogel et al., 2017). While CSCL research has accumulated ample evidence on the effectiveness of external collaboration scripts, the ‘conciliator’ in Wise and Schwarz’s (2017) article calls for studies that explore less directive means of support, which transfer the responsibility for regulation to the group and convey knowledge about collaboration without coercing learners during interaction.
With the present study, we seek to address this desideratum by investigating the effects of an autonomy-supportive alternative (Reeve & Cheon, 2021) to external collaboration scripts for fostering collaboration skills.

Guiding collaborative reflection

Providing learners with autonomy during collaboration involves allowing space for regulation. Effective regulation during learning encompasses a preparation, a monitoring, and a reflection phase (Zimmerman, 2000). In the reflection phase, learners review their learning process and evaluate which strategies were advantageous and which need modification. In their seminal work, Boud et al. (1985) posit that reflection targets past experience after expectations were not met, for instance after a strategy led to an undesired or unexpected outcome. Regarding the regulation of collaborative processes, reflection scaffolds can help make groups aware of potential discrepancies or impasses in their interactions and stimulate the group members to adapt their subsequent interaction (Heitzmann et al., 2023). Reflection is therefore a metacognitive process to make sense of past experiences. Team science builds on this notion of reflection and conceptualizes collaborative reflection as “cognitive and affective interactions between two or more individuals who explore their experiences in order to reach new intersubjective understandings and appreciations” (Yukawa, 2006, p. 206). Gabelica et al. (2014) describe the same concept as “team reflexivity,” which involves gathering feedback on collaboration, evaluating performance, discussing group performance or results, seeking alternatives, and making decisions that entail the implementation of new strategies. Renner et al. (2016) argued that other persons not only serve as catalysts for reflection but also promote reflection on levels that one individual alone could not achieve. Consequently, when several individuals reflect collaboratively, they share knowledge and strategies that may not have been available to any individual alone.

Drawing on Hattie and Timperley (2007) and Korthagen and Vasalos (2005), we propose that reflective groups (Gabelica et al., 2014) utilize feedback about their collaboration to engage in collaborative reflection. During collaborative reflection, we expect that groups gather information on their past interaction (i.e., feed-up), discuss their performance against the background of a desired goal state (i.e., feed-back), diagnose the need for regulation, and, if necessary, derive plans on how to adapt the interaction to reach the desired goals (i.e., feed-forward, Hattie & Timperley, 2007). We conjecture that collaborative reflection interrupts automated behavior, which makes deliberate information processing more likely (see Mamede & Schmidt, 2017). As a result, groups can engage in an interactive process of knowledge sharing (Weinberger et al., 2007) and co-construction (e.g., Chi & Wylie, 2014; Weinberger et al., 2007), through which the group members can create and internalize new knowledge about effective collaboration. This can include knowledge about desired goal states, conditional strategy knowledge, or knowledge about effective strategies and how they are performed. When groups enact the plans that they derived from reflection (i.e., feed-forward), they can improve the quality of their interaction, provided that the plans target dimensions of the collaboration that required regulation and that the group planned effective behavior.

While the learning sciences and team science have provided insights into how groups reflect collaboratively on their collaboration processes and future actions, how they adapt their collaboration, and how this affects group performance, less attention has been paid to how collaborative reflection affects the acquisition of collaboration skills. The meta-analysis by Guo (2021) showed that most studies focused on leveraging reflection in individual learning settings to promote the acquisition of domain-specific knowledge and self-regulation (e.g., strategies, reflective thinking). Overall, reflection interventions had a medium positive effect on learning outcomes, and the effect of collaborative reflection on learning was larger than the effect of individual reflection (Guo, 2021). Only a few studies have examined the effects of reflection on collaboration. With respect to performance, Gabelica et al. (2014) found that reflection during the task (i.e., reflection-in-action, Schön, 1987) does not affect performance in a subsequent task, whereas stopping to reflect on performance after completing a task benefits future performance (i.e., reflection-on-action, Schön, 1987). The importance of reflection during collaborative learning is further stressed by the results of Wang et al. (2017), who found that groups showed more reflective behavior when prompted to do so and that those groups also showed more regulation during the subsequent, unguided transfer problem.

Several approaches to stimulating reflection have been explored, such as journals or prompts (for overviews see Fessl et al., 2017, and Guo, 2021). Another autonomy-supportive approach that provides groups with opportunities for collaborative reflection is the use of group awareness tools (GATs; Bodemer et al., 2018; Chen et al., 2024; Jeong & Hmelo-Silver, 2016; Strauß & Rummel, 2023). GATs collect data about the group and visualize this information for the group (Janssen & Bodemer, 2013). One central function of GATs is to provide feedback (Jermann & Dillenbourg, 2008), which serves as a basis for collaborative reflection and facilitates monitoring and regulation of the interaction in the group (Strauß & Rummel, 2023). During such collaborative reflection, group members intentionally review their past collaboration process and assess which strategies were beneficial and which led to impasses and therefore require adaptation (Gabelica et al., 2014; Heitzmann et al., 2023; Mamede & Schmidt, 2017). As previous studies suggest, however, collaborative reflection may not always occur spontaneously. Thus, groups may benefit from a dedicated reflection phase (Dehler et al., 2009; Strauß & Rummel, 2023).

Similar to external collaboration scripts, GATs offer information about effective collaboration. For instance, the aspects of collaboration that are visualized in a GAT represent potential goals of regulation. Through visualization, these aspects become more salient for the group and draw learners’ attention (Bachour et al., 2010; Carless & Boud, 2018; Pea, 2004). We propose that increasing the salience of specific aspects of the interaction, including a standard that should be achieved, triggers accommodation and assimilation processes that lead to the internalization of the visualized aspects as new internal script components. The information provided by the GAT also serves as feedback for the group (Jermann & Dillenbourg, 2008) and promotes collaborative reflection, in which group members review and deliberately process information about their past interaction and results, compare it to desired goals, and share or co-construct strategies for how the group can close the gap to these goals in future collaborations.
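
The feedback function described above can be illustrated with a small Python sketch of a hypothetical text-based GAT. It assumes that each group member has rated the group's collaboration on a few dimensions using a 1–5 scale; the tool shows the group mean per dimension as a bar next to a marker for the desired standard and lists the dimensions that fall short of that standard as candidate topics for collaborative reflection. The dimensions, the rating scale, the standard of 3, and all function names are assumptions made for illustration; they do not describe a specific published tool.

```python
from statistics import fmean

SCALE_MAX = 5  # assumed rating scale: 1 (poor) to 5 (excellent)


def group_means(member_ratings):
    """Average the members' ratings per dimension.

    `member_ratings` maps a member name to {dimension: rating}; all members
    are assumed to rate the same dimensions.
    """
    dimensions = next(iter(member_ratings.values())).keys()
    return {d: fmean(r[d] for r in member_ratings.values()) for d in dimensions}


def render_gat(means, standard):
    """Return one text bar per dimension; '|' marks the (whole-number) standard."""
    width = max(len(d) for d in means)
    lines = []
    for dim, score in means.items():
        # Count only fully reached cells (floor), so the filled part of the bar
        # crosses the marker only if the standard is actually met.
        filled = int(score)
        bar = ["#"] * filled + ["."] * (SCALE_MAX - filled)
        bar.insert(int(standard), "|")
        lines.append(f"{dim:<{width}} {''.join(bar)} {score:.1f}")
    return lines


def below_standard(means, standard):
    """Dimensions whose group mean falls short of the standard."""
    return sorted(d for d, score in means.items() if score < standard)


if __name__ == "__main__":
    ratings = {
        "A": {"information pooling": 2, "reaching consensus": 4, "time management": 3},
        "B": {"information pooling": 3, "reaching consensus": 5, "time management": 4},
        "C": {"information pooling": 2, "reaching consensus": 4, "time management": 4},
    }
    means = group_means(ratings)
    print("\n".join(render_gat(means, standard=3)))
    print("Discuss:", below_standard(means, standard=3))
```

In this example, only information pooling (mean 2.3) stays left of the marker, so it is the dimension the group would be prompted to discuss; the sketch deliberately leaves the diagnosis and planning to the group rather than prescribing behavior.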

Neither the use of GATs to stimulate reflection nor the ways in which GATs afford the acquisition of collaboration skills have been widely explored (see Chen et al., 2024; Janssen & Bodemer, 2013). One of the few studies that have leveraged a GAT to promote group reflection was conducted by Phielix et al. (2011), who visualized students’ self- and peer-assessments of their collaboration and asked students to co-reflect. They found that co-reflection stimulated students to develop plans regarding aspects of the collaboration that were related to the dimensions visualized in the GAT. In addition, research on work teams by Konradt et al. (2015) found that a guided reflection activity was associated with more reflection behavior within teams. This increase in reflection was associated with adaptation of team behavior and, eventually, improved team performance, highlighting the benefits of instructional support that guides collaborative reflection.

Against this background, we propose that a reflection scaffold that uses a GAT as a focal point for reflection represents an alternative approach to fostering the acquisition of collaboration skills and productive interaction. During collaborative reflection, a GAT serves as a shared object that highlights dimensions of the interaction that are worth monitoring and regulating, as well as standards for regulation (i.e., desired goal states). In this context, we assume that guiding collaborative reflection is crucial for acquiring knowledge about effective collaboration, which subsequently serves as the basis for adapting the interaction in the group. In comparison with external collaboration scripts, a reflection scaffold leaves learners with more autonomy over their interaction, as discussed by Wise and Schwarz (2017) and in research on autonomy-supportive teaching (e.g., Reeve & Cheon, 2021). Since previous studies on reflection concentrated on how groups adapt their interaction but did not sufficiently examine whether learners develop an understanding of collaboration, we investigated the impact of a reflection scaffold on learners’ collaboration skills.

Summary: Comparison between external collaboration scripts and collaborative reflection

External collaboration scripts and reflection scaffolds are different approaches to supporting interaction and promoting the acquisition of knowledge about effective collaboration. To highlight the differences between these two approaches, we compare external collaboration scripts and reflection scaffolds using the taxonomy of CSCL support dimensions proposed by Rummel (2018). The framework proposes twelve dimensions that can be used to describe the design of CSCL support. Our comparison focuses on the most salient of these dimensions, namely goal, timing, target, and directivity. The remaining dimensions of the framework depend on the particular implementation of the support; we will return to these in the discussion of our findings.

At the core of our study is the goal to help learners acquire collaboration skills and engage in productive interaction. To achieve this goal, external collaboration scripts and reflection scaffolds take different approaches, which are reflected in their design. While external collaboration scripts are typically presented during collaboration (timing) and directly target the interaction during the collaborative task (e.g., argumentation, Kollar et al., 2007; or sharing and clarifying information, Rummel et al., 2009), reflection scaffolds may be presented before episodes of working on a collaborative task (e.g., Gabelica et al., 2014; Phielix et al., 2011) and target processes of collaborative reflection that are expected to affect the interaction during subsequent collaboration. Specifically, an external collaboration script targets the social interaction during a collaborative task by providing a group with affordances that help the group members engage in productive interaction, whereas a reflection scaffold targets the metacognitive process of collaborative reflection (target). During reflection, groups review their past collaboration based on criteria that describe effective interaction and subsequently derive plans regarding whether and how to improve their subsequent interaction. Finally, external collaboration scripts usually provide groups with general or specific guidance regarding the interaction (directivity), for example through prompts (e.g., Kollar et al., 2007; Mende et al., 2017; Rummel et al., 2009). Reflection scaffolds may also provide direct guidance regarding the collaborative reflection (e.g., prompts, Phielix et al., 2011); however, this guidance does not directly address the goal of the reflection scaffold, that is, fostering collaboration skills and enabling groups to engage in productive interaction.

Research questions and hypotheses

External collaboration scripts and reflection scaffolds are different approaches to supporting productive interaction and promoting the acquisition of collaboration skills. While external collaboration scripts provide directive, socio-cognitive support during collaboration through prompts or by proposing different phases, reflection scaffolds represent group-level metacognitive support that fosters groups’ monitoring of and reflection on their past collaboration. Given the call for exploring different ways of supporting collaboration and fostering the acquisition of collaboration skills (e.g., Wise & Schwarz, 2017), our study addresses the following research questions:

- RQ (1) To what extent do an external collaboration script and a reflection scaffold foster learners’ knowledge about effective collaboration in comparison with no collaboration support?
- RQ (2) To what extent do an external collaboration script and a reflection scaffold foster interaction quality in comparison with no collaboration support?

Against the background of the meta-analytical evidence on external scripts (Vogel et al., 2017; Radkowitsch et al., 2020), we expect an external collaboration script to be more effective than no support in fostering productive interaction and knowledge about productive collaboration. For collaborative reflection, this question has not yet been investigated. Based on theoretical accounts, we assume that collaborative reflection represents a co-constructive process (see Chi & Wylie, 2014; Weinberger et al., 2007) that can lead to the acquisition of new knowledge, that is, knowledge about effective collaboration. Previous studies on the effects of individual reflection on domain-specific learning (see the meta-analysis by Guo, 2021) and studies on the role of reflection during collaboration (e.g., Eshuis et al., 2019; Gabelica et al., 2014; Phielix et al., 2011) have yielded promising results. Thus, we hypothesize that both an external collaboration script and a reflection scaffold increase knowledge about effective collaboration in comparison with no collaboration support (H1a). Regarding differences between an external collaboration script and a reflection scaffold, we did not formulate a directed hypothesis (H1b). With respect to interaction quality, some evidence exists that an external script can increase the quality of the collaboration (Rummel et al., 2009). For collaborative reflection, this question has not yet been investigated directly. Again, studies from different fields (e.g., Gabelica et al., 2014; Konradt et al., 2015) yielded promising results indicating that (collaborative) reflection improved the performance of individuals and groups. Thus, we hypothesize that the external collaboration script and the reflection scaffold lead to better interaction quality than no collaboration support (H2a). Again, we did not formulate a directed hypothesis regarding differences between the effects of the external collaboration script and the reflection scaffold (H2b).

Methods

To answer our research questions, we conducted a laboratory experiment with a one-factorial design in which participants collaborated in small groups to solve collaborative tasks. In this experiment, we investigated the effects of an external collaboration script and a reflection scaffold on participants’ knowledge about effective collaboration and on their groups’ quality of interaction during collaboration. To this end, the effects of these types of support were compared with a control condition. The study received approval from the university’s ethics committee, and participants gave informed consent. The supplementary material for this study is available online in an Open Science Framework (OSF) project: https://osf.io/katdz/.

Participants

Participants were recruited via mailing lists and university courses. Participants volunteered and received monetary compensation of 35€ [~37 US dollars (USD)] for their participation. Before conducting our analyses, we performed an implementation check to exclude groups that did not interact with the collaboration support as intended (see Results). The results of the implementation check did not warrant excluding participants or groups. Our final sample consisted of 150 German university students (age: M = 23.61 years, SD = 4.69, range: 18–45 years) who collaborated in N = 50 groups of three participants. Participants were assigned to groups of three based on their availability. Each group was then randomly assigned to one of three experimental conditions: control condition (n = 45 participants, N = 15 groups), collaboration script condition (n = 54 participants, N = 18 groups), and reflection condition (n = 51 participants, N = 17 groups). Further, each group member was randomly assigned a role that the participant had to fulfill during the collaboration. Each role specified a content-based goal and perspective for the collaborative tasks as well as role-specific information about the problem posed in the tasks (see Materials).
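
The two-level random assignment described above (groups to conditions, then group members to roles) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' procedure: it uses simple, unrestricted randomization at the group level, which permits unequal cell sizes, whereas a blocked scheme would balance them. The function name `assign_groups`, the `seed` parameter, and the participant IDs are hypothetical.

```python
import random
from typing import Optional

CONDITIONS = ("control", "collaboration script", "reflection")
ROLES = ("architect", "daycare center manager", "fire and health safety officer")


def assign_groups(groups, seed: Optional[int] = None):
    """Randomly assign each triad to a condition and each member to a role.

    `groups` is a list of three-person groups formed beforehand (e.g., by
    availability). Returns one record per group.
    """
    rng = random.Random(seed)  # seeded generator for a reproducible allocation
    plan = []
    for group_id, members in enumerate(groups, start=1):
        if len(members) != len(ROLES):
            raise ValueError(f"group {group_id} does not have exactly three members")
        condition = rng.choice(CONDITIONS)       # group-level randomization
        roles = rng.sample(ROLES, k=len(ROLES))  # random permutation of the roles
        plan.append({"group": group_id,
                     "condition": condition,
                     "roles": dict(zip(members, roles))})
    return plan


if __name__ == "__main__":
    triads = [["P01", "P02", "P03"], ["P04", "P05", "P06"]]
    for record in assign_groups(triads, seed=42):
        print(record)
```

Randomizing at the group level rather than the individual level reflects the fact that the support is delivered to whole groups; as a consequence, learners are nested in groups, which is why group membership has to be taken into account in the analyses.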

Procedure

The experiment comprised a pre-test, a short video about general strategies for effective collaboration, the first collaboration phase (learning phase) with or without collaboration support depending on the experimental condition, a mid-test followed by a short break, and the second collaboration phase (testing phase) (see Fig. 1). The experiment lasted approximately 2 h.

On the day of the experiment, the members of each group met via the video-conferencing service Zoom (video, audio, shared screen) and individually completed the pre-test in an online questionnaire. This questionnaire collected demographic information (gender, age, field of study, German language skills) and assessed participants’ knowledge of effective collaboration. Subsequently, the participants viewed a brief animated video (approx. 3 min) that described general advice for successful collaboration. Groups then worked on two different information-pooling problems and received collaboration support according to their experimental condition. We refer to these problems as the “learning phase” and the “testing phase”. At the beginning of each phase, the participants were instructed to read the materials for the collaborative task, which included information about the task and a role description for each participant. Each of the three group members took on one of three roles: architect, daycare center manager, or fire and health safety officer. The roles differed in their requirements for solving the problem and in the information they held about the situation. The experimenter then announced the start of the collaboration and the time limit of 30 min for the collaborative task. The group was instructed to use the annotation feature of the video-conferencing service to provide each other with graphical cues on the shared screen and to use a shared text editor (Etherpad) to take notes during the collaboration and document their solution. During the collaboration phases, the experimenter shared their screen showing a 2D floor plan of a construction project, which served as the basis for solving the joint problem (see Fig. 2). The experimenter then withdrew from the virtual room by switching off their audio and video broadcast, and the group started to collaborate. The collaboration phases were video recorded.

Groups in the control condition and the reflection condition did not receive any collaboration support during the first collaboration phase (i.e., learning phase), while groups in the script condition received an external collaboration script that specified a sequence of phases and the actions that groups should take during each phase (see Materials). Groups in the control condition and the reflection condition had 30 min to finish the task, whereas groups in the script condition received one additional minute in each phase to read the prompts in the script; thus, groups in this condition worked on the problem for 34 min. After a group signaled that they had finished the first problem, or after the time limit had elapsed (30 min in the control and reflection conditions, 34 min in the script condition), groups either immediately completed the mid-test (control condition, script condition) or received the reflection scaffold (reflection condition) and collaboratively reflected on their performance for up to 12 min.

Following the learning or the reflection phase, respectively, participants in all conditions completed a mid-test to again evaluate their knowledge of collaborative processes. After the mid-test, participants took a 10 min break before continuing the experiment. During the second problem, each member retained their role but received additional information. The groups then collaborated for 30 min without support to solve the second problem during the testing phase. Following the testing phase, the participants were thanked for their participation and given the opportunity to ask questions about the experiment. The experimenter’s manual is available in the supplementary material.

Materials

The materials are available in the supplementary material.

Introduction video

Before the video started, participants were asked to follow its instructions in their subsequent collaboration. The video described typical pitfalls of collaboration and briefly characterized general strategies (i.e., the process dimensions from Meier et al., 2007) to mitigate these challenges. These strategies were consistent with those implemented in the external collaboration script and in the GAT that provided the information for the reflection scaffold.

Collaboration tasks

The collaboration tasks consisted of civil engineering problems that required finding ways to integrate new demands into a 2D model (floor plan) of a kindergarten under construction (see Fig. 2). In one task, the group had to find a position for a mezzanine play structure for the children to climb and play on. In the other task, the group had to find a way to rearrange walls to create a room suited for larger groups and festivities. The order of the two tasks was randomized for each group. Both collaboration tasks presented groups with an information-pooling problem with a hidden profile (e.g., Stasser & Titus, 1985); that is, groups could only derive an adequate solution by sharing previously unshared information held by individual group members. Thus, the tasks imposed individual accountability and social interdependence with the aim of sparking interaction between the group members (Johnson & Johnson, 2009). The hidden profile was implemented by distributing roles among the group members. Each group member was assigned the role of one of three experts (architect, daycare manager, or fire and health protection officer), which was introduced to them in a two-page booklet (i.e., role material). Each booklet included the description of the problems and two types of information about the construction projects. First, it contained shared information about the problem, that is, pieces of information that every group member held. For example, the floor plan that each group member received included the area of each room in square meters. Second, the booklets included unshared information, that is, pieces of information that represented the specialized knowledge of the individual experts. For example, the architect held information about which walls were load-bearing. In both problems, the proposals, the requirements and arguments, and the unshared information were evenly distributed among the three roles.
The description of each role contained one solution proposal, four requirements for the joint solution, and one piece of unshared information relevant for finding a solution. The booklets, especially the role descriptions, neither suggested activities or interaction patterns nor made the group members aware that the other group members held unshared knowledge about the problem. Thus, the roles in our study distributed resources instead of activities (Kobbe et al., 2007) and can therefore be characterized as roles as a pattern directed at a product (i.e., the solution to the task) (Strijbos & De Laat, 2010). To arrive at a joint solution for the collaborative tasks, the group members had to pool their unshared information (i.e., hidden profile). Neither collaborative task had a single best solution, so groups needed to develop alternative solutions and decide on one. To keep the difficulty of the two tasks comparable, the roles (architect, daycare manager, fire and health protection officer) held an identical number of pieces of shared and unshared information, as well as of arguments for and against their respective proposed solutions. The interactivity of the task elements (i.e., individual pieces of information and arguments) was identical in both collaborative tasks.

Independent variable: Collaboration support

Each small group was randomly assigned to one of three experimental conditions in which the groups received (a) no collaboration support, (b) an external collaboration script that was available during the collaboration and that structured the problem-solving process, prompted students to use the collaboration strategies, and provided explicit descriptions of collaboration strategies, or (c) no support during the first collaboration (learning phase) but a reflection scaffold after it. The external collaboration script and the reflection scaffold were designed on the basis of the nine dimensions of successful collaborative problem-solving processes proposed by Meier et al. (2007). While the script provided an on-line scaffold during the collaboration process (in the form of action prompts), the reflection scaffold was provided after the collaboration and targeted the groups’ past interaction (in the form of questions) with the aim of developing plans for their future collaboration during the second collaboration task. A side-by-side comparison of the prompts used in the external collaboration script, the reflection questions visualized in the GAT, and how these map to the process dimensions by Meier et al. (2007) is available in Appendix B.

External collaboration script

The external collaboration script scaffolded the collaboration by specifying a sequence of phases (scene level) and, within each phase, provided the group with prompts on beneficial actions for that phase (scriptlet level; Fischer et al., 2013). The external collaboration script was presented during the collaboration in the margin area of the shared screen in the video conference. The right panel of Fig. 2 shows the external collaboration script for the information pooling phase. The script consisted of four consecutive phases, each introduced with a short description of its general purpose and followed by explicit instructions for interaction. The four phases were coordination (5 min), information pooling (7 min), discussion (10 min), and decision making (7 min). For each phase, groups received one additional minute for reading the prompts. Each phase was highlighted in a different color and contained a progress bar at the top of the page that showed how much time was left in the current phase. Phase changes were indicated by an audio signal.
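The timing of the scripted phases, including the progress bar and the phase changes, can be illustrated with a small schedule. This is a minimal sketch, not the software used in the study: the names `SCRIPT_PHASES`, `build_schedule`, and `current_phase` are hypothetical, and we assume that each phase's extra reading minute is simply added to that phase's duration.

```python
from typing import List, Tuple

# Phase durations in minutes as listed for the external collaboration script.
SCRIPT_PHASES: List[Tuple[str, int]] = [
    ("coordination", 5),
    ("information pooling", 7),
    ("discussion", 10),
    ("decision making", 7),
]


def build_schedule(
    phases: List[Tuple[str, int]], reading_min: int = 1
) -> List[Tuple[str, int, int]]:
    """Return (phase, start, end) in minutes.

    Assumption: the reading minute is added to each phase's working time.
    """
    schedule = []
    start = 0
    for name, minutes in phases:
        end = start + reading_min + minutes
        schedule.append((name, start, end))
        start = end
    return schedule


def current_phase(schedule: List[Tuple[str, int, int]], t: float) -> Tuple[str, float]:
    """Return the active phase at minute t and the fraction of it that has elapsed,
    i.e., the value a progress bar would display."""
    for name, start, end in schedule:
        if start <= t < end:
            return name, (t - start) / (end - start)
    raise ValueError("t lies outside the scripted collaboration")
```

For example, `current_phase(build_schedule(SCRIPT_PHASES), 3)` returns `("coordination", 0.5)`, the state in which the progress bar is half full; the audio signal would correspond to crossing a phase's end time.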

Reflection scaffold

The reflection scaffold was modeled after the activity described by Phielix et al. (2011) and consisted of three components: feed-up, feedback, and feed-forward. In the first phase of the reflection scaffold, the members of the group individually and anonymously completed an online questionnaire in which they assessed the quality of their group's collaboration during the learning phase (i.e., the GAT, feed-up). Participants were informed that their individual answers would remain anonymous and that in the next phase they would discuss the resulting average values. In our study, the GAT served to visualize the results of group members’ individual reflection on the interaction quality, which then served as the basis for collaborative reflection.

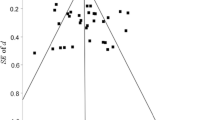

The online questionnaire contained 28 reflection questions that targeted the dimensions of effective collaboration, that is, the phases and actions included in the external collaboration script. For example, for the information pooling dimension, group members evaluated their information pooling strategies with reflection questions such as “I think during the collaboration we exchanged all relevant information with each other” on a seven-point Likert scale (1 = completely disagree, 7 = completely agree). The individual ratings for each dimension of collaboration (coordination, information pooling, discussion, decision making) were then automatically averaged and visualized for the group in spider-plot diagrams (see Fig. 3).
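The aggregation of the feed-up ratings into spider-plot values can be sketched as follows. For illustration, we assume that each member's item ratings are first averaged within a dimension and that these member means are then averaged across the group; the function name `group_profile` is hypothetical, and plotting is omitted.

```python
from statistics import mean
from typing import Dict, List

# ratings[member][dimension] -> that member's 1-7 Likert answers for the dimension's items
Ratings = Dict[str, Dict[str, List[int]]]


def group_profile(ratings: Ratings) -> Dict[str, float]:
    """Average each member's item ratings per dimension, then average across members.

    The result holds one value per dimension, i.e., one axis of the spider plot.
    """
    member_means: Dict[str, List[float]] = {}
    for member_answers in ratings.values():
        for dimension, items in member_answers.items():
            member_means.setdefault(dimension, []).append(mean(items))
    return {dimension: mean(values) for dimension, values in member_means.items()}
```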

These diagrams provided the basis for the reflection scaffold. During the collaborative reflection, group members were instructed to view the spider plot diagrams, reflect on their self-assessment of the first collaboration phase (feedback), and discuss how they would like to improve their collaboration in the second collaboration phase (feed-forward). The groups were asked to use an online shared text editor (Etherpad) to write down what they would like to do differently to improve the interaction in the group. The reflection phase was limited to 12 min.

Measures

We assessed participants’ knowledge about collaboration before and after the first collaboration, using the method of Rummel and Spada (2005). Their measure consisted of two questions that participants answered in an open format. The first item asked participants to describe the phases that a group should perform while solving a joint problem: “Imagine you are asked to find a solution to problems in a construction project together with two collaborative partners. Describe in keywords how you imagine the individual phases of collaboration in solving a problem in a group. How should the collaboration partners proceed step by step in their collaboration?” Afterwards, participants indicated in a second item what they perceive as “good” collaboration: “What should be taken into account in good collaboration and communication in general?”.

To calculate a score for each participant, we scored participants’ replies against our model of productive interaction processes (Meier et al., 2007). Its aspects and dimensions of successful computer-based collaborative problem-solving processes served as the codes for the first draft of the coding schema. In some cases, the dimensions were further differentiated to take all indicators into account. For example, the dimension of “sustaining mutual understanding” was divided into the subcodes “establishing a common understanding of the problem and the goal,” “clarifying ambiguities,” “taking time for questions,” “clarifying questions of understanding,” and “written documentation of the process.” A random sample of n = 9 participants from both measurement times was drawn to check whether the codes described the data conclusively and comprehensively. A coding manual was then created with the final codes and subcodes as well as anchor examples (see Appendix C). All answers to the knowledge test were first broken down into units based on numbering, bullet points, paragraphs, or punctuation. Each unit was then assigned to one code. One point was awarded for every aspect in a participant’s answer that matched one of the codes or subcodes. A second coder independently analyzed 20% of the answers using the coding manual (Cohen’s κ = 0.74). The points were then added to form a total score.
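The scoring and reliability steps can be sketched in a few lines. This sketch assumes that each answer unit carries at most one code (`None` when no code matched) and that every coded unit earns one point; `knowledge_score` and `cohens_kappa` are illustrative names, and the kappa shown is the standard unweighted form for two coders.

```python
from collections import Counter
from typing import Optional, Sequence


def knowledge_score(unit_codes: Sequence[Optional[str]]) -> int:
    """One point per answer unit that was assigned a code from the coding manual.

    Units that matched no code are represented as None and earn no point.
    """
    return sum(1 for code in unit_codes if code is not None)


def cohens_kappa(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Unweighted Cohen's kappa for two coders who labelled the same units."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same, non-empty set of units")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: product of both coders' marginal proportions per category.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)
```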

We measured the interaction quality of each group by coding and rating the interaction during collaboration in the learning phase and the testing phase based on the rating schema by Meier et al. (2007) (see Appendix D). The rating process consisted of two stages. In the first stage, we identified instances during the collaboration that fit one of the nine dimensions of the original rating schema and assigned at least one of the nine codes to each utterance in the video recording of each group. Utterances were defined as completed speech acts, which were identifiable in the MAXQDA software by the deflections of the audio waveform. Three independent coders coded 20% of the data and reached an agreement of 74.51%. Disagreements were resolved by discussion to obtain the final dataset.
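The paragraph does not state how agreement among three coders was aggregated. One common option, shown here purely as an assumption, is percent agreement averaged over all coder pairs, with one code per utterance; `mean_pairwise_agreement` is a hypothetical helper.

```python
from itertools import combinations
from typing import Sequence


def mean_pairwise_agreement(*coders: Sequence[str]) -> float:
    """Percent agreement averaged over all pairs of coders (assumed aggregation)."""
    pairs = list(combinations(coders, 2))
    if not pairs:
        raise ValueError("need at least two coders")
    rates = []
    for a, b in pairs:
        if len(a) != len(b) or not a:
            raise ValueError("coders must label the same, non-empty set of utterances")
        rates.append(sum(x == y for x, y in zip(a, b)) / len(a))
    return 100 * sum(rates) / len(rates)
```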

Video sections could be labeled with more than one code under certain circumstances, for example, in the case of overlapping speech. Also, the codes “information exchange” and “decision making” could both be assigned to the same utterance. This double coding was applied when speakers neither merely shared information nor merely argued throughout a contribution but introduced new arguments based on the information they shared. In the second stage, we rated the quality of the interaction on a three-point scale (0, 1, 2). To this end, we divided the video recordings (containing the codes assigned in the first stage) into 3-min segments and rated the quality of every coded interaction in each segment. For example, all utterances within a 3-min segment that had been coded as “information pooling” in the first stage were rated on the three-point scale using defined criteria. On a subset of 20% of the data, the ratings reached satisfactory interrater agreement, with an average Cohen’s weighted κ = 0.87 (sustaining mutual understanding: κ = 0.89, dialog management: κ = 0.90, information pooling: κ = 0.81, reaching consensus: κ = 0.80, individual task orientation: κ = 0.75, reciprocal interaction: κ = 0.87, time management: κ = 1.00, technical coordination: κ = 0.91, task division: κ = 0.89). To calculate the quality of the groups’ interaction on each quality dimension, we averaged the quality scores within each dimension across all segments. To obtain a value for the overall quality of the interaction, we calculated a grand mean over the dimensions of interaction quality, separately for the learning phase and the testing phase.
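The weighted kappa and the two-step aggregation of quality ratings can be illustrated as below. The text does not specify the weighting scheme, so this sketch assumes linear disagreement weights (quadratic weights are a common alternative), and it skips dimensions without any coded segment when forming the grand mean; all names are hypothetical.

```python
from collections import Counter
from statistics import mean
from typing import Dict, List, Sequence, Tuple

SCALE: Tuple[int, ...] = (0, 1, 2)  # three-point quality rating


def weighted_kappa(r1: Sequence[int], r2: Sequence[int], categories=SCALE) -> float:
    """Cohen's weighted kappa with linear disagreement weights |i - j| / (k - 1)."""
    n = len(r1)
    if n == 0 or n != len(r2):
        raise ValueError("raters must score the same, non-empty set of items")
    k = len(categories)
    index = {c: i for i, c in enumerate(categories)}

    def weight(a: int, b: int) -> float:
        return abs(index[a] - index[b]) / (k - 1)

    observed = sum(weight(a, b) for a, b in zip(r1, r2)) / n
    m1, m2 = Counter(r1), Counter(r2)
    # Expected weighted disagreement if both raters' marginals were independent.
    expected = sum(
        weight(a, b) * (m1[a] / n) * (m2[b] / n) for a in categories for b in categories
    )
    if expected == 0:
        raise ValueError("weighted kappa is undefined without expected disagreement")
    return 1 - observed / expected


def interaction_quality(segment_ratings: Dict[str, List[int]]) -> Dict[str, float]:
    """Mean quality per dimension across 3-min segments, plus a grand mean ('overall')."""
    per_dimension = {dim: mean(s) for dim, s in segment_ratings.items() if s}
    return {**per_dimension, "overall": mean(list(per_dimension.values()))}
```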

To check the implementation of our external collaboration script, we assessed the script adherence by analyzing the degree to which the groups engaged in actions that were associated with the phases and actions prescribed by the external collaboration script. To this end, we coded the video recordings and identified instances of each process dimension of productive interaction as described above. Afterwards, we matched the actions that occurred in the video with the actions that were prescribed by the external collaboration script. Figure 4 provides an example of the expected distribution of actions during collaboration (top half) and the actions of a group (bottom half).

For instance, for the first phase (coordination phase), the external collaboration script prompted sustaining mutual understanding, information pooling, task division, and time management. If the coding in this phase contained at least one segment of each of these four dimensions and no actions that would be required in later phases, such as reaching consensus, the group received a perfect score of five points in this phase for closely abiding by the script. Any mismatch with the script resulted in a point deduction. Groups could reach a maximum of 20 points (5 points in each of the 4 phases), indicating perfect adherence to the phases of the external collaboration script. We analyzed script adherence for groups in the script condition as well as for groups in the control and reflection conditions. Agreement was determined by calculating Cohen’s weighted kappa on a 30% subset of the data analyzed by a second coder and was high (κ = 0.94).
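The scoring rule is described only through the coordination example, so the following is a hypothetical reconstruction rather than the coding manual's exact procedure: each phase starts at five points, loses one point for every prescribed action that never occurred, and loses one point if any action reserved for a later phase occurred. Under this rule, a group that shows all four coordination actions and no premature ones keeps all five points. The names `phase_score` and `adherence` are illustrative.

```python
from typing import Dict, Set


def phase_score(
    observed: Set[str], prescribed: Set[str], premature: Set[str], max_points: int = 5
) -> int:
    """Hypothetical rule: -1 per prescribed action that never occurred,
    -1 if any action reserved for a later phase occurred; never below 0."""
    deductions = len(prescribed - observed)
    if observed & premature:
        deductions += 1
    return max(0, max_points - deductions)


def adherence(
    observed_by_phase: Dict[str, Set[str]], script: Dict[str, Dict[str, Set[str]]]
) -> int:
    """Sum the phase scores over all scripted phases (20 points for four phases)."""
    return sum(
        phase_score(observed_by_phase.get(phase, set()), spec["prescribed"], spec["premature"])
        for phase, spec in script.items()
    )
```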

To check the implementation of the reflection scaffold, we measured the time that the groups spent reflecting and the number of suggestions for improvement that groups made during the reflection phase. We determined the duration by counting the minutes that had elapsed between the start of the reflection (i.e., after the experimenter turned off the video and audio transmission) and the time the group signaled to the experimenter that they had finished the reflection. The number of suggestions for improvement was counted from the written notes that the groups took during the reflection phase. To this end, we first segmented each group’s notes into meaningful units, as indicated by bullet points, and then counted the number of units. Figure 5 shows an example of a group’s reflection notes containing nine suggestions.
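Counting suggestion units from exported notes could be automated roughly as follows, assuming each suggestion starts on its own line with a bullet (`-`, `*`, `•`) or a number (`1.`, `1)`). The pattern and the name `count_suggestions` are assumptions, not the procedure the coders used.

```python
import re

# Assumed bullet markers: "-", "*", "•", or a number followed by "." or ")".
BULLET = re.compile(r"^\s*(?:[-*\u2022]|\d+[.)])\s+")


def count_suggestions(notes: str) -> int:
    """Count note units, treating each bullet-point line as one suggestion."""
    return sum(1 for line in notes.splitlines() if BULLET.match(line))
```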

We determined the target of the suggestions using the same process used to assess participants’ knowledge about collaboration. Specifically, we assigned each unit in the groups’ reflection notes to one of the nine process dimensions.

Time on task was measured based on the video-recordings for each phase (learning phase, reflection phase). We determined time on task by counting the time that elapsed between the start of collaboration (i.e., after the experimenter turned off the video and audio transmission) and the time the group signaled to the experimenter that they had completed the task. Time on task was measured in hours.
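Converting video timestamps into time on task in hours is simple arithmetic. The sketch assumes timestamps in `HH:MM:SS` format read off the recordings; the function names are hypothetical.

```python
def to_seconds(timestamp: str) -> int:
    """Convert a video timestamp 'HH:MM:SS' to seconds."""
    hours, minutes, seconds = (int(part) for part in timestamp.split(":"))
    return 3600 * hours + 60 * minutes + seconds


def time_on_task_hours(start: str, end: str) -> float:
    """Elapsed time between the start of collaboration and the group's 'finished' signal, in hours."""
    elapsed = to_seconds(end) - to_seconds(start)
    if elapsed < 0:
        raise ValueError("end must not precede start")
    return elapsed / 3600
```

For instance, a group that starts at `00:02:10` and signals completion at `00:31:40` worked for 1,770 s, or about 0.49 h.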

Analyses

To answer RQ 1, we performed multilevel modeling (MLM; also known as hierarchical linear modeling, HLM). We selected this approach to account for the nested structure of our data, that is, participants (level 1) working in small groups (level 2). Neglecting the nested structure would violate the assumption of independent observations and bias the results, in particular by underestimating standard errors (Cress, 2008; Janssen et al., 2013).

For RQ 1, we constructed and compared five multilevel models, which represented different levels of complexity. The first model (model 0) was a so-called “null-model” or intercept-only model that allowed us to determine the intraclass correlation (ICC), that is, the degree to which being a member of a specific small group accounts for variance in participants’ knowledge about collaboration after the learning phase (i.e., mid-test). Afterwards, we compared the experimental conditions. To this end, we fitted random-intercept models that included an interaction term of the experimental condition (control, script, reflection) and measurement time (pre-test, mid-test). This allowed us to analyze the change in knowledge (i.e., increase or decline) over time, in the different experimental conditions.
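The ICC obtained from the null model is the share of between-group variance, ICC = τ00 / (τ00 + σ²), where τ00 is the random-intercept variance and σ² the residual variance. As a dependency-free illustration, the sketch below also implements a swapped-in technique, the one-way ANOVA estimator ICC(1) for equally sized groups such as the triads here, which targets the same quantity; it is not the lme4-based computation used in the study, and the function names are hypothetical.

```python
from statistics import mean
from typing import Sequence


def icc_from_components(tau00: float, sigma2: float) -> float:
    """ICC from variance components of an intercept-only multilevel model."""
    return tau00 / (tau00 + sigma2)


def icc1(groups: Sequence[Sequence[float]]) -> float:
    """ANOVA estimator ICC(1) for equally sized groups.

    ICC(1) = (MSB - MSW) / (MSB + (k - 1) * MSW), with k members per group.
    """
    if len(groups) < 2:
        raise ValueError("need at least two groups")
    k = len(groups[0])
    if k < 2 or any(len(g) != k for g in groups):
        raise ValueError("groups must have equal size >= 2")
    j = len(groups)
    grand = mean(x for g in groups for x in g)
    group_means = [mean(g) for g in groups]
    ssb = k * sum((m - grand) ** 2 for m in group_means)  # between-group sum of squares
    ssw = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)  # within
    msb = ssb / (j - 1)
    msw = ssw / (j * (k - 1))
    return (msb - msw) / (msb + (k - 1) * msw)
```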

First, we calculated two random-intercept models to compare knowledge at mid-test between the experimental conditions. We compared the script and reflection conditions to the control condition (model 1a) and then compared the control and script conditions to the reflection condition (model 1b). In the next step, we extended these models by adding time on task as a covariate, because longer collaboration may provide participants with more opportunities to learn about productive interaction; including it allowed us to further isolate the effect of the collaboration support. As before, we first compared the script and reflection conditions to the control condition (model 2a) and subsequently compared the control and script conditions to the reflection condition (model 2b). To assess which model was the most parsimonious and described our data best, we conducted deviance tests as suggested by Field et al. (2012). Similar MLMs were calculated to analyze the distribution of knowledge elements in our exploratory analysis. The multilevel analyses were conducted in R-Studio (Version: 3.4.1, R Core Team 2024) using the “lme4” (Bates et al., 2015) and “sjPlot” (Lüdecke, 2023) packages. In all frequentist analyses, we used α = 0.05 to determine statistical significance.

For all analyses that used data without a nested structure (i.e., RQ 2 and the explorative analyses), we used Bayesian procedures. To classify the strength of the evidence for (or against) hypotheses, we interpreted the Bayes factor (BF) (see van Doorn et al., 2021 and Kruschke & Liddell, 2018). The BF reflects how well a hypothesis (e.g., H0: no difference exists versus H1: a difference exists) predicts the collected data. For example, BF10 = 3.0 indicates that the collected data are three times more likely under H1 than under H0 (van Doorn et al., 2021). Bayes factors can be expressed either in favor of the null hypothesis (BF01; note the subscript is 0 followed by 1) or in favor of the alternative hypothesis (BF10; note the subscript is 1 followed by 0), where BF01 = 1/BF10. For an intuitive interpretation of the results, we report whichever of the two Bayes factors is larger. Although strict thresholds for interpreting the Bayes factor are not compatible with Bayesian inference (in contrast to inspecting p values to reject a null hypothesis), a rule of thumb suggests the following levels of evidence: BF between 1 and 3, weak evidence; BF between 3 and 10, moderate evidence; and BF greater than 10, strong evidence (see van Doorn et al., 2021 and Kruschke & Liddell, 2018). For our analysis, we used an uninformed prior (uniform distribution). The Bayesian analyses were performed in JASP (Version 0.18.3, JASP Team, 2023).
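The reporting convention above (report whichever of BF10 and BF01 = 1/BF10 is larger, then label it with the rule of thumb) can be expressed as a small helper. The function name is hypothetical, and assigning the exact boundary values 3 and 10 to the lower category is our choice, since the rule of thumb does not specify it.

```python
from typing import Tuple


def report_bayes_factor(bf10: float) -> Tuple[str, float, str]:
    """Return the larger of BF10 and BF01 = 1 / BF10 with a rule-of-thumb label."""
    if bf10 <= 0:
        raise ValueError("a Bayes factor must be positive")
    label, value = ("BF10", bf10) if bf10 >= 1 else ("BF01", 1 / bf10)
    if value > 10:
        strength = "strong"
    elif value > 3:
        strength = "moderate"
    elif value > 1:
        strength = "weak"
    else:
        strength = "none"  # BF = 1: the data are equally likely under both hypotheses
    return label, value, strength
```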

Results

The dataset and the documentation of our analyses (R-notebook, JASP outputs) are available in the supplementary material. Before presenting the findings for both research questions, we report the results of an implementation check. The results for the two research questions are supplemented with results of exploratory analyses that aim to provide additional context information for better interpretation of the findings regarding the research questions.

Implementation check

We conducted implementation checks to analyze the extent to which groups acted as intended when supported with the external collaboration script and the reflection scaffold. For the external collaboration script, we analyzed the degree to which the groups engaged in actions that were associated with the phases and actions prescribed by the external collaboration script (i.e., script adherence) during the learning phase. During the learning phase, the groups in the script condition reached a script-adherence score of M = 11.24 (SD = 2.36; 95% confidence interval [CI] [10.02, 12.45]; median [Md] = 12.00) on a 20-point scale. Based on this distribution, we concluded that most groups in the script condition followed the external collaboration script sufficiently during the learning phase. To test whether the structure of the interaction processes in the script condition stemmed from the external collaboration script, we also determined script adherence in the reflection and control conditions and tested whether it was higher in the script condition than in the other two conditions. Groups in the control condition (M = 9.93, SD = 1.83; 95% CI [8.92, 10.95]) and the reflection condition (M = 9.25, SD = 1.92; 95% CI [8.23, 10.27]) also followed a collaboration strategy similar to the one suggested by the external collaboration script. Post hoc comparisons of a Bayesian analysis of variance (ANOVA) suggest that our data provided moderate evidence that groups in the script condition had higher script adherence than groups in the reflection condition (BF10 = 4.21), whereas more data are needed to decide whether groups in the script condition differed in their script adherence from groups in the control condition (BF01 = 1.99). These results led us to conclude that groups in the script condition tended to adhere to the external collaboration script, although not perfectly.

As an implementation check for the reflection scaffold, we assessed the time that the groups spent reflecting as well as the number of suggestions for improvements that groups made during the reflection phase. On average, the reflection phase lasted M = 9.71 (SD 2.26) min and ranged from 5.3 min to 12.27 min (Md = 9.70 min). During this time, groups created between 0 and 9 notes, while the average group wrote down M = 3.87 (SD 3.14) notes (Md = 4.00). Considering these results, we concluded that the reflection condition was implemented successfully. Based on the results of the implementation checks, we did not exclude groups from our sample.

RQ 1: Effect of collaboration support on knowledge about collaboration

RQ 1 was concerned with comparing the effect of the experimental conditions on participants' knowledge about effective collaboration. The average scores on the knowledge test before the experiment (pre-test) and after the learning phase (mid-test) are shown in Table 1.

Before the learning phase (i.e., pre-test), participants already held knowledge about effective collaboration. Overall, a Bayesian ANOVA provided weak evidence that the control condition, script condition, and reflection condition did not differ (BF01 = 1.50); more data would be required to be confident in this conclusion. Post hoc pairwise comparisons yielded moderate evidence that the control condition and the script condition did not differ (BF01 = 3.98) and weak evidence that the script condition and the reflection condition did not differ (BF01 = 1.38); more data are needed to gain confidence in the latter finding. For the comparison between the control condition and the reflection condition, the data were inconclusive (BF10 = 2.03, weak evidence for a difference). Based on these findings, we assume that participants from the three experimental conditions did not differ in their prior knowledge.

In the following, we present the multilevel models that we fitted to answer RQ 1. An overview of all hierarchical linear models can be found in Table 2. The intercept-only model (null model, model 0) showed that the intercept (i.e., the mean over all participants) is significantly different from 0 and that the intraclass correlation (ICC) for the level 2 variable (the small groups) was ICC = 0.14, indicating that 14% of the variance in participants’ knowledge about productive collaboration could be attributed to the small groups. Subsequently, we compared multilevel models to determine the effect of the experimental condition on participants’ knowledge about effective collaboration after the learning phase (i.e., mid-test).

The first models included the experimental condition as a predictor of participants’ knowledge as well as an interaction term for condition and measurement time, while allowing the small groups to have random intercepts. In model 1a, with the control condition as the baseline, we found no significant main effect of condition or of measurement time. However, the interaction between measurement time and the reflection condition (versus the control condition) was significant (b = 1.28, p < 0.05). This suggests that the change in knowledge from pre-test to mid-test in the reflection condition differed significantly from the change in the control condition. The positive sign indicates that the knowledge gain was larger in the reflection condition than in the control condition. The other interactions did not reach statistical significance.

In model 1b (reflection condition as the baseline), we found a significant main effect of measurement time (b = 1.86, p < 0.05). Because the model includes the condition × time interaction, this main effect represents the change from pre-test to mid-test in the baseline condition; it thus indicates that participants in the reflection condition significantly increased their knowledge. No corresponding effect occurred for the control condition, the baseline in model 1a. As in model 1a, we found a significant interaction between measurement time and the control condition versus the reflection condition (with the sign reversed). We did not find a significant interaction between condition and measurement time for the script condition when compared with the reflection condition. This suggests that the change in knowledge from pre-test to mid-test did not differ between the script and reflection conditions.
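Why the main effect of measurement time and the sign of the interactions change with the baseline can be seen from a saturated condition × time model under treatment (dummy) coding. For ordinary least squares, the fixed-effect coefficients of such a model equal simple contrasts of the cell means; estimates from a random-intercept model need not coincide exactly. The cell means in the test below are invented for illustration and are not the study's data; `treatment_contrasts` is a hypothetical helper.

```python
from typing import Dict, Tuple

CellMeans = Dict[Tuple[str, str], float]  # (condition, time) -> mean knowledge score


def treatment_contrasts(cells: CellMeans, baseline: str) -> Dict[str, float]:
    """Coefficients of a saturated condition x time model under treatment coding,
    with `baseline` as the reference condition and 'pre' as the reference time."""
    base_change = cells[(baseline, "mid")] - cells[(baseline, "pre")]
    coefs = {"intercept": cells[(baseline, "pre")], "time": base_change}
    for condition in sorted({c for c, _ in cells} - {baseline}):
        # Main effect of condition: difference at the reference time (pre-test).
        coefs[condition] = cells[(condition, "pre")] - cells[(baseline, "pre")]
        # Interaction: difference between this condition's change and the baseline's change.
        change = cells[(condition, "mid")] - cells[(condition, "pre")]
        coefs[f"{condition}:time"] = change - base_change
    return coefs
```

With control as the baseline, `time` is the control group's change and `reflection:time` is the reflection group's change minus the control group's; with reflection as the baseline, `time` becomes the reflection group's change and `control:time` flips sign.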

To further isolate the effect of the experimental condition on participants’ knowledge about effective collaboration, we added time on task as a covariate to random-intercept models 1a and 1b. Time on task did not have a significant influence on participants’ knowledge (b = −0.1.07, p > 0.05). All effects found in random-intercept model 1a remained significant after adjusting for time on task. The significant interaction effect in model 2a showed that, when adjusting for time on task, participants in the reflection condition increased their knowledge by b = 1.39 points more than participants in the control condition, a significant difference (p < 0.05). Participants in the script condition increased their knowledge by b = 0.71 points more than their peers in the control condition, a difference that did not reach statistical significance (p > 0.05). Setting the reflection condition as the baseline (model 2b) revealed the inverse of the effects found in model 2a.

To determine whether the different random-intercept models fit the data better than the null model, we calculated chi-squared tests as suggested by Field et al. (2012). The detailed results can be found in the supplementary materials. The chi-squared test showed that random-intercept models 2a and 2b fit the data significantly better than the random-intercept models without the covariate (models 1a and 1b), χ²(1) = 55.16, p < 0.001. We therefore interpret the findings from the more complex models 2a and 2b.
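A chi-squared comparison of two nested models that differ by one parameter (here, the added covariate) is typically a likelihood-ratio test. The sketch below assumes both models were fitted with maximum likelihood rather than REML, since likelihood-ratio comparisons of fixed effects require ML fits. The log-likelihood values are hypothetical, chosen only so that the statistic matches the reported 55.16; `lr_test_1df` is an illustrative helper.

```python
import math

def lr_test_1df(loglik_reduced, loglik_full):
    """Likelihood-ratio test for two nested models differing by one parameter.

    Returns the chi-squared statistic and its p-value with 1 degree of freedom,
    using the identity P(chi2_1 > x) = erfc(sqrt(x / 2)).
    """
    stat = 2.0 * (loglik_full - loglik_reduced)
    stat = max(stat, 0.0)  # a nested ML fit should never lower the log-likelihood
    p = math.erfc(math.sqrt(stat / 2.0))
    return stat, p

# Hypothetical log-likelihoods (not study values).
stat, p = lr_test_1df(loglik_reduced=-520.00, loglik_full=-492.42)
print(f"chi2(1) = {stat:.2f}, p = {p:.2e}")
```

The closed form via `math.erfc` holds only for one degree of freedom; comparisons that add several parameters would need the general chi-squared survival function.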

In summary, our findings partly support our hypotheses. Our results did not indicate a general advantage of the two support measures over the control condition (H1a). Instead, we found that participants in the reflection condition gained more knowledge over time than participants who collaborated using an external collaboration script (H1b). However, using an external collaboration script did not lead to a larger increase in knowledge compared with the control condition or the reflection condition (H1b).

RQ 2: Effect of collaboration support on quality of interaction

To explore our second research question, we conducted a Bayesian two-way ANOVA to compare the experimental conditions in terms of interaction quality (see Wagenmakers et al., 2018). We entered the experimental condition (control versus script versus reflection) as a between-subjects factor and the phase (learning phase versus testing phase) as a within-subjects factor. The dependent variable was interaction quality, that is, the grand mean over the nine process dimensions of collaboration that were addressed by the external collaboration script and the reflection scaffold. Table 3 provides an overview of the average collaboration quality during the learning phase and the testing phase. Because we measured collaboration quality at the group level, the following analyses were conducted with groups as the unit of analysis (n = 48 groups in three conditions).

During the learning phase, groups in the three conditions showed comparable levels of interaction quality. During the second collaboration (i.e., the testing phase), groups in the reflection condition showed descriptively higher interaction quality than groups in the control and script conditions. For both main effects, our analyses yielded Bayes factors in favor of the null hypothesis. For the main effect of phase, we found moderate evidence (BF01 = 3.87) for the assumption that interaction quality did not differ between the learning phase and the testing phase. Similarly, we found moderate evidence against a main effect of condition (BF01 = 4.41), suggesting that the conditions did not differ in terms of interaction quality. Our data provided strong evidence for the assumption that there is no interaction between phase and condition (BF01 = 16.25). Post hoc tests also revealed weak evidence in favor of the assumption that groups in the different experimental conditions did not differ in interaction quality.
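Bayes factors are reported here both as BF01 (evidence for the null) and BF10 (evidence for the alternative), and BF01 = 1 / BF10. The sketch below converts between the two and labels the strength of evidence using one common classification scheme (1 to 3 weak or anecdotal, 3 to 10 moderate, 10 to 30 strong, 30 to 100 very strong, above 100 extreme). The cut-offs are a convention rather than a property of the analysis, and `describe_bf` is an illustrative helper.

```python
def describe_bf(bf10):
    """Label a Bayes factor with the favored hypothesis and an evidence category.

    Categories follow a common convention: 1-3 weak, 3-10 moderate,
    10-30 strong, 30-100 very strong, >100 extreme.
    """
    if bf10 <= 0:
        raise ValueError("Bayes factors must be positive")
    favored = "H1" if bf10 >= 1 else "H0"
    strength = bf10 if bf10 >= 1 else 1.0 / bf10  # equals BF01 when H0 is favored
    if strength < 3:
        label = "weak"
    elif strength < 10:
        label = "moderate"
    elif strength < 30:
        label = "strong"
    elif strength < 100:
        label = "very strong"
    else:
        label = "extreme"
    return favored, label

# BF01 values reported for interaction quality, converted to BF10 before labeling.
for name, bf01 in [("phase", 3.87), ("condition", 4.41), ("phase x condition", 16.25)]:
    print(name, describe_bf(1.0 / bf01))
```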

Taken together, our findings are not in line with our hypotheses (H2a, H2b). Instead, they suggest that neither the collaboration script nor the reflection scaffold affected overall interaction quality during the immediately subsequent unsupported collaboration.

Explorative analyses

After comparing the effects of the collaboration support, we were interested in learning more about the knowledge that participants acquired during the collaboration. As only a few studies on collaborative reflection exist, we also sought to investigate the reflection process in more detail. Specifically, we explored (1) for which dimensions of productive interaction participants gained knowledge, (2) how the conditions compared in terms of script adherence, especially after the external collaboration script was withdrawn for groups in the script condition, (3) how the groups in the reflection condition perceived their collaboration, and (4) which plans they derived during this process. These explorations complement our planned analyses and help us interpret our findings. Given our findings for RQ 1, we expected that groups in the reflection condition would acquire certain knowledge components to a larger extent than groups in the other two conditions.

Knowledge about effective collaboration

The analyses for RQ 1 revealed that participants entered the study with prior knowledge about effective collaboration. This finding is not surprising given the participants’ age and education. To gain more insight into the structure of this prior knowledge and into how the collaboration support affected it, we analyzed participants’ answers to both knowledge tests in terms of different knowledge components. Table 4 provides an overview of how knowledge was distributed across the nine process dimensions before (i.e., pretest) and after the learning phase (i.e., mid-test). As for RQ 1, we fitted random-intercept models to determine whether changes in the number of knowledge components differed among the three conditions. In the following, we report only significant effects; all models are available in the supplementary material.
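The per-dimension means in Table 4 can be thought of as counts of coded ideas averaged over participants. The sketch below assumes that raters have already assigned each idea in an answer to exactly one of the nine process dimensions named in this section; the data layout, the example answers, and the helper `mean_components` are hypothetical and do not reproduce the study's coding procedure.

```python
from collections import Counter

# Process dimensions named in the text, used here as coding categories.
DIMENSIONS = (
    "sustaining mutual understanding", "dialogue management", "information pooling",
    "reaching consensus", "task division", "time management",
    "technical coordination", "reciprocal interaction", "individual task orientation",
)

def mean_components(coded_answers):
    """Mean number of knowledge components per dimension across participants.

    `coded_answers` holds one list per participant; each entry is the dimension
    that a single idea in that participant's answer was assigned to.
    """
    totals = Counter()
    for answer in coded_answers:
        unknown = set(answer) - set(DIMENSIONS)
        if unknown:
            raise ValueError(f"uncoded dimension(s): {unknown}")
        totals.update(answer)
    n = len(coded_answers)
    return {dim: totals[dim] / n for dim in DIMENSIONS}

# Hypothetical coded pretest answers of three participants (not study data).
pretest = [
    ["information pooling", "reaching consensus"],
    ["information pooling", "information pooling", "time management"],
    ["reaching consensus"],
]
print(mean_components(pretest)["information pooling"])
```

Computing these means separately per condition and per test yields the kind of pretest and mid-test values that the random-intercept models then compare.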

Overall, prior to the study, participants' knowledge of productive collaboration encompassed all nine process dimensions. However, only two dimensions, information pooling and reaching consensus, had mean scores above 1, indicating that participants’ prior knowledge centered on these two dimensions. After the learning phase, participants mentioned more knowledge components for all process dimensions except dialogue management and time management. This suggests that participants became more aware of (or learned) that developing and sustaining mutual understanding, pooling unshared information, and systematically searching for a consensus are core requirements when working on a hidden-profile task.

The increase in knowledge components concerning information pooling (Mpre = 1.36 to Mmid = 2.74) was the most pronounced. Comparing the change in knowledge about collaboration across the three conditions suggests that this trend was strongest in the reflection condition, where we found an increase from Mpre = 1.63 to Mmid = 3.43. Random-intercept models suggest that the increase in the reflection condition was significantly larger than in the control condition (b = 0.83, 95% CI [0.15, 1.50], p < 0.05); however, the change in knowledge in the script condition did not differ significantly from that in the reflection condition (b = −0.51, 95% CI [−1.15, 0.14], p = 0.12). Similarly, participants in the reflection condition increased their knowledge about sustaining mutual understanding from Mpre = 1.55 to Mmid = 1.94. Random-intercept models suggest that they gained more knowledge than participants in the control condition (b = 0.89, 95% CI [0.40, 1.39], p < 0.001). In the script condition, this change was significantly smaller than in the reflection condition (b = −0.92, 95% CI [−1.40, −0.45], p < 0.001).

Regarding dialogue management, participants in the control and script conditions did not show visible changes in knowledge, whereas participants in the reflection condition showed a small descriptive increase from Mpre = 0.51 to Mmid = 0.63. A random-intercept model did not yield a significant interaction effect, indicating that the three conditions did not differ in their change in knowledge about dialogue management. Before the learning phase, participants mentioned reciprocal interaction approximately once on average (Mpre = 1.16); after the learning phase, they mentioned this dimension noticeably less often (Mmid = 0.71). However, a random-intercept model suggests that the conditions did not differ in their change in knowledge about reciprocal interaction. The remaining three dimensions, time management, technical coordination, and individual task orientation, were mentioned by only a few participants, as reflected in mean values close to zero. Regarding individual task orientation, a random-intercept model suggests that the increase in knowledge was smaller in the script condition than in the reflection condition (b = −0.22, 95% CI [−0.37, −0.08], p < 0.05).

In summary, our exploration suggests that participants entered the study with prior knowledge about effective collaboration; in particular, they were aware that productive group members share information and negotiate decisions. After the learning phase, participants in all three conditions reported more knowledge in these two dimensions and also mentioned interactions that help develop and maintain a shared understanding. The increase in knowledge about sustaining mutual understanding and information pooling was significantly larger in the reflection condition than in the control condition. Participants in the reflection condition also gained more knowledge about sustaining mutual understanding than their peers in the script condition. Regardless of condition, only a few participants mentioned the need for effective time management, technical coordination within the collaboration environment, or individual task orientation, either before or after the learning phase.

Adhering to a scripted progression of collaboration phases

In addition to analyzing the extent to which groups adhered to the scripted phases during the learning phase (i.e., the implementation check), we investigated whether the groups followed the phases of the external collaboration script during the testing phase, when the script was no longer available. Table 5 provides an overview of script adherence in all three conditions during both phases.

To investigate the change in script adherence over time, we conducted a Bayesian repeated-measures ANOVA with phase (learning phase versus testing phase) as the within-subjects factor, condition (control versus script versus reflection) as the between-subjects factor, and script adherence as the dependent variable. The Bayesian ANOVA suggests that our data provide moderate evidence for a main effect of phase (BF10 = 3.75) and weak evidence for an interaction effect (BF10 = 1.57). Our data further favored the absence of a main effect of condition, although only weakly (BF01 = 1.43). While additional data are required to increase our confidence in these findings, our data suggest that script adherence in the script condition decreased from M = 11.23 (SD = 2.34) during the learning phase to M = 9.35 (SD = 2.82) in the testing phase, whereas the decrease was smaller in the control and reflection conditions. An additional Bayesian ANOVA further suggests that the conditions did not differ in their script adherence during the testing phase (BF01 = 6.07, moderate evidence). Thus, our analyses indicate that groups in the script condition struggled to reproduce the phases of the external collaboration script once it was withdrawn.

Reflection phase: Self-assessment of interaction quality and planning adaptations

Given the central role of regulating the interaction, we explored how groups perceived the quality of their interaction and compared their perceptions with our ratings of the video recordings. This exploration was driven by the assumption that an accurate self-assessment of interaction quality is the first step toward deriving plans that positively affect future collaboration. The analysis of the groups’ self-assessments revealed that most groups perceived their interaction quality as high, M = 6.09 (SD = 0.57), with a minimum of 4.87 and a maximum of 6.79 on a seven-point scale. This finding raises the question of whether the groups overestimated themselves. Our data allow us to approach this question by examining the correlation between the groups’ self-assessment (i.e., their averaged response to the self-assessment questionnaire, the feed-back) and our averaged rating of interaction quality based on the video recordings. Figure 6 shows a scatter plot and regression line for the bivariate relation between the rated interaction quality during the learning phase and the groups’ self-assessment.

A Bayesian analysis for an undirected correlation revealed a moderate positive correlation, Kendall’s τb = 0.41. This suggests that groups who rated their collaboration as better also received higher scores in our rating (and vice versa). The observed data are BF10 = 3.1 times more likely under the hypothesis of a correlation than under the hypothesis of no correlation. Although a Bayes factor of about 3 indicates that more data are needed, this finding suggests that the groups were able to assess the quality of their interaction adequately to some extent.
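Kendall's τb is a rank correlation that counts concordant and discordant pairs and corrects the denominator for ties, which is useful when several groups share the same rating. The sketch below computes only the point estimate with a straightforward O(n²) pair loop; the Bayes factor reported above additionally requires a prior on τ and is not reproduced here. The group scores are hypothetical, and `kendall_tau_b` is an illustrative helper rather than the software used in the study.

```python
import math

def kendall_tau_b(x, y):
    """Kendall's tau-b with tie correction, computed over all pairs (O(n^2))."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    concordant = discordant = ties_x = ties_y = 0
    for i in range(n):
        for j in range(i + 1, n):
            dx = (x[j] > x[i]) - (x[j] < x[i])  # sign of the x difference
            dy = (y[j] > y[i]) - (y[j] < y[i])  # sign of the y difference
            if dx == 0:
                ties_x += 1  # pair tied on x (including joint ties)
            if dy == 0:
                ties_y += 1  # pair tied on y (including joint ties)
            if dx * dy > 0:
                concordant += 1
            elif dx * dy < 0:
                discordant += 1
    n0 = n * (n - 1) / 2
    denom = math.sqrt((n0 - ties_x) * (n0 - ties_y))
    if denom == 0:
        raise ValueError("tau-b is undefined when one variable is constant")
    return (concordant - discordant) / denom

# Hypothetical group scores (not study data): video rating vs. self-assessment.
rating = [3.1, 3.4, 2.8, 3.9, 3.4, 4.2]
self_assessment = [5.9, 6.2, 5.1, 6.5, 6.0, 6.8]
print(round(kendall_tau_b(rating, self_assessment), 2))
```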

We further explored the suggestions the groups made for improving their interaction in the subsequent collaboration, to determine which dimensions of collaboration the groups planned to adapt in the second collaboration task (i.e., the feed-forward). Following the same procedure we used to assess participants’ knowledge about collaboration, we assigned each idea in the groups' reflection notes to one of the nine process dimensions. The groups in the reflection condition created notes containing between 0 and 9 suggestions for improving their interaction, with M = 3.87 (SD = 2.70) suggestions on average, as shown in Fig. 7. Note that groups D016 and D043 did not create written notes during their reflection.

Regarding the distribution of plans, most groups created plans that addressed sustaining mutual understanding, the process dimension with the highest number of plans. Groups also covered information pooling and reaching consensus, two process dimensions that are key to working on a hidden-profile problem. Groups rarely decided to adapt reciprocal interaction, task division, technical coordination, or time management.
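The summary of the reflection notes combines two simple aggregations: the mean and standard deviation of the number of suggestions per group, and a tally of suggestions by process dimension. The sketch below assumes that groups without written notes are counted as having zero suggestions; the text does not state how such groups entered the reported mean, so this is an assumption. The notes and the helper `summarize_plans` are hypothetical.

```python
from collections import Counter
from statistics import mean, stdev

def summarize_plans(notes):
    """Return mean and sample SD of plans per group, plus a tally by dimension.

    `notes` maps a group ID to the list of dimensions its planned adaptations
    were assigned to; an empty list counts as a group with zero plans.
    """
    counts = [len(plans) for plans in notes.values()]
    tally = Counter(dim for plans in notes.values() for dim in plans)
    return mean(counts), stdev(counts), tally

# Hypothetical reflection notes (not study data).
notes = {
    "G1": ["sustaining mutual understanding", "information pooling"],
    "G2": ["sustaining mutual understanding", "reaching consensus", "time management"],
    "G3": [],  # a group without written notes
}

m, sd, tally = summarize_plans(notes)
print(f"M = {m:.2f}, SD = {sd:.2f}")
print(tally.most_common(3))
```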

Discussion