Abstract

We explore the question of whether cost-free uncertain evidence is worth waiting for in advance of making a decision. A classical result in Bayesian decision theory, known as the value of evidence theorem, says that, under certain conditions, when you update your credences by conditionalizing on some cost-free and certain evidence, the subjective expected utility of obtaining this evidence is never less than the subjective expected utility of not obtaining it. We extend this result to a type of update method, a variant of Judea Pearl’s virtual conditionalization, where uncertain evidence is represented as a set of likelihood ratios. Moreover, we argue that focusing on this method rather than on the widely accepted Jeffrey conditionalization enables us to show that, under a fairly plausible assumption, gathering uncertain evidence not only maximizes expected pragmatic utility, but also minimizes expected epistemic disutility (inaccuracy).

1 Introduction

Can it be that a rational agent would postpone a decision in order to acquire cost-free but uncertain evidence? Suppose that there are two types of urns containing marbles. Urn X contains 8 blue and 2 violet marbles, while urn Y contains 2 blue and 8 violet marbles. A given urn is selected at random by the toss of a fair coin. Ann is about to guess the type of the selected urn, and her possible guesses have different practical consequences that depend on whether the selected urn is of type X or Y. But before guessing, she has the opportunity to draw, cost free, a marble from the selected urn. Yet Ann knows in advance that the lighting is so dim that it would be difficult to discern what her experience says: given her background information about the lighting conditions, she expects that learning experience would make her uncertain about whether the drawn marble is blue, B, or violet, V.

A classical result in Bayesian decision theory (Savage 1954, ch. 4; Raiffa and Schlaifer 1961, ch. 4.5; Good 1967; Ramsey 1990), known as the value of evidence theorem (VET), says that, under certain conditions, when an agent updates her credences upon the receipt of cost-free evidence, the subjective expected pragmatic utility of obtaining this evidence is never less than the subjective expected pragmatic utility of not obtaining it. That is, expecting to obtain cost-free evidence cannot lead you to expect to make worse practical decisions (Footnote 1). The original VET, however, is limited to cases where the agent learns proposition E for certain from a set \({\mathcal {E}}\) of mutually exclusive and jointly exhaustive propositions, and hence may update her credences by dint of Bayesian conditionalization (BCondi, for short). Crucially, in such cases the agent is certain ex ante that exactly one proposition E from \({\mathcal {E}}\) will be true. But often, as in Ann’s case, we undergo learning experiences where it is hard to discern what that proposition is, and so we become uncertain about which element of \({\mathcal {E}}\) is true. Could cost-free uncertain evidence so understood be worth waiting for in advance of making a decision?

This question has not gone unnoticed in the literature. Graves (1989) showed that we can extend VET so that it holds for cases where we become uncertain about what the logically strongest proposition we learn is, and update our credences by using a rule called Jeffrey conditionalization (JCondi, for short). This rule requires uncertain evidence to be specified as a redistribution of the agent’s credences over the propositions in some partition \({\mathcal {E}}\) of a set of possibilities, without assigning absolute certainty to any particular proposition (hereafter, a Jeffrey shift). For example, Ann’s learning experience can be understood as a Jeffrey shift over the partition \(\left\{ B, V \right\} \). To accommodate this type of uncertain evidence, Graves’s argument, as we will argue, is mobilized by two conceptual moves. The first one is that any Jeffrey shift can be specified as a sort of propositional certainty, i.e. as a proposition that receives posterior credence 1 in an enriched subjective probability space. This enrichment is achieved by adding to the original smaller space propositions about one’s posterior credences attached to the members of a partition \({\mathcal {E}}\). The second key move is to show that, under certain conditions, BCondi on the proposition specifying the posterior credences over \({\mathcal {E}}\) in the enriched space is equivalent to JCondi in the original small space.

After challenging Graves’s argument, this paper offers an alternative extension of VET to the case of learning from uncertain evidence. To preview, instead of recasting uncertain evidence as certain in an enriched subjective probability space, the proposed view retains the uncertainty of one’s evidence in the original smaller space, and provides a specification of this uncertainty by utilizing the method of virtual evidence proposed by Pearl (1988, 1990) and developed in Chan and Darwiche (2005). According to this method, uncertain evidence can be specified as a set of likelihood ratios, where each likelihood ratio tells you how well some virtual evidence fits with some proposition in partition \({\mathcal {E}}\) as compared to how well it fits with another proposition in that partition. The virtual evidence is meant to be an auxiliary proposition that bears on the truth of propositions in \({\mathcal {E}}\). We supply this method with a specific understanding of what the auxiliary proposition could be in the context of learning from uncertain evidence. Our proposal is that it can be understood as the proposition \(U_{c_{\lambda }}^{E}\) which says that you take your evidence to be E and adopt the posterior credence function \(c_{\lambda }\) in response. For short, we will refer to this proposition as saying that you update on E (Footnote 2). Importantly, when you take E as your evidence and adopt a posterior credence function in response, you foresee the possibility that \(U_{c_{\lambda }}^{E}\) could be true, even if E is in fact false. This is because, in the context of learning from uncertain evidence, you may mistake the true evidence \(E'\) for some other \(E \in {\mathcal {E}}\). So for example, in Ann’s case, when looking at the drawn marble in a dim light, she might take her evidence to be B, and adopt the posterior \(c_{\lambda }\) in response, when in fact V is true.

There is, we suggest, a way to incorporate this possibility of mistake in one’s learning into the update mechanism. The key idea is that we can express it by determining the extent to which the proposition \(U_{c_{\lambda }}^{E}\) is more likely under E than \(E'\), or the extent to which \(U_{c_{\lambda }}^{E}\) favours E over \(E'\). Intuitively, if Ann has updated on B, she can express the extent to which \(U_{c_{\lambda }}^{B}\) is more likely under B than V. One natural way to determine this extent is to settle on a likelihood ratio which tells Ann to what extent the proposition \(U_{c_{\lambda }}^{B}\) is more expected under B than V. This likelihood ratio can be any non-negative number she considers reasonable in the light of her background knowledge. As will be explained in more detail later on, there is a reasonable way to plug these likelihood ratios into an update rule, without the need of determining the absolute likelihoods for \(U_{c_{\lambda }}^{E}\). As will be shown, the proposed update method gives the expected result in Ann’s case in the sense that it leaves B and V uncertain in Ann’s posteriors.

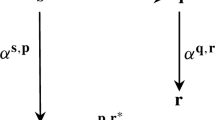

Armed with the method of virtual evidence so understood, we show how VET can be extended to the context of uncertain evidence. Three basic ideas underpin this extension. First, updating on a set of likelihood ratios for the proposition \(U_{c_{\lambda }}^{E}\) can be modelled as a variant of what Pearl called ‘virtual conditionalization’ (VCondi, for short). Second, under a plausible assumption, updating by this variant of VCondi is equivalent to a version of Bas van Fraassen’s (1984) reflection principle. This principle says that one’s posterior credence function should be equal to one’s prior conditional on the proposition \(U_{c_{\lambda }}^{E}\). Third, once we assume that the propositions \(U_{c_{\lambda }}^{E}\) form a partition, we can show that the expected worth of accommodating cost-free uncertain evidence by the proposed variant of VCondi cannot be negative. This expectation is calculated relative to the agent’s prior credences over the propositions \(U_{c_{\lambda }}^{E}\).

We proceed as follows. In Sect. 2, we discuss three different ways of specifying uncertain evidence that play a crucial role in both Graves’s and our alternative extension of VET. In Sect. 3, we present three updating rules, each attuned to a different way of specifying uncertain evidence. In Sect. 4, we spell out in detail Graves’s extension of VET. In Sect. 5, we show that Graves’s argument is problematic when we care not only about the practical rationality of our decisions, but also about the epistemic rationality of our beliefs. In Sect. 6, we lay down our alternative extension of VET to the case of learning from uncertain evidence and show that it dovetails with a purely epistemic approach that aims to vindicate updating on uncertain evidence. Section 7 concludes.

2 Specifying uncertain evidence: three ways

Let \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \) be an agent’s subjective probability space (her credal space), where \({\mathcal {W}}\) represents the possibilities that the agent can distinguish between, \({\mathcal {F}}\) is an algebra of subsets of \({\mathcal {W}}\) that can be understood as the propositions the agent can express, and c is the agent’s credence function which assigns numbers from \(\left[ 0, 1 \right] \), called credences, to propositions in \({\mathcal {F}}\). We will assume throughout that, at any given time, the credence function c is a probability function over \({\mathcal {F}}\). Since we will be mostly interested in the dynamics of credences in a decision context, we require that \({\mathcal {F}}\) includes a finite partition \(\mathcal {S}\) of \({\mathcal {W}}\), \(\mathcal {S} \subseteq {\mathcal {F}}\), which contains propositions S representing the states of the world upon which the consequences of the agent’s actions depend. As will be apparent later on, we also need to require that the algebra \({\mathcal {F}}\) is sufficiently rich so that it includes a finite number of propositions \(U_{c_{\lambda }}^{E}\). Recall that a proposition of this sort says that you update on E.

Now, learning experience can provide an agent with various types of evidential input \(\lambda \) that may prompt a revision of their credence in any \(X \in {\mathcal {F}}\), c(X), resulting in a posterior credence in X, \(c_{\lambda }(X)\). But how can we characterize an evidential input more precisely? There are at least two ways of answering this question that are well entrenched in Bayesian epistemology. Both assume that learning experiences do not provide evidential inputs all by themselves. Rather they provide evidential inputs because they impact on our credences about evidence propositions, which may in turn provide support for other propositions. However, these two approaches differ on how this impact should be cashed out.

According to the first, somewhat more popular view, any evidential input takes the form of a direct change in one’s credences over some set of propositions. Thus, any evidential input acts like a constraint on the set of possible credence functions \(\mathcal {C}\) and restricts the candidates for the agent’s posterior credence function. More formally, an evidential input of this sort can be understood as a set of posterior credence functions over \({\mathcal {F}}\), \(\mathcal {C}_{\lambda }\), which contains those possible credence functions of the agent that are consistent with this input, that is, \(\mathcal {C}_{\lambda }\subseteq \mathcal {C}\) (see, e.g. van Fraassen 1989, ch. 13; Uffink 1996; Joyce 2010; Dietrich et al. 2016; van Fraassen and Halpern 2017). Importantly, this view allows us to treat as evidential input information or data that cannot be expressed as propositional evidence.

According to the second view, we may think of evidential input as a sort of update factor, which can then bring about changes in one’s credences (see, e.g. Wagner 2002; Hawthorne 2004). Some update factors may be essentially relative, i.e. they tell us how well an outcome of one’s learning experience fits with the proposition X as compared to how well it fits with the proposition Y. A classical example of such a factor is given by the likelihood ratio of propositions X and Y given the evidence proposition E, \(\frac{c\left( E\vert X \right) }{c\left( E\vert Y \right) }\) (see, e.g. Good 1950). A more general representation of this factor is given by the odds ratio of X and Y, also called the Bayes factor (Footnote 3):

Bayes factor: If \(c_{\lambda }\) is a posterior credence function and \(X, Y \in {\mathcal {F}}\), then the Bayes factor of X and Y is given by:

$$\begin{aligned} \mathbb {B}_{c_{\lambda }, c} \left( X, Y \right) = \frac{c_{\lambda }\left( X\right) }{c_{\lambda } \left( Y \right) }/\frac{c\left( X\right) }{c\left( Y \right) }. \end{aligned}$$(1)

That is, the Bayes factor of X and Y is a ratio of new-to-old odds for X against Y, where the new odds are \(\frac{c_{\lambda } \left( X\right) }{c_{\lambda } \left( Y \right) }\), and the old odds are \(\frac{c\left( X\right) }{c\left( Y \right) }\). This ratio is meant to capture the factor by which the old odds for X against Y can be multiplied to get the new odds. Observe that the agent’s Bayes factors do not uniquely specify her posterior credences, but only impose overall constraints on these posteriors.
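As a rough illustration of Eq. (1), the sketch below computes a Bayes factor from prior and posterior credences. The joint prior `joint` and the helper `credence` are hypothetical and chosen only for this example. In the special case where the posterior is obtained by conditionalizing on a proposition E, the Bayes factor should coincide with the likelihood ratio \(\frac{c\left( E\vert X \right) }{c\left( E\vert Y \right) }\), which the last two lines compute side by side.

```python
from fractions import Fraction


def bayes_factor(prior_x, prior_y, post_x, post_y):
    """Ratio of new odds to old odds for X against Y, as in Eq. (1)."""
    return (post_x / post_y) / (prior_x / prior_y)


# Hypothetical joint prior credences over (hypothesis, evidence) pairs.
joint = {
    ("X", "E"): Fraction(3, 10), ("X", "notE"): Fraction(1, 10),
    ("Y", "E"): Fraction(1, 10), ("Y", "notE"): Fraction(5, 10),
}


def credence(hypothesis=None, evidence=None):
    """Prior credence of the given hypothesis and/or evidence value."""
    return sum(p for (h, e), p in joint.items()
               if hypothesis in (None, h) and evidence in (None, e))


prior_x, prior_y = credence("X"), credence("Y")

# Posterior credences obtained by conditionalizing on E (an assumption
# of this example, not a requirement of Eq. (1) in general).
post_x = credence("X", "E") / credence(evidence="E")
post_y = credence("Y", "E") / credence(evidence="E")

factor = bayes_factor(prior_x, prior_y, post_x, post_y)
likelihood_ratio = (credence("X", "E") / prior_x) / (credence("Y", "E") / prior_y)
```

Exact fractions are used so that the two quantities can be compared without floating-point tolerance.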

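In order to show that the likelihood ratio, which isolates the full import of learning experience with prior credences factored out, is an instance of the Bayes factor, assume that one’s posterior credence for any \(X \in {\mathcal {F}}\), \(c_{\lambda }\left( X \right) \), comes from one’s prior credence in X by conditionalizing on the proposition \(E \subseteq {\mathcal {W}}\), i.e. \(c_{\lambda }\left( X \right) = c\left( X\vert E \right) \). Then, provided that the relevant prior credences are positive, Bayes’ theorem gives

$$\begin{aligned} \mathbb {B}_{c_{\lambda }, c} \left( X, Y \right) = \frac{c\left( X\vert E\right) }{c\left( Y\vert E \right) }/\frac{c\left( X\right) }{c\left( Y \right) } = \frac{c\left( E\vert X \right) }{c\left( E\vert Y \right) }. \end{aligned}$$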

Given the above preliminaries, how can we represent uncertain evidence? In what follows, we will characterize three ways of specifying uncertain evidence: the first one is due to Jeffrey (1983), the second one is due to Skyrms (1980), and was used in Graves’s extension of VET, and the third one is an amended method proposed in Pearl (1988, 1990) and developed in Chan and Darwiche (2005).

Jeffrey (1983) argued that in many cases learning experience does not constrain an agent’s credences in a way that is tailor-made for the orthodox Bayesian conditioning, that is, when the evidential input can be modelled as the logically strongest evidence proposition E in \({\mathcal {W}}\) that receives the posterior credence of 1, or simply as the Bayes input:

Bayes input: \(\mathcal {C}_{\lambda }^{E} = \mathcal {C}_{\lambda } = \left\{ c_{\lambda } : c_{\lambda }\left( E\right) = 1 \right\} \) for some \(E \subseteq {\mathcal {W}}\) such that \(E \ne \emptyset \).

Jeffrey believed that, in cases like Ann’s, although learning experience does not single out an evidence proposition E that receives posterior credence 1, \(c_{\lambda }\left( E\right) = 1\), it nevertheless directly affects the agent’s prior credences over the propositions in some set \({\mathcal {E}}\), which is a partition of \({\mathcal {W}}\), shifting them to posterior credences \(c_{\lambda }\left( E\right) \), for all \(E \in {\mathcal {E}}\). Thus, uncertain evidence so understood can be modelled as the following evidential input:

Jeffrey shift: \(\mathcal {C}_{\lambda }^{\mathbb {E}} = \mathcal {C}_{\lambda } = \left\{ c_{\lambda } : c_{\lambda }\left( E\right) \ge 0 \ \text {for all} \ E \in {\mathcal {E}}\right\} \) for some set \(\mathbb {E}\) of ordered pairs \(\left\{ \left\langle E, c_{\lambda }(E)\right\rangle \right\} \) such that \(E \in {\mathcal {E}}\) and \(\sum _{E \in {\mathcal {E}}}c_{\lambda }\left( E\right) = 1 \).

That is, uncertain evidence so understood is a redistribution of the agent’s credences over the propositions in some partition \({\mathcal {E}}\) of the set of worlds she considers possible.

To provide Skyrms’s characterization of uncertain evidence, we need to consider a particular extension of \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \). Given two algebras \({\mathcal {F}}\) and \({\mathcal {F}}^{*}\), let the injective map \(*: {\mathcal {F}} \rightarrow {\mathcal {F}}^{*}\) be an algebra embedding, that is, a function that preserves all Boolean operations. Then, let \(\left( {\mathcal {W}}^{*}, {\mathcal {F}}^{*}, c^{*} \right) \) be an extension of \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \) such that:

- \(E_1\): For every \(X \in {\mathcal {F}}\), \(c^{*}\left( X^{*} \right) = c\left( X\right) \).

- \(E_2\): For some \({\mathcal {E}} \subseteq {\mathcal {F}}\) and a finite set of posterior credence functions \(\mathcal {C}_{\lambda }\), \({\mathcal {F}}^{*}\) contains a finite number of propositions \(R_{c_{\lambda }}^{{\mathcal {E}}}\).

That is, the algebra \({\mathcal {F}}^{*}\) in the extended credal space \(\left( {\mathcal {W}}^{*}, {\mathcal {F}}^{*}, c^{*} \right) \) contains the copies \(X^{*} \) of all the propositions X in the original smaller algebra \({\mathcal {F}}\), and it also contains a finite number of propositions \(R_{c_{\lambda }}^{{\mathcal {E}}}\), each saying that the posterior credences over \({\mathcal {E}}\) are given by the credence function \(c_{\lambda }\).

Now, we can also consider learning experiences in the extended credal space that prompt revisions of \(c^{*}\) resulting in a new credence function \(c_{\lambda }^{*}\). In particular, given the set of posterior credence distributions over \({\mathcal {F}}^{*}\), \(\mathcal {C}_{\lambda }^{*}\subseteq \mathcal {C}^{*}\), that are consistent with the evidential input \(\lambda \) in that extended credal space, uncertain evidence may be presented as the following evidential input:

Skyrms input: \(\mathcal {C}_{\lambda }^{*R } = \mathcal {C}_{\lambda }^{*} = \left\{ c^{*}_{\lambda } : c^{*}_{\lambda }\left( R_{c_{\lambda }}^{{\mathcal {E}}} \right) = 1 \right\} \) for some \(R_{c_{\lambda }}^{{\mathcal {E}}}\) such that \(R_{c_{\lambda }}^{{\mathcal {E}}} \ne \emptyset \).

Thus, uncertain evidence modelled as Skyrms input is the agent’s assignment of posterior credence 1 in the extended algebra to the proposition \(R_{c_{\lambda }}^{{\mathcal {E}}}\) that specifies a Jeffrey shift over some partition \({\mathcal {E}}\).

Unlike Jeffrey’s and Skyrms’s methods, Pearl’s method of virtual evidence specifies uncertain evidence as an evidential input that directly constrains quantities different from the absolute values of posterior credences. The core idea of Pearl’s method is that we can interpret the uncertainty of every proposition E in some partition \({{\mathcal {E}}}\) of \({\mathcal {W}}\) as the uncertainty of E’s relevance to some auxiliary proposition in \( {\mathcal {W}}\). And to specify how uncertain this relevance is, Pearl proposes to use a set of likelihood ratios. Here we will amend Pearl’s method by assuming that the auxiliary proposition is the proposition \(U_{c_{\lambda }}^{E}\), which says that you update on E. More precisely,

Pearl-style input: \(\mathcal {L}^{E} = \left\{ \alpha _{E} : \alpha _{E} = \frac{c\left( U_{c_{\lambda }}^{E}\vert E \right) }{c\left( U_{c_{\lambda }}^{E}\vert E' \right) }, \ \alpha _{E} \in \left[ 0, \infty \right) \ \text {and } \ \alpha _{E'} = 1 \right\} \), for some \(U_{c_{\lambda }}^{E} \subseteq {\mathcal {W}}\) and some partition \(\left\{ E : E \in {\mathcal {E}} \right\} \) of \({\mathcal {W}}\).

That is, the set \(\mathcal {L}^{E}\) contains the likelihood ratios \(\alpha _{E}\) of the proposition \(U_{c_{\lambda }}^{E}\) that are relative to a pair of propositions E and \(E'\), with the likelihood ratio \(\frac{c\left( U_{c_{\lambda }}^{E}\vert E' \right) }{c\left( U_{c_{\lambda }}^{E}\vert E' \right) }\) set equal to 1. And each likelihood ratio in this set is given by the prior credence of \(U_{c_{\lambda }}^{E}\) conditional on E divided by the prior credence of \(U_{c_{\lambda }}^{E}\) conditional on \(E'\).

Importantly, to determine a Pearl-style input, we only need to specify a set of likelihood ratios, not the absolute likelihoods for \(U_{c_{\lambda }}^{E}\) given E, for every \(E \in {\mathcal {E}}\). But since every likelihood ratio \(\alpha _{E}\) in the set \(\mathcal {L}^{E}\) is proportional to the absolute likelihood of \(U_{c_{\lambda }}^{E}\) given E, i.e. \(\alpha _{E} \propto c\left( U_{c_{\lambda }}^{E} \vert E \right) \), there exists a positive constant l such that, for all \(E \in {\mathcal {E}}\), \(c\left( U_{c_{\lambda }}^{E}\vert E \right) = l\cdot \alpha _{E}\). Thus, the absolute likelihoods can be determined indirectly from the set of likelihood ratios, albeit not uniquely.

Before moving on, let us elaborate on the proposition \(U_{c_{\lambda }}^{E}\). Recall that this proposition says that you take your evidence to be E and adopt the posterior credence function \(c_{\lambda }\) in response. Firstly, following Gallow (2019), we assume that ‘taking your evidence to be E’ does not involve any belief that E is true, and hence does not mean that you assign to E the posterior credence 1. Secondly, we want to emphasize that even if the agent already knows that she has updated on E, it does not entail that \(c\left( U_{c_{\lambda }}^{E}\vert E \right) = 1\), for all E in \({\mathcal {E}}\). That is, though \(U_{c_{\lambda }}^{E}\) can be regarded as ‘old evidence’ after the agent has updated on E, we can still reasonably inquire about its evidential impact on the propositions in \({\mathcal {E}}\) (Footnote 4). If so, we should not interpret the conditional credence \(c\left( U_{c_{\lambda }}^{E}\vert E \right) \) as the agent’s actual credence in \(U_{c_{\lambda }}^{E}\), supposing E to be true, for if you already know that \(U_{c_{\lambda }}^{E}\) is true, your prior actual credence in \(U_{c_{\lambda }}^{E}\) conditional on any proposition is 1. Instead, we should understand it as a kind of counterfactual credence. That is, in determining these credences, we should answer the question: How probable would the actual evidence \(U_{c_{\lambda }}^{E}\) be if E were true? Naturally, we might expect that assigning precise counterfactual credences to propositions involves a lot of conceptual and formal intricacies (Footnote 5). Fortunately, in our approach, there is no need to determine the precise values of these counterfactual credences. It suffices that the agent expresses only their ratios, leaving the precise values unspecified.

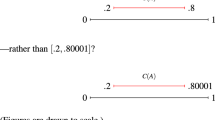

Let us now show how uncertain evidence recast as Pearl-style input can be applied to Ann’s case. Recall that Ann knows beforehand that because she would observe the drawn marble in a dim light, she would neither become certain that B is true, nor that V is true. Nevertheless, foreseeing the possibility of error, she can take B or V as her evidence and adopt some posterior credences over \({\mathcal {F}}\) in response. Suppose that, after looking at the drawn marble, she updates on B. But, due to her knowledge about the dim lighting, she foresees the possibility that \(U_{c_{\lambda }}^{B}\) could be true, even if B is false. Still, she can interpret \(U_{c_{\lambda }}^{B}\) as providing evidence for B against V whose strength can be given by a set of likelihood ratios. Let us suppose that Ann thinks that it is twice as likely that \(U_{c_{\lambda }}^{B}\) would be true if B were true as if V were true. If this is so, then we may say that learning experience provides Ann with the following likelihood ratios: \(\alpha _{B} = \frac{c\left( U_{c_{\lambda }}^{B}\vert B \right) }{c\left( U_{c_{\lambda }}^{B}\vert V \right) } = 2\) and \(\alpha _{V} = \frac{c\left( U_{c_{\lambda }}^{B}\vert V \right) }{c\left( U_{c_{\lambda }}^{B}\vert V \right) } = 1\), and so \(\mathcal {L}^{B} = \left\{ 1, 2 \right\} \). This set of likelihood ratios enables her in turn to specify, though not uniquely, the absolute likelihoods \(c\left( U_{c_{\lambda }}^{B}\vert B \right) \) and \(c\left( U_{c_{\lambda }}^{B}\vert V \right) \). For example, she might assign \(c\left( U_{c_{\lambda }}^{B}\vert B \right) = 0.4 \) and \(c\left( U_{c_{\lambda }}^{B}\vert V \right) = 0.2\), or \(c\left( U_{c_{\lambda }}^{B}\vert B \right) = 0.8 \) and \(c\left( U_{c_{\lambda }}^{B}\vert V \right) = 0.4\).
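To make the role of the likelihood ratios concrete, here is a minimal sketch of Ann’s case. It assumes, in the spirit of Pearl’s virtual evidence method, that each prior credence over \(\left\{ B, V \right\} \) is multiplied by the corresponding likelihood ratio and the result is renormalized; the paper’s own variant of the rule is stated later and may differ in detail. The function name `virtual_update` is hypothetical. The prior \(c\left( B \right) = 1/2\) follows from the urn set-up described in the Introduction.

```python
from fractions import Fraction


def virtual_update(prior, weights):
    """Multiply each prior credence by its weight and renormalize.

    `weights` may be likelihood ratios or absolute likelihoods; only
    their proportions matter for the result.
    """
    scaled = {e: prior[e] * weights[e] for e in prior}
    total = sum(scaled.values())
    return {e: value / total for e, value in scaled.items()}


# Prior credence that the drawn marble is blue: a fair coin selects
# urn X (8 blue of 10) or urn Y (2 blue of 10).
half = Fraction(1, 2)
prior_blue = half * Fraction(8, 10) + half * Fraction(2, 10)
prior = {"B": prior_blue, "V": 1 - prior_blue}

# Ann's likelihood ratios for the proposition that she updates on B.
ratios = {"B": Fraction(2), "V": Fraction(1)}
posterior = virtual_update(prior, ratios)

# The two assignments of absolute likelihoods mentioned in the text.
posterior_a = virtual_update(prior, {"B": Fraction(4, 10), "V": Fraction(2, 10)})
posterior_b = virtual_update(prior, {"B": Fraction(8, 10), "V": Fraction(4, 10)})
```

Under these assumptions the posterior leaves both B and V uncertain, and it is the same whichever of the two admissible assignments of absolute likelihoods is used, since only the ratio enters the computation.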

The above understanding of Pearl-style input can also be explained in terms of Ann’s error credences, as shown in Table 1. Think of \(U_{c_{\lambda }}^{B}\) and \(U_{c_{\lambda }}^{\lnot B}\), where \(\lnot B = V\), as the possible, mutually exclusive noisy signals of Ann’s learning experience. That is, before looking at the drawn marble, Ann thinks that she could mistake B for \(\lnot B\), and likewise she could mistake \(\lnot B\) for B. Thus, Ann thinks that there is some non-zero probability—Ann’s false positive credence—that \(U_{c_{\lambda }}^{B}\) would be true, even if B were not, and some non-zero probability—Ann’s false negative credence—that \(U_{c_{\lambda }}^{\lnot B}\) would be true even if B were true. Then, when Ann takes B as her evidence and adopts the posterior \(c_{\lambda }\) in response, the differential support that \(U_{c_{\lambda }}^{B}\) provides can be expressed as a likelihood ratio \(\alpha _{B}\) of false negative and false positive credences, \(\frac{1 - c\left( U_{c_{\lambda }}^{\lnot B}\vert B \right) }{c\left( U_{c_{\lambda }}^{B}\vert \lnot B \right) }\).

Why should we think that the problem of specifying uncertain evidence is philosophically important? Firstly, as it turns out, the way we choose to specify uncertain evidence helps us to resolve the problem of non-commutativity of JCondi: once you update sequentially by dint of JCondi, switching the order in which a pair of Jeffrey shifts over partitions \({\mathcal {E}}\) and \({\mathcal {E}}'\) is learned can yield different posterior credences in the end. This feature of JCondi is often regarded as its flaw. But, as shown by Field (1978) and developed by Wagner (2002), JCondi is commutative when identical learning is interpreted as identical Bayes factors. Note also that if uncertain evidence is represented by Skyrms input, then sequential updating on such uncertain evidence by a variant of BCondi, as given in Sect. 3, would also be commutative, since BCondi is essentially commutative. Secondly, as argued in Wagner (2009), a certain parametrization of JCondi which uses the agent’s Bayes factors rather than her posterior credences enables us to show that JCondi and so-called opinion pooling (a method of aggregating probabilistic credences) commute. That is, the result of pooling and then updating by JCondi is the same as first updating by JCondi and then pooling.

As we will show in what follows, the way we specify uncertain evidence also bears on whether or not updating on uncertain evidence both maximizes expected pragmatic utility and minimizes expected epistemic disutility (inaccuracy).
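The non-commutativity just described can be checked on a toy example. The sketch below is only illustrative: the four-world prior, the two partitions and the two Jeffrey shifts are hypothetical, and `jeffrey_update` is a name introduced here. It treats identical learning as identical posterior credences over the relevant partition and applies the two shifts in both orders.

```python
from fractions import Fraction


def jeffrey_update(credence, partition, shift):
    """Rescale credences within each cell so that the cell receives the
    posterior credence prescribed by `shift`."""
    posterior = {}
    for name, cell in partition.items():
        cell_mass = sum(credence[w] for w in cell)
        for w in cell:
            posterior[w] = credence[w] / cell_mass * shift[name]
    return posterior


# Hypothetical prior over four worlds in which A and B are correlated.
prior = {
    "A&B": Fraction(4, 10), "A&~B": Fraction(1, 10),
    "~A&B": Fraction(1, 10), "~A&~B": Fraction(4, 10),
}
partition_a = {"A": {"A&B", "A&~B"}, "~A": {"~A&B", "~A&~B"}}
partition_b = {"B": {"A&B", "~A&B"}, "~B": {"A&~B", "~A&~B"}}

# Hypothetical Jeffrey shifts, given as posterior credences.
shift_a = {"A": Fraction(8, 10), "~A": Fraction(2, 10)}
shift_b = {"B": Fraction(3, 10), "~B": Fraction(7, 10)}

a_then_b = jeffrey_update(jeffrey_update(prior, partition_a, shift_a),
                          partition_b, shift_b)
b_then_a = jeffrey_update(jeffrey_update(prior, partition_b, shift_b),
                          partition_a, shift_a)
```

In this example the later shift always fixes the credences over its own partition, so the two orders end with different credences: one ends with credence 3/10 in B, the other with credence 8/10 in A but a different credence in B.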

3 Updating on uncertain evidence

A widespread position in Bayesian epistemology is that JCondi is an appropriate update rule when the agent undergoes a learning experience which does not rationalize absolute certainty in any proposition. This rule may be presented as follows:

JCondi: Given a Jeffrey shift \(\mathcal {C}_{\lambda }^{\mathbb {E}}\), the agent’s posterior credence function \(c_{\lambda }\) should be such that, for every \(X \in {\mathcal {F}}\),

$$\begin{aligned} c_{\lambda }\left( X \right) = \sum _{E \in {\mathcal {E}}} c\left( X\vert E \right) \cdot c_{\lambda }\left( E \right) \end{aligned}$$
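A minimal sketch of this rule applied to Ann’s case may help. The prior over urn types and marble colours follows the set-up in the Introduction; the particular Jeffrey shift, with posterior credence 7/10 in B, is a hypothetical value chosen only for illustration, and `jcondi` is a name introduced here.

```python
from fractions import Fraction


def jcondi(prior, partition, shift, proposition):
    """Return sum over E of c(X|E) * c_lambda(E) for X = `proposition`."""
    total = Fraction(0)
    for name, cell in partition.items():
        c_e = sum(prior[w] for w in cell)
        c_x_and_e = sum(prior[w] for w in cell if w in proposition)
        total += c_x_and_e / c_e * shift[name]
    return total


# Worlds are (urn type, marble colour) pairs. A fair coin selects the urn;
# urn X holds 8 blue and 2 violet marbles, urn Y holds 2 blue and 8 violet.
prior = {
    ("X", "B"): Fraction(4, 10), ("X", "V"): Fraction(1, 10),
    ("Y", "B"): Fraction(1, 10), ("Y", "V"): Fraction(4, 10),
}
partition = {
    "B": {("X", "B"), ("Y", "B")},
    "V": {("X", "V"), ("Y", "V")},
}

# Hypothetical Jeffrey shift over {B, V}.
shift = {"B": Fraction(7, 10), "V": Fraction(3, 10)}

urn_is_x = {("X", "B"), ("X", "V")}
posterior_urn_x = jcondi(prior, partition, shift, urn_is_x)
```

Here \(c\left( X\vert B \right) = 0.8\) and \(c\left( X\vert V \right) = 0.2\), so with this shift Ann’s posterior credence that the urn is of type X is \(0.8 \cdot 0.7 + 0.2 \cdot 0.3 = 0.62\).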

Although any Jeffrey shift \(\mathcal {C}_{\lambda }^{\mathbb {E}}\) which does not rationalize absolute certainty in any proposition in \({\mathcal {E}}\) cannot be mediated by way of Bayes input in the credal state \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \), it might be tempting to think that it can be mediated by some other proposition in \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \) that we learn for certain. But if this were possible, there would be no need for JCondi in the first place. After all, this rule was motivated by the thought that there is no proposition in \({\mathcal {F}}\) to conditionalize upon. Consider Jeffrey’s candlelight case in which the agent inspects a piece of cloth by candlelight and gets the impression that it is green, although she concedes that it might be blue, or even violet. As Jeffrey (1983, p. 165) argued convincingly, there is no proposition that can convey the precise quality of this learning experience. And even if we allow for the possibility that the agent learns with certainty the proposition that the cloth appears green, this proposition would be too vague, for various learning experiences that fit this proposition would justify different credences in the proposition that the cloth is green (Christensen 1992). But we might well think that a Jeffrey shift can be mediated by the proposition \(R_{c_{\lambda }}^{{\mathcal {E}}}\) that the agent learns with certainty in \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \). After all, it seems plausible to think that, in the context of JCondi, the agent becomes certain that her credences over some partition \({\mathcal {E}}\) shifted in a certain way. But it seems that, in cases like Jeffrey’s candlelight example, this proposition does not describe the precise content of the agent’s learning experience, but merely summarizes the effect of this experience on her posterior credences over \({\mathcal {E}}\). 
And, again, if \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \) included this kind of proposition, there would be no need for JCondi in the first place. After all, one could just then use only BCondi and conditionalize on the proposition \(R_{c_{\lambda }}^{{\mathcal {E}}}\), so that, for every \(X \in {\mathcal {F}}, c_{\lambda }\left( X \right) = c\left( X \vert R_{c_{\lambda }}^{{\mathcal {E}}}\right) \).

Schwan and Stern (2017) have recently argued that what the agent learns with certainty in the context of JCondi can be represented by a dummy proposition, D, which says what the agent would have learned with certainty were she capable of expressing it. They claim that this in turn allows us to represent updating on uncertain evidence as conditionalization on a dummy proposition in \({\mathcal {F}}\). For example, when Ann looks at the drawn marble in a dim light and undergoes a Jeffrey shift over \(\left\{ B, V \right\} \), we can represent her as if she becomes certain of some ineffable-colour-proposition D. But though this view has considerable merit when combined with Schwan and Stern’s causal understanding of when the condition called rigidity (Footnote 6) is satisfied, it does not provide much help in establishing VET in the context of uncertain evidence. For VET requires an agent to determine prior credences over various evidence propositions that she might learn in the future, but the ineffability of the dummy proposition prevents it from entering into the calculation of her prior credences. In particular, we cannot determine Ann’s prior credence in the ineffable-colour-proposition D because we lack the ability to describe what Ann sees in a dim light.

Still, it seems reasonable to think that a Jeffrey shift can be mediated by way of a Skyrms input, \(\mathcal {C}_{\lambda }^{*R}\), i.e. by the proposition \(R_{c_{\lambda }}^{{\mathcal {E}}}\) that one learns with certainty in the extended credal state \(\left( {\mathcal {W}}^{*}, {\mathcal {F}}^{*}, c^{*} \right) \) (Footnote 7). If so, then we may think that when the agent updates by JCondi, she ought to update as if she were (i) expanding \({\mathcal {F}}\) to \({\mathcal {F}}^{*}\) and extending c to \(c^{*}\), (ii) conditionalizing \(c^{*}\) upon some \(R_{c_{\lambda }}^{{\mathcal {E}}}\) in \({\mathcal {F}}^{*}\) to get the posterior \(c_{\lambda }^{*}\), and then (iii) recovering her posterior \(c_{\lambda }\) over \({\mathcal {F}}\) by restricting \(c_{\lambda }^{*}\) to \({\mathcal {F}}\). This idea is captured by the extended Bayesian conditionalization:

EBCondi: Given a Skyrms input \(\mathcal {C}_{\lambda }^{*R}\), the agent’s posterior credence function \(c_{\lambda }\) should be such that, for every \(X \in {\mathcal {F}}\):

$$\begin{aligned} c_{\lambda }\left( X \right) = c^{*}(X^{*}\vert R_{c_{\lambda }}^{{\mathcal {E}}}). \end{aligned}$$

Skyrms (1980) has argued that, under some auxiliary assumptions, updating by EBCondi in \(\left( {\mathcal {W}}^{*}, {\mathcal {F}}^{*}, c^{*} \right) \) is equivalent to JCondi in \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \). This result can be stated more formally as follows:

Proposition 1

Suppose that \({\mathcal {E}}\) is a finite partition of \({\mathcal {W}}\) and \(R_{c_{\lambda }}^{{\mathcal {E}}} \in {\mathcal {F}}^{*}\). And suppose that \(c^{*}\) satisfies the following two conditions:

- \((C_1)\): For every \(E \in {\mathcal {E}}\), \(c^{*}\left( E^{*}\vert R_{c_{\lambda }}^{{\mathcal {E}}}\right) = c_{\lambda }\left( E \right) \), provided that \(c^{*}\left( R_{c_{\lambda }}^{{\mathcal {E}}} \right) > 0\).

- \((C_2)\): For every \(X \in {\mathcal {F}}\) and every \(E \in {\mathcal {E}}\), \(c^{*}\left( X^{*}\vert E^{*} \wedge R_{c_{\lambda }}^{{\mathcal {E}}}\right) = c^{*}(X^{*}\vert E^{*})\), provided that \(c^{*}( E^{*} \wedge R_{c_{\lambda }}^{{\mathcal {E}}}) > 0\).

Then, EBCondi \(\Leftrightarrow \) JCondi.

Proof

Given the conditions (C1) and (C2), the proof of Proposition 1 is straightforward:
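One way to spell out the derivation, writing R for \(R_{c_{\lambda }}^{{\mathcal {E}}}\) and assuming (this is not stated in the proposition itself) that the propositions \(E^{*}\) partition \({\mathcal {W}}^{*}\) and that \(c^{*}(X^{*}\vert E^{*}) = c(X\vert E)\), is the following:

$$\begin{aligned} c_{\lambda }\left( X \right) &= c^{*}\left( X^{*}\vert R \right) && \text {(EBCondi)} \\ &= \sum _{E \in {\mathcal {E}}} c^{*}\left( X^{*}\vert E^{*} \wedge R \right) \cdot c^{*}\left( E^{*}\vert R \right) && \text {(total probability)} \\ &= \sum _{E \in {\mathcal {E}}} c^{*}\left( X^{*}\vert E^{*} \right) \cdot c_{\lambda }\left( E \right) && \text {(by (C2) and (C1))} \\ &= \sum _{E \in {\mathcal {E}}} c_{\lambda }\left( E \right) \cdot c\left( X\vert E \right) . \end{aligned}$$

The last line is the JCondi posterior; reading the chain from bottom to top gives the converse direction.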

\(\square \)

More informally, Proposition 1 tells us that, given a fixed extended credal state \(\left( {\mathcal {W}}^{*}, {\mathcal {F}}^{*}, c^{*} \right) \) which satisfies both (C1) and (C2), updating by EBCondi in that state gives the same result as if the agent had updated her credences by JCondi in the smaller credal state \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \).

Note that condition (C1) may be understood as an instance of what Bas van Fraassen (1984) called the reflection principle, i.e. a principle which requires one’s current credences to defer to one’s future credences. After all, (C1) says that the agent’s prior conditional credence in proposition \(E^{*} \leftrightarrow E \in {\mathcal {F}}\) given that her posterior credences over \({\mathcal {E}}\) are determined by \(c_{\lambda } \) should be equal to the posterior credence in E, \(c_{\lambda }\left( E \right) \). The second condition (C2) tells us that once you learn that some E in the partition \({\mathcal {E}}\) is true, the information about your posterior credences over \({\mathcal {E}}\) should have no bearing on the truth of X.

Importantly, Proposition 1 is not the only way to establish how JCondi can be represented as Bayesian conditioning in some extended credal state. Another influential approach has been given by Diaconis and Zabell (1982, Theorem 2.1). They have identified a necessary and sufficient condition, sometimes called superconditioning, for one’s posterior credence to be the result of conditioning one’s prior credence in some larger credal space. It says that \(c_{\lambda }\) comes from c by conditioning in an extended credal space just in case there exists a number \(b \ge 0\) such that, for every \(X \in {\mathcal {F}}\), \(c_{\lambda }\left( X \right) \le b \cdot c\left( X \right) \). However, in contrast to Skyrms’s approach, Diaconis and Zabell’s approach places no constraints on what the extended credal state should look like. It only says that we can construct an extended credal space by adding two propositions a and b to the original credal space, where a says that the agent had the learning experience she had, and b indicates its absence. For this reason, Skyrms’s approach appears to be better suited for the task of providing VET in the case of uncertain evidence. For VET requires an agent to assign prior credences to propositions describing the possible outcomes of her learning experience. And in order to do this, the agent should be in a position to grasp the content of these propositions prior to her learning experience. But a is a proposition the agent is in a position to grasp only once she has already had the learning experience. Skyrms’s approach appears to deliver the required element by telling us that these propositions, represented by the \(R_{c_{\lambda }}^{{\mathcal {E}}}\)’s in the extended credal state, describe the possible Jeffrey shifts the agent might undergo, and hence their content can be grasped before the agent’s learning experience.

As we have argued in the previous section, we can also think of uncertain evidence in terms of a Pearl-style input. But how should the agent update her credences in response to this input? Here we propose a variant of what Pearl calls virtual conditionalization:Footnote 8

VCondi: Given a Pearl-style input \(\mathcal {L}^{E}\), an agent’s posterior credence in every \(X \in {\mathcal {F}}\) should be:

$$\begin{aligned} c_{\lambda }\left( X \right) = \frac{\sum _{E \in {\mathcal {E}}}\alpha _{E} \cdot c\left( X \wedge E \right) }{\sum _{E \in {\mathcal {E}}}\alpha _{E}\cdot c\left( E \right) }. \end{aligned}$$

Let us look at how VCondi works in Ann’s case. Suppose that Ann assigns equal credences to the proposition that the selected urn is of type X (S) and to the proposition that the selected urn is of type Y (\(\lnot S\)). Given the experimental set-up she faces, her prior credences for the propositions B, V, \(S\wedge B\), and \(S \wedge V\) can be determined as follows:

- \(c\left( B \right) = c\left( S \right) \cdot c\left( B\vert S \right) + c\left( \lnot S\right) \cdot c\left( B\vert \lnot S \right) = \frac{1}{2}\cdot \frac{8}{10} + \frac{1}{2} \cdot \frac{2}{10} = \frac{1}{2} \)

- \(c\left( V \right) = c\left( S \right) \cdot c\left( V\vert S \right) + c\left( \lnot S\right) \cdot c\left( V\vert \lnot S \right) = \frac{1}{2}\cdot \frac{2}{10} + \frac{1}{2} \cdot \frac{8}{10} = \frac{1}{2}\)

- \(c\left( S \wedge B \right) = c\left( S \right) \cdot c\left( B\vert S \right) = \frac{1}{2}\cdot \frac{8}{10} = \frac{2}{5} \)

- \(c\left( S \wedge V \right) = c\left( S \right) \cdot c\left( V\vert S \right) = \frac{1}{2}\cdot \frac{2}{10} = \frac{1}{10} \)

Ann then looks at the drawn marble in dim light, takes B as her evidence and adopts the posterior credence function \(c_{\lambda }\) in response. This in turn provides her with evidence for B and V whose differential support is settled by Ann as \(\alpha _{B} = \frac{c\left( U_{c_{\lambda }}^{B}\vert B \right) }{c\left( U_{c_{\lambda }}^{B}\vert V \right) } = 2\) and \(\alpha _{V} = \frac{c\left( U_{c_{\lambda }}^{B}\vert V \right) }{c\left( U_{c_{\lambda }}^{B}\vert V \right) } = 1\). Then, VCondi prescribes the following posterior credence for S:
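Substituting the prior credences and the likelihood ratios stated above into VCondi (a worked reconstruction from those values) gives:

$$\begin{aligned} c_{\lambda }\left( S \right) = \frac{\alpha _{B} \cdot c\left( S \wedge B \right) + \alpha _{V} \cdot c\left( S \wedge V \right) }{\alpha _{B} \cdot c\left( B \right) + \alpha _{V} \cdot c\left( V \right) } = \frac{2 \cdot \frac{2}{5} + 1 \cdot \frac{1}{10}}{2 \cdot \frac{1}{2} + 1 \cdot \frac{1}{2}} = \frac{\frac{9}{10}}{\frac{3}{2}} = \frac{3}{5}. \end{aligned}$$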

Similarly, VCondi shows that, when after looking at the drawn marble in a dim light, Ann updates on B, she should become more confident in B, though still less than certain. That is:
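With the values stated above, and using \(c\left( B \wedge B \right) = c\left( B \right) = \frac{1}{2}\) and \(c\left( B \wedge V \right) = 0\), VCondi gives \(c_{\lambda }\left( B \right) = \frac{2 \cdot \frac{1}{2} + 1 \cdot 0}{\frac{3}{2}} = \frac{2}{3}\). The following sketch checks both of Ann’s VCondi posteriors with exact fractions. It is illustrative only: the helper name `vcondi` and the dictionary layout are assumptions made here, while the numbers are the priors and likelihood ratios given in the text.

```python
from fractions import Fraction


def vcondi(c_x_and_e, c_e, alpha):
    """VCondi posterior for X: sum_E alpha_E * c(X & E) / sum_E alpha_E * c(E)."""
    numerator = sum(alpha[e] * c_x_and_e[e] for e in alpha)
    denominator = sum(alpha[e] * c_e[e] for e in alpha)
    return numerator / denominator


half = Fraction(1, 2)
c_e = {"B": half, "V": half}                               # c(B), c(V)
alpha = {"B": Fraction(2), "V": Fraction(1)}               # likelihood ratios
c_s_and_e = {"B": Fraction(2, 5), "V": Fraction(1, 10)}    # c(S & B), c(S & V)
c_b_and_e = {"B": half, "V": Fraction(0)}                  # c(B & B), c(B & V)

posterior_s = vcondi(c_s_and_e, c_e, alpha)
posterior_b = vcondi(c_b_and_e, c_e, alpha)
print(posterior_s, posterior_b)
```

Exact rational arithmetic is used so that the results can be compared directly with the fractions in the text.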

Although VCondi seems to deliver intuitively correct answers in the context of learning from uncertain evidence, one might still ask if it is a rational update method to follow. Specifically, VCondi hinges on the assumption that, after learning, the agent takes her evidence to be some E from \({\mathcal {E}}\) and adopts the posterior credence function \(c_{\lambda }\) in response. But why shouldn’t we think that the agent adopts this posterior also for reasons other than taking E as her evidence? For example, suppose that you take E as your evidence and you believe it provides strong support for your scientific hypothesis X. But you are a scientist in a small town, and you suspect that, like most small-town scientists, you will very soon come to justifiably doubt your hypothesis. So your posterior credence in X is not only a result of taking E as your evidence, but also a result of expecting evidence against X in the near future. But this is hardly acceptable, for expecting evidence against X is not the same as possessing or taking E as evidence against X.Footnote 9 Yet there is nothing in the update mechanism of VCondi that precludes this possibility.

But there seems to be a reasonable way to prevent the above possibility. It rests on the following condition:

CIndi: For every \(E \in {\mathcal {E}}\) and \(X \in {\mathcal {F}}\),

$$\begin{aligned} c\left( U_{c_{\lambda }}^{E}\vert E \wedge X \right) = c\left( U_{c_{\lambda }}^{E}\vert E \right) . \end{aligned}$$

That is, CIndi, when imposed on one’s prior credences, expresses a relation of conditional independence between \(U_{c_{\lambda }}^{E}\) and X given E. It says that once we suppose E, the propositions \(U_{c_{\lambda }}^{E}\) and X cease to bear any information about one another. Recall the small-town scientist’s example. Suppose you think that you are more likely to have a high posterior credence in X when you don’t expect evidence against X than when you do. What CIndi says is that, given E, your posterior credence function which assigns a high credence to X should be insensitive to whether or not you expect evidence against X. This seems intuitively right, for you adopt your posterior credence in X in response to taking E as your evidence, which provides strong support for X, and not in response to expecting evidence against X.

As it turns out, when we assume CIndi, an agent who updates by VCondi would satisfy an instance of the reflection principle which goes as follows:

Reflection: For every \(X \in {\mathcal {F}}\),

$$\begin{aligned} c\left( X\vert U_{c_{\lambda }}^{E} \right) = c_{\lambda }\left( X \right) , \end{aligned}$$provided that \(c\left( U_{c_{\lambda }}^{E} \right) > 0\).

That is, this principle says that your prior credence in X given your anticipated posterior credence function over \({\mathcal {F}}\) which results from taking E as your evidence should be equal to your anticipated posterior credence in X.Footnote 10 Note that the proposition \(U_{c_{\lambda }}^{E}\) does not specify the mechanism by which you arrive at the posterior credence function in response to taking E as your evidence. It thus describes your anticipated posterior credence function after the black-box learning event, where the content of your learning experience is left opaque (see Huttegger 2017, ch. 5.4). For your learning experience does not tell you which proposition in \({\mathcal {E}}\) is true.

More precisely, we can state the following proposition:

Proposition 2

Suppose that an agent’s prior credences satisfy CIndi. Then, VCondi \(\Leftrightarrow \) Reflection.

Proof

(VCondi \(\Rightarrow \) Reflection)

Suppose c satisfies CIndi and \(c_{\lambda }\) satisfies VCondi. Then:
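A sketch of this direction, writing U for \(U_{c_{\lambda }}^{E}\) and \(E'\) for an arbitrary cell of \({\mathcal {E}}\). It assumes, as in Ann’s example, that each \(\alpha _{E'}\) equals the likelihood \(c\left( U\vert E' \right) \) divided by a common positive constant, and that CIndi holds for every cell \(E'\); both assumptions are readings of the definitions rather than statements taken from the text:

$$\begin{aligned} c\left( X\vert U \right) &= \frac{\sum _{E' \in {\mathcal {E}}} c\left( U\vert E' \wedge X \right) \cdot c\left( X \wedge E' \right) }{\sum _{E' \in {\mathcal {E}}} c\left( U\vert E' \right) \cdot c\left( E' \right) } && \text {(total probability)} \\ &= \frac{\sum _{E' \in {\mathcal {E}}} c\left( U\vert E' \right) \cdot c\left( X \wedge E' \right) }{\sum _{E' \in {\mathcal {E}}} c\left( U\vert E' \right) \cdot c\left( E' \right) } && \text {(CIndi)} \\ &= \frac{\sum _{E' \in {\mathcal {E}}} \alpha _{E'} \cdot c\left( X \wedge E' \right) }{\sum _{E' \in {\mathcal {E}}} \alpha _{E'} \cdot c\left( E' \right) } && \text {(common constant cancels)} \\ &= c_{\lambda }\left( X \right) . && \text {(VCondi)} \end{aligned}$$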

(Reflection \(\Rightarrow \) VCondi)

Suppose c satisfies CIndi and \(c_{\lambda }\) satisfies Reflection. Then:
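A sketch of this direction under the same reading of the definitions (U abbreviates \(U_{c_{\lambda }}^{E}\), each \(\alpha _{E'}\) is assumed proportional to \(c\left( U\vert E' \right) \) with a common positive constant, and CIndi is assumed to hold for every cell \(E'\) of \({\mathcal {E}}\)):

$$\begin{aligned} c_{\lambda }\left( X \right) &= c\left( X\vert U \right) && \text {(Reflection)} \\ &= \frac{\sum _{E' \in {\mathcal {E}}} c\left( U\vert E' \wedge X \right) \cdot c\left( X \wedge E' \right) }{\sum _{E' \in {\mathcal {E}}} c\left( U\vert E' \right) \cdot c\left( E' \right) } && \text {(total probability)} \\ &= \frac{\sum _{E' \in {\mathcal {E}}} \alpha _{E'} \cdot c\left( X \wedge E' \right) }{\sum _{E' \in {\mathcal {E}}} \alpha _{E'} \cdot c\left( E' \right) }, && \text {(CIndi; common constant cancels)} \end{aligned}$$

which is the posterior prescribed by VCondi.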

It is important to emphasize that the above result may be understood as providing guidance for rational updating in the context of uncertain evidence. For it is often argued that reflection principles regulate rational learning in the sense that an agent cannot violate some instance of the reflection principle and at the same time think that she will form her posterior credences in a rational way (see, e.g. Skyrms 1990, 1997; Huttegger 2013, 2017). For example, when you update your credences in cases involving forgetting or memory loss, you often violate reflection principles and hence you do not form your posterior credences by rational learning.Footnote 11 If so, then the above result tells us how learning uncertain evidence can be deemed rational. After all, it implies that when VCondi is combined with CIndi, the agent’s credences satisfy an instance of the reflection principle, and hence are mandated by a rational learning process.

4 Graves’s extension of VET

In this section, we present a variant of Graves’s extension of VET. Before doing so, however, let us highlight a few assumptions of this result.

First, we assume that an agent faces a decision problem \(\left\langle \mathcal {A}, \mathcal {S}, c, u \right\rangle \), in which \(\mathcal {A}\) is a finite partition of propositions \(A_{1}, ..., A_{n}\) representing the actions available to her, \(\mathcal {S}\) is a finite set of propositions \(S_{1}, ..., S_{n}\) representing the possible states of the world upon which the consequences of the actions depend, c is the agent’s credence function in her credal state \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \), and u is the agent’s utility function which assigns to propositions of the form \(A \wedge S\) a number that measures the pragmatic utility that would result for the agent were the act A to be performed in state S.Footnote 12

Second, following Graves, we assume that before undergoing a learning experience, the agent contemplates a finite set \(\mathcal {R}\) of possible Jeffrey shifts. Each of these possible Jeffrey shifts can be represented as a proposition \(R_{c_{\lambda }}^{{\mathcal {E}}}\) that specifies the agent’s assignment of posterior credences to the members of \({\mathcal {E}}\). Thus, what is crucial to Graves’s argument is that there exists an extension \(\left( {\mathcal {W}}^{*}, {\mathcal {F}}^{*}, c^{*} \right) \) of \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \) such that:

- \((G_1)\): For every \(S \in {\mathcal {F}}\), \(c^{*}\left( S^{*} \right) = c\left( S\right) \).

- \((G_2)\): For some \({\mathcal {E}} \subseteq {\mathcal {F}}\) and a finite set of posterior credence functions \(\mathcal {C}_{\lambda }\), \(\mathcal {R} \subseteq {\mathcal {W}}^{*}\).

- \((G_3)\): \(c^{*}\) satisfies conditions (C1) and (C2).

With the above in mind, observe first that, prior to undergoing a Jeffrey shift, the agent would choose the prior Bayes act, i.e. the act A that maximizes

By (G1), (3) is equivalent toFootnote 13
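On the usual reading of expected pragmatic utility as a credence-weighted sum of utilities over the states in \(\mathcal {S}\) (an assumption here), the quantities labelled (3) and (4) would take the form:

$$\begin{aligned} \mathbb {E}_{c}\left[ u\left( A \right) \right] &= \sum _{S \in \mathcal {S}} c\left( S \right) \cdot u\left( A \wedge S \right) , && (3) \\ \mathbb {E}_{c^{*}}\left[ u\left( A \right) \right] &= \sum _{S \in \mathcal {S}} c^{*}\left( S^{*} \right) \cdot u\left( A \wedge S \right) . && (4) \end{aligned}$$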

After undergoing a learning experience which results in a Jeffrey shift \(\mathcal {C}_{\lambda }^{\mathbb {E}}\), the agent would choose an act A to maximize

That is, she would choose the act A that maximizes expected pragmatic utility calculated relative to the agent’s posterior credence function, \(c_{\lambda }\), recommended by JCondi. By Proposition 1, (5) is equivalent to
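Assuming again that expected pragmatic utility is a credence-weighted sum over the states in \(\mathcal {S}\), the posterior quantities labelled (5) and (6) would read:

$$\begin{aligned} \mathbb {E}_{c_{\lambda }}\left[ u\left( A \right) \right] &= \sum _{S \in \mathcal {S}} c_{\lambda }\left( S \right) \cdot u\left( A \wedge S \right) , && (5) \\ \mathbb {E}_{c_{\lambda }^{*}}\left[ u\left( A \right) \vert R_{c_{\lambda }}^{{\mathcal {E}}} \right] &= \sum _{S \in \mathcal {S}} c^{*}\left( S^{*}\vert R_{c_{\lambda }}^{{\mathcal {E}}} \right) \cdot u\left( A \wedge S \right) . && (6) \end{aligned}$$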

Since we want to know ex ante whether updating by JCondi is always helpful in making practical decisions, we need to determine an expectation of \(\max _{A \in \mathcal {A}}\mathbb {E}_{c_{\lambda }^{*}}\left[ u\left( A \right) \vert R_{c_{\lambda }}^{{\mathcal {E}}} \right] \), which is the maximal value of making a choice after undergoing a Jeffrey shift (i.e. the value of the posterior Bayes act). Because it is assumed that the agent considers ex ante a finite set of possible Jeffrey shifts, and these shifts can be represented by propositions \(R_{c_{\lambda }}^{{\mathcal {E}}}\) from the partition \(\mathcal {R}\), this can be achieved by weighting the value of the posterior Bayes act by the prior credence of \(R_{c_{\lambda }}^{{\mathcal {E}}}\). Hence, the expectation of the posterior Bayes act is:

Note that, by the equivalence of (5) and (6), \(\max _{A \in \mathcal {A}}\mathbb {E}_{c_{\lambda }^{*}}\left[ u\left( A \right) \vert R_{c_{\lambda }}^{{\mathcal {E}}} \right] = \max _{A \in \mathcal {A}}\mathbb {E}_{c_{\lambda }}\left[ u\left( A \right) \right] \), and hence (7) is equivalent to
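Read as prior-weighted averages over the possible Jeffrey shifts in \(\mathcal {R}\), the expectations labelled (7) and (8) would be the following (a reconstruction from the surrounding description):

$$\begin{aligned} &\sum _{R_{c_{\lambda }}^{{\mathcal {E}}} \in \mathcal {R}} c^{*}\left( R_{c_{\lambda }}^{{\mathcal {E}}} \right) \cdot \max _{A \in \mathcal {A}}\mathbb {E}_{c_{\lambda }^{*}}\left[ u\left( A \right) \vert R_{c_{\lambda }}^{{\mathcal {E}}} \right] , && (7) \\ &\sum _{R_{c_{\lambda }}^{{\mathcal {E}}} \in \mathcal {R}} c^{*}\left( R_{c_{\lambda }}^{{\mathcal {E}}} \right) \cdot \max _{A \in \mathcal {A}}\mathbb {E}_{c_{\lambda }}\left[ u\left( A \right) \right] . && (8) \end{aligned}$$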

Now let us introduce the quantity \(\Delta ^{u}_{c_{\lambda }^{*}} \left( A_{R}, A_{\max } \right) \), which is the difference in expected pragmatic utility between the maximizer of (6), \(A_{R}\), and the maximizer of (4), \(A_{\max }\), as assessed by the agent’s posterior credence \(c_{\lambda }^{*}(S^{*}) = c^{*}\left( S^{*}\vert R_{c_{\lambda }}^{{\mathcal {E}}} \right) \) for every \(S^{*} \in {\mathcal {F}}^{*}\):
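Assuming that both acts are evaluated by the credence-weighted sum of utilities over the states in \(\mathcal {S}\), this quantity can be written as:

$$\begin{aligned} \Delta ^{u}_{c_{\lambda }^{*}} \left( A_{R}, A_{\max } \right) = \sum _{S \in \mathcal {S}} c^{*}\left( S^{*}\vert R_{c_{\lambda }}^{{\mathcal {E}}} \right) \cdot \left[ u\left( A_{R} \wedge S \right) - u\left( A_{\max } \wedge S \right) \right] . \end{aligned}$$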

Importantly, notice that \(\Delta ^{u}_{c_{\lambda }^{*}} \left( A_{R}, A_{\max }\right) \ge 0\), for if \(A_{\max } = A_{R}\), then \(\Delta ^{u}_{c_{\lambda }^{*}} \left( A_{R}, A_{\max }\right) = 0\), and if \(A_{\max } \ne A_{R}\), then \(\Delta ^{u}_{c_{\lambda }^{*}} \left( A_{R}, A_{\max }\right) \ge 0\) because \(A_{R}\) maximizes (6), with strict inequality unless \(A_{\max }\) is also a maximizer of (6).

We can also determine the expectation of \(\Delta ^{u}_{c_{\lambda }^{*}} \left( A_{R}, A_{\max }\right) \) relative to the agent’s extended prior function \(c^{*}\) over \(\mathcal {R}\):
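Taking the expectation as a prior-weighted average over the shifts in \(\mathcal {R}\) (an assumption matching the description above, with \(A_{R}\) depending on the shift in question), it would read:

$$\begin{aligned} \mathbb {E}_{c^{*}}\left[ \Delta ^{u}_{c_{\lambda }^{*}} \left( A_{R}, A_{\max }\right) \right] = \sum _{R_{c_{\lambda }}^{{\mathcal {E}}} \in \mathcal {R}} c^{*}\left( R_{c_{\lambda }}^{{\mathcal {E}}} \right) \cdot \Delta ^{u}_{c_{\lambda }^{*}} \left( A_{R}, A_{\max }\right) . \end{aligned}$$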

Given the above notions, it is now not difficult to show that the maximal value of choosing now cannot be greater than the expected value of choosing after updating by JCondi. To begin with, observe that since \(\Delta ^{u}_{c_{\lambda }^{*}} \left( A_{R}, A_{\max }\right) \ge 0\), its expected value is also non-negative, and thus:

Then, by the definition of (9), we get

Then, we get

By the law of total probability we have \(c^{*}(S^{*}) = \sum _{R_{c_{\lambda }}^{{\mathcal {E}}} \in \mathcal {R}} c^{*}\left( R_{c_{\lambda }}^{{\mathcal {E}}} \right) \cdot c^{*}\left( S^{*}\vert R_{c_{\lambda }}^{{\mathcal {E}}} \right) \), and hence (14) reduces to

Hence, we have

And since (3) is equivalent to (4) and (5) is equivalent to (6), we get
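The steps of this argument can be sketched as a single chain, writing R for \(R_{c_{\lambda }}^{{\mathcal {E}}}\) and assuming that expected utility is the credence-weighted sum over the states in \(\mathcal {S}\):

$$\begin{aligned} &\sum _{R \in \mathcal {R}} c^{*}\left( R \right) \cdot \Delta ^{u}_{c_{\lambda }^{*}} \left( A_{R}, A_{\max }\right) \ge 0 \\ \Rightarrow \;&\sum _{R \in \mathcal {R}} c^{*}\left( R \right) \sum _{S \in \mathcal {S}} c^{*}\left( S^{*}\vert R \right) \cdot u\left( A_{R} \wedge S \right) \ge \sum _{R \in \mathcal {R}} c^{*}\left( R \right) \sum _{S \in \mathcal {S}} c^{*}\left( S^{*}\vert R \right) \cdot u\left( A_{\max } \wedge S \right) \\ \Rightarrow \;&\sum _{R \in \mathcal {R}} c^{*}\left( R \right) \sum _{S \in \mathcal {S}} c^{*}\left( S^{*}\vert R \right) \cdot u\left( A_{R} \wedge S \right) \ge \sum _{S \in \mathcal {S}} c^{*}\left( S^{*} \right) \cdot u\left( A_{\max } \wedge S \right) \\ \Rightarrow \;&\sum _{R \in \mathcal {R}} c^{*}\left( R \right) \cdot \max _{A \in \mathcal {A}}\mathbb {E}_{c_{\lambda }^{*}}\left[ u\left( A \right) \vert R \right] \ge \max _{A \in \mathcal {A}}\mathbb {E}_{c^{*}}\left[ u\left( A \right) \right] \\ \Rightarrow \;&\sum _{R \in \mathcal {R}} c^{*}\left( R \right) \cdot \max _{A \in \mathcal {A}}\mathbb {E}_{c_{\lambda }}\left[ u\left( A \right) \right] \ge \max _{A \in \mathcal {A}}\mathbb {E}_{c}\left[ u\left( A \right) \right] , \end{aligned}$$

where the second line unpacks the definition of \(\Delta ^{u}_{c_{\lambda }^{*}}\), the third uses the law of total probability, the fourth uses the fact that \(A_{R}\) and \(A_{\max }\) are the respective maximizers, and the last moves from the extended to the original credal state.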

as required. Thus, we have shown that if we utilize an extended credal state in which \(c^{*}\) satisfies conditions (C1) and (C2), then the agent’s update in response to uncertain evidence recommended by JCondi won’t result in making foreseeably harmful practical decisions.
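The inequality can be illustrated numerically. The sketch below is not Graves’s construction: the two shift propositions `R1` and `R2`, their prior weights, and the 0/1 utilities for guessing the urn are hypothetical values chosen for illustration. The prior over states is computed as the prior-weighted mixture of the two posteriors, so that the law of total probability used in the argument holds by construction.

```python
from fractions import Fraction


def expected_utility(credence, utility, act):
    """Credence-weighted sum of utilities of `act` over the states."""
    return sum(credence[s] * utility[(act, s)] for s in credence)


def bayes_value(credence, utility, acts):
    """Expected utility of the best available act under `credence`."""
    return max(expected_utility(credence, utility, a) for a in acts)


acts = ["guess_X", "guess_Y"]
# Hypothetical utilities: 1 for a correct guess, 0 otherwise.
utility = {
    ("guess_X", "S"): 1, ("guess_X", "not_S"): 0,
    ("guess_Y", "S"): 0, ("guess_Y", "not_S"): 1,
}
# Hypothetical shifts: prior weight c*(R) and posterior over states given R.
shifts = {
    "R1": (Fraction(1, 2), {"S": Fraction(3, 5), "not_S": Fraction(2, 5)}),
    "R2": (Fraction(1, 2), {"S": Fraction(2, 5), "not_S": Fraction(3, 5)}),
}
# Prior over states recovered by total probability.
prior = {
    s: sum(weight * posterior[s] for weight, posterior in shifts.values())
    for s in ("S", "not_S")
}

value_now = bayes_value(prior, utility, acts)
value_after = sum(
    weight * bayes_value(posterior, utility, acts)
    for weight, posterior in shifts.values()
)
print(value_now, value_after)
```

In this toy case the agent is indifferent between the two guesses ex ante, whereas each possible shift breaks the tie, so waiting has strictly higher expected value.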

However, Graves’s extension of VET seems problematic for at least two reasons. First, one might claim that it imposes unrealistic cognitive demands on decision-makers. That is, it requires that they must have ex ante prior credences over all the possible Jeffrey shifts they could undergo in the future. But it is doubtful that, in Ann’s case, before looking at the drawn marble, she is aware of all the possible ways by which her visual experience can prompt changes in her initial credences. For a Jeffrey shift is understood as the agent’s probabilistic judgement over \({\mathcal {E}}\) or what she takes away from experience rather than what experience delivers to her. And it is perfectly possible that, when undergoing her learning experience, Ann would actually judge probabilistically in a way that she has not considered prior to her learning experience. But how fatal is this objection?

In response, one may argue that this is just an instance of a more general problem, which says that it is implausible that each piece of evidence you might learn is one about which you had a prior credence. After all, it is tempting to think that you might learn a proposition that you do not already grasp. But if this is so, then this problem also affects the original VET which shows that updating by BCondi maximizes expected pragmatic utility. After all, it assumes that each proposition that you might conditionalize upon is already contained in the partition \({\mathcal {E}}\) over which you have prior credences. Hence, the cognitive demands imposed by Graves’s extension of VET are no more unrealistic than those imposed by the original VET.

Still, we think that this problem affects Graves’s approach more severely. For, as is often claimed, JCondi does not even tell us what partition of propositions should be affected by a given learning experience (Christensen 1992; Weisberg 2009). So, prior to her learning experience, the agent might not even be able to identify correctly the partition of propositions over which she would actually undergo a Jeffrey shift. That is, the agent might contemplate ex ante various Jeffrey shifts over partition \({\mathcal {E}}\) when in fact her learning experience would directly affect an entirely different partition \({\mathcal {E}}'\). For example, although in Ann’s case, she may stipulate ex ante that her learning experience would directly affect the partition \(\left\{ B, V \right\} \), there is no normative guidance as to how she could prevent the possibility that it would actually affect the partition \(\left\{ B \wedge L, V \wedge L\right\} \), where L is the proposition that the lighting is dim. Therefore, by assuming that, prior to a learning experience, the agent is always in a position to grasp correctly the set \({\mathcal {E}}\) that would be directly affected by her anticipated learning experience, Graves’s approach imposes far more unrealistic cognitive demands than the original VET.

The second problem involves accuracy considerations, and, as we will argue, is more threatening to Graves’s approach than the first one. If we grant that the only goal of updating our credences is to improve our practical decisions, i.e. to maximize expected pragmatic utility, then Graves’s approach seems successful. But many philosophers claim that, alongside practical goals, we should update our credences in a way that could also be regarded as epistemically rational. In particular, some argue that our updated credences should minimize expected inaccuracy, i.e. they should be as close as possible to the truth, from the standpoint of our prior credences (e.g. Greaves and Wallace 2006; Leitgeb and Pettigrew 2010). If this is the case, then we will argue that Graves’s extension of VET cannot guarantee that updating on uncertain evidence would be both practically and epistemically rational. Since this argument requires more scrutiny, we devote the next section to it.

5 Graves’s extension of VET and accuracy

In the previous section, we have seen that if the agent obeys JCondi, then updating on uncertain evidence would never lead, in expectation, to worse practical decisions. In this section, we argue that this approach is in tension with a purely epistemic approach which seeks to justify the agent’s dynamic norms for credences as a consequence of the rational pursuit of accuracy. That is, if we apply the machinery of accuracy-first epistemology, then we must conclude that there appears to be no acceptable way to show that JCondi minimizes expected inaccuracy. Therefore, for an expected inaccuracy minimizer, Graves’s extension of VET cannot be satisfactory, since it establishes VET for JCondi and this update rule is hardly, if at all, justifiable in terms of the rational pursuit of accuracy.

To make this point more precise, we assume, following accuracy-firsters, that we have a local epistemic disutility function (or local inaccuracy measure) for each proposition X, \({\mathfrak {s}}_{X}\), which takes X’s truth-value at w, w(X), and the credence c(X) and returns the local epistemic disutility (or inaccuracy) of having that credence in X at a world in which X’s truth-value is w(X), \({\mathfrak {s}}_{X}\left( w(X), c(X) \right) \). There are some desirable properties that this function should have, and they single out the class of strictly proper scoring rules. These properties are the following:

Extensionality: \({\mathfrak {s}}_{X} \) is extensional if it can be thought of as two functions: \({\mathfrak {s}}_{X}\left( 1, x \right) \) which gives the local inaccuracy of having credence x in X when X is true, and \({\mathfrak {s}}_{X}\left( 0, x \right) \) which gives the local inaccuracy of having credence x when X is false.

Strict Propriety: \({\mathfrak {s}}_{X}\) is strictly proper if for all \(0\le p, x \le 1\),

$$\begin{aligned} p \cdot {\mathfrak {s}}_{X}\left( 1, p \right) + (1 - p)\cdot {\mathfrak {s}}_{X}\left( 0, p \right) \le p \cdot {\mathfrak {s}}_{X}\left( 1, x \right) + (1 - p)\cdot {\mathfrak {s}}_{X}\left( 0, x \right) , \end{aligned}$$with equality iff \(x = p\).

Continuity: \({\mathfrak {s}}_{X}\) is a continuous function of x on [0, 1].

More informally, Extensionality says that the inaccuracy of having credence x in proposition X depends only on whether X is true or false. Strict Propriety tells us that an agent with probabilistic credence p in proposition X expects that credence, and only that credence, to have the lowest inaccuracy. And Continuity says that the inaccuracy of having credence x in X varies continuously with that credence.

A standard expected inaccuracy-minimization argument for an update rule says that, before the evidence is in, an agent should expect to have less inaccurate posterior credences recommended by that rule than by any other update rule. To establish such an argument, accuracy-firsters say that we should evaluate our posterior credences by looking at their expected inaccuracies, where the expectation is taken relative to a prior credence function. Can we establish such an argument in the case where your posterior credence in X is recommended by JCondi?

As shown by Leitgeb and Pettigrew (2010), the answer is negative. Their point is that, under the quadratic scoring rule, one’s posterior credence in any \(X \in {\mathcal {F}}\) that results from JCondi does not minimize expected local inaccuracy given by
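Assuming that the expectation is taken over the worlds in \({\mathcal {W}}\) by the lights of the prior c, with \(\mathfrak {q}_{X}\) the quadratic scoring rule, the expected local inaccuracy of the posterior credence \(c_{\lambda }\left( X \right) \) can be written as:

$$\begin{aligned} \mathbb {E}_{c}\left[ \mathfrak {q}_{X}\left( w(X), c_{\lambda }(X) \right) \right] = \sum _{w \in {\mathcal {W}}} c\left( \left\{ w \right\} \right) \cdot \mathfrak {q}_{X}\left( w(X), c_{\lambda }\left( X \right) \right) . \end{aligned}$$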

That is, if our goal is to choose a posterior credence function \(c_{\lambda }\) that assigns credence \(c_{\lambda }\left( E \right) \) for all \(E \in {\mathcal {E}}\) (i.e. satisfies the constraints imposed by a Jeffrey shift) and is minimal with respect to the expected local inaccuracy of the credence it assigns to each \(X \in {\mathcal {F}}\) by the lights of one’s prior credence function c over the set of possible worlds \({\mathcal {W}}\), then this cannot be achieved by selecting the posterior credence function that results from JCondi. A crucial assumption of this result is that the local inaccuracy is measured by the quadratic scoring rule, \(\mathfrak {q}_{X} = \left( i - x\right) ^{2}\), where \(i = 1\) or 0. That is, this rule gives (i) the squared difference \(\left( 1 - x\right) ^{2}\) between the credence x in X and the value \(w(X) = 1\) of the indicator function of w (\(w(X) = 1\) if X is true at w), and (ii) the squared difference \(\left( 0 - x\right) ^{2}\) between the credence x in X and the value \(w(X) = 0\) of the indicator function of w (\(w(X) = 0\) if X is false at w). Importantly, \(\mathfrak {q}_{X}\) is a strictly proper scoring rule.
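The strict propriety of the quadratic rule can be checked on a grid of credences. The sketch below is a numerical illustration rather than a proof; the function names and the grid of twentieths are choices made here. For each probabilistic credence p it computes the expected score \(p \cdot (1 - x)^{2} + (1 - p)\cdot x^{2}\) for every x on the grid and records the minimizer.

```python
from fractions import Fraction


def quadratic(i, x):
    """Quadratic scoring rule: squared distance between truth value i and credence x."""
    return (i - x) ** 2


def expected_score(p, x):
    """Expected inaccuracy of credence x by the lights of credence p."""
    return p * quadratic(1, x) + (1 - p) * quadratic(0, x)


grid = [Fraction(k, 20) for k in range(21)]

# For each p, find the grid credence with the lowest expected inaccuracy.
minimizers = {p: min(grid, key=lambda x: expected_score(p, x)) for p in grid}

# Strictness: every x different from p does strictly worse than p itself.
strict = all(
    expected_score(p, x) > expected_score(p, p)
    for p in grid for x in grid if x != p
)
print(all(minimizers[p] == p for p in grid), strict)
```

The check reflects the identity \(p \cdot (1 - x)^{2} + (1 - p)\cdot x^{2} = (x - p)^{2} + p(1 - p)\), whose unique minimum is at \(x = p\).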

To make Leitgeb and Pettigrew’s point more concrete, let us first introduce their alternative update rule:

LPCondi: Given a Jeffrey shift \(\mathcal {C}_{\lambda }^{\mathbb {E}}\), let \(d_{E}\) be the unique real number such that

$$\begin{aligned} \sum _{\left\{ w \in E \ : \ c\left( \left\{ w \right\} \right) + d_{E} > 0 \right\} } c\left( \left\{ w \right\} \right) + d_{E} = c_{\lambda }(E). \end{aligned}$$Then, the agent’s posterior credence function \(c_{\lambda }\) should be such that, for \(w \in E\),

$$\begin{aligned} c_{\lambda }\left( \left\{ w \right\} \right) = {\left\{ \begin{array}{ll} c\left( \left\{ w \right\} \right) + d_{E}, &{} \text {for }c\left( \left\{ w \right\} \right) + d_{E} > 0\\ 0, &{} \text {for }c\left( \left\{ w \right\} \right) + d_{E} \le 0. \end{array}\right. } \end{aligned}$$
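The constant \(d_{E}\) can be found by a simple iterative procedure. The sketch below is one way to do this, not Leitgeb and Pettigrew’s own presentation: the function name `lp_condi_cell`, the dictionary representation of a cell, and the example numbers are assumptions made here, and the posterior weight of the cell is assumed to be positive. It spreads the change in the cell’s credence evenly over the worlds still in play, drops any world that would fall to zero or below, and repeats until no further world is dropped.

```python
from fractions import Fraction


def lp_condi_cell(prior, target):
    """LPCondi within one cell E.

    prior:  dict mapping each world in E to its prior credence c({w}).
    target: the posterior credence c_lambda(E), assumed positive.
    Returns (posterior credences for the worlds in E, d_E).
    """
    if target <= 0:
        raise ValueError("this sketch assumes c_lambda(E) > 0")
    active = set(prior)
    while True:
        # Even shift that makes the active worlds sum to the target.
        d = (target - sum(prior[w] for w in active)) / len(active)
        dropped = {w for w in active if prior[w] + d <= 0}
        if not dropped:
            break
        active -= dropped
    posterior = {w: prior[w] + d if w in active else Fraction(0) for w in prior}
    return posterior, d


# Hypothetical cell in which one world is pushed down to zero.
cell = {"a": Fraction(1, 2), "b": Fraction(1, 20)}
posterior, d_e = lp_condi_cell(cell, Fraction(1, 4))
print(posterior, d_e)
```

When no world is dropped, the procedure stops after one pass with \(d_{E} = \left( c_{\lambda }\left( E \right) - c\left( E \right) \right) / \vert E\vert \).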

Now, suppose that initially Ann does not rule out any of the following possible worlds:

- \(w_{1}\), in which the selected urn is X and the drawn marble is blue.

- \(w_{2}\), in which the selected urn is X and the drawn marble is violet.

- \(w_{3}\), in which the selected urn is Y and the drawn marble is blue.

- \(w_{4}\), in which the selected urn is Y and the drawn marble is violet.

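More precisely, she assigns the following prior credences to these worlds:

- \(c\left( \left\{ w_{1}\right\} \right) = \frac{1}{4}\)

- \(c\left( \left\{ w_{2}\right\} \right) = \frac{1}{2}\)

- \(c\left( \left\{ w_{3}\right\} \right) = \frac{1}{8}\)

- \(c\left( \left\{ w_{4}\right\} \right) = \frac{1}{8}\)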

Ann then looks at the drawn marble in dim lighting, which results in the following Jeffrey shift over the partition \(\left\{ B, V\right\} \):

- \(c_{\lambda }\left( B \right) = c_{\lambda }\left( \left\{ w_{1}, w_{3}\right\} \right) = \frac{1}{2}.\)

- \(c_{\lambda }\left( V \right) = c_{\lambda }\left( \left\{ w_{2}, w_{4}\right\} \right) = \frac{1}{2}.\)

In response to this Jeffrey shift, Ann applies JCondi and gets the following posterior credences:

- \(c_{\lambda }\left( \left\{ w_{1}\right\} \right) = c_{\lambda }\left( \left\{ w_{1}, w_{3}\right\} \right) \cdot \frac{c\left( \left\{ w_{1}\right\} \cap \left\{ w_{1}, w_{3}\right\} \right) }{c\left( \left\{ w_{1}, w_{3}\right\} \right) } + c_{\lambda }\left( \left\{ w_{2}, w_{4}\right\} \right) \cdot \frac{c\left( \left\{ w_{1}\right\} \cap \left\{ w_{2}, w_{4}\right\} \right) }{c\left( \left\{ w_{2}, w_{4}\right\} \right) } = \frac{1}{3}.\)

- \(c_{\lambda }\left( \left\{ w_{2}\right\} \right) = c_{\lambda }\left( \left\{ w_{1}, w_{3}\right\} \right) \cdot \frac{c\left( \left\{ w_{2}\right\} \cap \left\{ w_{1}, w_{3}\right\} \right) }{c\left( \left\{ w_{1}, w_{3}\right\} \right) } + c_{\lambda }\left( \left\{ w_{2}, w_{4}\right\} \right) \cdot \frac{c\left( \left\{ w_{2}\right\} \cap \left\{ w_{2}, w_{4}\right\} \right) }{c\left( \left\{ w_{2}, w_{4}\right\} \right) } = \frac{2}{5}.\)

- \(c_{\lambda }\left( \left\{ w_{3}\right\} \right) = c_{\lambda }\left( \left\{ w_{1}, w_{3}\right\} \right) \cdot \frac{c\left( \left\{ w_{3}\right\} \cap \left\{ w_{1}, w_{3}\right\} \right) }{c\left( \left\{ w_{1}, w_{3}\right\} \right) } + c_{\lambda }\left( \left\{ w_{2}, w_{4}\right\} \right) \cdot \frac{c\left( \left\{ w_{3}\right\} \cap \left\{ w_{2}, w_{4}\right\} \right) }{c\left( \left\{ w_{2}, w_{4}\right\} \right) } = \frac{1}{6}.\)

- \(c_{\lambda }\left( \left\{ w_{4}\right\} \right) = c_{\lambda }\left( \left\{ w_{1}, w_{3}\right\} \right) \cdot \frac{c\left( \left\{ w_{4}\right\} \cap \left\{ w_{1}, w_{3}\right\} \right) }{c\left( \left\{ w_{1}, w_{3}\right\} \right) } + c_{\lambda }\left( \left\{ w_{2}, w_{4}\right\} \right) \cdot \frac{c\left( \left\{ w_{4}\right\} \cap \left\{ w_{2}, w_{4}\right\} \right) }{c\left( \left\{ w_{2}, w_{4}\right\} \right) } = \frac{1}{10}.\)

Let us then apply the quadratic scoring rule, \(\mathfrak {q}_{\left\{ w_{1}\right\} }\), as our local inaccuracy measure in order to determine the local expected inaccuracy of Ann’s posterior credence in proposition \(\left\{ w_{1} \right\} \) that results from JCondi, \(\mathbb {E}_{c}\left[ \mathfrak {q}_{\left\{ w_{1} \right\} }\left( w(\left\{ w_{1} \right\} ), c_{\lambda }(\left\{ w_{1}\right\} ) \right) \right] \). If we let \(x = c_{\lambda }\left( \left\{ w_{1} \right\} \right) \), then we get:
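Taking Ann’s prior credence \(c\left( \left\{ w_{1} \right\} \right) = \frac{1}{4}\) (the value used in the LPCondi calculation) and the JCondi posterior \(x = \frac{1}{3}\), and assuming the expectation is the prior-weighted sum over worlds, the calculation runs:

$$\begin{aligned} \mathbb {E}_{c}\left[ \mathfrak {q}_{\left\{ w_{1} \right\} }\left( w(\left\{ w_{1} \right\} ), x \right) \right] = c\left( \left\{ w_{1} \right\} \right) \cdot \left( 1 - x\right) ^{2} + \left( 1 - c\left( \left\{ w_{1} \right\} \right) \right) \cdot x^{2} = \frac{1}{4}\cdot \frac{4}{9} + \frac{3}{4}\cdot \frac{1}{9} = \frac{7}{36} \approx 0.1944. \end{aligned}$$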

Now, suppose that, instead of JCondi, Ann uses LPCondi in response to the above Jeffrey shift. If we let \(d_{E} = \frac{\left( c_{\lambda }\left( E \right) - c\left( E \right) \right) }{\vert E\vert }\), then Ann’s posterior credences recommended by LPCondi are the following:

- \(c_{\lambda }'\left( \left\{ w_{1}\right\} \right) = c\left( \left\{ w_{1} \right\} \right) + \frac{\left( c_{\lambda }\left( B \right) - c\left( B \right) \right) }{\vert B\vert } = \frac{1}{4} + \frac{\left( \frac{1}{2} - \frac{3}{8} \right) }{2} = \frac{5}{16}\).

- \(c_{\lambda }'\left( \left\{ w_{2}\right\} \right) = c\left( \left\{ w_{2} \right\} \right) + \frac{\left( c_{\lambda }\left( V \right) - c\left( V \right) \right) }{\vert V\vert } = \frac{1}{2} + \frac{\left( \frac{1}{2} - \frac{5}{8} \right) }{2} = \frac{7}{16}\).

- \(c_{\lambda }'\left( \left\{ w_{3}\right\} \right) = c\left( \left\{ w_{3} \right\} \right) + \frac{\left( c_{\lambda }\left( B \right) - c\left( B \right) \right) }{\vert B\vert } = \frac{1}{8} + \frac{\left( \frac{1}{2} - \frac{3}{8} \right) }{2} = \frac{3}{16}\).

- \(c_{\lambda }'\left( \left\{ w_{4}\right\} \right) = c\left( \left\{ w_{4} \right\} \right) + \frac{\left( c_{\lambda }\left( V \right) - c\left( V \right) \right) }{\vert V\vert } = \frac{1}{8} + \frac{\left( \frac{1}{2} - \frac{5}{8} \right) }{2} = \frac{1}{16}\).

If we apply the quadratic scoring rule, \(\mathfrak {q}_{\left\{ w_{1} \right\} }\), and let \(x = c_{\lambda }'\left( \left\{ w_{1} \right\} \right) \), we get the following local expected inaccuracy of Ann’s posterior credence in \(\left\{ w_{1}\right\} \) that results from LPCondi:
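With \(c\left( \left\{ w_{1} \right\} \right) = \frac{1}{4}\) and \(x = \frac{5}{16}\), and assuming the expectation is the prior-weighted sum over worlds, the calculation runs:

$$\begin{aligned} \mathbb {E}_{c}\left[ \mathfrak {q}_{\left\{ w_{1} \right\} }\left( w(\left\{ w_{1} \right\} ), x \right) \right] = \frac{1}{4}\cdot \left( \frac{11}{16}\right) ^{2} + \frac{3}{4}\cdot \left( \frac{5}{16}\right) ^{2} = \frac{121}{1024} + \frac{75}{1024} = \frac{49}{256} \approx 0.1914. \end{aligned}$$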

But since \(\mathbb {E}_{c}\left[ \mathfrak {q}_{\left\{ w_{1} \right\} }\left( w(\left\{ w_{1} \right\} ), c_{\lambda }'(\left\{ w_{1}\right\} ) \right) \right] < \mathbb {E}_{c}\left[ \mathfrak {q}_{\left\{ w_{1} \right\} }\left( w(\left\{ w_{1} \right\} ), c_{\lambda }(\left\{ w_{1}\right\} ) \right) \right] \), it follows that Ann’s posterior credence in \(\left\{ w_{1} \right\} \) determined by JCondi does not minimize the local expected inaccuracy.
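The comparison can be reproduced with exact arithmetic. In the sketch below the variable names and data layout are choices made here; the prior credences, the Jeffrey shift and the two update rules are those of Ann’s example, with LPCondi applied in the simple form \(d_{E} = \left( c_{\lambda }\left( E \right) - c\left( E \right) \right) / \vert E\vert \), which suffices because no world is pushed to zero.

```python
from fractions import Fraction

prior = {
    "w1": Fraction(1, 4), "w2": Fraction(1, 2),
    "w3": Fraction(1, 8), "w4": Fraction(1, 8),
}
cells = {"B": ("w1", "w3"), "V": ("w2", "w4")}
shift = {"B": Fraction(1, 2), "V": Fraction(1, 2)}  # c_lambda(B), c_lambda(V)


def cell_prior(e):
    """Prior credence c(E) of a cell of the partition."""
    return sum(prior[w] for w in cells[e])


# JCondi: rescale prior credences within each cell.
jeffrey = {w: shift[e] * prior[w] / cell_prior(e) for e in cells for w in cells[e]}

# LPCondi (no truncation needed here): shift each world in a cell by d_E.
lp = {
    w: prior[w] + (shift[e] - cell_prior(e)) / len(cells[e])
    for e in cells for w in cells[e]
}


def expected_local_inaccuracy(world, x):
    """Prior expectation of the quadratic score of credence x in {world}."""
    return sum(prior[w] * ((1 if w == world else 0) - x) ** 2 for w in prior)


jeffrey_score = expected_local_inaccuracy("w1", jeffrey["w1"])
lp_score = expected_local_inaccuracy("w1", lp["w1"])
print(jeffrey_score, lp_score, lp_score < jeffrey_score)
```

The two scores differ only in the third decimal place, which is why exact fractions are used rather than floating-point numbers.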

Moreover, as shown by Leitgeb and Pettigrew (2010), the situation is not better when instead of focusing on the expected local inaccuracy, we focus on the expected global inaccuracy. Firstly, given a strictly proper scoring rule (or local inaccuracy measure) for proposition X, \({\mathfrak {s}}_{X}\), we may define a global inaccuracy measure for the credence function c as follows:

If we define \(\mathfrak {I}_{{\mathfrak {s}}}\) in this way, we may say that the global inaccuracy measure is generated from \({\mathfrak {s}}\). As is easy to see, \(\mathfrak {I}_{{\mathfrak {s}}}\) is an additive inaccuracy measure, for the inaccuracy of c is the sum of the inaccuracies of the individual credences that c assigns to the propositions in \({\mathcal {F}}\). Given an additive and strictly properFootnote 14 \(\mathfrak {I}_{{\mathfrak {s}}}\), we can define the expected global inaccuracy of \(c_{\lambda }\) from the standpoint of c as follows:

Specifically, Leitgeb and Pettigrew adopt the Brier score, i.e. a global inaccuracy measure generated from the quadratic scoring rule \(\mathfrak {q}_{X}\):

Secondly, suppose that you are governed by the following norm: you should adopt the posterior credence function that satisfies constraints given by the \(c_{\lambda }\left( E\right) \)’s for all \(E \in {\mathcal {E}}\), and is minimal amongst the posterior credence functions thus constrained with respect to expected global inaccuracy given by

Then, Leitgeb and Pettigrew show that the posterior credence that satisfies the above norm does not result from JCondi, but is mandated by LPCondi. So, again, if your goal is to minimize expected global inaccuracy, you should not update by JCondi.
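For Ann's example, the global comparison can be illustrated numerically. The sketch below rests on assumptions of ours: \({\mathcal {F}}\) is taken to be the full power set of the four worlds, the Brier score is computed as a sum of squared differences over all propositions in \({\mathcal {F}}\), and the expectation is taken with respect to the prior c. The two posteriors are the LPCondi and JCondi credences for the constraint \(c_{\lambda }(B) = c_{\lambda }(V) = \frac{1}{2}\).

```python
from fractions import Fraction as F
from itertools import combinations

worlds = ["w1", "w2", "w3", "w4"]
prior = dict(zip(worlds, [F(1, 4), F(1, 2), F(1, 8), F(1, 8)]))
# Posteriors with c_lambda(B) = c_lambda(V) = 1/2, B = {w1, w3}, V = {w2, w4}.
lp_post = dict(zip(worlds, [F(5, 16), F(7, 16), F(3, 16), F(1, 16)]))
jc_post = dict(zip(worlds, [F(1, 3), F(2, 5), F(1, 6), F(1, 10)]))

# Assumption: the algebra F is the full power set of the four worlds.
propositions = [set(combo)
                for size in range(len(worlds) + 1)
                for combo in combinations(worlds, size)]


def credence(post, prop):
    """Credence in a proposition, obtained by summing over its worlds."""
    return sum(post[w] for w in prop)


def brier(post, actual):
    """Sum of squared gaps between truth values at `actual` and credences."""
    return sum((int(actual in prop) - credence(post, prop)) ** 2
               for prop in propositions)


def expected_brier(post):
    """Expectation of the Brier inaccuracy of `post`, weighted by the prior."""
    return sum(prior[w] * brier(post, w) for w in worlds)


lp_value = expected_brier(lp_post)
jc_value = expected_brier(jc_post)
print(lp_value, jc_value, lp_value < jc_value)
```

Both posteriors satisfy the constraints, yet the LPCondi posterior has the lower expected Brier inaccuracy in this example, in line with the result just cited.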

Is there any way to salvage the idea of inaccuracy minimization for the case of JCondi? Levinstein (2012) has suggested that this could be achieved if we replace the Brier score with the following logarithmic global inaccuracy measure:

More precisely, \(\mathfrak {L}\) takes the inaccuracy of a credence function c at world w to be the negative of the natural logarithm, ln, of the credence it assigns to w. Armed with the inaccuracy measure \(\mathfrak {L}\), Levinstein has shown that one’s probabilistic posterior credence function recommended by JCondi satisfies constraints given by the \(c_{\lambda }\left( E\right) \)’s for all \(E \in {\mathcal {E}}\) and minimizes expected global inaccuracy given by

However, this accuracy-based vindication of JCondi has a number of complications. First, \(\mathfrak {L}\) is not generated from a strictly proper scoring rule, and hence is not itself strictly proper. But without a strictly proper scoring rule, neither an expected-accuracy argument for BCondi (Greaves and Wallace 2006) nor an accuracy-dominance argument for BCondi (Briggs and Pettigrew 2020) can be established. Thus, if we adopt \(\mathfrak {L}\), we rule out at least two well-trodden accuracy-based justifications of the most popular updating rule, BCondi. Second, \(\mathfrak {L}\) is not additive, for it only considers credences assigned to singleton propositions \(\left\{ w \right\} \), and says nothing about credences in the more coarse-grained propositions in \({\mathcal {F}}\). Consequently, this measure cannot distinguish between probabilistic and non-probabilistic credence functions, and hence cannot be used to establish the accuracy-dominance argument for probabilism, i.e. the norm which says that one’s credences ought to be probabilities.Footnote 15 Thus, it appears that \(\mathfrak {L}\) is hardly defensible.
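To make Levinstein's observation concrete, the following sketch revisits Ann's example under the logarithmic measure. It assumes that the relevant expectation is \(\sum _{w} c(\{w\}) \cdot (-\ln c_{\lambda }(\{w\}))\), taken with respect to the prior; the grid search and the parametrization by t and s are our own illustrative devices, not part of Levinstein's argument.

```python
import math

worlds = ["w1", "w2", "w3", "w4"]
prior = dict(zip(worlds, [1 / 4, 1 / 2, 1 / 8, 1 / 8]))
# Posteriors with c_lambda(B) = c_lambda(V) = 1/2, B = {w1, w3}, V = {w2, w4}.
lp_post = dict(zip(worlds, [5 / 16, 7 / 16, 3 / 16, 1 / 16]))
jc_post = dict(zip(worlds, [1 / 3, 2 / 5, 1 / 6, 1 / 10]))


def expected_log(post):
    """Prior expectation of -ln(posterior credence in the actual world)."""
    return sum(prior[w] * -math.log(post[w]) for w in worlds)


def constrained(t, s):
    """A posterior with c(B) = c(V) = 1/2; t and s split each cell's mass."""
    return {"w1": t / 2, "w3": (1 - t) / 2, "w2": s / 2, "w4": (1 - s) / 2}


# Search all constraint-satisfying posteriors on a coarse grid.
grid = [k / 60 for k in range(1, 60)]
best = min(((t, s) for t in grid for s in grid),
           key=lambda ts: expected_log(constrained(*ts)))

print(expected_log(jc_post), expected_log(lp_post), best)
```

On this grid the minimizer splits cell B in the proportion \(c(\{w_{1}\} \vert B) = \frac{2}{3}\) and cell V in the proportion \(c(\{w_{2}\} \vert V) = \frac{4}{5}\), which is exactly the JCondi posterior. Under \(\mathfrak {L}\) the ranking of the two rules is thus the reverse of the ranking under the Brier score.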

The above considerations show that an expected inaccuracy minimizer cannot accept JCondi as an updating rule, since it does not minimize expected inaccuracy under the class of strictly proper scoring rules, and even if it does so under the inaccuracy measure \(\mathfrak {L}\), this accuracy-based justification of JCondi is hardly acceptable. Hence, although Graves’s argument establishes VET in the context of uncertain evidence, it does so for JCondi, and so cannot be accepted by the expected inaccuracy minimizer. If we wish to show that gathering uncertain evidence is both practically and epistemically rational in expectation, we should consider some alternatives to Graves’s approach.

6 An alternative approach

In this section, we present an alternative account of the idea that learning from uncertain evidence cannot lead an agent to expect to make worse practical decisions. Our approach utilizes the following assumptions. First, we assume that the agent assigns prior credences to propositions \(U_{c_{\lambda }}^{E}\) from the finite set \({\mathcal {U}}\), which is a partition of \({\mathcal {W}}\). And since \({\mathcal {U}}\) is a partition, exactly one proposition \(U_{c_{\lambda }}^{E}\) will be true after the agent’s learning experience. Importantly, the finite set \({\mathcal {U}}\) is already included in \({\mathcal {W}}\) in the small space \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \). Hence, contrary to Graves’s approach, we do not need to invoke an extended credal state \(\left( {\mathcal {W}}^{*}, {\mathcal {F}}^{*}, c^{*} \right) \) in order to account for the practical value of updating on uncertain evidence. Recall that, in Graves’s approach, if the propositions \(R_{c_{\lambda }}^{{\mathcal {E}}}\) were already included in the small space \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \), then, as we have argued, there would be no need to use JCondi in the first place, and so Graves’s approach would in fact establish VET in the case where one updates by conditionalizing on the proposition \(R_{c_{\lambda }}^{{\mathcal {E}}}\). But since we do not assume that the agent updates by JCondi, and hence do not assume that no proposition in \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \) describing her experience can be learned for certain, we take it to be plausible that \({\mathcal {U}}\) is already included in \({\mathcal {W}}\) and the agent learns exactly one proposition \(U_{c_{\lambda }}^{E}\) for certain.

Second, our approach assumes that when experience results in the proposition \(U_{c_{\lambda }}^{E}\) being true, the agent settles on the likelihood ratios for \(U_{c_{\lambda }}^{E}\), and updates her credences by dint of VCondi. Moreover, in order to ensure that the agent adopts the posterior credence \(c_{\lambda }\) in response to evidence E alone, we assume that her prior credences obey CIndi. Thus, we are assured that updating by dint of VCondi is a rational learning process.

With the above assumptions in mind, let us now show how VET can be established for the agent who faces a decision problem \(\left\langle \mathcal {A}, \mathcal {S}, c, u \right\rangle \) and updates, in response to uncertain evidence, by VCondi. Before receiving uncertain evidence, the agent would choose the act A which maximizes:

After undergoing a learning experience which provides a set of likelihood ratios for some \(U_{c_{\lambda }}^{E}\) in \({\mathcal {U}}\), the agent would choose the posterior Bayes act, i.e. an act A which maximizes

Note that the posterior Bayes act is an act that maximizes expected pragmatic utility relative to the agent’s posterior credence function, \(c_{\lambda }\), mandated by VCondi. Since \({\mathcal {U}}\) is a partition, we can determine the expectation of the posterior Bayes act as follows:

Now the difference, \(\Delta ^{u}_{c_{\lambda }} \left( A_{U}, A_{\max } \right) \), between the maximizer of (27), \(A_{U}\), and the maximizer of (26), \(A_{\max }\), as assessed by the agent’s posterior credence \(c_{\lambda }(S) = \frac{\sum _{E \in {\mathcal {E}}}\alpha _{E} \cdot c\left( S \wedge E \right) }{\sum _{E \in {\mathcal {E}}}\alpha _{E}\cdot c\left( E \right) }\), can be given as follows:

Note that \(\Delta ^{u}_{c_{\lambda }} \left( A_{U}, A_{\max }\right) \ge 0\), for if \(A_{\max } = A_{U}\), then \(\Delta ^{u}_{c_{\lambda }} \left( A_{U}, A_{\max }\right) = 0\), and if \(A_{\max } \ne A_{U}\), then \(\Delta ^{u}_{c_{\lambda }} \left( A_{U}, A_{\max }\right) > 0\). We can also calculate the expectation of \(\Delta ^{u}_{c_{\lambda }} \left( A_{U}, A_{\max }\right) \) as follows:

Now in order to establish that, in expectation, updating on uncertain evidence by VCondi cannot lead you to make worse practical decisions, we will show that

To begin with, observe that since \(\Delta ^{u}_{c_{\lambda }} \left( A_{U}, A_{\max }\right) \ge 0\), the expectation of its value must also be non-negative, and so:

By (29), we have

By using (27), we get

Now, assuming CIndi, we get, by Proposition 2,

Then, we have

And, by the law of total probability, we have \(c(S) = \sum _{U_{c_{\lambda }}^{E} \in {\mathcal {U}}} c\left( U_{c_{\lambda }}^{E} \right) \cdot c\left( S\vert U_{c_{\lambda }}^{E} \right) \), and hence

Finally, by (26) and (28), we get

Hence, we have

as required.
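The result can be illustrated with Ann's urn case. The sketch below is a toy model under assumptions that are ours rather than the paper's: the likelihoods \(\frac{7}{10}\) and \(\frac{3}{10}\) of the two dim-light experiences given the true colour are hypothetical, the 0/1 utilities for guessing the urn are hypothetical, and CIndi is built in by letting the experience depend on the urn only through the marble's colour. The urn compositions and the fair coin come from the example in the Introduction.

```python
from fractions import Fraction as F

states = ["X", "Y"]            # type of the selected urn
colours = ["B", "V"]           # colour of the drawn marble
signals = ["U_B", "U_V"]       # partition U of possible learning experiences
acts = ["guess_X", "guess_Y"]

c_state = {"X": F(1, 2), "Y": F(1, 2)}
c_colour_given_state = {("B", "X"): F(4, 5), ("V", "X"): F(1, 5),
                        ("B", "Y"): F(1, 5), ("V", "Y"): F(4, 5)}
# Hypothetical likelihoods of each experience given the true colour.
c_signal_given_colour = {("U_B", "B"): F(7, 10), ("U_B", "V"): F(3, 10),
                         ("U_V", "B"): F(3, 10), ("U_V", "V"): F(7, 10)}
# Hypothetical utilities: 1 for a correct guess, 0 otherwise.
utility = {("guess_X", "X"): 1, ("guess_X", "Y"): 0,
           ("guess_Y", "X"): 0, ("guess_Y", "Y"): 1}


def c_joint(state, colour):
    """Prior credence c(S and E)."""
    return c_state[state] * c_colour_given_state[(colour, state)]


def c_colour(colour):
    """Prior credence c(E)."""
    return sum(c_joint(s, colour) for s in states)


def c_signal(signal):
    """Prior credence c(U), by the law of total probability."""
    return sum(c_signal_given_colour[(signal, e)] * c_colour(e) for e in colours)


def vcondi(signal):
    """Posterior over states from the likelihood weights alpha_E for `signal`."""
    alpha = {e: c_signal_given_colour[(signal, e)] for e in colours}
    norm = sum(alpha[e] * c_colour(e) for e in colours)
    return {s: sum(alpha[e] * c_joint(s, e) for e in colours) / norm
            for s in states}


def best_value(credence):
    """Expected utility of the Bayes act relative to `credence`."""
    return max(sum(credence[s] * utility[(a, s)] for s in states) for a in acts)


value_now = best_value(c_state)
value_after = sum(c_signal(u) * best_value(vcondi(u)) for u in signals)
print(value_now, value_after)
```

With these numbers, deciding at once has expected utility \(\frac{1}{2}\), whereas waiting for the uncertain evidence and updating by VCondi has expected utility \(\frac{31}{50}\), so the inequality holds strictly here.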

So far we have seen that, under the assumption of CIndi, expecting to update by VCondi cannot lead you to expect to make worse practical decisions. Since VCondi, when coupled with CIndi, can be regarded as a rational update mechanism in the context of uncertain evidence, our result establishes VET in that context. But could our argument be accepted by an expected inaccuracy minimizer? It turns out that so long as the inaccuracy of your credences is measured by a strictly proper scoring rule and your credences obey CIndi, your posterior credences recommended by VCondi minimize a kind of expected inaccuracy. More precisely:

Proposition 3

Suppose that \(c_{\lambda }\) and c are probability functions and c obeys CIndi. If \({\mathfrak {s}}_{X}\) is strictly proper, extensional and continuous, then, for any \(U_{c_{\lambda }}^{E} \in {\mathcal {U}}\) with positive probability, and any \(X \in {\mathcal {F}}\), the agent’s posterior credence recommended by VCondi minimizes the expected local inaccuracy given by

Proof

Choose an arbitrary \(X \in {\mathcal {F}}\) and \(U_{c_{\lambda }}^{E} \in {\mathcal {U}}\) with positive probability. In order to establish Proposition 3, we need to find \(c_{\lambda }(X)\) which minimizes (40). Let \(x = c_{\lambda }(X)\). Since \({\mathfrak {s}}_{X}\) is extensional, the choice of \(c_{\lambda }(X)\) must minimize

If a choice of \(c_{\lambda }(X)\) minimizes (41), it will continue to minimize it if we divide it by \(c\left( U_{c_{\lambda }}^{E} \right) \) (which is positive by assumption). That is, the choice of \(c_{\lambda }(X)\) will minimize:

Since \(c\left( \cdot \vert U_{c_{\lambda }}^{E} \right) \) is a probability function, we may write (42) as:

Now, since \({\mathfrak {s}}_{X}\) is strictly proper, \(c_{\lambda }\left( X \right) \) minimizes (43) iff \(c_{\lambda }\left( X \right) = c\left( X\vert U_{c_{\lambda }}^{E}\right) \). Thus, for any \(U_{c_{\lambda }}^{E} \in {\mathcal {U}}\) with positive probability, \(c_{\lambda }\left( X \right) \) minimizes (40) iff \(c_{\lambda }\left( X \right) \) obeys Reflection. Hence, given CIndi, \(c_{\lambda }\left( X \right) \) minimizes (40) iff \(c_{\lambda }\left( X \right) \) satisfies VCondi.
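The core step of the proof, that a strictly proper rule is minimized at \(c\left( X\vert U_{c_{\lambda }}^{E}\right) \), can be checked on a small example. Everything in the sketch below is hypothetical: the five worlds, their prior credences, the cell U and the proposition X are chosen only for illustration, and the expected local inaccuracy is assumed to be the prior-weighted sum of \({\mathfrak {s}}_{X}\) over the worlds in U. The quadratic and logarithmic rules serve as two familiar strictly proper scoring rules.

```python
import math

# Hypothetical prior over five worlds, a cell U of the partition,
# and a proposition X whose posterior credence x is to be chosen.
prior = {"a": 0.2, "b": 0.1, "c": 0.1, "d": 0.4, "e": 0.2}
U = {"a", "b", "c"}
X = {"a", "c", "e"}


def quadratic(truth, x):
    """Quadratic rule: squared distance from the truth value."""
    return (truth - x) ** 2


def logarithmic(truth, x):
    """Logarithmic rule: -ln of the credence given to the true answer."""
    return -math.log(x if truth else 1 - x)


def expected_local(rule, x):
    """Prior-weighted inaccuracy of credence x in X over the worlds in U."""
    return sum(prior[w] * rule(int(w in X), x) for w in U)


def c_given_U(prop):
    """Conditional credence c(prop | U)."""
    return sum(prior[w] for w in prop & U) / sum(prior[w] for w in U)


grid = [k / 100 for k in range(1, 100)]
argmins = {rule.__name__: min(grid, key=lambda x: expected_local(rule, x))
           for rule in (quadratic, logarithmic)}
print(c_given_U(X), argmins)
```

For both rules the grid minimizer coincides with \(c(X \vert U) = \frac{3}{4}\), as the proposition leads one to expect.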

Thus, unlike Graves’s argument, our alternative extension of VET dovetails with an accuracy-centered approach to vindicating updating on uncertain evidence. Under the current proposal, gathering uncertain evidence is not only pragmatically rational in expectation. It is also epistemically rational to do so.

Another advantage of our approach is that it appears to be less cognitively demanding than Graves’s extension of VET. The agent is no longer required to assign ex ante prior credences over propositions describing the possible Jeffrey shifts she might undergo in the future. Hence, she is also no longer required to grasp correctly ex ante the partition of propositions that her learning experience would affect directly. Recall that this is especially problematic for Graves’s approach, since neither EBCondi nor JCondi provides normative guidance as to how to do this. However, one might object here that our approach assumes that the agent is in a position to specify ex ante the anticipated posterior credence functions, which itself appears to be highly demanding. In response, we would like to note that our approach works well even if the agent anticipates one and the same posterior credence function that she would adopt in response to taking E as well as to taking \(E'\) as her evidence. That is, the propositions \(U_{c_{\lambda }}^{E}\) and \(U_{c_{\lambda }}^{E'}\) would still be mutually exclusive, even if the agent adopted the same posterior credence function \(c_{\lambda }\), irrespective of whether she took E or \(E'\) as her evidence. This is so because taking E as one’s evidence is essentially different from taking \(E'\) as one’s evidence.Footnote 16

One may also worry that the use of VCondi in our approach is redundant, for if the agent learns a proposition \(U_{c_{\lambda }}^{E}\) for certain, she might simply conditionalize on \(U_{c_{\lambda }}^{E}\) and set her posterior credence function equal to \(c\left( \cdot \vert U_{c_{\lambda }}^{E}\right) \). In particular, she might determine her posterior credences in every \(E \in {\mathcal {E}}\) as follows: \(c_{\lambda }(E) = c\left( E \vert U_{c_{\lambda }}^{E} \right) \). To answer this objection, we would like to stress that VCondi appears to be a more user-friendly update rule than Bayes conditioning on \(U_{c_{\lambda }}^{E}\). After all, Bayes’ theorem tells us that \(c\left( E \vert U_{c_{\lambda }}^{E} \right) = \frac{c(E) \cdot c\left( U_{c_{\lambda }}^{E} \vert E \right) }{c(U_{c_{\lambda }}^{E})}\), and so requires the agent to determine the absolute likelihood for \(U_{c_{\lambda }}^{E}\), \(c\left( U_{c_{\lambda }}^{E} \vert E \right) \). And this is a hard task if one wants this quantity to be determined in a reasonable way. For example, it is hard to say what the absolute likelihood \(c\left( U_{c_{\lambda }}^{B} \vert B \right) \) should be in Ann’s case. VCondi enables us to mitigate this problem, for it allows the agent to express only the likelihood ratios of the form \(\frac{c\left( U_{c_{\lambda }}^{E}\vert E \right) }{c\left( U_{c_{\lambda }}^{E}\vert E' \right) }\), without the need to determine the absolute likelihoods.
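The point about ratios can be seen directly from the VCondi formula \(c_{\lambda }(S) = \frac{\sum _{E \in {\mathcal {E}}}\alpha _{E} \cdot c\left( S \wedge E \right) }{\sum _{E \in {\mathcal {E}}}\alpha _{E}\cdot c\left( E \right) }\), since rescaling all the weights \(\alpha _{E}\) by a common factor leaves the posterior unchanged. The sketch below illustrates this for Ann's urn case; the ratio 7 : 3 and the particular absolute likelihoods are hypothetical values of ours, and the function name is illustrative.

```python
from fractions import Fraction as F

# Prior credences c(S and E) in Ann's urn case: a fair coin selects the urn,
# urn X holds 8 blue and 2 violet marbles, urn Y holds 2 blue and 8 violet.
joint = {("X", "B"): F(2, 5), ("X", "V"): F(1, 10),
         ("Y", "B"): F(1, 10), ("Y", "V"): F(2, 5)}


def vcondi(alpha):
    """Posterior over urn types from likelihood weights alpha_E."""
    norm = sum(alpha[e] * p for (_, e), p in joint.items())
    post = {}
    for (s, e), p in joint.items():
        post[s] = post.get(s, 0) + alpha[e] * p / norm
    return post


# Only the (hypothetical) ratio 7 : 3 in favour of blue is specified here.
ratio_only = vcondi({"B": F(7, 3), "V": F(1)})
# Two different sets of absolute likelihoods that share the same ratio.
absolute_a = vcondi({"B": F(7, 10), "V": F(3, 10)})
absolute_b = vcondi({"B": F(7, 100), "V": F(3, 100)})

print(ratio_only, absolute_a, absolute_b)
```

All three calls return the same posterior, \(\frac{31}{50}\) for urn X and \(\frac{19}{50}\) for urn Y, so the agent never has to commit to a particular value of the absolute likelihood.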

7 Conclusion