Abstract

It seems intuitive that our credal states are improved if we obtain evidence favoring truth over any falsehood. In this regard, Fallis and Lewis have recently provided and discussed some formal versions of such an intuition, which they name ‘the Monotonicity Principle’ and ‘Elimination’. They argue, with those principles in hand, that the Brier rule, one of the most popular rules of accuracy, is not a good measure, and that accuracy-firsters cannot underwrite both probabilism and conditionalization. In this paper, I will argue that their conclusions are somewhat hasty. Specifically, I will demonstrate that there is another version of the Monotonicity Principle that can be satisfied by some additive rules of accuracy, such as the Brier rule. Moreover, I will argue that their version of the principle has some undesirable features regarding epistemic betterness. Therefore, their criticisms can hardly jeopardize accuracy-firsters unless further justification of their versions of the Monotonicity Principle and Elimination is provided.

Notes

As is well known, this understanding of the reflection principle depends on the assumption that there is no cognitive malfunction like memory loss in between the current and the past credal states.

In this paper, the evaluative concepts of betterness and improvement of a credal state are all epistemic, rather than practical in nature. I will occasionally omit the modifiers like ‘epistemic’ and ‘epistemically’ if there is no danger of confusion.

I would like to emphasize that the actual epistemic utility (or betterness) should be distinguished from the expected epistemic utility (or betterness). The former is the epistemic utility of a credal state at a particular world, whereas the latter is a kind of average over those actual epistemic utilities, each of which may differ across possible worlds. It is noteworthy that some works employ expected epistemic utility to investigate the relationship between evidence and epistemic betterness. For example, see Good (1967) and Myrvold (2012). Thanks to an anonymous reviewer for informing me of such references. My main concern in this paper, however, is the actual epistemic utility, not the expected epistemic utility.

Here is the proof. For any two credence functions \({\textbf {x}}\) and \({\textbf {y}}\) defined over a finite partition \({\mathbb {H}}\), it holds that \(y_i = x_i \cdot \pi ^{{\textbf {x}},{\textbf {y}}}_i\). Then, we have that \(r_i = q_i \cdot \pi ^{{\textbf {q}},{\textbf {r}}}_i = s_i \cdot \pi ^{{\textbf {s}},{\textbf {q}}}_i \cdot \pi ^{{\textbf {q}},{\textbf {r}}}_i\) and \(r^*_i = p_i \cdot \pi ^{{\textbf {p}},{\textbf {r}}^*}_i = s_i \cdot \pi ^{{\textbf {s}},{\textbf {p}}}_i \cdot \pi ^{{\textbf {p}},{\textbf {r}}^*}_i\). Suppose now that \(\pi ^{{\textbf {s}},{\textbf {q}}}_{i}=\pi ^{{\textbf {p}},{\textbf {r}}^*}_{i}\) and \(\pi ^{{\textbf {q}},{\textbf {r}}}_{i}=\pi ^{{\textbf {s}},{\textbf {p}}}_{i}\). Then it follows from the above equations that \(r_i=r^*_i\). This holds for any member in \({\mathbb {H}}\). Hence, we can conclude that the ratio parameter satisfies Commutativity. \(\square \)
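The algebra in this proof can be checked on a small case. The following is only an illustrative sketch: the prior and the two ratio vectors are hypothetical values chosen so that every intermediate function is coherent, and `apply_ratios` is a helper name introduced here, not notation from the paper.

```python
from fractions import Fraction as F

def apply_ratios(credences, ratios):
    """Return y with y_i = x_i * pi_i, i.e. the ratio parameter applied pointwise."""
    return [x * p for x, p in zip(credences, ratios)]

# Hypothetical prior and ratio vectors (assumptions for illustration only).
s = [F(1, 2), F(1, 4), F(1, 4)]
pi_a = [F(3, 2), F(1, 2), F(1, 2)]
pi_b = [F(1, 1), F(3, 2), F(1, 2)]

# Order 1: s -> q (via pi_a) -> r (via pi_b).
q = apply_ratios(s, pi_a)
r = apply_ratios(q, pi_b)

# Order 2: s -> p (via pi_b) -> r_star (via pi_a).
p = apply_ratios(s, pi_b)
r_star = apply_ratios(p, pi_a)

print(r, r_star)
```

Exact fractions are used so that the two orders can be compared with plain equality rather than a floating-point tolerance.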

The following formulation of Strict Propriety is restricted to coherent credence functions. However, many accuracy-firsters do not impose such a restriction on Strict Propriety. I will revisit this issue in the next section.

Indeed, Weak Propriety, according to which \(\sum _{i}r_i\mathfrak {A}_{i}(\textbf{r}) \ge \sum _{i}r_i\mathfrak {A}_{i}(\textbf{c})\) for any coherent credence function \(\textbf{c}\), is sufficient to avoid such ill-motivated updating. However, Weak Propriety entails Strict Propriety under some plausible assumptions. See Campbell-Moore and Levinstein (2021).

This point and some relevant discussions can be found in Pettigrew (2022). Consider two credence functions defined over a partition \(\{H_1,H_2,H_3\}\): \(\textbf{r}=(1/3,1/3,1/3)\) and \(\textbf{c}=(1,1,1)\). Note that \(\textbf{r}\) is coherent but \(\textbf{c}\) is not. Then, \(\sum _{i}r_i\mathfrak {L}^S_{i}(\textbf{r})=-\ln {3} <0 =\sum _{i}r_i\mathfrak {L}^S_{i}(\textbf{c})\), which conflicts with the reformulated version of Strict Propriety.

Not all the global measures can be generated from a local measure. The simple spherical and logarithmic rules can be taken as global, but they have no local counterpart from which they are generated.

There is another way of generating the global measure from the local one, which can be called ‘Averaging’. This way can be formulated as follows: \(\mathfrak {A}^*_{k}(\textbf{c})=(1/N)\sum _{i}\mathfrak {u}_{k}(c_{i})\). Here, N refers to the size of \({\mathbb {H}}\). For some relevant discussions, see Joyce (2009) and Carr (2015). The discussion that follows remains the same even if we adopt Averaging, instead of Additivity, as a way of generating the global measure from the local one. This is because, in this context, the rankings based on Additivity are ordinally equivalent to the rankings based on Averaging.

In regard to the credence functions over a partition, the additive Brier rule \(\mathfrak {B}^A\) is a positive linear transformation of the simple Brier rule \(\mathfrak {B}^S\). It is not hard to see this. Note that \(\mathfrak {B}^A_{k}(\textbf{c})=-(1-c_k)^2+(c_k)^2-\sum _i (c_i)^2=1+\mathfrak {B}^S_{k}(\textbf{c}).\) However, such a relationship between an additive rule and its simple counterpart does not hold for the spherical and logarithmic rules.

Revisit the example in the previous section: \(\textbf{r}=(1/3,1/3,1/3)\) and \(\textbf{c}=(1,1,1)\). Note that \(\sum _{i}r_i\mathfrak {L}^A_{i}(\textbf{r})\) is negatively finite while \(\sum _{i}r_i\mathfrak {L}^A_{i}(\textbf{c})\) is negatively infinite, and so that \(\sum _{i}r_i\mathfrak {L}^A_{i}(\textbf{r})> \sum _{i}r_i\mathfrak {L}^A_{i}(\textbf{c})\). (Recall that \(\sum _{i}r_i\mathfrak {L}^S_{i}(\textbf{r})=-\ln {3} <0 =\sum _{i}r_i\mathfrak {L}^S_{i}(\textbf{c})\).)
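The two expectations compared in this example can be reproduced numerically. The sketch below assumes the usual forms of the rules: the simple logarithmic rule scores a function by \(\ln c_k\) at the world where \(H_k\) is true, and the additive rule adds \(\ln (1-c_i)\) for every false \(H_i\), which is the form used in Appendix I. The helper names and the convention \(\ln 0=-\infty \) are assumptions of the sketch.

```python
import math

def safe_log(x):
    """Natural logarithm with the convention ln(0) = -infinity."""
    return math.log(x) if x > 0 else float("-inf")

def simple_log(c, k):
    """Simple logarithmic rule at the world where H_k is true."""
    return safe_log(c[k])

def additive_log(c, k):
    """Additive logarithmic rule: ln(c_k) plus ln(1 - c_i) for each false H_i."""
    return safe_log(c[k]) + sum(
        safe_log(1 - x) for i, x in enumerate(c) if i != k
    )

def expected(rule, r, c):
    """Expected utility of c, weighted by the credences in r."""
    return sum(r[k] * rule(c, k) for k in range(len(r)))

r = [1 / 3, 1 / 3, 1 / 3]   # coherent
c = [1.0, 1.0, 1.0]         # incoherent

print(expected(simple_log, r, r), expected(simple_log, r, c))
print(expected(additive_log, r, r), expected(additive_log, r, c))
```

Under the simple rule the incoherent function receives the higher expectation, whereas under the additive rule its expectation is negatively infinite.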

Fallis and Lewis (2016, pp. 582–583) say, without any explicit proof, that Monotonicity\(^\pi \) entails Elimination\(^\pi \). M3 and M4 appearing in that paper correspond to Monotonicity\(^\pi \) and Elimination\(^\pi \), respectively. However, for the proof at issue, we need an assumption entailing that, for any \(k (\ne n)\), it holds that \(\mathfrak {A}_{k}(\textbf{s}) \ne \mathfrak {A}_{k}(\textbf{r})\) if \(s_n > r_n\) and \(\pi _{i}^{\textbf{s},\textbf{r}}=\pi _{k}^{\textbf{s},\textbf{r}}\) for any \(i(\ne n)\). Without such an assumption, we can prove just a weak version of Elimination\(^\pi \), that is, \(\mathfrak {A}_{k}(\textbf{s}) \le \mathfrak {A}_{k}(\textbf{r})\) for any \(k (\ne n)\) if \(s_n > 0 = r_n\) and \(s_i /s_j = r_i /r_j\) for any \(i, j (\ne n)\). I think the assumption in question is very plausible although Fallis and Lewis do not explicitly mention it.

In other words, suppose that evidence eliminates the epistemic possibility that \(H_n\) is true, and so leads one to assign a zero credence to that hypothesis. This is why Fallis and Lewis call this version of the monotonicity principle ‘Elimination’.

Note that Elimination\(^\pi \) can be thought of as connecting conditionalization with the actual epistemic utilities, not the expected epistemic utilities. See footnote 4. Indeed, there are many attempts to elucidate the relationship between conditionalization and the expected epistemic utilities. One of the most relevant works is given by Greaves and Wallace (2006).

Similar to the relationship between Monotonicity\(^\pi \) and Elimination\(^\pi \), Monotonicity\(^\delta \) entails Elimination\(^\delta \) under the assumption that, for any \(k (\ne n)\), it holds that \(\mathfrak {A}_{k}(\textbf{s}) \ne \mathfrak {A}_{k}(\textbf{r})\) if \(s_n > r_n\) and \(\delta _{i}^{\textbf{s},\textbf{r}}=\delta _{k}^{\textbf{s},\textbf{r}}\) for any \(i(\ne n)\). See footnote 16.

The additive Brier rule is a positive linear transformation of the simple Brier rule. Note that, in order for \(\textbf{s}\) and \(\textbf{r}\) in the above quotation to be taken as an example to show that the simple (and additive) Brier rule violates Monotonicity\(^\delta \), it should be shown that \(\mathfrak {B}^S_{2}(\textbf{s})\) is greater than \(\mathfrak {B}^S_{2}(\textbf{r})\), but it is not the case since \(\mathfrak {B}^S_{2}(\textbf{s})=0.460<0.632=\mathfrak {B}^S_{2}(\textbf{r})\).

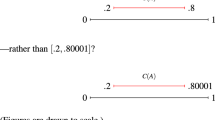

Table 1 includes the results given in Fallis and Lewis (2016) and Lewis and Fallis (2021). ‘OK’ means that the relevant pair of an accuracy measure and an evidential parameter satisfies the Monotonicity Principle, and ‘NG’ means that such a pair violates the principle. The relevant proofs and comments are given in Appendix I.

Note that Fallis and Lewis’s claim can be thought of as the claim that accuracy-firsters cannot underwrite both the Monotonicity Principle and probabilism.

Here is the proof. For any two credence functions \({\textbf {x}}\) and \({\textbf {y}}\) defined over a finite partition \({\mathbb {H}}\), it holds that \(y_i = x_i + \delta ^{{\textbf {x}},{\textbf {y}}}_i\). Then, we have that \(r_i = q_i + \delta ^{{\textbf {q}},{\textbf {r}}}_i = s_i + \delta ^{{\textbf {s}},{\textbf {q}}}_i + \delta ^{{\textbf {q}},{\textbf {r}}}_i\) and \(r^*_i = p_i + \delta ^{{\textbf {p}},{\textbf {r}}^*}_i = s_i + \delta ^{{\textbf {s}},{\textbf {p}}}_i + \delta ^{{\textbf {p}},{\textbf {r}}^*}_i\). Suppose now that \(\delta ^{{\textbf {s}},{\textbf {q}}}_{i}=\delta ^{{\textbf {p}},{\textbf {r}}^*}_{i}\) and \(\delta ^{{\textbf {q}},{\textbf {r}}}_{i}=\delta ^{{\textbf {s}},{\textbf {p}}}_{i}\). Thus, it follows from the above equations that \(r_i=r^*_i\). This holds for any member in \({\mathbb {H}}\). Therefore, the difference parameter satisfies Commutativity. \(\square \)

A caveat needs to be stated. I just say here that Commutativity is a necessary, but not sufficient, condition for an evidential parameter to represent only the impact of evidence itself. Other conditions have also been suggested and utilized to epistemically compare various evidence parameters. See Jeffrey (2004) and Wagner (2002, 2003), for example. Using such conditions, those authors advocate what is known as the Bayes factor. The Bayes factor of a proposition \(H_i\) against another proposition \(H_j\) with respect to the credence updating from \({\textbf {s}}\) to \({\textbf {r}}\), which may be denoted by \(\beta ^{{\textbf {s}},{\textbf {r}}}_{i, j }\), is defined as follows: \(\beta ^{{\textbf {s}},{\textbf {r}}}_{i, j } = (r_i /r_j)/(s_i /s_j)\). (Here, \(r_i\) and \(r_j\) are the new credence in \(H_i\) and in \(H_j\), respectively. These two propositions \(H_i\) and \(H_j\) are members of a partition \({\mathbb {H}}\). Similarly, \(s_i\) and \(s_j\) are the old credence in \(H_i\) and in \(H_j\), respectively.) In words, the Bayes factor of \(H_i\) against \(H_j\) is the ratio of new-to-old odds, not probabilities. Thanks to an anonymous reviewer for helping me clarify this point.
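The definition of the Bayes factor can be illustrated with a short computation. This is a minimal sketch with hypothetical credences; the function names are introduced here for illustration. It also shows the purely algebraic fact that the Bayes factor of \(H_i\) against \(H_j\) equals the quotient of the corresponding ratio parameters, \((r_i/s_i)/(r_j/s_j)\).

```python
from fractions import Fraction as F

def bayes_factor(s, r, i, j):
    """New odds of H_i against H_j divided by the old odds."""
    return (r[i] / r[j]) / (s[i] / s[j])

def ratio_parameter(s, r, i):
    """New-to-old ratio of the credence in H_i."""
    return r[i] / s[i]

# Hypothetical old and new credences over a three-cell partition.
s = [F(1, 2), F(1, 4), F(1, 4)]
r = [F(3, 4), F(1, 8), F(1, 8)]

beta_01 = bayes_factor(s, r, 0, 1)
quotient_01 = ratio_parameter(s, r, 0) / ratio_parameter(s, r, 1)
print(beta_01, quotient_01)
```

In this example the old odds of \(H_1\) against \(H_2\) are 2 and the new odds are 6, so the Bayes factor is 3.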

I am grateful to an anonymous reviewer for helping me clarify this point.

The problem of irrelevant conjunction is related to how to formulate the degree to which evidence incrementally supports a hypothesis—i.e., the degree of confirmation. In particular, the problem besets the attempt to formulate the degree of confirmation by means of P(H|E)/P(H). (Here P is a probability function.) For the problem of irrelevant conjunction and the law of likelihood, see Earman (1992), Fitelson (1999, 2007), Steel (2007) and Rosenkrantz (1994), for example.

Note that, contrary to this consideration, the law of likelihood says that \(\lnot B\) raises the credence in \( H \& X\) as much as it raises the credence in D. This is because each of the two propositions entails \(\lnot B\).

Suppose that the chance of X is extremely low and so the chance of \(\lnot X\) is extremely high. Then, while \( H \& X\) is much more informative than H, \( H \& \lnot X\) is only slightly more informative than H. So, it can be said that the evidence \(\lnot B\) raises the credence in \( H \& X\) to a lesser degree than it raises the credence in \( H \& \lnot X\) as well as D. Note that \( H \& \lnot X\) and D are false at the world where \( H \& X\) is true.

Suppose that \({\textbf {s}}\) is updated to \({\textbf {r}}\) in accordance with conditionalization on \(\lnot B\). Then, for any contingent proposition X, \( {\textbf {r}}(H \& X)/{\textbf {s}}(H \& X) \ge {\textbf {r}}(Y)/{\textbf {s}}(Y)\) for any proposition Y in the partition at issue. Thus, if an accuracy measure satisfies Monotonicity\(^\pi \), then the measure says, regardless of the informativeness at issue, that \({\textbf {r}}\) is at least as good as \({\textbf {s}}\) at the world where \( H \& X\) is true.

Here, I assume that X is probabilistically independent of H. Someone might think that the credence function \({\textbf {s}}\) is defined over the partition \( \{H \& X,H \& \lnot X, D, B \}\), and therefore we cannot use \({\textbf {s}}\) to formulate the probabilistic independence between H and X. However, there is no need to worry about this. We can formulate the independence in question using some probabilistically coherent extensions of \({\textbf {s}}\), which are defined over a \(\sigma \)-algebra that includes H and X as its members.

A proof is given in Appendix II.

How about the additive spherical rule \(\mathfrak {S}^A\)? Example A2 in Appendix I, which shows that \(\mathfrak {S}^A\) violates Monotonicity\(^\delta \), does not prove that the rule also violates Weak Monotonicity. This is because the credence functions \({\textbf {s}}\) and \({\textbf {r}}\) in that example violate the condition that \(\bar{\pi }_{k}^{\textbf{s},\textbf{r}}\le \bar{\pi }_{i}^{\textbf{s},\textbf{r}}\) for any i. Similarly, Fallis and Lewis’s example, which is used to show that \(\mathfrak {S}^A\) violates Monotonicity\(^\pi \), cannot show that \(\mathfrak {S}^A\) violates Weak Monotonicity. (In that example, \({\textbf {s}}=(1/7,3/7,3/7)\) and \({\textbf {r}}=(1/4,3/4,0)\).) Admittedly, my discussion would be more complete if this paper settled whether \(\mathfrak {S}^A\) satisfies Weak Monotonicity. However, I will not attempt to settle it. This is because it is proved here that Weak Elimination is satisfied by all of the strictly proper accuracy measures, and the conclusions in this paper do not depend on whether \(\mathfrak {S}^A\) satisfies Weak Monotonicity.

Some proofs related to the discussion in this paragraph are given in Appendix II.

References

Campbell-Moore, C., & Levinstein, B. A. (2021). Strict propriety is weak. Analysis, 81(1), 8–13.

Carr, J. R. (2015). Epistemic expansions. Res Philosophica, 92(2), 217–236.

Domotor, Z. (1980). Probability kinematics and representation of belief change. Philosophy of Science, 47(3), 384–403.

Döring, F. (1999). Why Bayesian psychology is incomplete. Philosophy of Science, 66(3), 389.

Earman, J. (1992). Bayes or bust? A critical examination of Bayesian confirmation theory.

Fallis, D., & Lewis, P. J. (2016). The Brier rule is not a good measure of epistemic utility (and other useful facts about epistemic betterness). Australasian Journal of Philosophy, 94(3), 576–590.

Field, H. (1978). A note on Jeffrey conditionalization. Philosophy of Science, 45(3), 361–367.

Fitelson, B. (1999). The plurality of Bayesian measures of confirmation and the problem of measure sensitivity. Philosophy of Science, 66(S3), S362–S378.

Fitelson, B. (2007). Likelihoodism, Bayesianism, and relational confirmation. Synthese, 156(3), 473–489.

Good, I. J. (1967). On the principle of total evidence. British Journal for the Philosophy of Science, 17(4), 319–321.

Greaves, H., & Wallace, D. (2006). Justifying conditionalization: Conditionalization maximizes expected epistemic utility. Mind, 115(459), 607–632.

Hacking, I. (2016). Logic of statistical inference. Cambridge University Press.

Jeffrey, R. (2004). Subjective probability: The real thing. Cambridge University Press.

Joyce, J. (2009). Accuracy and coherence: prospects for an alethic epistemology of partial belief. In F. Huber & C. Schmidt-Petri (Eds.), Degrees of belief, synthese (pp. 263–297). Springer.

Joyce, J. (2021). Bayes’ Theorem. In E. N. Zalta (Ed.), The Stanford encyclopedia of philosophy (Fall 2021 ed.). Metaphysics Research Lab, Stanford University.

Joyce, J. M. (1998). A nonpragmatic vindication of probabilism. Philosophy of Science, 65(4), 575–603.

Lange, M. (2000). Is Jeffrey conditionalization defective by virtue of being non-commutative? Remarks on the sameness of sensory experiences. Synthese, 123(3), 393–403.

Leitgeb, H., & Pettigrew, R. (2010a). An objective justification of Bayesianism I: Measuring inaccuracy. Philosophy of Science, 77(2), 201–235.

Leitgeb, H., & Pettigrew, R. (2010b). An objective justification of Bayesianism II: The consequences of minimizing inaccuracy. Philosophy of Science, 77(2), 236–272.

Lewis, P. J., & Fallis, D. (2021). Accuracy, conditionalization, and probabilism. Synthese, 198, 4017–4033.

Myrvold, W. C. (2012). Epistemic values and the value of learning. Synthese, 187(2), 547–568.

Pettigrew, R. (2016). Accuracy and the laws of credence. Oxford University Press.

Pettigrew, R. (2022). Accuracy-first epistemology without additivity. Philosophy of Science, 89(1), 128–151.

Rosenkrantz, R. (1994). Bayesian confirmation: Paradise regained. The British Journal for the Philosophy of Science, 45(2), 467–476.

Royall, R. (1997). Statistical evidence: A likelihood paradigm (Vol. 71). CRC Press.

Sober, E. (2008). Evidence and evolution: The logic behind the science. Cambridge University Press.

Steel, D. (2007). Bayesian confirmation theory and the likelihood principle. Synthese, 156, 53–77.

van Fraassen, B. C. (1984). Belief and the will. Journal of Philosophy, 81(5), 235–256.

Wagner, C. G. (2002). Probability kinematics and commutativity. Philosophy of Science, 69(2), 266–278.

Wagner, C. G. (2003). Commuting probability revisions: The uniformity rule. Erkenntnis, 59(3), 349–364.

Acknowledgements

I am grateful to Jaemin Jung and Minkyung Wang for their valuable comments. I also thank the anonymous reviewers of this journal for their suggestions and comments. Khyutae Kang and Joonsoo Lee informed me of some typos and grammatical errors, and I am grateful to them for their assistance. An earlier version of this paper was presented at the annual conference of the Korean Society for the Philosophy of Science in 2020 and at the work-in-progress workshop of the Munich Center for Mathematical Philosophy in 2022.

Ethics declarations

Conflict of interest

The author reports there are no competing interests to declare.

Appendices

Appendix I: Proofs of the results in Table 1

‘OK’ in Table 1 means that the corresponding accuracy measure satisfies the relevant version of the Monotonicity Principle. On the other hand, ‘NG’ in the table means that the corresponding measure violates the principle in question. Some proofs have already been provided in Fallis and Lewis’s papers of 2016 and 2021. In particular, they have proved which of the aforementioned accuracy measures satisfy Monotonicity\(^\pi \). However, they have not demonstrated the results related to Monotonicity\(^\delta \). In what follows, I will provide such proofs. In particular, I will use some concrete examples to show NGs, and give some mathematical proofs of OKs. All credence functions in what follows are assumed to be coherent and defined over a partition \({\mathbb {H}}=\{H_1, \ldots , H_n \}\).

1.1 Examples showing NGs

The following examples show that neither \(\mathfrak {S}^S\) (Example A1) nor \(\mathfrak {S}^A\) (Example A2) satisfies Monotonicity\(^\delta \).

Example A1

Suppose that \(\textbf{s}=(0.7,0.2,0.1)\) and \(\textbf{r}=(0.75,0.25,0)\). Then, \(\delta _{1}^{{\textbf {s}},{\textbf {r}}}=\delta _{2}^{\textbf{s},\textbf{r}}=0.05\) and \(\delta _{3}^{\textbf{s},\textbf{r}}=-0.1\). So, \(\delta _{1}^{\textbf{s},\textbf{r}}\ge \delta _{i}^{\textbf{s},\textbf{r}}\) for any i. However, \(\mathfrak {S}^S_{1}(\textbf{s})=0.953>0.949=\mathfrak {S}^S_{1}(\textbf{r})\).
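The two values in Example A1 can be recomputed directly. The sketch below assumes the standard form of the simple spherical rule, \(c_k\) divided by the Euclidean norm of \(\textbf{c}\); this form is an assumption of the sketch, since the definition is not restated in this appendix.

```python
import math

def simple_spherical(c, k):
    """Simple spherical rule: c_k divided by the Euclidean norm of c."""
    return c[k] / math.sqrt(sum(x * x for x in c))

s = [0.7, 0.2, 0.1]
r = [0.75, 0.25, 0.0]

# H_1 is the true hypothesis, i.e. index 0.
score_s = simple_spherical(s, 0)
score_r = simple_spherical(r, 0)
print(round(score_s, 3), round(score_r, 3))
```

Although the credence in the true hypothesis rises by at least as much as any other credence, the score falls, which is the violation the example reports.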

Example A2

Suppose that \(\textbf{s}=(0.15,0.35,0.35,0.15)\) and \(\textbf{r}=(0.2,0.4,0.4,0)\). Then, \(\delta _{1}^{\textbf{s},\textbf{r}}=\delta _{2}^{\textbf{s},\textbf{r}}=\delta _{3}^{\textbf{s},\textbf{r}}=0.05\), and \(\delta _{4}^{\textbf{s},\textbf{r}}=-0.15\) and so \(\delta _{1}^{\textbf{s},\textbf{r}}\ge \delta _{i}^{\textbf{s},\textbf{r}}\) for any i. However, \(\mathfrak {S}^A_{1}(\textbf{s})=2.920>2.907=\mathfrak {S}^A_{1}(\textbf{r})\).
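Example A2 can be recomputed in the same way. The sketch assumes that the additive spherical rule sums a local spherical score over the cells of the partition, where a credence x in a true hypothesis scores \(x/\sqrt{x^2+(1-x)^2}\) and a credence x in a false hypothesis scores \((1-x)/\sqrt{x^2+(1-x)^2}\). These forms are assumptions of the sketch, not quoted from this appendix.

```python
import math

def local_spherical(x, is_true):
    """Local spherical score of a single credence x."""
    norm = math.sqrt(x * x + (1 - x) ** 2)
    return (x if is_true else 1 - x) / norm

def additive_spherical(c, k):
    """Sum of the local spherical scores, with H_k as the true hypothesis."""
    return sum(local_spherical(x, i == k) for i, x in enumerate(c))

s = [0.15, 0.35, 0.35, 0.15]
r = [0.2, 0.4, 0.4, 0.0]

# H_1 is the true hypothesis, i.e. index 0.
score_s = additive_spherical(s, 0)
score_r = additive_spherical(r, 0)
print(round(score_s, 3), round(score_r, 3))
```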

1.2 A proof that \(\mathfrak {B}^S\) and \(\mathfrak {B}^A\) satisfy monotonicity\(^\delta \)

Suppose that the antecedent of Monotonicity\(^\delta \) holds, that is, \(r_{k}-s_{k}=\delta _{k}\ge \delta _{i}=r_{i}-s_{i}\) for any i. (Here I use \(\delta _{i}\) rather than \(\delta _{i}^{\textbf{s},\textbf{r}}\), for the sake of notational simplicity.) Then, it holds that:

Note that \(\sum _{i}r_{i}=\sum _{i}s_{i}=1\) and \(\delta _{k}\ge \delta _{i}\) for any i. Thus, it holds that \(\delta _{k}=\sum _{i}\delta _{k}r_{i}\ge \sum _{i}\delta _{i}r_{i}\) and \(\delta _{k}=\sum _{i}\delta _{k}s_{i}\ge \sum _{i}\delta _{i}s_{i}\), which implies that \(\mathfrak {B}^S_{k}(\textbf{r})\ge \mathfrak {B}^S_{k}(\textbf{s})\). Hence, \(\mathfrak {B}^S\) satisfies Monotonicity\(^\delta \). As explained, \(\mathfrak {B}^S\) is ordinally equivalent to \(\mathfrak {B}^A\), and therefore we can also conclude that \(\mathfrak {B}^A\) satisfies Monotonicity\(^\delta \). \(\square \)
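One way to spell out how the two inequalities yield the conclusion is the following worked derivation. It is a sketch that starts from the expression for the additive Brier rule given in the notes, \(\mathfrak {B}^A_{k}(\textbf{c})=-(1-c_k)^2+(c_k)^2-\sum _i (c_i)^2\), and uses \(r_i^2-s_i^2=\delta _i(r_i+s_i)\). Since \(\mathfrak {B}^S\) is a positive linear transformation of \(\mathfrak {B}^A\), the sign of the difference is the same for both rules.

```latex
\begin{align*}
\mathfrak{B}^A_{k}(\textbf{c})
  &= -(1-c_k)^2 + (c_k)^2 - \sum_i (c_i)^2
   = -1 + 2c_k - \sum_i (c_i)^2, \\
\mathfrak{B}^A_{k}(\textbf{r}) - \mathfrak{B}^A_{k}(\textbf{s})
  &= 2(r_k - s_k) - \sum_i \left( r_i^2 - s_i^2 \right) \\
  &= 2\delta_k - \sum_i \delta_i (r_i + s_i) \\
  &= \Bigl( \delta_k - \sum_i \delta_i r_i \Bigr)
   + \Bigl( \delta_k - \sum_i \delta_i s_i \Bigr) \;\ge\; 0 .
\end{align*}
```

Each bracket in the last line is non-negative by the two inequalities stated in the paragraph above.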

1.3 A proof that \(\mathfrak {L}^S\) and \(\mathfrak {L}^A\) satisfy monotonicity\(^\delta \)

It is straightforward that \(\mathfrak {L}^S\) satisfies Monotonicity\(^\delta \). Suppose that \(\delta _{i}^{\textbf{s},\textbf{r}}\le \delta _{k}^{\textbf{s},\textbf{r}}\) for any i. Since the \(\delta _{i}^{\textbf{s},\textbf{r}}\)s sum to zero, the largest of them cannot be negative, and so \(r_{k}-s_{k}\ge 0\), with equality only if \(\textbf{r}=\textbf{s}\). Hence \(\mathfrak {L}^S_k(\textbf{r})=\ln {r_k}\ge \ln {s_k}=\mathfrak {L}^S_k(\textbf{s})\), as Monotonicity\(^\delta \) requires. However, it is not as easy to show that \(\mathfrak {L}^A\) satisfies Monotonicity\(^\delta \). In what follows, I will prove this, and provide some relevant examples.

For this purpose, I will show that:

entails that \(\mathfrak {L}^A_{1}(\textbf{s})\le \mathfrak {L}^A_{1}(\textbf{r})\). Note that \(\sum _{i} \delta ^{\textbf{s},\textbf{r}}_{i}=0\) since \(\textbf{s}\) and \(\textbf{r}\) are both coherent. Then, it should hold that, if some \(\delta ^{\textbf{s},\textbf{r}}_i\)s are positive, then some other \(\delta ^{\textbf{s},\textbf{r}}_i\)s should be negative. In this regard, (*) says that at most one \(\delta ^{\textbf{s},\textbf{r}}_i\) has a negative value. However, this assumption does not damage the generality of the proof that follows. This is because a similar proof, mutatis mutandis, can be provided for cases where two or more \(\delta ^{\textbf{s},\textbf{r}}_i\)s are negative. (Below, I will explain this using Example A7.) Moreover, it is also noteworthy that (*) assumes that \(\delta ^{\textbf{s},\textbf{r}}_i\)s are weakly decreasing, that is, \(\delta ^{\textbf{s},\textbf{r}}_{i+1}\le \delta ^{\textbf{s},\textbf{r}}_{i}\) for any \(i=1,\ldots ,n-1\). However, this assumption does not undermine the generality of my proof, either. This is because any two credence functions \(\textbf{s}\) and \(\textbf{r}\), whose \(\delta ^{\textbf{s},\textbf{r}}_i\)s are not weakly decreasing, can be transformed into two functions \(\textbf{s}^\prime \) and \(\textbf{r}^\prime \), respectively, so that \(\delta ^{\textbf{s}^\prime ,\textbf{r}^\prime }_i\)s are weakly decreasing, without any change in their epistemic utilities. Suppose, for instance, that \(\textbf{s}=(0.10,0.30,0.20,0.40)\) and \(\textbf{r}=(0.15,0.30, 0.25,0.30)\). Note that \(\delta ^{\textbf{s},\textbf{r}}_{3}=0.05>0=\delta ^{\textbf{s},\textbf{r}}_{2}\) and so \(\delta ^{\textbf{s},\textbf{r}}_i\)s are not weakly decreasing. However, when the second and third credences are switched in both functions, so that \(\textbf{s}\) and \(\textbf{r}\), respectively, are transformed into \(\textbf{s}^\prime =(0.10,0.20,0.30,0.40)\) and \(\textbf{r}^\prime =(0.15,0.25,0.30,0.30)\), \(\delta ^{\textbf{s}^\prime ,\textbf{r}^\prime }_i\)s become weakly decreasing while \(\mathfrak {L}^A_{1}(\textbf{s})=\mathfrak {L}^A_{1}(\textbf{s}^\prime )\) and \(\mathfrak {L}^A_{1}(\textbf{r})=\mathfrak {L}^A_{1}(\textbf{r}^\prime )\). It can be said, as a result, that (*) does not undermine the generality of my proof.
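The reordering step can be checked numerically. The sketch below switches the second and third credences of both functions and confirms that the differences become weakly decreasing while the additive logarithmic utilities at the world where \(H_1\) is true are unchanged. The helper names and the small tolerance for floating-point comparison are assumptions of the sketch.

```python
import math

def additive_log(c, k=0):
    """Additive logarithmic rule: ln(c_k) plus ln(1 - c_i) for each false H_i."""
    return math.log(c[k]) + sum(
        math.log(1 - x) for i, x in enumerate(c) if i != k
    )

def deltas(s, r):
    """Differences r_i - s_i."""
    return [ri - si for si, ri in zip(s, r)]

def weakly_decreasing(xs, tol=1e-12):
    """True if each entry is no greater than its predecessor (up to tol)."""
    return all(b <= a + tol for a, b in zip(xs, xs[1:]))

def swap(c, i, j):
    """Copy of c with the entries at positions i and j exchanged."""
    out = list(c)
    out[i], out[j] = out[j], out[i]
    return out

s = [0.10, 0.30, 0.20, 0.40]
r = [0.15, 0.30, 0.25, 0.30]

# Exchange the credences in H_2 and H_3 (indices 1 and 2) in both functions.
s_prime = swap(s, 1, 2)
r_prime = swap(r, 1, 2)

print(weakly_decreasing(deltas(s, r)), weakly_decreasing(deltas(s_prime, r_prime)))
print(additive_log(s), additive_log(s_prime))
```

The utilities agree because the additive rule treats all false hypotheses symmetrically, so permuting them does not change the sum.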

Anyway, I will prove in what follows that (*) entails that \(\mathfrak {L}^A_{1}(\textbf{s})\le \mathfrak {L}^A_{1}(\textbf{r})\). In particular, I will suggest a way of constructing a sequence of \({\textbf {s}}_1, \ldots , {\textbf {s}}_{n-2}\) such that

under the assumption of (*).

Let me begin with proving the following lemma.

Lemma A3

Suppose that \(\textbf{c}=(c_{1},\ldots ,c_{n})\) is a coherent credence function such that \(c_{1} >0\) and \(c_{i}<1\) for any \(i\ne 1\). Then,

where \(k=2,\ldots ,n\).

Proof

Suppose that \(\textbf{c}\) is coherent, and that \(c_{1} > 0\), and \(c_{i}<1\) for any \(i\ne 1\). Then, we have that:

Moreover, it holds that \(\left( 1-c_{1}\right) ^{j}=\left( \sum _{i=2}^{n}c_{i}\right) ^{j}\ge \sum _{i=2}^{k}\left( c_{i}\right) ^{j}\) for any natural number j. Then, it follows from the above mathematical facts that

as required. \(\square \)

Now, I will suggest a particular way of constructing a credence function that is epistemically better relative to \(\mathfrak {L}^A\) than \({\textbf {s}}\) at the world where \(H_1\) is true. The credence functions so constructed will consist of \({\textbf {s}}_1, \ldots , {\textbf {s}}_{n-2}\) satisfying (**) under the assumption of (*). The following Theorem A4, which can be proved with the help of Lemma A3, specifies such a way.

Theorem A4

Suppose that \(\textbf{s}=(s_{1},\ldots ,s_{n})\) is a coherent credence function. Let’s define a credence function \(\textbf{s}_{x,k}\) as follows:

where x is a real number, and k is a natural number such that \(1\le k \le n-1\) and \(0\le x\le s_{n}/k\). Then, \(\mathfrak {L}^A_{1}(\textbf{s})\le \mathfrak {L}^A_{1}(\textbf{s}_{x,k})\) for any \(x\in [0,s_{n}/k]\).

Proof

It is not hard to see that this theorem is true when \(\textbf{s}\) is maximally opinionated, that is, when \(s_{i}=1\) for some i. On the one hand, when \(s_{i}=1\) for some \(i<n\), x should be zero and so the theorem is trivially true. On the other hand, when \(s_{n}=1\), it holds that \(\mathfrak {L}^A_{1}(\textbf{s})=\ln {0}+\sum _{i=2}^{n-1}\ln (1-0)+\ln {(1-1)}=-\infty \), and so the theorem is true. Moreover, the theorem is also trivially true when \(s_{n}=0\).

Now, consider the cases in which \(\textbf{s}\) is not maximally opinionated and \(s_{n}\ne 0\). Note that \(\mathfrak {L}^A_{1}(\textbf{s}_{x,k})\) can be regarded as a function of x. Let f be such a function. That is,

This function is continuous and continuously differentiable on \([0,s_{n}/k]\). Then, we obtain the following equations:

Note that \(f^{\prime \prime }(x)<0\) for any \(x\in [0,s_{n}/k]\), and so \(f^{\prime }(x)\) is a decreasing function of \(x\in [0,s_{n}/k]\). So, if

then it is guaranteed that f(x) is an increasing function of \(x\in [0,s_{n}/k]\), and hence that, for any \(x\in [0,s_{n}/k]\), \(f(0)=\mathfrak {L}^A_{1}(\textbf{s})\le \mathfrak {L}^A_{1}(\textbf{s}_{x,k})=f(x) \), which is the conclusion that we want to reach.

To prove (\(\dagger \)), let me first define another credence function \(\textbf{c}=(c_{1},\ldots ,c_{n})\), as follows:

Note that \(\textbf{c}\) is coherent, and that \(c_{1} >0\) and \(c_{i}<1\) for any \(i\ne 1\). (Recall that we assume that \(\textbf{s}\) is not maximally opinionated. So, \(s_i <1\) for any i.) Then, we obtain, with the help of Lemma A3, that:

where \(k=2,\ldots ,n-1\). Hence, it can be said that, when \(k=2,\ldots ,n-1\), the decreasing function \(f^{\prime }\) on \([0,s_{n}/k]\) has a positive minimum value, and so f(x)—i.e., \(\mathfrak {L}^A_{1}(\textbf{s}_{x,k})\)—is an increasing function of \(x\in [0,s_{n}/k]\). Therefore, we have that \(\mathfrak {L}^A_{1}(\textbf{s}_{x,k})\ge \mathfrak {L}^A_{1}(\textbf{s})\) for any \(x\in [0,s_{n}/k]\) when \(k=2,\ldots ,n-1\).

What about the case in which \(k=1\)? It is easy to see that \(\mathfrak {L}^A_{1}(\textbf{s}_{x,1})\ge \mathfrak {L}^A_{1}(\textbf{s})\) for any \(x\in [0,s_{n}]\). What is called ‘Truth-directedness’ entails that \(\textbf{s}_{x,1}=(s_{1}+x,s_{2},\ldots ,s_{n-1},s_{n}-x)\) is epistemically better than \(\textbf{s}=(s_{1},s_{2},\ldots ,s_{n-1},s_{n})\) at the world where \(H_1\) is true. Note that, while \(\textbf{s}_{x,1}\) is closer to the true hypothesis \(H_1\) than \({\textbf {s}}\), \(\textbf{s}_{x,1}\) is not closer to any false hypotheses than \({\textbf {s}}\). As a result, we have that \(\mathfrak {L}^A_{1}(\textbf{s}_{x,k})\ge \mathfrak {L}^A_{1}(\textbf{s})\) for any \(x\in [0,s_{n}/k]\) when \(k=1,\ldots ,n-1\), as required. \(\square \)

Using this theorem, we can prove our main result—that is, \(\mathfrak {L}^A\) satisfies Monotonicity\(^\delta \). For the proof, it may be helpful to consider a concrete example.

Example A5

Suppose that \(\textbf{s}=(0.10,0.20,0.30,0.40)\) and \(\textbf{r}=(0.25,0.30,0.33,0.12)\). Note that \(0\le \delta ^{\textbf{s},\textbf{r}}_{i}\le \delta ^{\textbf{s},\textbf{r}}_{1}\) for any \(i<4\), and \(\delta ^{\textbf{s},\textbf{r}}_{4}<0\). Regarding these functions, we can provide two other credence functions \(\textbf{s}^{1}\) and \(\textbf{s}^{2}\), as follows:

Here, a credence function \(\textbf{s}^{i}_{x,k}\) is generated from the corresponding credence function \(\textbf{s}^i\) in accordance with the definition in Theorem A4. Then, it can be said, with the help of the theorem, that \(\mathfrak {L}^A_{1}(\textbf{s})\le \mathfrak {L}^A_{1}(\textbf{s}^{1})\le \mathfrak {L}^A_{1} (\textbf{s}^{2})\le \mathfrak {L}^A_{1}(\textbf{r})\), as required by Monotonicity\(^\delta \).
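The chain of inequalities in this example can be checked numerically. The sketch below rests on an assumption about the form of \(\textbf{s}_{x,k}\): it adds x to each of the first k credences and subtracts kx from the last one, which agrees with the case \(\textbf{s}_{x,1}=(s_{1}+x,s_{2},\ldots ,s_{n-1},s_{n}-x)\) and with the bound \(0\le x\le s_{n}/k\) stated in Theorem A4. The step sizes 0.03, 0.07 and 0.05 are the ones produced by the recursion used in the proof of Theorem A6; `shift` is a helper name introduced here.

```python
import math

def additive_log(c, k=0):
    """Additive logarithmic rule: ln(c_k) plus ln(1 - c_i) for each false H_i."""
    return math.log(c[k]) + sum(
        math.log(1 - x) for i, x in enumerate(c) if i != k
    )

def shift(s, x, k):
    """Assumed form of s_{x,k}: add x to the first k credences, take k*x from the last."""
    return [v + x for v in s[:k]] + s[k:-1] + [s[-1] - k * x]

s = [0.10, 0.20, 0.30, 0.40]
r = [0.25, 0.30, 0.33, 0.12]

s1 = shift(s, 0.03, 3)    # roughly (0.13, 0.23, 0.33, 0.31)
s2 = shift(s1, 0.07, 2)   # roughly (0.20, 0.30, 0.33, 0.17)
s3 = shift(s2, 0.05, 1)   # coincides with r up to rounding

utilities = [additive_log(c) for c in (s, s1, s2, s3)]
print([round(u, 3) for u in utilities])
```

Each step moves credence from \(H_4\) to the leading hypotheses, and the utility at the world where \(H_1\) is true does not decrease along the way.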

In a similar way to this example, we can prove the main result, which is formulated as follows.

Theorem A6

Suppose that \(\textbf{s}=(s_{1},\ldots ,s_{n})\) and \(\textbf{r}=(r_{1},\ldots ,r_{n})\) are coherent credence functions. Suppose also that \(\delta ^{\textbf{s},\textbf{r}}_{n}\le 0 \le \delta ^{\textbf{s},\textbf{r}}_{n-1}\le \cdots \le \delta ^{\textbf{s},\textbf{r}}_{2}\le \delta ^{\textbf{s},\textbf{r}}_{1}\). Then, \(\mathfrak {L}^A_{1}(\textbf{s})\le \mathfrak {L}^A_{1}(\textbf{r})\).

Proof

I will use ‘\(\delta _i\)’ instead of ‘\(\delta ^{\textbf{s},\textbf{r}}_{i}\)’ for notational simplicity. Suppose that two credence functions \(\textbf{s}\) and \(\textbf{r}\) satisfy all assumptions of this theorem. Let’s define recursively a credence function \(\textbf{s}^{i}\), as follows:

- \(\textbf{s}^{1}=\textbf{s}_{\delta _{n-1},n-1}\);
- \(\textbf{s}^{i+1}=\textbf{s}_{\delta _{n-i-1}-\delta _{n-i},n-i-1}^{i}\) for \(i=1,\ldots ,n-2\).

Here, \(\textbf{s}^i_{x,k}\) is generated from the corresponding credence function \(\textbf{s}^i\) in accordance with the definition in Theorem A4. Then, it follows from Theorem A4 that:

Here, it can also be shown that \(\textbf{s}^{n-1}=\textbf{r}\). In particular, it holds that: for any i,

To understand this, it may be useful to pay attention to the underlined numbers in Example A5, where \(n=4\) and it holds, for instance, that:

Therefore, we obtain that \(\textbf{s}^{n-1}=\textbf{r}\), and so it follows from (\(\ddagger \)) that \(\mathfrak {L}^A_{1}(\textbf{s})\le \mathfrak {L}^A_{1}(\textbf{r})\), as required. \(\square \)
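The recursive construction used in this proof can be written out as a short program. This is a sketch under the same assumption about \(\textbf{s}_{x,k}\) as before (x is added to each of the first k credences and kx is subtracted from the last); `shift` and `build_chain` are names introduced here. Exact fractions are used so that the final function can be compared with \(\textbf{r}\) by plain equality.

```python
from fractions import Fraction as F

def shift(s, x, k):
    """Assumed form of s_{x,k}: add x to the first k credences, take k*x from the last."""
    return [v + x for v in s[:k]] + s[k:-1] + [s[-1] - k * x]

def build_chain(s, r):
    """Return [s^1, ..., s^(n-1)] following the recursion in the proof."""
    n = len(s)
    d = [ri - si for si, ri in zip(s, r)]     # d[j] holds delta_(j+1)
    chain = [shift(s, d[n - 2], n - 1)]       # s^1 = s_{delta_(n-1), n-1}
    for i in range(1, n - 1):                 # builds s^(i+1) for i = 1, ..., n-2
        x = d[n - i - 2] - d[n - i - 1]       # delta_(n-i-1) - delta_(n-i)
        chain.append(shift(chain[-1], x, n - i - 1))
    return chain

# The credence functions of Example A5.
s = [F("0.10"), F("0.20"), F("0.30"), F("0.40")]
r = [F("0.25"), F("0.30"), F("0.33"), F("0.12")]

chain = build_chain(s, r)
for c in chain:
    print([float(v) for v in c])
```

For the functions of Example A5 the chain has three members and its last member is \(\textbf{r}\) itself, as the proof claims for \(\textbf{s}^{n-1}\).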

As mentioned, Theorem A6 assumes that there is at most one \(\delta ^{\textbf{s},\textbf{r}}_i\) that has a negative value. As shown in the following example, however, this assumption does not undermine the generality of Theorem A6 and its proof.

Example A7

Suppose that \(\textbf{s}=(0.10,0.20,0.30,0.40)\) and \(\textbf{r}=(0.40,0.30,0.20,0.10)\). Note that there are two negative \(\delta ^{\textbf{s},\textbf{r}}_i\)s, namely \(\delta ^{\textbf{s},\textbf{r}}_3 = -0.1\) and \(\delta ^{\textbf{s},\textbf{r}}_4= -0.3\). Even so, we can provide two credence functions \(\textbf{s}^{1}\) and \(\textbf{s}^{2}\) such that \(\mathfrak {L}^A_{1}(\textbf{s})\le \mathfrak {L}^A_{1} (\textbf{s}^{1})\le \mathfrak {L}^A_{1}(\textbf{s}^{2})\le \mathfrak {L}^A_{1}(\textbf{r})\), as follows:

Note first that Theorem A4 ensures that \(\mathfrak {L}^A_{1}(\textbf{s})\le \mathfrak {L}^A_{1}(\textbf{s}^{1})\le \mathfrak {L}^A_{1}(\textbf{s}^{2})\). Now, consider the following two credence functions:

Here, \(\textbf{s}^{2*}\) is the credence function generated from \(\textbf{s}^2\) by switching \(s^2_3(=0.30)\) with \(s^2_4(=0.10)\). According to Theorem A4, it holds that \(\mathfrak {L}^A_{1}(\textbf{s}^{2*})\le \mathfrak {L}^A_{1}(\textbf{s}^{3*})\). On the other hand, the definition of the additive Logarithmic rule entails that \(\mathfrak {L}^A_{1}(\textbf{s}^2)=\mathfrak {L}^A_{1}(\textbf{s}^{2*})\) and \(\mathfrak {L}^A_{1}(\textbf{s}^{3*})=\mathfrak {L}^A_{1}(\textbf{r})\). Therefore, we have that \(\mathfrak {L}^A_{1}(\textbf{s}^{2})\le \mathfrak {L}^A_{1}(\textbf{r})\).

This example clearly shows that there is a way of proving, without the assumption in question, that \(\mathfrak {L}^A\) satisfies Monotonicity\(^\delta \).
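The endpoints of Example A7, and the permutation step used in it, can be checked numerically. The sketch below again assumes that \(\delta ^{\textbf{s},\textbf{r}}_{i}=r_i-s_i\) and that \(\mathfrak {L}^A_{1}(\textbf{c})=\ln c_1+\sum _{i\ne 1}\ln (1-c_i)\). The vector `c` is hypothetical: it only shares its third and fourth components (0.30 and 0.10) with \(\textbf{s}^2\), whose other components are not reproduced here.

```python
from fractions import Fraction
from math import isclose, log


def additive_log_accuracy(c, true_index=0):
    # Assumed form of the additive logarithmic rule at the world where
    # H_{true_index + 1} is true.
    return sum(
        log(x) if i == true_index else log(1 - x)
        for i, x in enumerate(c)
    )


s = [0.10, 0.20, 0.30, 0.40]
r = [0.40, 0.30, 0.20, 0.10]

# Exact deltas (assumed to be r_i - s_i), computed from decimal strings.
exact_deltas = [
    Fraction(b) - Fraction(a)
    for a, b in zip(["0.10", "0.20", "0.30", "0.40"],
                    ["0.40", "0.30", "0.20", "0.10"])
]
negative_indices = [i + 1 for i, d in enumerate(exact_deltas) if d < 0]

# Endpoint comparison claimed by the example.
endpoint_ok = additive_log_accuracy(s) <= additive_log_accuracy(r)

# Swapping two credences in false hypotheses leaves the score unchanged,
# because the assumed rule sums ln(1 - c_i) symmetrically over them.
c = [0.35, 0.25, 0.30, 0.10]          # hypothetical credence function
c_swapped = [0.35, 0.25, 0.10, 0.30]  # components 3 and 4 switched
swap_invariant = isclose(additive_log_accuracy(c),
                         additive_log_accuracy(c_swapped))

print(negative_indices, endpoint_ok, swap_invariant)
```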

Appendix II: Proofs regarding weak monotonicity and weak elimination

In this appendix, I will prove some propositions that are relevant to the discussions in Sect. 4. In particular, I demonstrate that Monotonicity\(^\delta \) entails Weak Monotonicity, and that all strictly proper accuracy measures satisfy Weak Elimination.

1.1 A Proof that monotonicity\(^\delta \) entails weak monotonicity

Suppose that \(\textbf{s}\) and \(\textbf{r}\) are coherent credence functions over a partition \({\mathbb {H}}=\left\{ H_{1},\ldots ,H_{n}\right\} \). For our purpose, it is sufficient to prove that: for any i,

entails \(\delta _{k}^{\textbf{s},\textbf{r}} \ge \delta _{i}^{\textbf{s},\textbf{r}}.\) Note that (b) entails that:

Thus, we have that \(r_k - s_k = \delta _{k}^{\textbf{s},\textbf{r}} \ge \delta _{i}^{\textbf{s},\textbf{r}} = r_i -s_i\), since (a) says that \(r_k s_i -r_i s_k \ge 0\). This reasoning holds for any i. Therefore, it can be concluded that Monotonicity\(^\delta \) entails Weak Monotonicity. The converse, however, does not hold. To see this, it suffices to show that at least one of (a) and (b) does not follow from the condition that \(\delta _{1}^{\textbf{s},\textbf{r}} \ge \delta _{i}^{\textbf{s},\textbf{r}}\) for any i. Suppose that \({\textbf {s}}=(0.7,0.2,0.1)\) and \({\textbf {r}}=(0.75,0.25,0)\). Then, we obtain that \(\delta _{1}^{\textbf{s},\textbf{r}} \ge \delta _{i}^{\textbf{s},\textbf{r}}\) for any i. However, we have that \(\pi _{1}^{\textbf{s},\textbf{r}}=15/14 < 5/4=\pi _{2}^{\textbf{s},\textbf{r}}\); that is, (a) does not hold for \(i=2\). Hence, we can conclude that the converse in question does not hold. \(\square \)
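The counterexample can be verified with exact arithmetic. The sketch below assumes that \(\delta ^{\textbf{s},\textbf{r}}_{i}=r_i-s_i\) and \(\pi ^{\textbf{s},\textbf{r}}_{i}=r_i/s_i\), which is the reading under which the stated values 15/14 and 5/4 come out. The variable names are illustrative only.

```python
from fractions import Fraction

s = [Fraction("0.7"), Fraction("0.2"), Fraction("0.1")]
r = [Fraction("0.75"), Fraction("0.25"), Fraction("0")]

# Assumed definitions: delta_i = r_i - s_i and pi_i = r_i / s_i.
deltas = [ri - si for si, ri in zip(s, r)]
ratios = [ri / si for si, ri in zip(s, r)]

# Antecedent of Monotonicity^delta with H_1 true: delta_1 >= delta_i for all i.
delta_condition = all(deltas[0] >= d for d in deltas)

# Condition (a) with k = 1: pi_1 >= pi_i for all i.
ratio_condition = all(ratios[0] >= p for p in ratios)

print(deltas, ratios, delta_condition, ratio_condition)
```

The first condition holds while the second fails at \(i=2\), which is what the failure of the converse requires.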

1.2 A Proof that the strictly proper accuracy measures satisfy weak elimination

Suppose that \(\textbf{s}\) and \(\textbf{r}\) are coherent credence functions over a partition \({\mathbb {H}}=\left\{ H_{1},\ldots ,H_{n}\right\} \). Suppose also that \(s_{n}>0=r_{n}\), and

for any i, j (\(\ne n\)). It is the case that \(n>1\). If not, \({\textbf {r}}\) cannot be coherent. Similarly, it cannot be the case that \(r_i =0\) for every \(i(\ne n)\), since \({\textbf {r}}\) is coherent. Note that (1) entails that there is a real number \(\pi \) such that \(r_{i}=\pi s_{i}\) for any \(i(\ne n)\). Similarly, it follows from (2) that there is a real number \(\bar{\pi }\) such that \((1-r_{i})=\bar{\pi } (1-s_{i})\) for any \(i(\ne n)\). Substituting the first equation into the second yields \(1-\bar{\pi }=(\pi -\bar{\pi })s_{i}\). Here \(\pi \ne \bar{\pi }\), since otherwise \(\pi =\bar{\pi }=1\) and \(\textbf{r}\) would agree with \(\textbf{s}\) on every \(H_i\) with \(i\ne n\), which contradicts \(s_n>0=r_n\). Hence, \(s_{i}=(1-\bar{\pi })/(\pi -\bar{\pi })\) for any \(i(\ne n)\). As a result, we have that \(s_{i}=s_{j}\) for any i, j (\(\ne n\)), which says that the old credences are evenly distributed over the hypotheses, except for the hypothesis \(H_{n}\).
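To illustrate, solving \(r_{i}=\pi s_{i}\) and \((1-r_{i})=\bar{\pi }(1-s_{i})\) for \(s_i\) gives \(s_{i}=(1-\bar{\pi })/(\pi -\bar{\pi })\), a value that does not depend on i. The following Python sketch checks this on a hypothetical pair of credence functions with \(n=3\) and \(s_3>0=r_3\); the pair is an assumption chosen for illustration and does not come from the paper.

```python
from fractions import Fraction

# Hypothetical credence functions with s_n > 0 = r_n and n = 3.
s = [Fraction(3, 10), Fraction(3, 10), Fraction(4, 10)]
r = [Fraction(1, 2), Fraction(1, 2), Fraction(0)]

# Ratios over the hypotheses other than H_n.
pis = [ri / si for si, ri in zip(s[:-1], r[:-1])]
pi_bars = [(1 - ri) / (1 - si) for si, ri in zip(s[:-1], r[:-1])]

# Conditions (1) and (2): the ratios are constant across i != n.
pi, pi_bar = pis[0], pi_bars[0]
constant_ratios = all(p == pi for p in pis) and all(p == pi_bar for p in pi_bars)

# Solving r_i = pi * s_i and 1 - r_i = pi_bar * (1 - s_i) for s_i.
solved_s = (1 - pi_bar) / (pi - pi_bar)

print(pi, pi_bar, solved_s)
```

The solved value coincides with each \(s_i\) for \(i\ne n\), as the even-distribution claim requires.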

Suppose now that \(\mathfrak {A}\) is a strictly proper accuracy measure. From the above result, it follows that there is a real number r such that \(r_{i}=r\) for any \(i(\ne n)\). This is because \(r_{i}=\pi s_{i}\) for any \(i(\ne n)\), and \(s_{i}=s_{j}\) for any i, j (\(\ne n\)). As a result, it holds that \(\mathfrak {A}_{i}(\textbf{s})=\mathfrak {A}_{j}(\textbf{s})\) and \(\mathfrak {A}_{i}(\textbf{r})=\mathfrak {A}_{j}(\textbf{r})\) for any i and j (\(\ne n\)). Therefore, we have that: for any \(k(\ne n)\),

As assumed, \(\mathfrak {A}\) is strictly proper. Hence, we have that: for any \(k(\ne n)\),

As mentioned, \(n\ne 1\) and \(r\ne 0\). Therefore, we obtain that \(\mathfrak {A}_k (\textbf{s})<\mathfrak {A}_k (\textbf{r})\) for any \(k(\ne n)\). \(\square \)
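The conclusion can be illustrated with one familiar strictly proper measure. The sketch below uses the negative Brier score as the accuracy measure \(\mathfrak {A}\), which is an assumption made only for illustration, since the proof covers every strictly proper measure. The helper `weak_elimination_pair` is hypothetical: it builds an old credence function that is evenly distributed over \(H_1,\ldots ,H_{n-1}\) and a new one that eliminates \(H_n\).

```python
from fractions import Fraction


def brier_accuracy(c, true_index):
    # Negative Brier score at the world where H_{true_index + 1} is true.
    return -sum(
        (x - (1 if i == true_index else 0)) ** 2
        for i, x in enumerate(c)
    )


def weak_elimination_pair(n, s_last):
    """Hypothetical helper: s is uniform over H_1..H_{n-1} with s_n = s_last,
    and r is uniform over H_1..H_{n-1} with r_n = 0."""
    s = [(1 - s_last) / (n - 1)] * (n - 1) + [s_last]
    r = [Fraction(1, n - 1)] * (n - 1) + [Fraction(0)]
    return s, r


results = {}
for n in (3, 4):
    s, r = weak_elimination_pair(n, Fraction(2, 5))
    # Compare accuracy at every world k != n.
    results[n] = all(
        brier_accuracy(s, k) < brier_accuracy(r, k) for k in range(n - 1)
    )

print(results)
```

In both cases the new credence function is strictly more accurate at every world other than the one in which the eliminated hypothesis is true.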

Cite this article

Park, I. Evidence and the epistemic betterness. Synthese 202, 119 (2023). https://doi.org/10.1007/s11229-023-04350-9
