Abstract

Fluid administration is a cornerstone of the treatment of critically ill patients. The aim of this review is to reappraise the pathophysiology of fluid therapy, considering the mechanisms underlying the interplay of flow and pressure variables, the systemic response to the shock syndrome, the effects of the different types of fluids administered and the concepts of preload dependency and preload responsiveness. In this context, the link between preload, stroke volume (SV) and fluid administration is that the volume infused has to be large enough to increase the driving pressure for venous return, and that the resulting increase in end-diastolic volume produces an increase in SV only if both ventricles are operating on the steep part of the Frank–Starling curve. As a consequence, fluids should be given as drugs and, accordingly, both the dose and the rate of administration affect the final outcome. Titrating fluid therapy, in terms of both the overall volume infused and the type of fluid used, is a key component of fluid resuscitation. A single, reliable, and feasible physiological or biochemical parameter to define the balance between the changes in SV and oxygen delivery (i.e., coupling “macro” and “micro” circulation) is still not available, making the diagnosis of acute circulatory dysfunction primarily clinical.

Take-home messages

- Fluids are drugs used in patients with shock to increase cardiac output with the aim of improving oxygen delivery to the cells. The response to fluid administration is determined by the physiological interaction of cardiac function and venous return. In septic shock, the beneficial clinical response to fluid administration declines rapidly after a few hours, and fluid titration is crucial to avoid detrimental fluid overload. The fluid challenge is a fluid bolus given at a defined volume and rate to assess fluid responsiveness.

- The ideal fluid for critically ill patients does not exist; however, crystalloids should be used as first choice. Balanced crystalloid solutions may be associated with better outcomes, but the quality of the evidence is still low. Albumin infusion may have a role in patients who have already been fluid resuscitated and are at risk of fluid overload.

- Fluid administration is integrated into the complex management of “macro” hemodynamic variables (pressure and flow), coupled to the “micro” local tissue flow distribution and regional metabolism. Macro-variables are managed by measuring systemic blood pressure and evaluating global cardiac function. The critical threshold of oxygen delivery to the cells is difficult to estimate; however, several indexes and clinical signs may be considered as surrogates and integrated into a decision-making process at the bedside.


Background

Fluid administration is one of the most common, but also one of the most disputed, interventions in the treatment of critically ill patients. Even more debated is how to appraise and manage the response to fluid administration (in terms of flow and pressure variables), with approaches ranging from a prosaic “just give fluids” to the fluid challenge, to the evaluation of fluid responsiveness before fluid administration and, finally, to recent approaches based on machine learning and artificial intelligence aimed at personalizing its use [1,2,3].

Shock occurs in many intensive care unit (ICU) patients and is a life-threatening condition that requires both prompt recognition and treatment to restore adequate tissue perfusion and thus oxygen delivery to the cells [4]. A large trial in more than 1600 patients admitted to the ICU with shock requiring vasopressors showed that septic shock was the most frequent type, occurring in 62% of patients, while cardiogenic (16%), hypovolemic (16%), other distributive (4%) and obstructive (2%) shock were less frequent. The progression of this syndrome is associated with mitochondrial dysfunction and dysregulated cell-signaling pathways, which can lead to multiple organ damage and failure and, eventually, to untreatable hemodynamic instability and death [5].

Optimal treatment of shock is time-dependent and requires prompt and adequate combined support with fluids and/or vasopressors [4, 6,7,8]. The rationale, supported by robust evidence from several physiological and clinical studies, is to improve oxygen delivery (DO2) so that systemic oxygen requirements can be met [4, 6]. DO2 is defined as the product of arterial oxygen content and cardiac output (CO). Inadequate cellular oxygen utilization results either from a tissue oxygen demand exceeding DO2 or from the cells' inability to use O2. Our understanding of the mechanisms of shock has improved over the last decades, shifting clinical practice from a “one size fits all” policy to individualized management [4, 9, 10].
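Because DO2 is a product, any increase in CO obtained with fluids raises DO2 proportionally as long as arterial oxygen content is unchanged. The sketch below makes this explicit using the conventional bedside formula for arterial O2 content (1.34 ml O2 per g of hemoglobin plus 0.003 ml O2 per dl per mmHg of dissolved oxygen). The patient values are hypothetical and chosen only for illustration.

```python
def arterial_o2_content(hb_g_dl: float, sao2: float, pao2_mmhg: float) -> float:
    """Arterial O2 content (CaO2) in ml O2 per dl of blood.

    Conventional constants: 1.34 ml O2 per g of hemoglobin (bound O2) and
    0.003 ml O2 per dl per mmHg (dissolved O2). sao2 is a fraction, e.g. 0.95.
    """
    return 1.34 * hb_g_dl * sao2 + 0.003 * pao2_mmhg


def oxygen_delivery(co_l_min: float, cao2_ml_dl: float) -> float:
    """DO2 in ml O2/min; the factor 10 converts ml/dl to ml/l."""
    return co_l_min * cao2_ml_dl * 10


# Hypothetical patient: Hb 10 g/dl, SaO2 95%, PaO2 80 mmHg
cao2 = arterial_o2_content(hb_g_dl=10.0, sao2=0.95, pao2_mmhg=80.0)
print(round(cao2, 2))                      # 12.97
print(round(oxygen_delivery(4.0, cao2)))   # 519
print(round(oxygen_delivery(6.0, cao2)))   # 778
```

Raising CO from 4 to 6 l/min at the same CaO2 increases DO2 by 50%, which is the physiological rationale for giving fluids only to patients whose CO will actually rise.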

Fluids are the first line of treatment in critically ill patients with acute circulatory failure, aiming to increase venous return, stroke volume (SV) and, consequently, CO and DO2 [4]. The effect on blood pressure of the increase in CO that follows fluid resuscitation is not linear and depends on baseline conditions, notably vascular tone (as discussed below) [4, 11,12,13,14,15].

Dr Thomas Latta first described the technique of fluid resuscitation to treat shock in 1832, in a letter to the editor of The Lancet [16]. He injected repeated small boluses of a crystalloid solution into an elderly woman and observed that the first bolus did not produce a clinically relevant effect; however, after multiple boluses (2.8 L overall) ‘soon the sharpened features, sunken eye, and fallen jaw, pale and cold, bearing the manifest imprint of death's signet, began to glow with returning animation; the pulse returned to the wrist’. This woman was, in effect, the first fluid responder reported in the literature.

This account from the past raises several physiological and clinical issues that are still relevant almost 200 years later:

CO is the dependent variable of the physiological interaction between cardiac function (described by Otto Frank and Ernest Starling more than 100 years ago) [17] and venous return (based on Guyton’s relationship between the elastic recoil of venous capacitance vessels, the volume stretching the veins, venous compliance and the resistance of the venous system) [18, 19]. Fluid responsiveness indicates that the patient's heart is operating on the steep part of the Frank–Starling curve, whereas fluid non-responsiveness is observed on the flat part of the curve, where an increase in preload does not increase CO further [20,21,22]. The woman treated by Dr Latta probably did not respond to the first fluid bolus because the volume infused was insufficient to increase venous return (i.e., to change the stressed volume, which depends on venous compliance) [18, 19]. Thus, the volume infused is a crucial factor. The most recent Surviving Sepsis Campaign guidelines again recommend administering an initial fluid volume of at least 30 ml/kg in patients with sepsis, which is considered, on average, a safe and effective target [6]. However, as the goal of fluid therapy is to increase SV and thus CO, fluids should be given only if the individual patient has not reached the plateau of the cardiac function curve. Indeed, fluid administration that does not increase CO can be considered futile, probably even before this plateau is reached. The fluid challenge (FC) is a hemodynamic diagnostic test consisting of the administration of a fixed volume of fluid to identify fluid-responsive patients, i.e., those whose CO will increase in response to fluid infusion [12, 23, 24]. This approach allows individual titration of fluids and reduces the risk of fluid overload, which affects patients’ clinical outcome and mortality [9, 25,26,27].

In clinical practice, the likelihood of a beneficial clinical response to an FC declines rapidly within a few hours of the onset of septic shock resuscitation, which makes the optimization of fluid therapy quite complex without hemodynamic monitoring and resuscitation targets (e.g., a CO increase above predefined thresholds) [28].

Fluid administration in responsive shock patients is associated with clinically evident signs of restored organ perfusion. Hence, administering fluids during shock while observing the patient’s clinical improvement at the bedside has proven reasonable since 1832. Does the target matter? No single clinical or laboratory variable unequivocally represents tissue perfusion status; therefore, a multimodal assessment is recommended [4]. Several aspects should be taken into account when identifying a variable as a potential trigger or target for fluid resuscitation, but, most importantly, the variable has to be flow-sensitive [29]. This means choosing a variable that responds almost in real time to increases in systemic blood flow and/or perfusion pressure and is therefore suitable for assessing the effect of a fast-acting therapy such as a fluid bolus over a very short period of time (e.g., 15 min) [30]. Persistent hyperlactatemia may not be an adequate trigger, since it has multiple aetiologies, including some that are not perfusion-related in many patients (e.g., hyperadrenergism or liver dysfunction), and pursuing lactate normalization may thus increase the risk of fluid overload [31]. Indeed, a recent study showed that systemic lactate levels remained elevated in 50% of a cohort of septic shock patients who ultimately survived. In contrast, flow-sensitive variables such as peripheral perfusion, central venous O2 saturation and venous–arterial pCO2 gradients were normal in almost 80% of patients at two hours [32]. Peripheral perfusion, as assessed by capillary refill time (CRT), appears to be a physiologically sound variable to use as both a trigger and a target for fluid resuscitation. A robust body of evidence confirms that abnormal peripheral perfusion after early [33] or late [34,35,36] resuscitation is associated with increased morbidity and mortality.
Cold, clammy skin, mottling and prolonged CRT have been suggested as triggers for fluid resuscitation in patients with septic shock. Moreover, the excellent prognosis associated with a normal CRT or its recovery, its rapid response to fluid loading, its relative simplicity, its availability in resource-limited settings and its capacity to change in parallel with the perfusion of physiologically relevant territories such as the hepatosplanchnic region [37] are strong reasons to consider CRT as a target for fluid resuscitation in septic shock patients. A recent major randomized controlled trial (RCT) found that CRT-targeted resuscitation was associated with a trend toward lower mortality, less organ dysfunction and lower treatment intensity than lactate-targeted resuscitation, including less resuscitation fluid [38, 39]. Septic shock is characterized by a combination of decreased vascular tone affecting both arterioles and venules, myocardial depression, altered regional blood flow distribution and microvascular perfusion, and increased vascular permeability. Moreover, macro- and microcirculation are physiologically regulated to maintain mean arterial pressure (MAP) by adapting CO to the local tissue flow distribution, which is associated with regional metabolism. From a clinical perspective, once normal organ perfusion has been achieved, there is little rationale for augmenting macro-hemodynamic variables (MAP and CO) with fluids or vasopressors.

The patient's pulse “returned to the wrist”, implying that her flow and pressure responses were linked. In daily practice, hypotension is frequently used to trigger fluid administration, and many ICU physicians also use a MAP target as an indicator to stop fluid infusion [40]. This approach is flawed in several respects. First, restoring MAP above predetermined targets does not necessarily mean reversing shock; similarly, a MAP below predefined thresholds does not necessarily indicate shock [4]. Second, and more importantly, the physiological relationship between changes in SV and changes in MAP is not straightforward and depends on vascular tone and arterial elastance. In patients with high vasomotor tone, an increase in SV after fluid administration will be associated with an increase in MAP. This is typically the case in patients with pure hypovolemia, such as hemorrhagic shock, in whom the physiological response to hemorrhage includes severe venous and arterial vasoconstriction. In patients with low vasomotor tone, as in sepsis but also during deep anesthesia, MAP hardly changes after fluid administration even though SV may increase markedly. The lack of a significant relationship between MAP and SV has been demonstrated in many ICU patients, especially during septic shock [41,42,43]. Interestingly, dynamic arterial elastance (computed as the respiratory variation in pulse pressure divided by the respiratory variation in SV) can be used to identify patients who are likely to increase their MAP in response to fluids [44, 45], but this requires specific monitoring tools. Finally, the main purpose of fluid administration is to increase tissue perfusion; hence, changes in MAP should be considered beneficial but not regarded as the main target of fluid administration [46].
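Dynamic arterial elastance (abbreviated here as Eadyn) is the ratio of two respiratory variations measured over the same mechanical breath. The sketch below assumes the usual "variation" definition, (max − min) divided by the mean of max and min, applied to both pulse pressure (PPV) and SV (SVV); the beat values are hypothetical. A higher Eadyn means that a given change in SV translates into a larger change in pulse pressure. No decision cut-off is assumed, since none is given in the text.

```python
def variation(max_value: float, min_value: float) -> float:
    """Respiratory variation as a fraction: (max - min) / mean(max, min)."""
    return (max_value - min_value) / ((max_value + min_value) / 2)


def dynamic_arterial_elastance(pp_max: float, pp_min: float,
                               sv_max: float, sv_min: float) -> float:
    """Eadyn = pulse pressure variation (PPV) / stroke volume variation (SVV)."""
    ppv = variation(pp_max, pp_min)
    svv = variation(sv_max, sv_min)
    return ppv / svv


# Hypothetical extreme beats over one breath: pulse pressure in mmHg, SV in ml
ea_dyn = dynamic_arterial_elastance(pp_max=50, pp_min=40, sv_max=80, sv_min=70)
print(round(ea_dyn, 2))  # 1.67
```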

Since human physiology has remained unchanged over the centuries, the mechanisms underlying the interplay of flow and pressure, the systemic response of these variables to the shock syndrome, the effects of fluid administration and the concepts of preload dependency and preload responsiveness are still valid. This paper aims to integrate these physiological concepts with recent advances in three main pathophysiological aspects of fluid administration in ICU patients.

The fluid challenge, fluid bolus and fluid infusion: does the rate of administration matter?

According to the Frank–Starling law, there is a curvilinear relationship between preload (the end-diastolic transmural pressure) and the generated SV, which is affected by the inotropic state of the heart muscle (for a given preload, increased inotropy enhances the response and hence SV, and decreased inotropy reduces it). The curve is classically subdivided into two zones: (1) a steep part, where small changes in preload produce a marked increase in SV (preload-dependent zone), and (2) a flat part, where SV is minimally or not affected by preload changes (preload-independent zone).
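The two zones can be illustrated with a saturating curve. The sketch below assumes a simple exponential form, SV = SVmax × (1 − e^(−k × preload)); the functional form and the parameters `sv_max` and `k` are hypothetical choices for illustration, not a validated model of any patient. The same preload increment is applied on the steep part and near the plateau.

```python
import math


def stroke_volume(preload: float, sv_max: float = 100.0, k: float = 0.25) -> float:
    """Hypothetical saturating Frank-Starling curve: SV = sv_max * (1 - exp(-k * preload)).

    preload is in arbitrary units (e.g., mmHg); sv_max (ml) and k are illustrative.
    A higher sv_max would mimic increased inotropy (the whole curve shifts upward).
    """
    return sv_max * (1 - math.exp(-k * preload))


def delta_sv(preload: float, step: float = 2.0) -> float:
    """SV gained by the same preload increment, starting from a given preload."""
    return stroke_volume(preload + step) - stroke_volume(preload)


steep = delta_sv(4.0)   # starting on the steep (preload-dependent) part
flat = delta_sv(20.0)   # starting near the plateau (preload-independent part)
print(round(steep, 1), round(flat, 1))  # 14.5 0.3
```

Under these assumptions, the identical preload step yields roughly 50 times more SV on the steep part than near the plateau, which is why the same fluid bolus can produce a response in one patient and none in another.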

The physiological link between preload, SV and fluid administration is that the volume infused has to be large enough to increase the driving pressure for venous return, and that the resulting increase in end-diastolic volume produces an increase in SV only if both ventricles are operating on the steep part of the curve. Accordingly, the FC may be defined as the smallest volume required to effectively challenge the system. Thus, the only reason to give fluids during resuscitation of circulatory shock is to increase mean systemic pressure, with the aim of increasing the driving pressure for venous return (defined as mean systemic pressure minus right atrial pressure), as shown in a recent prospective study exploring the cardiovascular determinants of the response to resuscitation efforts in septic patients [47]. Most FCs will increase mean systemic pressure if given in large enough volumes and at a fast enough rate, as described below. However, if right atrial pressure rises at the same time, the driving pressure for venous return may not increase; this suggests that the patient is not volume responsive, and their preload responsiveness status needs to be reassessed.
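The reasoning above can be written as a small check on pressures measured before and after an FC. This is a minimal sketch that uses only the definition given in the text (driving pressure = mean systemic pressure minus right atrial pressure); the function names, the three-way classification and the pressure values are hypothetical. It deliberately ignores the resistance to venous return and cardiac function, which also determine whether venous return actually rises.

```python
def driving_pressure(pmsf_mmhg: float, rap_mmhg: float) -> float:
    """Driving pressure for venous return = mean systemic pressure - right atrial pressure."""
    return pmsf_mmhg - rap_mmhg


def assess_fluid_challenge(pmsf_before: float, rap_before: float,
                           pmsf_after: float, rap_after: float) -> str:
    """Illustrative reading of the pressure response to an FC (all values in mmHg)."""
    d_pmsf = pmsf_after - pmsf_before
    d_drive = (driving_pressure(pmsf_after, rap_after)
               - driving_pressure(pmsf_before, rap_before))
    if d_pmsf <= 0:
        return "challenge too small: mean systemic pressure did not rise"
    if d_drive <= 0:
        return "RAP rose as much as Pmsf: reassess preload responsiveness"
    return "driving pressure increased: venous return can increase"


# Hypothetical measurements (mmHg)
print(assess_fluid_challenge(18, 8, 22, 9))   # driving pressure 10 -> 13
print(assess_fluid_challenge(18, 8, 22, 12))  # driving pressure 10 -> 10
print(assess_fluid_challenge(18, 8, 18, 8))   # no change in Pmsf
```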

Considering the FC as a drug (i.e., studying its effect with a pharmacodynamic approach) has been the topic of only a few studies. The first, small study, conducted by Aya et al. in postoperative patients, showed that the minimum volume required to perform an effective FC was 4 ml/kg [48]. However, most studies of fluid responsiveness and FC response in ICU patients use a volume of 500 ml (on average) [49], which is well above 4 ml/kg for the vast majority of ICU patients. Interestingly, 500 ml was also the median volume administered in clinical practice in the FENICE study (an observational study including 311 centers across 46 countries) [40], whereas a lower mean volume (250 ml) is usually used in high-risk surgical patients undergoing goal-directed therapy optimization [50]. This difference may imply that a larger fluid bolus is often adopted not just to assess fluid responsiveness but also to treat an episode of hemodynamic instability, i.e., with a therapeutic intent. Since repeated fluid boluses may increase the risk of fluid overload, predicting fluid responsiveness before FC administration is a key point that, unfortunately, remains challenging [4, 51,52,53,54]. In fact, several bedside clinical signs, systemic pressures and static volumetric variables used in clinical practice are poorly predictive of the effect of an FC [53,54,55]. To overcome these limitations, bedside functional hemodynamic assessment has gained popularity: it consists of a maneuver that affects cardiac function and/or heart–lung interactions and elicits a hemodynamic response whose extent differs between fluid responders and non-responders [53,54,55,56].
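To see how far a fixed 500 ml bolus exceeds the 4 ml/kg minimum reported by Aya et al., the dose can be normalized to body weight. The body weights below are hypothetical; the only inputs taken from the text are the 500 ml volume and the 4 ml/kg figure.

```python
MIN_EFFECTIVE_FC_ML_PER_KG = 4.0  # minimum effective FC volume reported by Aya et al.
STANDARD_FC_ML = 500.0            # volume most commonly used in FC studies


def ml_per_kg(volume_ml: float, weight_kg: float) -> float:
    """Weight-normalized fluid dose (ml/kg)."""
    return volume_ml / weight_kg


# Body weight at which 500 ml corresponds exactly to 4 ml/kg
print(STANDARD_FC_ML / MIN_EFFECTIVE_FC_ML_PER_KG)  # 125.0

for weight_kg in (50, 70, 90, 110):  # hypothetical patients
    dose = ml_per_kg(STANDARD_FC_ML, weight_kg)
    ratio = dose / MIN_EFFECTIVE_FC_ML_PER_KG
    print(f"{weight_kg} kg: {dose:.1f} ml/kg ({ratio:.1f}x the 4 ml/kg minimum)")
```

Only patients heavier than 125 kg would receive less than 4 ml/kg from a 500 ml bolus, which supports the statement that 500 ml is well above the minimum effective dose for most ICU patients.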

Recent studies have investigated the different aspects of FC administration, showing that the amount of fluid given, the rate of administration and the threshold used to define fluid responsiveness all affect the final outcome of an FC [57,58,59,60,61]. An RCT showed that the duration of FC administration affected the rate of fluid responsiveness, which fell from 51.0% after a 4 ml/kg FC completed in 10 min to 28.5% after an FC completed in 20 min [57]. However, this study was conducted in a limited sample of neurosurgical patients during a period of hemodynamic stability, which limits the external validity of the results to other surgical settings or to critically ill patients.
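The effect of the responsiveness threshold can be shown with a handful of measurements. This sketch classifies a patient as a responder when CO rises by at least a given percentage after the FC; the 10% and 15% thresholds are assumptions (values commonly used in the literature, not specified in the text), and the CO pairs are hypothetical.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change in percent."""
    return 100.0 * (after - before) / before


def is_responder(co_before: float, co_after: float, threshold_pct: float) -> bool:
    """Fluid responder if CO (or SV) rises by at least threshold_pct percent."""
    return percent_change(co_before, co_after) >= threshold_pct


def count_responders(pairs, threshold_pct: float) -> int:
    return sum(is_responder(before, after, threshold_pct) for before, after in pairs)


# Hypothetical CO (l/min) before and after the same FC in five patients
measurements = [(4.0, 4.3), (4.0, 4.5), (5.0, 5.8), (3.5, 3.6), (4.2, 4.9)]
for threshold in (10.0, 15.0):
    n = count_responders(measurements, threshold)
    print(f"threshold {threshold:.0f}%: {n}/{len(measurements)} responders")
```

With identical data, moving the threshold from 10% to 15% turns one of the five patients from a responder into a non-responder, illustrating why the definition used must be reported alongside FC results.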

What would be the best rate of infusion when fluid boluses are given without using the FC technique? It has been postulated that slower rates may limit vascular leakage owing to a less abrupt increase in hydrostatic pressure. Recently, a large multicenter trial randomized 10,520 patients to receive fluids either at an infusion rate reflecting the current standard of care [a 500 ml fluid bolus over approximately 30 min, i.e., the upper limit of infusion rate for infusion pumps (999 ml/h; ≈16.7 ml/min)] or at a slower rate (333 ml/h; ≈5.6 ml/min), which is below the 25th percentile of the rates observed in the FENICE cohort study [62]. Importantly, the rates adopted in this trial were slower overall than those adopted in clinical studies where the FC is used to correct hemodynamic instability (e.g., 500 ml in 10 min = 50 ml/min; 500 ml in 20 min = 25 ml/min), suggesting that the trial tested fluid boluses, just not at the rates typical of an FC. Neither the primary outcome (90-day mortality) nor any of the secondary clinical outcomes during the ICU stay differed between the two groups, suggesting that the infusion rate of continuous fluid administration for volume expansion does not affect clinical outcomes [62]. This was not unexpected, as only the administration rate differed, while the total amount of fluid was identical and the proportion of volume-responsive patients was probably also similar in the two groups (although it was not measured, randomization of such large groups makes a similar proportion likely).
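The rates compared above are quoted in different units (ml/h for pumps, ml/min for FCs). The short conversion below puts them on a common scale and shows how long each takes to deliver 500 ml; the four rates are those given in the text.

```python
def ml_h_to_ml_min(rate_ml_h: float) -> float:
    """Convert an infusion rate from ml/h to ml/min."""
    return rate_ml_h / 60.0


def minutes_to_infuse(volume_ml: float, rate_ml_min: float) -> float:
    """Time (min) needed to infuse a volume at a constant rate."""
    return volume_ml / rate_ml_min


rates_ml_min = {
    "FC, 500 ml in 10 min": 500 / 10,
    "FC, 500 ml in 20 min": 500 / 20,
    "trial faster arm, 999 ml/h": ml_h_to_ml_min(999),
    "trial slower arm, 333 ml/h": ml_h_to_ml_min(333),
}
for label, rate in rates_ml_min.items():
    print(f"{label}: {rate:.1f} ml/min, 500 ml in {minutes_to_infuse(500, rate):.0f} min")
```

The faster trial arm delivers 500 ml in about 30 min and the slower arm in about 90 min, i.e., three to nine times longer than a typical 10 min FC.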

Fluids and ICU outcomes: does the type of fluid matter?

The ideal fluid for patients in shock should have a composition similar to that of plasma, to support cellular metabolism and avoid organ dysfunction, and should achieve a sustained increase in intravascular volume to optimize CO. Unfortunately, no ideal fluid exists. The available fluids are broadly divided into three groups: crystalloids, colloids and blood products. The latter have only a few, very specific indications, including shock in trauma patients and hemorrhagic shock, and will not be discussed in this review.

Colloids are composed of large molecules designed to remain in the intravascular space for several hours, increasing plasma oncotic (colloid osmotic) pressure and reducing the need for further fluids. Despite these theoretical advantages, patients with sepsis often have glycocalyx alterations and increased endothelial permeability, which may lead to extravasation of the large colloid molecules [63, 64], increasing the risk of global increased permeability syndrome and abolishing their primary advantage [65]. Colloids are further divided into semisynthetic colloids and albumin. Semisynthetic colloids include hydroxyethyl starches, dextrans and gelatins, which have shown either no benefit [66] or detrimental effects in critically ill patients, such as an increased risk of acute kidney injury (AKI) [67, 68]. Thus, semisynthetic colloids should no longer be used in shock patients.

Albumin is distributed in the intravascular and extravascular fluid. In health, up to 5% of intravascular albumin leaks into the extravascular space per hour [transcapillary escape rate (TER)], giving a distribution half-time of about 15 h. This rate may increase to 20% or more in septic shock. Accordingly, the measured transcapillary escape rate of albumin to the tissues (the so-called “TCERA”) is considered an index of vascular permeability [69].
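The link between the hourly escape fraction and the half-time can be checked with a first-order model, t½ = ln 2 / k. This is a simplifying assumption: it treats the escape rate as a constant fraction per hour and ignores lymphatic return of albumin to the circulation.

```python
import math


def half_time_hours(fractional_escape_per_hour: float) -> float:
    """Half-time of intravascular albumin, assuming first-order (exponential)
    escape at a constant fractional rate and no lymphatic return: t1/2 = ln(2) / k."""
    return math.log(2) / fractional_escape_per_hour


for k in (0.05, 0.20):  # 5%/h in health, 20%/h in septic shock (figures from the text)
    print(f"TER {k:.0%}/h -> half-time {half_time_hours(k):.1f} h")
```

Under this assumption, 5%/h gives about 14 h, close to the roughly 15 h quoted, whereas 20%/h shortens the half-time to about 3.5 h, which is why increased permeability erodes the intravascular advantage of albumin.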

The role of albumin in fluid therapy is still debated [64]. Although albumin is theoretically promising because of its anti-inflammatory and antioxidant properties [70] and its supposedly longer intravascular retention, due to the interaction between its negative surface charges and the endothelial glycocalyx [70], clinical data have been conflicting [30, 71]. While the use of albumin was associated with improved MAP, the relative risk of mortality was similar to that with crystalloid infusion [71]. A predefined subgroup analysis of the ‘Comparison of Albumin and Saline for Fluid Resuscitation in the Intensive Care Unit’ (SAFE) study suggested that albumin should be avoided in patients with traumatic brain injury. In contrast, albumin is recommended for patients with chronic liver disease and, in combination with terlipressin, for patients with hepatorenal syndrome [72, 73]. The most recent Surviving Sepsis Campaign guidelines also suggest using albumin in patients with sepsis who have received large volumes of crystalloids [6].

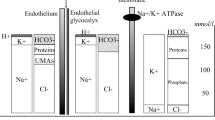

On the other side of fluid therapy, crystalloids are composed of water and electrolytes [74]. Saline 0.9% was the first crystalloid solution to be used in humans. Its drawbacks are unphysiological concentrations of chloride and sodium and a high osmolarity, which have been associated with nephrotoxicity and hyperchloremic acidosis [75]. Extracellular chloride influences the tone of the afferent glomerular arterioles, directly affecting the glomerular filtration rate (GFR). Several balanced solutions have since been introduced, such as Ringer’s lactate (Hartmann’s solution), Ringer’s acetate and Plasma-Lyte. These solutions have a lower chloride concentration and a lower osmolarity (280–294 mOsm/l) and are buffered with lactate or acetate to maintain electroneutrality. In healthy adult volunteers, infusion of 2 l of saline 0.9% decreased urinary excretion of water and sodium compared with a balanced crystalloid solution [76].
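The practical meaning of an “unphysiological” chloride concentration is easier to see as a load. The sketch below computes the chloride delivered by 2 l (the volume used in the volunteer study mentioned above) and its excess over a plasma-like concentration. The chloride concentrations are assumptions taken from typical product labels (154 mmol/l for saline 0.9%, 98 mmol/l for Plasma-Lyte 148), and 100 mmol/l is a hypothetical reference plasma value; none of these numbers come from the text.

```python
# ASSUMPTION: typical label chloride concentrations (mmol/l), not taken from the text
CHLORIDE_MMOL_L = {
    "saline 0.9%": 154,
    "Plasma-Lyte 148": 98,
}
PLASMA_CHLORIDE_MMOL_L = 100  # hypothetical reference plasma chloride


def chloride_load(cl_mmol_l: float, volume_l: float) -> float:
    """Total chloride infused (mmol)."""
    return cl_mmol_l * volume_l


def chloride_excess(cl_mmol_l: float, volume_l: float) -> float:
    """Chloride infused above (or below) a plasma-like concentration (mmol)."""
    return (cl_mmol_l - PLASMA_CHLORIDE_MMOL_L) * volume_l


volume_l = 2.0
for fluid, cl in CHLORIDE_MMOL_L.items():
    print(f"{fluid}: {chloride_load(cl, volume_l):.0f} mmol Cl-, "
          f"{chloride_excess(cl, volume_l):+.0f} mmol relative to plasma")
```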

Several recent RCTs have assessed the effect of balanced solutions versus saline 0.9% in critically ill patients (Table 1). The SPLIT trial, conducted in 4 ICUs, showed no difference between the groups [77]. The SMART trial was a single-center study (5 ICUs in 1 academic center) comparing balanced crystalloids (lactated Ringer’s solution or Plasma-Lyte A) with saline 0.9% in critically ill adults admitted to the ICU [78]. A significant difference in favor of balanced crystalloids was found in the composite outcome MAKE30 (major adverse kidney events within 30 days), consisting of death from any cause, new renal replacement therapy or persistent renal dysfunction within 30 days [78]. The Plasma-Lyte 148® versus Saline (PLUS) study was a blinded RCT in 5037 adult patients expected to stay in the ICU for at least 72 h and requiring fluid resuscitation [79]. Patients with traumatic brain injury or at risk of cerebral edema were excluded. There was no significant difference in 90-day mortality or AKI between the groups. Similarly, the ‘Balanced Solutions in Intensive Care Study’ (BaSICS), a multicenter double-blind RCT comparing the same fluid solutions in 11,052 patients in 75 ICUs across Brazil, found no significant difference in mortality or renal outcomes [62]. An updated meta-analysis of 13 high-quality RCTs, including the PLUS and BaSICS trials, found that the likely effect of balanced crystalloids ranged from a 9% relative reduction to a 1% relative increase in mortality, with a similar decrease in the risk of AKI [80].

A possible confounding factor in trials investigating the effect of different types of crystalloids on outcome could be the volume and type of fluid administered before enrollment. In fact, a post hoc secondary analysis of the BaSICS trial, which categorized enrolled patients according to fluid use in the 24 h before enrollment and according to admission type, showed a high probability that 90-day mortality was reduced in patients who had exclusively received balanced fluids [81].

Overall, since balanced solutions in sepsis may be associated with improved outcomes compared with chloride-rich solutions, and despite the lack of cost-effectiveness studies comparing balanced and chloride-rich crystalloid solutions, balanced crystalloids are recommended (weak recommendation) as the first-line fluid in patients with septic shock [6, 78].

Fluid administration response during acute circulatory failure

Prompt fluid resuscitation in the early phase of acute circulatory failure is a key recommended intervention [11, 82]. On the other hand, the hemodynamic targets and safety limits indicating when to stop this treatment in already resuscitated patients are poorly defined and difficult to titrate to the individual patient’s response [9, 82]. Nevertheless, targeted fluid management is of pivotal importance to improve the outcome of hemodynamically unstable ICU patients, since both hypovolemia and hypervolemia are harmful [4]. Acute circulatory dysfunction is often approached with fluid resuscitation, with the purpose of optimizing CO to improve DO2. However, a single, reliable, and feasible physiological or biochemical parameter to define the balance between the changes in CO and in DO2 (i.e., coupling “macro” and “micro” circulation) is still not available, making the diagnosis of acute circulatory dysfunction primarily clinical [3].

Moreover, the variables usually evaluated at the bedside are poorly associated with the actual CO value or with its changes after fluid administration. In fact, the ability of ICU physicians to estimate CO from clinical examination is rather low (correct in 42–62% of cases), often leading to incongruent evaluations (i.e., CO estimated as increased when it had actually decreased, or vice versa) [83].

The role of echocardiography in the ICU has changed over the last decades, with more focus on the characteristics of the individual patient. “Critical care echocardiography” (CCE) is performed and interpreted by intensivists at the bedside, 24/7, to help diagnose the type of shock, to guide therapy according to the type of shock and, finally, to customize therapy at the bedside by re-evaluating the strategies adopted [84, 85]. The global adoption of CCE has been hampered by technical problems (portability and availability of machines) and by a lack of formal training programs. These gaps have recently been filled by technical progress providing high-quality images at the bedside, by new guidelines for skills certification developed by the European Society of Intensive Care Medicine [86] and by the American [87] and Canadian [88] Societies of Echocardiography, and by training standards [89]. CCE should now be considered part of the routine assessment of ICU patients with hemodynamic instability, since the assessment of cardiac function plays a central role in guiding therapy.

The CCE-enhanced clinical evaluation of hemodynamically unstable patients should be coupled with clinical variables reflecting the relationship between DO2 and oxygen consumption. Indeed, although the exact value of the “critical” DO2 is difficult to estimate, the systemic effects of crossing this threshold can be recognized.
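The notion of a “critical” DO2 is often pictured with a simplified, textbook-style biphasic model: oxygen consumption (VO2) equals demand while DO2 is adequate, and becomes supply-dependent once DO2 falls so low that even maximal extraction cannot meet demand. The sketch below encodes that model; it is not taken from the text, and the demand (250 ml/min) and maximal extraction (70%) are hypothetical values.

```python
def oxygen_consumption(do2_ml_min: float, vo2_demand_ml_min: float,
                       max_extraction: float = 0.7) -> float:
    """Simplified biphasic model: VO2 equals demand while DO2 is adequate and becomes
    supply-dependent (DO2 x maximal extraction) below the critical DO2."""
    return min(vo2_demand_ml_min, do2_ml_min * max_extraction)


def critical_do2(vo2_demand_ml_min: float, max_extraction: float = 0.7) -> float:
    """DO2 below which demand can no longer be met, even at maximal extraction."""
    return vo2_demand_ml_min / max_extraction


demand = 250.0  # hypothetical O2 demand, ml/min
print(round(critical_do2(demand)))  # 357
for do2 in (800, 500, 300):         # hypothetical DO2 values, ml/min
    vo2 = oxygen_consumption(do2, demand)
    print(f"DO2 {do2} -> VO2 {vo2:.0f} ml/min, deficit {demand - vo2:.0f} ml/min")
```

In this model, the critical value depends on both demand and maximal extraction, neither of which is measured directly at the bedside; this is why the text relies on the recognizable systemic effects of crossing the threshold rather than on its exact value.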

CRT is the time required for the tip of a finger to regain its color after pressure is applied to cause blanching. Since the result depends on the applied pressure, Ait-Oufella et al. recommended applying, for 15 s, just enough pressure to remove the blood from the tip of the physician’s nail, as shown by the appearance of a thin white distal crescent under the nail [36]. CRT at 6 h after initial resuscitation was strongly predictive of 14-day mortality (area under the curve of 84% [IQR: 75–94]). Hernandez et al. reported that a CRT < 4 s, 6 h after resuscitation, was associated with resuscitation success, with normalization of lactate levels 24 h after the onset of severe sepsis/septic shock [90]. A prospective cohort study of 1320 adult patients with hypotension in the emergency department showed an association between CRT and in-hospital mortality [91].

Serum lactate is a more objective metabolic surrogate for guiding fluid resuscitation. Irrespective of its source, an increased lactate level is associated with worse outcomes [92], and lactate-guided resuscitation significantly reduced mortality compared with resuscitation without lactate monitoring [93]. Since serum lactate is not a direct measure of tissue perfusion [94], a single value is less informative than its trend over time (lactate clearance). Nevertheless, serum lactate normalization indicates shock reversal, whereas severe hyperlactatemia is associated with very poor outcomes. Recently published data showed that lactate levels > 4 mmol/l combined with hypotension were associated with a mortality rate of 44.5% in ICU patients with severe sepsis or septic shock [92]. Moreover, a large retrospective study showed that a subgroup of ICU patients with severe hyperlactatemia (lactate > 10 mmol/l) had a mortality of 78.2%, which increased to 95% if hyperlactatemia persisted for more than 24 h [95].
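Lactate clearance is usually expressed as the relative fall between two measurements. The sketch below uses the common percentage form; the sampling interval is left to the user because the text does not specify one, and the values are hypothetical. A negative result indicates that lactate has risen.

```python
SEVERE_HYPERLACTATEMIA_MMOL_L = 10.0  # threshold quoted in the text


def lactate_clearance_pct(lactate_initial: float, lactate_later: float) -> float:
    """Lactate clearance (%) = (initial - later) / initial x 100; negative means a rise."""
    if lactate_initial <= 0:
        raise ValueError("initial lactate must be positive")
    return 100.0 * (lactate_initial - lactate_later) / lactate_initial


print(lactate_clearance_pct(4.0, 2.0))   # 50.0
print(lactate_clearance_pct(4.0, 5.0))   # -25.0
print(12.0 > SEVERE_HYPERLACTATEMIA_MMOL_L)  # True: severe hyperlactatemia
```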

ScvO2 reflects the balance between oxygen delivery and consumption and is a surrogate of mixed venous oxygen saturation (SvO2; normally, ScvO2 is 2–3% lower than SvO2) [96]. It was previously considered a therapeutic target in the management of the early phases of septic shock [14, 97, 98], but this approach was challenged by the negative results of three subsequent large multicenter RCTs [99,100,101] and is no longer recommended [82]. However, since the ARISE, ProMISe and ProCESS trials probably included less severely ill populations than the study by Rivers et al. [97] (i.e., lower baseline lactate levels, ScvO2 at or above the target value at admission, and lower mortality in the control group) [99,100,101], normalization of a low ScvO2 in the early phase of septic shock can still be considered a goal of successful resuscitation. While a low ScvO2 is uncommon in current practice [102], persistently high ScvO2 values are associated with mortality in septic shock patients, probably indicating an irreversible impairment of cellular oxygen extraction [69].
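Because ScvO2 reflects the balance between delivery and consumption, it can be turned into an approximate oxygen extraction ratio (O2ER). The sketch below uses the saturation-based approximation O2ER ≈ (SaO2 − ScvO2) / SaO2, which neglects dissolved oxygen and uses ScvO2 in place of SvO2; the saturations are hypothetical. A high ScvO2 corresponds to a low extraction, consistent with the impaired extraction discussed above.

```python
def o2_extraction_ratio(sao2: float, scvo2: float) -> float:
    """Approximate O2 extraction ratio from saturations given as fractions.
    Neglects dissolved O2 and uses ScvO2 as a surrogate of SvO2."""
    return (sao2 - scvo2) / sao2


print(round(o2_extraction_ratio(0.98, 0.65), 2))  # 0.34: higher extraction
print(round(o2_extraction_ratio(0.98, 0.85), 2))  # 0.13: low extraction despite high ScvO2
```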

The central venous-to-arterial CO2 tension difference (ΔPCO2) and central venous oxygen saturation (ScvO2) provide additional clinically relevant information. ΔPCO2 is obtained by measuring PCO2 in blood sampled from a central venous catheter and in arterial blood, and it correlates strongly with the mixed venous-to-arterial CO2 tension difference [P(v–a)CO2], i.e., the gradient between PCO2 in mixed venous blood (PvCO2, measured with a pulmonary artery catheter) and PCO2 in arterial blood (PaCO2): P(v–a)CO2 = PvCO2 − PaCO2 [103]. This point is important because several studies analyzing the changes in P(v–a)CO2 during shock support measuring central venous rather than mixed venous PCO2. In health, ΔPCO2 ranges between 2 and 6 mmHg.

The pathophysiological determinants of this index are rather complex, but changes in ΔPCO2 during shock are coupled with other indices of tissue perfusion. First, according to a modified Fick equation, ΔPCO2 is directly proportional to CO2 production and inversely related to CO [104]. Several clinical studies confirmed a strong association both between CO and P(v-a)CO2 and between impaired microcirculatory perfusion and tissue PCO2 [105]. Accordingly, an elevated ΔPCO2 may be due either to a low-CO state or to insufficient microcirculatory flow to wash out the additional CO2 produced in hypoperfused tissues despite an adequate CO.
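The modified Fick relation referred to above is usually written ΔPCO2 = k × VCO2 / CO, where k links CO2 content to PCO2 and is treated as constant. The sketch below relies on that assumption (it breaks down when the CO2 content-PCO2 relationship shifts, for example with marked acidosis), so k cancels out and a measured gap can be rescaled to a new cardiac output. The function name and example values are hypothetical; the point is only to show the inverse proportionality with CO.

```python
def predicted_delta_pco2(delta_before_mmhg: float, co_before_l_min: float,
                         co_after_l_min: float, vco2_ratio: float = 1.0) -> float:
    """Rescale a measured ΔPCO2 to a new cardiac output under the modified
    Fick relation ΔPCO2 = k * VCO2 / CO, assuming k stays constant.

    k cancels out: ΔPCO2_after = ΔPCO2_before * (VCO2_after / VCO2_before)
    * (CO_before / CO_after). vco2_ratio is VCO2_after / VCO2_before.
    """
    if co_before_l_min <= 0 or co_after_l_min <= 0:
        raise ValueError("cardiac output must be positive")
    return delta_before_mmhg * vco2_ratio * co_before_l_min / co_after_l_min


# Hypothetical case: gap of 8 mmHg at 3 l/min, CO doubled, VCO2 unchanged
print(predicted_delta_pco2(8.0, 3.0, 6.0))  # 4.0
```

If the gap stays high after CO has been raised, the rescaling no longer explains it, which is consistent with the alternative explanation in the text: insufficient microcirculatory washout despite adequate CO.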

These conditions may be further discriminated by combining ΔPCO2 with ScvO2: an increased ΔPCO2 associated with a decreased ScvO2 suggests a low CO, whereas an increased ΔPCO2 associated with a normal or high ScvO2 indicates impaired tissue perfusion. Pragmatically, a normal ΔPCO2 (< 6 mmHg) in a patient in shock argues against increasing CO as the first step; the problem may instead lie in regional blood flow, which can be impaired even in the presence of a normal or high CO.
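The combinations described above can be written as a small lookup, which makes the branching explicit. In this sketch the 6 mmHg ΔPCO2 limit comes from the text, whereas the 70% ScvO2 cut-off is an assumption borrowed from the early goal-directed therapy target of Rivers et al., since no number is given here. A normal gap with a low ScvO2 is not discussed in the text and is simply folded into the normal-gap branch. The function name and return labels are hypothetical.

```python
DELTA_PCO2_HIGH_MMHG = 6.0  # upper bound of the normal range quoted in the text
SCVO2_LOW = 0.70            # ASSUMPTION: illustrative cut-off, not given in the text


def interpret_gap_and_scvo2(delta_pco2_mmhg: float, scvo2: float) -> str:
    """Map the ΔPCO2/ScvO2 combinations described in the text to a label.
    scvo2 is a fraction (0-1). Not a validated clinical rule."""
    gap_high = delta_pco2_mmhg > DELTA_PCO2_HIGH_MMHG
    if gap_high and scvo2 < SCVO2_LOW:
        return "low cardiac output likely"
    if gap_high:
        return "impaired tissue perfusion despite adequate CO"
    # Normal gap: increasing CO is not the obvious first step
    return "normal gap: increasing CO is not the first step; consider regional flow"


print(interpret_gap_and_scvo2(9.0, 0.62))  # low cardiac output likely
```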

All these aspects may be integrated into a decision-making algorithm in which the clinical signs of hypoperfusion are coupled with the critical care echocardiography (CCE) evaluation (Fig. 1). Clinical recognition of signs of systemic hypoperfusion should trigger fluid administration with the purpose of optimizing CO and improving DO2. This choice treats fluids as drugs, to be given only as long as an increase in CO is likely. During the resuscitation phase of an episode of acute circulatory failure, fluid responsiveness may be monitored with a “closed-loop” strategy, in which the signs of tissue hypoperfusion and the CCE findings are re-evaluated after each fluid bolus. More sophisticated monitoring tools are useful when the cardiovascular system reaches the plateau of its response to fluids or, earlier, when CCE shows acute or acute-on-chronic cardiac dysfunction at the baseline examination.
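The “closed-loop” strategy described above is essentially a reassess-after-every-bolus loop with two stopping conditions: hypoperfusion resolves, or the response to fluid plateaus. The sketch below encodes that loop under several assumptions that are not in the text: `assess` and `give_bolus` are hypothetical callbacks, stroke volume is assumed to be measured by CCE, a ≥ 10% stroke volume increase is assumed to define a response, and `max_boluses` is a hypothetical safety cap. It illustrates the control flow only and is not a clinical protocol.

```python
from dataclasses import dataclass
from typing import Callable

RESPONSE_THRESHOLD = 0.10  # ASSUMPTION: >= 10% SV increase counts as a response


@dataclass
class Assessment:
    hypoperfusion: bool       # clinical signs, e.g. CRT, mottling, lactate trend
    stroke_volume_ml: float   # assumed to be measured by CCE


def closed_loop(assess: Callable[[], Assessment],
                give_bolus: Callable[[], None],
                max_boluses: int = 5) -> str:
    """Give one bolus at a time, re-evaluating after each, and stop when
    hypoperfusion resolves, the SV response plateaus, or the cap is reached."""
    current = assess()
    boluses = 0
    while current.hypoperfusion:
        if boluses == max_boluses:
            return "limit"      # cap reached: reassess the overall strategy
        give_bolus()
        boluses += 1
        previous, current = current, assess()
        if current.stroke_volume_ml < previous.stroke_volume_ml * (1 + RESPONSE_THRESHOLD):
            return "plateau"    # no response: stop fluids, escalate monitoring
    return "resolved"           # hypoperfusion resolved: stop fluids


# Hypothetical sequence of bedside assessments
readings = iter([Assessment(True, 50.0), Assessment(True, 58.0), Assessment(False, 66.0)])
print(closed_loop(lambda: next(readings), lambda: None))  # resolved
```

Passing the assessment and bolus steps in as callbacks keeps the loop independent of how perfusion or stroke volume is actually measured, mirroring the multimodal re-evaluation the text describes.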

Fig. 1 Decision-making process at the bedside to guide and titrate fluid administration during an episode of acute circulatory failure. CCE critical care echocardiography, CO cardiac output, CRT capillary refill time, FC fluid challenge, ΔPCO2 venous-to-arterial CO2 tension difference, ScvO2 central venous oxygen saturation

Conclusions

The physiology of fluid administration is of major importance in the care of critically ill patients in the ICU. Given the dynamic and complex balance between cardiovascular function and the systemic response, fluids should be considered as drugs, and intensivists should take their pharmacodynamic and biochemical properties into account to optimize therapy. A multimodal approach is required, since a single physiological or biochemical measurement able to adequately assess the balance between CO and tissue perfusion pressure is still lacking. The response to fluid administration may be assessed by coupling the changes in different signs of tissue hypoperfusion, obtained with clinical and invasive hemodynamic monitoring, with the evaluation of cardiac function by critical care echocardiography.

Availability of data and materials

Not applicable.

References

Bataille B, de Selle J, Moussot PE, Marty P, Silva S, Cocquet P (2021) Machine learning methods to improve bedside fluid responsiveness prediction in severe sepsis or septic shock: an observational study. Br J Anaesth 126:826–834

Komorowski M, Celi LA, Badawi O, Gordon AC, Faisal AA (2018) The artificial intelligence clinician learns optimal treatment strategies for sepsis in intensive care. Nat Med 24:1716–1720

De Backer D, Aissaoui N, Cecconi M, Chew MS, Denault A, Hajjar L et al (2022) How can assessing hemodynamics help to assess volume status? Intensive Care Med

Cecconi M, De Backer D, Antonelli M, Beale R, Bakker J, Hofer C et al (2014) Consensus on circulatory shock and hemodynamic monitoring. Task force of the European Society of Intensive Care Medicine. Intensive Care Med 40:1795–1815

Singer M (2017) Critical illness and flat batteries. Crit Care 21:309

Evans L, Rhodes A, Alhazzani W, Antonelli M, Coopersmith CM, French C et al (2021) Surviving Sepsis Campaign: international guidelines for management of sepsis and septic shock 2021. Intensive Care Med 47:1181–1247

Lat I, Coopersmith CM, De Backer D, Research Committee of the Surviving Sepsis Campaign (2021) The Surviving Sepsis Campaign: fluid resuscitation and vasopressor therapy research priorities in adult patients. Intensive Care Med Exp 9:10

Bakker J, Kattan E, Annane D, Castro R, Cecconi M, De Backer D et al (2022) Current practice and evolving concepts in septic shock resuscitation. Intensive Care Med 48:148–163

Hjortrup PB, Haase N, Bundgaard H, Thomsen SL, Winding R, Pettila V et al (2016) Restricting volumes of resuscitation fluid in adults with septic shock after initial management: the CLASSIC randomised, parallel-group, multicentre feasibility trial. Intensive Care Med 42:1695–1705

Ince C (2017) Personalized physiological medicine. Crit Care 21:308

Myburgh JA, Mythen MG (2013) Resuscitation fluids. N Engl J Med 369:1243–1251

Cecconi M, Parsons AK, Rhodes A (2011) What is a fluid challenge? Curr Opin Crit Care 17:290–295

Magder S (2010) Fluid status and fluid responsiveness. Curr Opin Crit Care 16:289–296

Dellinger RP, Levy MM, Rhodes A, Annane D, Gerlach H, Opal SM et al (2013) Surviving Sepsis Campaign: international guidelines for management of severe sepsis and septic shock, 2012. Intensive Care Med 39:165–228

Sanfilippo F, Messina A, Cecconi M, Astuto M (2020) Ten answers to key questions for fluid management in intensive care. Med Intensiva

Latta T (1832) Relative to the treatment of cholera by the copious injection of aqueous and saline fluid into the veins. Lancet 2:274–277

Katz AM (2002) Ernest Henry Starling, his predecessors, and the “law of the heart.” Circulation 106:2986–2992

Guyton AC, Jones CE (1973) Central venous pressure: physiological significance and clinical implications. Am Heart J 86:431–437

Guyton AC, Richardson TQ, Langston JB (1964) Regulation of cardiac output and venous return. Clin Anesth 3:1–34

Feihl F, Broccard AF (2009) Interactions between respiration and systemic hemodynamics. Part I: basic concepts. Intensive Care Med 35:45–54

Feihl F, Broccard AF (2009) Interactions between respiration and systemic hemodynamics. Part II: practical implications in critical care. Intensive Care Med 35:198–205

Vincent JL, De Backer D (2013) Circulatory shock. N Engl J Med 369:1726–1734

Marik PE, Monnet X, Teboul JL (2011) Hemodynamic parameters to guide fluid therapy. Ann Intensive Care 1:1

Monnet X, Marik PE, Teboul JL (2016) Prediction of fluid responsiveness: an update. Ann Intensive Care 6:111

Boyd JH, Forbes J, Nakada TA, Walley KR, Russell JA (2011) Fluid resuscitation in septic shock: a positive fluid balance and elevated central venous pressure are associated with increased mortality. Crit Care Med 39:259–265

Marik PE, Linde-Zwirble WT, Bittner EA, Sahatjian J, Hansell D (2017) Fluid administration in severe sepsis and septic shock, patterns and outcomes: an analysis of a large national database. Intensive Care Med 43:625–632

Marik PE (2016) Fluid responsiveness and the six guiding principles of fluid resuscitation. Crit Care Med 44:1920–1922

Kattan E, Ospina-Tascon GA, Teboul JL, Castro R, Cecconi M, Ferri G et al (2020) Systematic assessment of fluid responsiveness during early septic shock resuscitation: secondary analysis of the ANDROMEDA-SHOCK trial. Crit Care 24:23

Kattan E, Castro R, Vera M, Hernandez G (2020) Optimal target in septic shock resuscitation. Ann Transl Med 8:789

Finfer S, Bellomo R, Boyce N, French J, Myburgh J, Norton R et al (2004) A comparison of albumin and saline for fluid resuscitation in the intensive care unit. N Engl J Med 350:2247–2256

Hernandez G, Bellomo R, Bakker J (2019) The ten pitfalls of lactate clearance in sepsis. Intensive Care Med 45:82–85

Hernandez G, Luengo C, Bruhn A, Kattan E, Friedman G, Ospina-Tascon GA et al (2014) When to stop septic shock resuscitation: clues from a dynamic perfusion monitoring. Ann Intensive Care 4:30

Lara B, Enberg L, Ortega M, Leon P, Kripper C, Aguilera P et al (2017) Capillary refill time during fluid resuscitation in patients with sepsis-related hyperlactatemia at the emergency department is related to mortality. PLoS ONE 12:e0188548

Lima A, Jansen TC, van Bommel J, Ince C, Bakker J (2009) The prognostic value of the subjective assessment of peripheral perfusion in critically ill patients. Crit Care Med 37:934–938

Ait-Oufella H, Lemoinne S, Boelle PY, Galbois A, Baudel JL, Lemant J et al (2011) Mottling score predicts survival in septic shock. Intensive Care Med 37:801–807

Ait-Oufella H, Bige N, Boelle PY, Pichereau C, Alves M, Bertinchamp R et al (2014) Capillary refill time exploration during septic shock. Intensive Care Med 40:958–964

Brunauer A, Kokofer A, Bataar O, Gradwohl-Matis I, Dankl D, Bakker J et al (2016) Changes in peripheral perfusion relate to visceral organ perfusion in early septic shock: a pilot study. J Crit Care 35:105–109

Hernandez G, Ospina-Tascon GA, Damiani LP, Estenssoro E, Dubin A, Hurtado J et al (2019) Effect of a resuscitation strategy targeting peripheral perfusion status vs serum lactate levels on 28-day mortality among patients with septic shock: the ANDROMEDA-SHOCK randomized clinical trial. JAMA 321:654–664

Zampieri FG, Damiani LP, Bakker J, Ospina-Tascon GA, Castro R, Cavalcanti AB et al (2020) Effects of a resuscitation strategy targeting peripheral perfusion status versus serum lactate levels among patients with septic shock. A Bayesian reanalysis of the ANDROMEDA-SHOCK trial. Am J Respir Crit Care Med 201:423–429

Cecconi M, Hofer C, Teboul JL, Pettila V, Wilkman E, Molnar Z et al (2015) Fluid challenges in intensive care: the FENICE study: a global inception cohort study. Intensive Care Med 41:1529–1537

Lakhal K, Ehrmann S, Perrotin D, Wolff M, Boulain T (2013) Fluid challenge: tracking changes in cardiac output with blood pressure monitoring (invasive or non-invasive). Intensive Care Med 39:1953–1962

Dufour N, Chemla D, Teboul JL, Monnet X, Richard C, Osman D (2011) Changes in pulse pressure following fluid loading: a comparison between aortic root (non-invasive tonometry) and femoral artery (invasive recordings). Intensive Care Med 37:942–949

Pierrakos C, Velissaris D, Scolletta S, Heenen S, De Backer D, Vincent JL (2012) Can changes in arterial pressure be used to detect changes in cardiac index during fluid challenge in patients with septic shock? Intensive Care Med 38:422–428

Garcia MI, Romero MG, Cano AG, Aya HD, Rhodes A, Grounds RM et al (2014) Dynamic arterial elastance as a predictor of arterial pressure response to fluid administration: a validation study. Crit Care 18:626

Cecconi M, Monge Garcia MI, Gracia Romero M, Mellinghoff J, Caliandro F, Grounds RM et al (2015) The use of pulse pressure variation and stroke volume variation in spontaneously breathing patients to assess dynamic arterial elastance and to predict arterial pressure response to fluid administration. Anesth Analg 120:76–84

van der Ven WH, Schuurmans J, Schenk J, Roerhorst S, Cherpanath TGV, Lagrand WK et al (2022) Monitoring, management, and outcome of hypotension in intensive care unit patients, an international survey of the European Society of Intensive Care Medicine. J Crit Care 67:118–125

Guarracino F, Bertini P, Pinsky MR (2019) Cardiovascular determinants of resuscitation from sepsis and septic shock. Crit Care 23:118

Aya HD, Ster IC, Fletcher N, Grounds RM, Rhodes A, Cecconi M (2015) Pharmacodynamic analysis of a fluid challenge. Crit Care Med

Messina A, Longhini F, Coppo C, Pagni A, Lungu R, Ronco C et al (2017) Use of the fluid challenge in critically ill adult patients: a systematic review. Anesth Analg 125:1532–1543

Messina A, Pelaia C, Bruni A, Garofalo E, Bonicolini E, Longhini F et al (2018) Fluid challenge during anesthesia: a systematic review and meta-analysis. Anesth Analg

Vincent JL (2011) “Let’s give some fluid and see what happens” versus the “mini-fluid challenge”. Anesthesiology 115:455–456

Vincent JL, Weil MH (2006) Fluid challenge revisited. Crit Care Med 34:1333–1337

Pinsky MR (2015) Functional hemodynamic monitoring. Crit Care Clin 31:89–111

Pinsky MR, Payen D (2005) Functional hemodynamic monitoring. Crit Care 9:566–572

Hadian M, Pinsky MR (2007) Functional hemodynamic monitoring. Curr Opin Crit Care 13:318–323

Messina A, Dell’Anna A, Baggiani M, Torrini F, Maresca GM, Bennett V et al (2019) Functional hemodynamic tests: a systematic review and a metanalysis on the reliability of the end-expiratory occlusion test and of the mini-fluid challenge in predicting fluid responsiveness. Crit Care 23:264

Messina A, Palandri C, De Rosa S, Danzi V, Bonaldi E, Montagnini C et al (2021) Pharmacodynamic analysis of a fluid challenge with 4 ml kg−1 over 10 or 20 min: a multicenter cross-over randomized clinical trial. J Clin Monit Comput

Aya HD, Rhodes A, Chis Ster I, Fletcher N, Grounds RM, Cecconi M (2017) Hemodynamic effect of different doses of fluids for a fluid challenge: a quasi-randomized controlled study. Crit Care Med 45:e161–e168

Toscani L, Aya HD, Antonakaki D, Bastoni D, Watson X, Arulkumaran N et al (2017) What is the impact of the fluid challenge technique on diagnosis of fluid responsiveness? A systematic review and meta-analysis. Crit Care 21:207

Ayala A, M FL, Machado A (1986) Malic enzyme levels are increased by the activation of NADPH-consuming pathways: detoxification processes. FEBS Lett 202:102–106

Messina A, Sotgiu G, Saderi L, Cammarota G, Capuano L, Colombo D et al (2021) Does the definition of fluid responsiveness affect passive leg raising reliability? A methodological ancillary analysis from a multicentric study. Minerva Anestesiol

Zampieri FG, Machado FR, Biondi RS, Freitas FGR, Veiga VC, Figueiredo RC et al (2021) Effect of slower vs faster intravenous fluid bolus rates on mortality in critically ill patients: the BaSICS randomized clinical trial. JAMA 326:830–838

Brunkhorst FM, Engel C, Bloos F, Meier-Hellmann A, Ragaller M, Weiler N et al (2008) Intensive insulin therapy and pentastarch resuscitation in severe sepsis. N Engl J Med 358:125–139

Hahn RG, Dull RO (2021) Interstitial washdown and vascular albumin refill during fluid infusion: novel kinetic analysis from three clinical trials. Intensive Care Med Exp 9:44

Woodcock TE, Woodcock TM (2012) Revised Starling equation and the glycocalyx model of transvascular fluid exchange: an improved paradigm for prescribing intravenous fluid therapy. Br J Anaesth 108:384–394

Annane D, Siami S, Jaber S, Martin C, Elatrous S, Declere AD et al (2013) Effects of fluid resuscitation with colloids vs crystalloids on mortality in critically ill patients presenting with hypovolemic shock: the CRISTAL randomized trial. JAMA 310:1809–1817

Myburgh JA, Finfer S, Bellomo R, Billot L, Cass A, Gattas D et al (2012) Hydroxyethyl starch or saline for fluid resuscitation in intensive care. N Engl J Med 367:1901–1911

Perner A, Haase N, Guttormsen AB, Tenhunen J, Klemenzson G, Aneman A et al (2012) Hydroxyethyl starch 130/0.42 versus Ringer’s acetate in severe sepsis. N Engl J Med 367:124–134

Textoris J, Fouche L, Wiramus S, Antonini F, Tho S, Martin C et al (2011) High central venous oxygen saturation in the latter stages of septic shock is associated with increased mortality. Crit Care 15:R176

Vincent JL (2009) Relevance of albumin in modern critical care medicine. Best Pract Res Clin Anaesthesiol 23:183–191

Caironi P, Tognoni G, Masson S, Fumagalli R, Pesenti A, Romero M et al (2014) Albumin replacement in patients with severe sepsis or septic shock. N Engl J Med 370:1412–1421

Salerno F, Navickis RJ, Wilkes MM (2013) Albumin infusion improves outcomes of patients with spontaneous bacterial peritonitis: a meta-analysis of randomized trials. Clin Gastroenterol Hepatol 11:123–130.e1

Joannidis M, Wiedermann CJ, Ostermann M (2022) Ten myths about albumin. Intensive Care Med 48:602–605

Varrier M, Ostermann M (2015) Fluid composition and clinical effects. Crit Care Clin 31:823–837

Yunos NM, Bellomo R, Glassford N, Sutcliffe H, Lam Q, Bailey M (2015) Chloride-liberal vs. chloride-restrictive intravenous fluid administration and acute kidney injury: an extended analysis. Intensive Care Med 41:257–264

Chowdhury AH, Cox EF, Francis ST, Lobo DN (2012) A randomized, controlled, double-blind crossover study on the effects of 2-l infusions of 0.9% saline and Plasma-Lyte® 148 on renal blood flow velocity and renal cortical tissue perfusion in healthy volunteers. Ann Surg 256:18–24

Young P, Bailey M, Beasley R, Henderson S, Mackle D, McArthur C et al (2015) Effect of a buffered crystalloid solution vs saline on acute kidney injury among patients in the intensive care unit: the SPLIT randomized clinical trial. JAMA 314:1701–1710

Semler MW, Self WH, Wanderer JP, Ehrenfeld JM, Wang L, Byrne DW et al (2018) Balanced crystalloids versus saline in critically ill adults. N Engl J Med 378:829–839

Finfer S, Micallef S, Hammond N, Navarra L, Bellomo R, Billot L et al (2022) Balanced multielectrolyte solution versus saline in critically ill adults. N Engl J Med 386:815–826

Hammond DA, Lam SW, Rech MA, Smith MN, Westrick J, Trivedi AP et al (2020) Balanced crystalloids versus saline in critically ill adults: a systematic review and meta-analysis. Ann Pharmacother 54:5–13

Zampieri FG, Machado FR, Biondi RS, Freitas FGR, Veiga VC, Figueiredo RC et al (2022) Association between type of fluid received prior to enrollment, type of admission, and effect of balanced crystalloid in critically ill adults: a secondary exploratory analysis of the BaSICS clinical trial. Am J Respir Crit Care Med 205:1419–1428

Rhodes A, Evans LE, Alhazzani W, Levy MM, Antonelli M, Ferrer R et al (2017) Surviving Sepsis Campaign: international guidelines for management of sepsis and septic shock: 2016. Intensive Care Med 43:304–377

Hiemstra B, Eck RJ, Keus F, van der Horst ICC (2017) Clinical examination for diagnosing circulatory shock. Curr Opin Crit Care 23:293–301

Vignon P, Begot E, Mari A, Silva S, Chimot L, Delour P et al (2018) Hemodynamic assessment of patients with septic shock using transpulmonary thermodilution and critical care echocardiography: a comparative study. Chest 153:55–64

Messina A, Greco M, Cecconi M (2019) What should I use next if clinical evaluation and echocardiographic haemodynamic assessment is not enough? Curr Opin Crit Care 25:259–265

Expert Round Table on Echocardiography in ICU (2014) International consensus statement on training standards for advanced critical care echocardiography. Intensive Care Med 40:654–666

Spencer KT, Kimura BJ, Korcarz CE, Pellikka PA, Rahko PS, Siegel RJ (2013) Focused cardiac ultrasound: recommendations from the American Society of Echocardiography. J Am Soc Echocardiogr 26:567–581

Burwash IG, Basmadjian A, Bewick D, Choy JB, Cujec B, Jassal DS et al (2011) 2010 Canadian Cardiovascular Society/Canadian Society of Echocardiography guidelines for training and maintenance of competency in adult echocardiography. Can J Cardiol 27:862–864

Robba C, Wong A, Poole D, Al Tayar A, Arntfield RT, Chew MS et al (2021) Basic ultrasound head-to-toe skills for intensivists in the general and neuro intensive care unit population: consensus and expert recommendations of the European Society of Intensive Care Medicine. Intensive Care Med 47:1347–1367

Hernandez G, Pedreros C, Veas E, Bruhn A, Romero C, Rovegno M et al (2012) Evolution of peripheral vs metabolic perfusion parameters during septic shock resuscitation. A clinical-physiologic study. J Crit Care 27:283–288

Londono J, Nino C, Diaz J, Morales C, Leon J, Bernal E et al (2018) Association of clinical hypoperfusion variables with lactate clearance and hospital mortality. Shock 50:286–292

Casserly B, Phillips GS, Schorr C, Dellinger RP, Townsend SR, Osborn TM et al (2015) Lactate measurements in sepsis-induced tissue hypoperfusion: results from the surviving sepsis campaign database. Crit Care Med 43:567–573

Jansen TC, van Bommel J, Schoonderbeek FJ, Sleeswijk Visser SJ, van der Klooster JM, Lima AP et al (2010) Early lactate-guided therapy in intensive care unit patients: a multicenter, open-label, randomized controlled trial. Am J Respir Crit Care Med 182:752–761

Levy B (2006) Lactate and shock state: the metabolic view. Curr Opin Crit Care 12:315–321

Haas SA, Lange T, Saugel B, Petzoldt M, Fuhrmann V, Metschke M et al (2016) Severe hyperlactatemia, lactate clearance and mortality in unselected critically ill patients. Intensive Care Med 42:202–210

Bloos F, Reinhart K (2005) Venous oximetry. Intensive Care Med 31:911–913

Rivers E, Nguyen B, Havstad S, Ressler J, Muzzin A, Knoblich B et al (2001) Early goal-directed therapy in the treatment of severe sepsis and septic shock. N Engl J Med 345:1368–1377

Dellinger RP, Levy MM, Carlet JM, Bion J, Parker MM, Jaeschke R et al (2008) Surviving Sepsis Campaign: international guidelines for management of severe sepsis and septic shock: 2008. Intensive Care Med 34:17–60

ARISE Investigators, ANZICS Clinical Trials Group, Peake SL, Delaney A, Bailey M, Bellomo R et al (2014) Goal-directed resuscitation for patients with early septic shock. N Engl J Med 371:1496–1506

ProCESS Investigators, Yealy DM, Kellum JA, Huang DT, Barnato AE, Weissfeld LA et al (2014) A randomized trial of protocol-based care for early septic shock. N Engl J Med 370:1683–1693

Mouncey PR, Osborn TM, Power GS, Harrison DA, Sadique MZ, Grieve RD et al (2015) Trial of early, goal-directed resuscitation for septic shock. N Engl J Med 372:1301–1311

van Beest PA, Hofstra JJ, Schultz MJ, Boerma EC, Spronk PE, Kuiper MA (2008) The incidence of low venous oxygen saturation on admission to the intensive care unit: a multi-center observational study in the Netherlands. Crit Care 12:R33

Cuschieri J, Rivers EP, Donnino MW, Katilius M, Jacobsen G, Nguyen HB et al (2005) Central venous-arterial carbon dioxide difference as an indicator of cardiac index. Intensive Care Med 31:818–822

Lamia B, Monnet X, Teboul JL (2006) Meaning of arterio-venous PCO2 difference in circulatory shock. Minerva Anestesiol 72:597–604

Mallat J, Lemyze M, Tronchon L, Vallet B, Thevenin D (2016) Use of venous-to-arterial carbon dioxide tension difference to guide resuscitation therapy in septic shock. World J Crit Care Med 5:47–56

Acknowledgements

None to declare.

Funding

None to declare.

Author information

Contributions

AM and MC drafted the manuscript; JB, MC, DDB, OH, GH, SM, XM, MO, MP and JLT substantially contributed to manuscript preparation. All the authors read and approved the final version of the paper.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests for this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Messina, A., Bakker, J., Chew, M. et al. Pathophysiology of fluid administration in critically ill patients. ICMx 10, 46 (2022). https://doi.org/10.1186/s40635-022-00473-4

DOI: https://doi.org/10.1186/s40635-022-00473-4