Abstract

In this paper, we use operational matrices of Chebyshev polynomials to solve fractional partial differential equations (FPDEs). We approximate the second partial derivative of the solution of linear FPDEs by operational matrices of shifted Chebyshev polynomials. We apply the operational matrix of integration and fractional integration to obtain approximations of (fractional) partial derivatives of the solution and the approximation of the solution. Then we substitute the operational matrix approximations in the FPDEs to obtain a system of linear algebraic equations. Finally, solving this system, we obtain the approximate solution. Numerical experiments show an exponential rate of convergence and hence the efficiency and effectiveness of the method.

1 Introduction

We consider a fractional partial differential equation (FPDE) of the form

on \((x,y)\in [0,1]\times [0,1]\), with initial conditions:

where \(\rho _{i}\), \(i=1, \ldots, 5\), are real constants, f, g and h are known continuous functions, u is the unknown function, and \(0\leq \alpha,\beta \leq 1\). Here, \(\frac{ \partial ^{\alpha }u(x,y)}{\partial x^{\alpha }}\) and \(\frac{ \partial ^{\beta }u(x,y)}{\partial y^{\beta }}\) are fractional derivatives in the Caputo sense, defined by

and

FPDE (1) arises in many applications, two of which we study in this paper. First, we generalize the advection equation to account for the dissipation of energy due to friction and other factors that are not easily included in the classical model. Second, we solve the Lighthill–Whitham–Richards equation [1], which arises in vehicular traffic flow on a finite-length highway. This equation is a particular case of Eq. (1), obtained by setting \(\rho _{3}=\rho _{4}=\rho _{5}=0\), \(\rho _{1}=1\) and \(f=0\).

The memory terms in FPDEs make them fundamentally different from integer-order partial differential equations (PDEs), and solving FPDEs numerically or analytically is more challenging than solving PDEs. However, the memory term in integral form has its advantages: it is useful for modeling physical or chemical phenomena in which the current state depends on the entire past history. For example, the fractional model of the Ambartsumian equation was generalized for describing the surface brightness of the Milky Way [2]. Other recent advances in applications of this type can be found in [3,4,5,6,7,8,9,10,11]. Therefore, it is important to find efficient methods for solving FPDEs [12,13,14,15].

Recently, new methods for solving FPDEs have been developed in the literature. These include the variational iteration method [16], the Laplace transform method [17], the wavelet operational method [18,19,20,21], the Haar wavelet method [22], the Adomian decomposition method [23], the homotopy analysis method [24], the Legendre base method [25], Bernstein polynomials [26] and conversion to a system of fractional differential equations [27]. Finite-difference methods are the most widely studied for the numerical solution of partial differential equations [28, 29]. Their advantage over other methods is that they can be applied to nonlinear equations. However, for linear equations, spectral methods are highly recommended because of their simplicity and efficiency [30]. The finite-difference methods introduced in [28, 29] can be generalized to nonlinear fractional differential equations, but they are not essential for linear equations.

Spectral methods using Chebyshev polynomials are well known for their rapid convergence when applied to ordinary and partial differential equations [30,31,32,33,34,35,36,37,38,39,40]. An important advantage of these methods over finite-difference methods is that computing the coefficients of the approximation completely determines the solution at any point of the desired interval. Therefore, in this paper, we introduce an operational matrix spectral method using Chebyshev polynomials for solving FPDEs.

Orthogonal polynomials play an important role in spectral methods for fractional differential equations. A novel spectral approximation for two-dimensional fractional sub-diffusion problems has been studied in [41]. New recursive approximations for variable-order fractional operators, with applications, can be found in [42]. Highly accurate numerical schemes for multi-dimensional space variable-order fractional Schrödinger equations are given in [43]. The recovery of high-order accuracy in Jacobi spectral collocation methods for fractional terminal value problems with non-smooth solutions is treated in [44]. Operational matrices have been adapted for solving several kinds of fractional differential equations (FDEs). The use of numerical techniques in conjunction with operational matrices of some orthogonal polynomials has produced highly accurate solutions of such equations [45,46,47,48,49].

An advantage of the Chebyshev polynomials over other orthogonal polynomials, such as Legendre polynomials, is their discrete orthogonality property. In addition, the zeros of the Chebyshev polynomials are known analytically. These properties lead to the Clenshaw–Curtis formula, which makes integration easy. We use this formula to obtain the operational matrix of fractional integration.

The main aim of this paper is to obtain a numerical solution of general FPDEs (1) by Chebyshev polynomials. To this end, we first approximate second partial derivatives of the unknown solution of the FPDEs (1) by Chebyshev polynomials. Then we obtain the operational matrices corresponding to fractional partial derivatives, partial derivatives, and an approximate solution. Substituting these operational formulas into FPDEs (1), we obtain a system of linear algebraic equations. Finally, by solving this system of linear algebraic equations we can find the desired approximate solution. We show also that this procedure is equivalent to applying the Chebyshev operational matrix method to a multivariable Volterra integral equation. Based on this equivalency, we obtain an error analysis.

The major difference between the method introduced in this paper and other operational matrix methods is that we avoid differentiation. Instead, we approximate the second partial derivative of the solution by Chebyshev polynomials and then use integral operators to obtain the approximate solution.

The structure of this paper is as follows. In Sect. 2, we review important formulas and definitions from fractional calculus and the theory of Chebyshev polynomials. In Sect. 3, we introduce the approximation of multivariable functions in terms of the shifted Chebyshev polynomials. In Sect. 4, we obtain the operational matrices for approximating the integral and fractional integral operators. In Sect. 5, we propose a spectral method based on operational matrices for solving FPDEs of the form (1). In Sect. 6, we provide the related error analysis. In Sect. 7, some numerical examples are provided to show the efficiency of the introduced method, and in Sect. 8, applications of FPDE (1) to the advection equation and the Lighthill–Whitham–Richards equation are studied.

2 Preliminaries and notations

In this section we review some definitions and theorems on Chebyshev polynomials and fractional calculus.

2.1 Fractional calculus

In order to define the Caputo-fractional derivative, we first define the Riemann–Liouville fractional integral.

Definition 2.1

([3])

A real function \(f(t)\) on \((0,\infty )\) is said to be in the space \(\mathcal{C}_{\mu }\), \(\mu \in \mathbb{R}\), if there exists a real number \(p>\mu \) such that \(f(t)=t^{p}f_{1}(t)\), where \(f_{1}\in \mathcal{C}([0,\infty ))\), and it is said to be in the space \(\mathcal{C}_{\mu }^{n}\) if \(f^{(n)}\in \mathcal{C}_{\mu }\), \(n\in \mathbb{N}\).

Definition 2.2

([3])

The Riemann–Liouville fractional integral of order \(\alpha \geq 0\) of a function \(f\in \mathcal{C}_{\mu }\), \(\mu \geq -1\), is defined as

Therefore, the fractional integral of \((t-t_{0})^{\beta }\) is

Definition 2.3

([3])

Let \(f\in \mathcal{C}_{-1}^{m}\), \(m\in \mathbb{N}\). Then the Caputo-fractional derivative of f is defined as

Thus, for \(0<\alpha <1\), we have

Some important properties of the Caputo-fractional derivative are [3]

Here, \(\lfloor \alpha \rfloor \) and \(\lceil \alpha \rceil \) are the floor and the ceiling of α, respectively. Furthermore, it is straightforward to show that, for every \(m\in \mathbb{R}^{+}\) and \(n\in \mathbb{N}\), we have \({}_{t_{0}}D_{t}^{m+n}f(t)= {_{t_{0}}D_{t} ^{m}}({_{t_{0}}D_{t}^{n}}f(t))\) [3]. Moreover, for \(0\leq \alpha \leq 1\), we have

and

2.2 Chebyshev polynomials

Definition 2.4

Let \(x=\cos (\theta )\). Then the Chebyshev polynomial \(T_{n}(x)\), \(n\in \mathbb{N}\cup \{0\}\), over the interval \([-1,1]\), is defined by the relation

The Chebyshev polynomials are orthogonal with respect to the weight function \(w(x)=\frac{1}{\sqrt{1-x^{2}}}\) and the corresponding inner product is

The well-known recursive formula

with \(T_{0}(x)=1\) and \(T_{1}(x)=x\) is important for computing these polynomials, whereas we may use

to compute Chebyshev polynomials in analysis. Since problem (1) is posed on \([0,1]\), we use the shifted Chebyshev polynomials \(T^{*}_{n}(x)\) defined by

with corresponding weight function \(w^{*}(x)=w(2x-1)\). Using the identity \(T_{n}^{*}(x)=T_{2n}(\sqrt{x})\) (see [40], Sect. 1.3), we can compute the shifted Chebyshev polynomials by

The discrete orthogonality of Chebyshev polynomials leads to the Clenshaw–Curtis formula [40]:

where \(x_{k}\) for \(k=1,\ldots, N+1\) are zeros of \(T_{N+1}(x)\). Also, the norm of \(T^{*}_{i}(x)\),

will be of importance below.
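The relations of this subsection can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the helper names `shifted_chebyshev` and `shifted_zeros` are illustrative and do not come from the paper. It builds \(T^{*}_{n}\) from the three-term recurrence written in the variable \(2x-1\), compares it with \(T_{2n}(\sqrt{x})\), and verifies the discrete orthogonality at the zeros of \(T^{*}_{N+1}\).

```python
import numpy as np

def shifted_chebyshev(n_max, x):
    """Return V with V[n] = T*_n(x) = T_n(2x - 1) for n = 0..n_max."""
    x = np.asarray(x, dtype=float)
    t = 2.0 * x - 1.0
    V = np.empty((n_max + 1,) + x.shape)
    V[0] = 1.0
    if n_max >= 1:
        V[1] = t
    for n in range(1, n_max):
        # three-term recurrence T_{n+1}(t) = 2 t T_n(t) - T_{n-1}(t)
        V[n + 1] = 2.0 * t * V[n] - V[n - 1]
    return V

def shifted_zeros(N):
    """Zeros of T*_{N+1}, i.e. zeros of T_{N+1} mapped from [-1, 1] to [0, 1]."""
    k = np.arange(1, N + 2)
    return 0.5 * (1.0 + np.cos((2 * k - 1) * np.pi / (2 * (N + 1))))

N = 5
x = np.linspace(0.0, 1.0, 11)
V = shifted_chebyshev(N, x)

# Identity T*_n(x) = T_{2n}(sqrt(x)), with T_m(s) = cos(m * arccos(s))
n = np.arange(N + 1)[:, None]
identity_gap = np.max(np.abs(V - np.cos(2 * n * np.arccos(np.sqrt(x)))))

# Discrete orthogonality at the N + 1 zeros: the Gram matrix is diagonal
Vz = shifted_chebyshev(N, shifted_zeros(N))
gram = Vz @ Vz.T

print(identity_gap)
print(np.round(gram, 12))
```

The Gram matrix is expected to be diagonal with entry \(N+1\) for \(n=0\) and \((N+1)/2\) otherwise, which is the property that the quadrature-based coefficient formulas rely on.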

3 Function approximation

A function f defined over the interval \([0,1]\), may be expanded as

where C and Ψ are the matrices of size \((N+1)\times 1\),

and

The following theorem guarantees uniform convergence of the Chebyshev expansion of f under a mild continuity condition. For an infinitely differentiable function f, the expansion is known to converge faster than any fixed power of \(1/n\) [40].

Theorem 3.1

([40] Theorem 5.7)

Let \(g\in C[0,T]\) and let g satisfy the Dini–Lipschitz condition, i.e.,

where ω is the modulus of continuity of g. Then \(\Vert g-p_{n}g \Vert _{\infty }\rightarrow 0 \) as \(n\rightarrow \infty \).
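To illustrate the convergence stated in Theorem 3.1, the sketch below computes approximate shifted Chebyshev coefficients of a smooth function on \([0,1]\) and measures the maximum error of the truncated expansion. It is only an illustration under stated assumptions: NumPy is assumed, the coefficients are obtained from the discrete orthogonality relation at `M` nodes rather than from the exact integrals, and the names `cheb_coeffs` and `sup_error` are hypothetical.

```python
import numpy as np
from numpy.polynomial.chebyshev import chebval

def cheb_coeffs(g, N, M=64):
    """Approximate the first N + 1 shifted Chebyshev coefficients of g on [0, 1]
    using M nodes (zeros of T_M mapped to [0, 1]) and discrete orthogonality."""
    k = np.arange(1, M + 1)
    theta = (2 * k - 1) * np.pi / (2 * M)
    x = 0.5 * (1.0 + np.cos(theta))           # nodes in [0, 1]
    j = np.arange(N + 1)[:, None]
    # T*_j(x_k) = cos(j * theta_k)
    c = (2.0 / M) * (np.cos(j * theta) @ g(x))
    c[0] *= 0.5                               # the j = 0 coefficient carries weight 1/M
    return c

def sup_error(g, N, samples=201):
    """Maximum error of the degree-N truncated expansion on a uniform grid."""
    xs = np.linspace(0.0, 1.0, samples)
    approx = chebval(2.0 * xs - 1.0, cheb_coeffs(g, N))
    return float(np.max(np.abs(g(xs) - approx)))

errors = [sup_error(np.exp, N) for N in (2, 4, 8)]
print(errors)
```

For the entire function \(e^{x}\) the printed errors should fall off very quickly as N grows, which is the behavior the numerical examples in Sect. 7 rely on.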

A similar error estimate exists for the Clenshaw–Curtis quadrature.

Theorem 3.2

Let the hypotheses of Theorem 3.1 be satisfied. Then

where \(I(f)=\int _{-1}^{1}w(x)f(x)\,dx\) and \(I_{N}(f)=\frac{\pi }{N+1} \sum_{k=1}^{N+1}f(x_{k})\).

Proof

This follows from Theorem 1 of [50, 51]. □
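The quadrature rule \(I_{N}(f)\) of Theorem 3.2 is easy to test. The sketch below, assuming NumPy, evaluates \(\frac{\pi }{N+1}\sum_{k}f(x_{k})\) at the zeros of \(T_{N+1}\) (a rule commonly known as Gauss–Chebyshev quadrature) and compares it with two weighted integrals whose values are known in closed form. The function name `gauss_chebyshev` is illustrative.

```python
import numpy as np

def gauss_chebyshev(f, N):
    """I_N(f) = pi / (N + 1) * sum of f at the N + 1 zeros of T_{N+1}."""
    k = np.arange(1, N + 2)
    nodes = np.cos((2 * k - 1) * np.pi / (2 * (N + 1)))
    return np.pi / (N + 1) * np.sum(f(nodes))

# Integral of x^4 / sqrt(1 - x^2) over [-1, 1] equals 3*pi/8;
# three nodes integrate polynomials up to degree 5 exactly.
approx_poly = gauss_chebyshev(lambda x: x**4, 2)
exact_poly = 3.0 * np.pi / 8.0

# Integral of exp(x) / sqrt(1 - x^2) over [-1, 1] equals pi * I_0(1),
# where I_0 is the modified Bessel function of the first kind.
approx_exp = gauss_chebyshev(np.exp, 10)
exact_exp = np.pi * np.i0(1.0)

print(abs(approx_poly - exact_poly), abs(approx_exp - exact_exp))
```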

Let \(u(x,y)\) be a bivariate function defined on \([0,1]\times [0,1]\). Then it can similarly be expanded using Chebyshev polynomials as follows:

where \(U=(u_{i,j})\) is a matrix of size \((N+1)\times (N+1)\) with the elements

4 Operational matrices

Theorem 4.1

Let \(\varPsi (x)\) be the vector of shifted Chebyshev polynomials defined by (14). Then

where the operational matrix P can be defined by

Proof

An easy computation shows that

and

which can be used to obtain the first and the second rows of the matrix P, respectively. For \(n>1\), we can use

to obtain

which shows the structure of the other rows of the matrix P. □
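As a numerical cross-check of Theorem 4.1, the matrix P can also be assembled by integrating each basis polynomial and truncating the result to degree N. The following sketch assumes NumPy and uses its `Chebyshev` class with domain \([0,1]\); it is not the closed-form construction of the theorem, and `integration_matrix` is a hypothetical helper name.

```python
import numpy as np
from numpy.polynomial import Chebyshev
from numpy.polynomial.chebyshev import chebvander

def integration_matrix(N):
    """Row n holds the shifted Chebyshev coefficients of the integral of T*_n
    from 0 to x, truncated to degree N (the T*_{N+1} term of row N is dropped)."""
    P = np.zeros((N + 1, N + 1))
    for n in range(N + 1):
        Tn = Chebyshev.basis(n, domain=[0, 1])
        coef = Tn.integ(lbnd=0).coef          # n + 2 coefficients
        m = min(len(coef), N + 1)
        P[n, :m] = coef[:m]
    return P

N = 4
P = integration_matrix(N)

# Check at x = 0.3: integral of T*_2(t) = 8t^2 - 8t + 1 is 8x^3/3 - 4x^2 + x
x = 0.3
psi = chebvander(np.array([2.0 * x - 1.0]), N)[0]   # [T*_0(x), ..., T*_N(x)]
integral_T2 = (P @ psi)[2]
print(P[:2])
print(integral_T2, 8 * x**3 / 3 - 4 * x**2 + x)
```

The first two rows reproduce the hand computations \(\int_{0}^{x}T^{*}_{0}=\frac{1}{2}(T^{*}_{0}+T^{*}_{1})\) and \(\int_{0}^{x}T^{*}_{1}=\frac{1}{8}(T^{*}_{2}-T^{*}_{0})\).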

Theorem 4.2

Let \(0<\alpha <1\). Then there exists \(r>1\) such that

\(D_{\alpha }=(d_{n,r})\) is the operational matrix and its elements can be approximated by

for \(r=1, \ldots, N\), and

for \(n=1, \ldots, N\) and \(r=0, \ldots, N\).

Proof

From (11), we get

for \(n>0\), and

for \(n=0\). Applying (13) to \(f(x)=x^{n-k+1-\alpha }\), we obtain

Now, by substituting the \(x^{n-k+1-\alpha }\) from (22) into (20) and (21) we obtain the desired result. □
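The idea behind Theorem 4.2 can be sketched in code: expand \(T^{*}_{n}\) in monomials, apply the power rule for the Riemann–Liouville integral, \(J^{\alpha }x^{k}=\frac{\varGamma (k+1)}{\varGamma (k+1+\alpha )}x^{k+\alpha }\), and project the result back onto the basis by quadrature at Chebyshev zeros. The code below is a hedged illustration, not the paper's formula for \(d_{n,r}\): it assumes NumPy, uses `M` quadrature nodes (the paper works with \(N+1\)), treats a generic integration order `alpha`, and all function names are hypothetical.

```python
import math
import numpy as np

def shifted_monomial_coeffs(N):
    """A[n, k] = coefficient of x**k in T*_n(x), from the recurrence
    T*_{n+1}(x) = 2(2x - 1) T*_n(x) - T*_{n-1}(x)."""
    A = np.zeros((N + 1, N + 2))
    A[0, 0] = 1.0
    if N >= 1:
        A[1, 0], A[1, 1] = -1.0, 2.0
    for n in range(1, N):
        A[n + 1, 1:] += 4.0 * A[n, :-1]   # 4x * T*_n
        A[n + 1] -= 2.0 * A[n]            # -2 * T*_n
        A[n + 1] -= A[n - 1]              # -T*_{n-1}
    return A[:, : N + 1]

def frac_integral_basis(A, alpha, x):
    """Values of J^alpha T*_n at the points x, using the power rule termwise."""
    x = np.asarray(x, dtype=float)
    k = np.arange(A.shape[1])
    scale = np.array([math.gamma(i + 1) / math.gamma(i + 1 + alpha) for i in k])
    powers = x[None, :] ** (k[:, None] + alpha)
    return (A * scale) @ powers

def frac_integration_matrix(N, alpha, M=64):
    """D[n, r] approximates the coefficient of T*_r in J^alpha T*_n."""
    k = np.arange(1, M + 1)
    theta = (2 * k - 1) * np.pi / (2 * M)
    x = 0.5 * (1.0 + np.cos(theta))
    F = frac_integral_basis(shifted_monomial_coeffs(N), alpha, x)
    T = np.cos(np.arange(N + 1)[:, None] * theta)   # T*_r at the nodes
    D = (2.0 / M) * (F @ T.T)
    D[:, 0] *= 0.5
    return D

D1 = frac_integration_matrix(3, 1.0)                # order 1: ordinary integration
half_of_one = frac_integral_basis(shifted_monomial_coeffs(3), 0.5, [1.0])[0, 0]
print(D1[:2])
print(half_of_one, 2.0 / math.sqrt(math.pi))
```

For `alpha = 1` the matrix reduces to the operational matrix of ordinary integration, which gives a simple consistency check. For non-integer `alpha` the projected functions \(x^{k+\alpha }\) are not smooth at \(x=0\), so the quadrature-based entries are approximations only.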

Remark 4.3

For \(f\in C[-1,1]\), the maximum error of the Clenshaw–Curtis formula is less than \(4 \Vert f-p_{N}f \Vert _{\infty }\) [50]. Hence, the Clenshaw–Curtis formula applied to \(x^{n-k+1-\alpha }\) in the proof of Theorem 4.2 converges, and the approximation error vanishes as \(N\rightarrow \infty \).

5 Implementation

If \(\varPsi (y)^{T}U\varPsi (x)\) were used directly as an approximation to \(u(x,y)\), we would need to compute partial derivatives of this approximation, and such differentiation reduces the order of convergence. Therefore, under suitable regularity conditions, we instead use an approximation of the form

where U is an unknown matrix. To this end, we suppose the regularity condition

Remark 5.1

Schwarz’s theorem (or Clairaut’s theorem) is a well-known result that asserts that \(u\in C^{2}\) is a sufficient condition for (24) to hold.

Now we can express the remaining terms through \(u_{xy}\) by using the appropriate operational matrices. From (23) and (24), using the initial conditions, we have

and

Consequently, we have

and

Substituting from (25)–(29) into (1), we obtain

where

is approximated by \(k(x,y)\simeq \varPsi ^{T}(x)K\varPsi (y)\), using (16). Taking the orthogonality properties of \(\varPsi ^{T}(x)\) and \(\varPsi (y)\) into account, we can drop \(\varPsi (x)\) and \(\varPsi (y)\) to obtain the following system of algebraic equations:

Finally, the approximate solution can be computed using (27):

where \(u_{N}\) stands for approximate solution to distinguish it from the exact solution u.
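The last step, recovering \(u_{N}\) from the coefficient matrix of \(u_{xy}\), can be sketched as follows. This is an illustration under explicit assumptions rather than the paper's Eq. (27): it assumes the expansion \(u_{xy}(x,y)\simeq \varPsi (x)^{T}U\varPsi (y)\) in this orientation, initial data \(u(x,0)=h(x)\), \(u(0,y)=g(y)\) with \(h_{0}=h(0)=g(0)\), and an integration matrix P acting as \(\int_{0}^{x}\varPsi (t)\,dt\simeq P\varPsi (x)\). NumPy is assumed and all names are hypothetical.

```python
import numpy as np
from numpy.polynomial import Chebyshev
from numpy.polynomial.chebyshev import chebvander

def integration_matrix(N):
    """Truncated coefficients of the integral of T*_n from 0 to x (row n)."""
    P = np.zeros((N + 1, N + 1))
    for n in range(N + 1):
        coef = Chebyshev.basis(n, domain=[0, 1]).integ(lbnd=0).coef
        m = min(len(coef), N + 1)
        P[n, :m] = coef[:m]
    return P

def psi(x, N):
    """Vector [T*_0(x), ..., T*_N(x)] for a scalar x in [0, 1]."""
    return chebvander(np.array([2.0 * x - 1.0]), N)[0]

def reconstruct(U, h, g, h0, x, y):
    """u_N(x, y) = (P psi(x))^T U (P psi(y)) + h(x) + g(y) - h0 (assumed form)."""
    N = U.shape[0] - 1
    P = integration_matrix(N)
    return psi(x, N) @ P.T @ U @ P @ psi(y, N) + h(x) + g(y) - h0

# Test problem: u = x^2 y^2, so u_xy = 4xy = (T*_0 + T*_1)(x) (T*_0 + T*_1)(y)
N = 2
U = np.zeros((N + 1, N + 1))
U[:2, :2] = 1.0
zero = lambda s: 0.0
u_val = reconstruct(U, zero, zero, 0.0, 0.5, 0.25)
print(u_val, 0.5**2 * 0.25**2)

# With U = 0 the formula returns h(x) + g(y) - h0, as in Example 7.1
u_sum = reconstruct(np.zeros((3, 3)), lambda s: s**3, lambda s: s**2, 0.0, 0.5, 0.25)
print(u_sum, 0.5**3 + 0.25**2)
```

Because only integration matrices are applied to the expansion of \(u_{xy}\), no differentiation of the polynomial approximation is needed, which is the design choice motivated at the start of this section.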

6 Error analysis

Suppose that \(u\in C^{2}\) is the unique solution of problem (1), and set \(z:=u_{xy}\). Then an easy computation shows that

and

Substituting from (34) and (35) into (1), we obtain

Introducing the operator \(L:C_{L}[0,1]\rightarrow C_{L}[0,1]\) by

we can write Eq. (36) in the operator form

Here, \(C_{L}\) stands for continuous functions satisfying the Dini–Lipschitz condition. Suppose that

where the operator \(L_{N}\) is defined by

Substituting \(z(x,y)\) and \(k(x,y)\) from (38) and (39) into (37) we obtain

Using (40) and the fact that L and \(L_{N}\) are linear operators we obtain

Taking into account that

and denoting by \(E=Z-U\) the error function, we obtain

If \(L_{N}\) is an invertible operator, we obtain

Supposing \(L_{N}^{-1}\) and L are continuous operators we obtain

where \(c>0\) is a constant independent of N. We note that, by Remark 4.3, \(\Vert \varepsilon (x,z) \Vert _{\infty }\rightarrow 0\) and, by Theorem 3.1,

and

as \(N\rightarrow \infty \). Since \(\Vert z-u_{N} \Vert _{\infty }\leq c \Vert E \Vert _{\infty }\), the convergence of the approximate solution is evident. This analysis also shows that the convergence rate depends on the convergence rate of the Chebyshev polynomials.

Remark 6.1

Since Z is not available, as is common in the literature, the perturbation term

can be introduced to obtain

for error estimation. By solving

with the given numerical method, an error estimation is obtained.

7 Numerical examples

In this section, we apply the method proposed in the previous sections to obtain numerical solutions of some FPDEs. The maximum errors are computed using

where \(D_{M}=\{(x_{i},y_{j})| x_{i}=ih, y_{j}=jh, i,j=0, \ldots , M, h=\frac{1}{M}\}\).
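The error measure on the grid \(D_{M}\) can be evaluated with a few lines of code. The sketch below assumes that \(E(N)\) is the maximum of \(|u-u_{N}|\) over \(D_{M}\), that NumPy is available, and that both functions accept array arguments; `max_error` and the two test functions are illustrative only.

```python
import numpy as np

def max_error(u_exact, u_approx, M):
    """Maximum of |u_exact - u_approx| over the uniform grid D_M on [0, 1]^2."""
    h = 1.0 / M
    pts = h * np.arange(M + 1)                  # x_i = i h, y_j = j h
    X, Y = np.meshgrid(pts, pts, indexing="ij")
    return float(np.max(np.abs(u_exact(X, Y) - u_approx(X, Y))))

# Hypothetical example: an "approximation" that deviates by 1e-3 * x
u = lambda x, y: x * y
u_N = lambda x, y: x * y + 1e-3 * x
err = max_error(u, u_N, 10)
print(err)
```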

Example 7.1

We consider the class of FPDEs

subjected to the initial conditions

with free parameters m, n, α and β. Using (31) we obtain \(k\equiv 0\) and hence \(U=0_{N+1}\) (the zero matrix of dimension \(N+1\)), and the approximate solution given by (27) is \(u(x,y)=h(x)+g(y)-h_{0}=x^{n}+y^{m}\). Therefore, as expected, the proposed method yields the exact solution.

Example 7.2

We consider the class of FPDEs

subjected to the initial conditions

with free parameters \(m\geq 1\), \(n\geq 1\), α and β. We examine the numerical solutions for various values of these parameters. The exact solution is \(u(x,y)=x^{n}y^{m}\). In Table 1, the maximum error \(E(N)\) is reported for \(N=1,\ldots, 6\), \(\alpha =0.5\), \(\beta =2/3\) and different values of n and m. For \(m=n=1\) the approximate solution is exact, and only the error due to finite-precision arithmetic is observed. For \(m=n=2\) the approximate solution is exact when \(N\geq 2\). The same pattern is observed for other values of n and m: the method gives the exact solution whenever \(N\geq \max \{n,m\}\). Finally, we choose \(m=n=12\) to exhibit the well-known exponential rate of convergence of Chebyshev spectral methods.

Example 7.3

Consider the class of FPDEs of the form

subjected to the initial conditions

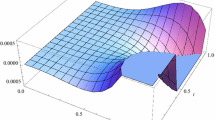

with free parameters \(\lambda _{1}\), \(\lambda _{2}\), α and β. Here, \(E_{n,m}(Z)\) is the two-parameter function of Mittag-Leffler type [52, 53]. The exact solution is \(e^{\lambda _{1} x+\lambda _{2}y}\). In Table 2, the maximum error \(E(N)\) is reported for \(N=1,\ldots, 6\), \(\lambda _{1}=0.5\), \(\lambda _{2}=0.5\), \(\beta =0.5\) and \(\alpha =0.1, 0.3, 0.5, 0.7, 0.9\). It shows the exponential rate of convergence for all values of α. To illustrate this point, we plot the logarithm of the maximum error in Fig. 1. Table 3 shows the maximum error \(E(N)\) for the negative parameters \(\lambda _{1}=-0.5\), \(\lambda _{2}=-0.5\).
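For readers who want to evaluate the two-parameter Mittag-Leffler function appearing in this example, a minimal sketch based on the standard power series \(E_{a,b}(z)=\sum_{k\geq 0}z^{k}/\varGamma (ak+b)\) is given below. It uses only the Python standard library, is intended for moderate \(|z|\) with \(a,b>0\), and is not a robust general-purpose evaluator; the name `mittag_leffler` and the truncation rule are assumptions of this sketch.

```python
import math

def mittag_leffler(z, a, b, terms=60):
    """Truncated series E_{a,b}(z) = sum_k z**k / Gamma(a*k + b), for a, b > 0."""
    total = 0.0
    for k in range(terms):
        arg = a * k + b
        if arg > 170.0:        # math.gamma overflows for arguments above about 171
            break
        total += z**k / math.gamma(arg)
    return total

# Known special cases: E_{1,1}(z) = exp(z) and E_{2,1}(-z^2) = cos(z)
e_check = mittag_leffler(0.5, 1.0, 1.0)
cos_check = mittag_leffler(-1.0, 2.0, 1.0)
print(e_check, math.exp(0.5))
print(cos_check, math.cos(1.0))
```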

Figure 1. The logarithm of maximum error versus N, for Example 7.3

Example 7.4

Let us consider a class of FPDEs of the form

subjected to the initial conditions

with free parameters \(\lambda _{1}\), \(\lambda _{2}\), and α. This time the exact solution is the sinusoid \(u(x,y)=\sin (\lambda _{1} x) \cos (\lambda _{2} y)\). Table 4 shows the maximum error for \(\alpha =0.2, 0.4, 0.6, 0.8, 1\) and \(\lambda _{1}=\lambda _{2}=\pi \), and Table 5 shows these values for \(\lambda _{1}=\lambda _{2}=2 \pi \). Although these tables show that increasing the frequency \(f=\frac{\lambda }{2\pi }\) increases the absolute maximum error, the logarithm of the maximum error plotted in Figs. 2 and 3 shows that both experiments converge at an exponential rate. To show the effectiveness of the method, we also illustrate the numerical solutions in Figs. 4 and 5.

Figure 2. The logarithm of maximum error versus N, for \(\lambda _{1}= \lambda _{2}=\pi \), in Example 7.4

Figure 3. The logarithm of maximum error versus N, for \(\lambda _{1}= \lambda _{2}=2\pi \), in Example 7.4

Figure 4. The approximate solution for \(\lambda _{1}=\lambda _{2}= \pi \), in Example 7.4

Figure 5. The approximate solution for \(\lambda _{1}=\lambda _{2}=2 \pi \), in Example 7.4

8 Applications

Example 8.1

Advection is the transport of a substance by bulk motion. The classical model is derived under many simplifying assumptions, such as neglecting friction and other effects that dissipate energy. The dynamics of this phenomenon is described by

where ν is a nonzero constant velocity, f is a source function and u is a particle density. There is a large body of literature showing how advection–dispersion equations are generalized by fractional differential equations (see, for example, [54, 55]). Therefore, it is reasonable to add a term containing a fractional derivative to the advection equation to account for the dissipation of energy, which gives

where η is a constant and \(0 <\alpha \leq 1\).

Now we consider a source function of the form \(f(x,t)=\sin (\lambda x+ \omega t)\), with \(\lambda =\pi \) and \(\omega =1\). We set \(\nu =1\) and solve the problem with \(N=10\), comparing the results without the fractional term (\(\eta =0\)) and with the fractional term (\(\eta =1\), \(\alpha =0.7\)). Figure 6 shows the density of the substance in the x direction at various times. It is plotted by solving Eq. (49) with the fractional term (blue lines) and Eq. (48) without the fractional term (red lines). We observe that, as time passes, the blue lines lie closer together than the red lines. This can be interpreted as a decrease in the density of the substance in the presence of the fractional term. This reduction can be explained by the dissipation of energy through friction and other physical effects, which we modeled by adding the fractional term.

Example 8.2

Lighthill–Whitham–Richards (LWR) equation. The equation

has been extensively studied for describing vehicular traffic flow on a finite-length highway by using the local fractional derivative [1]. Here, the parameters λ and \(0<\beta \leq 1\) are known real numbers. Let us consider an example of this model with parameters \(\lambda =1\) and \(\beta =0.5\), subject to the initial conditions \(h(x)=\sinh _{\beta }(x^{\beta })\), \(g(y)=- \sinh _{\beta }(y^{\beta })\) and \(h_{0}=0\). We recall that

and

are fractional generalizations of hyperbolic functions.
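The initial data of this example can be evaluated numerically. The sketch below assumes the series definitions commonly used in local fractional calculus, \(\sinh _{\beta }(x^{\beta })=\sum_{k\geq 0}\frac{x^{(2k+1)\beta }}{\varGamma (1+(2k+1)\beta )}\) and \(\cosh _{\beta }(x^{\beta })=\sum_{k\geq 0}\frac{x^{2k\beta }}{\varGamma (1+2k\beta )}\); these forms are an assumption of the sketch and should be checked against the definitions used in [1]. Only the Python standard library is used, and the function names are hypothetical.

```python
import math

def sinh_beta(x, beta, terms=40):
    """Assumed series: sum_k x**((2k+1)*beta) / Gamma(1 + (2k+1)*beta), x >= 0."""
    return sum(
        x ** ((2 * k + 1) * beta) / math.gamma(1.0 + (2 * k + 1) * beta)
        for k in range(terms)
    )

def cosh_beta(x, beta, terms=40):
    """Assumed series: sum_k x**(2k*beta) / Gamma(1 + 2k*beta), x >= 0."""
    return sum(
        x ** (2 * k * beta) / math.gamma(1.0 + 2 * k * beta)
        for k in range(terms)
    )

# For beta = 1 the series reduce to the classical hyperbolic functions
s1 = sinh_beta(0.7, 1.0)
c1 = cosh_beta(0.7, 1.0)
print(s1, math.sinh(0.7))
print(c1, math.cosh(0.7))

# Initial data of the example with beta = 0.5 at x = 0.25
h_val = sinh_beta(0.25, 0.5)
print(h_val)
```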

Kumar et al. [1] have obtained the general solution of Eq. (50) with local fractional derivatives as follows:

Now, we solve this equation with fractional derivatives in the Caputo sense by our proposed method. We observe that this solution shows a similar behavior to the solution obtained in (51) despite the differences in their definitions of fractional derivatives. In Fig. 7, the scaled solutions of Eq. (50) with Caputo-fractional derivatives and local fractional derivatives are depicted. This comparison shows that we can also use the Caputo-fractional derivatives for describing a vehicular traffic flow in the Lighthill–Whitham–Richards model.

9 Conclusion

An operational matrix method based on shifted Chebyshev polynomials was introduced for solving fractional partial differential equations. We avoided differentiation by imposing a smoothness condition and approximating the higher partial derivatives of the solution. We transformed the fractional partial differential equation into a singular Volterra integral equation and then obtained the approximate solution by solving a system of linear algebraic equations. The numerical examples show that the method gives the exact solution whenever the solution is a polynomial, and that the approximate solution converges at an exponential rate in the other examples.

Generalizing the method to nonlinear equations is more challenging than the linear case. In parallel, finite-difference methods could also be generalized efficiently and studied for linear and nonlinear equations. We leave these topics for future study.

References

Kumar, D., Tchier, F., Singh, J., Baleanu, D.: An efficient computational technique for fractal vehicular traffic flow. Entropy 20, 259 (2018)

Kumar, D., Singh, J., Baleanu, D., Rathore, S.: Analysis of a fractional model of the Ambartsumian equation. Eur. Phys. J. Plus 133, 259 (2018)

Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications, vol. 198. Academic Press, San Diego (1998)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations, vol. 204. Elsevier Science Limited, Amsterdam (2006)

Kumar, D., Singh, J., Purohit, S.D., Swroop, R.: A hybrid analytical algorithm for nonlinear fractional wave-like equations. Math. Model. Nat. Phenom. 14, 304 (2019)

Baleanu, D.: Fractional Calculus: Models and Numerical Methods, vol. 3. World Scientific, Singapore (2012)

Shiri, B., Baleanu, D.: System of fractional differential algebraic equations with applications. Chaos Solitons Fractals 120, 203–212 (2019)

Kumar, D., Singh, J., Tanwar, K., Baleanu, D.: A new fractional exothermic reactions model having constant heat source in porous media with power, exponential and Mittag-Leffler laws. Int. J. Heat Mass Transf. 138, 1222–1227 (2019)

Kumar, D., Singh, J., Baleanu, D.: A new fractional model for convective straight fins with temperature-dependent thermal conductivity. Therm. Sci. 1, 1–12 (2017)

Singh, J., Kumar, D., Baleanu, D.: New aspects of fractional Biswas–Milovic model with Mittag-Leffler law. Math. Model. Nat. Phenom. 14, 303 (2019)

Sedgar, E., Celik, E., Shiri, B.: Numerical solution of fractional differential equation in a model of HIV infection of CD44 (+) T cells. Int. J. Appl. Math. Stat. 56, 23–32 (2017)

Mohammadi, F., Moradi, L., Baleanu, D., Jajarmi, A.: A hybrid functions numerical scheme for fractional optimal control problems: application to nonanalytic dynamic systems. J. Vib. Control 24, 5030–5043 (2018)

Baleanu, D., Jajarmi, A., Asad, J.: The fractional model of spring pendulum: new features within different kernels. Proc. Rom. Acad., Ser. A 19, 447–454 (2018)

Baleanu, D., Sajjadi, S.S., Jajarmi, A., Asad, J.H.: New features of the fractional Euler–Lagrange equations for a physical system within non-singular derivative operator. Eur. Phys. J. Plus 134, 181 (2019)

Hajipour, M., Jajarmi, A., Baleanu, D., Sun, H.: On an accurate discretization of a variable-order fractional reaction-diffusion equation. Commun. Nonlinear Sci. Numer. Simul. 69, 119–133 (2019)

Odibat, Z., Momani, S.: The variational iteration method: an efficient scheme for handling fractional partial differential equations in fluid mechanics. Comput. Math. Appl. 58, 2199–2208 (2009)

Jafari, H., Nazari, M., Baleanu, D., Khalique, C.M.: A new approach for solving a system of fractional partial differential equations. Comput. Math. Appl. 66, 838–843 (2013)

Gupta, A., Ray, S.S.: Numerical treatment for the solution of fractional fifth-order Sawada–Kotera equation using second kind Chebyshev wavelet method. Appl. Math. Model. 39, 5121–5130 (2015)

Gupta, A., Ray, S.S.: The comparison of two reliable methods for accurate solution of time-fractional Kaup–Kupershmidt equation arising in capillary gravity waves. Math. Methods Appl. Sci. 39, 583–592 (2016)

Ray, S.S., Gupta, A.: Numerical solution of fractional partial differential equation of parabolic type with Dirichlet boundary conditions using two-dimensional Legendre wavelets method. J. Comput. Nonlinear Dyn. 11, 011012 (2016)

Wu, J.-L.: A wavelet operational method for solving fractional partial differential equations numerically. Appl. Math. Comput. 214, 31–40 (2009)

Wang, L., Ma, Y., Meng, Z.: Haar wavelet method for solving fractional partial differential equations numerically. Appl. Math. Comput. 227, 66–76 (2014)

Jafari, H., Daftardar-Gejji, V.: Solving linear and nonlinear fractional diffusion and wave equations by Adomian decomposition. Appl. Math. Comput. 180, 488–497 (2006)

Jafari, H., Seifi, S.: Solving a system of nonlinear fractional partial differential equations using homotopy analysis method. Commun. Nonlinear Sci. Numer. Simul. 14, 1962–1969 (2009)

Chen, Y., Sun, Y., Liu, L.: Numerical solution of fractional partial differential equations with variable coefficients using generalized fractional-order Legendre functions. Appl. Math. Comput. 244, 847–858 (2014)

Baseri, A., Babolian, E., Abbasbandy, S.: Normalized Bernstein polynomials in solving space-time fractional diffusion equation. Adv. Differ. Equ. 1, 346 (2017)

Baleanu, D., Shiri, B.: Collocation methods for fractional differential equations involving non-singular kernel. Chaos Solitons Fractals 116, 136–145 (2018)

Hajipour, M., Jajarmi, A., Baleanu, D.: On the accurate discretization of a highly nonlinear boundary value problem. Numer. Algorithms 79, 679–695 (2018)

Hajipour, M., Jajarmi, A., Malek, A., Baleanu, D.: Positivity-preserving sixth-order implicit finite difference weighted essentially non-oscillatory scheme for the nonlinear heat equation. Appl. Math. Comput. 325, 146–158 (2018)

Baleanu, D., Shiri, B., Srivastava, H., Al Qurashi, M.: A Chebyshev spectral method based on operational matrix for fractional differential equations involving non-singular Mittag-Leffler kernel. Adv. Differ. Equ. 1, 353 (2018)

Bhrawy, A.H., Zaky, M.A., Machado, J.A.T.: Numerical solution of the two-sided space-time fractional telegraph equation via Chebyshev tau approximation. J. Optim. Theory Appl. 174, 321–341 (2017)

Dabiri, A., Butcher, E.A.: Numerical solution of multi-order fractional differential equations with multiple delays via spectral collocation methods. Appl. Math. Model. 56, 424–448 (2018)

Dabiri, A., Butcher, E.A.: Stable fractional Chebyshev differentiation matrix for the numerical solution of multi-order fractional differential equations. Nonlinear Dyn. 90, 185–201 (2017)

Doha, E.H., Bhrawy, A.H., Baleanu, D., Ezz-Eldien, S.S.: The operational matrix formulation of the Jacobi tau approximation for space fractional diffusion equation. Adv. Differ. Equ. 2014, 231 (2014)

Doha, E.H., Bhrawy, A., Ezz-Eldien, S.S.: A Chebyshev spectral method based on operational matrix for initial and boundary value problems of fractional order. Comput. Math. Appl. 62, 2364–2373 (2011)

Han, W., Chen, Y.-M., Liu, D.-Y., Li, X.-L., Boutat, D.: Numerical solution for a class of multi-order fractional differential equations with error correction and convergence analysis. Adv. Differ. Equ. 2018, 253 (2018)

Kojabad, E.A., Rezapour, S.: Approximate solutions of a sum-type fractional integro-differential equation by using Chebyshev and Legendre polynomials. Adv. Differ. Equ. 2017, 351 (2017)

Hou, J., Yang, C.: Numerical solution of fractional-order Riccati differential equation by differential quadrature method based on Chebyshev polynomials. Adv. Differ. Equ. 2017, 365 (2017)

Khaleghi, M., Babolian, E., Abbasbandy, S.: Chebyshev reproducing kernel method: application to two-point boundary value problems. Adv. Differ. Equ. 2017, 26 (2017)

Mason, J.C., Handscomb, D.C.: Chebyshev Polynomials. CRC Press, Boca Raton (2002)

Bhrawy, A., Zaky, M., Baleanu, D., Abdelkawy, M.: A novel spectral approximation for the two-dimensional fractional sub-diffusion problems. Rom. J. Phys. 60, 344–359 (2015)

Zaky, M.A., Doha, E.H., Taha, T.M., Baleanu, D.: New recursive approximations for variable-order fractional operators with applications. Math. Model. Anal. 23, 227–239 (2018)

Bhrawy, A., Zaky, M.A.: Highly accurate numerical schemes for multi-dimensional space variable-order fractional Schrödinger equations. Comput. Math. Appl. 73, 1100–1117 (2017)

Zaky, M.A.: Recovery of high order accuracy in Jacobi spectral collocation methods for fractional terminal value problems with non-smooth solutions. J. Comput. Appl. Math. 357, 103–122 (2019)

Bhrawy, A., Zaky, M.: A fractional-order Jacobi tau method for a class of time-fractional PDEs with variable coefficients. Math. Methods Appl. Sci. 39, 1765–1779 (2016)

Bhrawy, A., Zaky, M.A.: A method based on the Jacobi tau approximation for solving multi-term time-space fractional partial differential equations. J. Comput. Phys. 281, 876–895 (2015)

Zaky, M.A.: A Legendre spectral quadrature tau method for the multi-term time-fractional diffusion equations. Comput. Appl. Math. 37, 3525–3538 (2018)

Zaky, M.A.: An improved tau method for the multi-dimensional fractional Rayleigh–Stokes problem for a heated generalized second grade fluid. Comput. Math. Appl. 75, 2243–2258 (2018)

Bhrawy, A., Zaky, M.A., Van Gorder, R.A.: A space-time Legendre spectral tau method for the two-sided space-time Caputo fractional diffusion-wave equation. Numer. Algorithms 71, 151–180 (2016)

Trefethen, L.N.: Is Gauss quadrature better than Clenshaw–Curtis? SIAM Rev. 50, 67–87 (2008)

Chawla, M.: Error estimates for the Clenshaw–Curtis quadrature. Math. Comput. 22, 651–656 (1968)

Srivastava, H.M.: Some families of Mittag-Leffler type functions and associated operators of fractional calculus (survey). TWMS J. Pure Appl. Math. 7, 123–145 (2016)

Tomovski, Ž., Hilfer, R., Srivastava, H.M.: Fractional and operational calculus with generalized fractional derivative operators and Mittag-Leffler type functions. Integral Transforms Spec. Funct. 21, 797–814 (2010)

El-Sayed, A., Behiry, S., Raslan, W.: Adomian’s decomposition method for solving an intermediate fractional advection–dispersion equation. Comput. Math. Appl. 59, 1759–1765 (2010)

Meerschaert, M.M., Tadjeran, C.: Finite difference approximations for fractional advection–dispersion flow equations. J. Comput. Appl. Math. 172, 65–77 (2004)

Availability of data and materials

Not applicable.

Funding

Not applicable.

Author information

Contributions

All the authors drafted the manuscript, and read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Mockary, S., Babolian, E. & Vahidi, A.R. A fast numerical method for fractional partial differential equations. Adv Differ Equ 2019, 452 (2019). https://doi.org/10.1186/s13662-019-2390-z

DOI: https://doi.org/10.1186/s13662-019-2390-z