Abstract

After establishing a comparison result for a nonlinear Riemann-Liouville fractional differential equation of order \({p\in(2, 3]}\), we obtain the existence of maximal and minimal solutions and a uniqueness result for fractional differential equations. As an application, an example is presented to illustrate the main results.


1 Introduction

In recent years, much attention has been devoted to the study of fractional differential equations because of their many applications in areas such as physics, chemistry, aerodynamics, electrodynamics of complex media and polymer rheology. Many existence results for solutions of initial value problems and boundary value problems for fractional differential equations have been established by a variety of methods; see, e.g., [1–17] and the references therein. Generally speaking, it is difficult to obtain exact solutions of fractional differential equations. To obtain approximate solutions of nonlinear fractional differential problems, one can use the monotone iterative technique combined with the method of lower and upper solutions. This technique is well known and can be used for both initial value problems and boundary value problems for differential equations [18–20]. Recently, it has also been applied to initial value problems and boundary value problems for fractional differential equations; see [21–33]. To the best of our knowledge, however, the monotone iterative method has rarely been applied to fractional differential equations of order \(p\in(2,3]\).

Consider the following nonlinear fractional differential equation with boundary conditions:

$$\begin{cases} D^{p}x(t)+f \bigl(t,x(t) \bigr)=0, & t\in(0,1), \\ x(0)=x'(0)=0,\quad x(1)=0, \end{cases} \tag{1.1} $$

where \(D^{p}\) is the standard Riemann-Liouville derivative and \(p\in(2,3]\). In this paper, we give sufficient conditions under which such problems have extremal solutions. To formulate such theorems, we need corresponding comparison results for fractional differential inequalities, which we obtain by means of the Banach fixed point theorem and the method of successive approximations. An example is given to illustrate the assumptions and theoretical results.

For convenience, let us set the following notations:

2 Preliminaries

For the convenience of the reader, we present here the necessary definitions from fractional calculus theory. These definitions and properties can be found in the recent literature; see [1–4].

Definition 2.1

[1]

The Riemann-Liouville fractional integral of order \(p>0\) of a function \(f:(0,\infty)\to\mathbb{R}\) is given by

$$I^{p}f(t)=\frac{1}{\Gamma(p)} \int_{0}^{t}(t-s)^{p-1}f(s)\,ds, $$

provided that the right-hand side is pointwise defined on \((0,\infty)\).
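As a quick sanity check of Definition 2.1, the following minimal sketch approximates \(I^{p}f(t)\) with a midpoint rule and compares it with the standard closed form \(I^{p}s^{k}=\frac{\Gamma(k+1)}{\Gamma(k+p+1)}t^{k+p}\). The function names, the quadrature rule and the choice \(p=2.5\), \(f(s)=s\) are illustrative assumptions, not part of the paper.

```python
import math

def rl_integral(f, p, t, n=2000):
    """Approximate I^p f(t) = 1/Gamma(p) * int_0^t (t-s)^(p-1) f(s) ds
    with a composite midpoint rule (illustrative helper, not from the paper)."""
    if t == 0:
        return 0.0
    h = t / n
    total = 0.0
    for k in range(n):
        s = (k + 0.5) * h                 # midpoint of the k-th subinterval
        total += (t - s) ** (p - 1) * f(s)
    return total * h / math.gamma(p)

def rl_integral_power(k, p, t):
    """Closed form of I^p applied to s^k (valid for k > -1)."""
    return math.gamma(k + 1) / math.gamma(k + p + 1) * t ** (k + p)

p = 2.5                                    # any order in (2, 3] would do
approx = rl_integral(lambda s: s, p, 1.0)  # numerical value of I^p s at t = 1
exact = rl_integral_power(1, p, 1.0)       # equals 1 / Gamma(4.5)
print(approx, exact)
```

For \(p>1\) the kernel \((t-s)^{p-1}\) is bounded, so a plain midpoint rule is adequate here; for \(0<p<1\) a quadrature that handles the endpoint singularity would be needed.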

Definition 2.2

[1]

The Riemann-Liouville fractional derivative of order \(p>0\) of a continuous function \(f:(0,\infty)\to\mathbb{R}\) is given by

$$D^{p}f(t)=\frac{1}{\Gamma(n-p)} \biggl(\frac{d}{dt} \biggr)^{n} \int_{0}^{t}(t-s)^{n-p-1}f(s)\,ds, $$

where \({n-1\leq p< n}\), provided that the right-hand side is pointwise defined on \((0,\infty)\).

Lemma 2.1

[1]

Assume that \(u\in C(0,1)\cap L(0,1)\) with a fractional derivative of order \(p>0\) that belongs to \(C(0,1)\cap L(0,1)\). Then

$$I^{p}D^{p}u(t)=u(t)+c_{1}t^{p-1}+c_{2}t^{p-2}+ \cdots+c_{N}t^{p-N} $$

for some \(c_{i}\in\mathbb{R}\), \(i=1,\ldots,N\), \(N=[p]\).

For brevity, let us take \(E=\{u: D^{p}u(t)\in C(0,1)\cap L(0,1)\}\). In the Banach space \(C[0,1]\), in which the norm is defined by \(\|x\|=\max_{t\in[0,1]}|x(t)|\), we set \(P=\{ x\in C[0,1] \mid x(t)\geq0, \forall t\in[0,1]\} \). P is a positive cone in \(C[0,1]\). Throughout this paper, the partial ordering is always given by P.

The following existence and uniqueness result for a linear boundary value problem is important for the subsequent analysis.

Lemma 2.2

[6]

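Let \(a\in\mathbb{R}\), \(\sigma\in C(0,1)\cap L(0,1)\) and \(2< p\leq3\). Then the unique solution of

$$\begin{cases} D^{p}x(t)+\sigma(t)=0, & t\in(0,1), \\ x(0)=x'(0)=0,\quad x(1)=a, \end{cases} $$

is given by

$$x(t)=at^{p-1}+ \int_{0}^{1}G(t,s)\sigma(s)\,ds, $$

where \(G(t,s)\) is Green’s function given by

$$G(t,s)=\frac{1}{\Gamma(p)} \begin{cases} t^{p-1}(1-s)^{p-1}-(t-s)^{p-1}, & 0\leq s\leq t\leq1, \\ t^{p-1}(1-s)^{p-1}, & 0\leq t\leq s\leq1. \end{cases} \tag{2.2} $$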

The following properties of Green’s function play an important part in this paper.

Lemma 2.3

[10]

The function \(G(t,s)\) defined by (2.2) satisfies the following conditions:

(1) \(t^{p-1}(1-t)s(1-s)^{p-1}\leq\Gamma(p)G(t,s)\leq(p-1)s(1-s)^{p-1}\), \(t,s\in(0,1)\),

(2) \(t^{p-1}(1-t)s(1-s)^{p-1}\leq\Gamma(p)G(t,s)\leq(p-1)t^{p-1}(1-t)\), \(t,s\in(0,1)\).
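The two estimates of Lemma 2.3 can be spot-checked numerically. The sketch below assumes that \(G\) has the usual form for the boundary conditions \(x(0)=x'(0)=0\), \(x(1)=0\), namely \(\Gamma(p)G(t,s)=t^{p-1}(1-s)^{p-1}-(t-s)^{p-1}\) for \(s\leq t\) and \(\Gamma(p)G(t,s)=t^{p-1}(1-s)^{p-1}\) for \(t\leq s\); the helper names, the grid and the tolerance are illustrative choices. A grid check is of course not a proof.

```python
import math

def green(t, s, p):
    """Assumed Green's function for D^p x + sigma = 0, x(0) = x'(0) = 0, x(1) = 0."""
    g = (t * (1 - s)) ** (p - 1)
    if s <= t:
        g -= (t - s) ** (p - 1)
    return g / math.gamma(p)

def check_bounds(p, n=50, tol=1e-12):
    """Return True if estimates (1) and (2) of Lemma 2.3 hold at all interior
    grid points (i/n, j/n), up to a rounding tolerance."""
    for i in range(1, n):
        t = i / n
        for j in range(1, n):
            s = j / n
            mid = math.gamma(p) * green(t, s, p)
            lower = t ** (p - 1) * (1 - t) * s * (1 - s) ** (p - 1)
            upper_s = (p - 1) * s * (1 - s) ** (p - 1)      # bound in (1)
            upper_t = (p - 1) * t ** (p - 1) * (1 - t)      # bound in (2)
            if not (lower - tol <= mid <= min(upper_s, upper_t) + tol):
                return False
    return True

results = {p: check_bounds(p) for p in (2.2, 2.5, 3.0)}
print(results)
```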

Lemma 2.4

Suppose that \(\sigma\in C(0,1)\cap L(0,1)\), and there exists \(M>0\) satisfying

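then the linear boundary value problem

$$\begin{cases} -D^{p}x(t)+Mx(t)=\sigma(t), & t\in(0,1), \\ x(0)=x'(0)=0,\quad x(1)=a, \end{cases} \tag{2.4} $$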

has exactly one solution given by

where

and

Proof

Using Lemma 2.2, it is easy to show that problem (2.4) is equivalent to the following integral equation:

i.e.,

where

We write (2.6) in the form \(x=Tx\), where T is defined by the right-hand side of (2.6). Clearly, T is an operator from \(C[0,1]\) into \(C[0,1]\). We now show that T has a unique fixed point by proving that T is a contraction. In fact, by Lemma 2.3, for \(x, y \in C[0,1]\), we obtain

This and condition (2.3) prove that problem (2.4) has a unique solution \(x(t)\) given by

where

Applying the method of successive approximations, it is easy to see that

Substituting (2.7) into (2.8), we get (2.5) and the proof is complete. □
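The fixed-point argument in this proof can be imitated numerically. The following minimal sketch assumes that, via Lemma 2.2, the linear problem is equivalent to \(x(t)=at^{p-1}+\int_{0}^{1}G(t,s) [\sigma(s)-Mx(s) ]\,ds\) with the standard Green's function for these boundary conditions, discretizes the integral on a midpoint grid and runs successive approximations. The grid size, tolerances, helper names and the sample data \(\sigma\equiv1\), \(a=0.5\), \(M=2\) are illustrative assumptions.

```python
import math

def green(t, s, p):
    """Assumed Green's function for D^p x + sigma = 0, x(0) = x'(0) = 0, x(1) = 0."""
    g = (t * (1 - s)) ** (p - 1)
    if s <= t:
        g -= (t - s) ** (p - 1)
    return g / math.gamma(p)

def solve_linear(sigma, a, M, p, n=100, tol=1e-13, max_iter=500):
    """Successive approximations for
        x(t) = a t^(p-1) + int_0^1 G(t,s) (sigma(s) - M x(s)) ds
    on the midpoint grid t_i = (i + 0.5)/n. Returns (grid, x, iterations)."""
    h = 1.0 / n
    grid = [(i + 0.5) * h for i in range(n)]
    K = [[green(t, s, p) * h for s in grid] for t in grid]   # quadrature weights included
    sig = [sigma(s) for s in grid]
    # Part of the right-hand side that does not depend on x.
    g = [a * t ** (p - 1) + sum(k * v for k, v in zip(row, sig))
         for t, row in zip(grid, K)]
    x = g[:]
    for it in range(1, max_iter + 1):
        new = [gi - M * sum(k * v for k, v in zip(row, x)) for gi, row in zip(g, K)]
        diff = max(abs(u - v) for u, v in zip(new, x))
        x = new
        if diff < tol:
            return grid, x, it
    raise RuntimeError("no convergence; M may be too large for a contraction")

p = 2.5
# With M = 0 and sigma = 1 the solution is int_0^1 G(t,s) ds = t^(p-1)(1-t)/Gamma(p+1).
grid, x0, _ = solve_linear(lambda s: 1.0, a=0.0, M=0.0, p=p)
# A genuinely coupled case: the iteration contracts because M * max_t int G ds < 1.
grid, x1, iters = solve_linear(lambda s: 1.0, a=0.5, M=2.0, p=p)
print(iters, x1[-1])
```

The iteration stops as soon as two successive iterates agree to within `tol`, which mirrors the contraction estimate of the proof: each step shrinks the error by at most the factor \(M\max_{t}\int_{0}^{1}G(t,s)\,ds\).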

Lemma 2.5

Suppose that the constant M given in Lemma 2.4 satisfies inequality (2.3) and

Then the function \(H(t,s)\) has the following properties:

Proof

It follows from the expression of \(G_{n}(t,s)\) that \(G_{n}(t,s)\leq0\) when n is odd and \(G_{n}(t,s)\geq0\) when n is even. By Lemma 2.3, we obtain that

and

Consequently, we have

(notice that (2.9) is equivalent to the inequality \(K_{1}>0\)) and

This completes the proof. □

Let

It is obvious that \(P_{1}\) is a cone and \(P_{1}\subset P\). We define the operator \(S:C[0,1]\rightarrow C[0,1]\) by

It is clear that S is a linear operator, and \(x=S\sigma\) holds if and only if x is a solution of the problem

$$\begin{cases} -D^{p}x(t)+Mx(t)=\sigma(t), & t\in(0,1), \\ x(0)=x'(0)=0,\quad x(1)=0. \end{cases} $$

Lemma 2.6

S is a completely continuous operator and \(S(P)\subset P_{1}\).

Proof

Applying the Arzelà-Ascoli theorem and a standard argument, we can prove that S is a completely continuous operator. Next, we prove that \(S(P)\subset P_{1}\). In fact, for any \(x\in P\), it follows from Lemma 2.5 that

which implies that

On the other hand, by Lemma 2.5 again, we have

which together with (2.11) implies

Therefore, \(S(P)\subset P_{1}\). This completes the proof. □

Lemma 2.7

Suppose that \(x\in E\) satisfies

$$-D^{p}x(t)+Mx(t)\geq0,\quad t\in(0,1),\qquad x(0)=x'(0)=0,\quad x(1)\geq0, $$

where M satisfies (2.3), (2.9) and

Then \(x(t)\geq0\) for \(t\in[0,1]\).

Proof

Let \(\sigma(t)=-D^{p} x(t)+Mx(t)\) and \(a=x(1)\). Then

By Lemma 2.4, (2.5) holds. By the proof of Lemma 2.5, we have

Thus, by (2.5) and Lemma 2.5, we have that \(x(t)\geq0\) for \(t\in[0,1]\), and the lemma is proved. □

Lemma 2.8

[34]

Suppose that \(S:C[0,1]\rightarrow C[0,1]\) is a completely continuous linear operator and \(S(P)\subset P\). If there exist \(\psi\in C[0,1]\setminus(-P)\) and a constant \(c>0\) such that \(cS\psi\geq\psi\), then the spectral radius \(r(S)\neq0\) and S has a positive eigenfunction corresponding to its first eigenvalue \(\lambda_{1}=(r(S))^{-1}\), i.e., \(\varphi=\lambda_{1}S\varphi\).

Lemma 2.9

Suppose that S is defined by (2.10), then the spectral radius \(r(S)\neq0\) and S has a positive eigenfunction \(\varphi^{*}(t)\) corresponding to its first eigenvalue \(\lambda_{1}=(r(S))^{-1}\).

Proof

By Lemma 2.5, \(H(t,s)>0\) for all \(t,s\in(0,1)\). Take \(\psi(t)=t^{p-1}(1-t)\). Then, for \(t\in[0,1]\), by Lemma 2.5 we have

So there exists a constant \(c>0\) such that \(c(S\psi)(t)\geq\psi(t)\), \(\forall t\in[0,1]\). From Lemma 2.8, we know that the spectral radius \(r(S)\neq0\) and S has a positive eigenfunction corresponding to its first eigenvalue \(\lambda_{1}=(r(S))^{-1}\). This completes the proof. □

3 Main results

In this section, we prove the existence of extremal solutions and the uniqueness result of differential equation (1.1). We list the following assumptions for convenience.

- \((H_{1})\): There exist \(\alpha_{0}, \beta_{0}\in E\) with \(\alpha_{0}(t)\leq\beta_{0}(t)\) such that

$$\begin{gathered} D^{p} \alpha_{0}(t)+f \bigl(t, \alpha_{0}(t) \bigr) \geq0,\quad t\in(0,1),\qquad \alpha_{0}(0)= \alpha_{0}'(0)=0,\quad \alpha_{0}(1)\leq0, \\ D^{p} \beta_{0}(t)+f \bigl(t, \beta_{0}(t) \bigr) \leq0, \quad t\in(0,1),\qquad \beta_{0}(0)= \beta_{0}'(0)=0,\quad \beta_{0}(1)\geq0. \end{gathered} $$

- \((H_{2})\): \(f\in C([0,1]\times\mathbb{R}, \mathbb{R})\) and there exists \(M>0\) such that

$$f(t,x)-f(t,y)\geq-M(x-y), $$

where \(\alpha_{0}(t)\leq y\leq x\leq \beta_{0}(t)\) and M satisfies (2.3), (2.9) and (2.12).

Theorem 3.1

Suppose that \((H_{1})\) and \((H_{2})\) hold. Then there exist monotone iterative sequences \(\{\alpha_{n}(t)\}, \{\beta_{n}(t)\}\) which converge uniformly on \([0,1]\) to the extremal solutions of problem (1.1) in the sector \(\Omega=\{v\in C[0,1]: \alpha_{0}(t)\leq v(t) \leq\beta_{0}(t), t\in[0,1]\}\).

Proof

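First, for \(n\geq1\) and given \(\alpha_{n-1}\), \(\beta_{n-1}\), we define two sequences \(\{\alpha_{n}(t)\}\), \(\{\beta_{n}(t)\}\) by the relations

$$\begin{gathered} -D^{p}\alpha_{n}(t)+M\alpha_{n}(t)=f \bigl(t,\alpha_{n-1}(t) \bigr)+M\alpha_{n-1}(t),\quad t\in(0,1),\qquad \alpha_{n}(0)=\alpha_{n}'(0)=0,\quad \alpha_{n}(1)=0, \\ -D^{p}\beta_{n}(t)+M\beta_{n}(t)=f \bigl(t,\beta_{n-1}(t) \bigr)+M\beta_{n-1}(t),\quad t\in(0,1),\qquad \beta_{n}(0)=\beta_{n}'(0)=0,\quad \beta_{n}(1)=0. \end{gathered} $$

By Lemma 2.4, \(\{\alpha_{n}(t)\}\), \(\{\beta_{n}(t)\}\) are well defined. Moreover, \(\{\alpha_{n}(t)\}\), \(\{\beta_{n}(t)\}\) can be rewritten as follows:

$$\alpha_{n}=S\mathbf{F}\alpha_{n-1},\qquad \beta_{n}=S\mathbf{F}\beta_{n-1},\quad n=1,2,\ldots, $$

where \(\mathbf{F}:C[0,1]\rightarrow C[0,1]\) is given by

$$(\mathbf{F}x) (t)=f \bigl(t,x(t) \bigr)+Mx(t). $$

Next, we show that \(\{\alpha_{n}(t)\}\), \(\{\beta_{n}(t)\}\) satisfy the property

$$\alpha_{0}\leq\alpha_{1}\leq\cdots\leq \alpha_{n}\leq\cdots\leq\beta_{n}\leq\cdots\leq \beta_{1}\leq\beta_{0}. $$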

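Let \(w(t)=\alpha_{1}(t)-\alpha_{0}(t)\). By condition \((H_{1})\), we obtain

$$-D^{p}w(t)+Mw(t)=D^{p}\alpha_{0}(t)+f \bigl(t,\alpha_{0}(t) \bigr)\geq0,\quad t\in(0,1),\qquad w(0)=w'(0)=0,\quad w(1)=-\alpha_{0}(1)\geq0. $$

Thus, by Lemma 2.7, we have that \(w(t)\geq0\), \(t\in[0,1]\). In a similar way, we can show that \(\beta_{1}\leq \beta_{0}\).

Let \(w(t)=\beta_{1}(t)-\alpha_{1}(t)\). From condition \((H_{2})\), we obtain

$$-D^{p}w(t)+Mw(t)=f \bigl(t,\beta_{0}(t) \bigr)-f \bigl(t,\alpha_{0}(t) \bigr)+M \bigl(\beta_{0}(t)-\alpha_{0}(t) \bigr)\geq0,\quad t\in(0,1),\qquad w(0)=w'(0)=0,\quad w(1)=0. $$

By Lemma 2.7, we obtain \(w(t)\geq0\), \(t\in[0,1]\). Hence, we have the relation \(\alpha_{0} \leq\alpha_{1} \leq \beta_{1} \leq \beta_{0}\). It follows from \((H_{2})\) and Lemma 2.6 that S F is nondecreasing in the sector Ω, and then

$$\alpha_{1}\leq\alpha_{2}\leq\beta_{2}\leq \beta_{1}. $$

Thus, by induction, we have

$$\alpha_{n}\leq\alpha_{n+1}\leq\beta_{n+1}\leq \beta_{n},\quad n=1,2,\ldots. $$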

Applying standard arguments and Lemma 2.6, we have that

$$\lim_{n\rightarrow\infty}\alpha_{n}(t)=\alpha^{*}(t),\qquad \lim_{n\rightarrow\infty}\beta_{n}(t)=\beta^{*}(t) $$

uniformly on \([0,1]\), and that \(\alpha^{*}\), \(\beta^{*}\) are solutions of boundary value problem (1.1). Furthermore, \(\alpha^{*}\) and \(\beta^{*}\) are a minimal solution and a maximal solution of (1.1) in Ω, respectively. □
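To make the monotone scheme concrete, here is a minimal numerical sketch. It assumes the iteration has the standard form \(\alpha_{n}=S(f(\cdot,\alpha_{n-1})+M\alpha_{n-1})\), and likewise for \(\beta_{n}\), where S solves \(-D^{p}x+Mx=\sigma\), \(x(0)=x'(0)=x(1)=0\). The nonlinearity \(f(t,x)=\frac{1}{4}(t^{p-1}-x)(1+x)\), the pair \(\alpha_{0}=0\), \(\beta_{0}=t^{p-1}\), the constant \(M=0.5\), the midpoint discretization and all names are hypothetical choices made for illustration; this is not the example of Section 4, and the paper's conditions (2.3), (2.9), (2.12) on M are not checked here.

```python
import math

P = 2.5      # order p in (2, 3]; illustrative choice
M = 0.5      # f_x >= -0.5 on the sector [0, t^(p-1)] for the hypothetical f below
N = 40       # number of midpoint nodes
H = 1.0 / N
GRID = [(i + 0.5) * H for i in range(N)]

def green(t, s, p=P):
    """Assumed Green's function for D^p x + sigma = 0, x(0) = x'(0) = 0, x(1) = 0."""
    g = (t * (1 - s)) ** (p - 1)
    if s <= t:
        g -= (t - s) ** (p - 1)
    return g / math.gamma(p)

K = [[green(t, s) * H for s in GRID] for t in GRID]

def apply_K(v):
    return [sum(k * x for k, x in zip(row, v)) for row in K]

def S(sigma, tol=1e-14):
    """Discrete solve of -D^p x + M x = sigma with zero boundary data,
    by successive approximations on x = K(sigma - M x)."""
    base = apply_K(sigma)
    x = base[:]
    for _ in range(200):
        new = [b - M * k for b, k in zip(base, apply_K(x))]
        if max(abs(u - v) for u, v in zip(new, x)) < tol:
            return new
        x = new
    return x

def f(t, x):
    """Hypothetical nonlinearity: f(t, 0) >= 0 and f(t, t^(p-1)) = 0."""
    return 0.25 * (t ** (P - 1) - x) * (1 + x)

def F(v):
    return [f(t, x) + M * x for t, x in zip(GRID, v)]

alpha = [0.0] * N                      # lower solution alpha_0 = 0
beta = [t ** (P - 1) for t in GRID]    # upper solution beta_0 = t^(p-1), D^p beta_0 = 0
monotone = True
for _ in range(25):
    new_alpha, new_beta = S(F(alpha)), S(F(beta))
    for a0, a1, b1, b0 in zip(alpha, new_alpha, new_beta, beta):
        # Check alpha_{n-1} <= alpha_n <= beta_n <= beta_{n-1} up to rounding.
        if not (a0 - 1e-10 <= a1 <= b1 + 1e-10 and b1 <= b0 + 1e-10):
            monotone = False
    alpha, beta = new_alpha, new_beta

gap = max(b - a for a, b in zip(alpha, beta))
print(monotone, gap)
```

In this sketch the two sequences squeeze together, which is the situation covered by the uniqueness result; for a nonlinearity with several solutions in the sector, the limits of the lower and upper sequences would in general differ.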

The uniqueness of the solution to problem (1.1) is established in the following theorem.

Theorem 3.2

Assume that conditions \((H_{1})\) and \((H_{2})\) hold, and that there exists \(M_{1}>0\) such that

$$f(t,x)-f(t,y)\leq M_{1}(x-y), \tag{3.2} $$

where \(\alpha_{0}(t)\leq y\leq x\leq \beta_{0}(t)\) and \(M_{1}\) satisfies

$$(M+M_{1})r(S)< 1. \tag{3.3} $$

Then BVP (1.1) has a unique solution in Ω, i.e., \(\alpha^{*}=\beta^{*}\).

Proof

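It follows from the proof of Theorem 3.1 that

$$\alpha^{*}=S\mathbf{F}\alpha^{*},\qquad \beta^{*}=S\mathbf{F}\beta^{*},\qquad \alpha^{*}\leq\beta^{*}. $$

Let \(u(t)=\beta^{*}(t)-\alpha^{*}(t)\). Then \(u\in P\) and, by (3.2),

$$u=S \bigl(\mathbf{F}\beta^{*}-\mathbf{F}\alpha^{*} \bigr) \leq(M+M_{1})Su. $$

Applying mathematical induction, for \(n\in\mathbb{N}\), we get

$$u\leq(M+M_{1})^{n}S^{n}u. $$

The above inequality guarantees \(\|u\|\leq(M+M_{1})^{n}\|S^{n}\|\|u\|\) for \(n\in\mathbb{N}\). It is easy to see that

$$\|u\|=0,\quad\text{i.e.},\quad \alpha^{*}=\beta^{*}. $$

In fact, \(\|u\|\neq0\) implies that \(1\leq(M+M_{1})^{n}\|S^{n}\|\) for \(n\in\mathbb{N}\), and consequently, \(1\leq\lim_{n\rightarrow\infty}\sqrt[n]{(M+M_{1})^{n}\|S^{n}\|}=(M+M_{1})r(S)\), in contradiction to (3.3). □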

Remark 3.1

From the point of view of differential equation, we know that (3.3) is equivalent to the inequality \(M_{1}r(S_{1})<1 \), where \(S_{1}:C[0,1]\rightarrow C[0,1]\) is defined by

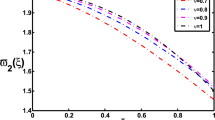

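At the end of this section, we give a rough estimate for \(r(S_{1})\). For \(x\in C[0,1]\), by Lemma 2.3, we have

$$\bigl\vert (S_{1}x) (t) \bigr\vert \leq \int_{0}^{1}G(t,s) \bigl\vert x(s) \bigr\vert \,ds\leq\frac{p-1}{\Gamma(p)} \int_{0}^{1}s(1-s)^{p-1}\,ds\, \Vert x \Vert =\frac{p-1}{\Gamma(p+2)} \Vert x \Vert , $$

which implies \(\|S_{1}\|\leq\frac{(p-1)}{\Gamma(p+2)}\), hence \(r(S_{1})\leq\|S_{1}\|\leq\frac{(p-1)}{\Gamma(p+2)}\). On the other hand, take \(\psi(t)=t^{p-1}(1-t)\). By Lemma 2.3, we have

$$(S_{1}\psi) (t)\geq\frac{t^{p-1}(1-t)}{\Gamma(p)} \int_{0}^{1}s^{p}(1-s)^{p}\,ds= \frac{B(p+1,p+1)}{\Gamma(p)}\psi(t). $$

Iterating this inequality gives \(S_{1}^{n}\psi\geq (\frac{B(p+1,p+1)}{\Gamma(p)} )^{n}\psi\), and thus \(r(S_{1})=\lim_{n\rightarrow\infty}\sqrt[n]{\|S_{1}^{n}\|}\geq\frac{B(p+1,p+1)}{\Gamma(p)}\). So we have

$$\frac{B(p+1,p+1)}{\Gamma(p)}\leq r(S_{1})\leq\frac{p-1}{\Gamma(p+2)}. $$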
4 Example

Consider the following problem:

Obviously, \(f(t,x)=\frac{\sqrt{\pi}}{112} (t^{2}-x )^{3}-\frac {\sqrt{\pi}}{112}t^{2}x^{2}\). Take \(\alpha_{0}(t)=-t^{2}\), \(\beta_{0}(t)=t^{2}\), then

It shows that condition \((H_{1})\) of Theorem 3.2 holds. On the other hand, it is easy to verify that condition \((H_{2})\) and (3.2) hold for \(M=M_{1}=\frac{\sqrt{\pi}}{8}\) and \(r(S_{1})\leq\|S_{1}\|\leq\frac{4}{35\sqrt{\pi}}\).

Therefore, by Theorem 3.2, there exist iterative sequences \(\{\alpha_{n}\} \), \(\{\beta_{n}\}\) which converge uniformly to the unique solution of the problem in \([\alpha_{0},\beta _{0}]\).

References

[1] Kilbas, AA, Srivastava, HM, Trujillo, JJ: Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam (2006)

[2] Miller, KS, Ross, B: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

[3] Podlubny, I: Fractional Differential Equations. Mathematics in Science and Engineering. Academic Press, New York (1999)

[4] Samko, SG, Kilbas, AA, Marichev, OI: Fractional Integrals and Derivatives, Theory and Applications. Gordon & Breach, Yverdon (1993)

[5] Bai, Z, Chen, Y, Lian, H, Sun, S: On the existence of blow up solutions for a class of fractional differential equations. Fract. Calc. Appl. Anal. 17(4), 1175-1187 (2014)

[6] Cui, Y: Uniqueness of solution for boundary value problems for fractional differential equations. Appl. Math. Lett. 51, 48-54 (2016)

[7] Guo, L, Liu, L, Wu, Y: Existence of positive solutions for singular fractional differential equations with infinite-point boundary conditions. Nonlinear Anal., Model. Control 21(5), 635-650 (2016)

[8] Liu, L, Sun, F, Zhang, X, Wu, Y: Bifurcation analysis for a singular differential system with two parameters via to degree theory. Nonlinear Anal., Model. Control 22(1), 31-50 (2017)

[9] Jiang, J, Liu, L, Wu, Y: Positive solutions to singular fractional differential system with coupled boundary conditions. Commun. Nonlinear Sci. Numer. Simul. 18(11), 3061-3074 (2013)

[10] Zhang, X, Liu, L, Wu, Y: Multiple positive solutions of a singular fractional differential equation with negatively perturbed term. Math. Comput. Model. 55, 1263-1274 (2012)

[11] Zhang, X, Liu, L, Wu, Y: Variational structure and multiple solutions for a fractional advection-dispersion equation. Comput. Math. Appl. 68, 1794-1805 (2014)

[12] Zhang, X, Liu, L, Wu, Y, Wiwatanapataphee, B: The spectral analysis for a singular fractional differential equation with a signed measure. Appl. Math. Comput. 257, 252-263 (2015)

[13] Zhang, X: Positive solutions for a class of singular fractional differential equation with infinite-point boundary value conditions. Appl. Math. Lett. 39, 22-27 (2015)

[14] Zhang, X, Wang, L, Wang, S: Existence of positive solutions for a class of nonlinear fractional differential equations with integral boundary conditions. Appl. Math. Comput. 226, 708-718 (2014)

[15] Zhao, Y, Sun, S, Han, Z, Li, Q: Positive solutions to boundary value problems of nonlinear fractional differential equations. Abstr. Appl. Anal. 2011, Article ID 390543 (2011)

[16] Yuan, C, Jiang, D, O’Regan, D, Agarwal, RP: Multiple positive solutions to systems of nonlinear semipositone fractional differential equations with coupled boundary conditions. Electron. J. Qual. Theory Differ. Equ. 2012, Article ID 13 (2012)

[17] Zou, Y, Liu, L, Cui, Y: The existence of solutions for four-point coupled boundary value problems of fractional differential equations at resonance. Abstr. Appl. Anal. 2014, Article ID 314083 (2014)

[18] Coster, CD, Habets, P: Two-Point Boundary Value Problems: Lower and Upper Solutions. Elsevier, Amsterdam (2006)

[19] Guo, D: Extreme solutions of nonlinear second order integro-differential equations in Banach spaces. J. Appl. Math. Stoch. Anal. 8, 319-329 (1995)

[20] Ladde, GS, Lakshmikantham, V, Vatsala, AS: Monotone Iterative Techniques for Nonlinear Differential Equations. Pitman, Boston (1985)

[21] Bai, Z, Zhang, S, Sun, S, Yin, C: Monotone iterative method for a class of fractional differential equations. Electron. J. Differ. Equ. 2016, Article ID 6 (2016)

[22] Al-Refai, M, Hajji, MA: Monotone iterative sequences for nonlinear boundary value problems of fractional order. Nonlinear Anal. 74, 3531-3539 (2011)

[23] Cui, Y, Zou, Y: Existence of solutions for second-order integral boundary value problems. Nonlinear Anal., Model. Control 21(6), 828-838 (2016)

[24] Cui, Y, Zou, Y: Monotone iterative technique for \((k, n-k)\) conjugate boundary value problems. Electron. J. Qual. Theory Differ. Equ. 2015, Article ID 69 (2015)

[25] Jankowski, T: Boundary problems for fractional differential equations. Appl. Math. Lett. 28, 14-19 (2014)

[26] Lin, L, Liu, X, Fang, H: Method of upper and lower solutions for fractional differential equations. Electron. J. Differ. Equ. 2012, Article ID 1 (2012)

[27] Liu, X, Jia, M, Ge, W: The method of lower and upper solutions for mixed fractional four-point boundary value problem with p-Laplacian operator. Appl. Math. Lett. 65, 56-62 (2017)

[28] Ramirez, JD, Vatsala, AS: Monotone iterative technique for fractional differential equations with periodic boundary conditions. Opusc. Math. 29, 289-304 (2009)

[29] Sun, Y, Sun, Y: Positive solutions and monotone iterative sequences for a fractional differential equation with integral boundary conditions. Adv. Differ. Equ. 2014, Article ID 29 (2014)

[30] Syam, M, Al-Refai, M: Positive solutions and monotone iterative sequences for a class of higher order boundary value problems of fractional order. J. Fract. Calc. Appl. 4, Article ID 14 (2013)

[31] Wang, G: Monotone iterative technique for boundary value problems of a nonlinear fractional differential equation with deviating arguments. J. Comput. Appl. Math. 236, 2425-2430 (2012)

[32] Zhang, S, Su, X: The existence of a solution for a fractional differential equation with nonlinear boundary conditions considered using upper and lower solutions in reversed order. Comput. Math. Appl. 62, 1269-1274 (2011)

[33] Zhang, S: Monotone iterative method for initial value problem involving Riemann-Liouville fractional derivatives. Nonlinear Anal. 71, 2087-2093 (2009)

[34] Guo, D, Sun, J: Nonlinear Integral Equations. Shandong Science and Technology Press, Jinan (1987) (in Chinese)

Acknowledgements

This project was supported by the National Natural Science Foundation of China (11371221, 11371364, 11571207) and the Tai’shan Scholar Engineering Construction Fund of Shandong Province of China.


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

The authors have equal contributions to each part of this article. All the authors read and approved the final manuscript.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Cui, Y., Sun, Q. & Su, X. Monotone iterative technique for nonlinear boundary value problems of fractional order \(p\in(2,3]\) . Adv Differ Equ 2017, 248 (2017). https://doi.org/10.1186/s13662-017-1314-z
