Abstract

In this paper, we investigate the synchronization of drive-response neural networks with time-varying delays via impulsive control. Based on impulsive stability theory, we design proper impulsive controllers and derive sufficient conditions for achieving synchronization. Notably, we adopt an adaptive strategy to design unified controllers for different neural networks and to relax the restrictions on the impulsive interval. All the obtained results are verified by several numerical examples.

1 Introduction

Neural networks, including Hopfield neural networks and cellular neural networks, have been widely investigated in the past decades [1–23]. Synchronization, as a typical collective dynamical behavior of neural networks, has attracted increasing attention in various fields. To achieve the synchronization of neural networks, especially of chaotic neural networks, many control methods and techniques have been adopted to design proper and effective controllers, such as feedback control, intermittent control, adaptive control, and impulsive control.

In the real world, because of switching phenomena or sudden noise, many systems are subject to instantaneous perturbations and abrupt changes at certain instants. That is, these systems cannot be controlled continuously and cannot endure continuous disturbance. Therefore, impulsive control, as a typical discontinuous control scheme, has been widely adopted to design proper controllers for achieving synchronization or stability [1–9]. Based on the Lyapunov function method, the Razumikhin technique, or the comparison principle, many valuable results have been obtained, and synchronization criteria have been derived. For a given neural network, we can estimate the largest impulsive interval from the derived criteria by fixing the impulsive gains and calculating some system constants, for example, Lipschitz or Lipschitz-like constants of the neuron activation functions; conversely, we can estimate the admissible impulsive gains by fixing the impulsive intervals. However, different neural networks usually have different system parameters and activation functions, which means that impulsive controllers with fixed impulsive gains and intervals are not unified. In other words, the system parameters restrict the choice of impulsive gains and intervals. To relax these restrictions, an adaptive strategy is introduced to design adaptive impulsive controllers. The Lipschitz (or Lipschitz-like) and other constants with respect to system parameters and activation functions need not be known beforehand and can be estimated according to the proposed adaptive strategy [24–26].

On the other hand, because the transmission speed of signals or information between neurons is finite, neural networks with coupling delay should be considered. Motivated by the above discussion, in this paper we investigate the impulsive synchronization of drive-response chaotic delayed neural networks. Firstly, we give some sufficient conditions for achieving synchronization, from which we can easily estimate the largest impulsive intervals for given neural networks and impulsive gains. Secondly, we adopt an adaptive strategy to design adaptive impulsive controllers that relax these restrictions. Notably, the designed controllers are universal for different neural networks. Finally, we present some numerical examples to verify the obtained results.

The rest of this paper is organized as follows. In Section 2, we introduce the model and some preliminaries. In Section 3, we study the impulsive synchronization of drive-response chaotic delayed neural networks. In Section 4, we provide several numerical simulations to verify the effectiveness of the theoretical results. Section 5 concludes this paper.

2 Model and preliminaries

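Consider the following chaotic neural network with time-varying delays:

\[
\dot{x}_{i}(t)=-c_{i}x_{i}(t)+\sum_{j=1}^{n}a_{ij}f_{j}(x_{j}(t))+\sum_{j=1}^{n}b_{ij}g_{j}(x_{j}(t-\tau(t)))+J_{i},
\tag{1}
\]

or, in the compact form,

\[
\begin{cases}
\dot{x}(t)=-Cx(t)+Af(x(t))+Bg(x(t-\tau(t)))+J, & t>0,\\
x(t)=\phi(t), & t\in[-\tau_{0},0],
\end{cases}
\tag{2}
\]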

where \(i=1,2,\ldots,n\), \(x(t)=(x_{1}(t),x_{2}(t),\ldots,x_{n}(t))^{T}\in R^{n}\) is the neuron state vector, n is the number of neurons, \(f(x(t))=(f_{1}(x_{1}(t)),f_{2}(x_{2}(t)),\ldots,f_{n}(x_{n}(t)))^{T}\) and \(g(x(t))=(g_{1}(x_{1}(t)), g_{2}(x_{2}(t)),\ldots,g_{n}(x_{n}(t)))^{T}\) denote the neuron activation functions, \(C=\operatorname{diag}\{c_{1},c_{2},\ldots,c_{n}\}\) is a positive diagonal matrix, \(A=(a_{ij})_{n\times n}\) and \(B=(b_{ij})_{n\times n}\) are the coupling weight matrices, \(\tau(t)\) is the time-varying delay satisfying \(0<\tau(t)\leq\tau_{0}\), \(\phi(t)\) is bounded and continuous on \([-\tau_{0},0]\) and denotes the initial condition, and \(J=(J_{1},J_{2},\ldots,J_{n})^{T}\in R^{n}\) is an external input vector.

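We regard neural network (2) as the drive network and consider the following neural network as the response network:

\[
\begin{cases}
\dot{y}(t)=-Cy(t)+Af(y(t))+Bg(y(t-\tau(t)))+J, & t>0,\\
y(t)=\varphi(t), & t\in[-\tau_{0},0],
\end{cases}
\tag{3}
\]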

where \(y(t)=(y_{1}(t),y_{2}(t),\ldots,y_{n}(t))^{T}\in R^{n}\) is the neuron state vector, and \(\varphi(t)\) is bounded and continuous on \([-\tau _{0},0]\) and denotes the initial condition.

The drive-response networks (2) and (3) are said to achieve synchronization if \(\lim_{t\to\infty}\|y(t)-x(t)\|=0\).

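To achieve synchronization, the controlled response network with impulsive controllers is described by

\[
\begin{cases}
\dot{y}(t)=-Cy(t)+Af(y(t))+Bg(y(t-\tau(t)))+J, & t\neq t_{k},\\
y(t_{k}^{+})=y(t_{k}^{-})+b(t_{k})(y(t_{k}^{-})-x(t_{k})), & t=t_{k},
\end{cases}
\tag{4}
\]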

where \(k=1,2,3,\ldots \) , the impulsive time instants \(t_{k}\) satisfy \(0= t_{0}< t_{1}< t_{2}<\cdots<t_{k}<\cdots\), \(t_{k}\rightarrow\infty\) as \(k\rightarrow \infty\), \(y(t_{k}^{+})=\lim_{t\rightarrow t_{k}^{+}}y(t)\), \(y(t_{k}^{-})=\lim_{t\rightarrow t_{k}^{-}}y(t)\) and \(y(t_{k}^{-})=y(t_{k})\); \(b(t_{k})\in (-2,-1)\cup(-1,0)\) is the impulsive gain at \(t=t_{k}\), \(b(t_{k})=b(t_{k}^{+})=b(t_{k}^{-})\) and \(b(t)=0\) for \(t\neq t_{k}\).

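Letting \(e(t)=y(t)-x(t)\) be the synchronization error, we can obtain the following error system:

\[
\begin{cases}
\dot{e}(t)=-Ce(t)+A[f(y(t))-f(x(t))]+B[g(y(t-\tau(t)))-g(x(t-\tau(t)))], & t\neq t_{k},\\
e(t_{k}^{+})=(1+b(t_{k}))e(t_{k}), & t=t_{k}.
\end{cases}
\tag{5}
\]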

Assumption 1

The neuron activation functions \(f_{i}(\cdot)\) and \(g_{i}(\cdot)\) are nondecreasing, bounded, and globally Lipschitz, that is, there exist two positive constants \(L_{f}\) and \(L_{g}\) such that

\[
|f_{i}(x)-f_{i}(y)|\leq L_{f}|x-y|,\qquad |g_{i}(x)-g_{i}(y)|\leq L_{g}|x-y|
\]

for any \(x,y\in R\), \(i=1,2,\ldots,n\).

Assumption 2

The time-varying delay \(\tau(t)\) is differentiable and satisfies \(\dot{\tau}(t)\leq\mu<1\).

Lemma 1

[27]

For any vectors \(x,y\in R^{n}\) and positive-definite matrix \(Q\in R^{n\times n}\), the following inequality holds:

\[
2x^{T}y\leq x^{T}Qx+y^{T}Q^{-1}y.
\]

3 Main result

In what follows, let \(d_{k}=t_{k}-t_{k-1}\), λ be the largest eigenvalue of matrix \(-C+(1-\mu)^{-1}I_{n}+AA^{T}+L_{f}^{2}I_{n}+L_{g}^{2}BB^{T}\), \(\beta(t_{k})=(1+b(t_{k}))^{2}\), and \(\beta(t)=1\) for \(t\neq t_{k}\).
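As a minimal numerical sketch of these definitions, the constants λ and L can be computed with NumPy once \(C\), \(A\), \(B\), \(L_{f}\), \(L_{g}\), and μ are known. The function name `rate_constants` and the matrices in the usage lines are illustrative assumptions, not values taken from this paper.

```python
import numpy as np

def rate_constants(C, A, B, L_f, L_g, mu):
    """Return (lam, L): lam is the largest eigenvalue of
    -C + (1-mu)^{-1} I + A A^T + L_f^2 I + L_g^2 B B^T, and L = 2*max(lam, 0)."""
    C, A, B = (np.asarray(M, dtype=float) for M in (C, A, B))
    I = np.eye(C.shape[0])
    M = -C + I / (1.0 - mu) + A @ A.T + L_f**2 * I + L_g**2 * (B @ B.T)
    # M is symmetric because C is diagonal, so eigvalsh applies;
    # it returns the eigenvalues in ascending order.
    lam = float(np.linalg.eigvalsh(M)[-1])
    return lam, 2.0 * max(lam, 0.0)

# Hypothetical 2-neuron weights, chosen only to exercise the function.
C = np.eye(2)
A = np.array([[1.0, 0.5], [-0.5, 1.0]])
B = np.array([[0.2, 0.0], [0.0, -0.3]])
lam, L = rate_constants(C, A, B, L_f=1.0, L_g=1.0, mu=0.5)
print(lam, L)
```

Using a symmetric eigenvalue routine keeps the result real-valued; for a non-diagonal \(C\) the matrix would no longer be symmetric and this shortcut would not apply.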

Theorem 1

Suppose that Assumptions 1 and 2 hold. If there exists a constant \(\alpha>0\) such that

\[
\ln\beta(t_{k})+\alpha+Ld_{k}<0,\quad k=1,2,\ldots,
\tag{6}
\]

where \(L=2\max\{\lambda,0\}\), then the drive-response delayed neural networks (2) and (4) can achieve synchronization.

Proof

Consider the following Lyapunov functional candidate:

When \(t\in(t_{k-1},t_{k})\), the function \(V(e(t))\) can be written as

and the derivative of \(V(t)\) along the trajectory (5) is

According to Assumptions 1 and 2 and Lemma 1, we have

Since \(L=2\max\{\lambda,0\}\geq0\) and \(\frac{1}{1-\mu}\int_{t-\tau(t)}^{t}e^{T}(\theta)e(\theta)\,d\theta\geq0\), we have

and

which gives

When \(t=t_{k}\), we have

By mathematical induction we have

From conditions (6) we have

and

which shows that \(\lim_{k\to\infty}V(t_{k}^{+})=0\). Then, for \(t\in (t_{k},t_{k+1})\), we have

which shows that \(\lim_{t\to\infty}\|e_{i}(t)\|=0\), that is, the synchronization of drive-response delayed neural networks (2) and (4) is achieved, and the proof is completed. □

Remark 1

For any given neural network (2), the positive constants \(L_{f}\) and \(L_{g}\) in Assumption 1 and the largest eigenvalue λ can be obtained by simple calculations. Thus, if the constant α and the impulsive gain \(b(t_{k})\) are fixed, then the impulsive intervals \(d_{k}\) can be estimated from conditions (6). However, the neuron activation functions and coefficient matrices usually differ from one neural network to another, so impulsive controllers with fixed impulsive intervals are not universal. In the following, an adaptive strategy is adopted to design universal impulsive controllers.
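The estimate described in this remark can be sketched in a few lines, assuming conditions (6) take the form \(\ln\beta(t_{k})+\alpha+Ld_{k}<0\), which is the inequality evaluated in Example 1. The function names are illustrative, and the numbers in the usage line are arbitrary.

```python
import math

def condition6_margin(b, alpha, L, d):
    """Left-hand side ln(beta) + alpha + L*d with beta = (1 + b)^2.
    The condition is satisfied when the returned value is negative."""
    beta = (1.0 + b) ** 2
    return math.log(beta) + alpha + L * d

def interval_upper_bound(b, alpha, L):
    """Supremum of the intervals d for which condition6_margin(...) < 0."""
    slack = -(math.log((1.0 + b) ** 2) + alpha)
    if slack <= 0:
        raise ValueError("ln(beta) + alpha must be negative")
    if L == 0:
        return math.inf  # lambda <= 0: any interval is admissible
    return slack / L

# Arbitrary illustrative values for the gain, alpha, and L.
print(interval_upper_bound(b=-0.5, alpha=0.2, L=10.0))
```

The bound is strict: an interval equal to the returned value makes the margin zero, so in practice \(d_{k}\) is chosen slightly smaller.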

Theorem 2

Suppose that Assumptions 1 and 2 hold. If there exists a constant \(\alpha>0\) such that

\[
\ln\beta(t_{k})+\alpha+\hat{L}(t_{k})d_{k}<0,\quad k=1,2,\ldots,
\tag{9}
\]

where \(\hat{L}(t)\) is the time-varying estimation of L satisfying \(\dot{\hat{L}}(t)=\delta e^{T}(t)e(t)\) with \(\hat{L}(0)>0\), and \(\delta >0\) is the adaptive gain, then the synchronization of drive-response delayed neural networks (2) and (4) is achieved.

Proof

Consider the following Lyapunov functional candidate:

When \(t\in(t_{k-1},t_{k})\), the function \(V(e(t))\) can be written as

and the derivative of \(V(t)\) along the trajectory (5) is

Since \(\dot{\hat{L}}(t)=\delta e^{T}(t)e(t)\geq0\), \(\hat{L}(t)\) is a nondecreasing function, so we have \(0<\hat{L}(t)\leq\hat{L}(t_{k})\) for \(t\in(t_{k-1},t_{k})\) and

which gives

When \(t=t_{k}\), we have

Then, similarly to the proof of Theorem 1, the proof can be completed. □

Remark 2

From conditions (9) in Theorem 2 it is clear that the constants with respect to the neuron activation functions and coefficient matrices need not be known beforehand. For any given neural network, they can be estimated by \(\hat{L}(t)\) with a proper adaptive gain δ. When the impulsive instants or intervals are fixed, conditions (9) yield an updating law for the impulsive gain. When the impulsive gains are fixed, conditions (9) yield a method for estimating the impulsive instants. Detailed methods are provided in the following remarks. Consequently, the proposed adaptive impulsive control scheme is universal for neural networks whose activation functions satisfy Assumption 1.

Remark 3

For any given impulsive intervals \(d_{k}\) and positive constant α, we can choose

\[
b(t_{k})=e^{-\frac{\alpha+\hat{L}(t_{k})d_{k}}{2}}-1-\varepsilon
\]

so that conditions (9) in Theorem 2 hold, where ε is a small positive constant.
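A short sketch of this choice, using the gain formula \(b(t_{k})=e^{-(\alpha+\hat{L}(t_{k})d_{k})/2}-1-\varepsilon\) that Example 2 attributes to this remark and assuming conditions (9) read \(\ln\beta(t_{k})+\alpha+\hat{L}(t_{k})d_{k}<0\). The function names and the sample values of \(\hat{L}\) are illustrative.

```python
import math

def adaptive_gain(L_hat, d, alpha, eps=0.001):
    """Impulsive gain b(t_k) = exp(-(alpha + L_hat*d)/2) - 1 - eps."""
    return math.exp(-(alpha + L_hat * d) / 2.0) - 1.0 - eps

def condition9_margin(b, alpha, L_hat, d):
    """ln((1+b)^2) + alpha + L_hat*d; a negative value means the condition holds."""
    return math.log((1.0 + b) ** 2) + alpha + L_hat * d

# Sample estimates L_hat; d and alpha follow Example 2.
for L_hat in (1.0, 5.0, 20.0):
    b = adaptive_gain(L_hat, d=0.08, alpha=0.2)
    print(L_hat, b, condition9_margin(b, 0.2, L_hat, 0.08))
```

With \(x=\alpha+\hat{L}(t_{k})d_{k}\), the margin is negative whenever \(0<\varepsilon<2e^{-x/2}\), because then \((e^{-x/2}-\varepsilon)^{2}<e^{-x}\); this is why ε is taken small.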

Remark 4

By conditions (9), for any given \(b(t_{k})\) and α with \(\ln\beta(t_{k})+\alpha<0\), we can estimate the control instants \(t_{k}\) by finding the maximum value of \(t_{k}\) subject to \(t_{k}< t_{k-1}-(\ln\beta(t_{k})+\alpha)\hat{L}^{-1}(t_{k})\) with \(t_{0}=0\), \(k=1,2,\ldots\) .
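Because \(\hat{L}(t_{k})\) itself depends on \(t_{k}\), the maximization in this remark is implicit. One way to approximate it, sketched below under the assumption that \(\hat{L}\) is nondecreasing, is to step along a time grid until the bound would be violated. The function name, the grid step `dt`, and the use of a callable for \(\hat{L}\) are illustrative choices; in a simulation \(\hat{L}\) is only known as the integration proceeds.

```python
import math

def next_impulse_time(t_prev, L_hat, b, alpha, dt=1e-3, t_max=100.0):
    """Largest grid time t = t_prev + n*dt (n >= 1) such that
    (s - t_prev) * L_hat(s) < -(ln(beta) + alpha) at every grid time s <= t,
    where beta = (1 + b)^2 and L_hat is a callable giving the estimate at s."""
    budget = -(math.log((1.0 + b) ** 2) + alpha)
    if budget <= 0:
        raise ValueError("ln(beta) + alpha must be negative")
    n = 0
    while t_prev + (n + 1) * dt <= t_max:
        s = t_prev + (n + 1) * dt
        if (s - t_prev) * L_hat(s) >= budget:
            break  # the next grid point would violate the bound
        n += 1
    if n == 0:
        raise ValueError("dt is too coarse: even one step violates the bound")
    return t_prev + n * dt

# Illustration with a constant estimate (hypothetical stand-in for L_hat).
print(next_impulse_time(0.0, lambda s: 10.0, b=-0.8, alpha=0.2))
```

Since \((s-t_{k-1})\hat{L}(s)\) is increasing in \(s\) when \(\hat{L}\) is nondecreasing, the first violation marks a clean boundary and the search can stop there.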

4 Numerical simulations

Example 1

Consider the chaotic delayed Hopfield neural network with time-varying delays as the drive system described by

where \(c_{i}=1\), \(f_{i}(x_{i})=g_{i}(x_{i})=\tanh(x_{i})\), \(\tau(t)=0.9-0.9\sin t\), and

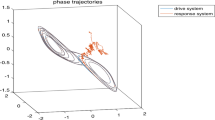

Clearly, we can choose \(L_{f}=L_{g}=1\) and \(\mu=0.9\) such that Assumptions 1 and 2 hold. In numerical simulations, choose \(b(t_{k})=-0.99\), \(d_{k}=0.08\), and \(\alpha =0.2\). By simple calculations we have \(\beta(t_{k})=0.0001\), \(\lambda =54.9719\), \(L=109.9438\), and \(\ln0.0001+0.2+109.9438\times 0.08=-0.2148<0\), that is, conditions (6) in Theorem 1 are satisfied. Therefore, the synchronization can be achieved when \(d_{k}=0.08\). Choose the initial values of \(x(t)\) and \(y(t)\) randomly. Figure 1 shows the orbits of state variables \(x_{i}(t)\) and \(y_{i}(t)\), \(i=1,2\).
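The constants quoted above can be checked by hand. The following worked computation is a sketch that uses only the values stated in this example and reads conditions (6) as \(\ln\beta(t_{k})+\alpha+Ld_{k}<0\), the inequality evaluated above.

```latex
\begin{align*}
|\tanh'(s)| &= 1-\tanh^{2}(s)\le 1 &&\Rightarrow\quad L_{f}=L_{g}=1,\\
\dot{\tau}(t) &= -0.9\cos t\le 0.9 &&\Rightarrow\quad \mu=0.9,\\
\beta(t_{k}) &= (1-0.99)^{2}=10^{-4}, &&\ln\beta(t_{k})\approx-9.2103,\\
d_{k} &< \frac{-\ln\beta(t_{k})-\alpha}{L}
      = \frac{9.2103-0.2}{109.9438}\approx 0.0820 .
\end{align*}
```

The chosen interval \(d_{k}=0.08\) therefore lies just below the largest interval admitted by the sufficient condition for these gains.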

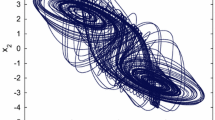

Example 2

Consider the synchronization of the same neural network via the adaptive impulsive control scheme proposed in Theorem 2. In numerical simulations, choose \(\delta=1\), the initial value of \(\hat{L}(t)\) as \(\hat{L}(0)=1\), and the other parameters as in the previous example.

Firstly, fix the impulsive interval \(d_{k}=0.08\) and choose \(b(t_{k})=e^{-\frac{\alpha+\hat{L}(t_{k})d_{k}}{2}}-1-\varepsilon\) with \(\varepsilon=0.001\) according to Remark 3. That is, the impulsive gain adjusts itself to a proper value according to the adaptive law. Figure 2 shows the orbits of the state variables \(x_{i}(t)\) and \(y_{i}(t)\) and the impulsive gain.

Secondly, fix the impulsive gain \(b(t_{k})=-0.99\) and estimate the control instants \(t_{k}\) or impulsive intervals \(d_{k}\) according to Remark 4. That is, the impulsive intervals adjust themselves to proper values according to the adaptive law. Figure 3 shows the orbits of the state variables \(x_{i}(t)\) and \(y_{i}(t)\) and the impulsive intervals. Clearly, the resulting value of \(d_{k}\) is much larger than the value estimated from conditions (6). That is, the adaptive impulsive control scheme can enlarge the impulsive interval and thereby reduce the control cost.
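For readers who want to experiment, the fixed-interval case of this example can be sketched with a forward Euler scheme. This is only an illustration under several assumptions that are not from the paper: the 2-neuron weight matrices are hypothetical (the matrices of Example 1 are not reproduced here), the external input is zero, the initial histories are constant, and the jump is taken as \(y(t_{k}^{+})=y(t_{k})+b(t_{k})(y(t_{k})-x(t_{k}))\), which is consistent with \(\beta(t_{k})=(1+b(t_{k}))^{2}\).

```python
import math
import random

# Hypothetical 2-neuron weights (NOT the matrices of Example 1);
# J = 0 and a constant initial history are also assumptions.
A = [[2.0, -0.1], [-5.0, 3.0]]
B = [[-1.5, -0.1], [-0.2, -2.5]]
c = [1.0, 1.0]

def tau(t):
    return 0.9 - 0.9 * math.sin(t)  # delay used in Example 1

def rhs(state, delayed):
    """Right-hand side -C s + A tanh(s) + B tanh(s_delayed)."""
    return [
        -c[i] * state[i]
        + sum(A[i][j] * math.tanh(state[j]) for j in range(2))
        + sum(B[i][j] * math.tanh(delayed[j]) for j in range(2))
        for i in range(2)
    ]

def simulate(T=5.0, dt=1e-3, d_k=0.08, alpha=0.2, delta=1.0,
             L_hat0=1.0, eps=1e-3, seed=0):
    rng = random.Random(seed)
    xs = [[rng.uniform(-1, 1) for _ in range(2)]]
    ys = [[rng.uniform(-1, 1) for _ in range(2)]]
    L_hat, L_hist, gains = L_hat0, [L_hat0], []
    per_impulse = round(d_k / dt)
    for n in range(round(T / dt)):
        k = max(n - round(tau(n * dt) / dt), 0)   # index of the delayed state
        x, y = xs[n], ys[n]
        fx, fy = rhs(x, xs[k]), rhs(y, ys[k])
        e2 = sum((y[i] - x[i]) ** 2 for i in range(2))
        x_new = [x[i] + dt * fx[i] for i in range(2)]
        y_new = [y[i] + dt * fy[i] for i in range(2)]
        L_hat += dt * delta * e2                  # Euler step of the adaptive law
        if (n + 1) % per_impulse == 0:            # impulsive instant t_k
            b = math.exp(-(alpha + L_hat * d_k) / 2) - 1 - eps
            # Assumed jump: y(t_k^+) = y(t_k) + b(t_k) (y(t_k) - x(t_k)).
            y_new = [y_new[i] + b * (y_new[i] - x_new[i]) for i in range(2)]
            gains.append(b)
        xs.append(x_new)
        ys.append(y_new)
        L_hist.append(L_hat)
    return xs, ys, L_hist, gains

xs, ys, L_hist, gains = simulate()
print("final error:", math.dist(xs[-1], ys[-1]), "final L_hat:", L_hist[-1])
```

The sketch records \(\hat{L}(t)\) and the gains so that their evolution can be plotted alongside the state orbits; a smaller `dt` or a higher-order integrator would be a natural refinement.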

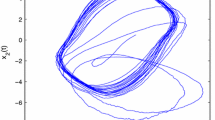

Example 3

Consider the synchronization of the following cellular neural network via the adaptive impulsive control, which is described by [28]:

where \(c_{i}=1\), \(f_{i}(x_{i})=g_{i}(x_{i})=(|x_{i}+1|-|x_{i}-1|)/2\), \(\tau(t)=1\), and

Firstly, fix the impulsive interval \(d_{k}=0.5\) and choose \(b(t_{k})=e^{-\frac{\alpha+\hat{L}(t_{k})d_{k}}{2}}-1-\varepsilon\) with \(\alpha=0.5\) and \(\varepsilon=0.001\). In numerical simulations, choose \(\delta=0.2\), the initial value of \(\hat{L}(t)\) as \(\hat{L}(0)=1\), and the initial values of \(x(t)\) and \(y(t)\) randomly. Figure 4 shows the orbits of state variables \(x_{i}(t)\) and \(y_{i}(t)\) and impulsive gain.

Secondly, fix the impulsive gain \(b(t_{k})=-0.8\) and estimate the control instants \(t_{k}\) or impulsive interval according to Remark 4. In numerical simulations, choose \(\alpha=0.2\), \(\delta=0.2\), the initial value of \(\hat{L}(t)\) as \(\hat{L}(0)=0.2\), and the initial values of \(x(t)\) and \(y(t)\) randomly. Figure 5 shows the orbits of state variables \(x_{i}(t)\) and \(y_{i}(t)\) and impulsive intervals.

5 Conclusions

In this paper, the synchronization problem of drive-response chaotic delayed neural networks has been investigated via an impulsive control scheme. Firstly, some sufficient conditions for achieving synchronization were provided according to the Lyapunov function method and impulsive stability theory. For given neural networks, the largest impulsive interval can be estimated by fixing the impulsive gains, and vice versa. Secondly, an adaptive strategy, combined with the impulsive control scheme, was used to design universal controllers for different neural networks and to relax the restrictions on impulsive intervals and gains. Finally, some numerical examples were presented to verify the correctness and effectiveness of the obtained results.

References

Chandrasekar, A, Rakkiyappan, R: Impulsive controller design for exponential synchronization of delayed stochastic memristor-based recurrent neural networks. Neurocomputing 173, 1348-1355 (2016)

Ho, DWC, Liang, J, Lam, J: Global exponential stability of impulsive high-order BAM neural networks with time-varying delays. Neural Netw. 19, 1581-1590 (2006)

Hu, C, Jiang, H, Teng, Z: Impulsive control and synchronization for delayed neural networks with reaction-diffusion terms. IEEE Trans. Neural Netw. 20, 67-81 (2010)

Zhang, W, Tang, Y, Fang, J, Wu, X: Stability of delayed neural networks with time-varying impulses. Neural Netw. 36, 59-63 (2012)

Zhu, Q, Cao, J: Stability of Markovian jump neural networks with impulse control and time varying delays. Nonlinear Anal., Real World Appl. 13, 2259-2270 (2012)

Zhang, S, Jiang, W, Zhang, Z: Exponential stability for a stochastic delay neural network with impulses. Adv. Differ. Equ. 2014, 250 (2014)

Zhu, Q, Cao, J: Stability analysis of Markovian jump stochastic BAM neural networks with impulse control and mixed time delays. IEEE Trans. Neural Netw. Learn. Syst. 23, 467-479 (2012)

Zhu, Q, Rakkiyappan, R, Chandrasekar, A: Stochastic stability of Markovian jump BAM neural networks with leakage delays and impulse control. Neurocomputing 136, 136-151 (2014)

Feng, G, Cao, J: Master-slave synchronization of chaotic systems with a modified impulsive controller. Adv. Differ. Equ. 2013, 24 (2013)

Sun, X, Feng, Z, Liu, X: Pinning adaptive synchronization of neutral-type coupled neural networks with stochastic perturbation. Adv. Differ. Equ. 2014, 77 (2014)

Song, Q, Cao, J: Synchronization of nonidentical chaotic neural networks with leakage delay and mixed time-varying delays. Adv. Differ. Equ. 2011, 16 (2011)

Rakkiyappan, R, Dharani, S, Cao, J: Synchronization of neural networks with control packet loss and time-varying delay via stochastic sampled-data controller. IEEE Trans. Neural Netw. Learn. Syst. 26, 3215-3226 (2015)

Velmurugan, G, Rakkiyappan, R, Cao, J: Finite-time synchronization of fractional-order memristor-based neural networks with time delays. Neural Netw. 73, 36-46 (2016)

Ascoli, A, Lanza, V, Corinto, F, Tetzlaff, R: Synchronization conditions in simple memristor neural networks. J. Franklin Inst. 352, 3196-3220 (2015)

Theesar, SJS, Ratnavelu, K: Synchronization error bound of chaotic delayed neural networks. Nonlinear Dyn. 78, 2349-2357 (2014)

Chen, S, Cao, J: Projective synchronization of neural networks with mixed time-varying delays and parameter mismatch. Nonlinear Dyn. 67, 1397-1406 (2012)

Wang, J, Zhang, H, Wang, Z, Huang, B: Robust synchronization analysis for static delayed neural networks with nonlinear hybrid coupling. Neural Comput. Appl. 25, 839-848 (2014)

Shi, Y, Zhu, P: Adaptive synchronization of different Cohen-Grossberg chaotic neural networks with unknown parameters and time-varying delays. Nonlinear Dyn. 73, 1721-1728 (2013)

Zhu, Q, Cao, J, Rakkiyappan, R: Exponential input-to-state stability of stochastic Cohen-Grossberg neural networks with mixed delays. Nonlinear Dyn. 79, 1085-1098 (2015)

Zhu, Q, Cao, J: Mean-square exponential input-to-state stability of stochastic delayed neural networks. Neurocomputing 131, 157-163 (2014)

Cao, J, Sivasamy, R, Rakkiyappan, R: Sampled-data \(H_{\infty}\) synchronization of chaotic Lur’e systems with time delay. Circuits Syst. Signal Process. 35, 811-835 (2016)

Cao, J, Rakkiyappan, R, Maheswari, K, Chandrasekar, A: Exponential \(H_{\infty}\) filtering analysis for discrete-time switched neural networks with random delays using sojourn probabilities. Sci. China, Technol. Sci. 59, 387-402 (2016)

Cao, J, Alofi, A, Al-Mazrooei, A, Ahmed Elaiw, A: Synchronization of switched interval networks and applications to chaotic neural networks. Abstr. Appl. Anal. 2013, 940573 (2013)

Chen, YS, Hwang, RR, Chang, CC: Adaptive impulsive synchronization of uncertain chaotic systems. Phys. Lett. A 374, 2254-2258 (2010)

Liu, D, Wu, Z, Ye, Q: Adaptive impulsive synchronization of uncertain drive-response complex-variable chaotic systems. Nonlinear Dyn. 75, 209-216 (2014)

Liu, D, Wu, Z, Ye, Q: Structure identification of an uncertain network coupled with complex-variable chaotic systems via adaptive impulsive control. Chin. Phys. B 23, 040504 (2014)

Sanchez, EN, Perez, JP: Input-to-state stability (ISS) analysis for dynamic neural networks. IEEE Trans. Circuits Syst. I 46, 1395-1398 (1999)

Gilli, M: Strange attractors in delayed cellular neural networks. IEEE Trans. Circuits Syst. I 40, 166-173 (1993)

Acknowledgements

This work is jointly supported by the NSFC under Grant No. 61463022 and the NSF of Jiangxi Educational Committee under Grant No. GJJ14273.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All the authors contributed equally to this work. They all read and approved the final version of the manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wu, Z., Leng, H. Impulsive synchronization of drive-response chaotic delayed neural networks. Adv Differ Equ 2016, 206 (2016). https://doi.org/10.1186/s13662-016-0928-x

DOI: https://doi.org/10.1186/s13662-016-0928-x