Abstract

In this article, we introduce a numerical technique for solving a general form of the fractional optimal control problem, in which fractional derivatives are described in the Caputo sense. We use the properties of the shifted Jacobi orthonormal polynomials together with the operational matrix of fractional integrals (described in the Riemann-Liouville sense). With these, we transform the fractional optimal control problem into an equivalent variational problem. By means of the Legendre-Gauss quadrature formula and the Rayleigh-Ritz method, this variational problem is then reduced to a system of algebraic equations, which can be solved by any standard iterative method. To confirm the efficiency and accuracy of the proposed scheme, we present several numerical examples with their approximate solutions and compare our results with those obtained using other methods.


1 Introduction

In the recent past, there has been remarkable development in the areas of fractional and functional differential equations and their applications. Many physical and engineering phenomena are modeled by fractional differential equations. Most fractional and functional differential equations have no exact solutions, so much progress has been made in developing numerical methods to approximate their solutions; see [1–4].

In recent decades, many scientists in mathematics, physics and engineering have been interested in fractional calculus (the theory of derivatives and integrals of arbitrary non-integer order). This interest comes from its applications in areas such as thermodynamics [5], modeling of viscoelastic dampers [6], bioengineering [7], psychology [8], finance [9] and others [10–16].

The operational matrix of fractional derivatives has been derived for several families of orthogonal polynomials, such as Chebyshev polynomials [17], Jacobi polynomials [18] and Bernstein polynomials [19], and it has been used to solve various types of fractional differential equations; see [20–23]. The operational matrix of fractional integrals, on the other hand, has been derived for Legendre polynomials [24, 25], Jacobi polynomials [26] and Laguerre polynomials [27], among others.

The optimal control problem consists of minimizing a performance index subject to dynamic constraints on the state and control variables. When the dynamic constraints are fractional differential equations, the problem becomes a fractional optimal control problem. In recent years, many researchers have studied fractional optimal control problems and proposed numerical methods for solving them; see, for instance, [28–38].

In this paper, we investigate and develop a direct numerical technique to solve the fractional optimal control problem given by

subject to the dynamic constraints

where \(n-1 <\nu\leq n\) and \(b(t)\neq0\).

The proposed scheme expands the fractional derivative of the state variable, \(D^{\nu} x (t)\), in shifted Jacobi orthonormal polynomials with unknown coefficients. It then uses the operational matrix of fractional integrals to express the state variable in the same basis. The equation derived from the dynamic constraint (2) is coupled with the performance index (1) to construct an equivalent variational problem. The integral in the resulting variational problem is generally difficult to compute exactly, so it is approximated by the Legendre-Gauss quadrature formula. Using the Rayleigh-Ritz method, the fractional optimal control problem (1)-(2) is thereby reduced to a system of algebraic equations, which can be solved by any standard iterative method.

This article is organized as follows.

- In Section 2, we introduce some definitions and notation of fractional calculus and some properties of the shifted Jacobi orthonormal polynomials.
- In Section 3, we derive the operational matrix of fractional integrals based on the shifted Jacobi orthonormal polynomials.
- In Section 4, this operational matrix and the properties of the shifted Jacobi orthonormal polynomials are combined with the Legendre-Gauss quadrature formula and the Rayleigh-Ritz method. Together they yield an approximate solution of the fractional optimal control problem (1)-(2).
- In Section 5, we present several numerical examples and compare the results of the proposed scheme with those obtained by other methods.

2 Preliminaries and notations

2.1 Fractional calculus definitions

Many definitions of fractional derivatives and integrals have been introduced; the Riemann-Liouville and Caputo definitions are the most widely used.

Definition 2.1

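The Riemann-Liouville fractional integral of order \(\gamma\geq0\) is given by

\[ I^{\gamma}f(t)=\frac{1}{\Gamma(\gamma)}\int_{0}^{t}(t-\tau)^{\gamma-1}f(\tau)\,d\tau,\quad \gamma>0,\ t>0,\qquad I^{0}f(t)=f(t), \]

where

\[ \Gamma(\gamma)=\int_{0}^{\infty}t^{\gamma-1}e^{-t}\,dt \]

is the gamma function.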

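The operator \(I^{\gamma}\) satisfies the following properties, for \(\gamma,\delta\geq0\) and \(\mu>-1\):

\[ I^{\gamma}I^{\delta}f(t)=I^{\gamma+\delta}f(t),\qquad I^{\gamma}I^{\delta}f(t)=I^{\delta}I^{\gamma}f(t),\qquad I^{\gamma}t^{\mu}=\frac{\Gamma(\mu+1)}{\Gamma(\mu+\gamma+1)}\,t^{\mu+\gamma}. \]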
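The power rule can be checked numerically with a small quadrature routine. The Python sketch below is only an illustration under our own assumptions: f is continuous on \([0,t]\), and the weakly singular kernel is removed by the substitution \(s=(t-\tau)^{\gamma}\). This substitution turns the definition into \(I^{\gamma}f(t)=\frac{1}{\Gamma(\gamma+1)}\int_{0}^{t^{\gamma}}f(t-s^{1/\gamma})\,ds\), and the remaining integral is evaluated with the composite Simpson rule. The function names `rl_integral` and `power_rule` are hypothetical.

```python
import math


def rl_integral(f, gamma_, t, n=2000):
    """Approximate the Riemann-Liouville integral I^gamma f(t), gamma >= 0.

    Substituting s = (t - tau)**gamma removes the weakly singular kernel:
        I^gamma f(t) = 1/Gamma(gamma + 1) * int_0^{t**gamma} f(t - s**(1/gamma)) ds,
    and the remaining integral is evaluated with the composite Simpson rule
    (n must be even).
    """
    if gamma_ == 0:
        return f(t)  # I^0 is the identity operator
    upper = t ** gamma_
    h = upper / n

    def g(s):
        # clamp tiny negative round-off so that f is only evaluated on [0, t]
        return f(max(0.0, t - s ** (1.0 / gamma_)))

    total = g(0.0) + g(upper)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * g(k * h)
    return total * h / 3.0 / math.gamma(gamma_ + 1.0)


def power_rule(mu, gamma_, t):
    """Closed form I^gamma t^mu = Gamma(mu+1)/Gamma(mu+gamma+1) * t^(mu+gamma)."""
    return math.gamma(mu + 1.0) / math.gamma(mu + gamma_ + 1.0) * t ** (mu + gamma_)


if __name__ == "__main__":
    for mu, gamma_, t in [(2.0, 0.5, 1.0), (1.0, 0.7, 2.0)]:
        approx = rl_integral(lambda x: x ** mu, gamma_, t)
        print(mu, gamma_, t, approx, power_rule(mu, gamma_, t))
```

The substitution matters for \(\gamma<1\). Without it, the integrand is unbounded at \(\tau=t\) and the Simpson rule would converge poorly.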

Definition 2.2

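The Caputo fractional derivative of order \(\gamma>0\) is defined by

\[ D^{\gamma}f(t)=\frac{1}{\Gamma(m-\gamma)}\int_{0}^{t}(t-\tau)^{m-\gamma-1}f^{(m)}(\tau)\,d\tau,\quad m-1<\gamma<m,\ t>0, \]

and \(D^{\gamma}f(t)=f^{(m)}(t)\) for \(\gamma=m\). Here \(m=\lceil\gamma\rceil\) is the smallest integer greater than or equal to γ.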

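The operator \(D^{\gamma}\) satisfies the following properties (c denotes a constant):

\[ D^{\gamma}c=0,\qquad D^{\gamma}t^{\mu}=\begin{cases}0, & \mu\in\mathbb{N}_{0},\ \mu<m,\\[4pt] \dfrac{\Gamma(\mu+1)}{\Gamma(\mu-\gamma+1)}\,t^{\mu-\gamma}, & \mu\in\mathbb{N}_{0},\ \mu\geq m,\ \text{or}\ \mu\notin\mathbb{N}_{0},\ \mu>m-1,\end{cases} \]

\[ D^{\gamma}I^{\gamma}f(t)=f(t),\qquad I^{\gamma}D^{\gamma}f(t)=f(t)-\sum_{k=0}^{m-1}f^{(k)}(0^{+})\,\frac{t^{k}}{k!}. \]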
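Since the Caputo derivative is the definition above written as \(D^{\gamma}f=I^{m-\gamma}f^{(m)}\), the power rule for \(D^{\gamma}\) can be checked numerically with any Riemann-Liouville quadrature. In the following Python sketch, the caller supplies the classical derivative \(f^{(m)}\) explicitly; this assumption keeps the example short. The integral is approximated with the substitution \(s=(t-\tau)^{m-\gamma}\) and the composite Simpson rule, which is our own choice of quadrature. The names `rl_integral` and `caputo` are hypothetical.

```python
import math


def rl_integral(f, order, t, n=2000):
    """Riemann-Liouville integral I^order f(t) via the substitution
    s = (t - tau)**order and the composite Simpson rule (n even)."""
    if order == 0:
        return f(t)  # I^0 is the identity
    upper = t ** order
    h = upper / n

    def g(s):
        return f(max(0.0, t - s ** (1.0 / order)))

    total = g(0.0) + g(upper)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * g(k * h)
    return total * h / 3.0 / math.gamma(order + 1.0)


def caputo(f_m, gamma_, t):
    """Caputo derivative D^gamma f(t) = I^(m - gamma) f^(m)(t), m = ceil(gamma).

    f_m must be the m-th classical derivative of f (supplied by the caller).
    For integer gamma this reduces to f^(m)(t) because I^0 is the identity.
    """
    m = math.ceil(gamma_)
    return rl_integral(f_m, m - gamma_, t)


if __name__ == "__main__":
    # f(t) = t^3 with gamma = 1.5 (m = 2, f'' = 6t):
    # D^1.5 t^3 = Gamma(4)/Gamma(2.5) * t^1.5
    t = 1.3
    print(caputo(lambda x: 6.0 * x, 1.5, t), math.gamma(4) / math.gamma(2.5) * t ** 1.5)
```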

2.2 Shifted Jacobi orthonormal polynomials

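The Jacobi polynomial of degree j, denoted by \(P^{(\alpha,\beta )}_{j}(z)\), with \(\alpha>-1\), \(\beta>-1\) and defined on the interval \([-1,1]\), constitutes an orthogonal system with respect to the weight function \(w^{(\alpha,\beta)}(z)=(1-z)^{\alpha}(1+z)^{\beta}\), i.e.,

\[ \int_{-1}^{1}P^{(\alpha,\beta)}_{j}(z)\,P^{(\alpha,\beta)}_{k}(z)\,w^{(\alpha,\beta)}(z)\,dz=h_{k}\,\delta_{jk}, \]

where \(\delta_{jk}\) is the Kronecker delta and

\[ h_{k}=\frac{2^{\alpha+\beta+1}\,\Gamma(k+\alpha+1)\,\Gamma(k+\beta+1)}{(2k+\alpha+\beta+1)\,k!\,\Gamma(k+\alpha+\beta+1)}. \]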

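The shifted Jacobi polynomial of degree j, denoted by \(P^{(\alpha ,\beta)}_{T,j}(t)\), with \(\alpha>-1\), \(\beta>-1\) and defined on the interval \([0,T]\), is generated by the change of variable \(z=\frac {2t}{T}-1\), i.e., \(P^{(\alpha,\beta)}_{j}(\frac{2t}{T}-1)\equiv P^{(\alpha,\beta)}_{T,j}(t)\). The shifted Jacobi polynomials then constitute an orthogonal system with respect to the weight function \(w^{(\alpha,\beta)}_{T}(t)=t^{\beta}(T-t)^{\alpha}\), with the orthogonality property

\[ \int_{0}^{T}P^{(\alpha,\beta)}_{T,j}(t)\,P^{(\alpha,\beta)}_{T,k}(t)\,w^{(\alpha,\beta)}_{T}(t)\,dt=h_{T,k}\,\delta_{jk}, \]

where

\[ h_{T,k}=\frac{T^{\alpha+\beta+1}\,\Gamma(k+\alpha+1)\,\Gamma(k+\beta+1)}{(2k+\alpha+\beta+1)\,k!\,\Gamma(k+\alpha+\beta+1)}. \]

Introducing the shifted Jacobi orthonormal polynomials \(\grave {P}^{(\alpha,\beta)}_{T,k}(t)\), where

\[ \grave{P}^{(\alpha,\beta)}_{T,k}(t)=\frac{1}{\sqrt{h_{T,k}}}\,P^{(\alpha,\beta)}_{T,k}(t), \]

we have

\[ \int_{0}^{T}\grave{P}^{(\alpha,\beta)}_{T,j}(t)\,\grave{P}^{(\alpha,\beta)}_{T,k}(t)\,w^{(\alpha,\beta)}_{T}(t)\,dt=\delta_{jk}. \]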

The shifted Jacobi orthonormal polynomials are generated from the three-term recurrence relations

with

where

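The explicit analytic form of the shifted Jacobi orthonormal polynomials \(\grave{P}^{(\alpha,\beta)}_{T,j}{(t)}\) of degree j is given by

\[ \grave{P}^{(\alpha,\beta)}_{T,j}(t)=\frac{1}{\sqrt{h_{T,j}}}\sum_{k=0}^{j}\frac{(-1)^{j-k}\,\Gamma(j+\beta+1)\,\Gamma(j+k+\alpha+\beta+1)}{\Gamma(k+\beta+1)\,\Gamma(j+\alpha+\beta+1)\,(j-k)!\,k!\,T^{k}}\,t^{k}, \]

where \(h_{T,j}\) is the normalization constant in the orthogonality relation of the shifted Jacobi polynomials,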

and this in turn implies

which will be used later.
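The explicit form makes the basis straightforward to evaluate. The Python sketch below builds \(\grave{P}^{(\alpha,\beta)}_{T,j}(t)\) from that formula and checks the orthonormality relation numerically. It assumes α and β are nonnegative integers, so that the weight \(t^{\beta}(T-t)^{\alpha}\) is a polynomial. Under that assumption, an ordinary Gauss-Legendre rule (computed here by Newton iteration on the Legendre recurrence) integrates every Gram entry exactly. All function names are hypothetical.

```python
import math


def gauss_legendre(n, T):
    """n-point Gauss-Legendre nodes and weights mapped to (0, T)."""
    nodes, weights = [], []
    for i in range(1, n + 1):
        z = math.cos(math.pi * (i - 0.25) / (n + 0.5))  # initial guess for i-th root
        for _ in range(100):
            p0, p1 = 1.0, z  # P_0, P_1
            for k in range(2, n + 1):
                p0, p1 = p1, ((2 * k - 1) * z * p1 - (k - 1) * p0) / k
            dp = n * (z * p1 - p0) / (z * z - 1.0)  # P_n'(z)
            step = p1 / dp
            z -= step
            if abs(step) < 1e-15:
                break
        nodes.append(T * (z + 1.0) / 2.0)
        weights.append(T / ((1.0 - z * z) * dp * dp))
    return nodes, weights


def h_T(k, a, b, T):
    """Normalization constant h_{T,k} of the shifted Jacobi polynomials."""
    return (T ** (a + b + 1) * math.gamma(k + a + 1) * math.gamma(k + b + 1)
            / ((2 * k + a + b + 1) * math.factorial(k) * math.gamma(k + a + b + 1)))


def p_orth(j, t, a, b, T):
    """Shifted Jacobi orthonormal polynomial of degree j (explicit form)."""
    s = 0.0
    for k in range(j + 1):
        s += ((-1) ** (j - k) * math.gamma(j + b + 1) * math.gamma(j + k + a + b + 1)
              / (math.gamma(k + b + 1) * math.gamma(j + a + b + 1)
                 * math.factorial(j - k) * math.factorial(k) * T ** k)) * t ** k
    return s / math.sqrt(h_T(j, a, b, T))


def gram(N, a, b, T):
    """Weighted Gram matrix [int_0^T P_i P_j t^b (T-t)^a dt], i, j = 0..N."""
    nodes, weights = gauss_legendre(N + 10, T)  # exact while a + b <= 19
    return [[sum(w * p_orth(i, t, a, b, T) * p_orth(j, t, a, b, T) * t ** b * (T - t) ** a
                 for t, w in zip(nodes, weights))
             for j in range(N + 1)] for i in range(N + 1)]


if __name__ == "__main__":
    G = gram(6, 1, 1, 2.0)
    print(max(abs(G[i][j] - (1.0 if i == j else 0.0)) for i in range(7) for j in range(7)))
```

For α = β = 0 and T = 1, the degree-one basis function reduces to \(\sqrt{3}\,(2t-1)\), the orthonormal shifted Legendre polynomial.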

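Assume that \(y(t)\) is a square-integrable function with respect to the Jacobi weight function \(w^{(\alpha,\beta)}_{T}(t)\) on \((0,T)\). Then it can be expanded (in the weighted \(L^{2}\) sense) in terms of shifted Jacobi orthonormal polynomials as

\[ y(t)=\sum_{j=0}^{\infty}y_{j}\,\grave{P}^{(\alpha,\beta)}_{T,j}(t), \]

where the coefficients \(y_{j}\) are given by

\[ y_{j}=\int_{0}^{T}y(t)\,\grave{P}^{(\alpha,\beta)}_{T,j}(t)\,w^{(\alpha,\beta)}_{T}(t)\,dt,\quad j=0,1,2,\ldots. \]

If we approximate \(y(t)\) by the first \((N+1)\) terms, then we can write

\[ y(t)\simeq y_{N}(t)=\sum_{j=0}^{N}y_{j}\,\grave{P}^{(\alpha,\beta)}_{T,j}(t), \]

which may alternatively be written in matrix form as

\[ y_{N}(t)=Y^{T}\,\Psi_{T,N}(t), \]

with

\[ Y=[y_{0},y_{1},\ldots,y_{N}]^{T},\qquad \Psi_{T,N}(t)=\bigl[\grave{P}^{(\alpha,\beta)}_{T,0}(t),\grave{P}^{(\alpha,\beta)}_{T,1}(t),\ldots,\grave{P}^{(\alpha,\beta)}_{T,N}(t)\bigr]^{T}. \]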
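The coefficient formula and the truncated sum \(y_{N}(t)=Y^{T}\Psi_{T,N}(t)\) can be sketched in Python as follows. Here the integrals \(y_{j}\) are approximated by a Gauss-Legendre rule; this is an assumption of the illustration, and the rule is exact only for polynomial integrands (α, β nonnegative integers and polynomial y). For a polynomial y of degree at most N, the truncated expansion reproduces y exactly. For a smooth non-polynomial y, it only approximates y, with an error that decreases as N grows. Function names are hypothetical.

```python
import math


def gauss_legendre(n, T):
    """n-point Gauss-Legendre nodes and weights mapped to (0, T)."""
    nodes, weights = [], []
    for i in range(1, n + 1):
        z = math.cos(math.pi * (i - 0.25) / (n + 0.5))
        for _ in range(100):
            p0, p1 = 1.0, z
            for k in range(2, n + 1):
                p0, p1 = p1, ((2 * k - 1) * z * p1 - (k - 1) * p0) / k
            dp = n * (z * p1 - p0) / (z * z - 1.0)
            step = p1 / dp
            z -= step
            if abs(step) < 1e-15:
                break
        nodes.append(T * (z + 1.0) / 2.0)
        weights.append(T / ((1.0 - z * z) * dp * dp))
    return nodes, weights


def h_T(k, a, b, T):
    """Normalization constant h_{T,k} of the shifted Jacobi polynomials."""
    return (T ** (a + b + 1) * math.gamma(k + a + 1) * math.gamma(k + b + 1)
            / ((2 * k + a + b + 1) * math.factorial(k) * math.gamma(k + a + b + 1)))


def p_orth(j, t, a, b, T):
    """Shifted Jacobi orthonormal polynomial of degree j (explicit form)."""
    s = 0.0
    for k in range(j + 1):
        s += ((-1) ** (j - k) * math.gamma(j + b + 1) * math.gamma(j + k + a + b + 1)
              / (math.gamma(k + b + 1) * math.gamma(j + a + b + 1)
                 * math.factorial(j - k) * math.factorial(k) * T ** k)) * t ** k
    return s / math.sqrt(h_T(j, a, b, T))


def jacobi_coefficients(y, N, a, b, T, n_quad=40):
    """y_j = int_0^T y(t) P_j(t) t^b (T - t)^a dt, j = 0..N, by Gauss-Legendre quadrature."""
    nodes, weights = gauss_legendre(n_quad, T)
    return [sum(w * y(t) * p_orth(j, t, a, b, T) * t ** b * (T - t) ** a
                for t, w in zip(nodes, weights)) for j in range(N + 1)]


def truncated_series(Y, t, a, b, T):
    """y_N(t) = Y^T Psi_{T,N}(t)."""
    return sum(y_j * p_orth(j, t, a, b, T) for j, y_j in enumerate(Y))


if __name__ == "__main__":
    y = lambda t: t ** 3 - 2.0 * t + 1.0
    Y = jacobi_coefficients(y, 3, 1, 1, 2.0)
    print(truncated_series(Y, 0.7, 1, 1, 2.0), y(0.7))
    Y8 = jacobi_coefficients(math.exp, 8, 0, 0, 1.0)
    print(abs(truncated_series(Y8, 0.5, 0, 0, 1.0) - math.exp(0.5)))
```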

3 Operational matrix for fractional integrals

The main objective of this section is to derive the operational matrix of fractional integrals based on the shifted Jacobi orthonormal polynomials.

Theorem 3.1

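The fractional integral of order ν (in the Riemann-Liouville sense) of the shifted Jacobi orthonormal polynomial vector \(\Psi_{T,N}(t)\) is given by

\[ I^{\nu}\Psi_{T,N}(t)\simeq\mathbf{I}^{(\nu)}\Psi_{T,N}(t), \]

where \(\mathbf{I}^{(\nu)}\) is the \((N+1)\times(N+1)\) operational matrix of fractional integrals of order ν, defined by

\[ \mathbf{I}^{(\nu)}=\bigl[\vartheta_{\nu}(i,j,\alpha,\beta)\bigr]_{i,j=0}^{N},\qquad \vartheta_{\nu}(i,j,\alpha,\beta)=\sum_{k=0}^{i}\lambda_{i,k}\,\frac{\Gamma(k+1)}{\Gamma(k+\nu+1)}\,\theta_{kj}, \]

where

\[ \theta_{kj}=\Gamma(\alpha+1)\sum_{l=0}^{j}\lambda_{j,l}\,\frac{\Gamma(k+l+\nu+\beta+1)}{\Gamma(k+l+\nu+\alpha+\beta+2)}\,T^{k+l+\nu+\alpha+\beta+1} \]

and

\[ \lambda_{i,k}=\frac{(-1)^{i-k}\,\Gamma(i+\beta+1)\,\Gamma(i+k+\alpha+\beta+1)}{\sqrt{h_{T,i}}\,\Gamma(k+\beta+1)\,\Gamma(i+\alpha+\beta+1)\,(i-k)!\,k!\,T^{k}} \]

is the coefficient of \(t^{k}\) in \(\grave{P}^{(\alpha,\beta)}_{T,i}(t)\), with \(h_{T,i}\) the normalization constant of the shifted Jacobi polynomials.

Proof

Using (6) and (13), the fractional integral of order ν of the shifted Jacobi orthonormal polynomial \(\grave{P}^{(\alpha,\beta)}_{T,i}{(t)}\) is given by

\[ I^{\nu}\grave{P}^{(\alpha,\beta)}_{T,i}(t)=\sum_{k=0}^{i}\lambda_{i,k}\,I^{\nu}t^{k}=\sum_{k=0}^{i}\lambda_{i,k}\,\frac{\Gamma(k+1)}{\Gamma(k+\nu+1)}\,t^{k+\nu},\quad i=0,1,\ldots,N. \]

Now we approximate \(t^{k+\nu}\) by \(N+1\) terms of shifted Jacobi orthonormal polynomials \(\grave{P}^{(\alpha,\beta)}_{T,j}(t)\) as

\[ t^{k+\nu}\simeq\sum_{j=0}^{N}\theta_{kj}\,\grave{P}^{(\alpha,\beta)}_{T,j}(t), \]

where \(\theta_{kj}\) is given as in Eq. (15) with \(y(t)=t^{k+\nu }\). Evaluating this integral with \(\int_{0}^{T}t^{p}(T-t)^{\alpha}\,dt=T^{p+\alpha+1}\,\Gamma(p+1)\Gamma(\alpha+1)/\Gamma(p+\alpha+2)\) gives

\[ \theta_{kj}=\int_{0}^{T}t^{k+\nu}\,\grave{P}^{(\alpha,\beta)}_{T,j}(t)\,w^{(\alpha,\beta)}_{T}(t)\,dt=\Gamma(\alpha+1)\sum_{l=0}^{j}\lambda_{j,l}\,\frac{\Gamma(k+l+\nu+\beta+1)}{\Gamma(k+l+\nu+\alpha+\beta+2)}\,T^{k+l+\nu+\alpha+\beta+1}. \]

Employing Eqs. (23)-(25), we have

\[ I^{\nu}\grave{P}^{(\alpha,\beta)}_{T,i}(t)\simeq\sum_{j=0}^{N}\vartheta_{\nu}(i,j,\alpha,\beta)\,\grave{P}^{(\alpha,\beta)}_{T,j}(t),\quad i=0,1,\ldots,N, \]

where \(\vartheta_{\nu}(i,j,\alpha,\beta)\) is given by

\[ \vartheta_{\nu}(i,j,\alpha,\beta)=\sum_{k=0}^{i}\lambda_{i,k}\,\frac{\Gamma(k+1)}{\Gamma(k+\nu+1)}\,\theta_{kj}. \]

Finally, we can rewrite Eq. (26) in vector form as

\[ I^{\nu}\grave{P}^{(\alpha,\beta)}_{T,i}(t)\simeq\bigl[\vartheta_{\nu}(i,0,\alpha,\beta),\vartheta_{\nu}(i,1,\alpha,\beta),\ldots,\vartheta_{\nu}(i,N,\alpha,\beta)\bigr]\,\Psi_{T,N}(t),\quad i=0,1,\ldots,N. \]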

Equation (28) completes the proof. □
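The entries \(\vartheta_{\nu}(i,j,\alpha,\beta)\) can be assembled directly from two ingredients: the monomial coefficients of \(\grave{P}^{(\alpha,\beta)}_{T,i}(t)\) (called `lam` below) and the closed-form projections \(\theta_{kj}\) of \(t^{k+\nu}\). The Python sketch uses our own naming and is an illustration, not the authors' code. As a sanity check, it uses the following fact: when ν is a positive integer, \(t^{k+\nu}\) is a polynomial. Hence rows \(i\leq N-\nu\) of \(\mathbf{I}^{(\nu)}\Psi_{T,N}(t)\) must reproduce \(I^{\nu}\grave{P}^{(\alpha,\beta)}_{T,i}(t)\) exactly, for any admissible α, β.

```python
import math


def h_T(k, a, b, T):
    """Normalization constant h_{T,k} of the shifted Jacobi polynomials."""
    return (T ** (a + b + 1) * math.gamma(k + a + 1) * math.gamma(k + b + 1)
            / ((2 * k + a + b + 1) * math.factorial(k) * math.gamma(k + a + b + 1)))


def lam(j, k, a, b, T):
    """Coefficient of t^k in the shifted Jacobi orthonormal polynomial of degree j."""
    return ((-1) ** (j - k) * math.gamma(j + b + 1) * math.gamma(j + k + a + b + 1)
            / (math.sqrt(h_T(j, a, b, T)) * math.gamma(k + b + 1) * math.gamma(j + a + b + 1)
               * math.factorial(j - k) * math.factorial(k) * T ** k))


def theta(k, j, nu, a, b, T):
    """theta_kj = int_0^T t^(k+nu) P_j(t) t^b (T - t)^a dt, via the beta integral."""
    return math.gamma(a + 1) * sum(
        lam(j, l, a, b, T) * math.gamma(k + l + nu + b + 1)
        / math.gamma(k + l + nu + a + b + 2) * T ** (k + l + nu + a + b + 1)
        for l in range(j + 1))


def op_matrix(N, nu, a=0.0, b=0.0, T=1.0):
    """(N+1) x (N+1) operational matrix [vartheta_nu(i, j, a, b)]."""
    return [[sum(lam(i, k, a, b, T) * math.gamma(k + 1) / math.gamma(k + nu + 1)
                 * theta(k, j, nu, a, b, T) for k in range(i + 1))
             for j in range(N + 1)] for i in range(N + 1)]


def basis(N, t, a, b, T):
    """Psi_{T,N}(t) evaluated from the monomial coefficients."""
    return [sum(lam(j, k, a, b, T) * t ** k for k in range(j + 1)) for j in range(N + 1)]


def exact_row(i, nu, t, a, b, T):
    """I^nu of the i-th basis polynomial, using I^nu t^k = k!/Gamma(k+nu+1) t^(k+nu)."""
    return sum(lam(i, k, a, b, T) * math.gamma(k + 1) / math.gamma(k + nu + 1) * t ** (k + nu)
               for k in range(i + 1))


if __name__ == "__main__":
    N, nu, a, b, T, t = 5, 0.5, 0.0, 0.0, 1.0, 0.4
    M = op_matrix(N, nu, a, b, T)
    Psi = basis(N, t, a, b, T)
    for i in range(N + 1):
        approx = sum(M[i][j] * Psi[j] for j in range(N + 1))
        print(i, approx, exact_row(i, nu, t, a, b, T))
```

For non-integer ν, the rows agree only approximately, because \(t^{k+\nu}\) is then projected onto polynomials of degree at most N.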

4 The numerical scheme

In this section, we use the properties of the shifted Jacobi orthonormal polynomials together with the operational matrix of fractional integrals in order to solve the following fractional optimal control problem:

subject to

where \(n-1 <\nu\leq n\) and \(b(t)\neq0\).

4.1 Shifted Jacobi orthonormal approximation

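First, we approximate \(D^{\nu}x(t)\) by the shifted Jacobi orthonormal polynomials \(\grave{P}^{(\alpha,\beta)}_{T,j}(t)\) as

\[ D^{\nu}x(t)\simeq C^{T}\Psi_{T,N}(t), \]

where C is an unknown coefficient vector that can be written as

\[ C=[c_{0},c_{1},\ldots,c_{N}]^{T}. \]

Using (6), we have

\[ x(t)\simeq I^{\nu}\bigl(C^{T}\Psi_{T,N}(t)\bigr)+\sum_{i=0}^{n-1}x^{(i)}(0)\,\frac{t^{i}}{i!}; \]

from Eq. (19) together with Eq. (31), it is easy to write

\[ I^{\nu}\bigl(C^{T}\Psi_{T,N}(t)\bigr)=C^{T}I^{\nu}\Psi_{T,N}(t)\simeq C^{T}\mathbf{I}^{(\nu)}\Psi_{T,N}(t). \]

In virtue of Eqs. (31)-(34), we get

\[ x(t)\simeq C^{T}\mathbf{I}^{(\nu)}\Psi_{T,N}(t)+\sum_{i=0}^{n-1}x^{(i)}(0)\,\frac{t^{i}}{i!}. \]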

Using (35), we can write the dynamic constraint (30) in the form

The previous equation leads to

Using Eqs. (35) and (37), the performance index (29) may be written in the form

4.2 Legendre-Gauss quadrature

In general, the previous integral cannot be computed exactly, so we approximate it by the Legendre-Gauss quadrature formula.

First, we introduce the change of variable

and, for simplicity, we denote

and

Then Eq. (38) is equivalent to

Using the Legendre-Gauss quadrature formula, we can write

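where \(t_{T,N,r}\), \(0\leq r \leq N\), are the nodes of the shifted Legendre-Gauss quadrature on the interval \((0,T)\), i.e., the zeros of the shifted Legendre polynomial of degree \(N+1\). The \(\varpi_{T,N,r}\), \(0\leq r \leq N\), are the corresponding Christoffel numbers (quadrature weights).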
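For reference, the nodes \(t_{T,N,r}\) and Christoffel numbers \(\varpi_{T,N,r}\) can be computed with the following Python sketch. It uses one common construction, chosen here as an implementation detail: Newton's method on the Legendre three-term recurrence, followed by the affine map from \([-1,1]\) to \((0,T)\). The rule with \(N+1\) nodes integrates polynomials of degree up to \(2N+1\) exactly. The names `shifted_gauss_legendre` and `quad` are hypothetical.

```python
import math


def shifted_gauss_legendre(N, T):
    """Nodes t_{T,N,r} and weights w_{T,N,r}, r = 0..N, of the (N+1)-point
    Legendre-Gauss rule on (0, T)."""
    n = N + 1
    nodes, weights = [], []
    for i in range(1, n + 1):
        z = math.cos(math.pi * (i - 0.25) / (n + 0.5))  # initial guess for the i-th root
        for _ in range(100):
            p0, p1 = 1.0, z  # P_0(z), P_1(z)
            for k in range(2, n + 1):  # three-term recurrence up to P_n(z)
                p0, p1 = p1, ((2 * k - 1) * z * p1 - (k - 1) * p0) / k
            dp = n * (z * p1 - p0) / (z * z - 1.0)  # P_n'(z)
            step = p1 / dp  # Newton step
            z -= step
            if abs(step) < 1e-15:
                break
        nodes.append(T * (z + 1.0) / 2.0)  # map [-1, 1] -> (0, T)
        weights.append(T / ((1.0 - z * z) * dp * dp))  # (T/2) * 2/((1-z^2) P_n'(z)^2)
    # The roots are produced in decreasing order; return them in increasing order.
    return nodes[::-1], weights[::-1]


def quad(f, N, T):
    """Approximate int_0^T f(t) dt by sum_r w_{T,N,r} f(t_{T,N,r})."""
    nodes, weights = shifted_gauss_legendre(N, T)
    return sum(w * f(t) for t, w in zip(nodes, weights))


if __name__ == "__main__":
    print(quad(lambda t: t ** 11, 5, 2.0), 2.0 ** 12 / 12.0)
```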

As in the Rayleigh-Ritz method, the necessary conditions for the optimality of the performance index are

\[ \frac{\partial J}{\partial c_{j}}=0,\quad j=0,1,\ldots,N. \]

This system of \(N+1\) algebraic equations can be solved for the unknown coefficients \(c_{j}\), \(j=0,1,\ldots,N\), by any standard iterative method (e.g., Newton's method). Consequently, C given in (32) can be calculated, and the approximations of \(x(t)\) and \(u(t)\) follow from (35) and (37).
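To make the Rayleigh-Ritz step concrete, here is a compact Python sketch for a hypothetical linear-quadratic test problem chosen by us for illustration: minimize \(J=\frac{1}{2}\int_{0}^{1}(x^{2}+u^{2})\,dt\) subject to \(D^{\nu}x=-x+u\), \(x(0)=1\), \(0<\nu\leq1\). The sketch rests on several assumptions:

- It takes α = β = 0.
- As a simplification, it applies \(I^{\nu}\) to each basis polynomial exactly through the power rule instead of using the projected matrix \(\mathbf{I}^{(\nu)}\).
- J is quadratic in the coefficients \(c_{j}\), so the conditions \(\partial J/\partial c_{j}=0\) form a linear system, which is solved here by Gaussian elimination.

For ν = 1, the result is compared with the closed-form optimal pair obtained from Pontryagin's principle (\(x''=2x\), \(x(0)=1\), \(x'(1)+x(1)=0\)). The names `solve_foc` and `exact_nu1` are hypothetical.

```python
import math


def gauss_legendre(n, T):
    """n-point Gauss-Legendre nodes and weights on (0, T) (Newton iteration)."""
    nodes, weights = [], []
    for i in range(1, n + 1):
        z = math.cos(math.pi * (i - 0.25) / (n + 0.5))
        for _ in range(100):
            p0, p1 = 1.0, z
            for k in range(2, n + 1):
                p0, p1 = p1, ((2 * k - 1) * z * p1 - (k - 1) * p0) / k
            dp = n * (z * p1 - p0) / (z * z - 1.0)
            step = p1 / dp
            z -= step
            if abs(step) < 1e-15:
                break
        nodes.append(T * (z + 1.0) / 2.0)
        weights.append(T / ((1.0 - z * z) * dp * dp))
    return nodes, weights


def legendre_orthonormal_coeffs(j, T):
    """Coefficients of t^k (k = 0..j) of the shifted Jacobi orthonormal polynomial
    with alpha = beta = 0; here 1/sqrt(h_{T,j}) = sqrt((2j + 1)/T)."""
    scale = math.sqrt((2 * j + 1) / T)
    return [scale * (-1) ** (j - k) * math.factorial(j + k)
            / (math.factorial(j - k) * math.factorial(k) ** 2 * T ** k) for k in range(j + 1)]


def solve_linear(A, b):
    """Solve A c = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def solve_foc(nu, N, T=1.0):
    """Rayleigh-Ritz sketch for the hypothetical problem
        min J = 1/2 int_0^T (x^2 + u^2) dt,  D^nu x = -x + u,  x(0) = 1,  0 < nu <= 1.
    D^nu x ~ sum_j c_j P_j, so x = 1 + sum_j c_j I^nu P_j and u = D^nu x + x."""
    lam = [legendre_orthonormal_coeffs(j, T) for j in range(N + 1)]

    def P(j, t):  # basis polynomial P_j(t)
        return sum(c * t ** k for k, c in enumerate(lam[j]))

    def phi(j, t):  # I^nu P_j(t), exact via I^nu t^k = k!/Gamma(k+nu+1) t^(k+nu)
        return sum(c * math.gamma(k + 1) / math.gamma(k + nu + 1) * t ** (k + nu)
                   for k, c in enumerate(lam[j]))

    nodes, weights = gauss_legendre(N + 1, T)
    Phi = [[phi(j, t) for j in range(N + 1)] for t in nodes]  # x = 1 + Phi c at the nodes
    Psi = [[P(j, t) + phi(j, t) for j in range(N + 1)] for t in nodes]  # u = 1 + Psi c
    # J(c) ~ 1/2 sum_r w_r (x_r^2 + u_r^2); dJ/dc_i = 0 gives the linear system A c = rhs.
    idx = range(N + 1)
    A = [[sum(w * (Phi[r][i] * Phi[r][j] + Psi[r][i] * Psi[r][j]) for r, w in enumerate(weights))
          for j in idx] for i in idx]
    rhs = [-sum(w * (Phi[r][i] + Psi[r][i]) for r, w in enumerate(weights)) for i in idx]
    c = solve_linear(A, rhs)

    def x(t):
        return 1.0 + sum(cj * phi(j, t) for j, cj in enumerate(c))

    def u(t):
        return x(t) + sum(cj * P(j, t) for j, cj in enumerate(c))

    return x, u


def exact_nu1(t):
    """Closed-form optimal pair for nu = 1, T = 1."""
    r2 = math.sqrt(2.0)
    B = -(math.cosh(r2) + r2 * math.sinh(r2)) / (r2 * math.cosh(r2) + math.sinh(r2))
    x = math.cosh(r2 * t) + B * math.sinh(r2 * t)
    u = (1.0 + r2 * B) * math.cosh(r2 * t) + (r2 + B) * math.sinh(r2 * t)
    return x, u
```

For instance, `x, u = solve_foc(1.0, 10)` returns callables whose values at t = 0.5 can be compared with `exact_nu1(0.5)`. For 0 < ν < 1, the same call (e.g. `solve_foc(0.9, 10)`) produces approximations without a closed-form reference.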

5 Numerical experiments

To demonstrate the efficiency and accuracy of the proposed scheme, we apply it to several examples and compare the results with those obtained using other schemes.

5.1 Example 1

As the first example, we consider the following fractional optimal control problem studied in [39]:

subject to the dynamic constraints

The exact solution of this problem for \(\nu=1\) is

where

Baleanu et al. [39] approximated the fractional derivative by the modified Grünwald-Letnikov approach and divided the entire time domain into several subdomains to obtain approximate solutions for the control variable \(u(t)\) and the state variable \(x(t)\). They did not obtain reasonable approximations of \(u(t)\) and \(x(t)\) unless N was increased up to 256; see Figures 1-6 in [39].

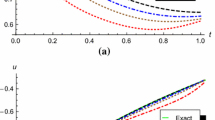

In Figures 1 and 2, we plot the absolute errors of the state variable \(x(t)\) and the control variable \(u(t)\) at \(N=10\), \(\alpha=\beta=0\) and \(\nu=1\), while Figures 3 and 4 present the approximate values of \(x(t)\) and \(u(t)\) as functions of time at \(N=8\), \(\alpha=\beta=0\) and various choices of ν, \(\nu =0.50,0.70,0.90,0.99\mbox{ and }1\). Also, in Tables 1 and 2, we list the absolute errors of \(x(t)\) and \(u(t)\) at \(\alpha=\beta=0\), \(\nu=1\) and various choices of N.

Figures 1 and 2 and Tables 1 and 2 show that a few terms of the shifted Jacobi orthonormal expansion suffice to obtain good approximations of the exact state and control variables. Figures 3 and 4 show that as ν approaches 1, the solution of the integer-order system is recovered.

5.2 Example 2

Consider the following fractional optimal control problem studied in [40]:

subject to the dynamic constraints

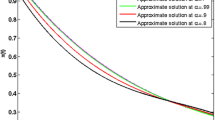

In [40], the authors combined the parameterization method with the perturbation homotopy method to solve this problem numerically. To show the advantage of our scheme over the one introduced in [40], Table 3 compares the optimal values of the cost function J obtained by our approach at \(\alpha=\beta=0\) with those reported in [40]. Figures 5 and 6 present the approximate values of the state variable \(x(t)\) and the control variable \(u(t)\) as functions of time at \(N=8\), \(\alpha=\beta=0\) and various choices of ν, \(\nu=0.50,0.70,0.90,0.99\mbox{ and }1\).

5.3 Example 3

Consider the following fractional optimal control problem:

subject to the dynamic constraints

The exact solutions of \(x(t)\) and \(u(t)\) for this problem are

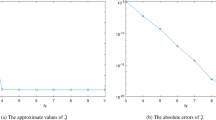

In Table 4, we list the maximum absolute errors (MAEs) of the state variable \(x(t)\) at \(\nu=1.9\) for different values of α, β and N. Table 5 (Table 6) lists the absolute errors of the state (control) variable at \(\nu=1.1\), \(\alpha=\beta=1\) (\(\nu=1.5\), \(\alpha=\beta=\frac {1}{2}\)) for various choices of N. In Figure 7 (Figure 8), we plot the absolute errors of the state (control) variable at \(-\alpha=\beta=\frac{1}{2}\), \(\nu=1.3\) (\(\alpha=-\beta=\frac {1}{2}\), \(\nu=1.7\)) with \(N=20\). Finally, in Figure 9 (Figure 10), we plot \(\log_{10}\) of the MAEs of the state (control) variable at \(\alpha=\beta=1\) (\(\alpha=\beta=0\)) for \(\nu=1.1,1.3,1.5,1.7,1.9\) and various choices of N.

References

[1] Podlubny, I: Fractional Differential Equations. Academic Press, New York (1999)

[2] Mainardi, F: Fractional Calculus Continuum Mechanics. Springer, Berlin (1997)

[3] Debnath, L: A brief historical introduction to fractional calculus. Int. J. Math. Educ. Sci. Technol. 35, 487-501 (2004)

[4] David, SA, Linares, JL, Pallone, EMJA: Fractional order calculus: historical apologia, basic concepts and some applications. Rev. Bras. Ensino Fis. 33, 4302 (2011)

[5] Saxena, RK, Mathai, AM, Haubold, HJ: On generalized fractional kinetic equations. Physica A 344, 657-664 (2004)

[6] Lewandowski, R, Chorazyczewski, B: Identification of the parameters of the Kelvin-Voigt and the Maxwell fractional models, used to modeling of viscoelastic dampers. Comput. Struct. 88, 1-17 (2010)

[7] Magin, RL: Fractional Calculus in Bioengineering. Begell House Publishers, Redding (2006)

[8] Ahmad, WM, El-Khazali, R: Fractional-order dynamical models of love. Chaos Solitons Fractals 33, 1367-1375 (2007)

[9] Picozzi, S, West, B: Fractional Langevin model of memory in financial markets. Phys. Rev. E 66, 046118 (2002)

[10] Chen, W: A speculative study of \(2/3\)-order fractional Laplacian modeling of turbulence: some thoughts and conjectures. Chaos 16, 023126 (2006)

[11] Dzielinski, A, Sierociuk, D, Sarwas, G: Some applications of fractional order calculus. Bull. Pol. Acad. Sci., Tech. Sci. 58(4), 583-592 (2010)

[12] Hilfer, R: Applications of Fractional Calculus in Physics. World Scientific, River Edge (2000)

[13] Sierociuk, D, Dzielinski, A, Sarwas, G, Petras, I, Podlubny, I, Skovranek, T: Modelling heat transfer in heterogeneous media using fractional calculus. Philos. Trans. R. Soc. Lond. A 371, 20130146 (2013)

[14] Srivastava, HM: Some applications of fractional calculus operators to certain classes of analytic and multivalent functions. J. Math. Anal. Appl. 122, 187-196 (1987)

[15] Srivastava, HM, Aouf, MK: Some applications of fractional calculus operators to certain subclasses of prestarlike functions with negative coefficients. Comput. Math. Appl. 30, 53-61 (1995)

[16] Tarasov, VE: Fractional vector calculus and fractional Maxwell’s equations. Ann. Phys. 323, 2756-2778 (2008)

[17] Doha, EH, Bhrawy, AH, Ezz-Eldien, SS: A Chebyshev spectral method based on operational matrix for initial and boundary value problems of fractional order. Comput. Math. Appl. 62, 2364-2373 (2011)

[18] Doha, EH, Bhrawy, AH, Ezz-Eldien, SS: A new Jacobi operational matrix: an application for solving fractional differential equations. Appl. Math. Model. 36, 4931-4943 (2012)

[19] Saadatmandi, A: Bernstein operational matrix of fractional derivatives and its applications. Appl. Math. Model. 38, 1365-1372 (2014)

[20] Doha, EH, Bhrawy, AH, Ezz-Eldien, SS: Numerical approximations for fractional diffusion equations via a Chebyshev spectral-tau method. Cent. Eur. J. Phys. 11, 1494-1503 (2013)

[21] Bhrawy, AH, Zaky, MA: A method based on the Jacobi tau approximation for solving multi-term time-space fractional partial differential equations. J. Comput. Phys. 281, 876-895 (2015)

[22] Bhrawy, AH, Zaky, MA, Baleanu, D: New numerical approximations for space-time fractional Burgers’ equations via a Legendre spectral-collocation method. Rom. Rep. Phys. 67(2) (2015)

[23] Bhrawy, AH, Doha, EH, Ezz-Eldien, SS, Gorder, RAV: A new Jacobi spectral collocation method for solving \(1+1\) fractional Schrödinger equations and fractional coupled Schrödinger systems. Eur. Phys. J. Plus 129, 260 (2014). doi:10.1140/epjp/i2014-14260-6

[24] Akrami, MH, Atabakzadeh, MH, Erjaee, GH: The operational matrix of fractional integration for shifted Legendre polynomials. Iran. J. Sci. Technol., Trans. A, Sci. 37, 439-444 (2013)

[25] Doha, EH, Bhrawy, AH, Ezz-Eldien, SS: An efficient Legendre spectral tau matrix formulation for solving fractional sub-diffusion and reaction sub-diffusion equations. J. Comput. Nonlinear Dyn. 10(2), 021019 (2015)

[26] Bhrawy, AH, Doha, EH, Baleanu, D, Ezz-Eldien, SS: A spectral tau algorithm based on Jacobi operational matrix for numerical solution of time fractional diffusion-wave equations. J. Comput. Phys. (2014). doi:10.1016/j.jcp.2014.03.039

[27] Bhrawy, AH, Baleanu, D, Assas, L: Efficient generalized Laguerre spectral methods for solving multi-term fractional differential equations on the half line. J. Vib. Control 20, 973-985 (2014)

[28] Djennoune, S, Bettayeb, M: Optimal synergetic control for fractional-order systems. Automatica 49, 2243-2249 (2013)

[29] Frederico, GSF, Torres, DFM: Fractional conservation laws in optimal control theory. Nonlinear Dyn. 53(3), 215-222 (2008)

[30] Jarad, F, Abdeljawad, T, Baleanu, D: Fractional variational optimal control problems with delayed arguments. Nonlinear Dyn. 62, 609-614 (2010)

[31] Guo, TL: The necessary conditions of fractional optimal control in the sense of Caputo. J. Optim. Theory Appl. 156, 115-126 (2013)

[32] Kamocki, R: On the existence of optimal solutions to fractional optimal control problems. Appl. Math. Comput. 235, 94-104 (2014)

[33] Dorville, R, Mophou, GM, Valmorin, VS: Optimal control of a nonhomogeneous Dirichlet boundary fractional diffusion equation. Comput. Math. Appl. 62, 1472-1481 (2011)

[34] Tohidi, E, Nik, HS: A Bessel collocation method for solving fractional optimal control problems. Appl. Math. Model. 39, 455-465 (2015)

[35] Pooseh, S, Almeida, R, Torres, DFM: A numerical scheme to solve fractional optimal control problems. Conf. Pap. Math. 2013, Article ID 165298 (2013). doi:10.1155/2013/165298

[36] Pooseh, S, Almeida, R, Torres, DFM: Fractional order optimal control problems with free terminal time. J. Ind. Manag. Optim. 10, 363-381 (2014)

[37] Kamocki, R: Pontryagin maximum principle for fractional ordinary optimal control problems. Math. Methods Appl. Sci. 37, 1668-1686 (2014)

[38] Ozdemir, N, Karadeniz, D, Iskender, BB: Fractional optimal control problem of a distributed system in cylindrical coordinates. Phys. Lett. A 373(2), 221-226 (2009)

[39] Baleanu, D, Defterli, O, Agrawal, OMP: A central difference numerical scheme for fractional optimal control problems. J. Vib. Control 15, 547-597 (2009)

[40] Akbarian, T, Keyanpour, M: A new approach to the numerical solution of fractional order optimal control problems. Appl. Appl. Math. 8, 523-534 (2013)

Acknowledgements

This article was funded by the Deanship of Scientific Research DSR, King Abdulaziz University, Jeddah. The authors, therefore, acknowledge with thanks DSR technical and financial support.


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

The authors have equal contributions to each part of this paper. All the authors read and approved the final manuscript.

Rights and permissions

Open Access This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly credited.

About this article

Cite this article

Doha, E.H., Bhrawy, A.H., Baleanu, D. et al. An efficient numerical scheme based on the shifted orthonormal Jacobi polynomials for solving fractional optimal control problems. Adv Differ Equ 2015, 15 (2015). https://doi.org/10.1186/s13662-014-0344-z


DOI: https://doi.org/10.1186/s13662-014-0344-z