Abstract

Background

Stroke survivors often encounter occupational therapy practitioners in rehabilitation practice settings. Occupational therapy researchers have recently begun to examine the implementation strategies that promote the use of evidence-based occupational therapy practices in stroke rehabilitation; however, the heterogeneity in how occupational therapy research is reported has led to confusion about the types of implementation strategies used in occupational therapy and their association with implementation outcomes. This review presents these strategies and corresponding outcomes using uniform language and identifies the extent to which strategy selection has been guided by theories, models, and frameworks (TMFs).

Methods

A scoping review protocol was developed to assess the breadth and depth of occupational therapy literature examining implementation strategies, outcomes, and TMFs in the stroke rehabilitation field. Five electronic databases and two peer-reviewed implementation science journals were searched to identify studies meeting the inclusion criteria. Two reviewers applied the inclusion parameters and consulted with a third reviewer to achieve consensus. The 73-item Expert Recommendations for Implementing Change (ERIC) implementation strategy taxonomy guided the synthesis of implementation strategies. The Implementation Outcomes Framework guided the analysis of measured outcomes.

Results

The initial search yielded 1219 studies, and 26 were included in the final review. A total of 48 of the 73 discrete implementation strategies were described in the included studies. The most frequently used implementation strategies were “distribute educational materials” (n = 11), “assess for readiness and identify barriers and facilitators” (n = 11), and “conduct educational outreach visits” (n = 10). “Adoption” was the most frequently measured implementation outcome, while “cost” was not measured in any included study. Eleven studies reported findings supporting the effectiveness of their implementation strategy or strategies, eleven reported inconclusive findings, and four found that their strategies did not lead to improved implementation outcomes. All twelve studies in which researchers used TMFs to guide implementation strategies reported at least partially beneficial outcomes.

Conclusions

This scoping review synthesized implementation strategies and outcomes that have been examined in occupational therapy and stroke rehabilitation. With the growth of the stroke survivor population, the occupational therapy profession must identify effective strategies that promote the use of evidence-based practices in routine stroke care and describe those strategies, as well as associated outcomes, using uniform nomenclature. Doing so could advance the occupational therapy field’s ability to draw conclusions about effective implementation strategies across diverse practice settings.


Background

Every year, millions of people worldwide experience a stroke [1, 2]. In 2016 alone, there were over 13 million new cases of stroke globally [3]. People at elevated risk for stroke include those who are 65 and older, engage in unhealthy behaviors (smoking, poor diet, and physical inactivity), have metabolic risk factors (high blood pressure, high glucose, decreased kidney function, obesity, and high cholesterol), or belong to lower socioeconomic groups [1, 4, 5]. With the rapid growth of the older adult population, the number of stroke survivors is expected to rise dramatically in the coming years, contributing to an increased global disease burden [6,7,8,9]. Stroke is one of the leading causes of long-term disability worldwide, and stroke survivors often face extensive challenges that result in self-care dependency, mobility impairments, underemployment, and cognitive deficits [1, 10]. Stroke survivors are frequently admitted to rehabilitation settings such as outpatient care centers, skilled nursing facilities, and home health agencies. Occupational therapy (OT) practitioners work with stroke survivors in these settings to address their physical, cognitive, and psychosocial challenges [10,11,12,13]. As allied health professionals, OT practitioners across the stroke rehabilitation continuum are expected to implement person-centered care plans using evidence-based assessments and interventions intended to maximize stroke survivors’ independence in daily activities and routines (e.g., dressing, bathing, mobility). Furthermore, healthcare users (e.g., stroke survivors) expect practitioners to deliver evidence-based practice and provide the highest quality occupational therapy services.

The benefits of OT in stroke rehabilitation have been well documented [14]. For instance, evidence-based OT interventions can lead to improved upper extremity movement [15, 16], enhanced cognitive performance [17], and increased safety with mobility [18]. However, as in several other allied health professions, OT practitioners can experience complex barriers when implementing evidence-based care in routine practice [19,20,21]. Specific to stroke rehabilitation, Juckett et al. [22] identified several barriers that limited OT practitioners’ use of evidence and categorized these barriers according to the Consolidated Framework for Implementation Research (CFIR) [23]. Notable barriers to evidence use included challenges adapting evidence-based programs and interventions to meet patients’ needs (i.e., adaptability), a lack of equipment and personnel (i.e., available resources), and insufficient internal communication systems (i.e., networks and communication). Although identifying these barriers is a necessary precursor to optimizing evidence implementation, Juckett et al. [22] also emphasized the urgent need for OT researchers and practitioners to identify implementation strategies that facilitate the use of evidence in stroke rehabilitation. Relatedly, Jones et al. [24] examined the literature on implementation strategies used in the rehabilitation professions: occupational therapy, physical therapy, and speech–language pathology. Although they identified some encouraging findings, these strategies are difficult to replicate given the heterogeneity in how implementation strategies and outcomes were defined and the inconsistency with which implementation strategy selection was informed by implementation theories, models, and frameworks (TMFs) [24].
Just as it is critical to select implementation strategies based on known implementation barriers, the design of implementation studies should be guided by TMFs to optimize the generalizability of findings towards both implementation and patient outcomes [25].

Implementation strategies are broadly defined as methods to enhance the adoption, use, and sustainment of evidence-based interventions, programs, or innovations [26, 27]. Historically, the terminology and definitions used to describe implementation strategies have been inconsistent and lacking in detail [28,29,30]. Over the past decade, however, these strategies have been compiled into taxonomies and frameworks to facilitate researchers’ and practitioners’ ability to conceptualize, apply, test, and describe implementation strategies used in research and practice. The Expert Recommendations for Implementing Change (ERIC) project [28] describes a taxonomy of 73 discrete implementation strategies that have been leveraged to optimize the use of evidence in routine care [29, 31]. Additionally, as part of the ERIC project, an expert panel examined the relationships among the discrete implementation strategies to identify themes and categorize strategies into clusters [29]. Table 1 depicts how discrete implementation strategies are organized into the following clusters: use evaluative and iterative strategies, provide interactive assistance, adapt and tailor to the context, develop stakeholder interrelationships, train and educate stakeholders, support clinicians, engage consumers, utilize financial strategies, and change infrastructure.

Discrete and combined implementation strategies may be considered effective if they lead to improvements in implementation outcomes. In their Implementation Outcomes Framework (IOF), Proctor et al. [32] defined eight outcomes that are often regarded as the “gold standard” outcomes in implementation research: acceptability, adoption, appropriateness, cost, feasibility, fidelity, penetration (e.g., reach), and sustainability. In other words, implementation outcomes are the effects of purposeful actions (e.g., strategies) designed to implement evidence-based or evidence-informed innovations and practices [32]. The ERIC taxonomy and IOF are examples of TMFs that provide a uniform language for characterizing implementation strategies and their associated implementation outcomes. These common nomenclatures help articulate explanations of implementation-related phenomena, leading to an enhanced understanding of the relationship between implementation strategies and implementation outcomes [33]. As such, fields that have recently adopted implementation science principles, such as occupational therapy, should make a concerted effort to frame their research methodologies using established implementation TMFs.

Although implementation research has seen significant progress in recent years, findings specific to the allied health professions (e.g., OT) are only beginning to emerge [24]. Implementation strategies such as educational meetings, audit and feedback, and clinical reminders hold promise for increasing the use of evidence by allied health professionals [24, 34]; however, there is little guidance on how these findings can be operationalized, particularly in stroke rehabilitation. This knowledge gap is particularly concerning given recent changes by the Centers for Medicare & Medicaid Services (CMS) to payment models that provide reimbursement based on the value of services delivered. In other words, rehabilitation settings are reimbursed according to the quality of services implemented (as measured by improvements in patient outcomes) rather than the quantity of services provided. This policy-level attention to patient outcomes creates an immediate need for OT practitioners to implement the highest quality interventions with patients, such as stroke survivors, to improve patient outcomes and ensure that rehabilitation services are adequately reimbursed [35, 36].

As OT practitioners aim to implement high-quality, evidence-based interventions for stroke survivors, the OT profession must have a clear understanding of the strategies that have been used to support the use of evidence and their reported outcomes. To do this, occupational therapy and rehabilitation researchers must describe implementation strategies and outcomes using commonly known TMFs, such as the ERIC taxonomy and IOF. The purpose of this review is to explore the breadth of current implementation research and identify potential gaps in how occupational therapy researchers articulate their implementation strategies and report implementation outcomes, so that these can be reproduced in other research and practice contexts. Accordingly, this scoping review will address the following objectives:

1. Synthesize the types of implementation strategies (using the ERIC taxonomy) used in occupational therapy research to support the use of evidence-based interventions and assessments in stroke rehabilitation.

2. Synthesize the types of implementation outcomes (using the IOF) that have been measured to determine the effectiveness of implementation strategies in stroke rehabilitation.

3. Identify additional implementation theories, models, and frameworks that have guided occupational therapy research in stroke rehabilitation.

4. Describe the association between implementation strategies and implementation outcomes.

Methods

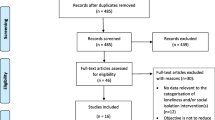

The scoping review methodology was guided by Arksey and O’Malley’s scoping review framework [37] and the Preferred Reporting Items for Systematic Reviews and Meta-Analyses Scoping Review (PRISMA-ScR) reporting recommendations [38]. The review team developed an initial study protocol (unregistered; available upon request) to address the review objectives and identify the breadth of literature examining implementation strategies and outcomes in stroke rehabilitation. The first author conducted preliminary searches to assess the available literature, allowing the team to revise the search strategy and search terms consistent with the iterative nature of scoping reviews. A detailed description of the search strategy can be found in the Appendix in Table 5.

Eligibility criteria

Studies were eligible for inclusion in the review if they (a) examined the implementation of interventions or assessments, (b) had a target population of adult (18 years and older) stroke survivors, (c) included occupational therapy practitioners, and (d) took place in a rehabilitation setting. Studies published in English between January 2000 and May 2020 were included: the start date reflects the occupational therapy profession’s call, at the turn of the millennium, for immediate improvements in the use of evidence to inform practice [39], and the end date marks when the authors began the bibliographic database search. The “rehabilitation setting” was defined as acute care hospitals and post-acute care home health agencies, skilled nursing facilities, long-term acute care hospitals, hospice, inpatient rehabilitation facilities and units, and outpatient centers. Studies were excluded if they (a) only reported on intervention effectiveness (not implementation strategy effectiveness), (b) assessed psychometrics, (c) were not available in English, (d) examined pediatric patients, (e) were published as a review or conceptual article, or (f) failed to include occupational therapy practitioners as study participants.

Information source and search strategy

The following five electronic databases were searched to identify relevant studies in the health and mental health fields: PubMed, CINAHL, Scopus, Google Scholar, and PsycINFO. Implementation Science and Implementation Science Communications were also hand searched, as they are the premier peer-reviewed journals in dissemination and implementation research. Given the diverse terminology used to describe implementation strategies in the stroke rehabilitation field, we developed an extensive list of search terms based on previous scoping reviews that assessed the breadth of implementation research in rehabilitation. The most recent search was conducted in May 2020. A sample search term combination was (“knowledge translation”[All Fields] OR “implement*”[All Fields]) AND “occupational therap*”[All Fields] AND (“stroke”[MeSH Terms] OR “stroke”) (see Additional file 1 for the complete terminology list and a database search sample). All studies identified through the search strategy were uploaded into Covidence for study selection.

Selection process

Beginning with the title/abstract screening phase, the first and third authors (JEM and LAJ) applied the inclusion and exclusion criteria to all studies identified in the initial search (agreement probability = 0.893). When these authors disagreed during title/abstract screening, the second author (JLP) decided which studies advanced to the full-text review phase. Consistent with scoping review screening methods used in the implementation science field [40], all authors reviewed a random sample (15%) of the full-text articles in the full-text screening phase to decide on study inclusion and evaluate consistency in how each author applied the inclusion/exclusion criteria. The authors achieved 100% agreement and proceeded to screen each full-text article individually.
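The reported agreement probability is most plausibly the observed proportion of title/abstract decisions on which the two screeners agreed. Assuming that definition (the formula is not stated above), the Python sketch below shows the calculation and how disagreements would be routed to the third reviewer. The function names `percent_agreement` and `conflicts` and the decision lists are hypothetical illustrations, not the review's data or the behavior of Covidence.

```python
from typing import List, Sequence


def percent_agreement(reviewer_a: Sequence[str], reviewer_b: Sequence[str]) -> float:
    """Proportion of records on which two screeners made the same decision."""
    if len(reviewer_a) != len(reviewer_b) or len(reviewer_a) == 0:
        raise ValueError("both reviewers must screen the same, non-empty set of records")
    matches = sum(a == b for a, b in zip(reviewer_a, reviewer_b))
    return matches / len(reviewer_a)


def conflicts(reviewer_a: Sequence[str], reviewer_b: Sequence[str]) -> List[int]:
    """Indices of records that would go to a third reviewer for a decision."""
    return [i for i, (a, b) in enumerate(zip(reviewer_a, reviewer_b)) if a != b]


# Hypothetical decisions for ten records (illustrative only, not study data).
reviewer_1 = ["include", "exclude", "exclude", "include", "exclude",
              "exclude", "include", "exclude", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "include", "include", "exclude",
              "exclude", "include", "exclude", "exclude", "exclude"]

print(percent_agreement(reviewer_1, reviewer_2))  # 0.9
print(conflicts(reviewer_1, reviewer_2))          # [2]
```

Observed agreement of this kind is not corrected for chance; a chance-corrected statistic such as Cohen's kappa would require an additional calculation.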

Data charting—extraction process

An adapted version of Arksey and O’Malley’s data charting form was created to extract variables of interest from each included study. In the data extraction phase, all authors extracted data from another random 15% of included studies to pilot test the charting form and confirm the final variables to be extracted. Authors met biweekly to share progress on independent data extraction and compare the details of data extracted across authors. Extracted variables included study design, population, setting, guiding frameworks, and a description of the intervention/assessment being implemented; however, the review’s primary aim was to extract information on implementation strategies and associated implementation outcomes.

To do this, a two-step process was used to extract data on implementation strategies and outcomes. In Step 1, team members charted the specific terminology used to describe strategies or outcomes in each study. In Step 2, the review team used a directed content analysis approach to map this charted terminology to the ERIC taxonomy [28] and the IOF [32]. For instance, an implementation strategy that authors initially described as “holding in-services with clinicians” was “translated” to “conduct educational meetings.” Likewise, implementation outcomes initially described as “adherence” were converted to “fidelity.” This translation process was guided by the descriptions of implementation strategies listed in the original 2015 ERIC project publication (and the ERIC ancillary material) and by the seminal IOF publication from 2011. The extracted and translated data were entered into Microsoft Excel (Microsoft 365).
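The two-step translation can be pictured as a lookup from study-specific wording to standardized labels. The Python sketch below is a hypothetical illustration: the dictionaries contain only the two examples given in the text, the function name `translate` and the term "peer mentoring" are ours, and in the review itself the mapping was a team judgment guided by the ERIC and IOF publications rather than an automated step.

```python
from typing import Dict, List, Tuple

# Hypothetical lookup tables; each holds only the example named in the text.
ERIC_MAP: Dict[str, str] = {
    "holding in-services with clinicians": "conduct educational meetings",
}
IOF_MAP: Dict[str, str] = {
    "adherence": "fidelity",
}


def translate(charted_terms: List[str], mapping: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Step 2: map Step 1 charted terms to taxonomy labels.

    Returns (mapped labels, terms with no agreed translation).
    """
    mapped: List[str] = []
    unmapped: List[str] = []
    for term in charted_terms:
        key = term.strip().lower()  # normalize case and surrounding whitespace
        if key in mapping:
            mapped.append(mapping[key])
        else:
            unmapped.append(term)  # set aside for discussion among reviewers
    return mapped, unmapped


print(translate(["Holding in-services with clinicians", "peer mentoring"], ERIC_MAP))
# (['conduct educational meetings'], ['peer mentoring'])
print(translate(["Adherence"], IOF_MAP))
# (['fidelity'], [])
```

Keeping unmatched terms in a separate list, rather than forcing a label, is one way to surface ambiguous descriptions for the team's regular comparison meetings.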

Synthesis process

The authors followed Levac et al.’s [41] recommendations for advancing scoping methodology to synthesize data. One author (JEM) cleaned the data (e.g., spell check, cell formatting) to ensure that Excel accurately and adequately performed operations, calculations, and analyses (e.g., creating pivot tables, charts). As scoping reviews do not seek to aggregate findings from different studies or weigh evidence [37, 41], only descriptive analyses (e.g., frequencies, percentages) were conducted from the extracted data to report the characteristics of the included studies and thematic clusters. The descriptive data and results of the directed content analysis were organized into tables using themes to articulate the review’s findings that addressed the research objectives.
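Descriptive frequencies of this kind reduce to counting distinct studies per strategy and dividing by the number of included studies. The Python sketch below is a stand-in for the Excel pivot tables, under the assumption that each charted row pairs one study with one ERIC strategy; the function name `strategy_frequencies` and the three-study example are hypothetical. The final line applies the same arithmetic to a count reported in the Results (11 of 26 studies).

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def strategy_frequencies(
    rows: Iterable[Tuple[str, str]], total_studies: int
) -> Dict[str, Tuple[int, float]]:
    """Count distinct studies per ERIC strategy and the % of all included studies."""
    studies_by_strategy: Dict[str, Set[str]] = defaultdict(set)
    for study_id, strategy in rows:
        studies_by_strategy[strategy].add(study_id)  # each study counts once per strategy
    return {
        strategy: (len(ids), round(100 * len(ids) / total_studies, 1))
        for strategy, ids in studies_by_strategy.items()
    }


# Hypothetical charting rows for three studies (not data from this review).
rows = [
    ("study_A", "distribute educational materials"),
    ("study_A", "conduct ongoing training"),
    ("study_B", "distribute educational materials"),
    ("study_B", "distribute educational materials"),  # duplicate entry, counted once
    ("study_C", "audit and provide feedback"),
]
print(strategy_frequencies(rows, total_studies=3))
# {'distribute educational materials': (2, 66.7), 'conduct ongoing training': (1, 33.3), 'audit and provide feedback': (1, 33.3)}

# Same arithmetic for a reported count: 11 of 26 included studies.
print(round(100 * 11 / 26, 1))  # 42.3
```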

Results

The search yielded 1219 articles. After excluding duplicates, 868 titles and abstracts were reviewed for inclusion. Among those, 49 articles progressed to full-text review, and 26 met the criteria for data extraction, as shown in Fig. 1.

Fig. 1. PRISMA flow diagram [42] outlining the review’s selection process

Study characteristics

Table 2 describes the studies’ characteristics. The studies were published between 2005 and 2020, all within the last 10 years except one [43]. Studies were most commonly set in Australia (27%) and most commonly conducted in inpatient rehabilitation settings (65%). Two studies targeted practitioners in any healthcare setting by implementing an education-related implementation strategy (e.g., conduct ongoing training) either at an offsite location [44] or in a nonphysical environment [45]; none of the studies was conducted in a long-term acute care hospital (LTACH) or hospice setting. The most common research design was pre–post (50%), followed by process evaluation (14%). Quantitative methods were used most frequently (69%), followed by mixed-method (19%) and qualitative (12%) approaches. Although the studies primarily implemented stroke-related interventions (92%), these categories were not mutually exclusive, as some studies implemented a combination of an intervention (e.g., TagTrainer), an assessment (e.g., Canadian Occupational Performance Measure (COPM)), or clinical knowledge (e.g., upper limb poststroke impairments).

Implementation strategies

The studies included in this review collectively used 48 of the 73 discrete strategies in the ERIC taxonomy. The number of discrete implementation strategies per study ranged from 1 to 21, with a median of four. The two most commonly used implementation strategies, each applied in 42% of studies, were distribute educational materials [44, 46,47,48,49,50,51,52,53,54,55] and assess for readiness and identify barriers and facilitators [47,48,49, 52, 56,57,58,59,60,61,62]. The latter strategy implies two separate actions; however, only two studies [48, 49] both assessed readiness “and” identified barriers and facilitators. Other frequently used discrete implementation strategies included conduct educational outreach visits, conduct ongoing training, audit and provide feedback, and develop educational materials. Of all studies included in this review, 88% used at least one of these six primary strategies.

Thematic clusters of implementation strategies

Waltz et al. [29] grouped the ERIC strategies into nine thematic clusters (Table 1), which allowed exploration of another dimension of the implementation strategies. Table 1 summarizes how the implementation strategies were organized into thematic clusters. Twenty-three of the 26 studies [43, 44, 46,47,48,49,50,51,52,53,54,55,56,57,58,59,60, 62,63,64,65,66,67] implemented at least one discrete implementation strategy in the train and educate stakeholders cluster, followed by 17 of 26 studies that examined strategies in the use evaluative and iterative strategies cluster [47,48,49,50, 52, 55,56,57,58,59,60,61,62, 65,66,67,68]. The train and educate stakeholders cluster comprises four of the six most used implementation strategies: conduct ongoing training, develop educational materials, conduct educational outreach visits, and distribute educational materials. The other two commonly used implementation strategies, assess for readiness and identify barriers and facilitators and audit and provide feedback, belong to the use evaluative and iterative strategies cluster.

Within the change infrastructure cluster, one study used the implementation strategy mandate change [50], and another used change physical structure and equipment [65]. Within the utilize financial strategies cluster, one study [50] used two implementation strategies: alter incentive/allowance structure and fund and contract for the clinical innovation. The included studies applied the fewest strategies from this cluster: only two of the nine possible implementation strategies were used, the lowest percentage (1%) among the thematic clusters.

Implementation outcomes

Table 3 summarizes the measurements and implementation outcomes used in each study. The number of implementation outcomes measured per study ranged from 1 to 4. Studies most frequently included two implementation outcomes, and adoption was the most frequently measured outcome, assessed in 81% of studies [43,44,45, 48,49,50,51,52,53,54,55, 57, 59,60,61,62,63,64, 66,67,68]. Fidelity followed and was measured in 42% of studies [43, 47, 52, 53, 56,57,58, 60, 63, 65, 68]. Seven of the eight implementation outcomes were measured in at least one study; implementation cost was the only outcome not addressed in any of the studies. Moreover, Moore et al.’s [50] study was the only one to measure penetration and sustainability. The studies used various approaches to measuring implementation outcomes, as shown in Table 3. For example, 11 of 20 studies measuring adoption used administrative data, observations, or qualitative or semi-structured interviews [43, 52, 53, 55, 57, 59, 60, 62,63,64, 66, 68].

Theories, models, and frameworks

Notably, of the 26 included articles, 12 explicitly stated using a TMF to guide the selection and application of implementation strategies (Table 4). The most common TMF employed among the articles (n = 5) was the Knowledge-to-Action Process framework [44, 48,49,50, 61], categorized as a process model. Classic (change) theories were the next most commonly applied category of TMFs, including the Behavior Change Wheel [47, 57, 60] (n = 3) and the Theory of Planned Behavior [44] (n = 1). No implementation evaluation frameworks (e.g., Reach, Efficacy, Adoption, Implementation, Maintenance (RE-AIM) or the Implementation Outcomes Framework) were used. A few studies described the components of their implementation strategies following reporting guidelines. Two studies [47, 64] used the Template for Intervention Description and Replication (TIDieR) checklist. One study [47] used the Standards for Quality Improvement Reporting Excellence (SQUIRE). Moreover, one study [57] followed the Standards for Reporting Implementation Studies (StaRI) checklist but did not explicitly mention an implementation framework to guide study design.

Association between implementation strategies and implementation outcomes

The findings from studies examining the effect of implementation strategies on implementation outcomes were generally mixed. While 42% of studies used strategies that led to improved implementation outcomes, 50% reported inconclusive results. For instance, McEwen et al. [51] developed a multifaceted implementation strategy that involved conducting educational meetings, providing ongoing education, appointing evidence champions, distributing educational materials, and reminding clinicians to implement evidence in practice. These strategies led to increased adoption of the target evidence-based practice (EBP), the Cognitive Orientation to daily Occupational Performance (CO-OP) treatment approach, suggesting that this multifaceted strategy may facilitate EBP implementation among OT practitioners. In contrast, Salbach et al. [48] examined the impact on stroke guideline adoption of an implementation strategy consisting of educational meetings, evidence champions, educational materials, local funding, and implementation barrier identification. These strategies led to increased adoption of only two of the 18 recommendations described in the stroke guidelines. Levac et al. [64] used a combination of educational meetings, dynamic training, reminders, and expert consultation to increase the use of virtual reality therapy with stroke survivors, yet found that these combined strategies did not increase virtual reality adoption among practitioners serving stroke survivors.

Discussion

This scoping review is the first to examine implementation strategy use, implementation outcome measurement, and the application of theories, models, and frameworks in stroke rehabilitation and occupational therapy. Given that implementation science is still nascent in occupational therapy, this review’s purpose was to synthesize implementation strategies and outcomes using uniform language, as presented by the ERIC taxonomy and the IOF, to clarify the types of strategies being used and outcomes measured in the occupational therapy and stroke rehabilitation fields. Importantly, this review also calls attention to the value of applying theories, models, and frameworks to guide implementation strategy selection and implementation outcome measurement.

Operationalizing implementation strategies and outcomes is essential for reproducibility in subsequent research studies and in practice. Without a clear language for defining strategies and reported outcomes, stroke rehabilitation and occupational therapy researchers risk contributing to what is currently referred to as the “secondary” research-to-practice gap. This secondary gap is emerging because empirical findings from implementation science have seldom been integrated into clinical practice [70]. For instance, the present review found that the distribution of educational materials was one of the most commonly used implementation strategies, yet it is well established that educational materials alone are typically insufficient for changing clinical practice behaviors [71]. One potential reason that implementation science discoveries are rarely integrated into real-world practice is that implementation strategies and outcomes are not consistently named or described, which makes these strategies difficult to replicate in real-world contexts. Using the ERIC taxonomy and IOF to guide the description of strategies and reported outcomes is a logical first step toward replicating effective strategies for improving implementation outcomes.

Further, replication can be enhanced by describing strategies according to specification guidelines. Four studies in this review described implementation strategies using reporting standards such as the Template for Intervention Description and Replication (TIDieR) checklist, the Standards for Quality Improvement Reporting Excellence (SQUIRE), and Standards for Reporting Implementation studies (StaRI). Though the use of these reporting standards is promising for optimizing replication, Proctor et al. [27] also provide recommendations for how to specify implementation strategies designed to improve specific implementation outcomes. These recommendations include clearly naming the implementation strategy, describing it, and specifying the strategy according to the following parameters: actor, action, action target, temporality, dose, outcome affected, and justification. These recommendations have been applied in the health and human services body of literature [72, 73], but their application remains scarce in the fields of rehabilitation and occupational therapy [74].
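Proctor et al.’s parameters lend themselves to a structured record that makes unspecified elements easy to spot before reporting. The Python sketch below is a hypothetical illustration: the class `StrategySpecification`, its `missing_parameters` method, and the example values are ours, not a published template, and the example strategy is only loosely modeled on the educational meetings described in this review.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class StrategySpecification:
    """Name, description, and the specification parameters listed by Proctor et al."""
    name: str
    description: str
    actor: str
    action: str
    action_target: str
    temporality: str
    dose: str
    outcome_affected: str
    justification: str

    def missing_parameters(self) -> List[str]:
        """Return the fields left blank, i.e., not yet specified."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Hypothetical, partially specified example (values are illustrative only).
spec = StrategySpecification(
    name="conduct educational meetings",
    description="In-service sessions on a stroke rehabilitation guideline recommendation",
    actor="occupational therapy clinical educator",
    action="deliver interactive in-service sessions",
    action_target="practitioners' knowledge of the recommendation",
    temporality="before the implementation period begins",
    dose="",
    outcome_affected="adoption",
    justification="",
)
print(spec.missing_parameters())  # ['dose', 'justification']
```

A non-empty `missing_parameters()` list signals that the strategy is not yet specified in enough detail for replication; the completed record could then be reported as a table row.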

One noteworthy finding from this review was the variation with which studies were guided by implementation TMFs. Fewer than half of the studies (n = 12) were informed by TMFs drawn from the implementation literature. The Knowledge-to-Action Process framework was applied in five studies, followed by the Behavior Change Wheel and Normalization Process Theory, represented in three and two studies, respectively. The lack of TMF application may also explain some of the variability in implementation strategy effectiveness. Interestingly, all 12 studies with TMF underpinnings found either mixed or beneficial outcomes as a result of their implementation strategies.

Conversely, the three studies that found no effect of their strategies on implementation outcomes were not informed by any implementation TMF. While this subset of studies is too small to draw definitive conclusions, the importance of using TMFs to guide implementation studies (to identify the determinants that may influence implementation, understand relationships between constructs, and inform implementation project evaluations) has been well established and endorsed by leading implementation scientists [25, 69, 75]. Despite their recognized importance, TMFs are often applied haphazardly in implementation projects, and the selection of appropriate TMFs is complicated by the proliferation of TMFs in the implementation literature [33]. While tools (e.g., dissemination-implementation.org/content/select.aspx) are available to help researchers select TMFs, occupational therapy researchers in stroke rehabilitation who are new to implementation science may be unfamiliar with such tools and resources. For instance, Birken et al. developed the Theory, Model, and Framework Comparison and Selection Tool (T-CaST), which assesses the “fit” of different TMFs with implementation projects in four areas: usability, testability, applicability, and acceptability [25]. Similarly, TMF experts have developed a list of 10 recommendations for selecting and applying TMFs and published specific case examples of how one TMF, the Exploration, Preparation, Implementation, Sustainment framework, has guided several implementation studies and projects [76].

In addition to synthesizing the implementation strategies and outcomes examined in the stroke rehabilitation literature, this review corroborates other reviews in the rehabilitation field that have found implementation strategies to be of mixed effectiveness. A Cochrane review by Cahill et al. [77] was unable to determine the effect of implementation interventions on healthcare provider adherence to evidence-based practice in stroke rehabilitation because of limited evidence and lower-quality study designs. However, one encouraging finding from the present review, specific to the occupational therapy field, was the frequent use of the strategy “assess for readiness and identify barriers and facilitators.” Assessing barriers and facilitators is a central precursor to selecting implementation strategies that effectively facilitate the use of evidence in practice [78], and strategies that are not responsive to these barriers and facilitators frequently fail to produce sufficient and sustainable practice improvements [78, 79].

Although identifying implementation barriers and facilitators is of paramount importance in implementation studies, the processes researchers use to select implementation strategies that address these barriers and facilitators are often unclear. Vratsistas-Curto et al. [47], for instance, assessed determinants of implementation at the start of their study and mapped them to the Theoretical Domains Framework and Behavior Change Wheel to inform strategy selection. This exemplary use of TMFs can strengthen the rigor of implementation strategy selection and may improve strategy effectiveness. However, not all implementation studies are informed by underlying TMFs, which calls into question the rationale for using specific strategies in certain contexts. Going forward, as interest in implementation grows within stroke rehabilitation and occupational therapy, researchers must transparently explain how and why they selected their implementation strategies. Without this transparency, occupational therapy stakeholders and other rehabilitation professionals may continue to use implementation strategies without systematically matching them to identified barriers and facilitators. To facilitate strategy selection, Waltz et al. [78] gathered expert opinion data and developed a tool that matches implementation barriers to implementation strategies. The tool draws its language from the Consolidated Framework for Implementation Research (CFIR) [23] and matches identified CFIR barriers to strategies in the ERIC taxonomy. The CFIR-ERIC matching tool may be a viable option for occupational therapy and stroke rehabilitation researchers who understand the determinants of evidence implementation but need guidance in selecting relevant implementation strategies.
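The matching logic behind a barrier-to-strategy tool of this kind can be sketched in a few lines of Python. This is a minimal, hypothetical illustration rather than the published CFIR-ERIC tool: the barrier names are CFIR constructs and the strategy names are ERIC strategies, but the endorsement percentages are invented placeholders, and summing endorsements across the selected barriers is an assumed aggregation rule.

```python
from collections import defaultdict

# HYPOTHETICAL endorsement table: for each CFIR barrier, an invented
# percentage of experts endorsing each ERIC strategy. These numbers are
# placeholders, not values from the published CFIR-ERIC matching tool.
ENDORSEMENTS: dict[str, dict[str, float]] = {
    "Knowledge & Beliefs about the Intervention": {
        "Conduct educational meetings": 60.0,
        "Distribute educational materials": 40.0,
        "Identify and prepare champions": 30.0,
    },
    "Available Resources": {
        "Access new funding": 70.0,
        "Identify and prepare champions": 20.0,
    },
    "Access to Knowledge & Information": {
        "Distribute educational materials": 55.0,
        "Conduct educational outreach visits": 45.0,
    },
}


def rank_strategies(barriers: list[str], table=ENDORSEMENTS) -> list[tuple[str, float]]:
    """Sum each strategy's endorsement across the selected barriers (an
    assumed aggregation rule) and return (strategy, total) pairs, highest first."""
    totals: dict[str, float] = defaultdict(float)
    for barrier in barriers:
        # Unknown barriers contribute nothing rather than raising an error.
        for strategy, pct in table.get(barrier, {}).items():
            totals[strategy] += pct
    # Sort by cumulative endorsement (descending), then by name for stable ties.
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    selected = ["Knowledge & Beliefs about the Intervention", "Access to Knowledge & Information"]
    for strategy, score in rank_strategies(selected):
        print(f"{score:6.1f}  {strategy}")
```

Under these assumptions, selecting the two knowledge-related barriers ranks “Distribute educational materials” first (95 cumulative points) because it is endorsed for both barriers; a research team would still need to judge each ranked strategy against its local context.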

The other commonly examined implementation strategies identified in this review involved educational meetings and materials. Eleven studies used one or more of these educational techniques to facilitate the implementation of evidence into practice; however, none of the studies examining educational strategies specified those strategies as recommended by reporting guidelines [27]. This lack of strategy specification may be attributable to the interdisciplinary divide in implementation nomenclature. Included studies often examined “knowledge translation interventions” or “knowledge translation strategies” (e.g., [50, 64]), and no studies specifically referenced the ERIC taxonomy or IOF. Across the rehabilitation field, “knowledge translation” is commonly used as a synonym for moving research into practice and has been widely accepted since 2000 [24, 80, 81]. While international rehabilitation leaders have articulated distinctions between “knowledge translation” and “implementation science,” considerable work remains to disseminate these distinctions to the broader rehabilitation audience [80, 81].
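One way to make strategy specification routine is to record each strategy as a structured entry covering the dimensions recommended by Proctor et al. [27]: actor, action, action target, temporality, dose, implementation outcome affected, and justification. The Python sketch below is hypothetical (the class, its field names, and the example workshop are illustrative and not drawn from any included study) and simply flags which dimensions a study report leaves unstated.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class StrategySpecification:
    """HYPOTHETICAL record of one implementation strategy, described along the
    dimensions recommended by Proctor et al. (2013). None marks an item the
    study report did not state."""
    name: str  # e.g., an ERIC strategy label
    actor: Optional[str] = None
    action: Optional[str] = None
    action_target: Optional[str] = None
    temporality: Optional[str] = None
    dose: Optional[str] = None
    outcome_affected: Optional[str] = None
    justification: Optional[str] = None

    def missing(self) -> list[str]:
        """Return the names of the specification dimensions left unreported."""
        return [f.name for f in fields(self)
                if f.name != "name" and getattr(self, f.name) is None]


# Illustrative example only: an educational meeting reported without its
# timing, targeted implementation outcome, or rationale.
workshop = StrategySpecification(
    name="Conduct educational meetings",
    actor="External trainer",
    action="Half-day workshop on a stroke practice guideline",
    action_target="Occupational therapists' knowledge",
    dose="One 4-hour session",
)
print(workshop.missing())  # ['temporality', 'outcome_affected', 'justification']
```

A checklist of this shape could also support reviews: coding every included study's strategies into the same fields makes gaps in reporting directly comparable across studies.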

Additional research is also needed to evaluate the cost of implementing particular interventions in practice. Cost was the only implementation outcome not evaluated in any of the studies included in this review, which points to a major knowledge gap in both the implementation science and stroke rehabilitation fields. Given that a lack of funds to cover implementation costs is a substantial barrier to EBP implementation in stroke rehabilitation [22], we must understand both the costs associated with evidence-based interventions, programs, and assessments and the costs of using implementation strategies in stroke rehabilitation settings. One option for assessing these costs is to conduct economic evaluations; for instance, Howard-Wilsher et al. [82] published a systematic overview of economic evaluations of health-related rehabilitation, including occupational therapy. An economic evaluation compares two or more interventions and examines both the costs and the consequences of the alternatives [82, 83]. Economic evaluations most commonly take the form of cost-effectiveness analysis (CEA) but can also be cost-utility, cost-benefit, cost-minimization, or cost-identification analyses [84, 85]. Consideration of resource allocation and costs is critically needed to make clinical and policy decisions about occupational therapy interventions [82] and should be a focus of future implementation work in occupational therapy and rehabilitation.
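A cost-effectiveness analysis typically summarizes the comparison between two alternatives as an incremental cost-effectiveness ratio (ICER): the difference in cost divided by the difference in effect, ICER = (C_new - C_comparator) / (E_new - E_comparator). The Python sketch below computes this ratio; the function name and all figures are hypothetical and are not taken from any included study. Using guideline adherence as the effect measure is likewise an assumption chosen to fit an implementation context.

```python
def icer(cost_new: float, effect_new: float,
         cost_comparator: float, effect_comparator: float) -> float:
    """Incremental cost-effectiveness ratio: additional cost per additional
    unit of effect when moving from the comparator to the new option."""
    delta_effect = effect_new - effect_comparator
    if delta_effect == 0:
        # With no difference in effect the ratio is undefined; a
        # cost-minimization comparison would be more appropriate.
        raise ValueError("Effects are equal; the ICER is undefined.")
    return (cost_new - cost_comparator) / delta_effect


# HYPOTHETICAL figures: an implementation strategy costing 12,000 per site
# raises guideline adherence from 40% to 55%, compared with usual practice
# (no added cost). Effect is measured in percentage points of adherence.
ratio = icer(cost_new=12_000, effect_new=55, cost_comparator=0, effect_comparator=40)
print(f"{ratio:.0f} per percentage-point gain in adherence")  # 800 per percentage-point gain in adherence
```

A cost-utility analysis uses the same ratio with quality-adjusted life years as the effect measure. A negative ratio can mean either that the new option dominates (cheaper and more effective) or that it is dominated, so it should be interpreted alongside the signs of both differences rather than ranked directly.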

Limitations

While the present scoping review adds novel contributions to implementation science, stroke rehabilitation, and occupational therapy, it has several limitations. First, scoping review methodologies have been critiqued for not requiring quality and bias assessments of included articles [41, 86]. Because this review focused on synthesizing the breadth of implementation strategies and outcomes measured in a field (i.e., occupational therapy) that is newer to implementation science, the review team deemed critical appraisals and bias assessments “not applicable,” a decision supported by current PRISMA-ScR reporting guidelines. Second, although a comprehensive search was conducted to capture all relevant literature, the review team could have further strengthened the search strategy by consulting an institutional librarian or performing backward/forward citation searching to maximize search sensitivity. Third, the search was restricted to studies that included occupational therapy as the primary service provider of interest; thus, most of the studies used implementation strategies at the provider level. The authors recognize that effectively implementing best practices often requires organizational- and system-level changes; therefore, the findings do not represent strategies and outcomes applicable to stroke rehabilitation clinics and the broader healthcare system. Lastly, this scoping review yielded a relatively small number of studies, so conclusions should be interpreted in light of the limited available evidence.

Conclusion

This scoping review revealed the occupational therapy profession’s use of implementation strategies and measurement of implementation outcomes in stroke rehabilitation. The fields of occupational therapy and stroke rehabilitation have begun to create a small body of implementation science literature; however, occupational therapy researchers and practitioners must continue to develop and test implementation strategies to move evidence into practice. Moreover, implementation strategies and outcomes should be described using uniform language that allows for comparisons across studies. The application of this uniform language—such as the language in the ERIC and IOF—will streamline the synthesis of knowledge (e.g., systematic reviews, meta-analyses) that will point researchers and practitioners to effective strategies that promote the use of evidence in practice. Without consistent nomenclature, it may continue to prove challenging to understand the key components of implementation strategies that are linked to improved implementation outcomes and ultimately improved care. By applying the ERIC taxonomy and IOF and using TMFs to guide study activities, occupational therapy and stroke rehabilitation researchers can advance both the fields of rehabilitation and implementation science.

Availability of data and materials

Data analyzed in this review are available in the supplemental file (Additional file 2). The review protocol and data collection forms are available from the corresponding author on request.

Abbreviations

- OT: Occupational therapy
- CFIR: Consolidated Framework for Implementation Research
- ERIC: Expert Recommendations for Implementing Change
- IOF: Implementation Outcomes Framework
- CMS: Centers for Medicare & Medicaid Services
- PRISMA-ScR: Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews
- CO-OP: Cognitive Orientation to daily Occupational Performance
- TMF: Theory, model, or framework
- RE-AIM: Reach, Efficacy, Adoption, Implementation, and Maintenance
- PRISM: Practical, Robust Implementation and Sustainability Model
- iPARIHS: Integrated Promoting Action on Research Implementation in Health Services
- EPIS: Exploration, Adoption/Preparation, Implementation, Sustainment
- TIDieR: Template for Intervention Description and Replication
- SQUIRE: Standards for Quality Improvement Reporting Excellence
- STaRI: Standards for Reporting Implementation Studies
- JBI: Joanna Briggs Institute

References

Virani SS, Alonso A, Benjamin EJ, Bittencourt MS, Callaway CW, Carson AP, Chamberlain AM, Chang AR, Cheng S, Delling FN, et al. Heart Disease and Stroke Statistics—2020 Update: A Report From the American Heart Association. Circulation. 2020;141(9):e139–e596.

Kim J, Thayabaranathan T, Donnan GA, Howard G, Howard VJ, Rothwell PM, Feigin V, Norrving B, Owolabi M, Pandian J, et al. Global Stroke Statistics 2019. Int J Stroke. 2020;15(8):819–38.

GBD 2016 Stroke Collaborators. Global, regional, and national burden of stroke, 1990–2016: a systematic analysis for the Global Burden of Disease Study 2016. Lancet Neurol. 2019;18(5):439–58.

Mendis S, Puska P, Norrving B, editors. Global atlas on cardiovascular disease prevention and control. Geneva: World Health Organization; 2011. p. 155.

Lindsay MP, Norrving B, Sacco RL, Brainin M, Hacke W, Martins S, Pandian J, Feigin V. World Stroke Organization (WSO): Global Stroke Fact Sheet 2019. Int J Stroke. 2019;14(8):806–17.

Gagliardi AR, Armstrong MJ, Bernhardsson S, Fleuren M, Pardo-Hernandez H, Vernooij RWM, Willson M, Brereton L, Lockwood C, Sami Amer Y. The Clinician Guideline Determinants Questionnaire was developed and validated to support tailored implementation planning. J Clin Epidemiol. 2019;113:129–36.

Vos T, Lim SS, Abbafati C, Abbas KM, Abbasi M, Abbasifard M, Abbasi-Kangevari M, Abbastabar H, Abd-Allah F, Abdelalim A, et al. Global burden of 369 diseases and injuries in 204 countries and territories, 1990–2019: a systematic analysis for the Global Burden of Disease Study 2019. Lancet. 2020;396(10258):1204–22.

Benjamin EJ, Virani SS, Callaway CW, Chamberlain AM, Chang AR, Cheng S, Chiuve SE, Cushman M, Delling FN, Deo R, et al. Heart Disease and Stroke Statistics-2018 Update: A Report From the American Heart Association. Circulation. 2018;137(12):e67–e492.

Gorelick PB. The global burden of stroke: persistent and disabling. Lancet Neurol. 2019;18(5):417–18.

Nilsen D, Geller D. The role of occupational therapy in stroke rehabilitation [fact sheet]. American Occupational Therapy Association; 2015.

National Institute of Neurological Disorders and Stroke (NINDS). Post-stroke rehabilitation fact sheet [NIH Publication 20-NS-4846]. Bethesda; 2020.

World Health Organization. Access to rehabilitation in primary health care: an ongoing challenge. Geneva: World Health Organization; 2018.

American Occupational Therapy Association. Occupational therapy practice framework: domain and process (4th ed.). Am J Occup Ther. 2020;74(Suppl 2):7412410010p1–7412410010p87.

Govender P, Kalra L. Benefits of occupational therapy in stroke rehabilitation. Expert Rev Neurother. 2007;7:1013.

Hatem SM, Saussez G, Della Faille M, Prist V, Zhang X, Dispa D, Bleyenheuft Y. Rehabilitation of Motor Function after Stroke: A Multiple Systematic Review Focused on Techniques to Stimulate Upper Extremity Recovery. Front Hum Neurosci. 2016.

Jolliffe L, Lannin NA, Cadilhac DA, Hoffmann T. Systematic review of clinical practice guidelines to identify recommendations for rehabilitation after stroke and other acquired brain injuries. BMJ Open. 2018;8(2):e018791.

Gillen G, Nilsen DM, Attridge J, Banakos E, Morgan M, Winterbottom L, et al. Effectiveness of interventions to improve occupational performance of people with cognitive impairments after stroke: an evidence-based review. Am J Occup Ther. 2015;69(1):6901180040p1–9.

Wolf TJ, Chuh A, Floyd T, McInnis K, Williams E. Effectiveness of occupation-based interventions to improve areas of occupation and social participation after stroke: an evidence-based review. Am J Occup Ther. 2015;69(1):6901180060 p1–11.

Samuelsson K, Wressle E. Turning evidence into practice: barriers to research use among occupational therapists. Br J Occup Ther. 2015;78(3):175–81.

Institute of Medicine (US) Committee on Quality of Health Care in America. Crossing the quality chasm: a new health system for the 21st century. Washington, DC: The National Academies Press; 2001. p. 360.

Gustafsson L, Molineux M, Bennett S. Contemporary occupational therapy practice: the challenges of being evidence based and philosophically congruent. Aust Occup Ther J. 2014;61(2):121–3.

Juckett LA, Wengerd LR, Faieta J, Griffin CE. Evidence-based practice implementation in stroke rehabilitation: a scoping review of barriers and facilitators. Am J Occup Ther. 2020;74(1):7401205050 p1-p14.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):50.

Jones CA, Roop SC, Pohar SL, Albrecht L, Scott SD. Translating knowledge in rehabilitation: systematic review. Phys Ther. 2015;95(4):663–77.

Birken SA, Rohweder CL, Powell BJ, Shea CM, Scott J, Leeman J, Grewe ME, Alexis Kirk M, Damschroder L, Aldridge WA, et al. T-CaST: an implementation theory comparison and selection tool. Implement Sci. 2018;13(1).

Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An introduction to implementation science for the non-specialist. BMC Psychol. 2015;3(1):32.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8(1):139.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10(1):21.

Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, Smith JL, Proctor EK, Kirchner JE. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci. 2015;10(1).

Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. 2012;69(2):123–57.

Bunger AC, Powell BJ, Robertson HA, Macdowell H, Birken SA, Shea C. Tracking implementation strategies: a description of a practical approach and early findings. Health Res Policy Syst. 2017;15(1).

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health Ment Health Serv Res. 2011;38(2):65–76.

Birken SA, Powell BJ, Shea CM, Haines ER, Alexis Kirk M, Leeman J, Rohweder C, Damschroder L, Presseau J. Criteria for selecting implementation science theories and frameworks: results from an international survey. Implement Sci. 2017;12(1).

Cohen AJ, Rudolph JL, Thomas KS, Archambault E, Bowman MM, Going C, et al. Food insecurity among veterans: resources to screen and intervene. Fed Pract. 2020;37(1):16–23.

Centers for Medicare & Medicaid Services. A Medicare Learning Network (MLN) event: overview of the Patient-Driven Groupings Model (PDGM). February 2019.

Centers for Medicare & Medicaid Services. A Medicare Learning Network (MLN) event: SNF PPS: Patient Driven Payment Model. 2018.

Arksey H, O'Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19–32.

Tricco AC, Lillie E, Zarin W, O'Brien KK, Colquhoun H, Levac D, et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467–73.

Holm MB. Our Mandate for the New Millennium: Evidence-Based Practice. Am J Occup Ther. 2000;54(6):575–85.

Davis R, D’Lima D. Building capacity in dissemination and implementation science: a systematic review of the academic literature on teaching and training initiatives. Implement Sci. 2020;15(1).

Levac D, Colquhoun H, O'Brien KK. Scoping studies: advancing the methodology. Implement Sci. 2010;5(1):69.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, Shamseer L, Tetzlaff JM, Akl EA, Brennan SE, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71.

McEwen S, Szurek K, Polatajko HJ, Rappolt S. Rehabilitation education program for stroke (REPS): learning and practice outcomes. J Contin Educ Heal Prof. 2005;25(2):105–15.

Doyle SD, Bennett S. Feasibility and effect of a professional education workshop for occupational therapists' management of upper-limb poststroke sensory impairment. Am J Occup Ther. 2014;68(3):e74–83.

Connell LA, McMahon NE, Watkins CL, Eng JJ. Therapists' use of the Graded Repetitive Arm Supplementary Program (GRASP) intervention: a practice implementation survey study. Phys Ther. 2014;94(5):632–43.

Braun SM, van Haastregt JC, Beurskens AJ, Gielen AI, Wade DT, Schols JM. Feasibility of a mental practice intervention in stroke patients in nursing homes; a process evaluation. BMC Neurol. 2010;10:9.

Vratsistas-Curto A, McCluskey A, Schurr K. Use of audit, feedback and education increased guideline implementation in a multidisciplinary stroke unit. BMJ Open Qual. 2017;6(2):e000212.

Salbach NM, Wood-Dauphinee S, Desrosiers J, Eng JJ, Graham ID, Jaglal SB, et al. Facilitated interprofessional implementation of a physical rehabilitation guideline for stroke in inpatient settings: process evaluation of a cluster randomized trial. Implement Sci. 2017;12:1–11.

Petzold A, Korner-Bitensky N, Salbach NM, Ahmed S, Menon A, Ogourtsova T. Increasing knowledge of best practices for occupational therapists treating post-stroke unilateral spatial neglect: results of a knowledge-translation intervention study. J Rehabil Med. 2012;44(2):118–24.

Moore JL, Carpenter J, Doyle AM, Doyle L, Hansen P, Hahn B, et al. Development, implementation, and use of a process to promote knowledge translation in rehabilitation. Arch Phys Med Rehabil. 2018;99(1):82–90.

McEwen SE, Donald M, Jutzi K, Allen KA, Avery L, Dawson DR, Egan M, Dittmann K, Hunt A, Hutter J, et al. Implementing a function-based cognitive strategy intervention within inter-professional stroke rehabilitation teams: Changes in provider knowledge, self-efficacy and practice. PLoS One. 2019;14(3).

McCluskey A, Ada L, Kelly PJ, Middleton S, Goodall S, Grimshaw JM, et al. A behavior change program to increase outings delivered during therapy to stroke survivors by community rehabilitation teams: the Out-and-About trial. Int J Stroke. 2016;11(4):425–37.

Levac DE, Glegg SM, Sveistrup H, Colquhoun H, Miller P, Finestone H, et al. Promoting therapists' use of motor learning strategies within virtual reality-based stroke rehabilitation. PLoS One. 2016;11(12):e0168311.

Frith J, Hubbard I, James C, Warren-Forward H. In the driver's seat: development and implementation of an e-learning module on return-to-driving after stroke. Occup Ther Health Care. 2017;31(2):150–61.

Eriksson C, Erikson A, Tham K, Guidetti S. Occupational therapists experiences of implementing a new complex intervention in collaboration with researchers: a qualitative longitudinal study. Scand J Occup Ther. 2017;24(2):116–25.

Bland MD, Sturmoski A, Whitson M, Harris H, Connor LT, Fucetola R, et al. Clinician adherence to a standardized assessment battery across settings and disciplines in a poststroke rehabilitation population. Arch Phys Med Rehabil. 2013;94(6):1048–53.e1.

Stewart C, Power E, McCluskey A, Kuys S, Lovarini M. Evaluation of a staff behaviour change intervention to increase the use of ward-based practice books and active practice during inpatient stroke rehabilitation: a phase-1 pre–post observational study. Clin Rehabil. 2020.

Schneider EJ, Lannin NA, Ada L. A professional development program increased the intensity of practice undertaken in an inpatient, upper limb rehabilitation class: a pre-post study. Aust Occup Ther J. 2019;66(3):362–8.

McCluskey A, Middleton S. Increasing delivery of an outdoor journey intervention to people with stroke: a feasibility study involving five community rehabilitation teams. Implement Sci. 2010;5:59.

McCluskey A, Massie L, Gibson G, Pinkerton L, Vandenberg A. Increasing the delivery of upper limb constraint-induced movement therapy post-stroke: A feasibility implementation study. Aust Occup Ther J. 2020.

Luconi F, Rochette A, Grad R, Hallé MC, Chin D, Habib B, Thomas A. A multifaceted continuing professional development intervention to move stroke rehabilitation guidelines into professional practice: a feasibility study. Top Stroke Rehabil. 2020.

Clarke DJ, Godfrey M, Hawkins R, Sadler E, Harding G, Forster A, et al. Implementing a training intervention to support caregivers after stroke: a process evaluation examining the initiation and embedding of programme change. Implement Sci. 2013;8(1):96.

Connell LA, McMahon NE, Harris JE, Watkins CL, Eng JJ. A formative evaluation of the implementation of an upper limb stroke rehabilitation intervention in clinical practice: a qualitative interview study. Implement Sci. 2014;9:90.

Levac D, Glegg SM, Sveistrup H, Colquhoun H, Miller PA, Finestone H, et al. A knowledge translation intervention to enhance clinical application of a virtual reality system in stroke rehabilitation. BMC Health Serv Res. 2016;16(1):557.

Teriö M, Eriksson G, Kamwesiga JT, Guidetti S. What's in it for me? A process evaluation of the implementation of a mobile phone-supported intervention after stroke in Uganda. BMC Public Health. 2019;19(1):562.

Tetteroo D, Timmermans AA, Seelen HA, Markopoulos P. TagTrainer: supporting exercise variability and tailoring in technology supported upper limb training. J Neuroeng Rehabil. 2014;11:140.

Willems M, Schröder C, van der Weijden T, Post MW, Visser-Meily AM. Encouraging post-stroke patients to be active seems possible: results of an intervention study with knowledge brokers. Disabil Rehabil. 2016;38(17):1748–55.

Kristensen H, Hounsgaard L. Evaluating the impact of audits and feedback as methods for implementation of evidence in stroke rehabilitation. Br J Occup Ther. 2014;77(5):251–9.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1):53.

Albers B, Shlonsky A, Mildon R, editors. Implementation Science 3.0. Springer International Publishing; 2020.

Beidas RS, Edmunds JM, Marcus SC, Kendall PC. Training and consultation to promote implementation of an empirically supported treatment: a randomized trial. Psychiatr Serv. 2012;63(7):660–5.

Gold R, Bunce AE, Cohen DJ, Hollombe C, Nelson CA, Proctor EK, et al. Reporting on the strategies needed to implement proven interventions: an example from a “real-world” cross-setting implementation study. Mayo Clin Proc. 2016;91(8):1074–83.

Bunger AC, Hanson RF, Doogan NJ, Powell BJ, Cao Y, Dunn J. Can learning collaboratives support implementation by rewiring professional networks? Adm Policy Ment Health Ment Health Serv Res. 2016;43(1):79–92.

Juckett LA, Robinson ML. Implementing evidence-based interventions with community-dwelling older adults: a scoping review. Am J Occup Ther. 2018;72(4):7204195010 p1-p9.

Ridde V, Pérez D, Robert E. Using implementation science theories and frameworks in global health. BMJ Glob Health. 2020;5(4):e002269.

Moullin JC, Dickson KS, Stadnick NA, Albers B, Nilsen P, Broder-Fingert S, Mukasa B, Aarons GA. Ten recommendations for using implementation frameworks in research and practice. Implement Sci Commun. 2020;1(1).

Cahill LS, Carey LM, Lannin NA, Turville M, Neilson CL, et al. Implementation interventions to promote the uptake of evidence-based practices in stroke rehabilitation. Cochrane Database Syst Rev. 2020;10:CD012575.

Waltz TJ, Powell BJ, Fernández ME, Abadie B, Damschroder LJ. Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implement Sci. 2019;14(1).

Elwy AR, Wasan AD, Gillman AG, Johnston KL, Dodds N, Mcfarland C, et al. Using formative evaluation methods to improve clinical implementation efforts: description and an example. Psychiatry Res. 2020;283:112532.

Tetroe JM, Graham ID, Foy R, Robinson N, Eccles MP, Wensing M, et al. Health research funding agencies' support and promotion of knowledge translation: an international study. Milbank Q. 2008;86(1):125–55.

Lane JP, Flagg JL. Translating three states of knowledge–discovery, invention, and innovation. Implement Sci. 2010;5(1):9.

Howard-Wilsher S, Irvine L, Fan H, Shakespeare T, Suhrcke M, Horton S, et al. Systematic overview of economic evaluations of health-related rehabilitation. Disabil Health J. 2016;9(1):11–25.

Drummond MF, Sculpher MJ, Claxton K, Stoddart GL, Torrance GW. Methods for the economic evaluation of health care programmes. 4th ed. Oxford: Oxford University Press; 2015.

Hunink M, Glasziou P, Pliskin J, Weinstein M, Wittenberg E, Drummond M, et al. Decision making in health and medicine: integrating evidence and values. 2nd ed. Cambridge: Cambridge University Press; 2014. p. 446.

US Department of Veterans Affairs, Health Services Research & Development Service. Quality Enhancement Research Initiative (QUERI) economic analysis guidelines. 2021.

Colquhoun HL, Levac D, O'Brien KK, Straus S, Tricco AC, Perrier L, et al. Scoping reviews: time for clarity in definition, methods, and reporting. J Clin Epidemiol. 2014;67(12):1291–4.

Acknowledgments

The authors are grateful to Gavin R. Jenkins and Gregory Orewa for valuable comments on earlier proposal ideas.

Funding

No funding was received.

Author information

Contributions

JEM conceptualized the purpose of this scoping review. JEM and LAJ developed a research protocol informed by seminal scoping review and implementation science frameworks. JEM, LAJ, and JLP carried out all aspects of data collection, analysis, and synthesis. JEM and LAJ wrote the first draft of the manuscript and, together with JLP, made both revisions. JLP assisted LAJ with minor revision edits. All authors (JEM, LAJ, and JLP) approved the revisions and the final version.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) Checklist. The checklist contains 20 essential reporting items and two optional items when completing a scoping review [38].

Additional file 2.

Supplemental Data File. The spreadsheet contains the bibliographic search, the taxonomies that guided data extraction, and the data extracted for this review.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Murrell, J.E., Pisegna, J.L. & Juckett, L.A. Implementation strategies and outcomes for occupational therapy in adult stroke rehabilitation: a scoping review. Implementation Sci 16, 105 (2021). https://doi.org/10.1186/s13012-021-01178-0

DOI: https://doi.org/10.1186/s13012-021-01178-0