Abstract

Background

Theories provide a synthesizing architecture for implementation science. The underuse, superficial use, and misuse of theories pose a substantial scientific challenge for implementation science and may relate to challenges in selecting from the many theories in the field. Implementation scientists may benefit from guidance for selecting a theory for a specific study or project. Understanding how implementation scientists select theories will help inform efforts to develop such guidance. Our objective was to identify which theories implementation scientists use, how they use theories, and the criteria used to select theories.

Methods

We identified initial lists of uses and criteria for selecting implementation theories based on seminal articles and an iterative consensus process. We incorporated these lists into a self-administered survey for completion by self-identified implementation scientists. We recruited potential respondents at the 8th Annual Conference on the Science of Dissemination and Implementation in Health and via several international email lists. We used frequencies and percentages to report results.

Results

Two hundred twenty-three implementation scientists from 12 countries responded to the survey. They reported using more than 100 different theories spanning several disciplines. Respondents reported using theories primarily to identify implementation determinants, inform data collection, enhance conceptual clarity, and guide implementation planning. Of the 19 criteria presented in the survey, the criteria used by the most respondents to select theory included analytic level (58%), logical consistency/plausibility (56%), description of a change process (54%), and empirical support (53%). The criteria used by the fewest respondents included fecundity (10%), uniqueness (12%), and falsifiability (15%).

Conclusions

Implementation scientists use a large number of criteria to select theories, but there is little consensus on which are most important. Our results suggest that the selection of implementation theories is often haphazard or driven by convenience or prior exposure. Variation in approaches to selecting theory warns against prescriptive guidance for theory selection. Instead, implementation scientists may benefit from considering the criteria that we propose in this paper and using them to justify their theory selection. Future research should seek to refine the criteria for theory selection to promote more consistent and appropriate use of theory in implementation science.

Background

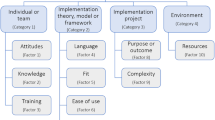

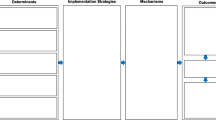

Theories and frameworks offer an efficient way of generalizing findings across diverse settings within implementation science [1]. Theories and frameworks (hereafter “theories”; see the 2013 implementation guide of the Veterans Health Administration’s Quality Enhancement Research Initiative for a taxonomy of theories, frameworks, and models) generalize findings by providing a synthesizing architecture, that is, an explicit summary of explanations of implementation-related phenomena that promotes progress and facilitates shared understanding [2]. Theories also guide implementation, facilitate the identification of implementation determinants, guide the selection of implementation strategies, and inform all phases of research by helping to frame study questions and motivate hypotheses, anchor background literature, clarify constructs to be measured, depict relationships to be tested, and contextualize results [3].

Given these potential benefits, the underuse, superficial use, and misuse of theories represent a substantial scientific challenge for implementation science [4,5,6,7,8]. In one review, Tinkle et al. [4] highlighted pervasive underuse of theory (i.e., not using a theory at all): most of the large National Institutes of Health-funded projects that they reviewed did not use a theory. Likewise, a review of evaluations of guideline dissemination and implementation strategies from 1966 to 1998 showed that only a minority (23%) used any theory [5]. A scoping review of guideline dissemination strategies targeting physicians, covering 2006 to 2016, showed that although theory use had increased over time, fewer than half (47%) of included studies used a theory [8]. Theory use thus appears to be rising, but theory remains underused. Kirk et al. [9] reviewed studies citing the Consolidated Framework for Implementation Research (CFIR) and found that few applied the framework in a meaningful way (i.e., superficial use). For example, many articles cited the CFIR in the “Background” or “Discussion” sections to acknowledge the complexity of implementation but did not apply the CFIR to data collection, analysis, or the reporting of findings. Similar results were found for studies conducted through 2009 that cited the use of the Promoting Action on Research Implementation in Health Services (PARIHS) framework [10]. Gaglio et al. [11] found that reach, the most frequently studied dimension of the Reach Effectiveness Adoption Implementation Maintenance (RE-AIM) framework, was often assessed incorrectly (i.e., misuse) [12]. Assessing reach requires comparing intervention participants (the numerator) with the eligible population, which includes non-participants (the denominator). Examples of misuse include comparing participants with one another rather than with non-participants (e.g., [13]). The underuse, superficial use, and misuse of implementation theories may limit both the field’s advancement and its capacity for changing healthcare practice and outcomes.
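To make the numerator and denominator of reach concrete, the Python sketch below computes reach as the proportion of eligible individuals who participate. It assumes one common operationalization, in which the eligible population equals participants plus non-participants; the class and function names are hypothetical and the counts are invented for illustration, not drawn from any reviewed study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EligiblePopulation:
    """Hypothetical counts for one program (illustrative names, invented data)."""
    participants: int      # eligible individuals who took part (numerator)
    non_participants: int  # eligible individuals who did not take part


def reach(pop: EligiblePopulation) -> float:
    """Proportion of the eligible population that participated.

    Assumes eligible = participants + non_participants. Comparing participants
    only with one another cannot produce this value, because non-participants
    never enter that calculation.
    """
    eligible = pop.participants + pop.non_participants
    if eligible == 0:
        raise ValueError("eligible population is empty")
    return pop.participants / eligible


print(reach(EligiblePopulation(participants=120, non_participants=380)))  # 0.24
```

The design choice here is simply to make the denominator explicit in the data structure, so that a calculation omitting non-participants cannot be written by accident.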

The underuse, superficial use, and misuse of theories may relate, in part, to the challenge of selecting from among the many theories that exist in the field [14, 15], each with its own language and syntax and with varying levels of operationalized definitions [16] and validity [17]. Implementation researchers and practitioners (i.e., implementation scientists) have at their disposal myriad theories developed within traditional disciplines (e.g., sociology, health services research, psychology, management science) and increasingly within implementation science itself [18]. A move toward synthesizing theories may address the overlap among them; however, the question of which theory to select remains [19]. Therefore, implementation scientists would benefit from guidance for selecting a theory for a specific project. Guidance for theory selection may encourage implementation scientists to use theories, discouraging underuse; to use theories meaningfully, discouraging superficial use; and to be mindful of the strengths, weaknesses, and appropriateness of the theories that they select, discouraging misuse. Such guidance may also promote theory testing and the identification of needs for theory development, contributing to the advancement of the science. Indeed, applying theory meaningfully provides an opportunity to test, report, and enhance its utility and validity and provides evidence to support its adaptation or replacement. As a first step toward the development of guidance for theory selection, this study aimed to identify which theories implementation scientists use, how they use theories, and the criteria used to select theories.

Methods

Survey design, instrument, and procedure

We conducted an observational study of implementation scientists using a self-administered paper and web-based survey. To create the survey instrument, we identified potential uses of, and criteria for selecting, implementation theories using seminal texts and an iterative review process. SB, BP, and JP began with two seminal articles [20, 21] and one conference presentation [22]. Building on these texts, SB, BP, JP, and CS iteratively refined the uses and criteria through independent review, in which authors identified uses and criteria for selecting theory, clarified definitions, and eliminated redundancies; the authors then reconciled disagreements through an informal consensus process. The final 19-item instrument incorporated the resulting 12 potential uses (Table 1) and 19 criteria for selecting implementation theories (Table 2). In the survey, we asked respondents to identify:

1. Their demographic and professional characteristics.
2. Theories that they have used as part of their implementation research or practice (open-ended).
3. The ways in which they have used theories (e.g., to inform data collection, analysis) (select all relevant options).
4. Criteria that they use to select a theory (e.g., analytic level, disciplinary origins) (select all relevant options, which were based on the seminal texts and the iterative review and consensus process described above). In addition to the options provided, we included open-ended items to identify criteria that respondents used to select theories other than those listed in the survey. We also asked respondents to rank the top three criteria that they use to select a theory.
5. Any additional thoughts that they had regarding theory selection not addressed in other survey items (open-ended).

We recruited potential respondents at the 8th Annual Conference on the Science of Dissemination and Implementation in Health (2015) in Washington DC, USA, and via several international email lists (Table 3). We contacted potential respondents beginning in December 2015 and closed the survey at the end of February 2016. Potential respondents recruited at the conference could complete a paper survey or receive a link to a web-based version of the survey; all other potential respondents had access to the web-based version (Qualtrics, Provo, UT). We randomized the order of response options in the web-based version to minimize bias associated with presenting options in a fixed order.
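The survey platform handled option randomization itself; the Python sketch below only illustrates the idea of giving each respondent an independently shuffled order of the same options. Seeding the shuffle with a respondent identifier, so that each respondent's order is reproducible, is a hypothetical design choice, and the function name and abbreviated option list are illustrative assumptions rather than the instrument as administered.

```python
import random

# A few of the 19 criteria named in the text; the list is truncated for brevity.
CRITERIA = [
    "analytic level",
    "logical consistency/plausibility",
    "empirical support",
    "description of a change process",
    "fecundity",
    "uniqueness",
    "falsifiability",
]


def options_for_respondent(respondent_id: str, options: list[str]) -> list[str]:
    """Return a respondent-specific ordering of the response options.

    Hypothetical: seeding with the respondent ID makes each ordering
    reproducible; this is not how any particular survey platform works.
    """
    rng = random.Random(respondent_id)
    shuffled = list(options)  # copy so the master list keeps its order
    rng.shuffle(shuffled)
    return shuffled


print(options_for_respondent("respondent-001", CRITERIA))
```

Because every respondent sees the same options in a different order, no single option benefits systematically from appearing first.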

Ethics, consent, and permissions

Before completing the survey, participants read information about the study, including their right not to complete the survey and that completing it implied their consent. The institutional review board at the University of North Carolina at Chapel Hill exempted the study from human subject review.

Analysis

We conducted a descriptive analysis of which theories respondents used, the ways in which they used theories, and the criteria that they considered when selecting theories. Analyses were conducted using Stata/IC statistical software v14.1. We used inductive content analysis [23] to identify criteria that respondents used to select theories other than those listed in the survey and to analyze respondents’ thoughts regarding theory selection not addressed in other survey items. EH conducted the initial analysis, and SB, BP, JP, and CS collaborated on identifying criteria not represented elsewhere in the survey and salient quotes regarding theory selection.
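The analyses were run in Stata; purely as an illustration of the frequencies and percentages reported for “select all relevant options” items, the Python sketch below counts how many respondents selected each option and expresses that count as a percentage of all respondents. The function name and toy data are hypothetical, not the study's responses.

```python
from collections import Counter


def option_frequencies(responses: list[set[str]]) -> dict[str, tuple[int, float]]:
    """Frequency and percentage of respondents selecting each option.

    Each element of `responses` is the set of options one respondent ticked on a
    "select all that apply" item. Percentages use all respondents as the
    denominator, so they need not sum to 100.
    """
    n = len(responses)
    if n == 0:
        raise ValueError("no responses")
    counts = Counter(option for selected in responses for option in selected)
    # most_common() orders options from most to least frequently selected
    return {option: (k, 100 * k / n) for option, k in counts.most_common()}


# Toy data for illustration only (not the study's responses)
toy = [
    {"analytic level", "empirical support"},
    {"analytic level"},
    {"analytic level", "falsifiability"},
    {"empirical support"},
]
for option, (k, pct) in option_frequencies(toy).items():
    print(f"{option}: {k} ({pct:.0f}%)")
```

Using respondents rather than total selections as the denominator matches how the percentages are read in the Results: each figure is the share of respondents who reported using that option.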

Results

Respondent characteristics

After deleting observations for which the majority of survey items were incomplete, the study sample consisted of 223 survey respondents. Demographic characteristics of survey respondents are displayed in Table 4. Respondents included implementation researchers (42%), implementation practitioners (11%), and those who identified as both implementation researchers and practitioners (48%). The majority were female (72%) and white (90%). Other races represented included Asian (5%), black or African American (1%), and “other” or multiple races (4%). Survey respondents represented 12 countries, with slightly more than half of respondents (55%) reporting the USA as the country in which their institution was located. Other commonly reported countries included Australia (18%), Canada (9%), the UK (7%), and Sweden (5%). Most respondents (67%) reported a PhD as their highest degree earned; 21% reported a Master’s degree, and the remainder reported an MD, a Bachelor’s degree, or “Other.”
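The exclusion rule above can be sketched as a simple filter. This Python example is a hypothetical illustration: it treats None or a blank string as an unanswered item and keeps a response with exactly half of its items missing, a tie-breaking choice the text does not specify. The function name and toy records are invented.

```python
from typing import Optional


def mostly_complete(answers: list[Optional[str]]) -> bool:
    """True unless a majority of a respondent's items are unanswered.

    None or a blank string counts as unanswered. A response with exactly
    half of its items missing is kept (an assumption; the text does not say).
    """
    if not answers:
        return False
    missing = sum(1 for a in answers if a is None or a.strip() == "")
    return missing <= len(answers) / 2


# Toy records for illustration only
raw = {
    "r1": ["PhD", "USA", "CFIR", None],
    "r2": [None, None, "", "TDF"],
}
analytic_sample = {rid: ans for rid, ans in raw.items() if mostly_complete(ans)}
print(sorted(analytic_sample))
```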

Most survey respondents (73%) were based at academic institutions. Other institution types included hospital-based research institutes (14%), government (14%), service providers (e.g., hospitals and public health agencies) (14%), and industry (e.g., contract research organizations) (3%). Respondents reported spending a mean of 14 years (standard deviation [SD] = 8.9) conducting research and a mean of 7 years (SD = 7.1) conducting implementation research specifically. They reported having published a mean of 37 papers (SD = 61.4) overall and a mean of 10 papers (SD = 18.7) related to implementation science. Most respondents (64%) reported having been the principal investigator of an externally funded research study.

Theories used

Survey respondents reported using more than 100 different theories from several disciplines, including implementation science, health behavior, organizational studies, sociology, and business. The most commonly listed included the CFIR, the Theoretical Domains Framework (TDF), PARIHS, Diffusion of Innovations, RE-AIM, the Quality Implementation Framework, and the Interactive Systems Framework (Table 5). Additionally, many respondents reported using theories in combination and using theories developed “in-house.”

Ways in which theories are used

The most common ways in which survey respondents used theories in their implementation work were to identify key constructs that may serve as barriers and facilitators (80%), to inform data collection (77%), to enhance conceptual clarity (66%), and to guide implementation planning (66%). Respondents also used theories to inform data analysis, to specify hypothesized relationships between constructs, to clarify terminology, to frame an evaluation, to specify implementation processes and/or outcomes, to convey the larger context of the study, and to guide the selection of implementation strategies (Table 1).

Criteria that implementation scientists use to select theories

On average, survey respondents reported having used 7 (mean = 7.04; median = 7) of the 19 criteria listed in the survey when selecting an implementation theory; some reported having used all 19 (Table 2). The criteria used by the most respondents included analytic level (58%), logical consistency/plausibility (56%), description of a change process (54%), and empirical support (53%). The criteria used by the fewest respondents included fecundity (10%), uniqueness (12%), and falsifiability (15%).
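The summary of how many criteria each respondent used is a mean and median over per-respondent counts. The Python sketch below shows that computation on invented toy data; the function name is hypothetical and the example is not a reproduction of the study's Stata analysis.

```python
from statistics import mean, median


def criteria_count_summary(responses: list[set[str]]) -> tuple[float, float]:
    """Mean and median number of criteria selected per respondent."""
    if not responses:
        raise ValueError("no responses")
    counts = [len(selected) for selected in responses]  # one count per respondent
    return mean(counts), median(counts)


# Toy data: three respondents selecting 2, 1, and 3 criteria
toy = [
    {"analytic level", "empirical support"},
    {"uniqueness"},
    {"analytic level", "fecundity", "falsifiability"},
]
print(criteria_count_summary(toy))
```

Reporting the median alongside the mean, as the text does, guards against a few respondents who selected all 19 criteria pulling the average upward.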

Criteria ranking

We excluded responses that ranked fewer than two criteria (n = 48). Thirty-three percent of respondents ranked empirical support among the three most important criteria, but only 17% ranked it first (see Table 6). Application to a specific setting/population and explanatory power/testability were ranked second and third, respectively.
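The two ranking statistics above (share of respondents placing a criterion anywhere in their top three, and share placing it first) can be computed as in the Python sketch below. It applies the exclusion of rankings with fewer than two criteria and assumes, as the text does not state explicitly, that percentages use the remaining rankings as the denominator. Names and toy data are hypothetical.

```python
def ranking_summary(rankings: list[list[str]], criterion: str) -> tuple[float, float]:
    """Percentage of usable rankings placing `criterion` in the top three, and first.

    Each ranking lists one respondent's criteria from most to least important.
    Rankings with fewer than two criteria are excluded before computing
    percentages (the denominator is the number of usable rankings; an assumption).
    """
    usable = [r for r in rankings if len(r) >= 2]
    if not usable:
        raise ValueError("no usable rankings")
    in_top_three = sum(criterion in r[:3] for r in usable)
    ranked_first = sum(r[0] == criterion for r in usable)
    n = len(usable)
    return 100 * in_top_three / n, 100 * ranked_first / n


# Toy data for illustration only; the single-item ranking is excluded
toy = [
    ["empirical support", "fecundity", "uniqueness"],
    ["analytic level", "empirical support"],
    ["fecundity"],
    ["analytic level", "uniqueness", "falsifiability"],
    ["empirical support", "analytic level"],
]
print(ranking_summary(toy, "empirical support"))
```

Separating “in the top three” from “ranked first” shows how a criterion such as empirical support can be widely valued yet seldom be the single deciding factor.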

Additional criteria

We asked survey respondents to list any additional criteria they used when selecting implementation theories beyond the 19 listed in the survey. After eliminating responses that overlapped conceptually with the 19 listed criteria and merging conceptual duplicates, we identified three additional criteria (see Table 2): familiarity (extent to which the principal investigator or research team is familiar with the theory), degree of specificity (extent to which included constructs are comprehensive of implementation determinants or specific to a particular set of implementation determinants), and accessibility (extent to which non-experts are able to understand, apply, and operationalize a theory’s propositions).

Qualitative responses

Some respondents expressed concern that political criteria sometimes held more weight than scientific criteria. For example, a UK-based, PhD-prepared implementation researcher noted, “In my experience, frameworks are often selected for the wrong reasons. That is, the basis for selection is political rather than scientific.” Indeed, one US-based, PhD-prepared implementation researcher suggested adding the criterion, “My advisor told me to!” Many respondents reported that the selection of implementation theories is often haphazard or driven by convenience or prior exposure. As a representative example, a US-based, PhD-prepared implementation researcher wrote, “To some degree selection is arbitrary. There are probably several theories that would be fruitful, and I tend to use ones that are familiar to me.” A US-based physician researcher wrote, “I wish there were a simple, systematic process for selecting theories!”

Discussion

An international sample of implementation scientists collectively reported using more than 100 theories to inform implementation planning and evaluation, guide data collection and analysis, characterize features of the project environment and relationships between key constructs, and guide the interpretation and dissemination of project outcomes. The most commonly used theories, including the CFIR, TDF, and Diffusion of Innovations, were used by respondents from nine countries across four continents. Some respondents developed theories “in-house,” adapted existing theories, or combined components of multiple theories to meet the needs of their project.

Findings indicate that implementation scientists use a large number of criteria to select theories. This large number may reflect the varying sets of criteria that implementation scientists must consider depending on a theory’s intended use (we describe this possibility in more detail below). It may also reflect a lack of clarity regarding how to select theory. Indeed, our findings suggest that there is little consensus on which criteria are the most important, which may contribute to the persistent underuse, superficial use, and misuse of theories [4,5,6,7,8].

Our qualitative results suggest that the process for selecting implementation theories is often haphazard or driven by convenience or prior exposure. Selecting theories based on convenience or prior exposure may deepen knowledge about a given theory through repeated use; however, it may also limit theories’ benefits, particularly when the theories are poorly suited to users’ objectives (e.g., selecting implementation strategies, framing study questions, motivating hypotheses). Reliance on convenient or familiar theories may also contribute to silos in the field, limiting our ability to generalize findings, promote progress, and foster shared understanding.

This study had several limitations. We opted not to conduct a systematic literature review to develop the survey because the literature on this topic is likely diffuse and difficult to identify; thus, we began with key contributions of which we were aware. We included open-ended items to address the bias associated with this approach; however, we acknowledge that the criteria listed in the survey may have influenced responses. Though we tried to capture variation across respondent characteristics, our survey sample might not have been fully representative of perspectives in the field. For example, we did not have as many practitioner respondents or as many respondents from outside North America and Europe as we hoped; nevertheless, this appears to be the first attempt to assess the criteria that implementation scientists use to select theory, and future efforts to understand and streamline theory use should further consider the perspectives of these groups. Additionally, since we recruited a convenience sample, those who completed the survey may be systematically different from those who opted not to complete the survey. We did not ask respondents about the extent to which they value theory in their work. Indeed, we acknowledge that some implementation scientists do not view theory as critical to their work (e.g., [24, 25]).

Conclusions

Our results suggest that implementation scientists may benefit from guidance for theory selection. Developing such guidance is challenging given potential variation in implementation scientists’ roles, priorities, and objectives, which limits the benefit of prescriptive guidance. Indeed, there may not be one “best” theory for a given project. Instead, implementation scientists may benefit from considering the broad range of criteria that we propose in this paper.

The field of implementation science will benefit from transparent reporting of the criteria that implementation scientists use to select theories. Transparent reporting may encourage implementation scientists to carefully consider the relevance of a selected theory instead of defaulting to theories that are convenient or familiar but are poorly suited to implementation scientists’ objectives. In turn, transparent reporting may diminish silos in the field by making explicit scientists’ thinking in selecting a particular theory, thus promoting progress through generalizable findings and shared understanding.

Examples of transparent reporting of the criteria identified in this study (or of other criteria) exist. Birken et al. [26] justified the use of a taxonomy of top manager behavior [27] to explore the relationship between top managers’ support and middle managers’ commitment to implementation by describing three categories of behavior in which top managers might engage to promote middle managers’ commitment (i.e., logical consistency, description of a change process). Alexander et al. [28] described using Klein and Sorra’s [29] theory of innovation implementation to assess the influence of implementation on the effectiveness of patient-centered medical homes [30] because the theory explains how the proficient and consistent use of an innovation influences its effectiveness (i.e., outcome of interest, specificity of causal relationships among constructs). Yet transparent reporting of the criteria used to justify theory selection remains limited. Requiring manuscripts to include a section describing the criteria used to justify theory selection may promote more consistent reporting.

Several areas of future research would extend our initial attempt in this paper to explore criteria for selecting implementation theories. Specifically, the criteria that implementation scientists use to select theory may relate to how they intend to use theory. Understanding this relationship would help to refine the criteria presented here. We also recognize that substantive differences between theories and frameworks likely have implications for the criteria used to select them. For example, specificity of causal relationships among constructs is likely to be of greater importance for selecting theories than frameworks. We are currently refining the criteria and working to develop a useful, practical, and generalizable checklist of criteria based on concept mapping by implementation scientists [31]. The exercise will categorize the criteria and rate their clarity and importance. Our goal is for the checklist to take into account how and what kind of theory implementation scientists intend to use. This represents a first step toward what we hope will be a continued effort to refine the criteria, thus promoting more consistent and appropriate use of theory in implementation science and more effectively building the range of knowledge necessary to help ensure successful implementation across diverse settings.

Abbreviations

- CFIR: Consolidated Framework for Implementation Research
- PARIHS: Promoting Action on Research Implementation in Health Services
- RE-AIM: Reach Effectiveness Adoption Implementation Maintenance
- SD: Standard deviation
- TDF: Theoretical Domains Framework

References

Foy RJ, Ovretveit J, Shekelle PG, Pronovost PJ, Taylor SL, Dy S, et al. The role of theory in research to develop and evaluate the implementation of patient safety procedures. BMJ Qual Saf. 2011;20(5):453–9.

Department of Veterans Health Administration, Health Services Research and Development, Quality Enhancement Research Initiative. Implementation Guide. 2013. https://www.queri.research.va.gov/implementation/default.cfm#1. Accessed 30 May 2017.

Proctor EK, Powell BJ, Baumann AA, Hamilton AM, Santens RL. Writing implementation research grant proposals: ten key ingredients. Implement Sci. 2012;7:96.

Tinkle M, Kimball R, Haozous EA, Shuster G, Meize-Grochowski R. Dissemination and implementation research funded by the US National Institutes of Health, 2005–2012. Nurs Res Pract. 2013;2013:909606.

Davies P, Walker AE, Grimshaw JM. A systematic review of the use of theory in the design of guideline dissemination and implementation strategies and interpretation of the results of rigorous evaluations. Implement Sci. 2010;5:14.

Colquhoun HL, Letts LJ, Law MC, MacDermid JC, Missiuna CA. A scoping review of the use of theory in studies of knowledge translation. Can J Occup Ther. 2010;77(5):270–9.

Powell BJ, Proctor EK, Glass JE. A systematic review of strategies for implementing empirically supported mental health interventions. Res Soc Work Pract. 2014;24(2):192–212.

Liang L, Bernhardsson S, Vernooij RW, Armstrong MJ, Bussières A, Brouwers MC, Gagliardi AR. Use of theory to plan or evaluate guideline implementation among physicians: a scoping review. Implement Sci. 2017;12:26.

Kirk MA, Kelley C, Yankey N, Birken SA, Abadie B, Damschroder L. A systematic review of the use of the Consolidated Framework for Implementation Research. Implement Sci. 2016;11:72.

Helfrich CD, Damschroder LJ, Hagedorn HJ, Daggett GS, Sahay A, Ritchie M, et al. A critical synthesis of literature on the promoting action on research implementation in health services (PARIHS) framework. Implement Sci. 2010;5:82.

Gaglio B, Shoup JA, Glasgow RE. The RE-AIM framework: a systematic review of use over time. Am J Public Health. 2013;103(6):e38–46.

Kessler RS, Purcell EP, Glasgow RE, Klesges LM, Benkeser RM, Peek CJ. What does it mean to “employ” the RE-AIM model? Eval Health Prof. 2013;36(1):44–66.

Haas JS, Iyer A, Orav EJ, Schiff GD, Bates DW. Participation in an ambulatory e-pharmacovigilance system. Pharmacoepidemiol Drug Saf. 2010;19(9):961–9.

Tabak RG, Khoong EC, Chambers D, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43(3):337–50.

Flottorp SA, Oxman AD, Krause J, Musila NR, Wensing M, Godycki-Cwirko M, et al. A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement Sci. 2013;8:35.

Tabak RG, Chambers DA, Brownson RC. A narrative review and synthesis of frameworks in dissemination and implementation research. Presented at 5th Annual NIH Conference on the Science of Dissemination and Implementation: Research at the Crossroads. Bethesda; 2012.

Sniehotta FF, Presseau J, Araujo-Soares V. Time to retire the theory of planned behaviour. Health Psychol Rev. 2014;8(1):1–7.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53.

Birken SA, Powell BJ, Presseau J, Kirk MA, Lorencatto F, Gould NJ, et al. Combined use of the Consolidated Framework for Implementation Research (CFIR) and the Theoretical Domains Framework (TDF): a systematic review. Implement Sci. 2017;12:2.

The Improved Clinical Effectiveness through Behavioral Research Group (ICEBeRG). Designing theoretically informed implementation interventions. Implement Sci. 2006;1:4.

Wacker JG. A definition of theory: research guidelines for different theory-building research methods in operations management. J Oper Manag. 1998;16(4):361–85.

Holmström J, Truex D. What does it mean to be an informed IS researcher? Some criteria for the selection and use of social theories in IS research. Information Systems Research Seminar in Scandinavia (IRIS). 2001. p. 313–26.

Elo S, Kyngäs H. The qualitative content analysis process. J Adv Nurs. 2008;62(1):107–15.

Oxman AD, Fretheim A, Flottorp S. The OFF theory of research utilization. J Clin Epidemiol. 2005;58(2):113–6.

Bhattacharyya O, Reeves S, Garfinkel S, Zwarenstein M. Designing theoretically-informed implementation interventions: fine in theory but evidence of effectiveness in practice is needed. Implement Sci. 2006;1:5.

Birken SA, Lee SY, Weiner BJ, Chin MH, Chiu M, Schaefer CT. From strategy to action: how top managers’ support increases middle managers’ commitment to innovation implementation in health care organizations. Health Care Manag Rev. 2015;40(2):159–68.

Yukl G, Gordon A, Taber T. A hierarchical taxonomy of leadership behavior: integrating a half century of behavior research. J Leadersh Organ Stud. 2002;9(1):15–32.

Alexander JA, Markovitz AR, Paustian ML, Wise CG, El Reda DK, Green LA, et al. Implementation of patient-centered medical homes in adult primary care practices. Med Care Res Rev. 2015;72(4):438–67.

Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manag Rev. 1996;21:1055–80.

Birken SA. Developing a tool to promote the selection of appropriate implementation frameworks and theories. NC TRaCS Institute (TSMPAR11601), September 2016 – August 2017.

Kane M, Trochim WM. Concept mapping for planning and evaluation. Thousand Oaks: SAGE; 2007.

Acknowledgements

We are grateful for the time and contribution of the implementation scientists who participated in the study, for the administration and data management provided by Alessandra Bassalobre Garcia and Alexandra Zizzi, for the administrative support from Jennifer Scott, and for the input from participants in the May 2017 Advancing Implementation Science call.

Funding

The project described was supported by the National Center for Advancing Translational Sciences (NCATS), National Institutes of Health, through Grant Award Number UL1TR001111. SAB was also supported by KL2TR001109. BJP was also supported by R25MH080916, L30MH108060, K01MH113806, P30AI050410, and R01MH106510. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Availability of data and materials

Please contact the corresponding author for data requests.

Author information

Contributions

All authors made significant contributions to the manuscript. SB, BP, CS, and JP collected the data. SB and EH analyzed the data. All authors drafted and critically revised the manuscript for important intellectual content. All authors read and gave final approval of the version of the manuscript submitted for publication.

Ethics declarations


Consent for publication

Our manuscript does not contain any individual person’s data in any form.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Birken, S.A., Powell, B.J., Shea, C.M. et al. Criteria for selecting implementation science theories and frameworks: results from an international survey. Implementation Sci 12, 124 (2017). https://doi.org/10.1186/s13012-017-0656-y

DOI: https://doi.org/10.1186/s13012-017-0656-y