Abstract

Background

Healthcare systems are working to move towards more integrated, patient-centered care. This study describes the development and testing of a multidimensional self-report measure of patients’ experiences of integrated care.

Methods

Random-digit-dial telephone survey in 2012 of 317 adults aged 40 years or older in the San Francisco region who had used healthcare at least twice in the past 12 months.

One-time cross-sectional survey; psychometric evaluation to confirm dimensions and create multi-item scales. Survey data were analyzed using VARCLUS and confirmatory factor analysis and internal consistency reliability testing.

Results

Scales measuring five domains were confirmed: coordination within and between care teams, navigation (arranging appointments and visits), communication between specialist and primary care doctor, coordination with community resources, and communication between primary care doctor and specialist. Four of these demonstrated excellent internal consistency reliability. Mean scale scores indicated low levels of integration.

Conclusion

These scales measuring integrated care capture meaningful domains of patients’ experiences of health care. The low levels of care integration reported by patients in the study sample suggest that these types of measures should be considered in ongoing evaluations of health system performance and improvement. Further research should examine whether differences in patient experience of integrated care are associated with differences in the processes and outcomes of care received.

Background

Patients often experience health care as fragmented and disjointed. An important health system goal is achieving “integrated care”— a sense of “cohesiveness and connectedness of the health care system” [1]. Much of the prior research on integrated care has considered integration as a structural property, focusing on geographic co-location of services and the organizational attributes of vertically integrated health delivery systems (e.g., Kaiser Permanente) or horizontally integrated entities (e.g., hospital chains) [2–4]. Various authors and organizations have investigated patients’ experience of integrated care, emphasizing functional aspects of integration such as care coordination and integration between health and social care [5]. Even the World Health Organization developed early thoughts on the subject and others have expanded on the proposed WHO framework [6, 7].

Several definitions have been proposed to capture a more holistic and patient-centered concept of integrated care. Singer and colleagues define integrated care as “patient care that is coordinated across professionals, facilities, and support systems; continuous over time and between visits; tailored to the patients’ needs and preferences; and based on shared responsibility between patient and caregivers for optimizing health” [4]. They proposed a conceptual framework consisting of seven domains: 1) coordination within care team, 2) coordination across care teams, 3) coordination between care teams and community resources, 4) continuous familiarity with patient over time, 5) continuous proactive and responsive action between visits, 6) patient-centered, and 7) shared responsibility. In a qualitative study exploring patients’ understanding and experiences of integrated care, we found that patients clearly perceive when integration and coordination are--or are not--happening in their experiences with the health care system, and that they highly value a sense of all members of the care team “being on the same page” [8]. The themes that emerged from these patient focus groups largely aligned with the seven domains of the Singer conceptual model.

Although our qualitative research lends support to the Singer conceptual framework, there are as yet no published, psychometrically sound survey instruments for systematically measuring all aspects of the patient experience of integrated care. Current instruments measure some, but not all of the concepts [9]. Many critical research questions about integrated care cannot be satisfactorily investigated without quantitative measures of patients’ experience of integrated care. For example, do patients receiving care from health care organizations with a high degree of vertical structural integration experience their care as more functionally integrated than patients receiving care in less tightly organized settings? Are minority and low socioeconomic status (SES) patients less likely than their counterparts to experience highly integrated care, and does that difference in experience partly explain disparities in medication adherence, preventive care services, diabetes control, avoidable hospitalizations, and other outcomes?

We developed and tested a self-report survey instrument to quantitatively measure patients’ experience of integrated care. In this article, we report the psychometric properties of several scales to measure patients’ experience of care integration.

Methods

Conceptual model

We used the Singer conceptual model to guide our instrument development, focusing on domains for which well-established self-report scales are unavailable. For this reason, we decided not to test new scales for the domains of patient-centeredness, continuity, and shared responsibility. Validated instruments exist for patient-centeredness, as well as continuity of care (which encompasses continuous familiarity) and shared decision-making (which encompasses shared responsibility) [10–15]. There is also extensive literature on some specific aspects of integration of specialty and primary care services, including co-location of behavioral healthcare providers and primary care providers [16–19]. We focused on the other four domains of the Singer model: coordination within care team, coordination across care teams, coordination between care teams and community resources, and continuous proactive and responsive action between visits. Based on findings from our focus groups, we added a domain of navigation, described in the language used by patients. Patients in our focus groups frequently mentioned a desire for assistance in scheduling appointments and related care planning needs in complex health systems. Several questions were added that addressed the need to follow up with clinics or to take other next steps.

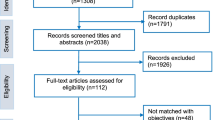

Evidence to select measures of integrated care

We began our literature review with a PubMed search using the exploded MeSH term “Delivery of Health Care, Integrated”, limited to English-language articles published between January 1, 1985 and April 1, 2011. We included reviews, studies, and commentaries, without restriction to any specific disease, patient population, method, or level of integration. This generated nearly 6000 articles; based on titles, we narrowed this list to fewer than 100 articles that appeared to include original research on patient experiences. We then narrowed the search further after reviewing article abstracts to identify publications that included patient survey measures of themes and elements of integrated care.

We reviewed existing survey instruments to identify questions that might capture aspects of the five domains we wanted to include in our integrated care instrument. Existing surveys that were most relevant to our study aims were the Consumer Assessment of Health Plans (CAHPS) survey [20], Commonwealth Fund International Patient Experience Survey [21], Tufts Medical Center Institute for Clinical Research and Health Policy Studies Ambulatory Care Experiences Survey [13, 22], the Patient Perceptions of Care Survey [23], and the Johns Hopkins Primary Care Assessment Tool [24]. We adapted items from these sources and developed new items to capture relevant concepts not adequately represented in these existing questionnaires.

Cognitive interview pretesting

We performed cognitive interview pre-testing on 28 items that were either newly written or substantially modified from an existing survey item, and which we were concerned might be misinterpreted, misclassified, or be otherwise problematic, especially among individuals from lower SES groups. Probes were developed to determine whether respondents understood the intended meaning of specific words or phrases, whether similar questions were perceived as redundant, and whether questions were offensive; to identify the cognitive processes used in responding; and to describe examples from respondents’ experience. Individual cognitive interviews were conducted face-to-face with a convenience sample of 20 patients from clinics within San Francisco General Hospital. Patients were eligible if they were 40 years of age or older and had used the clinic at least two times within the past year. Participants were consented in person and were provided a gift card for their time. The UCSF Institutional Review Board approved this study protocol.

The pretest sample was mostly female (70 %), between the ages of 40 and 55 (70 %), African-American (45 %), with a high school education or less (78 %), and uninsured (55 %). Interviews were conducted in English, with a translator used for two participants with limited English proficiency. As an example of a probe, for the item “How often did your regular doctor seem informed and up-to-date about the care you got from the specialist”, the interviewer queried, “What does the phrase informed and up-to-date mean to you?” Of the 28 items included in cognitive testing, 21 (75 %) presented either minor or major problems. Of these 21 questions, 11 (52 %) showed similar meaning to a prior question, 3 (14 %) had clarification problems, 11 (52 %) demonstrated response scale problems, and 7 (33 %) had to be repeated by the interviewer during interviews. Seven items were dropped, and several items were revised, including refinement of response options and addition of new items to better capture concepts.

We also used the cognitive pretesting to assess the acceptability of our response scales, which consisted of respondents rating “how often” an item occurred in the past 12 months, using a scale ranging from never to always. This is the same response format used in the CAHPS survey, and our pretest sample of patients reported no difficulty in understanding this response scale [20].

Fielded questionnaire items

The fielded questionnaire included 46 items addressing elements of integrated care. Additional file 1 lists these items and indicates the source of those items if they were adapted from existing questionnaires. All items used response options on a 6-level frequency scale regarding how often the integration experience occurred: 1 = never, 2 = almost never, 3 = sometimes, 4 = usually, 5 = almost always, or 6 = always. In addition to the items on experience of integrated care, the questionnaire included items on patient demographics, use of health care and general perceptions of the health care system. The survey was pretested with 10 participants before fielding the final survey.

Survey sample

The surveys were administered by landline telephone interview from February to March 2012 using random digit dialing of households in the San Francisco Bay Area. Household members were eligible to participate in the survey if they were age 40 years or older and had used healthcare at least twice in the past 12 months, assessed in initial screening questions in the telephone interview. These eligibility criteria were used to preferentially recruit individuals who were active health care users and might have experiences in different facets of care integration. In our prior focus group work, we found that individuals who infrequently used health services could not describe the experience of integrated care. The concept is best described by those who have used multiple settings of healthcare. To identify such individuals, we drew on point estimates from the 2007 public dataset of the California Health Interview Survey [25]. In exploratory analyses of these survey data, we found that to recruit patients likely to have relatively high care coordination needs, we needed to include those who were 40 years of age or older, had one or more chronic conditions (diabetes, hypertension, chronic lung disease, depression, chronic kidney disease, osteoarthritis, congestive heart failure, or mild cognitive impairment) and had at least two medical visits in the past 12 months. Each household was called back up to 6 times before abandoning the telephone number. The random digit dial approach precluded reaching those with only cell phones. Participants were consented orally at the time of survey administration and provided a $20 gift card for their time. The survey averaged 12 min in duration across participants.

Analytical methods

Our analyses aimed to identify a set of multi-item scales to capture the different domains of integrated care that could be incorporated into a survey instrument for administration to a general population of adult patients. We began by examining item variability and missing data and then performed VARCLUS and confirmatory factor analyses, as well as scale-scale intercorrelations and internal consistency assessments, as part of an iterative process for determining final scales. Our analysis used SAS PROC VARCLUS, a procedure that helps reduce a large set of variables to a smaller number of variable clusters. PROC VARCLUS clusters variables by finding groups of variables that are as correlated as possible among themselves and as uncorrelated as possible with variables in other clusters [26]. As input to PROC VARCLUS, we imputed a covariance matrix in SAS (Version 9.2). At this stage we decided to exclude items with a relatively high frequency of missing data. Missing data principally occurred because many respondents were not eligible to answer several item sets that were contingent on the individual receiving a specific service in the past 2 years or that concerned relatively low frequency events, such as a hospitalization or an emergency department visit. We excluded items from the analysis for which fewer than 40 % of the participants were eligible to answer. Our rationale for this decision was twofold. First, we did not want to excessively compromise the size of the study sample for psychometric testing. Second, we wanted to design survey scales that would be applicable to a wide population of patients and not just the relatively small proportion with the highest care needs.
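The item-exclusion rule described above can be illustrated with a minimal Python sketch. This is not the code used in the study (the analysis was run in SAS); the function names, the toy item names, and the simplification that a missing answer always means "not eligible" are assumptions made for illustration only.

```python
from typing import Dict, List, Optional

# Hypothetical constant mirroring the 40 % eligibility rule described in the text.
MIN_ELIGIBLE_FRACTION = 0.40


def eligible_fraction(responses: List[Optional[int]]) -> float:
    """Share of respondents with a non-missing answer to one item."""
    if not responses:
        return 0.0
    answered = sum(1 for r in responses if r is not None)
    return answered / len(responses)


def select_items(data: Dict[str, List[Optional[int]]],
                 threshold: float = MIN_ELIGIBLE_FRACTION) -> List[str]:
    """Keep items answered by at least `threshold` of respondents."""
    return [name for name, responses in data.items()
            if eligible_fraction(responses) >= threshold]


# Toy data (invented): None marks a respondent who was not eligible for the item.
toy = {
    "conflicting_info": [2, 3, None, 1, 4],              # 4 of 5 answered
    "hospital_discharge": [None, None, 5, None, None],   # 1 of 5 answered
}
print(select_items(toy))
```

In this toy example only the first item would be retained, because the second was answered by fewer than 40 % of respondents.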

The VARCLUS model suggested several clusters of items that were the basis for a final confirmatory factor analysis model. Because VARCLUS does not provide a means of assessing the empirical fit of the cluster solution, we used a confirmatory factor analysis model to determine whether the VARCLUS solution provided a reasonable fit to the data.

For the confirmatory factor analysis, we used Mplus Version 5.21 with the same imputed covariance matrix as with PROC VARCLUS to test whether the items loaded on their theorized constructs, using 0.32 as the minimum acceptable value for factor loading, indicating that the item shares at least 10 % of its variation with the factor. Decisions on the adequacy of model fit to the hypothesized clusters were guided by the comparative fit index and the root mean square error of approximation, as well as modification indices. For all final scales, we calculated the internal-consistency reliability and examined the item-scale correlations corrected for overlap, to determine if these met a minimal criterion of being greater than 0.30. For internal consistency reliability, we used Cronbach’s alpha and considered an alpha reliability of 0.70 or greater to be acceptable. Values for final scales were computed as the mean of all scale items, with the minimum and maximum possible range of all item and scale scores being 1 and 6, respectively. Items worded in a negative fashion were reverse coded when computing scales, so that higher values for all items reflected better care integration.
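The scoring and reliability steps described above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the study's code: the function names, the toy responses, and the choice of which item is negatively worded are all hypothetical, and the sketch assumes complete cases (no missing answers).

```python
from statistics import mean, variance
from typing import List, Sequence

SCALE_MIN, SCALE_MAX = 1, 6  # 1 = never ... 6 = always


def reverse_code(value: int) -> int:
    """Flip a negatively worded item so that higher means better integration."""
    return SCALE_MIN + SCALE_MAX - value


def scale_score(items: Sequence[int]) -> float:
    """Scale value for one respondent: the mean of that respondent's items."""
    return mean(items)


def cronbach_alpha(rows: List[List[int]]) -> float:
    """Cronbach's alpha; rows holds one list of item answers per respondent."""
    k = len(rows[0])
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation, written out to avoid version-specific helpers."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def corrected_item_total(rows: List[List[int]], j: int) -> float:
    """Correlate item j with the sum of the other items (corrected for overlap)."""
    item = [row[j] for row in rows]
    rest = [sum(row) - row[j] for row in rows]
    return pearson(item, rest)


# Toy responses (invented): 4 respondents x 3 items; item 1 is assumed negative.
raw = [[2, 5, 3], [1, 6, 2], [3, 4, 3], [2, 5, 1]]
NEGATIVE_ITEMS = {1}
coded = [[reverse_code(v) if j in NEGATIVE_ITEMS else v
          for j, v in enumerate(row)] for row in raw]

scores = [scale_score(row) for row in coded]
print("scale scores:", scores)
print("alpha:", round(cronbach_alpha(coded), 3))
print("item-rest r:", [round(corrected_item_total(coded, j), 3) for j in range(3)])
```

Reverse coding is applied before any statistic is computed, so that alpha and the item-rest correlations are calculated on items that all point in the same direction.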

Preliminary test of construct validity

Rigorously testing construct validity was not one of the primary aims for this stage of scale development. However, we explored construct validity by testing the association of the scales with the insurance status of respondents. We hypothesized that patients with public insurance (Medicaid or Medicare) would report a less integrated experience of care than patients with private insurance. In California, because of the strong presence of managed care in the private health insurance market, including the vertically integrated Kaiser Permanente organization, patients with private insurance might be expected to experience care that is more proactively coordinated than adults with Medicaid and Medicare, which at the time of the survey were not predominantly managed care models in California. Our survey did not specifically ask about enrollment in a managed care plan, but did ask about the overall type of insurance.

Results

Surveys were completed by 317 individuals, representing 64 % of contacted telephone numbers. The cooperation rate among those contacted and eligible was 87 %, and the overall response rate among those estimated to be eligible was 56 %. If eligibility rates were similar in participants who could not be reached, the response rate of eligible participants would be 63 % (12 % refusal rate; 87 % cooperation rate) [27]. The characteristics of respondents are shown in Table 1. The majority of respondents were female (62 %), of white race-ethnicity (80 %), and had graduated from college (61 %); 39 % were 65 years of age or older, and 21 % reported being in fair or poor health. Forty-five percent had private insurance, 43 % had Medicare or Medicaid coverage, 12 % had other types of insurance and 2 % were uninsured.

Most respondents reported having a regular source of care (N = 296, 93 %), a blood test or other diagnostic test in the prior 12 months (N = 305, 96 %), an attempt to call their regular doctor’s office in the prior 12 months (N = 258, 81 %), and a visit to a specialist in the prior 2 years (N = 229, 72 %). Far fewer than half reported seeing a doctor at their regular place of care other than their own regular doctor in the prior 12 months (N = 110, 35 %), an attempt to email their regular doctor’s office in the prior 12 months (N = 99, 31 %), an overnight hospitalization in the prior 2 years (N = 57, 18 %), an emergency department visit in the prior 2 years (N = 110, 35 %), or use of community service organizations such as Meals on Wheels or wellness programs (N = 48, 15 %).

The number of participants responding to each of the integrated care items is shown in Additional file 1. As noted in the methods section, because a distinct minority of patients had recent experiences with seeing more than one doctor at their regular place of care, email communication with doctors, inpatient hospital care, and emergency department care, we excluded items addressing these care experiences from the factor analysis in order to test scales applicable to the majority of respondents. Table 2 lists the 26 items with sufficient numbers of respondents to be included in the VARCLUS, grouped by six hypothesized domains. Of the 26 items included in the VARCLUS, 21 loaded on five scales (Table 3). Items in one hypothesized domain (continuous/proactive action between visits) did not form a cluster, and one item in the general coordination domain did not cluster with its domain. The final five domains thus differed from the hypothesized domains slightly. The six items hypothesized to measure coordination between care teams distinguished themselves in terms of the direction of coordination - splitting into coordination between primary care doctors and specialists, and between specialists and primary care doctors. Items in the hypothesized “general coordination” and “coordination within care team” domain combined into one cluster we relabeled “coordination within and between care teams”.

For the confirmatory factor analysis, we therefore tested the 5-domain structure identified in the final VARCLUS. All 21 items loaded on their theorized constructs, using 0.32 as the minimum acceptable value for factor loading. The comparative fit index (CFI) was 0.889 and the root mean square error of approximation (RMSEA) was 0.080.
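For readers less familiar with these statistics, the relationships can be written out as follows. The first line shows why a standardized loading of 0.32 corresponds to roughly 10 % shared variance. The second gives commonly cited textbook definitions of the two fit indices, where M is the tested model, B the baseline (null) model, and N the sample size. These general forms are shown as an assumption: the source does not state the exact formulas applied by the software, and some programs use N rather than N − 1 in the RMSEA denominator.

```latex
% Shared variance implied by a standardized loading \lambda
\lambda^{2} = 0.32^{2} \approx 0.102 \quad (\text{about } 10\,\%)

% Commonly cited definitions of the fit indices
\mathrm{RMSEA} = \sqrt{\frac{\max(\chi^{2}_{M} - df_{M},\, 0)}{df_{M}\,(N - 1)}}
\qquad
\mathrm{CFI} = 1 - \frac{\max(\chi^{2}_{M} - df_{M},\, 0)}
                        {\max(\chi^{2}_{B} - df_{B},\; \chi^{2}_{M} - df_{M},\; 0)}
```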

The “coordination within and between care teams” scale comprised 7 items (received conflicting information, needed to repeat self, confused because of conflicting information, doctors know medical history, staff knows medical history, time wasted because of poor coordination/organization of care, trouble getting doctors coordinated). The “navigation” scale comprised 6 items (help scheduling follow-up appointments, made arrangements for visits with regular doctor, made arrangements for visits with other doctors, made arrangements for lab and other tests, made arrangements in a timely manner, made arrangements at preferred location). The “communication between specialist and primary care doctor” scale comprised 3 items (specialists gave information to primary doctor, primary care doctor seemed informed after specialist visit, and primary care doctor talked to patient about specialist visit). Only two items loaded on the “coordination with community resources” dimension (discussed different places for help/treatment, help with other resources). The “communication between primary care doctor and specialist” scale also had three items (specialist had all basic information, patient knew why needed specialist visit, specialist had enough information from primary care doctor).

Table 4 shows the summary statistics for the five scales including number with complete data, unstandardized alpha, the range of item-scale correlations corrected for overlap, the mean and standard deviation, and the interquartile range. Internal-consistency reliabilities ranged from 0.55 to 0.87; alphas were greater than 0.70 for four of the five scales, but did not meet our minimum criterion of 0.70 for the “coordination with community resources” scale. Mean scale scores were relatively low, ranging from 1.9 (coordination within and between care teams) to 2.8 (communication between specialist and primary care doctor). These means represent ratings of integration falling in the “almost never” or “sometimes” occurring range.

Table 5 presents preliminary construct validity results. As hypothesized, there was a consistent trend across all scales for the mean ratings of integration to be higher among patients with private insurance than with public insurance, with the difference achieving statistical significance for “coordination with community resources”.

Discussion

We succeeded in developing five scales to measure several dimensions of patients’ experience of care integration that have acceptable psychometric and factor loading properties. Four of the scales demonstrate excellent internal consistency reliability; one scale (coordination with community resources) had inadequate internal consistency, possibly because it only consisted of two items. Nonetheless, this scale demonstrated significant differences between patients with private and public insurance indicating some degree of validity, suggesting that the internal-consistency statistic may be an incomplete estimate of its reliability. All these scales measure dimensions of integrated care that generally align with the conceptual model developed by Singer and colleagues and were endorsed by patients in our prior qualitative study of patients’ experience of care integration [4, 8]. The “coordination within and between care teams” scale appears to represent the patient’s overall sense of integration, including the adequacy of informational continuity and care planning when multiple doctors and other personnel participate in a patient’s care. We hypothesized that all items addressing communication between the patient’s primary care physician and specialists participating in the patient’s care would fall into a single construct. However, the VARCLUS indicated that there were two distinct constructs in this domain, distinguished by the direction of the communication. One scale included items on communication to the specialist from the patient’s primary care physician and others involved in the patient’s care, and the other included items on communication from the specialist back to the primary care physician. The “navigation” scale straddles two of the domains in the Singer conceptual model: patient-centered and continuous proactive and responsive action between visits. We labeled this scale “navigation” because it captured a logistical aspect of care integration involving patients getting assistance with scheduling services and tests in a patient-centered, coordinated manner.

The low mean scores on all scales suggest that the patients we studied are experiencing care that is anything but highly integrated from their point of view. The highest mean score - 2.8 for “communication between specialist and primary care doctor” - falls below a value of 3 which would represent an experience of “sometimes” experiencing care in that domain as being well integrated. We used response categories that assessed the frequency with which various features of care were experienced (a never-to-always scale) rather than assessing their ratings of the quality of those experiences (e.g., a poor-to-excellent scale) because of our focus on what occurred rather than patients’ evaluation of their experience. We believe that most health systems would consider it inadequate for the average patient to experience care being integrated only some of the time and not on a consistent basis.

We hoped to measure additional domains of care integration, such as coordination involving hospital and emergency department visits. However, most individuals in the community do not have hospitalizations or emergency department visits within the prior 1 to 2 years, making it difficult to include items addressing these care components in scales of overall care integration applicable to the general population. Even using inclusion criteria which were designed to target patients with greater health care needs, only about one-third or fewer of the patients in our sample had recent encounters in several of the health care areas we considered relevant for understanding care integration. We could have created separate scales focusing only on these less frequent types of care experiences, but our goal for this first generation of scale development was to emphasize scales applicable to a large segment of the population who are active users of health care.

Our study has several limitations. Our questionnaire items should not be considered an exhaustive set of items for measuring important aspects of the experience of care integration, and there is undoubtedly opportunity to expand upon and further refine scales on care integration domains. We surveyed patients in one geographic region of California, and their experiences and responses may not be representative of individuals living in other regions. Survey participants were age 40 years or older; responses may not reflect pediatric or geriatric populations’ experiences with care. We did not design our questionnaire and sample size to perform a rigorous assessment of construct validity or test its use in a real-world setting. In real-world situations, patients would not be consented to participate in the survey for research purposes or receive an incentive, although they likely would still answer questions such as these outside of the clinical encounter, for example on a phone call, and would not be selected at random. While the trends for an association between type of insurance and patient reports of care integration are suggestive, further study will be needed to more systematically examine the construct validity of these scales.

Although the response rate was relatively high for a random digit dial telephone survey, there is always a possibility that response bias may have influenced our findings. Compared with Census data, our sample also included a higher percentage of respondents who were college-educated, white, and older [28]. However, similar findings have resulted from other household telephone-based surveys. Younger people, minorities, and people of lower SES are more likely to be found in cell-phone-only homes, and their absence may bias the results of a landline-based survey [29]. The major limitation of our study is that we were not able to test further the construct validity of the scales or test predictive validity. We also note that domains involving less frequently used services are difficult to assess. Coordination with community resources and communication between providers may be highly situational. For this reason additional measures from existing validated surveys may be added to supplement these scales. Future research should pursue validation studies, for example by investigating whether low scores on these self-report scales of care integration predict outcomes such as patients changing their regular source of care, duplication of diagnostic tests, preventable hospitalizations, and adverse medication events.

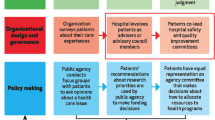

Our study has several policy implications. Foremost is the suggestion that health systems should engage patients in order to understand their experiences as systems attempt to deliver care that is more seamlessly coordinated and patient-centered. Much of the effort in care integration in the US has focused on structurally integrating various delivery components under a more corporate model [30], as well as placing great hope in health information technology as a solution to poorly coordinated care [31, 32]. Although we did not ascertain which patients in our study may have received care in more structurally integrated systems such as Kaiser Permanente or whether their caregivers used electronic health records, the California health care market is distinguished by a high prevalence of managed care, structurally integrated delivery systems, and adoption of electronic health records [33]. The relatively low ratings that most respondents gave to their experience of care integration suggest that systems should carefully assess whether strategies for promoting integration are making patients experience care as more functionally integrated and enhancing patients’ perceptions that everyone involved in their care is “on the same team” [8]. Engaging patients in advisory councils and as partners on care redesign teams is one approach that may help health systems to tune into the patient voice and patient insights about what makes care truly patient-centered and well-coordinated [34]. If these patient-reported scales of care integration are further validated, health systems may find them helpful in identifying current strengths and weaknesses in these aspects of care coordination. These scales also may provide a tool for assessing whether interventions to improve health system performance are producing improvements in patients’ experience of care integration.

Having five scales that measure several dimensions of patients’ experience of care integration may allow us, in broader settings, to further explore which parts of integrated care matter most. After the scales are further evaluated and tested in real-world settings, we may gain further insights into how the instrument can be used and linked to outcomes. Expanding this evidence base may require additional work and inquiry.

Several areas of future research are important to note. It is clear that a complete understanding of integrated care will require a combination of perspectives from patients, clinicians, and administrators. Even then, several survey instruments may need to be combined to measure integration adequately. Future studies may need to assess how to combine scales across perspectives and instruments.

Conclusions

In conclusion, we have developed several patient self-report scales to measure important domains of the patient experience of integrated care. These scales may prove useful in efforts to evaluate health care reforms more systematically. The scales may also provide a starting point for researchers seeking to study, evaluate, and analyze delivery models that strive to promote greater coordination of health services. The end goal is to better understand the common elements that can provide patients with a more seamless experience of care and promote optimal health outcomes.

Abbreviations

CAHPS, consumer assessment of health plans; CFI, comparative fit index; MeSH, medical subject headings; RMSEA, root mean square error of approximation; UCSF, University of California, San Francisco; WHO, World Health Organization.

References

Kodner DL. All together now: a conceptual exploration of integrated care. Healthc Q. 2009;13(Spec No):6–15.

Budetti PP, Shortell SM, Waters TM, Alexander JA, Burns LR, Gillies RR, Zuckerman H. Physician and health system integration. Health Aff. 2002;21(1):203–10.

Burns LR, Pauly MV. Integrated delivery networks: a detour on the road to integrated health care? Health Aff. 2002;21(4):128–43.

Singer SJ, Burgers J, Friedberg M, Rosenthal MB, Leape L, Schneider E. Defining and measuring integrated patient care: promoting the next frontier in health care delivery. Med Care Res Rev. 2011;68(1):112–27.

Sun X, Tang W, Ye T, Zhang Y, Wen B, Zhang L. Integrated care: a comprehensive bibliometric analysis and literature review. Int J Integr Care. 2014;14:e017.

Grone O, Garcia-Barbero M, WHO European Office for Integrated Health Care Services. Integrated care: a position paper of the WHO European Office for Integrated Health Care Services. Int J Integr Care. 2001;1:e21.

Jones R, Van den Bruel A, Gerada C, Hamilton W, Kendrick T, Watt G. What should integrated care look like … ? Br J Gen Pract. 2015;65(632):149–51.

Walker KL, K, Choi J, Schmittdiel J, Stewart AL, Grumbach K. Patient perceptions of integrated care: confused by the term, clear on the concept. Int J Integr Care. 2012;13:e004.

Malouin RA, Starfield B, Sepulveda MJ. Evaluating the tools used to assess the medical home. Manag Care. 2009;18(6):44–8.

Elwyn G, Edwards A, Mowle S, Wensing M, Wilkinson C, Kinnersley P, Grol R. Measuring the involvement of patients in shared decision-making: a systematic review of instruments. Patient Educ Couns. 2001;43(1):5–22.

Flocke SA. Measuring attributes of primary care: development of a new instrument. J Fam Pract. 1997;45(1):64–74.

Mead NBP. Measuring patient-centeredness: a comparison of three observational-based instruments. Patient Educ Couns. 2000;39(1):71–80.

Safran DG, Kosinski M, Tarlov AR, Rogers WH, Taira DH, Lieberman N, Ware JE. The Primary Care Assessment Survey: tests of data quality and measurement performance. Med Care. 1998;36(5):728–39.

Shi L, Starfield B. The effect of primary care physician supply and income inequality on mortality among blacks and whites in US metropolitan areas. Am J Public Health. 2001;91(8):1246–50.

Stewart AL, Napoles-Springer AM, Gregorich SE, Santoyo-Olsson J. Interpersonal processes of care survey: patient-reported measures for diverse groups. Health Serv Res. 2007;42(3 Pt 1):1235–56.

Benzer JK, Sullivan JL, Williams S, Burgess JF. One-year cost implications of using mental health care after discharge from a general medical hospitalization. Psychiatr Serv. 2012;63(7):672–8.

Chang ET, Rose DE, Yano EM, Wells KB, Metzger ME, Post EP, Lee ML, Rubenstein LV. Determinants of readiness for primary care-mental health integration (PC-MHI) in the VA Health Care System. J Gen Intern Med. 2013;28(3):353–62.

Miller BF, Druss B. The role of family physicians in mental health care delivery in the United States: implications for health reform. J Am Board Fam Med. 2013;26(2):111–3.

Peek CJ. In: AHRQ, editor. Lexicon for Behavioral Health and Primary Care Integration: Concepts and Definitions Developed by Expert Consensus. 2013.

The Consumer Assessment of Healthcare Providers and Systems Surveys [www.ahrq.gov/cahps]. Accessed 9 Sept 2015.

Schoen C, Osborn R, Squires D, Doty MM, Pierson R, Applebaum S. How health insurance design affects access to care and costs, by income, in eleven countries. Health Aff. 2010;29(12):2323–34.

Safran DG, Rogers WH. Survey-based measures of physician-level performance. Commissioned by NCQA for its Physician-Level Performance Measurement Conference.

Borowsky SJND, Fortney JC, Hedeen AN, Bradley JL, Chapko MK. VA community-based outpatient clinics: performance measures based on patient perceptions of care. Med Care. 2002;40(7):578–86.

Shi LSB. Validating the adult primary care assessment tool. J Fam Pract. 2001;50(2):161.

California Health Interview Survey [http://healthpolicy.ucla.edu/Pages/home.aspx]. Accessed 9 Sept 2015.

Muthen BDK. A comparison of some methodologies for the factor analysis of non-normal Likert variables. Br J Math Stat Psychol. 1985;18(38):171–89.

Frankel L. The report of the CASRO task force on response rates. Cambridge: Marketing Science Institute; 1983.

California Health Interview Survey (CHIS) 2009 Methodology Series: Report 1 - Sample Design

Blumberg SJ LJ. Wireless substitution: early release of estimates from the National Health Interview Survey, January-June 2010. National Center for Health Statistics; 2010. http://www.cdc.gov/nchs/nhis/index.htm.

Lewis VA, Colla CH, Carluzzo KL, Kler SE, Fisher ES. Accountable care organizations in the United States: market and demographic factors associated with formation. Health Serv Res. 2013;48(6 Pt 1):1840–58.

Graetz I, Reed M, Shortell SM, Rundall TG, Belows J, Hsu J. The association between EHRs and care coordination varies by team cohesion. Health Serv Res. 2014;49(1 Pt 2):438–52.

Kuperman GJ, McGowan JJ. Potential unintended consequences of health information exchange. J Gen Intern Med. 2013;28(12):1663–6.

Rittenhouse DR, Grumbach K, O’Neil EH, Dower C, Bindman A. Physician organization and care management in California: from cottage to Kaiser. Health Aff. 2004;23(6):51–62.

Cosgrove DM, Fisher M, Gabow P, Gottlieb G, Halvorson GC, James BC, et al. Ten strategies to lower costs, improve quality, and engage patients: the view from leading health system CEOs. Health Aff. 2013;32(2):321–7.

Acknowledgements

The authors thank Alanna Labat for her support in the early stages of this work. We also thank Steve Gregorich, PhD and Michael Acree, PhD for their statistical consultation and support. All authors have read and approved the final version of the manuscript. This study was supported by a grant from The Aetna Foundation (grant number 4051077), a national foundation based in Hartford, Connecticut that supports projects to promote wellness, health and access to high quality health care for everyone. Additional support was provided by the National Institute on Aging, National Institutes of Health (grant P30AG015272), under the Resource Centers for Minority Aging Research program, to the UCSF Center for Aging in Diverse Communities, and by the National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health, through UCSF-CTSI Grant Number UL1 RR024131. The contents are solely the responsibility of the authors and do not necessarily represent the official views of The Aetna Foundation, its directors, officers, or staff. Similarly, this work was not funded by the Patient Centered Outcomes Research Institute, nor does it represent the official views of that organization.

Availability of data and materials

We are happy to provide a copy of the survey items and de-identified data upon request.

Authors’ contributions

KW drafted the original study protocol, conducted the analyses and drafted the manuscript. AS and KG reviewed and finalized the study protocol, reviewed all analyses and edited the manuscript. KW, AS, and KG conceived of the study, participated in its design and coordination, and helped to finalize the manuscript. All authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Additional file

Additional file 1: Survey Items. (DOCX 30 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Walker, K.O., Stewart, A.L. & Grumbach, K. Development of a survey instrument to measure patient experience of integrated care. BMC Health Serv Res 16, 193 (2016). https://doi.org/10.1186/s12913-016-1437-z

DOI: https://doi.org/10.1186/s12913-016-1437-z