Abstract

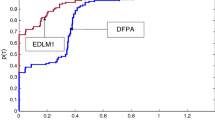

Two new conjugate residual algorithms are presented and analyzed in this article for systems of nonlinear equations whose underlying functions are continuous and monotone. The methods are adaptations of the scheme presented by Narushima et al. (SIAM J Optim 21: 212–230, 2011). By employing the well-known conjugacy condition of Dai and Liao (Appl Math Optim 43(1): 87–101, 2001), two different search directions are obtained and combined with the projection technique. Besides being suitable for smooth monotone nonlinear problems, the schemes can also handle non-smooth ones. Under basic assumptions, global convergence of the schemes is established. The reported numerical experiments indicate that the methods are promising.
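To make the projection idea concrete, here is a minimal NumPy sketch of a generic derivative-free conjugate gradient projection method for a monotone system \(F(x)=0\). It is not the authors' Algorithm 3.1 or 3.2: the Dai–Liao-type parameter \(\beta_k=\langle F_{k+1},y_k-t\,s_k\rangle/\langle d_k,y_k\rangle\), the backtracking rule \(-\langle F(x_k+\alpha d_k),d_k\rangle\ge\sigma\alpha\Vert d_k\Vert^2\), the restart safeguard, and all parameter values (`t`, `sigma`, `rho`, `tol`) are illustrative assumptions. Only the hyperplane projection step follows the standard technique of Solodov and Svaiter.

```python
import numpy as np


def projection_cg(F, x0, t=1.0, sigma=1e-4, rho=0.5, tol=1e-6, max_iter=1000):
    """Generic derivative-free CG projection sketch for monotone F(x) = 0.

    Illustrative only (assumed Dai-Liao-type direction and line search);
    this is not the authors' Algorithm 3.1 or 3.2.
    Returns (x, F(x), number of iterations performed).
    """
    x = np.asarray(x0, dtype=float)
    Fx = F(x)
    d = -Fx
    it = 0
    while it < max_iter and np.linalg.norm(Fx) > tol:
        it += 1
        # Backtracking: accept alpha once -<F(x + alpha d), d> >= sigma * alpha * ||d||^2.
        alpha = 1.0
        z = x + alpha * d
        Fz = F(z)
        while -(Fz @ d) < sigma * alpha * (d @ d) and alpha > 1e-12:
            alpha *= rho
            z = x + alpha * d
            Fz = F(z)
        if np.linalg.norm(Fz) <= tol:  # the trial point already solves the system
            return z, Fz, it
        # Project x onto the hyperplane {u : <F(z), u - z> = 0}, which separates
        # x from the solution set when F is monotone.
        x_new = x - ((Fz @ (x - z)) / (Fz @ Fz)) * Fz
        F_new = F(x_new)
        # Dai-Liao-type update with s = x_new - x and y = F_new - Fx.
        s, y = x_new - x, F_new - Fx
        dy = d @ y
        beta = (F_new @ (y - t * s)) / dy if abs(dy) > 1e-12 else 0.0
        d = -F_new + beta * d
        # Restart safeguard: keep a sufficient descent direction.
        if F_new @ d > -1e-4 * (F_new @ F_new):
            d = -F_new
        x, Fx = x_new, F_new
    return x, Fx, it
```

Applied to the strictly convex function \(F_i(x)=e^{x_i}-1\) (Problem 5.1 in Appendix 3) from \(x_0=(0.5,\ldots,0.5)\), the sketch should drive the residual below `tol`, since the unique zero is \(x^*=0\).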


References

Hively, G.A.: On a class of nonlinear integral equations arising in transport theory. SIAM J. Math. Anal. 9(5), 787–792 (1978)

Xiao, Y., Zhu, H.: A conjugate gradient method to solve convex constrained monotone equations with applications in compressive sensing. J. Math. Anal. Appl. 405, 310–319 (2013)

Halilu, A.S., Majumder, A., Waziri, M.Y., Ahmed, K.: Signal recovery with convex constrained nonlinear monotone equations through conjugate gradient hybrid approach. Math. Comp. Simul. 187, 520–539 (2021)

Halilu, A.S., Majumder, A., Waziri, M.Y., Awwal, A.M., Ahmed, K.: On solving double direction methods for convex constrained monotone nonlinear equations with image restoration. Comput. Appl. Math. 40, 1–27 (2021)

Waziri, M.Y., Ahmed, K., Halilu, A.S., Sabiu, J.: Two new Hager-Zhang iterative schemes with improved parameter choices for monotone nonlinear systems and their applications in compressed sensing. RAIRO Oper. Res. https://doi.org/10.1051/ro/2021190

Waziri, M.Y., Ahmed, K., Halilu, A.S.: A modified PRP-type conjugate gradient projection algorithm for solving large-scale monotone nonlinear equations with convex constraint. J. Comput. Appl. Math. 407, 114035 (2022)

Waziri, M.Y., Ahmed, K., Halilu, A.S., Awwal, A.M.: Modified Dai-Yuan iterative scheme for nonlinear systems and its application. Numer. Algebra Control Optim. https://doi.org/10.3934/naco.2021044

Ortega, J.M., Rheinboldt, W.C.: Iterative solution of nonlinear equations in several variables. Academic Press, New York (1970)

Solodov, M.V., Iusem, A.N.: Newton-type methods with generalized distances for constrained optimization. Optimization 41(3), 257–27 (1997)

Zhao, Y.B., Li, D.: Monotonicity of fixed point and normal mappings associated with variational inequality and its application. SIAM J. Optim. 11, 962–973 (2001)

Dennis, J.E., Moré, J.J.: A characterization of superlinear convergence and its application to quasi-Newton methods. Math. Comput. 28, 549–560 (1974)

Dennis, J.E., Moré, J.J.: Quasi-Newton methods, motivation and theory. SIAM Rev. 19, 46–89 (1977)

Waziri, M.Y., Leong, W.J., Hassan, M.A.: Jacobian free-diagonal Newton's method for nonlinear systems with singular Jacobian. Malay. J. Math. Sci. 5(2), 241–255 (2011)

Halilu, A.S., Waziri, M.Y.: A transformed double step length method for solving large-scale systems of nonlinear equations. J. Numer. Math. Stoch. 9, 20–32 (2017)

Halilu, A.S., Waziri, M.Y.: Enhanced matrix-free method via double step length approach for solving systems of nonlinear equations. Int. J. Appl. Math. Res. 6, 147–156 (2017)

Halilu, A.S., Majumder, A., Waziri, M.Y., Ahmed, K., Awwal, A.M.: Motion control of the two joint planar robotic manipulators through accelerated Dai-Liao method for solving system of nonlinear equations. Eng. Comput. (2022). https://doi.org/10.1108/EC-06-2021-0317

Halilu, A.S., Waziri, M.Y.: Inexact double step length method for solving systems of nonlinear equations. Stat. Optim. Inf. Comput. 8, 165–174 (2020)

Abdullahi, H., Halilu, A.S., Waziri, M.Y.: A modified conjugate gradient method via a double direction approach for solving large-scale symmetric nonlinear systems. J. Numer. Math. Stoch. 10(1), 32–44 (2018)

Abdullahi, H., Awasthi, A.K., Waziri, M.Y., Halilu, A.S.: Descent three-term DY-type conjugate gradient methods for constrained monotone equations with application. Comput. Appl. Math. 41(1), 1–28 (2022)

Halilu, A.S., Waziri, M.Y.: Solving systems of nonlinear equations using improved double direction method. J. Nigerian Math. Soc. 32(2), 287–301 (2020)

Bouaricha, A., Schnabel, R.B.: Tensor methods for large sparse systems of nonlinear equations. Math. Program. 82, 377–400 (1998)

Fasano, G., Lampariello, F., Sciandrone, M.: A truncated nonmonotone Gauss-Newton method for large-scale nonlinear least-squares problems. Comput. Optim. Appl. 34, 343–358 (2006)

Halilu, A.S., Waziri, M.Y.: An improved derivative-free method via double direction approach for solving systems of nonlinear equations. J. Ramanujan Math. Soc. 33(1), 75–89 (2018)

Levenberg, K.: A method for the solution of certain non-linear problems in least squares. Q. Appl. Math. 2, 164–166 (1944)

Waziri, M.Y., Sabiu, J.: A derivative-free conjugate gradient method and its global convergence for solving symmetric nonlinear equations. Int. J. Math. Math. Sci. (2015). https://doi.org/10.1155/2015/961487

Zhang, J., Wang, Y.: A new trust region method for nonlinear equations. Math. Methods Oper. Res. 58, 283–298 (2003)

Sabiu, J., Shah, A., Waziri, M.Y., Ahmed, K.: Modified Hager-Zhang conjugate gradient methods via singular value analysis for solving monotone nonlinear equations with convex constraint. Int. J. Comput. Meth. 18, 2050043 (2021)

Waziri, M.Y., Usman, H., Halilu, A.S., Ahmed, K.: Modified matrix-free methods for solving systems of nonlinear equations. Optimization 70, 2321–2340 (2021)

Babaie-Kafaki, S., Ghanbari, R.: A descent family of Dai-Liao conjugate gradient methods. Optim. Meth. Softw. 29(3), 583–591 (2013)

Livieris, I.E., Pintelas, P.: Globally convergent modified Perry's conjugate gradient method. Appl. Math. Comput. 218, 9197–9207 (2012)

Andrei, N.: Open problems in conjugate gradient algorithms for unconstrained optimization. Bull. Malays. Math. Sci. Soc. 34(2), 319–330 (2011)

Dai, Y.H., Yuan, Y.X.: Nonlinear conjugate gradient methods. Shanghai Scientific and Technical Publishers, Shanghai (2000)

Hager, W.W., Zhang, H.: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2(1), 35–58 (2006)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (1999)

Sun, W., Yuan, Y.X.: Optimization Theory and Methods: Nonlinear Programming. Springer, New York (2006)

Perry, A.: A modified conjugate gradient algorithm. Oper. Res. Tech. Notes 26(6), 1073–1078 (1978)

Dai, Y.H., Liao, L.Z.: New conjugacy conditions and related nonlinear conjugate gradient methods. Appl. Math. Optim. 43(1), 87–101 (2001)

Babaie-Kafaki, S., Ghanbari, R.: The Dai-Liao nonlinear conjugate gradient method with optimal parameter choices. Eur. J. Oper. Res. 234, 625–630 (2014)

Babaie-Kafaki, S., Ghanbari, R.: Two optimal Dai-Liao conjugate gradient methods. Optimization 64, 2277–2287 (2015)

Babaie-Kafaki, S., Ghanbari, R.: A descent extension of the Polak–Ribière–Polyak conjugate gradient method. Comput. Math. Appl. 68, 2005–2011 (2014)

Fatemi, M.: An optimal parameter for Dai-Liao family of conjugate gradient methods. J. Optim. Theory Appl. 169(2), 587–605 (2016)

Waziri, M.Y., Ahmed, K., Sabiu, J.: A Dai-Liao conjugate gradient method via modified secant equation for system of nonlinear equations. Arab. J. Math. 9, 443–457 (2020)

Waziri, M.Y., Ahmed, K., Sabiu, J.: Descent Perry conjugate gradient methods for systems of monotone nonlinear equations. Numer. Algor. 85, 763–785 (2020)

Arazm, M.R., Babaie-Kafaki, S., Ghanbari, R.: An extended Dai-Liao conjugate gradient method with global convergence for nonconvex functions. Glas. Mat. 52(72) (2017)

Babaie-Kafaki, S., Ghanbari, R., Mahdavi-Amiri, N.: Two new conjugate gradient methods based on modified secant equations. J. Comput. Appl. Math. 234(5), 1374–1386 (2010)

Ford, J.A., Narushima, Y., Yabe, H.: Multi-step nonlinear conjugate gradient methods for unconstrained minimization. Comput. Optim. Appl. 40(2), 191–216 (2008)

Li, G., Tang, C., Wei, Z.: New conjugacy condition and related new conjugate gradient methods for unconstrained optimization. J. Comput. Appl. Math. 202(2), 523–539 (2007)

Livieris, I.E., Pintelas, P.: A new class of spectral conjugate gradient methods based on a modified secant equation for unconstrained optimization. J. Comput. Appl. Math. 239, 396–405 (2013)

Livieris, I.E., Pintelas, P.: A descent Dai-Liao conjugate gradient method based on a modified secant equation and its global convergence. ISRN Comput. Math. (2012). https://doi.org/10.5402/2012/435495

Liu, D.Y., Shang, Y.F.: A new Perry conjugate gradient method with the generalized conjugacy condition. In: Computational Intelligence and Software Engineering (CiSE), 2010 International Conference on Issue Date: 10–12 (2010)

Liu, D.Y., Xu, G.Q.: A Perry descent conjugate gradient method with restricted spectrum, pp. 1–19. Optimization Online, Nonlinear Optimization (unconstrained optimization) (2011)

Andrei, N.: Accelerated adaptive Perry conjugate gradient algorithms based on the self-scaling BFGS update. J. Comput. Appl. Math. 325, 149–164 (2017)

Cheng, W.: A PRP type method for systems of monotone equations. Math. Comput. Model. 50, 15–20 (2009)

Polyak, B.T.: The conjugate gradient method in extremal problems. USSR Comput. Math. Math. Phys. 9(4), 94–112 (1969)

Polak, E., Ribière, G.: Note sur la convergence de méthodes de directions conjuguées. Rev. Fr. Inform. Rech. Oper. 16, 35–43 (1969)

Solodov, M.V., Svaiter, B.F.: A globally convergent inexact Newton method for systems of monotone equations. In: Reformulation: Nonsmooth, Piecewise Smooth, Semismooth and Smoothing Methods, pp. 355–369. Kluwer Academic Publishers (1999)

Yu, G.: A derivative-free method for solving large-scale nonlinear systems of equations. J. Ind. Manag. Optim. 6, 149–160 (2010)

Yu, G.: Nonmonotone spectral gradient-type methods for large-scale unconstrained optimization and nonlinear systems of equations. Pac. J. Optim. 7, 387–404 (2011)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton's method. SIAM J. Numer. Anal. 23, 707–716 (1986)

Li, D.H., Fukushima, M.: A derivative-free line search and global convergence of Broyden-like method for nonlinear equations. Optim. Methods Softw. 13, 583–599 (2000)

Waziri, M.Y., Ahmed, K., Sabiu, J.: A family of Hager-Zhang conjugate gradient methods for system of monotone nonlinear equations. Appl. Math. Comput. 361, 645–660 (2019)

Waziri, M.Y., Ahmed, K., Sabiu, J., Halilu, A.S.: Enhanced Dai-Liao conjugate gradient methods for systems of monotone nonlinear equations. SeMA 78, 15–51 (2021)

Waziri, M.Y., Ahmed, K.: Two descent Dai-Yuan conjugate gradient methods for systems of monotone nonlinear equations. J. Sci. Comput. 90, 36 (2022). https://doi.org/10.1007/s10915-021-01713-7

Dai, Y.H., Yuan, Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10, 177–182 (1999)

Narushima, Y., Yabe, H., Ford, J.A.: A three-term conjugate gradient method with sufficient descent property for unconstrained optimization. SIAM J. Optim. 21, 212–230 (2011)

Zhang, L., Zhou, W., Li, D.H.: A descent modified Polak–Ribière–Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 26, 629–640 (2006)

Zhang, L., Zhou, W., Li, D.H.: Global convergence of a modified Fletcher-Reeves conjugate gradient method with Armijo-type line search. Numer. Math. 104, 561–572 (2006)

Zhang, L., Zhou, W., Li, D.H.: Some descent three-term conjugate gradient methods and their global convergence. Optim. Meth. Soft. 22, 697–711 (2007)

Andrei, N.: A simple three-term conjugate gradient algorithm for unconstrained optimization. J. Comput. Appl. Math. 241, 19–29 (2013)

Arzuka, I., Abu Bakar, M., Leong, W.J.: A scaled three-term conjugate gradient method for unconstrained optimization. J. Inequal. Appl. 2016, 325 (2016). https://doi.org/10.1186/s13660-016-1239-1

Kobayashi, H., Narushima, Y., Yabe, H.: Descent three-term conjugate gradient methods based on secant conditions for unconstrained optimization. Optim. Meth. Soft. (2017). https://doi.org/10.1080/10556788.2017.1338288

Liu, J., Du, S.: Modified three-term conjugate gradient method and its applications. Math. Prob. Eng. (2019). https://doi.org/10.1155/2019/5976595

Liu, J., Wu, X.: New three-term conjugate gradient method for solving unconstrained optimization problems. ScienceAsia 40, 295–300 (2014)

Wu, G., Li, Y., Yuan, G.: A three-term conjugate gradient algorithm with quadratic convergence for unconstrained optimization problems. Math. Prob. Eng. (2018). https://doi.org/10.1155/2018/4813030

Nocedal, J.: Updating quasi-Newton matrices with limited storage. Math. Comput. 35, 773–782 (1980)

Shanno, D.F.: Conjugate gradient methods with inexact searches. Math. Oper. Res. 3, 244–256 (1978)

Zhang, J., Xiao, Y., Wei, Z.: Nonlinear conjugate gradient methods with sufficient descent condition for large-scale unconstrained optimization. Math. Prob. Eng. (2009). https://doi.org/10.1155/2009/243290

Andrei, N.: A modified Polak–Ribière–Polyak conjugate gradient algorithm for unconstrained optimization. Optimization 60, 1457–1471 (2011)

Hestenes, M.R., Stiefel, E.L.: Methods of conjugate gradients for solving linear systems. J. Res. Nat. Bur. Stand. 49, 409–436 (1952)

Hager, W.W., Zhang, H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16(1), 170–192 (2005)

Dai, Y.H., Kou, C.X.: A nonlinear conjugate gradient algorithm with an optimal property and an improved Wolfe line search. SIAM J. Optim. 23(1), 296–320 (2013)

Abubakar, A.B., Kumam, P.: An improved three-term derivative-free method for solving nonlinear equations. Comput. Appl. Math. 37, 6760–6773 (2018)

Yuan, G., Zhang, M.: A three term Polak–Ribière–Polyak conjugate gradient algorithm for large-scale nonlinear equations. J. Comput. Appl. Math. 286, 186–195 (2015)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Zhou, W., Li, D.: Limited memory MBFGS method for nonlinear monotone equations. J. Comput. Math. 25(1), 89–96 (2007)

Koorapetse, M., Kaelo, P.: Self adaptive spectral conjugate gradient method for solving nonlinear monotone equations. J. Egypt. Math. Soc. 28(4) (2020)

La Cruz, W., Martínez, J., Raydan, M.: Spectral residual method without gradient information for solving large-scale nonlinear systems of equations. Math. Comput. 75(255), 1429–1448 (2006)

La Cruz, W., Martinez, J., Raydan, M.: Spectral residual method without gradient information for solving large-scale nonlinear systems of equations: Theory and experiments. Technical Report RT-04-08 (2004)

Zhou, W., Shen, D.: Convergence properties of an iterative method for solving symmetric non-linear equations. J. Optim. Theory Appl. 164(1), 277–289 (2015)

Liu, J.K., Li, S.J.: A projection method for convex constrained monotone nonlinear equations with applications. Comput. Math. Appl. 70(10), 2442–2453 (2015)

Yan, Q.R., Peng, X.Z., Li, D.H.: A globally convergent derivative-free method for solving large-scale nonlinear monotone equations. J. Comput. Appl. Math. 234, 649–657 (2010)

Acknowledgements

We would like to thank the anonymous reviewers for their comments and suggestions, which helped improve this work. We are also grateful to all members of the numerical optimization research group, Bayero University, Kano, for their encouragement during the course of this work.


Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Communicated by José Alberto Cuminato.


Appendices

Appendix 1

Arguing as for Algorithm 3.1, one can show that the sequence \(\{x_k\}\) generated by Algorithm 3.2 is bounded.

Lemma 6.1

Suppose that Assumption 3.3 holds and let \(\{x_{k}\}\) be the sequence generated by Algorithm 3.2. Then there exists a constant \({\hat{M}}>0\) such that

Proof

From (3.33), we consider two cases. Case (1): \(t\frac{\left\langle F_k,s_{k-1}\right\rangle \left\langle d_{k-1},{\bar{y}}_{k-1}\right\rangle }{\Vert {\bar{y}}_{k-1}\Vert ^2\left\langle F_k,d_{k-1}\right\rangle }>\xi -\psi \frac{\Vert {\bar{y}}_{k-1}\Vert ^2\Vert s_{k-1}\Vert ^2}{\left\langle s_{k-1},{\bar{y}}_{k-1}\right\rangle ^2}\). Then

from which, using (3.11), (3.34), (4.15) and the Cauchy–Schwarz inequality, we have

Similarly, applying (3.11), (3.34), (4.15) and the Cauchy–Schwarz inequality, we can write

So, using (3.35), (6.3), (6.4) and the Cauchy–Schwarz inequality, we obtain

Setting \(\left( 1+2\left( \frac{(L+m)}{m}+\frac{t}{m}\right) \right) \kappa =M_1\), we obtain

Case (2): \(t\frac{\left\langle F_k,s_{k-1}\right\rangle \left\langle d_{k-1},{\bar{y}}_{k-1}\right\rangle }{\Vert {\bar{y}}_{k-1}\Vert ^2\left\langle F_k,d_{k-1}\right\rangle }\le \xi -\psi \frac{\Vert {\bar{y}}_{k-1}\Vert ^2\Vert s_{k-1}\Vert ^2}{\left\langle s_{k-1},{\bar{y}}_{k-1}\right\rangle ^2}\). Then

So, using (3.11), (3.34), (4.15) and the Cauchy–Schwarz inequality, we have

Similarly, applying (3.11), (3.34), (4.15) and the Cauchy–Schwarz inequality, we can write

So, using (3.35), (6.8), (6.9) and the Cauchy–Schwarz inequality, we obtain

Again, setting \(\left( 1+2\left( \frac{(L+m)^2}{m}+\xi \frac{(L+m)^2}{m^2}+\psi \frac{(L+m)^4}{m^4}\right) \right) \kappa =M_2\), we obtain

Therefore, from (6.6) and (6.11), we have

So, setting \({\hat{M}}=\max \{M_1,M_2 \}\), we obtain the result. \(\square\)

Theorem 6.2

Suppose that Assumptions 3.1–3.3 hold and that the sequences \(\{x_k\}\) and \(\{{z}_k\}\) are generated by Algorithm 3.2. Then

The proof is analogous to that for Algorithm 3.1.

Appendix 2

Appendix 3

See Table 5.

The twelve test problems used in the experiments reported in Tables 1, 2, 3, 4 are listed below.

Problem 5.1

Strictly Convex Function [83]. \(F_i(x)=e^{x_i}-1, \quad i=1,2,\ldots ,n\).

Problem 5.2

[85].

- \(F_1(x)=2x_1+\sin {x_1}-1\),

- \(F_i(x)=2x_i-x_{i-1}+\sin {x_i}-1,\quad i=2,3,\ldots ,n-1\),

- \(F_n(x)=2x_n+\sin {x_n}-1\).
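A vectorized NumPy residual for Problem 5.2 can be sketched as follows. It assumes the range \(i=2,\ldots,n-1\) belongs to the interior equations \(F_i\), with \(F_1\) and \(F_n\) as the boundary rows; the helper name `problem_5_2` is hypothetical.

```python
import numpy as np


def problem_5_2(x):
    """Residual of Problem 5.2 (hypothetical helper name)."""
    x = np.asarray(x, dtype=float)
    F = 2.0 * x + np.sin(x) - 1.0  # 2x_i + sin(x_i) - 1 for every row
    F[1:-1] -= x[:-2]              # interior rows i = 2..n-1 also subtract x_{i-1}
    return F
```

Working on whole arrays rather than looping over \(i\) keeps each residual evaluation at \(O(n)\) vectorized work, which matters for the large dimensions used in the experiments.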

Problem 5.3

[86].

- \(F_1(x)=2.5x_{1}+x_{2}-1\),

- \(F_i(x)=x_{i-1}+2.5x_{i}+x_{i+1}-1, \quad i=2,\ldots ,n-1\),

- \(F_n(x)=2x_{n-1}+2.5x_{n}-1\).
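Problem 5.3 is affine, \(F(x)=Ax-\mathbf{1}\) with a tridiagonal \(A\), so it can be coded row by row without forming \(A\). The sketch below follows the coefficients exactly as printed (including \(2x_{n-1}\) in the last row) and assumes \(n\ge 2\); the helper name is hypothetical.

```python
import numpy as np


def problem_5_3(x):
    """Residual of Problem 5.3 as stated (requires n >= 2; hypothetical helper name)."""
    x = np.asarray(x, dtype=float)
    F = 2.5 * x - 1.0
    F[:-1] += x[1:]       # + x_{i+1} in rows 1..n-1
    F[1:-1] += x[:-2]     # + x_{i-1} in interior rows 2..n-1
    F[-1] += 2.0 * x[-2]  # last row: 2 x_{n-1}, coefficient as printed
    return F
```

For small \(n\), comparing against the dense form `A @ x - 1` is a cheap way to confirm the indexing.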

Problem 5.4

Exponential Function [87].

- \(F_1(x)=e^{x_1}-1\),

- \(F_i(x)=e^{x_i}+x_i-1, \quad i=2,\ldots ,n\).

Problem 5.5

Non-smooth Function [42]. \(F_i(x)=2x_i-\sin {|x_i|}\), \(\quad i=1,2, \ldots ,n\).

Problem 5.6

Discretized Chandrasekhar Equation [88].

\(F_i(x)=x_i-\left( 1-\frac{c}{2n} \displaystyle \sum _{j=1}^{n} \frac{\mu _ix_j}{\mu _i+\mu _j}\right) ^{-1},\quad i=1,2,\ldots ,n\),

with \(c\in [0,1)\) and \(\mu _i=\frac{i-0.5}{n}\) for \(1\le i \le n\). In the experiments, \(c=0.9\).
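Unlike the tridiagonal problems, every equation of Problem 5.6 couples all unknowns. The sketch below vectorizes the double sum with a dense kernel \(K_{ij}=\mu_i/(\mu_i+\mu_j)\), using the nodes \(\mu_i=(i-0.5)/n\) and the default \(c=0.9\); the helper name is hypothetical, and the dense kernel is a simplicity choice that costs \(O(n^2)\) memory.

```python
import numpy as np


def chandrasekhar(x, c=0.9):
    """Residual of the discretized Chandrasekhar H-equation (Problem 5.6).

    Builds the dense n-by-n kernel, so memory grows as O(n^2).
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    mu = (np.arange(1, n + 1) - 0.5) / n              # mu_i = (i - 0.5) / n
    K = mu[:, None] / (mu[:, None] + mu[None, :])     # K[i, j] = mu_i / (mu_i + mu_j)
    return x - 1.0 / (1.0 - (c / (2.0 * n)) * (K @ x))
```

With \(c=0\) the system reduces to \(x_i-1=0\), which gives a quick sanity check.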

Problem 5.7

[89]. The function \(F\) is given by \(F(x) = A_1x + b_2\),

where \(b_2 = (\sin x_1-1, \ldots ,\sin x_n-1)^T\), and

\(A_1= \left( \begin{matrix} 2&-1&&&\\ 0&2&-1&&\\ &\ddots & \ddots & \ddots &\\ & &\ddots & \ddots & -1\\ &&&0&2 \end{matrix} \right)\).
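Because \(A_1\) has 2 on the diagonal, \(-1\) on the superdiagonal and zeros below, the product \(A_1x\) can be formed without storing the matrix. A minimal sketch (hypothetical helper name):

```python
import numpy as np


def problem_5_7(x):
    """F(x) = A_1 x + b_2(x) for Problem 5.7, without forming A_1 (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    Ax = 2.0 * x
    Ax[:-1] -= x[1:]  # superdiagonal entries are -1; subdiagonal entries are 0
    return Ax + np.sin(x) - 1.0  # b_2 = (sin x_1 - 1, ..., sin x_n - 1)^T
```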

Problem 5.8

Nonsmooth Function [85]. \(F_i(x)=x_i-\sin |x_i-1|\), \(\quad i=1, \ldots ,n\).

Problem 5.9

Nonsmooth Function [85]. \(F_i(x)=x_i-2\sin |x_i-1|\), \(\quad i=1, \ldots ,n\).

Problem 5.10

Tridiagonal Exponential Function [90].

- \(F_1(x)= x_{1}-e^{\cos \left( \frac{x_{1}+x_{2}}{n+1}\right) }\),

- \(F_i(x)=x_{i}-e^{\cos \left( \frac{x_{i-1}+x_{i}+x_{i+1}}{n+1}\right) }, \quad i=2,3,\ldots ,n-1\),

- \(F_n(x)=x_{n}-e^{\cos \left( \frac{x_{n-1}+x_{n}}{n+1}\right) }\).
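In Problem 5.10 each argument of the cosine is a neighbour sum of at most three entries, so the boundary rows simply drop the missing neighbour. A vectorized sketch (hypothetical helper name):

```python
import numpy as np


def problem_5_10(x):
    """Residual of the tridiagonal exponential function (Problem 5.10)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    s = x.copy()
    s[:-1] += x[1:]   # add right neighbour x_{i+1} (absent in the last row)
    s[1:] += x[:-1]   # add left neighbour x_{i-1} (absent in the first row)
    return x - np.exp(np.cos(s / (n + 1)))
```

At \(x=0\) every component equals \(-e\), since \(\cos 0=1\).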

Problem 5.11

Logarithmic Function [83]. \(F_i(x)=\ln {(x_i+1)}-\frac{x_i}{n}\), \(\quad i=1,2,\ldots ,n\).

Problem 5.12

[91]. \(F_i(x)=x_{i}-\frac{1}{n}x_{i}^2+\frac{1}{n}\displaystyle \sum _{j=1}^{n}x_{j}+i\), \(\quad i=1,2,\ldots ,n.\)

Remark 6.3

Among the 12 selected problems, several have a diagonal Jacobian (for example, Problems 5.1, 5.5, 5.8 and 5.9). This separability of the variables makes the results nearly independent of the problem dimension, as can be observed in Tables 1, 2, 3, 4. Moreover, although Problem 5.9 is not globally monotone, local monotonicity appears to be sufficient for the good behavior of the proposed algorithms, at least for the selected initial points.
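The observation about Problem 5.9 can be spot-checked numerically. Problems 5.8 and 5.9 are separable, \(F_i(x)=f(x_i)\), so \(F\) is monotone on \(\mathbb{R}^n\) exactly when the scalar \(f\) is nondecreasing. The sketch below computes the smallest value of \((f(a)-f(b))(a-b)\) over neighbouring points of a grid; the grid range, resolution and helper names are illustrative assumptions. A negative value certifies non-monotonicity, while a nonnegative one is only evidence on the sampled interval.

```python
import numpy as np


def f58(t):
    """Scalar component of Problem 5.8 (hypothetical helper name)."""
    return t - np.sin(np.abs(t - 1.0))


def f59(t):
    """Scalar component of Problem 5.9 (hypothetical helper name)."""
    return t - 2.0 * np.sin(np.abs(t - 1.0))


def min_monotonicity_gap(f, lo=-10.0, hi=10.0, samples=20001):
    """Smallest (f(a) - f(b)) * (a - b) over neighbouring grid points on [lo, hi]."""
    t = np.linspace(lo, hi, samples)
    v = f(t)
    return np.min(np.diff(v) * np.diff(t))
```

For \(f_{5.9}\), just to the right of \(t=1\) the slope is \(1-2\cos(t-1)\approx -1\), so the gap is clearly negative; for \(f_{5.8}\) the slope \(1\mp\cos(\cdot)\) never drops below zero.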


About this article

Cite this article

Waziri, M.Y., Ahmed, K. & Halilu, A.S. Adaptive three-term family of conjugate residual methods for system of monotone nonlinear equations. São Paulo J. Math. Sci. 16, 957–996 (2022). https://doi.org/10.1007/s40863-022-00293-0


Keywords

- Non-smooth functions

- Backtracking line search

- Projection technique

- Conjugacy condition

- Descent condition